Abstract

Spatial and nonspatial auditory processing is hypothesized to occur in parallel dorsal and ventral pathways, respectively. In this study, we tested the spatial and nonspatial sensitivity of auditory neurons in the ventrolateral prefrontal cortex (vPFC), a cortical area in the hypothetical nonspatial pathway. We found that vPFC neurons were modulated significantly by both the spatial and nonspatial attributes of an auditory stimulus. When comparing these responses with those in the anterolateral belt region of the auditory cortex, which is hypothesized to be specialized for processing the nonspatial attributes of auditory stimuli, we found that the nonspatial sensitivity of vPFC neurons was poorer, whereas the spatial selectivity was better, than that of anterolateral neurons. Also, the spatial and nonspatial sensitivity of vPFC neurons was comparable with that seen in the lateral intraparietal area, a cortical area that is a part of the dorsal pathway. These data suggest that substantial spatial and nonspatial processing occurs in both the dorsal and ventral pathways.

Keywords: spatial processing, nonspatial processing, prefrontal cortex, auditory, rhesus, vocalization

Introduction

In nonhuman primates, the spatial and nonspatial attributes of visual and auditory stimuli are hypothesized to be processed in parallel processing streams (Ungerleider and Mishkin, 1982; Rauschecker, 1998). More specifically, this hypothesis proposes that a “dorsal” pathway processes the spatial attributes of a stimulus, whereas a “ventral” pathway processes the nonspatial attributes.

In early sensory areas of these pathways, neurons respond to both the spatial and nonspatial attributes but may be more selective for one of these two attributes. This is seen, for instance, when comparing the tuning properties of neurons in the caudomedial belt of the auditory cortex (a cortical area in the dorsal pathway) with those in the anterolateral belt (a cortical area in the ventral pathway) (Tian et al., 2001). Anterolateral neurons respond more selectively to different exemplars of monkey vocalizations than caudomedial neurons. In contrast, caudomedial neurons respond more selectively to the location of an auditory stimulus than anterolateral neurons.

In more central cortical areas, it is hypothesized that this functional specialization becomes more refined such that neurons in the dorsal pathway are relatively insensitive to the nonspatial features of a stimulus, whereas neurons in the ventral pathway are relatively insensitive to the spatial features of a stimulus (Ungerleider and Mishkin, 1982). However, recent data provide evidence contrary to this hypothesis (Ferrera et al., 1992, 1994; Sereno and Maunsell, 1998; Toth and Assad, 2002). For instance, neurons in the lateral intraparietal (LIP) area, a cortical area in the dorsal (spatial) pathway, are sensitive to the shape of a visual stimulus (which is regarded as a nonspatial attribute); this sensitivity is comparable with that seen in the ventral pathway (Sereno and Maunsell, 1998). Other experiments indicate that parietal neurons are modulated by the color of a visual stimulus when it is relevant for the successful completion of a behavioral task (Toth and Assad, 2002). Similarly, we have recently shown that auditory neurons in the lateral intraparietal area are modulated by the nonspatial properties (e.g., spectrotemporal structure) of an auditory stimulus (Gifford and Cohen, 2004b).

To further examine the nature of parallel spatial and nonspatial auditory processing, we tested the spatial and nonspatial response properties of neurons in a cortical area of the ventral pathway, the ventrolateral prefrontal cortex (vPFC) (Romanski et al., 1999; Rauschecker and Tian, 2000). First, we tested their response properties using experimental procedures that mirror those used to test the spatial and nonspatial properties of neurons in the auditory cortex (Tian et al., 2001). We found that vPFC neurons were sensitive to both the nonspatial and spatial attributes of an auditory stimulus. When comparing these responses with those in the anterolateral belt region of the auditory cortex, we found that the nonspatial sensitivity of vPFC neurons was poorer, whereas the spatial selectivity was better, than that of anterolateral neurons. Second, we tested the response properties of vPFC neurons using experimental procedures that mirror those used to test the spatial and nonspatial properties of auditory activity in the lateral intraparietal area (Gifford and Cohen, 2004b). We found that, on average, the selectivity of vPFC neurons for the spatial and nonspatial attributes of an auditory stimulus was comparable with that seen in the lateral intraparietal area. Together, these data suggest that substantial spatial and nonspatial processing occurs in both the dorsal and ventral pathways.

Materials and Methods

This study was designed to test the spatial and nonspatial response properties of vPFC neurons so that we could compare our data with analogous studies of neural responsivity in the auditory cortex (Tian et al., 2001) and the lateral intraparietal area (Gifford and Cohen, 2004b). To facilitate these comparisons, the experimental procedures (i.e., stimulus arrays, stimulus sets, behavioral tasks, recording procedures, and data analysis) were tailored to mirror the procedures used in these previous studies. When the experimental procedures differ, we demarcate those procedures designed to mirror those used in the auditory-cortex study as the auditory-cortex configuration and those designed to mirror those used in the parietal study as the parietal configuration.

Subjects

Two female rhesus monkeys (Macaca mulatta) were used in these experiments. Both monkeys (weighing between 8.0 and 9.0 kg) were trained on both of the tasks described in this study. All surgical, recording, and training sessions were in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals and were approved by the Dartmouth Institutional Animal Care and Use Committee.

Surgical procedures

Surgical procedures were conducted under aseptic, sterile conditions using general anesthesia (isoflurane). These procedures were performed in a dedicated surgical suite operated by the Animal Resource Center at Dartmouth College.

In the first procedure, titanium bone screws were implanted in the skull, and a methyl methacrylate implant was constructed. A Teflon-insulated, 50 gauge, stainless-steel wire coil was also implanted between the conjunctiva and the sclera; the wire coil allowed us to monitor the monkey's eye position (Judge et al., 1980). Finally, a head-positioning cylinder (FHC-S2; Crist Instruments, Hagerstown, MD) was embedded in the implant. This cylinder connected to a primate chair and stabilized the monkey's head during behavioral training and recording sessions.

After the monkeys learned the behavioral tasks (see below), a craniotomy was performed, and a recording cylinder (ICO-J20; Crist Instruments) was implanted. This surgical procedure provided chronic access to the vPFC for neurophysiological recordings.

Experimental setup

Behavioral training and recording sessions were conducted in a darkened room with sound-attenuating walls. The walls and floor of the room were covered with anechoic foam insulation (Sonomatt; Auralex, Indianapolis, IN). When inside the room, the monkeys were seated in the primate chair and placed in front of a stimulus array; because the room was darkened, the speakers producing the auditory stimuli were not visible to the monkeys. The primate chair was placed in the center of a 1.2 m diameter, two-dimensional, magnetic coil (CNC Engineering, Seattle, WA) that was part of the eye position-monitoring system (Judge et al., 1980). Eye position was sampled with an analog-to-digital converter (PXI-6052E; National Instruments, Austin, TX) at a rate of 1.0 kHz. The monkeys were monitored during all sessions with an infrared camera.

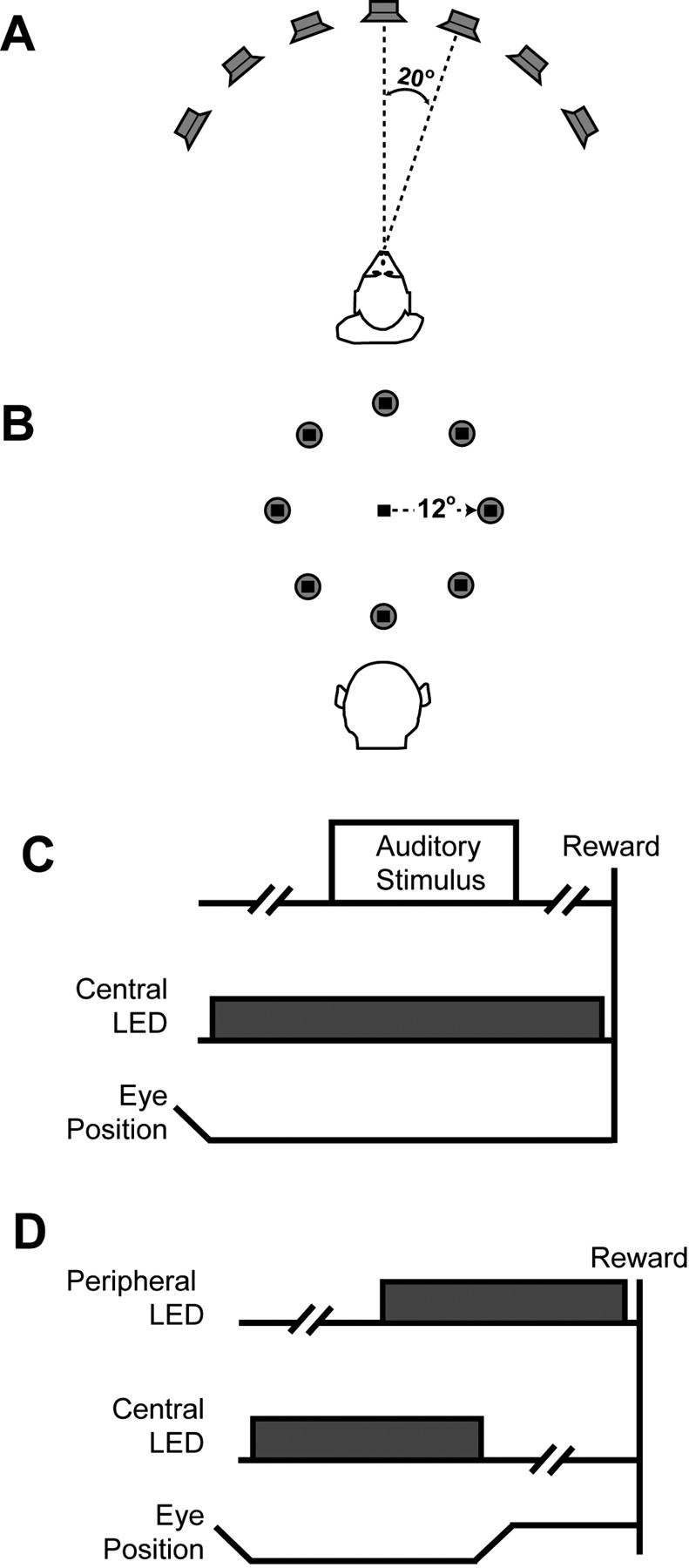

Stimulus arrays

Auditory-cortex configuration. The stimulus array (Fig. 1 A) consisted of seven speakers (PLX32; Pyle, Brooklyn, NY) that formed an arc centered on the monkey; the speakers were 1.2 m above the floor, which was the approximate eye level of the monkeys. Relative to the monkey's position in the room, the seven speakers were arranged such that each speaker was separated by 20° in azimuth; the speaker at 0° was centered in front of the monkey. As a result, the stimulus array spanned the range between 60° left and 60° right of the monkey. A light-emitting diode (LED) (model 276-307; Radio Shack, Fort Worth, TX) was also mounted on the face of the speaker at 0°. This “central” LED served as a fixation point for the monkeys during the visual fixation task (see below).

Figure 1.

Configuration of stimulus arrays and behavioral tasks. A, Schematic of the auditory-cortex configuration of the stimulus array. Seven speakers formed an arc centered on the monkey's position in the room. The speaker-to-speaker separation was 20°. B, Schematic of the parietal configuration of the stimulus array. Eight speakers formed a circle centered on the central LED (black square). Relative to the monkey, the radius of the circle was 12°. C, Visual-fixation task. The monkeys first fixated a central LED. After a random delay (as indicated by the broken line), an auditory stimulus was presented. During auditory-stimulus presentation and after auditory-stimulus offset, the monkeys maintained their gaze at the central light to receive a reward. D, Overlap-saccade task. The monkeys first fixated a central LED. After a random delay (as indicated by the broken line), a peripheral LED was illuminated. After another delay, the central LED was extinguished, and the monkeys saccaded to the location of the peripheral LED.

Parietal configuration. The stimulus array (Fig. 1 B) consisted of eight speakers (PLX32; Pyle). The speakers were arranged in a circle centered on a central LED (model 276-307; Radio Shack). The central LED was 1.2 m above the floor, which was the approximate eye level of the monkeys. Relative to the monkey's position in the room and the central LED, the speakers formed a circle with a radius of 12°. An LED was also mounted and centered on each speaker's face. The central LED served as a fixation point during the visual fixation and overlap-saccade tasks (see below). The “peripheral” LEDs that were mounted on the speakers produced the visual stimuli used during the overlap-saccade task.

Behavioral tasks

When the stimulus array was in the auditory-cortex configuration, the monkeys only participated in the visual fixation task. When the stimulus array was in the parietal configuration, the monkeys participated in both the visual fixation task and the overlap-saccade task. The overlap-saccade task was designed to test the visual and saccade-related properties of neurons in the lateral intraparietal area.

During the visual fixation task (Fig. 1C), 1000-1500 msec after fixating the central LED, an auditory stimulus was presented from one of the speakers. The monkeys maintained their gaze at the central LED during auditory-stimulus presentation and for an additional 1000-1500 msec after auditory-stimulus offset to receive a juice reward.

During the overlap-saccade task (Fig. 1 D), 500-1000 msec after fixating the central LED, a peripheral LED was illuminated. After an additional 500-1000 msec, the central LED was extinguished, and the monkeys shifted their gaze to the peripheral LED. They maintained their gaze at this location for an additional 500-1000 msec to receive a juice reward. During both tasks, the monkeys maintained their gaze within 2° of the central or peripheral LEDs.

Auditory stimuli

Auditory-cortex configuration. Seven different types of auditory stimuli were presented. Each stimulus type was an exemplar from a different class of species-specific vocalizations; an exemplar's membership in a particular class depends on its spectrotemporal structure (Hauser, 1998). The seven exemplars were “copulation scream,” “aggressive,” “harmonic arch,” “girney,” “warble,” “grunt,” and “coo.” The spectrograms of the seven vocalization exemplars used in this study are shown in Figure 2 A-G. These vocalizations were recorded and digitized as part of a previous set of studies (Hauser, 1998).

Figure 2.

Spectrographic representations of species-specific vocalization exemplars (A-G) and bandpass noise (H, I). Note that the ranges of values on the abscissa and ordinate differ between panels.

Parietal configuration. Two different types of auditory stimuli were presented. The first type was species-specific vocalizations. The spectrograms of the seven vocalization exemplars are shown in Figure 2 A-G; unlike the auditory-cortex configuration, the seven vocalization exemplars in the parietal configuration were treated as a single type. The second type was bandpass noise. “Fresh” exemplars of bandpass noise were generated on each trial in a digital signal-processing environment that is based on the AP2 DSP card (Tucker Davis Technologies, Alachua, FL). Broadband Gaussian noise was filtered to achieve the correct pass-band. The bandpass noise had a pass-band of either 0.55-2.8 kHz (“low-pass”) or 9.75-15.25 kHz (“high-pass”). The onset and offset of each noise burst were modulated by a 10 msec linear ramp. The durations of the noise stimuli were matched to the distribution of the durations of the vocalizations used in this study. A spectrogram of a low-pass noise exemplar and a spectrogram of a high-pass noise exemplar are shown in Figure 2, H and I. Like the vocalizations, the bandpass noise bursts were treated as a single type, regardless of differences in the pass-bands of individual exemplars.

The auditory stimuli were presented through a digital-to-analog converter (DA1; Tucker Davis Technologies) and an amplifier (SA1, Tucker Davis Technologies; MPA-250, Radio Shack) and transduced by a speaker. Each exemplar was presented at a sound level of 60 dB sound pressure level (relative to 20 μPa).

Visual stimuli

Visual stimuli were produced by illuminating an LED. Each LED subtended <0.2° of visual angle. The luminance of each LED was 12.6 cd/m².

Recording procedures

Single-neuron extracellular recordings were obtained with varnish-coated tungsten microelectrodes (∼2 MΩ impedance at 1 kHz; Frederick Haer Company, Bowdoinham, ME) that were seated inside a stainless-steel guide tube. The electrode and guide tube were advanced into the brain with a hydraulic microdrive (MO-95; Narishige, East Meadow, NY). The electrode signal was amplified by a factor of 10^4 (MDA-4I; Bak Electronics, Mount Airy, MD) and bandpass filtered (model 3700; Krohn-Hite, Avon, MA) between 0.6 and 6.0 kHz. Single-neuron activity was isolated using a variable-delay, two-window, time-voltage, window discriminator (model DDIS-1; Bak Electronics). Neural events that passed through both time-voltage windows were classified as originating from a single neuron. When a neural event passed through both windows, the discriminator produced a TTL pulse. This pulse was relayed to a counting circuit on a data-acquisition board (PXI-6052E; National Instruments) that recorded the time, with an accuracy of 0.01 msec, of the pulse occurrence. The time of occurrence of each action potential was stored for on-line and off-line analyses.

Recording strategy

Auditory-cortex configuration. An electrode was lowered into the vPFC. To avoid sampling bias, any neuron that was isolated was tested. Once a neuron was isolated, the monkeys participated in trials of the visual fixation task. The seven auditory-stimulus types and the seven auditory-stimulus locations were varied randomly on a trial-by-trial basis. The monkey participated in the visual fixation task until at least five successful trials were completed for each combination of auditory-stimulus type and location. The intertrial interval was 1-2 sec.

Parietal configuration. An electrode was lowered into the vPFC. To avoid sampling bias, any neuron that was isolated was tested. The monkeys first participated in a block of trials of the overlap-saccade task. vPFC activity during this task was correlated with the location of the peripheral LED to construct a spatial response field. The visual stimulus location that elicited the highest mean firing rate during the period in which the peripheral LED was illuminated was designated as the “IN” location. The location that was diametrically opposite to the IN location was the “OUT” location. If a vPFC neuron was not modulated during the visual saccade task, we operationally defined the IN and OUT locations as the speaker locations 12° to the right and 12° to the left of the monkey, respectively. Next, the monkeys participated in a block of trials of the visual fixation task. The two auditory-stimulus types (species-specific vocalizations or bandpass noise) and the two locations of the auditory stimuli (IN or OUT) were varied randomly on a trial-by-trial basis. The monkey participated in the visual fixation task until at least five successful trials were completed for each combination of auditory-stimulus type and location. The intertrial interval was 1-2 sec.

Data analysis

All data analysis was based on data collected during the visual fixation task. Neural activity evoked during the visual fixation task was tested during the “baseline” and “stimulus” periods. The baseline period began 50 msec after the monkey fixated the central LED and ended 50 msec before auditory-stimulus onset; neural activity was aligned relative to the onset of the central LED. The stimulus period began at auditory-stimulus onset and ended at its offset; neural activity was aligned relative to the onset of the auditory stimulus. Data were analyzed in terms of the firing rate of a vPFC neuron (i.e., the number of action potentials divided by task-period duration).
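As an illustration of the period definitions above, the following minimal sketch computes baseline- and stimulus-period firing rates for a single trial. The function names, the use of seconds as the time unit, and the half-open window convention are assumptions made for this example; they are not taken from the original analysis code.

```python
def firing_rate(spike_times, start, end):
    """Number of spikes in [start, end) divided by the period duration (spikes/sec)."""
    duration = end - start
    if duration <= 0:
        raise ValueError("period must have positive duration")
    count = sum(1 for t in spike_times if start <= t < end)
    return count / duration


def period_rates(spike_times, fixation_time, stim_onset, stim_offset):
    """Baseline- and stimulus-period firing rates for one trial (times in seconds).

    Baseline: from 50 msec after fixation to 50 msec before stimulus onset.
    Stimulus: from auditory-stimulus onset to its offset.
    """
    baseline = firing_rate(spike_times, fixation_time + 0.050, stim_onset - 0.050)
    stimulus = firing_rate(spike_times, stim_onset, stim_offset)
    return baseline, stimulus
```

For example, `period_rates(spikes, 0.0, 1.0, 1.5)` returns the two rates for a trial in which fixation began at 0 sec and a 500 msec stimulus began at 1 sec.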

Data were quantified with a two-factor ANOVA, information (I) theory, or response indices. For data collected in the auditory-cortex configuration, all three analyses were conducted. For data collected in the parietal configuration, only the ANOVA and information analyses were conducted.

On a neuron-by-neuron basis, a two-factor ANOVA tested whether the mean stimulus-period firing rate of a vPFC neuron was modulated by auditory-stimulus type, auditory-stimulus location, or the interaction of these two main effects. The null hypotheses were rejected at a level of p < 0.05 in favor of the alternative hypotheses. In the auditory-cortex configuration, each of the seven vocalization exemplars was a separate level of the auditory stimulus-type factor, and each of the seven stimulus locations was a separate level of the auditory stimulus-location factor. In the parietal configuration, vocalizations were one level of the auditory stimulus-type factor, and the bandpass noise was the second level. The IN and OUT locations were two different levels of the auditory stimulus-location factor.
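The structure of this test can be sketched as follows for a single neuron. This is a simplified illustration, not the analysis code used in the study: it assumes a balanced design (the same number of trials in every type-by-location cell, with at least two trials per cell), and it returns only the F statistics and their degrees of freedom. The p value would then be obtained from the F distribution with those degrees of freedom. The function name and data layout are hypothetical.

```python
def two_way_anova_f(cells):
    """F statistics for a balanced two-factor design.

    cells[i][j] is the list of stimulus-period firing rates recorded for
    stimulus type i at location j. Returns, for each effect, a tuple
    (F, effect degrees of freedom, error degrees of freedom).
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    if any(len(cell) != n for row in cells for cell in row):
        raise ValueError("this sketch assumes equal trial counts per cell")

    def mean(xs):
        return sum(xs) / len(xs)

    cell_means = [[mean(cell) for cell in row] for row in cells]
    row_means = [mean(row) for row in cell_means]  # per stimulus type
    col_means = [mean([cell_means[i][j] for i in range(a)]) for j in range(b)]
    grand = mean(row_means)

    # Sums of squares for the two main effects, the interaction, and the error.
    ss_type = b * n * sum((m - grand) ** 2 for m in row_means)
    ss_loc = a * n * sum((m - grand) ** 2 for m in col_means)
    ss_inter = n * sum(
        (cell_means[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(a) for j in range(b)
    )
    ss_error = sum(
        (x - cell_means[i][j]) ** 2
        for i in range(a) for j in range(b) for x in cells[i][j]
    )

    df_type, df_loc = a - 1, b - 1
    df_inter, df_error = (a - 1) * (b - 1), a * b * (n - 1)
    if df_error <= 0 or ss_error == 0:
        raise ValueError("error variance is undefined or zero")
    ms_error = ss_error / df_error

    return {
        "type": (ss_type / df_type / ms_error, df_type, df_error),
        "location": (ss_loc / df_loc / ms_error, df_loc, df_error),
        "interaction": (ss_inter / df_inter / ms_error, df_inter, df_error),
    }
```

In the auditory-cortex configuration, `cells` would be a 7 × 7 grid of trial lists; in the parietal configuration, it would be a 2 × 2 grid.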

We quantified the amount of information (Shannon, 1948a,b; Cover and Thomas, 1991) carried in the firing rate of vPFC neurons during the stimulus period using a formulation analogous to one that we described previously (Cohen et al., 2002; Gifford and Cohen, 2004a). In this analysis, we quantified the amount of auditory-type information and auditory-location information. Auditory-type information was the amount of information carried in the firing rates of vPFC neurons regarding differences in the auditory-stimulus type, independent of their location. Auditory-location information was the amount of information carried in the firing rates of vPFC neurons regarding auditory-stimulus location, independent of their type.

Information was defined as follows:

$$I = \sum_{s,r} P(s,r)\,\log_2\!\left(\frac{P(s,r)}{P(s)\,P(r)}\right)$$

where s is the index of each auditory-stimulus type or location, r is the index of the stimulus-period firing rate, P(s,r) is the joint probability, and P(s) and P(r) are the marginal probabilities.
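A minimal sketch of this calculation, using the standard Shannon mutual-information formula with probabilities estimated from trial counts, is given below. It assumes that firing rates have already been discretized (for example, into spike counts or rate bins); the binning scheme and the function name are assumptions for illustration.

```python
import math
from collections import Counter


def mutual_information(stimuli, responses):
    """Mutual information (bits) between stimulus labels and discrete responses.

    Computes sum over (s, r) of P(s,r) * log2(P(s,r) / (P(s) * P(r))),
    with all probabilities estimated as relative frequencies across trials.
    """
    if len(stimuli) != len(responses) or not stimuli:
        raise ValueError("need one response per stimulus and at least one trial")
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))
    count_s = Counter(stimuli)
    count_r = Counter(responses)
    info = 0.0
    for (s, r), c in joint.items():
        p_sr = c / n
        info += p_sr * math.log2(p_sr / ((count_s[s] / n) * (count_r[r] / n)))
    return info
```

To obtain auditory-type information, `stimuli` would hold the stimulus type on each trial, pooled over locations; for auditory-location information, it would hold the stimulus location, pooled over types.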

Similar to the ANOVA analysis, in the auditory-cortex configuration, there were seven auditory-stimulus types and seven auditory-stimulus locations. In the parietal configuration, there were two auditory-stimulus types and two auditory-stimulus locations.

To facilitate comparisons across monkeys and brain areas and to eliminate biases in the amount of information resulting from small sample sizes, bit rates are reported in terms of bias-corrected information (Panzeri and Treves, 1996; Grunewald et al., 1999; Cohen et al., 2002; Gifford and Cohen, 2004a). On a neuron-by-neuron basis, we first calculated the amount of information from the original data and from bootstrapped trials. In bootstrapped trials, the relationship between the firing rate, auditory-stimulus type, and auditory-stimulus location of a neuron was randomized and then the amount of information was calculated. This process was repeated 500 times, and the median value from this distribution of values was determined. The amount of bias-corrected information was calculated by subtracting the median amount of information obtained from bootstrapped trials from the amount obtained from the original data.
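The bias-correction procedure can be sketched as follows. This is an illustrative reading of the description above, not the original code: the randomization is implemented by shuffling the responses relative to the stimulus labels, a fixed seed is used only to make the example reproducible, and the function names are hypothetical.

```python
import math
import random
import statistics
from collections import Counter


def mutual_information(stimuli, responses):
    """Mutual information (bits) estimated from trial counts."""
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))
    count_s, count_r = Counter(stimuli), Counter(responses)
    return sum(
        (c / n) * math.log2((c / n) / ((count_s[s] / n) * (count_r[r] / n)))
        for (s, r), c in joint.items()
    )


def bias_corrected_information(stimuli, responses, n_shuffles=500, seed=0):
    """Information from the original data minus the median shuffled information."""
    rng = random.Random(seed)  # fixed seed: an assumption for reproducibility
    raw = mutual_information(stimuli, responses)
    shuffled = list(responses)
    null_values = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # break the stimulus-response relationship
        null_values.append(mutual_information(stimuli, shuffled))
    return raw - statistics.median(null_values)
```

Because the shuffled estimate is nonnegative, the corrected value never exceeds the uncorrected one, and it can be slightly negative for neurons that carry no information.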

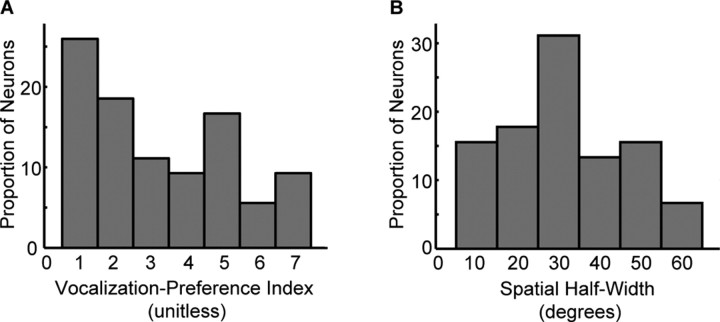

Finally, for data collected in the auditory-cortex configuration, we calculated two response indices analogous to those used by Tian et al. (2001). These two indices, “vocalization-preference index” and “spatial half-width,” are metrics of the nonspatial and spatial selectivity of a neuron, respectively.

The two indices were calculated using the following procedure. The vocalization exemplar that elicited the highest mean stimulus-period firing rate (the “preferred firing rate”) was the “preferred vocalization.” The azimuthal location that elicited this firing rate was the “preferred azimuth.” The vocalization-preference index of a neuron was the number of vocalization exemplars at the preferred azimuth that elicited a mean stimulus-period firing rate >50% of the preferred firing rate. The spatial half-width of a neuron was the spatial separation of the speakers that elicited a mean stimulus-period firing rate >50% of the preferred firing rate. The spatial half-width was characterized only with the preferred vocalization.
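One possible reading of this procedure is sketched below. Two points are assumptions rather than details given in the text: the spatial half-width is taken as the azimuthal extent (maximum minus minimum) of the speaker locations that exceed the 50% criterion, with no interpolation between speakers, and the preferred vocalization itself is counted in the vocalization-preference index. The function name and data layout are hypothetical.

```python
def response_indices(mean_rates, azimuths):
    """Vocalization-preference index and spatial half-width for one neuron.

    mean_rates[v][a] is the mean stimulus-period firing rate for
    vocalization exemplar v presented at azimuths[a] (degrees).
    """
    # The preferred firing rate is the largest mean rate over all combinations;
    # it defines the preferred vocalization and the preferred azimuth.
    pref_rate, pref_v, pref_a = max(
        (rate, v, a)
        for v, row in enumerate(mean_rates)
        for a, rate in enumerate(row)
    )
    if pref_rate <= 0:
        raise ValueError("preferred firing rate must be positive")
    criterion = 0.5 * pref_rate

    # Number of exemplars at the preferred azimuth above the criterion.
    preference_index = sum(1 for row in mean_rates if row[pref_a] > criterion)

    # Extent of the locations above the criterion, preferred vocalization only.
    above = [azimuths[a] for a, rate in enumerate(mean_rates[pref_v]) if rate > criterion]
    half_width = max(above) - min(above)
    return preference_index, half_width
```

With seven exemplars and seven speakers, `mean_rates` would be a 7 × 7 table and `azimuths` would run from -60 to 60 in 20° steps.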

Verification of recording locations

Recording locations were confirmed by visualizing a recording microelectrode in the vPFC of each monkey through magnetic resonance images. These images were obtained at the Dartmouth Brain Imaging Center using a GE (Fairfield, CT) 1.5T scanner (three-dimensional T1-weighted gradient echo pulse sequence with a 5 inch receive-only surface coil) (Groh et al., 2001; Cohen et al., 2004).

Results

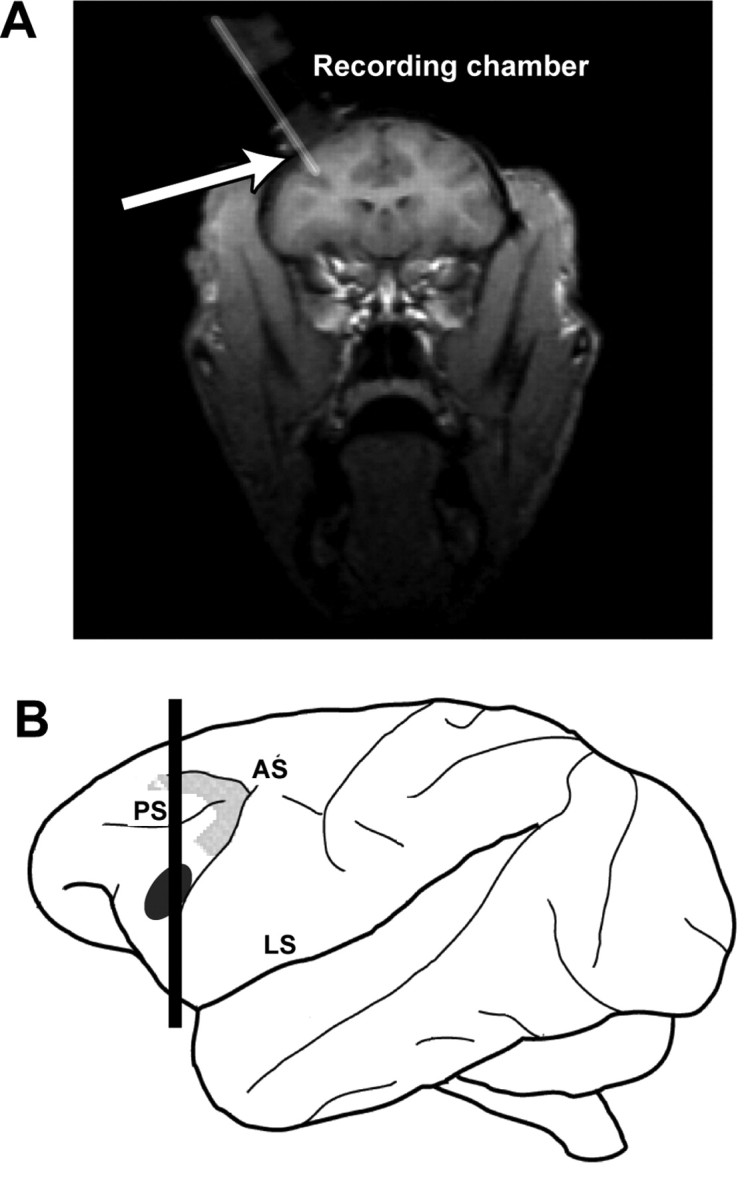

The vPFC was identified by its anatomical location and its neurophysiological properties. Anatomically, the vPFC is located anterior to the arcuate sulcus and area 8a and lies below the principal sulcus (Romanski and Goldman-Rakic, 2002), as shown in Figure 3. Physiologically, vPFC neurons were characterized by their strong responses to species-specific vocalizations and noise bursts (Newman and Lindsley, 1976; Romanski and Goldman-Rakic, 2002).

Figure 3.

A, A coronal magnetic resonance image illustrating the approach of a recording electrode, as indicated by the arrow, through the recording chamber and into the vPFC. The approximate plane of section of this image is illustrated by the vertical black line through the schematic of the rhesus brain (B). The black ellipse on this schematic encompasses the region where we recorded auditory neurons from two rhesus monkeys, and the enclosed gray area outlines the approximate location of area 8a, a cortical area involved in auditory spatial processing (Russo and Bruce, 1994). AS, Arcuate sulcus; LS, lateral sulcus; PS, principal sulcus.

We recorded from the left vPFC of two rhesus monkeys. Neural data were pooled for presentation, because the results were similar for both monkeys. When data were collected in the auditory-cortex configuration, we recorded from 143 vPFC neurons. Of these neurons, 63 were auditory; a neuron was auditory if a t test determined that its mean baseline- and stimulus-period firing rates, independent of auditory-stimulus type and location, were different at p < 0.05. We report data from the 52 auditory neurons in which we were able to record at least five successful trials of each combination of auditory-stimulus type and location; neural isolation was lost before we collected enough data from the remaining 11 neurons. When data were collected in the parietal configuration, we recorded from 71 vPFC neurons. Similar to the methods used in our parietal study (Gifford and Cohen, 2004b), we report data from the entire population, independent of whether the neurons were auditory.

Qualitative observations of data collected in the auditory-cortex configuration

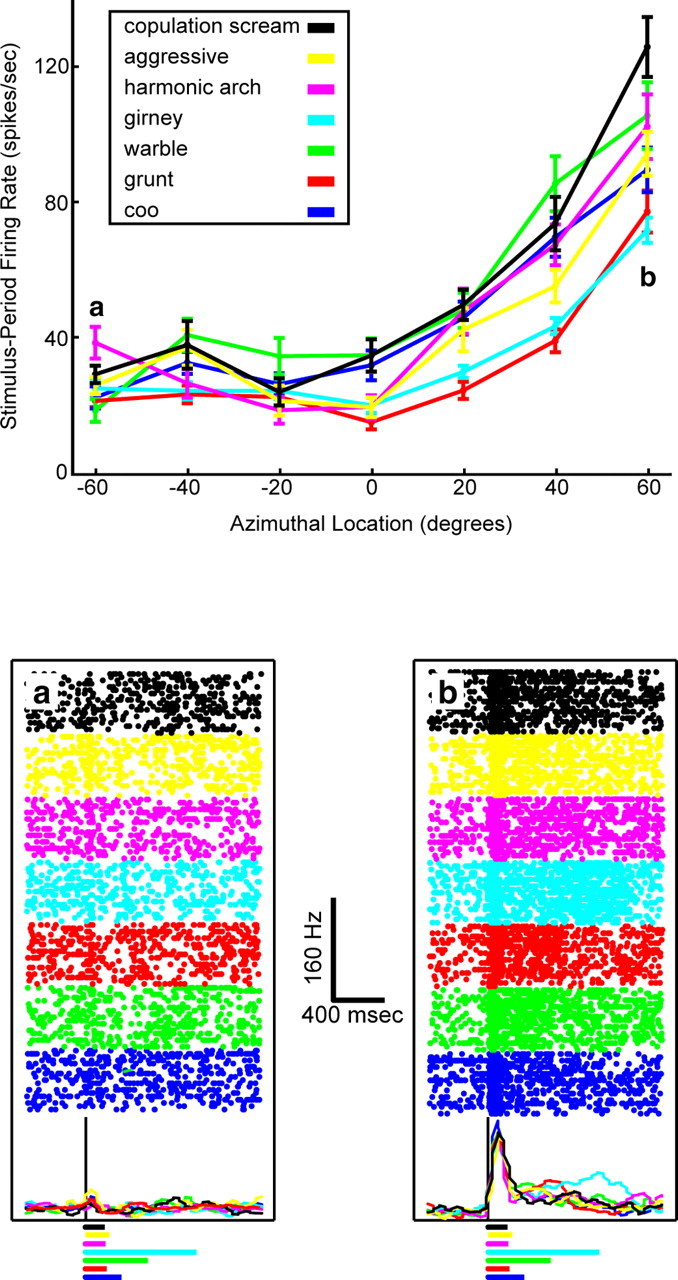

Overall, we found that vPFC neurons were modulated by both the nonspatial and spatial attributes of an auditory stimulus. A population-tuning curve is shown in Figure 4. As can be seen, the mean response of a vPFC neuron was dependent on both auditory-stimulus type and location. Neurons were typically modulated more during presentation of the coo or copulation scream exemplars than the other vocalizations. vPFC neurons also had generally higher firing rates when vocalizations were presented at more eccentric locations.

Figure 4.

Population tuning curve of vPFC activity generated from data collected in the auditory-cortex configuration. On a neuron-by-neuron basis, the mean stimulus-period firing rate of the response of a neuron to each combination of auditory-stimulus type and location was normalized relative to the highest stimulus-period firing rate. These normalized firing rates were then averaged together as a function of auditory-stimulus type and auditory-stimulus location to form the population tuning curve. Error bars represent SEM. Negative azimuthal locations are ipsilateral to the recording site, and positive values are contralateral.

A tuning curve from an individual vPFC neuron is shown in Figure 5. This neuron was modulated primarily by auditory-stimulus location but also somewhat by auditory-stimulus type. For all seven vocalization exemplars, the firing rate of the neuron increased as the exemplars were presented at more contralateral locations. The sensitivity to auditory-stimulus type can be seen when comparing the stimulus-period firing rates elicited by the copulation scream (Fig. 5, black data points), the girney (cyan data points), and the grunt (red data points); the stimulus-period firing rate of the neuron was highest when the copulation scream was presented and lowest when the girney or grunt was presented.

Figure 5.

Tuning curve of a vPFC neuron generated from data collected in the auditory-cortex configuration. Top, The panel illustrates the mean stimulus period firing rate of a vPFC neuron as a function of auditory-stimulus type and auditory-stimulus location. The error bars in the panel represent SEM. Negative azimuthal locations are ipsilateral to the recording site, and positive values are contralateral. a and b indicate those auditory stimulus locations where neural activity is shown in more detail in the bottom panel. Bottom, Rasters and peristimulus time histograms for the seven vocalization exemplars at the two locations indicated by a and b in the top panel. The rasters and histograms are aligned relative to auditory-stimulus onset; the solid black line indicates stimulus onset. The histograms were generated by binning spike times into 40 msec bins and then smoothing them with a [0.25 0.5 0.25] kernel. The colored lines underneath the raster and histogram plots illustrate the duration of each vocalization. The legend at the top indicates the relationship between line color and auditory-stimulus type for data in both panels.
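The histogram construction described in this legend (40 msec bins followed by a [0.25 0.5 0.25] smoothing kernel) can be sketched as follows. The handling of the first and last bins is not specified in the text; here the end values are replicated, which is an assumption, as are the function names and the use of milliseconds relative to stimulus onset.

```python
def psth(trials_ms, t_start_ms, t_stop_ms, bin_ms=40):
    """Trial-averaged firing rate (spikes/sec) in consecutive bins.

    trials_ms is a list of trials, each a list of spike times in msec
    relative to auditory-stimulus onset.
    """
    n_bins = int((t_stop_ms - t_start_ms) // bin_ms)
    counts = [0] * n_bins
    for spikes in trials_ms:
        for t in spikes:
            idx = int((t - t_start_ms) // bin_ms)
            if 0 <= idx < n_bins:
                counts[idx] += 1
    scale = len(trials_ms) * bin_ms / 1000.0  # trials x bin duration in sec
    return [c / scale for c in counts]


def smooth(values, kernel=(0.25, 0.5, 0.25)):
    """Three-point weighted average; end values are replicated at the edges."""
    padded = [values[0]] + list(values) + [values[-1]]
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(values))
    ]
```

A smoothed histogram for one stimulus condition would then be `smooth(psth(trials, -200, 800))`, where the window limits are illustrative.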

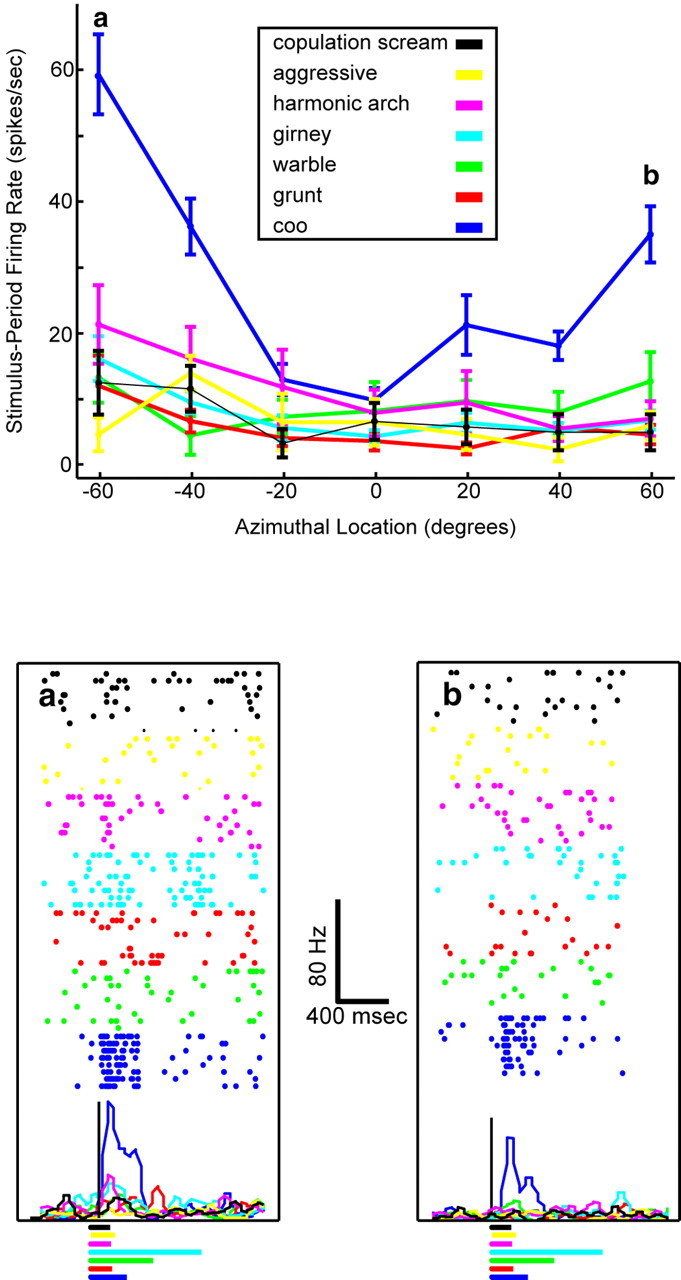

A vPFC neuron with a different response pattern is shown in Figure 6. This neuron was more selective for auditory-stimulus type than the neuron shown in Figure 5; the stimulus-period firing rate of this neuron was primarily modulated by the coo exemplar (blue data point). However, the firing rate of this neuron was also modulated by the location of the coo exemplar. Its firing rate was highest when the coo was presented at the more eccentric contralateral or ipsilateral locations.

Figure 6.

Tuning curve of a vPFC neuron generated from data collected in the auditory-cortex configuration. The data are presented in the same format as that in Figure 5.

Quantitative analyses of data collected in the auditory-cortex configuration

A two-factor (auditory-stimulus type × auditory-stimulus location) ANOVA tested, on a neuron-by-neuron basis, the stimulus-period firing rates of the 52 auditory vPFC neurons. We found that 67% (n = 35 of 52) of vPFC neurons were modulated significantly (p < 0.05) by auditory-stimulus type and that 63% (n = 33 of 52) of vPFC neurons were modulated by the auditory-stimulus location. Twenty-nine percent (n = 15 of 52) of vPFC neurons were modulated significantly (p < 0.05) by the interaction of auditory-stimulus type and location. All three of these proportions were greater than that expected by chance (binomial probability, p < 0.05).
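The comparison with chance can be illustrated with an upper-tail binomial probability. The sketch below assumes that each neuron has a 0.05 probability of reaching significance by chance (the criterion level of the ANOVA); the exact form of the test used in the study is not stated, so this is one plausible implementation with a hypothetical function name.

```python
import math


def binomial_tail(k, n, p=0.05):
    """Probability of observing k or more significant neurons out of n by chance."""
    return sum(
        math.comb(n, i) * p ** i * (1 - p) ** (n - i)
        for i in range(k, n + 1)
    )
```

For example, `binomial_tail(15, 52)` gives the probability that 15 or more of 52 neurons would show a significant interaction by chance alone, which can then be compared with the 0.05 criterion.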

We cannot infer from these ANOVA analyses the relative degree to which auditory-stimulus type or location modulated vPFC activity. To address this issue, we used response indices and information theory to quantify the spatial and nonspatial sensitivity of our population of auditory vPFC neurons.

The distributions of the vocalization-preference index and spatial half-width are shown in Figure 7. Consistent with the population-tuning curve (Fig. 4), these two distributions indicate that, on average, vPFC neurons were modulated by several vocalization types over a range of spatial locations. The mean vocalization-preference index (Fig. 7A) was 3.3 (SD = 2.0) with a median value of 3. The mean spatial half-width (Fig. 7B) was 32.6° (SD = 18.1) with a median value of 31.0°. A Spearman-rank correlation analysis indicated that there was a significant positive relationship between vocalization-preference index and spatial half-width (r = 0.41; p < 0.05).

Figure 7.

Response indices. The distributions of vocalization-preference index (A) and spatial half-width (B) for the population of 52 vPFC auditory neurons tested in the auditory-cortex configuration.

Using the nomenclature of Tian et al. (2001), we classified the spatial responses of vPFC neurons into four categories. Forty-five of the 52 auditory vPFC neurons (87%) were tuned (i.e., the mean stimulus-period firing rate of the neuron was <50% of the preferred firing rate at one location). Of these 45 neurons, 24% (n = 11) of the neurons were peaked (i.e., the mean stimulus-period firing rate of the neuron was <50% of the preferred firing rate at a location both contralateral and ipsilateral to the preferred azimuth). Forty-two percent (n = 19) of the neurons were contralateral tuned (i.e., the mean stimulus-period firing rate of the neuron was <50% of the preferred firing rate only at a location contralateral to the preferred azimuth). Finally, 33% (n = 15) of the vPFC neurons were ipsilateral tuned (i.e., the mean stimulus-period firing rate of the neuron was <50% of the preferred firing rate only at a location ipsilateral to the preferred azimuth). The pooling of these contralateral- and ipsilateral-tuned neurons may have contributed to the double-peaked nature of the spatial profile of the population-tuning curve (Fig. 4).
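These definitions can be expressed as a short classification rule. The sketch below follows the wording of the criteria literally and relies on two assumptions: positive azimuths are contralateral to the recording site, and a location is "contralateral to the preferred azimuth" when its azimuth is greater than the preferred azimuth. The label for neurons that never fall below the criterion ("untuned") and the function name are also assumptions.

```python
def classify_spatial_tuning(rates, azimuths):
    """Classify a spatial tuning curve measured with the preferred vocalization.

    rates[i] is the mean stimulus-period firing rate at azimuths[i] (degrees;
    positive values are assumed to be contralateral to the recording site).
    """
    pref_rate = max(rates)
    if pref_rate <= 0:
        raise ValueError("preferred firing rate must be positive")
    pref_az = azimuths[rates.index(pref_rate)]
    criterion = 0.5 * pref_rate

    # Does the rate drop below 50% of the preferred rate on either side?
    below_contra = any(r < criterion and az > pref_az for r, az in zip(rates, azimuths))
    below_ipsi = any(r < criterion and az < pref_az for r, az in zip(rates, azimuths))

    if below_contra and below_ipsi:
        return "peaked"
    if below_contra:
        return "contralateral tuned"
    if below_ipsi:
        return "ipsilateral tuned"
    return "untuned"
```

Under this rule, the "tuned" neurons are those assigned to any of the first three categories.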

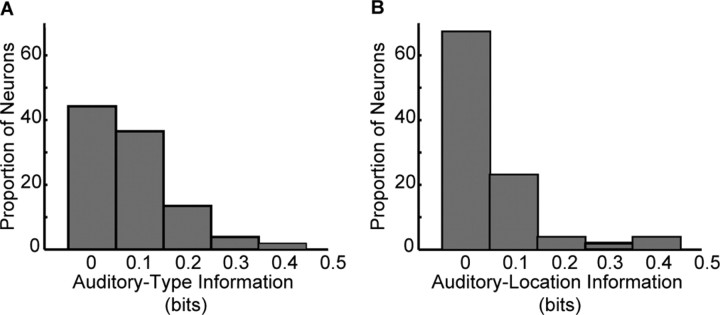

The distributions of auditory-type and auditory-location information for the population of auditory vPFC neurons are shown in Figure 8. The median amount of auditory-type information (Fig. 8A) was 0.08 bits, a value that was reliably different (Mann-Whitney; p < 0.05) from zero bits. The median amount of auditory-location information (Fig. 8B) was 0.02 bits, a value that was reliably different (Mann-Whitney; p < 0.05) from zero bits. Because both distributions were, on average, greater than zero, these data suggest that the firing rates of vPFC neurons carry information about differences between auditory-stimulus types and auditory-stimulus locations. We could not identify (p > 0.05) a relationship between the amount of auditory-type and auditory-location information.

Figure 8.

Information analyses. The distributions of auditory-type information (A) and auditory-location information (B) for the population of 52 vPFC auditory neurons tested in the auditory-cortex configuration.
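For readers unfamiliar with information measures expressed in bits, the following minimal Python sketch computes the mutual information between a stimulus label and a discretized response. It is an illustration only: the function name is hypothetical, the discretization of firing rates is left to the caller, and the sketch uses the uncorrected "plug-in" estimate, which is biased upward when few trials are available. It does not reproduce the estimation or bias-correction procedure used in the study.

```python
import math
from collections import Counter


def mutual_information_bits(stimuli, responses):
    """Plug-in estimate of I(S; R) in bits from paired trial labels.

    stimuli   : one stimulus label per trial (e.g., vocalization type or location).
    responses : one discretized response per trial (e.g., binned spike count).
    No limited-sampling bias correction is applied (ASSUMPTION of this sketch).
    """
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))
    stim_counts = Counter(stimuli)
    resp_counts = Counter(responses)

    info = 0.0
    for (s, r), count in joint.items():
        p_sr = count / n
        # p(s, r) / (p(s) * p(r)), written with raw counts.
        ratio = p_sr * n * n / (stim_counts[s] * resp_counts[r])
        info += p_sr * math.log2(ratio)
    return info


# Toy data: the response identifies the stimulus perfectly (1 bit) ...
perfect = mutual_information_bits(["a", "a", "b", "b"], [0, 0, 1, 1])
# ... or is unrelated to the stimulus (0 bits).
unrelated = mutual_information_bits(["a", "a", "b", "b"], [0, 1, 0, 1])
```

With two equally likely stimuli, the measure ranges from 0 bits (responses unrelated to the stimulus) to 1 bit (responses that identify the stimulus on every trial).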

Quantitative observations of data collected in the parietal configuration

How do the spatial and nonspatial response properties of vPFC neurons compare with those in cortical areas of the dorsal (spatial processing) pathway? To address this question, we recorded from another population of vPFC neurons using methodologies analogous to those used in our study of the spatial and nonspatial response properties of neurons in the lateral intraparietal area (Gifford and Cohen, 2004b).

A two-factor (auditory-stimulus type × auditory-stimulus location) ANOVA tested, on a neuron-by-neuron basis, the stimulus-period firing rates of 71 vPFC neurons. Of these 71 neurons, we found that 35% (n = 25 of 71) of vPFC neurons were modulated significantly (p < 0.05) by auditory-stimulus type, and 18% (n = 13 of 71) were modulated significantly (p < 0.05) by auditory-stimulus location. Thirteen percent (n = 9 of 71) of this population was modulated significantly (p < 0.05) by the interaction of auditory-stimulus type and location. All three of these proportions were greater than that expected by chance (binomial probability; p < 0.05).
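The comparison against chance can be sketched as a one-sided binomial tail probability. This is a minimal illustration under stated assumptions: the chance rate is taken to equal the ANOVA criterion (0.05), the test is taken to be one-sided, and the function name is hypothetical. The text reports only that a binomial probability was used.

```python
from math import comb


def binomial_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_neurons = 71
chance_rate = 0.05  # ASSUMED: false-positive rate of each per-neuron ANOVA test

# Counts of significantly modulated neurons reported in the text.
significant = {"type": 25, "location": 13, "interaction": 9}

tail_probabilities = {
    factor: binomial_tail(k, n_neurons, chance_rate)
    for factor, k in significant.items()
}
```

Under these assumptions, all three tail probabilities fall below 0.05, in agreement with the statement above. The interaction term (9 of 71 neurons) is the closest to the chance expectation of roughly 3.6 neurons.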

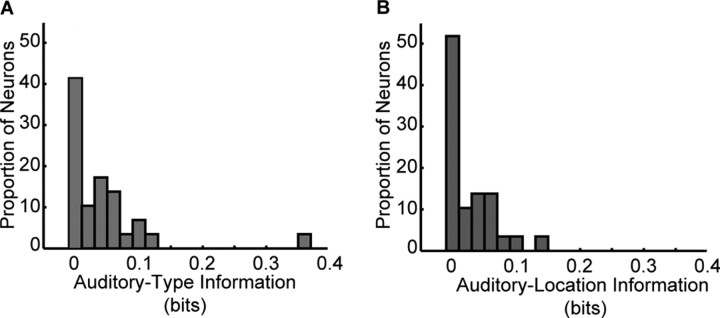

The distributions of auditory-type and auditory-location information for these 71 vPFC auditory neurons are shown in Figure 9. The median amount of auditory-type information (Fig. 9A) was 0.02 bits, and the median amount of auditory-location information (Fig. 9B) was 0.02 bits. Both of these distributions were reliably different (Mann-Whitney; p < 0.05) from zero bits, suggesting that the firing rates of vPFC neurons carry information about differences between auditory-stimulus types and auditory-stimulus locations. We could not identify (p > 0.05) a relationship between the amount of auditory-type and auditory-location information. It is important to note that because the spatial array in the parietal configuration did not cover a large range of spatial locations, we may be underestimating the spatial selectivity of neurons in the lateral intraparietal area.

Figure 9.

Information analyses. The distributions of auditory-type information (A) and auditory-location information (B) for the population of 71 vPFC neurons tested in the parietal configuration.

When the response properties of vPFC neurons and neurons in the lateral intraparietal area were compared (Table 1) using the same methodologies and analysis techniques, we found that the spatial and nonspatial attributes of auditory stimuli modulate neural activity in these two areas in a comparable manner. A χ² analysis indicated that the frequency of neurons that were sensitive (by the two-factor ANOVA analysis) to auditory-stimulus type, auditory-stimulus location, and the interaction of auditory-stimulus type and location was independent of brain region (χ² = 3.2; p > 0.05). A Kruskal-Wallis analysis indicated that the differences in the amount of auditory-type information and auditory-location information between the vPFC and the lateral intraparietal area were no greater than those expected by chance (p > 0.05).

Table 1.

Comparison of auditory-type and auditory-location sensitivity in the vPFC and the lateral intraparietal area

| | Proportion of neurons sensitive to auditory type | Proportion of neurons sensitive to auditory location | Proportion of neurons sensitive to auditory type and auditory location | Auditory-type information (bits) | Auditory-location information (bits) |
|---|---|---|---|---|---|
| vPFC (n = 71) | 35% | 18% | 13% | 0.02 | 0.02 |
| Area LIP (n = 70) | 21% | 13% | 1% | 0.01 | 0.02 |
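The χ² test of independence applied to the proportions in Table 1 can be sketched in Python as follows. This is an illustration, not the original analysis. The vPFC counts are those reported in the text. The counts for area LIP are an assumption, back-calculated from the rounded percentages in Table 1 (15, 9, and 1 of 70 neurons). It is also an assumption that the test was run on a 2 × 3 table of sensitive-neuron counts. The function name is hypothetical.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if sensitivity category were independent of brain area.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


# Columns: sensitive to type, to location, to the type x location interaction.
counts = [
    [25, 13, 9],  # vPFC (n = 71), counts reported in the text
    [15, 9, 1],   # area LIP (n = 70), ASSUMED from 21%, 13%, and 1%
]

stat, dof = chi_square_independence(counts)

# For exactly 2 degrees of freedom, the chi-square survival function is exp(-x / 2).
p_value = math.exp(-stat / 2)
```

Under these assumptions, the statistic is approximately 3.2 with 2 degrees of freedom, below the 0.05 critical value of 5.99, which is consistent with the reported result.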

Discussion

vPFC neurons were modulated by the spatial and nonspatial attributes of an auditory stimulus, regardless of the experimental procedures. When we used a methodology comparable with one used in a parietal study (Gifford and Cohen, 2004b), we found that vPFC sensitivity to spatial and nonspatial attributes was comparable with that seen in the lateral intraparietal area. These data suggest that substantial spatial and nonspatial processing occurs in both the dorsal and ventral pathways. Below, we compare these results with those in the rhesus auditory cortex, compare our data with human studies, and conclude with a discussion of functional streams of auditory processing.

Comparison with auditory cortex

A compelling study by Tian et al. (2001) indicated that functional specialization for spatial and nonspatial processing begins in the belt region of the rhesus auditory cortex. This study demonstrated that neurons in the anterolateral belt region were significantly more selective for different types of vocalizations than those in the caudolateral belt region. The mean vocalization-preference index [or “monkey call index” as termed by Tian et al. (2001)] for anterolateral neurons was approximately two calls, whereas for caudomedial neurons, it was approximately two to three calls. In contrast, neurons in the caudolateral belt region were significantly more selective for the location of a vocalization than those in the anterolateral belt region. The mean spatial half-width for caudomedial neurons was ∼40°, whereas it was ∼80° for anterolateral neurons. These neurophysiological data supported anatomical studies (Romanski et al., 1999; Rauschecker and Tian, 2000), suggesting that the caudomedial belt region was part of a spatial dorsal-processing pathway and the anterolateral belt region was part of a nonspatial ventral-processing pathway.

How do our data compare with those reported by Tian et al. (2001) (Fig. 7)? The mean vocalization-preference index (3.3) of vPFC neurons was larger (i.e., less selective) than that reported in the anterolateral belt. The mean spatial half-width (32.6°) of vPFC neurons was smaller than that reported in the anterolateral belt. In other words, the nonspatial sensitivity of vPFC neurons was poorer, whereas the spatial selectivity was better, than that of anterolateral neurons. The spatial selectivity of vPFC neurons was, however, comparable with that seen in caudomedial neurons.

Before interpreting the relationship between our data and those of Tian et al. (2001), it is important to note a key methodological difference. In our study, the monkeys were awake and behaving (i.e., fixating a central LED to get a reward). However, in the study by Tian et al. (2001), the monkeys were anesthetized (isoflurane). This difference in the behavioral-cognitive state (Miller et al., 1972; Pfingst et al., 1977; Epping and Eggermont, 1985; Recanzone et al., 2000; Cheung et al., 2001; Gaese and Ostwald, 2001) of the monkeys may underlie the differences in the mean values of the two response indices. Although it seems likely that behavioral-cognitive state could affect the spatial and nonspatial properties of a neuron, it is not obvious whether this effect would cause the neurons to be more or less selective for the spatial and nonspatial attributes of an auditory stimulus.

With this caveat in mind, a comparison between the response indices from the two brain areas suggests two points. First, relative to these indices, neural sensitivity to auditory-stimulus type and location does not seem to increase between the belt region of the auditory cortex and the vPFC. Second, because the nonspatial selectivity of vPFC neurons is poorer than that seen in the anterolateral belt, whereas the spatial selectivity is better, it is unclear how the anterolateral belt, the vPFC, and other areas in the ventral pathway form a processing stream specialized to process the nonspatial attributes of an auditory stimulus. Furthermore, when we consider the fact that, relative to the small stimulus set and small spatial separation of the stimulus locations, the spatial and nonspatial response properties of vPFC and parietal (dorsal pathway) neurons are comparable (Table 1), the issue of functional specialization in the dorsal and ventral pathways becomes further complicated.

Comparison with human studies

In humans, there are several lines of anatomical (Galaburda and Sanides, 1980; Rivier and Clarke, 1997; Tardif and Clarke, 2001), neurophysiological (Alain et al., 2001; Anourova et al., 2001; Maeder et al., 2001; Warren et al., 2002; Warren and Griffiths, 2003; Hart et al., 2004; Rämä et al., 2004), and neuropsychological (Clarke et al., 2000) evidence to suggest that distinct pathways process different attributes of an auditory stimulus (Warren and Griffiths, 2003). An anterior pathway is thought to process the nonspatial attributes of auditory stimuli (Zatorre et al., 1992; Griffiths et al., 1998; Scott et al., 2000; Maeder et al., 2001; Vouloumanos et al., 2001; Patterson et al., 2002; Hart et al., 2004). A posterior pathway that includes regions of inferior parietal lobule is thought to process the spatial attributes of an auditory stimulus (Baumgart et al., 1999; Alain et al., 2001; Maeder et al., 2001; Warren et al., 2002; Hart et al., 2004). The areas of the auditory cortex and the frontal and parietal areas that constitute these two pathways are thought to be analogous to those areas identified as important for spatial and nonspatial processing in the rhesus monkey (Romanski et al., 1999; Rauschecker and Tian, 2000).

One interesting theme that has arisen from the human neurophysiological studies is the relationship between task demand and the engagement of frontal and parietal areas in spatial and nonspatial processing. When subjects are engaged in tasks that require them to attend to the spatial or nonspatial attributes of an auditory stimulus, the frontal and parietal areas that are part of the anterior (nonspatial) and posterior (spatial) pathways are differentially activated (Alain et al., 2001; Maeder et al., 2001; Hart et al., 2004; Rämä et al., 2004). In contrast, when subjects listen passively to a stimulus, these frontal and parietal areas are not engaged (Warren and Griffiths, 2003; Hart et al., 2004).

These sets of studies may be important when considering our data. Although our monkeys were awake and behaving, they did not have to attend to the spatial or nonspatial attributes of an auditory stimulus to be rewarded. Consequently, it is possible that if the monkeys were engaged in a task in which the attributes of an auditory stimulus were relevant for successful completion of the task, we may have identified more functional specialization between the vPFC and lateral intraparietal area (Gifford and Cohen, 2004b). Indeed, when monkeys are required to attend to the spatial or nonspatial attributes of a visual stimulus, distinct regions of the prefrontal cortex are modulated by one of these attributes (Wilson et al., 1993).

However, the fact that our monkeys did not overtly attend to an auditory stimulus cannot wholly explain the difference between our data and the human-functional studies. For instance, visual neurons in the lateral intraparietal area are actually more selective for differences in the shape of a visual stimulus when monkeys are engaged in a task that does not require them to attend to stimulus shape than when they are required to attend to stimulus shape (Sereno and Maunsell, 1998). Also, in humans, moving auditory stimuli preferentially activate regions of the inferior parietal lobule even when participants do not overtly attend to the stimulus, although this activity may relate to covert motor planning (Warren et al., 2002).

We cannot reconcile the issues raised in this section and the previous section with data from the current study. However, it is clear that more experiments are required in which the spatial and nonspatial sensitivity of neurons in both the dorsal and ventral pathways are recorded while monkeys are engaged in identical tasks that vary in their task demands and use the same sets of stimuli. Also, when exploring the neural correlates of auditory cognition in rhesus monkeys, especially in areas like the prefrontal cortex, it may be necessary to devise experimental paradigms that take advantage of a monkey's natural behavior (Gifford et al., 2003; Glimcher, 2003).

Functional streams of auditory processing

Although the spatial and nonspatial attributes of auditory stimuli have been hypothesized to be processed in parallel pathways (Ungerleider and Mishkin, 1982; Rauschecker and Tian, 2000), the utility of this important conceptual model has come under close scrutiny. At one level, there is considerable debate as to whether the conceptual organization of auditory processing into “what” and “where” processing is even appropriate (Cohen and Wessinger, 1999; Middlebrooks, 2002; Recanzone, 2002; Zatorre et al., 2002; Griffiths et al., 2004). For instance, a recent study suggested that functional auditory streams do not code the what-where attributes of an auditory stimulus but instead code sound identification (what) and changes in frequency over time (how) (Belin and Zatorre, 2000). Similarly, it has been proposed that auditory processing may constitute more than two functional streams (Kaas and Hackett, 1999).

At a second level, our results and data from other studies (Sereno and Maunsell, 1998; Ferrera et al., 1992, 1994; Toth and Assad, 2002) suggest that even if the parcellation of auditory processing into what and where is appropriate, these processing pathways may not be strictly parallel. Instead, they appear to be interconnected (Ferrera et al., 1992; Troscianko et al., 1996; Goldberg and Gottlieb, 1997; Sereno and Maunsell, 1998; Middlebrooks, 2002; Recanzone, 2002; Toth and Assad, 2002). This cross-talk between the nonspatial and spatial pathways may be mediated by connections between the two pathways (Webster et al., 1994; Sereno and Maunsell, 1998; Kaas and Hackett, 1999, 2000).

What purpose does spatial information serve in the nonspatial pathway? Similarly, what purpose does nonspatial information serve in the spatial pathway? Although the answers to these questions are not known, we hypothesize that the mixture of spatial and nonspatial information may benefit those computations that create consistent perceptual representations that guide goal-directed behavior (Ferrera et al., 1994; Graziano et al., 1997; Rao et al., 1997; Kusunoki et al., 2000; Toth and Assad, 2002).

Footnotes

Y.E.C. was supported by grants from the Whitehall Foundation and National Institutes of Health, by the Class of 1962 Faculty Fellowship, and by a Burke Award. We are grateful for A. Underhill's help with animal care. Also, we greatly appreciate the helpful suggestions from S. Grafton, H. Hersh, M. Kilgard, and J. Groh and the generosity of M. Hauser in providing the recordings of the rhesus vocalizations.

Correspondence should be addressed to Dr. Yale E. Cohen, Department of Psychological and Brain Sciences, Dartmouth College, Hanover, NH 03755. E-mail: yec@dartmouth.edu.

Copyright © 2004 Society for Neuroscience 0270-6474/04/2411307-10$15.00/0

References

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL (2001) “What” and “where” in the human auditory system. Proc Natl Acad Sci USA 98: 12301-12306.

- Anourova I, Nikouline VV, Ilmoniemi RJ, Hotta J, Aronen HJ, Carlson S (2001) Evidence for dissociation of spatial and nonspatial auditory information processing. NeuroImage 14: 1268-1277.

- Baumgart F, Gaschler-Markefski B, Woldorff MG, Scheich H (1999) A movement-sensitive area in auditory cortex. Nature 400: 724-725.

- Belin P, Zatorre RJ (2000) “What,” “where” and “how” in auditory cortex. Nat Neurosci 3: 965-966.

- Cheung SW, Nagarajan SS, Bedenbaugh PH, Schreiner CE, Wang X, Wong A (2001) Auditory cortical neuron response differences under isoflurane versus pentobarbital anesthesia. Hear Res 156: 115-127.

- Clarke S, Bellmann A, Meuli RA, Assal G, Steck AJ (2000) Auditory agnosia and auditory spatial deficits following left hemispheric lesions: evidence for distinct processing pathways. Neuropsychologia 38: 797-807.

- Cohen YE, Wessinger CM (1999) Who goes there? Neuron 24: 769-771.

- Cohen YE, Batista AP, Andersen RA (2002) Comparison of neural activity preceding reaches to auditory and visual stimuli in the parietal reach region. NeuroReport 13: 891-894.

- Cohen YE, Cohen IS, Gifford III GW (2004) Modulation of LIP activity by predictive auditory and visual cues. Cereb Cortex 14: 1287-1301.

- Cover TM, Thomas JA (1991) Elements of information theory. New York: Wiley.

- Epping WJ, Eggermont JJ (1985) Single-unit characteristics in the auditory midbrain of the immobilized grassfrog. Hear Res 18: 223-243.

- Ferrera VP, Nealey TA, Maunsell JH (1992) Mixed parvocellular and magnocellular geniculate signals in visual area V4. Nature 358: 756-761.

- Ferrera VP, Rudolph KK, Maunsell JH (1994) Responses of neurons in the parietal and temporal visual pathways during a motion task. J Neurosci 14: 6171-6186.

- Gaese BH, Ostwald J (2001) Anesthesia changes frequency tuning of neurons in the rat primary auditory cortex. J Neurophysiol 86: 1062-1066.

- Galaburda A, Sanides F (1980) Cytoarchitectonic organization of the human auditory cortex. J Comp Neurol 190: 597-610.

- Gifford III GW, Cohen YE (2004a) The effect of a central fixation light on auditory spatial responses in area LIP. J Neurophysiol 91: 2929-2933.

- Gifford III GW, Cohen YE (2004b) Spatial and non-spatial auditory processing in the lateral intraparietal area. Exp Brain Res, in press.

- Gifford III GW, Hauser MD, Cohen YE (2003) Discrimination of functionally referential calls by laboratory-housed rhesus macaques: implications for neuroethological studies. Brain Behav Evol 61: 213-224.

- Glimcher PW (2003) Decisions, uncertainty, and the brain: the science of neuroeconomics. Boston: MIT.

- Goldberg ME, Gottlieb J (1997) Neurons in monkey LIP transmit information about stimulus pattern in the temporal waveform of their discharge. Soc Neurosci Abstr 23: 17.

- Graziano MS, Hu XT, Gross CG (1997) Coding the locations of objects in the dark. Science 277: 239-241.

- Griffiths TD, Buchel C, Frackowiak RS, Patterson RD (1998) Analysis of temporal structure in sound by the human brain. Nat Neurosci 1: 422-427.

- Griffiths TD, Warren JD, Scott SK, Nelken I, King AJ (2004) Cortical processing of complex sound: a way forward? Trends Neurosci 27: 181-185.

- Groh JM, Trause AS, Underhill AM, Clark KR, Inati S (2001) Eye position influences auditory responses in primate inferior colliculus. Neuron 29: 509-518.

- Grunewald A, Linden JF, Andersen RA (1999) Responses to auditory stimuli in macaque lateral intraparietal area. I. Effects of training. J Neurophysiol 82: 330-342.

- Hart HC, Palmer AR, Hall DA (2004) Different areas of human non-primary auditory cortex are activated by sounds with spatial and nonspatial properties. Hum Brain Mapp 21: 178-204.

- Hauser MD (1998) Functional referents and acoustic similarity: field playback experiments with rhesus monkeys. Anim Behav 55: 1647-1658.

- Judge SJ, Richmond BJ, Chu FC (1980) Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res 20: 535-538.

- Kaas JH, Hackett TA (1999) “What” and “where” processing in auditory cortex. Nat Neurosci 2: 1045-1047.

- Kaas JH, Hackett TA (2000) Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci USA 97: 11793-11799.

- Kusunoki M, Gottlieb J, Goldberg ME (2000) The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vision Res 40: 1459-1468.

- Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran JP, Pittet A, Clarke S (2001) Distinct pathways involved in sound recognition and localization: a human fMRI study. NeuroImage 14: 802-816.

- Middlebrooks JC (2002) Auditory space processing: here, there or everywhere? Nat Neurosci 5: 824-826.

- Miller JM, Sutton D, Pfingst B, Ryan A, Beaton R, Gourevitch G (1972) Single cell activity in the auditory cortex of Rhesus monkeys: behavioral dependency. Science 177: 449-451.

- Newman JD, Lindsley DF (1976) Single unit analysis of auditory processing in squirrel monkey frontal cortex. Exp Brain Res 25: 169-181.

- Panzeri S, Treves A (1996) Analytical estimates of limited sampling biases in different information measures. Network 7: 87-107.

- Patterson RD, Johnsrude IS, Uppenkamp S, Griffiths TD (2002) The processing of temporal pitch and melody information in auditory cortex. Neuron 36: 767-776.

- Pfingst BE, O'Connor TA, Miller JM (1977) Response plasticity of neurons in auditory cortex of the rhesus monkey. Exp Brain Res 29: 393-404.

- Rämä P, Poremba A, Sala JB, Yee L, Malloy M, Mishkin M, Courtney SM (2004) Dissociable functional topographics for working memory maintenance of voice identity and location. Cereb Cortex 14: 768-780.

- Rao SC, Rainer G, Miller EK (1997) Integration of what and where in the primate prefrontal cortex. Science 276: 821-824.

- Rauschecker JP (1998) Parallel processing in the auditory cortex of primates. Audiol Neurootol 3: 86-103.

- Rauschecker JP, Tian B (2000) Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA 97: 11800-11806.

- Recanzone GH (2002) Where was that? Human auditory spatial processing. Trends Cogn Sci 6: 319-320.

- Recanzone GH, Guard DC, Phan ML (2000) Frequency and intensity response properties of single neurons in the auditory cortex of the behaving macaque monkey. J Neurophysiol 83: 2315-2331.

- Rivier F, Clarke S (1997) Cytochrome oxidase, acetylcholinesterase and NADPH-diaphorase staining in human supratemporal and insular cortex: evidence for multiple auditory areas. NeuroImage 6: 288-304.

- Romanski LM, Goldman-Rakic PS (2002) An auditory domain in primate prefrontal cortex. Nat Neurosci 5: 15-16.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP (1999) Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci 2: 1131-1136.

- Russo GS, Bruce CJ (1994) Frontal eye field activity preceding aurally guided saccades. J Neurophysiol 71: 1250-1253.

- Scott SK, Blank CC, Rosen S, Wise RJS (2000) Identification of a pathway for intelligible speech in the left temporal lobe. Brain 123: 2400-2406.

- Sereno AB, Maunsell JH (1998) Shape selectivity in primate lateral intraparietal cortex. Nature 395: 500-503.

- Shannon CE (1948a) A mathematical theory of communication. The Bell Syst Tech J 27: 379-423.

- Shannon CE (1948b) A mathematical theory of communication. The Bell Syst Tech J 27: 623-656.

- Tardif E, Clarke S (2001) Intrinsic connectivity of human auditory areas: a tracing study with DiI. Eur J Neurosci 13: 1045-1050.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP (2001) Functional specialization in rhesus monkey auditory cortex. Science 292: 290-293.

- Toth LJ, Assad JA (2002) Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature 415: 165-168.

- Troscianko T, Davidoff J, Humphreys G, Landis T, Fahle M, Greenlee M, Brugger P, Phillips W (1996) Human colour discrimination based on a non-parvocellular pathway. Curr Biol 1: 200-210.

- Ungerleider LG, Mishkin M (1982) Two cortical visual systems. In: Analysis of visual behavior (Ingle DJ, Goodale MA, Mansfield RJW, eds). Cambridge, MA: MIT.

- Vouloumanos A, Kiehl KA, Werker JF, Liddle PF (2001) Detection of sounds in the auditory stream: event-related fMRI evidence for differential activation to speech and nonspeech. J Cogn Neurosci 13: 994-1005.

- Warren JD, Griffiths TD (2003) Distinct mechanisms for processing spatial sequences and pitch sequences in the human auditory brain. J Neurosci 23: 5799-5804.

- Warren JD, Zielinski BA, Green GG, Rauschecker JP, Griffiths TD (2002) Perception of sound-source motion by the human brain. Neuron 34: 139-148.

- Webster MJ, Bachevalier J, Ungerleider LG (1994) Connections of inferior temporal areas TEO and TE with parietal and frontal cortex in macaque monkeys. Cereb Cortex 4: 470-483.

- Wilson FA, Scalaidhe SP, Goldman-Rakic PS (1993) Dissociation of object and spatial processing domains in primate prefrontal cortex. Science 260: 1955-1958.

- Zatorre RJ, Evans AC, Meyer E, Gjedde A (1992) Lateralization of phonetic and pitch discrimination in speech processing. Science 256: 846-849.

- Zatorre RJ, Bouffard M, Ahad P, Belin P (2002) Where is “where” in the human auditory cortex? Nat Neurosci 5: 905-909.