Abstract

We present a novel approach based on deep learning for decoding sensory information from non-invasively recorded Electroencephalograms (EEG). It can either be used in a passive Brain-Computer Interface (BCI) to predict properties of a visual stimulus the person is viewing, or it can be used to actively control a BCI application. Both scenarios were tested, whereby an average information transfer rate (ITR) of 701 bit/min was achieved for the passive BCI approach with the best subject achieving an online ITR of 1237 bit/min. Further, it allowed the discrimination of 500,000 different visual stimuli based on only 2 seconds of EEG data with an accuracy of up to 100%. When using the method for an asynchronous self-paced BCI for spelling, an average utility rate of 175 bit/min was achieved, which corresponds to an average of 35 error-free letters per minute. As the presented method extracts more than three times more information than the previously fastest approach, we suggest that EEG signals carry more information than generally assumed. Finally, we observed a ceiling effect such that information content in the EEG exceeds that required for BCI control, and therefore we discuss if BCI research has reached a point where the performance of non-invasive visual BCI control cannot be substantially improved anymore.

Introduction

A brain-computer interface (BCI) is a device that translates brain signals into output signals of a computer system. The BCI output is mainly used to restore several functionalities of motor disabled people, e.g., for prosthesis control or communication [1]. Besides the use of BCIs that gives the user the ability to actively control a device, passive BCIs have been accepted as a different kind of BCIs that do not have the purpose of voluntary control [2].

In the area of BCIs for communication purposes, BCIs based on visual evoked potentials (VEPs) have emerged as the fastest and most robust approach for BCI communication. Although the idea to use VEPs for BCI control dates back to Vidal [3], Sutter [4] suggested the use of VEPs for a BCI-controlled keyboard in 1984 and showed in 1992 that an ALS patient with invasive electrodes can use such a system at home to write 10 to 12 words per minute [5].

Since then, different approaches were demonstrated that use VEPs for BCI control. The majority of VEP-based BCI systems is based on frequency-modulated SSVEPs. The highest information transfer rate (ITR) for an SSVEP-based system was reported by Chen et al. [6], with 267 bit/min on average and up to 319 bit/min for the best subject. The authors improved the method to an average ITR of 325 bit/min [7], which happens to be the highest ITR being reported for any BCI system, so far.

Instead of using a frequency-modulated visual stimulus for SSVEPs, visual stimuli can also be modulated with a certain pattern to evoke so-called code-modulated visual evoked potentials (c-VEPs) that can be used for BCI control. Although Sutter was the first to use this approach [5], it was ignored by BCI research for a long time and reemerged in 2011 when Bin et al. [8] presented a c-VEP based BCI-system reaching an average ITR of 108 bit/min up to 123 bit/min for the best subject, which was the highest reported ITR at that time. In 2012, Spüler et al. [9] improved the methods for detection of c-VEPs and reached an average ITR of 144 bit/min, up to 156 bit/min for the best subject, which was the highest reported ITR at that time.

A completely different approach to utilize VEPs for BCI control was presented by Thielen et al. [10] in 2015, who created a model to predict the response to arbitrary compositions of short and long visual stimulation pulses and showed that it can be used for BCI control reaching an average ITR of 48 bit/min.

While the model by Thielen et al. was only able to predict responses consisting of short and long pulses, we presented the Code2EEG method [11] to create a model that allows predicting the EEG response to arbitrary stimulation patterns (codes). The Code2EEG method was based on the idea that the VEP response to a complex stimulus is generated by a linear superposition of single-flash VEP responses [12, 13]. In the same work, we also presented the EEG2Code method, which is the backward model and predicts the stimulation pattern of arbitrary VEP responses. Using the EEG2Code model for BCI communication resulted in an average ITR of 108 bit/min. In a following publication, we further improved the method and presented an asynchronous self-paced BCI with robust non-control state detection to reach an average ITR of 122 bit/min, up to 205 bit/min for the best subject [14].

In a previous work [11] it was shown that a linear method could be used to model the VEP response, but it was also found that the VEP response has non-linear properties that cannot be described by a superposition and cannot be reconstructed with a linear model.

As deep learning has become popular in the last years as a powerful machine learning method to create non-linear prediction models, that outperform other methods in tasks like image classification or speech recognition [15], deep learning methods seem like a natural fit to apply them for neural data. One class of neural networks used in deep learning are convolutional neural networks (CNNs), which were already used in the field of BCIs. The first work which explored CNNs for a BCI is by Cecotti et al. [16], who applied CNNs to P300 data and found CNNs to outperform other methods. In 2017, Kwak et al. [17] proposed a 3-layer CNN that uses frequency features as input for robust SSVEP detection. They compared their CNN approach to other state-of-the-art methods for SSVEP decoding and found CNNs to outperform all of them. Especially for noisy EEG data obtained by a moving participant, they achieved an accuracy of 94.03% compared to 84.65% achieved by the best-compared method. Thomas et al. [18] performed a similar comparison, with the result that the CNN outperformed all other state-of-the-art methods as well.

As we found that linear methods can not appropriately model the VEP response, this paper presents an approach to combine the EEG2Code method with deep learning to create a non-linear model that predicts arbitrary stimulation patterns based on the VEP response, and we show how this method can be used in a BCI. Furthermore, we propose to make a clearer distinction between signal decoding performance and BCI control performance and to ensure that the BCI system is viable in an end-user scenario when evaluating BCI control performance.

Materials and methods

EEG2Code model

In a previous work [11], we proposed a new stimulation paradigm, based on fully random visual stimulation patterns for the use in BCI. In the same work, we proposed the EEG2Code model, which allows predicting the stimulation pattern based on the EEG. Furthermore, we have shown how the model can be used for synchronous BCI control. In a subsequent work [14] we have shown that the EEG2Code model can also be used for high-speed self-paced BCI control. In those works, the EEG2Code model was based on linear ridge regression. For the sake of completeness, the central parts of the EEG2Code method are explained briefly in the following paragraphs, but a more detailed description can be found in our previous works [11, 14].

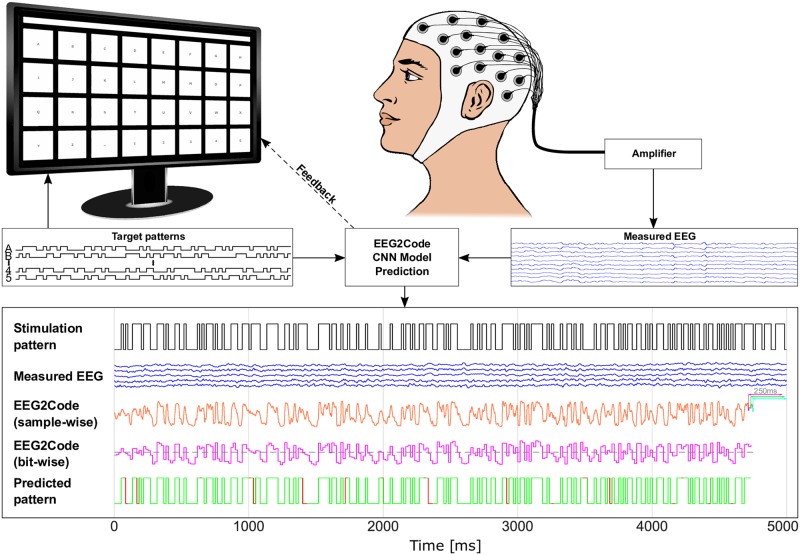

The general setup of the BCI is shown in Fig 1. The EEG2Code method is based on a bit-representation (code) of the visual stimulation pattern, where the properties of the visual stimulus are encoded as one bit (0: black, 1: white). The idea of the EEG2Code method is to predict each bit of the stimulation pattern based on the following 250 ms of EEG data. A 250 ms window is used as the most prominent components of a flash VEP last for around 250 ms [19].

Fig 1. Example of the EEG2Code CNN pattern prediction.

The 32-target matrix-keyboard layout is as shown on the monitor. Each target is modulated with its own random stimulation pattern (black lines) and flicker between white (binary 1) and black (binary 0). The measured EEG (VEP response) is amplified, and afterward, the EEG2Code model predicts the stimulation pattern. Shown is one run where the EEG2Code model with a convolutional neural network (CNN) was used in the passive BCI setting to predict the visual stimulation pattern. The participant had to focus on a target which was modulated with the shown random stimulation pattern (black line). For each 250 ms window (slid sample-wise) of the measured EEG signals (blue lines), the EEG2Code CNN model predicts a probability value (orange line), which indicates whether the stimulus is black (0) or white (1). This procedure is shown for three exemplary windows (magenta, green, cyan). Note that the model prediction is delayed by 250 ms due to the sliding window approach. The resulting model prediction is now down-sampled to the corresponding number of bits (magenta line). The EEG2Code prediction is transformed to the predicted stimulation pattern using a threshold of 0.5 (gray dotted line), which in turn can be compared to the real stimulation pattern (green = match, red = mismatch). For this plot, EEG data and results from the best online run were used, where an accuracy of 92.6% was achieved, corresponding to an ITR of 2122 bit/min. An animated version of the lower part of this figure can be found in S1 Video. The upper part of the figure is modified from our previous work [11].

In our previous works, we trained a linear ridge regression method for that purpose, but any regression method can be used. For training the model, the subject has to watch a visual stimulation that is randomly flickering between black and white. After training the EEG2Code model using the bit-sequence of the stimulation pattern and the concurrently recorded EEG signal, the resulting model can be used to predict the property of the visual stimulus in real-time. This procedure is also depicted in Fig 1.

In our work, we recorded EEG with a sampling rate of 600 Hz and shifted the 250 ms prediction window sample-wise to obtain 600 predictions per second. The sampling rate was chosen such that the visual stimulus presentation rate (60 Hz, refresh rate of the monitor) is a full divisor, which means that 10 prediction samples were obtained for each bit in the stimulation sequence. Those 10 real-valued predictions are averaged to obtain one prediction value for each bit. For the final prediction of the pattern, a threshold of 0.5 is used to either predict the stimulus property as black (0) or white (1).

The EEG2Code method can either be used in a passive BCI approach to predict the property of the visual stimulus a subject is currently focusing (as described above), or to actively control a BCI application, e.g., a spelling application.

In the BCI control scenario, multiple stimuli (targets) are presented to the subject, where each stimulus corresponds to a different action (or letter). During a trial, the subject has to focus on one of those targets. By comparing the prediction of the EEG2Code model with the stimulation patterns of all targets, we can identify the target that is attended by the subject. For synchronous BCI control, we calculated the correlation between the predicted stimulation pattern and the patterns of all targets, and the target with the highest correlation is selected. For asynchronous self-paced BCI control, the EEG2Code prediction is compared to all stimulation patterns continuously. Instead of using the correlation, we calculate the p-values under the hypothesis that the correlation is greater than zero, as this takes the length of a trial into account. If a certain threshold is exceeded, the corresponding target is selected. A more detailed description of the asynchronous classification method can be found in our previous work [14].

Combining EEG2Code with deep learning

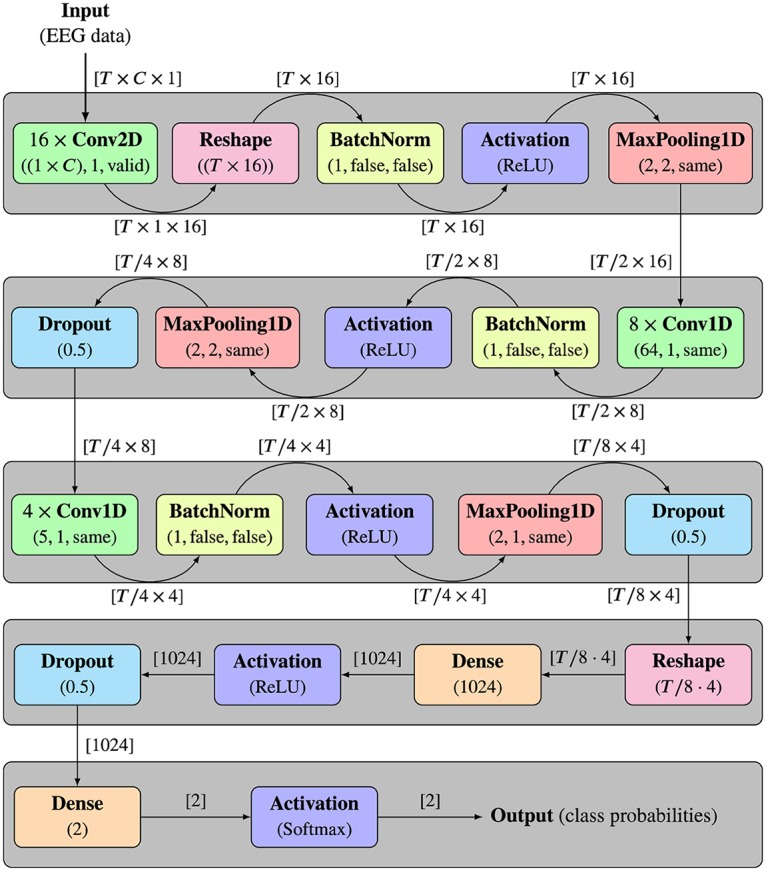

Instead of the linear ridge regression that was used in our previous works, a convolutional neural network (CNN) was used for the EEG2Code method. The architecture of the CNN model is based on the work of Gunsteren [20], where it was used for classification of P300 data. Contrary to the EEG2Code ridge regression model, the data is not spatially filtered beforehand. This means the model takes windows of size T × C, whereby T = 150 corresponds to the number of samples (250 ms), and C = 32 corresponds to the number of EEG channels. The model consists of five layers, whereby the first layer uses convolutional kernels of size 1 × 32, which means the kernels act as spatial filters. As 16 different convolutional kernels are used, this corresponds to 16 spatial filters in total. The second layer consists of 8 convolutional kernels with a size of 64 × 1, which act as different temporal filters. The remaining layers are used for predicting the stimulation property based on the spatially, and temporally filtered data.

A detailed structure of the EEG2Code CNN is depicted in Fig 2. It shows each performed operation, including the used parameters as well as the input and output dimensions of each operation.

Fig 2. Architecture of the EEG2Code convolutional neural network.

The five layers are indicated by the gray boxes. Each layer consists of different operations, whereby the same operations are colored the same. Below each operation, the used parameters are given, whereby the parameters for the Conv and MaxPooling are (kernel/pooling size, stride, padding), and for BatchNorm (axis, scale, center). The edges are labeled with the shape of the input and output data, respectively. The input of the model is a 250 ms (T = 150 samples) window of EEG data with C = 32 channels. The outputs are two values, which are the probabilities that the input belongs to each class (binary 1 or 0). As the window is shifted sample-wise over the complete trial data, the output is the prediction of the corresponding stimulation pattern.

MaxPooling operations are performed to reduce the size of intermediate representation between layers. The BatchNorm operation results in a faster and more stable training. The Dropout operation is used for regularization to avoid overfitting of the model. The Dense operation fully connects the input neurons with the given number of output neurons. The Activation operations define/transform the output of each neuron depending on a given activation function, whereby the softmax function takes an un-normalized vector, and normalizes it into a probability distribution, and therefore gives the probabilities that the initial input belongs to one of the classes. Since the model is trained on two classes (0 or 1), the outputs are two probabilities p0 and p1, one for each class. It must be noted that p1 = 1 − p0. The model is trained using a learning rate of 0.001 and a batch size of 256. In total, 25 epochs were trained, and the best model was selected as the final model. In this case, the best model refers to the model with the highest validation accuracy, where the validation dataset is independent of the testing dataset.

Offline analysis

To evaluate the combination of EEG2Code and deep learning, we evaluated the method offline on different data that was obtained in two previous studies, where the EEG2Code method was used for synchronous BCI control [11], and an improved version of EEG2Code was demonstrated with asynchronous self-paced BCI control [14]. For the offline evaluation, we simulated an online experiment, whereby the data for training and testing the model was the same as in the online experiment.

The data was used for two different scenarios: the active BCI scenario where a target/letter is selected, and the passive BCI scenario, where each bit of the stimulation sequence is predicted. In the latter scenario, the terminology is different, and one trial in the passive BCI scenario refers to predicting one bit (16.6 ms stimulus presentation) based on 250 ms of EEG data.

For the synchronous mode, we used a presentation layout with 32 targets arranged as a matrix-keyboard. In total, 384 s of training data were recorded, which means 384 s ⋅ 60 bit/s = 23040 random bits were presented. The testing phase was split into 14 runs with a trial duration of 2 s each. Those runs were alternated using fully random stimulation patterns and optimized stimulation patterns, see [11] for details. For the bit prediction accuracy, the 7 runs with fully random stimulation were used, whereby for the synchronous BCI control, the 7 runs with optimized stimulation were used. The participants had to perform each run in lexicographic order, with 32 trials per run, so that a total of 448 letters were selected. As we found in our previous study [11] that a trial duration of 1 s is optimal for the synchronous scenario, the simulated online experiment in this study was performed with a trial duration of 1 s and an inter-trial time of 0.5 s.

We further tested how well the EEG2Code method can discriminate 500,000 different stimuli. As each target is modulated with a random stimulation pattern, there is no need to record data from 500,000 targets. Instead, we compared the predicted stimulation pattern, to a set of 500,000 patterns, where one pattern is identical to the one used for stimulation, while the other 499,999 are different random stimulation patterns. Such an analysis was already performed for the EEG2Code ridge regression model in our previous publication [11], where we tested different numbers of stimuli. Although one could test for larger numbers, we stopped at 500,000 due to the increasing computational demands and therefore only used this number for the current work.

For the asynchronous self-paced BCI control, the data from [14] was used. The training data also consists of 384 s of recorded EEG data, whereby the optimized stimulation patterns were used. The testing phase consists of 6 runs with 32 trials each and with optimized stimulation patterns. Note that the trial duration varies due to the asynchronous approach, whereby the inter-trial time was set to 500 ms. The trials were performed in lexicographic order. For comparison reasons, the same p-value thresholds were used as determined during the online experiment, see [14] for details.

The experimental setup for recording these data was very similar to the setup described later in this paper but is also described in more detail in the corresponding publications [11, 14].

Online experiment

To demonstrate that the EEG2Code model using a CNN can also be used in an online BCI to provide real-time feedback, we performed an online experiment. This online experiment should only serve as a proof-of-concept, and only one subject was tested.

The subject was the best-performing subject (S01) from our first study. The participation in our first study and the proof-of-concept experiment were approximately 14 months apart.

For training the EEG2Code CNN model, the participant first performed a training phase consisting of 96 runs with 4 s of random stimulation each. Afterward, 96 runs were performed, whereby each run consisted of 285 trials, with each trial corresponding to one bit of the random stimulation sequence. At the end of the run, an additional 250 ms of random stimulation followed, so that one run had a total length of 5 seconds.

Hardware & software

The setup was similar to the one used in a previous study [14], except that the EEG2Code CNN computations were performed on an IBM Power System S822LC with four Nvidia® Tesla P100 GPUs using Python v2.7 [21] and the Keras framework [22].

The system consists of a g.USBamp (g.tec, Austria) EEG amplifier, three personal computers (PCs), Brainproducts Acticap system with 32 channels and an LCD monitor (BenQ XL2430-B) for stimuli presentation. Participants are seated approximately 80 cm in front of the monitor.

PC1 is used for the presentation on the LCD monitor, which is set to refresh rate of 60 Hz and its native resolution of 1920 × 1080 pixels. A stimulus can either be black or white, which can be represented by 0 or 1 in a binary sequence and is synchronized with the refresh rate of the LCD monitor, which means each bit of the stimulation patterns are presented for 1/60 s. The timings of the monitor refresh cycles are synchronized with the EEG amplifier by using the parallel port.

As correct synchronization of EEG and visual stimulation is crucial, we corrected for the monitor raster latency as described in our previous work [23].

PC2 is used for data acquisition, whereby BCI2000 [24] is used as a general framework for recording the data of the EEG amplifier. The amplifier sampling rate was set to 600 Hz, resulting in 10 samples per frame/stimulus. A TCP network connection was established to PC1 in order to send instructions to the presentation layer and to get the modulation patterns of the presented stimuli. During the online experiment, the EEG data was continuously sent to PC3 using a TCP connection. PC3 performs the EEG2Code prediction and sent back the prediction to PC2.

A 32 electrodes EEG layout was used, 30 electrodes were located at Fz, T7, C3, Cz, C4, T8, CP3, CPz, CP4, P5, P3, P1, Pz, P2, P4, P6, PO9, PO7, PO3, POz, PO4, PO8, PO10, O1, POO1, POO2, O2, OI1h, OI2h, and Iz. The remaining two electrodes were used for electrooculography (EOG), one between the eyes and one left of the left eye. The ground electrode (GND) was positioned at FCz, and the reference electrode (REF) at OZ.

Performance evaluation

The BCI control performance is evaluated using the accuracy, the number of correct letters per minute (CLM) [25], the utility bitrate (UTR) [26] and the information transfer rate (ITR) [27]. The CLM, which considers that erroneous letters must be deleted using a backspace symbol, can be computed with the following equation:

| (1) |

The ITR and UTR can be computed with the following equations:

| (2) |

| (3) |

with N the number of classes, P the accuracy, and T the time in seconds required for one prediction. For both, the unit is given in bits per minute (bit/min).

The ITR is generally used to assess how much information a user can convey by BCI control and therefore mostly used as a performance measure for BCI communication. However, the ITR is not a good measure for communication performance [26] as it is a pure measure of information that does not take into account how humans use a communication system (e.g., backspace to correct errors). For this reason, metrics like the UTR [26] or CLM [25] are a more appropriate measure.

Additionally, It should also be noted that the ITR is based on Shannon-Weaver’s model for communication [28], which consists of an information source sending (binary) information that is being encoded, transmitted via a noisy channel, decoded and received by a receiver. As the passive BCI scenario in this paper shows a clearer analogy to Shannon-Weaver’s model than other BCIs, it should be pointed out: Computer A is the information source that encodes binary information in a visual stimulus. The user’s nervous system (eye and brain) is the noisy channel. The BCI acts as a decoder for the brain signals, and the decoded information is received by computer B.

Results

Offline analysis: Simulated online experiment

In the simulated online experiment, we first analyzed the EEG2Code stimulation pattern prediction, which resulted in an average accuracy of 74.9% (ITR of 701.3 bit/min) using the fully random stimulation patterns. It is worth noting that for S01 an average accuracy of 83.4% was achieved, which corresponds to 1262.1 bit/min. It must be noted that the ITR calculation with T = 1/60 s is slightly biased due to the required window length of 250 ms, but T approaches to 1/60 s and the bias decreases with increasing trial duration.

Also, the synchronous BCI control was simulated, which resulted in an average accuracy of 95.9% using the optimized stimulation patterns and a trial duration of 1 s, which in turn corresponds to an ITR of 183.1 bit/min including the inter-trial time of 0.5 s. Detailed results for each subject are listed in Table 1.

Table 1. Simulated online results of the EEG2Code method in a passive BCI scenario and for active BCI control.

| Subject | Pattern prediction | Synchronous BCI control | ||||||

|---|---|---|---|---|---|---|---|---|

| Ridge regression | CNN | Ridge regression | CNN | |||||

| ACC [%] | ITR [bpm] | ACC [%] | ITR [bpm] | ACC [%] | ITR [bpm] | ACC [%] | ITR [bpm] | |

| S01 | 69.1 | 389.9 | 83.4 | 1262.1 | 99.2 | 195.8 | 100.0 | 200.0 |

| S02 | 64.5 | 222.4 | 72.9 | 567.3 | 94.1 | 175.5 | 94.6 | 177.3 |

| S03 | 63.7 | 196.5 | 72.5 | 545.7 | 83.4 | 141.2 | 95.5 | 180.6 |

| S04 | 65.6 | 257.8 | 76.8 | 787.9 | 95.9 | 182.1 | 98.7 | 193.2 |

| S05 | 66.3 | 282.7 | 78.7 | 908.9 | 96.4 | 183.8 | 99.6 | 197.5 |

| S06 | 67.1 | 308.2 | 75.4 | 704.9 | 98.1 | 191.0 | 98.7 | 193.2 |

| S07 | 63.7 | 196.8 | 68.5 | 363.9 | 88.4 | 156.4 | 87.9 | 154.9 |

| S08 | 60.9 | 124.5 | 77.1 | 807.1 | 65.6 | 94.6 | 99.6 | 197.5 |

| S09 | 60.2 | 109.4 | 68.5 | 363.7 | 53.5 | 68.0 | 87.5 | 153.5 |

| mean | 64.6 | 232.0 | 74.9 | 701.3 | 86.1 | 154.3 | 95.9 | 183.1 |

Shown are the average results of all subjects, whereby best results are in bold font. The left part shows the results for the EEG2Code pattern prediction, whereas the right part shows the results for the simulated synchronous BCI control with a trial duration of 1 s. For both, the previous results using the ridge regression model as well as the new results using the CNN model are shown. For all, the accuracies (ACC) and the corresponding ITRs are given. The ITRs are calculated using Eq 2 with N = 2 (N = 32) and T = 1/60 s (T = 1.5s).

Furthermore, also the asynchronous self-paced BCI control was simulated using the 32-target matrix-keyboard layout. The results for each participant are shown in Table 2. The average target prediction accuracy is 98.5% (ITR: 175.5 bit/min) with an average trial duration of 1.71 s (including 0.5 s inter-trial time). In total, 91.6% of all trials could be classified faster compared to the ridge regression model. Finally, this results in an average spelling speed of 35.3 correct letters per minute (CLM) with a maximum of 48.2 CLM, which corresponds to a utility rate of 175 bit/min and 239 bit/min, respectively.

Table 2. Simulated online results for an asynchronous self-paced BCI speller.

| Subject | CLM | ACC [%] | ITR [bpm] | Time [s] | UTR [bpm] |

|---|---|---|---|---|---|

| S10 | 33.3 | 99.0 | 165.4 | 1.77 | 165.0 |

| S11 | 48.2 | 97.9 | 238.9 | 1.19 | 238.8 |

| S12 | 39.7 | 99.5 | 197.8 | 1.49 | 196.8 |

| S13 | 28.7 | 99.5 | 142.8 | 2.07 | 142.1 |

| S14 | 35.8 | 95.3 | 177.8 | 1.52 | 177.6 |

| S15 | 30.7 | 99.0 | 152.4 | 1.92 | 152.0 |

| S16 | 45.1 | 100.0 | 225.3 | 1.33 | 223.2 |

| S17 | 25.8 | 97.4 | 127.8 | 2.21 | 127.7 |

| S18 | 30.9 | 100.0 | 154.4 | 1.94 | 153.0 |

| S19 | 34.8 | 97.4 | 172.3 | 1.64 | 172.2 |

| mean | 35.3 | 98.5 | 175.5 | 1.71 | 174.8 |

Shown are the results for the lexicographic-spelling (matrix-layout, 32 targets). The number of correct letters per minute (CLM), the target prediction accuracy (ACC), the information transfer rates (ITR), the average trial duration (including an inter trial time of 0.5 s) and the utility bitrate (UTR). Best results are in bold font.

Discriminating 500,000 different stimuli

The results for using the EEG2Code approach for the discrimination of 500,000 different stimuli patterns can be seen in Table 3. While the EEG2Code method with ridge regression was able to identify the correct stimulus with an average accuracy of 54.9%, the EEG2Code deep learning approach achieved an average accuracy of 84.0%. Notably, subject S01 achieved an accuracy of 100% showing that for all trials, the correct stimulus pattern was identified.

Table 3. Simulated online results of the EEG2Code method for the discrimination of 500,000 different stimuli.

| Subject | Ridge regression | CNN | ||

|---|---|---|---|---|

| ACC [%] | ITR [bpm] | ACC [%] | ITR [bpm] | |

| S01 | 96.3 | 431.8 | 100.0 | 454.3 |

| S02 | 55.4 | 227.7 | 82.1 | 357.0 |

| S03 | 42.0 | 167.1 | 79.9 | 345.7 |

| S04 | 76.8 | 330.1 | 97.8 | 440.5 |

| S05 | 71.9 | 306.0 | 96.9 | 435.3 |

| S06 | 84.4 | 368.4 | 95.5 | 427.8 |

| S07 | 53.1 | 217.4 | 52.7 | 215.4 |

| S08 | 8.0 | 26.8 | 93.8 | 417.9 |

| S09 | 6.3 | 20.3 | 57.1 | 236.0 |

| mean | 54.9 | 232.9 | 84.0 | 370.0 |

Shown are the average results of all subjects, whereby best results are in bold font. The results are for the simulated synchronous BCI control with 500,000 simulated targets based on 2 s of EEG data. The accuracies (ACC) and the corresponding ITRs are given, whereby the ITRs are calculated using Eq 2 with N = 500000 and T = 2.5s.

Online experiment

To demonstrate that the EEG2Code CNN model can also be used in an online BCI, we performed an experiment where we invited the best subject (S01) from the first experiment to participate. Averaged over all runs, an average bit prediction accuracy of 83.4% was achieved which corresponds to an average ITR of 1237 bit/min using N = 2 and T = 5/285 s, considering that 285 trials are predicted in a 5 s run. As mentioned, the ITR calculation with T = 1/60 s is slightly biased, which is why T = 5/285 s is used for the online experiment.

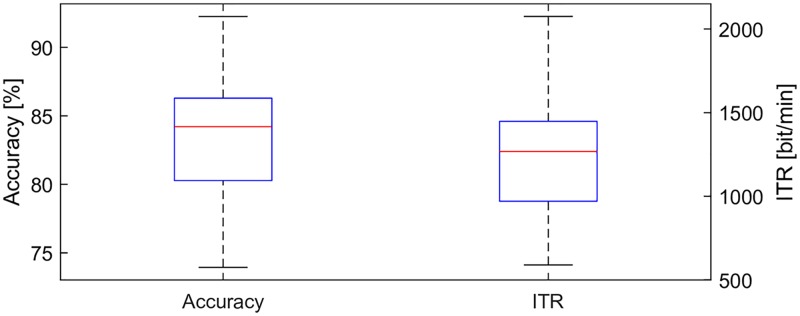

The distribution of the average performance per run is depicted in Fig 3. The results also show that the prediction of the run with the best performance had an accuracy of 92.6% and an ITR of 2122 bit/min.

Fig 3. Results of the online experiment.

Bit prediction accuracies and corresponding ITRs achieved during an online experiment of subject S01. Shown is the distribution of the average performance per run with the red line representing the median. The data consists of 96 runs, with each running having 285 trials (bits). The ITRs were calculated using Eq 2 with N = 2 and T = 5/285 s.

Discussion

In this work, we have combined the EEG2Code method with deep learning to show that this approach can be used in two different kinds of BCI: a passive BCI to predict the properties of the visual stimulus the user is viewing, or for controlling a BCI to select letters.

In an online experiment, we were able to show that the approach is online capable and that a subject can reach 1237 bit/min when using the method in a passive BCI. In an offline analysis, we compared the EEG2Code method using a ridge regression against the EEG2Code method with deep learning and could show an increase in the classification accuracy from 64.6% to 74.9% for the pattern prediction. With regard to the information that can be extracted from the EEG, the ITR could be improved by 202% with deep learning (from 232 bit/min to 701 bit/min).

Compared to current state-of-the-art approaches, the EEG2Code deep learning approach clearly outperforms the previously fastest system by Chen et al. [6]. They reported the previously highest ITR for a BCI, with an average ITR of 267 bit/min and an online ITR of 319 bit/min for the best subject, which we could raise to 701 bit/min and 1237 bit/min, respectively. This comparison only holds true in respect to brain signal decoding performance, and this illustrates why it is important to make a clearer distinction between signal decoding performance and BCI control performance, which will be further discussed below.

Furthermore, as the method is able to extract a high amount of information from the EEG and can discriminate 500,000 different stimuli based on 2 s of EEG data, we suggest that EEG is less noisy and carries more information than generally assumed.

Ceiling effect

When comparing the results of the passive BCI for pattern prediction (701 bit/min) with the results for BCI control (183 bit/min), we observe a ceiling effect. Although we are able to extract an average of 701 bit/min of information from the EEG, we can only use 183 bit/min of this information for BCI control. The discrepancy between extracted information and BCI control becomes even larger when looking at single subjects. The worst subject (S09) had an ITR of 364 bit/min for the pattern prediction, which translates into an ITR of 153 bit/min for BCI control. The best subject (S01) had an ITR of 1262 bit/min for the pattern prediction, which translates into an ITR of 200 bit/min. Comparing those two subjects shows that an increase of 898 bit/min for the pattern prediction translates into an increase of only 47 bit/min for BCI control.

Due to the limited number of targets (N = 32) and trial duration given by the BCI communication system (T = 1.5; 1s trial + 0.5 pause), the ITR is limited to a maximum of 200 bit/min. This limit can only be increased by either reducing the trial duration or by increasing the number of targets. For chasing higher ITRs in the lab, the trial duration could be reduced to 1 s (0.5 s trial + 0.5 s pause), which corresponds to a maximum ITR of 300 bit/min, but with values below 1 s, the system becomes too fast to be realistically usable.

Increasing the number of targets is another way to increase the ITR. The EEG2Code method has the unique property that it allows a virtually unlimited number of targets (e.g., 2120 = 1.3 ⋅ 1036 targets with 2 s of visual stimulation). In this work, we simulated an online BCI with N = 500, 000 targets and could show that in this case, the performance of the EEG2Code ridge regression model translates completely to BCI control with 232.0 bit/min for the pattern prediction and 232.9 bit/min for BCI control. However, when using deep learning, we again observe a ceiling effect with 701 bit/min for the pattern prediction translated into 370 bit/min for BCI control. While it is likely that the ITR for BCI control would increase further when using more targets, it should be pointed out that this is just a theoretical exercise, because communication systems with such a higher number of targets are practically unusable. Nevertheless, these simulations underscore the power of the EEG2Code method as, even with such a large number of targets, high accuracies were reached. In the case of the best subject, we could discriminate 500,000 different stimuli with an accuracy of 100% based on 2 s of EEG data. It must be noted that only 32 out of 500,000 targets can be classified for each run, as no EEG responses are recorded for the simulated targets. However, this has no significant effect on the result.

While there theoretically are ways to overcome the ceiling effect, real BCI control is still limited by a minimum trial duration and a maximum number of targets. While those limits may vary between users, it is likely that 60 characters per minute are a limit that cannot be surpassed significantly by BCI systems based on visual stimuli.

Brain signal decoding vs. BCI communication

As Sutter showed in 1992 [5] that an ALS patient can use a VEP-based BCI at home to write up to 12 words (about 60 characters) per minute, cynical voices might argue that BCI research has not made any progress since then as there is still no system that allows higher communication rates. While the limit for VEP-based BCI communication seems to have already been reached in 1992, the methods for decoding brain signals have evolved since then.

Currently, in the BCI literature, there is no distinction being made between the performance for brain signal decoding and BCI communication. This was not necessary because all information that could be extracted from the EEG could be used for BCI control so that the performance of a method from a decoding perspective was equal to the performance of a method from the perspective of BCI control. However, this has changed due to the ceiling effect that we observed in this work.

Therefore, we argue that BCI research should make a clearer distinction between those two perspectives. From the perspective of brain signal decoding, the aim is to decode signal as good as possible, which means to extract as much information from signals as possible. Therefore, the information transfer rate (ITR) is the perfect measure to evaluate a method in terms of its signal decoding power. When evaluating a method in terms of decoding power, it is also irrelevant if it is being used for BCI control, in a passive BCI or for other purposes.

From a perspective of BCI communication, the ITR is not a good measure for communication performance [26] as it is a pure measure of information that does not take into account how humans use a communication system (e.g., backspace to correct errors). For this reason, metrics like the utility metric [26] or correct letters per minute [25] are a more appropriate measure. Further, and more importantly, when evaluating a BCI system regarding its communication performance, it should be ensured that the system can be used in an end-user scenario. From our perspective, the end-user scenario is not limited to the use by patients, but we refer to any user. With the term end-user scenario, we want to stress the importance of a non-control state detection that detects if a user wants to use the BCI or not. In an end-user scenario, there are always periods where the user does not want to control the BCI either because they are currently listening to another person, reading a webpage or sitting in their wheelchair and enjoying a beautiful sunset. Without a non-control state detection, the BCI will output random garbage, click random webpage-links, or drive the wheelchair off in random directions, which is why a non-control state detection is essential for end-user BCIs.

With regard to the work of Chen et al. [6] who presented a BCI control performance with an average ITR of 267 bit/min, we do not see their system as end-user suitable as it does not have a non-control state detection. Further, the user only has 280 ms between letter selection and the start of the next trial, which is insufficient to react to errors and is only usable by highly trained subjects. We thereby consider the system by Chen et al. [6] as a purely technical demonstration of a brain signal decoding approach.

In contrast, we have shown the EEG2Code method to be usable in an asynchronous self-paced BCI with robust non-control state detection [14] and achieve average communication speeds of 35 correct letters per minute (utility bitrate of 175 bit/min) and thereby consider the BCI be the fastest end-user suitable system for non-invasive BCI communication. For comparison, the previously fastest non-invasive BCI system, that can be considered end-user suitable, was presented by Suefusa et al. [29] and reached an average ITR of 67.7 bit/min.

We encourage fellow researchers to make a clearer distinction between signal decoding performance and BCI control performance and to ensure that the BCI system is viable in an end-user scenario when evaluating BCI control performance. As we have demonstrated a ceiling effect and that it becomes increasingly difficult to improve BCI control performance by better signal decoding, other approaches for improving BCI communication, like language modeling or predictive spelling, gain more importance and further demonstrate the need to separate signal decoding performance from the performance of BCI communication.

Conclusion

In this paper, we have presented a novel approach that combines deep learning with the EEG2Code method to predict properties of a visual stimulus from EEG signals. We could show that a subject can use this approach in an online BCI to reach an information transfer rate (ITR) of 1237 bit/min, which makes the presented BCI system the fastest system by far. In a simulated online experiment with 500,000 targets, we could further show that the presented method allows differentiating 500,000 different stimuli based on 2 s of EEG data with an accuracy of 100% for the best subject. As the presented method can extract more information from the EEG than can be used for BCI control, we discussed a ceiling effect that shows that more powerful methods for brain signal decoding do not necessarily translate into better BCI control, at least for BCIs based on visual stimuli. Furthermore, it is important to differentiate between the performance of a method for decoding brain signals and its performance for BCI control.

Supporting information

(MP4)

Acknowledgments

This work was supported by the German Research Council (DFG; SP 1533/2-1). The server running the deep-learning framework was provided by the IBM Shared University Research Grant.

Data Availability

All data used in this paper were made publicly available. The data can be found online separated for the synchronous simulated online experiment (DOI: 10.6084/m9.figshare.7058900.v1), the asynchronous simulated online experiment (DOI: 10.6084/m9.figshare.7611275.v1), and the online BCI experiment (DOI: 10.6084/m9.figshare.7701065.v1). To demonstrate that our results can be reproduced, we made a python script publicly available (DOI: 10.6084/m9.figshare.7701065.v1) that allows reproducing the results from the online passive BCI experiment. The script predicts the stimulation patterns for each run and outputs the corresponding accuracy. The attached CNN model is the one used online. When running the script to train a new CNN model, the results may slightly differ due to random properties of the method (e.g., dropout learning).

Funding Statement

This work was supported by the Deutsche Forschungsgemeinschaft (DFG; grant SP 1533/2-1; www.dfg.de). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain–computer interfaces for communication and control. Clinical neurophysiology. 2002;113(6):767–791. 10.1016/S1388-2457(02)00057-3 [DOI] [PubMed] [Google Scholar]

- 2. Zander TO, Kothe C. Towards passive brain–computer interfaces: applying brain–computer interface technology to human–machine systems in general. Journal of neural engineering. 2011;8(2):025005 10.1088/1741-2560/8/2/025005 [DOI] [PubMed] [Google Scholar]

- 3. Vidal JJ. Real-time detection of brain events in EEG. Proceedings of the IEEE. 1977;65(5):633–641. 10.1109/PROC.1977.10542 [DOI] [Google Scholar]

- 4.Sutter EE. The visual evoked response as a communication channel. In: Proceedings of the IEEE Symposium on Biosensors; 1984. p. 95–100.

- 5. Sutter EE. The brain response interface: communication through visually-induced electrical brain responses. Journal of Microcomputer Applications. 1992;15(1):31–45. 10.1016/0745-7138(92)90045-7 [DOI] [Google Scholar]

- 6. Chen X, Wang Y, Nakanishi M, Gao X, Jung TP, Gao S. High-speed spelling with a noninvasive brain–computer interface. Proceedings of the national academy of sciences. 2015;112(44):E6058–E6067. 10.1073/pnas.1508080112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Nakanishi M, Wang Y, Chen X, Wang YT, Gao X, Jung TP. Enhancing detection of SSVEPs for a high-speed brain speller using task-related component analysis. IEEE Transactions on Biomedical Engineering. 2017;65(1):104–112. 10.1109/TBME.2017.2694818 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Bin G, Gao X, Wang Y, Li Y, Hong B, Gao S. A high-speed BCI based on code modulation VEP. Journal of neural engineering. 2011;8(2):025015 10.1088/1741-2560/8/2/025015 [DOI] [PubMed] [Google Scholar]

- 9. Spüler M, Rosenstiel W, Bogdan M. Online adaptation of a c-VEP brain-computer interface (BCI) based on error-related potentials and unsupervised learning. PloS one. 2012;7(12):e51077 10.1371/journal.pone.0051077 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Thielen J, van den Broek P, Farquhar J, Desain P. Broad-Band visually evoked potentials: re (con) volution in brain-computer interfacing. PloS one. 2015;10(7):e0133797 10.1371/journal.pone.0133797 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Nagel S, Spüler M. Modelling the brain response to arbitrary visual stimulation patterns for a flexible high-speed Brain-Computer Interface. PloS one. 2018;13(10):e0206107 10.1371/journal.pone.0206107 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Lalor EC, Pearlmutter BA, Reilly RB, McDarby G, Foxe JJ. The VESPA: a method for the rapid estimation of a visual evoked potential. Neuroimage. 2006;32(4):1549–1561. 10.1016/j.neuroimage.2006.05.054 [DOI] [PubMed] [Google Scholar]

- 13. Capilla A, Pazo-Alvarez P, Darriba A, Campo P, Gross J. Steady-State Visual Evoked Potentials Can Be Explained by Temporal Superposition of Transient Event-Related Responses. PLOS ONE. 2011;6(1):1–15. 10.1371/journal.pone.0014543 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Nagel S, Spüler M. Asynchronous non-invasive high-speed BCI speller with robust non-control state detection. Scientific reports. 2019;9(1):8269 10.1038/s41598-019-44645-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:14091556. 2014;.

- 16. Cecotti H, Graser A. Convolutional neural networks for P300 detection with application to brain-computer interfaces. IEEE transactions on pattern analysis and machine intelligence. 2011;33(3):433–445. 10.1109/TPAMI.2010.125 [DOI] [PubMed] [Google Scholar]

- 17. Kwak NS, Müller KR, Lee SW. A convolutional neural network for steady state visual evoked potential classification under ambulatory environment. PloS one. 2017;12(2):e0172578 10.1371/journal.pone.0172578 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Thomas J, Maszczyk T, Sinha N, Kluge T, Dauwels J. Deep learning-based classification for brain-computer interfaces. In: Systems, Man, and Cybernetics (SMC), 2017 IEEE International Conference on. IEEE; 2017. p. 234–239.

- 19. Odom JV, Bach M, Brigell M, Holder GE, McCulloch DL, Mizota A, et al. ISCEV standard for clinical visual evoked potentials:(2016 update). Documenta Ophthalmologica. 2016;133(1):1–9. 10.1007/s10633-016-9553-y [DOI] [PubMed] [Google Scholar]

- 20. van Gunsteren F. Deep Neural Networks for Classification of EEG Data. University of Tübingen; WSI, Tübingen; 2018. [Google Scholar]

- 21.Python. version 2.7. Wilmington, Delaware: Python Software Foundation; 2010.

- 22.Chollet F, et al. Keras; 2015. keras.io.

- 23. Nagel S, Dreher W, Rosenstiel W, Spüler M. The effect of monitor raster latency on VEPs, ERPs and Brain–Computer Interface performance. Journal of neuroscience methods. 2018;295:45–50. 10.1016/j.jneumeth.2017.11.018 [DOI] [PubMed] [Google Scholar]

- 24. Schalk G, McFarland DJ, Hinterberger T, Birbaumer N, Wolpaw JR. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Transactions on biomedical engineering. 2004;51(6):1034–1043. 10.1109/TBME.2004.827072 [DOI] [PubMed] [Google Scholar]

- 25. Spüler M. A high-speed brain-computer interface (BCI) using dry EEG electrodes. PloS one. 2017;12(2):e0172400 10.1371/journal.pone.0172400 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Dal Seno B, Matteucci M, Mainardi LT. The utility metric: a novel method to assess the overall performance of discrete brain–computer interfaces. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2010;18(1):20–28. 10.1109/TNSRE.2009.2032642 [DOI] [PubMed] [Google Scholar]

- 27. Wolpaw JR, Ramoser H, McFarland DJ, Pfurtscheller G. EEG-based communication: improved accuracy by response verification. IEEE transactions on Rehabilitation Engineering. 1998;6(3):326–333. 10.1109/86.712231 [DOI] [PubMed] [Google Scholar]

- 28. Shannon CE. A mathematical theory of communication. Bell system technical journal. 1948;27(3):379–423. 10.1002/j.1538-7305.1948.tb01338.x [DOI] [Google Scholar]

- 29. Suefusa K, Tanaka T. Asynchronous brain–computer interfacing based on mixed-coded visual stimuli. IEEE Transactions on Biomedical Engineering. 2018;65(9):2119–2129. 10.1109/TBME.2017.2785412 [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

(MP4)

Data Availability Statement

All data used in this paper were made publicly available. The data can be found online separated for the synchronous simulated online experiment (DOI: 10.6084/m9.figshare.7058900.v1), the asynchronous simulated online experiment (DOI: 10.6084/m9.figshare.7611275.v1), and the online BCI experiment (DOI: 10.6084/m9.figshare.7701065.v1). To demonstrate that our results can be reproduced, we made a python script publicly available (DOI: 10.6084/m9.figshare.7701065.v1) that allows reproducing the results from the online passive BCI experiment. The script predicts the stimulation patterns for each run and outputs the corresponding accuracy. The attached CNN model is the one used online. When running the script to train a new CNN model, the results may slightly differ due to random properties of the method (e.g., dropout learning).