Abstract

Objective:

Self-efficacy expectations are associated with improvements in problematic outcomes widely considered clinically significant (i.e., emotional distress, fatigue, pain), related to positive health behaviors, and, as a type of personal agency, inherently valuable. Self-efficacy expectancies, estimates of confidence to execute behaviors, are important in that changes in self-efficacy expectations are positively related to future behaviors that promote health and well-being. The current meta-analysis investigated the impact of psychological interventions on self-efficacy expectations for a variety of health behaviors among cancer patients.

Methods:

Ovid Medline, PsycINFO, CINAHL, EMBASE, Cochrane Library, and Web of Science were searched with specific search terms for identifying randomized controlled trials (RCTs) that focused on psychologically-based interventions. Included studies had: 1) an adult cancer sample, 2) a self-efficacy expectation measure of specific behaviors and 3) an RCT design. Standard screening and reliability procedures were used for selecting and coding studies. Coding included theoretically informed moderator variables.

Results:

Across 79 RCTs, 223 effect sizes, and 8678 participants, the weighted average effect of self-efficacy expectations was estimated as g=0.274 (p<.001). Consistent with Self-Efficacy Theory, the average effect for in-person intervention delivery (g=0.329) was significantly greater than for all other formats (g=0.154, p=.023; e.g., audiovisual, print, telephone, web/internet).

Conclusions:

The results establish the impact of psychological interventions on self-efficacy expectations as comparable in effect size to commonly reported outcomes (distress, fatigue, pain). Additionally, the result that in-person interventions achieved the largest effect is supported by Social Learning Theory and could inform research related to the development and evaluation of interventions.

Keywords: Cancer, Intervention, meta-analysis, oncology, RCTs, self-efficacy

Introduction

Meta-analytic reviews of psychological interventions for cancer patients have focused on emotional distress (1, 2), fatigue (3), and pain (4). These reviews and others like them reflect the fact that such problems are very common in cancer patients. Current estimates of clinically relevant emotional distress in cancer patients put the rate at 20% to 50%, well above the estimated rate of 10% of the general population experiencing depression and anxiety (5, 6, 7). In addition, it is widely accepted that fatigue and pain are among the most common and debilitating symptoms experienced by cancer patients (8). If untreated, these symptoms could impair ongoing adherence to medical advice and regimens, diet and physical activity recommendations, work activity, help-seeking, and quality of life.

The extent of the meta-analytic work on distress, pain, and fatigue reflects not only cancer patients’ increased risk for emotional distress (1, 3, 9, 10, 11, 12, 13, 14), fatigue and pain (4), but also the development of many short-term treatments to mitigate those negative outcomes. However, comparatively little attention has been given to strategic variables and mechanisms that can enhance adjustment throughout the continuum of cancer care, optimize outcomes and promote resilience in the course of treatment and after (15). For example, constructs such as self-efficacy, positive affect, and meaning and purpose represent domains of human functioning that serve as mechanisms that affect outcomes such as emotional distress, fatigue and pain (16) and resources that facilitate improvement in quality of life. Moreover, these constructs are patient-reported outcomes that are inherently meaningful, valued by patients in their own right, used by patients to describe their functioning, and as such, critical to patient-centered care and quality of life. Thus, it is important, and complementary to other existing meta-analyses, to document the impact of interventions on positive variables, which are building blocks in an affirmative adjustment to cancer and help promote and sustain quality of life outcomes.

Generally, past meta-analyses of interventions that focused on addressing problems (e.g., decreasing symptoms) have provided significant support for their impact on outcomes, with effect sizes in the small to medium range (Cohen’s d = 0.2 to 0.5). For example, the average effect of psychological interventions on depression was d = .43 (1). For the reduction of self-reported fatigue, the results were very similar whether the intervention for fatigue was psychologically based (d = .27) or exercise based (d = .30) (3). In another systematic review, exercise was highly effective in decreasing fatigue up to 12 weeks post-treatment (d = −0.82; 95% CI −1.50 to −0.14), with effects that were reduced but retained in a 12-week to 6-month follow-up period (d = −0.42; 95% CI −0.83 to −0.02; 2). For reduction of pain severity (4), the overall impact of intervention (e.g., skills training, educational, individual counseling) versus control conditions had an estimated average effect size of d = .34. Similarly, with regard to a reduction of pain interference relative to control conditions, the average effect was medium (d = −.40; 4). Although less effective, exercise interventions reduced pain relative to control conditions (d = −0.29; 95% CI −0.55 to −0.04; 2). In summary, the results for emotional distress, fatigue, and pain provide support for psychological treatments as one form of intervention that may help patients improve problematic symptoms. However, these studies do not establish which skills or personal resources are being built up or reinforced, and in what way those resources assist patients to confront the challenges of cancer, its treatments, and the residual effects that may influence post-treatment adjustment or advanced cancer care. The current meta-analysis focuses on personal agency (i.e., self-efficacy expectations) as a positive resource in the context of psychologically-based interventions for persons with cancer.

Positive, skill-based resources fall under the general rubrics of autonomy, self-determination (17), self-regulation (18), and self-efficacy (19). People’s beliefs and expectations about their ability to exercise control or mastery over their behaviors represent underlying and central mechanisms of human agency and personal efficacy (17–19). Self-efficacy expectations are precursors to improved outcomes in many chronic conditions such as rheumatoid arthritis (20), cardiac conditions (21) and stroke (22). In the context of cancer, patients with higher levels of self-efficacy expectations are better able to manage challenges associated with cancer and report a higher quality of life and greater disease-adjustment (23, 24, 25, 26, 27) than those who have low self-efficacy. Self-efficacy, which is a part of general Social Learning Theory, promotes progress in resolving cancer patients’ emotional distress and fosters skills in symptom management, the primary outcomes for interventions (19, 28, 29, 30). That is, self-efficacy expectations are associated with improvements in problematic outcomes already widely considered clinically significant. In summary, because of the relationship of self-efficacy expectations to improvements in problematic behaviors, their relationship to positive health behaviors (31), and the value that people place on a sense of control and personal agency in certain domains of their lives (17–19), self-efficacy is not only a catalyst for positive outcomes but also an independently important positive outcome.

In contrast to other meta-analyses that are generally atheoretical, a meta-analysis of self-efficacy outcomes allows the use of theory (i.e., Self-Efficacy and Social Learning Theory) to guide hypothesis generation. That is, certain components or features of interventions (32) that are consistent with Self-Efficacy Theory may foster greater gains in outcomes compared to those that are not consistent with the theory. Thus, we hypothesize that “in person”, face-to-face interventions would be more effective than those that are delivered via other formats (e.g., audiovisual, print, telephone, web/internet). Also, we would hypothesize, based on theory, that group-based interventions would be more effective in enhancing self-efficacy than individually-based interventions. In both instances, that is in-person and group-based interventions, the social learning environment as defined by Self-Efficacy Theory, which involves persuasion, social modeling, and social support, is hypothesized to markedly enhance outcomes over other formats. In summary, the objectives of this meta-analysis are to 1) examine the impact of interventions on self-efficacy expectations for cancer patients through a systematic analysis of RCTs and 2) to test moderators (e.g., group versus individual format; in-person versus other formats) that are consistent with Self-Efficacy Theory in order to investigate optimal formats of delivery.

Method

Search Strategy

For this systematic review, standardized search strategies were applied to 6 electronic databases (Ovid Medline, PsycINFO, CINAHL, EMBASE, Cochrane Library, and Web of Science; see Table 1 and Supplemental Materials). Controlled search terms specific to each database were used for RCTs that focused on psychologically-based interventions (e.g., psychotherapy, counseling, educational, support, quality of life, depression, anxiety, etc.) for persons with a cancer diagnosis. The search period covered the earliest publication date available in each database through January 11, 2017. For the MEDLINE search, we used the McMaster multi-term filters with the best balance of sensitivity and specificity for retrieving RCTs (33) and systematic reviews (34). We translated from Ovid to EBSCOhost syntax the McMaster multi-term filters with the best balance of sensitivity and specificity for retrieving RCTs for PsycINFO (35) and the McMaster highly sensitive filters for retrieving randomized controlled trials for CINAHL (36). For EMBASE, we translated from Ovid to embase.com syntax the multi-term EMBASE filter with the best balance of sensitivity and specificity (36). For Web of Science databases, we used a simplified single-term search strategy for identifying RCTs (37). In PsycINFO and CINAHL databases, we limited retrieval to academic journals and dissertations. Published articles were supplemented by unpublished studies (n=6) requested from professional LISTSERVs (L-Soft International, Inc., Bethesda, Md) of the Society of Behavioral Medicine (Cancer Special Interest Group), the American Psychosocial Oncology Society (general membership listserv), the American Psychological Association, Division 38 (Health Psychology), or, in the case of recent dissertations, available from ProQuest. Finally, we reached out to 39 study authors to request missing data. For 16 studies, the authors were unavailable or unresponsive and we excluded those studies. Of the remaining 23 studies, authors provided data for 15 studies and the remaining 8 studies had enough data to compute an effect size.

Table 1.

Meta-Analysis Search Strategies

| Database searched | Totals |
|---|---|
| Ovid MEDLINE(R) 1946 to December Week 1 2016; Ovid MEDLINE(R) In-Process & Other Non-Indexed Citations January 10, 2017; Ovid MEDLINE(R) Epub Ahead of Print January 10, 2017 | 366 |
| PsycINFO (EBSCOhost) | 999 |
| CINAHL with Full Text (EBSCOhost) | 355 |
| EMBASE (embase.com) | 937 |
| Cochrane Database of Systematic Reviews: Issue 1 of 12, January 2017 (Wiley) | 3 |
| Cochrane Central Register of Controlled Trials: Issue 11 of 12, November 2016 (Wiley) | 258 |
| Science Citation Index Expanded (SCI-EXPANDED), 1900-present; Social Sciences Citation Index (SSCI), 1900-present; Conference Proceedings Citation Index - Science (CPCI-S), 1990-present; Conference Proceedings Citation Index - Social Science & Humanities (CPCI-SSH), 1990-present (Web of Science) | 378 |
| Total | 3,296 |
| After de-duplication | 2,657 |

Inclusion and Exclusion Criteria

The goal of selection was to include studies with high quality RCT designs in order to draw definitive conclusions from the data that were extracted. Studies were chosen if they 1) included a sample of adults with a diagnosis of cancer (any site/stage), 2) included a self-efficacy measure related to specific behaviors (e.g., pain, physical activity, coping with cancer, stress management), and 3) were psychological intervention studies. Studies were excluded if they 1) included only pediatric samples, 2) included only qualitative assessments, 3) did not have a self-efficacy measure or included a general measure of self-efficacy rather than one related to specific behaviors or 4) used a comparative treatment design with no control group.

Screening Procedures

The review team included two pairs of raters, who had prior experience in conducting meta-analyses, were well-versed in theory and research in health psychology related to self-efficacy, and had PhD degrees in psychology. Using the Covidence platform (www.covidence.org), each abstract was reviewed by a pair of raters to determine which articles warranted full review. Studies potentially meeting inclusion criteria were then moved on to full-text review. Each full-text review was also conducted by a pair of raters; each rater independently appraised the study and extracted information that was contained in the coding strategy as well as the outcome data for entry into a database. Discrepancies at all levels of the review process were resolved by discussion, review, and consensus within pairs of raters. When full-text articles could not be acquired through the databases that were searched or the data were not useable (e.g., correlations, regressions), efforts were made to contact authors for data that would allow for the inclusion of the article as described above.

Coding Strategies

Each RCT study in the final set was coded with respect to: 1) intervention type (cognitive behavior therapy [CBT], complementary/integrative medicine [CIM], educational, multi-modal, self-management, social support, psychotherapy, or other); 2) skills-based (active skill-based training components in the intervention) versus non-skills-based (social support, information-based, traditional psychotherapy) interventions; 3) intervention focus (individual, dyad, group); 4) delivery format (in-person, audiovisual, print, telephone, web/internet); 5) cancer type; 6) cancer stage (early, advanced, mixed); 7) phase in cancer continuum (pre-treatment, curative treatment, palliative treatment, post-treatment, mixed). The coding for intervention type was based on the strategy used by Moyer, Sohl, Knapp-Oliver, and Schneider (38).

Theoretically-Based Moderator Variables

In order to test hypotheses related to Self-Efficacy Theory, two moderators were specifically targeted for comparisons. Based on Social Learning Theory, which is the larger theoretical framework for self-efficacy, effectiveness of interventions should be enhanced in a setting in which the person with cancer is interacting in-person with the provider of the intervention or other participants. To allow for sufficient power in the moderator analyses, in-person delivery was contrasted with all other formats (audiovisual, print, telephone, web/internet) combined. Also, according to Social Learning Theory, group-focused interventions should be more effective than individually focused interventions in that persuasion, support, and social modeling are optimized in the group setting. We contrasted group-based interventions with dyadic-based as well as with individual-based interventions.

Risk of Bias Coding

To describe the potential for risk of bias, we reviewed each article and coded for the following Cochrane Risk of Bias categories that were relevant: sequence generation, allocation concealment, attrition, and outcome reporting. For sequence generation, we described the method used to generate the allocation sequence so as to determine whether it should produce comparable groups. For allocation concealment, we coded the method used to conceal the allocation sequence so as to determine whether intervention allocations could have been foreseen in advance of, or during, enrollment. Outcome reporting was coded as fully reported, partially reported, or not reported. Attrition numbers were derived from the CONSORT study flow diagram. For each category, we used the coded values to inform an assessment of bias as either low, unclear, or high risk of bias (39).

Effect Size Calculations and Meta-Analytic Procedures

To quantify the effects of interventions on self-efficacy scores, we used Hedges’ g estimate (40) of the standardized mean differences between treatment and control groups. For the numerator of the effect size estimate, we used the estimated difference at post-test between treatment and control groups, adjusted for baseline differences (i.e., change-score adjustment or regression adjustment). For the denominator of the effect size estimate, we used the baseline (pre-intervention) standard deviation of the self-efficacy measure, pooled across groups. We calculated effect size estimates from reported mean and SD estimates by group if available; otherwise, we used the statistical tests reported to calculate comparable effect size estimates. Study authors were contacted if available information was insufficient to calculate an effect size estimate.
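As a rough illustration of the calculation described above, the following Python sketch computes a change-score-adjusted difference, divides it by the pooled baseline SD, and applies the usual small-sample correction factor. The function names, the correction formula J = 1 − 3/(4·df − 1), and the example numbers in the usage note are assumptions made for illustration; the article does not report the exact formulas used.

```python
import math


def change_score_difference(pre_t, post_t, pre_c, post_c):
    """Baseline-adjusted difference: treatment change minus control change."""
    return (post_t - pre_t) - (post_c - pre_c)


def hedges_g(adjusted_diff, sd_pre_t, sd_pre_c, n_t, n_c):
    """Standardized mean difference with a small-sample correction.

    adjusted_diff      -- baseline-adjusted post-test difference (numerator)
    sd_pre_t, sd_pre_c -- baseline SDs in the treatment and control groups
    n_t, n_c           -- group sample sizes
    """
    df = n_t + n_c - 2
    # Denominator: baseline SD pooled across the two groups.
    sd_pooled = math.sqrt(
        ((n_t - 1) * sd_pre_t ** 2 + (n_c - 1) * sd_pre_c ** 2) / df
    )
    d = adjusted_diff / sd_pooled
    # Common approximation to the small-sample correction (an assumption here).
    correction = 1 - 3 / (4 * df - 1)
    return correction * d
```

For instance, with hypothetical group means of 50 and 56 (treatment, pre and post) and 50 and 52 (control), baseline SDs of 10, and 30 participants per group, `hedges_g(change_score_difference(50, 56, 50, 52), 10, 10, 30, 30)` gives a value slightly below the uncorrected 0.4.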

An examination of the distribution of raw effect size estimates included in the meta-analysis revealed several positive outliers. We used Tukey’s (41) definition of outliers as values below the 1st quartile minus 3 times the inter-quartile range (g < −1.34) or above the 3rd quartile plus 3 times the interquartile range (g > 1.74). Following Lipsey and Wilson’s (42) method of winsorizing outliers, a total of four outlying effect size estimates, all from a single study (43), were re-coded to g=1.74, the upper edge of the outlier boundary.
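The outlier rule and winsorizing step can be sketched as follows. This is a minimal Python illustration, not the authors' code: the quartile method (`method="inclusive"`) and the function names are assumptions, and different quartile conventions would shift the fences slightly.

```python
import statistics


def tukey_outer_fences(values, k=3.0):
    """Return (lower, upper) fences at Q1 - k*IQR and Q3 + k*IQR."""
    # Quartile convention is an assumption; the article does not specify one.
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def winsorize(values, lower, upper):
    """Recode values outside [lower, upper] to the nearest boundary."""
    return [min(max(v, lower), upper) for v in values]
```

Applied to the effect sizes in this review, fences of this kind correspond to the reported boundaries of −1.34 and 1.74, and winsorizing recodes the four estimates above the upper fence to 1.74 while leaving all other estimates unchanged.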

Many included studies reported intervention effects on multiple measures of self-efficacy and/or at multiple follow-up times. Effect size estimates for multiple measures or multiple time points are likely to be correlated because they are based on a common sample, but the information necessary to estimate the degree of correlation was seldom available from published sources. For purposes of synthesizing the effect size estimates across the studies, we used random effects meta-analysis in combination with robust variance estimation techniques (44) to account for potential dependencies among effect size estimates from common samples. More specifically, we used a “correlated effects” working model, assuming a correlation of 0.7, as well as small-sample corrections to standard errors, hypothesis tests, and confidence intervals (45, 46). To measure the extent of heterogeneity among the effect sizes, we reported the restricted maximum likelihood estimate of the between-study standard deviation, denoted as τ. We also reported the I² statistic, which is a relative measure of the extent to which heterogeneity among true effect sizes contributes to observed variation in the effect size estimates (47).
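To make the role of the between-study variance concrete, the sketch below shows the textbook random-effects weighted average, in which each estimate is weighted by the inverse of its sampling variance plus the between-study variance. This is a deliberately simplified illustration: it assumes one independent effect size per study and a between-study variance supplied by the caller, so it does not reproduce the correlated-effects working model, the robust variance estimation, or the small-sample corrections used in the actual analysis. The function name is hypothetical.

```python
import math


def random_effects_mean(effects, variances, tau2):
    """Inverse-variance weighted mean under a simple random-effects model.

    effects   -- effect size estimates, one per study (simplifying assumption)
    variances -- sampling variances of those estimates
    tau2      -- between-study variance (square of the between-study SD)
    """
    weights = [1.0 / (v + tau2) for v in variances]
    total_weight = sum(weights)
    mean = sum(w * g for w, g in zip(weights, effects)) / total_weight
    # Model-based standard error; robust standard errors would differ.
    se = math.sqrt(1.0 / total_weight)
    return mean, se
```

A larger between-study variance pulls the weights toward equality, so small studies count relatively more than they would in a fixed-effect average.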

To examine differences in effect size across moderating variables, we used random effects meta-regression models. Following graphical diagnostics, the meta-regression models allowed for between-study variance components to differ across levels of the moderator (rather than assuming that the between-study variance was constant). To investigate possible risks of bias due to incomplete outcome reporting, we examined a funnel plot of effect size estimates and conducted a modified version of Egger’s regression test for funnel plot asymmetry. The funnel plot asymmetry test examines the association between the magnitude of effect size estimates and a measure of their precision. To measure precision, we used the scaled standard error, calculated as the standard error of the numerator of the effect size estimate, scaled by the denominator of the effect size estimate; this measure was used to avoid artefactual association between the effect size estimate and its standard error (48). The funnel plot asymmetry test also used robust variance estimation to account for dependence of effect size estimates nested within studies. All analyses were conducted using the metafor package (49) and clubSandwich package (50) for the R statistical computing environment.

Results

Across 79 RCTs, 223 effect sizes, and 8678 participants (Table 2), the weighted average effect of self-efficacy outcomes was estimated as g = 0.274, 95% CI [0.201, 0.346], p < .001. As anticipated, there was substantial heterogeneity of effects across studies, with an estimated between-study standard deviation of τ = 0.242 (I² = 73%). One way of characterizing this degree of variability is to consider that, if effect sizes are normally distributed, then about 2/3 of effects should fall within 1 SD of the mean effect. According to these estimates, 2/3 of true effects should be between 0.032 and 0.516.
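The interval above follows from simple arithmetic on the reported estimates, as the short Python check below illustrates. It takes the between-study SD to be the 0.242 listed for all studies in Table 3 and assumes, as the text does, that true effects are normally distributed; the variable names are illustrative.

```python
from statistics import NormalDist

mean_effect = 0.274       # reported overall average effect (g)
between_study_sd = 0.242  # value listed for all studies in Table 3

# Range spanning one between-study SD on either side of the mean.
lower = mean_effect - between_study_sd
upper = mean_effect + between_study_sd

# Share of a normal distribution that falls inside that range.
dist = NormalDist(mean_effect, between_study_sd)
coverage = dist.cdf(upper) - dist.cdf(lower)

print(f"range: [{lower:.3f}, {upper:.3f}], coverage: {coverage:.3f}")
```

The coverage of a ±1 SD range is about 68%, which is the "about 2/3" referred to in the text.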

Table 2.

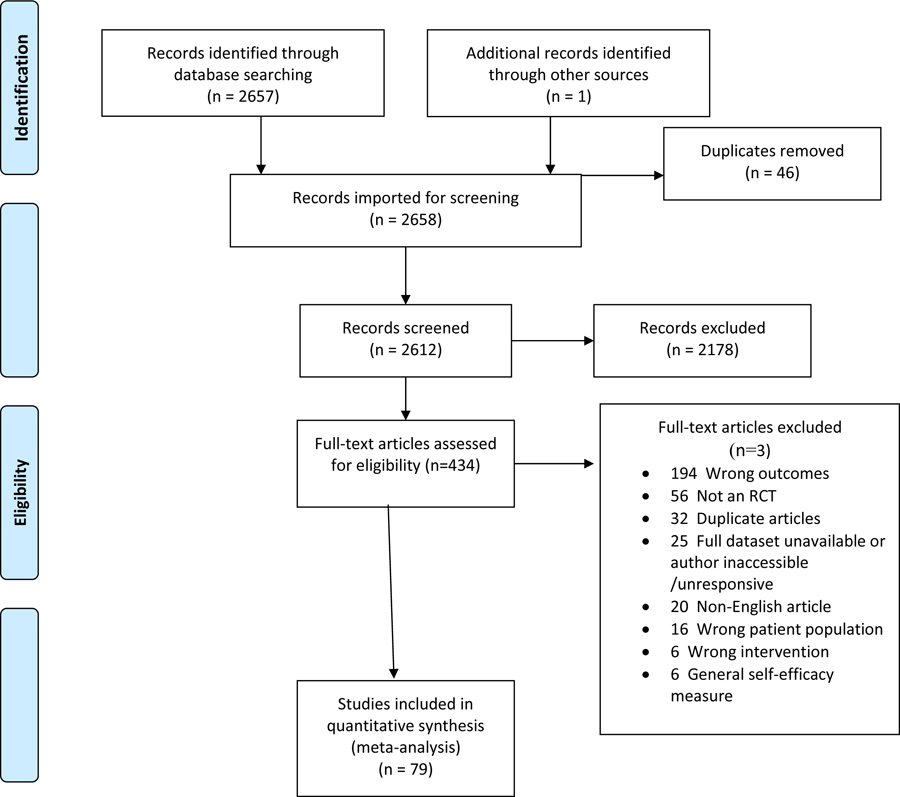

PRISMA Flow Chart


Risk of Bias

Risk-of-bias analyses that were relevant for the type of studies included in this meta-analysis were conducted to determine the extent to which studies included a random sequence generation plan, an allocation concealment plan, full outcome reporting, and low levels of attrition. Table 3 reports the results of a sensitivity analysis examining how study risk-of-bias affected estimates of the overall average effect size and extent of heterogeneity. The first row reports the estimated distribution of effect sizes across all included studies. Subsequent rows report estimates for subsets of studies defined by successively more stringent inclusion criteria for risk-of-bias. Thus, including only the 61 studies (with 185 effects) that were at low risk-of-bias for outcome reporting, the overall average effect size estimate was g = 0.284, 95% CI [0.199, 0.368], between-study SD τ = 0.249. Including only the 31 studies (79 effects) that were also at low risk-of-bias for sequence generation, the overall average effect was slightly lower (g = 0.218, 95% CI [0.096, 0.340], between-study SD τ = 0.262). Imposing further criteria related to allocation concealment and attrition resulted in successively smaller pools of studies, but had very little influence on the overall average effect size estimate. As a whole, these analyses indicated that potential risk of bias factors were not predictive of effect magnitude.

Table 3.

Risk-of-bias analysis using successive inclusion criteria

| Criteria | Studies (Effects) | Estimate [SE] | 95% CI | τ (between-study SD) | I² |
|---|---|---|---|---|---|
| All studies | 79 (223) | 0.274 [0.036] | [0.201, 0.346] | 0.242 | 73% |
| Low ROB outcome reporting | 61 (185) | 0.284 [0.042] | [0.199, 0.368] | 0.249 | 75% |
| Low ROB sequence generation | 31 (79) | 0.218 [0.059] | [0.096, 0.340] | 0.262 | 76% |
| Low ROB allocation concealment | 17 (37) | 0.320 [0.078] | [0.153, 0.487] | 0.255 | 76% |
| Low overall attrition | 11 (23) | 0.244 [0.070] | [0.084, 0.404] | 0.148 | 57% |

Note: ROB = risk-of-bias.

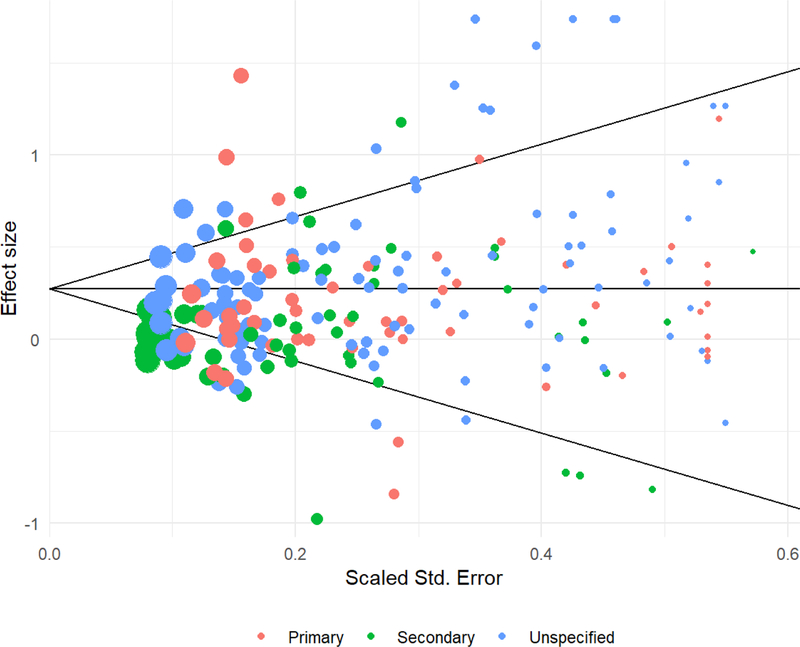

To further investigate possible risks of bias due to incomplete outcome reporting, we examined a funnel plot of effect size estimates and conducted a modified version of Egger’s regression test for funnel plot asymmetry. Figure 1 displays a funnel plot of effect size estimates versus scaled standard errors. No asymmetry is visually apparent. The modified version of Egger’s regression test provided no conclusive evidence of asymmetry: the 95% CI for the estimated slope on the scaled standard error was [−0.543, 0.757], p = .740. Adjusting for the scaled standard error, the overall average effect size from a perfectly precise study was estimated as g = 0.220, 95% CI [0.066, 0.374], p = .008. An alternative approach to examining the consequences of selective reporting is to include only large studies. Limiting the analytic sample to the 27 studies with sample sizes of 100 or larger at post-test leads to similar overall effect size estimates (g = 0.253, 95% CI [0.135, 0.372], p < .001) and heterogeneity estimates (I² = 85%). Thus, using techniques to account for the possibility of bias due to selective reporting, the average effect size remained statistically distinguishable from zero and was only marginally attenuated in magnitude relative to the unadjusted effect.

Figure 1.

Funnel plot of effect size estimates versus scaled standard errors. The area of each point is inversely proportional to the sampling variance of the effect size estimate. Red = primary outcome; green = secondary outcome; blue = unspecified outcome.

Overarching Moderators

In order to test whether design features were predictive of effect size, we examined the general type of intervention in each RCT (i.e., skills training versus some other form of intervention) and whether the self-efficacy measure was a primary or secondary outcome. Interventions focusing primarily on skills training (g = 0.306, 95% CI [0.218, 0.394], k = 58 studies; 171 effects) were not statistically distinguishable from those that were not primarily focused on skills training (g = 0.198, 95% CI [0.039, 0.357], k = 23 studies; 52 effects), F(1, 32.6) = 1.4, p = .245. Also, no differences could be distinguished statistically between effect sizes for studies where self-efficacy was a primary outcome (g = 0.254, 95% CI [0.087, 0.420], k = 21 studies; 56 effects), a secondary outcome (g = 0.222, 95% CI [0.069, 0.374], k = 19 studies; 64 effects), or unspecified as either primary or secondary (g = 0.293, 95% CI [0.194, 0.392], k = 41 studies; 103 effects), F(2, 37.6) = 0.343, p = .712. These results indicate that effects on self-efficacy were associated with neither the prioritization of outcomes nor the overarching type of treatment (i.e., skills training versus other types). These findings provided evidence in support of not distinguishing studies on the basis of these design features.

Demographic and Disease Moderator Analyses

Moderator analyses were conducted on demographic (age, sex) and disease variables (cancer type, phase of treatment). Effect size magnitude was not significantly associated with average age of the sample (slope 95% CI [−0.016, 0.004], p = .223), nor with the proportion of females in the sample (slope 95% CI [−0.001, 0.003], p = .179), nor with the number of weeks post-treatment for follow-up assessment (slope 95% CI [−0.003, 0.004], p = .743). There was no statistically distinguishable difference in effects between studies of breast cancer patients and studies of all other types of cancer (breast: g = 0.334, 95% CI [0.228, 0.439], k = 23 studies; 58 effects; other: g = 0.253, 95% CI [0.154, 0.352], k = 54 studies; 158 effects; F(1, 52.3) = 1.316, p = .257). Finally, there were no statistically distinguishable differences between samples with respect to phase in treatment [F(2, 37.3) = 2.01, p = .148] or stage of cancer [F(2, 15.4) = 0.925, p = .418].

Theory-Based Moderator Hypotheses

In terms of hypothesis testing, there were significant differences between intervention formats [F(1, 43) = 5.560, p = .023]. As hypothesized, the average effect for in-person intervention delivery (g = 0.329, 95% CI [0.240, 0.418], k = 54 studies; 147 effects) was significantly greater than for all other formats (g = 0.154, 95% CI [0.031, 0.277], k = 25 studies; 72 effects), which included online, print media, telephone, and digital media (CDs, DVDs). Contrary to our hypothesis, there was no significant difference for the focus of the intervention [F(2, 23.5) = 0.754, p = .481]; the effect size for group-based interventions (g = 0.358, 95% CI [0.166, 0.551], k = 16 studies; 52 effects) was greater than, although not significantly distinct from, that for dyadic-based interventions (g = 0.264, 95% CI [0.049, 0.479], k = 13 studies; 32 effects) or individual-based interventions (g = 0.235, 95% CI [0.153, 0.317], k = 49 studies; 138 effects).

Intervention Type Moderator Analysis

The interventions from the 79 studies included in the meta-analysis were classified according to Moyer et al. (38) as one of the following: CBT, self-management (e.g., self-management of symptoms, problems and health care), social support, CIM (guided imagery, relaxation, mindfulness meditation), physical activity, psychotherapy, educational, other (not otherwise codable), and multimodal (combination of at least two of the other types of interventions). We found statistically distinguishable differences between these types [F(8, 9.8) = 3.37, p = .039]. The effects for each type (compared to control conditions) revealed a group with medium effect sizes (CIM, g = 0.526, k = 6 studies, 14 effects, p = .014; social support, g = 0.516, k = 5 studies, 7 effects, p = .003; CBT, g = 0.497, k = 13 studies, 40 effects, p < .001; self-management, g = 0.409, k = 6 studies, 10 effects, p = .002); a single intervention type with a small/medium effect size (physical activity, g = 0.251, k = 10 studies, 19 effects, p = .033); and a group with small effect sizes (multimodal, g = 0.190, k = 17 studies, 67 effects, p = .031; psychotherapy, g = −0.112, k = 2 studies, 9 effects, p = .700; educational, g = 0.194, k = 19 studies, 44 effects, p = .008; other, g = −0.044, k = 6 studies, 13 effects, p = .700).

Discussion

Results from this meta-analytic synthesis suggest that self-efficacy expectations significantly improved in the context of psychological interventions compared to control conditions. Moreover, the overall average effect size was comparable to those found in other recent meta-analyses that focused on emotional distress (d=.43) (1), fatigue (d=.33) (3), and pain (d=.34) (4). Importantly, these results occur in the context of studies that were screened for high quality, where there was low risk of bias and no apparent small-study bias.

The current meta-analysis was grounded in theory, which led to hypotheses about conditions where the interventions would be more effective. Consistent with Social Learning and Self-Efficacy Theories (19), conducting the intervention in-person was more effective in enhancing self-efficacy expectations compared to other formats. The in-person format allows for the exchange of information and ideas in a social context that might encourage clarification, persuasion, support, and social modeling, even if the delivery were accomplished in a setting with just the facilitator and the patient. Mustian et al. (3) also reported a significant effect of in-person format for decreasing fatigue, although in that meta-analysis there were not enough studies with other formats to conduct post-hoc comparisons. However, given the variability of formats included in our comparison category, the optimal delivery format for implementing psychologically-based interventions is yet to be definitively determined. For example, even internet-based interventions can have different levels of human interaction, from being exclusively self-directed to requiring considerable content guidance from a human provider (51). A fine-tuned level of comparative analyses among all formats was not possible in the current meta-analysis, but would be a goal for future reviews of interventions with formats that include varying degrees of in-person contact.

In contrast, the results did not confirm the hypothesis regarding intervention-delivery focus, in spite of the larger effect size for group interventions compared to dyadic or individually administered interventions. Pending more comparative findings, we tentatively conclude that in-person formats are preferred to other formats of delivery, regardless of whether they are delivered in groups, dyads, or individually. Interestingly, although Mustian et al. (3) did not conduct post-hoc comparisons, the non-overlapping confidence intervals for the respective effect sizes in that review suggest that group delivery was more effective than individual delivery. Given the larger effect sizes for group delivery versus individual delivery in the current study and in Mustian et al. (3), further study of the optimal delivery format is warranted.

The analyses as a function of the type of intervention were exploratory, but do point to some consistency in the relationship between interventions that were aligned with Self-Efficacy Theory and the quality of self-efficacy effects, especially in the case of those interventions for which there were larger numbers of studies and effects. Although not statistically distinguishable, CBT interventions had the most robust effect sizes compared to those that included multiple intervention components (multimodal) or were strictly educational, though not different from physical activity. Moreover, when grouping studies by effect size, three of the four interventions in the medium effect size range (CBT, social support, and self-management) were theoretically consistent with Self-Efficacy Theory. Bolstering the notion that CBT may be the preferred treatment, in meta-analyses that focused on depression (1) and fatigue (3), CBT was found to be significantly more effective than Problem Solving Therapy in the former and more effective than psychoeducational interventions in the latter.

Finally, although there were fewer studies classified as CIM, several included mindfulness and relaxation with guided imagery (52), which are designed to provide new cognitive perspectives on symptoms and problems. At the very least, we might speculate that CIM, as defined in this analysis, and CBT are effective treatments and more commonly delivered in person, which in this meta-analysis was the most effective delivery format. That said, none of the categories were statistically distinguishable from any other category after accounting for multiple comparisons; the large number of categories and small number of studies in some categories limited power for such comparisons. With the accumulation of more intervention studies in the future, perhaps a more fine-grained analysis of the differences between types of interventions will shed light on interventions that are clearly superior to others.

Clinical Implications

The current study draws attention to the importance of in-person formats for the delivery of interventions for persons with a diagnosis of cancer. This conclusion is drawn tentatively because in-person formats were compared with all other formats combined. Although the results suggest that in-person formats are better, in-person contact could also be integrated into other formats. For example, interventions that are primarily delivered with materials accessible online could be accompanied by periodic in-person sessions or regular face-to-face contact (e.g., Skype) to assist with processing and personalizing the intervention materials. Supporting these clinical implications is the finding by Mustian et al (3) that in-person formats were significantly different from the null. In addition, Mustian et al (3) found that group delivery was significantly better than individual delivery. Although group delivery was not determined to be superior in the current study, it had a much larger effect size than dyadic or individually delivered interventions. Pending more evidence, there is support for in-person and group formats for the delivery of interventions to persons with cancer, which is consistent with Social Learning Theory, the guiding conceptual framework for the current study.

Limitations and Future Directions

It is a limitation of the current study that the control conditions were restricted to those that were not intended to be active treatments. Thus, no comparative studies were included. However, not all control conditions are equal. Future work could look more closely at, for example, placebo and attention controls compared to usual care. This approach would further isolate the specific effects of treatments in situations where the control condition is more plausible from the perspective of participants. Although there were too few studies to test all formats head-to-head, the increase in the use of online and media-based formats should allow for that comparison in the future. These findings also raise the need to take scalability into account in future research. Digital and online health modalities may be more scalable than in-person approaches; therefore, the trade-off between broad reach and effectiveness will need to be addressed in research that compares those formats. Along those lines, well-designed digital health interventions that include key social modeling components (even tailored for demographic similarity to the participant) may be worth exploring.

As a follow-up to this more general analysis, it would be important to look more closely at the studies in which the interventions were exclusively delivered to those in curative treatment and those that were delivered post-treatment. There might be some differences in the types of interventions for those off curative treatment compared to those for patients-in-treatment. Alternatively, there might be a differential responsiveness of patients-in-treatment and off treatment to the same intervention. Thus, while speculative, the transition to being off treatment may set the stage for a readiness to engage in the intervention in a way that is different from the circumstances of still being on active treatment (15, 53).

Conclusions

The focus on self-efficacy grounded this meta-analysis in the positive side of coping with cancer, and the results generally supported the impact of treatments on self-efficacy expectations. Those results are important in that gains in self-efficacy foster important gains in other health behaviors and patient-centered outcomes, and self-efficacy is itself a valued outcome. In addition, the result that in-person interventions may achieve the largest effect is supported by theory; however, future research would need to compare each format individually to confirm the general findings. Moreover, given the importance of scalable interventions for meeting the needs of patients, innovative formats that combine in-person contact and/or social modeling with online and digital methods may have utility. Thus, the challenge for the future is to develop interventions that are both effective and scalable, that is, effective interventions that are able to address demand.

Supplementary Material

Acknowledgments

This research project was supported, in whole or in part, by the National Cancer Institute’s Small Grants Program (Salsman: R03 CA184560) and the National Center for Complementary & Integrative Health (Sohl: K01AT008219). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors would like to thank Carolyn A. Heitzmann Ruhf for her contributions.

Footnotes

Conflict of Interest

The authors declared no conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical Statement

The current project was based on a reanalysis of published data and therefore was exempt from Institutional Review Board review.

Data Availability

A spreadsheet with data extracted from the 79 studies is archived at CurateND: Self-Efficacy RCT Meta-Analysis Spreadsheet with an embargo until March 13, 2020 (54).

References

- (1). Hart S, Hoyt M, Diefenbach M, Anderson D, Kilbourn K, Craft L, et al. Meta-analysis of efficacy of interventions for elevated depressive symptoms in adults diagnosed with cancer. Journal of the National Cancer Institute 2012;104(13):990–1004.

- (2). Mishra S, Scherer R, Snyder C, Geigle P, Gotay C. The effectiveness of exercise interventions for improving health-related quality of life from diagnosis through active cancer treatment. Oncology Nursing Forum 2015;42(1):E33–E53.

- (3). Mustian KM, Alfano CM, Heckler C, Kleckner AS, Kleckner IR, Leach CR, et al. Comparison of pharmaceutical, psychological, and exercise treatments for cancer-related fatigue - A meta-analysis. JAMA Oncology 2017.

- (4). Sheinfeld Gorin S, Krebs P, Badr H, Janke E, Jim HSL, Spring B, et al. Meta-analysis of psychosocial interventions to reduce pain in patients with cancer. Journal of Clinical Oncology 2012;30(5):539–547.

- (5). Foster C, Wright HH, Hopkinson J, Roffe L. Psychosocial implication of living 5 years or more following a cancer diagnosis: A systematic review of the research evidence. European Journal of Cancer Care 2009;18:223–247.

- (6). Harrington CB, Hansen J, Moskowitz M, Todd BL, Feuerstein M. It’s not over when it’s over: Long-term symptoms in cancer survivors - a systematic review. International Journal of Psychiatry in Medicine 2010;40(2):163–181.

- (7). Zabora J, BrintzenhofeSzoc K, Curbow B, Hooker C, Piantadosi S. The prevalence of psychological distress by cancer site. Psychooncology 2001;10(1):19–28.

- (8). van den Beuken-van Everdingen, Hochstenbach, Joosten, Tjan-Heijnen, Janssen. Update on prevalence of pain in patients with cancer: Systematic review and meta-analysis. Journal of Pain and Symptom Management 2016;51(6):1070–1090. doi: 10.1016/j.jpainsymman.2015.12.340

- (9). Dieng M, Butow P, Costa DSJ, Morton R, Menzies S, Mireskandari S, et al. Psychoeducational Intervention to Reduce Fear of Cancer Recurrence in People at High Risk of Developing Another Primary Melanoma: Results of a Randomized Controlled Trial. Journal of Clinical Oncology 2016;34(36):4405–4414.

- (10). Richter D, Koehler M, Friedrich M, Hilgendorf I, Mehnert A, Weißflog G. Psychosocial interventions for adolescents and young adult cancer patients: A systematic review and meta-analysis. Critical Reviews in Oncology 2015;95(3):370–386.

- (11). Pai ALH, Drotar D, Zebracki K, Moore M, Youngstrom E. A meta-analysis of the effects of psychological interventions in pediatric oncology on outcomes of psychological distress and adjustment. Journal of Pediatric Psychology 2006;31(9):978–988.

- (12). Hilfiker R, Meichtry A, Eicher M, Nilsson Balfe L, Knols RH, Verra MM, et al. Exercise and other non-pharmaceutical interventions for cancer-related fatigue in patients during or after cancer treatment: a systematic review incorporating an indirect-comparisons meta-analysis. British Journal of Sports Medicine 2017.

- (13). Lipsett A, Barrett S, Haruna F, Mustian K, O’Donovan A. The impact of exercise during adjuvant radiotherapy for breast cancer on fatigue and quality of life: A systematic review and meta-analysis. Breast 2017;32:144–155.

- (14). van de Wal M, Thewes B, Gielissen M, Speckens A, Prins J. Efficacy of blended cognitive behavior therapy for high fear of recurrence in breast, prostate, and colorectal cancer survivors: The SWORD Study, a randomized controlled trial. Journal of Clinical Oncology 2017:JCO2016705301.

- (15). Philip E, Merluzzi T, Zhang Z, Heitzmann C. Depression and cancer survivorship: importance of coping self-efficacy in post-treatment survivors. Psychooncology 2013;22(5):987–994.

- (16). Taylor C, Lyubomirsky S, Stein M. Upregulating the positive affect system in anxiety and depression: Outcomes of a positive activity intervention. Depression and Anxiety 2017;34(3):267–280.

- (17). Ryan RM, Deci EL. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist 2000;55(1):68–78.

- (18). Carver CS, Scheier M. On the self-regulation of behavior. New York: Cambridge University Press; 1998.

- (19). Bandura A. Self-efficacy: The exercise of control. New York: Freeman; 1997.

- (20). Rahman A, Reed E, Underwood M, Shipley ME, Omar RZ. Factors affecting self-efficacy and pain intensity in patients with chronic musculoskeletal pain seen in a specialist rheumatology pain clinic. Rheumatology 2008;47(12):1803–1808.

- (21). Schwerdtfeger A, Konermann L, Schönhofen K. Self-efficacy as a health-protective resource in teachers? A biopsychological approach. Health Psychology 2008;27(3):358–368.

- (22). Jones F, Partridge C, Reid F. The Stroke Self-Efficacy Questionnaire: measuring individual confidence in functional performance after stroke. Journal of Clinical Nursing 2008;17(7B):244–252.

- (23). Chirico A, Lucidi F, Merluzzi T, Alivernini F, De Laurentiis M, Botti G, et al. A meta-analytic review of the relationship of cancer coping self-efficacy with distress and quality of life. Oncotarget 2017;8(22):36800–36811.

- (24). Liang S, Chao T, Tseng L, Tsay S, Lin K, Tung H. Symptom-management self-efficacy mediates the effects of symptom distress on the quality of life among Taiwanese oncology outpatients with breast cancer. Cancer Nursing 2016;39(1):67–73.

- (25). Merluzzi TV, Nairn RC, Hegde K, Martinez Sanchez MA, Dunn L. Self-efficacy for coping with cancer: revision of the Cancer Behavior Inventory (version 2.0). Psychooncology 2001;10(3):206–217.

- (26). Merluzzi T, Philip E, Heitzmann Ruhf C, Liu H, Yang M, Conley C. Self-Efficacy for Coping With Cancer: Revision of the Cancer Behavior Inventory (Version 3.0). Psychological Assessment 2017.

- (27). Robb C, Lee A, Jacobsen P, Dobbin K, Extermann M. Health and personal resources in older patients with cancer undergoing chemotherapy. Journal of Geriatric Oncology 2013;4(2):166–173.

- (28). Meredith P, Strong J, Feeney J. Adult attachment variables predict depression before and after treatment for chronic pain. European Journal of Pain 2007;11(2):164–170.

- (29). Linde J, Rothman A, Baldwin A, Jeffery R. The impact of self-efficacy on behavior change and weight change among overweight participants in a weight loss trial. Health Psychology 2006;25(3):282–291.

- (30). Marszalek J, Price L, Harvey W, Driban J, Wang C. Outcome expectations and osteoarthritis: Association of perceived benefits of exercise with self-efficacy and depression. Arthritis Care Research 2017;69(4):491–498.

- (31). Sheeran P, Maki A, Montanaro E, Avishai Yitshak A, Bryan A, Klein WMP, et al. The impact of changing attitudes, norms, and self-efficacy on health-related intentions and behavior: A meta-analysis. Health Psychology 2016;35(11):1178–1188.

- (32). Graves K. Social cognitive theory and cancer patients’ quality of life: a meta-analysis of psychosocial intervention components. Health Psychology 2003;22(2):210–219.

- (33). Haynes RB, McKibbon KA, Wilczynski NL, Walter SD, Werre SR. Optimal search strategies for retrieving scientifically strong studies of treatment from Medline: analytical survey. British Medical Journal 2005;330(7051):1179.

- (34). Montori V, Wilczynski N, Morgan D, Haynes RB. Optimal search strategies for retrieving systematic reviews from Medline: analytical survey. British Medical Journal 2005;330(7482):68.

- (35). Eady AM, Wilczynski NL, Haynes RB. PsycINFO search strategies identified methodologically sound therapy studies and review articles for use by clinicians and researchers. Journal of Clinical Epidemiology 2008;61(1):34–40.

- (36). Wong SS, Wilczynski NL, Haynes RB. Optimal CINAHL search strategies for identifying therapy studies and review articles. Journal of Nursing Scholarship 2006;38(2):194–199.

- (37). Royle P, Waugh N. A simplified search strategy for identifying randomised controlled trials for systematic reviews of health care interventions: a comparison with more exhaustive strategies. BMC Medical Research Methodology 2005;5:23.

- (38). Moyer A, Sohl SJ, Knapp-Oliver SK, Schneider S. Characteristics and methodological quality of 25 years of research investigating psychosocial interventions for cancer patients. Cancer Treatment Reviews 2009;35(5):475–484.

- (39). Higgins JPT, Ramsay C, Reeves BC, Deeks JJ, Shea B, Valentine JC, et al. Issues relating to study design and risk of bias when including non-randomized studies in systematic reviews on the effects of interventions. Research Synthesis Methods 2001;4(1):12–25.

- (40). Hedges L. Distribution Theory for Glass’s Estimator of Effect Size and Related Estimators. Journal of Educational Statistics 1981;6(2):107.

- (41). Tukey J. Exploratory Data Analysis. Boston: Addison-Wesley; 1977.

- (42). Lipsey MW, Wilson DB. Practical Meta-analysis. Thousand Oaks, CA: Sage Publications; 2001.

- (43). Telch CF, Telch MJ. Group coping skills instruction and supportive group therapy for cancer patients: A comparison of strategies. Journal of Consulting and Clinical Psychology 1986;54(6):802–808.

- (44). Tipton E, Hedges L, Johnson M. Robust variance estimation in meta-regression with dependent effect size estimates. Research Synthesis Methods 2010;1(1):39–65.

- (45). Tipton E. Small sample adjustments for robust variance estimation with meta-regression. Psychological Methods 2015;20(3):375–393.

- (46). Tipton E, Pustejovsky J. Small-sample adjustments for tests of moderators and model fit using robust variance estimation in meta-regression. Journal of Educational and Behavioral Statistics 2015;40(6):604–634.

- (47). Borenstein M, Higgins J, Hedges L, Rothstein H. Basics of meta-analysis: I² is not an absolute measure of heterogeneity. Research Synthesis Methods 2018;8(1):5–18.

- (48). Pustejovsky JE, Rodgers MA. Testing for funnel plot asymmetry of standardized mean differences. Research Synthesis Methods. doi: 10.1002/jrsm.1332

- (49). Viechtbauer W. Conducting meta-analysis in R with metafor package. Journal of Statistical Software 2010;36(3):1–48.

- (50). Pustejovsky J. clubSandwich: Cluster-robust (Sandwich) variance estimators with small-sample corrections. R package version 0.2.3. 2017; Available at: https://CRAN.R-project.org/package=clubSandwich.

- (51). Mohr D, Cuijpers P, Lehman K. Supportive accountability: A model for providing human support to enhance adherence to eHealth interventions. Journal of Medical Internet Research 2011;13(1):e30.

- (52). Brown KW, Ryan RM. The benefits of being present: Mindfulness and its role in psychological well-being. Journal of Personality and Social Psychology 2003;84(4):822–848.

- (53). Merluzzi T, Philip E, Yang M, Heitzmann C. Matching of received social support with need for support in adjusting to cancer and cancer survivorship. Psychooncology 2016;25(6):684–690.

- (54). [dataset] Merluzzi TV, Pustejovsky JE, Philip EJ, Sohl SJ, Berendsen MA, & Salsman JS; March 14, 2019; Self-efficacy data.csv; CurateND; Link: https://curate.nd.edu/show/jw827943c2d.
