Abstract

Objective:

To evaluate the individual-level impact of an electronic clinical decision support (ECDS) tool, PedsGuide, on febrile infant clinical decision-making and cognitive load.

Methods:

A counterbalanced, prospective, cross-over simulation study was performed among attending and trainee physicians. Participants completed one simulated febrile infant case with PedsGuide and one with a standard reference text available. Cognitive load was assessed using the NASA-Task Load Index (NASA-TLX), which measures mental, physical, and temporal demand, performance, effort, and frustration. Usability was assessed with the System Usability Scale (SUS). Case scores and NASA-TLX scores were compared between conditions.

Results:

A total of 32 participants completed the study. Scores on febrile infant cases were higher with PedsGuide than with the standard reference text (89% vs. 72%, p=0.001). NASA-TLX scores were lower (i.e., more favorable) with PedsGuide than with the control (mental demand 6.34 vs. 11.8, p<0.001; physical demand 2.6 vs. 6.1, p=0.001; temporal demand 4.6 vs. 8.0, p=0.003; performance 4.5 vs. 8.3, p<0.001; effort 5.8 vs. 10.7, p<0.001; frustration 3.9 vs. 10, p<0.001). The mean SUS score was 88 out of 100, rated “acceptable” on the acceptability scale.

Conclusions:

Use of PedsGuide led to increased adherence to guidelines and decreased cognitive load in febrile infant management compared with use of a standard reference tool. This study employs a rarely used, multi-faceted method of assessing ECDS tools (medical decision-making, usability, and cognitive workload) that may be used to assess other ECDS tools in the future.

Keywords: electronic clinical decision support, febrile infant, usability, mental workload

Introduction

Medical knowledge and information are increasing at a rate faster than the human mind can assimilate.1 The need to assimilate more information increases cognitive load, which can lead to medical errors.2 One way to mitigate mental fatigue and disseminate knowledge is through electronic clinical decision support (ECDS) tools. ECDS tools have been deployed across a variety of platforms, including mobile devices via smartphone applications (apps), and range from risk calculators and stratification tools to stepwise management guidance.3 Given that a majority of providers use their cell phones in clinical practice, mobile applications can be particularly useful in settings where providers lack access to expertise.4,5

The explosion of technology in healthcare has created a need for better assessment of these tools. Assessments of ECDS tools have ranged from purely opinion-based studies6,7 to studies assessing task completion, case performance, or accuracy,8–14 often with a usability evaluation.8,10–12,15,16 Cognitive demand has been assessed for electronic health record use; however, very few studies have assessed the effects of ECDS tool use on cognitive demand.2,12,14,17 Some usability studies have been performed during the development phase of an ECDS tool to identify usability issues.15,16 Very few studies combine all of these elements: 1) accuracy or performance evaluation; 2) usability; and 3) effects on cognitive load.12

Despite the large number of ECDS tools available, the United States Food and Drug Administration (FDA) only provides oversight to ECDS applications that are considered direct extensions of medical devices.18,19 Some ECDS tools evaluated in the past have not been suitable for the task designed,8 have had errors or violations that could cause harm to the patient, or have not been user-friendly.20

In 2016, our institution developed a mobile device-based ECDS tool for managing febrile infants called PedsGuide.21 Fever in infants less than 90 days of age can indicate the presence of serious bacterial infection (SBI; defined as urinary tract infection, bacteremia, pneumonia, osteomyelitis, and/or meningitis). SBI is diagnosed in 8-12.5% of febrile infants;22 however, the majority of febrile infants have non-life-threatening, non-SBI illnesses, such as viral infections.23 There is wide variation in the evaluation of febrile infants for suspected SBI across hospitals, emergency departments, and ambulatory settings.24 Variation in the management of young febrile infants is due in part to the availability of different criteria for determining an infant’s risk of SBI. However, when an institution adopts a clinical practice guideline (CPG), care for febrile infants is more uniform,24 which can result in more appropriate use of diagnostic tests, antibiotics, and hospitalization,25 and lower health care costs.26 Thus, implementing CPGs to guide febrile infant management offers significant benefits to patients, families, and the healthcare system; however, disseminating and implementing CPGs on a large scale can be challenging.27 ECDS tools are one way to disseminate CPGs on a large scale, as evidenced by the recent deployment of PedsGuide in a national standardization project.21

The objective of this study was to assess the individual-level impact of PedsGuide use on medical decision-making and cognitive workload by comparing the management of simulated febrile infants with and without PedsGuide.

Methods

Design and population

This study was a counterbalanced, prospective cross-over simulation study to assess the individual-level impact on performance and cognitive load of PedsGuide on febrile infant management. We hypothesized that PedsGuide use would be associated with: 1) increased adherence to evidence-based recommendations as compared to use of a standard reference tool; and 2) lower cognitive demand.

Eligible subjects included attending pediatric emergency medicine and urgent care physicians who were at least 3 years past completion of training, and resident (trainee) physicians. Resident physicians were recruited from pediatric, family medicine, and emergency medicine residency programs that include clinical rotations at Children’s Mercy Kansas City (CMKC); however, residents in the family medicine and emergency medicine programs practice primarily at other institutions. Attending physicians were recruited from the pediatric emergency medicine and urgent care divisions at CMKC. Subjects were recruited from both trainee and established attending backgrounds to ensure diversity of experience and competency in pediatric healthcare within the study. Attending physicians who had been practicing for less than three years after completion of training were excluded because such physicians have been found to practice more similarly to trainees.28 Recruitment was performed using a Research Electronic Data Capture (REDCap) survey sent via email. This study was approved by the CMKC Institutional Review Board.

Study Protocol

The research team created two febrile infant scenarios: a low-risk infant presenting with fever at 40 days of age (case 1) and a higher-risk infant presenting at 28 days of age (case 2). Each case included the history of present illness, birth history, review of systems, and physical exam. An answer key was created for these cases in accordance with previously developed febrile infant risk stratification criteria, in particular the modified Rochester criteria.26,29 The same answers could be obtained using either the ECDS tool or the standard reference tool, The Harriet Lane Handbook.30 The Harriet Lane Handbook is a widely accepted pediatric guide, first published by the Johns Hopkins Hospital in 1953, that has become an internationally respected clinical reference.30 The Harriet Lane Handbook presents modified Rochester criteria for febrile infant management guidance.29

Before the simulations, participants were assigned to counterbalanced orders. Counterbalancing was performed in blocks to ensure an equal distribution of case and condition orders, and an equal number of slots was allocated to resident and attending physicians.31 Each participant therefore performed one case with ECDS and one without. A participant who started without ECDS would then “cross over” and perform the other case with ECDS. Half of the participants started with the ECDS tool and half did not; half started with case 1 and half with case 2. An example of the counterbalancing is shown in Table 1.

Table 1.

Sample of block counterbalance used to determine order of case and condition.

| Participant | First case | Second case |
|---|---|---|
| Attending/Resident 1, 5 | Case 1 without PedsGuide | Case 2 with PedsGuide |
| Attending/Resident 2, 6 | Case 1 with PedsGuide | Case 2 without PedsGuide |
| Attending/Resident 3, 7 | Case 2 with PedsGuide | Case 1 without PedsGuide |
| Attending/Resident 4, 8 | Case 2 without PedsGuide | Case 1 with PedsGuide |
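The rotation in Table 1 can be written as a small lookup. The sketch below is illustrative only: it assumes that participants within each group (attending or resident) are numbered from 1 and cycled through the four rows of Table 1 in sequence, which matches the row labels (1 and 5, 2 and 6, and so on) but is not described as the authors' actual procedure. `ORDERS`, `assign_order`, and `check_block` are hypothetical names. The sketch also checks the balancing properties stated in the text.

```python
from collections import Counter

# The four case/condition orders in Table 1.
# Each order is ((case, uses_pedsguide) for the first case, same for the second case).
ORDERS = [
    ((1, False), (2, True)),   # row 1: participants 1, 5, ...
    ((1, True), (2, False)),   # row 2: participants 2, 6, ...
    ((2, True), (1, False)),   # row 3: participants 3, 7, ...
    ((2, False), (1, True)),   # row 4: participants 4, 8, ...
]


def assign_order(participant_number):
    """Return the order for the nth participant (1-based) within one group.

    Hypothetical helper: assumes sequential rotation through ORDERS.
    """
    if participant_number < 1:
        raise ValueError("participant numbers start at 1")
    return ORDERS[(participant_number - 1) % len(ORDERS)]


def check_block(block):
    """Check the balancing properties described in the text for a list of orders."""
    for first, second in block:
        # Each participant does both cases, one with and one without PedsGuide.
        assert {first[0], second[0]} == {1, 2}
        assert first[1] != second[1]
    starts_with_app = Counter(first[1] for first, _ in block)
    starts_with_case = Counter(first[0] for first, _ in block)
    # Half start with PedsGuide, and half start with case 1.
    assert starts_with_app[True] == starts_with_app[False]
    assert starts_with_case[1] == starts_with_case[2]
    return True


if __name__ == "__main__":
    # Eight participants in one group; the same rotation would apply to the other group.
    for n in range(1, 9):
        print(n, assign_order(n))
    print(check_block([assign_order(n) for n in range(1, 9)]))
```

Cycling through complete blocks of four keeps the design balanced whenever a group's size is a multiple of four, which is one reason block counterbalancing is convenient for small samples.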

When not using PedsGuide, the resident or attending physician had the option to use The Harriet Lane Handbook,30 but was not required to use it. Use of PedsGuide was required in the PedsGuide condition in order to assess the strengths and weaknesses of the ECDS tool. Participants who had not previously used or downloaded PedsGuide were allowed up to five minutes to become familiar with the app. Participants were instructed only in basic navigation of PedsGuide, such as how to move forward or backward within the app (i.e., the in-app back button and, on Android devices, the phone’s back button); the interactive nature of the app was not demonstrated. Study personnel recorded whether PedsGuide or The Harriet Lane Handbook was used for each question within each case. Participants were provided a smartphone with PedsGuide already installed and were not required to use their personal phones. Either an Android or an iPhone device was used, depending on compatibility with the available recording equipment (laptop); the app’s functionality is identical across platforms. The phone screen was shared with the laptop using either AirServer® (App Dynamic ehf, Kópavogur, Iceland) or AirPlay mirroring on iPhone (Apple Inc., Cupertino, CA). Morae recording software (TechSmith, Okemos, MI) was used to record screens and voice to determine the screens viewed, time on each screen, and participants’ comments.

The study was performed in an office setting at the participant’s convenience. On the study day, each participant completed a survey that recorded demographics, level of training, previous clinical training, and comfort with and barriers to use of ECDS tools. Participants then performed the cases with and without PedsGuide according to the counterbalanced order. After each case, the participant completed the NASA-Task Load Index (NASA-TLX), a validated subjective workload assessment tool that measures six domains contributing to workload.32 The NASA-TLX assesses the mental demand, physical demand, temporal demand, performance, effort, and frustration of task completion, each on a scale of 1-21. For example, the effort item, ‘How hard did you have to work to accomplish your level of performance?’, was rated from very low (1) to very high (21). Lower scores are better, indicating less demand, effort, and frustration and better self-rated performance (Supplementary Figs. 1,2).32
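Because each NASA-TLX domain is rated on the 1-21 scale above and the scores are averaged by domain (as described under Data Analysis), the per-domain means reduce to simple averages. The sketch below is a minimal illustration under stated assumptions: it computes unweighted means and does not implement the optional pairwise-weighting step of the original NASA-TLX; the data layout (one dictionary per participant per condition) and the name `domain_means` are hypothetical; and the ratings in the usage example are toy values, not study data.

```python
from statistics import mean

# The six NASA-TLX domains, in the order listed in the text.
DOMAINS = ("mental", "physical", "temporal", "performance", "effort", "frustration")
SCALE_MIN, SCALE_MAX = 1, 21  # 1-21 rating scale described above; lower is better


def domain_means(ratings):
    """Return the unweighted mean rating for each NASA-TLX domain.

    `ratings` holds one dict per participant (for a single condition),
    mapping every domain name to a rating on the 1-21 scale.
    """
    if not ratings:
        raise ValueError("no ratings supplied")
    for r in ratings:
        missing = [d for d in DOMAINS if d not in r]
        if missing:
            raise ValueError(f"missing domains: {missing}")
        for d in DOMAINS:
            if not SCALE_MIN <= r[d] <= SCALE_MAX:
                raise ValueError(f"{d} rating {r[d]} is outside {SCALE_MIN}-{SCALE_MAX}")
    return {d: mean(r[d] for r in ratings) for d in DOMAINS}


if __name__ == "__main__":
    # Toy ratings for two hypothetical participants, not study data.
    with_tool = [dict.fromkeys(DOMAINS, 4), dict.fromkeys(DOMAINS, 6)]
    print(domain_means(with_tool))  # every domain averages to 5
```

Running the function once per condition yields the two sets of domain means that are compared between PedsGuide and the standard reference tool.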

After completing the case using PedsGuide, each participant completed the System Usability Scale (SUS; Supplementary Fig. 3).33 The SUS is a subjective measure of an application’s usability and was used in this study to assess participants’ perceptions of the usability of PedsGuide. The SUS was developed in the 1980s, and its applicability to measuring usability has been extensively documented in the literature.34 At the end of both cases, each participant was asked for feedback regarding PedsGuide.

Data Analysis

Adherence to evidence-based recommendations in each simulation was compared by condition state (PedsGuide used during the scenario versus control). The highest possible score for each scenario was 14 points; scores were converted to percentages for analysis. All statistical analyses were performed using SPSS v. 23.0 (IBM Corp, Armonk, NY). An a priori power analysis indicated that a minimum of 30 participants would provide 95% power to detect an effect size of 0.7 at a two-tailed significance level of 0.05. Mean percentage scores were compared by condition state using the Wilcoxon signed-rank test for paired data because the scores were not normally distributed. NASA-TLX scores were averaged by domain (mental demand, physical demand, temporal demand, performance, effort, frustration), and the means were compared. Differences in NASA-TLX scores were tested using ANOVA for mental demand and Welch’s test for the other domains, because Levene’s statistic indicated that variances were not homogeneous for those domains.
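The paired comparison of case scores can be sketched in plain Python. This is an illustrative implementation of the Wilcoxon signed-rank test using the large-sample normal approximation with a tie correction and no continuity correction; it is not the SPSS procedure the authors used, and SPSS may report exact or differently corrected p-values. Raw scores out of 14 are first converted to percentages as described above. The function names are hypothetical, and the usage values are toy scores, not study data.

```python
import math


def to_percent(raw_score, max_score=14):
    """Convert a case score (maximum 14 points in this study) to a percentage."""
    return 100.0 * raw_score / max_score


def average_ranks(values):
    """Rank values 1..n in ascending order, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def wilcoxon_signed_rank(with_tool, without_tool):
    """Paired Wilcoxon signed-rank test, normal approximation with tie correction.

    Returns (W+, two-sided p). Zero differences are discarded before ranking.
    """
    if len(with_tool) != len(without_tool):
        raise ValueError("samples must be paired")
    diffs = [a - b for a, b in zip(with_tool, without_tool) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    # Null variance of W+, reduced by the standard correction for tied |d|.
    tie_sizes = {}
    for v in abs_diffs:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values()) / 48
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, p


if __name__ == "__main__":
    # Toy raw scores (out of 14) for five hypothetical participants, not study data.
    app = [to_percent(s) for s in (13, 12, 14, 11, 13)]
    ref = [to_percent(s) for s in (10, 11, 9, 11, 8)]
    print(wilcoxon_signed_rank(app, ref))
```

For real analyses, `scipy.stats.wilcoxon` provides the same test, including exact p-values for small samples; the hand-written version is shown only to make the ranking and variance steps explicit.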

Responses to the individual SUS items are combined into a single total score out of 100. This score can then be interpreted using acceptability ranges (e.g., not acceptable, marginal, acceptable), a grade scale (e.g., A, B, C, D, F), or adjective ratings (e.g., worst imaginable, poor, OK, good, excellent, best imaginable).
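The conversion from item responses to a 0-100 score follows Brooke's standard SUS scoring rule for the 10-item questionnaire. Each odd-numbered (positively worded) item contributes its response minus 1, each even-numbered (negatively worded) item contributes 5 minus its response, and the sum is multiplied by 2.5. The sketch below implements only that rule. The function name is hypothetical, and the acceptability, grade, and adjective mappings are deliberately omitted, since their cut-offs should come from the cited source rather than from this example.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from 10 item responses.

    `responses` lists the answers to items 1-10 in order, each on the
    1 (strongly disagree) to 5 (strongly agree) scale.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError(f"item {item_number} response {response} is outside 1-5")
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


if __name__ == "__main__":
    # One hypothetical respondent's answers to items 1-10 (not study data).
    print(sus_score([5, 1, 5, 2, 4, 1, 5, 1, 4, 2]))  # 90.0
```

A study-level figure such as a mean SUS score is then the average of these per-participant scores.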

Results

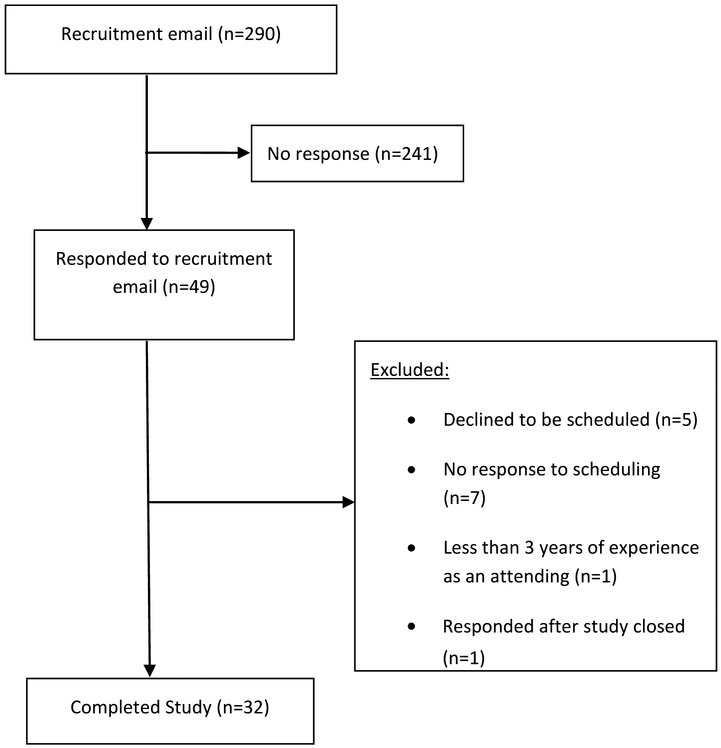

A total of 290 resident and attending physicians were sent the recruitment email; 32 participants completed the study. Details of recruitment and enrollment are summarized in Figure 1.

Figure 1.

Consolidated Standards of Reporting Trials diagram of participants recruited and enrolled for study of PedsGuide.

Participant demographics are summarized in Table 2. Most attending physician participants had been in practice for ≥10 years. Most participants (n=25) had used ECDS tools at least once a week. The majority of participants reported that they felt comfortable using ECDS and that they would use it in the future. A total of 20 (63%) participants had used PedsGuide before participating in the study.

Table 2.

Characteristics of study participants divided by resident and attending physicians.

| Demographics | n=32 (%) |
|---|---|
| Gender | |
| Female | 16 (50) |
| Mean age (years) | 39.29 (SD 12.4) |
| Age range (years) | 24-62 |
| Resident physicians | 16 (50) |
| Training level | n=16 |
| PGY1 | 4 (25) |
| PGY2 | 6 (37.5) |
| PGY3 | 6 (37.5) |
| Attending physicians | 16 (50) |
| Years of practice post residency/fellowship | n=16 |
| 3-<10 | 4 (25) |
| 10-<20 | 3 (18.8) |
| 20-<30 | 7 (43.8) |
| ≥30 | 2 (12.5) |
| Primary specialty | |
| Pediatrics | 27 |
| Other* | 5 |
| Race | |
| Non-Hispanic Caucasian | 26 (81.3) |
| Asian | 5 (15.6) |
| African-American | 0 |
| Hispanic | 1 (3.1) |
| Prior use of ECDS | |
| Multiple times a day | 11 (34.4) |
| Daily | 8 (25) |
| About once a week | 6 (18.8) |
| Monthly | 2 (6.3) |
| A few times a year | 3 (9.4) |
| Never | 2 (6.3) |
| Download of PedsGuide prior to study | 19 (59.4) |
| Use of PedsGuide prior to study | 20 (62.5) |
| Comfort with ECDS (1=uncomfortable, 5=very comfortable) | |
| 1 | 0 |
| 2 | 1 (3.1) |
| 3 | 4 (12.5) |
| 4 | 16 (50) |
| 5 | 11 (34.4) |
| Plan on ECDS use in future | |
| Strongly disagree | 0 |
| Somewhat disagree | 0 |
| Neutral | 0 |
| Somewhat agree | 7 (21.9) |
| Strongly agree | 25 (78.1) |

*Other: Internal Medicine-Pediatrics 1, Emergency Medicine 3, Family Medicine 1. PGY, post-graduate year.

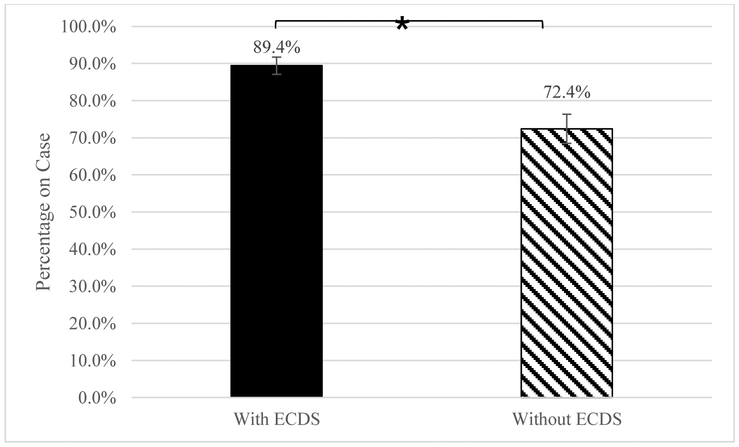

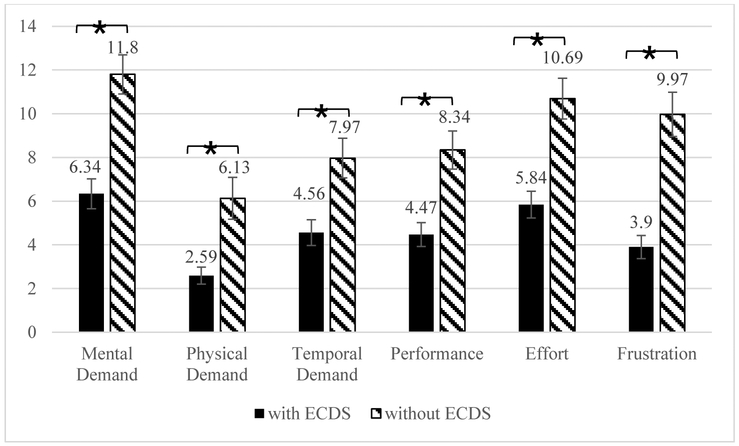

Mean scores on the febrile infant cases were significantly higher with PedsGuide than with the standard reference tool (89% vs. 72%, p=0.001; Fig. 2). Analysis of NASA-TLX responses revealed that participants reported less frustration (p<0.001), mental demand (p<0.001), physical demand (p=0.001), temporal demand (p=0.003), and overall effort (p<0.001) with ECDS than with the standard reference tool (Fig. 3). Respondents also self-reported better performance when completing simulations with PedsGuide (4.47 vs. 8.34, p<0.001).

Figure 2.

Mean percentage on cases with and without use of ECDS. Bars represent standard error. *P=0.001. ECDS indicates electronic clinical decision support.

Figure 3.

NASA-TLX average scores with and without use of ECDS. Bars represent standard error. Lower scores are more favorable, indicating less mental, physical, and temporal demand, effort, and frustration, and better self-rated performance. *P<0.01. NASA-TLX indicates NASA-Task Load Index; ECDS, electronic clinical decision support.

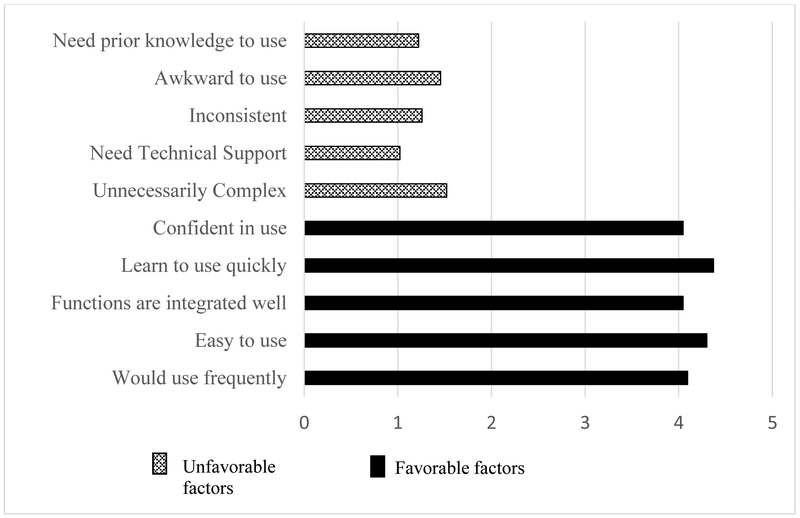

Results from the SUS showed that participants considered PedsGuide a usable ECDS tool. There was strong agreement with favorable items, such as the app being easy to use and its use being quick to learn. Users disagreed that PedsGuide required technical support or prior knowledge to use (Fig. 4). The mean SUS score was 88 out of 100, which corresponds to a “B” on the grade scale, “excellent” on the adjective rating scale, and “acceptable” on the acceptability ranges.34

Figure 4.

System Usability Scale (SUS) average results. Scale 1-5, 1=strongly disagree, 5=strongly agree.

Discussion

This study demonstrates that use of the ECDS tool PedsGuide led to increased adherence to evidence-based recommendations, as shown by higher scores on simulated cases when PedsGuide was used compared with a standard reference tool. Participants also reported lower cognitive load with PedsGuide in terms of mental demand, physical demand, temporal demand, effort, and frustration, as well as significantly better self-rated performance. The adjective and grade ratings on the SUS suggest that PedsGuide is a usable ECDS tool. Our study demonstrates how usability assessment, cognitive workload and performance assessment, and simulation can be combined to assess the impact of ECDS tool use on a clinician’s medical decision-making and cognitive workload.

Assessing ECDS tools with a multi-faceted approach is important to ensure both safety and clinical effectiveness. Some ECDS tools evaluated in the past have not been suitable for the task designed,8 have had errors or violations that could cause harm to the patient, or have not been user-friendly,20 and one study found that the SUS (usability assessment) correlated only weakly with task completion.8 Because opinions vary and SUS scores do not always correlate with task completion, the assessment of an ECDS tool should include task completion, usability assessment, and evaluation of cognitive load.35 The multi-faceted approach used in this study includes a task assessing clinical effectiveness and safety (performance of scenarios with and without the ECDS tool), a survey-based usability assessment of the ECDS tool (the SUS), and an assessment of cognitive load, which contributes to patient and physician safety (the NASA-TLX). Feedback from this study varied with regard to usability. Some participants thought the app would benefit from “having a progress bar or showing where you’ve been or where you’re going,” while others thought the app was “easy to use, set up one step at a time.” This type of analysis can also prompt post-development changes that improve usability.

Many participants felt that PedsGuide helped them be “thorough about all aspects [of febrile infant care],” expressed that PedsGuide “makes me feel safer,” and said they would “show it to anybody who will listen.” In contrast, some participants felt they would be more confident using the app if there were “both an endorsement and source page,” while others expressed distrust of ECDS in general: “Sometimes using ECDS is like making decisions with blinders on.” ECDS tools may be used to recognize clinical conditions or condition states; however, this does not always translate into the desired clinical effect.36 Assessments of the individual-level impact of ECDS tools that combine task completion or accuracy with usability and cognitive load assessments have rarely been performed.12 The approach described in the present study has the potential to enhance future ECDS tool development if used before an ECDS tool is released.

This study has limitations. First, the use of clinical vignettes without high-fidelity simulation did not provide a real-world experience for participants. However, communicating specific cues and information that should clearly prompt specific clinical decisions allows better assessment of participants’ use of decision support resources, which was the primary focus of the simulation. Second, a simulated setting may involve less stress or cognitive load because no real patient is being assessed. However, because participants completed vignettes under both conditions (app and standard reference), the observed differences in adherence to guidelines and cognitive load cannot be ascribed to vignette use alone. Third, all attending physicians were trained in pediatrics, and the majority of resident physicians were in a pediatric residency program. Therefore, most participants had more experience with febrile infants than is typical of physicians trained in emergency medicine or family medicine. Despite this greater expertise, performance was still better when using PedsGuide, and it is reasonable to hypothesize that PedsGuide would have an even greater impact on febrile infant management among providers with less pediatric experience. Fourth, many participants had used PedsGuide before the study, which may have increased their familiarity with the tool and improved their NASA-TLX and/or SUS scores. However, usability and cognitive load responses from participants with no prior PedsGuide use were similar to those of participants who reported prior use (Supplementary Figs. 4, 5). Lastly, using The Harriet Lane Handbook as the control, rather than an alternative electronic resource, may not provide an optimal comparison for ECDS tools. However, given the broad availability of The Harriet Lane Handbook across clinical settings and the lack of a single, universal electronic reference or tool, The Harriet Lane Handbook was determined to be the most appropriate comparator.

Conclusions

Use of PedsGuide led to increased adherence to guidelines and decreased cognitive load in febrile infant management during completion of standardized scenarios, compared with use of a standard non-electronic reference tool. Our study highlights a rarely used method of combining assessments of ECDS usability, cognitive workload, and medical decision-making to evaluate ECDS tools, which could improve the effectiveness of such tools when they are deployed to healthcare providers in the future. Future studies are planned to assess the effect of PedsGuide use on clinical practice patterns and associated health outcomes at the population level.

Supplementary Material

NASA-TLX administered after the case performed without the ECDS tool.32

NASA-TLX administered after the case performed with the ECDS tool.32

System Usability Scale.33

NASA-TLX scores with and without prior use of PedsGuide for the case in which ECDS was used. Bars indicate standard error.

SUS scores with and without prior use of PedsGuide. Bars indicate standard error.

What’s New:

This study shows that the use of an electronic clinical decision support tool led to increased adherence to guidelines and decreased cognitive workload in a simulated setting. It demonstrates a multi-faceted approach for assessing electronic clinical decision support tools.

Acknowledgements

The salaries for Russell McCulloh and Ellen Kerns were supported in part by the National Institutes of Health Grants UG1HD090849 and UG1OD024953. These funding sources had no involvement in the study design, collection, analysis, or interpretation of the data.

Conflict of interest: The salaries for Russell McCulloh and Ellen Kerns were supported in part by the National Institutes of Health Grants UG1HD090849 and UG1OD024953. These funding sources had no involvement in the study design, collection, analysis, or interpretation of the data.

Footnotes

Study performed at: Children’s Mercy Kansas City, 2401 Gillham Road, Kansas City, MO, 64108, USA.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. Densen P. Challenges and Opportunities Facing Medical Education. Trans Am Clin Climatol Assoc. 2011;122:48–58. doi: 10.4172/2155-9619.S6-009

2. Wilbanks BA, McMullan SP. A Review of Measuring the Cognitive Workload of Electronic Health Records. CIN Comput Inform Nurs. 2018;36:579–588. doi: 10.1097/CIN.0000000000000469

3. Gálvez JA, Lockman JL, Schleelein LE, et al. Interactive pediatric emergency checklists to the palm of your hand - How the Pedi Crisis App traveled around the world. Paediatr Anaesth. 2017;27:835–840. doi: 10.1111/pan.13173

4. Ginsburg AS, Delarosa J, Brunette W, et al. MPneumonia: Development of an innovative mHealth application for diagnosing and treating childhood pneumonia and other childhood illnesses in low-resource settings. PLoS One. 2015;10:e0139625. doi: 10.1371/journal.pone.0139625

5. Wallace S, Clark M, White J. “It’s on my iPhone”: attitudes to the use of mobile computing devices in medical education, a mixed-methods study. BMJ Open. 2012;2:e001099. doi: 10.1136/bmjopen-2012-001099

6. Webb K, Bullock A, Dimond R, Stacey M. Can a mobile app improve the quality of patient care provided by trainee doctors? Analysis of trainees’ case reports. BMJ Open. 2016;6:e013075. doi: 10.1136/bmjopen-2016

7. Payne KFB, Weeks L, Dunning P. A mixed methods pilot study to investigate the impact of a hospital-specific iPhone application (iTreat) within a British junior doctor cohort. Health Informatics J. 2014;20(1):59–73. doi: 10.1177/1460458213478812

8. Kim MS, Aro MR, Lage KJ, Ingalls KL, Sindhwani V, Markey MK. Exploring the Usability of Mobile Apps Supporting Radiologists’ Training in Diagnostic Decision Making. J Am Coll Radiol. 2016;13:335–343. doi: 10.1016/j.jacr.2015.07.021

9. McEvoy MD, Hand WR, Stiegler MP, et al. A Smartphone-based decision support tool improves test performance concerning application of the guidelines for managing regional anesthesia in the patient receiving antithrombotic or thrombolytic therapy. Anesthesiology. 2016;124:186–198. doi: 10.1097/ALN.0000000000000885

10. Meyer AND, Thompson PJ, Khanna A, et al. Evaluating a mobile application for improving clinical laboratory test ordering and diagnosis. J Am Med Inform Assoc. 2018;25(7):841–847. doi: 10.1093/jamia/ocy026

11. Li AC, Kannry JL, Kushniruk A, et al. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int J Med Inform. 2012;81:761–772. doi: 10.1016/j.ijmedinf.2012.02.009

12. Militello LG, Diiulio JB, Borders MR, et al. Evaluating a Modular Decision Support Application For Colorectal Cancer Screening. Appl Clin Inform. 2017;8(1):162–179. doi: 10.4338/ACI-2016-09-RA-0152

13. Cairns AW, Bond RR, Finlay DD, et al. A decision support system and rule-based algorithm to augment the human interpretation of the 12-lead electrocardiogram. J Electrocardiol. 2017;50(6):781–786. doi: 10.1016/j.jelectrocard.2017.08.007

14. Thongprayoon C, Harrison AM, O’Horo JC, Berrios RAS, Pickering BW, Herasevich V. The Effect of an Electronic Checklist on Critical Care Provider Workload, Errors, and Performance. J Intensive Care Med. 2016;31(3):205–212. doi: 10.1177/0885066614558015

15. Melnick ER, Hess EP, Guo G, et al. Patient-Centered Decision Support: Formative Usability Evaluation of Integrated Clinical Decision Support With a Patient Decision Aid for Minor Head Injury in the Emergency Department. J Med Internet Res. 2017;19(5):e174. doi: 10.2196/jmir.7846

16. Press A, McCullagh L, Khan S, Schachter A, Pardo S, McGinn T. Usability Testing of a Complex Clinical Decision Support Tool in the Emergency Department: Lessons Learned. JMIR Hum Factors. 2015;2(2):e14. doi: 10.2196/humanfactors.4537

17. Ancker JS, Edwards A, Nosal S, Hauser D, Mauer E, Kaushal R. Effects of workload, work complexity, and repeated alerts on alert fatigue in a clinical decision support system. BMC Med Inform Decis Mak. 2017;17(1):36. doi: 10.1186/s12911-017-0430-8

18. Cortez NG, Cohen IG, Kesselheim AS. FDA regulation of mobile health technologies. N Engl J Med. 2014;371(4):372–379. doi: 10.1056/NEJMhle1403384

19. FDA. Mobile Medical Applications: Guidance for Industry and Food and Drug Administration Staff. Food Drug Adm. 2015:1–45.

20. Beuscart-Zéphir M-C, Elkin P, Pelayo S, Beuscart R. The human factors engineering approach to biomedical informatics projects: state of the art, results, benefits, and challenges. IMIA Yearbook of Medical Informatics. 2007;46(1):109–127.

21. McCulloh RJ, Fouquet SD, Herigon J, et al. Development and implementation of a mobile device-based pediatric electronic decision support tool as part of a national practice standardization project. J Am Med Inform Assoc. 2018;25(9):1175–1182. doi: 10.1093/jamia/ocy069

22. Aronson PL. Evaluation of the febrile young infant: an update. Pediatr Emerg Med Pract. 2013;10(2):1–17.

23. Colvin JM, Muenzer JT, Jaffe DM, et al. Detection of Viruses in Young Children With Fever Without an Apparent Source. Pediatrics. 2012;130(6):e1455–e1462. doi: 10.1542/peds.2012-1391

24. Aronson PL, Thurm C, Williams DJ, et al. Association of clinical practice guidelines with emergency department management of febrile infants ≤56 days of age. J Hosp Med. 2015;10(6):358–365. doi: 10.1002/jhm.2329

25. Byington CL, Reynolds CC, Korgenski K, et al. Costs and Infant Outcomes After Implementation of a Care Process Model for Febrile Infants. Pediatrics. 2012;130:e16–e24. doi: 10.1542/peds.2012-0127

26. Baker M, Bell L, Avner J. Outpatient management without antibiotics of fever in selected infants. J Pediatr. 1993;329(20):1437–1441. doi: 10.1016/S0022-3476(05)81384-8

27. McCulloh RJ, Alverson BK. The Challenge-and Promise-of Local Clinical Practice Guidelines. Pediatrics. 2012;130(5):941–942. doi: 10.1542/peds.2012-2085

28. Goodwin JS, Salameh H, Zhou J, Singh S, Kuo YF, Nattinger AB. Association of hospitalist years of experience with mortality in the hospitalized medicare population. JAMA Intern Med. 2018;178(2):196–203. doi: 10.1001/jamainternmed.2017.7049

29. Baraff LJ. Management of infants and young children with fever without source. Pediatr Ann. 2008;37(10):673–679. doi: 10.3928/00904481-20081001-01

30. Hughes HK, Kahl LK, eds. The Johns Hopkins Hospital: The Harriet Lane Handbook. Twenty-First ed. Philadelphia, PA: Elsevier; 2018.

31. Lin Y, Zhu M, Su Z. The pursuit of balance: An overview of covariate-adaptive randomization techniques in clinical trials. Contemp Clin Trials. 2015;45(Pt A):21–25. doi: 10.1016/j.cct.2015.07.011

32. Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv Psychol. 1988;52:139–183. doi: 10.1016/S0166-4115(08)62386-9

33. Brooke J. SUS: A “Quick and Dirty” Usability Scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland AL, eds. Usability Evaluation in Industry. London: Taylor and Francis; 1996.

34. Brooke J. SUS: A Retrospective. J Usability Stud. 2013;8(2):29–40.

35. Harrison R, Flood D, Duce D. Usability of Mobile Applications: Literature Review and Rationale for a New Usability Model. J Interact Sci. 2013;1:1–16. http://www.journalofinteractionscience.com/content/1/1/1

36. Kharbanda EO, Asche SE, Sinaiko A, et al. Evaluation of an Electronic Clinical Decision Support Tool for Incident Elevated BP in Adolescents. Acad Pediatr. 2018;18(1):43–50.
