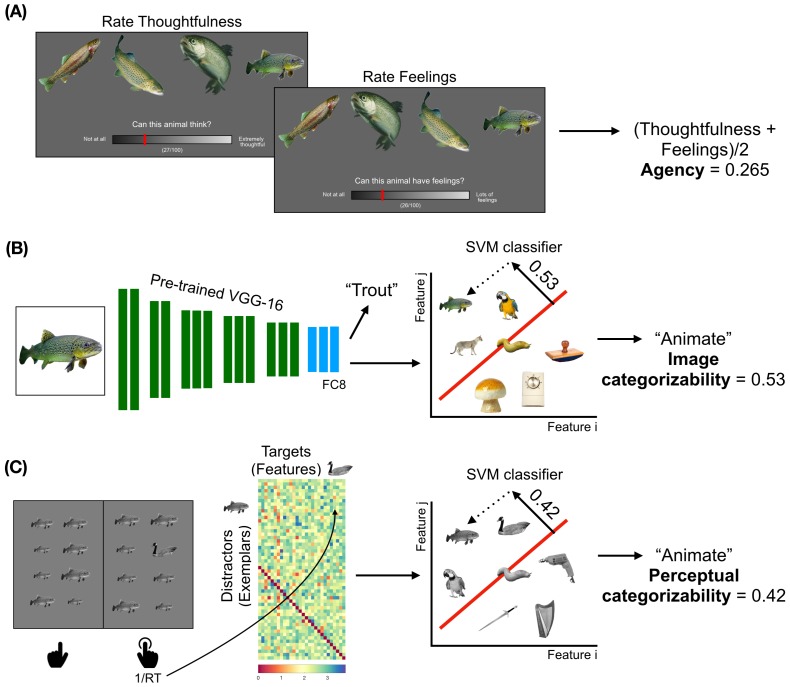

Figure 1. Obtaining the models to describe animacy in the ventral temporal cortex.

(A) Trials from the ratings experiment are shown. Participants were asked to rate 40 animals on three factors: familiarity, thoughtfulness, and feelings. The correlations between the thoughtfulness and feelings ratings are at the noise ceilings of both ratings. Therefore, the average of these two ratings was taken as a measure of agency. (B) A schematic of the convolutional neural network (CNN) VGG-16 is shown. The CNN contains 13 convolutional layers (shown in green), which are constrained to perform spatially local computations across the visual field, and three fully-connected layers (shown in blue). The network is trained to take RGB image pixels as inputs and output the label of the object in the image. Linear classifiers are trained on layer FC8 of the CNN to distinguish between the activation patterns in response to animate and inanimate images. The distance from the decision boundary, toward the animate direction, is the image categorizability of an object. (C) A trial from the visual search task is shown. Participants had to quickly indicate the location (in the left or right panel) of the oddball target among 15 identical distractors that varied in size. The inverses of the pairwise reaction times are arranged as shown. Either the distractors or the targets are assigned as features of a representational space on which a linear classifier is trained to distinguish between animate and inanimate exemplars (Materials and methods). These classifiers are then used to categorize the set of images relevant to subsequent analyses; the distance from the decision boundary, toward the animate direction, is a measure of the perceptual categorizability of an object.
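
Both categorizability measures in (B) and (C) are signed distances from a linear decision boundary, taken as positive toward the animate side. The following is a minimal sketch of that computation, not the procedure used in the study: the caption does not specify the type of linear classifier, so a simple mean-difference classifier is assumed here as a stand-in, and the function names and toy feature values are hypothetical.

```python
import math


def fit_mean_difference_classifier(features, labels):
    """Fit a linear boundary halfway between the two class means.

    ASSUMPTION: a mean-difference classifier stands in for the
    unspecified linear classifier described in the caption.
    features: list of equal-length feature vectors (lists of floats)
    labels:   list of 1 (animate) or 0 (inanimate)
    Returns (w, b), with w pointing toward the animate class.
    """
    dim = len(features[0])

    def class_mean(target):
        rows = [x for x, y in zip(features, labels) if y == target]
        return [sum(r[i] for r in rows) / len(rows) for i in range(dim)]

    mu_animate, mu_inanimate = class_mean(1), class_mean(0)
    w = [a - i for a, i in zip(mu_animate, mu_inanimate)]
    midpoint = [(a + i) / 2 for a, i in zip(mu_animate, mu_inanimate)]
    b = -sum(wi * mi for wi, mi in zip(w, midpoint))
    return w, b


def categorizability(x, w, b):
    """Signed distance of x from the boundary; positive means animate side."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm


# Toy two-dimensional features (hypothetical values, not data from the study).
train_x = [[2.0, 0.0], [4.0, 0.0], [-2.0, 0.0], [-4.0, 0.0]]
train_y = [1, 1, 0, 0]
w, b = fit_mean_difference_classifier(train_x, train_y)

# Score held-out items: one on the animate side, one on the inanimate side.
scores = [categorizability(x, w, b) for x in [[1.0, 5.0], [-3.0, 1.0]]]
print(scores)  # [1.0, -3.0]
```

In this sketch the feature vectors could be FC8 activation patterns for image categorizability, or rows of the inverse reaction time matrix for perceptual categorizability; only the feature space changes, not the distance computation.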