Abstract

The main focus of this study is bridging the “evidence gap” between frontline decision-making in health care and the available evidence, with the hope of reducing unnecessary diagnostic testing and treatments. Building on our work in pulmonary embolism (PE) and the overordering of computed tomography pulmonary angiography, we integrated the highly validated Wells' criteria into the electronic health record at two of our major academic tertiary hospitals. The Wells' clinical decision support tool triggered for patients being evaluated for PE and therefore determined a patient's pretest probability of having a PE. There were 12,759 patient visits representing 11,836 patients; 51% had no D-dimer, 41% had a negative D-dimer, and 9% had a positive D-dimer. Our study gave us an opportunity to determine which patients had a very low probability of PE, with no need for further testing.

INTRODUCTION

A fundamental problem with health care delivery in the United States is that a large percentage of the care delivered is not indicated and is often harmful. U.S. citizens receive only half of recommended medical care (1), and one-third of the care received is unnecessary (2). In addition, in many clinical situations there is readily available evidence to inform proper decision-making and help reduce unnecessary testing and treatments, but that evidence is not used. We classify this juxtaposition, in which patients receive tests or treatments that are not indicated even though available evidence could inform the decision not to perform them, as the “evidence gap” (Figure 1).

Fig. 1.

Bridging the “EVIDENCE GAP.”

Bridging the evidence gap between frontline decision-making in health care and the published evidence with the hope of reducing unnecessary diagnostic testing and treatments is the main focus of our Center for Predictive Medicine at Northwell Health. Our center's particular focus is on the integration of well-validated clinical prediction rules into the point-of-care through a process of usability testing. A clinical prediction rule (CPR) is a decision aid that brings together various components of the history, physical exam, and other easily obtainable lab data and quantifies them as to their ability to predict certain outcomes. CPRs are typically developed to help with quick frontline decisions in clinical care (3).

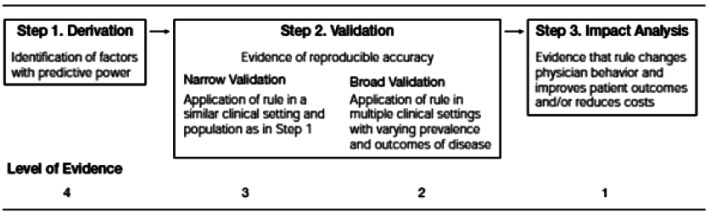

The process of deriving and validating CPRs is complex and includes multiple steps (Figure 2). The first step in the process is the derivation study where the rule is created often using a retrospective database. Once a CPR is derived, it must be both retrospectively and prospectively validated on multiple patient populations. Ideally, these patient populations will vary in important demographics, prevalence, and severity of the condition being studied. Finally, once a CPR is validated, the third step is an impact analysis which is performed to determine the true impact of the CPR on care delivery, once deployed. Impact analysis can determine such things as cost avoidance, cost increase, as well as doctor and patient satisfaction with using the CPR.

Fig. 2.

Development of a clinical prediction rule.

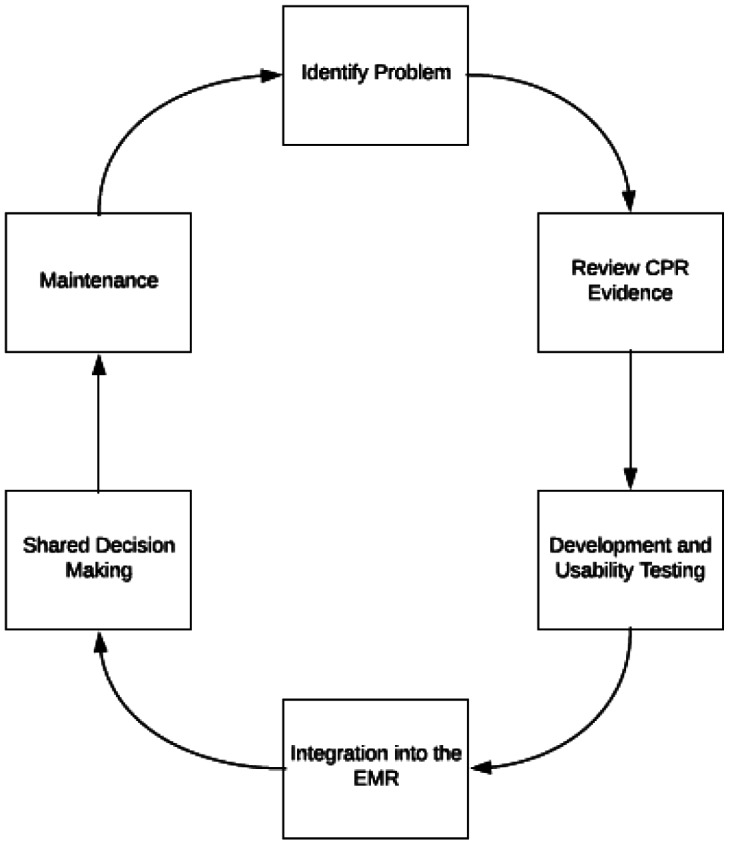

After years of deriving and validating CPRs, it became clear that although these tools had tremendous potential to reduce gaps in care, few providers were actually adopting them at the point of care. This problem of adoption launched the research projects called “Integrated Clinical Prediction Rules (iCPR).” The goal of the iCPR studies (4-6) is to take well-validated CPRs and integrate them into clinical workflow to enhance adoption, and then to measure the impact of that process on test ordering or treatment selection. Our process for integrating CPRs at the point of care has multiple steps (Figure 3) (7). The first step is a rigorous assessment of the quality and validity of the CPR to ensure that it is of the highest validity and should therefore be broadly adopted into the decision-making process throughout health care. The second step involves looking for areas of potential overuse and underuse of both treatments and diagnostic tests where a CPR might enhance the evidence-based decision-making process. The third step in our iCPR process is assessing whether a CPR can be easily integrated into the workflow and electronic health record. Some CPRs are too complex for integration.

Fig. 3.

Steps to complex clinical decision support tool integration.

After applying these three basic parameters (a CPR with a high level of evidence, a clinical area of overuse or underuse of evidence-based health care, and the ease or difficulty of integrating the CPR into the flow of care), our group identified two clinical areas that required attention. The first area was antibiotic prescribing for upper respiratory infections in primary care settings, and the second was the use of computed tomography pulmonary angiography (CTPA) in patients suspected of having pulmonary embolism (PE) in emergency room settings. In both instances there are well-validated CPRs and significant overuse (overprescribing of antibiotics and overordering of CTPAs), and we believed that with appropriate usability testing the CPR could be integrated into the point of care (8,9).

For our work in PE and overordering of CTPAs, we integrated the highly validated Wells' criteria (10-12) (Table 1) into the electronic health record at two of our major academic tertiary hospitals (13). Use of CTPA in the assessment for PE in the emergency department (ED) has significantly increased over the past 2 decades. Whereas this has improved the accuracy of radiologic testing for PE, CTPA also carries the risk of significant iatrogenic harm (14), including a 14% risk of contrast-induced nephropathy (15) and a lifetime malignancy risk of 2.76% in young female patients (16). In 2006, results from the landmark PIOPED II trial established CTPA as the first-choice diagnostic imaging modality, with sensitivity over 90% for patients with high clinical suspicion of PE and specificity of 96% (17,18). Over the next 5 years, there was a 4-fold increase in CTPA use and a 33% decrease in ventilation/perfusion imaging (19).

TABLE 1.

Wells' CPR for Pulmonary Embolism

| Clinical Characteristics of PE | Score |
|---|---|
| Clinical signs and symptoms of DVT (minimum of leg swelling and pain with palpation of the deep veins) | 3 |
| PE as or more likely than an alternative diagnosis | 3 |
| Heart rate greater than 100 | 1.5 |
| Immobilization in the past 4 days or surgery in the previous 4 weeks | 1.5 |
| Previous DVT/PE | 1.5 |
| Hemoptysis | 1 |
| Malignancy | 1 |

PE, pulmonary embolism; DVT, deep vein thrombosis.
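To make the scoring in Table 1 concrete, the following is a minimal sketch of how the point values could be summed for one patient. The weights are copied from Table 1; the dictionary keys and the function name `wells_score` are hypothetical names chosen for illustration and are not taken from the CDS tool itself. The sketch deliberately stops at the raw score and does not apply any risk cutoffs, since none are stated here.

```python
# Point values copied from Table 1. The key names are hypothetical
# labels for illustration, not identifiers from the actual CDS tool.
WELLS_WEIGHTS = {
    "dvt_signs": 3.0,
    "pe_as_or_more_likely": 3.0,
    "heart_rate_over_100": 1.5,
    "immobilization_or_surgery": 1.5,
    "previous_dvt_pe": 1.5,
    "hemoptysis": 1.0,
    "malignancy": 1.0,
}


def wells_score(findings):
    """Sum the Table 1 points for every finding marked as present.

    `findings` maps a key from WELLS_WEIGHTS to True/False.
    Findings that are omitted are treated as absent.
    """
    unknown = set(findings) - set(WELLS_WEIGHTS)
    if unknown:
        raise KeyError(f"unrecognized findings: {sorted(unknown)}")
    return sum(WELLS_WEIGHTS[name] for name, present in findings.items() if present)


# Example: tachycardia and hemoptysis present, no malignancy.
example = {"heart_rate_over_100": True, "hemoptysis": True, "malignancy": False}
print(wells_score(example))  # 2.5
```

Rejecting unrecognized keys is a design choice in this sketch: a misspelled finding raises an error instead of silently contributing zero points.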

As part of an ongoing study, our research team developed a Wells' criteria clinical decision support (CDS) tool with a user-centered design method, applying our previous experience in creating these tools and tailoring it to the ED workflow and environment (9,13,20). Using adaptive principles in web and health information technology design, this tool was developed in three phases: 1) focus-group feedback on documented workflow and compliance barriers; 2) key-informant interviews on the tool's wireframes; and 3) iterative rounds of usability testing of the initial prototype. Following the three phases, the Wells' CDS tool was integrated into the order entry workflow and was triggered when a provider attempted to order tests that are used to evaluate a suspected PE (i.e., CTPA or D-dimer). With the launch of the CDS tool, a tracking tool was developed to monitor the Wells' CDS tool post-implementation. As the provider used the CDS tool to place orders for PE evaluation, the tracking tool monitored the number of times the CDS tool was completed, which was defined as completion of the Wells' risk assessment score for PE, the following recommendations, and the accompanying orders.

CTPA is not recommended in patients who are deemed to have a low probability of PE. However, we began to realize that a large percentage of low-probability patients had a CTPA performed and were being recommended for follow-up studies based on incidental findings on their CTPA. To assess the full extent of this problem, we retrospectively characterized the rate of incidental findings on CTPA in low-probability patients discharged home after the workup of PE. Highlighted below are the preliminary results of our ongoing study.

MATERIALS AND METHODS

As part of a larger ongoing cost-effectiveness study, we performed a retrospective analysis of all ED patients being evaluated for suspected pulmonary embolism at our two tertiary academic hospitals from August 1, 2015, to July 31, 2017. The Wells' CPR CDS tool triggered for patients being evaluated for PE and therefore determined a patient's pretest probability of having a PE.

For each patient with a CDS visit, the first visit was analyzed. Low-probability patients were defined as those with a negative D-dimer during the visit (<500 ng/mL). Very-low-probability patients were defined as CDS patients with a negative D-dimer who were discharged home. For those with a negative D-dimer who were discharged home, all CTPAs performed within 24 hours after the negative D-dimer were evaluated for computed tomography findings. This window was chosen to ensure that the physician had the negative D-dimer result before ordering the test. The percentage of patients with a PE diagnosis was determined for each group. For each PE visit, the PE diagnosis was determined from the diagnosis code (ICD-9 415.xx, ICD-10 I26.) recorded as the principal or a secondary ED discharge diagnosis. Computed tomography angiography (CTA) chest was defined as any CTA chest without concurrent CTA neck, upper extremity, or abdomen. All CTA chest reports were manually confirmed to be CTPA and not CTA aorta by a cardiothoracic radiologist.
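The cohort definitions above can be sketched as a handful of predicates over a visit record. This is an illustrative sketch only: the `Visit` class, its field names, and the prefix matching of diagnosis codes are assumptions made for the example, not the study's actual data model or extraction logic. The 500 ng/mL cutoff, the discharged-home condition, the 24-hour window, and the ICD-9 415 / ICD-10 I26 code families come from the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional, Tuple

D_DIMER_CUTOFF_NG_ML = 500  # a D-dimer below this value counts as negative


@dataclass
class Visit:
    """Hypothetical visit record; field names are assumptions."""
    d_dimer_ng_ml: Optional[float]      # None when no D-dimer was drawn
    d_dimer_time: Optional[datetime]    # assumed to be the result time
    discharged_home: bool
    ctpa_time: Optional[datetime]       # None when no CTPA was performed
    diagnosis_codes: Tuple[str, ...] = ()


def is_low_probability(v: Visit) -> bool:
    """Negative D-dimer during the visit."""
    return v.d_dimer_ng_ml is not None and v.d_dimer_ng_ml < D_DIMER_CUTOFF_NG_ML


def is_very_low_probability(v: Visit) -> bool:
    """Negative D-dimer and discharged home."""
    return is_low_probability(v) and v.discharged_home


def ctpa_within_24h_after_d_dimer(v: Visit) -> bool:
    """CTPA performed no more than 24 hours after the D-dimer."""
    if v.ctpa_time is None or v.d_dimer_time is None:
        return False
    delay = v.ctpa_time - v.d_dimer_time
    return timedelta(0) <= delay <= timedelta(hours=24)


def has_pe_diagnosis(v: Visit) -> bool:
    """Any ICD-9 415.xx or ICD-10 I26 code (prefix match is an assumption)."""
    return any(code.startswith(("415.", "I26")) for code in v.diagnosis_codes)


visit = Visit(
    d_dimer_ng_ml=320.0,
    d_dimer_time=datetime(2016, 3, 1, 10, 0),
    discharged_home=True,
    ctpa_time=datetime(2016, 3, 1, 14, 30),
    diagnosis_codes=("R07.9",),
)
print(is_very_low_probability(visit), ctpa_within_24h_after_d_dimer(visit))
```

Under these assumptions, the subgroup reviewed for incidental findings corresponds to visits for which both `is_very_low_probability` and `ctpa_within_24h_after_d_dimer` return `True`.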

A fellowship-trained cardiothoracic radiologist manually reviewed the reports of all very-low-probability CDS patients with a CTPA within 24 hours after a D-dimer to account for incidental findings. CTPA follow-up recommendations were categorized into nine categories of follow-up (Table 2).

TABLE 2.

Categories of Recommended Follow-Up

| Category |
|---|
| Lung nodule |
| “Other” chest finding |
| Upper abdominal finding |
| Thyroid finding |
| Breast, cardiac, musculoskeletal, vascular finding |

RESULTS

There were 12,759 patient visits representing 11,836 patients (Table 3). Of all the visits, 51% (6,456 of 12,759) had no D-dimer, 41% (5,195 of 12,759) had a negative D-dimer, and 9% (1,108 of 12,759) had a positive D-dimer. For the 11,836 patients, 5,068 were patients in the low-probability category and 3,645 were in the very-low-probability category. Of the very-low-probability group, 877 had a CTPA within 24 hours after a negative D-dimer.
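As a quick arithmetic check on the visit breakdown, the short sketch below recomputes the three D-dimer shares from the reported counts. The counts are taken from the paragraph above; the variable names are illustrative only.

```python
# Visit counts as reported in the Results.
visits = {
    "no D-dimer": 6456,
    "negative D-dimer": 5195,
    "positive D-dimer": 1108,
}

total = sum(visits.values())
assert total == 12759  # matches the reported number of visits

# Share of all visits in each D-dimer category, in percent.
shares = {label: 100 * count / total for label, count in visits.items()}

for label, share in shares.items():
    print(f"{label}: {share:.1f}% (rounds to {round(share)}%)")
```

The whole-number percentages (51%, 41%, and 9%) add up to 101% only because each share is rounded independently; the underlying counts sum exactly to 12,759.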

TABLE 3.

Risk Categories and Percentage of PE Diagnosis

| Risk Category | Number of Patients | % PE Diagnosis |
|---|---|---|
| CDS patients | 11,836 | 5.86% |
| Low prob (CDS and negative D-dimer) | 5,068 | 1.05% |
| Very low prob (CDS and neg D-dimer and discharged home) | 3,645 | 0.08% |
| Very low prob with CTPA within 24 hours of D-dimer | 877 | 0.34% |

CDS, clinical decision support; PE, pulmonary embolism; CTPA, computed tomography pulmonary angiography; prob, probability.

Review of the follow-up recommendations for the 877 very-low-probability patients showed that 79.8% (700 of 877) had no follow-up recommended, 17.6% (154 of 877) had one item recommended, 2.5% (22 of 877) had two items recommended, and 0.1% (1 of 877) had three items recommended. Test recommendation follow-ups are summarized in Table 4.

TABLE 4.

Test Recommendation Follow-Ups

| Name of Test | Number of Tests |
|---|---|
| Chest CT scans for nodules | 69 |
| Thyroid follow-up | 33 |
| Non-nodule chest follow-ups recommended (CT, PET-CT, CT versus MRI, lab values, or follow-up to resolution) | 31 |
| Abdominal finding (clinical correlation, abd MRI, abd CT, abd US, abd CT or MRI, dedicated liver imaging, or endoscopy) | 30 |
| Breast follow-up | 19 |
| Repeat CTPA | 7 |
| Vascular studies for follow-up (neck US, MRI or CTA, aneurysm follow-up, vascular follow-up, or clinical correlation) | 5 |
| Echocardiograms | 4 |
| Musculoskeletal follow-up (2 spine MRIs, 1 chest MRI) | 3 |

abd, abdominal; CT, computed tomography; PET, positron-emission tomography; MRI, magnetic resonance imaging; US, ultrasound; CTPA, computed tomography pulmonary angiography; CTA, computed tomography angiography.
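For readers who want the overall volume of recommended follow-up implied by Table 4, the sketch below simply totals the rows and reports each category's share. The counts are copied from Table 4; the shortened row labels are paraphrases used only as dictionary keys.

```python
# Row counts copied from Table 4; labels are shortened for readability.
follow_ups = {
    "Chest CT scans for nodules": 69,
    "Thyroid follow-up": 33,
    "Non-nodule chest follow-up": 31,
    "Abdominal finding": 30,
    "Breast follow-up": 19,
    "Repeat CTPA": 7,
    "Vascular studies": 5,
    "Echocardiograms": 4,
    "Musculoskeletal follow-up": 3,
}

total_tests = sum(follow_ups.values())
print(f"Total recommended follow-up tests: {total_tests}")  # 201

# Share of all recommended follow-ups contributed by each category.
for name, count in sorted(follow_ups.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {count} ({100 * count / total_tests:.1f}%)")
```

The rows of Table 4 total 201 recommended follow-up tests, with chest CT for nodules as the largest single category.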

DISCUSSION

Avoiding unnecessary medical testing is important for several reasons. There are the more obvious reasons such as cost to the patient, health systems, and the country. In addition, there is the potential harm and inconvenience of the test to the patient. But a more concerning issue regarding expense and harm is the potential for unnecessary follow-up tests that may need to be performed but could have been avoided if the test had never been performed. Our study gave us a unique opportunity by having available pre-test probability estimates; therefore, we could determine which patients were very-low-probability for PE and not in need of further testing.

We defined unnecessary CTPA scans as scans performed in patients who were known to have a very low probability of PE and who were therefore unlikely to benefit from testing with a CTPA. Beyond cost and inconvenience, there is potential for direct harm related to dye load and nephropathy, radiation exposure leading to increased cancer risks, and unnecessary biopsies for false-positive findings. In addition, there is the possibility of indirect harm such as emotional distress, including anxiety and depression, related to a false-positive study (21).

Typically, low-risk patients can be defined by the Wells' criteria, but in our retrospective review we defined them by a negative D-dimer. This definition was supported by the low PE diagnosis rate of 1.05% in that group and by the PE diagnosis rate of 0.08% among those patients discharged home. Wells' criteria were not used because of a lack of complete information. CDS patients were used because, despite the lack of Wells' criteria data, the CDS trigger ensured that patients were being evaluated for PE. Previously published work evaluating the effectiveness of the Wells' criteria found a similar PE diagnosis rate of 0% in patients with a low Wells' score and a negative D-dimer (22).

Physicians are testing more for PE and seem to be finding and treating clinically insignificant PEs. In addition, CDS tools built to estimate the pre-test probability of PE and discourage CTPA use in low-risk patients have been shown to improve CTPA yield (14). We have evidence to help reduce unnecessary testing, and we must support studies to determine the best way to seamlessly integrate that evidence into care.

ACKNOWLEDGMENTS

This study was supported by the U.S. Department of Health and Human Services, Agency for Healthcare Research and Quality 1R24HS022061-01.

Footnotes

Potential Conflicts of Interest: None disclosed.

Contributor Information

THOMAS MCGINN, NEW HYDE PARK, NEW YORK.

STUART COHEN, NEW HYDE PARK, NEW YORK.

SUNDAS KHAN, NEW HYDE PARK, NEW YORK.

SAFIYA RICHARDSON, NEW HYDE PARK, NEW YORK.

MICHAEL OPPENHEIM, NEW HYDE PARK, NEW YORK.

JASON WANG, NEW HYDE PARK, NEW YORK.

DISCUSSION

Selker, Boston: That was a very astute example of what a learning health system should actually be — where you're actually learning stuff. You're applying science and hopefully improving care. At the rate we make clinical predictions, by the next millennium we'll have sufficient numbers to actually affect care. What is a way that we can actually get prediction support in a way that is timely (i.e., in our lifetime)?

McGinn, Manhasset: I'm a firm believer that just using large datasets and doing retrospective validation is not going to carry the day. When I first arrived at Northwell we derived our prediction rule and we validated our prediction rule on clots and bleeds. It took me about a year and a half just to do the derivation and retrospective study. Then, a couple of years later, I could do the same dataset in about 3 months. So, the speed at which we can derive and retrospectively validate, I think, is astronomically improved.

Golden, Baltimore: I was looking at your EMR and it looked like EPIC. You don't have to comment one way or the other but that's what we….

McGinn, Manhasset: I have one comment about EMRs. You know they all really suck? Some suck more than others.

Golden, Baltimore: So, I won't comment about that part specifically, but in the Johns Hopkins Health System we do use EPIC. We have developed some insulin prescribing clinical decision support tools. I was really impressed with the degree of uptake you had in usage. So, my question is how did you integrate it into EPIC so that it was actually easy for providers to use it?

McGinn, Manhasset: I have a whole talk on usability: the science of integration. The biggest problem we have right now in electronic health records is people don't think it's a science to create methods by which the doctor and the patient and the computer can interface. The first year of our study was just the integration into the EHR and doing it in a way that we could adopt. We had simulation lab usage. We had patients coming in and doctors testing it. These days, people just shove things into the computer and then people click through it. So, the key is time, effort, and design.

Thibault, Boston: You've got a new medical school there doing innovative things. How are you and Larry Smith incorporating this into how you're teaching the next generation of doctors?

McGinn, Manhasset: When you start introducing some of these tools you do it the right way. They are sophisticated users. So, if you talk about design, they get really angry very quickly if you don't know how to design these things. But once they learn how to use them and they like them, it's actually hard to do randomized trials because they find the tools and use them. So, they are really some of the great partners in creating these things and designing them... and they love it. Also, this is a way to get people to do a history and physical, because the prediction models are basically good solid approaches of history and physical exam findings, and we are trying to take them away from just ordering diagnostic tests inappropriately. So, we use it as a great teaching tool.

Petersen, Houston: A lot of the reasons we physicians do things, or don't do things, are very complicated and have more to do with the field of implementation science than they do with evidence. Sometimes we know evidence, but there are other reasons, whether they're patient-driven or system-driven. So, what are your thoughts about other ways to overcome this?

McGinn, Manhasset: Another body of work that we look at is what we call doctor-patient evidence congruence. Take the Ottawa ankle rule for ankle fracture. Doctors wanted help to protect them from the demands of their patients for getting the x-rays. So, how do you help them? If there's not congruence between what the doctor really wants and the evidence, adoption is poor. They don't want to prescribe an antibiotic, so you can help them. But in cardiac work and chest pain workup they don't want to be told not to admit the patient. So, then you have to work on the doctor's side of the equation. It's a cultural phenomenon. That's the whole usability technique: to get into the mind of the physician and why they're resisting the adoption. One also has to examine the general work area. ERs are very difficult to work in; you've got PAs and nurses. If you don't take into account the cultural environment, it doesn't matter. The electronic health record is a small, tiny piece of the puzzle. We usually do environmental workflow analysis and cultural analysis and other things before we go in.

Del Rio, Atlanta: On your data, you say there are a lot of things that we discover. When we start chasing something, maybe some of those things we chase are actually good. It'll be interesting to see what is a false-positive versus a true new incidental finding that actually changes outcomes for a patient. The other thing is waste. We all quote that there's 30% of waste. But the problem with waste is you don't know it's waste until later. No physician I know does a test and says, “Oh this is a waste of time.” The waste happens afterwards, when you look back. How good is the prediction model in actually helping people in that decision-making?

McGinn, Manhasset: That's what a prediction model is supposed to do. The Wells' criteria with a negative D-dimer is an amazingly accurate predictor of not finding a PE. You don't need to do anything else after that. Now it doesn't know that you happen to have a small lung nodule that could end up being an early stage cancer. But you weren't ordering the test for that reason. That's the whole job of a prediction model. It is to tell you with a high degree of confidence there's no ankle fracture, there's no head fracture, there's no pneumonia... and they do a very good job of it.

Sherman, Houston: Your talk started with a one-sided hypothesis of overutilization. Can you discuss the application of these prediction rules to areas of notorious underutilization, under-diagnosis and under-treatment, like cardiac disease in women?

McGinn, Manhasset: Rachel Burger at the University of Pittsburgh described the under-detection of infant child abuse. We created two prediction models that are now integrated in all of Pennsylvania and now rolling out in New York. So, it can work on increasing use of tests and of treatments. Some prediction models can highlight missed increased risk and show the need for a test or treatment that has not been ordered enough.

REFERENCES

- 1. McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348:2635–45. doi: 10.1056/NEJMsa022615.

- 2. Makary MA, Daniel M. Medical error-the third leading cause of death in the US. BMJ. 2016;353:i2139. doi: 10.1136/bmj.i2139.

- 3. McGinn TG, Guyatt GH, Wyer PC, et al. Users' guides to the medical literature: XXII: how to use articles about clinical decision rules. Evidence-Based Medicine Working Group. JAMA. 2000;284:79–84. doi: 10.1001/jama.284.1.79.

- 4. McGinn TG, McCullagh L, Kannry J, et al. Efficacy of an evidence-based clinical decision support in primary care practices: a randomized clinical trial. JAMA Intern Med. 2013;173:1584–91. doi: 10.1001/jamainternmed.2013.8980.

- 5. Mann DM, Kannry JL, Edonyabo D, et al. Rationale, design, and implementation protocol of an electronic health record integrated clinical prediction rule (iCPR) randomized trial in primary care. Implement Sci. 2011;6:109. doi: 10.1186/1748-5908-6-109.

- 6. Feldstein DA, Hess R, McGinn T, et al. Design and implementation of electronic health record integrated clinical prediction rules (iCPR): a randomized trial in diverse primary care settings. Implement Sci. 2017;12:37. doi: 10.1186/s13012-017-0567-y.

- 7. McGinn T. Putting meaning into meaningful use: a roadmap to successful integration of evidence at the point of care. JMIR Med Inform. 2016;4:e16. doi: 10.2196/medinform.4553.

- 8. Li AC, Kannry JL, Kushniruk A, et al. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int J Med Inform. 2012;81:761–72. doi: 10.1016/j.ijmedinf.2012.02.009.

- 9. Press A, McCullagh L, Khan S, et al. Usability testing of a complex clinical decision support tool in the emergency department: lessons learned. JMIR Hum Factors. 2015;2:e14. doi: 10.2196/humanfactors.4537.

- 10. Bates SM, Jaeschke R, Stevens SM, et al. Diagnosis of DVT: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest. 2012;141(suppl 2):e351S–418S. doi: 10.1378/chest.11-2299.

- 11. Wells PS, Owen C, Doucette S, et al. Does this patient have deep vein thrombosis? JAMA. 2006;295:199–207. doi: 10.1001/jama.295.2.199.

- 12. Ceriani E, Combescure C, Le Gal G, et al. Clinical prediction rules for pulmonary embolism: a systematic review and meta-analysis. J Thromb Haemost. 2010;8:957–70. doi: 10.1111/j.1538-7836.2010.03801.x.

- 13. Khan S, McCullagh L, Press A, et al. Formative assessment and design of a complex clinical decision support tool for pulmonary embolism. Evid Based Med. 2016;21:7–13. doi: 10.1136/ebmed-2015-110214.

- 14. Richardson S, Solomon P, O'Connell A, et al. A computerized method for measuring computed tomography pulmonary angiography yield in the emergency department: validation study. JMIR Med Inform. 2018;6:e44. doi: 10.2196/medinform.9957.

- 15. Niemann T, Zbinden I, Roser HW, et al. Computed tomography for pulmonary embolism: assessment of a 1-year cohort and estimated cancer risk associated with diagnostic irradiation. Acta Radiol. 2013;54:778–84. doi: 10.1177/0284185113485069.

- 16. Mitchell AM, Jones AE, Tumlin JA, et al. Prospective study of the incidence of contrast-induced nephropathy among patients evaluated for pulmonary embolism by contrast-enhanced computed tomography. Acad Emerg Med. 2012;19:618–25. doi: 10.1111/j.1553-2712.2012.01374.x.

- 17. Stein PD, Woodard PK, Weg JG, et al. Diagnostic pathways in acute pulmonary embolism: recommendations of the PIOPED II Investigators 1. Radiology. 2007;242:15–21. doi: 10.1148/radiol.2421060971.

- 18. Remy-Jardin M, Pistolesi M, Goodman LR, et al. Management of suspected acute pulmonary embolism in the era of CT angiography: a statement from the Fleischner Society 1. Radiology. 2007;245:315–29. doi: 10.1148/radiol.2452070397.

- 19. Mettler FA, Huda W, Yoshizumi TT, Mahesh M. Effective doses in radiology and diagnostic nuclear medicine: a catalog. Radiology. 2008;248:254–63. doi: 10.1148/radiol.2481071451.

- 20. Press A, Khan S, McCullagh L, et al. Avoiding alert fatigue in pulmonary embolism decision support: a new method to examine “trigger rates”. Evid Based Med. 2016;21:203–7. doi: 10.1136/ebmed-2016-110440.

- 21. Koroscil MT, Bowman MH, Morris MJ, et al. Effect of a pulmonary nodule fact sheet on patient anxiety and knowledge: a quality improvement initiative. BMJ Open Qual. 2018;7:e000437. doi: 10.1136/bmjoq-2018-000437.

- 22. Drescher FS, Chandrika S, Weir ID, et al. Effectiveness and acceptability of a computerized decision support system using modified Wells criteria for evaluation of suspected pulmonary embolism. Ann Emerg Med. 2011;57:613–21. doi: 10.1016/j.annemergmed.2010.09.018.