Abstract

Orienting covert spatial attention to a target location enhances visual sensitivity and benefits performance in many visual tasks. How these attention-related improvements in performance affect the underlying visual representation of low-level visual features is not fully understood. Here we focus on characterizing how exogenous spatial attention affects the feature representations of orientation and spatial frequency. We asked observers to detect a vertical grating embedded in noise and performed psychophysical reverse correlation. Doing so allowed us to make comparisons with previous studies that utilized the same task and analysis to assess how endogenous attention and presaccadic modulations affect visual representations. We found that exogenous spatial attention improved performance and enhanced the gain of the target orientation without affecting orientation tuning width. Moreover, we found no change in spatial frequency tuning. We conclude that covert exogenous spatial attention alters performance by strictly boosting gain of orientation-selective filters, much like covert endogenous spatial attention.

Keywords: exogenous attention, reverse correlation, feature representation

Introduction

Covert spatial attention is a selective process that allows us to filter incoming information by enhancing visual processing at relevant spatial locations while suppressing signals at irrelevant locations in the absence of eye movements. There are two types of covert spatial attention. One is transient, automatic, and stimulus driven—exogenous attention—and is deployed in about 100 ms. The other is slow, voluntary, and goal driven—endogenous attention—and is deployed in about 300 ms (reviews by Carrasco, 2011, 2014). Both types of attention improve performance in tasks mediated by contrast sensitivity and spatial resolution (reviews by Anton-Erxleben & Carrasco, 2013; Carrasco & Barbot, 2015).

Exogenous and endogenous spatial attention often have similar perceptual effects, but there are notable differences (for reviews, see Carrasco, 2011, 2014; Carrasco & Barbot, 2015; Carrasco & Yeshurun, 2009). For instance, using the external noise paradigm (Lu & Dosher, 1998), it has been reported that both exogenous and endogenous attention increase contrast sensitivity in the presence of high external noise, but exogenous attention also does so under low noise conditions (Lu & Dosher, 2000). Moreover, both types of attention improve performance in tasks mediated by spatial resolution, such as visual search (Carrasco & Yeshurun, 1998), acuity and hyperacuity (Carrasco, Williams, & Yeshurun, 2002; Golla, Ignashchenkova, Haarmeier, & Thier, 2004; Montagna, Pestilli, & Carrasco, 2009), and crowding (Grubb et al., 2013; Yeshurun & Rashal, 2017). However, a seminal difference between these two attentional mechanisms was revealed with texture segmentation tasks that are constrained by spatial resolution. Whereas endogenous attention flexibly alters resolution, thereby improving performance at central and peripheral locations (Barbot & Carrasco, 2017; Jigo & Carrasco, 2018; Yeshurun, Montagna, & Carrasco, 2008), exogenous attention inflexibly increases resolution, resulting in a benefit in performance in the periphery and a decrement at central locations, where resolution is already too high for the task (Yeshurun & Carrasco, 1998). This pattern of results is known as the central attentional impairment (Talgar & Carrasco, 2010; Yeshurun et al., 2008; Yeshurun & Carrasco, 1998, 2000). Adapting observers to high spatial frequencies (SF) removes this impairment, suggesting that exogenous attention automatically increases resolution by enhancing sensitivity to high SFs (Carrasco, Loula, & Ho, 2006).

Whether and how covert attention affects sensory tuning has been studied for the past couple of decades. In electrophysiological studies, attention modulates neural tuning functions by changing their gain (scaling the amplitude of the response; McAdams & Maunsell, 1999), their tuning width (increasing selectivity by narrowing the response; Martinez-Trujillo & Treue, 2004), or both (for reviews, see Ling, Jehee, & Pestilli, 2015; Maunsell, 2015; Reynolds & Chelazzi, 2004; Reynolds & Heeger, 2009). The attentional modulation of the tuning function depends on the type of attention being manipulated. For example, endogenous spatial attention has been shown to affect visual representations by strictly boosting gain without changing tuning width, in both psychophysical (Barbot, Wyart, & Carrasco, 2014; Ling, Liu, & Carrasco, 2009; Lu & Dosher, 2004; Paltoglou & Neri, 2012; Wyart, Nobre, & Summerfield, 2012) and neurophysiological studies (Cook & Maunsell, 2004; McAdams & Maunsell, 1999; Treue, 2001).

Another way to selectively attend to spatial locations is with presaccadic attention, which is deployed quickly, about 70 ms after cue onset (Deubel & Schneider, 2003; Kowler, Anderson, Dosher, & Blaser, 1995; Rolfs & Carrasco, 2012), just before an eye movement is made. Recent studies have shown that presaccadic attention enhances the gain and reduces the width of the orientation tuning for the saccade target (Li, Barbot, & Carrasco, 2016; Ohl, Kuper, & Rolfs, 2017). Moreover, it increases the gain of high SFs at the saccade landing position (Li et al., 2016), even when doing so is detrimental for the task (Li, Pan, & Carrasco, 2019). Given that presaccadic attention is deployed voluntarily in response to a central cue, it has been compared to endogenous attention (Li et al., 2016, 2019; Rolfs & Carrasco, 2012). However, like exogenous attention, it is deployed much faster than endogenous attention; thus, comparing these three types of attention is of interest.

How exogenous attention alters visual representations remains unclear, and the question has been addressed with various techniques. Studies using classification images have reported mixed effects of exogenous attention on position tuning: one reported that spatial attention enhances the gain of position tuning without changing its width (Eckstein, Shimozaki, & Abbey, 2002), whereas another reported narrower position tuning and increased gain at high SFs at the cued location (Megna, Rocchi, & Baldassi, 2012). Using pattern masking, researchers have reported changes in orientation gain but not in tuning width (Baldassi & Verghese, 2005). Lastly, a study using critical-band masking and letter identification found that attention increased gain without affecting the channel's spatial frequency tuning (Talgar, Pelli, & Carrasco, 2004). Given such variations in methods and findings, it is not possible to directly compare exogenous attention and other attentional modulations at the level of visual representation.

To investigate how exogenous spatial attention affects feature representations, we asked observers to detect a vertical grating embedded in noise and performed psychophysical reverse correlation. Doing so enabled us to compare our findings with those of studies on endogenous spatial attention (Barbot, Wyart, & Carrasco, 2014; Wyart et al., 2012) and presaccadic attention (Li et al., 2016) that used the same task and analysis. Specifically, we focus on two fundamental features of early vision, SF and orientation, which are jointly represented in V1 (Hubel & Wiesel, 1959; Maffei & Fiorentini, 1977). There are two plausible outcomes: (a) Exogenous attention may reshape sensory tuning similarly to presaccadic attention, given that the two are similar both in speed of deployment and in their enhancement of high SFs, even when this enhancement is detrimental to the task (Carrasco, Loula, & Ho, 2006; Li et al., 2019; Yeshurun & Carrasco, 1998); (b) Alternatively, exogenous spatial attention may alter visual representations similarly to endogenous spatial attention, only increasing gain in the orientation domain and leaving SF tuning unaffected, given that both are covert attention systems.

Methods

Participants

Fifteen observers (nine females, six males; aged 20–34), including two of the authors, participated in the study. The dataset of one observer was excluded because it was very noisy and difficult to fit (mean R² of 0.62, collapsed across orientation/SF and attention condition; all other observers' R² values fell between 0.87 and 0.97). All observers provided written informed consent and had normal or corrected-to-normal vision. All experimental procedures were in accordance with the Declaration of Helsinki and approved by the University Committee on Activities Involving Human Subjects at New York University.

Apparatus

Observers sat in a dark room with their head positioned on a chinrest 57 cm away from a color-calibrated CRT monitor (1280 × 960 resolution; 100 Hz refresh rate). An Apple iMac computer was used to control stimulus presentation and collect responses. Stimuli were generated using MATLAB (MathWorks, Natick, MA) and the Psychophysics toolbox (Brainard, 1997; Pelli, 1997). An EyeLink 1000 Desktop Mount (SR Research) recorded eye position at 1000 Hz.

Procedure

Observers performed a visual detection task. A test stimulus, defined as the patch probed by the response cue, was presented at one of four possible spatial locations denoted by placeholders composed of four dots forming a square (3° width; Figure 1A). The placeholders were presented at each intercardinal location at 10° eccentricity and remained on the screen for the entire trial. All stimuli were presented on a gray background with a luminance of 70 cd/m². The target was a vertically oriented Gabor generated by modulating a 1.5 cycles/° sine wave with a Gaussian envelope (0.8° SD; Figure 1B). The Gabor's phase was random on each trial. Four independent patches of white noise were randomly generated on each trial and bandpass-filtered to contain SFs between 0.75 and 3.0 cycles/°. The noise was scaled to a root-mean-square (RMS) contrast of 0.35, which was fixed across trials to keep the total power of the noise image constant. In half of the trials, the test stimulus was the target embedded in the noise patch; in the other half, only noise was presented (Figure 1B).

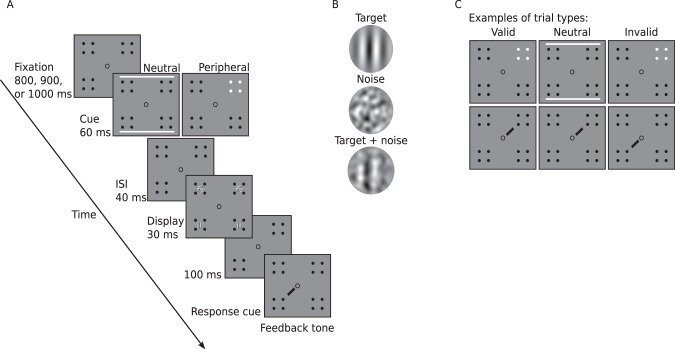

Figure 1.

Behavioral task. (A) A trial sequence. Following a brief fixation period, either a peripheral placeholder flashed white (peripheral cue) or two white bars flashed simultaneously in the upper and lower visual field, directly above or below the corresponding placeholders (neutral cue). After an interstimulus interval (ISI), either noise patches (N) or targets embedded in noise (T+N) were presented concurrently inside all four placeholders for 30 ms. On any given trial, the response cue location was predetermined, and the stimuli at the other three locations were randomized. After a 100 ms interval, a response cue appeared at fixation to indicate the location for which the observer had to report whether a Gabor was present or absent. On one third of the trials, the response cue pointed to the precued location. On another third of the trials, the response cue pointed to a location that was not precued. In the remaining trials, the precue did not indicate any particular location, and the response cue was equally likely to point to any location. (B) Stimuli. On half of the trials, only noise was presented at the precued location; in the other half, the target was presented with noise. The noise was filtered white noise, and the target was a vertical Gabor with a randomly chosen phase. (C) Examples of types of trials. In a valid trial, the location indicated by the peripheral cue matched the response cue. In an invalid trial, the location indicated by the peripheral cue did not match the response cue. In a neutral trial, the cue was presented above and below the placeholders in the upper and lower visual field, and the response cue had equal probability of pointing to a location in either the right or left hemifield.

Each trial began with one of three possible fixation periods (800, 900, or 1000 ms with equal probability) to diminish temporal expectation and ensure that our attentional effects were solely driven by the cues. Following the fixation period, a precue was presented for 60 ms (Figure 1A). After a brief interstimulus interval of 40 ms, four patches (one test stimulus and three irrelevant stimuli), one at each location, were simultaneously presented for 30 ms. We note that the SOA between the cue and target (100 ms) is optimal for exogenous attention and too short for endogenous attention to exert its effects, which requires ∼300 ms (Cheal, Lyon, & Hubbard, 1991; Hein, Rolke, & Ulrich, 2006; Ling & Carrasco, 2006; Liu, Stevens, & Carrasco, 2007; Müller & Rabbitt, 1989; Nakayama & Mackeben, 1989; Remington, Johnston, & Yantis, 1992). Each location had equal probability (50%) of displaying a patch composed of noise only or a target embedded in noise. Whether a target was presented was determined independently for each location. The test stimulus was indicated by the central response cue, which was displayed 100 ms after the offset of the four patches. There were three experimental conditions: (a) In the valid-cue condition, the precue location matched the response cue location, pointing toward the test stimulus; (b) In the invalid-cue condition, the precue location did not match the response cue location, pointing toward one of the three irrelevant stimuli; and (c) In the neutral-cue condition, the precue did not indicate any particular location (Figure 1C). In the valid and invalid conditions, the precue consisted of the four dots of the cued placeholder turning white for 60 ms (Figure 1A and C). In the neutral condition, the cue consisted of two horizontal white bars spanning both hemifields, presented above the upper placeholders and below the lower placeholders (Figure 1A and C).
The three cueing conditions were interleaved and presented with equal probability (33%). The precue was uninformative regarding which location would be probed by the response cue, so overall there was no incentive to attend to the precued location.1 The response cue remained on the screen until response. The observer's task was to detect whether the test stimulus contained a target grating. Observers pressed the “z” key on a mechanical keyboard to indicate present and the “?” key to indicate absent. Auditory feedback was presented at the end of each trial: a high tone indicated a correct response, and a low tone an incorrect response. At the end of each block, observers also received feedback in the form of percent correct.

All experimental conditions were randomized within each of seven blocks. Each block consisted of 144 trials, for a total of 1008 trials per session; each observer completed five experimental sessions. Observers first participated in one training session to become familiar with the task. During this session, a titration procedure using best PEST (Pentland, 1980) measured the contrast detection threshold, defined as the contrast yielding 75% correct in the neutral condition. The average target contrast across observers was 14% for the first session. Each observer's performance was monitored in each session, and the titration was repeated if performance had increased or decreased by more than 5%.
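
The threshold titration can be illustrated with a minimal best-PEST sketch. It keeps a log-likelihood over a grid of candidate thresholds and tests each trial at the current maximum-likelihood estimate, which is the core idea of Pentland's procedure. The logistic psychometric function (50% correct at very low contrast, 75% at threshold), its slope, the threshold grid, and the names `p_correct` and `BestPest` are assumptions for illustration, not the parameters used in the experiment.

```python
import numpy as np


def p_correct(log_contrast, log_threshold, slope=4.0):
    """Hypothetical psychometric function: 50% correct at very low contrast,
    75% correct when log10 contrast equals the threshold."""
    return 0.5 + 0.5 / (1.0 + np.exp(-slope * (log_contrast - log_threshold)))


class BestPest:
    """Maximum-likelihood (best PEST) threshold tracking over a grid of log10 thresholds."""

    def __init__(self, grid=None, slope=4.0):
        self.grid = np.linspace(-3.0, 0.0, 301) if grid is None else grid
        self.slope = slope
        self.loglik = np.zeros_like(self.grid)  # flat prior over candidate thresholds

    def next_log_contrast(self):
        # Test at the current maximum-likelihood threshold estimate.
        return self.grid[np.argmax(self.loglik)]

    def update(self, log_contrast, correct):
        # Add the log-probability of the observed outcome under each candidate threshold.
        p = p_correct(log_contrast, self.grid, self.slope)
        self.loglik += np.log(p if correct else 1.0 - p)


# Usage: simulate an observer whose true threshold is 14% contrast.
rng = np.random.default_rng(1)
true_log_threshold = np.log10(0.14)
pest = BestPest()
for _ in range(200):
    x = pest.next_log_contrast()
    pest.update(x, rng.random() < p_correct(x, true_log_threshold))
estimated_contrast = 10 ** pest.next_log_contrast()
```

The simulated observer here follows the same psychometric function the tracker assumes, which is the best case; with real observers the assumed slope and guess rate matter.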

Eye position was monitored online. Stimulus presentation was contingent upon fixation. A trial was considered to have a fixation break if eye position deviated more than 1.5° away from fixation, from the beginning of a trial until presentation of the response cue. Trials with fixation breaks were removed and repeated at the end of the block.
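
A minimal sketch of the fixation-break rule, assuming gaze samples already converted to degrees of visual angle relative to the fixation point. The helper `run_trial` is hypothetical: it stands for whatever presents one trial and returns the gaze samples recorded from trial onset until the response cue. Broken trials are appended to the end of the queue, mirroring how they were repeated at the end of the block; the gaze-contingent start of stimulus presentation is not modeled.

```python
import numpy as np


def has_fixation_break(gaze_x_deg, gaze_y_deg, fix_x=0.0, fix_y=0.0, radius_deg=1.5):
    """True if any gaze sample lies more than radius_deg from the fixation point."""
    dist = np.hypot(np.asarray(gaze_x_deg) - fix_x, np.asarray(gaze_y_deg) - fix_y)
    return bool(np.any(dist > radius_deg))


def run_block(trials, run_trial):
    """Run a block, re-queuing trials with fixation breaks at the end of the block.

    run_trial (hypothetical) presents one trial and returns (gaze_x, gaze_y) samples
    recorded from trial onset until the response cue.
    """
    queue = list(trials)
    completed = []
    while queue:
        trial = queue.pop(0)
        gaze_x, gaze_y = run_trial(trial)
        if has_fixation_break(gaze_x, gaze_y):
            queue.append(trial)  # repeat this trial at the end of the block
        else:
            completed.append(trial)
    return completed
```

As written, the loop only ends once every trial has been completed with stable fixation, so a real implementation would likely add a cap on repeats.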

Data analysis

Signal detection

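Each observer's performance was evaluated by d′ (Figure 2A), criterion (Figure 2B), and reaction time (Figure 2C), where

$$d' = Z(\text{hit rate}) - Z(\text{false-alarm rate}), \qquad c = -0.5\,\big[Z(\text{hit rate}) + Z(\text{false-alarm rate})\big]$$

and Z is the inverse of the standard normal cumulative distribution function.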
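
A minimal sketch of these two measures, using SciPy's `norm.ppf` as Z. The function name and count-based inputs are illustrative. The text does not say how hit or false-alarm rates of exactly 0 or 1 were handled, so no correction is applied here and such rates would yield infinite values.

```python
from scipy.stats import norm


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d', c) from trial counts; Z is the inverse standard normal CDF."""
    hit_rate = hits / (hits + misses)
    fa_rate = false_alarms / (false_alarms + correct_rejections)
    z_hit, z_fa = norm.ppf(hit_rate), norm.ppf(fa_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


# Usage: an unbiased observer (hit rate 0.84, false-alarm rate 0.16).
d_prime, c = sdt_measures(hits=84, misses=16, false_alarms=16, correct_rejections=84)
# d_prime is about 1.99 and c is about 0
```

A negative c indicates a liberal bias (more "present" reports), a positive c a conservative one.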

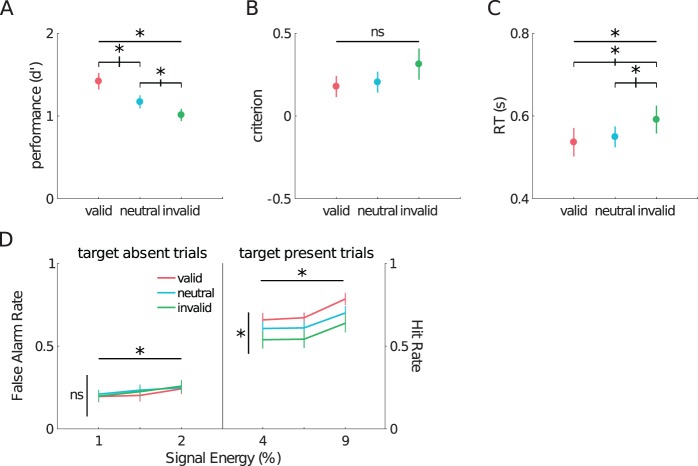

Figure 2.

Exogenous attention's effect on behavior and energy. Behavior (A–C). Detection performance as measured by d′ (A), criterion (B), and RT (C) for all experimental conditions. Colored dots are the group mean. (D) Behavior as a function of energy. False-alarm and hit rates for target-absent and target-present trials as a function of energy (binned into quartiles). The horizontal significance lines depict differences between energy levels. The vertical significance lines depict differences across experimental conditions. All error bars are SEM. *p < 0.01.

Reverse correlation

A general linear model (GLM) approach was used for reverse correlation analysis (Wyart et al., 2012; Li et al., 2016). First, the noise image of the test stimulus was transformed from pixel space (luminance intensity at each pixel) to a 2D space defined by the noise energy of components tuned to different orientations (−90° to +90° in steps of 10°) and spatial frequencies (SF; from 1 cycle/° to 2.25 cycles/° with 15 points evenly spaced on a log scale). We took the noise image of each trial and convolved it with two Gabor filters (0.8° SD; same size as the target stimulus) in quadrature phase with the corresponding orientation θ and SF f to compute the energy E(θ, f) of each component:

$$E(\theta, f) = \Big[\sum_{x,y} N(x,y)\, G^{\cos}_{\theta,f}(x,y)\Big]^2 + \Big[\sum_{x,y} N(x,y)\, G^{\sin}_{\theta,f}(x,y)\Big]^2$$

where N is the noise image, the sums run over pixels, and G^cos_{θ,f} and G^sin_{θ,f} are the cosine- and sine-phase Gabor filters.
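
The energy computation can be sketched as follows, assuming a square noise patch, a hypothetical display resolution of 32 pixels per degree, a hypothetical 5° patch, and orientation 0° meaning vertical stripes; none of these implementation details are specified in the text. Because each filter is the same size as the patch, the convolution is evaluated only at the patch center, which reduces to the sum of the squared cosine- and sine-filter responses.

```python
import numpy as np

# Orientation and SF sampling described in the text.
THETAS_DEG = np.arange(-90, 91, 10)                       # 19 orientations
SFS_CPD = np.logspace(np.log10(1.0), np.log10(2.25), 15)  # 15 log-spaced SFs


def gabor_pair(theta_deg, sf_cpd, size_px, ppd, sigma_deg=0.8):
    """Cosine- and sine-phase Gabor filters; theta = 0 gives vertical stripes."""
    coords = (np.arange(size_px) - (size_px - 1) / 2.0) / ppd  # pixel centers in degrees
    x, y = np.meshgrid(coords, coords)
    theta = np.deg2rad(theta_deg)
    u = x * np.cos(theta) + y * np.sin(theta)                   # axis across the stripes
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma_deg ** 2))
    phase = 2.0 * np.pi * sf_cpd * u
    return envelope * np.cos(phase), envelope * np.sin(phase)


def component_energy(noise, theta_deg, sf_cpd, ppd):
    """Energy of one (theta, SF) component: squared quadrature responses at the patch center."""
    g_cos, g_sin = gabor_pair(theta_deg, sf_cpd, noise.shape[0], ppd)
    return np.sum(noise * g_cos) ** 2 + np.sum(noise * g_sin) ** 2


def energy_map(noise, ppd):
    """Energy for every component; rows index SF, columns index orientation."""
    return np.array([[component_energy(noise, t, f, ppd) for t in THETAS_DEG]
                     for f in SFS_CPD])


# Usage with a hypothetical 5 x 5 deg patch at 32 pixels per degree.
PPD = 32
patch = np.random.default_rng(0).standard_normal((160, 160))
energies = energy_map(patch, PPD)  # shape (15, 19)
```

Squaring and summing the two quadrature responses makes the energy insensitive to the phase of the input, which matches the random target phase used in the task.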

For each component with preferred orientation θ and SF f, we correlated the energy of that component with behavioral responses using logistic regression. This resulted in a β weight representing the correlation between energy and behavioral reports. We used β weights as an index of perceptual sensitivity; a β weight of zero indicated that the energy of that component did not influence an observer's responses. Before computing the correlation, the energy of each component was sorted into a present or absent group depending on whether the target was present or absent on each trial. Within each group, the energy was mean-centered and normalized to a standard deviation of one, so that only the energy fluctuations produced by the noise entered the regression. The estimated sensitivity kernel was a 2D matrix in which each element was a β weight. This was done independently for each experimental condition.
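
A sketch of the per-component regression, assuming energies are stored as an array of shape (trials, SFs, orientations) and that each component enters its own logistic regression with an intercept. The original GLM may have included additional regressors, so this approximates the approach rather than reproducing the authors' code; the fit minimizes a hand-written negative log-likelihood with SciPy's BFGS to avoid extra dependencies.

```python
import numpy as np
from scipy.optimize import minimize


def zscore_within_groups(energy, target_present):
    """Mean-center and scale to unit SD separately for target-present and target-absent trials."""
    energy = np.asarray(energy, dtype=float)
    out = np.empty_like(energy)
    for group in (True, False):
        m = target_present == group
        out[m] = (energy[m] - energy[m].mean(axis=0)) / energy[m].std(axis=0)
    return out


def logistic_beta(x, said_present):
    """Slope of a logistic regression of 'present' reports on one predictor (plus intercept)."""
    X = np.column_stack([np.ones_like(x), x])
    y = np.asarray(said_present, dtype=float)

    def neg_log_lik(w):
        z = X @ w
        return np.sum(np.logaddexp(0.0, z) - y * z)

    def grad(w):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))
        return X.T @ (p - y)

    return minimize(neg_log_lik, np.zeros(2), jac=grad, method="BFGS").x[1]


def sensitivity_kernel(energy, target_present, said_present):
    """energy: (n_trials, n_sf, n_ori) -> kernel of beta weights with shape (n_sf, n_ori)."""
    z = zscore_within_groups(energy, target_present)
    n_sf, n_ori = energy.shape[1:]
    return np.array([[logistic_beta(z[:, i, j], said_present) for j in range(n_ori)]
                     for i in range(n_sf)])
```

Here `target_present` and `said_present` are boolean arrays with one entry per trial; the function would be called once per cue condition.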

Spatial frequency tuning was calculated by extracting the β weights across the slice containing the orientation of the target. Orientation tuning was computed by extracting the β weights for the slice containing the SF of the target (red boxes in Figure 3). Before fitting the orientation (or SF) tuning curves, we normalized each observer's tuning function by the peak of that observer's tuning curve in the neutral condition.
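
Extracting the two slices and normalizing by the neutral peak could look like the sketch below. It assumes the kernel rows index the 15 log-spaced SFs (1 to 2.25 cycles/°) and the columns index the 19 orientations (−90° to +90°); with this grid, 1.5 cycles/° is the geometric midpoint and falls exactly on the eighth SF sample (index 7). The dictionary keyed by condition name is a hypothetical data layout.

```python
import numpy as np

THETAS_DEG = np.arange(-90, 91, 10)             # 19 orientations (columns)
SFS_CPD = np.logspace(0.0, np.log10(2.25), 15)  # 15 SFs, 1 to 2.25 cycles/deg (rows)


def tuning_slices(kernel, target_theta=0.0, target_sf=1.5):
    """Return (orientation tuning at the target SF, SF tuning at the target orientation)."""
    i_sf = int(np.argmin(np.abs(np.log(SFS_CPD) - np.log(target_sf))))  # nearest sampled SF
    j_ori = int(np.argmin(np.abs(THETAS_DEG - target_theta)))
    return kernel[i_sf, :], kernel[:, j_ori]


def normalize_by_neutral(curves, neutral_key="neutral"):
    """Divide one observer's curves (dict: condition -> array) by the neutral curve's peak."""
    peak = np.max(curves[neutral_key])
    return {cond: np.asarray(curve, dtype=float) / peak for cond, curve in curves.items()}
```

Normalizing every condition by the same neutral peak keeps gain differences between conditions intact while removing between-observer differences in overall kernel amplitude.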

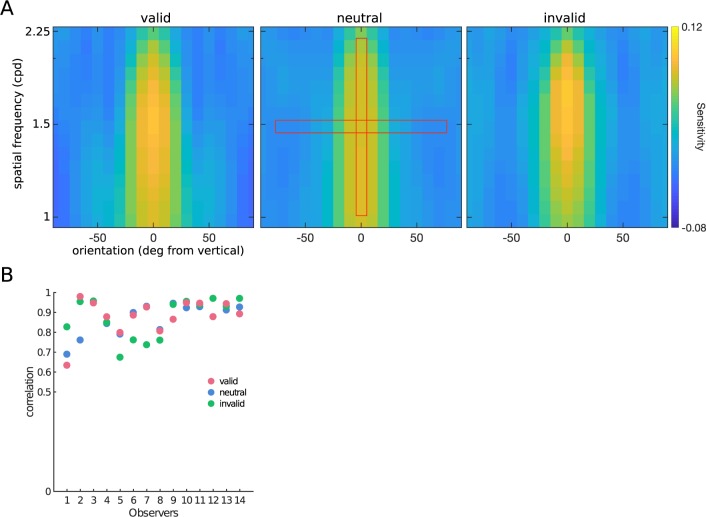

Figure 3.

Sensitivity kernels and separability. (A) Group-averaged sensitivity kernels for each experimental condition: valid, neutral, and invalid. Each pixel is a β weight representing the degree of correlation between the noise energy at each SF and orientation component and the behavioral response. We refer to these weights as perceptual sensitivity; the higher the β weight, the higher the correlation between the noise and behavioral responses. The red boxes in the neutral kernel are a cartoon depiction of the slices we extracted to fit the tuning functions for each experimental condition. We fit the slice containing the orientation of the target (0°, vertical box) and the slice containing the spatial frequency of the target (1.5 cycles/°, horizontal box). (B) Separability test: correlation between the reconstructed sensitivity kernels and original kernels across all observers. A correlation close to 1 implies high separability.

A Gaussian,

$$f(x) = a \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) + b,$$

was used to fit orientation tuning functions, and a Gaussian raised to a power,

$$f(x) = a \left[\exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)\right]^{n} + b,$$

was used to fit SF tuning functions. All parameters were left free. The μ parameter represents the function's peak or center (where the tuning function reached its highest sensitivity), σ and n determined the shape of the tuning function, and a and b adjusted the amplitude (gain) and baseline, respectively. The tuning functions were fit by minimizing the sum of squared errors (SSE) between the tuning function and the data.
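
For the orientation tuning functions, the SSE fit of the Gaussian can be sketched with SciPy's `curve_fit`, which minimizes the unweighted sum of squared residuals. The starting values and the name `fit_orientation_tuning` are illustrative assumptions; an SF fit would follow the same pattern with the raised Gaussian substituted for `gaussian`.

```python
import numpy as np
from scipy.optimize import curve_fit


def gaussian(x, a, mu, sigma, b):
    """a: gain, mu: center, sigma: width, b: baseline."""
    return a * np.exp(-(x - mu) ** 2 / (2.0 * sigma ** 2)) + b


def fit_orientation_tuning(thetas_deg, betas):
    """Least-squares (SSE) fit of the Gaussian to one orientation tuning function."""
    thetas_deg = np.asarray(thetas_deg, dtype=float)
    betas = np.asarray(betas, dtype=float)
    p0 = [betas.max() - betas.min(), thetas_deg[np.argmax(betas)], 20.0, betas.min()]
    (a, mu, sigma, b), _ = curve_fit(gaussian, thetas_deg, betas, p0=p0, maxfev=10000)
    sse = float(np.sum((gaussian(thetas_deg, a, mu, sigma, b) - betas) ** 2))
    return {"gain": a, "center": mu, "width": abs(sigma), "baseline": b, "sse": sse}
```

The absolute value of σ is reported as the width because the Gaussian is symmetric in the sign of σ.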

To estimate the level of separability between spatial frequency and orientation in our data, we conducted marginal reconstruction. We computed the marginal orientation (SF) tuning curve by averaging the sensitivity across spatial frequencies (orientations). We computed the outer product of the orientation and SF marginal tuning curves to reconstruct a sensitivity kernel. We then correlated the reconstructed sensitivity kernels with the original kernels.
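
A sketch of the marginal reconstruction for one kernel, assuming rows index SF and columns index orientation; the function name is illustrative. For a perfectly separable kernel (an outer product of an SF curve and an orientation curve), the reconstruction is a positive multiple of the kernel and the correlation is 1.

```python
import numpy as np


def separability_index(kernel):
    """Correlation between a kernel (rows: SF, columns: orientation) and its
    reconstruction from the outer product of its marginal tuning curves."""
    kernel = np.asarray(kernel, dtype=float)
    ori_marginal = kernel.mean(axis=0)  # average across SFs -> orientation tuning
    sf_marginal = kernel.mean(axis=1)   # average across orientations -> SF tuning
    reconstruction = np.outer(sf_marginal, ori_marginal)
    return np.corrcoef(kernel.ravel(), reconstruction.ravel())[0, 1]
```

A kernel whose preferred orientation shifts with SF (for example, a diagonal ridge) cannot be captured by the outer product and yields a clearly lower correlation.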

Model comparisons

We tested whether a Gaussian or Difference of Gaussians (DoG) model would better fit the orientation tuning function by bootstrapping (Efron & Tibshirani, 1993). We conducted bootstrapping with 500 iterations. On each iteration we randomly sampled (with replacement) each observer's data twice, one as the training data and the other as the testing data. The training data and the testing data were then averaged across observers, generating one training tuning function and one testing tuning function. By using the training tuning function, we optimized the free parameters and computed an estimated tuning function. We then computed SSE (as the index for the goodness of fit) by using the estimated tuning function and the testing tuning function. After 500 iterations, we obtained a distribution (and thus the confidence interval) of SSE. We also conducted this model comparison by 10-fold cross validation and found the same pattern of results.
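
The bootstrap comparison can be sketched as follows. To keep the example self-contained, each observer's data are represented as a hypothetical array of per-trial contributions whose mean over trials is that observer's tuning curve; in the actual analysis each resample would pass through the full reverse-correlation pipeline. The DoG centered at 0°, the starting values, and treating failed fits as infinite SSE are likewise assumptions.

```python
import numpy as np
from scipy.optimize import curve_fit


def gaussian(x, a, mu, sigma, b):
    return a * np.exp(-(x - mu) ** 2 / (2.0 * sigma ** 2)) + b


def dog(x, a1, s1, a2, s2, b):
    """Difference of Gaussians centered at 0 deg (centering is an assumption)."""
    return a1 * np.exp(-x ** 2 / (2.0 * s1 ** 2)) - a2 * np.exp(-x ** 2 / (2.0 * s2 ** 2)) + b


# Model functions and heuristic starting values (assumptions).
MODELS = {"gaussian": (gaussian, [1.0, 0.0, 20.0, 0.0]),
          "dog": (dog, [1.0, 15.0, 0.5, 40.0, 0.0])}


def resampled_group_curve(observers, rng):
    """Resample each observer's trials with replacement, average within, then across observers."""
    return np.mean([obs[rng.integers(0, len(obs), len(obs))].mean(axis=0)
                    for obs in observers], axis=0)


def holdout_sse(model, x, train, test):
    """Fit the model to the training curve and score its prediction against the testing curve."""
    f, p0 = MODELS[model]
    try:
        params, _ = curve_fit(f, x, train, p0=p0, maxfev=20000)
    except (RuntimeError, ValueError):
        return np.inf  # treat a failed fit as infinitely bad
    sse = np.sum((f(x, *params) - test) ** 2)
    return sse if np.isfinite(sse) else np.inf


def bootstrap_sse(x, observers, n_iter=500, seed=0):
    """observers: list of (n_trials, n_ori) arrays. Returns {model: array of n_iter test SSEs}."""
    rng = np.random.default_rng(seed)
    sse = {m: np.empty(n_iter) for m in MODELS}
    for k in range(n_iter):
        train = resampled_group_curve(observers, rng)
        test = resampled_group_curve(observers, rng)
        for m in MODELS:
            sse[m][k] = holdout_sse(m, x, train, test)
    return sse
```

A 95% confidence interval for each model then follows from `np.percentile(sse[model], [2.5, 97.5])`. Scoring on an independent resample penalizes the extra DoG parameters only insofar as they fit noise in the training curve.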

Results

Behavioral effect of exogenous attention

Exogenous attention improved detection sensitivity (d′) at the target location (Figure 2A). A one-way within-subjects ANOVA revealed a main effect of cue, F(2, 26) = 19.59, p < 0.0001, η2 = 0.19. Detection performance was higher for the valid cue than for the neutral cue, t(13) = 3.81, p = 0.0021, d = 0.71, or the invalid cue, t(13) = 4.90, p < 0.0001, d = 1.16. Criterion did not differ significantly across cueing conditions: one-way within-subjects ANOVA, F(2, 26) = 2.42, p = 0.10, η2 = 0.06 (Figure 2B). Additionally, neither detection sensitivity nor decision criterion differed across the three fixation-period durations (intertrial intervals, ITIs): a one-way within-subjects ANOVA revealed no main effect of ITI on either measure (both F < 1).
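
For reference, the F ratio of a one-way within-subjects ANOVA can be computed as below; with 14 observers and 3 cue conditions it yields the F(2, 26) degrees of freedom reported here. This is a generic textbook sketch: it does not apply the Greenhouse-Geisser correction used later in the Results, and it does not compute the reported η² values, whose exact definition is not stated.

```python
import numpy as np
from scipy.stats import f as f_dist


def rm_anova_oneway(data):
    """One-way within-subjects ANOVA.

    data: (n_subjects, k_conditions). Returns F, (df_effect, df_error), and the
    uncorrected p value (no Greenhouse-Geisser correction).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand_mean = data.mean()
    ss_conditions = n * np.sum((data.mean(axis=0) - grand_mean) ** 2)
    ss_subjects = k * np.sum((data.mean(axis=1) - grand_mean) ** 2)
    ss_total = np.sum((data - grand_mean) ** 2)
    ss_error = ss_total - ss_conditions - ss_subjects  # condition x subject residual
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_conditions / df_effect) / (ss_error / df_error)
    return F, (df_effect, df_error), f_dist.sf(F, df_effect, df_error)
```

Removing the between-subject sum of squares from the error term is what distinguishes this from a between-subjects ANOVA and gives the (k − 1)(n − 1) error degrees of freedom.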

The effect of cueing on detection performance cannot be explained by a speed-accuracy trade-off, because reaction times (RT) also benefited from valid cues (Figure 2C). A one-way within-subjects ANOVA revealed a main effect of cue on RT, F(2, 26) = 9.058, p = 0.0010, η2 = 0.036. Observers were faster to respond when presented with a valid than an invalid precue, t(13) = 5.97, p < 0.0001, d = 0.40. There was no significant difference between the valid and neutral cues, t(13) = 0.58, p = 0.56, and observers were slower when presented with an invalid than a neutral cue, t(13) = 3.31, p = 0.005, d = 0.36.

Relation between energy and behavior

To relate detection performance to energy, we examined how trial-wise fluctuations in the energy of the component at the target SF and orientation influenced hit and false-alarm rates (Figure 2D). We grouped the trials based on this energy (computed without subtracting the target) in the test stimulus on each trial. Low energy corresponded to the lowest quartile (<25%), high energy to the highest quartile (>75%), and medium energy to the trials in between.
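
A sketch of the energy binning for one observer and cue condition. Whether the quartile boundaries were computed across all trials or within each trial type is not stated; this version assumes within each trial type, so that hit and false-alarm rates are each split into their own low, medium, and high bins. The function name is illustrative.

```python
import numpy as np


def response_rates_by_energy(energy, target_present, said_present):
    """Proportion of 'present' reports in low (< 25th percentile), medium, and high
    (>= 75th percentile) energy bins, computed separately for target-absent trials
    (false-alarm rate) and target-present trials (hit rate)."""
    energy = np.asarray(energy, dtype=float)
    target_present = np.asarray(target_present, dtype=bool)
    said_present = np.asarray(said_present, dtype=bool)
    rates = {}
    for label, present in (("false_alarm_rate", False), ("hit_rate", True)):
        trials = target_present == present
        e, r = energy[trials], said_present[trials]
        lo, hi = np.percentile(e, [25, 75])  # quartile boundaries within this trial type
        bins = np.digitize(e, [lo, hi])      # 0: low, 1: medium, 2: high
        rates[label] = [float(r[bins == b].mean()) for b in range(3)]
    return rates
```

The returned lists can then be averaged across observers per cue condition to produce energy-by-validity plots of the kind described for Figure 2D.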

We conducted a three-way within-subjects ANOVA, target presence (present/absent) × energy level (low/medium/high) × cue validity (valid/neutral/invalid), using the proportion of “present” reports (hit rate on target-present trials, false-alarm rate on target-absent trials) as the dependent variable. There were two significant main effects: target presence, F(1, 13) = 176.31, p < 0.001, η2 = 0.78; and energy level, F(2, 26) = 73.61, p < 0.001, η2 = 0.09. There were two significant interactions: target presence interacted with energy level, F(2, 26) = 25.96, p < 0.001, η2 = 0.02, and with cue validity, F(2, 26) = 16.00, p < 0.001, η2 = 0.07. To explore these interactions, we performed a two-way (energy level × cue validity) within-subjects ANOVA on target-absent and target-present trials separately. For target-absent trials, false-alarm rates increased with energy, F(2, 26) = 28.36, p < 0.001, η2 = 0.046, but did not differ across cue conditions, F < 1, and these factors did not interact, F(4, 52) = 1.41, p = 0.24. These results suggest that, regardless of attention, observers were more likely to false-alarm when the test-stimulus patch contained higher energy at features resembling the target. For target-present trials, hit rates increased with energy, F(2, 26) = 66.62, p < 0.001, η2 = 0.13, and there was a significant effect of cue validity, F(2, 26) = 8.32, p = 0.001, η2 = 0.17; hit rates were higher for valid than for neutral cues, t(41) = 4.11, p < 0.001, d = 0.59, and than for invalid cues, t(41) = 5.20, p < 0.001, d = 0.98. To test whether energy fluctuations had a more pronounced effect on target-present or target-absent trials, we compared the difference between the low- and high-energy bins, collapsed across cue validity, for both trial types. Energy fluctuations had a more pronounced effect on performance for target-present trials (hit rate) than for target-absent trials (false-alarm rate), t(41) = 5.07, p < 0.001, d = 1.08.
Thus, these findings suggest that the observed benefits in detection performance brought about by valid cues were driven by the energy in the target present trials.

Sensitivity kernels and tuning functions

We correlated the trial-wise energy fluctuations with each observer's perceptual report via logistic regression. This resulted in a 2D sensitivity kernel composed of β weights (Figure 3A). Greater weights indicate a higher correlation between the noise energy and reporting the target as present in the test stimulus. Weights at zero or below zero indicate no correlation or a negative correlation, respectively, between reporting “target present” and the noise energy. In all sensitivity kernels, the highest weights across spatial frequencies occurred at the slice containing the target orientation.

To estimate the level of separability between spatial frequency and orientation in our data, we conducted marginal reconstruction and then correlated the reconstructed sensitivity kernels with the original kernels (Figure 3B). The higher the correlation, the more separable the two feature dimensions are (Mazer, Vinje, McDermott, Schiller, & Gallant, 2002). We observed high separability, with a median correlation of 0.9 in all three attention conditions.

Having established that the two feature dimensions are highly separable, we examined how exogenous attention affected the underlying feature representation. We extracted two slices from the 2D sensitivity kernels: (a) the orientation tuning function (Figure 4A), a horizontal slice containing all orientation β weights at the SF of the target (1.5 cycles/°); and (b) the SF tuning function (Figure 4D), a vertical slice containing all SF β weights at the orientation of the target (0°).

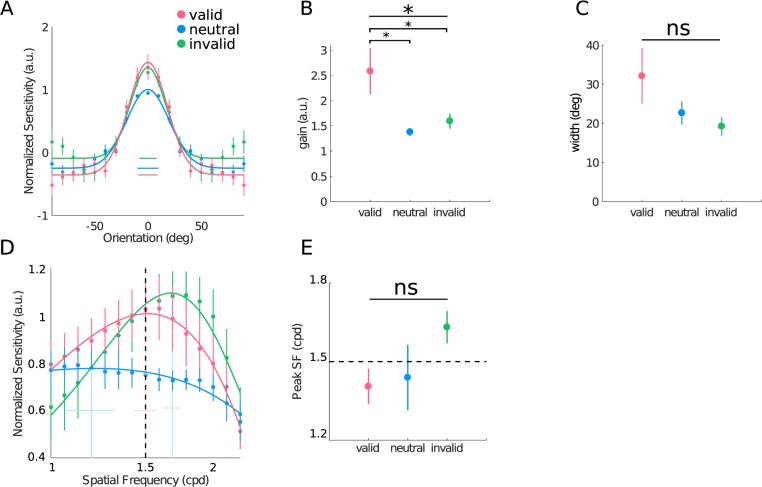

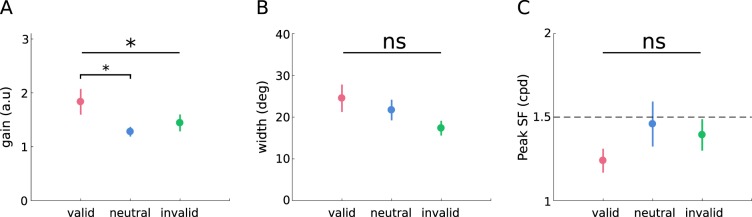

Figure 4.

Sensory tuning functions. Orientation tuning (A–C). (A) Group-averaged orientation tuning functions. (B) Group-averaged orientation gain for each experimental condition. (C) Group-averaged orientation tuning widths for all experimental conditions (red = valid, blue = neutral, green = invalid). Spatial frequency tuning (D–E). (D) Group-averaged spatial frequency tuning functions. The black vertical dashed line represents the SF of the target. Colored vertical lines represent the peaks of each function; the horizontal colored lines represent the SEM of the group peaks. (E) Group average peak SF for all experimental conditions. Plots (B), (C), and (E) represent averages of individual parameter fits. Error bars are SEM; * p < 0.05.

We fit the orientation tuning functions with Gaussians. A one-way within-subjects ANOVA showed a main effect of cue on the gain of the orientation tuning functions, F(2, 26) = 7.09, p = 0.004 (Greenhouse-Geisser [GG] corrected to 0.014), η2 = 0.23 (Figure 4B). Pairwise comparisons revealed a higher gain for valid than for neutral cues, t(13) = 2.92, p = 0.011, d = 1.04, or invalid cues, t(13) = 2.46, p = 0.028, d = 0.82. There were no significant differences in orientation tuning width (Figure 4C): one-way within-subjects ANOVAs showed no main effect of cue on the width, F(2, 26) = 2.11, p = 0.14, or the baseline, F(2, 26) = 3.67, p = 0.039 (GG corrected to 0.11), of the tuning functions.

Given that the data for the invalid condition in Figure 4A exhibited a Mexican-hat shape, we evaluated whether a DoG model would capture these data better than the Gaussian. We compared the Gaussian and DoG models via bootstrapping. Both models captured the data equally well, for individual observers' tuning functions as well as for group-averaged tuning functions (Gaussian vs. DoG: valid, t(499) = −0.06, p = 0.95; neutral, t(499) = 0.16, p = 0.87; invalid, t(499) = 0.87, p = 0.38). We kept the Gaussian model because it has fewer free parameters.

We fit the SF tuning functions with raised Gaussians. A one-way within-subjects ANOVA revealed no main effect of cue on peak SF, F(2, 26) = 1.716, p = 0.199 (Figure 4D and E). However, the peaks of the functions deviated slightly from the target SF (1.5 cycles/°), with only the valid function peaking at the SF of the target: valid peak, 1.5 cycles/°; neutral peak, 1.2 cycles/°; invalid peak, 1.7 cycles/°. No differences in gain, F(2, 26) = 1.281, p = 0.298; tuning width, F(2, 26) = 1.281, p = 0.298; or baseline, F < 1, were found. Thus, the cueing condition did not affect the representation of SF information.

We further tested whether these results would hold when information from the entire kernels was used. To do this, we fit the marginal orientation tuning curves and the marginal SF tuning curves directly (Figure 5) for all three conditions (see Methods). We found consistent results in the orientation domain: a main effect of attention on gain, F(2, 26) = 3.904, p = 0.032, η2 = 0.16, but no main effect on tuning width, F(2, 26) = 1.147, p = 0.246, or baseline, F(2, 26) = 2.822, p = 0.077. Pairwise comparisons revealed a higher gain for valid than for neutral cues, t(13) = 2.53, p = 0.025, d = 0.8. We also found consistent results in the SF domain: exogenous attention produced no significant shift in peak SF, F(2, 26) = 1.541, p = 0.233, and no differences in gain, F(2, 26) = 1.312, p = 0.287; tuning width, F(2, 26) = 1.063, p = 0.36; or baseline, F(2, 26) = 1.280, p = 0.295. The consistency of these results suggests that exogenous attention (a) affects the representation of orientation by enhancing the gain across all feature detectors without affecting tuning width or selectivity and (b) does not alter the representation of spatial frequency.

Figure 5.

Marginals analysis. Orientation tuning (A–B). (A) Group-averaged orientation gain for each experimental condition. (B) Group-averaged orientation tuning widths for all experimental conditions (red = valid, blue = neutral, green = invalid). Spatial frequency tuning (C). Group-averaged peak SF for all experimental conditions. All plots show averages of individual parameter fits. Error bars are SEM; * p < 0.05.

Some authors have analyzed target-present and target-absent trials separately (Abbey & Eckstein, 2002; Eckstein et al., 2002). We fit kernels from these two trial types separately and found no statistically significant difference in parameters. Pairwise comparisons of parameter estimates for present and absent trials, collapsed across cue validity, revealed no significant difference in orientation gain, t(41) = 0.81, p = 0.42; orientation tuning width, t(41) = 1.09, p = 0.28; or peak SF, t(41) = 0.97, p = 0.33. This lack of a difference between present and absent trials argues against a substantial nonlinearity in our data and suggests that the linearity assumption of reverse correlation is met.
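The present/absent check can be sketched with a simulated linear observer. Everything here is hypothetical: noise is represented as zero-mean Gaussian values in 12 abstract feature channels rather than as filtered noise images, and the template, internal-noise level, and criterion are arbitrary. For a linear observer with Gaussian noise, both kernels are expected to be scaled copies of the same template, which is the pattern the linearity check looks for. The `paired_t` helper shows where df = 41 comes from: 14 observers × 3 cue conditions give 42 matched present/absent pairs.

```python
import numpy as np


def classification_image(noise, said_yes):
    """Reverse-correlation kernel: mean noise on 'yes' trials minus mean noise on 'no' trials.
    noise: (n_trials, n_channels); said_yes: boolean array of length n_trials."""
    noise = np.asarray(noise, dtype=float)
    said_yes = np.asarray(said_yes, dtype=bool)
    return noise[said_yes].mean(axis=0) - noise[~said_yes].mean(axis=0)


def kernels_by_target_presence(noise, said_yes, target_present):
    """Separate kernels for target-present and target-absent trials."""
    noise = np.asarray(noise, dtype=float)
    said_yes = np.asarray(said_yes, dtype=bool)
    tp = np.asarray(target_present, dtype=bool)
    return (classification_image(noise[tp], said_yes[tp]),
            classification_image(noise[~tp], said_yes[~tp]))


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched estimates a and b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size)), d.size - 1


# Hypothetical linear observer: fixed unit-norm template over 12 channels plus internal noise.
rng = np.random.default_rng(0)
n_trials, n_ch = 40000, 12
template = np.exp(-(np.arange(n_ch) - 5.5) ** 2 / (2 * 2.0 ** 2))
template /= np.linalg.norm(template)
noise = rng.normal(size=(n_trials, n_ch))
target_present = rng.random(n_trials) < 0.5
dv = noise @ template + 1.0 * target_present + rng.normal(0.0, 0.5, n_trials)
said_yes = dv > 0.5                                   # fixed criterion
k_present, k_absent = kernels_by_target_presence(noise, said_yes, target_present)
print(np.corrcoef(k_present, k_absent)[0, 1])
```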

Discussion

How does exogenous spatial attention modulate the processing of feature information? We used a cueing procedure to manipulate exogenous attention, and psychophysical reverse correlation to characterize perceptual sensory tuning functions. Detection performance was better with a valid cue than with either a neutral or an invalid cue. Reaction times were also faster when the test stimulus was validly cued. No differences in criterion were found across cueing conditions. Using reverse correlation, we found that exogenous attention modulates the representation of orientation information by enhancing gain without affecting tuning width, and that exogenous attention does not alter the representation of SF information.

The finding of significant gain changes by exogenous attention but no changes in tuning width held even after refitting orientation tuning functions to the marginals. We note that an increase in kernel gain can also result from a reduction of internal noise (Ahumada, 2002; Eckstein, Pham, & Shimozaki, 2004); we neither aimed nor were able to distinguish these two mechanisms here. Our results are in line with a previous study assessing the orientation tuning of attention (Baldassi & Verghese, 2005). However, that study did not isolate exogenous from endogenous attention, given that the peripheral cue was sustained until target offset and was 100% valid, and the neutral and valid cue conditions were blocked. Here we isolated the effect of exogenous attention by using a transient peripheral cue (with a brief 40 ms ISI between cue and target) and by interleaving neutral and uninformative (50% valid) peripheral cues within each block.
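Why a larger kernel gain need not imply a larger sensory gain can be illustrated with a simulated linear observer. The setup is hypothetical (12 abstract noise channels, a fixed Gaussian template, noise-only trials, an unbiased criterion): the template never changes, only the internal-noise standard deviation does, yet the amplitude of the raw classification image falls as internal noise rises. For this setup the expected amplitude has a closed form, `2 * sqrt(2 / pi) / sqrt(1 + sd**2)`, which `predicted_amplitude` computes for comparison.

```python
import numpy as np


def kernel_amplitude(internal_sd, n_trials=40000, n_ch=12, seed=0):
    """Simulate a linear observer with a fixed unit-norm template on noise-only trials
    and return the projection of its classification image onto that template."""
    rng = np.random.default_rng(seed)
    template = np.exp(-(np.arange(n_ch) - 5.5) ** 2 / (2 * 2.0 ** 2))
    template /= np.linalg.norm(template)
    noise = rng.normal(size=(n_trials, n_ch))
    dv = noise @ template + rng.normal(0.0, internal_sd, n_trials)
    said_yes = dv > 0.0                      # unbiased criterion, about 50% 'yes'
    kernel = noise[said_yes].mean(axis=0) - noise[~said_yes].mean(axis=0)
    return float(kernel @ template)


def predicted_amplitude(internal_sd):
    """Closed form for the same setup: 2 * sqrt(2 / pi) / sqrt(1 + internal_sd**2)."""
    return 2.0 * np.sqrt(2.0 / np.pi) / np.sqrt(1.0 + internal_sd ** 2)


for sd in (0.0, 1.0, 2.0):
    print(sd, round(kernel_amplitude(sd), 3), round(predicted_amplitude(sd), 3))
```

The same kernel amplitude change could thus arise from a change in template gain or from a change in internal noise, which is why the two accounts cannot be separated from the kernels alone.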

Is it possible that the lack of an effect on tuning width arose because we employed a detection task, which did not maximize the need to tune to the target orientation? This possibility seems plausible because observers were explicitly told that the target was always vertical and thus did not have to select among many possible orientations, as they would in, for example, an orientation discrimination task. However, we do not think this explains the absence of an orientation tuning change with exogenous attention, because changes in orientation tuning width have been reported for presaccadic attention (Li et al., 2016) and feature-based attention (Barbot et al., 2014) using a detection task and analysis protocols similar to the ones employed here.

Exogenous attention did not significantly affect the representation of SF information. However, Figure 4D shows that the valid cue shifted the peak sensitivity closer to the target SF than did the neutral or invalid cue. The flat shape of the neutral curve also suggests that SF sensitivity is highly variable among observers. Had we tailored the target SF to each individual's peak sensitivity in the neutral condition, we might have observed significant shifts with exogenous attention; this possibility should be tested in future work. Here we kept the task and stimuli as similar as possible to those of our previous studies on endogenous attention (Barbot, Wyart, & Carrasco, 2014) and presaccadic attention (Li, Barbot, & Carrasco, 2016), so that we could directly compare the gain, tuning width, and SF effects of exogenous attention with those of endogenous and presaccadic attention.

Results from previous psychophysical studies have implied that exogenous spatial attention preferentially enhances high SFs (e.g., Carrasco, Loula, & Ho, 2006; Jigo & Carrasco, 2018; Yeshurun & Carrasco, 1998). We can only speculate why we did not find a preferential enhancement of high SFs in the current study. (a) Preferential enhancement of higher SFs with exogenous attention may be task dependent: it emerges in tasks constrained by spatial resolution, such as acuity (Carrasco, Williams, & Yeshurun, 2002; Montagna, Pestilli, & Carrasco, 2009), texture segmentation (Carrasco, Loula, & Ho, 2006; Jigo & Carrasco, 2018; Yeshurun & Carrasco, 1998), and crowding (Grubb et al., 2013; Yeshurun & Rashal, 2010) tasks, and in tasks assessing temporal resolution (Yeshurun & Hein, 2011; Yeshurun & Levy, 2003), but not when contrast sensitivity is assessed directly at individual frequencies. (b) It has recently been reported that, at each eccentricity, exogenous attention preferentially increases contrast sensitivity at SFs higher than the one at which sensitivity peaks (Jigo & Carrasco, 2018); the target stimuli we used may not have been within this optimal range. (c) The noise SF spectrum was chosen to allow comparison with that used to study presaccadic attention (Li et al., 2016); perhaps exogenous attention requires a wider spectrum for an enhancement at high SFs to emerge. (d) Reverse correlation can reflect both sensory and decision-level components (Ahumada, 1996; Beard & Ahumada, 1998; Neri & Levi, 2006; Okazawa, Sha, Purcell, & Kiani, 2018); the expected enhancement at high SFs could occur at the sensory stage but be masked by a later decision stage. In sum, the lack of an effect of exogenous attention on SF tuning may be due to the task, the stimulus (noise), and/or the method used (reverse correlation).

From a perceptual standpoint, it seems easier to conceptualize a vertical orientation than a given spatial frequency, as we use specific labels for particular orientations in daily life. Given that psychophysically derived tuning curves have both sensory and decision components (Ahumada, 1996; Beard & Ahumada, 1998; Neri & Levi, 2006; Okazawa, Sha, Purcell, & Kiani, 2018), perhaps observers were biasing their decision towards the orientation of the target more so than towards its SF, leading to increased variability in the SF domain.

Previous studies have reported inconsistent findings regarding SF tuning. In a study using a letter identification task and critical-band masking, exogenous attention did not affect SF tuning (Talgar et al., 2004). However, another study manipulating exogenous attention reported an increase in SF gain as well as narrower spatial (position) tuning at parafoveal locations (Megna et al., 2012). We note some critical differences between the studies. First, the study by Megna and collaborators concerned position tuning rather than orientation tuning. Second, they inferred SF tuning from transformations of their classification images, whereas here we explicitly filtered our stimuli to various SFs, allowing us to assess SF tuning directly.

Here we found that exogenous attention alters the representation of orientation and SF information similarly to its slower, voluntary counterpart—endogenous spatial attention. Using various tasks and methods with endogenous attention, studies have shown enhanced gain of orientation-selective filters without changes in tuning width (Ling et al., 2009; Paltoglou & Neri, 2012; Barbot, Wyart, & Carrasco, 2014). Given the consistent findings with endogenous attention and the fact that the task and analysis used by Barbot and colleagues (2014) are similar to those employed here, we can now make direct comparisons and conclude that both endogenous and exogenous attention modulate orientation information strictly by boosting gain. Similarities between these two covert attentional modulations also exist in the SF domain. Though less investigated, it has been reported that endogenous attention does not alter SF tuning even with increased gain (Lu & Dosher, 2004). Taking our findings and all these results together, we can conclude that covert spatial attention modulates orientation representations by strictly boosting gain without affecting SF tuning.

In contrast, we found that exogenous attention alters the representation of orientation and SF information differently than presaccadic attention does, even though both are rapidly deployed and increase sensitivity to high SFs even when this is detrimental to the task. Whereas exogenous attention strictly boosts gain in the orientation domain, presaccadic attention also narrows orientation tuning width, sharpening the response profile (Li et al., 2016; Ohl et al., 2017). In the SF domain, presaccadic attention increases the gain at high SFs (Li et al., 2016; Li, Pan, & Carrasco, 2019), whereas exogenous attention does not. These differences at the level of the representation may be linked to the planning and preparation of eye movements, which are intrinsic to presaccadic attention and absent for covert spatial attention.

Putting temporal dynamics aside, it could be argued that presaccadic attention (a form of overt attention) is more similar to endogenous attention because both are voluntary and deployed via a central cue. However, our findings show that, unlike presaccadic attention, both types of covert spatial attention (endogenous and exogenous) alter the representation of early visual features in a similar fashion through gain enhancement. These findings suggest that the cue type (central or peripheral) and the temporal window of deployment are not key factors influencing the underlying visual representation.

In conclusion, exogenous attention alters visual processing by boosting the gain of the target orientation without affecting tuning width. In the SF domain, no change in tuning was found. Our results reveal that exogenous covert spatial attention alters visual representation in a similar way to endogenous covert spatial attention, but distinct from presaccadic attention. Thus, it seems that when we selectively process information, it is the covert versus the overt nature of the attention that determines the changes in the visual representation.

Acknowledgments

This research was supported by NIH National Eye Institute R01 EY019693 and R01 EY016200 to MC and NIH grant R90DA043849 to NYU (HHL). We thank Antoine Barbot, Ian Donovan, and Michael Jigo for helpful comments.

Commercial relationships: none.

Corresponding author: Marisa Carrasco.

Email: marisa.carrasco@nyu.edu.

Address: Department of Psychology and Center for Neural Sciences, New York University, New York, NY, USA.

Footnotes

Within the 66% of peripherally cued trials, however, the precue and the response cue matched in 33% of the trials, instead of 25%, which would be completely noninformative. In any case, cue validity does not affect the magnitude of the benefit at the cued location and cost at uncued locations for exogenous attention (e.g., Giordano, McElree, & Carrasco, 2009; Yeshurun & Rashal, 2010).

Contributor Information

Antonio Fernández, Email: antonio.fernandez@nyu.edu.

Hsin-Hung Li, Email: hsin.hung.li@nyu.edu.

Marisa Carrasco, Email: marisa.carrasco@nyu.edu.

References

- Abbey C. K, Eckstein M. P. Classification image analysis: Estimation and statistical inference for two-alternative forced-choice experiments. Journal of Vision. (2002);2(1):5, 66–78. doi: 10.1167/2.1.5.

- Ahumada A. J., Jr. Perceptual classification images from Vernier acuity masked by noise. Perception. (1996);25 (ECVP Abstract Supplement).

- Ahumada A. J., Jr. Classification image weights and internal noise level estimation. Journal of Vision. (2002);2(1):8, 121–131. doi: 10.1167/2.1.8.

- Anton-Erxleben K, Carrasco M. Attentional enhancement of spatial resolution: Linking behavioural and neurophysiological evidence. Nature Reviews Neuroscience. (2013);14(3):188–200. doi: 10.1038/nrn3443.

- Baldassi S, Verghese P. Attention to locations and features: Different top-down modulation of detector weights. Journal of Vision. (2005);5(6):7, 556–570. doi: 10.1167/5.6.7.

- Barbot A, Carrasco M. Attention modifies spatial resolution according to task demands. Psychological Science. (2017);28(3):285–296. doi: 10.1177/0956797616679634.

- Barbot A, Wyart V, Carrasco M. Spatial and feature-based attention differentially affect the gain and tuning of orientation-selective filters. Journal of Vision. (2014);14(10):703 [Abstract]. doi: 10.1167/14.10.703.

- Beard B. L, Ahumada A. J., Jr. A technique to extract relevant image features for visual tasks. Proceedings of SPIE. (1998);3299:79–85.

- Brainard D. H. The psychophysics toolbox. Spatial Vision. (1997);10:433–436.

- Carrasco M. Visual attention: The past 25 years. Vision Research. (2011);51(13):1484–1525. doi: 10.1016/j.visres.2011.04.012.

- Carrasco M. Spatial attention: Perceptual modulation. In: Kastner S, Nobre A. C, editors. The Oxford handbook of attention. Oxford University Press; (2014). pp. 183–230.

- Carrasco M, Barbot A. How attention affects spatial resolution. Cold Spring Harbor Symposia on Quantitative Biology. (2015);79:149–160. doi: 10.1101/sqb.2014.79.024687.

- Carrasco M, Yeshurun Y. The contribution of covert attention to the set-size and eccentricity effects in visual search. Journal of Experimental Psychology: Human Perception and Performance. (1998);24(2):673. doi: 10.1037//0096-1523.24.2.673.

- Carrasco M, Yeshurun Y. Covert attention effects on spatial resolution. In: Srinivasan N, editor. Progress in Brain Research. Vol. 176: Attention. The Netherlands: Elsevier; (2009). pp. 65–86.

- Carrasco M, Loula F, Ho Y.-X. How attention enhances spatial resolution: Evidence from selective adaptation to spatial frequency. Perception & Psychophysics. (2006);68(6):1004–1012. doi: 10.3758/bf03193361.

- Carrasco M, Williams P. E, Yeshurun Y. Covert attention increases spatial resolution with or without masks: Support for signal enhancement. Journal of Vision. (2002);2(6):4, 467–479. doi: 10.1167/2.6.4.

- Cheal M, Lyon D. R, Hubbard D. C. Does attention have different effects on line orientation and line arrangement discrimination? The Quarterly Journal of Experimental Psychology. (1991);43(4):825–857. doi: 10.1080/14640749108400959.

- Cook E. P, Maunsell J. H. Attentional modulation of motion integration of individual neurons in the middle temporal visual area. Journal of Neuroscience. (2004);24(36):7964–7977. doi: 10.1523/JNEUROSCI.5102-03.2004.

- Deubel H, Schneider W. X. Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research. (1996);36(12):1827–1837. doi: 10.1016/0042-6989(95)00294-4.

- Eckstein M. P, Pham B. T, Shimozaki S. S. The footprints of visual attention during search with 100% valid and 100% invalid cues. Vision Research. (2004);44(12):1193–1207. doi: 10.1016/j.visres.2003.10.026.

- Eckstein M. P, Shimozaki S. S, Abbey C. K. The footprints of visual attention in the Posner cueing paradigm revealed by classification images. Journal of Vision. (2002);2(1):3, 25–45. doi: 10.1167/2.1.3.

- Efron B, Tibshirani R. An introduction to the bootstrap. UK: Chapman and Hall/CRC; (1993).

- Giordano A. M, McElree B, Carrasco M. On the automaticity and flexibility of covert attention: A speed-accuracy trade-off analysis. Journal of Vision. (2009);9(3):30, 1–10. doi: 10.1167/9.3.30.

- Golla H, Ignashchenkova A, Haarmeier T, Thier P. Improvement of visual acuity by spatial cueing: A comparative study in human and non-human primates. Vision Research. (2004);44(13):1589–1600. doi: 10.1016/j.visres.2004.01.009.

- Grubb M. A, Behrmann M, Egan R, Minshew N. J, Heeger D. J, Carrasco M. Exogenous spatial attention: Evidence for intact functioning in adults with autism spectrum disorder. Journal of Vision. (2013);13(14):9, 1–13. doi: 10.1167/13.14.9.

- Hein E, Rolke B, Ulrich R. Visual attention and temporal discrimination: Differential effects of automatic and voluntary cueing. Visual Cognition. (2006);13(1):29–50.

- Hubel D. H, Wiesel T. N. Receptive fields of single neurones in the cat's striate cortex. The Journal of Physiology. (1959);148(3):574–591. doi: 10.1113/jphysiol.1959.sp006308.

- Jigo M, Carrasco M. Attention alters spatial resolution by modulating second-order processing. Journal of Vision. (2018);18(7):2, 1–12. doi: 10.1167/18.7.2.

- Kowler E, Anderson E, Dosher B, Blaser E. The role of attention in the programming of saccades. Vision Research. (1995);35(13):1897–1916. doi: 10.1016/0042-6989(94)00279-u.

- Li H.-H, Barbot A, Carrasco M. Saccade preparation reshapes sensory tuning. Current Biology. (2016);26(12):1564–1570. doi: 10.1016/j.cub.2016.04.028.

- Li H.-H, Pan J, Carrasco M. Presaccadic attention improves or impairs performance by enhancing sensitivity to higher spatial frequencies. Scientific Reports. (2019);9:2659. doi: 10.1038/s41598-018-38262-3.

- Ling S, Carrasco M. Sustained and transient covert attention enhance the signal via different contrast response functions. Vision Research. (2006);46(8–9):1210–1220. doi: 10.1016/j.visres.2005.05.008.

- Ling S, Jehee J. F, Pestilli F. A review of the mechanisms by which attentional feedback shapes visual selectivity. Brain Structure and Function. (2015);220(3):1237–1250. doi: 10.1007/s00429-014-0818-5.

- Ling S, Liu T, Carrasco M. How spatial and feature-based attention affect the gain and tuning of population responses. Vision Research. (2009);49(10):1194–1204. doi: 10.1016/j.visres.2008.05.025.

- Liu T, Stevens S. T, Carrasco M. Comparing the time course and efficacy of spatial and feature-based attention. Vision Research. (2007);47(1):108–113. doi: 10.1016/j.visres.2006.09.017.

- Lu Z. L, Dosher B. A. External noise distinguishes attention mechanisms. Vision Research. (1998);38(9):1183–1198. doi: 10.1016/s0042-6989(97)00273-3.

- Lu Z. L, Dosher B. A. Spatial attention: Different mechanisms for central and peripheral temporal precues? Journal of Experimental Psychology: Human Perception and Performance. (2000);26(5):1534–1548. doi: 10.1037//0096-1523.26.5.1534.

- Lu Z. L, Dosher B. A. Spatial attention excludes external noise without changing the spatial frequency tuning of the perceptual template. Journal of Vision. (2004);4(10):10, 955–966. doi: 10.1167/4.10.10.

- Maffei L, Fiorentini A. Spatial frequency rows in the striate visual cortex. Vision Research. (1977);17(2):257–264. doi: 10.1016/0042-6989(77)90089-x.

- Martinez-Trujillo J. C, Treue S. Feature-based attention increases the selectivity of population responses in primate visual cortex. Current Biology. (2004);14(9):744–751. doi: 10.1016/j.cub.2004.04.028.

- Maunsell J. H. Neuronal mechanisms of visual attention. Annual Review of Vision Science. (2015);1:373–391. doi: 10.1146/annurev-vision-082114-035431.

- Mazer J. A, Vinje W. E, McDermott J, Schiller P. H, Gallant J. L. Spatial frequency and orientation tuning dynamics in area V1. Proceedings of the National Academy of Sciences USA. (2002);99(3):1645–1650. doi: 10.1073/pnas.022638499.

- McAdams C. J, Maunsell J. H. Effects of attention on orientation-tuning functions of single neurons in macaque cortical area V4. Journal of Neuroscience. (1999);19(1):431–441. doi: 10.1523/JNEUROSCI.19-01-00431.1999.

- Megna N, Rocchi F, Baldassi S. Spatio-temporal templates of transient attention revealed by classification images. Vision Research. (2012);54:39–48. doi: 10.1016/j.visres.2011.11.012.

- Montagna B, Pestilli F, Carrasco M. Attention trades off spatial acuity. Vision Research. (2009);49(7):735–745. doi: 10.1016/j.visres.2009.02.001.

- Müller H. J, Rabbitt P. M. Reflexive and voluntary orienting of visual attention: Time course of activation and resistance to interruption. Journal of Experimental Psychology: Human Perception and Performance. (1989);15(2):315–330. doi: 10.1037//0096-1523.15.2.315.

- Nakayama K, Mackeben M. Sustained and transient components of focal visual attention. Vision Research. (1989);29(11):1631–1647. doi: 10.1016/0042-6989(89)90144-2.

- Neri P, Levi D. M. Receptive versus perceptive fields from the reverse-correlation viewpoint. Vision Research. (2006);46(16):2465–2474. doi: 10.1016/j.visres.2006.02.002.

- Ohl S, Kuper C, Rolfs M. Selective enhancement of orientation tuning before saccades. Journal of Vision. (2017);17(13):2, 1–11. doi: 10.1167/17.13.2.

- Okazawa G, Sha L, Purcell B. A, Kiani R. Psychophysical reverse correlation reflects both sensory and decision-making processes. Nature Communications. (2018);9(1):3479. doi: 10.1038/s41467-018-05797-y.

- Paltoglou A. E, Neri P. Attentional control of sensory tuning in human visual perception. Journal of Neurophysiology. (2012);107(5):1260–1274. doi: 10.1152/jn.00776.2011.

- Pelli D. G. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. (1997);10(4):437–442.

- Pentland A. Maximum likelihood estimation: The best PEST. Attention, Perception, & Psychophysics. (1980);28(4):377–379. doi: 10.3758/bf03204398.

- Remington R. W, Johnston J. C, Yantis S. Involuntary attentional capture by abrupt onsets. Perception & Psychophysics. (1992);51(3):279–290. doi: 10.3758/bf03212254.

- Reynolds J. H, Chelazzi L. Attentional modulation of visual processing. Annual Review of Neuroscience. (2004);27:611–647. doi: 10.1146/annurev.neuro.26.041002.131039.

- Reynolds J. H, Heeger D. J. The normalization model of attention. Neuron. (2009);61(2):168–185. doi: 10.1016/j.neuron.2009.01.002.

- Rolfs M, Carrasco M. Rapid simultaneous enhancement of visual sensitivity and perceived contrast during saccade preparation. Journal of Neuroscience. (2012);32(40):13744–13752. doi: 10.1523/JNEUROSCI.2676-12.2012.

- Talgar C. P, Carrasco M. Vertical meridian asymmetry in spatial resolution: Visual and attentional factors. Psychonomic Bulletin & Review. (2010);9(4):714–722. doi: 10.3758/bf03196326.

- Talgar C. P, Pelli D. G, Carrasco M. Covert attention enhances letter identification without affecting channel tuning. Journal of Vision. (2004);4(1):3, 22–31. doi: 10.1167/4.1.3.

- Treue S. Neural correlates of attention in primate visual cortex. Trends in Neurosciences. (2001);24(5):295–300. doi: 10.1016/s0166-2236(00)01814-2.

- Wyart V, Nobre A. C, Summerfield C. Dissociable prior influences of signal probability and relevance on visual contrast sensitivity. Proceedings of the National Academy of Sciences USA. (2012);109(16):6354–6354. doi: 10.1073/pnas.1204601109.

- Yeshurun Y, Carrasco M. Attention improves or impairs visual performance by enhancing spatial resolution. Nature. (1998);396(6706):72–75. doi: 10.1038/23936.

- Yeshurun Y, Carrasco M. The locus of attentional effects in texture segmentation. Nature Neuroscience. (2000);3(6):622–627. doi: 10.1038/75804.

- Yeshurun Y, Hein E. Transient attention degrades perceived apparent motion. Perception. (2011);40(8):905–918. doi: 10.1068/p7016.

- Yeshurun Y, Levy L. Transient spatial attention degrades temporal resolution. Psychological Science. (2003);14(3):225–231. doi: 10.1111/1467-9280.02436.

- Yeshurun Y, Montagna B, Carrasco M. On the flexibility of sustained attention and its effects on a texture segmentation task. Vision Research. (2008);48(1):80–95. doi: 10.1016/j.visres.2007.10.015.

- Yeshurun Y, Rashal E. Precueing attention to the target location diminishes crowding and reduces the critical distance. Journal of Vision. (2010);10(10):16, 1–12. doi: 10.1167/10.10.16.

- Yeshurun Y, Rashal E. The typical advantage of object-based attention reflects reduced spatial cost. Journal of Experimental Psychology: Human Perception and Performance. (2017);43(1):69–77. doi: 10.1037/xhp0000308.