Abstract

The brain operates via networked activity in separable groups of regions called modules. The quantification of modularity compares the number of connections within and between modules, with high modularity indicating greater segregation, or dense connections within sub-networks and sparse connections between sub-networks. Previous work has demonstrated that baseline brain network modularity predicts executive function outcomes in older adults and patients with traumatic brain injury after cognitive and exercise interventions. In healthy young adults, however, the functional significance of brain modularity in predicting training-related cognitive improvements is not fully understood. Here, we quantified brain network modularity in young adults who underwent cognitive training with casual video games that engaged working memory and reasoning processes. Network modularity assessed at baseline was positively correlated with training-related improvements on untrained tasks. The relationship between baseline modularity and training gain was especially evident in initially lower performing individuals and was not present in a group of control participants that did not show training-related gains. These results suggest that a more modular brain network organization may allow for greater training responsiveness. On a broader scale, these findings suggest that, particularly in low-performing individuals, global network properties can capture aspects of brain function that are important in understanding individual differences in learning.

Keywords: functional connectivity, brain network modularity, cognitive training, working memory, reasoning

1. Introduction

Computer-based cognitive training is an increasingly popular approach to improve cognitive function, yet training-related benefits can vary greatly across studies and individuals (Boot & Kramer, 2014; Jaeggi, Buschkuehl, Shah, & Jonides, 2014). To better inform the implementation of such interventions, it is important to examine individual differences that can predict training effectiveness. Pre-training patterns of neural activity (Mathewson et al., 2012; Vo et al., 2011) and brain volume (Basak, Voss, Erickson, Boot, & Kramer, 2011; Erickson et al., 2010; Verghese, Garner, Mattingley, & Dux, 2016) have been found to correlate with improvements after cognitive training, although these brain measures were often limited to a single region or to a small group of regions, which varied across studies. Furthermore, since complex cognitive functions likely involve widespread interactions between groups of brain regions (Cole et al., 2013; Medaglia, Lynall, & Bassett, 2015), or sub-networks, it is critical to consider whether baseline brain network properties can serve as a useful biomarker in assessing training outcomes (Gabrieli, Ghosh, & Whitfield-Gabrieli, 2015). Indeed, recent work has shown that functional brain networks are relatively stable within individuals (Gratton et al., 2018), suggesting that such individual brain characteristics can be reliably used to guide the implementation of interventions.

Graph theory can be used to describe the brain as a complex network, where individual brain regions represent network nodes and the structural or functional connections between them represent network edges. Previous work using structural and functional MRI has shown that brain networks exhibit a modular organization, composed of separable sub-networks or modules (Bertolero, Yeo, & D’Esposito, 2015; Betzel et al., 2014; Bullmore & Sporns, 2009; Chen, He, Rosa-Neto, Germann, & Evans, 2008; Meunier, Lambiotte, & Bullmore, 2010; Newman, 2006b; Newman & Girvan, 2004). The extent of segregation of network modules can be quantified with a modularity metric (Newman & Girvan, 2004), where networks with high modularity have dense connections within modules and sparser connections between modules.

Modular brain organization is thought to be important for supporting cognitive functioning. While modularity has been observed to change during task “states” (Kitzbichler, Henson, Smith, Nathan, & Bullmore, 2011; Stanley, Dagenbach, Lyday, Burdette, & Laurienti, 2014; Cohen et al., 2016), in the current study, we focus on the “trait-level” modularity differences observed at rest, as functional brain networks have been shown to be dominated by individual factors (Gratton et al., 2018). Across individuals, modular organization at rest has been shown to correlate with inter-individual variability in working memory capacity (Stevens, Tappon, Garg, & Fair, 2012) and episodic memory (Chan, Park, Savalia, Petersen, & Wig, 2014). Moreover, computational models of biological networks show that a modular organization is more efficient and adaptable to changing environments (Kashtan & Alon, 2005; Tosh & McNally, 2015). Extending this concept to the human brain suggests that modularity is an ideal structure that allows for specialized functions via local or within-module processing and complex functions through global processing across modules. Taken together, these results suggest that modularity is a critical component of learning, with a more modular structure potentially allowing for more efficient and greater adaptive reorganization in response to changing demands (Bassett et al., 2011; Russo, Herrmann, & de Arcangelis, 2014). In addition, modular brain organization has been shown to be disrupted in patients with neuropsychiatric disorders (Alexander-Bloch et al., 2010; 2012; Fornito, Zalesky, & Breakspear, 2015) and in patients with lesions to highly connected brain areas (Gratton et al., 2012), highlighting the importance of modularity for healthy brain function by enabling both stability and flexibility (Sporns & Betzel, 2016).

We propose that brain modularity may uniquely predict outcomes of cognitive interventions when baseline behavioral measures do not reliably distinguish between individuals or cannot be reliably obtained (Gabrieli et al., 2015; Gallen & D’Esposito, 2019). Recent work has shown that higher brain network modularity at baseline predicts greater training-related cognitive improvements in healthy older adults (Gallen et al., 2016; Baniqued et al., 2017) and in patients with traumatic brain injury (TBI; Arnemann et al., 2015), above and beyond individual differences in baseline behavioral measures. Although previous studies have suggested that cognitive training can improve aspects of cognition in healthy young adults (Au et al., 2015; Baniqued et al., 2015; Boot, Kramer, Simons, Fabiani, & Gratton, 2008; Karbach & Verhaeghen, 2014; Strobach, Frensch, & Schubert, 2012; but see Boot & Kramer, 2014; Dougherty, Hamovitz, & Tidwell, 2016; Melby-Lervåg & Hulme, 2013; Redick et al., 2013), the functional significance of brain network modularity in predicting training outcomes in young adults has not yet been examined.

In the present study, we sought to test whether pre-training brain modularity is a useful predictor of training effectiveness in a large sample of healthy young adults (n=68) who displayed training-related improvements after 15 hours of playing video games that engaged working memory and reasoning processes (WM-REAS), compared to an active control group (n=37) and a no-contact control group (n=38) (Baniqued et al., 2014; 2013). Based on previous findings (Arnemann et al., 2015; Baniqued et al., 2018; Gallen et al., 2016), we hypothesized that higher baseline brain modularity would predict greater training-related gains in the WM-REAS group, but not in the control groups. In addition, we hypothesized that in the WM-REAS group, the relationship between baseline brain modularity and training-related gain would be driven by connectivity in “association” networks that support processes such as working memory and reasoning (e.g., the default mode and fronto-parietal networks; Chan, Park, Savalia, Petersen, & Wig, 2014). These networks have also been shown to drive the relationship between baseline brain modularity and training-related gains in older adults (Gallen et al., 2016).

2. Materials and Methods

2.1. Participants

A total of 209 right-handed adults aged 18-30 participated in a multi-session study (see Baniqued et al., 2014; Nikolaidis et al., 2017; and Kranz, Baniqued, Voss, Lee, & Kramer, 2017, for behavioral data and MRI spectroscopy data published from this same cohort). Individuals were recruited from the University of Illinois at Urbana-Champaign and surrounding communities through newspaper and web-based announcements advertising a “cognitive training study.” Eligible applicants had normal or corrected-to-normal vision, had no major medical or psychiatric conditions, and reported playing video and board games for 3 hours or less per week in the last 6 months. Upon study enrollment, participants were randomly assigned to one of four groups: working memory and reasoning game group (WM-REAS 1), adaptive working memory and reasoning game group (WM-REAS 2), active control game group, or a no-contact control group. All games in each of the three game groups increased in difficulty within a session. More details about each group are provided in the next section. Participants were compensated $15/hour if they completed all required sessions. To encourage study completion, individuals who discontinued study participation were paid $7.50/hour for every session attended. The University of Illinois Institutional Review Board approved study procedures and all participants provided written informed consent. Additional details about recruitment procedures (e.g., initial e-mail survey, phone screening) are provided in the original publication of this study (Baniqued et al., 2014). Participants were excluded from the study if they reported in a post-experiment questionnaire that they 1) had played any of the games used for training or testing and/or 2) were active video game players, defined as playing games for more than 3 hours per week in the last 6 months (N=39).
In this study, we excluded additional participants due to poor MRI data quality (N=27 due to artifacts and motion, see MRI Preprocessing), leaving 143 participants for analysis (WM-REAS 1=34, WM-REAS 2=34, active control=37, no-contact=38). Given their comparable training-related effects (Baniqued et al., 2014) and the reduced sample size after excluding participants with unusable MRI data, in the main MRI-behavioral analyses, we combined the two WM-REAS groups into one group and the active control group and no-contact control group into another group, referred to as “WM-REAS” and “CONTROL,” respectively.

The two groups in the final sample did not significantly differ in age (t(141)=1.94, p=0.055; WM-REAS M=21.41, SD=2.34; CONTROL M=20.71, SD=2.01), years of education (t(141)=1.21, p=0.230; WM-REAS M=15.06, SD=1.50; CONTROL M=14.76, SD=1.43), or sex (χ2(1)=0.17, p=0.69; WM-REAS=51 females; CONTROL=54 females). We also confirmed that there were no significant group differences when analyzing the final sample as the four initial groups instead of the two combined groups (age: F(3,139)=1.27, p=0.29; years of education: F(3,139)=0.98, p=0.40; sex: χ2(3)=0.98, p=0.81).

2.2. Behavioral Methods

2.2.1. Protocol Summary

Participants completed four baseline testing sessions in a fixed session and task order (three behavioral sessions followed by one MRI session). Participants in the training groups then completed 10 sessions of casual game play. After training, or after a comparable amount of time (3-4 weeks) had elapsed for the no-contact control group, participants completed four testing sessions in the reverse of the baseline session order (the MRI session followed by the three behavioral sessions).

2.2.2. Training Games

Participants assigned to the training groups completed 10 sessions at a rate of two to three sessions per week. During each session, participants played four games in pseudo-random order for 20 minutes each. Training games were selected based on results from a study that correlated performance on casual games with performance on various tests of cognitive abilities (Baniqued et al., 2013; 2014). Casual games range in genre, involve relatively simple rules and do not require long-term commitment or specialized skills for gameplay. These games are typically freely available on the web and can be easily played on personal devices (Casual Games Market Report, 2007). The WM-REAS groups played casual games that were highly correlated with performance on working memory and reasoning tests (Baniqued et al., 2013), while the active control group played games that were not highly correlated with these tests. Table 1 contains brief descriptions of each game. The WM-REAS 1 and WM-REAS 2 groups differed primarily in the adaptiveness of the training games: all WM-REAS 2 games were adaptive across sessions such that the level of difficulty increased not only within, but also across training sessions. Three out of 4 games in the WM-REAS 1 and active control groups were adaptive only within and not across training sessions. Since the two WM-REAS groups showed similar effects of training (Baniqued et al., 2014), they are analyzed together in the MRI section of this study. The no-contact group did not undergo any training and only completed pre- and post-testing.

Table 1.

Training Games

| Training Games | Group | Description | Source |
|---|---|---|---|
| Silversphere | WM-REAS 1, WM-REAS 2 | Move a sphere to a blue vortex by creating a path using blocks of different features and avoiding obstacles along the path. | miniclip.com |
| Digital Switch | WM-REAS 1 | In the main game, switch “digibot” positions to collect falling coins corresponding to the same “digibot” color. | miniclip.com |
| TwoThree | WM-REAS 1 | Target rapidly presented numbers by pointing the mouse to the numbers and subtracting the numbers down to exactly zero in units of 2 or 3. | armorgames.com |
| Sushi Go Round | WM-REAS 1 | Serve a certain number of customers in the allotted time by learning and preparing different recipes correctly, cleaning tables, and ordering ingredients. | miniclip.com |
| Aengie Quest | WM-REAS 2 | Move across the board and exit each level by pushing switches and boxes, finding keys, and opening doors. | freegamesjungle.com |
| Gude Balls | WM-REAS 2 | Remove all plates by filling a plate with four of the same colored balls and switching balls to other plates while navigating around obstacles. | bigfishgames.com |
| Block Drop | WM-REAS 2 | Move around a gem on three-dimensional blocks of varying shapes to remove all blocks except the checkered block. | miniclip.com |
| Alphattack | Active Control | Prevent bombs from landing by quickly typing the characters presented on the approaching bombs. | miniclip.com |
| Crashdown | Active Control | Prevent the wall from reaching the top of the screen by clicking on groups of three or more same colored blocks. | miniclip.com |
| Music Catch 2 | Active Control | Earn points by mousing over streams of colored shapes and avoiding contiguously appearing red shapes. | reflexive.com |
| Enigmata | Active Control | Navigate a ship while avoiding and destroying enemies, and collecting objects that provide armor or power. | maxgames.com |

Note. Table adapted from Baniqued et al., 2014

2.2.3. Cognitive Tests

Here, we focused on the behavioral measures that demonstrated training-related effects, although additional details about other behavioral measures assessed can be found in the original publication (Baniqued et al., 2014). Significant group by time interactions were found in three tests: Attentional Blink (Raymond, Shapiro, & Arnell, 1992), Trail Making (Reitan, 1958), and Dodge (Armor Games), with the WM-REAS groups showing better performance after training. These three tests all showed high loadings on the fifth principal component in a principal components analysis of pre-training behavioral measures (Baniqued et al., 2014). This component was called “Divided Attention” in the original publication; here, we refer to improvement on this component simply as “training-related gain” and to the pre-test score on this component as “baseline performance.” Moreover, improvement in these tests was negatively correlated with baseline fluid intelligence scores (Gf; Ravens, 1962; Salthouse & Salthouse, 2005; Salthouse, Pink, & Tucker-Drob, 2008) as assessed by six tests: Form Boards (Ekstrom, French, Harman, & Dermen, 1976), Spatial Relations (Bennett, Seashore, & Wesman, 1997), Matrix Reasoning (Crone et al., 2009; Ravens, 1962), Paper Folding (Ekstrom et al., 1976), Shipley Abstraction (Zachary, 1986), and Letter Sets (Ekstrom et al., 1976). We reanalyzed data from these cognitive tests using the subset of participants with usable MRI data.

For baseline performance and baseline Gf, we computed standardized scores (z-scores) for each test and averaged the z-scores accordingly to create a composite “baseline performance” score and a composite “baseline Gf” score. We computed a composite measure of training-related improvement for each participant by averaging the standardized gain scores of each test that showed improvement. For each test, standardized gain scores were computed by taking the difference between post-test and pre-test performance and dividing this gain measure by the standard deviation of pre-test performance (collapsed across all groups). To reduce the influence of remaining extreme values in the correlation analyses, the composite scores were then winsorized (Tukey, 1962; Wilcox, 2005): any value more than 3 SD away from the mean was replaced with the 3 SD cut-off value. This affected the scores of only one subject, in the WM-REAS 1 group, whose training gain score was more than 3 SD above the mean and whose baseline performance score was more than 3 SD below the mean.
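As an illustration of the scoring steps described above, the following minimal Python sketch computes standardized gain scores, averages them into a composite, and winsorizes the composite at 3 SD. The function names and the simulated scores are hypothetical and are not taken from the original analysis code. The sketch also assumes that each test has already been scored so that higher values indicate better performance.

```python
import numpy as np

def standardized_gain(pre, post):
    """Gain = (post - pre) / SD of pre-test scores pooled across all groups."""
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    return (post - pre) / np.std(pre, ddof=1)

def winsorize_sd(x, n_sd=3.0):
    """Replace values beyond mean +/- n_sd * SD with the cut-off value."""
    x = np.asarray(x, dtype=float)
    mean, sd = x.mean(), x.std(ddof=1)
    return np.clip(x, mean - n_sd * sd, mean + n_sd * sd)

# Hypothetical pre/post scores: rows are participants, columns are three tests.
rng = np.random.default_rng(0)
pre = rng.normal(50, 10, size=(40, 3))
post = pre + rng.normal(2, 5, size=(40, 3))

# Standardize the gain on each test, average across tests, then winsorize.
gains = np.column_stack(
    [standardized_gain(pre[:, j], post[:, j]) for j in range(pre.shape[1])]
)
composite_gain = winsorize_sd(gains.mean(axis=1))
```

Dividing by the pooled pre-test SD places the three tests on a common scale before averaging, so that no single test dominates the composite.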

In the next sections are brief descriptions of each cognitive test and the measures used for analyses. Attentional Blink, Trail Making, and Dodge were used to measure baseline performance and training gains, while Form Boards, Spatial Relations, Matrix Reasoning, Paper Folding, Shipley Abstraction, and Letter Sets were used to measure Gf.

2.2.3.1. Attentional Blink (Raymond et al., 1992).

Participants were asked to identify the white letter (target 1) in a sequence of rapidly presented black letters, and identify whether the white letter was followed by a black “X” (target 2). The attentional blink was computed from trials when target 1 was accurately detected, as the difference in target 2 accuracy when detection is easiest (appearing 8 letters after target 1) and when detection is most difficult (appearing 2 letters after target 1).

2.2.3.2. Trail Making (Reitan, 1958).

In “Trails A,” participants connected numbered circles as quickly as possible by drawing a line between them in numerical order. In “Trails B,” participants connected both numbered and lettered circles by drawing a line between them, alternating between numbers and letters in numerical and alphabetical order. The trail-making cost was computed as the difference between the Trails B and Trails A completion times.

2.2.3.3. Dodge (Armor Games).

In this game, participants were directed to avoid enemy missiles and destroy enemy ships by guiding enemy missiles (directed at the participant’s ship) into other enemies. Participants pressed four buttons to navigate around the screen, which was increasingly populated with enemy ships and their missiles. Participants completed the first two levels on a laboratory computer, and practiced the same two levels in an MRI environment. Data used for analysis was the highest level reached after eight minutes of game play in an fMRI environment.

2.2.3.4. Shipley Abstraction (Zachary, 1986).

Participants were given a list of 20 word, letter, or number sequences and instructed to fill in the missing letters or numbers in each sequence. We analyzed the total number of correctly answered items within five minutes.

2.2.3.5. Matrix Reasoning (Crone et al., 2009; Ravens, 1962).

Participants were shown a 3 × 3 matrix of abstract patterns with one cell missing, and instructed to select which among three options best completes the matrix along both the rows and columns. We analyzed the total number of correctly answered items.

2.2.3.6. Paper Folding (Ekstrom et al., 1976).

Participants were asked to select the pattern of holes that would result from a punch through a sheet of paper folded in a certain sequence. We analyzed the total number of correctly answered items within 10 minutes.

2.2.3.7. Spatial Relations (Bennett et al., 1997).

Participants were instructed to identify the 3-dimensional object that would match a 2-dimensional object when folded. We analyzed the total number of correctly answered items in 10 minutes.

2.2.3.8. Form Boards (Ekstrom et al., 1976).

Participants were instructed to choose pieces that will exactly fill a certain shape. We analyzed the total number of correctly answered items in 8 minutes.

2.2.3.9. Letter Sets (Ekstrom et al., 1976).

Participants were presented with five sets of letter strings and asked to determine which letter set was different from the other four. We analyzed the total number of correctly answered items within 10 minutes.

2.3. MRI Acquisition and Preprocessing

During the fourth session of baseline testing, participants underwent MRI scanning on a 3 Tesla Siemens Trio MR scanner with a 12-channel head array receive coil. Anatomical data consisting of T1-weighted MPRAGE images were acquired with the following parameters: GRAPPA acceleration factor 2, voxel size = 0.9 × 0.9 × 0.9 mm, TR = 1900 ms, TI = 900 ms, TE = 2.32 ms, flip angle = 9°, FoV = 230 mm. Functional data during a six-minute resting-state scan were obtained using a T2*-weighted echoplanar imaging (EPI) pulse sequence with the following parameters: GRAPPA acceleration factor 2, 180 volumes, in-plane resolution = 2.4 mm2, TR = 2000 ms, TE = 25 ms, flip angle = 80°, FoV = 220 mm, 38 ascending slices of 3.5 mm thickness, no slice gap. The resting-state scan was performed immediately after five six-minute fMRI runs of the Attention Network Task (Fan, McCandliss, Sommer, Raz, & Posner, 2002). During the resting-state scan, participants were instructed to close their eyes, stay awake, and remain as still as possible. We excluded three participants from analyses due to potential artifacts in the anatomical and functional scans (N=1 in WM-REAS 1, N=2 in no-contact).

Brain extraction of anatomical images was performed with Advanced Normalization Tools (ANTs; Avants et al., 2011; 2010) using the LPBA40 template (Shattuck et al., 2008). Subjects with remaining non-brain tissue after this step were run through ANTs brain extraction using the Kirby/MMRR template (Landman et al., 2011) instead of the LPBA40 template. The skull-stripped structural images and raw functional images were preprocessed through the Configurable Pipeline for the Analysis of Connectomes (CPAC; Giavasis et al., 2015). Structural scans were registered to the MNI152 template (Fonov, Evans, McKinstry, & Almli, 2009) using ANTs and segmented into grey matter (probability threshold = 0.7), white matter (probability threshold = 0.96) and CSF (probability threshold = 0.96) via FSL/FAST (Zhang, Brady, & Smith, 2001). EPI scans were slice-time corrected, motion-corrected using the Friston 24-Parameter Model (Friston, Williams, Howard, Frackowiak, & Turner, 1996), and co-registered to the anatomical images. Nuisance signal correction was performed by regressing out the aforementioned motion parameters, signals from the first five principal components from white matter and CSF voxels (CompCor; Behzadi, Restom, Liau, & Liu, 2007), and linear and quadratic trends. The functional data was then bandpass filtered from 0.009 to 0.08 Hz. Participants with maximum absolute displacement greater than 3.4 mm were excluded from analysis (N=8 in WM-REAS 1, N=6 in WM-REAS 2, N=9 in active control, N=4 in no-contact).

2.4. Functional Connectivity and Brain Modularity Analyses

The functional scans were warped to the MNI template and parcellated into 264 regions of interest (Power et al., 2011). Time series were averaged for all voxels in an ROI. Due to uneven partial coverage of the cerebellum in the functional data, we excluded the four cerebellum module ROIs prior to running the network analyses. Nine additional ROIs (2 in “default mode” module, 7 in “uncertain” module containing unassigned nodes, as identified in Power et al., 2011) were excluded due to lack of EPI coverage in at least one subject. For each participant, functional connectivity matrices were created by correlating the time-series between each pair of ROIs using Pearson’s coefficient and applying a Fisher z-transformation.
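A minimal sketch of the connectivity step, assuming each participant's ROI-averaged time series is stored as a NumPy array with one row per volume and one column per ROI. The function name and the simulated data are hypothetical; setting the diagonal to zero before the Fisher transformation is an assumption made here to keep the transformed values finite.

```python
import numpy as np

def connectivity_matrix(ts):
    """Pearson correlations between ROI time series, Fisher z-transformed.

    ts: array of shape (n_volumes, n_rois).
    """
    r = np.corrcoef(ts, rowvar=False)  # ROI x ROI correlation matrix
    np.fill_diagonal(r, 0.0)           # arctanh(1) is infinite, so drop self-correlations
    return np.arctanh(r)               # Fisher z-transformation

# Simulated data: 180 volumes and 251 ROIs, matching the dimensions in the text.
rng = np.random.default_rng(0)
ts = rng.standard_normal((180, 251))
z = connectivity_matrix(ts)
```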

In the remaining 143 participants, the 251 × 251 functional connectivity matrices were binarized to create adjacency matrices that indicate the presence or absence of a connection between a pair of regions. Matrices were binarized over a range of connection density thresholds or “costs” (here, the top 2-10% of all possible connections in the network in 2% increments, following Power et al., 2011, 2013). Each of these thresholded matrices was used to create unweighted, undirected whole-brain graphs with which network metrics were examined. Network metrics were computed separately for each connection threshold to determine the consistency of results. We report results at the middle 6% connection threshold and show that the results are consistent across connection density thresholds. The BrainX (https://github.com/nipy/brainx) and NetworkX Python packages (Hagberg, Schult, & Swart, 2008), as well as custom Python scripts, were used for network analyses.
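The thresholding step can be sketched as follows. This is a hedged illustration rather than the BrainX implementation: the function name, the rounding of the edge count, and the handling of tied weights are assumptions, and the small random matrix stands in for a real connectivity matrix.

```python
import numpy as np

def binarize_at_cost(fc, cost):
    """Keep the strongest `cost` fraction of all possible edges.

    fc: symmetric (n_rois x n_rois) connectivity matrix.
    Returns a symmetric 0/1 adjacency matrix with a zero diagonal.
    """
    n = fc.shape[0]
    iu = np.triu_indices(n, k=1)             # each undirected edge once
    weights = fc[iu]
    n_keep = int(round(cost * weights.size))
    strongest = np.argsort(weights)[::-1][:n_keep]
    adj = np.zeros((n, n), dtype=int)
    adj[iu[0][strongest], iu[1][strongest]] = 1
    return adj + adj.T                       # mirror into the lower triangle

# Hypothetical 20-node example evaluated at the five costs used in the text.
rng = np.random.default_rng(0)
m = rng.standard_normal((20, 20))
fc = (m + m.T) / 2
graphs = {c: binarize_at_cost(fc, c) for c in (0.02, 0.04, 0.06, 0.08, 0.10)}
```

Thresholding by density rather than by a fixed correlation value gives every participant a graph with the same number of edges, so modularity values are not confounded by overall connectivity strength.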

To examine the role of modular network organization in predicting training-related gains, we quantified network modularity, a global network measure that compares the number of connections within modules to the number of connections between modules (Newman & Girvan, 2004). Modularity is defined as

$$Q = \sum_{i=1}^{m} \left( e_{i} - a_{i}^{2} \right)$$

where $e_i$ is the fraction of connections that connect two nodes within module $i$, $a_i$ is the fraction of connections connecting a node in module $i$ to any other node, and $m$ is the total number of modules in the network (Newman & Girvan, 2004). Modularity will be close to 1 if all connections fall within modules and it will be 0 if there are no more connections within modules than would be expected by chance.
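For a fixed assignment of nodes to modules, the modularity measure described above can be computed directly from a binary adjacency matrix. The sketch below uses the standard Newman and Girvan form, Q = Σ (e_i − a_i²), with a_i taken as the fraction of edge ends attached to module i. The function name and the toy graph are hypothetical.

```python
import numpy as np

def modularity(adj, labels):
    """Newman-Girvan modularity of a partition of an undirected binary graph."""
    adj = np.asarray(adj, dtype=float)
    labels = np.asarray(labels)
    two_m = adj.sum()  # every undirected edge is counted twice
    q = 0.0
    for module in np.unique(labels):
        idx = labels == module
        e_i = adj[np.ix_(idx, idx)].sum() / two_m  # fraction of edges inside the module
        a_i = adj[idx, :].sum() / two_m            # fraction of edge ends in the module
        q += e_i - a_i ** 2
    return q

# Toy graph: two disconnected triangles (nodes 0-2 and nodes 3-5).
adj = np.zeros((6, 6), dtype=int)
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    adj[i, j] = adj[j, i] = 1

q_two_modules = modularity(adj, [0, 0, 0, 1, 1, 1])  # 0.5
q_one_module = modularity(adj, [0, 0, 0, 0, 0, 0])   # 0.0
```

In the toy graph, each triangle contains half of the edges (e_i = 0.5) and half of the edge ends (a_i = 0.5), so each module contributes 0.5 − 0.25 = 0.25 and Q = 0.5. Placing all nodes in one module gives Q = 0.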

As there are multiple methods for identifying network modules, we used several approaches. We first quantified modularity using a spectral algorithm (Newman, 2006a) to identify the optimal modular partition (i.e., maximal modularity) for each subject at each connection threshold. We also computed modularity using pre-defined modules, by assigning each node to a module as previously identified in Power et al. (2011) using the Infomap algorithm (Fortunato, 2010; Rosvall & Bergstrom, 2008). Thirteen modules were used for subsequent analysis (as identified in Power et al., 2011), with certain modules classified as an “association cortex” network (default mode, fronto-parietal, cingulo-opercular, salience, dorsal attention, ventral attention) or a “sensory-motor” network (auditory, visual, sensory/somatomotor hand, and sensory/somatomotor mouth; Chan et al., 2014). Three modules were not classified as either association or sensory-motor networks: memory, subcortical, and “uncertain” (module containing unassigned nodes).
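The core idea of the spectral approach (Newman, 2006a) can be illustrated with a single bisection step: nodes are split according to the signs of the leading eigenvector of the modularity matrix B = A − kkᵀ/2m. The sketch below is a simplified illustration, not the BrainX implementation. The full algorithm repeatedly subdivides modules and refines node assignments, which is omitted here, and the function name and toy graph are hypothetical.

```python
import numpy as np

def spectral_bisection(adj):
    """Split a graph in two using the leading eigenvector of the modularity matrix."""
    adj = np.asarray(adj, dtype=float)
    k = adj.sum(axis=1)                   # node degrees
    two_m = k.sum()                       # twice the number of edges
    B = adj - np.outer(k, k) / two_m      # modularity matrix
    eigvals, eigvecs = np.linalg.eigh(B)  # eigenvalues in ascending order
    leading = eigvecs[:, -1]
    s = np.where(leading >= 0, 1, -1)     # module membership as +1 / -1
    q = s @ B @ s / (2 * two_m)           # Q = s^T B s / 4m
    return s, q

# Toy graph: two triangles (nodes 0-2 and 3-5) joined by a single edge 2-3.
adj = np.zeros((6, 6), dtype=int)
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    adj[i, j] = adj[j, i] = 1

membership, q = spectral_bisection(adj)
```

For this toy graph the split separates the two triangles, with Q = 5/14.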

2.5. Statistical Analysis

2.5.1. Behavioral data

Although we combined the WM-REAS groups and the control groups in the subsequent analyses, we also verified that the pattern of training effects across the four groups in this study’s reduced sample was similar to the published findings in the larger sample (Baniqued et al., 2014). Training-related gains were primarily assessed 1) at a construct level, using a one-way ANOVA with a between-subjects factor of training group (WM-REAS 1, WM-REAS 2, active control, no-contact) and with a composite score of training-related gain as the dependent measure (described in Cognitive Tests), and 2) at a task level, using repeated-measures ANOVAs with a within-subjects factor of time (pre- and post-test score) and a between-subjects factor of training group. Effect sizes for ANOVAs are provided as partial eta squared (η2p).

2.5.2. Baseline brain modularity and training-related gain

The relationship between baseline brain modularity and training-related gains was assessed with 1) correlations between modularity identified with spectral clustering (performed at 6% cost threshold, but confirmed pattern of results at other costs) and a composite training gain score, and tested with 2) linear regressions with factors of training group, modularity (6% cost threshold), and an interaction term of training group and modularity, with mean-centering of the input variables. We also verified that the patterns of results were similar for whole-brain modularity values derived using a predefined partition (Power et al., 2011).
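The regression model described above can be sketched with ordinary least squares. This is a minimal illustration under stated assumptions: group is coded 0 (CONTROL) or 1 (WM-REAS), both predictors are mean-centered before the interaction term is formed, and the function name and simulated data are hypothetical rather than taken from the original analysis.

```python
import numpy as np

def fit_interaction_model(group, modularity, gain):
    """OLS fit of gain ~ group + modularity + group x modularity (mean-centered inputs)."""
    g = np.asarray(group, dtype=float)
    q = np.asarray(modularity, dtype=float)
    y = np.asarray(gain, dtype=float)
    g_c = g - g.mean()
    q_c = q - q.mean()
    X = np.column_stack([np.ones_like(y), g_c, q_c, g_c * q_c])
    coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
    names = ["intercept", "group", "modularity", "group_x_modularity"]
    return dict(zip(names, coefs))

# Simulated example: modularity relates to gain only in the training group.
rng = np.random.default_rng(0)
group = np.repeat([0, 1], 50)
mod = rng.normal(0.4, 0.05, size=100)
gain = 0.5 * group + 4.0 * group * (mod - 0.4) + rng.normal(0, 0.3, size=100)
coefficients = fit_interaction_model(group, mod, gain)
```

Mean-centering reduces the collinearity between the main effects and the interaction term, so the main-effect coefficients can be read as effects at the average value of the other predictor.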

Unless otherwise noted, for all analyses, we report two-tailed Pearson correlations (r), partial correlations (rp) and 95% bias-corrected and accelerated (BCa) confidence intervals based on 5000 samples. Follow-up correlation analyses (e.g., controlling for motion or baseline cognitive ability) were assessed with one-tailed tests to confirm the initial pattern of results. In the regression analyses, 95% BCa CIs were also computed for the coefficients (B), and p(∆F) denotes changes in fit when comparing models.
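A partial correlation (rp) can be understood as the Pearson correlation between two variables after a covariate has been regressed out of both. The sketch below illustrates this residual-based definition for a single covariate. It is not necessarily how the statistics were computed in the original analysis, and the function name and simulated data are hypothetical.

```python
import numpy as np

def partial_corr(x, y, covar):
    """Correlation between x and y after regressing `covar` out of both."""
    x, y, covar = (np.asarray(v, dtype=float) for v in (x, y, covar))
    Z = np.column_stack([np.ones_like(covar), covar])   # intercept + covariate
    res_x = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    res_y = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return np.corrcoef(res_x, res_y)[0, 1]

# Simulated example: modularity and gain both depend partly on a covariate.
rng = np.random.default_rng(0)
covariate = rng.standard_normal(68)
modularity_values = 0.5 * covariate + rng.standard_normal(68)
gain = 0.3 * modularity_values + 0.5 * covariate + rng.standard_normal(68)
rp = partial_corr(modularity_values, gain, covariate)
```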

2.5.3. Contributions of specific sub-networks to the relationship between global modularity and training gain

Specific network contributions to the relationship between global brain modularity and training gains were assessed with the modularity of each sub-network (degree of within-to-between connectivity) using modules as defined in Power et al. (2011). In these sub-network analyses, we used the average modularity value across connection density thresholds. In the analysis of sub-network contributions to global modularity in the WM-REAS group, we first tested whether modularity values differed across the 12 modules (as defined in Power et al., 2011, excluding the “uncertain” module) using a repeated-measures ANOVA with a within-subjects factor of module. F-values and p-values were corrected for sphericity using the Greenhouse-Geisser method (GG). Motivated by previous findings (Baniqued et al., 2018; Gallen et al., 2016), we then correlated training gain with the modularity of the association cortex sub-network, and with the modularity of each module in the association cortex sub-network.

2.5.4. Controlling for in-scanner motion

Follow-up analyses were conducted to control for motion, which may influence functional connectivity estimates (Power, Barnes, Snyder, Schlaggar, & Petersen, 2012; Satterthwaite et al., 2013; 2012; Van Dijk, Sabuncu, & Buckner, 2012). We first examined whether mean framewise displacement (FD) differed across groups. The brain-behavior analyses were also repeated while controlling for mean FD. We also repeated all analyses after censoring volumes with FD > 0.2 mm, which included removing one volume before and two volumes after the flagged volume. It is important to note, however, that motion censoring comes at the cost of a shorter time series, reduced degrees of freedom, and unequal numbers of volumes across subjects, and has been shown to confer no additional benefit when CompCor is applied (Muschelli et al., 2014).
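The censoring rule described above (flag volumes with FD > 0.2 mm, then also remove one volume before and two volumes after each flagged volume) can be sketched as a boolean mask over volumes. The function name and the example FD trace are hypothetical.

```python
import numpy as np

def censor_mask(fd, threshold=0.2, n_before=1, n_after=2):
    """Return a boolean array that is True for volumes to keep."""
    fd = np.asarray(fd, dtype=float)
    keep = np.ones(fd.size, dtype=bool)
    for t in np.flatnonzero(fd > threshold):
        start = max(t - n_before, 0)
        stop = min(t + n_after, fd.size - 1)
        keep[start:stop + 1] = False  # drop the flagged volume and its neighbours
    return keep

# Hypothetical FD trace (mm) with a single high-motion volume at index 3.
fd = [0.05, 0.04, 0.06, 0.50, 0.05, 0.03, 0.04, 0.05, 0.06, 0.04]
keep = censor_mask(fd)
n_remaining = int(keep.sum())  # 6 of 10 volumes remain
```

A single flagged volume removes four volumes in total, which illustrates how quickly censoring shortens a 180-volume time series in participants with frequent small movements.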

3. Results

3.1. Behavioral Data

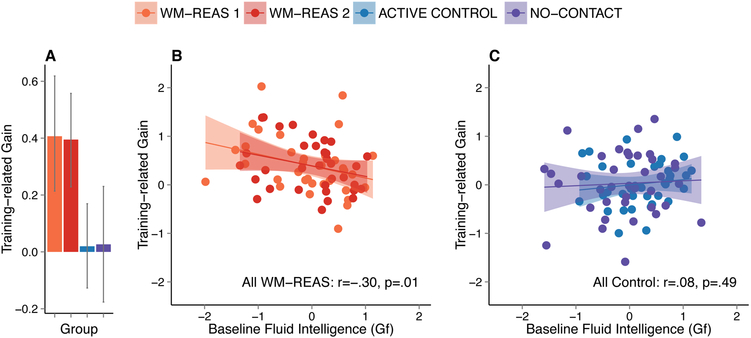

Consistent with findings from a larger sample of participants (Baniqued et al., 2014), the WM-REAS groups in this study showed greater training-related gains (Figure 1a), as measured by a one-way ANOVA with a between-subjects factor of the four training groups on the composite improvement score (group effect: F(3,139)=5.263, p=0.002, η2=0.102), and by repeated-measures ANOVAs of pre- and post-test performance on each task (group by time interactions: Trail Making: F(3,135)=3.721, p=0.013, η2p=0.076; Attentional Blink: F(3,139)=3.464, p=0.018, η2p=0.070; Dodge: F(3,133)=3.153, p=0.027, η2p=0.066).
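The effect sizes reported above can be cross-checked from the F statistics and their degrees of freedom, since (partial) eta squared equals F·df_effect / (F·df_effect + df_error). The short sketch below applies this identity to the reported values; the function name is hypothetical.

```python
def eta_squared_from_f(f_value, df_effect, df_error):
    """(Partial) eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values and degrees of freedom as reported in the text.
reported = {
    "Composite gain": (5.263, 3, 139),
    "Trail Making": (3.721, 3, 135),
    "Attentional Blink": (3.464, 3, 139),
    "Dodge": (3.153, 3, 133),
}
for label, (f_value, df1, df2) in reported.items():
    print(f"{label}: {eta_squared_from_f(f_value, df1, df2):.3f}")
# Composite gain: 0.102
# Trail Making: 0.076
# Attentional Blink: 0.070
# Dodge: 0.066
```

The recomputed values match the reported effect sizes to three decimal places.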

Figure 1.

Behavioral training effects. A) Mean training-related gain for all four groups. Error bars are 95% bootstrapped confidence intervals. The right panels (B, C) show the relationship between baseline fluid intelligence (Gf) and training-related gain in the WM-REAS groups (B) and control groups (C). Shown is the Pearson’s coefficient (r) and the two-tailed p-value. Shaded areas represent 95% confidence region of the regression line.

Consistent with previous findings (Baniqued et al., 2014), baseline Gf was negatively correlated with training gain (Figure 1b) in the WM-REAS groups (WM-REAS all: r(66)=−0.296, p=0.014, BCa 95% CI [−0.510 −0.054]; WM-REAS 1: r(32)=−0.307, p=0.077, BCa 95% CI [−0.599 −0.004]; WM-REAS 2: r(32)=−0.280, p=0.109, BCa 95% CI [−0.562 0.033]) but not in the control groups (Figure 1c; CONTROL all: r(73)=0.081, p=0.490, BCa 95% CI [−0.148 0.306]; no-contact: r(36)=0.055, p=0.743, BCa 95% CI [−0.281 0.369]; active: r(35)=0.137, p=0.417, BCa 95% CI [−0.177 0.454]). Specifically, in the WM-REAS groups, those with lower baseline Gf scores showed greater training gains.

As a follow-up analysis, we probed the relationship between training-related gain and baseline performance (on the tests with training-related gains), and found that higher baseline performers showed smaller gains. This significant negative relationship was observed in each of the two groups (WM-REAS all: r(66)=−0.665, p<0.001, BCa 95% CI [−0.775 −0.508]; CONTROL all: r(73)=−0.579, p<0.001, BCa 95% CI [−0.725 −0.399]). Further, across all participants, baseline performance was positively correlated with baseline Gf, r(141)=0.306, p<0.001, BCa 95% CI [0.150 0.462]. However, including baseline Gf or baseline performance as a covariate in the analysis of group effects in training-related gain did not significantly change the results (one-way ANOVA on composite improvement score with baseline Gf as a covariate, group effect: F(3,138)=5.010, p=0.003, η2=0.098; one-way ANOVA on composite improvement score with baseline performance as a covariate, group effect: F(3,138)=5.392, p=0.002, η2=0.105). Similar results were found when using a composite score of post-training performance instead of composite improvement score.

Given their comparable training-related effects and the reduced sample size after excluding participants with unusable MRI data, in the subsequent MRI analyses, we combined the two WM-REAS groups into one group (“WM-REAS”) and the active control group and no-contact control group into another group (“CONTROL”). We verified that the training effects were similar when analyzing the combined groups. There was a similar group effect in composite improvement score even after controlling for baseline Gf (F(1,140)=15.220, p<0.001, η2=0.098) or baseline performance (F(1,140)=16.185, p<0.001, η2=0.104), in which the WM-REAS group showed greater training-related improvements than the CONTROL group.

3.2. Brain Network Modularity Data

3.2.1. Baseline brain modularity and training-related gain

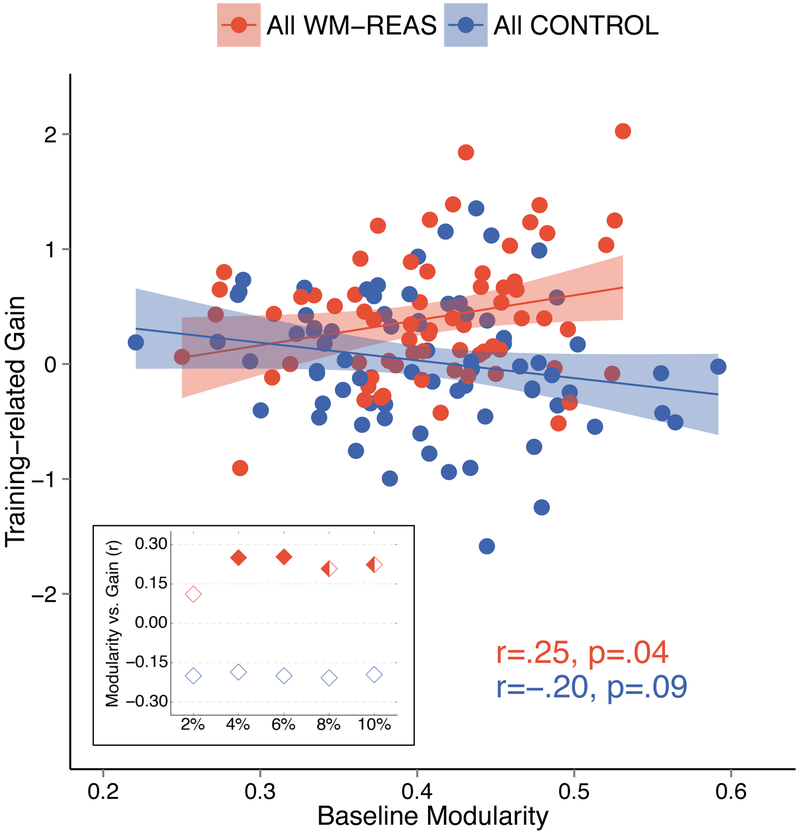

First, we determined whether the observed training-related gains could be predicted by baseline brain modularity (i.e., global modularity identified with spectral clustering). In the combined WM-REAS group, we found a significant positive relationship between training-related gain and baseline modularity (Figure 2a; 6% cost: r(66)=0.253, p=0.037, BCa 95% CI [0.006 0.468]), whereas no such relationship was found in the combined control group (Figure 2a; 6% cost: r(73)=−0.200, p=0.086, BCa 95% CI [−0.356 −0.029]). Further, the correlations between baseline modularity and training gain were significantly different between the WM-REAS and control groups (6% cost: Z=2.70, p=0.007, two-tailed). We confirmed that these relationships were similar at different cost thresholds applied to binarize each subject’s correlation matrix (Figure 2 inset). In the WM-REAS group, we also confirmed that the pattern of results was similar when using modularity values derived using the Power partition (see Supplementary Material).
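A standard way to compare two correlations from independent groups is Fisher's r-to-z test; the sketch below assumes this is the test behind the reported Z value, which the text does not state explicitly. Sample sizes are inferred from the reported degrees of freedom (r(66) implies n=68; r(73) implies n=75), and the function name `compare_independent_correlations` is hypothetical.

```python
import math


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for two correlations from independent samples.

    Returns the Z statistic and the two-tailed p-value.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher transformation
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    # Two-tailed p from the standard normal: 2 * (1 - Phi(|z|)).
    p_two_tailed = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_two_tailed


# WM-REAS: r(66)=0.253; CONTROL: r(73)=-0.200 (6% cost).
z, p = compare_independent_correlations(0.253, 68, -0.200, 75)
```

Under these assumptions the result rounds to Z=2.70 and p=0.007, matching the values reported above.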

Figure 2.

Relationship between baseline modularity (6% threshold) and training-related gain. Shown is the Pearson’s coefficient (r) and the two-tailed p-value. Shaded areas represent 95% confidence region of the regression line. Inset: Correlation (Pearson’s coefficient) between baseline modularity and training-related gain for each tested threshold. Solid diamonds indicate where the effect was significant at p<.05, two-tailed. Half-solid diamonds indicate where the effect was significant at p<.10, two-tailed.

To further confirm this pattern of results, we ran a multiple regression analysis to predict training gain using the following variables: training group, modularity (6% cost) and a centered interaction term of group and modularity. The model was significant, R2=0.15, Adjusted R2=0.13, F(3,139)=8.055, p<0.001, with training group (B=0.38, p<0.001, BCa 95% CI [0.19 0.56]) and the interaction term (B=3.72, p=0.007, BCa 95% CI [1.25 6.54]) as significant predictors. Modularity itself was only partially informative as a predictor (B=−1.54, p=.081, BCa 95% CI [−2.84 −0.22]). These results, namely the significant interaction term, indicated that the relationship between modularity and training gain was dependent on group. This model was a significantly better fit than a model with training group alone (p(∆F)=0.026, R2=0.10, Adjusted R2=0.10, F(1,141)=16.00, p<0.001) and a model with only training group and modularity (p(∆F)=0.007, R2=0.10, Adjusted R2=0.09, F(2,140)=7.95, p=0.001). Similar patterns of results were found when using other cost thresholds for spectral-derived modularity values (Supplementary Material), and when using modularity values derived using the Power partition (Supplementary Material).

3.2.2. Baseline brain modularity, baseline cognition, and training-related gain

As previous studies demonstrated that brain modularity is a better predictor of training-related cognitive gains than baseline behavioral measures (Arnemann et al., 2015; Gallen et al., 2016), we examined the relationship between baseline modularity and baseline cognition (baseline Gf and baseline task performance) in this sample of young adults. Across all participants, baseline modularity was not significantly associated with baseline Gf (6% cost: r(141)=−0.074, p=0.381, BCa 95% CI [−0.256 0.105]) or baseline task performance (6% cost: r(141)=−0.133, p=0.113, BCa 95% CI [−0.276 0.010]). We confirmed this pattern of results at different cost thresholds, and using modularity values derived using the Power partition (Supplementary Material).

As baseline Gf was correlated with training gain in the WM-REAS group (Figure 1b), we also re-examined the relationship between modularity and training gain in the WM-REAS group while controlling for baseline Gf. This relationship remained significant after controlling for baseline Gf (6% cost: rp(65)=0.236, p=0.027, one-tailed, BCa 95% CI [−0.030 0.450]). We confirmed this pattern of results at different cost thresholds: except for 2% cost with r=0.106, p=0.197, one-tailed, all others r>0.181, p<0.071, one-tailed (Supplementary Material). The pattern of results was also similar when using modularity values derived using the Power partition (Supplementary Material).
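For a single covariate, a partial correlation such as the one above can be computed from the three pairwise Pearson correlations, and its degrees of freedom drop by one relative to the zero-order correlation (here n − 3 = 65 for n = 68). The sketch below shows the first-order formula; the function name `partial_correlation` is hypothetical and the example inputs are illustrative values, not the study's data.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    denom = math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))
    return (r_xy - r_xz * r_yz) / denom


# Illustrative values only: x = modularity, y = training gain, z = covariate.
rp = partial_correlation(r_xy=0.25, r_xz=-0.05, r_yz=-0.30)

# When the covariate is uncorrelated with both variables,
# the partial correlation equals the zero-order correlation.
rp_unrelated = partial_correlation(r_xy=0.25, r_xz=0.0, r_yz=0.0)
```

The formula makes clear why controlling for a covariate that is only weakly related to modularity leaves the modularity-gain correlation largely unchanged, whereas a covariate strongly related to gain can attenuate it.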

To further examine the relationship between modularity and Gf in predicting training outcomes in the WM-REAS group, we performed a multiple regression analysis to predict training gain using baseline modularity (6% cost) and baseline Gf. This model was significant, R2=0.14, Adjusted R2=0.11, F(2,65)=5.22, p=0.008, with baseline Gf as a significant predictor (B=−0.22, p=0.021, BCa 95% CI [−0.40 −0.04]) and modularity as a near-significant predictor (B=1.95, p=0.054, BCa 95% CI [−0.22 4.25]). A model with an interaction term was not a better fit (p(∆F)=0.345, R2=0.15, Adjusted R2=0.11, F(3,64)=3.77, p=0.015). These results confirmed that, in the WM-REAS sample, modularity and Gf independently predict training-related gains and, further, that the relationship between modularity and training gain was not moderated by baseline Gf. Similar patterns of results were found when using other cost thresholds for spectral-derived modularity values, and when using modularity values derived using the Power partition (Supplementary Material).

As baseline task performance was also correlated with training gain, we reexamined the relationship between modularity and training gain in the WM-REAS group while controlling for baseline performance. Unlike controlling for baseline Gf, controlling for baseline performance attenuated the relationship between baseline modularity and training gain (6% cost: rp(65)=0.038, p=0.379, one-tailed, BCa 95% CI [−0.218 0.278]). We confirmed this pattern of results at different cost thresholds and when using modularity values derived using the Power partition (Supplementary Material).

These results may have been driven by a potential behavioral ceiling effect described previously, where higher performers at baseline showed smaller gains. There was also a positive correlation between baseline performance and post-training performance in both groups (all r>0.457, all p<0.001). Taken with the negative correlation between baseline performance and training gain, these findings point to a ceiling effect on training-related gains. To incorporate a potential moderating effect of baseline performance, we performed a multiple regression analysis to predict training gain using baseline modularity, baseline performance, and a centered interaction term. This model was significant, R2=0.49, Adjusted R2=0.46, F(3,64)=20.37, p<0.001, with significant predictors of baseline performance (B=−0.45, BCa 95% CI [−0.60 −0.32], p<0.001) and the interaction of baseline performance and baseline modularity (B=−2.94, p=0.020, BCa 95% CI [−4.99 −0.74]). Modularity itself was not a significant predictor, B=0.37, BCa 95% CI [−1.26 2.37], p=0.652. This model was a significantly better fit than a model with only baseline performance and baseline modularity (p(∆F)=0.020, R2=0.44, Adjusted R2=0.43, F(2,65)=25.87, p<0.001). These results confirmed that, in the WM-REAS sample, baseline performance independently predicted training-related gains and, further, that the relationship between modularity and training gains was moderated by baseline task performance. Specifically, modularity was a stronger predictor of training gains in lower-performing individuals at baseline. Similar patterns of results were found when using other cost thresholds for spectral-derived modularity values, and when using modularity values derived using the Power partition.

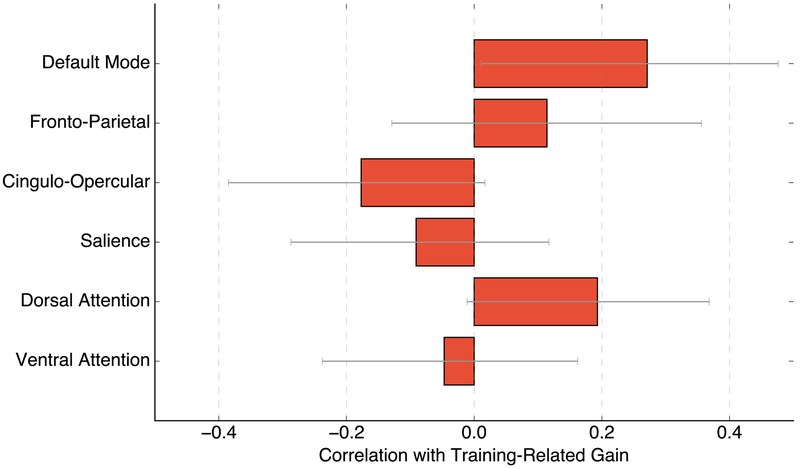

3.2.3. Contributions of specific sub-networks to the relationship between global modularity and training gain

Since modularity in the association system networks (i.e., DMN, FP, CO, VAN, DAN, Sal) has been shown to drive the relationship between baseline modularity and training gain, in the WM-REAS group, we examined the contribution of specific brain sub-networks to global modularity, which is the sum of contributions from all sub-networks. For these analyses, instead of using the spectral algorithm to identify sub-networks, we used those determined by the InfoMap algorithm (Fortunato, 2010; Rosvall & Bergstrom, 2008) in a previous study (Power et al., 2011), which yields modularity measures that are highly correlated with the spectral maximization approach (using the average modularity value across connection density thresholds, r(66)=0.874, p<0.001, BCa 95% CI [0.82 0.92]). A repeated measures ANOVA with a within-subjects factor of module revealed that modularity differed across the 12 sub-networks (excluding the “Uncertain” and cerebellum modules; F(3.037,203.467)=375.794, p(GG)<0.001, η2p=0.849), suggesting that connectivity of specific sub-networks may be contributing to training-related gains.

We then examined whether modularity in the association networks was correlated with training-related gains in the WM-REAS group. The correlation was significant, r(66)=.226, p=.032, one-tailed, BCa 95% CI [0.008 0.417]. For comparison, the correlation between modularity in the sensory-motor system networks (Visual, Auditory, Somatomotor: hand and mouth) and training-related gains was not significant, r(66)=.066, p=.297, one-tailed, BCa 95% CI [−0.179 0.289].

We then examined whether specific modules in the association network drove the relationship with training gain, as connectivity in specific brain networks has been shown to predict clinical outcomes (Reggente et al., 2018; Doucet et al., 2018). Thus, for each association sub-network, we examined the relationship between modularity and training gain and found a positive correlation in one of the modules, labeled the default mode network (DMN; Figure 3; r(66)=0.271, p=0.026, BCa 95% CI [0.028 0.465]). Specifically, higher baseline within-DMN connectivity was related to greater training gains, although this result was not statistically significant after Bonferroni correction for multiple comparisons across the six association modules. Nonetheless, we further examined whether DMN modularity interacts with baseline task performance to predict training gain. The three-predictor model of baseline task performance, DMN modularity, and the interaction of baseline task performance and DMN modularity was significant, R2=0.47, Adjusted R2=0.45, F(3,64)=19.22, p<0.001, with baseline performance as a significant predictor (B=−0.47, BCa 95% CI [−0.65 −0.34], p<0.001) and the interaction term as a near-significant predictor of training gain (B=−6.35, BCa 95% CI [−11.95 0.08], p=0.063). DMN modularity itself was not a significant predictor (B=0.53, BCa 95% CI [−3.01 5.29], p=0.815).

Figure 3.

Association sub-network module contributions to the relationship between global modularity and training-related gain. Shown is the correlation between training-related gain and module-specific modularity (i.e., in each association sub-network module, degree of within-to-between connectivity, averaged across thresholds). Error bars are 95% bootstrapped confidence intervals.

As the above network-specific metrics were examined using modularity values averaged across thresholds, we also examined module segregation (Chan et al., 2014), a metric that retains the weights of all connections (including those below the 2-10% connection density thresholds). Module segregation is quantified by (Zw − Zb)/Zw, where Zw is the average Fisher-transformed correlation between nodes in the same module (within-module connectivity) and Zb is the average Fisher-transformed correlation between nodes in a module and nodes in any other module (between-module connectivity). Guided by previous findings, we focused on whole-brain segregation and segregation in the association sub-network. Neither segregation measure was significantly related to training-related gain, although the whole-brain segregation results were in the same direction as the modularity vs. training-related gain results.
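The segregation formula above can be sketched directly from a correlation matrix and a module assignment. The example is a minimal whole-graph version under stated assumptions: the function name `module_segregation` and the toy matrix are hypothetical, and any additional preprocessing (for example, the handling of negative correlations) is not shown because it is not specified here.

```python
import math


def module_segregation(corr, labels):
    """Whole-graph segregation (Zw - Zb) / Zw from a correlation matrix.

    corr   : square, symmetric list of lists of Pearson correlations
    labels : module label for each node (same order as corr)
    """
    n = len(corr)
    within, between = [], []
    for i in range(n):
        for j in range(i + 1, n):  # each node pair once, diagonal skipped
            z = math.atanh(corr[i][j])  # Fisher transformation
            if labels[i] == labels[j]:
                within.append(z)
            else:
                between.append(z)
    z_w = sum(within) / len(within)
    z_b = sum(between) / len(between)
    return (z_w - z_b) / z_w


# Toy example (hypothetical): two modules of two nodes each,
# r=0.6 within modules and r=0.2 between modules.
corr = [
    [1.0, 0.6, 0.2, 0.2],
    [0.6, 1.0, 0.2, 0.2],
    [0.2, 0.2, 1.0, 0.6],
    [0.2, 0.2, 0.6, 1.0],
]
labels = ["A", "A", "B", "B"]
segregation = module_segregation(corr, labels)
```

Values closer to 1 indicate that within-module connectivity dominates; a value of 0 would mean within- and between-module connectivity are equal on average.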

3.2.4. Controlling for in-scanner motion

As in-scanner motion can spuriously affect functional connectivity estimates (Power et al., 2012; Satterthwaite et al., 2012; Van Dijk et al., 2012; Satterthwaite et al., 2013), we confirmed that the relationship between baseline brain modularity and training-related gains was not due to motion.

First, mean framewise displacement (FD; Power et al., 2012) did not differ between the two groups, F(1,141)=0.084, p=0.772, η2p=0.001 (WM-REAS: M=0.141, SD=0.047; CONTROL: M=0.143, SD=0.052). Second, in both groups, controlling for mean FD in the correlation analyses and including it as a predictor in the regression analyses did not substantially change the relationship between baseline modularity and training gain, even when factoring in baseline Gf and baseline performance (Supplementary Material). Also, controlling for mean FD did not alter the findings in the association network and segregation analyses.

It has been suggested that prior to functional connectivity analyses with fMRI data collected in the resting state, one volume before and two volumes after any volume with an FD > 0.2 mm should be removed. This is not a widely accepted procedure, especially when a limited amount of resting state data is available, because motion censoring comes at the cost of a shorter time series, reduced degrees of freedom, and unequal numbers of volumes across subjects (Muschelli et al., 2014). Moreover, it has been shown to confer no additional benefit when CompCor is applied, which is a procedure we implemented in our analyses (Muschelli et al., 2014). Nevertheless, we re-analyzed this dataset after removing 9356 out of 25740 volumes across subjects (36% of total volumes excluded after 573 volumes were flagged with FD > 0.2 mm). As expected given the reduced power of our analyses with this smaller dataset, the statistical significance of most of the analyses we performed was reduced. However, the patterns of relationships we found between brain modularity, baseline Gf, baseline performance and training gain did not change direction (Supplementary Material).

4. Discussion

Here, we demonstrate that higher baseline brain modularity predicts larger cognitive training-related gains in young adults after training with casual video games that engage working memory and reasoning processes. This modularity-gain relationship was more prominent in individuals with lower baseline performance in the tasks that showed training-related gains and remained significant after controlling for baseline cognitive ability. Critically, this relationship was not present in a control group composed of participants that played casual video games that were not significantly related to working memory and reasoning processes (Baniqued et al., 2013) as well as participants that did not undergo any cognitive training (Baniqued et al., 2014). These results are consistent with previous findings in smaller samples of TBI patients (Arnemann et al., 2015) and cognitively normal older adults (Baniqued et al., 2018; Gallen et al., 2016) and more importantly, demonstrate the predictive power of brain modularity for cognitive training outcomes in a young, high-functioning population. On a broader scale, these findings suggest that global network properties can capture unique aspects of brain function that are important in understanding individual differences in learning and neuroplasticity (Gallen & D’Esposito, 2019).

4.1. Baseline Whole-Brain Modularity and Training-Related Gains

Considering individual differences is important for determining and maximizing cognitive intervention effectiveness. Here, we show that brain modularity provides useful information about training-related gains in addition to those captured by behavioral measures. Specifically, even when controlling for baseline Gf, which also significantly predicted training-related gains, there was still a positive relationship between modularity and training-related gain in the WM-REAS group. Further, modularity was not correlated with baseline Gf, suggesting that a relationship between baseline modularity and Gf was not driving the modularity-gain prediction in the WM-REAS group.

Although controlling for baseline performance on the tests that showed training-related gains attenuated the modularity-gain relationship in the whole WM-REAS sample, this was likely due to a very high correlation between baseline performance and training-related gain on those measures. Re-evaluating this relationship in a multiple regression analysis that factors in a moderating effect of individual differences in baseline performance showed that the modularity-gain relationship was driven by low performers. It is important to note, however, that we observed a ceiling effect in high-performing individuals (i.e., high performers tended to not improve as much with training, although maintaining a high level of performance), which may have prevented us from detecting any potential relationships between brain modularity and cognitive improvement in this group. Baseline modularity and baseline performance were not significantly correlated, however, which indicates that baseline modularity only partly captures individual differences in baseline performance.

Although the WM-REAS group did not show gains in working memory and reasoning tests, which may be partly due to ceiling effects or lack of sensitivity in the assessments used, gains were observed in “divided attention” tasks where attention needed to be quickly deployed or coordinated between multiple elements. Six out of the eight training games played by the WM-REAS group involved speeded tasks (Silversphere, Sushi-Go-Round, DigiSwitch, TwoThree, Gude Balls), which demanded both planning ahead and paying attention to multiple stimuli on the screen. These additional demands in the WM-REAS groups are likely to have led to the improvements in “divided attention” tasks. Taken together with previous studies (Arnemann et al., 2015; Gallen et al., 2016; Baniqued et al., 2018), these results point to modularity as an index of neuroplasticity. Specifically, a more modular brain may be more “primed” to benefit from an intervention, such that it can more easily learn and respond to the demands of training. Moreover, although the specific outcome metrics varied widely in the previous studies, the metrics that showed a relationship with modularity can all be argued to tap “cognitive control” processes.

Together with findings in TBI patients (Arnemann et al., 2015) and healthy older adults (Baniqued et al., 2018; Gallen et al., 2016), these results suggest that brain modularity may be a useful biomarker for predicting training outcomes, especially in lower-performing individuals. Positive correlations between modularity and training gain were observed across all four studies, despite differences in study design and cohorts. Importantly, in the TBI patients, modularity predicted training gain when baseline behavioral measures did not. Likewise, in the older adult studies, modularity predicted training gain even after taking into account baseline cognitive performance. Modularity may thus be a useful metric in populations where behavioral measures may not reliably distinguish between individuals, be difficult to collect, or faced with confounds, such as in clinical populations that present with cognitive deficits (Gabrieli et al., 2015). In this way, brain measures can be more sensitive than behavioral measures in capturing individual variability in training responsiveness and may be used to better inform interventions, for example, by increasing training intensity or duration in an individual with lower baseline modularity.

Modular brain network organization is thought to be critical for supporting a range of behaviors (Sporns & Betzel, 2016), from specialized functions through local processing within modules, to complex functions through global processing across modules (Meunier et al., 2010; Meunier, Achard, Morcom, & Bullmore, 2009). Brain network modularity is related to both state- and trait-like aspects of cognition, such as stimulus detection on a trial by trial basis (Sadaghiani, Poline, Kleinschmidt, & D’Esposito, 2015) and working memory capacity (Stevens et al., 2012). In addition, computational work has demonstrated that modular organization allows for a system that is more adaptable to new environments (Clune, Mouret, & Lipson, 2013; Kashtan & Alon, 2005; Tosh & McNally, 2015). A recent fMRI study found that high-performing individuals showed smaller connectivity changes between a “resting state” and task performance, suggesting that these individuals have a more “optimal” brain network organization at rest (Schultz & Cole, 2016). In this sense, our findings suggest that individuals with higher brain modularity during a task-free state may have a more optimally organized network that allows them to more efficiently reconfigure in response to complex task demands, such as those encountered during learning or cognitive training.

More broadly, individual differences in other aspects of functional connectivity have been shown to be predictive of learning an artificial language (Sheppard, Wang, & Wong, 2012), a new motor skill (Bassett et al., 2011; 2013; Mattar et al., 2016) and a perceptual task (Baldassarre et al., 2012), underscoring the value of network analysis in providing a parsimonious characterization of brain interactions (Medaglia et al., 2015). Unlike these studies, the intervention in the current study involved more complex training that targeted processes such as working memory and reasoning, rather than a specific skill, and showed training-related gains on untrained tasks. Although other studies have examined how individual differences in neural measures predict training-related improvements in more complex tasks, these studies have often focused on a single brain region or small set of brain regions thought to be most relevant to the training task (Baldassarre et al., 2012; Basak et al., 2011; Erickson et al., 2010; Garner & Dux, 2015; Verghese et al., 2016). For example, volumetric measurements of the striatum and prefrontal cortex have been related to training gains in complex strategy-based video game (Basak et al., 2011; Erickson et al., 2010) and multi-tasking performance (Verghese et al., 2016), but the contribution of connectivity patterns among these regions remains to be examined. Our study goes a step further in examining how large scale, whole-brain network properties can predict training gains in untrained tasks, which may better capture individual variations that support complex task processing. Although there is some controversy about whether cognitive training is truly effective for enhancing performance on distantly-related tasks, or general cognition (Melby-Lervag et al., 2016; Simons et al., 2016; Katz et al., 2018; Green et al., 2019), there is value in examining the mechanisms that lead to improvements in behavioral performance. Furthermore, training itself requires a considerable investment of time, if not money, such that assessing the mechanisms underlying efficacy is worthwhile in terms of saving resources and guiding therapeutic use.

Our findings suggest that there is a relationship between brain modularity and training gains despite differences in the type of training. Specifically, the current study involved only laboratory-based video game exercises, while the TBI (Arnemann et al., 2015) and older adult cohorts (Gallen et al., 2016) underwent group-based sessions on attention and self-regulation, and gist-reasoning, respectively. We have also recently found the same relationship between modularity and training gains in an older adult population after an exercise intervention (Baniqued et al., 2018). Given these convergent findings, we speculate that network modularity may provide insight into the neural mechanisms that support individual differences in neuroplasticity and, consequently, training outcomes across interventions and populations (Gallen & D’Esposito, 2019). Importantly, these results reveal a potential mechanism for neuroplasticity and learning. Higher brain network modularity has also been related to faster learning rates (Mattar et al., 2018; Iordan et al., 2018), suggesting that this brain network “trait” indexes capacity for change. Indeed, computational work has shown that a more modular structure allows for a system that is more adaptable to new environments (Kashtan and Alon, 2005; Clune et al., 2013; Tosh and McNally, 2015). Future research is needed to determine the significance of using such network-based measures to inform the implementation of interventions, such as determining training dosage or the ‘optimal’ type of intervention prior to training.

4.2. Baseline Association Network Properties and Training-Related Gains

In addition to examining whole-brain modularity, we also examined specific subnetworks’ contribution to predicting training gains and found that individuals with greater modularity in the association networks, and specifically in the DMN, showed greater training gains. During task-free “resting” states, high connectivity within DMN regions is well-documented (Fox et al., 2005; Greicius, Krasnow, Reiss, & Menon, 2003; Raichle & Snyder, 2007). Despite being linked to resting states, the modular organization of DMN regions has also been found to play active roles during task performance. For example, previous studies examining the reconfiguration of brain network properties due to cognitive demands have reported decreased within-module (Liang, Zou, He, & Yang, 2016) and increased between-module (Stanley et al., 2014) connectivity in DMN regions with increasing cognitive demand. The functional significance of the DMN is an area of active research (Raichle, 2015); recent studies show that DMN regions flexibly couple with other brain systems depending on task demands (Dixon, Andrews-Hanna, Spreng, Irving, & Christoff, 2016; Spreng, Sepulcre, Turner, Stevens, & Schacter, 2012; Vatansever et al., 2015), thus pointing to a central role of the DMN in supporting large-scale adaptive reconfiguration.

4.3. Limitations and Future Directions

Although this study involved a fairly large sample, a larger sample size would allow for examination of a variety of demographic and lifestyle factors (e.g., education, socioeconomic status, physical health; Smith et al., 2015) that, in addition to brain modularity, could provide more reliable and converging information regarding individual differences in training-related cognitive gains and, potentially, neuroplasticity. Here, we focused on training outcomes assessed immediately after completion of training; it would be important to determine if baseline network properties are also predictive of longer-lasting benefits from training. Finally, future research is needed to determine the neural changes that accompany the observed behavioral outcomes and the mechanisms by which pre-intervention brain modularity supports these neural alterations.

Supplementary Material

Highlights.

Higher baseline modularity predicted larger training-related cognitive gains in young adults

Individual differences in baseline performance moderated the brain modularity vs. gain relationship

Brain network properties can be used as biomarkers of cognitive plasticity

Acknowledgements

The authors thank Joshua Cosman, Hyunkyu Lee, Michelle Voss and Joan Severson for their contributions to the design of the training study, Grace Victoria Clark for assistance in data processing, and members of the Kramer Lifelong Brain and Cognition Lab and the Beckman Institute Biomedical Imaging Center for assistance in data collection. The authors declare no competing financial interests.

Funding: This work was supported by the Beckman Institute for Advanced Science and Technology (Graduate Fellowship to PLB); the National Science Foundation (IGERT Grant 0903622 to PLB and SBE Postdoctoral Research Fellowship Grant 1808384 to CLG); the Department of Defense (NDSEG to CLG); the Office of Naval Research (Grant N000140710903 to AFK); and the National Institutes of Health (Grant NS079698 to MD).

References

- Alexander-Bloch AF, Gogtay N, Meunier D, Birn R, Clasen L, Lalonde F, et al. (2010). Disrupted Modularity and Local Connectivity of Brain Functional Networks in Childhood-Onset Schizophrenia. Frontiers in Systems Neuroscience, 4 10.3389/fnsys.2010.00147 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alexander-Bloch A, Lambiotte R, Roberts B, Giedd J, Gogtay N, & Bullmore E (2012). The discovery of population differences in network community structure: New methods and applications to brain functional networks in schizophrenia. Neuroimage, 59(4), 3889–3900. 10.1016/j.neuroimage.2011.11.035

- Arnemann KL, Chen AJW, Novakovic-Agopian T, Gratton C, Nomura EM, & D’Esposito M (2015). Functional brain network modularity predicts response to cognitive training after brain injury. Neurology, 84(15), 1568–1574. 10.1212/WNL.0000000000001476

- Au J, Sheehan E, Tsai N, Duncan GJ, Buschkuehl M, & Jaeggi SM (2015). Improving fluid intelligence with training on working memory: a meta-analysis. Psychonomic Bulletin & Review, 22(2), 366–377. 10.3758/s13423-014-0699-x

- Avants BB, Tustison NJ, Song G, Cook PA, Klein A, & Gee JC (2011). A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage, 54(3), 2033–2044. 10.1016/j.neuroimage.2010.09.025

- Avants BB, Yushkevich P, Pluta J, Minkoff D, Korczykowski M, Detre J, & Gee JC (2010). The optimal template effect in hippocampus studies of diseased populations. Neuroimage, 49(3), 2457–2466. 10.1016/j.neuroimage.2009.09.062

- Baldassarre A, Lewis CM, Committeri G, Snyder AZ, Romani GL, & Corbetta M (2012). Individual variability in functional connectivity predicts performance of a perceptual task. Proceedings of the National Academy of Sciences of the United States of America, 109(9), 3516–3521. 10.1073/pnas.1113148109

- Baniqued PL, Allen CM, Kranz MB, Johnson K, Sipolins A, Dickens C, et al. (2015). Working Memory, Reasoning, and Task Switching Training: Transfer Effects, Limitations, and Great Expectations? PloS One, 10(11), e0142169. 10.1371/journal.pone.0142169

- Baniqued PL, Gallen CL, Voss MW, Burzynska AZ, Wong CN, Cooke GE, et al. (2018). Brain Network Modularity Predicts Exercise-Related Executive Function Gains in Older Adults. Frontiers in Aging Neuroscience, 9, 924–17. 10.3389/fnagi.2017.00426

- Baniqued PL, Kranz MB, Voss MW, Lee H, Cosman JD, Severson J, & Kramer AF (2014). Cognitive training with casual video games: points to consider. Frontiers in Psychology, 4, 1010. 10.3389/fpsyg.2013.01010

- Baniqued PL, Lee H, Voss MW, Basak C, Cosman JD, Desouza S, et al. (2013). Selling points: What cognitive abilities are tapped by casual video games? Acta Psychologica, 142(1), 74–86. 10.1016/j.actpsy.2012.11.009

- Basak C, Voss MW, Erickson KI, Boot WR, & Kramer AF (2011). Regional differences in brain volume predict the acquisition of skill in a complex real-time strategy videogame. Brain and Cognition, 76(3), 407–414. 10.1016/j.bandc.2011.03.017

- Bassett DS, Wymbs NF, Porter MA, Mucha PJ, Carlson JM, & Grafton ST (2011). Dynamic reconfiguration of human brain networks during learning. Proceedings of the National Academy of Sciences of the United States of America, 108(18), 7641–7646. 10.1073/pnas.1018985108

- Bassett DS, Wymbs NF, Rombach MP, Porter MA, Mucha PJ, & Grafton ST (2013). Task-Based Core-Periphery Organization of Human Brain Dynamics. PLoS Computational Biology, 9(9), e1003171. 10.1371/journal.pcbi.1003171

- Behzadi Y, Restom K, Liau J, & Liu TT (2007). A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. Neuroimage, 37(1), 90–101. 10.1016/j.neuroimage.2007.04.042

- Bennett GK, Seashore HG, & Wesman AG (1997). Differential aptitude test. San Antonio, TX: Psychological Corporation.

- Bertolero MA, Yeo BTT, & D’Esposito M (2015). The modular and integrative functional architecture of the human brain. Proceedings of the National Academy of Sciences of the United States of America, 112(49), E6798–807. 10.1073/pnas.1510619112

- Betzel RF, Byrge L, He Y, Goñi J, Zuo X-N, & Sporns O (2014). Changes in structural and functional connectivity among resting-state networks across the human lifespan. Neuroimage, 102, 345–357. 10.1016/j.neuroimage.2014.07.067

- Boot WR, & Kramer AF (2014). The brain-games conundrum: does cognitive training really sharpen the mind? (Vol. 2014). Presented at the Cerebrum: the Dana forum on brain science, Dana Foundation.

- Boot WR, Kramer AF, Simons DJ, Fabiani M, & Gratton G (2008). The effects of video game playing on attention, memory, and executive control. Acta Psychologica, 129(3), 387–398.

- Bullmore E, & Sporns O (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nature Reviews Neuroscience, 10(3), 186–198. 10.1038/nrn2575

- Chan MY, Park DC, Savalia NK, Petersen SE, & Wig GS (2014). Decreased segregation of brain systems across the healthy adult lifespan. Proceedings of the National Academy of Sciences of the United States of America, 111(46), E4997–E5006. 10.1073/pnas.1415122111

- Chen ZJ, He Y, Rosa-Neto P, Germann J, & Evans AC (2008). Revealing modular architecture of human brain structural networks by using cortical thickness from MRI. Cerebral Cortex (New York, N.Y.: 1991), 18(10), 2374–2381. 10.1093/cercor/bhn003

- Clune J, Mouret J-B, & Lipson H (2013). The evolutionary origins of modularity. Proc R Soc B, 280(1755), 20122863–20122863. 10.1098/rspb.2012.2863

- Cohen JR, & D'Esposito M (2016). The segregation and integration of distinct brain networks and their relationship to cognition. Journal of Neuroscience, 36(48), 12083–12094. 10.1523/JNEUROSCI.2965-15.2016

- Cole MW, Reynolds JR, Power JD, Repovs G, Anticevic A, & Braver TS (2013). Multi-task connectivity reveals flexible hubs for adaptive task control. Nature Neuroscience, 16(9), 1348–1355.

- Crone EA, Wendelken C, Van Leijenhorst L, Honomichl RD, Christoff K, & Bunge SA (2009). Neurocognitive development of relational reasoning. Developmental Science, 12(1), 55–66.

- Dixon ML, Andrews-Hanna JR, Spreng RN, Irving ZC, & Christoff K (2016). Anticorrelation between default and dorsal attention networks varies across default subsystems and cognitive states. bioRxiv, 056424. 10.1101/056424

- Doucet GE, Moser DA, Luber MJ, Leibu E, & Frangou S (2018). Baseline brain structural and functional predictors of clinical outcome in the early course of schizophrenia. Molecular psychiatry, 1, 10.1038/s41380-018-0269-0

- Dougherty MR, Hamovitz T, & Tidwell JW (2016). Reevaluating the effectiveness of n-back training on transfer through the Bayesian lens: Support for the null. Psychonomic Bulletin & Review, 23(1), 306–316. 10.3758/s13423-015-0865-9

- Ekstrom RB, French JW, Harman HH, & Dermen D (1976). Manual for kit of factor-referenced cognitive tests. Princeton, NJ: Educational Testing Service.

- Erickson KI, Boot WR, Basak C, Neider MB, Prakash RS, Voss MW, et al. (2010). Striatal Volume Predicts Level of Video Game Skill Acquisition. Cerebral Cortex (New York, N.Y.: 1991), 20(11), bhp293–2530. 10.1093/cercor/bhp293

- Fan J, McCandliss BD, Sommer T, Raz A, & Posner MI (2002). Testing the efficiency and independence of attentional networks. Journal of Cognitive Neuroscience, 14(3), 340–347.

- Fonov VS, Evans AC, McKinstry RC, Almli CR, & Collins DL (2009). Unbiased nonlinear average age-appropriate brain templates from birth to adulthood. Neuroimage, 47(Supplement 1), S102. 10.1016/S1053-8119(09)70884-5

- Fornito A, Zalesky A, & Breakspear M (2015). The connectomics of brain disorders. Nature Reviews Neuroscience, 16(3), 159–172. 10.1038/nrn3901

- Fortunato S (2010). Community detection in graphs. Physics Reports, 486(3–5), 75–174. 10.1016/j.physrep.2009.11.002

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, & Raichle ME (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proceedings of the National Academy of Sciences of the United States of America, 102(27), 9673–9678.

- Friston KJ, Williams S, Howard R, Frackowiak RSJ, & Turner R (1996). Movement Related effects in fMRI time series. Magnetic Resonance in Medicine, 35(3), 346–355. 10.1002/mrm.1910350312

- Gabrieli JDE, Ghosh SS, & Whitfield-Gabrieli S (2015). Prediction as a Humanitarian and Pragmatic Contribution from Human Cognitive Neuroscience. Neuron, 85(1), 11–26. 10.1016/j.neuron.2014.10.047

- Gallen CL, Baniqued PL, Chapman SB, Aslan S, Keebler M, Didehbani N, & D’Esposito M (2016). Modular Brain Network Organization Predicts Response to Cognitive Training in Older Adults. PloS One, 11(12), e0169015. 10.1371/journal.pone.0169015

- Gallen CL, & D’Esposito M (2019). Brain Modularity: A Biomarker of Intervention-related Plasticity. Trends in Cognitive Sciences, 23(4), 293–304. 10.1016/j.tics.2019.01.014

- Garner KG, & Dux PE (2015). Training conquers multitasking costs by dividing task representations in the frontoparietal-subcortical system. Proceedings of the National Academy of Sciences of the United States of America, 112(46), 14372–14377. 10.1073/pnas.1511423112

- Giavasis S, Khanuja R, Sikka S, Shehzad Z, Clark D, Cheung B, et al. (2015). C-PAC: CPAC Version 0.3.9 Alpha. 10.5281/zenodo.16557

- Gratton C, Nomura EM, Pérez F, & D’Esposito M (2012). Focal Brain Lesions to Critical Locations Cause Widespread Disruption of the Modular Organization of the Brain. Journal of Cognitive Neuroscience, 24(6), 1275–1285. 10.1162/jocn_a_00222

- Gratton C, Laumann TO, Nielsen AN, Greene DJ, Gordon EM, Gilmore AW, et al. (2018). Functional Brain Networks Are Dominated by Stable Group and Individual Factors, Not Cognitive or Daily Variation. Neuron, 98(2), 439–452.e5. 10.1016/j.neuron.2018.03.035

- Green CS, Bavelier D, Kramer AF, Vinogradov S, Ansorge U, Ball KK, … & Facoetti A (2019). Improving methodological standards in behavioral interventions for cognitive enhancement. Journal of Cognitive Enhancement, 3(1), 2–29. 10.1007/s41465-018-0115-y

- Greicius MD, Krasnow B, Reiss AL, & Menon V (2003). Functional connectivity in the resting brain: a network analysis of the default mode hypothesis. Proceedings of the National Academy of Sciences of the United States of America, 100(1), 253–258. 10.1073/pnas.0135058100

- Hagberg AA, Schult DA, & Swart PJ (2008). Exploring network structure, dynamics, and function using NetworkX. In Varoquaux G, Vaught T, & Millman J (Eds.), Proceedings of the 7th Python in Science Conference (SciPy) (pp. 11–15). Pasadena, CA: Los Alamos National Laboratory (LANL). 10.1186/1471-2105-13-S2-S9

- Iordan AD, Cooke KA, Moored KD, Katz B, Buschkuehl M, Jaeggi SM, … & Reuter-Lorenz PA (2018). Aging and network properties: stability over time and links with learning during working memory training. Frontiers in Aging Neuroscience, 9, 419. 10.3389/fnagi.2017.00419

- Jaeggi SM, Buschkuehl M, Shah P, & Jonides J (2014). The role of individual differences in cognitive training and transfer. Memory & Cognition, 42(3), 464–480. 10.3758/s13421-013-0364-z

- Karbach J, & Verhaeghen P (2014). Making Working Memory Work: A Meta-Analysis of Executive-Control and Working Memory Training in Older Adults. Psychological Science, 25(11), 2027–2037. 10.1177/0956797614548725

- Kashtan N, & Alon U (2005). Spontaneous evolution of modularity and network motifs. Proceedings of the National Academy of Sciences of the United States of America, 102(39), 13773–13778. 10.1073/pnas.0503610102

- Katz B, Shah P, & Meyer DE (2018). How to play 20 questions with nature and lose: Reflections on 100 years of brain-training research. Proceedings of the National Academy of Sciences, 115(40), 9897–9904. 10.1073/pnas.1617102114

- Kitzbichler MG, Henson RN, Smith ML, Nathan PJ, & Bullmore ET (2011). Cognitive effort drives workspace configuration of human brain functional networks. The Journal of Neuroscience: the Official Journal of the Society for Neuroscience, 31(22), 8259–8270.

- Kranz MB, Baniqued PL, Voss MW, Lee H, & Kramer AF (2017). Examining the Roles of Reasoning and Working Memory in Predicting Casual Game Performance across Extended Gameplay. Frontiers in Psychology, 8, 288. 10.3389/fpsyg.2017.00203

- Landman BA, Huang AJ, Gifford A, Vikram DS, Lim IAL, Farrell JAD, et al. (2011). Multi-parametric neuroimaging reproducibility: A 3-T resource study. Neuroimage, 54(4), 2854–2866. 10.1016/j.neuroimage.2010.11.047