Abstract

Objective:

The aim of this study was to evaluate the reliability and validity of the updated 2019 CDC Worksite Health ScoreCard (CDC ScoreCard), which includes four new modules.

Methods:

We pilot tested the updated instrument at 93 worksites, examining question response concurrence between two representatives from each worksite. We conducted cognitive interviews and site visits to evaluate face validity, and refined the instrument for public distribution.

Results:

The mean question concurrence rate was 73.4%. Respondents reported the tool to be useful for assessing current workplace programs and planning future initiatives. On average, 43% of possible interventions included in the CDC ScoreCard were in place at the pilot sites.

Conclusion:

The updated CDC ScoreCard is a valid and reliable tool for assessing worksite health promotion policies, educational and lifestyle counseling programs, environmental supports, and health benefits.

Keywords: health promotion, instrument validity and reliability, organizational health assessment, workplace

Americans spend more than half of their waking hours at work during a typical week.1 This presents employers with a unique opportunity to reach a large segment of the population that otherwise may not be exposed to, or engaged in, organized health improvement efforts. Over 30 years of research suggests that comprehensive, well-designed, well-executed, and properly evaluated workplace health promotion (WHP) programs can lead to reductions in healthcare utilization and costs2,3; enhance work performance4; improve employee health and well-being factors5 such as job stress,6 sleep,7 physical activity and nutrition8; impact value-on-investment metrics such as job satisfaction and quality of work9; and, in some cases, may offer positive business results such as bolstering stock market performance10–12 and a positive return-on-investment (ROI).13–16

Whereas 82% of United States (U.S.) employers report having WHP programs,17 only 12% to 13% have comprehensive offerings in place.18,19 Broadly, a comprehensive WHP program consists of health education, links to related employee services, a supportive physical and social environment for health improvement, integration of health promotion into the organization’s culture, and employee screenings with adequate follow-up.20 However, employers may not have all the tools, resources, and knowledge needed to identify best practices to implement comprehensive WHP programs.

Recognizing this need, many organizational assessments21 have been developed to measure and score the degree to which employers have best practices in place to support and improve employee health and well-being, including one by the Centers for Disease Control and Prevention (CDC). In partnership with the Institute for Health and Productivity Studies (IHPS; formerly located at the Emory University Rollins School of Public Health and now affiliated with the Johns Hopkins Bloomberg School of Public Health), the CDC developed the CDC Worksite Health ScoreCard (CDC ScoreCard), an assessment tool designed to plan and evaluate worksite health promotion policies, educational and lifestyle counseling programs, environmental supports, and health benefits. The self-assessment instrument provides guidance on evidence-based strategies that employers can put in place to promote a healthy workforce and increase productivity. It was designed to facilitate three primary goals:

Assist employers in identifying gaps in their health promotion programs and help them prioritize high-impact strategies for health promotion at their worksites;

Increase understanding of organizational policies, programs, and practices that employers of various sizes and industry sectors can implement to support healthy lifestyle behaviors and monitor changes over time; and

Reduce the risk of chronic disease among employees and their families by instituting science-based WHP interventions and promising practices.

The first CDC ScoreCard was published in 2012 and consisted of 12 modules with 100 questions. Each question represented an action, strategy, or intervention that an employer could take to improve employee health and well-being. The modules addressed the following topics: (1) Organizational Supports, (2) Tobacco Control, (3) Nutrition, (4) Physical Activity, (5) Weight Management, (6) Stress Management, (7) Depression, (8) High Blood Pressure, (9) High Cholesterol, (10) Diabetes, (11) Signs and Symptoms of Heart Attack and Stroke, and (12) Emergency Response to Heart Attack and Stroke. The instrument underwent extensive psychometric testing for content validity, face validity, and inter-rater reliability.22

The initial purpose of the CDC ScoreCard was to help employers assess whether they had implemented evidence-based health promotion interventions or strategies at their worksites to prevent heart disease, stroke, and related conditions. Since its release in 2012, the CDC ScoreCard has evolved to address a wider range of behavioral health risks and conditions. In 2014, CDC added four new modules: (1) Lactation Support (six questions); (2) Occupational Health and Safety (10 questions); (3) Vaccine-Preventable Diseases (six questions); and (4) Community Resources (three questions; not scored), for a total of 16 modules. To ensure that the CDC ScoreCard remains current, evidence-based, and valid, IHPS again partnered with the CDC, along with IBM Watson Health, to develop a revised and updated tool, inclusive of new content. The aim of this paper is to report the results of validity and reliability testing of the 2019 CDC ScoreCard.

METHODS

Overview

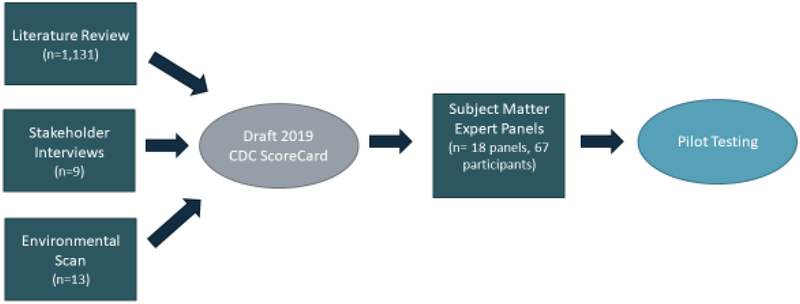

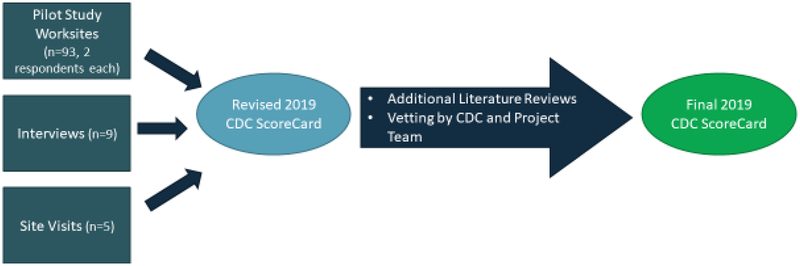

The review and update of the CDC ScoreCard was conducted in two phases. The first phase entailed a comprehensive literature review of previously cited and newly published research studies, an environmental scan of other existing instruments, interviews with stakeholders, and panel discussions with subject matter experts (SMEs). These steps led to the preparation of a revised CDC ScoreCard for use in the second phase of the project: validity and reliability testing. The second phase consisted of piloting the revised CDC ScoreCard to test inter-rater reliability and conducting follow-up interviews and site visits with a subset of the employer participants to examine face validity. Figures 1 and 2 depict the process flow for the first and second project phases, respectively. The methods for the project steps are described in detail below.

Figure 1.

Process flow for phase 1: survey development

Figure 2.

Process flow for phase 2: validity and reliability testing

Environmental Scan

We conducted an environmental scan of benchmarking resources and other existing instruments that assess WHP programs and define best or promising practices in the field. Specifically, we reviewed the current versions of these tools (listed in Supplementary Appendix 2, http://links.lww.com/JOM/A634) and examined the evidence base for the topics they covered, with an eye toward identifying any potentially important gaps in the CDC ScoreCard. Table 1 summarizes the content and format areas not covered by the CDC ScoreCard that were potential topics and approaches for inclusion in the 2019 version, pending the findings from the stakeholder interviews and literature review.

Table 1.

Environmental Scan Summary of Potential Gaps for Inclusion*

| Topic Areas/Specific Questions for Consideration | Formatting Approaches for Consideration |
|---|---|

Through an environmental scan that evaluated other existing tools and benchmark resources, we identified these question topics and formatting approaches for potential inclusion in the 2019 update of the CDC ScoreCard.

Stakeholder Interviews

As part of formative research for updating the CDC ScoreCard, we conducted a series of hour-long focus groups with key stakeholders. The nine stakeholders who participated in the focus groups were carefully selected to include representatives from the following constituencies: employers, workplace wellness vendors, professional groups, academic researchers, state/local agencies, and health plans (these stakeholders are acknowledged in Supplementary Appendix 1, http://links.lww.com/JOM/A633). The team gathered feedback from these stakeholders on items they liked or disliked in the 2014 version of the CDC ScoreCard, how the items aligned with the needs and efforts of their organizations, and any additions or revisions they would recommend in content or format. The suggested topics for potential inclusion are summarized in Table 2.

Table 2.

Stakeholders’ Suggested Topics for Inclusion on the Updated CDC ScoreCard*


Suggestions were collected during a series of 1-hour interviews with employers, workplace wellness vendors, professional groups, academic researchers, state/local agencies, and health plan representatives regarding needs and interests related to the content and design of an updated scorecard.

Literature Review

We first completed an extensive literature review of all questions on the 2014 version of the CDC ScoreCard to examine whether they were still relevant in defining a comprehensive program. We then examined all previously cited literature that led to the inclusion of items in the 2014 CDC ScoreCard to determine whether the questions were still applicable for inclusion in the updated instrument and searched for more current or stronger studies to include as citations in the revised tool.

Our literature review focused on published peer-reviewed journal articles from 2005 to 2016 on the subject of each CDC ScoreCard item. Inclusion and exclusion criteria for the literature review can be found in Supplementary Appendix 3, http://links.lww.com/JOM/A635. Studies that did not meet these criteria were included or excluded on a case-by-case basis. The key questions guiding each review were (1) Are there specific policies, programs, environmental supports, and/or benefits that employers can put in place to positively affect a specific health and well-being outcome? (2) What is the level of impact associated with these interventions? and (3) What is the strength of the evidence?

We synthesized our findings and developed recommendations for CDC ScoreCard updates. Specifically, this meant determining for existing modules and questions whether (1) new evidence was found; (2) any of the existing evidence was no longer applicable (because, eg, the evidence had shifted away from the study’s conclusion, or because larger, more recent, or more robust studies were available); (3) the item was a candidate for rewording; (4) the item was a candidate for rescoring (using the scoring methodology previously used in the tool’s development; information about the scoring methodology, the evidence and impact ratings, and the points assigned to each survey item is available in Appendix D of the CDC Worksite Health ScoreCard Manual at: https://www.cdc.gov/workplacehealthpromotion/initiatives/healthscorecard/pdf/CDC-Worksite-Health-ScoreCard-Manual-Updated-Jan-2019-FINAL-rev-508.pdf); (5) the item was a candidate for elimination (because, eg, the question was reasonably similar to another item or was no longer supported by substantial evidence); or (6) the item should be kept unaltered.

Finally, the literature review process helped identify potential new questions and modules to be included in the updated version. Our goal was to select two to four new topic modules with high potential to improve the health and well-being of employees. The literature review, together with the feedback from the stakeholders and gaps identified in the environmental scan, led to the identification of four new modules with high relevance and strong research support. Below, we summarize the salience of the four new topics selected for inclusion in the updated CDC ScoreCard.

Sleep and Fatigue. One-third of U.S. adults report that they usually get less than the recommended 7 to 8 hours of sleep per night.23 Sleep deprivation is linked to many chronic diseases and conditions, including diabetes, heart disease, obesity, and depression.24 Not getting enough sleep can also lead to motor vehicle crashes and mistakes at work, which may result in injury or disability.25,26 Fatigue and sleepiness at work are estimated to cost employers $136 billion a year in health-related productivity loss (eg, absenteeism, poor performance while at work),27 or about $1967 per employee annually.28 As such, the public health community regards sleep as a foundational pillar of good health that should be addressed in any comprehensive WHP program.

Alcohol and Other Substance Use. Excessive alcohol consumption is the third leading cause of preventable death in the U.S.29 and is associated with many negative health outcomes, including cardiovascular disease, stroke, cancer, sexually transmitted diseases, depression, and accidental injury.30 In addition, excessive alcohol consumption costs the U.S. approximately $173 billion in lost wages due to reduced work productivity.31 With the growing recognition that most illicit drug users and heavy drinkers are members of the workforce,32 the worksite can be an important venue for the delivery of alcohol and substance abuse prevention services in the form of lifestyle campaigns, education programs, and insurance coverage that includes screening, counseling, and various treatment options.33,34 These actions, interventions, and services are urgently needed to mitigate the negative societal and work-related outcomes described above, especially given the opioid epidemic that is sweeping the nation and costing the U.S. an estimated $504 billion annually.35

Musculoskeletal Disorders (MSDs). Awkward posture or repetitive tasks can damage muscles, nerves, tendons, joints, cartilage, and spinal discs and can contribute to the development of MSDs.36 MSDs represent the biggest single cause of lost workdays and contribute to presenteeism, early retirement, and economic inactivity. For the period 2004 to 2006, MSDs were estimated to cost the U.S. $567 billion in direct (medical) costs and $373 billion in indirect (lost wages) costs.37 There is strong evidence suggesting that highly prevalent and costly MSDs, such as back pain, arthritis, and carpal tunnel syndrome, can be mitigated through worksite policy and workstation design.38

Cancer. Cancer is the second most common cause of death in the U.S., accounting for nearly one-quarter of all deaths annually.39 American men have about a one in two lifetime risk of developing cancer; for women, the risk is approximately one in three.40 Working adults with cancer experience functional limitations, including increased absenteeism. One study found that people with cancer are absent from work an average of 37.3 days/year, compared with 3 days/year among those who do not have any chronic diseases.41 Previous editions of the CDC ScoreCard addressed some cancer risk reduction interventions (eg, in the Tobacco Use and Weight Management modules); however, it was determined that a separate Cancer module should be considered for the updated tool to assess the use of educational materials/programming and insurance coverage to promote evidence-based cancer screenings, as well as access to vaccinations and environmental supports that may reduce the incidence of cancer and, in turn, the economic burden it presents to individuals and employers.

In all, our team examined 1131 studies across the 18 health module-specific literature reviews. Of these, 530 studies are included as citations in the updated version of the tool.

Subject Matter Expert Panel Meetings

Finally, we convened a series of 18 meetings with SMEs in specific topic areas addressed by the tool (SMEs are listed in Supplementary Appendix 1, http://links.lww.com/JOM/A633). Before each meeting, SMEs were provided the revised CDC ScoreCard and a summary of the evidence base for each question in their domain area of expertise. They were asked to review and rate the strength of the evidence for each question in advance of the meeting, using the rating system previously developed for the CDC ScoreCard.5 The SMEs completed their ratings independently, and then the three to six SMEs for each module met with facilitators from the research team to discuss the relevance, wording, evidence, and weighting of each question. A Delphi-like process was used, with iterative ratings and rankings, until group consensus was reached on each question. The CDC ScoreCard was then updated to reflect the consensus feedback of the SME teams and submitted for approval by the Office of Management and Budget (OMB), as required by the Paperwork Reduction Act of 1995. Once approved, the tool was uploaded to an online platform for pilot testing.

Recruitment

We recruited a convenience sample of 145 worksites, representing 114 employers, to participate in this study. As our goal was to have a heterogeneous sample representing a range of employer and worksite sizes, business and industry types, and U.S. geographies, we collaborated with national business coalitions, employer groups, and state health departments to market the opportunity to participate in the study. These organizations assisted us by circulating study information to local employers, business leaders, and other organizations within the communities they serve through announcements at events and conferences and by providing the recruitment package materials to interested parties via email. The recruitment package included a description of the study that could be used in email or newsletter announcements, a two-page overview of the study, “frequently asked questions” that provided key information about the opportunity to participate, and an electronic application form. The recruitment materials encouraged human resources managers, health insurance benefits managers, wellness program managers, and others involved in WHP to engage in the study. To incentivize participation, the study offered employers a benchmarking report that would provide their score (overall and by module) relative to all other participants and in comparison to similarly sized organizations. Interested employers self-selected into the study by submitting a completed application to participate.

The study defined the unit of analysis as a worksite—a single building or campus composed of a clustered set of buildings within walking distance—with ten or more employees. We permitted multiple worksites (eg, two or more geographically distinct locations) from a single employer to participate in the study; in these cases, the study team contacted and confirmed the uniqueness of each worksite. Having an active WHP program was not a requirement for participation.

Data Collection

Online Survey

To test inter-rater reliability, enrolled worksites were asked to have two knowledgeable employees (eg, worksite wellness program managers, human resources specialists, or health benefits managers) independently complete the pilot CDC ScoreCard survey. The survey was made available to participants online via the Qualtrics survey platform (https://www.qualtrics.com/), which enabled individuals to save their responses to the survey and complete the tool in multiple sittings.

The two respondents were asked not to consult with one another. However, they were encouraged to consult with any other person within the organization who might provide relevant information on questions in areas with which they were unfamiliar, as would occur in a real-world setting when an employer completes the publicly available survey.

The pilot CDC ScoreCard was a 155-item survey instrument consisting of 18 health topic-specific modules. All questions were answered in a yes/no format. Each item carried a weighted point value (1 = good, 2 = better, 3 = best) reflecting the potential impact that the intervention or strategy has on the intended health behavior(s) or outcome(s), as well as the strength of the scientific evidence supporting that impact (information about the scoring methodology, the evidence and impact ratings, and the points assigned to each survey item is available at: https://www.cdc.gov/workplacehealthpromotion/initiatives/healthscorecard/pdf/CDC-Worksite-Health-ScoreCard-Manual-Updated-Jan-2019-FINAL-rev-508.pdf). The impact and evidence ratings were not visible to pilot participants while they completed the instrument (so as not to bias responses). Overall and module-specific scores were computed by summing the weighted values of all items receiving a “yes” response (possible total score range, 0 to 296); “no” responses and skipped questions were assigned zero points.
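The scoring rule described above can be expressed compactly. The following is a minimal sketch, assuming each item is stored with its module and weight; the item IDs, module assignments, and weights in `ITEMS` are hypothetical placeholders rather than the published values, and the function name `score` is likewise illustrative.

```python
from collections import defaultdict
from typing import Mapping, Optional

# Hypothetical items: ID -> (module, weight). Illustrative only; the actual
# items and weights are listed in the CDC ScoreCard Manual.
ITEMS: dict[str, tuple[str, int]] = {
    "OS1": ("Organizational supports", 3),  # 3 = best
    "OS2": ("Organizational supports", 2),  # 2 = better
    "PA1": ("Physical activity", 1),        # 1 = good
    "PA2": ("Physical activity", 3),
}


def score(responses: Mapping[str, Optional[bool]]) -> tuple[int, dict[str, int]]:
    """Return (overall score, per-module scores).

    Only items answered yes (True) earn their weight; no (False) and
    skipped (None or absent) items earn 0 points.
    """
    by_module: dict[str, int] = defaultdict(int)
    for item, (module, weight) in ITEMS.items():
        earned = weight if responses.get(item) is True else 0
        by_module[module] += earned
    return sum(by_module.values()), dict(by_module)


overall, modules = score({"OS1": True, "OS2": None, "PA1": False, "PA2": True})
print(overall)  # 6
print(modules)  # {'Organizational supports': 3, 'Physical activity': 3}
```

Applied to the actual 155 items with every response “yes,” the same sum would equal the maximum possible score of 296.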

Participants were instructed to answer each question regarding the presence of a given policy, program, environmental support, or health benefit currently in place at their worksite or in place within the past 12 months (eg, “During the past 12 months, did your worksite provide an exercise facility on site?”). We also captured information about the worksite’s demographics (eg, business type, number of employees, industry), community engagement efforts to support their WHP programming (eg, leveraging information from federal, state, or local public health agencies; participation in partnerships or business coalitions aimed at promoting population health and well-being), and survey respondents (eg, job role). In cases where key demographic features of the worksite differed between respondents at a given worksite (eg, the number of employees at the location or the primary industry was discordant), a team member followed up by phone and/or email to ensure the accuracy of the data.

For our primary analysis of inter-rater reliability, we examined the level of concordance (ie, index of percent agreement) between the two survey responses from each worksite. Organizations with fewer than two fully completed surveys were excluded from the analysis. The final study sample consisted of 93 organizations (186 survey responses).

Cognitive Interviews

Cognitive interviews were conducted with a subset of representatives from worksites who participated in the study to test the face validity of the tool (ie, if the instrument “looks like” it will measure what it is supposed to measure), identify and explain any issues with wording or content for questions with low reliability (ie, low levels of agreement between respondents), and determine specific ways to refine the CDC ScoreCard. Of the 93 employers who completed the online survey, we selected a random sample of nine employers and invited them to participate in 1-hour, telephone-based cognitive interviews.

We interviewed both survey respondents from each organization simultaneously, using a written interview protocol to ensure a consistent approach between interviewers and across interviews. A detailed report of each respondent’s answers to the survey was provided in advance of the interview, and respondents were asked to discuss discrepancies between their responses in advance of the scheduled call. Rather than reviewing each individual question of the survey, interviewers probed respondents by health topic, with a special emphasis placed on questions where there was a discrepancy between the two survey responses. In all cases, interviews first examined differences in responses to questions within the proposed new modules (ie, Cancer, Alcohol and Other Substance Use, Sleep and Fatigue, and MSDs), followed by differences in the responses to questions in modules that were previously validated.22

Interview probes were structured to assess the respondents’ comprehension of the question, how information was retrieved, and how a decision regarding the answer to the question was made and submitted. For example, in the case of discrepant answers, we asked respondents to explain their understanding of the general intent of the question; the meaning of specific terms; with whom they consulted to gather relevant information; how much effort was required to answer the question (eg, in terms of time allocation to recall, retrieve or seek the information, number of people asked for help); whether they answered the question critically and objectively or were swayed by “impression management” (answering questions with a desire to project a positive image of the organization); and whether they were able to match their actual program to the strategies as described in the survey. Finally, we asked about the degree to which respondents found individual modules and the CDC ScoreCard useful as an evaluation tool (ie, whether it adequately captured the types of policies, programs, environmental supports, and health benefits that are relevant, practical, and feasible to implement as part of a WHP program).

Site Visits

A convenience sample of five employers agreed to participate in in-person interviews conducted as part of a site visit. These employers were drawn from the 84 employers that had not taken part in the telephone-based cognitive interviews; because of resource and time constraints, the sample was limited to five employers in the Washington, DC, and New York City regions. The half-day site visit included a tour of the facilities, which the study team used to observe and confirm the presence of certain environmental interventions [eg, availability of automated external defibrillators (AEDs); physical activity supports, such as gyms] and to review relevant documentation that might provide a better understanding of the organization’s WHP program policies and interventions (eg, a description of the smoking policy in the employee handbook, informational newsletters, educational materials available on a company intranet site).

Data Analysis

We cleaned, coded, and analyzed the survey data using Microsoft Excel 2016 and R statistical analysis software.42 Organizations without two complete responses were excluded from the analysis. For the analysis of inter-rater reliability, we examined the index of percent agreement, that is, the percentage of worksites at which both co-respondents gave the same answer (both yes or both no) to a question. Instances where a respondent left a question blank (missing data) were coded as nonconcurrence.
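The index of percent agreement, with blanks coded as nonconcurrence, can be sketched as follows. This is a minimal Python illustration rather than the study’s Excel/R workflow; it assumes the input has already been restricted to the worksites retained for analysis, and the answer data and the function name `percent_agreement` are hypothetical.

```python
from typing import Optional, Sequence

Answer = Optional[bool]  # True = yes, False = no, None = left blank


def percent_agreement(pairs: Sequence[tuple[Answer, Answer]]) -> float:
    """Index of percent agreement for one question across worksites.

    Each pair holds the two co-respondents' answers from one worksite.
    A pair counts as concurrent only if both answered and the answers
    match; a blank from either respondent counts as nonconcurrence.
    """
    if not pairs:
        raise ValueError("no worksite pairs supplied")
    agree = sum(1 for a, b in pairs if a is not None and b is not None and a == b)
    return 100.0 * agree / len(pairs)


# Hypothetical answers to one question from four worksites.
q_pairs = [(True, True), (False, False), (True, False), (True, None)]
print(percent_agreement(q_pairs))  # 50.0
```

Note that two blanks at the same worksite are not treated as agreement, which follows the coding rule stated above.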

We designated a “substantial” level of agreement (61% and above) as the minimum acceptable level of concurrence for each question, using the Landis and Koch Kappa benchmark scale. This scale, summarized in Handbook of Inter-Rater Reliability,43 is considered a reliable benchmark for measuring the extent of agreement among raters. Items with a concurrence rate below 61% were automatically flagged and subjected to review for revision or elimination from the tool. The interviews provided useful qualitative data, allowing us to ascertain the reasons that items did not meet the minimum concurrence threshold. The telephone-based interviews were conducted by two to three team members, so that one researcher could be dedicated to taking careful notes using a standardized data capture form. The in-person interviews were conducted by two team members, allowing one person to assume a dedicated note-taking role. At the end of data collection, notes were aggregated into a thematic summary. Summaries were instrumental in highlighting potential areas for improvement, particularly in regard to questions that required edits or elimination because of inconsistent interpretation or unclear instructions. For each low-reliability question, the team also reviewed similar items in other modules to determine whether interpretation or understanding of those questions was also problematic, even when they were not flagged based on the 61% concurrence threshold.
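The flagging rule can be illustrated by mapping each question’s concurrence rate onto the Landis and Koch bands and selecting items below 61%. This is a sketch under stated assumptions: the bands are rescaled from kappa (0 to 1) to percent (0 to 100) and applied to percent agreement, as in the study; the question IDs and the names `band` and `flag_for_review` are hypothetical; the example rates are the lowest (54.8%), mean (73.4%), and highest (97.8%) question-level values reported in the Results.

```python
# Landis and Koch benchmark bands, rescaled here from kappa (0-1) to
# percent (0-100); as in the study, they are applied to percent agreement.
BANDS: list[tuple[float, str]] = [
    (81.0, "almost perfect"),
    (61.0, "substantial"),
    (41.0, "moderate"),
    (21.0, "fair"),
    (0.0, "slight"),
]
THRESHOLD = 61.0  # minimum acceptable concurrence ("substantial" or better)


def band(pct: float) -> str:
    """Return the Landis and Koch label for a concurrence rate in percent."""
    for lower, label in BANDS:
        if pct >= lower:
            return label
    return "poor"  # only reachable for negative values (kappa < 0)


def flag_for_review(concurrence: dict[str, float]) -> list[str]:
    """Return IDs of questions whose concurrence falls below THRESHOLD."""
    return sorted(q for q, pct in concurrence.items() if pct < THRESHOLD)


# Hypothetical question IDs paired with rates reported in the Results.
rates = {"Q_low": 54.8, "Q_mean": 73.4, "Q_high": 97.8}
print(flag_for_review(rates))  # ['Q_low']
print({q: band(p) for q, p in rates.items()})
# {'Q_low': 'moderate', 'Q_mean': 'substantial', 'Q_high': 'almost perfect'}
```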

RESULTS

Sample Characteristics

The study sample included 93 worksites representing employers of varying sizes, business types, and industries, from 31 states across the U.S. Within these organizations, the 186 respondents who completed the survey were most often human resources or WHP program personnel. Table 3 provides a summary description of the final pilot study sample.

Table 3.

Summary Description of the Pilot Study Sample

| Category | N | Percent |
|---|---|---|
| Organization Size | | |
| Very small (<100) | 13 | 14.0 |
| Small (100–249) | 3 | 3.2 |
| Medium (250–749) | 17 | 18.3 |
| Large (>750) | 60 | 64.5 |
| Worksite Size | | |
| Very small (<100) | 22 | 23.7 |
| Small (100–249) | 15 | 16.1 |
| Medium (250–749) | 19 | 20.4 |
| Large (>750) | 37 | 39.8 |
| Business Type | | |
| For profit | 26 | 28.3 |
| Nonprofit/government | 43 | 46.7 |
| Nonprofit/other | 23 | 25.0 |
| Region | | |
| Midwest | 30 | 32.3 |
| Northeast | 10 | 10.8 |
| South | 41 | 44.1 |
| West | 12 | 12.9 |
| Industry | | |
| Health Care and Social Assistance | 35 | 37.6 |
| Manufacturing | 12 | 12.9 |
| Public Administration | 10 | 10.8 |
| Educational Services | 9 | 9.7 |
| Professional, Scientific, and Technical Services | 5 | 5.4 |
| Transportation, Warehousing, and Utilities | 5 | 5.4 |
| Finance and Insurance | 5 | 5.4 |
| Other | 12 | 12.9 |

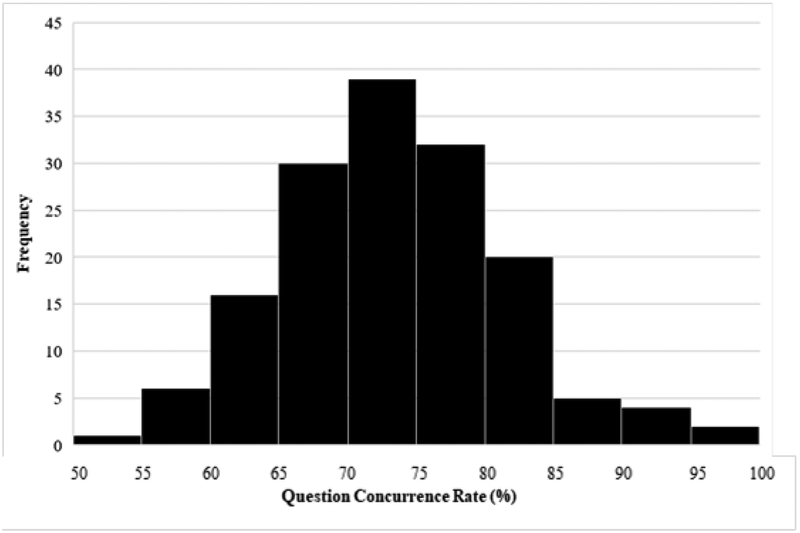

Inter-rater Reliability

We assessed the tool’s inter-rater reliability by comparing the two responses from each organization and calculating the index of percent agreement for each question. Question-level concurrence rates ranged from 54.8% to 97.8%, with a mean of 73.4%. Figure 3 shows the distribution of concurrence rates across the entire survey. Table 4 displays the average concurrence rate for each of the health topic-specific modules (eg, Nutrition, Tobacco Use); these ranged from 65.1% to 79.9%, with a mean of 73.1%.

Figure 3.

Distribution of concurrence rates for all survey questions

Table 4.

Average Concurrence Rates by Module and Overall

| Module | Number of Questions per Module | Percent Concurrence |
|---|---|---|
| Vaccine-preventable diseases | 7 | 79.9 |
| Physical activity | 10 | 79.7 |
| High blood pressure | 6 | 77.4 |
| Occupational health and safety | 9 | 77.1 |
| Tobacco use | 8 | 76.5 |
| Maternal health and lactation support | 7 | 75.3 |
| Organizational supports | 25 | 75.1 |
| Weight management | 4 | 73.9 |
| Cancer | 7 | 72.7 |
| Prediabetes and diabetes | 6 | 72.0 |
| Nutrition | 14 | 71.7 |
| Musculoskeletal disorders | 7 | 71.3 |
| Stress management | 7 | 70.7 |
| High cholesterol | 5 | 70.3 |
| Alcohol and other substance use | 6 | 69.9 |
| Heart attack and stroke | 12 | 69.4 |
| Sleep and fatigue | 8 | 67.6 |
| Depression | 7 | 65.1 |
| Total | 155 | 73.1 |
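The Table 4 total (73.1%) differs slightly from the question-level mean reported above (73.4%). The figures appear consistent with the total being the unweighted mean of the 18 module averages, whereas weighting each module by its number of questions gives a value close to the question-level mean. The sketch below illustrates both calculations using the rounded values from Table 4; attributing the small residual gap (73.3% vs 73.4%) to rounding of the module averages is an assumption.

```python
# (number of questions, percent concurrence) for each module, from Table 4.
TABLE_4: dict[str, tuple[int, float]] = {
    "Vaccine-preventable diseases": (7, 79.9),
    "Physical activity": (10, 79.7),
    "High blood pressure": (6, 77.4),
    "Occupational health and safety": (9, 77.1),
    "Tobacco use": (8, 76.5),
    "Maternal health and lactation support": (7, 75.3),
    "Organizational supports": (25, 75.1),
    "Weight management": (4, 73.9),
    "Cancer": (7, 72.7),
    "Prediabetes and diabetes": (6, 72.0),
    "Nutrition": (14, 71.7),
    "Musculoskeletal disorders": (7, 71.3),
    "Stress management": (7, 70.7),
    "High cholesterol": (5, 70.3),
    "Alcohol and other substance use": (6, 69.9),
    "Heart attack and stroke": (12, 69.4),
    "Sleep and fatigue": (8, 67.6),
    "Depression": (7, 65.1),
}

n_questions = sum(n for n, _ in TABLE_4.values())  # 155

# Unweighted: every module counts equally, regardless of its size.
unweighted = sum(pct for _, pct in TABLE_4.values()) / len(TABLE_4)

# Weighted by question count: approximates the mean over all questions.
weighted = sum(n * pct for n, pct in TABLE_4.values()) / n_questions

print(f"unweighted mean of modules: {unweighted:.1f}")  # 73.1
print(f"question-weighted mean:     {weighted:.1f}")    # 73.3
```

Because larger modules (eg, Organizational supports, with 25 questions) had above-average concurrence, the question-weighted figure sits slightly above the unweighted one.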

Five percent of the survey questions (8/155) fell below the 61% threshold of acceptable concurrence and were, therefore, targeted for review. Our initial data analysis revealed some notable patterns. Questions with the lowest concurrence rates included those about the availability of training for managers to recognize and reduce stress in the workplace and about the existence of written policies guiding employee work schedules. In addition, concordance was lower for several questions related to the provision of healthy food and beverage choices in cafeterias or snack bars. Finally, two of the eight survey questions that fell below the threshold were in modules that were included in the CDC ScoreCard for the first time and had not previously been field tested.

Qualitative findings from the interviews and site visits provided additional insights into the issues that led to low concurrence rates. We found that most discrepancies in responses were due to four main factors. First, many respondents did not consider the same unit of analysis as their co-respondent when answering the questions (eg, one respondent answered for the specific worksite where he or she was based while another answered for the organization as a whole and indicated yes if an intervention was in place at any of the worksite locations).

Second, many respondents were influenced, to varying degrees, by impression management: wanting to present their worksite program positively, they tended to answer yes even when specific attributes of the question indicated a no response. The interviews and site visits highlighted specific issues and opportunities to refine the questions and instructions to address these biases. For instance, we found that the instructions should place more emphasis on timing, as many respondents indicated that they answered yes if a program strategy had been offered more than a year ago but was no longer in place, or if it was still in the planning stage and had not yet been implemented (even though the question stem specified “within the last 12 months”). We also found question elements that required additional emphasis; for example, many questions contained multiple components (such as “provide and promote”), and some respondents answered yes when at least one component was met, whereas their colleague answered yes only when both components were met.

The decision process (choosing between the yes and no options based on what they knew about their program) was difficult for many respondents. All of our interviewees expressed uncertainty about how to handle low or inadequate program dose or reach (eg, could they answer yes if they provided educational materials on a certain health topic only once a year during a sparsely attended health fair?). Some respondents also reported difficulty determining whether to count programming that cuts across topics in separate modules, for example, whether a 1-hour educational seminar that shared information about healthy eating and exercise fulfilled the requirements for interactive educational programming in both the Weight Management and Physical Activity modules. Some respondents were also unsure whether intentionality was required, particularly for questions related to workplace design (eg, should credit be given when employees have access to natural light or good ventilation even though these were not planned design features for a healthy workplace, as when an organization occupies a building that already has these features?).

The third common reason for response discordance was related to fatigue or carelessness. Respondents frequently indicated that they had no explanation for their answer, stating, “I must have not read that carefully” or “I don’t know why I answered that way; now that I’m reading it again, I agree with my colleague.” Thus, in these cases, there was nothing inherently flawed about the questions themselves.

Finally, we found that some question-level discordance reflected the unique conditions of the study design. Whereas in a “real-world” setting an individual completing the CDC ScoreCard would be free to consult with others to obtain the best information possible, in the current study the two respondents at each worksite were asked not to consult with one another. In several cases, one respondent had exclusive access to the information needed to answer a question, while the other respondent guessed or left the question blank. Respondents specifically reported difficulty gaining access to the information needed to answer questions about insurance benefits, employee assistance program (EAP) offerings, and manager training. In these cases, they noted that they answered based on personal experience or general knowledge and in many cases did not (or could not) reach out to others within the organization for confirmation. Respondents also noted uncertainty about how to answer benefits-related questions when the organization offered many benefit packages or assigned specific benefits to employees based on job type.

Face Validity

The 14 worksites that agreed to participate in interviews (by telephone or in person) represented a range of worksite sizes, including two very small worksites, four small worksites, and eight large worksites. These worksites were primarily concentrated in the Northeast, but at least one worksite was located in each census region. Overall, respondents reported that the updated CDC ScoreCard was an effective tool for evaluating their worksite’s WHP program and that grouping questions by health topic, with a similar structure across modules (ie, leading with policy-related questions, then programming questions such as education, followed by environmental supports, and ending with benefit-focused items), helped them answer items quickly and efficiently. Participating worksites also noted that the new modules (Sleep and Fatigue, Cancer, Alcohol and Other Substance Use, and MSDs) addressed cutting-edge topics and provided ideas for new policies, interventions, and supports they would consider offering in the future.

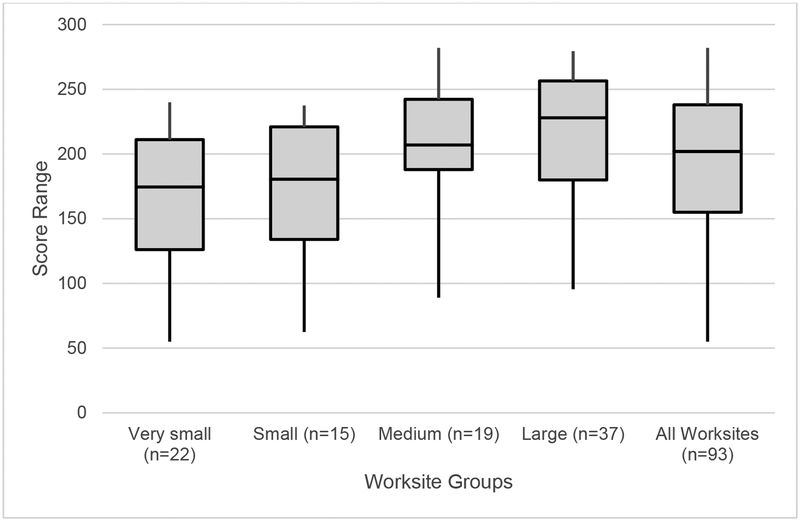

Figure 4 shows the score distribution for the entire study sample, overall and by worksite size. Worksite scores ranged from 55 to 282, with a mean of 194 out of a possible 296 points (SD = 56.8). Table 5 presents the average scores for each of the 18 modules, overall and by worksite size. There was a clear gradient in total scores by worksite size, with larger worksites scoring higher on the CDC ScoreCard than smaller worksites. This gradient was consistent with the prior validation of the CDC ScoreCard22 and with other published data44 indicating that larger worksites, and in turn larger organizations, have more resources available for WHP programs.

Figure 4.

Score distributions for all worksites and by worksite size

Table 5.

Score Distributions by Worksite Size

| Module | Total Points Possible | Average Score, All Worksites | Average Score, Very Small (<100 employees) | Average Score, Small (100–249 employees) | Average Score, Medium (250–749 employees) | Average Score, Large (750+ employees) |
|---|---|---|---|---|---|---|
| Organizational Supports | 44 | 32.4 | 30.3 | 30.0 | 32.2 | 34.7 |
| Tobacco Use | 18 | 12.8 | 9.8 | 11.8 | 14.6 | 14.1 |
| High Blood Pressure | 16 | 11.0 | 10.0 | 9.7 | 11.1 | 12.1 |
| High Cholesterol | 13 | 8.7 | 8.3 | 7.6 | 9.3 | 9.0 |
| Physical Activity | 22 | 14.5 | 12.5 | 11.9 | 14.6 | 16.7 |
| Weight Management | 8 | 5.9 | 5.0 | 5.1 | 6.4 | 6.5 |
| Nutrition | 24 | 11.1 | 7.5 | 9.4 | 12.2 | 13.4 |
| Heart Attack and Stroke | 19 | 12.5 | 10.6 | 10.6 | 13.5 | 13.9 |
| Prediabetes and Diabetes | 15 | 11.1 | 10.4 | 10.1 | 11.4 | 11.7 |
| Depression | 16 | 9.6 | 8.0 | 7.6 | 10.6 | 10.9 |
| Stress Management | 14 | 8.7 | 7.9 | 7.2 | 9.7 | 9.3 |
| Alcohol and Other Substance Use | 9 | 6.1 | 5.4 | 5.3 | 7.4 | 6.2 |
| Sleep and Fatigue | 11 | 3.4 | 3.5 | 2.4 | 4.1 | 3.4 |
| Musculoskeletal Disorders | 9 | 5.0 | 3.4 | 4.2 | 5.7 | 5.9 |
| Occupational Health and Safety | 18 | 14.3 | 12.4 | 13.7 | 15.3 | 15.3 |
| Vaccine-Preventable Diseases | 14 | 11.4 | 9.5 | 11.2 | 12.5 | 12.0 |
| Maternal Health and Lactation Support | 15 | 9.6 | 6.8 | 9.0 | 10.6 | 11.1 |
| Cancer | 11 | 5.7 | 4.5 | 4.7 | 6.5 | 6.3 |
| TOTAL | 296 | 194 | 166 | 172 | 208 | 212 |
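Because every module in Table 5 has a fixed maximum, the averages can also be read as a gap analysis. The sketch below uses only the All Worksites column of Table 5: it checks that the module maxima sum to 296, expresses each module's average as a percentage of its maximum, and finds the module with the largest relative gap. The dictionary and variable names are illustrative, and the percentages describe sample averages, not any individual worksite.

```python
from typing import Dict, Tuple

# (total points possible, average score across all worksites), from Table 5.
TABLE5: Dict[str, Tuple[int, float]] = {
    "Organizational Supports": (44, 32.4),
    "Tobacco Use": (18, 12.8),
    "High Blood Pressure": (16, 11.0),
    "High Cholesterol": (13, 8.7),
    "Physical Activity": (22, 14.5),
    "Weight Management": (8, 5.9),
    "Nutrition": (24, 11.1),
    "Heart Attack and Stroke": (19, 12.5),
    "Prediabetes and Diabetes": (15, 11.1),
    "Depression": (16, 9.6),
    "Stress Management": (14, 8.7),
    "Alcohol and Other Substance Use": (9, 6.1),
    "Sleep and Fatigue": (11, 3.4),
    "Musculoskeletal Disorders": (9, 5.0),
    "Occupational Health and Safety": (18, 14.3),
    "Vaccine-Preventable Diseases": (14, 11.4),
    "Maternal Health and Lactation Support": (15, 9.6),
    "Cancer": (11, 5.7),
}


def percent_of_max(table: Dict[str, Tuple[int, float]]) -> Dict[str, float]:
    """Average module score as a percentage of that module's maximum points."""
    return {module: 100.0 * avg / max_pts for module, (max_pts, avg) in table.items()}


total_possible = sum(max_pts for max_pts, _ in TABLE5.values())
total_average = sum(avg for _, avg in TABLE5.values())
pct = percent_of_max(TABLE5)
lowest = min(pct, key=pct.get)  # module with the largest relative gap

print(total_possible)             # 296
print(f"{total_average:.1f}")     # 193.8 (reported as 194 in Table 5)
print(lowest, f"{pct[lowest]:.1f}")  # Sleep and Fatigue 30.9
```

With these values, Nutrition (about 46% of its maximum) is the second-largest gap after Sleep and Fatigue (about 31%), which matches the prominence of both modules among the least common interventions in Table 6.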

Our analysis suggests that the strategies and interventions included in the CDC ScoreCard are relevant and feasible for the business community. On average, worksites reported having 43% of all possible interventions in place, and this percentage increased with worksite size. Of the 155 interventions in the CDC ScoreCard, large worksites had an average of 49% in place, medium worksites 47%, small worksites 34%, and very small worksites 33%.
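The percentage of interventions in place can be read as a worksite's count of yes answers divided by the 155 interventions in the pilot instrument, averaged within each size category. A minimal sketch follows; the (size category, yes count) data layout is an assumption, and the example counts are hypothetical, chosen only to land near the reported 33% and 49% figures.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

N_INTERVENTIONS = 155  # interventions in the pilot CDC ScoreCard


def percent_in_place(yes_count: int, n_items: int = N_INTERVENTIONS) -> float:
    """Share of all possible interventions that a worksite reported having in place."""
    return 100.0 * yes_count / n_items


def mean_percent_by_size(worksites: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Average the per-worksite percentage within each size category.

    Hypothetical input: one (size_category, yes_count) pair per worksite.
    """
    by_size: Dict[str, List[float]] = defaultdict(list)
    for size, yes_count in worksites:
        by_size[size].append(percent_in_place(yes_count))
    return {size: mean(values) for size, values in by_size.items()}


# Made-up counts for four worksites; not study data.
sample = [("very small", 50), ("very small", 52), ("large", 76), ("large", 77)]
for size, share in mean_percent_by_size(sample).items():
    print(f"{size}: {share:.1f}%")
# very small: 32.9%
# large: 49.4%
```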

Table 6 presents the 10 most and 10 least common interventions reported by the study respondents. The most commonly reported intervention, the provision of paid time off to benefits-eligible employees, was in place at 98% (91/93) of participating worksites. Other common strategies included providing access to flu vaccinations (95%), a place to eat (90%), access to an EAP (87%), and a written policy banning alcohol and other substance use (86%). Of the 155 interventions in the pilot CDC ScoreCard, all but one (allowing nap breaks during the workday to reduce fatigue) were in place at five or more of the 93 participating worksites. Six of the 10 least common interventions were supports recommended in the new Sleep and Fatigue module, and they challenged respondents across all worksite size categories. Follow-up interviews specific to these questions revealed that (1) sleep and fatigue is a new and untested area for most employers, and (2) several supports in this health domain are limited by the nature of the job itself and by employers’ inability to alter existing job routines, requirements, or break areas. On the basis of these findings, two questions in this module were removed from the final updated CDC ScoreCard. The first question, “Offer light-design solutions during shifts that are intended to reduce fatigue during working hours?” was not understood by many participants and did not resonate as relevant to respondents’ worksites. For the napping question, “Allow employees to take short naps during the workday/shift to reduce fatigue and improve performance?” many respondents indicated that the intervention was unattainable or unrealistic for most worksites. A re-review of the literature underscored that the intervention may not have broad relevance, as all related studies were conducted in health care settings, where short naps may be allowed to avoid medical error. In other settings where this intervention is common (eg, trucking), the requirements are regulated by industry or governmental standards.

Table 6.

Ten Most and Least Common Interventions in Place at Responding Worksites

| Rank | Module | Question* | Number of Worksites | Percentage of Worksites |
|---|---|---|---|---|
| Most Common Interventions in Place | | | | |
| 1 | Organizational Supports | Offer all benefits-eligible employees paid time off for days or hours absent due to illness, vacation, or other personal reasons (including family illness or bereavement)? | 91 | 97.8 |
| 2 | Vaccine-Preventable Diseases | Provide health insurance coverage with free or subsidized influenza (flu) vaccinations? | 88 | 94.6 |
| 3 | Nutrition | Provide employees with food preparation/storage facilities and a place to eat? | 84 | 90.3 |
| 4 | Organizational Supports | Provide an employee assistance program (EAP)? | 81 | 87.1 |
| 5 | Alcohol and Other Substance Use | Have and promote a written policy banning alcohol and other substance use at the worksite? | 80 | 86.0 |
| 6 | Occupational Health and Safety | Encourage employees to report uncomfortable, unsafe, or hazardous working conditions to a supervisor, occupational health and safety professional or through another reporting channel? | 79 | 84.9 |
| 7 | Vaccine-Preventable Diseases | Promote good hand hygiene in the worksite? | 78 | 83.9 |
| 8 | Occupational Health and Safety | Carefully investigate the primary cause of any reported work-related illnesses or injuries and take specific actions to prevent similar events in the future? | 77 | 82.8 |
| 9 | Nutrition | Promote and provide access for increased water consumption? | 76 | 81.7 |
| 10 | Vaccine-Preventable Diseases | Provide free or subsidized influenza vaccinations at your worksite? | 76 | 81.7 |
| Least Common Interventions in Place | | | | |
| 1 | Sleep and Fatigue | Allow employees to take short naps during the workday/shift in order to reduce fatigue and improve performance?† | 0 | 0.0 |
| 2 | Sleep and Fatigue | Offer solutions to discourage and prevent drowsy driving? | 5 | 5.4 |
| 3 | Sleep and Fatigue | Provide training for managers to improve their understanding of the safety and health risks associated with poor sleep and their skills for organizing work to reduce the risk of employee fatigue? | 5 | 5.4 |
| 4 | Sleep and Fatigue | Offer light-design solutions during shifts that are intended to reduce fatigue during working hours?† | 7 | 7.5 |
| 5 | Cancer | Have and promote a written policy that includes measures to reduce sun exposure for outdoor workers? | 7 | 7.5 |
| 6 | Sleep and Fatigue | Have and promote a written policy related to the design of work schedules that aims to reduce employee fatigue? | 11 | 11.8 |
| 7 | Nutrition | Subsidize or provide discounts on healthy foods and beverages offered in vending machines, cafeterias, snack bars, or other purchase points? | 12 | 12.9 |
| 8 | Sleep and Fatigue | Provide access to a self-assessment of sleep health followed by directed feedback and clinical referral, when appropriate? | 14 | 15.1 |
| 9 | Nutrition | Make most (more than 50%) of the food and beverage choices available in vending machines, cafeterias, snack bars, or other purchase points healthy food items? | 15 | 16.1 |
| 10 | Nutrition | Have and promote a written policy that makes healthier food and beverage choices available in cafeterias or snack bars? | 15 | 16.1 |

*The question text reflects the verbiage included in the pilot CDC ScoreCard that was field-tested among employers.

†The validation and reliability study outcomes informed the decision to remove this question from the final version of the 2019 CDC ScoreCard.

DISCUSSION

This paper describes a 3-year systematic and comprehensive process to review, revise, and retest the validity and reliability of the CDC ScoreCard. The literature review and meetings with stakeholders and SMEs led to several updates, including the addition of new questions, deletion of redundant or outdated questions, modification of wording or scoring, and identification of four new health topics. The revised tool then underwent inter-rater reliability and face validity testing. We largely achieved our objectives of identifying problems with the wording and interpretation of the questions and understanding respondents’ information retrieval, decision-making, and response processes. We anticipate that future updates of the CDC ScoreCard will occur at 5-year intervals, allowing organizations to become comfortable with the current version, to track progress and trends over a reasonable time horizon, and to give new research and evidence sufficient time to emerge.

Limitations

This study was conducted with a convenience sample of motivated employers, most of whom either had previous experience using the CDC ScoreCard or were otherwise engaged in WHP, which may have introduced bias related to familiarity or a desire for impression management. As described above, our results indicate that respondents tended to answer yes when uncertain about the correct answer. Thus, the reported scores may be artificially inflated and may not be representative of the average employer.

The average concurrence rate of 73% was above the 61% threshold. Most of the inter-rater discrepancies were explained by the following: (1) an artifact of the study design (ie, respondents’ inability to confer with one another); (2) fatigue or carelessness (ie, the lengthy survey led some respondents to read questions less carefully); (3) respondents reporting on different locations (eg, a specific worksite vs the organization representing multiple worksites); and (4) differential interpretation of key words (ie, one respondent interpreting questions subjectively and giving credit more liberally, while the other answered more objectively or strictly and did not give credit when implementation status was limited by timing, dose, or intentionality).

Regarding the first reason, in a real-world setting respondents will be able to consult the most knowledgeable colleagues within their organization, who have more complete knowledge of the program elements in the tool. With the new release of the CDC ScoreCard, an updated user guide will be made available that provides detailed instructions for completing the tool online, including the option to complete it in multiple sittings to avoid fatigue. In addition, on the basis of participant feedback, we added a new (unscored) skip-pattern question in the Cancer module that allows respondents to skip questions related to outdoor workers if they are not applicable to their workforce. These updates should address the first and second common reasons for discordance.

To address the third and fourth common reasons for low agreement between respondents, we made several changes to the instructions (eg, highlighting that the CDC ScoreCard should be completed for a single worksite, and that only activities in place during the last 12 months can be counted, even if they occurred just once) and to the glossary of terms (eg, defining what constitutes “interactive” education). We also edited the wording of questions or added clarifying text (eg, modifying phrasing to address uncertainty or ambiguity related to dose or intentionality), and added visual emphasis where applicable (eg, bolding and italicizing the word “and” to denote that both components are required for a yes answer) to clarify when a question can be answered affirmatively. These edits, especially to the newly developed questions, should reduce the differential interpretation of questions that led to discrepant responses.

In sum, the average concurrence rate was above the 61% threshold, and most items (105/155 questions) exceeded 70% concurrence. The quantitative and qualitative analyses from the validity and reliability testing led to further refinement of the instrument to improve its clarity and address most reasons for low-concurrence items. Other reasons for low concurrence would not necessarily apply in “real-world” practice (eg, limitations on collaboration) or were not due to the content of the questions (eg, not reading a question carefully before answering). However, we did not retest the instrument to measure whether our refinements improved the concurrence rate.

Altogether, the update process and the validity and reliability testing results yielded the following key changes to the CDC ScoreCard, whose 2019 version contains 154 questions in total (up from 125 questions in the 2014 version; see Supplementary Appendix 4, http://links.lww.com/JOM/A636, for a summary of the key types of modifications and deleted questions). The 2019 updated CDC ScoreCard can be accessed at https://www.cdc.gov/workplacehealthpromotion/initiatives/healthscorecard/worksite-scorecard.html. The key changes were:

- 20 new questions added to the existing modules;
- 16 questions deleted from the existing modules;
- Three questions transferred to other modules;
- 25 new questions added across four new modules;
- 96 questions and clarifying text modified;
- 23 questions’ point value adjusted to reflect current evidence (nine items increased; 13 items decreased).
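As a quick consistency check, the question counts above reconcile with the totals stated for the two versions, assuming that the three transferred questions moved between modules without changing the overall count:

$$
\underbrace{125}_{\text{2014 version}} + \underbrace{20}_{\text{added to existing modules}} - \underbrace{16}_{\text{deleted}} + \underbrace{25}_{\text{new modules}} = \underbrace{154}_{\text{2019 version}}
$$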

Other updates included combining two modules into one (Heart Attack and Stroke) and moving the “Community Resources” module into the background (employer demographic) section of the instrument, given that it remains unscored.

Questions in the tool represent the broad range of possible policies, programs, environmental supports, and health benefits that employers can provide to promote employee health and well-being. Some questions reflect practices that are common at many worksites and, in some cases, are governed by industry or other regulatory standards, whereas other items are cutting-edge strategies that are in place at some worksites but do not have strong evidence of effectiveness.

While we tested the inter-rater reliability and face validity of the CDC ScoreCard, it was not subjected to other forms of validity testing, such as predictive, discriminant, or convergent validity. Future studies should address these types of validity. For example, predictive validity studies could examine the degree to which organizations with higher scores outperform those with lower scores on outcomes such as healthcare costs and utilization, employee health risk factors, productivity, other business metrics (eg, stock price), and humanistic measures (eg, social responsibility, morale). Convergent validity studies could measure the degree to which scores on the CDC ScoreCard correlate with scores on similar employer assessment tools, which would demonstrate whether it is indeed measuring theoretically related constructs. It is also important to examine whether improvements in CDC ScoreCard scores correlate with improvements in aggregate health risk scores.

CONCLUSION

The research findings showed that the CDC ScoreCard is a reliable and valid assessment tool. The CDC ScoreCard update was performed to ensure that the tool continues to be based on the best available evidence, is easy to use, and is capable of assisting employers in (1) building comprehensive WHP programs by identifying gaps in their current program; (2) prioritizing high-impact, evidence-based strategies and promising practices; and (3) ultimately improving employee health and well-being.

This study revealed some notable strengths of the CDC ScoreCard as well as opportunities for further improvement. On the basis of these findings, we were able to add a substantial amount of new content, revise much of the old content to improve the clarity and focus of the items, and refine many items, including adjusting the scoring to be consistent with the current evidence base, to improve the utility of the tool. Knowing that the questions are appropriately scored (ie, identifying which strategies are good, better, or best based on the evidence) is valuable for employers’ planning, because it helps them select the new opportunities that give them the most “bang for the buck” in terms of effectiveness and impact.

Moving forward with the newly updated CDC ScoreCard, employers are encouraged to remember that the goal is not simply to check every box but rather to implement evidence-based interventions that are tailored to their specific workplace culture and employee needs. Successful WHP practices are evidence-based, relevant to various population segments, consistently supported by the organizational culture, and delivered with adequate dose, intensity, and reach to yield meaningful results.

Supplementary Material

ACKNOWLEDGMENTS

Development of the revised and updated CDC Worksite Health ScoreCard was made possible through the time and expertise provided by those who participated in the Stakeholder Interviews and the Subject Matter Expert panel discussions. We are grateful to all the individuals listed in Supplementary Appendix 1, http://links.lww.com/JOM/A633.

Funding for this study was provided by Centers for Disease Control and Prevention, contract no. GS-10-F-0092P-200-2015-87943.

Footnotes

The findings and conclusions in this article are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.


The authors have no conflict of interest.

Supplemental digital contents are available for this article. Direct URL citation appears in the printed text and is provided in the HTML and PDF versions of this article on the journal’s Web site (www.joem.org).

REFERENCES

1. Bureau of Labor Statistics. Average Hours Employed People Spent Working on Days Worked by Day of Week. Chart from the American Time Use Survey – 2017 Results [American Time Use Survey web site]. June 28, 2018. Available at: https://www.bls.gov/charts/american-time-use/emp-by-ftptjob-edu-h.htm. Accessed November 1, 2018.
2. Carls GS, Goetzel RZ, Henke RM, Bruno J, Isaac F, McHugh J. The impact of weight gain or loss on healthcare costs for employees at the Johnson & Johnson Family of Companies. J Occup Environ Med. 2011;53:8–16.
3. Dement JM, Epling C, Joyner J, Cavanaugh K. Impacts of workplace health promotion and wellness programs on health care utilization and costs. J Occup Environ Med. 2015;57:1159–1169.
4. Edington DW. Emerging research: a view from one research center. Am J Health Promot. 2001;15:341–349.
5. Goetzel RZ, Ozminkowski RJ, Bowen J, Tabrizi MJ. Employer integration of health promotion and health protection programs. Int J Workplace Health Manag. 2008;1:109–122.
6. Hoert J, Herd AM, Hambrick M. The role of leadership support for health promotion in employee wellness program participation, perceived job stress and health behaviors. Am J Health Promot. 2018;32:1054–1062.
7. Smith KC, Wallace DP. Improving the sleep of children’s hospital employees through an email-based sleep wellness program. Clin Pract Pediatr Psychol. 2016;4:291–305.
8. LeCheminant J, Merrill RM, Masterson TD. Changes in behaviors and outcomes among school-based employees in a wellness program. Health Promot Pract. 2017;18:895–901.
9. Santana A. Bringing the employee voice into workplace wellness. Am J Health Promot. 2018;32:827–830.
10. Fabius R, Thayer RD, Konicki DL, et al. The link between workforce health and safety and the health of the bottom line: tracking market performance of companies that nurture a “culture of health”. J Occup Environ Med. 2013;55:993–1000.
11. Goetzel RZ, Fabius R, Fabius D, et al. The stock performance of C. Everett Koop Award winners compared with the Standard & Poor’s 500 Index. J Occup Environ Med. 2016;58:9–15.
12. Grossmeier J, Fabius R, Flynn JP, et al. Linking workplace health promotion best practices and organizational financial performance: tracking market performance of companies with highest scores on the HERO scorecard. J Occup Environ Med. 2016;58:16–23.
13. Goetzel RZ, Juday TR, Ozminkowski RJ. What’s the ROI? A systematic review of return on investment (ROI) studies of corporate health and productivity management initiatives. AWHP’s Worksite Health. Summer 1999.
14. Henke RM, Carls GS, Short ME, et al. The relationship between health risks and health and productivity costs among employees at Pepsi Bottling Group. J Occup Environ Med. 2010;52:519–527.
15. Baxter S, Sanderson K, Venn AJ, Blizzard CL, Palmer AJ. The relationship between return on investment and quality of study methodology in workplace health promotion programs. Am J Health Promot. 2014;28:347–363.
16. Musich S, McCalister T, Wang S, Hawkins K. An evaluation of the Well at Dell Health Management Program: health risk change and financial return on investment. Am J Health Promot. 2015;29:147–157.
17. Claxton G, Rae M, Long M, Damico A, Foster G, Whitmore H. Employer Health Benefits: 2018 Annual Survey. San Francisco, CA: The Kaiser Family Foundation; October 3, 2018.
18. McCleary K, Goetzel RZ, Roemer EC, et al. Employer and employee opinions about workplace health promotion (wellness) programs: results of the 2015 Harris Poll Nielsen Survey. J Occup Environ Med. 2017;59:256–263.
19. Linnan LA, Cluff L, Lang JE, Penne M, Leff MS. Results of the workplace health in America survey. Am J Health Promot. 2019;33:652–665.
20. Childress JM, Lindsay GM. National indications of increasing investment in workplace health promotion programs by large- and medium-sized companies. North Carolina Med J. 2006;67:449–452.
21. Goetzel RZ, Tabrizi MJ, Roemer EC, Smith KJ, Kent K. A review of recent organizational health assessments. Am J Health Promot. 2013;27:TAH2–TAH4.
22. Roemer EC, Kent KB, Samoly DK, et al. Reliability and validity testing of The CDC Worksite Health ScoreCard: an assessment tool for employers to prevent heart disease, stroke, & related health conditions. J Occup Environ Med. 2013;55:520–526.
23. Watson NF, Badr MS, Belenky G, et al. Recommended amount of sleep for a healthy adult: a joint consensus statement of the American Academy of Sleep Medicine and Sleep Research Society. Sleep. 2015;38:843–844.
24. Centers for Disease Control and Prevention (CDC). Sleep and Chronic Disease [CDC web site], 2013. Available at: https://www.cdc.gov/sleep/about_sleep/chronic_disease.html. Accessed October 25, 2017.
25. de Mello MT, Narciso FV, Tufik S, et al. Sleep disorders as a cause of motor vehicle collisions. Int J Prev Med. 2013;4:246–257.
26. Uehli K, Mehta AJ, Miedinger D, et al. Sleep problems and work injuries: a systematic review and meta-analysis. Sleep Med Rev. 2014;18:61–73.
27. National Safety Council (NSC). Cost of Fatigue in the Workplace [NSC web site], 2019. Available at: https://www.nsc.org/work-safety/safety-topics/fatigue/calculator/cost. Accessed January 17, 2019.
28. Rosekind MR, Gregory KB, Mallis MM, Brandt SL, Seal B, Lerner D. The cost of poor sleep: workplace productivity loss and associated costs. J Occup Environ Med. 2010;52:91–98.
29. Centers for Disease Control and Prevention (CDC). Alcohol and Public Health: Alcohol-related Disease Impact (ARDI). Average for United States 2006–2010 Alcohol-attributable Deaths due to Excessive Alcohol Use. [CDC web site], 2013. Available at: https://nccd.cdc.gov/DPH_ARDI/Default/Report.aspx?T=AAM&P=f6d7eda7-036e-4553-9968-9b17ffad620e&R=d7a9b303-48e9-4440-bf47-070a4827e1fd&M=8E1C5233-5640-4EE8-9247-1ECA7DA325B9&F=&D=. Accessed October 25, 2017.
30. World Health Organization (WHO). Global Status Report on Alcohol and Health 2018. CC BY-NC-SA 3.0 IGO. [WHO web site], 2018. Available at: http://www.who.int/substance_abuse/publications/global_alcohol_report/gsr_2018/en/. Accessed November 12, 2018.
31. Sacks JJ, Gonzales KR, Bouchery EE, Tomedi LE, Brewer RD. 2010 national and state costs of excessive alcohol consumption. Am J Prev Med. 2015;49:e73–e79.
32. Center for Behavioral Health Statistics and Quality. Key Substance Use and Mental Health Indicators in the United States: Results from the 2015 National Survey on Drug Use and Health. HHS Publication No. SMA 16–4984, NSDUH Series H-51. [SAMHSA web site], 2016. Available at: http://www.samhsa.gov/data/. Accessed November 12, 2018.
33. Ames GM, Bennett JB. Prevention interventions of alcohol problems in the workplace: a review and guiding framework. Alcohol Res Health. 2011;34:175–187.
34. Webb G, Shakeshaft A, Sanson-Fisher R, Havard A. A systematic review of work-place interventions for alcohol-related problems. Addiction. 2009;104:365–377.
35. U.S. Department of Health and Human Services (HHS), Office of the Surgeon General. Facing Addiction in America: The Surgeon General’s Spotlight on Opioids. Washington, DC: HHS; 2018.
36. National Institute for Occupational Safety and Health (NIOSH). Musculoskeletal Disorders [CDC web site], 2015. Available at: https://www.cdc.gov/niosh/programs/msd/. Accessed June 2016.
37. Summers K, Jinnett K, Bevan S. Musculoskeletal disorders, workforce health and productivity in the United States [White Paper]. The Center for Workforce Health and Performance, and The Work Foundation, June 2015. Available at: www.theworkfoundation.com/wp-content/uploads/2016/11/385_White-paper-Musculoskeletal-disorders-workforce-health-and-productivity-in-the-USA-final.pdf.
38. Yazdani A, Neumann WP, Imbeau D, et al. How compatible are participatory ergonomics programs with occupational health and safety management systems? Scand J Work Environ Health. 2015;41:111–123.
39. Centers for Disease Control and Prevention (CDC). Deaths and Mortality [CDC web site], 2018. Available at: https://www.cdc.gov/nchs/fastats/deaths.htm. Accessed November 12, 2018.
40. American Cancer Society. Cancer Facts & Figures 2018 [American Cancer Society web site], 2018. Available at: https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2018/cancer-facts-and-figures-2018.pdf. Accessed December 11, 2018.
41. Vuong TD, Wei F, Beverly CJ. Absenteeism due to functional limitations caused by seven common chronic diseases in US workers. J Occup Environ Med. 2015;57:779–784.
42. R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; 2018. Available at: http://www.R-project.org. Accessed March 8, 2018.
43. Gwet KL. The Handbook of Inter-Rater Reliability: The Definitive Guide to Measuring the Extent of Agreement Among Raters. 4th ed. Gaithersburg, MD: Advanced Analytics, LLC; 2014.
44. Baicker K, Cutler D, Song Z. Workplace wellness programs can generate savings. Health Aff (Millwood). 2010;29:304–311.
