Abstract

The recent decline in energy, size and complexity scaling of traditional von Neumann architecture has resurrected considerable interest in brain-inspired computing. Artificial neural networks (ANNs) based on emerging devices, such as memristors, achieve brain-like computing but lack energy-efficiency. Furthermore, slow learning, incremental adaptation, and false convergence are unresolved challenges for ANNs. In this article we, therefore, introduce Gaussian synapses based on heterostructures of atomically thin two-dimensional (2D) layered materials, namely molybdenum disulfide and black phosphorus field effect transistors (FETs), as a class of analog and probabilistic computational primitives for hardware implementation of statistical neural networks. We also demonstrate complete tunability of amplitude, mean and standard deviation of the Gaussian synapse via threshold engineering in dual gated molybdenum disulfide and black phosphorus FETs. Finally, we show simulation results for classification of brainwaves using Gaussian synapse based probabilistic neural networks.

Subject terms: Electronic devices, Two-dimensional materials

Designing large-scale hardware implementations of probabilistic neural networks for energy-efficient neuromorphic computing systems remains a challenge. Here, the authors propose a hardware design based on MoS2/BP heterostructures as reconfigurable Gaussian synapses enabling EEG pattern recognition.

Introduction

The last five decades have witnessed an unprecedented and exponential growth in computational power, primarily driven by the success of the semiconductor industry. Relentless scaling1 of complementary metal oxide semiconductor (CMOS) technology enabled by breakthroughs in material discovery2, innovation in device physics3, transformation in micro and nanolithography techniques4, and the triumph of von Neumann architecture5 contributed to the computing revolution. Scaling has three characteristic aspects to it. Energy scaling to ensure practically constant computational power budget, size scaling to ensure faster and cheaper computing since more and more transistors can be packed into the same chip area, and complexity scaling to ensure incessant growth in computational power of single on-chip processor. The golden era of metal oxide semiconductor field effect transistor (MOSFET) scaling, also referred to as the Dennard scaling era6, has witnessed concurrent scaling of all three aspects for almost three decades. However, around 2005, the energy scaling ended owing to fundamental thermodynamic limitations at the device physics level, popularly known as the Boltzmann tyranny7. Size scaling continued for another decade albeit with new challenges8 and eventually ended in 2017 owing to limitations at the materials level imposed by quantum mechanics1. Complexity scaling is also on decline owing to the non-scalability of traditional von Neumann computing architecture and the impending “Dark Silicon” era that presents a severe threat to multi-core processor technology9. In order to sustain the growth in computational power, it is imperative that all three aspects of scaling must be reinstated immediately through material rediscovery, device innovations, and advancement in higher complexity computing architectures.

The extraordinarily complex neurobiological architecture of the mammalian nervous system that seamlessly executes diverse and intricate functionalities such as adaption, perception, acquisition of sensory information, learning, memory formation, emotions, cognition, motor action, and many more has inspired computer scientists to think beyond the traditional von Neumann architecture in order to resurrect complexity scaling. The neural architecture deploys billions of information processing units, neurons, which are connected via trillions of synapses in order to accomplish massively parallel, synchronous, coherent, and concurrent computation. This is markedly different from the von Neumann architecture, where the logic and memory units are physically isolated and operate sequentially, i.e., instruction fetch and data operation cannot occur simultaneously. Furthermore, unlike the deterministic digital switches (logic transistors), neural architecture uses probabilistic and analog computational primitives in order to accomplish adaptive functionalities such as pattern recognition and pattern classification, which form the foundation for mammalian problem solving and decision making.

In the above context, IBM’s bioinspired CMOS chip, True North, is a remarkable breakthrough in neuromorphic computing, achieving the complexity of more than 1 million neurons or 256 million synapses while consuming a minuscule 70 mW of power10. Similarly, extensive work by Luca Benini et al. has recently shown that hardware digital neural networks consume comparable or less energy than the human brain for complex tasks such as image recognition11. While these are impressive advancements, the inherent scaling challenges associated with digital CMOS technology can ultimately limit the implementation of very-large-scale artificial neural networks (ANNs), invoking the critical and imminent need for energy efficient analog computing primitives for ANNs. Recent years have, therefore, witnessed innovation in analog devices such as memristors12–14, coupled oscillators15, and various targeted components16–18, which can emulate neural spiking, neural transmission, and neural plasticity and hence can be used as computational primitives in ANNs. While these devices do provide some energy benefit at the architectural level for specific applications, they fail to address the intrinsic energy and size scaling needs at the device level, which can ultimately lead to stagnation in complexity scaling. Further challenges associated with ANNs are often overlooked. For example, ANNs deployed for pattern classification problems require optimum training algorithms and learning rules to identify the class statistics with desired accuracy within a short training time. Unfortunately, the most popular and widely used heuristic backpropagation algorithm19 is inherently slow and remains vulnerable to local minima in spite of extensive modifications in recent years using methods such as conjugate gradient, quasi-Newton, and Levenberg–Marquardt (LM) to improve the convergence20.
In order to address the slow learning, incremental adaptation, and inherent unreliability of ANNs, novel classification techniques based on statistical principles must be embraced.

In this article, we experimentally demonstrate how a new class of analog devices, namely, Gaussian synapses, based on the heterostructure of novel atomically thin two dimensional (2D) layered semiconductors enables the hardware implementations of probabilistic neural networks (PNNs) and thereby reinstates all three aforementioned quintessential scaling aspects of computing. In short, 2D materials facilitate aggressive size scaling, analog Gaussian synapses offer energy scaling, and PNNs enable complexity scaling. Combined, these new developments can facilitate Exascale Computing and ultimately benefit scientific discovery, national security, energy security, economic security, infrastructure development, and advanced healthcare programs21,22.

Results

Probabilistic neural network

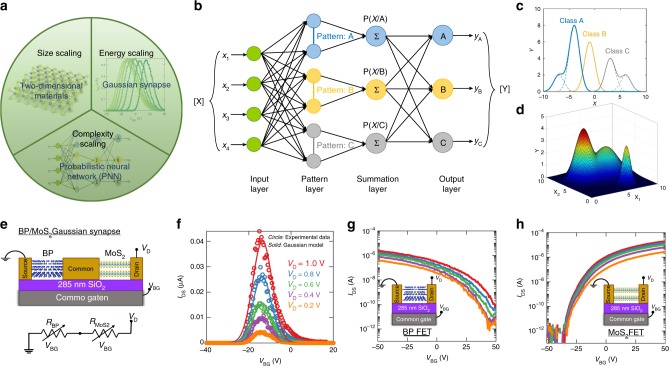

Our overall approach is summarized in Fig. 1a. First, we reintroduce the PNN, which was proposed by Specht, D. F.23. The PNN is derived from Bayesian computing and the kernel density method24. As shown in Fig. 1b, unlike ANNs, which necessitate multiple hidden layers, each with a large number of nodes, PNNs comprise a pattern layer and a summation layer and can map any input pattern to any number of output classifications. Furthermore, ANNs use activation functions such as sigmoid, rectified linear unit (ReLU), and their various derivatives for determining pattern statistics. This is often extremely difficult to accomplish with reasonable accuracy for non-linear decision boundaries. PNNs, on the contrary, use parent probability distribution functions (PDFs) approximated by a Parzen window25 and a non-parametric function to define the class probability, which in the case of a Gaussian kernel is defined by a Gaussian distribution as shown in Fig. 1c. PNNs, therefore, facilitate seamless and accurate classification of complex patterns with arbitrarily shaped decision boundaries. Furthermore, PNNs can be extended to map higher dimensional functions since multivariate Gaussian kernels are simply the product of univariate kernels as shown in Fig. 1d.
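The pattern-layer and summation-layer structure described above can be illustrated with a minimal software sketch. This is not the hardware implementation discussed in this article; the function names, the toy training vectors, and the smoothing parameter `sigma` are all hypothetical choices made only for illustration.

```python
import math

def gaussian_kernel(x, center, sigma):
    """Multivariate Gaussian kernel written as a product of univariate kernels.

    The normalization constant is omitted because it is identical for every
    class when a single sigma is used, so it does not affect the decision.
    """
    k = 1.0
    for xi, ci in zip(x, center):
        k *= math.exp(-((xi - ci) ** 2) / (2.0 * sigma ** 2))
    return k

def pnn_classify(x, training_set, sigma=0.5):
    """Classify x with a toy PNN.

    training_set maps a class label to the list of stored training vectors.
    """
    scores = {}
    for label, patterns in training_set.items():
        # Pattern layer: one Gaussian kernel per stored training pattern.
        activations = [gaussian_kernel(x, p, sigma) for p in patterns]
        # Summation layer: Parzen-window estimate of the class PDF at x.
        scores[label] = sum(activations) / len(activations)
    # Decision: pick the class with the largest estimated PDF value.
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical two-class, two-dimensional training data.
training = {
    "A": [(0.0, 0.0), (0.2, -0.1), (-0.1, 0.3)],
    "B": [(2.0, 2.0), (1.8, 2.2), (2.1, 1.7)],
}
label, scores = pnn_classify((0.1, 0.1), training)
```

Note that "training" here is a single pass that simply stores the patterns, which is the property that distinguishes PNNs from iteratively trained ANNs.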

Fig. 1.

Gaussian synapse based probabilistic neural network (PNN). a Resurrection of three quintessential scaling aspects of computation, i.e., complexity scaling through PNNs, size scaling through atomically thin 2D materials, and energy scaling through analog Gaussian synapses. b Schematic representation of a PNN that comprises a pattern layer and a summation layer for mapping any input pattern to any number of output classifications. c Gaussian probability density functions (PDFs) facilitating seamless and accurate classification of complex patterns with arbitrarily shaped decision boundaries. d Multivariate Gaussian kernel for mapping higher dimensional functions. e Schematic of the two transistor Gaussian synapse based on heterogeneous integration of n-type MoS2 and p-type black phosphorus (BP) back-gated field effect transistors (FETs). The equivalent circuit diagram consists of two variable resistors connected in series. f Transfer characteristics, i.e., the drain current (ID) versus back-gate voltage (VBG), of the Gaussian synapse for different drain voltages (VD). Clearly, the experimental data (circles) can be modeled by Gaussian distributions (solid lines). g Transfer characteristics of the p-type BP FET. h Transfer characteristics of the n-type MoS2 FET

Unfortunately, in spite of the widespread applications and simplicity of PNNs, their hardware implementation is rather sparse. In fact, neither ANNs nor PNNs have been extensively realized using hardware components. While there is growing interest in the development of devices for the hardware implementation of ANNs, the effort and investment towards the hardware implementation of PNNs are still very limited. One reason is that the hardware implementation of probability functions associated with PNNs, such as the Gaussian, requires multicomponent digital CMOS circuits that lead to severe area and energy inefficiency. For example, the “Bump” circuit demonstrated by Delbruck, T. uses seven transistors26. Similarly, the Gaussian synapse proposed by Choi, J. et al. consists of a pair of differential amplifiers and several arithmetic computational units27. Madrenas, J. et al. introduced an alternate method to obtain a Gaussian function by combining the exponential characteristics of MOSFETs in sub-threshold and square characteristics in inversion28. The circuit was further improved for better symmetry and greater control and tunability by adding more transistors in a floating gate configuration29,30. Another approach is to use a Gilbert Gaussian function, where two sigmoid curves are combined using a differential pair along with a current mirror31,32. Without any extra circuitry to reduce asymmetry, the most compact circuit uses five transistors28. As we will discuss in the following section, our experimental demonstration of Gaussian synapses uses only two transistors, which significantly improves the area and energy efficiency at the device level and provides cascading benefits at the circuit, architecture, and system levels. This will stimulate the much-needed interest in the hardware implementation of PNNs for a wide range of pattern classification problems.

Gaussian synapse

Figure 1e shows the schematic of our proposed two transistor Gaussian synapse based on heterogeneous integration of n-type molybdenum disulfide (MoS2) and p-type black phosphorus (BP) back-gated field effect transistors (FETs). Fig. 1e also shows the equivalent circuit diagram for the Gaussian synapse, which simply consists of two variable resistors in series. The two variable resistors, i.e., RMoS2 and RBP correspond to the MoS2 and BP FETs. Fig. 1f shows the experimentally measured transfer characteristics i.e., the drain current (ID) versus back-gate voltage (VBG) of the Gaussian synapse for different drain voltages (VD). The fabrication process flow and electrical measurement setup for Gaussian synapses are described in the experimental method section. Clearly, the transfer characteristics resemble a Gaussian distribution which can be modeled using the following equation.

$$I_D = A \exp\left[-\frac{\left(V_{BG} - \mu_V\right)^2}{2\sigma_V^2}\right] \tag{1}$$

Where A, μV, and σV are, respectively, the amplitude, mean, and standard deviation of the Gaussian. For a specific MoS2/BP pair, μV and σV are found to be constants, whereas A varies linearly with VD. The emergence of Gaussian transfer characteristics can be explained using the experimentally measured transfer characteristics of its constituents, i.e., the MoS2 FET and the BP FET, as shown in Fig. 1g, h, respectively. MoS2 FETs exhibit unipolar n-type characteristics, irrespective of the choice of contact metal, owing to the phenomenon of metal Fermi level pinning close to the conduction band that facilitates easier electron injection, whereas BP FETs are predominantly p-type with large work function contact metals such as Ni33–36. Furthermore, unlike conventional enhancement mode Si FETs used in CMOS circuits, both MoS2 and BP FETs are depletion mode, i.e., they are normally ON without applying any back-gate voltage. Remarkably, this simple difference results in the unique Gaussian transfer characteristics for the MoS2/BP pair in spite of the device structure closely resembling a CMOS logic inverter. From the equivalent circuit diagram, the current (ID) through the Gaussian synapse can be written as:

$$I_D = \frac{V_D}{R_{\mathrm{MoS_2}} + R_{\mathrm{BP}}} \tag{2}$$

For extreme back-gate voltages, i.e., large negative VBG (below −30 V) and large positive VBG (above 30 V), the MoS2 FET and the BP FET are in their respective OFF states, making the corresponding resistances, i.e., RMoS2 and RBP, very large (approximately TΩ). This prevents any current conduction between the source and the drain terminal of the Gaussian synapse. However, as the MoS2 FET switches from OFF state to ON state, current conduction begins and increases exponentially with VBG following the subthreshold characteristics and reaches its peak magnitude determined by VD. Beyond this peak, the current starts to decrease exponentially following the subthreshold characteristics of the BP FET. As a result, the series connection of the MoS2 and BP FETs exhibits non-monotonic transfer characteristics with exponential tails that mimic a Gaussian distribution.
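The series-resistor picture can be made concrete with a toy calculation. The sketch below assumes purely exponential (subthreshold-like) resistances for both FETs; the threshold voltages, the exponential slope `s`, and the prefactor `r0` are hypothetical values chosen for illustration and are not fitted to the measured devices.

```python
import math

def synapse_current(v_bg, v_d=1.0, v_tn=-5.0, v_tp=5.0, s=2.0, r0=1e9):
    """Current through two series resistors with exponential gate dependence.

    v_tn, v_tp: hypothetical turn-on voltages of the n-type and p-type FETs (V)
    s:          hypothetical voltage scale of the exponential tails (V)
    r0:         hypothetical resistance at the turn-on voltage (ohm)
    """
    # n-type branch: resistance drops exponentially as v_bg increases.
    r_n = r0 * math.exp(-(v_bg - v_tn) / s)
    # p-type branch: resistance rises exponentially as v_bg increases.
    r_p = r0 * math.exp((v_bg - v_tp) / s)
    # Series connection: the larger resistance limits the current.
    return v_d / (r_n + r_p)

# Sweep the back gate and locate the peak of the bump-shaped characteristic.
sweep = [-30.0 + 0.5 * i for i in range(121)]
currents = [synapse_current(v) for v in sweep]
peak_voltage = sweep[currents.index(max(currents))]
```

With symmetric parameters the peak sits midway between the two turn-on voltages, the tails fall off exponentially on either side, and the peak current scales linearly with `v_d`, which mirrors the qualitative behavior described in the text.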

It must be noted that the Gaussian synapses do not utilize the ON state FET performance and, therefore, are minimally influenced by the carrier mobility values of the n-type and p-type FETs. Instead, the Gaussian synapse exploits the sub-threshold FET characteristics, where the slope is independent of the carrier mobility of the semiconducting channel material. For symmetric Gaussian synapses, it is therefore more desirable to ensure similar sub-threshold slope (SS) values for the respective FETs than the carrier mobility. Ideally, the SS values for both FETs should be 60 mV decade−1. However, presence of a nonzero interface trap capacitance worsens the SS. The SS can be improved by minimizing interface states at the 2D/gate-dielectric interface, as well as by scaling the thickness of the gate dielectric. It is also desirable to have FETs with Ohmic contacts for Gaussian synapses to ensure that the SS is determined by the thermionic emission of carriers in order to reach the minimum theoretical value of 60 mV decade−1 at room temperature. For Schottky contact FETs, the SS can be severely degraded due to tunneling of carriers through the Schottky barrier.
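The dependence of the SS on interface traps and gate dielectric thickness can be illustrated with the textbook expression SS = ln(10)·(kBT/q)·(1 + Cit/Cox) for a fully depleted channel. The sketch below is only an illustration under that assumption; the interface trap capacitance and the oxide stacks used in the example are hypothetical values.

```python
import math

K_B_OVER_Q = 8.617333e-5  # Boltzmann constant over elementary charge, V/K
EPS0 = 8.854e-12          # vacuum permittivity, F/m

def oxide_capacitance(eps_r, thickness_nm):
    """Parallel-plate gate capacitance per unit area, in F/m^2."""
    return EPS0 * eps_r / (thickness_nm * 1e-9)

def subthreshold_swing_mv(c_it, c_ox, temperature_k=300.0):
    """Subthreshold swing in mV per decade for a fully depleted channel."""
    body_factor = 1.0 + c_it / c_ox  # equals 1 when interface traps are negligible
    return 1000.0 * math.log(10.0) * K_B_OVER_Q * temperature_k * body_factor

c_it = 1e-3  # hypothetical interface trap capacitance, F/m^2
ss_ideal = subthreshold_swing_mv(0.0, oxide_capacitance(3.9, 285.0))
ss_thick = subthreshold_swing_mv(c_it, oxide_capacitance(3.9, 285.0))  # 285 nm SiO2
ss_thin = subthreshold_swing_mv(c_it, oxide_capacitance(25.0, 10.0))   # 10 nm high-k oxide
```

For the same trap density, the thinner, higher-permittivity oxide brings the swing much closer to the thermionic limit, which is the reason scaling the gate dielectric improves the SS.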

While our proof-of-concept demonstration of Gaussian synapses is based on exfoliated MoS2 and BP flakes, it is well known that micromechanical exfoliation is not a scalable manufacturing process for large-scale integrated circuits. Therefore, hardware implementation of PNNs using Gaussian synapses will necessitate large-area growth of MoS2 and BP. Fortunately, recent years have seen tremendous progress in wafer-scale growth of high quality MoS2 and BP using chemical vapor deposition (CVD) and metal organic chemical vapor deposition (MOCVD) techniques37–41. Furthermore, while we have used two different 2D materials, MoS2 and BP, for our demonstration of Gaussian synapses owing to their superior performance as n-type and p-type FETs, respectively, there are 2D materials, such as WSe2, which offer ambipolar transport, i.e., the presence of both electron and hole conduction42, and can be grown over large areas using CVD techniques43. However, the performance of WSe2 based n-type and p-type FETs is limited by the presence of large Schottky barriers at the metal/2D contact interfaces36. By resolving the contact resistance related issues36 and improving the quality of large-area synthesized WSe2, it is possible to implement Gaussian synapses based solely on WSe2 as well. Moreover, in recent years several groups have demonstrated p-type MoS2 and n-type BP by implementing smart contact engineering and/or doping strategies44,45. Therefore, very-large-scale integration of Gaussian synapses based on a CVD grown single 2D material will be possible in the near future for the hardware realization of PNNs. Since the focus of this article is to introduce the novel Gaussian synapse and its benefit as a statistical computing primitive, we avoided material optimization.

Gaussian synapses are inherently low power since they exploit the subthreshold characteristics of the FET devices. In this context, we would like to remind the readers that the total power consumption (Ptotal) in a digital CMOS circuit comprises, primarily, dynamic switching power (Pdynamic) and static leakage power (Pstatic) and is given by the following equation:

$$P_{total} = P_{dynamic} + P_{static} = \eta C V_{DD}^2 f + I_{static} V_{DD} \tag{3}$$

Note that η is the activity factor, C is the capacitance of the circuit, f is the switching frequency, and VDD is the supply voltage. During the Dennard scaling era, the power consumption of the chip was dominated by Pdynamic, which was kept constant by scaling the threshold voltage (VTH) and concurrently the supply voltage (VDD) of the MOSFET. However, beyond 2005, the voltage scaling stalled since further reduction in VTH resulted in an exponential increase in the static leakage current (Istatic) and hence static power consumption. This is a direct consequence of the non-scalability of the subthreshold swing (SS) to below 60 mV decade−1, as determined by the Boltzmann statistics. In fact, in the present Dark-Si era, Ptotal is mostly dominated by Pstatic. Regardless of whether the dynamic or static power dominates, reinstating VDD scaling is the only way to escape the Boltzmann tyranny. This is why in recent years, subthreshold logic circuits, which utilize VDD that is close to or even less than VTH, have received significant attention for ultra-low power applications46,47. New subthreshold logic and memory design methodologies have already been developed and demonstrated on a fast Fourier transform (FFT) processor48, as well as analog VLSI neural systems49.

Note that our proposed Gaussian synapses naturally require operation in the subthreshold regime in order to exploit the exponential feature in the transfer characteristics of the n-type and p-type transistors. Furthermore, as shown in Fig. 1f, Gaussian synapses maintain their characteristic features even when the supply voltage is scaled down to 200 mV or below. This allows the Gaussian synapses to be inherently low power. For the proof-of-concept demonstration of Gaussian synapses, we have used relatively thick back-gate and top-gate oxides with respective thicknesses of 285 nm and 120 nm. This necessitates the use of rather large back-gate and top-gate voltages in the range of −50 V to 50 V and −35 V to 35 V, respectively. The power consumption by our proof-of-concept Gaussian synapses will still be high in spite of scaling the supply voltage and exploiting the subthreshold current conduction in the range of nanoamperes through the MoS2 and BP FETs. This is because power consumption by the Gaussian synapse is simply the area under the ID versus VBG curve. By scaling the thicknesses of both the top and bottom gate dielectrics, it is possible to scale the operating gate voltages and thereby achieve desirable power benefits from the Gaussian synapses. Ultra-thin dielectric materials such as Al2O3 and HfO2, which offer much larger dielectric constants of ≈9 and 25, respectively, are now routinely used as gate oxides for highly scaled Si FinFETs50. It must also be emphasized that the use of atomically thin 2D materials allows geometric miniaturization of Gaussian synapses without any loss of electrostatic integrity, which aids size scaling. We would like to remind the readers that the scalability of FETs is captured through a simple parameter called the screening length (λSC), which determines the decay of the potential (band bending) at the source/drain contact interface into the semiconducting channel.
$$\lambda_{SC} = \sqrt{\frac{\varepsilon_{body}}{\varepsilon_{ox}}\, t_{body}\, t_{ox}}$$

In this expression, tbody and tox are the thicknesses and εbody and εox are the dielectric constants of the semiconducting channel and the insulating oxide, respectively. As discussed by Frank et al.51, to avoid short channel effects the channel length of an FET (LCH) has to be at least three times higher than the screening length (λSC). For atomically thin semiconducting monolayers of 2D materials, tbody is 0.6 nm, which corresponds to of 1.3 nm, whereas for the most advanced FinFET technology the thickness of Si fins can be scaled down to only 5 nm without severely increasing the bandgap due to quantum confinement effects and reducing the mobility due to enhanced surface roughness scattering. Nevertheless, the above discussion clearly articulates how BP/MoS2 2D heterostructure based Gaussian synapses can facilitate effortless hardware realization of PNNs and thereby aid complexity scaling without compromising energy and size scaling.
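The effect of body thickness on the screening length can be sketched with the commonly used expression λSC = sqrt((εbody/εox)·tbody·tox), together with the three-times rule for the minimum channel length quoted above. The dielectric constants and oxide thickness in this example are hypothetical placeholders, so the resulting numbers are illustrative and are not intended to reproduce the values quoted in the text.

```python
import math

def screening_length_nm(t_body_nm, t_ox_nm, eps_body, eps_ox):
    """Screening length in nm from body/oxide thickness and permittivity."""
    return math.sqrt((eps_body / eps_ox) * t_body_nm * t_ox_nm)

def min_channel_length_nm(lambda_sc_nm):
    """Rule of thumb: channel length at least three screening lengths."""
    return 3.0 * lambda_sc_nm

# Hypothetical gate oxide shared by both devices: 2 nm thick, eps_ox = 25.
T_OX_NM, EPS_OX = 2.0, 25.0

# Monolayer 2D channel: 0.6 nm thick, with an assumed eps_body of 4.
lam_2d = screening_length_nm(0.6, T_OX_NM, 4.0, EPS_OX)

# Si fin: 5 nm thick, eps_body = 11.7.
lam_si = screening_length_nm(5.0, T_OX_NM, 11.7, EPS_OX)

l_min_2d = min_channel_length_nm(lam_2d)
l_min_si = min_channel_length_nm(lam_si)
```

Under these assumptions the atomically thin body gives a several-fold shorter screening length than the Si fin, which is the electrostatic argument for size scaling with 2D materials.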

Reconfigurable Gaussian synapse

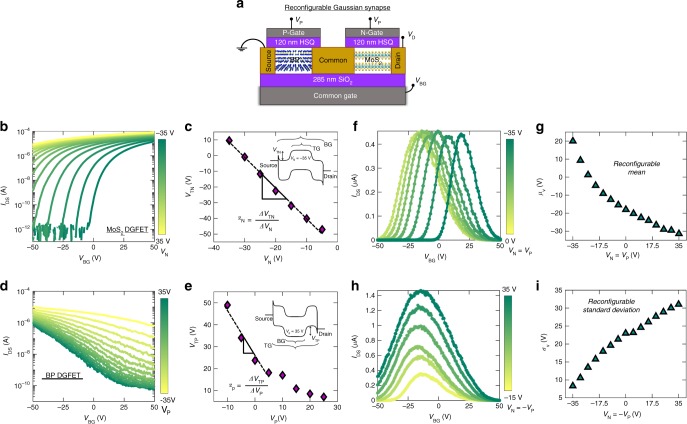

For the hardware implementation of Gaussian synapses, it is highly desirable to demonstrate complete tunability of the device transfer function, i.e., A, μV, and σV of the Gaussian distribution. This could be achieved, seamlessly, in our device structure via threshold engineering through additional gating of either or both MoS2 and BP FETs. Fig. 2a shows the schematic representation of a reconfigurable Gaussian synapse, where both MoS2 and BP FETs are dual-gated (DG). The top-gate stack was fabricated using hydrogen silsesquioxane (HSQ)52,53 as the top-gate dielectric with nickel/gold (Ni/Au) as the top-gate electrode. The fabrication process flow is described in the experimental method section. Fig. 2b shows the experimentally measured back-gated transfer characteristics of the MoS2 FET at VD = 1 V for different top-gate voltages. Clearly, the top-gate voltage controls the back-gate threshold voltage of the MoS2 FET as shown in Fig. 2c. The energy band diagram shown in the inset of Fig. 2c can be used to explain the concept of threshold voltage engineering using gate electrostatics. The top-gate voltage determines the height of the potential barrier for electron injection inside the MoS2 channel, which must be overcome by applying a back-gate voltage to enable current conduction from the source to the drain terminal. Negative top-gate voltages increase the potential barrier for electron injection and hence necessitate larger positive back-gate voltages to achieve a similar level of current conduction. As such, the back-gate threshold voltage becomes more positive (less negative) for large negative top-gate voltages. Note that the slope of the back-gate threshold voltage versus the top-gate voltage in Fig. 2c must be proportional to the ratio of the top-gate capacitance to the back-gate capacitance. This follows directly from the principle of charge balance, which ensures that the channel charge induced by the top-gate voltage must be compensated by the back-gate voltage at threshold. We extracted the value of this slope to be 1.91.
This is consistent with the theoretical prediction of approximately 1.94, given that the top-gate and back-gate dielectric thicknesses are 120 nm and 285 nm, respectively, and the top-gate insulator, HSQ, has a slightly lower dielectric constant (≈3.2) than the back-gate insulator, SiO2 (3.9). Fig. 2d shows the experimentally measured back-gated transfer characteristics of the BP FET at VD = 1 V for different top-gate voltages. As expected, the top-gate voltage controls the back-gate threshold voltage of the BP FET as shown in Fig. 2e. Here, the top-gate voltage influences the height of the potential barrier for hole injection, which is overcome by applying a back-gate voltage, enabling current conduction from the drain to the source terminal. The corresponding energy band diagram is shown in the inset of Fig. 2e. Positive top-gate voltages increase the potential barrier for hole injection and hence necessitate larger negative back-gate voltages to achieve a similar level of current conduction. As such, the back-gate threshold voltage becomes more negative (less positive) for large positive top-gate voltages. We also extracted the slope of the back-gate threshold voltage versus the top-gate voltage in Fig. 2e and, as expected, found a similar value of ≈2.
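The theoretical slope quoted above follows from a parallel-plate estimate of the two gate capacitances. The short check below uses only the thicknesses and dielectric constants stated in the text; the helper name is a hypothetical choice.

```python
EPS0 = 8.854e-12  # vacuum permittivity, F/m

def capacitance_per_area(eps_r, thickness_nm):
    """Parallel-plate capacitance per unit area, in F/m^2."""
    return EPS0 * eps_r / (thickness_nm * 1e-9)

c_top = capacitance_per_area(3.2, 120.0)   # HSQ top-gate dielectric
c_back = capacitance_per_area(3.9, 285.0)  # SiO2 back-gate dielectric

# Charge balance at threshold: a change in top-gate voltage is compensated by
# a back-gate voltage change that is larger by the capacitance ratio.
slope = c_top / c_back
```

The ratio evaluates to a little under 1.95, in line with the approximately 1.94 prediction and the experimentally extracted 1.91.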

Fig. 2.

Reconfigurable Gaussian synapse. a Schematic of a reconfigurable Gaussian synapse involving dual-gated n-type MoS2 FET and p-type BP FET. The top-gate stack was fabricated using hydrogen silsesquioxane (HSQ) as the top-gate dielectric and nickel/gold (Ni/Au) as the top-gate electrode. b Back-gated transfer characteristics of the MoS2 FET at VD = 1 V for different top-gate voltages. c Back-gate threshold voltage of the MoS2 FET as a function of the top-gate voltage, extracted using the constant current method. Inset shows the band diagram elucidating how the top-gate voltage controls the back-gate threshold voltage by electrostatically adjusting the height of the thermal energy barrier for electron injection into the MoS2 channel. d Back-gated transfer characteristics of the BP FET at VD = 1 V for different top-gate voltages. e Back-gate threshold voltage of the BP FET as a function of the top-gate voltage. Inset shows the band diagram elucidating how the top-gate voltage controls the back-gate threshold voltage by electrostatically adjusting the height of the thermal energy barrier for hole injection into the BP channel. As expected, the slopes of the back-gate threshold voltage versus the top-gate voltage for the MoS2 FET and for the BP FET are found to be similar and equal to the ratio of the top-gate capacitance to the back-gate capacitance, which in our case was ≈1.94. f Transfer characteristics of the Gaussian synapse for different top-gate voltages in a configuration that shifts both back-gate threshold voltages in the same direction. This configuration allows us to shift the mean (μV) of the Gaussian synapse without changing the amplitude (A) and the standard deviation (σV). g μV as a function of the top-gate voltage. h Transfer characteristics of the Gaussian synapse for different top-gate voltages in a configuration that shifts the two back-gate threshold voltages in opposite directions. This configuration allows us to configure σV while keeping μV constant. i σV as a function of the top-gate voltage. However, this configuration also results in an increase in the amplitude (A) of the Gaussian synapse as σV increases. This increase can be adjusted by changing the drain voltage (VD) since A is linearly proportional to VD. Nevertheless, by controlling the top-gate voltages and VD, it is possible to adjust the mean, standard deviation, and amplitude of the Gaussian synapse

The dual-gated MoS2 and BP FETs allow complete control of the shape of the Gaussian synapse. Fig. 2f shows the experimentally measured transfer characteristics of the Gaussian synapse for different top-gate voltages applied to the two FETs. This configuration allows us to shift the mean (μV) of the Gaussian synapse without changing the amplitude (A) or the standard deviation (σV). Fig. 2g shows μV plotted as a function of the top-gate voltage. We are able to do this since the back-gate threshold voltages for both MoS2 and BP FETs shift in the same direction in this configuration. Similarly, Fig. 2h shows the experimentally measured transfer characteristics of the Gaussian synapse for a second top-gate configuration. Under this configuration, the back-gate threshold voltages for MoS2 and BP FETs shift in opposite directions. As such, the μV of the Gaussian distribution remains constant, whereas σV keeps increasing. Fig. 2i shows σV plotted as a function of the top-gate voltage. However, this configuration also results in an increase in the amplitude (A) of the Gaussian synapse as σV increases. This increase can be adjusted by changing the drain voltage (VD) since A is linearly proportional to VD. Nevertheless, by controlling the top-gate voltages and VD, it is possible to adjust the mean, standard deviation, and amplitude of the Gaussian synapse.

Scaled Gaussian synapses

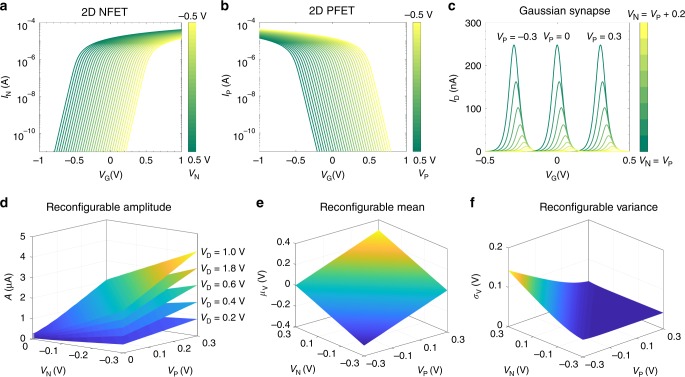

In order to project the performance of scaled Gaussian synapses, we used the Virtual Source (VS) model that was originally developed by Khakifirooz, A. et al. for short channel Si MOSFETs54. The Gaussian transfer characteristics (ID versus VG for different VD) were simulated using Eqs. 4, 5, and 6. In the VS model, both the subthreshold and the above threshold behavior are captured through a single semi-empirical and phenomenological relationship that describes the transition in channel charge density from weak to strong inversion (Eq. 5).

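$$I_D = \frac{V_D}{R_N + R_P}; \quad R_N = \frac{L_N}{W_N \mu_N Q_N}; \quad R_P = \frac{L_P}{W_P \mu_P Q_P} \tag{4}$$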

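$$Q_N = C_G \frac{m k_B T}{q} \ln\left[1 + \exp\left(\frac{q\left(V_G - V_{TN}\right)}{m k_B T}\right)\right]; \quad Q_P = C_G \frac{m k_B T}{q} \ln\left[1 + \exp\left(\frac{q\left(V_{TP} - V_G\right)}{m k_B T}\right)\right] \tag{5}$$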

$$V_{TN} = V_{TN0} - \alpha V_N; \quad V_{TP} = V_{TP0} - \alpha V_P \tag{6}$$

Here, RN and RP are the resistances, LN and LP are the lengths, WN and WP are the widths, μN and μP are the carrier mobility values, and QN and QP are the inversion charges corresponding to the n-type and the p-type 2D-FETs, respectively. The band movement factor m can be assumed to be unity for a fully depleted and ultra-thin body 2D-FET with negligible interface trap capacitance. Finally, VTN and VTP are the threshold voltages of the n-type and p-type 2D FETs determined by their respective top-gate voltages VN and VP. Note that in the subthreshold regime, the inversion charges, i.e., QN and QP, increase exponentially with VG, whereas above threshold, the inversion charge is a linear function of VG, which is seamlessly captured through the VS model. Fig. 3a, b show the simulated transfer characteristics of the individual n-type and p-type 2D FETs, respectively, and Fig. 3c shows the transfer characteristics of the Gaussian synapse based on their heterostructure for different combinations of the top-gate voltages, following the VS model, as described above. Furthermore, Fig. 3d–f, respectively, demonstrate the tunability of A, μV, and σV of the Gaussian synapse via top-gate voltages VN and VP. More details on the design of Gaussian synapses can be found in the supplementary information section.
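The qualitative behavior described above can be reproduced with a short numerical sketch. It assumes a softplus-type charge expression (exponential below threshold, linear above), equal geometry and mobility for both FETs folded into a single prefactor `k`, and a linear threshold shift with top-gate voltage of the form VT = VT0 − α·V(top gate). The zero-bias thresholds, `k`, and the sweep range are hypothetical values, so this is an illustration of the modeling approach and not a reproduction of the simulations in Fig. 3.

```python
import math

PHI_T = 0.0259  # thermal voltage k_B*T/q near room temperature, in volts

def softplus(x):
    """Numerically stable ln(1 + exp(x))."""
    return x if x > 30.0 else math.log1p(math.exp(x))

def charge(overdrive, c_g=1.0, m=1.0):
    """Channel charge: exponential in subthreshold, linear above threshold."""
    return c_g * m * PHI_T * softplus(overdrive / (m * PHI_T))

def synapse_current(v_g, v_n=0.0, v_p=0.0, v_d=1.0,
                    v_tn0=-0.2, v_tp0=0.2, alpha=1.0, k=1.0):
    """Series n-type/p-type FET pair; k lumps W*mu/L (assumed equal for both)."""
    v_tn = v_tn0 - alpha * v_n  # assumed top-gate threshold shift, n-type
    v_tp = v_tp0 - alpha * v_p  # assumed top-gate threshold shift, p-type
    g_n = k * charge(v_g - v_tn)  # conductance of the n-type FET
    g_p = k * charge(v_tp - v_g)  # conductance of the p-type FET
    if g_n + g_p == 0.0:
        return 0.0
    return v_d * g_n * g_p / (g_n + g_p)  # equals V_D / (R_N + R_P)

def gaussian_parameters(v_n, v_p):
    """Amplitude, mean, and standard deviation of the simulated characteristic."""
    grid = [-1.5 + 0.005 * i for i in range(601)]
    cur = [synapse_current(v, v_n, v_p) for v in grid]
    total = sum(cur)
    mean = sum(v * c for v, c in zip(grid, cur)) / total
    var = sum((v - mean) ** 2 * c for v, c in zip(grid, cur)) / total
    return max(cur), mean, math.sqrt(var)

a0, mu0, sd0 = gaussian_parameters(0.0, 0.0)    # reference
a1, mu1, sd1 = gaussian_parameters(-0.1, -0.1)  # thresholds shift the same way
a2, mu2, sd2 = gaussian_parameters(0.1, -0.1)   # thresholds shift opposite ways
```

In this toy model, shifting both thresholds in the same direction moves the mean while leaving amplitude and width unchanged, whereas shifting them in opposite directions widens the characteristic and raises its amplitude, consistent with the tunability discussed in the text.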

Fig. 3.

Scaled Gaussian synapses. Simulated back-gated transfer characteristics of (a) n-type and (b) p-type 2D FETs for different top-gate voltages VN and VP, respectively, using the Virtual Source (VS) model. In the VS model, both the subthreshold and the above threshold behavior are captured through a single semi-empirical and phenomenological relationship that describes the transition in channel charge density from weak to strong inversion. c Transfer characteristics, (d) amplitude, (e) mean, and (f) standard deviation of the Gaussian synapse obtained via heterogeneous integration of the n-type and the p-type 2D FETs for different combinations of VN and VP. The parameters used for the simulation include m = 1, α = 1, and VD = 1 V

Brainwave classification

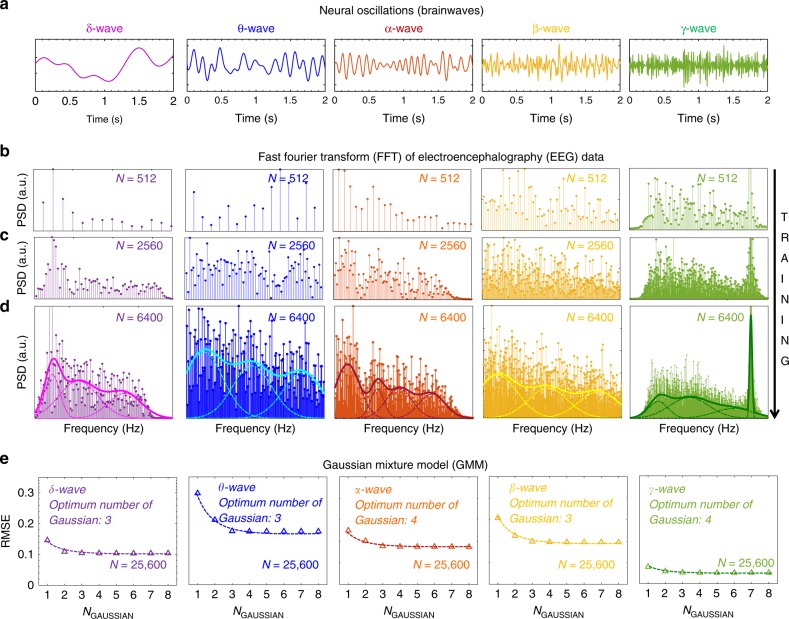

Next, we show simulation results suggesting that PNNs based on Gaussian synapses can be used to classify various neural oscillations, also known as brainwaves, which are fundamental to human awareness, cognition, emotions, and actions. These rhythmic and repetitive oscillations, which originate from the synchronous and complex firing of neural ensembles, are observed throughout the central nervous system and are essential in controlling the neuro-physiological health of any individual. As shown in Fig. 4a, brainwaves are divided into five frequency bands based on the present understanding and interpretation of their functions. Lower frequency (0.5–3.5 Hz) and higher amplitude delta waves (δ) are generated during deepest meditation and dreamless sleep, suspending all external awareness and facilitating healing and regeneration. Disruptions in δ-wave activity can lead to neurological disorders such as dementia, schizophrenia, parasomnia, epilepsy, and Parkinson’s disease. Low frequency (4–8 Hz) and high amplitude theta waves (θ) originate during sleep or deep meditation, with the senses withdrawn from the external world and focused within. Normal firing of θ-waves enables learning, memory, intuition, and introspection, while excessive activity can lead to attention-deficit/hyperactivity disorder (ADHD). Mid-frequency (8–14 Hz) and mid-amplitude alpha waves (α) represent the resting state of the brain and facilitate mind/body coordination, mental peace, alertness, and learning. High frequency (16–32 Hz) and low amplitude beta waves (β) dominate the wakeful state of consciousness, direct our concentration towards cognitive tasks such as problem solving and decision-making, and at the same time consume a tremendous amount of energy. In a clinical context, β-waves can be used as biomarkers, as they indicate the release of gamma aminobutyric acid, the principal inhibitory neurotransmitter in the mammalian nervous system.
Finally, gamma waves (γ) are the fastest (32–64 Hz) and quietest brainwaves. These waves were dismissed as neural noise until recently, when researchers discovered their connection to greater consciousness and spiritual activity culminating in the state of universal love and altruism55. The above discussion shows the importance of brainwaves in regulating our daily experience. Instabilities in brain rhythm can be catastrophic, leading to insomnia, narcolepsy, panic attacks, obsessive-compulsive disorder, agitated depression, hyper-vigilance, and impulsive behaviors. Early diagnosis of abnormal brainwave activity through neural networks can help prevent chronic neurological diseases as well as mental and emotional disorders.

Fig. 4.

Recognition of Brainwaves using Gaussian Mixture Model (GMM). a Brainwaves are divided into five frequency bands. 0.5–3.5 Hz: Delta waves (δ), 4–8 Hz: Theta waves (θ), 8–14 Hz: Alpha waves (α), 16–32 Hz: Beta waves (β) and 32–64 Hz: Gamma waves (γ). Normalized power spectral density (PSD) as a function of frequency for each type of brainwave, extracted using the Fast Fourier Transform (FFT) of the time domain Electroencephalography (EEG) data (sequential montage) with increasing sampling times that correspond to sample sizes of (b) N = 512, (c) N = 2560, and (d) N = 6400. As the training set becomes more and more exhaustive, the discrete frequency responses evolve into continuous spectra. The highly nonlinear functional dependence of the PSDs on frequency for each type of brainwave can be classified using GMM, which is represented as the weighted sum of a finite number of scaled (different variance) and shifted (different mean) normal distributions. e Root mean square error (RMSE) as a function of K, i.e., the number of Gaussians used in the GMMs for each type of brainwave. Clearly, a very limited number of Gaussian synapses are necessary to capture the non-linear decision boundaries.

Figure 4b, c, and d show the frequency pattern of the normalized power spectral density (PSD) for each type of brainwave, extracted from the Fast Fourier Transform (FFT) of the time domain Electroencephalography (EEG) data (sequential montage) with increasing sampling times that correspond to sample sizes of N = 512, 2560, and 6400, respectively. Clearly, as the training set becomes more and more exhaustive, the discrete frequency responses corresponding to each type of brainwave evolve into continuous spectra that show complex patterns. Furthermore, the system is highly nonlinear, with the functional dependence of the PSDs on frequency being rather complicated for each type of brainwave. As such, classification of brainwave patterns using conventional ANNs can be challenging56–58. In addition, ANNs require optimum training algorithms and extensive feature extraction and preprocessing of the training sample in order to achieve reasonable accuracy. In contrast, as demonstrated in Fig. 4d, the PNN adopts single pass learning by defining the class PDF for each of the brainwave patterns in the frequency domain using a Gaussian mixture model (GMM)59,60. A GMM is represented as the weighted sum of a finite number of scaled (different variance) and shifted (different mean) normal distributions as described by Eq. 2.

$$p(x) = \sum_{i=1}^{K} \phi_i \, \mathcal{N}\left(x \mid \mu_i, \sigma_i^2\right) \tag{7}$$

A GMM with K components is parameterized by two types of values: the component weights ($\phi_i$), and the component means ($\mu_i$) and variances ($\sigma_i^2$), with the constraint that $\sum_{i=1}^{K} \phi_i = 1$, so that the total probability distribution normalizes to unity. For each type of brainwave pattern, the GMM parameters for the K components were estimated from the training data corresponding to N = 25,600, using the non-linear least squares method. Figure 4e shows root mean square errors (RMSEs) calculated as a function of K, i.e., the number of Gaussian curves used in the corresponding GMMs. Clearly, a very limited number of Gaussian functions are necessary to capture the non-linear decision boundary for each of the brainwaves. This greatly relaxes the energy and size requirements for PNNs based on Gaussian synapses.
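As an illustration of the mixture model described above, the following minimal Python sketch evaluates a K-component GMM on a frequency axis and computes an RMSE between two curves. It is not the authors' fitting code: the function names (`gaussian`, `gmm_pdf`, `rmse`) and all parameter values are hypothetical, chosen only to show the weighted-sum form and the normalization constraint on the weights.

```python
import math

def gaussian(x, mean, variance):
    """Normal density with the given mean and variance, evaluated at x."""
    return math.exp(-(x - mean) ** 2 / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)

def gmm_pdf(x, weights, means, variances):
    """Weighted sum of K normal densities; the weights must sum to one."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("component weights must sum to 1")
    return sum(w * gaussian(x, m, v) for w, m, v in zip(weights, means, variances))

def rmse(target, model):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((t - m) ** 2 for t, m in zip(target, model)) / len(target))

# Hypothetical K = 2 mixture on a 0-64 Hz axis (illustrative values, not fitted to EEG data).
weights, means, variances = [0.7, 0.3], [10.0, 20.0], [1.0, 4.0]
step = 0.1
freqs = [step * i for i in range(641)]                      # 0 Hz to 64 Hz
density = [gmm_pdf(f, weights, means, variances) for f in freqs]

# Riemann-sum check that the mixture integrates to (approximately) one.
area = sum(density) * step

# A single Gaussian (K = 1) used as a cruder model of the same curve.
single = [gaussian(f, 10.0, 1.0) for f in freqs]
error_k1 = rmse(density, single)
error_k2 = rmse(density, density)                           # exact model, zero error
```

In an actual fit, the weights, means, and variances would be the free parameters adjusted by the least squares routine, and the RMSE would be recorded for each K, as in Fig. 4e.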

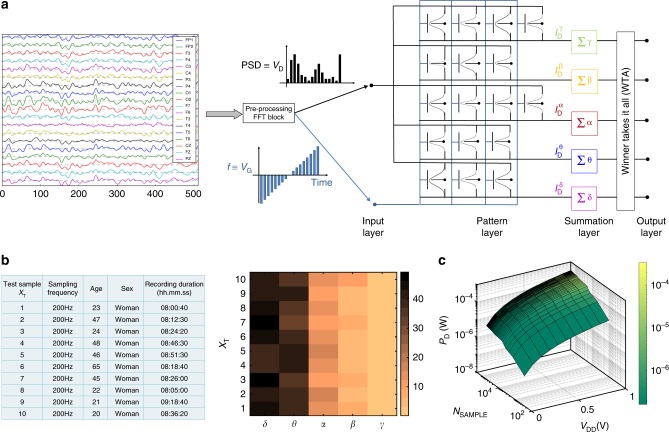

Finally, Fig. 5a shows simulation results evaluating the PNN architecture for the detection of new brainwave patterns. The PNN consists of 4 layers: input, pattern, summation, and output. The amplitude of the new FFT data is relayed from the input layer to the pattern layer as the drain voltage of the Gaussian synapses, whereas the frequency range (0–64 Hz) is mapped to the back-gate voltage (VG) range. The summation layer integrates the current over the full swing of VG from the individual pattern blocks and communicates with the winner-take-all (WTA) circuit that allows the output layer to recognize the brainwave patterns. We applied our PNN architecture to 10 whole-night polysomnographic recordings, obtained from 10 healthy subjects in a sleep laboratory using a digital 32-channel polygraph (details can be found in the Methods section). The percentages of the different brainwave components recognized by the PNN are shown as a color map in Fig. 5b. As expected, the PNN recognizes the dominant presence of delta and theta waves in the sleep samples. Furthermore, Fig. 5c shows the total power consumption of the PNN (details can be found in the Supplementary Information) as a function of the supply voltage (VDD) and sample volume (N). As expected, the power dissipation scales with N and VDD. Interestingly, even for a large sample volume of N = 2 × 10⁵, corresponding to 8 h of EEG data, the power consumption of the proposed PNN architecture was found to be as frugal as 3 μW at a low VDD, increasing to only 350 μW at a higher VDD. A direct comparison of power dissipation with digital CMOS would be premature at this time, especially since the peripheral circuits required for the proposed PNN architecture will add power dissipation overhead. Nevertheless, these preliminary results show that PNN architectures based on Gaussian synapses can offer extreme energy efficiency.
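The four-layer flow described above (input, pattern, summation, winner-take-all output) can be sketched in software as follows. This is only a behavioral illustration under stated assumptions, not a model of the device circuit: each class PDF is reduced to a single hypothetical Gaussian placed inside the corresponding band, the synapse response is assumed to be the input amplitude multiplied by that Gaussian, and the names `CLASS_PDFS` and `classify` are invented for the example.

```python
import math

# Hypothetical single-Gaussian class PDFs (mean in Hz, standard deviation in Hz).
# The values are illustrative placements inside each band, not fitted parameters.
CLASS_PDFS = {
    "delta": (2.0, 0.75),
    "theta": (6.0, 1.0),
    "alpha": (11.0, 1.5),
    "beta": (24.0, 4.0),
    "gamma": (48.0, 8.0),
}

def gaussian(x, mean, sigma):
    """Normal density with the given mean and standard deviation."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def classify(freqs, amplitudes):
    """Return the winning class and the summed response of every class."""
    scores = {}
    for name, (mean, sigma) in CLASS_PDFS.items():
        # Pattern layer: one Gaussian response per frequency point, scaled by the
        # input amplitude (assumed here to act as a simple multiplicative factor).
        # Summation layer: add the responses over the whole frequency sweep.
        scores[name] = sum(a * gaussian(f, mean, sigma) for f, a in zip(freqs, amplitudes))
    # Output layer: winner-take-all selection of the largest summed response.
    winner = max(scores, key=scores.get)
    return winner, scores

freqs = [0.5 * i for i in range(129)]                    # 0 Hz to 64 Hz in 0.5 Hz steps
alpha_like = [gaussian(f, 10.0, 1.0) for f in freqs]     # synthetic spectrum peaked at 10 Hz
delta_like = [gaussian(f, 2.0, 0.5) for f in freqs]      # synthetic spectrum peaked at 2 Hz

winner_alpha, _ = classify(freqs, alpha_like)
winner_delta, _ = classify(freqs, delta_like)
```

In the hardware described in the text, the sum over frequency corresponds to integrating the synapse current over the full back-gate voltage swing; here it is a plain discrete sum.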

Fig. 5.

PNN Architecture for Brainwave Recognition. a The PNN consists of 4 layers: input, pattern, summation, and output. The amplitude of the FFT data is relayed from the input layer to the pattern layer as the drain voltage of the Gaussian synapses, whereas the frequency range (0–64 Hz) is mapped to the back-gate voltage (VG) range. The summation layer integrates the current over the full swing of VG from the individual pattern blocks and communicates with the winner-take-all (WTA) circuit that allows the output layer to recognize the brainwave patterns. b Implementation of PNN Architecture: 10 whole-night polysomnographic recordings and the corresponding outcome of the PNN architecture shown using a color map. The PNN recognizes the dominant presence of delta and theta waves in all the sleep samples. c Power Consumption by PNN Architecture: The total power consumption by the PNN as a function of the supply voltage (VDD) and sample volume (NSAMPLE). As expected, the power dissipation scales with NSAMPLE and VDD.

Discussion

In conclusion, we have demonstrated reconfigurable Gaussian synapses based on the heterostructure of atomically thin 2D layered semiconductors as a new class of analog and probabilistic computational primitives that can reinstate both energy and size scaling aspects of computation. Furthermore, we elucidated how Gaussian synapses enable direct hardware realization of PNNs, which offer simple and effective solutions to a wide range of pattern classification problems and thereby resurrect complexity scaling. Finally, we show simulation results suggesting that PNN architecture based on Gaussian synapses is capable of recognizing complex neural oscillations or brainwave patterns from large volumes of EEG data with extreme energy efficiency. We believe that these findings will foster the much-needed interest in hardware implementation of PNNs and ultimately aid high performance and low power computing infrastructure.

Methods

Device fabrication and measurements

MoS2 and BP flakes were micromechanically exfoliated on 285 nm thermally grown SiO2 substrates with highly doped Si as the back-gate electrode. The thicknesses of the MoS2 and BP flakes were in the range of 3–20 nm. MoS2 is a 2D layered material with the lattice parameters a = 3.15 Å, b = 3.15 Å, c = 12.3 Å, α = 90°, β = 90°, and γ = 120°. The layered nature due to van der Waals (vdW) bonding results in a higher value for c. This enables mechanical exfoliation of the material to obtain ultra-thin layers of MoS2. BP exhibits a puckered honeycomb lattice structure, with phosphorus atoms lying on two parallel planes. Its lattice parameters are a = 3.31 Å, b = 10.47 Å, c = 4.37 Å, α = 90°, β = 90°, and γ = 90°. The source/drain contacts were defined using electron-beam lithography (Vistec EBPG5200). Ni (40 nm) followed by Au (30 nm) was deposited using electron-beam (e-beam) evaporation for the contacts. Both devices were fabricated with a channel length of 1 µm. The widths of the MoS2 and BP devices were 0.78 µm and 2 µm, respectively. The top-gated devices were fabricated with hydrogen silsesquioxane (HSQ) as the top-gate dielectric. The top-gate dielectric was deposited by spin coating 6% HSQ in methyl isobutyl ketone (MIBK) (Dow Corning XR-1541-006) at 4000 rpm for 45 s and baked at 80 °C for 4 min. The HSQ was patterned using an e-beam dose of 2000 µC cm⁻² and developed at room temperature using 25% tetramethylammonium hydroxide (TMAH) for 30 s, followed by a 90 s rinse in deionized (DI) water. Next, the HSQ was cured in air at 180 °C and then 250 °C for 2 min and 3 min, respectively. The thickness of the HSQ layer used as the top-gate dielectric was 120 nm. Top-gate electrodes with Ni (40 nm) followed by Au (30 nm) were patterned with the same procedure as the source and drain contacts.
Given the instability of BP, we took special care to minimize its exposure to air while fabricating BP devices by storing the material in vacuum chambers between fabrication steps. In addition, all three lithography steps involved in the device fabrication were completed within a period of 3 days. The electrical characterization was performed at room temperature in high vacuum (≈10⁻⁶ Torr) in a Lake Shore CRX-VF probe station using a Keysight B1500A parameter analyzer.

EEG data

The data used for our study were obtained from the DREAMS project and were acquired in a sleep laboratory of a Belgian hospital using a digital 32-channel polygraph (Brainnet™ System of MEDATEC, Brussels, Belgium). They consist of whole-night polysomnographic recordings from healthy subjects. At least two EOG channels (P8-A1, P18-A1), three EEG channels (CZ-A1 or C3-A1, FP1-A1, and O1-A1), and one submental EMG channel were recorded. The recordings were stored in the standard European Data Format (EDF). The sampling frequency was 200 Hz. These recordings were specifically selected for their clarity (i.e., they contain few artifacts) and come from persons free of any medication who were volunteers in other research projects conducted in the sleep lab.
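For recordings sampled at 200 Hz, a normalized power spectrum of the kind plotted in Fig. 4 and the share of power in each brainwave band could be computed along the following lines. This is a minimal sketch on a synthetic signal, not the analysis code used in the study: the peak normalization, the half-open treatment of the band edges, and the names `normalized_psd` and `band_fractions` are assumptions made for illustration, and a direct DFT is used in place of an FFT library so that the example is self-contained.

```python
import cmath
import math

FS = 200.0  # sampling frequency in Hz, as stated for the recordings

# Band edges in Hz as quoted in the text; treated here as half-open intervals [lo, hi).
BANDS = {
    "delta": (0.5, 3.5),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 14.0),
    "beta": (16.0, 32.0),
    "gamma": (32.0, 64.0),
}

def normalized_psd(samples, fs=FS):
    """One-sided power spectrum via a direct DFT, scaled so that its peak equals 1."""
    n = len(samples)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
        freqs.append(k * fs / n)          # frequency of bin k; resolution is fs / n
        power.append(abs(coeff) ** 2)
    peak = max(power)
    return freqs, [p / peak for p in power]

def band_fractions(freqs, power):
    """Fraction of the total in-band power that falls in each brainwave band."""
    totals = {
        name: sum(p for f, p in zip(freqs, power) if lo <= f < hi)
        for name, (lo, hi) in BANDS.items()
    }
    grand_total = sum(totals.values())
    return {name: total / grand_total for name, total in totals.items()}

# Synthetic 2 s test signal: a 6 Hz tone (theta band) plus a weaker 20 Hz tone (beta band).
n_samples = 400
signal = [
    math.sin(2 * math.pi * 6 * i / FS) + 0.3 * math.sin(2 * math.pi * 20 * i / FS)
    for i in range(n_samples)
]

freqs, psd = normalized_psd(signal)
fractions = band_fractions(freqs, psd)
dominant = max(fractions, key=fractions.get)
```

With N samples at 200 Hz the frequency resolution is 200/N Hz, which is why longer sampling times give the denser spectra shown in Fig. 4b–d.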

Acknowledgements

The authors would like to acknowledge the contribution of Joseph R Nasr, Harikrishnan Ravichandran, Harikrishnan Jayachandrakurup, and Sarbashis Das for their help in device fabrication. This work was partially supported through Grant Number FA9550-17-1-0018 from the Air Force Office of Scientific Research (AFOSR) through the Young Investigator Program.

Author contributions

S.D. conceived the idea, designed the experiments and wrote the paper. A.S., S.S.R., and A.P. performed the experiments. All authors analyzed the data, discussed the results, agreed on their implications, and contributed to the preparation of the paper.

Data availability

The data that support the plots within this paper and other findings of this study are available from the corresponding authors upon reasonable request.

Code availability

The codes used for data analysis are available from the corresponding authors upon request.

Competing interests

The authors declare no competing interests.

Footnotes

Peer Review Information Nature Communications thanks Qing Wan, Su-Ting Han and other, anonymous, reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information accompanies this paper at 10.1038/s41467-019-12035-6.

References

- 1. Frank DJ, et al. Device scaling limits of Si MOSFETs and their application dependencies. Proc. IEEE. 2001;89:259–288. doi: 10.1109/5.915374.

- 2. Thompson SE, Parthasarathy S. Moore's law: the future of Si microelectronics. Mater. Today. 2006;9:20–25. doi: 10.1016/S1369-7021(06)71539-5.

- 3. Sze SM, Sze S. Modern Semiconductor Device Physics. New York: Wiley; 1998.

- 4. Ma, X. & Arce, G. R. Computational Lithography. Vol. 77 (John Wiley & Sons, 2011).

- 5. Myers, G. J. Advances in Computer Architecture. (John Wiley & Sons, Inc., 1982).

- 6. Dennard RH, Gaensslen FH, Rideout VL, Bassous E, LeBlanc AR. Design of ion-implanted MOSFET's with very small physical dimensions. IEEE J. Solid-St Circ. 1974;9:256–268. doi: 10.1109/JSSC.1974.1050511.

- 7. Meindl JD, Chen Q, Davis JA. Limits on silicon nanoelectronics for terascale integration. Science. 2001;293:2044–2049. doi: 10.1126/science.293.5537.2044.

- 8. Yu, B. et al. in Electron Devices Meeting. IEDM'02 International. 251–254 (IEEE, 2002).

- 9. Esmaeilzadeh, H., Blem, E., Amant, R. S., Sankaralingam, K. & Burger, D. in Computer Architecture (ISCA) 38th Annual International Symposium on ISCA. 365–376 (IEEE, 2011).

- 10. Merolla PA, et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science. 2014;345:668–673. doi: 10.1126/science.1254642.

- 11. Benini L, Micheli Gd. System-level power optimization: techniques and tools. ACM Trans. Des. Autom. Electron. Syst. 2000;5:115–192. doi: 10.1145/335043.335044.

- 12. Pickett MD, Medeiros-Ribeiro G, Williams RS. A scalable neuristor built with Mott memristors. Nat. Mater. 2013;12:114. doi: 10.1038/nmat3510.

- 13. Prezioso M, et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature. 2015;521:61. doi: 10.1038/nature14441.

- 14. Wang Z, et al. Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nat. Mater. 2017;16:101–108. doi: 10.1038/nmat4756.

- 15. Baldi P, Meir R. Computing with arrays of coupled oscillators: an application to preattentive texture discrimination. Neural Comput. 1990;2:458–471. doi: 10.1162/neco.1990.2.4.458.

- 16. Arnold AJ, et al. Mimicking neurotransmitter release in chemical synapses via hysteresis engineering in MoS2 Transistors. ACS Nano. 2017;11:3110–3118. doi: 10.1021/acsnano.7b00113.

- 17. Tian H, et al. Anisotropic black phosphorus synaptic device for neuromorphic applications. Adv. Mater. 2016;28:4991–4997. doi: 10.1002/adma.201600166.

- 18. Jiang J, et al. 2D MoS2 neuromorphic devices for brain-like computational systems. Small. 2017;13:1700933. doi: 10.1002/smll.201700933.

- 19. Dreiseitl S, Ohno-Machado L. Logistic regression and artificial neural network classification models: a methodology review. J. Biomed. Inform. 2002;35:352–359. doi: 10.1016/S1532-0464(03)00034-0.

- 20. Hagan MT, Menhaj MB. Training feedforward networks with the Marquardt algorithm. IEEE Trans. Neural Netw. 1994;5:989–993. doi: 10.1109/72.329697.

- 21. Bergman, K. et al. Exascale computing study: technology challenges in achieving exascale systems. Defense Advanced Research Projects Agency Information Processing Techniques Office (DARPA IPTO), Technical Report 15 (2008).

- 22. Reed DA, Dongarra J. Exascale computing and big data. Commun. ACM. 2015;58:56–68. doi: 10.1145/2699414.

- 23. Specht DF. Probabilistic neural networks. Neural Netw. 1990;3:109–118. doi: 10.1016/0893-6080(90)90049-Q.

- 24. Besag, J., Green, P., Higdon, D. & Mengersen, K. Bayesian computation and stochastic systems. Stat. Sci. 10, 3–41 (1995).

- 25. Schløler H, Hartmann U. Mapping neural network derived from the Parzen window estimator. Neural Netw. 1992;5:903–909. doi: 10.1016/S0893-6080(05)80086-3.

- 26. Delbruck, T. Bump circuits for computing similarity and dissimilarity of analog voltages. IEEE IJCNN, A475–A479 (1991).

- 27. Choi J, Sheu BJ, Chang JCF. A Gaussian synapse circuit for analog vlsi neural networks. 1994 IEEE Int. Symp. Circuits Syst. 1994;6:F483–F486.

- 28. Madrenas J, Verleysen M, Thissen P, Voz JL. A CMOS analog circuit for Gaussian functions. IEEE Trans. Circuits-Ii. 1996;43:70–74.

- 29. Lin SY, Huang RJ, Chiueh TD. A tunable Gaussian/square function computation circuit for analog neural networks. IEEE Trans. Circuits Syst. Ii-Analog Digit. Signal Process. 1998;45:441–446. doi: 10.1109/82.664259.

- 30. Srivastava, R., Singh, U. & Gupta, M. Analog circuits for Gaussian function with improved performance. In Analog circuits for Gaussian function with improved performance 934–938 (IEEE, 2011).

- 31. Kang K, Shibata T. An on-chip-trainable Gaussian-Kernel analog support vector machine. IEEE Trans. Circuits-I. 2010;57:1513–1524. doi: 10.1109/TCSI.2009.2034234.

- 32. Wu, N. et al. A real-time and energy-efficient implementation of difference-of-Gaussian with flexible thin-film transistors. IEEE Comp. Soc. Ann. 455–460 10.1109/Isvlsi.2016.87 (2016).

- 33. Das S, Chen HY, Penumatcha AV, Appenzeller J. High performance multilayer MoS2 transistors with scandium contacts. Nano Lett. 2013;13:100–105. doi: 10.1021/nl303583v.

- 34. Das S, Demarteau M, Roelofs A. Ambipolar phosphorene field effect transistor. ACS Nano. 2014;8:11730–11738. doi: 10.1021/nn505868h.

- 35. Das S, Robinson JA, Dubey M, Terrones H, Terrones M. Beyond graphene: progress in novel two-dimensional materials and van der Waals Solids. Annu Rev. Mater. Res. 2015;45:1–27. doi: 10.1146/annurev-matsci-070214-021034.

- 36. Schulman, D. S., Arnold, A. J. & Das, S. Contact engineering for 2D materials and devices. Chem. Soc. Rev. 3037–3058 (2018).

- 37. Andrzejewski D, et al. Improved luminescence properties of MoS2 monolayers grown via MOCVD: role of pre-treatment and growth parameters. Nanotechnology. 2018;29:295704. doi: 10.1088/1361-6528/aabbb9.

- 38. Nguyen TK, et al. High photoresponse in conformally grown monolayer MoS2 on a rugged substrate. ACS Appl. Mater. Interfaces. 2018;10:40824–40830. doi: 10.1021/acsami.8b15673.

- 39. Smithe KKH, Suryavanshi SV, Munoz Rojo M, Tedjarati AD, Pop E. Low variability in synthetic monolayer MoS2 devices. ACS Nano. 2017;11:8456–8463. doi: 10.1021/acsnano.7b04100.

- 40. Zhang J, et al. Scalable growth of high-quality polycrystalline MoS2 monolayers on SiO2 with tunable grain sizes. ACS Nano. 2014;8:6024–6030. doi: 10.1021/nn5020819.

- 41. Smith JB, Hagaman D, Ji HF. Growth of 2D black phosphorus film from chemical vapor deposition. Nanotechnology. 2016;27:215602. doi: 10.1088/0957-4484/27/21/215602.

- 42. Das, S. & Appenzeller, J. WSe2 field effect transistors with enhanced ambipolar characteristics. Appl. Phys. Lett. 103, 103501 (2013).

- 43. Zhang Xiaotian, Zhang Fu, Wang Yuanxi, Schulman Daniel S., Zhang Tianyi, Bansal Anushka, Alem Nasim, Das Saptarshi, Crespi Vincent H., Terrones Mauricio, Redwing Joan M. Defect-Controlled Nucleation and Orientation of WSe2 on hBN: A Route to Single-Crystal Epitaxial Monolayers. ACS Nano. 2019;13(3):3341–3352. doi: 10.1021/acsnano.8b09230.

- 44. Chuang S, et al. MoS2 p-type transistors and diodes enabled by high work function MoOx contacts. Nano Lett. 2014;14:1337–1342. doi: 10.1021/nl4043505.

- 45. Perello DJ, Chae SH, Song S, Lee YH. High-performance n-type black phosphorus transistors with type control via thickness and contact-metal engineering. Nat. Commun. 2015;6:7809. doi: 10.1038/ncomms8809.

- 46. Wang, A., Calhoun, B. H. & Chandrakasan, A. P. in Sub-threshold Design for Ultra Low-Power Systems (Series on Integrated Circuits and Systems). (Springer-Verlag, 2006).

- 47. Hanson S, Seok M, Sylvester D, Blaauw D. Nanometer device scaling in subthreshold logic and SRAM. IEEE Trans. Electron Devices. 2008;55:175–185. doi: 10.1109/TED.2007.911033.

- 48. Wang A, Chandrakasan A. A 180-mV subthreshold FFT processor using a minimum energy design methodology. IEEE J. Solid-St. Circ. 2005;40:310–319. doi: 10.1109/JSSC.2004.837945.

- 49. Andreou AG, et al. Current-mode subthreshold MOS circuits for analog VLSI neural systems. IEEE Trans. Neural Netw. 1991;2:205–213. doi: 10.1109/72.80331.

- 50. Wallace RM, Wilk GD. High-κ dielectric materials for microelectronics. Crit. Rev. Solid State Mater. Sci. 2003;28:231–285. doi: 10.1080/714037708.

- 51. Frank DJ, Taur Y, Wong H-SP. Generalized scale length for two-dimensional effects in MOSFETs. Electron Device Lett. IEEE. 1998;19:385–387. doi: 10.1109/55.720194.

- 52. Nasr JR, Das S. Seamless fabrication and threshold engineering in monolayer MoS2 dual-gated transistors via hydrogen silsesquioxane. Adv. Electron. Mater. 2019;5:1800888. doi: 10.1002/aelm.201800888.

- 53. Nasr JR, Schulman DS, Sebastian A, Horn MW, Das S. Mobility deception in nanoscale transistors: an untold contact story. Adv. Mater. 2019;31:1806020. doi: 10.1002/adma.201806020.

- 54. Khakifirooz A, Nayfeh OM, Antoniadis D. A simple semiempirical short-channel MOSFET current–voltage model continuous across all regions of operation and employing only physical parameters. IEEE Trans. Electron Devices. 2009;56:1674–1680. doi: 10.1109/TED.2009.2024022.

- 55. Rubik B. Neurofeedback-enhanced gamma brainwaves from the prefrontal cortical region of meditators and non-meditators and associated subjective experiences. J. Altern. Complement. Med. 2011;17:109–115. doi: 10.1089/acm.2009.0191.

- 56. Nakayama, K., Kaneda, Y. & Hirano, A. A Brain Computer Interface Based on FFT and multilayer neural network - feature extraction and generalization. International Symposium on ISPACS'07 826–829 (IEEE, 2007).

- 57. Nakayama, K. & Inagaki, K. A Brain Computer Interface Based on Neural Network with Efficient Pre-Processing. International Symposium on ISPACS'06 673–676 (IEEE, 2006).

- 58. Subasi A, Ercelebi E. Classification of EEG signals using neural network and logistic regression. Comput. Methods Prog. Biomed. 2005;78:87–99. doi: 10.1016/j.cmpb.2004.10.009.

- 59. Zivkovic, Z. An Improved Moving Object Detection Algorithm Based on Gaussian Mixture Models. Proceedings of the 17th International Conference on Pattern Recognition (ICPR) 28–31 (IEEE, 2004).

- 60. Reynolds, D. Gaussian mixture models. Ency. Biometrics 827–832 (2015).
