Abstract

It is well known that the abnormal expression of long non-coding RNAs (lncRNAs) is closely related to the physiological and pathological processes of diseases. Therefore, inferring potential lncRNA–disease associations helps in understanding the molecular pathogenesis of diseases. Most previous methods have concentrated on constructing shallow learning models to predict lncRNA–disease associations, but they fail to deeply integrate heterogeneous multi-source data and to learn low-dimensional feature representations from these data. We propose a method based on a convolutional neural network with an attention mechanism and a convolutional autoencoder for predicting candidate disease-related lncRNAs, which we refer to as CNNDLP. CNNDLP integrates multiple kinds of data from heterogeneous sources, including the associations, interactions, and similarities related to lncRNAs, diseases, and miRNAs. Two different embedding layers are established by combining diverse biological premises about when lncRNAs are likely to be associated with diseases. We construct a novel prediction model based on the convolutional neural network with attention mechanism and the convolutional autoencoder to learn the attention representation and the low-dimensional network representation of lncRNA–disease pairs from the embedding layers. Different adjacent edges among the lncRNA, miRNA, and disease nodes contribute differently to association prediction. Hence, an attention mechanism at the adjacent edge level is established, and the left side of the model learns the attention representation of a lncRNA–disease pair. A new type of lncRNA similarity and a new type of disease similarity are calculated by incorporating the topological structures of multiple bipartite networks.
The low-dimensional network representation of the lncRNA–disease pairs is further learned by the autoencoder-based convolutional neural network on the right side of the model. Cross-validation results confirm that CNNDLP outperforms state-of-the-art methods. Case studies on stomach cancer, breast cancer, and prostate cancer further demonstrate the ability of CNNDLP to discover potential disease-related lncRNAs.

Keywords: lncRNA-disease association prediction, feature learning based on convolutional autoencoder, convolutional neural networks, attention at adjacent edge level, similarity calculation based on multiple bipartite networks

1. Introduction

For many years, genetic information was thought to be stored only in protein-coding genes, while non-coding RNAs (ncRNAs) were regarded as mere byproducts of transcription [1,2]. However, accumulating evidence indicates that ncRNAs, especially long non-coding RNAs (lncRNAs) longer than 200 nucleotides, play important roles in various biological processes [3,4].

Previous methods for predicting lncRNA-disease associations can be classified into three categories. Methods in the first category use machine learning to identify candidate associations. Chen et al. developed a semi-supervised learning model, LRLSLDA, which uses Laplacian regularized least squares to identify possible associations between lncRNAs and diseases [5]. A model based on a Bayesian classifier was developed for predicting candidate disease lncRNAs [6]. However, most methods in this category perform poorly for lncRNAs without any known associated diseases.

The second category of methods makes use of the biological premise that lncRNAs with similar functions tend to be associated with similar diseases [7]. First, the similarity between two lncRNAs is calculated from the diseases associated with them, and a lncRNA network is constructed from these similarities [8]. Several methods predict the lncRNAs related to a given disease on the basis of this network, for instance, via random walks on the lncRNA network [9,10] or by exploiting the information of neighboring lncRNA nodes [11]. These methods are ineffective for new diseases with no known related lncRNAs, because they rely on a set of seed lncRNAs that have been observed to be related to the disease. Some methods address this shortcoming by introducing additional information about diseases: disease information is combined with the lncRNA network to create a heterogeneous lncRNA-disease network containing lncRNA similarities, disease similarities, and lncRNA-disease associations. Several methods exploit this information but construct different models on the heterogeneous network to estimate the association scores between lncRNAs and diseases. For instance, the association scores are derived by random walks in the lncRNA-disease network [10,12] or by matrix factorization of the lncRNA-disease associations [13,14]. Since lncRNAs often participate in disease processes together with miRNAs, it is necessary to integrate the interactions and associations involving miRNAs. Nevertheless, most previous methods overlook this miRNA-related information.
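The random-walk idea mentioned above can be sketched as a generic random walk with restart (RWR) on a lncRNA similarity network: the walker repeatedly moves to a neighbor in proportion to similarity or jumps back to the seed lncRNAs, and candidates are ranked by their steady-state probability. This is a minimal illustration, not the specific algorithms of [9,10]; the function name, the restart probability of 0.7, and the toy four-node network are hypothetical.

```python
def random_walk_with_restart(W, seeds, restart=0.7, tol=1e-10, max_iter=1000):
    """Rank the nodes of a similarity network by random walk with restart (RWR).

    W: square similarity matrix (list of lists) of a hypothetical lncRNA network.
    seeds: indices of lncRNAs already known to be related to the disease.
    restart: probability of jumping back to the seeds (illustrative value).
    Returns the steady-state visiting probability of every node.
    """
    n = len(W)
    # Column-normalize W so that each column is a transition distribution.
    col_sums = [sum(W[i][j] for i in range(n)) for j in range(n)]
    M = [[W[i][j] / col_sums[j] if col_sums[j] else 0.0 for j in range(n)]
         for i in range(n)]
    # Restart vector: uniform over the seed lncRNAs.
    p0 = [1.0 / len(seeds) if i in seeds else 0.0 for i in range(n)]
    p = p0[:]
    for _ in range(max_iter):
        # p_{t+1} = (1 - r) * M p_t + r * p0
        p_next = [(1 - restart) * sum(M[i][j] * p[j] for j in range(n)) + restart * p0[i]
                  for i in range(n)]
        converged = sum(abs(a - b) for a, b in zip(p_next, p)) < tol
        p = p_next
        if converged:
            break
    return p
```

On a path-shaped network seeded at node 0, the scores decay with distance from the seed, which is the behavior these methods rely on; it also shows why a disease with no seed lncRNAs cannot be handled.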

The third category of methods integrates multiple biological data sources about lncRNAs, miRNAs, and proteins. MFLDA integrates information about the genes and miRNAs that interact with lncRNAs and about the diseases associated with lncRNAs, and constructs a matrix factorization-based data fusion model for predicting disease lncRNAs [15]. Zhang et al. introduced protein information to establish a lncRNA-protein-disease network and predicted candidate lncRNA-disease associations by propagating information flows in the network [16]. The diverse information available about lncRNAs, diseases, genes, and proteins reflects lncRNA-disease associations from different perspectives. However, it is difficult for these methods to deeply integrate heterogeneous data from multiple sources. Therefore, we present a novel prediction method based on dual convolutional neural networks to learn the latent representations of lncRNA-disease pairs from multi-source data.

2. Experimental Evaluations and Discussions

2.1. Evaluation Metrics

Five-fold cross-validation is used to evaluate the prediction performance of CNNDLP and several state-of-the-art methods for predicting lncRNA-disease associations. All known lncRNA–disease associations are regarded as positive samples, and the unobserved associations are taken as negative samples. We randomly divide all positive samples into five subsets, four of which are used for training the model. Because positive samples are far fewer than negative samples (the ratio is nearly 1:36 in our study), we randomly select as many negative samples as there are positive training samples and also use them for training. The positive samples in the remaining subset and all the negative samples are used as testing samples. The numbers of positive and negative samples during cross-validation are listed in Supplementary Table S1. In particular, in each fold, the positive samples used for testing are removed and the lncRNA similarities are recalculated using the remaining positive samples.
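A minimal sketch of the fold construction described above, assuming the lncRNA-disease associations are held in a 0/1 matrix. The function name `five_fold_splits` and the fixed random seed are illustrative choices; recomputing the lncRNA similarities from the masked matrix `A_train` is left to the caller.

```python
import random


def five_fold_splits(A, n_folds=5, seed=0):
    """Yield (A_train, train_pos, train_neg, test_pos, test_neg) for each fold.

    A: 0/1 lncRNA-disease association matrix (list of lists). Known
    associations are positives; unobserved pairs are negatives.
    """
    rng = random.Random(seed)
    pos = [(i, j) for i, row in enumerate(A) for j, v in enumerate(row) if v == 1]
    neg = [(i, j) for i, row in enumerate(A) for j, v in enumerate(row) if v == 0]
    rng.shuffle(pos)
    folds = [pos[k::n_folds] for k in range(n_folds)]  # five disjoint subsets
    for k in range(n_folds):
        test_pos = folds[k]
        train_pos = [p for m, fold in enumerate(folds) if m != k for p in fold]
        # Balance the training set: as many random negatives as training positives.
        train_neg = rng.sample(neg, len(train_pos))
        # As in the text, held-out positives plus all negatives form the test set.
        test_neg = list(neg)
        # Hide the held-out positives so lncRNA similarities can be recomputed
        # from the remaining positive samples only.
        A_train = [row[:] for row in A]
        for i, j in test_pos:
            A_train[i][j] = 0
        yield A_train, train_pos, train_neg, test_pos, test_neg
```

Masking the held-out positives before any similarity is computed is what keeps test information from leaking into the lncRNA similarity matrix.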

We obtain the association scores of the testing samples and rank the samples by score; the higher the positive samples are ranked, the better the prediction performance. Node pairs whose scores are greater than a classification threshold θ are identified as positive samples, and those with lower scores are identified as negative samples. The true positive rates (TPRs) and false positive rates (FPRs) at various θ values are calculated as follows:

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad \mathrm{FPR} = \frac{FP}{TN + FP} \qquad (1)$$

where $TP$ and $TN$ are the numbers of positive and negative samples that are identified correctly, while $FN$ and $FP$ are the numbers of misidentified positive and negative samples, respectively. The receiver operating characteristic (ROC) curve is drawn from the TPRs and FPRs at the different values of θ, and the area under the ROC curve (AUC) is commonly used to evaluate the overall performance of a prediction method [17].
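The following sketch shows how TPR and FPR from Eq. (1) are traced over every threshold θ and integrated with the trapezoidal rule to obtain the AUC. It is an illustration under the assumption that a sample is predicted positive when its score is at least θ; it is not the evaluation code used for the reported results.

```python
def roc_auc(scores, labels):
    """Sweep the threshold over every distinct score and integrate the ROC curve.

    labels: 1 for a positive (known association), 0 for a negative.
    Returns (fpr_list, tpr_list, auc).
    """
    P = sum(labels)
    N = len(labels) - P
    # Sort by descending score; each distinct score is a candidate threshold θ.
    pairs = sorted(zip(scores, labels), key=lambda t: -t[0])
    tp = fp = 0
    fpr, tpr = [0.0], [0.0]
    i = 0
    while i < len(pairs):
        theta = pairs[i][0]
        # Predict positive for every sample with score >= θ (ties move together).
        while i < len(pairs) and pairs[i][0] == theta:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        tpr.append(tp / P)  # TPR = TP / (TP + FN)
        fpr.append(fp / N)  # FPR = FP / (TN + FP)
    # Trapezoidal rule over consecutive ROC points.
    auc = sum((fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2 for k in range(1, len(fpr)))
    return fpr, tpr, auc
```

For example, scores `[0.9, 0.8, 0.7, 0.6]` with labels `[1, 0, 1, 0]` give an AUC of 0.75, matching the fraction of positive-negative pairs in which the positive is ranked higher.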

The positive and negative samples are severely imbalanced, with a ratio of about 1:36. For such imbalanced data, the precision-recall (PR) curve has been shown to be more informative than the ROC curve [18]. Therefore, the PR curve is used as another important measure of the prediction performance of each method. Precision and recall are calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN} \qquad (2)$$

where precision is the proportion of actual positive samples among the samples identified as positive, and recall is the proportion of actual positive samples that are correctly identified. The area under the PR curve (AUPR) is also used to evaluate the performance of lncRNA-disease association prediction [19].
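Analogously, precision and recall from Eq. (2) can be computed at each threshold and accumulated into an AUPR. The sketch below uses a step-wise (average-precision style) area, which is one common convention; whether the reported AUPRs used this or trapezoidal interpolation is not stated, so treat the choice as an assumption.

```python
def pr_auc(scores, labels):
    """Precision-recall curve and its area (AUPR) via step-wise interpolation.

    labels: 1 for a positive (known association), 0 for a negative.
    Returns (precision_list, recall_list, aupr).
    """
    P = sum(labels)
    pairs = sorted(zip(scores, labels), key=lambda t: -t[0])
    tp = fp = 0
    precision, recall = [], []
    i = 0
    while i < len(pairs):
        theta = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == theta:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        precision.append(tp / (tp + fp))  # TP / (TP + FP)
        recall.append(tp / P)             # TP / (TP + FN)
    # Sum of precision times the recall gained at each threshold.
    aupr, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        aupr += p * (r - prev_r)
        prev_r = r
    return precision, recall, aupr
```

Because only thresholds that add new positives increase recall, a method that scatters false positives among the top-ranked pairs is penalized much more heavily here than in the AUC, which is why AUPR separates the methods more clearly under a 1:36 imbalance.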

In addition, biologists usually select the top-ranked candidate lncRNAs for further experimental verification of their associations with a disease of interest. Therefore, we report the recall rates of the top 30, 60, 90, and 120 candidates, that is, the fraction of positive samples that are correctly identified within the top k of the ranking list.
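A sketch of the top-k recall, assuming the test samples of each disease are ranked separately and the hits are pooled over all diseases before dividing by the total number of held-out positives. The data layout (`rankings` as a dict of score/label lists) is hypothetical.

```python
def recall_at_k(rankings, k):
    """Fraction of held-out positives ranked within the top k of their disease's list.

    rankings: dict mapping a disease to a list of (score, label) test samples.
    Pooling the counts over diseases is an assumption about the aggregation.
    """
    hits = total = 0
    for samples in rankings.values():
        ranked = sorted(samples, key=lambda s: -s[0])
        hits += sum(label for _, label in ranked[:k])
        total += sum(label for _, label in samples)
    return hits / total if total else 0.0
```

With `rankings = {"d1": [(0.9, 1), (0.5, 0), (0.4, 1)], "d2": [(0.8, 0), (0.7, 1)]}`, the recall is 1/3 at k = 1 and 2/3 at k = 2.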

2.2. Comparison with Other Methods

To assess the prediction performance of CNNDLP, we compare it with several state-of-the-art methods for predicting disease lncRNAs: SIMCLDA [20], Ping’s method [21], MFLDA [15], LDAP [22], and CNNLDA [8]. CNNDLP and the other five methods have specific hyperparameters that must be tuned to achieve their best association prediction performance. We choose the values of CNNDLP’s hyperparameters α, β, and λ from {0.1, ..., 0.9}. CNNDLP achieves its best five-fold cross-validation performance when α = 0.9, β = 0.8, and λ = 0.3. The prediction performance of CNNDLP at different values of α, β, and λ is listed in Supplementary Tables S2, S3, and S4. In addition, the window size of all convolutional and pooling layers in CNNDLP is set to 2 × 2, and the numbers of filters in the first and second convolutional layers are set to 16 and 32, respectively. Because CNNDLP has a large number of parameters, the model is prone to overfitting the training samples; we therefore adopt dropout and batch normalization to prevent overfitting. To make a fair comparison, we set the hyperparameters of the other methods to the optimal values recommended in their respective publications (i.e., αl = 0.8, αd = 0.6, and λ = 1 for SIMCLDA; α = 0.6 for Ping’s method; α = 105 for MFLDA; gap open = 10 and gap extend = 0.5 for LDAP).

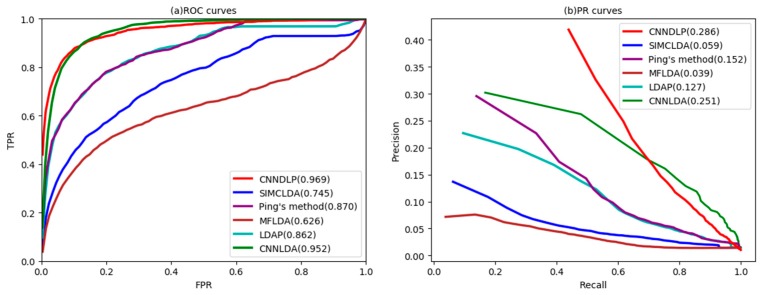

As shown in Figure 1a, CNNDLP yields the highest average performance over all 405 diseases (AUC = 0.969). Its AUC is 21.2%, 9.3%, 34.4%, 10.7%, and 1.7% higher than those of SIMCLDA, Ping’s method, MFLDA, LDAP, and CNNLDA, respectively. The AUCs of the six methods on 10 well-characterized diseases are also listed in Table 1, and CNNDLP achieves the best performance on all 10 diseases. Although the AUC of CNNDLP is only slightly higher than that of CNNLDA, its AUPR is 3.5% higher. A possible reason is that CNNLDA does not learn the low-dimensional network representation of a lncRNA-disease pair. Ping’s method and LDAP achieve decent performance because they take advantage of multiple types of lncRNA and disease similarities. MFLDA does not perform as well as the other methods, possibly because it ignores the lncRNA and disease similarities that the other methods exploit. The improvement of CNNDLP over the compared methods is primarily attributable to its deep learning of the attention representation and the low-dimensional network-level representation of lncRNA-disease node pairs.

Figure 1.

ROC curves and PR curves of CNNDLP and other methods for all diseases.

Table 1.

AUCs of CNNDLP and other methods on all the diseases and 10 well-characterized diseases.

| Disease Name | CNNDLP | Ping’s Method | LDAP | SIMCLDA | MFLDA | CNNLDA |
|---|---|---|---|---|---|---|
| Prostate cancer | 0.951 | 0.826 | 0.710 | 0.874 | 0.553 | 0.897 |
| Stomach cancer | 0.947 | 0.930 | 0.928 | 0.864 | 0.467 | 0.958 |
| Lung cancer | 0.976 | 0.911 | 0.882 | 0.790 | 0.676 | 0.940 |
| Breast cancer | 0.956 | 0.872 | 0.830 | 0.742 | 0.517 | 0.836 |
| Reproductive organ cancer | 0.943 | 0.818 | 0.742 | 0.707 | 0.740 | 0.922 |
| Ovarian cancer | 0.970 | 0.913 | 0.857 | 0.786 | 0.558 | 0.942 |
| Hematologic cancer | 0.989 | 0.908 | 0.903 | 0.828 | 0.716 | 0.934 |
| Kidney cancer | 0.984 | 0.979 | 0.977 | 0.728 | 0.677 | 0.956 |
| Liver cancer | 0.956 | 0.910 | 0.898 | 0.799 | 0.634 | 0.918 |
| Thoracic cancer | 0.921 | 0.860 | 0.792 | 0.792 | 0.649 | 0.890 |
| Average AUC of 405 diseases | 0.969 | 0.870 | 0.745 | 0.745 | 0.626 | 0.952 |

The bold values indicate the higher AUCs.

As shown in Figure 1b, CNNDLP’s average AUPR over the 405 diseases (AUPR = 0.286) is also higher than those of the other methods: it is 22.7%, 13.4%, 24.7%, 15.9%, and 3.5% higher than those of SIMCLDA, Ping’s method, MFLDA, LDAP, and CNNLDA, respectively. In addition, CNNDLP achieves the best AUPR on nine of the ten well-characterized diseases (Table 2).

Table 2.

AUPRs of CNNDLP and other methods on all the diseases and 10 well-characterized diseases.

| Disease Name | CNNDLP | Ping’s Method | LDAP | SIMCLDA | MFLDA | CNNLDA |
|---|---|---|---|---|---|---|
| Prostate cancer | 0.538 | 0.333 | 0.297 | 0.176 | 0.092 | 0.390 |
| Stomach cancer | 0.373 | 0.364 | 0.094 | 0.138 | 0.008 | 0.286 |
| Lung cancer | 0.666 | 0.437 | 0.363 | 0.131 | 0.171 | 0.058 |
| Breast cancer | 0.485 | 0.403 | 0.396 | 0.047 | 0.031 | 0.964 |
| Reproductive organ cancer | 0.498 | 0.281 | 0.240 | 0.130 | 0.103 | 0.091 |
| Ovarian cancer | 0.552 | 0.483 | 0.427 | 0.027 | 0.023 | 0.526 |
| Hematologic cancer | 0.667 | 0.403 | 0.370 | 0.216 | 0.121 | 0.523 |
| Kidney cancer | 0.569 | 0.663 | 0.462 | 0.030 | 0.034 | 0.584 |
| Liver cancer | 0.630 | 0.498 | 0.511 | 0.140 | 0.110 | 0.666 |
| Thoracic cancer | 0.399 | 0.383 | 0.364 | 0.155 | 0.102 | 0.890 |
| Average AUPR of 405 diseases | 0.286 | 0.152 | 0.127 | 0.059 | 0.039 | 0.251 |

The bold values indicate the higher AUPRs.

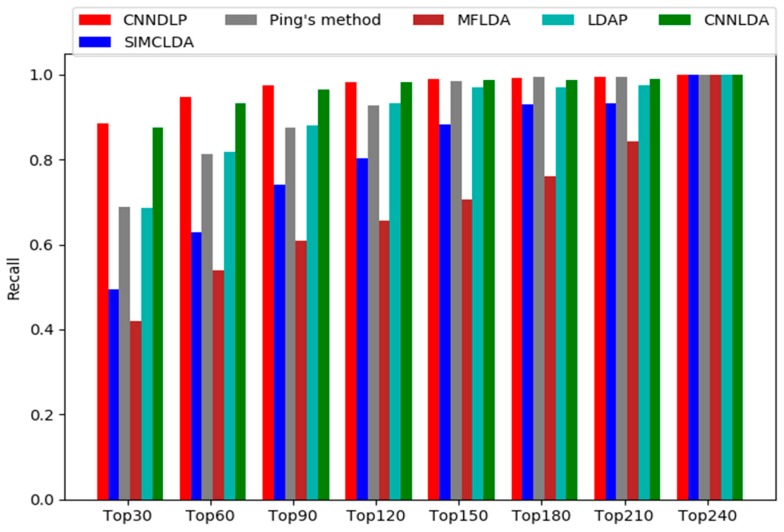

A higher recall in the top k of the ranking list indicates that more real lncRNA-disease associations are identified correctly. As shown in Figure 2, CNNDLP outperforms the other methods at the different top k cutoffs, ranking 88.6% of the positive samples in the top 30, 94.6% in the top 60, 97.5% in the top 90, and 98.3% in the top 120. The recall rates of Ping’s method are mostly very close to those of LDAP: the former ranked 68.9%, 81.3%, 87.5%, and 92.7% in the top 30, 60, 90, and 120, respectively, and the latter ranked 68.5%, 81.7%, 88.0%, and 93.3%. MFLDA again performs worst, ranking 42.0%, 53.9%, 61.0%, and 65.6%.

Figure 2.

Recall values of top k candidates of CNNDLP and other four methods.

In addition, a paired Wilcoxon test is conducted to determine whether CNNDLP’s prediction performance is significantly better than that of the other methods. The results in Table 3 show that CNNDLP performs significantly better than the other methods in terms of both AUC and AUPR at a significance threshold of 0.05.

Table 3.

Comparison of different methods based on AUCs and AUPRs with the paired Wilcoxon test.

| | SIMCLDA | Ping’s Method | MFLDA | LDAP | CNNLDA |
|---|---|---|---|---|---|
| p-value of ROC curve | 9.2454 × 10⁻⁶ | 0.00048 | 5.9940 × 10⁻⁷ | 0.00121 | 0.00773 |
| p-value of PR curve | 8.3473 × 10⁻⁷ | 0.04174 | 3.5037 × 10⁻⁸ | 0.00126 | 0.00024 |
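For reference, a paired Wilcoxon signed-rank test on per-disease AUCs (or AUPRs) can be sketched in pure Python as below. It uses the one-sided alternative that the first method scores higher and the large-sample normal approximation with a tie correction; both are assumptions, since the text does not state the sidedness or whether exact p-values were computed. In practice, `scipy.stats.wilcoxon` (with `alternative="greater"`) provides a library implementation.

```python
import math


def wilcoxon_signed_rank_greater(x, y):
    """One-sided paired Wilcoxon signed-rank test of H1: x tends to exceed y.

    x, y: paired scores, e.g. per-disease AUCs of two methods.
    Zero differences are discarded; the p-value uses the normal
    approximation with a tie correction. Returns (W_plus, p_value).
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    # Rank |d| in ascending order, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    # Upper-tail probability of the standard normal, P(Z >= z).
    p = 0.5 * math.erfc(z / math.sqrt(2))
    return w_plus, p
```

With 405 paired per-disease values, the normal approximation is generally considered adequate; for small samples an exact test would be preferable.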

2.3. Case Studies: Stomach Cancer, Breast Cancer and Prostate Cancer

To further demonstrate the capability of CNNDLP to discover potential disease-related lncRNAs, we conduct case studies on stomach cancer, breast cancer, and prostate cancer. For each disease, we rank the candidate lncRNA-disease associations by their association scores and collect the top 15 candidates.

Stomach cancer is currently the fourth most common malignant tumor worldwide and the second leading cause of cancer-related death [23]. First, Lnc2Cancer is a manually curated database of associations between lncRNAs and human cancers that have been verified by biological experiments [24]. Twelve of the 15 candidates are included in Lnc2Cancer (Table 4), which indicates that these lncRNAs are indeed associated with the disease.

Table 4.

The top 15 stomach cancer-related candidate lncRNAs.

| Rank | lncRNA Name | Description | Rank | lncRNA Name | Description |
|---|---|---|---|---|---|
| 1 | SPRY4-IT1 | Lnc2Cancer, LncRNADisease | 9 | CDKN2B-AS1 | LncRNADisease |
| 2 | TINCR | Lnc2Cancer, LncRNADisease | 10 | CCAT1 | Lnc2Cancer, LncRNADisease |
| 3 | H19 | Lnc2Cancer, LncRNADisease | 11 | HOTAIR | Lnc2Cancer, LncRNADisease |
| 4 | TUSC7 | Lnc2Cancer, LncRNADisease | 12 | GACAT2 | LncRNADisease |
| 5 | BANCR | Lnc2Cancer, LncRNADisease | 13 | UCA1 | Lnc2Cancer, LncRNADisease |
| 6 | MEG3 | Lnc2Cancer, LncRNADisease | 14 | PVT1 | Lnc2Cancer, LncRNADisease |
| 7 | GAS5 | Lnc2Cancer, LncRNADisease | 15 | MEG8 | literature |
| 8 | GHET1 | Lnc2Cancer, LncRNADisease | | | |

Second, LncRNADisease records more than 4564 lncRNA-disease associations obtained from experiments, published literature, or computational predictions, and the dysregulation of the lncRNAs is manually confirmed [25]. Fourteen candidates are contained in LncRNADisease, indicating that they are upregulated or downregulated in stomach cancer tissues. In addition, the candidate labeled “literature” is supported by a published study confirming its dysregulation in the cancer compared with normal tissues [26].

Among the top 15 candidates for breast cancer, 11 are reported in Lnc2Cancer to be abnormally expressed in breast cancer (Table 5). LncRNADisease contains 12 candidates, confirming the associations between these candidates and the disease. The remaining 2 candidates have been confirmed in the literature to be dysregulated in breast cancer [27,28].

Table 5.

The top 15 breast cancer-related candidate lncRNAs.

| Rank | lncRNA Name | Description | Rank | lncRNA Name | Description |
|---|---|---|---|---|---|
| 1 | SOX2-OT | Lnc2Cancer, LncRNADisease | 9 | CCAT1 | Lnc2Cancer, LncRNADisease |
| 2 | HOTAIR | Lnc2Cancer, LncRNADisease | 10 | GAS5 | Lnc2Cancer, LncRNADisease |
| 3 | LINC00472 | Lnc2Cancer, LncRNADisease | 11 | MIR124-2HG | literature |
| 4 | BCYRN1 | LncRNADisease | 12 | XIST | Lnc2Cancer, LncRNADisease |
| 5 | LINC-PINT | literature | 13 | LINC-ROR | Lnc2Cancer, LncRNADisease |
| 6 | MALAT1 | Lnc2Cancer, LncRNADisease | 14 | PANDAR | Lnc2Cancer, LncRNADisease |
| 7 | CDKN2B-AS1 | LncRNADisease | 15 | AFAP1-AS1 | Lnc2Cancer |
| 8 | SPRY4-IT1 | Lnc2Cancer, LncRNADisease | | | |

The top 15 prostate cancer-related candidates and the corresponding evidence are listed in Table 6. Fourteen candidates are included in Lnc2Cancer and 14 in LncRNADisease, which indicates that they are truly related to the disease. Together, the case studies confirm that CNNDLP is effective for discovering potential candidate disease lncRNAs.

Table 6.

The top 15 prostate cancer-related candidate lncRNAs.

| Rank | lncRNA Name | Description | Rank | lncRNA Name | Description |
|---|---|---|---|---|---|
| 1 | CDKN2B-AS1 | LncRNADisease | 9 | HOTAIR | Lnc2Cancer, LncRNADisease |
| 2 | PCGEM1 | Lnc2Cancer, LncRNADisease | 10 | LINC00963 | Lnc2Cancer, LncRNADisease |
| 3 | PVT1 | Lnc2Cancer, LncRNADisease | 11 | H19 | Lnc2Cancer, LncRNADisease |
| 4 | GAS5 | Lnc2Cancer, LncRNADisease | 12 | MEG3 | Lnc2Cancer, LncRNADisease |
| 5 | HOTTIP | Lnc2Cancer, LncRNADisease | 13 | TUG1 | Lnc2Cancer, LncRNADisease |
| 6 | NEAT1 | Lnc2Cancer, LncRNADisease | 14 | PCA3 | Lnc2Cancer, LncRNADisease |
| 7 | PCAT5 | Lnc2Cancer | 15 | DANCR | Lnc2Cancer, LncRNADisease |
| 8 | PRINS | Lnc2Cancer, LncRNADisease | | | |

2.4. Prediction of Novel Disease lncRNAs

Having confirmed its prediction performance through five-fold cross-validation and case studies, we further apply CNNDLP to all 405 diseases. All known lncRNA-disease associations are used to train CNNDLP to predict potential disease-related lncRNAs. The top 50 potential candidates for each of the 405 diseases are listed in Supplementary Table S5.

3. Materials and Methods

3.1. Datasets for lncRNA-Disease Association Prediction

We obtained thousands of lncRNA-disease associations, lncRNA-miRNA interactions, and miRNA-disease associations from a published work [15]. The human lncRNA-disease database (LncRNADisease) contains 2687 lncRNA-disease associations verified by biological experiments, covering 240 lncRNAs and 405 diseases [29]. The disease similarities were calculated based on directed acyclic graphs (DAGs), which were constructed from the disease terms of the Medical Subject Headings (MeSH) of the U.S. National Library of Medicine. The 1002 lncRNA-miRNA interactions, involving 495 miRNAs, were originally extracted from starBase v2.0 and have been confirmed by biological experiments [30]. The 13,559 miRNA-disease associations were obtained from the HMDD database [31].

3.2. Bipartite Graphs about the lncRNAs, Diseases, miRNAs, and Representations

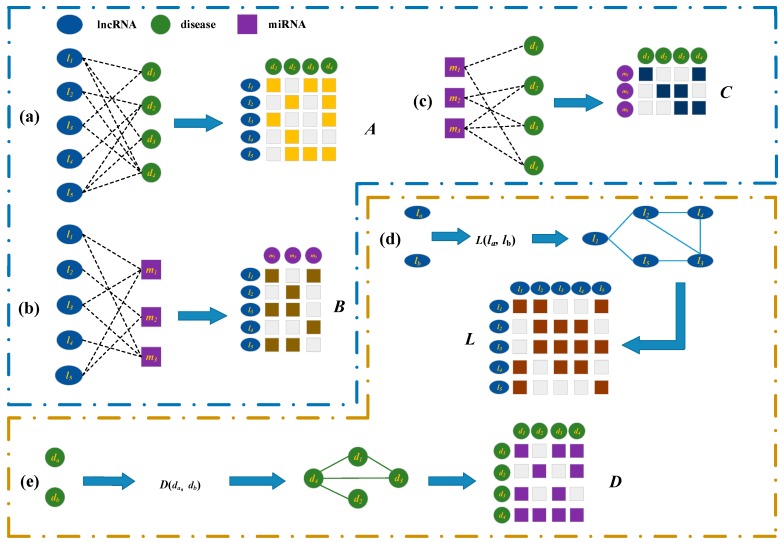

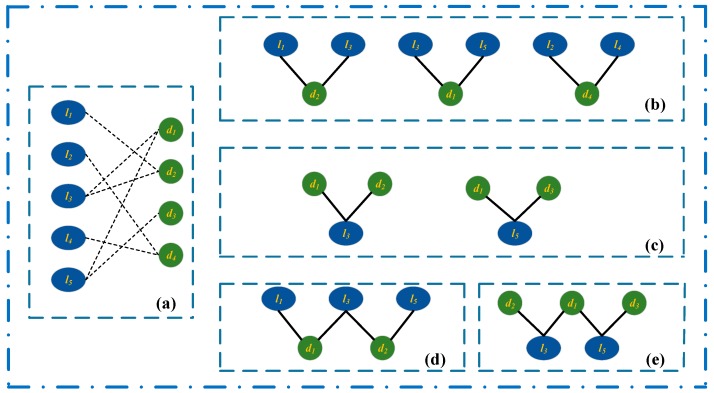

We first construct a bipartite graph of lncRNAs and diseases by connecting them according to the observed lncRNA-disease associations (Figure 3a). The graph is represented by a matrix $A \in \{0,1\}^{N_l \times N_d}$, where $N_l$ and $N_d$ are the numbers of lncRNAs and diseases, respectively. Each row corresponds to a lncRNA and each column to a disease. If lncRNA $l_i$ has been observed to be associated with disease $d_j$, $A_{ij}$ is set to 1; otherwise, $A_{ij} = 0$.

Figure 3.

Construction and representation of multiple bipartite graphs. (a) Construct a lncRNA-disease association bipartite graph based on the known associations between lncRNAs and diseases, and its matrix representation A. (b) Construct a lncRNA-miRNA interaction bipartite graph based on the known lncRNA-miRNA interactions, and its matrix representation B. (c) Construct a miRNA-disease association bipartite graph based on the known miRNA-disease associations, and its matrix representation C. (d) Calculate the lncRNA similarity and construct the matrix representation L. (e) Calculate the disease similarity and construct the matrix representation D.

Many interactions between lncRNAs and miRNAs have been confirmed by biological experiments [32]. We construct a bipartite graph of lncRNA and miRNA nodes in which a lncRNA and a miRNA are connected when they are known to interact (Figure 3b). The graph is represented by the interaction matrix $B \in \{0,1\}^{N_l \times N_m}$, where $N_l$ and $N_m$ are the numbers of lncRNAs and miRNAs. If lncRNA $l_i$ is known to interact with miRNA $m_j$, $B_{ij} = 1$; otherwise, when their interaction has not been observed, $B_{ij} = 0$.

An edge connects a miRNA and a disease when they are observed to be associated (Figure 3c). This bipartite graph is represented by the matrix $C \in \{0,1\}^{N_m \times N_d}$, where $N_m$ and $N_d$ are the numbers of miRNAs and diseases. We set $C_{ij}$ to 1 if miRNA $m_i$ is associated with disease $d_j$, and to 0 when no such association is observed.
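Building the three 0/1 matrices A, B, and C from lists of observed pairs is straightforward. The helper below is a hypothetical sketch, and the node names `l1`, `d1`, `m1`, and so on are placeholders rather than entries of the actual datasets.

```python
def bipartite_matrix(pairs, rows, cols):
    """0/1 adjacency matrix of a bipartite graph from observed (row, col) pairs.

    rows, cols: ordered node names of the two sides (e.g. lncRNAs and diseases).
    """
    r_idx = {name: i for i, name in enumerate(rows)}
    c_idx = {name: j for j, name in enumerate(cols)}
    M = [[0] * len(cols) for _ in rows]
    for r, c in pairs:
        M[r_idx[r]][c_idx[c]] = 1  # observed edge; unobserved pairs stay 0
    return M


# Hypothetical toy inputs: A (lncRNA x disease), B (lncRNA x miRNA), C (miRNA x disease).
lncs, dis, mirs = ["l1", "l2"], ["d1", "d2"], ["m1"]
A = bipartite_matrix([("l1", "d2"), ("l2", "d1")], lncs, dis)
B = bipartite_matrix([("l1", "m1")], lncs, mirs)
C = bipartite_matrix([("m1", "d1")], mirs, dis)
```

Keeping one fixed ordering of lncRNAs, diseases, and miRNAs across all three matrices is what allows their rows and columns to be stacked later.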

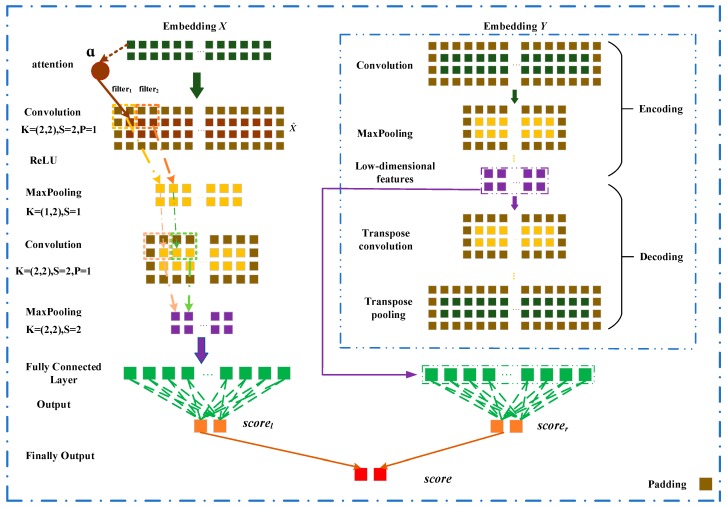

3.3. LncRNA-Disease Association Prediction Model Based on CNN

In this section, we describe the prediction model, based on convolutional neural networks and an attention mechanism, for learning the latent representation and predicting the association score of lncRNA $l_i$ and disease $d_j$. The embedding layers are first constructed by incorporating the associations, similarities, and interactions involving lncRNAs, diseases, and miRNAs. The prediction model consists of a left part and a right part: the left part learns the attention representation of $l_i$ and $d_j$, while the right part learns their network representation. Each of the two representations passes through a fully connected layer and a softmax layer to obtain the probability that $l_i$ and $d_j$ are associated, which is regarded as their association score. The final score is the weighted sum of the two association scores.

3.3.1. Embedding Layer on the Left

lncRNA Functional Similarity Measurement

On the basis of the biological premise that lncRNAs with similar functions are more likely to be associated with similar diseases, the similarity of two lncRNAs is measured through their associated diseases. For instance, if lncRNA $l_a$ is associated with one group of diseases and lncRNA $l_b$ with another group, the similarity between these two disease groups is regarded as the functional similarity of $l_a$ and $l_b$. We calculate the lncRNA similarity according to Xuan’s method [8]. Matrix $L$ is the lncRNA similarity matrix (Figure 3d), where $L_{ij}$, the similarity of lncRNAs $l_i$ and $l_j$, ranges from 0 to 1.
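Xuan's method [8] is not reproduced in this section, so the sketch below uses a widely used set-to-set formulation as a stand-in: each disease of one lncRNA is matched to its most similar disease of the other, and the matched similarities are averaged over both disease sets. Treat the formula as an assumption rather than a transcription of the method actually used; the disease similarity matrix D is assumed to have ones on its diagonal.

```python
def lncrna_functional_similarity(A, D):
    """lncRNA-lncRNA functional similarity from their associated disease sets.

    A: 0/1 lncRNA-disease matrix; D: disease-disease similarity matrix.
    Assumed set-to-set formula:
      S(d, T)       = max_{t in T} D[d][t]
      sim(l_a, l_b) = (sum_{d in T_a} S(d, T_b) + sum_{d in T_b} S(d, T_a)) / (|T_a| + |T_b|)
    """
    n = len(A)
    disease_sets = [[j for j, v in enumerate(row) if v] for row in A]
    L = [[0.0] * n for _ in range(n)]
    for a in range(n):
        for b in range(n):
            Ta, Tb = disease_sets[a], disease_sets[b]
            if not Ta or not Tb:
                continue  # no known diseases: similarity stays 0 (even to itself)
            s_ab = sum(max(D[d][t] for t in Tb) for d in Ta)
            s_ba = sum(max(D[d][t] for t in Ta) for d in Tb)
            L[a][b] = (s_ab + s_ba) / (len(Ta) + len(Tb))
    return L
```

Because each disease is matched only to its best counterpart, two lncRNAs with overlapping but unequal disease sets still receive a high score, and the result stays within [0, 1] whenever D does.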

Disease Similarity Measurement

All semantic terms related to a disease form its directed acyclic graph (DAG). Wang et al. calculated the semantic similarities between diseases on the basis of their DAGs [33]. We calculate the disease similarities according to Wang’s method and represent them by matrix $D$, where $D_{ij}$ is the similarity of diseases $d_i$ and $d_j$ (Figure 3e). The similarity of two diseases also ranges from 0 to 1.
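Wang's DAG-based measure can be sketched as follows. Each term t in the DAG of disease A receives a semantic contribution D_A(t), with D_A(A) = 1 and D_A(t) = max(Δ · D_A(t')) over the children t' of t, and the similarity of A and B is the summed contribution of their shared terms divided by the sum of both semantic values. The value Δ = 0.5 is a common choice rather than a value stated here, and the toy terms `A`, `B`, `C`, and `R` are hypothetical.

```python
def semantic_contributions(term, parents, delta=0.5):
    """Semantic contribution D_A(t) of every term t in the DAG of `term`.

    parents: dict mapping a term to the list of its direct parent terms.
    D_A(A) = 1; D_A(t) = max over children t' of t (within DAG_A) of delta * D_A(t').
    """
    contrib = {term: 1.0}
    frontier = [term]
    while frontier:
        child = frontier.pop()
        for parent in parents.get(child, []):
            value = delta * contrib[child]
            if value > contrib.get(parent, 0.0):
                contrib[parent] = value
                frontier.append(parent)  # re-propagate the improved value upward
    return contrib


def wang_similarity(a, b, parents, delta=0.5):
    """Semantic similarity of diseases a and b from their DAGs (Wang-style)."""
    ca = semantic_contributions(a, parents, delta)
    cb = semantic_contributions(b, parents, delta)
    shared = set(ca) & set(cb)
    return sum(ca[t] + cb[t] for t in shared) / (sum(ca.values()) + sum(cb.values()))
```

For two sibling diseases `A` and `B` sharing parent `C` and root `R`, the similarity is (0.5 + 0.5 + 0.25 + 0.25) / (1.75 + 1.75) = 3/7.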

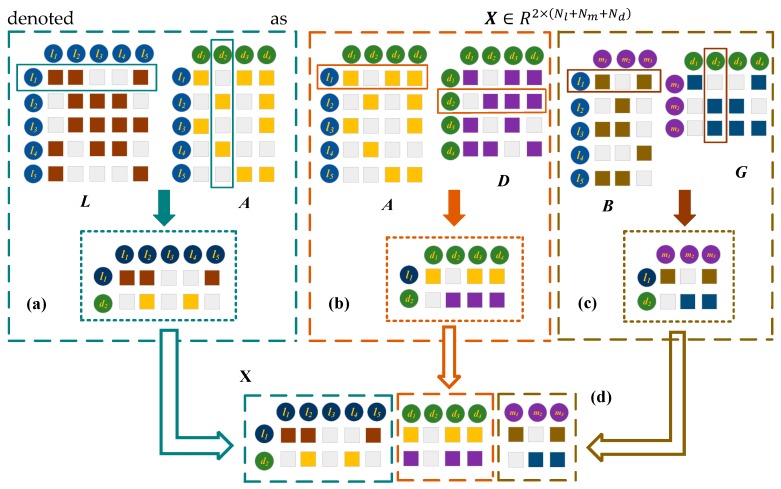

The Left Embedding Layer for Integrating the Original Information

If a lncRNA and a disease have similarity and association relationships with more common lncRNAs, they are more likely to be associated with each other. We take lncRNA $l_i$ and disease $d_j$ as an example to explain how the left embedding layer is constructed. As shown in Figure 4, the first row, $L_i$ (the i-th row of $L$), records the similarities between $l_i$ and all lncRNAs, and the second row, $A^{T}_{j}$ (the j-th column of $A$), contains the associations between $d_j$ and all lncRNAs. For example, if $l_i$ is similar to several lncRNAs that have already been associated with $d_j$, $l_i$ is possibly related to $d_j$. We stack $L_i$ and $A^{T}_{j}$ as the first part of the embedding layer. Similarly, $l_i$ and $d_j$ are more likely to be associated when they have similarity and association connections with more common diseases; therefore, we stack $A_i$ (the i-th row of $A$) and $D_j$ (the j-th row of $D$) as the second part of the embedding layer. In addition, when a lncRNA and a disease have interaction and association relationships with common miRNAs, they are more likely to be associated. For instance, if $l_i$ interacts with some miRNAs that are also associated with $d_j$, there is a possible association between $l_i$ and $d_j$. The first row, $B_i$ (the i-th row of $B$), and the second row, $C^{T}_{j}$ (the j-th column of $C$), are stacked as the third part of the embedding layer. The final left embedding layer matrix of $l_i$ and $d_j$ is denoted $X_{ij}$.

Figure 4.

Construction of the left embedding layer matrix of $l_i$ and $d_j$. (a) Construct the first part of the matrix by exploiting the lncRNA similarities and the lncRNA-disease associations. (b) Construct the second part by integrating the lncRNA-disease associations and the disease similarities. (c) Construct the third part by incorporating the lncRNA-miRNA interactions and the miRNA-disease associations. (d) Concatenate the three parts.
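The left embedding described above can be sketched as follows, assuming the three two-row parts are concatenated side by side, so the matrix has shape 2 × (N_l + N_d + N_m) with N_l, N_d, and N_m the numbers of lncRNAs, diseases, and miRNAs. The function and argument names are illustrative.

```python
def column(M, k):
    """k-th column of a list-of-lists matrix."""
    return [row[k] for row in M]


def left_embedding(i, j, L, A, D, B, C):
    """2 x (N_l + N_d + N_m) left embedding matrix for lncRNA i and disease j.

    Part 1: row i of L (l_i vs all lncRNAs)  over column j of A (d_j vs all lncRNAs).
    Part 2: row i of A (l_i vs all diseases) over row j of D    (d_j vs all diseases).
    Part 3: row i of B (l_i vs all miRNAs)   over column j of C (d_j vs all miRNAs).
    Side-by-side concatenation of the parts is an assumption about Figure 4d.
    """
    top = list(L[i]) + list(A[i]) + list(B[i])
    bottom = column(A, j) + list(D[j]) + column(C, j)
    return [top, bottom]
```

Aligning the two rows this way places, in every column, a property of $l_i$ directly above the matching property of $d_j$, so a 2 × 2 convolution window can detect shared neighbors.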

Attention at the Adjacent Edge Level

For a lncRNA node or a disease node, not all adjacent edges contribute equally to learning the representation of a lncRNA-disease pair. To address this issue, we establish an attention mechanism that enhances the adjacent edges that are important for predicting lncRNA-disease associations. In the embedding layer matrix $X$, the element $x_{ij}$ represents the connection between the i-th node and the j-th node and is assigned an attention weight $\alpha_{ij}$, defined as follows:

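$$att_{ij} = h^{T}\,\mathrm{ReLU}(W x_{ij} + b) \qquad (3)$$

$$\alpha_{ij} = \frac{\exp(att_{ij})}{\sum_{k} \exp(att_{ik})} \qquad (4)$$

$$\tilde{X} = [\alpha_{ij}\, x_{ij}] \qquad (5)$$

where $W$ and $h$ are a weight matrix and a context vector, respectively, and $b$ is a bias vector. $att_{ij}$ is an attention score that represents the importance of $x_{ij}$, and $\alpha_{ij}$ is its normalized importance. $\tilde{X}$ is the enhanced embedding layer matrix obtained after the attention mechanism at the adjacent edge level is applied to $X$.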
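A minimal sketch of the adjacent-edge attention, assuming the form in which each scalar entry x_ij is projected by length-k vectors W and b, passed through ReLU, scored against a context vector h, and normalized with a softmax over the entries of the same row. In a trained model W, b, and h would be learned; here they are fixed toy values, and the row-wise normalization is itself an assumption.

```python
import math


def edge_attention(X, W, b, h):
    """Re-weight each entry x_ij of an embedding matrix by an attention weight.

    Assumed form:
      att_ij   = h . relu(W * x_ij + b)   # W, b, h: length-k vectors
      alpha_ij = softmax over j of att_ij # normalized within row i
      X~_ij    = alpha_ij * x_ij
    Returns (enhanced matrix, attention weights).
    """
    enhanced, weights = [], []
    for row in X:
        att = [sum(hk * max(0.0, wk * x + bk) for wk, bk, hk in zip(W, b, h))
               for x in row]
        m = max(att)  # subtract the max for numerical stability
        exps = [math.exp(a - m) for a in att]
        z = sum(exps)
        alpha = [e / z for e in exps]
        weights.append(alpha)
        enhanced.append([a * x for a, x in zip(alpha, row)])
    return enhanced, weights
```

Entries equal to zero (unobserved edges) stay zero after re-weighting, so the mechanism only redistributes emphasis among the edges that exist.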

3.3.2. Embedding Layer on the Right

First, two lncRNA nodes are known to be similar if they are associated with common disease nodes [22]. In the lncRNA-disease bipartite network (Figure 5a), two lncRNAs associated with a common disease node are similar (Figure 5b); likewise, two diseases associated with a common lncRNA node are similar (Figure 5c). Second, if two lncRNA nodes have no common neighboring nodes but are related to similar disease nodes, they are also similar to each other [22]. As shown in Figure 5d, two lncRNAs are similar because their neighboring disease nodes are similar. Similarly, two diseases are similar when they are associated with similar neighboring lncRNA nodes (Figure 5e). Ping et al. measured lncRNA similarities and disease similarities in this way by utilizing the lncRNA-disease bipartite network.

Figure 5.

Calculation of the first type of lncRNA similarity and the first type of disease similarity. (a) The lncRNA-disease association bipartite network. (b) Calculate the lncRNA similarities based on the common associated disease nodes. (c) Compute the disease similarities based on their common related lncRNA nodes. (d) Calculate the lncRNA similarities according to their associated similar disease nodes. (e) The disease similarity calculation based on their related similar lncRNA nodes.
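The two premises above (shared neighbors and similar neighbors) are what bipartite SimRank formalizes, so the sketch below uses SimRank as a stand-in to illustrate them. It is not Ping's formula; the decay constant c = 0.8 and the iteration count are illustrative assumptions.

```python
def bipartite_simrank(A, c=0.8, iters=10):
    """SimRank on a bipartite graph given by a 0/1 matrix A (rows: lncRNAs, cols: diseases).

    Two rows are similar if their neighbors (columns) are similar, and vice versa,
    which covers both the "shared neighbor" and "similar neighbor" cases.
    Returns (row_sim, col_sim).
    """
    nr, nc = len(A), len(A[0])
    row_nb = [[j for j in range(nc) if A[i][j]] for i in range(nr)]
    col_nb = [[i for i in range(nr) if A[i][j]] for j in range(nc)]
    S_r = [[1.0 if a == b else 0.0 for b in range(nr)] for a in range(nr)]
    S_c = [[1.0 if a == b else 0.0 for b in range(nc)] for a in range(nc)]

    def update(S_other, nbrs):
        n = len(nbrs)
        new = [[0.0] * n for _ in range(n)]
        for a in range(n):
            new[a][a] = 1.0
            for b in range(a + 1, n):
                Na, Nb = nbrs[a], nbrs[b]
                if Na and Nb:
                    total = sum(S_other[x][y] for x in Na for y in Nb)
                    new[a][b] = new[b][a] = c * total / (len(Na) * len(Nb))
        return new

    for _ in range(iters):
        # Both sides are updated from the previous iteration's similarities.
        S_r, S_c = update(S_c, row_nb), update(S_r, col_nb)
    return S_r, S_c
```

In the matrix `[[1, 0], [1, 1], [0, 1]]`, lncRNAs 0 and 2 share no disease, yet they become similar after two iterations because their diseases are linked through lncRNA 1, which is the "similar neighbor" case of Figure 5d.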

Unlike Ping’s method, which focuses on a single bipartite network, we calculate multiple kinds of lncRNA and disease similarities by utilizing bipartite networks from different sources: lncRNA-disease associations, lncRNA-miRNA interactions, and miRNA-disease associations. The first kind of lncRNA similarity, $L^{(1)}$, and the first kind of disease similarity, $D^{(1)}$, are calculated according to Ping’s method. The second kind of lncRNA similarity, denoted $L^{(2)}$, is measured by exploiting the common and similar miRNA nodes that interact with two lncRNA nodes in the lncRNA-miRNA bipartite network. Finally, the second kind of disease similarity, $D^{(2)}$, is calculated from the miRNA-disease bipartite network.

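To incorporate the two kinds of lncRNA similarities, $L^{(1)}$ and $L^{(2)}$, the final lncRNA similarity $S^{l}$ is defined as follows:

$$S^{l} = \alpha L^{(1)} + (1 - \alpha) L^{(2)} \qquad (6)$$

where α is a parameter that controls the contributions of $L^{(1)}$ and $L^{(2)}$.

Similarly, the final disease similarity $S^{d}$ is the weighted sum of $D^{(1)}$ and $D^{(2)}$:

$$S^{d} = \beta D^{(1)} + (1 - \beta) D^{(2)} \qquad (7)$$

where β is a parameter that regulates the weights of $D^{(1)}$ and $D^{(2)}$.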

The Right Embedding Layer for Integrating the Second Kinds of lncRNA and Disease Similarities

The right embedding layer matrix is constructed in the same way as the left embedding layer matrix. First, we stack the i-th row of the final lncRNA similarity matrix and the j-th column of $A$ as the first part of the embedding layer. Second, the i-th row of $A$ and the j-th row of the final disease similarity matrix are stacked as the second part. Finally, the i-th row of $B$ and the j-th column of $C$ are stacked as the first and second rows of the third part.
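Assuming the weighted sums in Eqs. (6) and (7) take the form w · S1 + (1 − w) · S2, the similarity fusion and the right embedding can be sketched as below. The values α = 0.9 and β = 0.8 in the usage note are the best values reported in Section 2.2; the function names are hypothetical. The fusion does not depend on the lncRNA-disease pair, so it is computed once and reused for every pair.

```python
def fuse(S1, S2, w):
    """Weighted sum w * S1 + (1 - w) * S2 of two similarity matrices (assumed form)."""
    return [[w * a + (1 - w) * b for a, b in zip(r1, r2)] for r1, r2 in zip(S1, S2)]


def right_embedding(i, j, SL, SD, A, B, C):
    """Right embedding for lncRNA i and disease j.

    Same layout as the left embedding, but with the fused lncRNA similarity SL
    and fused disease similarity SD in place of the original L and D.
    """
    top = list(SL[i]) + list(A[i]) + list(B[i])
    bottom = [row[j] for row in A] + list(SD[j]) + [row[j] for row in C]
    return [top, bottom]
```

Usage: `SL = fuse(L1, L2, 0.9)` and `SD = fuse(D1, D2, 0.8)` are computed once, after which `right_embedding(i, j, SL, SD, A, B, C)` is called for each pair.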

3.4. Convolutional Module on the Left

In this section, we describe a novel model based on convolutional neural networks with adjacent-edge attention for learning the latent representations of lncRNA-disease node pairs. The overall architecture is shown in Figure 6. We first describe the left convolutional module in detail. The left module consists of convolution and activation layers, max-pooling layers, and a fully connected layer. The embedding matrix is fed into the convolutional module to learn the original representation of a lncRNA-disease node pair.

Figure 6.

Construction of the prediction model based on the convolutional neural network and convolutional autoencoder for learning the attention representation and the low-dimensional network representation.

For each convolutional layer, the length and width of a filter are fixed, so that each filter is applied to a rectangular window of features of that size. To learn the marginal information of the embedding matrix, we pad zeros around it. A set of convolution filters is applied to the padded embedding matrix to obtain the feature maps. Each element of the embedding matrix is indexed by its row i and column j, and the region covered by the k-th filter when it slides to position (i, j) is used to compute the corresponding element of the k-th feature map.

| (8) |

| (9) |

Here, the output is the element at the i-th row and the j-th column of the k-th feature map, the nonlinear activation function is the rectified linear unit (ReLU) [34], the weight matrix is that of the k-th filter, and b is a bias vector.
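The convolution-plus-ReLU step described above can be sketched as follows. This is a minimal single-channel illustration, assuming stride 1, "same" zero padding for odd filter sizes, and one scalar bias per filter; the helpers `zero_pad`, `relu`, and `conv2d_relu` are hypothetical names, not the authors' code. As in common deep learning libraries, the operation is a cross-correlation (the filter is not flipped).

```python
from typing import List, Sequence

Matrix = List[List[float]]


def zero_pad(matrix: Sequence[Sequence[float]], pad_rows: int, pad_cols: int) -> Matrix:
    """Surround the matrix with pad_rows rows and pad_cols columns of zeros on every side."""
    width = len(matrix[0]) + 2 * pad_cols
    padded = [[0.0] * width for _ in range(pad_rows)]
    for row in matrix:
        padded.append([0.0] * pad_cols + [float(v) for v in row] + [0.0] * pad_cols)
    padded.extend([0.0] * width for _ in range(pad_rows))
    return padded


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def conv2d_relu(matrix: Sequence[Sequence[float]],
                filters: Sequence[Sequence[Sequence[float]]],
                biases: Sequence[float]) -> List[Matrix]:
    """One feature map per filter: ReLU(sum(filter * window) + bias) at every position."""
    feature_maps = []
    for w, b in zip(filters, biases):
        fh, fw = len(w), len(w[0])
        x = zero_pad(matrix, fh // 2, fw // 2)  # keeps the input size for odd filter sizes
        out_h, out_w = len(x) - fh + 1, len(x[0]) - fw + 1
        fmap = [[relu(sum(w[u][v] * x[i + u][j + v] for u in range(fh) for v in range(fw)) + b)
                 for j in range(out_w)]
                for i in range(out_h)]
        feature_maps.append(fmap)
    return feature_maps
```

The zero padding is what lets the filters see the border ("marginal") entries of the embedding matrix as often as the interior ones.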

The max-pooling layer down-samples the feature maps by outputting the largest, and hence most important, feature within each pooling window of each feature map. Given an input feature map, the output of the pooling layer is as follows,

| (10) |

where the two window parameters are the length and the width of the pooling filter, and the output is the element at the i-th row and the j-th column of the k-th pooled feature map. After the embedding matrix goes through two convolutional layers and two max-pooling layers, the left convolutional module yields the original representation of the lncRNA-disease pair.
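A minimal sketch of the max-pooling step, assuming non-overlapping windows (stride equal to the window size) and dropping trailing rows or columns that do not fill a whole window; the function name `max_pool2d` is hypothetical.

```python
from typing import List, Sequence


def max_pool2d(feature_map: Sequence[Sequence[float]], pool_h: int, pool_w: int) -> List[List[float]]:
    """Keep the maximum of each non-overlapping pool_h x pool_w window of one feature map."""
    out_h, out_w = len(feature_map) // pool_h, len(feature_map[0]) // pool_w
    return [[max(feature_map[i * pool_h + u][j * pool_w + v]
                 for u in range(pool_h) for v in range(pool_w))
             for j in range(out_w)]
            for i in range(out_h)]


if __name__ == "__main__":
    fmap = [[1, 3, 2, 0],
            [4, 2, 1, 5],
            [0, 1, 7, 2],
            [3, 2, 1, 1]]
    print(max_pool2d(fmap, 2, 2))  # [[4, 5], [3, 7]]
```

Applying `max_pool2d` to every feature map produced by a convolutional layer gives the input of the next convolutional layer.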

Finally, the original representation is flattened into a vector, which is fed to a fully connected layer. A softmax layer normalizes the output of the fully connected layer, and we have

| (11) |

where the weight matrix and the bias vector belong to the fully connected layer, and the output is the association probability distribution over C classes (C = 2): one component is the probability that the lncRNA is associated with the disease, and the other is the probability that the two have no association.
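The flatten, fully connected, and softmax steps can be sketched as below. This assumes a weight matrix with C rows (one per class) and a length-C bias vector; the convention that index 0 means "associated" is an assumption for illustration, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def flatten(feature_maps: Sequence[Sequence[Sequence[float]]]) -> List[float]:
    """Concatenate all feature maps row by row into one vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Fully connected layer: one output per row of the C x len(x) weight matrix."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtracting the maximum avoids overflow in exp without changing the result
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical usage with C = 2 classes (index 0: associated, index 1: not associated):
# probs = softmax(dense(flatten(pooled_maps), W, b)); association_score = probs[0]
```

Because the softmax outputs sum to 1, the probability of "associated" alone is enough to rank candidate lncRNA-disease pairs.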

Similarly, the right embedding matrix is fed to the convolutional module on the right side of the prediction model to learn the network representation of the lncRNA and the disease. Their association probabilities are obtained when this representation is fed to the fully connected layer and the softmax layer.

3.5. Convolutional Autoencoder Module on the Right

The lncRNA-disease association, lncRNA-miRNA interaction, and miRNA-disease association matrices are very sparse, so the embedding matrix contains many zero elements. A convolutional autoencoder is therefore constructed on the right side of CNNDLP to learn informative, low-dimensional feature representations of lncRNA-disease pairs. The encoding and decoding strategies are as follows.

3.5.1. Encoding Strategy

The embedding layer matrix is mapped into a low-dimensional feature space by a convolutional encoder. At the n-th encoding layer, the input is passed through the n-th convolutional layer to obtain a convolutional feature map, which then passes through the n-th max-pooling layer to form the output of that layer. They are defined as follows,

| (12) |

| (13) |

where n ranges from 1 to the total number of encoding layers, k indexes the filters used during the encoding process, and the two outputs are the elements at the i-th row and the j-th column of the k-th convolutional and pooled feature maps, respectively. A weight matrix and a bias vector parameterize the convolution.

3.5.2. Decoding Strategy

The output of the last encoding layer is used as the input of the decoder, which decodes it into a matrix similar to the embedding matrix. The decoding process includes transpose convolution layers and transpose pooling layers, which are respectively defined as,

| (14) |

| (15) |

Here, the two outputs are those of the n-th transpose convolution layer and the n-th transpose max-pooling layer, respectively, and the remaining parameters are the total number of decoding layers and the number of filters used for decoding. Because the decoded matrix should be consistent with the input embedding matrix, we define the loss function as follows,

| (16) |

where the output of decoding and the input of encoding both correspond to the i-th training sample (lncRNA-disease pair), and T is the number of training samples. The association score of the lncRNA and the disease is obtained after the encoded representation is fed to the fully connected layer and the softmax layer.
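A minimal sketch of the reconstruction objective, assuming Equation (16) is a squared error between each embedding matrix and its decoded reconstruction, summed over the T training pairs. The function name `reconstruction_loss` is hypothetical, and a mean or a 1/2 factor would change the value only by a constant.

```python
from typing import Sequence

Matrix = Sequence[Sequence[float]]


def reconstruction_loss(inputs: Sequence[Matrix], reconstructions: Sequence[Matrix]) -> float:
    """Sum over training pairs of the squared element-wise error (assumed form of Eq. (16))."""
    if len(inputs) != len(reconstructions):
        raise ValueError("need one reconstruction per input matrix")
    total = 0.0
    for x, x_hat in zip(inputs, reconstructions):
        total += sum((a - b) ** 2
                     for row, row_hat in zip(x, x_hat)
                     for a, b in zip(row, row_hat))
    return total
```

Minimizing this loss forces the low-dimensional encoding to retain enough information to rebuild the sparse embedding matrix.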

3.6. Combined Strategy

In our model, cross-entropy is used as the loss function; the loss functions of the left and right parts of the prediction model are defined as follows,

| (17) |

| (18) |

where the label denotes the actual association between a lncRNA and a disease: it is 1 when the lncRNA is indeed associated with the disease and 0 otherwise, and the loss is computed over all training samples.
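A minimal sketch of a binary cross-entropy over training pairs, assuming each prediction is the softmax probability that the pair is associated and each label is 0 or 1. Averaging over the samples and the clipping constant `eps` are assumptions; a plain sum differs only by a constant factor. The function name `cross_entropy` is hypothetical.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy; probs[i] is the predicted probability that pair i is associated."""
    if len(probs) != len(labels) or not probs:
        raise ValueError("need one label per prediction")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)
```

The same function can score the left and the right parts separately, each with its own predicted probabilities.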

The final score of our model is a weighted sum of the scores from the left and right parts, as follows,

| (19) |

where the weighting parameter adjusts the relative importance of the two scores.
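A minimal sketch of how the two scores might be combined and turned into a ranking of candidates, assuming Equation (19) is a convex combination weight × left + (1 − weight) × right. The function name `combine_and_rank` is hypothetical.

```python
from typing import List, Sequence, Tuple


def combine_and_rank(left_scores: Sequence[float], right_scores: Sequence[float],
                     weight: float) -> Tuple[List[float], List[int]]:
    """Weighted final scores and candidate indices sorted from highest to lowest score."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    if len(left_scores) != len(right_scores):
        raise ValueError("score lists must have the same length")
    scores = [weight * l + (1.0 - weight) * r for l, r in zip(left_scores, right_scores)]
    ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return scores, ranking
```

For one disease, passing the scores of all candidate lncRNAs yields the ranking list whose top part would be examined by biologists.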

4. Conclusions

A novel method based on a convolutional neural network with adjacent edge attention and a convolutional autoencoder, entitled CNNDLP, is developed for inferring potential candidate lncRNA-disease associations. Two embedding layers are constructed from a biological perspective to integrate heterogeneous data about lncRNAs, diseases, and miRNAs from multiple sources. We construct an attention mechanism at the adjacent edge level to discriminate the different contributions of edges, so that the left side of CNNDLP’s prediction model learns the latent representation of a lncRNA-disease pair from the more informative edges. Building on the newly calculated lncRNA and disease similarities, the right side of CNNDLP’s model captures the complex relationships among these similarities and the lncRNA-disease associations, as well as the topological structures of multiple heterogeneous networks. Together, the two sides of the prediction model learn the attention representation and the low-dimensional network representation of each lncRNA-disease pair. The experimental results demonstrated that CNNDLP outperforms the other methods in terms of both AUC and AUPR. In particular, CNNDLP is more useful to biologists because the top portion of its ranking list may retrieve more true lncRNA-disease associations. Case studies on three diseases further confirm that CNNDLP is able to discover potential candidate disease-related lncRNAs. CNNDLP may serve as a powerful prioritization tool that screens prospective candidates for the subsequent discovery of actual lncRNA-disease associations through wet-lab experiments.

Acknowledgments

We would like to thank Editage (www.editage.com) for English language editing.

Supplementary Materials

Supplementary materials can be found at https://www.mdpi.com/1422-0067/20/17/4260/s1.

Author Contributions

P.X. and N.S. conceived the prediction method and wrote the paper. N.S. and Y.L. developed the computer programs. T.Z. and Y.G. analyzed the results and revised the paper.

Funding

The work was supported by the Natural Science Foundation of China (61972135), the Natural Science Foundation of Heilongjiang Province (LH2019F049, LH2019A029), the China Postdoctoral Science Foundation (2019M650069), the Heilongjiang Postdoctoral Scientific Research Starting Foundation (BHL-Q18104), the Fundamental Research Foundation of Universities in Heilongjiang Province for Technology Innovation (KJCX201805), the Innovation Talents Project of Harbin Science and Technology Bureau (2017RAQXJ094), and the Fundamental Research Foundation of Universities in Heilongjiang Province for Youth Innovation Team (RCYJTD201805).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Fu X.-D. Non-coding RNA: A new frontier in regulatory biology. Natl. Sci. Rev. 2014;1:190–204. doi: 10.1093/nsr/nwu008.

- 2. Derrien T., Johnson R., Bussotti G., Tanzer A., Djebali S., Tilgner H., Guernec G., Martin D., Merkel A., Knowles D.G., et al. The GENCODE v7 catalog of human long noncoding RNAs: Analysis of their gene structure, evolution, and expression. Genome Res. 2012;22:1775–1789. doi: 10.1101/gr.132159.111.

- 3. Guttman M., Rinn J.L. Modular regulatory principles of large non-coding RNAs. Nature. 2012;482:339–346. doi: 10.1038/nature10887.

- 4. Wapinski O., Chang H.Y. Long noncoding RNAs and human disease. Trends Cell Biol. 2011;21:354–361. doi: 10.1016/j.tcb.2011.04.001.

- 5. Chen X., Yan G.-Y. Novel human lncRNA-disease association inference based on lncRNA expression profiles. Bioinformatics. 2013;29:2617–2624. doi: 10.1093/bioinformatics/btt426.

- 6. Zhao T., Xu J., Liu L., Bai J., Xu C., Xiao Y., Li X., Zhang L. Identification of cancer-related lncRNAs through integrating genome, regulome and transcriptome features. Mol. Biosyst. 2015;11:126–136. doi: 10.1039/C4MB00478G.

- 7. Lan W., Huang L., Lai D., Chen Q. Identifying Interactions Between Long Noncoding RNAs and Diseases Based on Computational Methods. Methods Mol. Biol. 2018;1754:205–221. doi: 10.1007/978-1-4939-7717-8_12.

- 8. Xuan P., Cao Y., Zhang T., Kong R., Zhang Z. Dual Convolutional Neural Networks with Attention Mechanisms Based Method for Predicting Disease-Related lncRNA Genes. Front. Genet. 2019;10:416. doi: 10.3389/fgene.2019.00416.

- 9. Yu G., Fu G., Lu C., Ren Y., Wang J. BRWLDA: Bi-random walks for predicting lncRNA-disease associations. Oncotarget. 2017;8:60429–60446. doi: 10.18632/oncotarget.19588.

- 10. Chen X., You Z.-H., Yan G.-Y., Gong D.-W. IRWRLDA: Improved random walk with restart for lncRNA-disease association prediction. Oncotarget. 2016;7:57919–57931. doi: 10.18632/oncotarget.11141.

- 11. Xiao X., Zhu W., Liao B., Xu J., Gu C., Ji B., Yao Y., Peng L., Yang J. BPLLDA: Predicting lncRNA-Disease Associations Based on Simple Paths with Limited Lengths in a Heterogeneous Network. Front. Genet. 2018;9:411. doi: 10.3389/fgene.2018.00411.

- 12. Sun J., Shi H., Wang Z., Zhang C., Liu L., Wang L., He W., Hao D., Liu S., Zhou M. Inferring novel lncRNA-disease associations based on a random walk model of a lncRNA functional similarity network. Mol. Biosyst. 2014;10:2074–2081. doi: 10.1039/C3MB70608G.

- 13. Ding L., Wang M., Sun D., Li A. TPGLDA: Novel prediction of associations between lncRNAs and diseases via lncRNA-disease-gene tripartite graph. Sci. Rep. 2018;8:1065. doi: 10.1038/s41598-018-19357-3.

- 14. Xuan Z., Li J., Yu J., Feng X., Zhao B., Wang L. A Probabilistic Matrix Factorization Method for Identifying lncRNA-disease Associations. Genes. 2019;10:126. doi: 10.3390/genes10020126.

- 15. Fu G., Wang J., Domeniconi C., Yu G. Matrix factorization-based data fusion for the prediction of lncRNA–disease associations. Bioinformatics. 2017;34:1529–1537. doi: 10.1093/bioinformatics/btx794.

- 16. Zhang J., Zhang Z., Chen Z., Deng L. Integrating Multiple Heterogeneous Networks for Novel LncRNA-Disease Association Inference. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019;16:396–406. doi: 10.1109/TCBB.2017.2701379.

- 17. Xuan P., Ye Y., Zhang T., Zhao L., Sun C. Convolutional Neural Network and Bidirectional Long Short-Term Memory-Based Method for Predicting Drug-Disease Associations. Cells. 2019;8:705. doi: 10.3390/cells8070705.

- 18. Saito T., Rehmsmeier M. The Precision-Recall Plot Is More Informative than the ROC Plot When Evaluating Binary Classifiers on Imbalanced Datasets. PLoS ONE. 2015;10:e0118432. doi: 10.1371/journal.pone.0118432.

- 19. Xuan P., Shen T., Wang X., Zhang T., Zhang W. Inferring disease-associated microRNAs in heterogeneous networks with node attributes. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018. doi: 10.1109/TCBB.2018.2872574.

- 20. Lu C., Yang M., Luo F., Wu F.-X., Li M., Pan Y., Li Y., Wang J. Prediction of lncRNA–disease associations based on inductive matrix completion. Bioinformatics. 2018;34:3357–3364. doi: 10.1093/bioinformatics/bty327.

- 21. Ping P., Wang L., Kuang L., Ye S., Iqbal M.F.B., Pei T. A Novel Method for LncRNA-Disease Association Prediction Based on an lncRNA-Disease Association Network. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019;16:688–693. doi: 10.1109/TCBB.2018.2827373.

- 22. Lan W., Li M., Zhao K., Liu J., Wu F.-X., Pan Y., Wang J. LDAP: A web server for lncRNA-disease association prediction. Bioinformatics. 2016;33:458–460. doi: 10.1093/bioinformatics/btw639.

- 23. Ferlay J., Shin H.-R., Bray F., Forman D., Mathers C., Maxwell Parkin D. Estimates of worldwide burden of cancer in 2008: GLOBOCAN. Int. J. Cancer. 2010;127:2893–2917. doi: 10.1002/ijc.25516.

- 24. Ning S., Zhang J., Wang P., Zhi H., Wang J., Liu Y., Gao Y., Guo M., Yue M., Wang L., et al. Lnc2Cancer: A manually curated database of experimentally supported lncRNAs associated with various human cancers. Nucleic Acids Res. 2016;44:D980–D985. doi: 10.1093/nar/gkv1094.

- 25. Bao Z., Yang Z., Huang Z., Zhou Y., Cui Q., Dong D. LncRNADisease 2.0: An updated database of long non-coding RNA-associated diseases. Nucleic Acids Res. 2019;47:D1034–D1037. doi: 10.1093/nar/gky905.

- 26. He Y., Luo Y., Liang B., Ye L., Lu G., He W. Potential applications of MEG3 in cancer diagnosis and prognosis. Oncotarget. 2017;8:73282–73295. doi: 10.18632/oncotarget.19931.

- 27. Xu Y., Chen M., Liu C., Zhang X., Li W., Cheng H., Zhu J., Zhang M., Chen Z., Zhang B. Association Study Confirmed Three Breast Cancer-Specific Molecular Subtype-Associated Susceptibility Loci in Chinese Han Women. Oncologist. 2017;22:890–894. doi: 10.1634/theoncologist.2016-0423.

- 28. Lv X.-B., Jiao Y., Qing Y., Hu H., Cui X., Lin T., Song E., Yu F. miR-124 suppresses multiple steps of breast cancer metastasis by targeting a cohort of pro-metastatic genes in vitro. Chin. J. Cancer. 2011;30:821–830. doi: 10.5732/cjc.011.10289.

- 29. Chen G., Wang Z., Wang D., Qiu C., Liu M., Chen X., Zhang Q., Yan G., Cui Q. LncRNADisease: A database for long-non-coding RNA-associated diseases. Nucleic Acids Res. 2013;41:D983–D986. doi: 10.1093/nar/gks1099.

- 30. Li J.H., Liu S., Zhou H., Qu L.H., Yang J.H. starBase v2.0: Decoding miRNA-ceRNA, miRNA-ncRNA and protein-RNA interaction networks from large-scale CLIP-Seq data. Nucleic Acids Res. 2014;42:D92–D97. doi: 10.1093/nar/gkt1248.

- 31. Li Y., Qiu C., Tu J., Geng B., Yang J., Jiang T., Cui Q. HMDD v2.0: A database for experimentally supported human microRNA and disease associations. Nucleic Acids Res. 2014;42:D1070–D1074. doi: 10.1093/nar/gkt1023.

- 32. Paraskevopoulou M.D., Hatzigeorgiou A.G. Analyzing MiRNA-LncRNA Interactions. Methods Mol. Biol. 2016;1402:271–286. doi: 10.1007/978-1-4939-3378-5_21.

- 33. Wang D., Wang J., Lu M., Song F., Cui Q. Inferring the human microRNA functional similarity and functional network based on microRNA-associated diseases. Bioinformatics. 2010;26:1644–1650. doi: 10.1093/bioinformatics/btq241.

- 34. Nair V., Hinton G.E. Rectified linear units improve restricted boltzmann machines; Proceedings of the 27th International Conference on International Conference on Machine Learning; Haifa, Israel. 21–24 June 2010; pp. 807–814.
