Abstract

Objectives. We assessed pediatric residents’ retention of knowledge and clinical skills according to the time since their last American Heart Association Pediatric Advanced Life Support (AHA PALS) certification.

Methods. Sixty-four pediatric residents were recruited and divided into 3 groups based on the time since their last PALS certification: group 1, 0 to 8 months; group 2, 9 to 16 months; and group 3, 17 to 24 months. Residents’ knowledge was tested using 10 multiple-choice AHA PALS pretest questions, and their clinical skills performance was assessed in simulated mock code scenarios using 2 different AHA PALS checklists. Mean scores were calculated for the 3 groups. Differences in test scores and overall clinical skills performance among the 3 groups were analyzed using analysis of variance, χ2 tests, and Jonckheere-Terpstra tests. Statistical significance was set at P < .05.

Results. The pediatric residents’ mean overall clinical skills performance scores declined within the first 8 months after their last AHA PALS certification and continued to decrease over time (87%, 82.6%, and 77.4% for groups 1, 2, and 3, respectively; P = .048). Residents’ mean multiple-choice test scores fell below the 84% passing score in all 3 groups (83%, 79%, and 82%, respectively) but did not differ significantly among the groups.

Conclusions. Residents’ clinical skills performance declined within the first 8 months after PALS certification and continued to decline as the time since the last certification increased. The use of mock code simulations and the reinforcement of AHA PALS guidelines during pediatric residency deserve further evaluation.

Keywords: PALS, certification, pediatric resident, resuscitation

Introduction

The American Heart Association (AHA) first released the Pediatric Advanced Life Support (PALS) course in 1988. Since then, the course has undergone substantial transformation and is now the standard for resuscitation training for pediatric health care providers in the United States. Its relevance has greatly increased over the past few decades.1,2 Pediatric residents are required to obtain PALS certification at the beginning of their residency and to recertify every 2 years.3 The AHA Emergency Cardiovascular Care Committee updated the PALS guidelines in 2015 and still recommends that residents recertify every 2 years.4

The goal of PALS is to improve the quality of care provided in pediatric emergencies and, consequently, patient outcomes. Through the PALS course, trainees gain skills in pediatric assessment and basic life support, enabling them to handle pediatric emergencies and critically ill children.5,6

The AHA PALS course consists of 3 parts. The first is a computer-based knowledge assessment using e-Simulation, the second is a skills practice session, and the third is an assessment of the skills learned by participants. Both the second and third parts are conducted under the supervision of an AHA PALS instructor.

Most pediatric residency programs in the United States require PALS certification to ensure that their residents have the knowledge and clinical skills needed to manage emergencies and critically ill children, as these skills are essential for effective resuscitation.

One challenge pediatric programs face is how to maintain the residents’ PALS knowledge and clinical skills throughout their training. Residents should always be well prepared for pediatric emergencies, as they may occur anytime and anywhere, including in outpatient settings. Unfortunately, residents’ resuscitation knowledge and clinical skills performance diminish over time, partially because they witness fewer real pediatric codes during their training years. Furthermore, the only real hands-on opportunities residents have to reinforce their knowledge of and experience with these scenarios are limited to their rotations in the emergency room (ER) and pediatric/neonatal intensive care units (PICUs/NICUs). This, coupled with a decrease in duty hours, academic responsibilities, and lack of simulation of critical/emergency care scenarios, can lead to poor PALS skill performance.7 In our institution, residents spend on average 3 months in the ER, 3 months in the NICU, and 2 months in the PICU, and they are not part of the transport team.

To combat knowledge loss, the current AHA PALS course uses advanced simulation techniques, which reinforce effective resuscitation approaches and team dynamics. The AHA PALS course sets the passing score for the knowledge-based written multiple-choice question (MCQ) test at 84%. Resuscitation skills are then tested, with each trainee being required to correctly perform all (100%) of the clinical performance steps for each clinical scenario assigned. Research has shown that the efficiency of residents’ life-saving procedures greatly increases immediately after completing PALS training.8 Another study reported better retention of PALS knowledge 1 year after course completion in pediatric residents than in other groups of learners (nurses, paramedics).9 This is likely due to the academic curriculum of the pediatric residency program versus other programs, including greater exposure to repetitive teaching sessions and skill/knowledge reinforcement during rotations, especially in the ER and PICU.

Indeed, the current literature emphasizes the need for frequent practice sessions to reinforce PALS guidelines.3,7-13 Repetitive practice via simulations and the reinforcement of PALS guidelines can facilitate successful outcomes in pediatric emergencies, which is critical because pediatric codes can happen at any time during and after training, even in primary care offices. Thus, all pediatricians should be prepared to manage such emergencies. However, whether there is a need to modify AHA PALS training or increase its frequency to enhance resident performance, comfort, and confidence remains unclear. To resolve this, it is important to understand how pediatric residents’ PALS knowledge and skills are affected over the 2-year period following their AHA PALS certification.

Therefore, the aim of the present study was to use AHA PALS course material (ie, MCQ and case scenario testing checklists) to assess knowledge retention, clinical skill performance, and adherence to PALS guidelines among our pediatric residents, grouped according to the time since their last PALS certification (0-8, 9-16, and 17-24 months). We reasoned that using the AHA PALS tools themselves would allow us to evaluate more reliably the retention of the knowledge and skills gained during AHA PALS certification.

Materials and Methods

Our institutional review board approved this study (#798405-5). Written consent was not obtained because this evaluation was part of the residents’ teaching sessions. We conducted this study at a university hospital in an urban setting from June 2015 to January 2016. Sixty-four of the 82 pediatric residents in our institution were included in the study. As required by our institution, all residents were AHA PALS certified before starting their training as postgraduate year (PGY) 1 residents and recertified 24 months later, before the start of PGY 3. Residents were tested on weekdays during their day shifts and were divided into 3 groups based on the time since their last PALS certification or recertification: group 1 (0-8 months; n = 21), group 2 (9-16 months; n = 22), and group 3 (17-24 months; n = 21). The remaining 18 residents did not participate because they were on vacation, on night float, or on an outside rotation.
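The grouping rule can be sketched as follows. This is a minimal illustration, assuming that the time since certification was counted in completed calendar months; the text does not state how months were counted, and the helper names (`completed_months`, `assign_group`) and the example dates are hypothetical.

```python
from datetime import date

# Group boundaries in completed months since the last PALS (re)certification,
# as described in the text: 0-8, 9-16, and 17-24 months.
GROUP_BOUNDS = {1: (0, 8), 2: (9, 16), 3: (17, 24)}


def completed_months(start: date, end: date) -> int:
    """Whole calendar months elapsed between start and end (assumed counting rule)."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1  # the current month is not yet complete
    return months


def assign_group(cert_date: date, test_date: date) -> int:
    """Return study group 1, 2, or 3 for a resident tested on test_date."""
    months = completed_months(cert_date, test_date)
    for group, (low, high) in GROUP_BOUNDS.items():
        if low <= months <= high:
            return group
    raise ValueError(f"{months} months is outside the 0-24 month certification window")


# Hypothetical dates, for illustration only (5 completed months).
print(assign_group(date(2015, 6, 20), date(2015, 12, 1)))
```

Raising an error beyond 24 months reflects the 2-year recertification requirement: a resident outside that window would not hold a current certification.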

Participants received 15 minutes’ advance notice before their testing and were instructed not to share any of the clinical scenarios afterward. All residents held current AHA PALS certification before and during the study period, meaning they had performed 100% of the skills correctly during AHA PALS testing. We used AHA PALS course material as the evaluation tool to make our assessments more objective.14 To test theoretical knowledge, all residents were given the same 10 MCQs, selected from the AHA PALS pre-course self-assessment tool, and were allowed 15 minutes to complete the test.15 These questions assessed knowledge of airway management, identification of cardiac rhythms, fluid management, and medication use in pediatric emergencies. A pass mark of 84% was required for this component.
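Because the test had only 10 questions, scores could move only in 10-point steps, so the 84% pass mark works out in practice to a 90% requirement. Assuming each question carried equal weight (an assumption; the scoring rule is not described beyond the pass mark), the minimum number of correct answers k is:

```latex
\frac{k}{10} \times 100\% \ge 84\%
\;\Longrightarrow\; k \ge 8.4
\;\Longrightarrow\; k_{\min} = 9 \quad (90\%)
```

Under this assumption, a resident answering 8 of 10 questions correctly (80%) would fail.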

We assessed each resident’s decision-making skills using one of the AHA PALS mock code clinical scenarios. The actual AHA PALS course includes 12 practice case scenarios; we tested our residents’ clinical performance using either a respiratory (Upper Airway Obstruction) or a cardiac (Asystole/Pulseless Electrical Activity) scenario, alternating the scenarios between consecutive residents. Mock code sessions were performed using high-fidelity simulators. Each resident was evaluated as the “team leader” of the assigned scenario and was expected to direct the other team members to perform the necessary steps listed in the AHA PALS evaluation checklist. Chief residents acted as facilitators, and ER fellows and nurses who were not participating in the study acted as team members during the case scenario testing. At the start of testing, residents were given an AHA PALS pocket reference card containing the PALS guidelines and were allowed to use it during the session. Each resident was given a brief clinical scenario, such as “A 1-year-old child is brought in by first responders from home after the mother called 911 because the child was experiencing breathing difficulties.” Each session lasted approximately 15 minutes. A short debriefing followed each session to address knowledge gaps and to allow time for questions and teaching of specific hands-on skills. Residents were instructed not to share their scenario with other residents. The sessions were not videotaped, and the information was not shared with other residents.

To score each resident’s performance, we used the AHA PALS Case Scenario Testing Checklists (Tables 1 and 2). These checklists assess different areas of the clinical performance steps. For each case scenario, the resident was evaluated on his/her team leadership skills, patient management skills, and final case conclusion. Each box (skill) was checked on the list if the task was performed correctly. Team leader skills included 2 items, namely, team member role assignments and effective communication throughout the testing session. The patient management performance skills section included 8 items in each of the 2 scenarios tested.

Table 1.

AHA PALS Case Scenario Testing Checklist: Asystole/PEA.

| Clinical Performance Steps | Check if Done Correctly |
|---|---|
| Team leader | |
| Assigns team member roles | |
| Uses effective communication throughout | |
| Patient management | |
| Recognizes cardiopulmonary arrest | |
| Directs initiation of CPR by using the C-A-B sequence and ensures performance of high-quality CPR at all times | |
| Directs placement of pads/leads and activation of monitor | |
| Recognizes asystole or PEA | |
| Directs IO or IV access | |
| Directs preparation of appropriate dose of epinephrine | |
| Directs administration of epinephrine at appropriate intervals | |
| Directs checking rhythm on the monitor approximately every 2 minutes | |
| Case conclusion | |
| Verbalizes consideration of at least 3 reversible causes of PEA or asystole | |

Abbreviations: AHA, American Heart Association; PALS, Pediatric Advanced Life Support; PEA, pulseless electrical activity; CPR, cardiopulmonary resuscitation; IO, intraosseous; IV, intravenous.

Table 2.

AHA PALS Case Scenario Testing Checklist: Upper Airway Obstruction.

| Clinical Performance Steps | Check if Done Correctly |
|---|---|
| Team leader | |
| Assigns team member roles | |
| Uses effective communication throughout | |
| Patient management section for upper airway obstruction | |
| Directs assessment of airway, breathing, circulation, disability, and exposure, including vital signs | |
| Directs manual airway maneuver with administration of 100% oxygen | |
| Directs placement of pads/leads and pulse oximetry | |
| Recognizes signs and symptoms of upper airway obstruction | |
| Categorizes as respiratory distress or failure | |
| Verbalizes indications of assisted ventilations or CPAP | |
| Directs IV or IO access | |
| Directs reassessment of patient in response to treatment | |
| Case conclusion | |
| Summarizes specific treatments for upper airway obstruction | |
| Verbalizes indications for endotracheal intubation and special considerations when intubation is anticipated | |

Abbreviations: AHA, American Heart Association; PALS, Pediatric Advanced Life Support; CPAP, continuous positive airway pressure; IO, intraosseous; IV, intravenous.

The same 3 evaluators scored every resident’s session: a pediatric intensivist, a pediatric emergency medicine physician, and a pediatric emergency medicine fellow. All 3 evaluators had completed the official AHA PALS instructor course. Before the study began, the evaluators met to review the checklist tool to improve the consistency of their assessments of each task. Evaluators did not lead or prompt residents during the sessions.

As in the official AHA PALS certification examination, the evaluation was pass or fail, and no partial credit was given for any task on the checklist; all tasks had to be performed satisfactorily to pass. The Case Scenario Testing Checklists for Asystole/Pulseless Electrical Activity and Upper Airway Obstruction had 11 and 12 checkpoints, respectively. Each evaluator’s result was expressed as the percentage of checkpoints performed correctly in the assigned case scenario, the mean of the 3 evaluators’ scores was calculated for each resident, and the overall means of the 3 groups were compared.
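The scoring procedure can be sketched in Python. This is a minimal illustration, not the authors’ code: the section item counts come from Tables 1 and 2, while the function names (`evaluator_scores`, `resident_score`, `passed`) and the example evaluator marks are hypothetical.

```python
# Checklist sections and item counts, taken from Tables 1 and 2.
CHECKLISTS = {
    "asystole_pea": {"team_leader": 2, "patient_management": 8, "case_conclusion": 1},
    "upper_airway_obstruction": {"team_leader": 2, "patient_management": 8, "case_conclusion": 2},
}


def evaluator_scores(scenario, checked):
    """Percentage of items checked, per section and overall, for one evaluator.

    checked maps each section name to the number of items marked as done correctly.
    """
    sections = CHECKLISTS[scenario]
    scores = {name: 100 * checked[name] / n_items for name, n_items in sections.items()}
    scores["overall"] = 100 * sum(checked[name] for name in sections) / sum(sections.values())
    return scores


def resident_score(scenario, evaluations):
    """Mean of each percentage across evaluators (3 evaluators in this study)."""
    per_evaluator = [evaluator_scores(scenario, marks) for marks in evaluations]
    return {key: sum(s[key] for s in per_evaluator) / len(per_evaluator)
            for key in per_evaluator[0]}


def passed(scenario, checked):
    """All-or-nothing rule: every checklist item must be performed correctly."""
    sections = CHECKLISTS[scenario]
    return all(checked[name] == n_items for name, n_items in sections.items())


# Hypothetical marks from 3 evaluators for one resident (not study data).
marks = [
    {"team_leader": 2, "patient_management": 8, "case_conclusion": 2},
    {"team_leader": 1, "patient_management": 8, "case_conclusion": 2},
    {"team_leader": 1, "patient_management": 7, "case_conclusion": 2},
]
print(resident_score("upper_airway_obstruction", marks))
print([passed("upper_airway_obstruction", m) for m in marks])
```

Keeping the pass/fail rule separate from the percentage score mirrors the design described above: a resident can have a high mean percentage yet still fail under the all-or-nothing criterion.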

Statistical Analysis

We used SAS 9.4 software (SAS Institute, Cary, NC) for the analysis. To identify differences in the written MCQ test scores and overall skill performance scores among the 3 groups, we used analysis of variance (ANOVA) with Tukey adjustment for multiple comparisons. Within the overall skill performance checklist, we also determined the sub-scores for team leader, patient management, and case conclusion. Because these secondary outcomes were not normally distributed, we used the nonparametric Jonckheere-Terpstra test to identify trends across the ordered groups (ie, over time). The χ2 test was used to compare the proportions of residents who passed or failed the written test. Differences were considered significant at P < .05.
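The Jonckheere-Terpstra test asks whether values tend to shift in one direction across groups that have a natural order, here 0-8, 9-16, and 17-24 months since certification. The study used SAS; the following is an independent, from-scratch Python sketch using the normal approximation with the standard tie-corrected null variance (ties are common here because many sub-scores equal 100%). The function name and the example data are hypothetical, not study data.

```python
import math
from collections import Counter


def jonckheere_terpstra(groups):
    """Jonckheere-Terpstra trend test using the normal approximation.

    groups: list of samples in their hypothesized order (e.g. 0-8, 9-16,
    17-24 months). Returns (J, z, two-sided P). J counts pairs in which the
    value from the later group is larger, with ties counted as 1/2, so a
    negative z points to values that decrease across the ordered groups.
    Requires at least 3 observations in total.
    """
    j_stat = 0.0
    for a in range(len(groups)):
        for b in range(a + 1, len(groups)):
            for x in groups[a]:
                for y in groups[b]:
                    j_stat += 1.0 if x < y else (0.5 if x == y else 0.0)

    sizes = [len(g) for g in groups]
    n = sum(sizes)
    ties = list(Counter(v for g in groups for v in g).values())  # sizes of tied value sets

    mean = (n * n - sum(m * m for m in sizes)) / 4
    # Null variance corrected for ties; reduces to the untied formula when all ties are 1.
    var = (n * (n - 1) * (2 * n + 5)
           - sum(m * (m - 1) * (2 * m + 5) for m in sizes)
           - sum(t * (t - 1) * (2 * t + 5) for t in ties)) / 72
    var += (sum(m * (m - 1) * (m - 2) for m in sizes)
            * sum(t * (t - 1) * (t - 2) for t in ties)) / (36 * n * (n - 1) * (n - 2))
    var += (sum(m * (m - 1) for m in sizes)
            * sum(t * (t - 1) for t in ties)) / (8 * n * (n - 1))

    z = (j_stat - mean) / math.sqrt(var)
    return j_stat, z, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical team leader sub-scores (%), NOT study data.
example = [[100, 100, 83.3, 66.7], [66.7, 50, 83.3, 33.3], [50, 16.7, 66.7, 33.3]]
j_stat, z, p = jonckheere_terpstra(example)
print(f"J = {j_stat}, z = {z:.2f}, two-sided P = {p:.3f}")
```

Unlike a one-way comparison such as Kruskal-Wallis, this statistic uses the ordering of the groups, which is why it suits a question about decline over time.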

Results

Residents’ characteristics such as sex, medical school attendance in the United States versus a foreign country, and time since medical school graduation were not significantly different among the groups (Table 3).

Table 3.

Demographic and Background Data.

| Characteristic | 0-8 Months (N = 21) | 9-16 Months (N = 22) | 17-24 Months (N = 21) | P |
|---|---|---|---|---|
| Sex, n (%) | | | | |
| Males | 11 (52.4%) | 6 (27.3%) | 9 (42.9%) | |
| Females | 10 (47.6%) | 16 (72.7%) | 12 (57.1%) | .25 |
| Country of medical school training, n (%) | | | | |
| IMG | 17 (80.9%) | 17 (77.3%) | 18 (85.7%) | |
| AMG | 4 (19.1%) | 5 (22.7%) | 3 (14.3%) | .92 |
| Duration since medical school graduation, mean (SD) | 1.71 (2.53) | 2.0 (3.22) | 4.32 (5.05) | .52 |
| PGY level, n | | | | |
| PGY 1 | 17 | 2 | 2 | |
| PGY 2 | 0 | 4 | 19 | |
| PGY 3 | 4 | 16 | 0 | |

Abbreviations: IMG, international medical graduate; AMG, American medical graduate; SD, standard deviation; PGY, postgraduate year.

P values were based on the Fisher’s exact or Kruskal-Wallis tests, as appropriate.

Theoretical Knowledge Assessment

We used the same passing score for our written test as the actual AHA PALS examination (84%). Residents performed poorly on the written MCQ test, with the majority of residents in each group failing (ie, scoring <84%). The numbers of residents who passed/failed were 9/12, 8/14, and 8/13 in groups 1, 2, and 3, respectively, and these proportions did not differ significantly among the 3 groups (P = .9 [χ2 test]). Residents’ mean test scores were 83%, 79%, and 82% for groups 1, 2, and 3, respectively, and did not differ significantly among the groups (P = .55 [ANOVA]; Table 4).

Table 4.

Results From Multiple-Choice Examination Assessing AHA PALS Knowledge.

| | Group 1 (0-8 Months) | Group 2 (9-16 Months) | Group 3 (17-24 Months) | P |
|---|---|---|---|---|
| Fail, % | 57.14 | 63.64 | 61.90 | .90 |
| Pass, % | 42.86 | 36.36 | 38.10 | |
| Test score, mean (SD), % | 82.86 (13.47) | 78.64 (16.99) | 82.38 (9.95) | .55 |

Abbreviations: AHA, American Heart Association; PALS, Pediatric Advanced Life Support; SD, standard deviation; ANOVA, analysis of variance.

P value for written test was based on ANOVA. P values for pass/fail were based on χ2 test.
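The χ2 comparison of pass/fail proportions can be checked from the counts reported above. The sketch below is a stdlib-only Python illustration, not the SAS analysis used in the study. It relies on the fact that a χ2 distribution with 2 degrees of freedom has the survival function exp(−x/2), so no statistics library is needed for a 3 × 2 table.

```python
import math

# Pass/fail counts reported in the text: group -> (passed, failed).
counts = {"0-8 months": (9, 12), "9-16 months": (8, 14), "17-24 months": (8, 13)}


def chi_square_statistic(table):
    """Pearson chi-square statistic (no continuity correction) for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


table = list(counts.values())
chi2 = chi_square_statistic(table)
dof = (len(table) - 1) * (len(table[0]) - 1)  # (3 - 1) x (2 - 1) = 2
# For exactly 2 degrees of freedom, P(X > x) = exp(-x / 2).
p_value = math.exp(-chi2 / 2)

for label, (n_pass, n_fail) in counts.items():
    print(f"{label}: {100 * n_pass / (n_pass + n_fail):.2f}% passed")
print(f"chi2 = {chi2:.3f}, df = {dof}, P = {p_value:.2f}")
```

With the reported counts this gives χ2 ≈ 0.20 and P ≈ .90, and pass rates of 42.86%, 36.36%, and 38.10%.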

Clinical Skill Performance Assessment

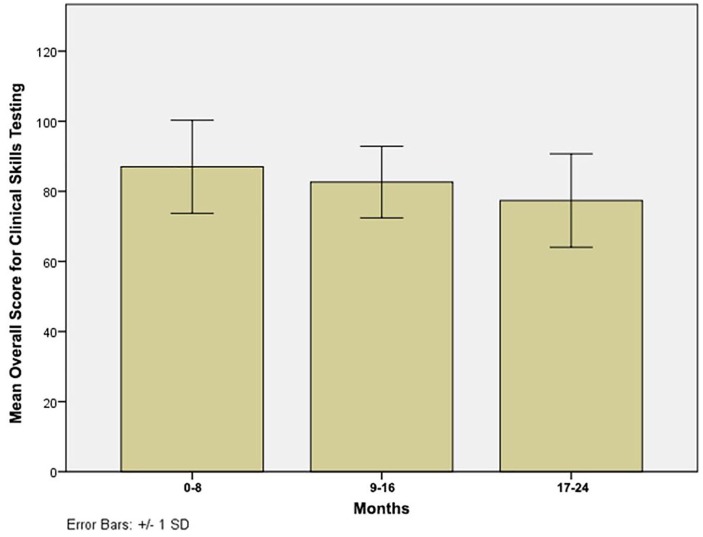

As in the actual AHA PALS certification, all clinical performance steps on the checklist for the assigned scenario had to be performed correctly for a resident to pass (Tables 1 and 2). Residents’ mean overall clinical skill performance score started to decline within the first 8 months and continued to diminish over the 2 years after PALS certification, with significant differences among the groups (87.00%, 82.64%, and 77.38% in groups 1, 2, and 3, respectively; P = .048; Table 5). Specifically, the overall mean score of group 1 was 9.6 points higher than that of group 3 (95% confidence interval [CI] = 0.5 to 18.8; P = .04). However, the differences between groups 1 and 2 (4.4 points, 95% CI = −4.7 to 13.4, P = .38) and between groups 2 and 3 (5.3 points, 95% CI = −3.8 to 14.3, P = .35) were not statistically significant (Figure 1).

Table 5.

Results From Clinical Skill Performance Assessment.

| | Group 1 (0-8 Months) | Group 2 (9-16 Months) | Group 3 (17-24 Months) | P |
|---|---|---|---|---|
| Overall mean (SD) | 87.00 (13.30) | 82.64 (10.23) | 77.38 (13.33) | .048 |
| Team leader, median (25th to 75th percentile) | 100 (50-100) | 66.5 (33-100) | 75 (16-83) | .035 |
| Patient management, mean (SD) | 89.67 (12.18) | 89.23 (10.61) | 79.90 (16.66) | .034 |
| Case conclusion, median (25th to 75th percentile) | 100 (100-100) | 100 (33-100) | 100 (0-100) | .14 |

Abbreviations: SD, standard deviation; ANOVA, analysis of variance.

P values for overall skill mean scores were based on ANOVA. P values for the remaining tests were based on Jonckheere-Terpstra test.
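Because Table 5 reports the group sizes, means, and SDs, the one-way ANOVA for the overall score can be reproduced from those summary statistics alone. The sketch below is an illustrative stdlib-only Python check, not the authors’ SAS code. It uses the exact closed form of the F survival function for 2 numerator degrees of freedom, and Tukey-Kramer intervals with an approximate tabulated studentized-range critical value (q ≈ 3.40 for 3 groups and about 60 error degrees of freedom), which is an assumption rather than a computed quantile.

```python
import math

# Overall clinical skill scores from Table 5: group -> (n, mean, SD).
groups = {1: (21, 87.00, 13.30), 2: (22, 82.64, 10.23), 3: (21, 77.38, 13.33)}

k = len(groups)
n_total = sum(n for n, _, _ in groups.values())
grand_mean = sum(n * mean for n, mean, _ in groups.values()) / n_total

# Between-group and within-group sums of squares from summary statistics.
ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups.values())
ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups.values())
df_between, df_within = k - 1, n_total - k
ms_within = ss_within / df_within
f_stat = (ss_between / df_between) / ms_within

# Exact only for 2 numerator df: P(F > f) = (1 + 2f / df2) ** (-df2 / 2).
if df_between != 2:
    raise ValueError("closed-form P value below assumes exactly 3 groups")
p_value = (1 + 2 * f_stat / df_within) ** (-df_within / 2)
print(f"F({df_between}, {df_within}) = {f_stat:.2f}, P = {p_value:.3f}")

# Tukey-Kramer 95% CIs. Q_CRIT is an approximate tabulated value of the
# studentized range for 3 groups and ~60 error df (assumed, not computed).
Q_CRIT = 3.40
for a, b in [(1, 2), (1, 3), (2, 3)]:
    (n_a, mean_a, _), (n_b, mean_b, _) = groups[a], groups[b]
    diff = mean_a - mean_b
    half_width = Q_CRIT / math.sqrt(2) * math.sqrt(ms_within * (1 / n_a + 1 / n_b))
    print(f"group {a} - group {b}: {diff:.2f} "
          f"(95% CI {diff - half_width:.1f} to {diff + half_width:.1f})")
```

With these inputs the sketch gives F(2, 61) ≈ 3.20 and P ≈ .048. The group 1 versus group 3 interval comes out at about 0.5 to 18.8 points, and the group 1 minus group 2 difference is 87.00 − 82.64 = 4.36 points.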

Figure 1.

Box plots of overall clinical skills performance scores by group.

Within the overall skill performance score, we further evaluated the scores for the 3 clinical performance sections of each case scenario (ie, team leader, patient management, and case conclusion; Table 5). Residents in group 1 had a median team leader score of 100%. However, team leader median scores declined significantly after 8 months (P = .035). Residents’ patient management scores were similar in groups 1 and 2 but were lower in group 3, and the trend across the groups was significant (P = .034). All 3 groups performed well on the case conclusion section (median score, 100% in each group), with no statistically significant differences among the groups (P = .14).

Discussion

Currently, AHA PALS training courses are the only mandatory means of training pediatric residents for pediatric emergencies. The retention of PALS knowledge and skills is crucial for successful outcomes in pediatric emergencies.16 Understanding the effects of time on the retention of PALS knowledge and skills is essential for identifying the optimal timing for retraining and for establishing what factors may help counteract the gradual loss of PALS knowledge and skills over time. However, studies evaluating the effects of time on PALS knowledge retention are scarce. To our knowledge, ours is the only study to use AHA PALS testing material to assess the retention of both knowledge and clinical skills performance in pediatric residents according to the time since their last PALS certification.

The present study stemmed from the concern that the 2-year interval between PALS certifications may be too long, considering that residents are expected to retain the complex algorithms and guidelines included in the AHA PALS curriculum. Our data showed that pediatric residents’ theoretical knowledge and clinical skills performance had already declined within the first 8 months after their last PALS certification, and that clinical skills performance continued to decline over time. Residents have fewer opportunities to put their knowledge and skills into practice during their training, given the reduction in training hours and the decreased exposure to emergency and ICU settings mandated by the Accreditation Council for Graduate Medical Education. Hence, residents cannot be expected to retain knowledge and skills that they have limited or no occasion to apply during their training.

In our study, the greatest decline among the 3 clinical performance sections was in the team leader section. One of the most important determinants of a successful code is how well the team members are led and directed by the team leader. A team leader should always maintain a global perspective of the code team while directing the team members.17 Residents demonstrated effective team leader skills in the first 8 months after PALS certification; after 8 months, however, their performance as team leaders markedly declined. This is likely related to insufficient exposure to real emergencies and codes that would reinforce the clinical skills and confidence essential for leading a team during resuscitation. Pediatric residents may need to assume the role of team leader in pediatric codes, especially during night shifts when supervising attending physicians are not immediately available, and the team leader has the most important role in a code. The decline in team leader skills we observed over time, together with prior research,18,19 therefore highlights the need for more frequent and focused retraining, particularly in team leader skills.

In an attempt to reduce the loss of PALS knowledge and skills, some programs are now introducing regular mock code sessions and observing positive effects on residents’ knowledge retention and team leadership abilities. For instance, after implementing simulation sessions in their curriculum, Couloures and Allen20 demonstrated that pediatric residents performed better as team leaders and felt more confident in this role. The study also concluded that scripted debriefing sessions led by an AHA-certified PALS instructor had a positive effect on the team leader’s performance. Collectively, these findings suggest that increasing the number of simulation sessions led and debriefed by AHA PALS instructors in pediatric residency programs may help residents retain the knowledge and skills necessary to lead a code successfully.

Additionally, Prince et al21 restructured their hospital code team to improve team member dynamics as well as the efficiency and success of codes. Team members were assigned roles and trained for 3 months before the restructured code team was implemented, and the role of team leader was given only to physicians. At the 2-year follow-up survey, the authors identified significant improvements in confidence in team leader–specific skills.21 Furthermore, a study of adult cardiac arrest by Yeung et al22 demonstrated that good team leadership skills had a positive impact on the code team’s efficiency and teamwork.

The loss of theoretical knowledge we identified after PALS certification, coupled with the progressive decrease in skill performance, particularly in team leader skills, is of great concern. Given that the decline in PALS theoretical knowledge and clinical skills was already apparent within the first 8 months, well before the 2-year recertification, our data support the notion that the 2-year interval is too long for pediatric residents to retain their PALS knowledge and skills. As such, additional training for pediatric residents during the 2-year interim period may be necessary to maintain an adequate level of PALS knowledge and skills. However, this would require training programs to have additional personnel and funding, which may not be practical or cost-effective. A suitable alternative might be to implement frequent, regular mock code simulation sessions for residents during their training using AHA PALS material, preferably with AHA PALS instructors running the mock codes. Moreover, pediatric residency training programs should encourage their chief residents, hospitalists, intensivists, and ER physicians to become AHA PALS instructors and to play an active role in conducting regular mock codes.

Limitations

Several limitations of our study should be noted. First, this study was conducted at a single urban academic institution with only a small number of residents in each group; a multicenter study including more residents would increase the study’s validity and generalizability. Second, our evaluators were not blinded to the study objectives. Third, because residents are required to recertify for PALS every 2 years and our focus was on retention of knowledge and skills after certification or recertification, the groups were not homogeneous with regard to PGY level, which could have confounded the results. Additionally, we were not able to control for other confounders, such as prior training (eg, some international medical graduates [IMGs] had prior residency training) or the rotation at the time of testing; residents rotating through the ER, PICU, or NICU would likely have an advantage over those rotating in outpatient or subspecialty clinics. Finally, we did not assess the quality of the cardiopulmonary resuscitation delivered during the sessions, which would have provided additional information about residents’ PALS performance. Because this skill is a vital part of the resuscitation effort, it should be included in the skills assessment in future studies.

Conclusions

The AHA PALS course teaches pediatric residents the knowledge and skills that are necessary to prepare them for pediatric emergencies. The results of the present study showed that pediatric residents lose some of their knowledge and skills during the 2-year period between certifications. As a result, residents are likely unprepared to deal with pediatric emergencies, which may subsequently result in poor outcomes. More studies testing a larger group of pediatric residents are needed to objectively delineate the causes of this decline in knowledge and skills and to evaluate feasible interventions such as regular mock codes and the use of high-fidelity simulators to improve residents’ retention of knowledge and skill performance.

Footnotes

Authors’ Note: All study participants provided informed consent, and the study design was approved by the appropriate ethics review board.

Author Contributions: SD: Contributed to conception and design; contributed to analysis; drafted the manuscript; critically revised the manuscript; gave final approval; agrees to be accountable for all aspects of work ensuring integrity and accuracy.

MR: Contributed to conception and design; drafted the manuscript; gave final approval; agrees to be accountable for all aspects of work ensuring integrity and accuracy.

MO: Contributed to conception and design; drafted the manuscript; gave final approval; agrees to be accountable for all aspects of work ensuring integrity and accuracy.

CG: Contributed to conception and design; contributed to analysis; drafted the manuscript; critically revised the manuscript; gave final approval; agrees to be accountable for all aspects of work ensuring integrity and accuracy.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) received no financial support for the research, authorship, and/or publication of this article.

ORCID iD: Sule Doymaz https://orcid.org/0000-0003-3214-7210

References

1. Cheng A, Rodgers DL, van der Jagt E, Eppich W, O’Donnell J. Evolution of the Pediatric Advanced Life Support course: enhanced learning with a new debriefing tool and Web-based module for Pediatric Advanced Life Support instructors. Pediatr Crit Care Med. 2012;13:589-595.

2. Bardella IJ. Pediatric advanced life support: a review of the AHA recommendations. American Heart Association. Am Fam Physician. 1999;60:1743-1750.

3. ACGME program requirements for Graduate Medical Education in pediatrics. https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/320_Pediatrics_2019_TCC.pdf?ver=2019-03-28-090932-520

4. de Caen AR, Berg MD, Chameides L, et al. Part 12: Pediatric Advanced Life Support: 2015 American Heart Association guidelines update for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2015;132(18 suppl 2):S526-S542.

5. Waisman Y, Amir L, Mimouni M. Does the pediatric advanced life support course improve knowledge of pediatric resuscitation? Pediatr Emerg Care. 2002;18:168-170.

6. Waisman Y, Amir L, Mor M, Mimouni M. Pediatric Advanced Life Support (PALS) courses in Israel: ten years of experience. Isr Med Assoc J. 2005;7:639-642.

7. Hunt EA, Duval-Arnould JM, Nelson-McMillan KL, et al. Pediatric resident resuscitation skills improve after “rapid cycle deliberate practice” training. Resuscitation. 2014;85:945-951.

8. Quan L, Shugerman RP, Kunkel NC, Brownlee CJ. Evaluation of resuscitation skills in new residents before and after pediatric advanced life support course. Pediatrics. 2001;108:E110.

9. Grant EC, Marczinski CA, Menon K. Using pediatric advanced life support in pediatric residency training: does the curriculum need resuscitation? Pediatr Crit Care Med. 2007;8:433-439.

10. Mancini ME, Kaye W. The effect of time since training on house officers’ retention of cardiopulmonary resuscitation skills. Am J Emerg Med. 1985;3:31-32.

11. Mills DM, Wu CL, Williams DC, King L, Dobson JV. High-fidelity simulation enhances pediatric residents’ retention, knowledge, procedural proficiency, group resuscitation performance, and experience in pediatric resuscitation. Hosp Pediatr. 2013;3:266-275.

12. Stone K, Reid J, Caglar D, et al. Increasing pediatric resident simulated resuscitation performance: a standardized simulation-based curriculum. Resuscitation. 2014;85:1099-1105.

13. Dugan MC, McCracken CE, Hebbar KB. Does simulation improve recognition and management of pediatric septic shock, and if one simulation is good, is more simulation better? Pediatr Crit Care Med. 2016;17:605-614.

14. American Heart Association. Pediatric Advanced Life Support—Provider Manual. Dallas, TX: American Heart Association; 2016.

15. http://heartcentertraining.com/wpcontent/uploads/2013/11/PALS-Pretest.pdf.

16. https://appd.org/ed_res/Handbooks/handbooks1.cfm

17. Cooper S, Wakelam A. Leadership of resuscitation teams: “Lighthouse Leadership.” Resuscitation. 1999;42:27-45.

18. Cant RP, Porter JE, Cooper SJ, Roberts K, Wilson I, Gartside C. Improving the non-technical skills of hospital medical emergency teams: the Team Emergency Assessment Measure (TEAM™). Emerg Med Australas. 2016;28:641-646.

19. McKay A, Walker ST, Brett SJ, Vincent C, Sevdalis N. Team performance in resuscitation teams: comparison and critique of two recently developed scoring tools. Resuscitation. 2012;83:1478-1483.

20. Couloures KG, Allen C. Use of simulation to improve cardiopulmonary resuscitation performance and code team communication for pediatric residents. MedEdPORTAL. 2017;13:10555.

21. Prince CR, Hines EJ, Chyou PH, Heegeman DJ. Finding the key to a better code: code team restructure to improve performance and outcomes. Clin Med Res. 2014;12:47-57.

22. Yeung JH, Ong GJ, Davies RP, Gao F, Perkins GD. Factors affecting team leadership skills and their relationship with quality of cardiopulmonary resuscitation. Crit Care Med. 2012;40:2617-2621.