Abstract

Objective

This study tested the validity, accuracy, and efficiency of the Orthopaedic Minimal Data Set Episode of Care (OME) compared with the traditional operative report in arthroscopic surgery for shoulder instability. As of November 2017, OME had successfully captured baseline data on 97% of 18 700 eligible cases.

Materials and Methods

This study analyzes 100 cases entered into OME through smartphones by 12 surgeons at a single institution from February to October 2015. A blinded reviewer extracted the same variables from the operative report into a separate database. Completion rates and agreement were compared using raw percentages and McNemar’s test (with continuity correction). Agreement between nominal variables was assessed by unweighted Cohen’s kappa, and agreement between continuous variables was assessed by a concordance correlation coefficient. Efficiency was assessed by median time to complete.

Results

Of 37 variables, OME demonstrated equal or higher completion rates for all but 1 and had significantly higher capture rates for 49% (n = 18; P < .05). Of 33 nominal variables, raw proportional agreement was >0.90 for 76% (n = 25). For 15% (n = 5), raw proportional agreement was perfect, but no agreement statistic could be calculated because only a single response value appeared in both the operative note and OME. The calculated agreement statistic was substantial or better (κ ≥ 0.61) for 51% (n = 17) of the 33 nominal variables. All continuous variables assessed (n = 4) demonstrated poor agreement (concordance correlation coefficient <0.90). Median time for completing OME was 103.5 (interquartile range, 80.5-151) seconds.

Conclusions

The OME smartphone data capture system routinely captured more data than the operative report and demonstrated acceptable agreement for nearly all nominal variables, yet took a median of <2 minutes to complete.

Keywords: smartphone, electronic medical record, information standardization, shoulder instability, outcomes

INTRODUCTION

In this study, the authors set out to demonstrate that the Orthopaedic Minimal Data Set Episode of Care (OME) data capture system, with its easy-to-use, smartphone-based technology and branching logic design, captures more relevant data than the traditional operative report without sacrificing accuracy (here defined as agreement with data extracted from the operative report) or efficiency (here assessed by measuring the time needed to complete OME in its entirety).

In an effort to advance national orthopedic outcome research and address the need for high-quality surgical data, the OME smartphone data collection tool was developed. OME collects baseline patient-reported outcome measures (PROMs) preoperatively on the day of surgery, as well as surgical data (eg, examination under anesthesia findings, intraoperative pathology, surgical technique, implant information) for high-volume elective orthopedic surgeries of the shoulder, hip, and knee. The same PROMs are collected 1-year postoperatively (estimated to be the time needed to reach peak function after surgery), allowing for the identification of important preoperative or intraoperative outcome predictors. OME’s goal is to reliably and consistently collect PROMs while simultaneously capturing surgical data in a way that is quicker, more standardized, and more detailed than current systems.

The incidence of arthroscopic labral surgery for shoulder instability has risen over the past decade.1–4 Current literature suggests that arthroscopic stabilization leads to improved outcomes compared with nonoperative treatment.5–7 Still, the effect that different surgical techniques and implants have on outcomes remains largely unknown. Answering these and other questions will become even more important as the American healthcare system continues to transition to value-based compensation. Because value is defined as quality divided by cost, accurately assessing the value of a procedure such as arthroscopic labral repair will play a major role in justifying its increased use.8–10 Accurately determining this value as it relates to optimized outcomes and decreased costs will almost certainly require large amounts of high-quality data that, currently, do not exist in any single database.

Examples of databases for shoulder instability surgery are few and, unfortunately, like most current large databases, are of limited utility. Such examples include the Norwegian Register for Shoulder Instability Surgery and the American College of Surgeons National Surgical Quality Improvement Program database.11,12 They rely almost exclusively on the electronic medical record (EMR) as their source, which is problematic for 2 main reasons: (1) its use of free text fields makes data extraction difficult and error prone8,10 and (2) the data that do exist are inconsistent and lack standardization, thus minimizing utility in high-quality research.

As mentioned, the data contained in the EMR are challenging to obtain and often require manual extraction by an individual going through each chart 1 note at a time.13 For research related to surgery, this process almost certainly entails reviewing the operative report for desired variables. Error rates for manual extraction of data have been reported between 8% and 23%, with accuracy differing by site, surgical specialty, care provider, and clinical area.14 Although quality assurance strategies can improve accuracy, they drive up the cost associated with research.13,14

Additionally, the EMR, and especially the operative report, are often completed using free text or dictation. Dictated reports include significant errors, and, without guided structure, they vary considerably in content and inclusion of important details.15,16 Consequently, such datasets tend to have considerable amounts of missing data.

MATERIALS AND METHODS

OME database design

The OME database is a multiplatform system of multiple REDCap databases17 designed by an interdisciplinary team of orthopedic surgeons, software developers, and administrators to capture PROMs and surgeon-entered, clinically relevant preoperative and intraoperative variables. OME is part of the standard of care for all patients undergoing elective knee, hip, and shoulder procedures, which partly explains its high adoption rate. In addition, physicians have committed to incorporating it into their workflow, and leadership has reinforced this commitment through accountability.

Patients complete a combination of validated general (eg, the Veterans Rand 12-Item Health Survey) and joint-specific outcome surveys (eg, the Penn Shoulder Score, the Kerlan-Jobe Orthopaedic Clinic Shoulder and Elbow Score) immediately before admission to the preoperative unit.18–20 One year later, patients are contacted to complete the same PROMs so that a change can be measured over time.

In addition to the PROMs collected as part of the OME database, attending surgeons enter prespecified preoperative and intraoperative variables immediately after finishing each case. This is done via a secure smartphone, and variables collected include basic demographics (eg, height, weight, age, race), as well as past surgical history (for both the operative limb and the contralateral limb), results of examination under anesthesia, and other operative variables deemed clinically relevant based on current literature or expert opinion of the clinicians involved in OME’s design.

The OME system was implemented beginning February 2015. As of November 2017, OME had successfully captured baseline data (baseline PROMs and surgeon-entered preoperative and intraoperative variables) on 97% of 18 700 eligible cases.

Integration with the EMR is one of the goals for scaling smartphone operative data collection. The aim is for OME to ultimately replace or supplement the current, individualized physician operative note in the EMR, thereby standardizing the operative details collected across the institute.

The system can also be adapted to other surgical specialties. The process begins with identifying and listing the clinically relevant data points and surgical details for a particular surgical specialty, followed by an annual review of the captured data components with the users (physicians) of the system.

OME has already been partially commercialized and can be found on the disclosures of relevant authors as “nPhase.”

In December 2018, surgeons had the opportunity to provide feedback through a user satisfaction survey with the aim of improving surgeon experience. Seventy-one surgeons, each of whom had contributed more than 10 cases to the database, completed the survey. Sixty-three percent (45 of 71) believed that OME more accurately collects major risk factors for patient outcome when compared with the operative note, and 54% (39 of 71) said that if they only had to complete one, they would choose OME over the operative note. In written feedback, surgeons highlighted the benefits of OME as “outstanding and easy to use,” “OME is the same as my structured op note for routine cases,” and “What it does nicely is capture the pertinent data from operations without filler info (positioning, prep, etc.).”

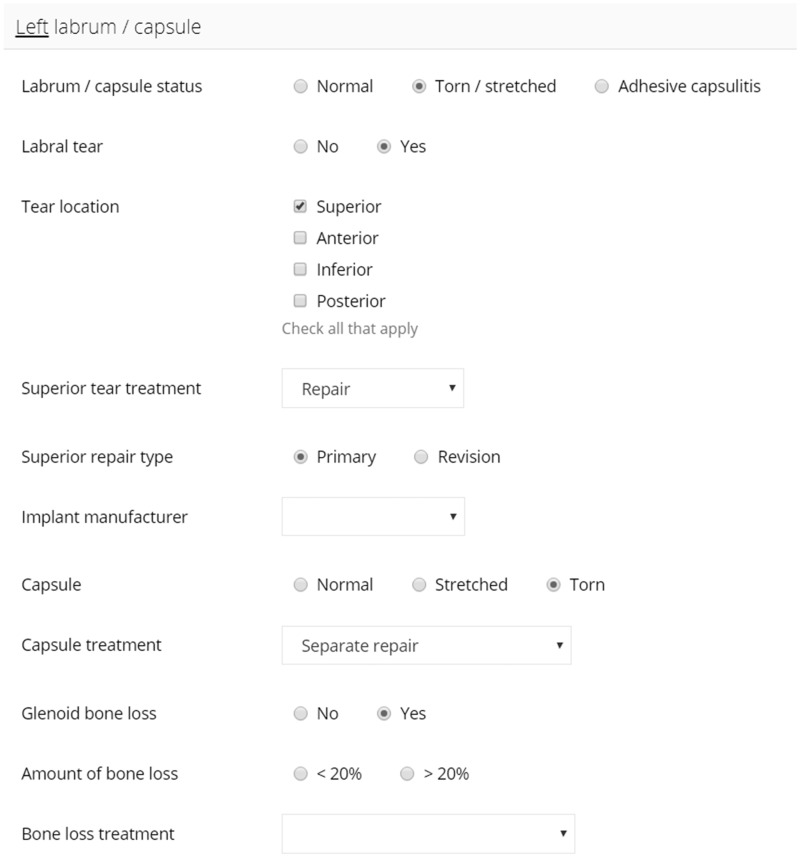

For shoulder instability surgery, OME collects 37 discrete data entry fields and utilizes branching logic to accelerate data entry while decreasing demand on working memory. For example, if the labrum is torn, the surgeon will first enter that information followed by the tear location, repair status, and repair method. Then, if anchors were used, the surgeon would be presented with fields to indicate anchor number, manufacturer, and specific implant type. If sutures were used, alternative pertinent fields would be presented instead. Branching logic guides the collection of operative detail and eliminates collection of extraneous data that is often required in EMR templates. Figure 1 illustrates the interface through which surgeons document an instability repair procedure.

Figure 1.

The Orthopaedic Minimal Data Set Episode of Care interface through which surgeons document an instability repair procedure.
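The branching logic described above can be sketched in a few lines of Python. This is only an illustration, not the REDCap configuration used by OME: the function name `next_fields` and every field name (including the placeholder `suture_details`, since the suture-specific fields are not listed in this report) are hypothetical.

```python
from typing import Dict, List


def next_fields(answers: Dict[str, object]) -> List[str]:
    """Return the follow-up fields still to be shown, given the answers so far.

    All field names are hypothetical stand-ins for the OME fields.
    """
    fields: List[str] = []
    if answers.get("labrum_torn") is True:
        # A torn labrum unlocks the tear-specific questions.
        fields += ["tear_location", "repair_status", "repair_method"]
        method = answers.get("repair_method")
        if method == "anchors":
            fields += ["anchor_number", "implant_manufacturer", "implant_type"]
        elif method == "sutures":
            # Placeholder for the alternative suture-specific fields.
            fields += ["suture_details"]
    # Fields that were already answered are not presented again.
    return [f for f in fields if f not in answers]


# No tear: no follow-up fields are presented at all.
print(next_fields({"labrum_torn": False}))
# Tear repaired with anchors: only the anchor fields remain.
print(next_fields({
    "labrum_torn": True,
    "tear_location": "anterior",
    "repair_status": "repaired",
    "repair_method": "anchors",
}))
```

A consequence visible in the sketch is that a parent field answered "no" suppresses every dependent field, so an error in the parent answer propagates to all of its children.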

The local institutional review board and information security approved OME.

Patient selection

The OME database for shoulder instability was first implemented on February 18, 2015. This study includes data from the first 100 shoulder instability repairs in the database, which were performed by 12 surgeons at a single institution from February to October 2015.

Data collection and validation

Prospective data were entered directly into OME by surgeons and exported into a study database to be evaluated for agreement with the surgeon’s dictated operative notes and implant logs. Operative note and implant log data were obtained by independent chart review from the Epic EMR system (Epic Systems, Verona, WI), and entered into a separate REDCap database. Reviewers of the EMR were blinded to the OME REDCap data. Before data analysis, the 2 datasets were scrutinized for discrepancies and any unmatched data were reexamined.

Assumptions in chart review data collection

The data were extracted with the following assumptions: (1) the operative note was used as the definitive source of data if a discrepancy occurred between the dictated operative report and implant log; (2) data were obtained from the implant log if implant details (eg, number, manufacturer, type) were not specifically stated in the operative report; (3) the position of anchors entered in REDCap was determined by the surgeon’s description of placement, and was inferred based on injury to the labrum if the surgeon did not describe anchor placement (if anchor placement could not be determined, then a comment was left that anchor placement could not be determined); and (4) omission of a variable from the operative report was considered to be an “implied negative,” and the variable of interest was treated as “absent/missing” when assessing completion rates, but treated as “no/not present/not performed” when assessing agreement between OME and the operative report.
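Assumption (4) means that the same omission is coded in 2 different ways depending on the analysis. A minimal sketch of that dual coding follows; the function names and the use of `None` to represent a variable that the operative report does not mention are illustrative assumptions, not the study's actual extraction code.

```python
from typing import Optional


def code_for_completion(extracted: Optional[str]) -> bool:
    """Completion analysis: an omitted variable counts as absent/missing."""
    return extracted is not None


def code_for_agreement(extracted: Optional[str]) -> str:
    """Agreement analysis: an omitted variable is an implied negative ("no")."""
    return extracted if extracted is not None else "no"


# A variable that the operative report never mentions (represented as None):
print(code_for_completion(None))   # False: not counted as completed
print(code_for_agreement(None))    # "no": compared against the OME entry as a negative
```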

Statistical analysis

Completion rates of the operative report and OME were compared using raw proportional agreement (whether the OME data and operative data matched) as well as McNemar’s test (with continuity correction). Agreement between nominal variables was assessed using Cohen’s kappa (κ; unweighted).21 Agreement between continuous variables was assessed by calculating a concordance correlation coefficient (CCC). Suggested interpretations of Cohen’s kappa and the CCC are listed in Table 1. Data were analyzed with R software version 3.3.3 (R Foundation for Statistical Computing, Vienna, Austria).

Table 1.

Interpretation of Cohen’s kappa and the CCC

| Cohen’s kappa (κ)a | Interpretation | CCCb | Interpretation |
|---|---|---|---|
| 0.81 ≤ κ ≤ 1.00 | Almost perfect | 0.99 ≤ CCC | Almost perfect |
| 0.61 ≤ κ ≤ 0.80 | Substantial | 0.95 < CCC ≤ 0.99 | Substantial |
| 0.41 ≤ κ ≤ 0.60 | Moderate | 0.90 < CCC ≤ 0.95 | Moderate |
| 0.21 ≤ κ ≤ 0.40 | Fair | CCC ≤ 0.90 | Poor |
| 0.0 ≤ κ ≤ 0.20 | Slight | | |
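The bands in Table 1 can be applied mechanically. The sketch below is one way to do so; the function names are hypothetical, values falling between 2 printed bands (eg, κ = 0.805) are assigned to the lower band as a simplifying assumption, and κ < 0 is labeled "Poor" as in the Results.

```python
def interpret_kappa(kappa: float) -> str:
    """Map Cohen's kappa to the descriptive bands of Table 1."""
    if kappa >= 0.81:
        return "Almost perfect"
    if kappa >= 0.61:
        return "Substantial"
    if kappa >= 0.41:
        return "Moderate"
    if kappa >= 0.21:
        return "Fair"
    if kappa >= 0.0:
        return "Slight"
    return "Poor"  # kappa below zero, as labeled in the Results


def interpret_ccc(ccc: float) -> str:
    """Map a concordance correlation coefficient to the bands of Table 1."""
    if ccc >= 0.99:
        return "Almost perfect"
    if ccc > 0.95:
        return "Substantial"
    if ccc > 0.90:
        return "Moderate"
    return "Poor"


print(interpret_kappa(0.795))  # Substantial
print(interpret_ccc(0.815))    # Poor
```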

RESULTS

Basic demographics

Of the 100 patients included in this study, 80% were men, and the mean age at the time of surgery was 23.7 ± 8.0 years.

Completion rate

Operative note and OME completion rates for all 37 variables compared are listed in Table 2. Completion rates for OME were at least equal to those of the operative note for all variables except capsule treatment, and significantly higher for 49% (n = 18) of the variables assessed (P < .05).

Table 2.

Operative note and OME variable completion rates

| Variable | Operative note (%) | OME (%) | P value |
|---|---|---|---|
| Operative limb | 99 | 100 | >.99 |
| Left shoulder past surgical history | 7 | 100 | <.001 |
| Right shoulder past surgical history | 6 | 100 | <.001 |
| Labrum/capsule status | 99 | 100 | >.99 |
| Any labral tear | 96 | 100 | .134 |
| Superior labral tear | 95 | 100 | .074 |
| Anterior labral tear | 95 | 100 | .074 |
| Inferior labral tear | 95 | 100 | .074 |
| Posterior labral tear | 95 | 100 | .074 |
| Superior labral tear | | | |
| Tear treatment | 19 | 19 | >.99 |
| Repair type | 13 | 15 | .683 |
| Number of anchors | 12 | 15 | .45 |
| Implant manufacturer | 13 | 15 | .683 |
| Implant make and model | 13 | 15 | .683 |
| Anterior labral tear | | | |
| Tear treatment | 61 | 68 | .07 |
| Repair type | 60 | 67 | .07 |
| Number of anchors | 56 | 67 | .01 |
| Implant manufacturer | 58 | 67 | .027 |
| Implant make and model | 56 | 67 | .01 |
| Inferior labral tear | | | |
| Tear treatment | 9 | 29 | <.001 |
| Repair type | 9 | 26 | <.001 |
| Number of anchors | 4 | 26 | <.001 |
| Implant manufacturer | 5 | 26 | <.001 |
| Implant make and model | 5 | 26 | <.001 |
| Posterior labral tear | | | |
| Tear treatment | 38 | 39 | >.99 |
| Repair type | 37 | 38 | >.99 |
| Number of anchors | 34 | 38 | .343 |
| Implant manufacturer | 35 | 38 | .505 |
| Implant make and model | 34 | 38 | .343 |
| Capsule | 62 | 100 | <.001 |
| Capsule treatment | 59 | 40 | .002 |
| Glenoid bone loss | 57 | 100 | <.001 |
| Amount of bone loss | 9 | 18 | .027 |
| Bone loss treatment | 7 | 18 | .006 |
| Hill-Sachs lesion | | | |
| Presence | 72 | 100 | <.001 |
| Lesion type | 13 | 28 | .001 |
| Lesion treatment | 7 | 28 | <.001 |

P values were calculated using McNemar’s test with continuity correction.

OME: Orthopaedic Minimal Data Set Episode of Care.
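As a worked check of the P values in Table 2, McNemar’s test with continuity correction can be written with only the Python standard library. The sketch assumes the usual statistic, (|b − c| − 1)² / (b + c), compared against a chi-square distribution with 1 degree of freedom, where b and c are the counts of records completed in only 1 of the 2 sources; the function name is hypothetical. For rows in which OME is complete for all 100 records, every discordant record must be complete in OME only, so b = 0 and c = 100 minus the operative note count.

```python
import math


def mcnemar_cc(b: int, c: int) -> float:
    """Two-sided P value for McNemar's test with continuity correction.

    b: records completed in the operative note only
    c: records completed in OME only
    """
    if b + c == 0:
        return 1.0  # no discordant pairs, nothing to test
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


# Operative limb: 99 vs 100 completed -> b = 0, c = 1
print(round(mcnemar_cc(0, 1), 3))   # 1.0, reported as >.99
# Any labral tear: 96 vs 100 completed -> b = 0, c = 4
print(round(mcnemar_cc(0, 4), 3))   # 0.134
# Superior labral tear: 95 vs 100 completed -> b = 0, c = 5
print(round(mcnemar_cc(0, 5), 3))   # 0.074
```

Rows in which neither source is complete for all records cannot be checked this way, because the split of discordant records between b and c is not reported.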

Agreement

Raw agreement proportions and calculated Cohen’s unweighted kappa values for each comparison made for the nominal variables collected (n = 33) are listed in Table 3. For 15% (n = 5) of these variables, raw proportional agreement was perfect, but an agreement statistic could not be calculated because only a single response value was present in both the operative report and OME. In total, 76% (n = 25) of the nominal variables demonstrated raw proportional agreement >90%. Among the comparisons for which an agreement statistic could be calculated, 42% (n = 14) of the 33 nominal variables demonstrated almost perfect agreement (0.81 ≤ κ ≤ 1.00) and 9% (n = 3) demonstrated substantial agreement (0.61 ≤ κ ≤ 0.80), meaning that the calculated agreement statistic was substantial or better (κ ≥ 0.61) for 51% (n = 17) of the 33 nominal variables. Six percent (n = 2) demonstrated moderate agreement (0.41 ≤ κ ≤ 0.60), 9% (n = 3) demonstrated fair agreement (0.21 ≤ κ ≤ 0.40), 15% (n = 5) demonstrated slight agreement (0.0 ≤ κ ≤ 0.20), and 3% (n = 1) demonstrated poor agreement (κ < 0.00).

Table 3.

Agreement between the operative note and OME among nominal variables

| Variable | Records used | Raw proportional agreement | κ | 95% CI |
|---|---|---|---|---|
| Operative limb | 99 | 1.00 | 1.000 (AP) | 1.000, 1.000 |
| Left shoulder past surgical history | 100 | 0.98 | 0.846 (AP) | 0.636, 1.000 |
| Right shoulder past surgical history | 100 | 0.98 | 0.823 (AP) | 0.579, 1.000 |
| Labrum/capsule status | 99 | 1.00 | N/Aa | N/A |
| Any labral tear | 96 | 0.99 | 0.000 (SL) | –1.000, 1.000 |
| Superior labral tear | 95 | 0.95 | 0.832 (AP) | 0.689, 0.975 |
| Anterior labral tear | 95 | 0.93 | 0.836 (AP) | 0.720, 0.953 |
| Inferior labral tear | 95 | 0.81 | 0.385 (F) | 0.129, 0.641 |
| Posterior labral tear | 95 | 0.95 | 0.890 (AP) | 0.796, 0.984 |
| Superior labral tear | | | | |
| Tear treatment | 16 | 0.94 | 0.846 (AP) | 0.554, 1.000 |
| Repair type | 11 | 0.91 | 0.000 (SL) | –1.000, 1.000 |
| Implant manufacturer | 11 | 1.00 | N/Aa | N/A |
| Implant make and model | 11 | 0.82 | 0.290 (F) | –0.599, 1.000 |
| Anterior labral tear | | | | |
| Tear treatment | 59 | 0.98 | 0.000 (SL) | –1.000, 1.000 |
| Repair type | 58 | 1.00 | 1.000 (AP) | 1.000, 1.000 |
| Implant manufacturer | 56 | 1.00 | N/Aa | N/A |
| Implant make and model | 54 | 0.91 | 0.795 (SU) | 0.624, 0.966 |
| Inferior labral tear | | | | |
| Tear treatment | 8 | 1.00 | N/Aa | N/A |
| Repair type | 8 | 0.88 | 0.000 (SL) | –1.000, 1.000 |
| Implant manufacturer | 5 | 1.00 | N/Aa | N/A |
| Implant make and model | 5 | 1.00 | 1.000 (AP) | 1.000, 1.000 |
| Posterior labral tear | | | | |
| Tear treatment | 35 | 0.97 | 0.493 (M) | –0.487, 1.000 |
| Repair type | 34 | 0.97 | 0.785 (SU) | 0.369, 1.000 |
| Implant manufacturer | 32 | 1.00 | 1.000 (AP) | 1.000, 1.000 |
| Implant make and model | 31 | 0.97 | 0.941 (AP) | 0.827, 1.000 |
| Capsule | 62 | 0.47 | 0.088 (SL) | –0.125, 0.301 |
| Capsule treatment | 33 | 0.85 | –0.065 (P) | –0.924, 0.795 |
| Glenoid bone loss | 57 | 0.95 | 0.868 (AP) | 0.722, 1.000 |
| Amount of bone loss | 7 | 1.00 | 1.000 (AP) | 1.000, 1.000 |
| Bone loss treatment | 18 | 0.50 | 0.344 (F) | 0.041, 0.647 |
| Hill-Sachs lesion | | | | |
| Presence | 72 | 0.90 | 0.800 (SU) | 0.659, 0.941 |
| Lesion type | 11 | 0.82 | 0.421 (M) | –0.305, 1.000 |
| Lesion treatment | 28 | 1.00 | 1.000 (AP) | 1.000, 1.000 |

Agreement was based on Cohen’s κ statistic.

AP: almost perfect agreement; CI: confidence interval; F: fair agreement; M: moderate agreement; N/A: Not applicable; OME: Orthopaedic Minimal Data Set Episode of Care; P: poor agreement; SL: slight agreement; SU: substantial agreement.

a Agreement statistics cannot be calculated when the operative report and OME are in complete agreement and only 1 response value appears in each data source.
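Two features of Table 3 follow directly from how unweighted Cohen’s kappa is defined, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed and p_e the chance-expected agreement. The sketch below illustrates them with a hypothetical function name and hypothetical response vectors: the first vector pair is merely one distribution consistent with the values reported for "Any labral tear" (96 records, raw agreement 0.99, κ = 0.000) and is not the study data.

```python
from collections import Counter
from typing import Optional, Sequence


def cohen_kappa(a: Sequence[str], b: Sequence[str]) -> Optional[float]:
    """Unweighted Cohen's kappa; returns None when it is undefined."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("inputs must be non-empty and of equal length")
    # Observed agreement: share of records with identical responses.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance-expected agreement from the marginal response frequencies.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[k] * count_b[k] for k in set(count_a) | set(count_b)) / n ** 2
    if p_e == 1.0:
        return None  # both sources contain a single identical response value
    return (p_o - p_e) / (1 - p_e)


# Hypothetical case 1: one source is all "yes", the other has a single "no".
operative = ["yes"] * 96
ome = ["yes"] * 95 + ["no"]
print(cohen_kappa(operative, ome))   # 0.0 despite raw agreement of 95/96

# Hypothetical case 2: both sources contain only "yes".
print(cohen_kappa(["yes"] * 99, ["yes"] * 99))   # None, reported as N/A
```

In the first case the expected agreement equals the observed agreement, so κ is 0 even though only 1 record disagrees; in the second case the denominator 1 − p_e is 0, so no statistic exists.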

The raw agreement between the operative note and OME, as well as the CCC calculated for each comparison, is listed in Table 4. Of the continuous variables compared (n = 4), all demonstrated poor agreement based on the CCC (<0.90).

Table 4.

Agreement between the operative note and OME among continuous variables

| Variable | Records used | Raw proportional agreement | CCC | 95% CI |
|---|---|---|---|---|
| Superior anchors | 10 | 0.8 | 0.750 (P) | 0.273, 0.931 |
| Anterior anchors | 54 | 0.83 | 0.815 (P) | 0.703, 0.887 |
| Inferior anchors | 4 | 0.5 | 0.286 (P) | –0.310, 0.720 |
| Posterior anchors | 31 | 0.74 | 0.780 (P) | 0.594, 0.887 |

CCC: concordance correlation coefficient; CI: confidence interval; OME: Orthopaedic Minimal Data Set Episode of Care; P: poor agreement.
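To show how sensitive the CCC is in small samples, the sketch below implements Lin’s concordance correlation coefficient, 2·s_xy / (s_x² + s_y² + (mean_x − mean_y)²), using 1/n variances. The function name is hypothetical, the choice of 1/n rather than 1/(n − 1) is an assumption (the estimator used in the study’s R analysis is not stated), and the anchor counts are invented for illustration only.

```python
from statistics import fmean
from typing import Sequence


def lins_ccc(x: Sequence[float], y: Sequence[float]) -> float:
    """Lin's concordance correlation coefficient with 1/n (population) moments."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_x, mean_y = fmean(x), fmean(y)
    var_x = sum((v - mean_x) ** 2 for v in x) / n
    var_y = sum((v - mean_y) ** 2 for v in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    return 2 * cov_xy / (var_x + var_y + (mean_x - mean_y) ** 2)


# Hypothetical anchor counts for 10 cases; the sources differ in the last case only.
operative_note = [2, 3, 3, 4, 3, 2, 3, 4, 3, 3]
ome_entry = [2, 3, 3, 4, 3, 2, 3, 4, 3, 5]

raw_agreement = sum(a == b for a, b in zip(operative_note, ome_entry)) / 10
print(raw_agreement)                                   # 0.9
print(round(lins_ccc(operative_note, ome_entry), 3))   # 0.667
```

In this invented example, a single discrepancy of 2 anchors in 10 cases leaves raw agreement at 0.90 but lowers the CCC to about 0.67, which falls in the "poor" band of Table 1.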

Time

The median time to complete data entry into the OME database was 103.5 (interquartile range, 80.5-151) seconds per case. Provider completion time was automatically logged and recorded upon each case submission.

DISCUSSION

This study’s purpose was to compare OME, a novel smartphone-based electronic data capture system, with the operative report with regard to completion, defined as the presence of prespecified clinically relevant variables, and to assess the agreement between data extracted from the narrative operative report and that collected by OME. Last, we quantified the efficiency of OME by measuring the time needed to complete all appropriate data fields.

Compared with the operative report, OME demonstrated equal or higher rates of completion for all variables except capsule treatment, and rates of completion were significantly higher for 49% (n = 18; P < .05) of the variables collected. The completion rate for capsule treatment was significantly higher for the operative report than for OME (P = .002). This may be attributed to the OME branching logic, which is intended to minimize unnecessary data elements (eg, it does not ask about posterior labral tear repair type when no posterior labral tear was observed during the case). It is possible that capsule pathology existed and was documented in the operative report, but the attending surgeon mistakenly omitted capsular pathology when completing OME. Due to branching logic, the capsule treatment data entry field would then not be presented to the attending surgeon, and, consequently, capsule treatment would be absent from the OME record. The authors view this as a demonstration of how the system’s branching logic isolates clinically relevant data points from additional information that is included in an operative report but not deemed necessary as part of the surgical treatment. Despite this isolated finding, the overall trend suggests that OME captures clinically relevant data points at significantly higher rates than the operative report.

In addition to outperforming the operative report with regard to completion rates, OME data showed considerable agreement with the nominal data obtained from the operative report. The raw proportional agreement between the operative report and OME exceeded 90% for 76% (n = 25) of the nominal variables collected. Furthermore, 61% (17 of 28) of the nominal variables for which an agreement statistic could be calculated, or 51% of all 33 nominal variables, demonstrated statistical agreement that was substantial or better (κ ≥ 0.61). This indicates that OME is capable of collecting large amounts of data accurately. Additionally, OME took <2 minutes to complete for most cases, suggesting that OME is not only accurate and complete but also efficient.

Despite the high accuracy demonstrated with the nominal data, some discrepancies were found when comparing the continuous data (eg, number of anchors used in a given location of the labral repair) collected via OME with the data extracted from the operative report. The poor agreement between these 2 sources indicated by the CCC <0.90 should be viewed alongside the raw proportional agreement, which exceeded 73% for 3 of the 4 continuous variables. This indicates that the operative report and OME were concordant in the majority of cases, but the discrepancies that did occur tended to be large. The low CCC values may be a byproduct of the small sample sizes used for this portion of the analysis, as 1 or 2 discrepancies could easily lower the CCC below the threshold for acceptable agreement.

One source of confusion that may explain a portion of the discrepancies observed between the operative report and OME is the use of a variety of descriptive conventions by some of the participating surgeons. For example, the “clock face” was commonly used to describe location of pathology in the dictated operative report, while OME displayed “check all that apply” featuring discrete options of “superior,” “anterior,” “inferior,” and “posterior” location. The “5 o’clock” location, for example, may elicit a checkbox of anterior, inferior, or both depending on the surgeon or the blinded chart reviewer. In the future, this problem could be ameliorated by amending the current OME options to include suggested positions on the clock face (eg, “superior; 10 to 2”).

In orthopedic research, retrospective data are typically obtained from the operative report. Various shortcomings to the operative note exist, including underreporting of quantitative variables, wide variation in content, and lack of quality and precision of details.23,24 Operative notes contain errors, especially when dictated by residents or dictated late, and, furthermore, dictated free text is prone to errors in data extraction, further magnifying data quality issues.25–27 Generally, retrospective data are considered less reliable and valid than prospective data, which contributes to the difference in strength between most retrospective and prospective research.28 OME remedies these problems by ensuring efficient, prospective collection of a standardized dataset that not only includes more clinically relevant data than the operative report, but also eliminates the need for data extraction, as all data are automatically exported to a database, making it readily available for high-quality research.

Previous research has shown electronic synoptic templates and dropdown menus significantly improve operative note data by “enforcing” the inclusion of commonly omitted details.29,30 This was consistent with our findings that, apart from 1 category, OME collected more data than the operative report. Dropdown menus incorporated into the OME user interface likely serve as memory aids for important operative data.29,30 Also, because the OME system will not accept blank fields in its branching logic, all cases are entered with no missing data. Thus, key risk factors for shoulder instability outcomes research are entered for every case.18–20

Limitations

Currently, no definitive gold standard for recording surgical data exists, although the operative report is the most common documentation method. Thus, it is impossible to fully reconcile OME data with actual operative actions, and, consequently, to assess the true accuracy of the OME database. This study shows, however, that OME data are consistent with present operative documentation methods while exceeding these methods in information captured.

CONCLUSION

The OME smartphone data capture system can be used to quickly and efficiently capture important procedural data. OME’s use of discrete data entry fields and branching logic and inability to submit incomplete or missing data resulted in significantly higher rates of data collection for most fields when compared with the operative report. Despite being in agreement with the operative report and capturing significantly more data, OME took <2 minutes to complete for most cases. The OME smartphone data capture system is an efficient and effective method of data capture for arthroscopic shoulder instability repair and has the potential to serve as an important research tool in the future.

FUNDING

Research reported in this publication was partially supported by the National Institute of Arthritis and Musculoskeletal and Skin Diseases of the National Institutes of Health under Award Number R01AR053684 (to KPS) and under award number K23AR066133, which supported a portion of MHJ’s professional effort. The content is solely the responsibility of the authors and does not necessarily represent official views of the National Institutes of Health.

AUTHOR CONTRIBUTIONS

JM was involved in conception or design of work; acquisition of data; drafting work; revising work; final approval; agreement to be accountable. GJS was involved in conception or design of work; interpretation of data; revising of work; final approval; agreement to be accountable. LF was involved in acquisition of data; revising of work; final approval; agreement to be accountable. KH was involved in acquisition of data; revising of work; final approval; agreement to be accountable. CMH was involved in acquisition of data; revising of work; final approval; agreement to be accountable. MHJ was involved in acquisition of data; revising of work; final approval; agreement to be accountable. AM was involved in acquisition of data; revising of work; final approval; agreement to be accountable. ER was involved in acquisition of data; revising of work; final approval; agreement to be accountable. JR was involved in acquisition of data; revising of work; final approval; agreement to be accountable. MS was involved in acquisition of data; revising of work; final approval; agreement to be accountable. PS was involved in acquisition of data; revising of work; final approval; agreement to be accountable. JFV was involved in acquisition of data; revising of work; final approval; agreement to be accountable. KPS was involved in conception/design of work, acquisition and interpretation of data; revising of work; final approval; agreement to be accountable.

ACKNOWLEDGMENTS

Thank you to the Cleveland Clinic orthopedic patients, staff, and research personnel whose efforts related to regulatory, data collection, subject follow-up, data quality control, analyses, and manuscript preparation have made this consortium successful. Thank you to Michael Kattan and Alex Milinovich for the development of OME; to statistician William Messner; and to orthopedic surgeons Thomas Anderson, Peter Evans, Joseph Iannotti, Joseph Scarcella, and James Williams for data contribution. Also thank you to Brittany Stojsavljevic, editor assistant, Cleveland Clinic Foundation, for editorial management.

CONFLICT OF INTEREST STATEMENT

GJS reports other from nPhase during the conduct of the study. MHJ reports grants from the National Institutes of Health, personal fees from Samumed, and personal fees from Journal of Bone and Joint Surgery, outside the submitted work. ER reports grants and personal fees from Depuy Synthes, personal fees from DJO Surgical, personal fees from Journal of Bone and Joint Surgery, outside the submitted work. JR reports personal fees from Smith and Nephew, outside the submitted work. KPS reports other from nPhase during the conduct of the study; other from Smith & Nephew Endoscopy, other from DonJoy Orthopaedics, other from National Football League (NFL), other from Cytori, other from Mitek, other from Samumed, other from Flexion Therapeutics outside the submitted work. AM reports personal fees from Arthrosurface, other from Zimmer Biomet, Wolters Kluwer, other from Amniox Medical, other from Rock Medical, other from Linvatec Corporation, other from Stryker, other from Trice, outside the submitted work; and Board of ASES Foundation, Arthrosurface, Trice.

References

- 1. Onyekwelu I, Khatib O, Zuckerman JD, Rokito AS, Kwon YW. The rising incidence of arthroscopic superior labrum anterior and posterior (SLAP) repairs. J Shoulder Elbow Surg 2012; 21(6): 728–31.

- 2. Vogel LA, Moen TC, Macaulay AA, et al. Superior labrum anterior-to-posterior repair incidence: a longitudinal investigation of community and academic databases. J Shoulder Elbow Surg 2014; 23(6): e119–26.

- 3. Weber SC, Martin DF, Seiler JG, Harrast JJ. Superior labrum anterior and posterior lesions of the shoulder. Incidence rates, complications and outcomes as reported by. Am J Sports Med 2012; 40: 1538–43.

- 4. Zhang AL, Kreulen C, Ngo SS, Hame SL, Wang JC, Gamradt SC. Demographic trends in arthroscopic SLAP repair in the United States. Am J Sports Med 2012; 40(5): 1144–7.

- 5. Brophy RH, Marx RG. The treatment of traumatic anterior instability of the shoulder: nonoperative and surgical treatment. Arthrosc J Arthrosc Relat Surg 2009; 25(3): 298–304.

- 6. Jakobsen BW, Johannsen HV, Suder P, Søjbjerg JO. Primary repair versus conservative treatment of first-time traumatic anterior dislocation of the shoulder: a randomized study with 10-year follow-up. Arthrosc J Arthrosc Relat Surg 2007; 23(2): 118–23.

- 7. Polyzois I, Dattani R, Gupta R, Levy O, Narvani AA. Traumatic first time shoulder dislocation: surgery vs non-operative treatment. Arch Bone Jt Surg 2016; 4(2): 104–8.

- 8. Cosgrove T. Value-based health care is inevitable and that’s good. Harvard Business Review. 2013. https://hbr.org/2013/09/value-based-health-care-is-inevitable-and-thats-good. Accessed September 24, 2013.

- 9. Porter ME, Lee TH. From volume to value in health care: the work begins. JAMA 2016; 316(10): 1047–8.

- 10. Porter ME. What is value in health care? N Engl J Med 2010; 363(26): 2477–81.

- 11. Blomquist J, Solheim E, Liavaag S, et al. Shoulder instability surgery in Norway: the first report from a multicenter register, with 1-year follow-up. Acta Orthop 2012; 83(2): 165–70.

- 12. Saltzman BM, Cvetanovich GL, Bohl DD, et al. Comparisons of patient demographics in prospective sports, shoulder, and national database initiatives. Orthop J Sports Med 2016; 4(9): 232596711666558.

- 13. Capurro D, Yetisgen M, van Eaton E, Black R, Tarczy-Hornoch P. Availability of structured and unstructured clinical data for comparative effectiveness research and quality improvement: a multisite assessment. EGEMS (Wash DC) 2014; 2: 1079.

- 14. Pan L, Fergusson D, Schweitzer I, Hebert PC. Ensuring high accuracy of data abstracted from patient charts: the use of a standardized medical record as a training tool. J Clin Epidemiol 2005; 58(9): 918–23.

- 15. David GC, Chand D, Sankaranarayanan B.. Error rates in physician dictation: quality assurance and medical record production. International J Health Care QA 2014; 27: 99–110. [DOI] [PubMed] [Google Scholar]

- 16. Stewart L, Hunter JG, Wetter A, Chin B, Way LW.. Operative reports: form and function. Arch Surg 2010; 1459: 865–71. [DOI] [PubMed] [Google Scholar]

- 17. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 422: 377–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Alberta FG, ElAttrache NS, Bissell S, et al. The development and validation of a functional assessment tool for the upper extremity in the overhead athlete. Am J Sports Med 2010; 385: 903–11. [DOI] [PubMed] [Google Scholar]

- 19. Leggin BG, Michener LA, Shaffer MA, et al. The Penn shoulder score: reliability and validity. J Orthop Sports Phys Ther 2006; 363: 138–51. [DOI] [PubMed] [Google Scholar]

- 20. Selim AJ, Rogers W, Fleishman JA, et al. Updated U.S. population standard for the Veterans RAND 12-item Health Survey (VR-12). Qual Life Res 2009; 181: 43–52. [DOI] [PubMed] [Google Scholar]

- 21. Landis JR, Koch GG.. The measurement of observer agreement for categorical data. Biometrics 1977; 331: 159–74. [PubMed] [Google Scholar]

- 22. McBride GB. A Proposal of Strength of Agreement Criteria for Lin's Concordance Correlation Coefficient: NINA Client Report 2005. Hamilton, New Zealand: National Institute for Water and Atmospheric Research; 2005. [Google Scholar]

- 23. Blackburn J; Severn Audit and Research Collaborative in Orthopaedics (SARCO). Assessing the quality of operation notes: a review of 1092 operation notes in 9 UK hospitals. Patient Saf Surg 2016; 10: 5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Scherer R, Zhu Q, Langenberg P, et al. Comparison of information obtained by operative note abstraction with that recorded on a standardized data collection form. Surgery 2003; 1333: 324–30. [DOI] [PubMed] [Google Scholar]

- 25. Novitsky YW, et al. Prospective, blinded evaluation of accuracy of operative reports dictated by surgical residents. Am Surg 2005; 71: 627–31; discussion 631–22 [PubMed] [Google Scholar]

- 26.Gliklich R, Dreyer N, Leavy M. Registries for Evaluating Patient Outcomes: A User's Guide. 3rd ed Rockville, MD: Agency for Healthcare Research and Quality; 2014. [PubMed] [Google Scholar]

- 27. Wang Y, Pakhomov S, Burkart NE, Ryan JO, Melton GB.. A study of actions in operative notes. AMIA Annu Symp Proc 2012; 2012: 1431–40. [PMC free article] [PubMed] [Google Scholar]

- 28. Nagurney JT, et al. The accuracy and completeness of data collected by prospective and retrospective methods. Acad Emerg Med 2005; 129: 884–95. [DOI] [PubMed] [Google Scholar]

- 29. Ghani Y, Thakrar R, Kosuge D, Bates P.. Smart' electronic operation notes in surgery: an innovative way to improve patient care. Int J Surg 2014; 121: 30–2. [DOI] [PubMed] [Google Scholar]

- 30. Gur I, Gur D, Recabaren JA.. The computerized synoptic operative report: a novel tool in surgical residency education. Arch Surg 2012; 1471: 71–4. [DOI] [PubMed] [Google Scholar]