Abstract

Cochlear implant (CI) users can only access limited pitch information through their device, which hinders music appreciation. Poor music perception may not only be due to CI technical limitations; lack of training or negative attitudes toward the electric sound might also contribute to it. Our study investigated with an implicit (indirect) investigation method whether poorly transmitted pitch information, presented as musical chords, can activate listeners’ knowledge about musical structures acquired prior to deafness. Seven postlingually deafened adult CI users participated in a musical priming paradigm investigating pitch processing without explicit judgments. Sequences made of eight sung-chords that ended on either a musically related (expected) target chord or a less-related (less-expected) target chord were presented. The use of a priming task based on linguistic features allowed CI patients to perform fast judgments on target chords in the sung music. If listeners’ musical knowledge is activated and allows for tonal expectations (as in normal-hearing listeners), faster response times were expected for related targets than less-related targets. However, if the pitch percept is too different and does not activate musical knowledge acquired prior to deafness, storing pitch information in a short-term memory buffer predicts the opposite pattern. If transmitted pitch information is too poor, no difference in response times should be observed. Results showed that CI patients were able to perform the linguistic task on the sung chords, but correct response times indicated sensory priming, with faster response times observed for the less-related targets: CI patients processed at least some of the pitch information of the musical sequences, which was stored in an auditory short-term memory and influenced chord processing. 
This finding suggests that the signal transmitted via electric hearing led to a pitch percept that was too different from that based on acoustic hearing, so that it did not automatically activate listeners’ previously acquired musical structure knowledge. However, the transmitted signal seems sufficiently informative to lead to sensory priming. These findings are encouraging for the development of pitch-related training programs for CI patients, despite the current technological limitations of the CI coding.

Keywords: music perception, cochlear implants, implicit investigation method, auditory sensory memory, priming

Introduction

Satisfactory music perception, emotional, intentional prosody, and tonal language intelligibility remain barriers yet to be overcome by cochlear implant (CI) technology (e.g., Zeng, 2004; McKay, 2005; Gfeller et al., 2007). CIs are surgically implanted devices that directly stimulate the auditory nerve in individuals with profound deafness. However, while the current CI technology can restore speech perception in quiet for most users, the spectral information it is able to transmit is severely limited. One consequence of this limitation is that pitch perception remains very limited compared to normal-hearing (NH) listeners (see, e.g., Moore and Carlyon, 2005, for a review). Melody processing—a major component of music perception—requires some capacity for pitch processing. Various tests for music perception have been proposed to investigate CI users’ abilities to use the information provided by electric hearing. These tests include the assessment of listeners’ capacities in the discrimination of pitch changes and pitch direction, the identification of melodies and timbres, as well as the processing of rhythms and emotions (e.g., Cooper et al., 2008; Looi et al., 2008; Nimmons et al., 2008; Kang et al., 2009; Brockmeier et al., 2010; Gaudrain and Başkent, 2018; Zaltz et al., 2018). While rhythmic processing is close to normal, CI listeners have been shown to be impaired in tasks requiring pitch discrimination or pitch direction judgments, even though inter-subject variability can be large (for reviews, see McDermott, 2004; Moore and Carlyon, 2005; Drennan and Rubinstein, 2008). For example, pitch discrimination thresholds have been reported to vary from one or two semitones to two octaves, also as a function of frequency (Drennan and Rubinstein, 2008; Jung et al., 2010). Large variability has been also observed in impaired melodic contour processing, with performance ranging from chance level to close-to-normal performance (Galvin et al., 2007; Fuller et al., 2018). 
Melodic contour processing is also influenced by the timbre of the material (Galvin et al., 2008). When tested for familiar melody recognition and identification, CI listeners are impaired, but are helped by rhythm or lyrics: their difficulties in recognizing familiar melodies increase considerably when the melodies are presented without lyrics or without the familiar rhythmic pattern.

Interestingly, the poor musical outcome may not only be due to CI technical limitations in transmitting pitch. Lack of training or negative attitudes to the new electric sound might also affect music perception (e.g., Trehub et al., 2009). Indeed, useable pitch information seems to be coded, given that training and exposure have been shown to provide improvements in music perception and appreciation (Leal et al., 2003; Lassaletta et al., 2008; Fuller et al., 2018). First, several reports have indicated correlations between self-reported listening habits, such as the amount of music listening, music enjoyment, and perceptual accuracy (e.g., Gfeller et al., 2008; Migriov et al., 2009). Second, several data sets suggest the possibility of training pitch perception in prelingually deaf children (Chen et al., 2010) and postlingually deaf adults (Fu and Galvin, 2007, 2008; Galvin et al., 2007). Training has also been shown to have beneficial effects on the recognition of musical instruments (Driscoll et al., 2009), on musical performance (Yucel et al., 2009; Chen et al., 2010) and on emotion recognition (Fuller et al., 2018).

In currently available studies on CI users, pitch and music perception have been investigated with explicit testing methods requiring discrimination, identification, or recognition. These methods do not test for the implicit processing of pitch and music in CI listeners. However, implicit (indirect) investigation methods have been shown in various domains to be more powerful than explicit methods in revealing spared processing abilities. For example, experiments using the priming paradigm have provided evidence for spared implicit processes despite impaired explicit functions in either visual or auditory modalities (Young et al., 1988 for spared face recognition in a patient with prosopagnosia; Tillmann et al., 2007 for spared music processing in a patient with amusia).

The priming paradigm investigates the influence of a prime context on the processing of a target event that is either related or unrelated. Its central feature is that participants are not required to make explicit judgments on the relation between prime and target, but to make fast judgments on a perceptual feature of the target (manipulated independently of the relations of interest). Such indirect, implicit tests may shed new light on our understanding of CI listeners’ music perception. Our present study tested music perception in CI listeners with a musical priming paradigm. This behavioral experimental method does not require explicit judgments and should be more sensitive in revealing spared pitch processing in these listeners, as suggested by effects of training and exposure.

In addition, while pitch discrimination thresholds in CIs are generally too large to detect the musically relevant difference of one semitone (e.g., Gaudrain and Başkent, 2018), combining multiple tones into a chord may yield different results. Indeed, the interaction of different pitch components, as in a chord, may result in spectro-temporal patterns in the implant that are more detectable than the variation of each of the components in isolation. In other words, while pitch representation in CIs is unlikely to resemble that in NH listeners, the representation of chords may be better preserved across modes of hearing (as suggested, for instance, by Brockmeier et al., 2011).

Measuring brain responses with the methodology of electroencephalography (EEG) can also provide some indirect, implicit evidence for music perception. Koelsch et al. (2004) reported musical structure processing in postlingually deafened, adult CI users who were not required to explicitly judge the tonal structure of musical sequences1. Event-related brain potentials (ERPs) were measured for musical events that were either expected (confirming musical structures and regularities, i.e., in-key chords) or unexpected (violating musical regularities, i.e., Neapolitan sixth chord2, also referred to as “irregular” chord). Furthermore, the unexpected, irregular chord was more irregular in the fifth position of five-chord sequences (thus in the final position) than in the third position. For control NH participants, the ERPs (in particular an early right anterior negativity, referred to as ERAN) were larger for the irregular chords than for the in-key chords, and even larger for the irregular chords in the fifth position than in the third position. It is thus not only the chord per se, but also its structural position in the sequence that raised the violation. For the CI participants, the irregular chords also evoked an ERAN, suggesting that the CI users processed the musical irregularity, even though the amplitudes of the ERAN were considerably smaller than in the NH control participants (leading to a missing ERAN in the third position). According to the authors, the observed ERP patterns indicated that the neural mechanisms for music-syntactic irregularity-detection were still active in CI patients. This finding suggests that CI listeners’ knowledge about the Western tonal musical system, which they had acquired prior to deafness, can be accessed despite the poor spectral signal transmitted by the CI. 
This finding was particularly encouraging for CI users as it indicated that their brains might accurately process music, even though CI users explicitly report difficulties in discriminating and perceiving musical information.

However, since the publication of this work, the domain of music cognition and neuroscience has advanced and pointed out that musical structure violations might introduce new acoustic information in comparison to the acoustic information of the context. The introduction of this new acoustic information provides an alternative explanation to musical irregularity effects based on sensory processing (instead of cognitive processing of musical structures) (see Bigand et al., 2006; Koelsch et al., 2007). Some of the musical violations used to investigate musical structure processing introduced new notes, which had not occurred yet in the sequence. These musical violations, which confounded acoustic violations and context effects, can be explained on a sensory level only. To confirm the influence of listeners’ musical structure knowledge (beyond acoustic influences), controlled experimental material is needed. This has been done in more recent behavioral and ERP studies in NH listeners (e.g., Bigand et al., 2001; Koelsch et al., 2007; Marmel et al., 2010), but for CI listeners, this experimental approach is still missing.

Our study fills in this need by testing postlingually deaf adult CI users with experimental musical material that allows the investigation of musical structure processing without acoustic confounds (i.e., the material used in Bigand et al., 2001). The musical sequences in our experiment were eight-chord sequences, with the last chord (i.e., the target chord) being either the expected, tonally regular tonic chord (i.e., related target) or the less-expected, subdominant chord (i.e., less-related target). A cognitive hypothesis predicted faster processing for the expected tonic than for the less-expected subdominant chord. To avoid acoustic confounds, neither the tonic nor the subdominant target occurred in the sequence. Furthermore, the experimental material was constructed in such a way to contrast this cognitive hypothesis of musical structure processing with a sensory hypothesis: Even though neither the tonic nor the subdominant target chord occurred in the sequence, the pitches of the component tones of the less-related subdominant target chord occurred more frequently in the sequence than those of the related tonic target chord. Consequently, faster response times for the less-related chord than for the related chord (thus the reversed pattern of the cognitive hypothesis) would point to sensory priming (also referred to as repetition priming): Sensory information would be simply stored in a sensory memory buffer, leading to facilitated processing of repeatedly presented information. This hypothesis does not require the activation of tonal knowledge, but is based solely on the acoustic features of the presented auditory signal. Alternatively, if the coding of the pitch information transmitted by the CI was too poor to lead either to cognitive or sensory priming, no difference in response times should be observed between the related and less-related targets.

To back up the cognitive and sensory explanations of our experimental material, we present three types of analyses of the experimental material. These analyses compared the related and less-related conditions for (1) the number of shared tones (pitch classes3) between target and prime context; (2) the overlap in harmonic spectrum; and (3) the similarity of acoustic information between the target and the prime context in terms of pitch periodicity (as in Koelsch et al., 2007; Koelsch, 2009; Marmel et al., 2010)4. While these analyses are originally designed to represent the normal auditory system, we also implemented versions of (2) and (3) through a simulation of electrical stimulation by a cochlear implant.

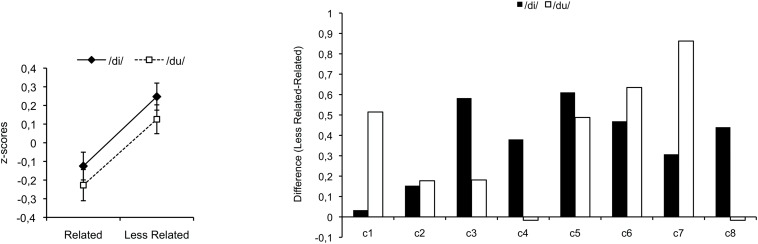

In the present musical priming study investigating CI listeners, musical sequences that ended on either the related tonic target or the less-related subdominant target (as described above) were presented as sung material, with a sequence of sung nonsense syllables (e.g., /ka/, /sha/, etc.). The last chord was sung on the syllable /di/ or /du/. Participants had to discriminate syllables by judging as fast as possible whether the last chord was sung on /di/ or /du/. This well-established implicit musical priming method allows response times to be measured, which are assumed to reflect the processing times of the last chord. For various populations of NH listeners, previous studies have shown the influence of tonal knowledge on processing speed and thus supported the cognitive hypothesis: Response times were faster for the related tonic chord than for the less-related subdominant chord. This result has been observed not only for English and French college students (musicians and non-musicians, Bigand et al., 2001; Tillmann et al., 2008), but also for 6-year-old children (Schellenberg et al., 2005), cerebellar patients (Tillmann et al., 2008), and amusic patients (Tillmann et al., 2007). Figure 1 represents this data pattern for the control group tested in Tillmann et al. (2008) (on the left) and for the individual participants (on the right), with positive values indicating faster response times for the related tonic chord than for the less-related subdominant chord. Note that we plot the individual data patterns from Tillmann et al. (2008) here because this shows the consistency of the priming pattern in control participants (particularly important as our present study did not include NH participants). Based on the conclusions of Koelsch et al. (2004), we expected to observe the same data pattern for the CI users as previously observed for NH listeners.
The faster processing of the related tonic chord would indicate that the transmitted signal of the CI is sufficient to activate listeners’ musical knowledge acquired prior to deafness. The construction of our material is such that another pattern of results is also informative of the underlying processes. The reverse data pattern, where the less-related subdominant chord is processed faster, would indicate that the transmitted signal allows accumulation of sensory information in a short-term memory buffer, which then influences processing times (based on repetition priming). Finally, if the limited spectral resolution available through implants is not sufficient to provide relevant information to the CI user’s brain, then processing times should not differ between the two priming conditions.

FIGURE 1.

Average data of the 8 control participants in Tillmann et al. (2008) on the left, and their individual data on the right [this group of control participants had a mean age of 65 (±10) years]. For comparable data patterns of groups of students, see Bigand et al. (2001) and Tillmann et al. (2008); of groups of children, see Schellenberg et al. (2005); and of another group of control participants as well as their individual data patterns, see Tillmann et al. (2007). Positive and negative values indicate facilitated processing for related and less-related targets, respectively.
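The sign convention used in Figure 1 can be illustrated with a minimal sketch. It assumes that the priming effect is the mean correct response time for less-related targets minus that for related targets; the function name, the trial format, and the example values are hypothetical and are not taken from the study.

```python
from statistics import mean

def priming_effect(trials):
    """Return mean correct RT (less-related) minus mean correct RT (related).

    `trials` is an iterable of (condition, correct, rt_ms) tuples, where
    condition is "related" or "less_related". This format is an assumption
    made for illustration only.
    """
    rts = {"related": [], "less_related": []}
    for condition, correct, rt_ms in trials:
        if correct:  # only correct responses enter the response-time analysis
            rts[condition].append(rt_ms)
    # Positive value: related targets were processed faster (cognitive priming).
    # Negative value: less-related targets were processed faster (sensory priming).
    return mean(rts["less_related"]) - mean(rts["related"])

# Made-up trials for one hypothetical listener
example_trials = [
    ("related", True, 900.0),
    ("related", True, 1000.0),
    ("related", False, 400.0),      # error trial, excluded
    ("less_related", True, 1050.0),
    ("less_related", True, 1150.0),
]
effect = priming_effect(example_trials)
```

With these made-up values the effect is positive, which corresponds to the pattern reported for NH listeners; a negative value would correspond to sensory priming.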

Materials and Methods

Participants

Seven CI patients were tested in the present experiment using their own processor without their contralateral hearing aid. They were all postlingually deafened adult CI users who were implanted unilaterally (see Table 1 for participants’ characteristics, including information on the implant type, speech processor, coding strategy, and stimulation rate). Only one of the participants (ci7) reported having some musical training (9 years, starting at the age of 11, with 8 years of piano and 1 year of guitar), and reported currently practicing music about 1 h per week. All participants provided written informed consent to the study, which was conducted in accordance with the guidelines of the Declaration of Helsinki, and approved by the local Ethics Committee (CPP Sud-Est II). They received a small honorarium for their participation.

TABLE 1.

Demographics of the seven participants.

| CI participant | Gender | Age (years) | Hearing loss: onset time | Hearing loss: age at deafness | Hearing loss: duration before implantation (years) | Implanted ear | Duration of CI use (years) | Contralateral hearing aid | Implant | Speech processor | Strategy | Stimulation rate |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ci_1 | F | 68 | Progressive | 20 | 30 | L | 1 | Y | CI24RE | Freedom SP | ACE 10 max | 1200 Hz |
| ci_2 | F | 69 | Progressive | 25 | 15 | R | 1 | Y | CI24RE | Freedom SP | ACE 12 max | 1200 Hz |
| ci_3 | F | 47 | Congenital and progressive | 10 | 38 | R | 9 | Y | CI24RE | Freedom SP | ACE 12 max | 1200 Hz |
| ci_4 | M | 32 | Early | 56 | 20 | R | 2 | Y | CI24RE | Freedom SP | ACE 10 max | 1200 Hz |
| ci_5 | F | 32 | Early | 66 | 24 | L | 1 | N | CI24RE | Freedom SP | ACE 10 max | 900 Hz |
| ci_6 | F | 30 | Progressive | 25 | 11 | L | 1 | Y | CI24M | Esprit 3G | CIS 12 channels | 900 Hz |
| ci_7 | F | 33 | Progressive | 23 | 21 | L | 1 | Y | CI24RE | Freedom SP | ACE 10 max | 1200 Hz |

Onset time refers to the onset time of profound deafness (early: deafness began in childhood, but after the development of spoken language (thus postlingually); congenital and progressive: the subject had a hearing loss at birth, but was fitted with a hearing aid at 10 years of age as the hearing loss worsened).

Materials

The 48 chord sequences of Bigand et al. (2001) were used (with permission). These eight-chord sequences ended on either the tonally related tonic chord or the less-related subdominant chord (defining the target). The tonal relatedness of the final chords (the targets) was manipulated by changing the last two chords of the musical sequences (defining either a dominant chord followed by a tonic chord, or a tonic chord followed by a subdominant chord). A further control was applied over the entire set of sequences: the material was constructed in such a way that a given chord pair (containing the target) defined the ending of the sequences in both related and less-related conditions. For example, when the first six chords (prime context) instill the key of C Major, the chord pair G-C functions as a dominant chord followed by a tonic chord. If, however, the prime context instills the key of G Major, the same chord pair functions as a tonic chord followed by a subdominant chord. Accordingly, over the experimental set, the 12 possible major chords served as related and less-related targets depending on the prime context.
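The context dependence of the final chord pair can be made concrete with a short sketch. It only encodes the standard music-theoretic relation between a major chord root and a major key (tonic on the key note, subdominant five semitones above, dominant seven semitones above); the function and variable names are hypothetical.

```python
# Pitch classes of the 12 chromatic note names (sharps only, for simplicity)
NOTE_TO_PC = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
              "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

# Interval (in semitones above the key note) -> harmonic function
FUNCTION_BY_INTERVAL = {0: "tonic", 5: "subdominant", 7: "dominant"}

def harmonic_function(chord_root, key_root):
    """Return the function of a major chord in a major key, or 'other'."""
    interval = (NOTE_TO_PC[chord_root] - NOTE_TO_PC[key_root]) % 12
    return FUNCTION_BY_INTERVAL.get(interval, "other")

def describe_pair(pair, key_root):
    """Return the functions of both chords of a final chord pair in a key."""
    return tuple(harmonic_function(root, key_root) for root in pair)

pair = ("G", "C")
in_c_major = describe_pair(pair, "C")  # related ending
in_g_major = describe_pair(pair, "G")  # less-related ending
```

The same pair G-C is labeled dominant then tonic in C Major and tonic then subdominant in G Major, which is why the same acoustic chord pair can serve in both conditions.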

Each of the first seven chords sounded for 625 ms and the target chord sounded for 1250 ms. The chord sequences were composed in such a way that the target chord never occurred in the sequence (see below for further analyses of the acoustic similarity between prime and target for the two experimental conditions). Example sequences are available as Supplemental Digital Content.

The sequences were sung on CV-syllables by sampled voice sounds (using Vocal-Writer software, Woodinville, WA, United States). Chords were generated by the simultaneous presentation of 3 or 4 synthesized utterances of the same syllable with different fundamental frequencies, which corresponded to the component tones of the chords. The succession of the synthetic syllables did not form a meaningful linguistic phrase (e.g., /da fei ku ∫o fa to kei/), and the last syllable (i.e., of the target) was either /di/ or /du/ to define the experimental task. The experimental session consisted of 50% of sequences ending on the related tonic target (25% being sung with /di/, 25% with /du/) and 50% ending on the less-related subdominant target (25% with /di/, 25% with /du/). The experiment was run on Psyscope software (Cohen et al., 1993).

Procedure

The sequences were presented over two loudspeakers (placed at about 80 cm in front of the participant, left and right from the screen of the laptop computer, thus at an azimuth of 45 degrees) at about 70 dB SPL, which was perceived as comfortable loudness level. The participants listened through the microphone of the processor using their everyday program and settings. The experiment was run in the main sound-field room of the University of Lyon CI clinic center.

The participants were asked to decide as quickly and as accurately as possible whether a chord was sung on /di/ or /du/ by pressing one of two keys. Incorrect responses were accompanied by an auditory feedback signal, and a correct response stopped the sounding of the target. Participants were first trained on 16 isolated chords (50% sung on /di/ and 50% on /du/). The training phase was repeated if the task was not understood, notably if participants had difficulty perceiving the difference between the syllables or responded too slowly. Participants were encouraged to give their response while the target chord was still sounding, but a later time-out (2800 ms) was used so as not to put too much pressure on the participants. In the next phase of the experiment, the eight-chord sequences were explained to the participants with an example sequence, and participants were asked to perform the same task on the last chord of each sequence. After four practice sequences, the 48 sequences were presented in random order twice in two blocks, separated by a short break. Two participants performed only one block (ci2, ci7). A short random-tone sequence was presented after each response to avoid carry-over effects between trials. The experiment lasted about 15 to 20 min.

Auditory Properties and Perceptual Processing

When musical violations used to investigate musical structure processing introduce new tones that have not yet occurred in the context, they confound the processing of acoustic violations with the cognitive processing of musical structures. The processing of these musical violations could thus be explained on a sensory level only, without the need for the involvement of listeners’ knowledge of musical structures (acquired prior to deafness). Experimental material must thus be constructed in such a way as to control acoustic influences and disentangle them from cognitive influences (linked to listeners’ musical structure knowledge). In this section, we first present analyses of the acoustic and estimated perceptual similarities between the prime context and the related/less-related target in three ways, as previously done in the studies investigating NH listeners. We then present simulations that take into consideration potential changes due to the involvement of the implant. None of the simulations predict facilitated processing for the related tonic chord in comparison to the less-related subdominant chord.

Analyses of the Acoustic Similarity Between the Prime Context and the Target in Related and Less-Related Conditions

To check for acoustic influences, we analyzed our material in terms of (1) the number of pitch classes shared between targets and contexts as a function of the condition (related vs. less-related), (2) the spectral overlap of targets and contexts (simulating a place coding of pitch information), and (3) periodicity overlap in auditory short-term memory (simulating a temporal coding of pitch information). These analyses simulated different plausible pitch representations (place vs. time coding) and their integration over time. All analyses showed that acoustic influences would predict facilitated processing for the less-related subdominant chord. This prediction thus contrasts with cognitive, musical structure processing, which predicts facilitated processing for the related tonic chord.

Overlap in Pitch Classes Between Target and Context

To analyze the number of pitch classes shared between the target and the first seven chords (the context), we calculated (a) for each sequence, the number of occurrences of the target’s pitch classes in the first seven chords, and (b) the average over the sequence sets in the two conditions. The resulting mean was higher for the less-related condition (14.75 ± 2.05) than for the related condition (12.25 ± 2.93), t(11) = 2.61, p = 0.03. This finding thus represents a sensory advantage for the less-related subdominant targets and contrasts with the cognitive (tonal) advantage for the related tonic targets.
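The counting in step (a) can be sketched as follows. This is an illustration under stated assumptions, not the authors' script: chords are represented as lists of MIDI note numbers, every context tone whose pitch class belongs to the target is counted once (including doubled tones), and the toy chords are invented for the example rather than taken from the experimental sequences.

```python
def pitch_class_overlap(context_chords, target_chord):
    """Count context tones whose pitch class also occurs in the target chord.

    Chords are lists of MIDI note numbers (hypothetical representation);
    the pitch class of a note is its MIDI number modulo 12.
    """
    target_pcs = {note % 12 for note in target_chord}
    return sum(
        1
        for chord in context_chords
        for note in chord
        if note % 12 in target_pcs
    )

# Toy context of three triads (C major, D minor, G major in first inversion)
toy_context = [[60, 64, 67], [62, 65, 69], [59, 62, 67]]

overlap_f_major = pitch_class_overlap(toy_context, [65, 69, 72])  # F, A, C
overlap_g_major = pitch_class_overlap(toy_context, [67, 71, 74])  # G, B, D
```

Averaging such counts over the sequences of each condition would give the two means that are compared in the text.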

Spectral Contrast

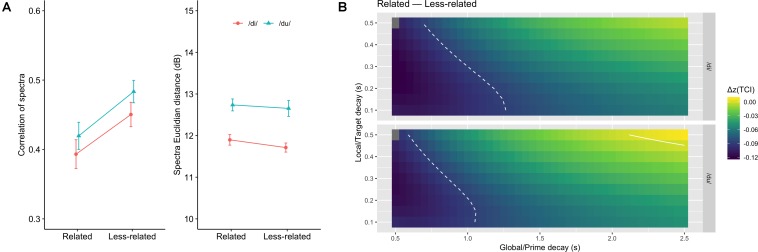

To estimate the spectral overlap between target and context, we compared the spectra obtained from the first seven chords (of each sequence) with the spectra obtained from the corresponding target chord. The spectra were obtained by averaging the spectrogram computed over either the context or the target with FFT time windows of 186 ms and 50% window overlap (93 ms). Two metrics were used to judge the similarity of prime and target spectra: a correlation and a Euclidean distance. Because correlations are very sensitive to edge effects, the spectra were limited to the range 100 to 8000 Hz, and the overall spectral slope was compensated, in each sequence, based on the average of the target and prime spectra. The Euclidean distance was calculated on the spectrum expressed in decibels. Average correlation (Fisher-transformed, Fisher, 1921) and Euclidean distance values obtained for the sequence sets in the related and less-related conditions were then compared (Figure 2A). We analyzed those results with a repeated-measures ANOVA with the factors syllable and relatedness. Correlation values were higher for the less-related condition than for the related condition [F(1,11) = 7.74, p < 0.05, effect size = 0.20], thus confirming the acoustic advantage of the less-related subdominant chord. However, while the Euclidean distance did not significantly depend on relatedness [F(1,11) = 0.75, p = 0.41, effect size = 0.02], it did depend on the nature of the target syllable [F(1,11) = 87.9, p < 0.001, effect size = 0.46]. All other effects and interactions were non-significant [ps > 0.22].

FIGURE 2.

(A) Average spectral correlation (left) and Euclidean distance (right) for the related and less-related conditions, and for the two target syllables. Higher correlations, and smaller Euclidean distances, are compatible with more sensory priming. (B) Difference in Fisher-transformed tonal contextuality index (TCI) between related and less-related conditions, as a function of syllable, and global and local decays. The solid line represents the value 0.0 where the two types of context have the same TCI. The dashed lines represent the critical limit beyond which differences can be considered significant.
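The two similarity metrics used in the spectral contrast analysis can be sketched as follows. The sketch assumes that the averaged context and target spectra are already available as equal-length lists of levels in decibels; the spectrogram computation, band limiting, and slope compensation described above are not reproduced, and all names and values are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def spectral_similarity(context_db, target_db):
    """Return (Fisher z of the correlation, Euclidean distance in dB).

    Note: math.atanh raises ValueError if the correlation is exactly +/-1.
    """
    z = math.atanh(pearson(context_db, target_db))  # Fisher transformation
    distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(context_db, target_db)))
    return z, distance

# Made-up four-bin spectra (dB)
context = [60.0, 50.0, 55.0, 40.0]
similar_target = [58.0, 49.0, 56.0, 42.0]
dissimilar_target = [40.0, 55.0, 50.0, 60.0]

z_similar, d_similar = spectral_similarity(context, similar_target)
z_dissimilar, d_dissimilar = spectral_similarity(context, dissimilar_target)
```

A target whose spectrum resembles the context yields a higher Fisher-transformed correlation and a smaller distance, which is the pattern described as compatible with more sensory priming.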

Pitch Periodicity in a Model of Auditory Short-Term Memory: Tonal Contextuality Index

To further test the acoustic influences in the experimental material, we used the model of Leman (2000) (as implemented in the IPEMtoolbox v1.02 by Leman et al., 2005), which stores auditory information in a short-term memory buffer. Acoustic input is first processed in a frontend module mimicking the peripheral auditory system (Van Immerseel and Martens, 1992). The output is then processed with a pitch module that extracts periodicities using an autocorrelation approach. Finally, the periodicity output is passed into a memory module. This model relies on the comparison of pitch images of two echoic memories, which differ in duration. With longer memory decays, the pitch images are smeared out, so that the images reflect the context echoic memory, while with a shorter decay the pitch images reflect the target echoic memory. Measuring the similarity between the two images by computing their correlation gives an indication of how well the target (local) pitch image acoustically fits with the given (global) context. This measure is referred to as the Tonal Contextuality Index (TCI). In our case, to avoid choosing a specific sample point during the target at which to compare the periodicity patterns, we averaged them over the duration of the target, both for the short (local) and long (global) memory decays, and correlated these summary images. Regarding the choice of the memory decay durations, as no precise information about the dynamics of auditory memory in human listeners is currently available, our simulations were carried out with local and global decay parameters varying systematically in steps of 0.05 s, from 0.1 to 0.5 s for the local decay and from 0.5 to 2.5 s for the global decay, in order to explore a large parameter space of the model.
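The logic of comparing a local and a global memory image can be illustrated with a deliberately simplified sketch. It is not the IPEM Toolbox implementation: the auditory frontend and the autocorrelation pitch module are skipped, the periodicity frames are made up, and the leaky integrator with an exponential time constant is an assumption chosen for illustration. All names are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def leaky_integrate(frames, decay_s, frame_dt_s):
    """Smear periodicity frames over time with an exponential memory decay."""
    alpha = math.exp(-frame_dt_s / decay_s)  # closer to 1 means longer memory
    state = [0.0] * len(frames[0])
    images = []
    for frame in frames:
        state = [alpha * s + (1.0 - alpha) * x for s, x in zip(state, frame)]
        images.append(state)
    return images

def mean_image(images):
    """Average a list of images bin by bin."""
    return [sum(column) / len(images) for column in zip(*images)]

def tonal_contextuality(frames, target_start, local_decay_s, global_decay_s, frame_dt_s):
    """Correlate the local and global images averaged over the target frames."""
    local = leaky_integrate(frames, local_decay_s, frame_dt_s)
    global_ = leaky_integrate(frames, global_decay_s, frame_dt_s)
    return pearson(mean_image(local[target_start:]), mean_image(global_[target_start:]))

# Made-up periodicity frames with four bins, one frame every 0.1 s
context = [[1.0, 0.5, 0.0, 0.0]] * 12
fitting_target = [[0.9, 0.6, 0.1, 0.0]] * 4
misfitting_target = [[0.0, 0.1, 1.0, 0.5]] * 4

tci_fit = tonal_contextuality(context + fitting_target, 12, 0.1, 1.5, 0.1)
tci_misfit = tonal_contextuality(context + misfitting_target, 12, 0.1, 1.5, 0.1)
```

In this toy case, the target that repeats the periodicities of the context obtains the higher index, which mirrors the reasoning behind the sensory (repetition) priming prediction.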

For the present analyses, the audio files of the 48 chord sequences were given as input to the model. TCI was calculated for each sequence and transformed into z-values using Fisher’s transformation (Fisher, 1921). The differences between the TCIs of the two target types across the decay-parameter space are reported in Figure 2B for each target syllable. In this figure, positive Δz(TCI) values indicate that the related condition yields stronger contextuality than the less-related condition, and negative values reflect the opposite. Here all Δz(TCI) values were negative, indicating stronger TCI for subdominant targets than for tonic targets, thus predicting facilitated processing for the less-related targets. In Figure 2B, it can be seen that significantly negative values are found for short global decay values, but no significant positive values were observed. To further assess the role of relatedness on TCI, we analyzed the TCI data with a linear mixed model with relatedness and syllable as fixed factors and tonality, local decay, and global decay as random intercepts (p-values were obtained with Satterthwaite’s method). The model yielded a significant effect of relatedness [F(1,17605) = 578.6, p < 0.0001], confirming that the less-related condition produced higher TCIs than the related one. The effect of syllable was also significant [F(1,17605) = 137.4, p < 0.0001] and so was the interaction [F(1,17605) = 5.78, p = 0.016]: the TCI was higher for /du/ than for /di/, but that difference was smaller in the less-related condition.
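For reference, the quantity plotted in Figure 2B can be written out explicitly. The notation below is introduced here for illustration and assumes that Δz(TCI) is the related value minus the less-related value, in line with the sign convention stated above; \(\tau_{\text{local}}\) and \(\tau_{\text{global}}\) denote the two decay parameters.

```latex
% Fisher transformation of a correlation-based TCI value r
z(r) = \operatorname{artanh}(r) = \tfrac{1}{2}\,\ln\frac{1+r}{1-r}

% Difference between conditions for one pair of decay parameters
\Delta z(\mathrm{TCI})(\tau_{\text{local}}, \tau_{\text{global}})
  = z\bigl(\mathrm{TCI}_{\text{related}}\bigr)
  - z\bigl(\mathrm{TCI}_{\text{less-related}}\bigr)
```

Under this convention, a negative difference means that the less-related (subdominant) target fits the acoustic context better than the related (tonic) target.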

These results thus confirm that the facilitated processing for tonic targets reported for NH participants by Bigand et al. (2001) and others (Schellenberg et al., 2005; Tillmann et al., 2007, 2008) reflects the influence of listeners’ knowledge about musical structures on target chord processing (see also Bigand et al., 2003).

In sum, the results of these three analyses confirmed that sensory and cognitive hypotheses make contrasting predictions for our experimental material: The sensory hypothesis predicts facilitated processing for the less-related targets, while the cognitive hypothesis predicts facilitated processing for the related targets. These predictions are in agreement with the alternative hypotheses formulated above for the CI users: If the CI users show faster response times for the less-related subdominant chord, this finding would suggest the influence of the contextual auditory information (stored in a memory buffer) on target chord processing. If, however, CI users show faster response times for the related tonic chord, this finding would suggest the influence of CI users’ musical knowledge (acquired prior to deafness).

Analyses of the Electrical Similarity Between the Prime Context and the Target in Related and Less-Related Conditions

In the previous sections, we examined the potential influence of sensory, rather than cognitive, factors on the priming effect induced by the material. Both the spectral contrast model (see section “Spectral Contrast”) and the tonal contextuality model (see section “Pitch Periodicity in a Model of Auditory Short-Term Memory: Tonal Contextuality Index”) assume an NH frontend. In the case of implant stimulation, the simultaneously presented tones that produce a chord interact and can generate other patterns. In other words, situations that would not induce sensory priming in NH listeners may very well do so in CI listeners.

To evaluate this possibility, we implemented a frontend mimicking a cochlear implant and the pattern of neural activation generated by electrical stimulation (Gaudrain et al., 2014). The model is based on the Nucleus Matlab Toolbox (Cochlear Ltd.), which generates patterns of electrical stimulation along the electrode array of the implant. The generated electrical field is then propagated in the cochlea to mimic current spread (Bingabr et al., 2008). Neural activation probability resulting from this electrical field is then calculated using approaches adapted from Rattay et al. (2001). This time-place image is used first to examine the spectral contrast between prime and target, and then to evaluate the tonal contextuality.

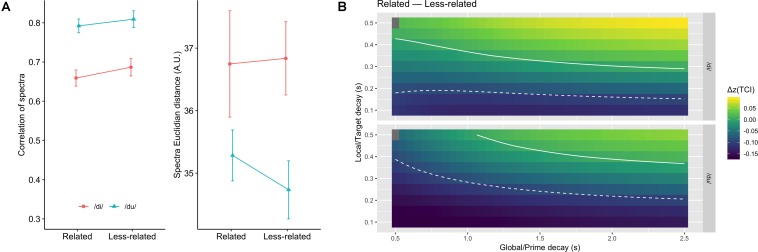

Spectral Contrast

As shown in Figure 3A, when the same metrics as in the section “Spectral Contrast” were calculated (i.e., correlation of detrended spectra and Euclidian distance), there was again no significant difference between the related and less-related conditions [for the correlation F(1,11) = 1.13, p = 0.31, effect size = 0.03; for the Euclidian distance F(1,11) = 0.18, p = 0.68, effect size = 0.003]. The nature of the syllable had a significant effect on both measures [for the correlation F(1,11) = 64.2, p < 0.001, effect size = 0.40; for the Euclidian distance F(1,11) = 19.0, p < 0.01, effect size = 0.17]. No interaction was significant.
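The two spectral contrast metrics can be illustrated with a short Python sketch. It assumes that prime and target are each summarized by a one-dimensional spectrum (or place profile) of equal length, and that detrending means removing a fitted straight line; both points, as well as the function names, are assumptions made for this example rather than details taken from the analysis code.

```python
import numpy as np

def detrend_linear(spectrum):
    """Remove the best-fitting straight line from a 1-D spectrum."""
    x = np.arange(spectrum.size)
    slope, intercept = np.polyfit(x, spectrum, 1)
    return spectrum - (slope * x + intercept)

def spectral_contrast(prime_spectrum, target_spectrum):
    """Return (correlation of detrended spectra, Euclidean distance)."""
    prime = np.asarray(prime_spectrum, dtype=float)
    target = np.asarray(target_spectrum, dtype=float)
    r = np.corrcoef(detrend_linear(prime), detrend_linear(target))[0, 1]
    distance = np.linalg.norm(prime - target)
    return float(r), float(distance)
```

A higher correlation and a smaller distance both indicate that the target resembles the prime more closely, which is the direction compatible with more sensory priming.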

FIGURE 3.

Same as Figure 2 for the CI model. (A) Average spectral correlation (left) and Euclidian distance (right) for the related and less-related conditions, and for the two target syllables. Higher correlations, and smaller Euclidian distances, are compatible with more sensory priming. (B) Difference in Fisher transformed tonal contextuality index between related and less-related conditions, as a function of syllable, and global and local decays. The solid line represents the value 0.0 where the two types of context have the same TCI. The dashed lines represent the critical limit beyond which differences can be considered significant.

Tonal Contextuality Index

The electrically induced neural activation image was used as auditory image to feed into the pitch module of the IPEMtoolbox in order to extract periodicity structures. The rest of the model (memory decay and TCI computation) was identical to the one used for the acoustic/NH model.

The results, shown in Figure 3B, indicate that no combination of local and global memory decay yields positive Δz(TCI) beyond the critical value. When applying the same linear mixed model as in the section “Pitch Periodicity in a Model of Auditory Short-Term Memory: Tonal Contextuality Index,” the effect of relatedness was found to be significant [F(1,35318) = 165.2, p < 0.0001], with the less-related condition having a globally higher TCI. The effect of syllable was also significant [F(1,35318) = 20.4, p < 0.0001], as was the interaction [F(1,35318) = 48.6, p < 0.0001]: the TCI was higher for /du/ than for /di/, but that difference was smaller in the less-related condition.

From this analysis, it appears that, as for the NH model, the CI model predicts faster response times for the less-related condition than for the related condition.

Results

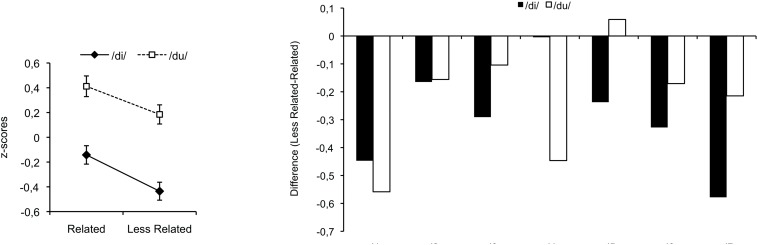

Percentages of correct responses were high overall, with an average accuracy of 95% (ranging from 92 to 100%). Because of differences in average response latency between participants (ranging from 561 ms to 1567 ms) and with the goal of focusing on differences between related and less-related targets, correct response times were individually normalized to z-scores with a mean of 0 and a standard deviation of 1 (Figure 4, left). z-Scores were analyzed with a 2 × 2 ANOVA with Musical Relatedness (related/less related) and Target Syllable (di/du) as within-participant factors. The main effect of Musical Relatedness was significant, F(1,6) = 23.32, p = 0.003, MSE = 0.02, indicating faster processing for less-related targets than for related targets. Overall, responses were faster for the syllable /di/ than for /du/, F(1,6) = 19.26, p = 0.005, MSE = 0.13, as previously observed for NH listeners (Bigand et al., 2001). The interaction between Musical Relatedness and Target Syllable was not significant (p = 0.55).
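The within-participant normalization can be sketched in Python as follows. The use of the sample standard deviation (`ddof=1`), the function names, and the sign convention of the effect (less-related minus related, so that negative values mean faster processing of less-related targets, as in Figure 4) are assumptions made for this illustration.

```python
import numpy as np

def zscore_rts(rts_ms):
    """Normalize one participant's correct response times to mean 0, SD 1."""
    rts = np.asarray(rts_ms, dtype=float)
    return (rts - rts.mean()) / rts.std(ddof=1)  # ddof=1 is an assumption

def relatedness_effect(z, is_related):
    """Mean z for less-related targets minus mean z for related targets."""
    z = np.asarray(z, dtype=float)
    related = np.asarray(is_related, dtype=bool)
    return float(z[~related].mean() - z[related].mean())
```

Because z-scores are unchanged by shifting or rescaling a participant's latencies, a fast and a slow responder with the same relative pattern contribute the same normalized effect, which is the motivation given in the text for this step.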

FIGURE 4.

Average data of the CI participants on the left and their individual data on the right. Positive and negative values indicate facilitated processing for related and less-related targets, respectively.

Figure 4 (right) displays differences between less-related and related targets for the two target syllables for each participant. Positive values indicate faster processing for related targets, and negative values indicate faster processing for less-related targets. Faster processing for less-related targets was observed for all participants (except /du/ for ci5).

Discussion

Our study investigated the perception of musical structures by postlingually deaf adult CI users. These listeners were mostly non-musicians without formal musical training. As shown in numerous studies in the music cognition domain (e.g., Bigand and Poulin-Charronnat, 2006), NH non-musician listeners acquire implicit knowledge about the Western tonal musical system by mere exposure to music in everyday life and thanks to the cognitive ability of implicit learning (see also Tillmann et al., 2000). The postlingually deaf adult CI users participating in our present study had thus acquired this kind of implicit musical knowledge prior to deafness. We investigated whether the signal quality of the CI, despite its poor spectral cues, is sufficient to activate this previously acquired implicit musical knowledge and to influence chord processing. We used a behavioral approach based on an indirect investigation method. The priming paradigm avoids asking for direct explicit judgments about the musical material, and takes advantage of implicit investigation. For music perception, the power of this implicit method has previously been shown by studies reporting musical structure knowledge in an amusic patient despite explicit music processing deficits (Tillmann et al., 2007) and in children as young as 6 years of age (Schellenberg et al., 2005), while explicit investigation methods had estimated the required age at 10 years (Imberty, 1981).

Based on our previous research, we used sung-chord sequences. The required priming task was a syllable-identification task (with syllables differing by one phoneme). The high accuracy we observed here showed that the CI users could perform this task without difficulty. The experimental material was constructed in such a way that three different data patterns could be expected, each indicating different underlying processes: (1) Faster processing of the related tonic chord would indicate that the transmitted signal of the CI is sufficient to activate listeners’ musical knowledge acquired prior to deafness; (2) faster processing of the less-related subdominant chord would indicate that the transmitted signal allows accumulation of sensory information in a short-term memory buffer, which then influences processing times (based on repetition priming); and (3) equal processing times for both chords would rather suggest that the limited spectral resolution available through implants is not sufficient to provide relevant information to the CI user’s brain.

The observed data pattern (see Figure 4) clearly supports the second hypothesis: Processing was facilitated for the less-related target, which is the target that benefited from sensory priming. This finding suggests that the signal transmitted via electric hearing was too different from the signal based on acoustic hearing, so that it did not automatically activate listeners’ previously acquired musical structure knowledge. However, the signal seems sufficiently informative to lead to sensory priming.

This conclusion seems to contradict the conclusion of Koelsch et al. (2004) based on ERPs in CI users, suggesting that tonal knowledge can be reached via electric hearing. We thus performed the three acoustic analyses (pitch class, spectrum, pitch periodicity) also for the experimental material of Koelsch et al. (2004; see Supplemental Digital Content), notably to investigate acoustic similarities of the target with its preceding context. These analyses allowed us to resolve this contradiction. They showed that the material used by Koelsch et al. (2004) does not allow disentangling sensory and cognitive contributions in chord processing and the position effect. A more parsimonious explanation would thus be based on acoustic influences only. A similar argument was later made by Koelsch et al. (2007), leading the authors to use new musical material with more subtle musical structure manipulations. However, this new material has not yet been used in an EEG study investigating CI users.

Our present findings support the hypothesis that despite the poor coding of spectral information, the CI transmits some pitch-related information of the musical material. Interestingly, based on our analyses, the observed sensory priming does not seem to be based on the individual pitches used, that is, the spectral content per se (see section “Spectral Contrast”). Instead, the sensory priming may be based on periodicity pattern similarity, as evidenced by the tonal contextuality index analysis (see section “Tonal Contextuality Index”). This is rather encouraging for implant users because, while their perception of pitch in natural sounds is very limited (e.g., in syllables, Gaudrain and Başkent, 2018 found discrimination thresholds for F0 between 4 and 20 semitones), it seems that combining multiple tones into chords gives rise to periodicity patterns to a degree sufficient to induce priming. This may suggest that, perhaps counter-intuitively, perception of chord sequences in CIs may be a more manageable goal than melody recognition. This finding is thus in line with other studies suggesting that listeners can benefit from information provided by electric hearing even for music perception. Notably, studies have shown benefits of increased, self-imposed music exposure and of training programs to exploit the transmitted signal in music and pitch perception (e.g., Galvin et al., 2007). Converging evidence comes from the music appraisal of prelingually deaf CI listeners who are missing the comparison with music perception based on acoustic hearing (e.g., Trehub et al., 2009). Prelingually deaf children seem to find music interesting and enjoyable (Trehub et al., 2009; Looi and She, 2010) and children’s engagement with music may also enhance their progress in other auditory domains (Mitani et al., 2007; Rochette and Bigand, 2009). Galvin et al. (2009) have shown a high variability in melodic contour identification across CI users, with some musically experienced CI users performing as well as NH listeners. Most importantly, they showed that training on melodic contour (using visual support) improves performance in melodic contour identification in CI users, even though the transmitted signal was not changed. It is worth further clarifying that by “pitch” we are here referring to the acoustic information that is related to the pitch percept in NH listeners. Indeed, we need to acknowledge that we do not know whether this is a subjective pitch percept for the CI listeners, as it is for NH listeners, but we can ascertain that it is related to that acoustic information.

Our findings, together with other data sets on the beneficial effects of musical training and musical exposure, are thus encouraging for the development of pitch-related training programs for CI patients. Indeed, in parallel to the technological efforts aiming to improve the coding strategies implemented in the CI device, efforts need to be made to develop training programs that improve how the brains of CI users exploit the transmitted signal.

Training programs might need to work differently for postlingually deaf adult CI users and prelingually deaf child CI users. Because of the differences between acoustic and electric hearing, adults often find music disappointing or unacceptable, leading to changes in listening habits and decreased subjective enjoyment in comparison to before deafness (McDermott, 2004; Lassaletta et al., 2008; Looi and She, 2010). In contrast, child implant users find music interesting and enjoyable (Trehub et al., 2009; Looi and She, 2010). The postlingually deaf adult CI users have acquired cognitive patterns and schemata for speech and music based on their previously normal (or impaired, but aided) hearing. However, the information provided by the CI is different, in particular for the coding of the spectro-temporal fine structure. The prior knowledge, which was developed based on the information available in acoustic hearing, might thus create perceptual filters and cognitive schemata of “pitch” in music, which result in costs for picking out the relevant information from the transmitted signal. In contrast to the postlingually deaf adult CI users, early-implanted child CI users have developed their speech and music patterns based on the information in the electric hearing. As they are missing the comparison to the information provided by acoustic hearing, they appreciate and enjoy music, while for the postlingually implanted adults the CI version of music is only a poor representation of their memory. Training programs would thus need to increase exposure, leading to the construction of newly shaped perceptual filters and schemata. The findings of our present study provide some further grounds for this training by showing that some pitch information is implicitly processed by the adult CI users. They thus have implications for rehabilitation programs, notably by encouraging training strategies that rely on spared implicit processing resources.

Data Availability

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

All participants provided written informed consent to this study, which was conducted in accordance with the guidelines of the Declaration of Helsinki, and approved by the local Ethics Committee (CPP Sud-Est II).

Author Contributions

BT, BP-C, IA, and LC contributed to the conception and design of the study. BT, BP-C, and IA acquired the data. BT, BP-C, EG, and CD performed the statistical analysis and modeling. ET and LC provided access to the patients and related information. BT wrote the first draft of the manuscript. EG and CD wrote the simulation sections of the manuscript. All authors contributed to the manuscript revision, and read and approved the submitted version.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Fabien Seldran for the help with the experimental setup.

Appendix

Analyses of the Acoustic Similarity Between the Harmonic Context and the Target in Regular and Irregular Conditions of Koelsch et al. (2004)

We also ran the three analyses investigating the acoustic similarity between contexts and targets with the material of Koelsch et al. (2004), which is currently the only paper that tested musical structure processing in CI patients with an indirect investigation method. A total of 260 tonal harmonic sequences made up of five chords were generated as a MIDI file from the authors’ description (see the footnote on the construction of the harmonic sequences). An audio file was then synthesized using a piano timbre. The duration of the first four chords was 600 ms, while the fifth chord sounded for 1200 ms. Sequences were presented to the model in six blocks. Each block was in a different tonal key (C, D, E, F#, Ab, and Eb keys). To conform to the experimental procedure reported by the authors, the echoic memory state was reset between blocks, but not between sequences within a block.

Overlap in Pitch Class Between Targets and Contexts

We analyzed the number of pitch classes shared between a target chord and its preceding chords, as a function of target type and target position within the sequence. We used the MIDI file generated as described above. The mean number of pitch classes shared between target and preceding context was higher for the tonic regular condition (5.4 ± 3.29) than for the irregular condition (1.35 ± 1.34). More interestingly, the difference between regular and irregular targets was stronger for the fifth position (6.41) than for the third position (1.68), revealing a sensory advantage for the regular targets at the fifth position. Consequently, the cognitive (tonal) advantage for regular targets over irregular targets was confounded with acoustic similarity.
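A pitch-class overlap count of this kind can be sketched in Python from MIDI note numbers, where the pitch class is the note number modulo 12. The counting scheme below (summing, over the preceding chords, the number of pitch classes each shares with the target) is one plausible reading and is an assumption of this example, as are the function names and the short example progression, which is not one of the 260 sequences.

```python
def pitch_classes(midi_notes):
    """Map MIDI note numbers to their set of pitch classes (0 = C, ..., 11 = B)."""
    return {note % 12 for note in midi_notes}

def shared_pitch_classes(context_chords, target_chord):
    """Sum over context chords of the pitch classes each shares with the target."""
    target_pcs = pitch_classes(target_chord)
    return sum(len(pitch_classes(chord) & target_pcs) for chord in context_chords)

# Hypothetical context in C major: tonic, subdominant, dominant seventh.
context = [(60, 64, 67), (65, 69, 72), (67, 71, 74, 77)]
tonic_target = (48, 64, 67, 72)    # C major chord
neapolitan_target = (65, 68, 73)   # f, a-flat, d-flat

overlap_tonic = shared_pitch_classes(context, tonic_target)
overlap_neapolitan = shared_pitch_classes(context, neapolitan_target)
```

In this toy progression the tonic target shares more pitch classes with its context than the Neapolitan target does, which is the kind of sensory advantage for regular targets that the analysis quantifies.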

Spectrum Analyses

We estimated the spectral overlap between the target chords and their respective harmonic contexts. For each sequence of the material of Koelsch et al. (2004), the spectrum obtained from the target chord was correlated with the spectrum obtained from the preceding chords. The spectra were obtained by averaging the spectrogram computed over the contexts and targets with FFT time windows of 186 ms and 50% window overlap (i.e., 93 ms). A Position (3 vs. 5) × Chord Type (regular vs. irregular) ANOVA on average correlation values showed not only main effects of both Position, F(1,256) = 308.51, MSE = 0.175, p < 0.0001, and Chord Type, F(1,256) = 359.82, MSE = 0.204, p < 0.0001, but, most importantly, a significant interaction, F(1,256) = 24.65, MSE = 0.014, p < 0.0001: The difference between regular and irregular chords was stronger for position 5 than for position 3, t(64) = 5.55, p < 0.0001 (see Appendix Figure 1A), thus confirming a sensory advantage at position 5.
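The spectral overlap measure can be illustrated with a NumPy-only Python sketch. The sampling rate is not stated in the text; the example assumes 44.1 kHz, at which an 8192-sample window lasts about 186 ms and a 4096-sample hop gives 50% overlap. The Hann window and the function names are likewise assumptions made for this illustration.

```python
import numpy as np

def average_spectrum(signal, n_fft=8192):
    """Average magnitude spectrum over half-overlapping Hann-windowed frames.

    The signal must contain at least n_fft samples.
    """
    hop = n_fft // 2  # 50% window overlap
    window = np.hanning(n_fft)
    starts = range(0, len(signal) - n_fft + 1, hop)
    frames = np.array([signal[s:s + n_fft] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1)).mean(axis=0)

def spectral_overlap(context, target, n_fft=8192):
    """Correlation between the average spectra of context and target."""
    context_spec = average_spectrum(context, n_fft)
    target_spec = average_spectrum(target, n_fft)
    return float(np.corrcoef(context_spec, target_spec)[0, 1])
```

A target whose partials coincide with those already present in the context yields a correlation close to 1, whereas a target with partials at other frequencies yields a much lower value.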

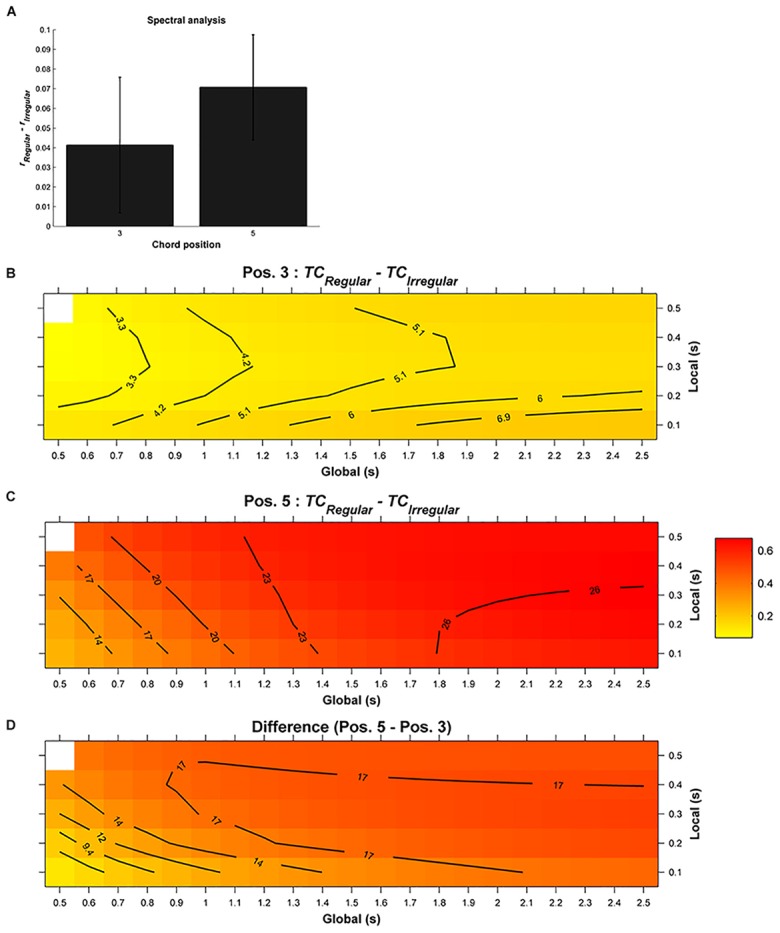

Pitch Periodicity in Auditory Short-Term Memory

Here, we ran the implementation of the model of Leman (2000) in the IPEM toolbox, as used in Bigand et al. (2014), together with its graphical presentation of the local and global parameters and the Tonal Contextuality index (TC). For the material of Koelsch et al. (2004), the differences between regular chords (i.e., in-key) and irregular chords (i.e., Neapolitan sixth chord) are reported in Appendix Figures 1B,C for positions 3 and 5, respectively. Irrespective of position within the sequence, irregular chords elicited a stronger dissimilarity in short-term pitch memory than did regular, in-key target chords (as illustrated by the hot colors, the TC of the in-key chords was higher than that of the irregular chords, i.e., TC_in-key − TC_irregular > 0). In addition, the size of the dissimilarity mirrored the size of the ERAN, thus accounting for the effect of position: Simulations predicted stronger dissimilarity for the irregular chords occurring at the syntactically incorrect position (i.e., at position 5; Appendix Figure 1C), compared to the syntactically correct position (i.e., at position 3; Appendix Figure 1B). The stronger dissimilarity of the target at position 5 in terms of TC is illustrated in Appendix Figure 1D, which reports a (positive) difference for position 5 minus position 3. In sum, the sensory model accounts for the data pattern observed in Koelsch et al. (2004), suggesting that in this study the effect of position in participants’ data might have a sensory rather than a cognitive origin.

To summarize these analyses, for the experimental material used in Koelsch et al. (2004), the cognitive and sensory hypotheses make the same predictions, that is, a processing cost is expected for the musically irregular chord, in particular in the fifth position. The three types of analyses thus reveal that we cannot conclude whether CI participants’ ERPs reflected the violation of information accumulated in a sensory memory buffer or the activation of musical knowledge (see Koelsch et al., 2007, for a similar argument for this experimental material in NH listeners).

APPENDIX FIGURE 1.

Results of the acoustic analyses for the material of Koelsch et al. (2004), presenting differences between the regular and irregular chords for positions 3 and 5, for the harmonic spectrum (A) and the model of Leman (B–D). For panels B and C, the mean differences between the tonal contextuality of the target chords (TC_regular − TC_irregular) are presented as a function of the local and global context integration windows. Positive, negative, and non-significant differences are represented by hot (i.e., red), cold (i.e., blue), and white colors, respectively (two-tailed paired t-test, p < 0.05; t-values are reported as contours). A positive difference indicates that the pitch similarity in the sensory memory induced by the related target is stronger than that of the less-related target. Panel D completes this presentation with the difference between positions 5 and 3.

Funding. This work was partly supported by the grant ANR-09-BLAN-0310. This work was also supported by LabEx CeLyA (“Centre Lyonnais d’Acoustique,” ANR-10-LABX-0060) of Université de Lyon, within the program “Investissements d’avenir” (ANR-11-IDEX-0007) operated by the French National Research Agency (ANR).

The sequences also contained timbral deviants and participants were required to detect these deviants (65% of correct responses for the CI users vs. 79% for the control group). CI listeners’ event-related brain potentials also reflected the detection of the timbre change, even though with smaller amplitude than NH control participants. As the timbres of the used instruments (piano, organ) also differed by their temporal attack, behavioral and neural responses to the timbre change (indicating acoustic irregularity detection) might be based on these temporal envelope changes, in addition to the spectral changes.

The “Neapolitan sixth chord” is a variation of the subdominant chord (an in-key chord), but contains two out-of-key notes (in the tonality of C major, these are the notes a♭ and d♭ in the chord: a♭ – f – d♭). When this chord is used as a substitution of a subdominant chord, it sounds unusual, but not wrong (as in the third position of the sequences by Koelsch et al., 2004). However, when used as a substitution of a tonic chord, it sounds wrong (as in the fifth position of the sequences by Koelsch et al., 2004).

A pitch class is defined as a set of pitches at different pitch heights that are separated by octaves (i.e., intervals corresponding to a doubling of the fundamental frequency of a tone); for example, the tones at 220, 440, and 880 Hz all belong to the pitch class of A.

For the experimental material of Koelsch et al. (2004), these three analyses are presented as Supplemental Digital Content.

In each harmonic sequence, the first chord was always the tonic. Chords at the second position were tonic, mediant, submediant, subdominant, dominant to the dominant, secondary dominant to mediant, secondary dominant to submediant, or secondary dominant to supertonic. Chords at the third position were subdominant, dominant, dominant 6–4 chord or Neapolitan sixth chord. Chords at the fourth position were always the dominant seventh chord. Finally, chords at the fifth position were either a tonic or a Neapolitan sixth chord. Neapolitan chords at the third position never followed a secondary dominant. Neapolitan chords at the fifth position never followed a Neapolitan chord at the third position. Both Neapolitan chords occurred with a probability of 25% (resulting in the presentation of 65 Neapolitans at the third, and 65 at the fifth position).

References

- Bigand E., Delbé C., Poulin-Charronnat B., Leman M., Tillmann B. (2014). Empirical evidence for musical syntax processing? Computer simulations reveal the contribution of auditory short-term memory. Front. Syst. Neurosci. 8:94. 10.3389/fnsys.2014.00094 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bigand E., Poulin-Charronnat B. (2006). Are we ”experienced listeners”? A review of the musical capacities that do not depend on formal musical training. Cognition 100 100–130. 10.1016/j.cognition.2005.11.007 [DOI] [PubMed] [Google Scholar]

- Bigand E., Poulin B., Tillmann B., D’Adamo D. (2003). Cognitive versus sensory components in harmonic priming effects. J. Exp. Psychol. Hum. Percept. Perform. 29 159–171. [DOI] [PubMed] [Google Scholar]

- Bigand E., Tillmann B., Poulin B., D’Adamo D. A., Madurell F. (2001). The effect of harmonic context on phoneme monitoring in vocal music. Cognition 81 11–20. [DOI] [PubMed] [Google Scholar]

- Bigand E., Tillmann B., Poulin-Charronnat B. (2006). A module for syntactic processing in music? Trends Cogn. Sci. 10 195–196. 10.1016/j.tics.2006.03.008 [DOI] [PubMed] [Google Scholar]

- Bingabr M., Espinoza-Varas B., Loizou P. C. (2008). Simulating the effect of spread of excitation in cochlear implants. Hear. Res. 241 73–79. 10.1016/j.heares.2008.04.012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brockmeier S. J., Fitzgerald D., Searle O., Fitzgerald H., Grasmeder M., Hilbig S., et al. (2011). The MuSIC perception test: a novel battery for testing music perception of cochlear implant users. Cochlear Implants Int. 12 10–20. 10.1179/146701010X12677899497236 [DOI] [PubMed] [Google Scholar]

- Brockmeier S. J., Peterreins M., Lorens A., Vermeire K., Helbig S., Anderson I., et al. (2010). Music perception in electric acoustic stimulation users as assessed by the Mu.S.I.C. Test. Adv. Otorhinolaryngol. 67 70–80. 10.1159/000262598 [DOI] [PubMed] [Google Scholar]

- Chen J. K. C., Chuang A. Y. C., McMahon C., Hsieh J. C., Tung T. H., Li L. P., et al. (2010). Music training improves pitch perception in prelingually deafened children with cochlear implants. Pediatrics 125 E793–E800. 10.1542/peds.2008-3620 [DOI] [PubMed] [Google Scholar]

- Cohen J., MacWhinney B., Flatt M., Provost J. (1993). PsyScope: an interactive graphic system for designing and controlling experiments in the psychology laboratory using Macintosh computers. Behav. Res. Methods Instrum. Comput. 25 257–271. 10.3758/bf03204507 [DOI] [Google Scholar]

- Cooper W. B., Tobey E., Loizou P. C. (2008). Music perception by cochlear implant and normal hearing listeners as measured by the montreal battery for evaluation of Amusia. Ear Hear. 29 618–626. 10.1097/AUD.0b013e318174e787 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Drennan W. R., Rubinstein J. T. (2008). Music perception in cochlear implant users and its relationship with psychophysical capabilities. J. Rehabil. Res. Dev. 45 779–790. 10.1682/jrrd.2007.08.0118 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Driscoll V. D., Oleson J., Jiang D., Gfeller K. (2009). Effects of training on recognition of musical instruments presented through cochlear implant simulations. J. Am. Acad. Audiol. 20 71–82. 10.3766/jaaa.20.1.7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fisher R. A. (1921). On the ‘probable error’ of a coefficient of correlation deduced from a small sample. Metron 1 3–32. [Google Scholar]

- Fu Q. J., Galvin J. J. (2007). Perceptual learning and auditory training in cochlear implant recipients. Trends Amplif. 11 193–205. 10.1177/1084713807301379 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fu Q. J., Galvin J. J. (2008). Maximizing cochlear implant patients’ performance with advanced speech training procedures. Hear Res. 242 198–208. 10.1016/j.heares.2007.11.010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fuller C. D., Galvin J. J., Maat B., Başkent D., Free R. H. (2018). Comparison of two music training approaches on music and speech perception in cochlear implant users. Trends Hear. 22:2331216518765379. 10.1177/2331216518765379 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvin J. J., Fu Q.-J., Nogaki G. (2007). Melodic contour identification by cochlear implant listeners. Ear Hear. 28 302–319. 10.1097/01.aud.0000261689.35445.20 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvin J. J., Fu Q.-J., Oba S. (2008). Effect of instrument timbre on melodic contour identification by cochlear implant users. J. Acoust. Soc. Am. 124 EL189–EL195. 10.1121/1.2961171 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galvin J. J., Fu Q.-J., Shannon R. V. (2009). Melodic contour identification and music perception by cochlear implant users. Ann. N. Y. Acad. Sci. 1169 518–533. 10.1111/j.1749-6632.2009.04551.x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gaudrain E., Başkent D. (2018). Discrimination of voice pitch and vocal-tract length in cochlear implant users. Ear Hear. 39 226–237. 10.1097/AUD.0000000000000480 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gaudrain E., Stam L., Baskent D. (2014). “Measure and model of vocal-tract length discrimination in cochlear implants,” in Proceedings of the 2014 International Conference on Audio, Language and Image Processing, Shanghai, 31–34. 10.1109/ICALIP.2014.7009751 [DOI] [Google Scholar]

- Gfeller K., Oleson J., Knutson J. F., Breheny P., Driscoll V., Olszewski C., et al. (2008). Multivariate predictors of music perception and appraisal by adult cochlear implant users. J. Am. Acad. Audiol. 19 120–134. 10.3766/jaaa.19.2.3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gfeller K., Turner C., Oleson J., Zhang X., Gantz B., Froman R., et al. (2007). Accuracy of cochlear implant recipients on pitch perception, melody recognition and speech reception in noise. Ear Hear. 28 412–423. 10.1097/aud.0b013e3180479318 [DOI] [PubMed] [Google Scholar]

- Imberty M. (1981). Acculturation tonale et structuration perceptive du temps musical chez l’enfant. Basic musical functions and musical ability. R. Swed. Acad. Music 32 81–130. [Google Scholar]

- Jung K. H., Cho Y. S., Cho J. K., Park G. Y., Kim E. Y., Hong S. H., et al. (2010). Clinical assessment of music perception in Korean cochlear implant listeners. Acta Otolaryncol. 130 716–723. 10.3109/00016480903380521 [DOI] [PubMed] [Google Scholar]

- Kang R., Nimmons G. L., Drennan W., Longnion J., Ruffin C., Nie K. (2009). Development and validation of the University of Washington clinical assessment of music perception test. Ear Hear. 30 411–418. 10.1097/AUD.0b013e3181a61bc0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koelsch S. (2009). Music-syntactic processing and auditory memory: similarities and differences between ERAN and MMN. Psychophysiology 46 179–190. 10.1111/j.1469-8986.2008.00752.x

- Koelsch S., Jentschke S., Sammler D., Mietchen D. (2007). Untangling syntactic and sensory processing: an ERP study of music perception. Psychophysiology 44 476–490. 10.1111/j.1469-8986.2007.00517.x

- Koelsch S., Wittfoth M., Wolf A., Müller J., Hahne A. (2004). Music perception in cochlear implant users: an event-related potential study. Clin. Neurophysiol. 115 966–972. 10.1016/j.clinph.2003.11.032

- Lassaletta L., Castro A., Bastarrica M., Pérez-Mora R., Herrán B., Sanz L., et al. (2008). Changes in listening habits and quality of musical sound after cochlear implantation. Otolaryngol. Head Neck Surg. 138 363–367. 10.1016/j.otohns.2007.11.028

- Leal M. C., Shin Y. J., Laborde M. L., Calmels M. N., Verges S., Lugardon S., et al. (2003). Music perception in adult cochlear implant recipients. Acta Otolaryngol. 123 826–835.

- Leman M. (2000). An auditory model of the role of short-term memory in probe-tone ratings. Music Percept. 17 435–463.

- Leman M., Lesaffre M., Tanghe K. (2005). IPEM Toolbox for Perception-Based Music Analysis Version 1.02. Available at: https://www.ugent.be/lw/kunstwetenschappen/en/research-groups/musicology/ipem/finishedprojects/ipem-toolbox.htm (accessed April 24, 2019).

- Looi V., McDermott H., McKay C., Hickson L. (2008). Music perception for cochlear implant users compared with that of hearing aid users. Ear Hear. 29 421–434. 10.1097/AUD.0b013e31816a0d0b

- Looi V., She J. (2010). Music perception of cochlear implant users: a questionnaire and its implications for a music training program. Int. J. Audiol. 49 116–128. 10.3109/14992020903405987

- Marmel F., Tillmann B., Delbé C. (2010). Priming in melody perception: tracking down the strength of cognitive expectations. J. Exp. Psychol. Hum. Percept. Perform. 36 1016–1028. 10.1037/a0018735

- McDermott H. J. (2004). Music perception with cochlear implants: a review. Trends Amplif. 8 49–82. 10.1177/108471380400800203

- McKay C. M. (2005). Spectral processing in cochlear implants. Aud. Spectr. Process. 70 473–509. 10.1016/s0074-7742(05)70014-3

- Migirov L., Kronenberg J., Henkin Y. (2009). Self-reported listening habits and enjoyment of music among cochlear implant recipients. Ann. Otol. Rhinol. Laryngol. 118 350–355. 10.1177/000348940911800506

- Mitani C., Nakata T., Trehub S. E. (2007). Music recognition, music listening, and word recognition by deaf children with cochlear implants. Ear Hear. 28 S38–S41.

- Moore B. C. J., Carlyon R. P. (2005). “Perception of pitch by people with cochlear hearing loss and by cochlear implant users,” in Pitch, Neural Coding and Perception, eds Plack C. J., Oxenham A. J., Fay R. R., Popper A. N. (New York, NY: Springer), 234–277. 10.1007/0-387-28958-5_7

- Nimmons G. L., Kang R. S., Drennan W. R., Longnion J., Ruffin C., Worman T., et al. (2008). Clinical assessment of music perception in cochlear implant listeners. Otol. Neurotol. 29 149–155. 10.1097/mao.0b013e31812f7244

- Rattay F., Lutter P., Felix H. (2001). A model of the electrically excited human cochlear neuron. I. Contribution of neural substructures to the generation and propagation of spikes. Hear. Res. 153 43–63. 10.1016/s0378-5955(00)00256-2

- Rochette F., Bigand E. (2009). Long-term effects of auditory training in severely or profoundly deaf children. Ann. N. Y. Acad. Sci. 1169 195–198. 10.1111/j.1749-6632.2009.04793.x

- Schellenberg E. G., Bigand E., Poulin-Charronnat B., Garnier C., Stevens C. (2005). Children’s implicit knowledge of harmony in Western music. Dev. Sci. 8 551–566. 10.1111/j.1467-7687.2005.00447.x

- Tillmann B., Bharucha J. J., Bigand E. (2000). Implicit learning of tonality: a self-organizing approach. Psychol. Rev. 107 885–913. 10.1037//0033-295x.107.4.885

- Tillmann B., Justus T., Bigand E. (2008). Cerebellar patients demonstrate preserved implicit knowledge of association strengths in musical sequences. Brain Cogn. 66 161–167. 10.1016/j.bandc.2007.07.005

- Tillmann B., Peretz I., Bigand E., Gosselin N. (2007). Harmonic priming in an amusic patient: the power of implicit tasks. Cogn. Neuropsychol. 24 603–622. 10.1080/02643290701609527

- Trehub S. E., Vongpaisal T., Nakata T. (2009). Music in the lives of deaf children with cochlear implants. Ann. N. Y. Acad. Sci. 1169 534–542. 10.1111/j.1749-6632.2009.04554.x

- Van Immerseel L. M., Martens J. (1992). Pitch and voiced/unvoiced determination with an auditory model. J. Acoust. Soc. Am. 91 3511–3526. 10.1121/1.402840

- Young A. W., Hellawell D., DeHaan E. H. F. (1988). Cross-domain semantic priming in normal subjects and a prosopagnosic patient. Q. J. Exp. Psychol. 40A 561–580. 10.1080/02724988843000087

- Yucel E., Sennaroglu G., Belgin E. (2009). The family-oriented musical training for children with cochlear implants: speech and musical perception results of two year follow-up. Int. J. Pediatr. Otorhinolaryngol. 73 1043–1052. 10.1016/j.ijporl.2009.04.009

- Zaltz Y., Goldsworthy R. L., Kishon-Rabin L., Eisenberg L. S. (2018). Voice discrimination by adults with cochlear implants: the benefits of early implantation for vocal-tract length perception. J. Assoc. Res. Otolaryngol. 19 193–209. 10.1007/s10162-017-0653-5

- Zeng F.-G. (2004). Trends in cochlear implants. Trends Amplif. 8 1–34. 10.1177/108471380400800102

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.