Supplemental Digital Content is available in the text.

Key Words: access management, evidence synthesis, qualitative evaluation, quality improvement, veterans

Background:

Access to health care is a critical concept in the design, delivery, and evaluation of high-quality care. Meaningful evaluation of access requires research evidence and the integration of the perspectives of patients, providers, and administrators.

Objective:

Because of high-profile access challenges, the Department of Veterans Affairs (VA) invested in research and implemented initiatives to address access management. We describe a 2-year evidence-based approach to improving access in primary care.

Methods:

The approach included an Evidence Synthesis Program (ESP) report, a 22-site in-person qualitative evaluation of VA initiatives, and in-person and online stakeholder panel meetings facilitated by the RAND Corporation. Subsequent work products were disseminated through a targeted strategy to increase impact on policy and practice.

Results:

The ESP report summarized existing research evidence on primary care access management, and an evaluation of ongoing initiatives provided organizational data and novel metrics. The stakeholder panel served as a source of insights and information, as well as a vector for knowledge dissemination. Work products included the ESP report, a RAND report, peer-reviewed manuscripts, presentations at key conferences, and training materials for VA Group Practice Managers. Resulting policy and practice implications are discussed.

Conclusions:

The commissioning of an evidence report was the beginning of a cascade of work including exploration of unanswered questions, novel research and measurement discoveries, and policy changes and innovation. These results demonstrate what can be achieved in a learning health care system that employs evidence and expertise to address complex issues such as access management.

In a learning health care system (LHS), science, informatics, incentives, and culture are aligned for continuous improvement and innovation, with best practices seamlessly embedded in the delivery process and new knowledge captured as an integral by-product of the delivery experience.1 The Veterans Health Administration in the Department of Veterans Affairs (VA) emulates these values with the goal of meeting the National Academy of Sciences (NAS) 6 aims for health care: safe, effective, patient-centered, efficient, equitable, and timely.2 A recently published review article used the LHS model to highlight the contributions of VA research in driving system-wide change and improving care and outcomes, and reflected on the ongoing challenges of moving evidence into practice.3

In adopting the LHS model, VA created the Evidence Synthesis Program (ESP) in 2007.4 This program makes high-quality evidence syntheses available to clinicians, managers, and policymakers as they work collaboratively to improve the care of veterans. These reports have resulted in improvements in care delivery and outcomes, brought evidence to guidelines and performance measurement, informed policy, and guided future research.5 The reports are used internally by VA and also result in peer-reviewed journal publications that inform care worldwide. Although VA's ESP focuses on veteran-related topics, it works collaboratively with other entities (eg, AHRQ Evidence-based Practice Centers, Cochrane Collaboration) to refine methodologies and optimize impact.

An important piece of historical context driving both this ESP report and the VA-sponsored NAS evidence review of access, Transforming Health Care Scheduling and Access: Getting to Now,6 was the wait time crisis at the Phoenix VA. Although an Office of Inspector General report found "no evidence that there was any intentional, coordinated scheme by management to create a secret wait list, delay patient appointments, or manipulate wait time metrics," it did identify poor management practices, misunderstanding of VA scheduling directives, and lack of effective training for schedulers and managers.7 For a LHS, responding to such a crisis with a shared vision across stakeholders, systems thinking, and careful research on the determinants of high and low performance helps the organization learn from the event.

In addition, LHSs have immense potential for generating data and testing initiatives within the organization. As one of the largest health care systems in the United States, VA is ideally suited to evaluate quality improvement (QI) initiatives and randomized program evaluations. VA can not only test approaches in one-time data snapshots but also evaluate generalizability by testing across sites and over time. Specifically, VA primary care occurs at a variety of sites, including hospital-based, community-based, and contract clinics. These clinics follow the "medical home" model, with a primary care provider (ie, physician, nurse practitioner, or physician assistant) on a team that includes a nurse, scheduling clerk, and medical assistant; telehealth modalities (eg, video, secure messaging) are increasingly integrated into care delivery. The site of care (eg, hospital or community-based clinic), organization of care (eg, medical home), and type of care (eg, face-to-face, telehealth) all affect access and the success of organization-wide initiatives. Although much is known about primary care access, organizational questions remain where the evidence base is not advanced enough to provide LHSs with guidance or recommendations.8,9

The objective of this paper is to describe an evidence-based approach that builds on LHS principles and capabilities to respond rapidly to high-priority organizational needs. In this case, the need was primary care access management, and we also describe the subsequent influence of this work on research, policy, and innovation. Using LHS principles, we examine the literature, evaluate VA's access measures, and use an expert panel to reach consensus on optimal access management strategies.

METHODS

Generating an ESP Report: Evidence to Support Improved Access Management

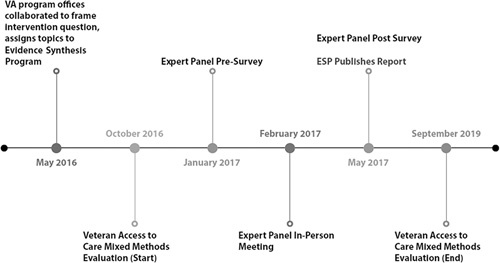

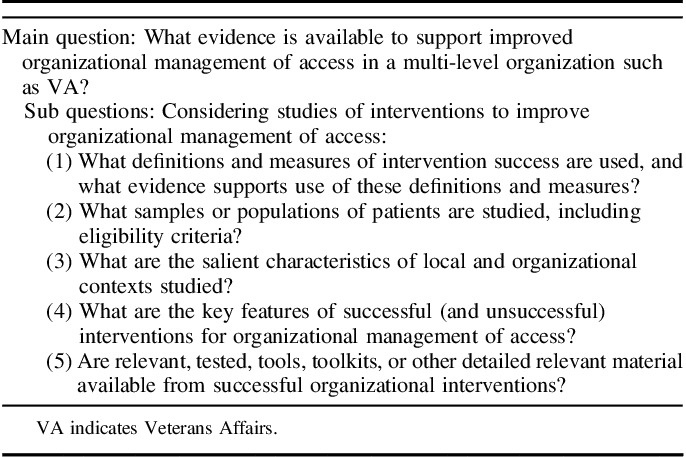

ESP reports are based on the concept of Responsive Innovation, a review process that takes a needed intervention as its starting point and proceeds to information on key features of interventions, context, and tools or toolkits.10 Because these reviews are best completed when built upon existing meta-analyses, a VA-sponsored evidence review of access by the NAS6 served as a starting point. The ESP topic was initiated by the Office of Veteran Access to Care and assigned to the Los Angeles ESP in May 2016 with a 7-month timeline (Fig. 1). For this report, an 11-member team consisting of ESP investigators (5), technical advisory panel members (5), and VA program office staff (1), with administrative, research, and clinical expertise, collaborated to establish and frame the question: What evidence is available to support improved organizational management of access in a multilevel organization such as VA? Additional subquestions are outlined in Table 1. ESP systematic review processes were followed to identify articles published from 2005 through 2016 using PubMed and CINAHL, or earlier articles identified from reference lists. Studies were included if they involved primary care patients, evaluated access management interventions, and reported an access outcome. The final report was published in May 2017.11

FIGURE 1.

Timeline of learning health care system activities. ESP indicates Evidence Synthesis Program; VA, Veterans Affairs.

TABLE 1.

Evidence Synthesis Report Questions

Veteran Access to Care Evaluation: Elucidating Drivers of Objective Access Metrics and Subjective Perceptions of Care

The second evidence-generating component was the Veteran Access to Care evaluation, a 3-year initiative to understand how best to measure and improve access as reflected by objective metrics and veterans' subjective perceptions of access. This multisite, interdisciplinary evaluation team used a mixed methods approach to develop and evaluate potential access metrics, assess trends over time, and identify facilitators of and barriers to access improvement. The qualitative evaluation focused on in-person site visits by 2- to 4-person teams across 22 VA facilities. Each interview team included at least one trained lead qualitative interviewer, with additional members representing a range of research and clinical expertise. Semistructured interviews were conducted with key informants at each site, and focus groups with additional stakeholders captured a greater number and diversity of opinions and perceptions. Facility tours allowed for direct observation and unscheduled interaction with clinical staff.

The 22 clinical sites were selected by a separate quantitative team using VA administrative data to identify low-performing and high-performing facilities using existing access metrics incorporating both objective measures (eg, wait times, telehealth visits) and patient perceptions of access; geographic diversity (eg, regional representation and urban and rural sites) was also considered. This work was funded by the VA Office of Rural Health in concert with the Office of Veterans Access to Care.
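The site-selection step above can be illustrated with a short sketch. The text does not report how the objective and patient-perception metrics were combined, so the approach below is an assumption: each metric is converted to a z-score, oriented so that higher is better, averaged into a hypothetical composite, and the top and bottom k sites are taken as high and low performers. The metric names and site data are invented for illustration, and the geographic balancing described above is not modeled.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize a list of numbers; returns all zeros if there is no spread."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sd for v in values]


def composite_scores(sites, metrics):
    """Hypothetical composite: mean of direction-adjusted z-scores per site.

    sites: list of dicts with a "name" key plus one key per metric.
    metrics: metric name -> +1 if higher is better, -1 if lower is better.
    """
    oriented = {
        m: [direction * z for z in zscores([s[m] for s in sites])]
        for m, direction in metrics.items()
    }
    return {
        s["name"]: mean(oriented[m][i] for m in metrics)
        for i, s in enumerate(sites)
    }


def select_extremes(scores, k):
    """Return (top k, bottom k) site names ranked by composite score."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], ranked[-k:]
```

For example, with a wait-time metric (lower is better) and a satisfaction metric (higher is better), `select_extremes(composite_scores(sites, {"wait_days": -1, "pct_satisfied": 1}), 11)` would split 22 candidate sites evenly; equal weighting of metrics is itself an assumption.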

Expert Panel: Coming to Consensus on Effective Access Management Strategies

A key component in the sequence of events was establishing a stakeholder panel to support the ESP workgroup during the project. Panel composition was deliberately broad and included different perspectives with potentially conflicting interests (eg, patients, health care providers, administrators). The process involved an initial in-person meeting to establish priorities and follow-up teleconferences to develop recommendations for primary care access management. The 20 stakeholders first responded to written surveys to direct the ESP workgroup and then attended a 2-day meeting during which they established priorities for access management given the complexity of the topic. The meeting used different formats, ranging from plenary discussions to small breakout groups and parallel subpanels. All sessions were led by an experienced moderator. The panel was joined by additional interested VA staff and RAND researchers.

Panel discussions were informed by evidence, including a presentation of the evaluation results and online access to literature supporting the report. Consensus building followed a modified Delphi process with a prepanel survey, in-person discussion of disagreements, and an independent postpanel survey. Postpanel activities (eg, teleconferences, report writing) focused on establishing recommendations useful to health care delivery organizations wishing to improve access management. An additional online follow-up survey was sent to panel participants requesting feedback on the process and its potential impact on their home institutions.
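The comparison of prepanel and postpanel ratings in a modified Delphi process can be sketched as below. The text does not specify the rating scale or consensus rules, so the 1–9 scale, the median threshold of 7, and the 30% disagreement rule are assumptions chosen for illustration, as are the item name and ratings used in any example.

```python
from statistics import median

# Assumed 1-9 rating scale; these cut points are illustrative, not the panel's rules.
LOW_MAX = 3   # ratings 1-3 treated as "low priority"
HIGH_MIN = 7  # ratings 7-9 treated as "high priority"


def classify_item(ratings, priority_median=7, disagreement_share=0.3):
    """Label one candidate priority from a single survey round (hypothetical rules)."""
    n = len(ratings)
    share_low = sum(1 for r in ratings if r <= LOW_MAX) / n
    share_high = sum(1 for r in ratings if r >= HIGH_MIN) / n
    # Disagreement: substantial clusters of panelists at both ends of the scale.
    if share_low >= disagreement_share and share_high >= disagreement_share:
        return "disagreement"
    if median(ratings) >= priority_median:
        return "priority"
    return "not prioritized"


def compare_rounds(pre_panel, post_panel):
    """Map each item rated in both rounds to (prepanel label, postpanel label)."""
    return {
        item: (classify_item(pre_panel[item]), classify_item(post_panel[item]))
        for item in post_panel
        if item in pre_panel
    }
```

Items that move from "disagreement" before the meeting to "priority" afterward are the ones the in-person discussion helped resolve; in this sketch each round is scored independently, mirroring the independent postpanel survey described above.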

Dissemination Strategy: Communicating Evidence More Broadly

Throughout this 3-year experience, dissemination strategies were initiated within and outside VA with the intent of maximizing impact. We established timelines to meet health care providers' and administrators' needs, incorporated feedback loops to allow input from stakeholders, and identified national conferences and opportunities within VA to disseminate findings. Conference calls, presentations, and reports facilitated 2-way communication between VA leadership and the research teams.

RESULTS

ESP Report

The literature search identified 979 titles; 53 publications were included, of which 29 assessed 19 implementations of interventions to manage primary care access. Although details are in the full report,11 the key finding was that evidence on primary care access management is essentially limited to implementations of advanced or open access, and all but 3 publications appeared within a 10-year period (2001–2010). The most common metric for access to primary care, the third-next available appointment, lacked empirical data linking it to health outcomes. Continuity and patient satisfaction were also assessed, but likewise without a link to outcomes. Almost all research was conducted in adult populations, mostly in family medicine clinics, including the VA. Little detail was available on organizational context, although many studies were set in academic-affiliated clinics, Britain's National Health Service, or VA. The principal successful intervention, advanced/open access, included components to reduce backlogs, reconcile appointment types, and report activity regularly; 5 toolkits were available for dissemination.
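The third-next available appointment metric mentioned above is commonly described as the number of days from a request for care to the third open appointment slot; the third slot rather than the first is used because the first two may reflect chance openings such as cancellations. The minimal sketch below follows that description. Whether same-day slots count, which appointment types are included, and whether calendar or business days are used are assumptions that vary across organizations; here, calendar days are used and slots on or after the request date count.

```python
from datetime import date


def third_next_available(request_day, open_slots):
    """Calendar days from request_day to the third open slot on or after it.

    open_slots: dates of unbooked appointment slots (duplicates allowed, one
    entry per slot). Returns None if fewer than three qualifying slots exist.
    Counting same-day slots and using calendar days are assumptions.
    """
    future = sorted(slot for slot in open_slots if slot >= request_day)
    if len(future) < 3:
        return None
    return (future[2] - request_day).days


# Example: two open slots on May 3 and one on May 10, requested on May 2.
example = third_next_available(
    date(2016, 5, 2),
    [date(2016, 5, 1), date(2016, 5, 3), date(2016, 5, 3), date(2016, 5, 10)],
)  # 8 days; the May 1 slot precedes the request and is ignored
```

Because the measure depends only on the appointment grid, it is easy to compute routinely, which may help explain its popularity despite the lack of outcome data noted above.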

Most studies reported dramatic improvements in access, but studies of longer duration reported mixed results, with rising wait times and need for modifications to the access management strategies reported in 2 large and long-term studies.12,13 Although key intervention components were described, it is unclear which, if any, were associated with success. Thus, the expert panel and other methods were needed to further describe and understand access management both within and outside the VA.

Veteran Access to Care Evaluation

A parallel but related organizational initiative involved a multisite team of health services researchers formed in 2016 for a 3-year evaluation of access in VA with 2 foci. The first was a quantitative evaluation using VA administrative data of clinic wait times, patient-reported satisfaction with access, and measurement of non–face-to-face modalities of connecting patients to the health care system (eg, telemedicine, electronic consults, secure messages). The second was a 22-site in-person qualitative evaluation of the experience of health care providers and staff in adopting systematic changes to their primary care management structure.

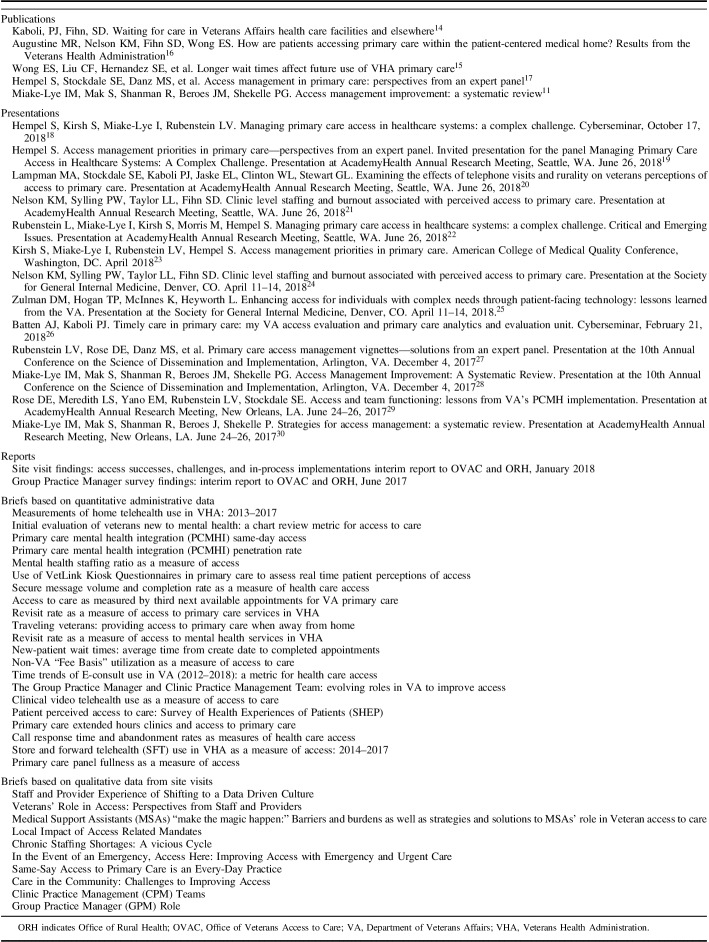

The quantitative evaluation team evaluated existing access metrics that incorporated both patient perceptions and objective measures of access. This evaluation produced several written internal VA reports, national presentations, access metric briefs for internal organizational use, and scientific journal manuscripts11,14–17 for broader dissemination (Table 2); additional manuscripts are in preparation and review. The metrics were in the domains of wait times (eg, third-next available, urgent care), virtual care (eg, secure messaging, telehealth), patient perceptions (eg, perceived wait for primary care), provider staffing (eg, ratio of patients to providers), and clinic use (eg, extended hours clinics, revisit rates). These metric reports will allow VA to determine which metrics are of greatest value for measuring access and which can be acted upon.

TABLE 2.

Publications, Presentations, and Reports

The qualitative evaluation used experienced site-visit teams for in-person evaluations in 2018, focusing on how the 22 selected clinic sites were addressing local access needs and responding to national access standards. A major component of the national initiative was the adoption of a Clinical Practice Management initiative, including the hiring of Group Practice Managers at each VA health care system with a primary focus on improving access. They received national-level training and local oversight to implement this new management structure. The evaluation indicated that cultural and process changes were required to adopt a new method of practice management. In some cases, this involved redesign of clinic work processes, staffing, and scheduling. Group Practice Managers had a key role in leading these efforts, including communicating with and educating staff about the goals of improving veteran access to care. They were also instrumental in managing expectations from top leadership to front-line providers and staff. Group Practice Managers were expected to monitor local clinic access data and respond to VA Central Office data requests. Despite national efforts to support the Clinical Practice Management initiative, providers and staff felt the Group Practice Manager role needed greater clarity, support, and training.

Additional insights gained from site visits included: (1) local clinical leadership needed encouragement and support to implement changes to improve access; (2) links to QI activities needed to be established rather than assumed to exist; (3) clinic staff and providers had knowledge gaps about implementing the Clinical Practice Management initiative; and (4) team members needed both access to and training on access metrics. Peer-reviewed publications with details of the findings are in preparation and review.

Expert Stakeholder Panel

Expert panel consensus-development activities resulted in a parsimonious set of 8 access management priorities: 2 organizational structure targets (ie, interdisciplinary primary care site leadership and a clearly identified group practice management structure); 4 process improvements (ie, patient telephone access management, contingency staffing, nurse management of demand through care coordination, and proactive demand management by optimizing provider visit schedules); and 2 outcomes (ie, quality of patients' experiences of access, and provider and staff morale in relation to supply-demand mismatch).

The process also resulted in 2 definitions of access management designed to facilitate QI: (1) Access management encompasses the set of goals, evaluations, actions, and resources needed to achieve patient-centered health care services that maximize access for defined eligible populations of patients; and (2) Optimal access management engages patients, providers, and teams in continuously improving care design and delivery to achieve optimal access.17

All 8 priority domains were translated into recommendations and documented in a detailed report.17 The panel established up to 3 recommendations for each priority domain, including concrete suggestions for implementing them in clinical practice; the recommendations were anchored in literature citations and accompanied by suggestions for further reading.

Overall Evaluation and Result Dissemination

The ESP report, Veteran Access to Care evaluation, and expert panel findings informed VA research activities, resulting in requests for research proposals that outlined the importance of improving access management. Multilevel engagement of stakeholders yielded a rigorous set of high-value recommendations for researchers tasked with characterizing how proposed research meets VA priorities. As a result, VA research leaders organized a systematic agenda-setting effort, bringing together 30 research and operations leaders to consider VA access-related information needs, discern which aspects warrant research (vs. evaluation/QI), and incentivize research that meets priority needs (eg, requests for proposals). This effort was followed by widespread dissemination of access research priorities and an uptick in relevant grant submissions. For example, one newly approved-for-funding study builds on this evidence-generating approach by proposing to develop a national organizational survey of access management strategies to explore associations of different strategies with patient-reported vs. administrative access metrics. Other proposals are in various stages of preparation and review.

Findings from the evidence-generating components were strategically disseminated through VA and independent venues. Presentations were given at AcademyHealth,22 the American College of Medical Quality, the Society of General Internal Medicine, and national cyberseminars for the Health Services Research and Development (HSR&D) Service (Table 2). Project activities also resulted in 2 public reports available to any health care delivery organization aiming to improve access.11,17

Impacts of LHS Approach to Evidence Generation and Action

Survey results from the in-person expert panel participants revealed the impact of the meeting, including the review of international research and VA-generated data. Participants valued the information received and also commented on the importance of disseminating information in large organizations generally. Positive effects included hearing different perspectives on access. A recurring theme regarding barriers to implementing the ideas was the lack of additional resources needed to make changes. Suggestions for additional approaches to help a LHS included more venues for dissemination, attention to maintaining improvement efforts, plans for implementing theoretical ideas, recognition that not all tools will work in all settings, and feedback loops that evaluate the suggestions. Of 40 participants, 12 (30%) responded; full details are shown in the Appendix (Supplemental Digital Content 1, http://links.lww.com/MLR/B837).

The impact on access-related policy and practice management in VA is less quantifiable. In response to the qualitative feedback on the need for greater role clarity and training, key interventions were initiated to improve the training of Group Practice Managers and to strengthen the overall Clinical Practice Management initiative. For example, pairing clinical and administrative staff using change management principles was critical to disseminating new knowledge and national guidance. The long-standing challenge of improving the VA telephone system was repeatedly identified, but because of the complexity of the issues and competing priorities, only slight improvement was made. Additional innovations may be adopted over time as more research findings are published and disseminated within the organization.

DISCUSSION

Commissioning an evidence-based report was the beginning of a cascade of work including exploration of unanswered questions, novel research and measurement discoveries, and policy changes and innovation. These results demonstrate what can be achieved in a LHS that employs evidence and expertise to address complex problems. Access challenges were highlighted in a 2001 NAS report that identified a lack of timeliness as one of the 6 principal features of the growing quality chasm in US health care.2 Central to that conceptualization were reducing wait times and avoiding harmful delays, principles that broadly define a vision of "access." In 2010, the VA convened a State of the Art conference to "examine the determinants of access, and the impact of access on utilization, quality and outcomes."31,32 Yet only a few years later, VA faced what was widely referred to as an access crisis. This painfully visible event not only represented a failure to provide access and timeliness; it also undermined the perceived efficacy of the VA as a LHS.

As described in this paper, VA adopted a more sophisticated LHS vision, one that aligns science, informatics, incentives, and culture toward continuous improvement and innovation, to address complex and persistent issues around access. Over 3 years, the authors aligned science (ESP report) and organizational culture (Veteran Access to Care evaluation and Expert Panel) to trigger work on the timeliness of VA care. Most importantly, the work reverberated through the VA system, stimulating research, measurement discoveries, policy changes, and innovation.

Commissioning an ESP report as a first step established not only a baseline for VA but also the current state of the science and a contextual approach for the work. This report was invaluable for understanding access metrics (eg, third-next available), limitations of existing research (eg, few validated measures), and the adoption of advanced or open-access principles as the "gold standard" in primary care access management.

The Veteran Access to Care evaluation was, in a sense, a real-world assessment of the access principles and concepts elucidated by the ESP. The ambitious undertaking of 22 site visits was a critical part of the LHS approach, but it may not be feasible, or even necessary, for all systems. The evaluation identified the need to align culture and incentives around the common goal of sound access and yielded a complex set of observations, including variability in how access data were collected and interpreted from site to site. This variability arose at diverse levels within the organization, including leadership engagement, front-line scheduling, and the dissemination of and training for the Group Practice Manager role.

The Expert Panel rounded out the process by bringing together VA and non-VA thought leaders in the domain of access to care. The structure of the group was informed by the ESP report and original research from the Veteran Access to Care evaluation, and the panel produced concrete, actionable recommendations for dissemination in a LHS. As is often the case with an expert panel, the product may be limited by the breadth of its membership in terms of views and system-based bias (eg, single-payer vs. fee-for-service model). Moreover, translating recommendations into action across a system as large and complex as VA is challenging.

Several limitations should be mentioned. First, by its very nature, an ESP is retrospective, running the risk of predominantly considering historical views of access. These views might in turn be shaped by typical throughput measures in a face-to-face, fee-for-service health care model. As the promise of novel virtual care modalities becomes a reality,32 concepts of timeliness, related measures of success, and models of care delivery will need to evolve as well. Second, the evaluation was limited to VA sites; applying this evaluative schema to non-VA sites might have yielded richer data. Third, VA's capture of patient satisfaction data regarding access to care is restricted largely to the Consumer Assessment of Healthcare Providers and Systems (CAHPS) surveys,33 which lack granularity, are limited in scope, and often diverge from measured access. Lastly, the quantitative evaluation was limited to available metrics of perceived success, which may themselves be constrained by VA's electronic medical record, data capture, and informatics culture. A broader range of leading and lagging measures of timeliness of care could have yielded a better quantitative understanding of site-to-site differences and somewhat different conclusions.

The quantitative and measurable impact of this journey on actual and perceived access in the VA health care system deserves further discussion. Although a recently published study comparing VA with the private sector (PS) concluded that "wait times in the VA and PS appeared to be similar in 2014, there have been interval improvements in VA wait times since then, while wait times in the PS appear to be static,"34 more evidence is needed, especially on veterans' perceptions. In addition, continuously evolving priorities and pressures are illustrated by the recently passed MISSION Act of 2018,35 with its specific charge to improve access to care both within VA and through further development of networks of non-VA providers and health care systems. By applying the principles of a LHS, VA can respond effectively to the mandate from Congress to meet the needs of the patients it serves.

A 2-year, multicomponent LHS approach to a complex systems-level problem like access confers many advantages and can result in a robust, evidence-based approach to organizational change. This work is limited by a lack of long-term outcome measures, competing organizational pressures, and idiosyncratic features that might confound measures of success; more research is needed to understand the critical element of sustainment. As shown in the ESP report, organizations can adopt the gold standard of advanced or open access, but few demonstrate long-term sustainment. This limited sustainment may stem from entrenched concepts of access shaped by a narrow view of the traditional throughput approach of face-to-face, fee-for-service medicine. This model relies on a supply-demand point of view and measures success through a utilization lens.

A more forward-looking approach should expand the concept of "timeliness" to reflect virtual care options with a robust sense of patient preference, the latter of which has not been well studied. And while timeliness and access to care no doubt remain a cornerstone of high-quality health care, focusing on them alone may limit the breadth of measured outcomes. Future research is needed to demonstrate alignment of objective and subjective access measures and other quality metrics. Lastly, operations-based research is needed to understand why management concepts in scheduling practices or group practice management do not translate easily from setting to setting.

Supplementary Material

Supplemental Digital Content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal's website, www.lww-medicalcare.com.

Footnotes

This material is based upon work supported (or supported in part) by the Department of Veterans Affairs, Veterans Health Administration, VA Office of Rural Health and the Office of Research and Development, Health Services Research and Development (HSR&D) Service through the Comprehensive Access and Delivery Research and Evaluation (CADRE) Center (CIN 13-412). E.M.Y. supported by a VA HSR&D Senior Research Career Scientist Award (RCS 05-195).

The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or the United States Government.

The authors declare no conflict of interest.

REFERENCES

- 1.Institute of Medicine (IOM). Best Care at Lower Cost: The Path to Continuously Learning Health Care in America. Washington, DC: IOM; 2012.

- 2.Institute of Medicine (IOM). Crossing the Quality Chasm. Washington, DC: IOM; 2001.

- 3.Atkins D, Kilbourne AM, Shulkin D. Moving from discovery to system-wide change: the role of research in a learning health care system: experience from three decades of health systems research in the Veterans Health Administration. Annu Rev Public Health. 2017;38:467–487.

- 4.Evidence-based Synthesis Program. 2018. Available at: www.hsrd.research.va.gov/publications/esp/. Accessed December 28, 2018.

- 5.Evidence-based Synthesis Program Reports. 2018. Available at: www.hsrd.research.va.gov/publications/esp/reports.cfm. Accessed December 28, 2018.

- 6.Institute of Medicine. Transforming Health Care Scheduling and Access: Getting to Now. Washington, DC: National Academies Press; 2015.

- 7.VA OIG Administrative Summary. Administrative Summary of Investigation by the VA Office of Inspector General in Response to Allegations Regarding Patient Wait Times. Department of Veterans Affairs; 2017. Available at: https://www.va.gov/oig/pubs/admin-reports/VAOIG-14-02890-126.pdf.

- 8.Campbell SM, Braspenning J, Hutchinson A, et al. Research methods used in developing and applying quality indicators in primary care. Qual Saf Health Care. 2002;11:358–364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Baker J, Lovell K, Harris N. How expert are the experts? An exploration of the concept of “expert” within Delphi panel techniques. Nurse Res. 2006;14:59–70. [DOI] [PubMed] [Google Scholar]

- 10.Danz MS, Hempel S, Lim YW, et al. Incorporating evidence review into quality improvement: meeting the needs of innovators. BMJ Qual Saf. 2013;22:931–939. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Miake-Lye IM, Mak S, Shanman R, et al. Access Management Improvement: A Systematic Review. Washington, DC: Department of Veterans Affairs; 2017. [PubMed] [Google Scholar]

- 12.Lukas CV, Meterko MM, Mohr D, et al. Implementation of a clinical innovation: the case of advanced clinic access in the Department of Veterans Affairs. J Ambul Care Manage. 2008;31:94–108. [DOI] [PubMed] [Google Scholar]

- 13.Pickin M, O’Cathain A, Sampson FC, et al. Evaluation of advanced access in the national primary care collaborative. Br J Gen Pract. 2004;54:334–340. [PMC free article] [PubMed] [Google Scholar]

- 14.Kaboli PJ, Fihn SD. Waiting for care in Veterans Affairs health care facilities and elsewhere. JAMA Netw Open. 2019;2:e187079.

- 15.Wong ES, Liu CF, Hernandez SE, et al. Longer wait times affect future use of VHA primary care. Healthcare (Amst). 2018;6:180–185.

- 16.Augustine MR, Nelson KM, Fihn SD, et al. How are patients accessing primary care within the patient-centered medical home? Results from the Veterans Health Administration. J Ambul Care Manage. 2018;41:194–203.

- 17.Hempel S, Stockdale S, Danz M, et al. Access management in primary care: perspectives from an expert panel. RAND Corporation. 2018. Available at: www.rand.org/pubs/research_reports/RR2536.html. Accessed December 31, 2018.

- 18.Hempel S, Kirsh S, Miake-Lye I, et al. Managing primary care access in healthcare systems: a complex challenge. Cyberseminar, October 17, 2018.

- 19.Hempel S. Access management priorities in primary care—perspectives from an expert panel. Invited presentation for the panel managing primary care access in healthcare systems: a complex challenge. Presentation at AcademyHealth Annual Research Meeting, Seattle, WA. June 26, 2018.

- 20.Lampman MA, Stockdale SE, Kaboli PJ, et al. Examining the effects of telephone visits and rurality on veterans’ perceptions of access to primary care. Presentation at AcademyHealth Annual Research Meeting, Seattle, WA. June 26, 2018.

- 21.Nelson KM, Sylling PW, Taylor LL, et al. Clinic level staffing and burnout associated with perceived access to primary care. Presentation at AcademyHealth Annual Research Meeting, Seattle, WA. June 26, 2018.

- 22.Rubenstein L, Miake-Lye I, Kirsh S, et al. Managing primary care access in healthcare systems: a complex challenge. Critical and emerging issues in HSR. AcademyHealth Annual Research Meeting, Seattle, WA. June 26, 2018.

- 23.Kirsh S, Miake-Lye I, Rubenstein LV, et al. Access management priorities in primary care. American College of Medical Quality Conference, Washington, DC. April 2018.

- 24.Nelson KM, Sylling PW, Taylor LL, et al. Clinic level staffing and burnout associated with perceived access to primary care. Presentation at the Society for General Internal Medicine, Denver, CO. April 11–14, 2018.

- 25.Zulman DM, Hogan TP, McInnes K, et al. Enhancing access for individuals with complex needs through patient-facing technology: lessons learned from the VA. Presentation at the Society for General Internal Medicine, Denver, CO. April 11–14, 2018.

- 26.Batten AJ, Kaboli PJ. Timely care in primary care: My VA access evaluation and primary care analytics and evaluation unit. Cyberseminar, February 21, 2018.

- 27.Rubenstein LV, Rose DE, Danz MS, et al. Primary care access management vignettes—solutions from an Expert Panel. Presentation at the 10th Annual Conference on the Science of Dissemination and Implementation, Arlington, VA. December 4, 2017.

- 28.Miake-Lye IM, Mak S, Shanman R, et al. Access management improvement: a systematic review. Presentation at the 10th Annual Conference on the Science of Dissemination and Implementation, Arlington, VA. December 4, 2017.

- 29.Rose DE, Meredith LS, Yano EM, et al. Access and team functioning: lessons from VA’s PCMH Implementation. Presentation at AcademyHealth Annual Research Meeting, New Orleans, LA. June 24–26, 2017.

- 30.Miake-Lye IM, Mak S, Shanman R, et al. Strategies for access management: a systematic review. Presentation at AcademyHealth Annual Research Meeting, New Orleans, LA. June 24–26, 2017.

- 31.Fortney J, Kaboli P, Eisen S. Improving access to VA care. J Gen Intern Med. 2011;26(suppl 2):621–622.

- 32.Fortney JC, Burgess JF Jr, Bosworth HB, et al. A re-conceptualization of access for 21st century healthcare. J Gen Intern Med. 2011;26(suppl 2):639–647.

- 33.Consumer Assessment of Healthcare Providers and Systems (CAHPS). 2018. Available at: www.ahrq.gov/cahps/index.html. Accessed December 31, 2018.

- 34.Penn M, Bhatnagar S, Kuy S, et al. Comparing wait times in the Veterans Administration and Private Sector. JAMA Netw Open. 2019. In press.

- 35.VA Mission Act of 2018 S.2372. 2018. Available at: www.govtrack.us/congress/bills/115/s2372. Accessed November 28, 2018.

Supplementary Materials

Supplemental Digital Content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal's website, www.lww-medicalcare.com.