Abstract

How do we recall vivid details from our past based only on sparse cues? Research suggests that the phenomenological reinstatement of past experiences is accompanied by neural reinstatement of the original percept. This process critically depends on the medial temporal lobe (MTL). Within the MTL, perirhinal cortex (PRC) and parahippocampal cortex (PHC) are thought to support encoding and recall of objects and scenes, respectively, with the hippocampus (HC) serving as a content-independent hub. If the fidelity of recall indeed arises from neural reinstatement of perceptual activity, then successful recall should preferentially draw upon those neural populations within content-sensitive MTL cortex that are tuned to the same content during perception. We tested this hypothesis by having eighteen human participants undergo functional MRI (fMRI) while they encoded and recalled objects and scenes paired with words. Critically, recall was cued with the words only. While HC distinguished successful from unsuccessful recall of both objects and scenes, PRC and PHC were preferentially engaged during successful versus unsuccessful object and scene recall, respectively. Importantly, within PRC and PHC, this content-sensitive recall was predicted by content tuning during perception: Across PRC voxels, we observed a positive relationship between object tuning during perception and successful object recall, while across PHC voxels, we observed a positive relationship between scene tuning during perception and successful scene recall. Our results thus highlight content-based roles of MTL cortical regions for episodic memory and reveal a direct mapping between content-specific tuning during perception and successful recall.

Keywords: episodic memory, fMRI, hippocampus, medial temporal lobe, parahippocampal cortex, perirhinal cortex

Significance Statement

Episodic memory, our ability to encode and later recall experiences, involves neural overlap between perceptual and recall activity. Research has shown that this phenomenon depends on the medial temporal lobe (MTL). Within the MTL, perirhinal (PRC) and parahippocampal (PHC) cortices are engaged during encoding and recall of objects and scenes, respectively, and both are linked to the content-independent hippocampus (HC). Here, we find that within MTL cortex, content tuning during perception predicts successful recall of that content: We observe a positive relationship between object tuning and object recall across PRC voxels, and between scene tuning and scene recall across PHC voxels. These results highlight the importance of stimulus content for understanding the MTL and demonstrate a clear mapping between content tuning and content recall.

Introduction

One of the most intriguing features of the human brain is its ability to recall vivid episodes from long-term memory in response to sparse cues. For example, the word “breakfast” may elicit recall of visual information including spatial (e.g., a bright kitchen) and object details (e.g., a croissant). This phenomenological reinstatement of past experiences is mirrored in cortical reinstatement, a neural reactivation of the original perceptual trace (Danker and Anderson, 2010). The medial temporal lobe (MTL) and its subregions play a key role in recall (Zola-Morgan and Squire, 1990; Eichenbaum et al., 2007). Anatomically, the MTL’s input/output regions, perirhinal cortex (PRC) and parahippocampal cortex (PHC), have differentially weighted reciprocal connections to the ventral and dorsal visual streams, respectively (Suzuki and Amaral, 1994a; Lavenex and Amaral, 2000; van Strien et al., 2009). They are therefore well suited to relay content-sensitive signals from sensory areas to the hippocampus (HC) during perception and encoding, and from the HC back to sensory areas during retrieval. Indeed, these parallel information streams converge in the HC, enabling it to support memory in a content-independent manner (Davachi, 2006; Eichenbaum et al., 2007; Danker and Anderson, 2010). In support of this view, human functional imaging studies have linked object-related versus spatial processing to PRC versus PHC for a range of tasks, including perception (Litman et al., 2009), context encoding (Awipi and Davachi, 2008; Staresina et al., 2011), reactivation after interrupted rehearsal (Schultz et al., 2012), and associative retrieval of object-scene pairs (Staresina et al., 2013b).
Conversely, the HC, instead of representing perceptual content, is thought to store indices linking distributed cortical memory traces (Teyler and DiScenna, 1986; Teyler and Rudy, 2007), and is thereby well suited to coordinate pattern completion from partial cues (Marr, 1971; Norman and O’Reilly, 2003; Staresina et al., 2012; Horner et al., 2015).

The reciprocity of MTL connectivity implies overlapping activity profiles between perception and retrieval in content-sensitive pathways and is thought to underlie cortical reinstatement (Eichenbaum et al., 2007; Danker and Anderson, 2010). Indeed, there is evidence that neural activity that was present during the original encoding of a memory is reinstated during retrieval, as demonstrated using univariate analyses of encoding-retrieval overlap (Nyberg et al., 2000; Wheeler et al., 2000; Kahn et al., 2004), correlative encoding-retrieval similarity (ERS) measures (Staresina et al., 2012; Ritchey et al., 2013), and multivariate decoding approaches (Polyn et al., 2005; Johnson et al., 2009; Mack and Preston, 2016; Liang and Preston, 2017). Moreover, cortical reinstatement scales with the reported fidelity of recall (Kuhl et al., 2011; Kuhl and Chun, 2014). Content-sensitive retrieval representations in higher-order visual cortex/MTL, as investigated here, may differ from frontoparietal representations by being closer to the perceptual trace (Favila et al., 2018). However, the precise topographical mapping of content-sensitivity at perception to cortical reinstatement at retrieval is unclear. If cortical reinstatement reflects a restoration of a distinct neural state present during the original encoding experience, then successful recall of a given content should predominantly draw on neural populations that distinguished that content from other content during perception. That is, the more strongly a neural population is tuned to a given content during perception, the more diagnostic it should be of successful recall of that content.

Here, we investigated the content-sensitivity of MTL subregions during episodic memory recall and how it maps onto content tuning during perception. To this end, we had participants undergo functional MRI (fMRI) while they encoded and retrieved adjectives paired with an object or scene image. During retrieval, they only saw the adjective cue and tried to recall the associated object or scene. If HC contributes to recall in a content-independent fashion (as predicted by MTL connectivity), we would expect similar HC involvement during cued recall of both objects and scenes. Conversely, since MTL anatomy predicts content-sensitivity in PRC and PHC, we expected a preference for object recall in PRC and for scene recall in PHC. Critically, within PRC, we expected a positive correlation such that voxels exhibiting stronger object tuning during perception would be recruited more strongly for successful object recall. In contrast, within PHC, we expected a positive correlation such that voxels exhibiting stronger scene tuning during perception would be recruited more strongly for successful scene recall.

Materials and Methods

Participants

A total of 34 volunteers (all right-handed, native English speakers, normal or corrected-to-normal vision) participated in the fMRI experiment. Sixteen participants were excluded from data analysis: one because of excessive movement, one because of non-compliance, and fourteen because of data loss caused by scanner malfunction. The results of the remaining n = 18 participants (11 female; mean age 22.7 years, range 18–33 years) are reported here. We note that the final sample size is within range, albeit on the lower end, of recent fMRI studies investigating content specificity in MTL cortex (Liang and Preston, 2017, n = 15; Mack and Preston, 2016, n = 24; Reagh and Yassa, 2014, n = 18; Staresina et al., 2012, n = 20). All participants gave written informed consent in a manner approved by the local ethics committee and were paid for their participation.

Stimuli and procedure

Stimuli consisted of 60 images of objects and 60 images of scenes (Konkle et al., 2010a,b) as well as 120 English adjectives (Staresina et al., 2011). Additionally, five objects, five scenes, and 10 adjectives were used for practice. Only one image was used per stimulus subcategory (e.g., desk or garden). Adjective-image pairs were randomized for each participant.

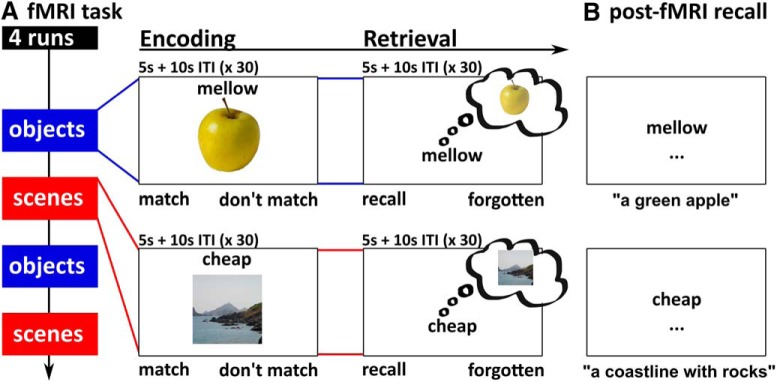

During fMRI, participants viewed stimuli via projection to a mirror mounted on the head coil and responded using an MR-compatible button box. The fMRI task (Fig. 1A) used a slow event-related design, consisting of four runs (two object runs, two scene runs). Object and scene runs were presented in an alternating order that was counterbalanced across participants. Each run included an encoding and a retrieval phase (30 trials each), as well as pre- and post-encoding resting phases (3 min each). In each trial of the encoding phase, participants saw an object or scene image (400 × 400 pixels) presented in the center of the screen together with an adjective. Participants were asked to press the left or right button on a right-hand button box if they thought the adjective and image matched or did not match, respectively (“decide whether the adjective could be used to describe the image”). Adjective-image pairs were presented for 5 s, followed by 10 s of an arrows task (active baseline task; Stark and Squire, 2001) during which participants indicated the direction of left- or right-pointing arrows by pressing the left or right button. In the retrieval phase, the adjectives from the encoding phase were presented again in randomized order. Adjectives were presented for 5 s, and participants were asked to press the left button if they successfully recalled the associated image, and the right button if they did not. Each retrieval trial was again followed by 10 s of the arrows task. Before and after encoding, participants additionally engaged in an odd-even numbers task for 180 s (offline resting phase), separated from the task phases by a transition screen (10 s each). In the odd-even task, participants were presented with random numbers between 1 and 99 and pressed the left button for even numbers and the right button for odd numbers. Altogether, each run lasted 22 min.
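As a consistency check on the timing described above, the stated trial and resting-phase durations can be summed and compared with the acquisition length (1300 volumes at TR = 1000 ms; see fMRI acquisition). The Python sketch below does only this arithmetic. The text does not state how many 10-s transition screens each run contained, so any leftover time is attributed to them only tentatively.

```python
# Timing components as stated in the text (seconds).
N_TRIALS = 30       # trials per phase (encoding and retrieval)
STIM_S = 5          # adjective-image pair (encoding) or adjective cue (retrieval)
ITI_S = 10          # arrows task after every trial
REST_S = 180        # odd-even task, once before and once after encoding
TR_S = 1.0          # repetition time
N_VOLUMES = 1300    # volumes acquired per run

encoding_s = N_TRIALS * (STIM_S + ITI_S)    # 450 s
retrieval_s = N_TRIALS * (STIM_S + ITI_S)   # 450 s
rest_s = 2 * REST_S                         # 360 s
stated_total_s = encoding_s + retrieval_s + rest_s
acquired_s = N_VOLUMES * TR_S

print(f"stated components: {stated_total_s} s = {stated_total_s / 60:.1f} min")
print(f"acquisition: {acquired_s:.0f} s = {acquired_s / 60:.1f} min")
print(f"not covered by stated components: {acquired_s - stated_total_s:.0f} s")
```

The stated components sum to 1260 s (21.0 min), and the acquisition covers 1300 s (about 21.7 min), which is consistent with the reported run length of about 22 min once the transition screens are included.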

Figure 1.

Experimental paradigm. A, The fMRI task consisted of two object and two scene runs, each comprising an encoding and a retrieval phase. During encoding, participants saw adjective-object or adjective-scene pairs. During retrieval, only the adjective was presented, and participants tried to recall the associated object or scene from memory. Not shown: each fMRI trial was followed by 10 s of an active baseline task (inter-trial interval, ITI, arrows task), and the encoding phase was preceded and followed by a resting phase (odd-even numbers task, 180 s; see main text for details). B, In the post-fMRI recall task, participants typed in descriptions of the associated object and scene for each adjective.

Since memory responses given during the fMRI task were subjective, two measures were taken to ensure that the scanned retrieval portion accurately captured brain activity related to success versus failure of recall. First, before the fMRI task, participants were explicitly instructed to press “recall” only if they could vividly recall details of the associated image and to press “forgotten” otherwise. Second, we employed a post-fMRI recall task (Fig. 1B) to obtain an objective memory measure. In this task, participants were again presented with each adjective, in the same order as during the fMRI retrieval phase, and typed a brief description of the associated image, or a “?” if the target image was not recalled.

Critically, only trials with matching subjective and objective memory responses entered fMRI analyses (i.e., subjective “recall” response during the fMRI task plus successful recall in the post-fMRI test, or subjective “forgotten” response during the fMRI task plus unsuccessful recall in the post-fMRI test). This resulted in the following conditions of interest: object-recalled (OR), object-forgotten (OF), scene-recalled (SR), scene-forgotten (SF).
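The trial-selection rule above can be expressed as a small Python function. This sketch assumes that each trial's post-fMRI description has already been scored as a boolean (recalled or not); the function names and input format are illustrative and not taken from the authors' analysis code.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def condition_label(content: str, subjective_recall: bool, objective_recall: bool) -> Optional[str]:
    """Map one retrieval trial to OR/OF/SR/SF, or None if the two memory measures disagree."""
    if content not in ("object", "scene"):
        raise ValueError(f"unknown content: {content!r}")
    if subjective_recall != objective_recall:
        return None  # non-matching trial: not part of the conditions of interest
    prefix = "O" if content == "object" else "S"
    suffix = "R" if subjective_recall else "F"
    return prefix + suffix


def count_conditions(trials: Iterable[Tuple[str, bool, bool]]) -> Counter:
    """Count trials per condition of interest; non-matching trials are tallied as 'excluded'."""
    counts = Counter()
    for content, subjective, objective in trials:
        counts[condition_label(content, subjective, objective) or "excluded"] += 1
    return counts


# Hypothetical trials: (content, "recall" pressed in scanner, recalled in post-fMRI test)
trials = [
    ("object", True, True),
    ("object", False, False),
    ("object", True, False),
    ("scene", True, True),
    ("scene", False, True),
    ("scene", False, False),
]
print(count_conditions(trials))
```

In this toy input, each condition of interest receives one trial and the two mismatching trials are set aside.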

fMRI acquisition

Brain data were acquired using a GE Discovery MR750 3T system (GE Medical Systems) and a 32-channel head coil. For the functional runs, we used a gradient-echo, echo-planar pulse sequence (48 slices, 2.5 mm isotropic voxels, TR = 1000 ms, TE = 30 ms, ascending acquisition order, multiband factor 3, 1300 volumes per run). The slice stack was oriented parallel to the longitudinal MTL axis and covered nearly the whole brain (in some participants with larger brains, superior frontal cortex was not covered). The first 10 images of each run were discarded before analysis to allow for stabilization of the magnetic field. Additionally, a high-resolution whole-brain T1-weighted structural image (1 × 1 × 1 mm, TR = 7.9 ms, TE = 3.06 ms) was acquired for each participant.

fMRI preprocessing and analysis

Regions of interest (ROIs) strategy

Considering the high anatomic variability of the MTL (Pruessner et al., 2002), all analyses were conducted in unsmoothed, single-participant space within anatomical ROIs of the MTL (HC, PRC, PHC). These were hand-drawn on each participant’s T1 image using existing guidelines (Insausti et al., 1998; Pruessner et al., 2000, 2002), and resampled to functional space. To maximize object versus scene sensitivity in the MTL cortex ROIs, considering gradual changes in content sensitivity along the parahippocampal gyrus (Litman et al., 2009; Liang et al., 2013), the posterior third of PRC and the anterior third of PHC were excluded from analysis (Staresina et al., 2011, 2012, 2013b). Across participants, the average number of voxels per bilateral ROI, in functional space and accounting for signal dropout, was 649.89 voxels (SEM: 15.07 voxels) for HC, 146.83 (11.68) for PRC, and 345.22 (10.92) for PHC. Signal dropout was defined through the implicit masking procedure in the SPM first-level GLM estimation, using a liberal masking threshold of 0.2.

Preprocessing

All analyses were conducted using MATLAB and SPM12. Functional images were first corrected for differences in acquisition time (slice time correction), then corrected for head movement and movement-related magnetic field distortions using the “realign and unwarp” algorithm implemented in SPM12. Structural images were then coregistered to the mean functional image before being segmented into gray matter, white matter, and CSF. Deformation fields from the segmentation procedure were used for Montreal Neurological Institute (MNI) normalization (used for visualization only, see Fig. 2A; all analyses were done in native space).

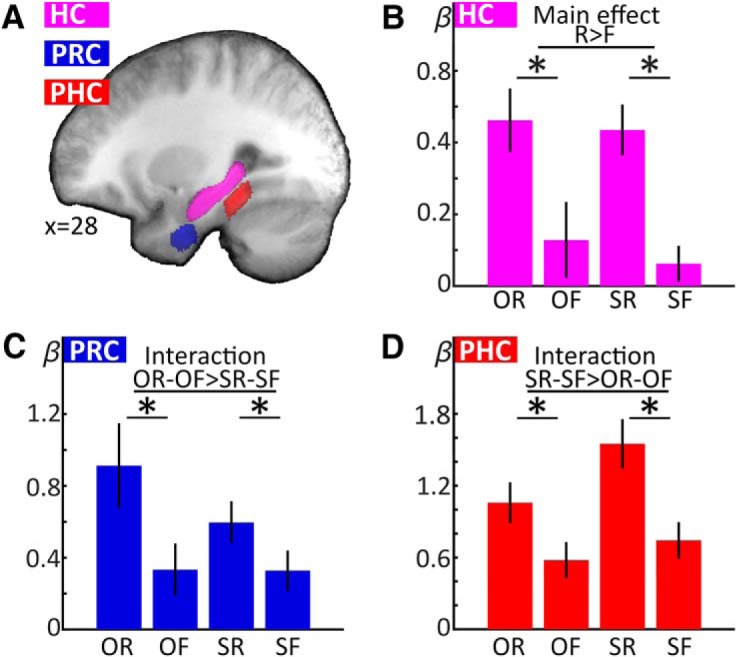

Figure 2.

MTL ROIs and univariate retrieval results. A, To illustrate ROI localization, manually delineated ROIs for each participant’s HC, PRC, and PHC were MNI normalized, averaged across participants, and projected on the mean normalized T1 (averaged ROI threshold > 0.5). B–D, Retrieval-phase β values were averaged within each participant’s individual ROIs and submitted to group analyses. HC (B) showed a main effect of successful recall, while PRC (C) and PHC (D) additionally showed interaction effects, indicating preference for object recall (PRC) and scene recall (PHC), respectively. O: object, S: scene, R: recalled, F: forgotten. Error bars denote SEM; *p < 0.05 (two-tailed) for pairwise t tests.

Univariate analyses

For the first-level general linear model, all runs were concatenated, and the high-pass filter (128 s) and autoregressive model AR(1) + w were adapted to account for run concatenation. Regressors for our conditions of interest (OR, OF, SR, and SF, separately for the encoding and retrieval phases) were modeled using a canonical hemodynamic response function (HRF), with each trial's duration set to its RT, assuming that memory-related processing of the stimulus is concluded at the time of the response. These regressors only included trials with matching memory responses during the fMRI task and post-fMRI recall. Non-matching trials (e.g., a recall response during the scan but failed explicit recall during the post-scan test) entered separate regressors of no interest. Additionally, the first-level model included non-convolved nuisance regressors for each volume of the transition and resting periods as well as run constants. The resulting β estimates from the retrieval phase were averaged across voxels within each participant's ROIs before entering a group-level repeated-measures ANOVA with the factors region, content, and recall success. In case of sphericity violations, the degrees of freedom were adjusted using Greenhouse–Geisser correction.
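To make the regressor construction concrete, the Python sketch below builds one condition regressor as a boxcar lasting each trial's RT, convolves it with a double-gamma HRF, and samples the result once per TR. The HRF uses the shape parameters commonly associated with SPM's canonical HRF (a peak gamma with shape 6 minus an undershoot gamma with shape 16, scaled by 1/6). The 0.1-s time grid and all function names are assumptions, and this is not a reimplementation of SPM's `spm_hrf` or its microtime handling.

```python
import math

import numpy as np


def gamma_pdf(t: np.ndarray, shape: float, scale: float = 1.0) -> np.ndarray:
    """Gamma probability density evaluated elementwise (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (math.gamma(shape) * scale ** shape))
    return out


def double_gamma_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Peak gamma (shape 6) minus undershoot gamma (shape 16) / 6, normalized to sum to 1."""
    t = np.arange(0.0, length_s + dt, dt)
    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def rt_regressor(onsets_s, rts_s, n_scans: int, tr: float, dt: float = 0.1) -> np.ndarray:
    """Boxcar lasting each trial's RT, convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, rt in zip(onsets_s, rts_s):
        start = int(round(onset / dt))
        stop = max(start + 1, int(round((onset + rt) / dt)))  # at least one time bin
        boxcar[start:stop] = 1.0
    convolved = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return convolved[::step]


# Hypothetical example: two trials at 10 s and 25 s with RTs of 1.8 s and 2.4 s.
reg = rt_regressor([10.0, 25.0], [1.8, 2.4], n_scans=60, tr=1.0)
```

Because the boxcar length follows the RT, slower responses yield larger and slightly later predicted responses, which is the intended consequence of assuming that processing ends at the response.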

Perception-retrieval overlap (PRO)

We asked whether, within each MTL cortex ROI (PRC, PHC), successful recall of a particular content is predicted, across voxels, by content tuning during perception. In that case, within PRC, there should be a positive correlation such that voxels that show stronger tuning to objects compared to scenes during perception should also be more engaged during successful compared to unsuccessful object recall. Similarly, within PHC, there should be a positive correlation such that voxels that show stronger tuning to scenes compared to objects during perception should be more engaged during successful compared to unsuccessful scene recall. This should be reflected in an across-voxel correlation of the effect sizes of the respective perception and recall contrasts, which we tested in the following way: We computed, for each participant, four t contrast images: (1, 2) the between-content perception contrasts from the encoding phase [objects > scenes (O > S), computed as (OR + OF) > (SR + SF), and scenes > objects (S > O), computed as (SR + SF) > (OR + OF), irrespective of subsequent memory outcome]; (3) the within-content recall contrast for objects from the retrieval phase (OR > OF); and (4) the within-content recall contrast for scenes from the retrieval phase (SR > SF). The t values across voxels were then vectorized for each participant and ROI. The PRO for objects (PRO-O) was defined as the Pearson correlation coefficient between the object perception contrast t values (O > S) and the object recall contrast t values (OR > OF). Likewise, the PRO for scenes (PRO-S) was defined as the Pearson correlation coefficient between the scene perception contrast t values (S > O) and the scene recall contrast t values (SR > SF). Note that we only included voxels with positive values in the perception contrast (O > S for PRO-O, S > O for PRO-S) in this analysis to ensure that correlations are carried by voxels tuned to objects rather than scenes for PRO-O, and to scenes rather than objects for PRO-S.
To ensure that these correlations would capture local rather than cross-hemispheric topographical relationships, the correlation coefficients were computed in left and right ROIs separately, then Fisher z-transformed and averaged across hemispheres. The resulting values were submitted to a two-way repeated-measures ANOVA with the factors region (PRC, PHC) and correlation type (PRO-O, PRO-S), and followed up with paired and one-sample t tests.
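The PRO computation described above can be sketched in Python with NumPy. The sketch assumes that, for one participant and one hemispheric ROI, the voxelwise t values of a perception contrast and a recall contrast are available as equal-length arrays; the function and constant names are hypothetical. Because reversing a contrast vector only flips the sign of its t values, the S > O map can be taken as the negative of the O > S map.

```python
import numpy as np

# Contrast weights over an illustrative regressor order [OR, OF, SR, SF].
OBJ_GT_SCENE = np.array([1, 1, -1, -1])  # O > S (encoding); S > O is its negative
OBJ_RECALL = np.array([1, -1, 0, 0])     # OR > OF (retrieval)
SCENE_RECALL = np.array([0, 0, 1, -1])   # SR > SF (retrieval)


def pro(perception_t, recall_t) -> float:
    """Pearson r across voxels, using only voxels with positive perception-contrast t values."""
    perception_t = np.asarray(perception_t, dtype=float)
    recall_t = np.asarray(recall_t, dtype=float)
    tuned = perception_t > 0
    if tuned.sum() < 3:
        raise ValueError("too few content-tuned voxels for a correlation")
    return float(np.corrcoef(perception_t[tuned], recall_t[tuned])[0, 1])


def pro_bilateral(left, right) -> float:
    """Fisher z-transform the left- and right-hemisphere PRO values, then average them."""
    z_left = np.arctanh(pro(*left))
    z_right = np.arctanh(pro(*right))
    return float((z_left + z_right) / 2)
```

With hypothetical arrays `t_o_gt_s`, `t_or_gt_of`, and `t_sr_gt_sf` for one hemisphere's ROI, PRO-O would be `pro(t_o_gt_s, t_or_gt_of)` and PRO-S would be `pro(-t_o_gt_s, t_sr_gt_sf)`. Computing r per hemisphere before averaging in z space keeps each correlation local, as described in the text.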

One possible concern is that PRO might be biased by temporal autocorrelations, which are greater within a run than between runs. Note, however, that the task consists of four functional runs (two object-only and two scene-only runs in alternating order), each containing an encoding and a retrieval phase. Thus, in PRO, we correlate a contrast containing data from all four runs (O vs S from all encoding phases) with contrasts containing data from only two runs (PRO-O: OR > OF; PRO-S: SR > SF). Consequently, both PRO-O and PRO-S correlate a contrast spanning all four runs with a contrast spanning two runs, making the overall temporal distance between the correlated contrasts equal for PRO-O and PRO-S. Moreover, whereas any bias arising from temporal autocorrelation should affect all brain regions similarly, we expected opposing patterns of PRO-O and PRO-S in PRC and PHC.

Control analysis 1: specificity

In the above analysis, we correlate, across voxels of each ROI, the object perception contrast with the object recall contrast for PRO-O, and the scene perception contrast with the scene recall contrast for PRO-S. Importantly, we use only voxels with positive values in the perception contrast, i.e., object-selective voxels for PRO-O and scene-selective voxels for PRO-S. We expect positive values for PRO-O but not PRO-S in PRC, and for PRO-S but not PRO-O in PHC. However, one might argue that such results lack specificity: The object perception contrast in PRC may correlate not only with object recall (PRO-O) but also with scene recall. Similarly, the scene perception contrast in PHC may correlate not only with scene recall (PRO-S) but also with object recall. This would indicate a non-specific relationship between perception and recall such that stronger content tuning during perception would predict stronger recall effects for either content. To control for this, we additionally computed the correlation between the object perception contrast (O > S, positive voxels only) and the scene recall contrast (SR > SF) for PRC, and the correlation between the scene perception contrast (S > O, positive voxels only) and the object recall contrast (OR > OF) for PHC.

Control analysis 2: signal-to-noise ratio

A second possible concern is that differences in signal-to-noise ratio (SNR) across voxels could bias PRO. Since the analysis is based on t contrasts between conditions, rather than estimates of activation in single conditions, we consider it unlikely that SNR gradients across voxels bias these results. Nevertheless, we additionally computed PRO as described above, but using partial Pearson correlations that included the temporal SNR of each voxel as a control variable. Temporal SNR was computed as the mean of the preprocessed, unfiltered functional time series divided by its standard deviation (separately per run, then averaged across runs).
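The SNR control can be sketched as follows. Temporal SNR per voxel is the time-series mean divided by its standard deviation (computed per run and then averaged). The partial correlation is obtained by regressing tSNR (plus an intercept) out of both contrast vectors and correlating the residuals. This residual-based approach is one standard way to compute a first-order partial Pearson correlation; whether the authors used it or an equivalent formula is not stated, and the use of the sample standard deviation (`ddof=1`) is an assumption.

```python
import numpy as np


def temporal_snr(timeseries: np.ndarray) -> np.ndarray:
    """Voxelwise tSNR = mean / SD over time; timeseries has shape (n_volumes, n_voxels)."""
    ts = np.asarray(timeseries, dtype=float)
    return ts.mean(axis=0) / ts.std(axis=0, ddof=1)


def run_averaged_tsnr(runs) -> np.ndarray:
    """Compute tSNR separately for each run's (n_volumes, n_voxels) array, then average."""
    return np.mean([temporal_snr(run) for run in runs], axis=0)


def partial_corr(x, y, covariate) -> float:
    """Pearson r of x and y after regressing covariate (plus intercept) out of both."""
    x, y, c = (np.asarray(v, dtype=float) for v in (x, y, covariate))
    design = np.column_stack([np.ones_like(c), c])
    resid_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    resid_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.corrcoef(resid_x, resid_y)[0, 1])
```

For the PRO control, the same positive-voxel mask as in the main analysis would be applied to the perception t values, the recall t values, and the tSNR vector before calling `partial_corr`.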

Results

Behavioral results

We queried successful recall of objects and scenes at two time points. During the fMRI task, participants merely responded “recall” or “forgotten” in response to each word cue (subjective recall). During a post-scan explicit word-cued recall task, participants typed in descriptions of the associated image, which were then scored by the authors (objective recall). Subjective responses during the fMRI task did not significantly differ by content (t(17) = 0.685, p = 0.502), with approximately 50% “recall” and “forgotten” responses for both objects and scenes [mean (SEM) % subjective recall responses: objects: 51.2 (1.8), scenes: 52.6 (2.6)]. Likewise, objective recall rates during the post-fMRI test did not significantly differ by content [t(17) = 0.043, p = 0.966, mean (SEM) % objective recall: objects: 38.2 (2.8), scenes: 38.3 (3.1)]. To test whether subjective recall responses in the scanner were more likely to be followed by objective recall during the post-fMRI test, we calculated the proportions of successful objective recall separately for subjective “recall” and “forgotten” responses, and submitted these to a two-way repeated-measures ANOVA with the factors content (objects, scenes) and subjective response (“recall”, “forgotten”). This analysis yielded a significant effect of subjective response (F(1,17) = 280.661, p < 0.001; no effect of content or interaction, ps ≥ 0.682): compared to subjective “forgotten” responses, subjective “recall” responses in the scanner were more likely to be followed by objective recall during the post-fMRI test, both for objects [mean (SEM) % objective recall: 67.1 (4.3) after subjective “recall” vs 9.0 (1.6) after subjective “forgotten”] and for scenes [66.5 (4.8) vs 8.2 (2.2)].
Note that only trials with consistent subjective and objective memory responses entered the fMRI analysis [i.e., in the fMRI analysis, “R” (“recalled”) corresponds to a subjective “recall” response during the fMRI task as well as accurate objective recall during the post-fMRI test; “F” (“forgotten”) corresponds to a subjective “forgotten” response during the fMRI task as well as failed objective recall during the post-fMRI test]. A repeated-measures ANOVA on the numbers of trials that entered the fMRI analysis, with the factors content (objects, scenes) and trial type (R, F), showed a significant effect of trial type (F(1,17) = 6.473, p = 0.021), with more F than R trials [mean (SEM) number of trials: OR: 19.8 (1.5), OF: 25.9 (1.3), SR: 20.1 (1.7), SF: 25.2 (1.6); no effect of content or interaction, ps ≥ 0.668]. All participants in the final sample contributed at least eight trials per regressor of interest (OR, OF, SR, SF).
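The behavioral measures above reduce to simple per-participant tallies. As an illustration, the sketch below computes the proportion of objective recall following subjective “recall” versus “forgotten” responses, and the numbers of R and F trials that would enter the fMRI analysis. The function names are hypothetical, and the input is assumed to be one participant's trials for one content, each coded as a pair of booleans.

```python
from collections import Counter


def objective_given_subjective(trials):
    """Proportion of objective recall, split by subjective response.

    trials: iterable of (subjective_recall: bool, objective_recall: bool) pairs.
    """
    totals, hits = Counter(), Counter()
    for subjective, objective in trials:
        key = "recall" if subjective else "forgotten"
        totals[key] += 1
        hits[key] += int(objective)
    return {key: hits[key] / totals[key] for key in totals}


def matching_counts(trials):
    """Numbers of R trials (both measures recalled) and F trials (both measures forgotten)."""
    trials = list(trials)
    r = sum(1 for s, o in trials if s and o)
    f = sum(1 for s, o in trials if not s and not o)
    return r, f


# Hypothetical trials for one participant and one content.
trials = [(True, True), (True, True), (True, False),
          (False, False), (False, True), (False, False), (False, False)]
print(objective_given_subjective(trials), matching_counts(trials))
```

The per-participant proportions returned by `objective_given_subjective` are what would enter the content × subjective response ANOVA, and the counts from `matching_counts` correspond to the trial numbers analyzed above.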

Content-independent versus content-sensitive retrieval processing in MTL subregions

Univariate analyses were conducted within bilateral single-participant ROIs of HC, PRC, and PHC (Fig. 2A). To characterize each MTL ROI with regard to its overall content-independent or content-sensitive response profile during retrieval, single-participant β values for the regressors of interest (OR, OF, SR, SF) from the retrieval phase were averaged across all voxels for each individual’s ROIs. ROI averages were then submitted to a repeated-measures three-way ANOVA with the factors region (HC, PRC, PHC), content (objects, scenes), and recall success (recalled, forgotten). This yielded a significant three-way interaction of region, content, and recall success (F(1.46,24.80) = 10.014, p = 0.002), as well as significant two-way interactions of region with content (F(1.42,24.10) = 13.544, p < 0.001) and region with recall success (F(1.81,30.79) = 6.305, p = 0.006).
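For reference, the Greenhouse–Geisser correction multiplies both degrees of freedom by an estimate ε of the departure from sphericity. With three regions and n = 18, the uncorrected degrees of freedom for effects involving region are (2, 34), so the reported F(1.46,24.80) for the three-way interaction corresponds to ε ≈ 0.73. The Python sketch below estimates ε from a participants × levels matrix using the double-centered covariance matrix. For an interaction, the columns would be per-participant interaction scores, for example the double difference (OR − OF) − (SR − SF) in each region. This reflects a standard formulation, not a description of the authors' software.

```python
import numpy as np


def greenhouse_geisser_epsilon(data: np.ndarray) -> float:
    """GG epsilon for a repeated-measures factor; data has shape (n_subjects, k_levels)."""
    data = np.asarray(data, dtype=float)
    k = data.shape[1]
    cov = np.cov(data, rowvar=False)                 # k x k covariance across levels
    centering = np.eye(k) - np.ones((k, k)) / k
    dc = centering @ cov @ centering                 # double-centered covariance
    return float(np.trace(dc) ** 2 / ((k - 1) * np.trace(dc @ dc)))


def corrected_df(epsilon: float, k: int, n: int) -> tuple:
    """Scale the effect df (k - 1) and error df (k - 1)(n - 1) by epsilon."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)


# Hypothetical data: 18 participants x 3 regions.
rng = np.random.default_rng(0)
eps = greenhouse_geisser_epsilon(rng.normal(size=(18, 3)))
```

ε is bounded between 1/(k − 1) and 1, so with three levels the correction can at most halve the degrees of freedom.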

Subsequent analyses were conducted separately for each ROI, using two-way repeated-measures ANOVAs (including the factors content and recall success; Fig. 2B–D). We expected content-independent recall in the HC, reflected in a main effect of successful recall. Conversely, we expected content-sensitive recall in the PRC and PHC, reflected in interaction effects of content and recall, with a preference for object recall in the PRC and scene recall in the PHC.

HC showed a significant main effect of successful recall (F(1,17) = 24.509, p < 0.001), but neither a main effect of content nor a recall success × content interaction (ps ≥ 0.496). PRC also showed a significant main effect of successful recall (F(1,17) = 18.137, p = 0.001) but, unlike HC, additionally showed a recall success × content interaction (F(1,17) = 4.579, p = 0.047) due to a stronger recall effect for objects relative to scenes. There was no main effect of content in PRC (p = 0.173). Finally, PHC showed significant main effects of content (F(1,17) = 16.804, p = 0.001) and recall success (F(1,17) = 27.329, p < 0.001), as well as a significant recall success × content interaction (F(1,17) = 7.723, p = 0.013) due to a stronger recall effect for scenes relative to objects. To further characterize each ROI’s response profile, we computed post hoc paired t tests to assess object recall effects (OR vs OF) and scene recall effects (SR vs SF) in each ROI. All individual comparisons were significant (ts(17) ≥ 2.667, ps ≤ 0.016). Critically, however, as indicated by the above interaction effects, the object recall effect was greater than the scene recall effect in PRC, and vice versa in PHC. Taken together, the ROI results show content-independent recall-related activity in HC versus a preference for object recall activity in PRC and for scene recall activity in PHC.

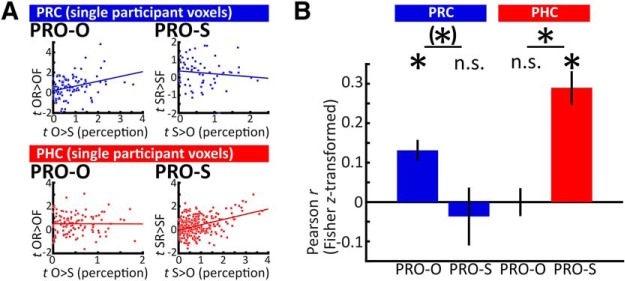

PRO

The preceding analysis established a preference for object recall in PRC and a preference for scene recall in PHC. Next, we assessed whether successful recall in these ROIs preferentially recruited voxels that were also diagnostic of object versus scene perception during encoding. Note that this approach goes beyond a simple overlap of contrasts (as in a conjunction analysis); rather than asking whether two contrasts exceed threshold in the same voxels, we ask whether there is a positive relationship between two contrasts such that voxels with a greater effect size in one contrast tend to show a greater effect size in the other (for illustrative participant-level data, see Fig. 3A). To this end, for each participant and ROI, we computed PRO-O [the correlation between the object perception contrast (O > S) from the encoding phase and the object recall contrast (OR > OF) from the retrieval phase], and PRO-S [the correlation between the scene perception contrast (S > O) from the encoding phase and the scene recall contrast (SR > SF) from the retrieval phase; for details, see Materials and Methods]. Note that PRO-O and PRO-S only included voxels tuned to either objects or scenes, as only voxels with positive values in the perception contrasts entered the correlation. We expected that PRC would show evidence for PRO-O: voxels that are more tuned to objects over scenes during perception would be preferentially recruited during successful compared to unsuccessful object recall. In PHC, we expected evidence for PRO-S: voxels that are more tuned to scenes over objects during perception would be preferentially recruited during successful compared to unsuccessful scene recall. We did not expect evidence for PRO-S in PRC or evidence for PRO-O in PHC.

Figure 3.

PRO. A, Illustrative data from two individual participants’ ROIs; t values from the objects > scenes perception contrast (x-axes, positive voxels only) are plotted against t values from the object recall contrast (PRO-O, left column), while t values from the scenes > objects perception contrast (positive voxels only) are plotted against the scene recall contrast (PRO-S, right column). Data points indicate single voxels. In these example data, PRC voxels with greater effect sizes for object perception tended to show greater effect sizes for successful object recall (upper left scatterplot). Similarly, PHC voxels with greater effect sizes for scene perception tended to show greater effect sizes for successful scene recall (lower right scatterplot). Note that these within-participant scatterplots are for visualization only. B, Group averages of Fisher z-transformed correlation coefficients for PRO-O and PRO-S for PRC and PHC. Across PRC voxels, object tuning predicted object recall (PRO-O), but scene tuning did not predict scene recall (PRO-S). Across PHC voxels, scene tuning predicted scene recall, but object tuning did not predict object recall; *p < 0.05, (*)p < 0.1 (two-tailed) for one-sample and paired t tests, n.s.: not significant. Error bars denote SEM.

Before assessing the correlation between perception and retrieval contrasts, we confirmed that PRC and PHC showed overall content tuning during perception. First, we tested whether the perception contrast yielded significant differences between objects and scenes when averaged across all voxels of each ROI. Second, we tested whether a majority of voxels in each ROI would show content tuning. Averaged across voxels, activation during object perception differed significantly from activation during scene perception for both PRC (objects > scenes, t(17) = 7.367, p < 0.001) and PHC (scenes > objects, t(17) = 7.640, p < 0.001). As expected, HC showed no significant content tuning (t(17) = 1.470, p = 0.160, numerically scenes > objects). Furthermore, the majority of PRC voxels showed object tuning, i.e., positive values in the O > S perception contrast [mean proportion: 63.70% (SEM: 1.60%); one-sample t test against 50%: t(17) = 8.59, p < 0.001], whereas the majority of PHC voxels showed scene tuning, i.e., positive values in the S > O perception contrast [69.93% (2.04%), t(17) = 9.78, p < 0.001]. In HC, a slight, nonsignificant majority of voxels were positive in the S > O contrast [S > O: 51.20% (1.16%); t(17) = 1.03, p = 0.315].
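The voxel-proportion test above amounts to computing, for each participant, the fraction of ROI voxels with a positive perception-contrast t value and then running a one-sample t test of these fractions against 0.5. The one-sample t statistic is (mean − 50%) / SEM, so for PRC (63.70 − 50) / 1.60 ≈ 8.6, which matches the reported t(17) = 8.59 up to rounding of the reported mean and SEM. The sketch below uses hypothetical function names. A paired t test, as used for PRO-O versus PRO-S, is the same one-sample test applied to per-participant differences against 0.

```python
import numpy as np


def proportion_positive(t_values) -> float:
    """Fraction of ROI voxels with a positive perception-contrast t value."""
    return float(np.mean(np.asarray(t_values, dtype=float) > 0))


def one_sample_t(values, popmean: float) -> float:
    """t statistic of a one-sample t test against popmean (df = n - 1)."""
    x = np.asarray(values, dtype=float)
    sem = x.std(ddof=1) / np.sqrt(x.size)
    return float((x.mean() - popmean) / sem)


# Hypothetical per-participant proportions of object-tuned PRC voxels.
print(one_sample_t([0.6, 0.7, 0.8], 0.5))
```

Voxels with a t value of exactly zero are counted as untuned here, matching the "positive values only" criterion used for PRO.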

Results from the PRO analysis are summarized in Figure 3B. First, to confirm differences between PRC and PHC, we submitted the Fisher z-transformed correlation coefficients to a two-way repeated-measures ANOVA with the factors region (PRC, PHC) and content (PRO-O, PRO-S). This confirmed a significant interaction between region and content (F(1,17) = 21.866, p < 0.001).
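For a 2 × 2 within-subject design such as region × content, the interaction F statistic equals the squared one-sample t statistic of each participant's difference of differences, which offers a compact way to check such a result. The sketch below assumes one Fisher z value per participant and condition; the function name and the example values are hypothetical and are not data from this study.

```python
import math
import statistics


def rm_anova_2x2_interaction(prc_o, prc_s, phc_o, phc_s):
    """Interaction F(1, n - 1) of a 2 x 2 repeated-measures ANOVA.

    Each argument holds one value per participant (here: Fisher z PRO values).
    For 1-df within-subject effects, F equals the squared one-sample t of the
    per-participant interaction contrast (PRC_O - PRC_S) - (PHC_O - PHC_S).
    """
    diffs = [(a - b) - (c - d) for a, b, c, d in zip(prc_o, prc_s, phc_o, phc_s)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)


# Hypothetical Fisher z values for three participants
F, df = rm_anova_2x2_interaction(
    prc_o=[0.30, 0.25, 0.40],
    prc_s=[0.05, 0.00, 0.10],
    phc_o=[0.02, 0.05, 0.00],
    phc_s=[0.27, 0.40, 0.30],
)
```

Here the interaction contrasts are 0.5, 0.6, and 0.6, giving t = 17 and therefore F(1, 2) = 289.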

In PRC, correlation coefficients between the object perception contrast at encoding and the object recall contrast were significantly above zero (PRO-O, t(17) = 4.910, p < 0.001), while correlation coefficients between the scene perception contrast at encoding and the scene recall contrast were not (PRO-S, t(17) = 0.500, p = 0.623). Furthermore, PRO-O was greater than PRO-S at trend level (t(17) = 2.073, p = 0.054). In contrast, in PHC, correlation coefficients between the scene perception contrast at encoding and the scene recall contrast were significantly above zero (PRO-S, t(17) = 6.832, p < 0.001), while correlation coefficients between the object perception contrast at encoding and the object recall contrast were not (PRO-O, t(17) = 0.008, p = 0.994). PRO-S was significantly greater than PRO-O (t(17) = 5.124, p < 0.001).
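The PRO computation described above can be sketched as follows, assuming that, for one ROI and participant, voxelwise t values of the perception contrast and of the recall contrast are available as parallel lists. All function names are hypothetical. The sketch restricts the correlation to voxels with positive perception values, applies the Fisher z transform via `math.atanh`, and computes group-level one-sample and paired t statistics (p values would again come from the t distribution). Note that a correlation of exactly ±1 has an infinite Fisher z.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def pro_z(perception_t, recall_t):
    """Fisher z of the across-voxel correlation, using positive perception voxels only."""
    pairs = [(p, r) for p, r in zip(perception_t, recall_t) if p > 0]
    x, y = zip(*pairs)
    return math.atanh(pearson_r(x, y))


def group_t(z_scores, other=None):
    """One-sample t against 0, or paired t against `other`; returns (t, df)."""
    d = z_scores if other is None else [a - b for a, b in zip(z_scores, other)]
    n = len(d)
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(n)), n - 1


# Hypothetical voxels: the fourth has a negative perception value and is excluded
z = pro_z([1.0, 2.0, 3.0, -1.0], [2.0, 4.0, 7.0, 100.0])
```

Per participant, `pro_z` would be called once with the O > S map and the object recall map (PRO-O) and once with the S > O map and the scene recall map (PRO-S). `group_t(pro_o)` then tests PRO-O against 0, and `group_t(pro_o, pro_s)` gives the paired comparison.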

To test whether these findings are restricted to MTL cortical regions, we repeated the above analysis in HC. PRO-S, but not PRO-O, differed significantly from 0 [mean (SEM) PRO-O: 0.017 (0.030), t(17) = 0.565, p = 0.580, PRO-S: 0.059 (0.025), t(17) = 2.330, p = 0.032]. Furthermore, PRO-O and PRO-S did not differ from each other (t(17) = 0.823, p = 0.422).

Our findings of PRO-O in PRC and PRO-S in PHC show that content tuning during perception in these ROIs predicts successful recall of that same content. To test the specificity of these findings, we repeated the analysis, this time testing whether content tuning would additionally predict recall of the non-preferred content. Such a result would imply a non-specific relationship between content tuning during perception and recall. Accordingly, in PRC, we correlated the object perception contrast (O > S, positive voxels only) with the scene recall contrast. Correlation coefficients did not differ significantly from 0 [mean (SEM): 0.031 (0.031), t(17) = 1.011, p = 0.323] and were significantly smaller than PRO-O (t(17) = 3.536, p = 0.003). In PHC, we correlated the scene perception contrast (S > O, positive voxels only) with the object recall contrast. Correlation coefficients were significantly greater than 0 [mean (SEM): 0.120 (0.043), t(17) = 2.789, p = 0.013]. Importantly, they were also significantly smaller than PRO-S (t(17) = 5.007, p < 0.001). In sum, across PRC voxels, object tuning during perception predicted object recall (PRO-O) but not scene recall, and there was no relationship between scene tuning and scene recall. In contrast, across PHC voxels, scene tuning during perception predicted scene recall (PRO-S) to a greater extent than object recall, and there was no relationship between object tuning and object recall.

As a second control analysis, we computed PRO-O and PRO-S for PRC and PHC using partial Pearson correlations with each voxel’s temporal SNR as a control variable (see Materials and Methods). The statistical pattern was nearly identical for both the ANOVA and follow-up t tests, with the exception of the paired t test between PRO-O and PRO-S in PRC, which was now significant (t(17) = 2.486, p = 0.024).
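A partial Pearson correlation removes the linear contribution of a third variable (here, each voxel's temporal SNR) from both contrasts before correlating them. The sketch below uses the standard first-order partial-correlation formula; that this matches the authors' implementation is an assumption, and the names and values are hypothetical.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, z):
    """Correlation of x and y controlling for z (first-order partial correlation)."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


perception = [1.0, 2.0, 3.0, 4.0]  # hypothetical O > S t values (positive voxels)
recall = [2.0, 1.0, 4.0, 3.0]      # hypothetical object recall t values
tsnr = [1.0, 3.0, 2.0, 4.0]        # hypothetical voxelwise temporal SNR
```

In this toy example, the raw correlation is 0.6 and the partial correlation is approximately 1.0. This is because tSNR explains the part of the perception variance that is unrelated to recall. In real data, the partial correlation can of course also be smaller than the raw one.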

Multiple comparisons correction

Throughout our univariate and PRO analyses, a number of paired and one-sample t tests were used to further characterize the result patterns. When we applied Holm–Bonferroni correction to each group of t tests in our main analyses, the following results emerged. For the univariate analysis, all paired t tests remained significant [n = 6 tests (OR vs OF and SR vs SF in all three ROIs); Fig. 2B–D; see above, Content-independent versus content-sensitive retrieval processing in MTL subregions]. For PRO, the significance pattern of the one-sample t tests against 0 remained identical [n = 4 tests (PRO-S and PRO-O in PRC and PHC); Fig. 3B; see above, PRO]. Similarly, for PRO, the significance pattern of the paired t tests remained identical [n = 2 tests (PRO-O vs PRO-S in PRC and PHC); Fig. 3B; see above, PRO], with a significant difference between PRO-O and PRO-S in PHC, and a trend-level difference in PRC.
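Holm–Bonferroni correction is a step-down procedure. The m p values are sorted in ascending order, and the k-th smallest (k = 1 to m) is compared with α/(m − k + 1). Testing stops at the first non-rejection. Equivalently, adjusted p values are the running maximum of (m − k + 1) × p(k), capped at 1. A minimal sketch follows. The function names are hypothetical, and because the text reports the PHC paired test only as p < 0.001, the value 0.0005 below is a placeholder.

```python
def holm_adjust(p_values):
    """Holm-adjusted p values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the k-th smallest p by (m - k + 1); enforce monotonicity.
        running_max = max(running_max, min(1.0, (m - rank) * p_values[i]))
        adjusted[i] = running_max
    return adjusted


def holm_reject(p_values, alpha=0.05):
    """True where the null hypothesis is rejected at family-wise level alpha."""
    return [p <= alpha for p in holm_adjust(p_values)]


# Paired PRO-O vs PRO-S tests: PRC (p = 0.054) and PHC (placeholder for p < 0.001)
paired_p = [0.054, 0.0005]
adjusted = holm_adjust(paired_p)
```

With these inputs, the PHC test remains significant (adjusted p = 0.001), while PRC stays at 0.054, i.e., a trend.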

Discussion

Investigating cued recall of objects and scenes in the human MTL, we observed a triple dissociation across MTL subregions: While HC was engaged during successful recall of both content types, PRC preferentially tracked successful object recall and PHC preferentially tracked successful scene recall. Moreover, we demonstrate an across-voxel mapping of content-sensitive recall effects in PRC and PHC to content tuning during the preceding encoding phase, suggesting that successful recall tends to draw on the same voxels that represent percepts with high specificity.

Before proceeding with the discussion, we offer some notes on terminology. We refer to the object versus scene contrasts as “tuning” responses to emphasize the fact that PRO is based on differential responses (one category over the other). As our stimuli are categorical, we do not mean to imply that these voxels respond in a graded fashion to a more or less optimal value of a continuous variable (Priebe, 2016). Furthermore, our results are agnostic to the debate over whether MTL processing contributes to perception or necessarily serves a mnemonic function (Bussey and Saksida, 2007; Baxter, 2009; Suzuki, 2009, 2010; Graham et al., 2010; Squire and Wixted, 2011). We refer to the observed content tuning in MTL cortex as perception because it results from sensory processing of objects and scenes, but we note that it may ultimately serve to encode representations into memory. In fact, as our perceptual contrast comes from the encoding phase of the experiment (albeit averaging successful and unsuccessful memory encoding), it may contain content-sensitive encoding activity (Staresina et al., 2011) in addition to perceptual activity. In future work, an objects/scenes contrast from an independent localizer could reduce the amount of concurrent encoding activity; however, it would not resolve the aforementioned question of whether MTL processing can be purely perceptual.

The present study provides strong evidence for an MTL memory model emphasizing an interplay of both content-sensitive and content-independent modules. According to this view, PRC and PHC show differential involvement in object and scene processing, respectively, based on their anatomical connectivity profiles with the ventral and dorsal visual streams. HC links both circuits through direct and indirect (via entorhinal cortex) connections to PRC and PHC, implying a content-independent role of HC in memory (Suzuki and Amaral, 1994a, b; Lavenex and Amaral, 2000; Davachi, 2006; Eichenbaum et al., 2007; van Strien et al., 2009; Wixted and Squire, 2011; Ranganath and Ritchey, 2012). Our findings of content-independent recall effects in HC, accompanied by preferential object recall in PRC and preferential scene recall in PHC, are in line with this view. Importantly, these connections are bidirectional (Suzuki and Amaral, 1994a, b; Lavenex and Amaral, 2000; Eichenbaum et al., 2007), enabling information transfer from visual cortex via PRC/PHC to HC during perception and encoding, and vice versa during retrieval (Staresina et al., 2013b). This parallelism of MTL connectivity may underlie the phenomenon of cortical reinstatement, the reactivation of the same sensory cortical regions during recall that were already active during perception (Eichenbaum et al., 2007; Danker and Anderson, 2010). Our findings extend this concept: even within content-sensitive cortical regions, voxels that are particularly tuned to one content type over the other during perception tend to be differentially reactivated when that content is successfully recalled. Importantly, such cortical reinstatement may underlie the psychological phenomenon of “re-living” episodic memories during vivid recall (Eichenbaum et al., 2007; Danker and Anderson, 2010; Kuhl et al., 2011; Kuhl and Chun, 2014).

Our results constitute an important update to an existing body of work investigating content-sensitive recall. Previous studies have investigated cortical reinstatement by comparing cued retrieval of object-related and spatial information. However, most did not focus on differences between MTL cortices (Khader et al., 2005, 2007; Kuhl et al., 2011; Gordon et al., 2014; Kuhl and Chun, 2014; Morcom, 2014; Skinner et al., 2014; Bowen and Kensinger, 2017; Lee et al., 2018). Those that did have demonstrated that recall success is accompanied by content-sensitive activity in MTL, largely in line with our present findings. Staresina et al. (2012) showed that PHC reinstates scene information, while PRC reinstates low-level visual information (color) during successful, but not unsuccessful, recall. Similarly, Staresina et al. (2013b) demonstrated content-sensitive recall responses in PHC and PRC during successful, but not unsuccessful, retrieval of object-scene associations, driven by content-independent HC signals. One study presented evidence that, during object-cued recall of famous faces and places, PRC and PHC reinstate perceptual activity from an independent localizer task in a category-specific manner, with face reinstatement in PRC and place reinstatement in PHC (Mack and Preston, 2016). While that study included only correct memory trials, making it difficult to directly link the observed category reinstatement in PRC and PHC to successful versus unsuccessful recall, the authors demonstrated that item-specific reinstatement in PRC and HC (but not PHC) predicted variations in subsequent response times for correct responses to a memory probe. Finally, one study showed a dissociation between PRC and PHC for the reinstatement of an imagery task (person vs place/object) during successful but not unsuccessful source memory, but that study did not involve perceptual processing during the imagery task (Liang and Preston, 2017).
To our knowledge, the present study is the first to demonstrate a clear double dissociation between PRC and PHC during successful versus unsuccessful object and scene recall triggered by a content-neutral cue, and to tie it to perceptual content tuning in a direct, voxel-wise manner.

Importantly, we observed preferential, but not exclusive, processing of objects and scenes in PRC and PHC, respectively. Both regions also showed significant recall effects for their less-preferred content. Furthermore, in PHC, voxels that were tuned to scenes over objects during perception were also more active during successful object recall, albeit significantly less so than during scene recall. Previous studies have shown such overlap in content sensitivity in the MTL, with some object processing in PHC and some scene processing in PRC (Buffalo et al., 2006; Preston et al., 2010; Hannula et al., 2013; Liang et al., 2013; Martin et al., 2013, 2018; Staresina et al., 2013b). In particular, content sensitivity in the MTL cortex may not be abruptly demarcated, but may instead follow a gradient (Litman et al., 2009; Liang et al., 2013). We sought to minimize this overlap by restricting analyses to the anterior two thirds of PRC and posterior two thirds of PHC, excluding the transition zone of the parahippocampal gyrus (Staresina et al., 2011, 2012, 2013b). Nevertheless, these two MTL subregions are not anatomically segregated, but show considerable interconnections (Suzuki and Amaral, 1994a; Lavenex and Amaral, 2000), facilitating cooperation. Furthermore, naturalistic scene images typically contain discernible objects, and many objects have a spatial/configurational component. A cardboard box, for example, may have the same general shape as a building, which has been shown to engage PHC (Epstein and Kanwisher, 1998). Similarly, object size modulates PHC activity (Cate et al., 2011; Konkle and Oliva, 2012). Thus, the significant (albeit weaker) responses of PRC and PHC during recall of their less-preferred content could stem from functional overlap in object and scene processing, or from ambiguity in the stimuli themselves.
Future studies could resolve this ambiguity by controlling object and spatial features in these stimuli, albeit perhaps at the expense of ecological validity.

It is important to note that our PRO analysis differs from existing approaches that test for pattern similarity between encoding and retrieval (pattern reinstatement). For instance, encoding–retrieval similarity (ERS) analyses have shown that, relative to forgotten trials, successfully remembered trials are more similar to their respective encoding trials (Staresina et al., 2012; Ritchey et al., 2013). Similarly, in multivariate pattern analysis (MVPA), a classifier may be trained on encoding trials to distinguish between voxel patterns associated with different content or tasks, and then tested on retrieval trials (Polyn et al., 2005; Johnson et al., 2009; Mack and Preston, 2016; Liang and Preston, 2017). PRO, on the other hand, relies on across-voxel correlations of contrasts rather than of single conditions or trials. Hence, voxels with low values in the perception contrast may still be highly activated relative to baseline. Previously, Haxby and colleagues used contrast correlations to demonstrate that content tuning during perception is stable between runs (Haxby et al., 2001). Here, we test contrast correlations between tasks; specifically, whether content tuning, i.e., the difference between object and scene responses, can predict activity associated with successful recall of objects (PRO-O) and scenes (PRO-S) across voxels. Unlike ERS and MVPA, PRO does not reflect pattern reinstatement in the strictest sense, as all correlations contain a contrast between both content types, whereas reinstatement assumes the reactivation of only one content type. Thus, PRO could be considered a more constrained form of a conjunction, or inclusive masking, analysis. These methods test whether two or more contrasts exceed some threshold in the same voxels, which implies topographical overlap of the constituent contrasts but, critically, not a positive correlation across voxels.
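The distinction between a conjunction and an across-voxel correlation can be made concrete with a toy example. The values are hypothetical, not data from this study, and the helper names are illustrative. In the example, two contrasts both exceed a threshold in every voxel, which satisfies a conjunction, yet they are perfectly negatively correlated across those voxels.

```python
import math
import statistics


def conjunction(a, b, threshold):
    """Voxelwise conjunction: True where both contrasts exceed the threshold."""
    return [x > threshold and y > threshold for x, y in zip(a, b)]


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


perception = [3.0, 4.0, 5.0, 6.0]  # hypothetical perception contrast t values
recall = [6.0, 5.0, 4.0, 3.0]      # hypothetical recall contrast t values

overlap = conjunction(perception, recall, threshold=2.0)  # all voxels pass
r = pearson_r(perception, recall)                         # -1.0
```

Full topographical overlap therefore says nothing about whether the voxels with the strongest tuning also show the strongest recall effects. Answering that question requires the across-voxel correlation that PRO tests.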
While our results likely reflect an influence of cortical reinstatement, they illuminate a distinct aspect of it compared to pattern similarity in the sense of ERS and MVPA. The latter methods demonstrate that distributed patterns of activity associated with a certain content are reinstated during recall, while our results link content-sensitive recall effects to voxels that are highly tuned to that content over another. A similar link has been demonstrated between content tuning and recognition memory for PRC activity (Martin et al., 2016). In that study, distributed voxel patterns in PRC that were diagnostic of face recognition also showed face-sensitive perceptual tuning. However, that study did not establish a positive across-voxel relationship between the magnitudes of the two effects.

Content-sensitive recall effects have been demonstrated in brain regions outside MTL that are not predominantly perceptual (Kahn et al., 2004; Johnson et al., 2009; Kuhl et al., 2013; Ritchey et al., 2013; Kuhl and Chun, 2014; Long et al., 2016; Xiao et al., 2017; Favila et al., 2018). What distinguishes these representations from those observed in MTL? Recent findings indicate that frontoparietal reinstatement effects may not only be stronger (Long et al., 2016) but also represent a transformed version of the original trace (Xiao et al., 2017) that is less perceptual in nature (Favila et al., 2018) and more modulated by retrieval goals (Kuhl et al., 2013). In contrast, reinstatement in ventral temporal lobe/MTL has been shown to be more incidental in nature (Kuhl et al., 2013). These findings underline that multiple systems are involved during successful recall, and while the overlap of perceptual and retrieval activity appears to be an important pillar of recollection, not all content-sensitive recall effects involve reinstatement of the exact perceptual trace.

Links can also be drawn between our PRO findings and other forms of memory, such as recognition memory and repetition suppression. In these paradigms, the stimuli themselves, rather than associative cues, are presented during retrieval, leading to concurrent perceptual and retrieval processes. Litman et al. (2009) showed similar gradients along the anterior-posterior MTL cortex axis (1) for processing of novel objects and scenes, and (2) for repetition suppression effects for objects and scenes (although there appears to be some overlap between object and scene repetition suppression effects; Berron et al., 2018). Prince et al. (2009) demonstrated differential effects of successful face and place recognition within clusters that responded preferentially to faces and places in general. Finally, Martin et al. (2016) demonstrated a link between face recognition and face tuning in PRC voxels.

Taken together, our results support an MTL model of episodic memory based on anatomical connectivity and demonstrate a direct topographical mapping between content-sensitive perception and recall in the MTL cortex. One remaining question is how mnemonic content is conveyed and transformed from content-sensitive MTL cortex to content-independent HC. Much of the information exchange between PRC/PHC and HC is relayed via the entorhinal cortex and its anterolateral and posteromedial subregions (Suzuki and Amaral, 1994b; Maass et al., 2015; Navarro Schröder et al., 2015), which have similarly been shown to support content-sensitive processing (Schultz et al., 2012; Reagh and Yassa, 2014; Navarro Schröder et al., 2015; Berron et al., 2018), albeit potentially in a more integrated fashion (Schultz et al., 2015). How entorhinal retrieval processing relates to content tuning is unclear, although there is evidence for reinstatement of encoding representations in the entorhinal cortex (Staresina et al., 2013a). Future research may investigate the relationship between encoding and retrieval in the entorhinal cortex by making use of advanced high-resolution and ultra-high field approaches, thereby enhancing our understanding of the human MTL in its entirety.

Synthesis

Reviewing Editor: Morgan Barense, University of Toronto

Decisions are customarily a result of the Reviewing Editor and the peer reviewers coming together and discussing their recommendations until a consensus is reached. When revisions are invited, a fact-based synthesis statement explaining their decision and outlining what is needed to prepare a revision will be listed below. Note: If this manuscript was transferred from JNeurosci and a decision was made to accept the manuscript without peer review, a brief statement to this effect will instead be what is listed below.

I have carefully read the three reviews provided from the Journal of Neuroscience, and I believe that the revised manuscript thoroughly and convincingly addresses all the comments raised by the reviewers. I see no additional value in having it re-reviewed. I congratulate the authors on an excellent piece of work and a valuable contribution to the literature.

References

- Awipi T, Davachi L (2008) Content-specific source encoding in the human medial temporal lobe. J Exp Psychol Learn Mem Cogn 34:769–779. 10.1037/0278-7393.34.4.769 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baxter MG (2009) Involvement of medial temporal lobe structures in memory and perception. Neuron 61:667–677. 10.1016/j.neuron.2009.02.007 [DOI] [PubMed] [Google Scholar]

- Berron D, Neumann K, Maass A, Schütze H, Fliessbach K, Kiven V, Jessen F, Sauvage M, Kumaran D, Düzel E (2018) Age-related functional changes in domain-specific medial temporal lobe pathways. Neurobiol Aging 65:86–97. 10.1016/j.neurobiolaging.2017.12.030 [DOI] [PubMed] [Google Scholar]

- Bowen HJ, Kensinger EA (2017) Recapitulation of emotional source context during memory retrieval. Cortex 91:142–156. 10.1016/j.cortex.2016.11.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buffalo EA, Bellgowan PSF, Martin A (2006) Distinct roles for medial temporal lobe structures in memory for objects and their locations. Learn Mem 13:638–643. 10.1101/lm.251906 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bussey TJ, Saksida LM (2007) Memory, perception, and the ventral visual-perirhinal-hippocampal stream: thinking outside of the boxes. Hippocampus 17:898–908. 10.1002/hipo.20320 [DOI] [PubMed] [Google Scholar]

- Cate AD, Goodale MA, Köhler S (2011) The role of apparent size in building- and object-specific regions of ventral visual cortex. Brain Res 1388:109–122. 10.1016/j.brainres.2011.02.022 [DOI] [PubMed] [Google Scholar]

- Danker JF, Anderson JR (2010) The ghosts of brain states past: remembering reactivates the brain regions engaged during encoding. Psychol Bull 136:87–102. 10.1037/a0017937 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davachi L (2006) Item, context and relational episodic encoding in humans. Curr Opin Neurobiol 16:693–700. 10.1016/j.conb.2006.10.012 [DOI] [PubMed] [Google Scholar]

- Eichenbaum H, Yonelinas AP, Ranganath C (2007) The medial temporal lobe and recognition memory. Annu Rev Neurosci 30:123–152. 10.1146/annurev.neuro.30.051606.094328 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein R, Kanwisher N (1998) A cortical representation of the local visual environment. Nature 392:598–601. 10.1038/33402 [DOI] [PubMed] [Google Scholar]

- Favila SE, Samide R, Sweigart SC, Kuhl BA (2018) Parietal representations of stimulus features are amplified during memory retrieval and flexibly aligned with top-down goals. J Neurosci 38:7809–7821. 10.1523/JNEUROSCI.0564-18.2018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gordon AM, Rissman J, Kiani R, Wagner AD (2014) Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cereb Cortex 24:3350–3364. 10.1093/cercor/bht194 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Graham KS, Barense MD, Lee ACH (2010) Going beyond LTM in the MTL: a synthesis of neuropsychological and neuroimaging findings on the role of the medial temporal lobe in memory and perception. Neuropsychologia 48:831–853. 10.1016/j.neuropsychologia.2010.01.001 [DOI] [PubMed] [Google Scholar]

- Hannula DE, Libby LA, Yonelinas AP, Ranganath C (2013) Medial temporal lobe contributions to cued retrieval of items and contexts. Neuropsychologia 51:2322–2332. 10.1016/j.neuropsychologia.2013.02.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001) Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430. 10.1126/science.1063736 [DOI] [PubMed] [Google Scholar]

- Horner AJ, Bisby JA, Bush D, Lin W-J, Burgess N (2015) Evidence for holistic episodic recollection via hippocampal pattern completion. Nat Commun 6:7462. 10.1038/ncomms8462 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen K, Vainio P, Laakso MP, Pitkänen A (1998) MR volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. AJNR Am J Neuroradiol 19:659–671. [PMC free article] [PubMed] [Google Scholar]

- Johnson JD, McDuff SGR, Rugg MD, Norman KA (2009) Recollection, familiarity, and cortical reinstatement: a multivoxel pattern analysis. Neuron 63:697–708. 10.1016/j.neuron.2009.08.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kahn I, Davachi L, Wagner AD (2004) Functional-neuroanatomic correlates of recollection: implications for models of recognition memory. J Neurosci 24:4172–4180. 10.1523/JNEUROSCI.0624-04.2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Khader P, Burke M, Bien S, Ranganath C, Rösler F (2005) Content-specific activation during associative long-term memory retrieval. Neuroimage 27:805–816. 10.1016/j.neuroimage.2005.05.006 [DOI] [PubMed] [Google Scholar]

- Khader P, Knoth K, Burke M, Ranganath C, Bien S, Rösler F (2007) Topography and dynamics of associative long-term memory retrieval in humans. J Cogn Neurosci 19:493–512. 10.1162/jocn.2007.19.3.493 [DOI] [PubMed] [Google Scholar]

- Konkle T, Brady TF, Alvarez GA, Oliva A (2010a) Conceptual distinctiveness supports detailed visual long-term memory for real-world objects. J Exp Psychol Gen 139:558–578. 10.1037/a0019165 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Konkle T, Brady TF, Alvarez GA, Oliva A (2010b) Scene memory is more detailed than you think: the role of categories in visual long-term memory. Psychol Sci 21:1551–1556. 10.1177/0956797610385359 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Konkle T, Oliva A (2012) A real-world size organization of object responses in occipitotemporal cortex. Neuron 74:1114–1124. 10.1016/j.neuron.2012.04.036 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl BA, Chun MM (2014) Successful remembering elicits event-specific activity patterns in lateral parietal cortex. J Neurosci 34:8051–8060. 10.1523/JNEUROSCI.4328-13.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl BA, Rissman J, Chun MM, Wagner AD (2011) Fidelity of neural reactivation reveals competition between memories. Proc Natl Acad Sci USA 108:5903–5908. 10.1073/pnas.1016939108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl BA, Johnson MK, Chun MM (2013) Dissociable neural mechanisms for goal-directed versus incidental memory reactivation. J Neurosci 33:16099–16109. 10.1523/JNEUROSCI.0207-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lavenex P, Amaral DG (2000) Hippocampal-neocortical interaction: a hierarchy of associativity. Hippocampus 10:420–430. [DOI] [PubMed] [Google Scholar]

- Lee H, Samide R, Richter FR, Kuhl BA (2018) Decomposing parietal memory reactivation to predict consequences of remembering. Cereb Cortex 29:3305–3318. [DOI] [PubMed] [Google Scholar]

- Liang JC, Preston AR (2017) Medial temporal lobe reinstatement of content-specific details predicts source memory. Cortex 91:67–78. 10.1016/j.cortex.2016.09.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liang JC, Wagner AD, Preston AR (2013) Content representation in the human medial temporal lobe. Cereb Cortex 23:80–96. 10.1093/cercor/bhr379 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Litman L, Awipi T, Davachi L (2009) Category-specificity in the human medial temporal lobe cortex. Hippocampus 19:308–319. 10.1002/hipo.20515 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Long NM, Lee H, Kuhl BA (2016) Hippocampal mismatch signals are modulated by the strength of neural predictions and their similarity to outcomes. J Neurosci 36:12677–12687. 10.1523/JNEUROSCI.1850-16.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maass A, Berron D, Libby LA, Ranganath C, Düzel E (2015) Functional subregions of the human entorhinal cortex. Elife 4 10.7554/eLife.06426 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mack ML, Preston AR (2016) Decisions about the past are guided by reinstatement of specific memories in the hippocampus and perirhinal cortex. Neuroimage 127:144–157. 10.1016/j.neuroimage.2015.12.015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marr D (1971) Simple memory: a theory for archicortex. Philos Trans R Soc Lond B Biol Sci 262:23–81. 10.1098/rstb.1971.0078 [DOI] [PubMed] [Google Scholar]

- Martin CB, McLean DA, O’Neil EB, Köhler S (2013) Distinct familiarity-based response patterns for faces and buildings in perirhinal and parahippocampal cortex. J Neurosci 33:10915–10923. 10.1523/JNEUROSCI.0126-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martin CB, Cowell RA, Gribble PL, Wright J, Köhler S (2016) Distributed category-specific recognition-memory signals in human perirhinal cortex. Hippocampus 26:423–436. 10.1002/hipo.22531 [DOI] [PubMed] [Google Scholar]

- Martin CB, Douglas D, Newsome RN, Man LL, Barense MD (2018) Integrative and distinctive coding of visual and conceptual object features in the ventral visual stream. Elife 7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morcom AM (2014) Re-engaging with the past: recapitulation of encoding operations during episodic retrieval. Front Hum Neurosci 8:351. 10.3389/fnhum.2014.00351 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Navarro Schröder T, Haak KV, Zaragoza Jimenez NI, Beckmann CF, Doeller CF (2015) Functional topography of the human entorhinal cortex. Elife 4 10.7554/eLife.06738 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Norman KA, O’Reilly RC (2003) Modeling hippocampal and neocortical contributions to recognition memory: a complementary-learning-systems approach. Psychol Rev 110:611–646. 10.1037/0033-295X.110.4.611 [DOI] [PubMed] [Google Scholar]

- Nyberg L, Habib R, McIntosh AR, Tulving E (2000) Reactivation of encoding-related brain activity during memory retrieval. Proc Natl Acad Sci USA 97:11120–11124. 10.1073/pnas.97.20.11120 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Polyn SM, Natu VS, Cohen JD, Norman KA (2005) Category-specific cortical activity precedes retrieval during memory search. Science 310:1963–1966. 10.1126/science.1117645 [DOI] [PubMed] [Google Scholar]

- Preston AR, Bornstein AM, Hutchinson JB, Gaare ME, Glover GH, Wagner AD (2010) High-resolution fMRI of content-sensitive subsequent memory responses in human medial temporal lobe. J Cogn Neurosci 22:156–173. 10.1162/jocn.2009.21195 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priebe NJ (2016) Mechanisms of orientation selectivity in the primary visual cortex. Annu Rev Vis Sci 2:85–107. 10.1146/annurev-vision-111815-114456 [DOI] [PubMed] [Google Scholar]

- Prince SE, Dennis NA, Cabeza R (2009) Encoding and retrieving faces and places: distinguishing process- and stimulus-specific differences in brain activity. Neuropsychologia 47:2282–2289. 10.1016/j.neuropsychologia.2009.01.021 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pruessner JC, Li LM, Serles W, Pruessner M, Collins DL, Kabani N, Lupien S, Evans AC (2000) Volumetry of hippocampus and amygdala with high-resolution MRI and three-dimensional analysis software: minimizing the discrepancies between laboratories. Cereb Cortex 10:433–442. 10.1093/cercor/10.4.433 [DOI] [PubMed] [Google Scholar]

- Pruessner JC, Köhler S, Crane J, Pruessner M, Lord C, Byrne A, Kabani N, Collins DL, Evans AC (2002) Volumetry of temporopolar, perirhinal, entorhinal and parahippocampal cortex from high-resolution MR images: considering the variability of the collateral sulcus. Cereb Cortex 12:1342–1353. 10.1093/cercor/12.12.1342 [DOI] [PubMed] [Google Scholar]

- Ranganath C, Ritchey M (2012) Two cortical systems for memory-guided behaviour. Nat Rev Neurosci 13:713–726. 10.1038/nrn3338 [DOI] [PubMed] [Google Scholar]

- Reagh ZM, Yassa MA (2014) Object and spatial mnemonic interference differentially engage lateral and medial entorhinal cortex in humans. Proc Natl Acad Sci USA 111:E4264–E4273. 10.1073/pnas.1411250111 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ritchey M, Wing EA, LaBar KS, Cabeza R (2013) Neural similarity between encoding and retrieval is related to memory via hippocampal interactions. Cereb Cortex 23:2818–2828. 10.1093/cercor/bhs258 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schultz H, Sommer T, Peters J (2012) Direct evidence for domain-sensitive functional subregions in human entorhinal cortex. J Neurosci 32:4716–4723. 10.1523/JNEUROSCI.5126-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schultz H, Sommer T, Peters J (2015) The role of the human entorhinal cortex in a representational account of memory. Front Hum Neurosci 9:628. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Skinner EI, Manios M, Fugelsang J, Fernandes MA (2014) Reinstatement of encoding context during recollection: behavioural and neuroimaging evidence of a double dissociation. Behav Brain Res 264:51–63. 10.1016/j.bbr.2014.01.033

- Squire LR, Wixted JT (2011) The cognitive neuroscience of human memory since H.M. Annu Rev Neurosci 34:259–288. 10.1146/annurev-neuro-061010-113720

- Staresina BP, Duncan KD, Davachi L (2011) Perirhinal and parahippocampal cortices differentially contribute to later recollection of object- and scene-related event details. J Neurosci 31:8739–8747. 10.1523/JNEUROSCI.4978-10.2011

- Staresina BP, Henson RNA, Kriegeskorte N, Alink A (2012) Episodic reinstatement in the medial temporal lobe. J Neurosci 32:18150–18156. 10.1523/JNEUROSCI.4156-12.2012

- Staresina BP, Alink A, Kriegeskorte N, Henson RN (2013a) Awake reactivation predicts memory in humans. Proc Natl Acad Sci USA 110:21159–21164. 10.1073/pnas.1311989110

- Staresina BP, Cooper E, Henson RN (2013b) Reversible information flow across the medial temporal lobe: the hippocampus links cortical modules during memory retrieval. J Neurosci 33:14184–14192. 10.1523/JNEUROSCI.1987-13.2013

- Stark CE, Squire LR (2001) When zero is not zero: the problem of ambiguous baseline conditions in fMRI. Proc Natl Acad Sci USA 98:12760–12766. 10.1073/pnas.221462998

- Suzuki WA (2009) Perception and the medial temporal lobe: evaluating the current evidence. Neuron 61:657–666. 10.1016/j.neuron.2009.02.008

- Suzuki WA (2010) Untangling memory from perception in the medial temporal lobe. Trends Cogn Sci 14:195–200. 10.1016/j.tics.2010.02.002

- Suzuki WA, Amaral DG (1994a) Perirhinal and parahippocampal cortices of the macaque monkey: cortical afferents. J Comp Neurol 350:497–533. 10.1002/cne.903500402

- Suzuki WA, Amaral DG (1994b) Topographic organization of the reciprocal connections between the monkey entorhinal cortex and the perirhinal and parahippocampal cortices. J Neurosci 14:1856–1877. 10.1523/JNEUROSCI.14-03-01856.1994

- Teyler TJ, DiScenna P (1986) The hippocampal memory indexing theory. Behav Neurosci 100:147–154.

- Teyler TJ, Rudy JW (2007) The hippocampal indexing theory and episodic memory: updating the index. Hippocampus 17:1158–1169. 10.1002/hipo.20350

- van Strien NM, Cappaert NLM, Witter MP (2009) The anatomy of memory: an interactive overview of the parahippocampal-hippocampal network. Nat Rev Neurosci 10:272–282. 10.1038/nrn2614

- Wheeler ME, Petersen SE, Buckner RL (2000) Memory’s echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci USA 97:11125–11129. 10.1073/pnas.97.20.11125

- Wixted JT, Squire LR (2011) The medial temporal lobe and the attributes of memory. Trends Cogn Sci 15:210–217. 10.1016/j.tics.2011.03.005

- Xiao X, Dong Q, Gao J, Men W, Poldrack RA, Xue G (2017) Transformed neural pattern reinstatement during episodic memory retrieval. J Neurosci 37:2986–2998. 10.1523/JNEUROSCI.2324-16.2017

- Zola-Morgan S, Squire LR (1990) The neuropsychology of memory. Parallel findings in humans and nonhuman primates. Ann NY Acad Sci 608:434–450; discussion 450–456. 10.1111/j.1749-6632.1990.tb48905.x