Abstract

This study was aimed at describing temporal synergies of hand movement and determining the influence of sensory cues on the control of these synergies. Subjects were asked to reach to and grasp various objects under three experimental conditions: (1) memory-guided movements, in which the object was not in view during the movement; (2) virtual object, in which a virtual image of the object was in view but the object was not physically present; and (3) real object, in which the object was in view and physically present. Motion of the arm and of 15 degrees of freedom of the hand was recorded. A principal components analysis was developed to provide a concise description of the spatiotemporal patterns underlying the motion. Vision of the object during the reaching movement had no influence on the kinematics, and the effect of the physical presence of the object became manifest primarily after the fingers had contacted the object. Two principal components accounted for ∼75% of the variance. For both components, there was a strong positive correlation in the rotations of metacarpophalangeal and proximal interphalangeal joints of the fingers. The first principal component exhibited a pattern of finger extension reversing to flexion, whereas the second principal component became important only in the second half of the reaching movement.

Keywords: grasping, fingers, kinematics, synergies, memory guided, visually guided

As the hand reaches out to grasp an object, its shape gradually evolves into a posture appropriate for that object. Perhaps the best-known manifestation of this phenomenon is the finding that the maximum aperture of the hand, reached early in the movement, is scaled to the size of the object (Jeannerod, 1986; Chieffi and Gentilucci, 1993, 1999). However, hand posture in grasp depends on other factors as well, among them the shape of the object (Santello and Soechting, 1998) and its intended use (Napier, 1956). The manner in which the motions of individual finger joints are coordinated to produce a particular hand shape and the influence of sensory information (visual and tactile) on the evolving movement remain relatively unexplored. In this paper, we take up these questions.

Recently we described the results of an experiment in which subjects reached and shaped the hand as if to grasp various familiar objects (Santello et al., 1998). Focusing on the static hand posture at the end of the reach, we found (using principal components analysis) that two patterns of coordination could account for a large portion (>80%) of the variability. However, small, higher order principal components provided additional information about the object that was intended to be grasped. Because that study was restricted to static postures, we did not characterize the time course of the motion. Is there a strict pattern of temporal covariation among all of the joints such as has been described for the proximal arm (for review, see Georgopoulos, 1986; Soechting and Flanders, 1991; Desmurget et al., 1998)? Alternatively, is there a proximal-to-distal progression of finger motion, or is the pattern variable? To address these questions, we have extended our previous analysis to the temporal domain.

In the previous study, the subjects' movements were prompted by imagination and guided by memory, and the subjects never actually saw the object that they were to grasp. However, it has been proposed that memory-guided movements are controlled by neural mechanisms with substrates that are different from those controlling visually guided movements (Goodale et al., 1994). More generally, vision of the object and the hand could affect the kinematics during the course of the movement (Prablanc et al., 1986). Furthermore, tactile cues available at the end of the movement can provide a powerful stimulus for the control of finger muscles (Johansson and Cole, 1992), and it is possible that subjects also take advantage of the hand's compliance in grasping an object (Hajian and Howe, 1997).

The present study was designed to characterize the temporal coordination of finger motion during the movement to grasp an object. An additional aim was to assess the extent to which continuous visual feedback and the physical presence of the object contribute to the kinematic coordination patterns during the reach and at contact with the object. We addressed these questions by asking the subjects to grasp remembered objects (in pantomime), the projected image of virtual objects, and objects that were physically present.

MATERIALS AND METHODS

Experimental tasks. We asked four subjects to reach and grasp various objects in three experimental conditions. The subjects gave informed consent to the procedures, which were approved by the Institutional Review Board of the University of Minnesota.

For experiments 1 (remembered objects) and 2 (virtual objects), we used a concave focusing mirror to project a three-dimensional (3-D) image of the object in front of the seated subject (Schneider et al., 1995). The object appeared to be semitransparent, and the subject's hand could occlude the object as well as pass through it. Before the experiment was started, the distance and orientation of the subject relative to the mirror were adjusted to project the 3-D image of the object at a comfortable reaching distance and height, i.e., at a distance slightly shorter than arm's length and at shoulder height.

For experiment 1, subjects were shown each object for ∼2 sec, after which the projected image was extinguished. As soon as the image disappeared, subjects were to reach and mold the hand to the remembered contours as if they were grasping the object. For experiment 2, we asked subjects to reach and grasp the same set of objects; however, now they were allowed to view the virtual image throughout the entire reaching and grasping movement. Experiment 3, which was used as a control for the first two experiments, consisted of reaching and actually making contact with the same objects, which were placed at the same location as the virtual objects in experiments 1 and 2.

As was done in an earlier study (Santello et al., 1998), we selected objects spanning a wide variety of shapes and sizes to best characterize the modulation of hand posture as a function of object geometry. The same set of 20 objects (Table 1) was used for each experiment. The three tasks were presented within a single recording session in a sequential order, i.e., experiments 1, 2, and then 3. Subjects performed a total of 100 trials (5 trials per object) for each experiment, and a different (randomly chosen) object was presented in each consecutive trial.

Table 1.

List of objects used in the tasks

| No. | Object |
|---|---|
| 1 | Beer mug |
| 2 | Bowl |
| 3 | Calculator |
| 4 | Chinese tea cup |
| 5 | Coffee mug |
| 6 | Coke bottle |
| 7 | Compact disc |
| 8 | Computer mouse |
| 9 | Frying pan |
| 10 | Iron |
| 11 | Key |
| 12 | Pen |
| 13 | Playing card |
| 14 | Scissors |
| 15 | Screw driver |
| 16 | Skewer |
| 17 | Stapler |
| 18 | Tea spoon |
| 19 | Videotape |
| 20 | Wrench |

In all experiments, the elbow and wrist initially rested on a flat surface, the forearm was horizontal, the arm was oriented in the parasagittal plane passing through the shoulder, and the hand was in a semipronated position. Subjects were instructed to maintain the same initial hand posture (a loosely clenched fist) before initiating each reaching movement.

Data acquisition and analysis. Hand posture was measured by 15 sensors embedded in a glove (CyberGlove; Virtual Technologies, Palo Alto, CA) as described previously (Santello and Soechting, 1997, 1998; Santello et al., 1998). We measured the angles at the metacarpophalangeal (mcp) and proximal interphalangeal (pip) joints of the four fingers as well as the angles of abduction (abd) between adjacent fingers. For the thumb, the mcp, abd, and interphalangeal (ip) angles were measured, as was the angle of thumb rotation (rot) about an axis passing through the trapeziometacarpal joint of the thumb and the index mcp joint. Flexion and abduction were defined as positive; the mcp and pip angles were defined as 0° when the finger was straight and in the plane of the palm. At the thumb, positive values of thumb rotation denoted internal rotation. Wrist pitch and yaw were also measured with this device. The spatial resolution of the CyberGlove was <0.1°. The output of the transducers was sampled at 12 msec intervals.

We used a Polhemus system to track the three-dimensional position of the wrist during the reach (sampling frequency: 120 Hz). Wrist velocity, obtained by numerically differentiating the position records, was used to determine the onset and termination of the reaching movement, defined as the times at which the tangential velocity crossed a threshold of 5% of peak velocity. Because the grasping movement is not yet completed when the wrist stops, data were analyzed beyond the termination of the proximal arm movement, for an additional 20% of the normalized reach duration. After normalizing the duration of each reaching–grasping movement (from 0 at onset to 1.2, with 1 marking wrist termination) and resampling the data at intervals of 0.01 of the normalized movement time, we analyzed the hand postures during this interval using (1) discriminant analysis and (2) principal components analysis.
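As an illustration of the onset/termination criterion and the time normalization described above, the following is a minimal Python/NumPy sketch. It assumes wrist positions stored as an (n, 3) array with time stamps in seconds, and glove angles stored as an (n, n_joints) array with their own time stamps (the glove and the Polhemus tracker ran at different rates). The function names, the use of `np.gradient` for differentiation, and linear interpolation for resampling are our assumptions, not necessarily the procedures used in the study.

```python
import numpy as np

def movement_bounds(t, wrist_xyz, frac=0.05):
    """Onset and termination times of the reach, taken where the wrist's
    tangential speed crosses `frac` (5% in the text) of its peak value."""
    vel = np.gradient(wrist_xyz, t, axis=0)      # numerical differentiation
    speed = np.linalg.norm(vel, axis=1)          # tangential velocity
    thresh = frac * speed.max()
    i_peak = int(np.argmax(speed))
    # Onset: first sample after the last sub-threshold sample preceding the peak.
    below = np.nonzero(speed[:i_peak] < thresh)[0]
    i_on = below[-1] + 1 if below.size else 0
    # Termination: first sub-threshold sample following the peak.
    below = np.nonzero(speed[i_peak:] < thresh)[0]
    i_off = i_peak + below[0] if below.size else len(t) - 1
    return t[i_on], t[i_off]

def normalize_time(t, angles, t_on, t_off, t_max=1.2, step=0.01):
    """Resample joint angles (n_samples, n_joints) onto normalized time
    0..t_max, where 1.0 marks wrist termination, by linear interpolation."""
    tau = (t - t_on) / (t_off - t_on)
    grid = np.round(np.arange(0.0, t_max + step / 2, step), 10)
    resampled = np.column_stack([np.interp(grid, tau, a) for a in angles.T])
    return grid, resampled
```

With `t_max=1.2` and `step=0.01` the grid has 121 samples, matching the 0 to 1.2 window; angles recorded at the glove's own rate are passed with the glove's time stamps, while `t_on` and `t_off` come from the tracker.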

We used discriminant analysis (Johnson and Wichern, 1992) to determine the extent to which hand postures differed from each other as a function of object geometry. Discriminant functions were computed throughout the reaching–grasping movement at intervals of 0.1 of the normalized movement time. Discriminant functions are the linear combinations of the joint angles that maximize the ratio of the between-group variance to the within-group variance [for more details, see Santello and Soechting (1998)]. In our experiment, each group corresponded to the data sets from the five trials for 1 of the 20 objects. The results of the discriminant analysis were used to construct a confusion matrix (Sakitt, 1980) that provided a summary of the extent to which hand posture on each trial could correctly predict the object that was grasped. This was quantified by computing a sensorimotor efficiency index (SME), defined as the ratio between the information transmitted by hand posture and the maximum possible amount of information that could be transmitted (Sakitt, 1980; Santello and Soechting, 1998; Santello et al., 1998).
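Given a confusion matrix from the discriminant classification (rows: object presented; columns: object predicted from hand posture), the SME can be sketched as the mutual information of the matrix divided by log2 of the number of objects. This sketch assumes every object was presented equally often, so that log2(20) bits is the maximum transmissible information; the discriminant classification step itself is not shown, and the function name is hypothetical.

```python
import numpy as np

def sensorimotor_efficiency(confusion):
    """Information transmitted by a confusion matrix (counts), divided by
    the maximum transmissible information log2(n_objects)."""
    p = np.asarray(confusion, dtype=float)
    p = p / p.sum()                            # joint p(presented, predicted)
    p_row = p.sum(axis=1, keepdims=True)       # p(presented object)
    p_col = p.sum(axis=0, keepdims=True)       # p(predicted object)
    expected = p_row @ p_col                   # product of the marginals
    nz = p > 0                                 # treat 0 * log 0 as 0
    info = np.sum(p[nz] * np.log2(p[nz] / expected[nz]))
    return info / np.log2(p.shape[0])
```

A perfectly diagonal 20 × 20 matrix (every trial classified correctly) gives SME = 1, and a uniform matrix (hand posture carries no information about the object) gives SME = 0.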

Principal components analysis was used to characterize the patterns of covariation between the angular excursions of the digits. To compute the principal components (PCs), we first computed a covariance matrix $C_{ij}$, where the subscripts $i$ and $j$ refer to data from pairs of trials (of n = 100) for a particular experimental condition. The data for one trial consisted of the temporal waveforms of the 15 joint angles of the fingers and the thumb (see Fig. 1). From these data, we constructed a vector in 15-D space that varied in time. The covariance matrix was computed from the dot product between pairs of vectors representing trials $i$ and $j$, integrated over the movement time:

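$$C_{ij} = \int_{0}^{1.2} \left[\boldsymbol{\theta}_i(t) - \hat{\boldsymbol{\theta}}(0)\right] \cdot \left[\boldsymbol{\theta}_j(t) - \hat{\boldsymbol{\theta}}(0)\right] dt \tag{1}$$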

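where $\boldsymbol{\theta}_i(t)$ is the 15-D vector of joint angles on trial $i$ at normalized time $t$, $\hat{\boldsymbol{\theta}}(0)$ is the average posture (over all trials) at movement onset, and $\cdot$ denotes the dot product between the two vectors. Numerically, the elements of the covariance matrix were computed as follows: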

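$$C_{ij} = \sum_{k=1}^{120} \sum_{l=1}^{15} \left(\theta_{ilk} - \theta_{l0}\right)\left(\theta_{jlk} - \theta_{l0}\right) \tag{2}$$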

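where $\theta_{ilk}$ is the value of the $l$th joint angle on trial $i$ at the $k$th normalized time interval, the subscript $l$ refers to the joint angles (15), the subscript $k$ refers to the normalized time intervals (120), and $\theta_{l0}$ is the value of the $l$th joint angle in the average posture at movement onset, i.e., the $l$th component of $\hat{\boldsymbol{\theta}}(0)$.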
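Eq. 2 amounts to a Gram matrix of flattened trial waveforms: each trial's joint-by-time deviations from the onset posture are laid out as one long vector, and the covariance between two trials is the dot product of their vectors. A minimal NumPy sketch, assuming the resampled data are held in an array of shape (n_trials, n_times, n_joints) and that the onset posture is the mean over trials of the first time sample (function name hypothetical):

```python
import numpy as np

def trial_covariance(angles):
    """Trial-by-trial covariance of Eq. 2.

    angles: array (n_trials, n_times, n_joints), e.g. (100, 121, 15).
    C[i, j] = sum over time k and joint l of
              (theta_ilk - theta_l0) * (theta_jlk - theta_l0)."""
    theta0 = angles[:, 0, :].mean(axis=0)              # average onset posture
    x = (angles - theta0).reshape(len(angles), -1)     # one row per trial
    return x @ x.T                                     # (n_trials, n_trials)
```

Because it is a Gram matrix, `C` is symmetric and positive semidefinite, which is what allows its eigenvectors to be used in Eq. 3.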

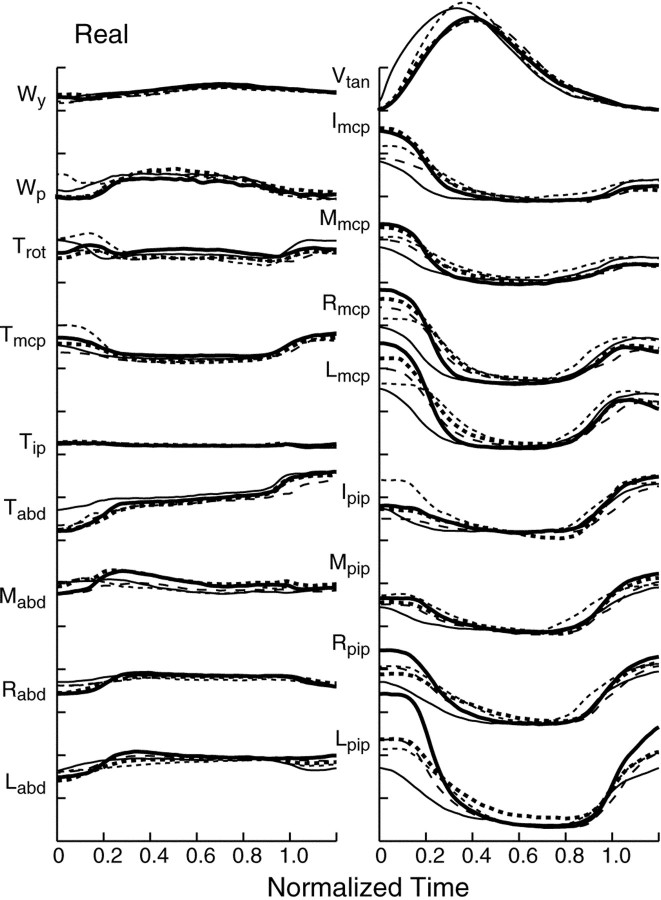

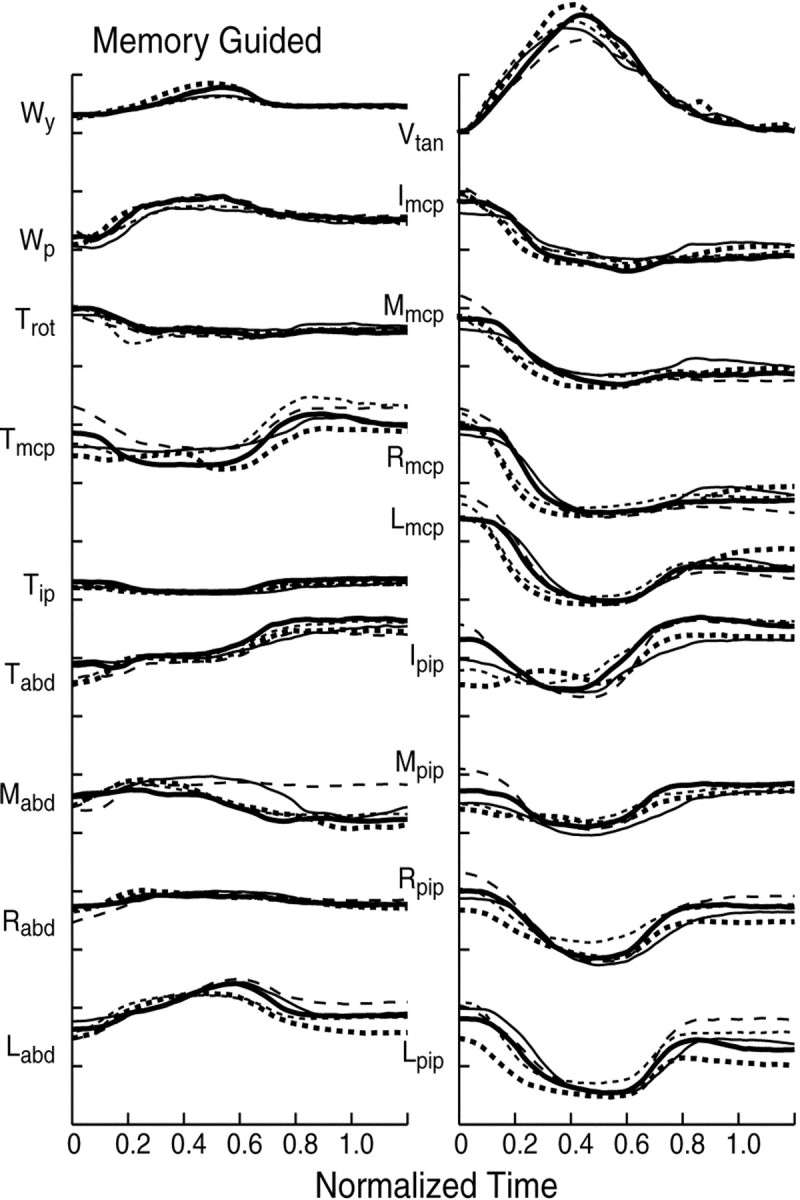

Fig. 1.

Time course of motion of the hand during memory-guided reaching to one object (experiment 1). The traces depict data from five trials for one object (stapler). From top to bottom, the traces in the left column depict the motion of the wrist [yaw (Wy) and pitch (Wp)], of the thumb [rotation (Trot), flexion at the mcp (Tmcp) and ip joints (Tip), and abduction (Tabd)], and the abduction angles between adjacent fingers: index–middle fingers (Mabd), middle–ring fingers (Rabd), and ring–little fingers (Labd). From top to bottom, the traces in the right column show the wrist tangential velocity (Vtan) and the angular excursion at the mcp joints of the index (I), middle (M), ring (R), and little (L) fingers and at the pip joints. Positive values denote flexion and abduction. At the thumb, positive values denote internal rotation. The data are for one subject (S3). Time has been normalized from the onset to the end of the movement, both defined by the wrist tangential velocity (0 and 1). Scale: 25°/division.

Principal components were computed using the eigenvectors $\mathbf{u}_n$ of the covariance matrix, ordered according to the magnitude of the eigenvalues $\lambda_n$ (Glaser and Ruchkin, 1976):

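$$\mathbf{PC}_n(t) = \frac{1}{\sqrt{\lambda_n}} \sum_{i=1}^{100} u_{in} \left[\boldsymbol{\theta}_i(t) - \hat{\boldsymbol{\theta}}(0)\right] \tag{3}$$

where $u_{in}$ is the $i$th element of $\mathbf{u}_n$.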

The pattern of joint motion for a particular trial $i$ is reconstructed as the sum of the PCs, multiplied by weighting coefficients $a_{in}$:

$$\boldsymbol{\theta}_i(t) = \hat{\boldsymbol{\theta}}(0) + \sum_{n} a_{in}\, \mathbf{PC}_n(t) \tag{4}$$

In this procedure, the first PC (PC1) represents the motion of all of the joints that accounts for most of the variance; in it each joint may move with a different temporal profile. In other words, PC1 accounts for the spatiotemporal pattern of coordination across joints that best represents the data. This is in contrast to a previous procedure in which we treated the data from each joint as a separate "trial," yielding weighting coefficients that differed across joint angles (Soechting and Flanders, 1997). It is also in contrast to the procedure used recently by Mason et al. (2001). In their analysis, the PCs in Equation 4 were constants in time, but the weighting coefficients $a_{in}$ varied with time. In such a representation, all of the joints for a particular PC are constrained to move in synchrony. The present analysis did not impose any pattern of covariation among the joints. Thus we could characterize patterns of coordination in the temporal and spatial domains.
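Equations 3 and 4 can be sketched in NumPy as follows. This is a minimal sketch, assuming the resampled angles are stored as an array of shape (n_trials, n_times, n_joints) and that each PC is scaled to unit norm by dividing by √λ_n (our assumption about normalization; the text notes that the scale of the PCs is arbitrary). Under that scaling, the weighting coefficient a_in is simply the dot product of trial i's deviation waveform with PC n, and λ_n / Σλ gives the fraction of variance each PC accounts for. Function names are hypothetical.

```python
import numpy as np

def principal_components(angles):
    """Spatiotemporal PCs from the trial-by-trial covariance (Eqs. 2-3).
    Returns PCs (n_pc, n_times, n_joints), eigenvalues (descending),
    weighting coefficients a[i, n], and the average onset posture."""
    n_trials, n_times, n_joints = angles.shape
    theta0 = angles[:, 0, :].mean(axis=0)
    x = (angles - theta0).reshape(n_trials, -1)   # deviations, one row per trial
    lam, u = np.linalg.eigh(x @ x.T)              # eigh returns ascending order
    order = np.argsort(lam)[::-1]
    lam, u = lam[order], u[:, order]
    keep = lam > 1e-12 * lam[0]                   # drop numerically null directions
    lam, u = lam[keep], u[:, keep]
    pcs = (u.T @ x) / np.sqrt(lam)[:, None]       # each PC scaled to unit norm
    a = x @ pcs.T                                 # a[i, n] = x_i . PC_n
    return pcs.reshape(-1, n_times, n_joints), lam, a, theta0

def reconstruct(pcs, a_i, theta0, n_pc):
    """Eq. 4 for one trial, truncated to the first n_pc components."""
    return theta0 + np.tensordot(a_i[:n_pc], pcs[:n_pc], axes=1)
```

Unlike a representation with time-varying weights, each PC here is a full joint-by-time waveform, so different joints can follow different temporal profiles within one component.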

We computed principal components separately for each of the three experimental conditions. However, because the principal components are ordered according to the variance that each accounts for, the principal components obtained in two experimental conditions may differ even when the patterns of coordination are the same. Principal components represent orthogonal vectors in a multidimensional space (Glaser and Ruchkin, 1976), and the axes of one set may be rotated with respect to the axes of the second set. Consider for simplicity an example in two dimensions. If the two sets PC and PC′ differ by a rotation of the axes by an amount ϕ, then the dot product $\mathbf{PC}_i \cdot \mathbf{PC}'_j$ is given by:

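$$a_{ij} = \mathbf{PC}_i \cdot \mathbf{PC}'_j = \begin{pmatrix} \cos\phi & -\sin\phi \\ \sin\phi & \cos\phi \end{pmatrix}_{ij} \tag{5}$$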

We brought the first three PCs for each experimental condition into alignment with each other. We used the PCs for experiment 1 (remembered targets) as the reference and computed the elements $a_{ij} = \mathbf{PC}_i \cdot \mathbf{PC}'_j$ of the covariance matrix for pairs of PCs in two experimental conditions. If the two sets differ only by a rotation ϕ, then $\phi = \tan^{-1}\left[(a_{21} - a_{12})/(a_{11} + a_{22})\right]$. We rotated the axes of the PCs for experiments 2 and 3 by ϕ to bring them as closely as possible into alignment with those of experiment 1.
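For the two-dimensional case of Eq. 5, the alignment angle and the back-rotation can be sketched as below. We use `np.arctan2` rather than a plain arctangent so that the quadrant of ϕ is resolved (an assumption; the text gives tan⁻¹). The sketch handles only one pair of unit-norm PCs, whereas the study aligned the first three, and it assumes the convention PC′1 = cos ϕ PC1 + sin ϕ PC2, PC′2 = −sin ϕ PC1 + cos ϕ PC2, which yields a12 = −sin ϕ and a21 = sin ϕ. Function names are hypothetical.

```python
import numpy as np

def alignment_angle(pc_ref, pc_other):
    """Rotation angle phi between two pairs of unit-norm PCs (each row a
    flattened PC), from a[i, j] = PC_i . PC'_j."""
    a = np.array([[np.dot(p, q) for q in pc_other] for p in pc_ref])
    return np.arctan2(a[1, 0] - a[0, 1], a[0, 0] + a[1, 1])

def rotate_pair(pc_other, phi):
    """Rotate the pair (PC'_1, PC'_2) by -phi, back toward the reference."""
    c, s = np.cos(phi), np.sin(phi)
    p1, p2 = pc_other
    return np.array([c * p1 - s * p2, s * p1 + c * p2])
```

If the two sets differ by a pure rotation, `rotate_pair(pc_other, alignment_angle(pc_ref, pc_other))` recovers the reference pair exactly; otherwise it gives the best-aligned pair in the least-squares sense for this 2-D case.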

Each eigenvalue corresponds to the variance accounted for by that particular principal component. We also computed the variance not accounted for at discrete time points during the reaching by reconstructing the hand motion using variable amounts of principal components.
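The variance not accounted for at a given time point can be illustrated as follows. This sketch assumes (consistent with Eq. 1) that variance is measured as squared deviation from the average onset posture, and that the PCs are unit-norm vectors in the flattened joint × time space; the function name and arguments are hypothetical, and computing the PCs themselves is not repeated here.

```python
import numpy as np

def residual_variance_fraction(x, pcs, k, time_index):
    """Fraction of variance at one normalized time sample that is not
    accounted for when each trial is rebuilt from its first k PCs.

    x:   deviations from the onset posture, (n_trials, n_times, n_joints)
    pcs: unit-norm PCs, (n_pc, n_times, n_joints)"""
    d = x.shape[1] * x.shape[2]
    flat_x = x.reshape(len(x), d)
    flat_pc = pcs[:k].reshape(-1, d)             # works for k = 0 as well
    a = flat_x @ flat_pc.T                       # weighting coefficients a_in
    recon = (a @ flat_pc).reshape(x.shape)       # Eq. 4 truncated to k PCs
    resid = x[:, time_index, :] - recon[:, time_index, :]
    total = x[:, time_index, :]
    return np.sum(resid**2) / np.sum(total**2)
```

Any orthonormal PC set can be passed in, for example the right singular vectors of the flattened deviation matrix, which coincide (up to sign) with unit-norm PCs built from the trial covariance.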

RESULTS

Hand shaping during the reach

When grasp is restricted to two digits, such as the thumb and index finger, it is well known that the digits extend to a maximum aperture larger than the object (Jeannerod, 1986, 1999). This maximum is reached about midway into the transport phase, and as the hand approaches the object, the two digits flex to grasp the object. This general pattern holds as well when an object is grasped with the participation of all of the digits. This can be appreciated in Figures 1 and 2, which show the data for all of the trials for one subject and one object (stapler) in the memory-guided experiment (Fig. 1) and when the object was physically present (Fig. 2). The general features of the movement were similar in the two conditions.

Fig. 2.

Time course of motion of the hand during reaches to a real object. The traces depict data from five movements when the object (stapler) was physically present (experiment 3). Data are from the same subject (S3) and are shown in the same format as in Figure 1.

The motion of the wrist followed a bell-shaped velocity profile (Vtan) (Figs. 1, 2, top trace in right column). Early in the reach, there was a gradual extension of the mcp and pip joints at all fingers (Figs. 1, 2, other traces in right column). This was generally coupled to an abduction of the thumb and fingers (Figs. 1, 2, traces in left column). Motion of the other degrees of freedom at the thumb was not as well defined in this example. At ∼60% of normalized movement time in Figure 1 (and at ∼80% in Fig. 2), motion of the joints reversed to flexion and adduction of the digits as the hand approached the object. The pattern of motion at each of the joints was highly repeatable from trial to trial. In these examples, the largest variability occurred at movement onset. Furthermore, the motion at all mcp joints, as well as at all pip joints, followed a similar time course but with different amplitudes. This temporal profile, which was typical for objects in all three experimental conditions, is similar to that described previously (Santello and Soechting, 1998; Santello et al., 1998).

There were some quantitative differences in peak wrist velocity and in the time to peak wrist velocity among the three experimental conditions. On average, peak wrist velocity occurred at ∼35% of the movement time in the memory-guided and virtual target conditions. When subjects grasped real objects, peak wrist velocity tended to occur earlier in the movement (at 31%; p < 0.01). Experimental condition also affected the amplitude of peak wrist velocity: reaches to real objects had a lower peak velocity (1.13 m/sec) than reaches to either remembered or virtual objects (1.18 and 1.25 m/sec, respectively; p < 0.01). Accordingly, movement time (which averaged from ∼750 to 1000 msec in different subjects) was ∼10% longer when subjects actually grasped the object.

There were also qualitative differences in the hand kinematics between experimental conditions. After the gradual opening and closure of the hand, a static hand posture was attained later in the reach when subjects grasped a real object than when they reached to a remembered object. Specifically, hand closure occurred at the very end of the transport phase of the hand when grasping a real object but occurred earlier in the other two experimental conditions. These features were found in three subjects, whereas in the fourth subject (S2) they were difficult to assess because of large intertrial variability in the time course of finger motion. Subsequent analysis confirmed the existence of subtle between-conditions differences in hand shaping over the final stages of the movement (see below).

Intertrial variability in the time courses of joint rotation (for a given object) was similar in the three experimental conditions. This was also the case for the variability in the final posture of the hand. The average SD in the joint angles at the end of the movement was 5.3°, with a range from 2.2° for Tip to 9.3° for Lpip. Experimental condition had no statistically significant effect (p > 0.05) on the variability of any of the joint angles at the end of the movement.

The static hand postures for each object showed a high degree of correlation among the three experimental conditions. This is demonstrated in Table 2, where we report the pairwise coefficients of determination for each of the joint angles. The r2 values were typically above 0.5. However, the joint angles varied over a smaller range for the set of objects in the memory-guided and virtual object conditions compared with when subjects grasped the actual object. Thus, the slopes of the regression lines were consistently less than unity, when the values for the memory-guided and virtual conditions were regressed against those obtained with the real object.

Table 2.

Correlations between final postures

| Joint angle | Memory vs. real: slope | Memory vs. real: r² | Memory vs. virtual: slope | Memory vs. virtual: r² | Virtual vs. real: slope | Virtual vs. real: r² |
|---|---|---|---|---|---|---|
| Trot | 0.716 | 0.511 | 0.775 | 0.698 | 0.801 | 0.559 |
| Tmcp | 0.267 | 0.218 | 1.022 | 0.821 | 0.284 | 0.079 |
| Tip | 0.312 | 0.262 | 0.682 | 0.615 | 0.422 | 0.176 |
| Tabd | 0.523 | 0.534 | 0.734 | 0.785 | 0.610 | 0.522 |
| Imcp | 0.955 | 0.656 | 0.884 | 0.855 | 0.994 | 0.690 |
| Ipip | 0.856 | 0.736 | 1.046 | 0.889 | 0.788 | 0.762 |
| Mmcp | 0.966 | 0.690 | 0.954 | 0.887 | 0.924 | 0.652 |
| Mpip | 0.742 | 0.672 | 1.049 | 0.862 | 0.660 | 0.703 |
| Mabd | 0.842 | 0.479 | 0.889 | 0.847 | 0.854 | 0.486 |
| Rmcp | 0.845 | 0.602 | 0.931 | 0.858 | 0.857 | 0.612 |
| Rpip | 0.803 | 0.689 | 1.031 | 0.863 | 0.739 | 0.720 |
| Rabd | 0.755 | 0.738 | 0.920 | 0.885 | 0.774 | 0.784 |
| Lmcp | 0.709 | 0.522 | 0.929 | 0.779 | 0.664 | 0.497 |
| Lpip | 0.826 | 0.649 | 0.901 | 0.836 | 0.883 | 0.704 |
| Labd | 0.770 | 0.649 | 1.121 | 0.856 | 0.667 | 0.673 |
| Wp | 0.683 | 0.768 | 0.857 | 0.881 | 0.759 | 0.781 |
| Wy | 0.833 | 0.758 | 0.902 | 0.902 | 0.897 | 0.790 |

The slope and the coefficient of determination (r²) were computed for the relation between the joint angles at the end of the movement for each object for pairs of experimental conditions. The values reported are averages for the four subjects. The r² values were significant (p < 0.05) for all joints in the memory versus virtual comparison and for all but Tmcp and Tip in the other two comparisons.
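The per-joint entries of Table 2 can be sketched as an ordinary least-squares fit across objects. This sketch assumes one final joint angle per object per condition (e.g., the mean over the five trials) and regresses the memory-guided or virtual value (`other`) against the real-object value (`real`); averaging across the four subjects, as in the table, would be applied to the outputs. The function name is hypothetical.

```python
import numpy as np

def slope_and_r2(real, other):
    """Least-squares slope and coefficient of determination for the final
    joint angle in one condition (other) regressed on another (real),
    one value per object."""
    slope, _intercept = np.polyfit(real, other, 1)
    r = np.corrcoef(real, other)[0, 1]
    return slope, r**2
```

A slope below 1 with a high r² corresponds to the pattern described for the fingers: the postures are well correlated across conditions but span a smaller range without the real object.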

Gradual discrimination of hand posture during the reach

When subjects reach to grasp objects with different shapes, the shape of the hand evolves gradually to conform to the contours of the object (Santello and Soechting, 1998). However, when they reach to grasp remembered and virtual objects, there is no mechanical interaction with the object at the end of the reach. Furthermore, in the remembered condition, vision of the object is lacking. Accordingly, as mentioned in the introductory remarks, one might expect sensory guidance based on visual and tactile cues to affect the evolution of the hand's posture. One way to test this supposition is to determine the extent to which hand shape can predict the object to be grasped at different times during the movement. This was assessed by using discriminant analysis to compute the information transmitted by hand shape, expressed as the SME.

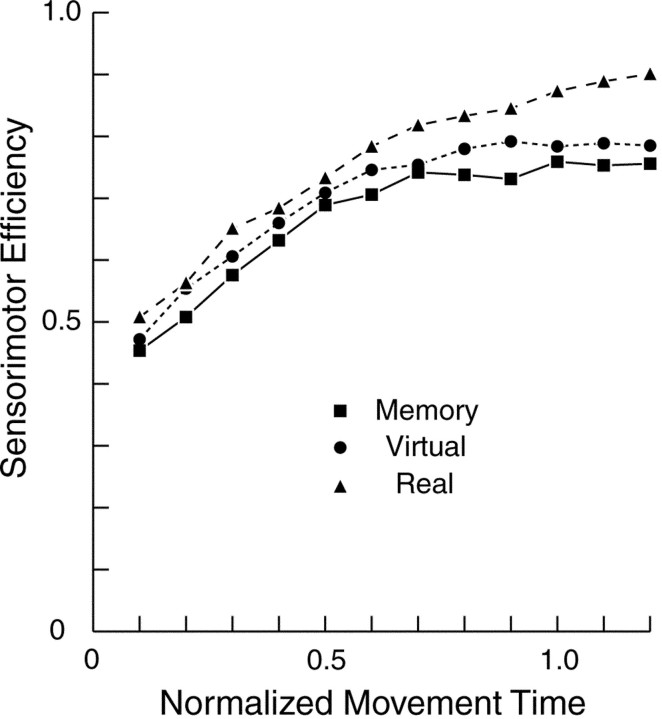

The information transmitted increased linearly up to ∼60% of movement time, after which it leveled off in the memory-guided and virtual conditions but continued to increase, at a slower rate, when subjects actually grasped the object (Fig. 3). At each point in time, SME was greatest in the real condition and smallest in the memory condition. A statistical analysis showed a significant effect of experimental condition (F(2,108) = 18.0; p < 0.01). A post hoc analysis (with Bonferroni adjustment) showed that SME was significantly larger for the real condition than for the other two conditions, which did not differ from each other. Qualitatively, the results appeared similar for the three experimental conditions during the first 60% of the movement, with the real condition diverging thereafter. Regression analysis confirmed this impression. A linear regression of SME against time in the interval [0.1, 0.6] gave slopes (0.54 on average) and intercepts (0.43) that did not differ significantly among the three conditions. In the later interval [0.7, 1.2], the slope for the real condition (0.17) differed significantly from zero (p < 0.01), whereas the slopes for the other two conditions did not. In summary, these results suggest that vision did not affect the evolution of the hand's kinematics. The biomechanical interactions between the fingers and the solid object and/or the tactile feedback it provided became important only at the end of the reach, as the hand made contact with the object.

Fig. 3.

Information transmitted by hand shape in the three experimental conditions. The information transmitted, defined as the sensorimotor efficiency (SME), was computed at intervals of 10% of the normalized movement time. The data shown are averages from all subjects.

Covariation of joint rotations during reach and grasping

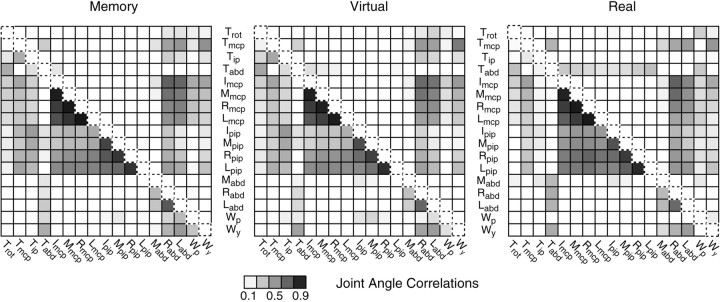

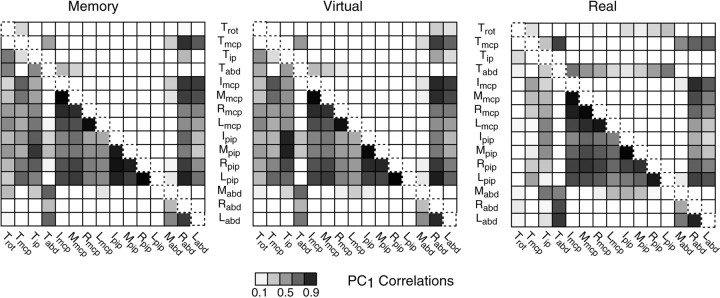

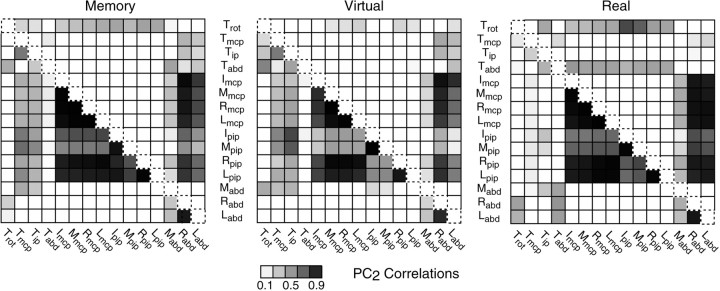

As is the case for the static posture at the end of the transport phase (Santello et al., 1998), there is a high degree of covariation among the rotations of the mcp and pip joints of the fingers. This is apparent in the examples shown in Figures 1 and 2. To quantify the extent of covariation among joint rotations and the influence of experimental condition on this phenomenon, we computed pairwise correlation coefficients on a trial-by-trial basis. The average results for all subjects (4) and objects (20) are shown in Figure 4. The extent of the correlation is shown in gray scale, with positive values shown below the diagonal and negative values shown above the diagonal. It is apparent that the pattern of coordination among joint rotations was similar for all three experimental conditions. Correlation coefficients for pairs of mcp joints and for pairs of pip joints were large and positive, with the strongest pattern of covariation being found for adjacent digits (squares just below the diagonal). Weaker positive correlations were found between the mcp and pip joints, whereas abduction (especially of the ring and little finger) was negatively correlated with angular excursion at both mcp and pip joints. Weak or no correlations were found between the remaining joints.
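The trial-by-trial correlation analysis can be sketched as follows: a correlation matrix is computed between joint-angle time courses within each trial and then averaged element-wise across trials. Averaging r values directly (rather than, for example, after a Fisher z transform) is our assumption, as is the use of `np.nanmean` to skip joints that did not move on a given trial (their correlations are undefined). The function name is hypothetical.

```python
import numpy as np

def mean_joint_correlations(trials):
    """Average within-trial correlation matrix between joint angles.

    trials: array (n_trials, n_times, n_joints); each trial's columns
    are the time courses of the individual joint angles."""
    mats = [np.corrcoef(tr, rowvar=False) for tr in trials]   # (n_joints, n_joints) each
    return np.nanmean(np.stack(mats), axis=0)                 # element-wise average
```

With `rowvar=False`, `np.corrcoef` treats each column (joint) as a variable and each row (time sample) as an observation, which matches correlating time courses within a trial.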

Fig. 4.

Correlation coefficients of the relations between joint angles of the hand. The gray scale in each square denotes the correlation coefficient (r) for the relation between the angles indicated in the respective column and row. Correlation coefficients were computed from individual trials over the normalized movement time (0–1.2). Entries below the diagonal denote positive r values, whereas entries above the diagonal denote negative correlations. The values shown are averages of all trials from all subjects.

Principal components of time course of joint rotations

The above results indicate that the motion of the hand during the reaching movement is characterized by consistent, joint-specific covariations in angular excursions. The presence of these covariation patterns indicates that not all the finger joints were controlled independently, resulting in a reduction in the number of mechanical degrees of freedom. The question remains: to what extent is the time course of the overall motion of the hand consistent from object to object and from experimental condition to experimental condition? We used a principal components analysis (see Materials and Methods) to address this question and to characterize the kinematic features that were common to all objects and experimental conditions.

We found that the first two principal components could account for a large proportion of the variance, i.e., 75, 77, and 74% for the remembered, virtual, and real grasping, respectively. This implies that within each grasping condition there was a high degree of similarity in the time course of finger motion when reaching to grasp objects with different sizes and shapes (Table 1). Furthermore, the waveforms of the first two principal components were remarkably similar across experimental conditions and across subjects. Representative results for one subject (S1) are shown in Figures 5, 6, and 7, and the waveforms for the first principal component are shown in Figure 8 for the one subject whose pattern differed the most from the others.

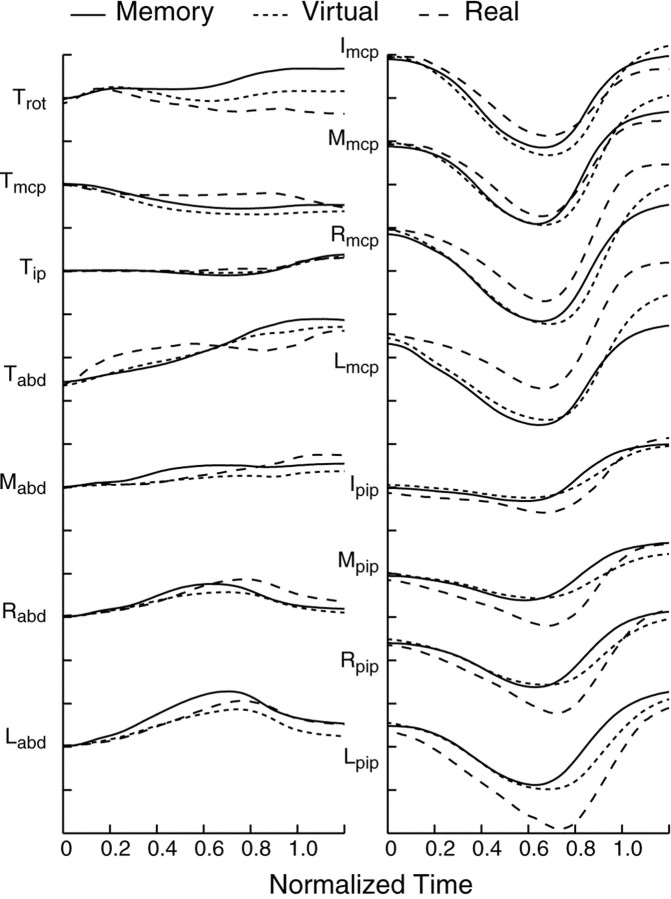

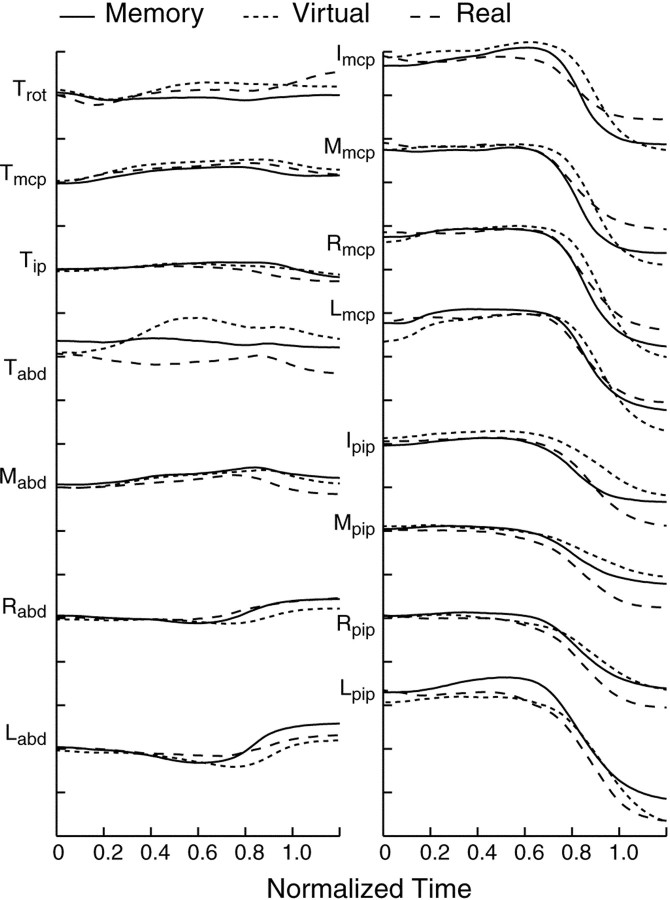

Fig. 5.

Time course of joint rotations: first principal component. The first principal component is shown for one subject (S1) and for each experimental condition. The scale is arbitrary, but it is the same for all joint angles. The layout is similar to that used in Figure 1.

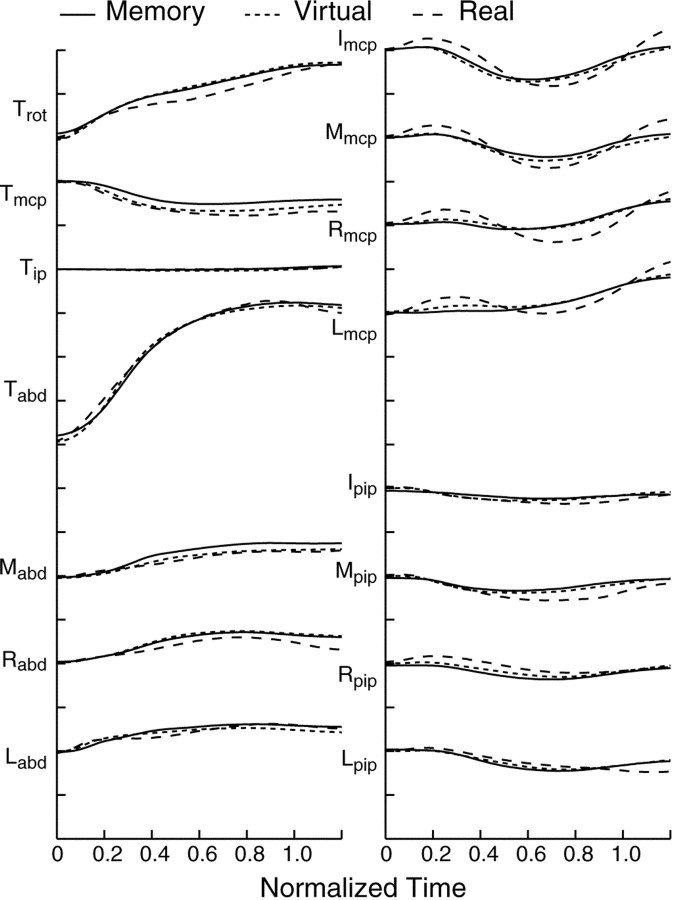

Fig. 6.

Time course of joint rotations: second principal component. Data are from the same subject (S1) and are shown in the same format as in Figure 5 (see also Fig. 1).

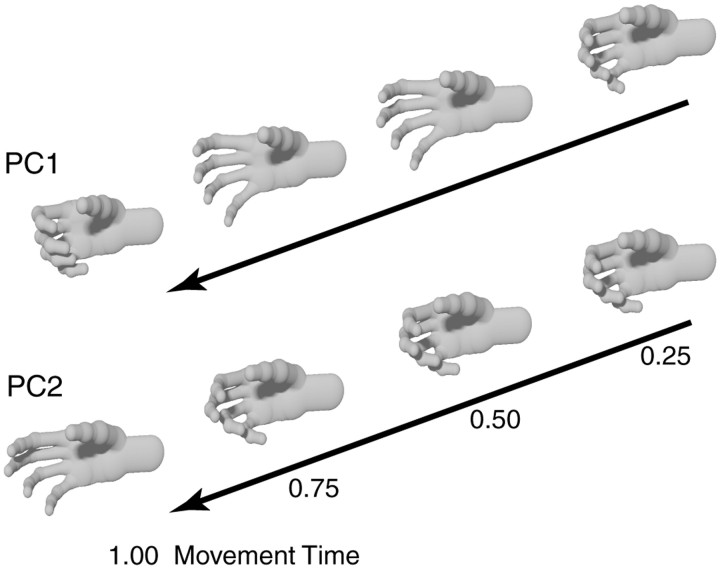

Fig. 7.

Reconstruction of hand postures during the movement. In the top row, hand postures were derived by adding the first principal component (with a weighting factor of 15) to the average posture at movement onset. Postures in the second row were obtained by adding the second principal component (with a weighting factor of 10) to the starting posture. The data are from subject S1 for memory-guided movements. The weighting coefficients for individual trials for this subject ranged from −4.6 to 19.1 for PC1 and from −12.7 to 11.2 for PC2.

Fig. 8.

Time course of joint rotations: first principal component. The data are results from the one subject (S4) whose first principal component differed the most from the others. Note that the excursion in Tabd was much larger for this subject, but that the pattern of covariation of motion among the pip and mcp joints in this instance was similar to that depicted in Figure 5.

We begin by considering the first principal component (Figs. 5, 7). On average, the first PC accounted for 52, 50, and 40% of the variance in the memory-guided, virtual, and real conditions. The main kinematic features of the examples shown in Figures 1 and 2 were well captured by the first PC. Specifically, all the mcp and pip joints tended to extend and flex together during the movement, simultaneously reaching a maximum excursion. At the same time, the digits were gradually abducted and later adducted toward the end of the reach. In contrast, abduction of the thumb tended to be monotonic, and there was little motion at the thumb's mcp and ip joints. This general pattern of coordinated motion of the hand can be appreciated in Figure 7 (top row), where we have reconstructed hand posture at different epochs of the movement, adding the first PC to the average posture at movement onset. The reconstruction shows snapshots of the movement that would occur if only the joint synergy represented by PC1 were used.

Overall, this pattern for PC1 was found for all grasping conditions. The biggest effect of experimental condition was on the time of maximum finger extension, which tended to occur later when subjects grasped real objects. For the subject shown in Figure 5, the maximum pip angular excursion (i.e., finger extension) was attained at ∼70% of the movement for real objects versus 60% when reaching to remembered and virtual objects. This temporal shift, however, was not found when comparing mcp angular excursion across conditions. Furthermore, for subject 2, no clear temporal shift was found.

Another between-condition difference was the amplitude of joint rotation at the mcp and pip joints. In general, the memory-guided and virtual conditions were characterized by a similar extent of joint rotation, this being different from the joint rotation amplitude associated with reaching to grasp real objects. For the subject shown in Figure 5, reaching to grasp real objects was characterized by a larger amplitude of mcp joint rotation, and smaller amplitude of pip joint rotation, than the other two conditions. Subtler differences were found in the temporal profiles of thumb joints and abduction angles.

The joint rotations for PC1 for the remaining subject (S4, shown in Fig. 8) appeared to be different. In particular, there was a large monotonic rotation and abduction of the thumb (Trot and Tabd), whereas the modulation at the mcp and pip joints of the fingers was much smaller than it was for the other three subjects (compare with Fig. 5). In part, this difference may have resulted because this subject began the movement with a different hand posture than did the other three subjects. In particular, the initial value of thumb abduction (Tabd) was ∼20° smaller than it was for the other subjects. The percentage variance accounted for by this PC (44–52%) was comparable to the values obtained for the other three subjects. Although the amplitudes are small, one can still discern the same general pattern (initial extension, followed by flexion) in the motions at the mcp and pip joints of the fingers. Furthermore, the pattern of covariation in the motion of the pip and mcp joints also held true for this subject.

The pattern of motion of the second PC (PC2), shown in Figure 6 and in the bottom row of Figure 7, was dramatically different from that of PC1. Posture was relatively static until ∼70% of the movement time, after which the digits extended simultaneously. As for PC1, the pattern was similar across the three experimental conditions, the major difference being that finger extension terminated later when subjects actually grasped the object. The variance accounted for by PC2 was 23, 19, and 27% for the memory-guided, virtual, and real conditions, respectively. As for PC1, motions at all mcp and all pip joints had similar time courses. The results shown in Figures 6 and 7 (for PC2) were representative of all four subjects.

The patterns of motion of the first two PCs lead to a simple interpretation. The weighting coefficients a_i1 (Eq. 4) for the first PC were mostly positive, whereas the coefficients a_i2 for the second PC could be positive or negative. Although the pattern of covariation among the joints was similar for both PCs, the second PC began to contribute to hand shape only in the latter stages of the movement. Accordingly, the maximum finger span, achieved at the time of maximum extension at the pip and mcp joints, was determined principally by the magnitude of the first PC. In contrast, the finger span at the end of the movement was determined by the weighted sum of PC1 and PC2, with different weighting coefficients for different objects. Thus, there was no unique correspondence between the maximum finger span and the final static posture.
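Eq. 4 is not reproduced in this section. The sketch below therefore assumes only that a joint's waveform for a given object is reconstructed as a weighted sum of PC waveforms, with weights a_i1 and a_i2. The two PC shapes are hypothetical curves loosely modeled on the descriptions above (PC1 extends and then reverses; PC2 is static until ∼70% of movement time). They are not the measured components. The point is only the logic of the argument: two objects with the same a_i1 but different a_i2 share the value at the time of maximum extension but end in different final postures.

```python
import numpy as np

t = np.linspace(0.0, 1.2, 121)  # normalized movement time, 0-120%

# Hypothetical PC waveforms for one joint (arbitrary units), NOT the measured PCs.
pc1 = np.sin(np.pi * t / 1.1)             # extension peaking at t = 0.55, then reversal
pc2 = np.clip((t - 0.7) / 0.3, 0.0, 1.0)  # flat until t = 0.7, then changes monotonically

def reconstruct(a1, a2):
    """Weighted sum of the two PC waveforms for one object (assumed form)."""
    return a1 * pc1 + a2 * pc2

i_peak = int(np.argmax(pc1))  # sample of maximum extension
for a2 in (0.5, -0.5):
    y = reconstruct(1.0, a2)
    print(f"a2 = {a2:+.1f}: value at peak {y[i_peak]:.2f}, final value {y[-1]:.2f}")
```

Both hypothetical objects print the same value at the peak, because pc2 is still zero there. Their final values differ by exactly the difference in a2.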

The pattern of covariation among the joint rotations of the first two principal components was quantified by computing correlation coefficients, in the same way as for the actual joint rotations (Fig. 4). The results of this analysis are shown in Figures 9 and 10. For PC1 and PC2, the covariation patterns were similar to those described for the raw data (Fig. 4). However, the covariation matrices showed subtle differences between conditions. Specifically, the correlations between the joints of the thumb (thumb rotation, mcp and ip) and the remaining degrees of freedom were stronger for reaches to remembered and virtual objects than for reaches to real objects. This feature is most evident in the covariation matrices for PC2 (Fig. 10).
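The correlation analysis of Figures 4, 9 and 10 amounts to computing Pearson's r between every pair of joint-angle waveforms sampled over normalized time 0–1.2. Below is a minimal NumPy sketch using hypothetical waveforms. The joint names and the helper functions are illustrative only and are not taken from the study's code.

```python
import numpy as np

def joint_correlation_matrix(waveforms):
    """Pairwise Pearson r between joint-angle waveforms.

    waveforms: (n_joints, n_samples) array; each row is one joint's angle
    sampled over normalized movement time 0-1.2.
    """
    return np.corrcoef(np.asarray(waveforms, dtype=float))

def average_over_subjects(matrices):
    """Element-wise mean of per-subject correlation matrices."""
    return np.mean(np.stack(matrices), axis=0)

t = np.linspace(0.0, 1.2, 121)
mcp = np.sin(np.pi * t / 1.1)   # hypothetical mcp waveform
pip = 0.6 * mcp + 5.0           # hypothetical pip waveform that co-varies linearly with mcp
thumb = t ** 2                  # hypothetical thumb waveform
r = joint_correlation_matrix([mcp, pip, thumb])
```

Averaging r values directly, as `average_over_subjects` does, is the simplest choice. Averaging Fisher z-transformed values is a common alternative. This section does not state which was used for the figures.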

Fig. 9.

Correlation coefficients of the relations between the joint angle waveforms of the first principal component. The plots were constructed in the same manner as those in Figure 4, by computing the pairwise correlations (r) between joint angles over the interval 0–120% of normalized movement time. Results for the four subjects were averaged. The principal component axes from the virtual and real conditions were rotated to best align them with those for memory-guided movements.

Fig. 10.

Correlation coefficients of the relations between joint angle waveforms of the second principal component. Data are presented in the same format as in Figures 4 and 9.
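The legend of Figure 9 states that the principal component axes from the virtual and real conditions were rotated to best align them with those for memory-guided movements. The rotation procedure is not described in this section. One standard way to do it is an orthogonal Procrustes rotation, shown below as an assumption rather than as the method actually used. The example axes are synthetic.

```python
import numpy as np

def procrustes_rotation(reference, other):
    """Orthogonal R minimizing ||other @ R - reference|| (Frobenius).

    reference, other: (n_features, k) arrays whose columns are PC axes,
    e.g. memory-guided (reference) and virtual or real (other).
    """
    u, _, vt = np.linalg.svd(other.T @ reference)
    return u @ vt

rng = np.random.default_rng(0)
# Hypothetical reference axes: 2 orthonormal columns in a 30-D space
ref, _ = np.linalg.qr(rng.normal(size=(30, 2)))
theta = 0.3
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta),  np.cos(theta)]])
other = ref @ rot.T                  # same subspace, axes rotated
R = procrustes_rotation(ref, other)
aligned = other @ R                  # recovers the reference axes
```

Because R is only constrained to be orthogonal, it may include a reflection. This is harmless here, since the sign of a principal component is arbitrary.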

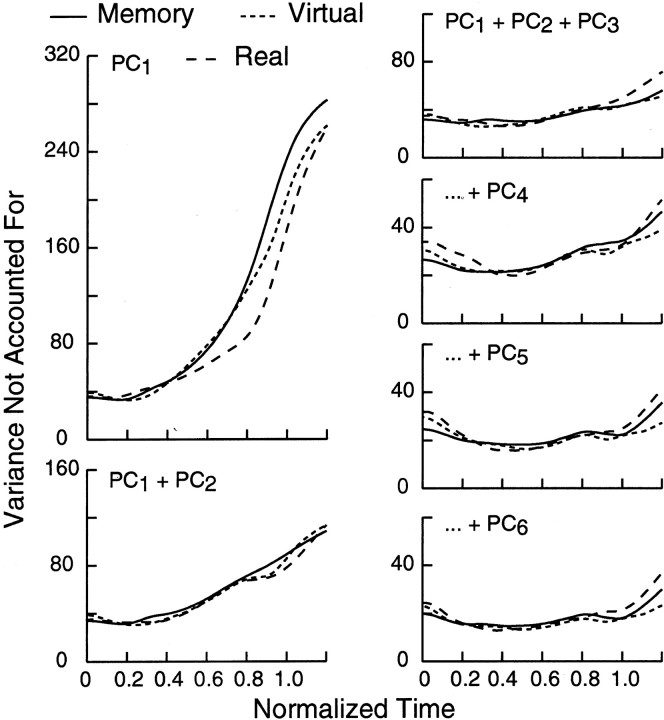

Variance not accounted for by principal components during the reach and grasp movement

Our final analysis was devoted to determining how much, and when, each of the PCs contributed to the variance of the overall motion of the hand and fingers. To this end, we reconstructed the data for each trial using one to six principal components and computed the variance not accounted for by this reconstruction as a function of time. The results of this analysis are shown in Figure 11. We begin with the results for the first principal component. The variance not accounted for (VNAC) was relatively constant until ∼50% of the movement time and increased significantly thereafter. This increase was delayed when subjects actually grasped the object (real), but the goodness of fit provided by the first PC was comparable for the other two experimental conditions. The high VNAC during the second half of the movement was greatly reduced when PC2 was added to PC1, although a slower increase in VNAC remained in the second half of the movement. The results of this analysis confirm the conclusions already reached: (1) the closing phase of the grasp began later when subjects grasped real objects, and (2) the second PC began to influence the hand movement only after 50% of the transport phase had elapsed.
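The reconstruction underlying Figure 11 can be sketched as follows, under assumptions that this section does not spell out:

- Each trial's joint-angle waveforms (joints × normalized time samples) are concatenated into a single observation, so each PC is a spatiotemporal pattern.
- The mean across trials is removed, and PCs are obtained by singular value decomposition.
- VNAC at each time sample is the residual variance across trials, summed over joints.

The function name and the synthetic data are hypothetical.

```python
import numpy as np

def vnac_over_time(data, k):
    """Variance not accounted for at each time sample by the first k PCs.

    data: array (n_trials, n_joints, n_times). Each trial's full set of
    joint-angle waveforms is flattened into one observation.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    n_trials, n_joints, n_times = data.shape
    x = data.reshape(n_trials, n_joints * n_times)
    x = x - x.mean(axis=0)                    # remove the mean waveform
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    x_hat = (u[:, :k] * s[:k]) @ vt[:k]       # rank-k reconstruction
    resid = (x - x_hat).reshape(n_trials, n_joints, n_times)
    # Residual variance across trials, summed over joints, per time sample
    return (resid ** 2).sum(axis=(0, 1)) / (n_trials - 1)

# Synthetic example: 40 trials, 3 joints, two hypothetical patterns plus noise
rng = np.random.default_rng(2)
t = np.linspace(0.0, 1.2, 121)
p1 = np.outer([1.0, 0.8, 0.6], np.sin(np.pi * t / 1.1))
p2 = np.outer([0.5, -0.4, 0.3], np.clip((t - 0.7) / 0.3, 0.0, 1.0))
a = rng.normal(size=(40, 2))
data = (a[:, 0, None, None] * p1 + a[:, 1, None, None] * p2
        + 0.01 * rng.normal(size=(40, 3, t.size)))
v1 = vnac_over_time(data, 1)
v2 = vnac_over_time(data, 2)
```

Because the left singular vectors are orthonormal, the residual at every time sample can only decrease as k grows. This matches the ordering of the curves in Figure 11.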

Fig. 11.

Variance not accounted for by different sets of principal components. The variance not accounted for by different combinations of principal components was computed at each point of the normalized movement interval of 0–1.2. Results for PC1 alone and for the sum of PC1 and PC2 are shown in the left column. In the right column, from top to bottom, results are shown for the sum of the first three, four, five, and six PCs, respectively. Data are averages from all subjects. Note the change in scale in the last three panels. VNAC is reported in arbitrary units. The first three PCs accounted for 84% of the variance, on average. Thus, a VNAC equal to 40 corresponds to ∼15% of the variance.

When higher order PCs were added to PC1 + PC2, VNAC was further reduced, remaining at a relatively stable level throughout all but the last stages of the reach and grasp. VNAC was comparable for all three experimental conditions, except in the terminal phase. As the hand was about to stop (i.e., at normalized time 1.0), VNAC started to increase rapidly for the memory-guided and real conditions, but much less so when virtual objects were presented. A subject-by-subject analysis showed that, for the memory-guided condition, this increase at the end of the movement was contributed by one subject (S2), who exhibited the most intertrial variability in the time course of her finger movements. By contrast, VNAC increased abruptly after t = 1.0 in all four subjects when they actually made contact with the real object. One possible interpretation of this finding is as follows. Once contact was made with objects of varying sizes and shapes, finger movements may have become more individuated (perhaps as a consequence of passive biomechanical interactions between the fingers and the object), so that a greater number of principal components was required to represent hand posture.

DISCUSSION

The experiments described in this paper had two interrelated aims. First, we wanted to assess the extent to which the evolution of the hand's posture was influenced by sensory information provided by visual and tactile cues about the object to be grasped. Surprisingly, vision had no measurable influence on hand posture. Not surprisingly, however, tactile cues (and/or mechanical interactions) did influence hand posture as the hand contacted the object.

The second aim was to uncover patterns of coordination among the joints of the fingers and thumb in the time domain. Using principal components analysis, we identified two such patterns. In both, the pattern of coordination among the fingers was similar, with simultaneous extension (or flexion) at the mcp and pip joints of the fingers, along with finger abduction (or adduction). The largest difference between these two principal components was in the time domain. PC1 described a pattern of motion in which the fingers first extended and then reversed to flexion in the later stages of the transport phase. By contrast, PC2 showed little modulation until ∼70% of the transport phase had elapsed; thereafter, all of the fingers extended (or flexed) together. Accordingly, PC1 had the greatest influence on the maximum finger span, achieved at 50–60% of the transport phase, and a smaller influence on the final posture. PC2, in contrast, had its largest influence on the final posture and only a negligible effect on the maximum finger span. Two PCs were required, even though they showed similar patterns of joint covariation, because the posture at maximum aperture was uncorrelated (on an object-to-object basis) with the final posture; otherwise, one PC would have been able to account for the overall motion. If one accepts that the PCs reflect neural control mechanisms, then this finding implies that maximum finger span and final hand posture are controlled separately.
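The argument for needing two PCs can be illustrated with a toy computation. It uses synthetic values, not a reanalysis of the data. Suppose that, across objects, the final posture were a fixed multiple of the posture at maximum aperture. The per-object values would then lie on a line, and a single component would capture all of the variance. If instead the two are uncorrelated, a second component is needed. The function name and all values below are hypothetical.

```python
import numpy as np

def first_pc_fraction(features):
    """Fraction of total variance captured by the first PC of features."""
    x = features - features.mean(axis=0)
    s = np.linalg.svd(x, compute_uv=False)
    return s[0] ** 2 / np.sum(s ** 2)

rng = np.random.default_rng(1)
max_span = rng.normal(size=50)          # hypothetical per-object values
coupled = np.column_stack([max_span, 0.8 * max_span])
independent = np.column_stack([max_span, rng.normal(size=50)])
print(first_pc_fraction(coupled))       # 1.0 up to rounding
print(first_pc_fraction(independent))   # well below 1
```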

Technical considerations

Before discussing the results in more detail, we first take up some technical issues. Although the presentation of objects was randomized, the experiments were always conducted in the same order, beginning with memory-guided movements and ending with the subjects actually grasping the objects. We did not randomize the order of the experiments because we wanted to prevent experience gained in seeing the objects in the “virtual” condition, and in handling them in the “real” condition, from guiding the motion in the “memory” condition.

Nevertheless, it is likely that some trial-to-trial learning occurred, and it may have affected our results. The information transmitted by hand shape was consistently lowest for the memory-guided condition and consistently highest for the real condition (Fig. 3), i.e., following the order of the experiments. Although the difference between the memory-guided and virtual conditions never reached statistical significance, it may reflect a small effect of experience with the objects and the reaching movements. However, the serial ordering of the experiments had no effect on the variability of hand postures, either during the movement or at its end.
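The computation behind "information transmitted" (Fig. 3; see Sakitt, 1980) is not described in this part of the paper. A common estimate is the mutual information, in bits, between the object presented and the object identified from hand shape. It is computed from a count confusion matrix. The sketch below assumes that formulation. The function name and the example matrices are hypothetical.

```python
from math import log2

def information_transmitted(confusion):
    """Mutual information (bits) between rows (objects presented) and
    columns (objects identified) of a count confusion matrix."""
    total = sum(sum(row) for row in confusion)
    row_p = [sum(row) / total for row in confusion]
    col_p = [sum(col) / total for col in zip(*confusion)]
    bits = 0.0
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            if count:  # empty cells contribute nothing
                p = count / total
                bits += p * log2(p / (row_p[i] * col_p[j]))
    return bits

perfect = [[5 if i == j else 0 for j in range(4)] for i in range(4)]
print(information_transmitted(perfect))           # 2.0 bits for 4 objects
print(information_transmitted([[1, 1], [1, 1]]))  # 0.0 bits
```

With few trials per object, this plug-in estimate is biased upward. Comparisons between conditions with equal numbers of trials are less affected than absolute values.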

The second technical issue concerns the termination of the motion. Because the real object was held rigidly in place, contact with the object could have provided some of the braking of the proximal arm's motion. If so, this would have affected the time course of the wrist tangential velocity (Vtan) (Figs. 1, 2) and the timing of hand closure relative to Vtan. Indeed, hand flexion occurred later in the movement when subjects actually grasped the object than in the other two conditions. This change in timing could have been induced by external forces braking the wrist. Because we did not measure the time of contact of the fingers with the object, we are unable to resolve this point. However, the physical presence of the object did influence the transport phase: peak wrist velocity was lower, and movement time was longer. Braking by external forces would not produce these effects, and they are also contrary to what one would expect if learning had taken place.
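The transport-phase measures mentioned above (wrist tangential velocity, its peak, and movement time) can be sketched as follows. The sketch assumes a wrist marker sampled at a fixed interval `dt` and velocity computed by central differences. It also assumes that movement onset and offset are the first and last samples at or above 5% of peak speed. This threshold is a hypothetical criterion, since the study's own definition is not given in this section.

```python
from math import sqrt

def tangential_velocity(xyz, dt):
    """Speed of a 3-D marker, by central differences.

    xyz: sequence of (x, y, z) positions sampled every dt seconds.
    Returns one speed per interior sample (len(xyz) - 2 values).
    """
    speeds = []
    for i in range(1, len(xyz) - 1):
        vel = [(xyz[i + 1][k] - xyz[i - 1][k]) / (2.0 * dt) for k in range(3)]
        speeds.append(sqrt(sum(c * c for c in vel)))
    return speeds

def peak_and_duration(vtan, dt, frac=0.05):
    """Peak speed and movement time, with onset/offset taken as the first
    and last samples at or above frac * peak (hypothetical criterion)."""
    peak = max(vtan)
    above = [i for i, v in enumerate(vtan) if v >= frac * peak]
    return peak, (above[-1] - above[0]) * dt

# Hypothetical straight-line path moving 5 units per sample
path = [(3 * i, 4 * i, 0) for i in range(5)]
print(tangential_velocity(path, 1.0))  # [5.0, 5.0, 5.0]
```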

Sensory guidance of hand motion

We did not find any evidence that vision of the object influenced the shaping of the hand, compared with memory-guided movements. This result is surprising, because pointing movements with accuracy constraints show evidence of small corrective submovements (cf. Crossman and Goodeve, 1983; Novak et al., 2000) that are thought to be visually mediated. Furthermore, if the size of the object to be grasped is changed suddenly during the course of the reaching movement, hand kinematics are altered at short latency (Paulignan et al., 1991), just as arm movements are modified at short latency if the location of a target is shifted suddenly (Soechting and Lacquaniti, 1983; Pelisson et al., 1986).

In the present experiment, had visual information been used for corrective actions during the virtual condition, the hand would have been shaped more precisely to the object. Its shape would accordingly have transmitted more information about the object to be grasped. This expectation was not met (Fig. 3). Similarly, visually mediated corrective actions would have required a significant contribution from higher order principal components, so that the first few PCs would account for less of the variance in the virtual condition than in the memory condition. This expectation also was not met (Fig. 11).

Our conclusions are in accord with recent observations of Johansson et al. (2001), who monitored eye as well as hand movements while subjects reached to grasp an object and then transported it to another site. They found that subjects fixated the object, rather than the moving hand. More importantly, subjects generally broke fixation before the hand's contact with the object (by as much as 400 msec), redirecting their gaze to subsequent salient landmarks for the motion. In agreement with our interpretation, gaze was often directed elsewhere during the critical period as the hand approached and contacted the object. We have suggested previously that gaze direction may be used by the limb motor system to define the spatial location of the object (Soechting et al., 2001; see also Batista et al., 1999). Thus, vision may be important for defining the location of an object and for monitoring changes in its location, size, and orientation. It may be less important for deriving an error signal from the shapes of the hand and the object.

When tactile information was available, hand posture provided more information about the object to be grasped (particularly in the interval of 1.0–1.2 of movement time; see Fig. 3). PCs of order higher than six were required to account for the variance around the time of closure (Fig. 11). These findings indicate that as the hand made contact with the object, there were subtle variations in hand posture. They could have resulted from adjustments in the points of contact of the fingers and/or from a gradual molding of the entire hand to enclose the object.

Hand synergies during grasping

Two principal components accounted for a large proportion of the variance of the hand postures. Both PC1 and PC2 manifested themselves as a synergy that is qualitatively similar to the first principal component that we identified in a previous analysis of static hand posture at the end of memory-guided movements (Santello et al., 1998, their Figs. 5, 6). There are also quantitative similarities (e.g., the amplitude of the excursion at the pip and mcp joints was largest for the little finger and smallest for the index finger) and quantitative differences (e.g., in the previous results, the modulation in the mcp joints was larger than the modulation in the pip joints) between the present PC1 and PC2 and the static first principal component described previously.

For the static hand postures, we had previously identified a second synergy (PC2), comprising extension at the mcp joints and flexion at the pip joints, a motion that would result if the extended fingertips were drawn closer to the palm of the hand. We suspect that this synergy was also present during the motion and was represented by PCs of order higher than two. Those PCs generally accounted individually for <10% of the variance and were more variable from one experimental condition to another. Accordingly, we did not attempt to push the analysis further. Nevertheless, the principal components analysis used here identifies patterns of coordination simultaneously in the time and spatial domains. It therefore appears to be a useful tool for understanding the control of complex movements.

Footnotes

This work was supported by National Institutes of Health Grant NS-15018. We thank Thomas Jerde for the rendering of the hand using POV-Ray Tracer. We also thank Dr. Apostolos Georgopoulos for making available to us the system for projecting a virtual, three-dimensional image.

Correspondence should be addressed to John Soechting, Department of Neuroscience, University of Minnesota, 6-145 Jackson Hall, 321 Church Street Southeast, Minneapolis, MN 55455. E-mail: john@shaker.med.umn.edu.

M. Santello's present address: Department of Exercise Science and Physical Education, Arizona State University, P.O. Box 870404, Tempe, AZ 85287.

REFERENCES

- 1. Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257.

- 2. Chieffi S, Gentilucci M. Coordination between the transport and the grasp components during prehension movements. Exp Brain Res. 1993;94:471–477. doi: 10.1007/BF00230205.

- 3. Crossman ERFW, Goodeve PJ. Feedback control of hand-movement and Fitts' Law. Q J Exp Psychol A. 1983;35:251–278. doi: 10.1080/14640748308402133.

- 4. Desmurget M, Pélisson D, Rossetti Y, Prablanc C. From eye to hand: planning goal-directed movements. Neurosci Biobehav Rev. 1998;22:761–788. doi: 10.1016/s0149-7634(98)00004-9.

- 5. Georgopoulos AP. On reaching. Annu Rev Neurosci. 1986;9:147–170. doi: 10.1146/annurev.ne.09.030186.001051.

- 6. Glaser EM, Ruchkin DS. Principles of neurobiological signal analysis. Academic; New York: 1976.

- 7. Goodale MA, Jakobson LS, Keillor JM. Differences in the visual control of pantomimed and natural grasping movements. Neuropsychologia. 1994;32:1159–1178. doi: 10.1016/0028-3932(94)90100-7.

- 8. Hajian AZ, Howe RD. Identification of the mechanical impedance at the human finger tip. J Biomech Eng. 1997;119:109–114. doi: 10.1115/1.2796052.

- 9. Jeannerod M. The formation of finger grip during prehension. A cortically mediated visuomotor pattern. Behav Brain Res. 1986;19:99–116. doi: 10.1016/0166-4328(86)90008-2.

- 10. Jeannerod M. Visuomotor channels: their integration in goal-directed prehension. Hum Mov Sci. 1999;18:201–218.

- 11. Johansson RS, Cole KJ. Sensory-motor coordination during grasping and manipulative actions. Curr Opin Neurobiol. 1992;2:815–823. doi: 10.1016/0959-4388(92)90139-c.

- 12. Johansson RS, Westling G, Bäckström A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci. 2001;21:6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001.

- 13. Johnson RA, Wichern DW. Applied multivariate statistical analysis. Prentice Hall; Englewood Cliffs, NJ: 1992.

- 14. Mason CR, Gomez JE, Ebner TJ. Hand synergies during reach-to-grasp. J Neurophysiol. 2001;86:2896–2910. doi: 10.1152/jn.2001.86.6.2896.

- 15. Napier JR. The prehensile movements of the human hand. J Bone Joint Surg Br. 1956;38:902–913. doi: 10.1302/0301-620X.38B4.902.

- 16. Novak KE, Miller LE, Houk JC. Kinematic properties of rapid hand movements in a knob turning task. Exp Brain Res. 2000;132:419–433. doi: 10.1007/s002210000366.

- 17. Paulignan Y, Jeannerod M, MacKenzie M, Marteniuk R. Selective perturbation of visual input during prehension movements. II. The effect of changing object size. Exp Brain Res. 1991;87:407–420. doi: 10.1007/BF00231858.

- 18. Pelisson D, Prablanc C, Goodale MA, Jeannerod M. Visual control of reaching movements without vision of the limb. II. Evidence of fast unconscious processes correcting the trajectory of the hand to the final position of a double-step stimulus. Exp Brain Res. 1986;62:303–311. doi: 10.1007/BF00238849.

- 19. Prablanc C, Pelisson D, Goodale MA. Visual control of reaching movements without vision of the limb. I. Role of retinal feedback of target position in guiding the hand. Exp Brain Res. 1986;62:293–302. doi: 10.1007/BF00238848.

- 20. Sakitt B. Visual-motor efficiency (VME) and the information transmitted in visual-motor tasks. Bull Psychon Soc. 1980;16:329–332.

- 21. Santello M, Soechting JF. Matching object size by controlling finger span and hand shape. Somatosens Mot Res. 1997;14:203–212. doi: 10.1080/08990229771060.

- 22. Santello M, Soechting JF. Gradual molding of the hand to object contours. J Neurophysiol. 1998;79:1307–1320. doi: 10.1152/jn.1998.79.3.1307.

- 23. Santello M, Flanders M, Soechting JF. Postural hand synergies for tool use. J Neurosci. 1998;18:10105–10115. doi: 10.1523/JNEUROSCI.18-23-10105.1998.

- 24. Schneider W, Harris TJ, Feldberg IE, Massey JT, Georgopoulos AP, Meyer RA. System for projection of a three-dimensional, moving virtual target for studies of eye-hand coordination. J Neurosci Methods. 1995;62:135–140. doi: 10.1016/0165-0270(95)00068-2.

- 25. Soechting JF, Flanders M. Arm movements in three-dimensional space: computation, theory and observation. Exerc Sports Sci Rev. 1991;19:389–418.

- 26. Soechting JF, Flanders M. Flexibility and repeatability of finger movements during typing: analysis of multiple degrees of freedom. J Comput Neurosci. 1997;4:29–46. doi: 10.1023/a:1008812426305.

- 27. Soechting JF, Lacquaniti F. Modification of trajectory of a pointing movement in response to a change in target location. J Neurophysiol. 1983;49:548–564. doi: 10.1152/jn.1983.49.2.548.

- 28. Soechting JF, Engel KC, Flanders M. The Duncker illusion and eye-hand coordination. J Neurophysiol. 2001;85:843–854. doi: 10.1152/jn.2001.85.2.843.