Abstract

The prefrontal cortex is involved in acquiring and maintaining information about context, including the set of task instructions and/or the outcome of previous stimulus–response sequences. Most studies on context-dependent processing in the prefrontal cortex have been concerned with such executive functions, but the prefrontal cortex is also involved in motivational operations. We thus wished to determine whether primate prefrontal neurons show evidence of representing the motivational context learned by the monkey. We trained monkeys in a delayed reaction task in which an instruction cue indicated the presence or absence of reward. In random alternation with no reward, the same one of several different kinds of food and liquid rewards was delivered repeatedly in a block of ∼50 trials, so that reward information would define the motivational context. In response to an instruction cue indicating absence of reward, we found that neurons in the lateral prefrontal cortex not only predicted the absence of reward but also represented more specifically which kind of reward would be omitted in a given trial. These neurons seem to code contextual information concerning which kind of reward may be delivered in following trials. We also found prefrontal neurons that showed tonic baseline activity that may be related to monitoring such motivational context. The different types of neurons were distributed differently along the dorsoventral extent of the lateral prefrontal cortex. Such operations in the prefrontal cortex may be important for the monkey to maximize reward or to modify behavioral strategies and thus may contribute to executive control.

Keywords: context, reward, motivation, prefrontal cortex, monkey, delayed reaction task

It is well established that the lateral part of the prefrontal cortex (LPFC) plays important roles in executive control, such as planning, problem solving, and behavioral inhibition (Luria, 1980; Stuss and Benson, 1986; Grafman et al., 1995; Shallice and Burgess, 1996; Fuster, 1997). It has been proposed that such executive functions are supported by the ability of the LPFC to acquire and represent task-relevant context information, consisting of the set of task instructions and/or the outcome of previous stimulus–response sequences (Pribram, 1971; Cohen et al., 1996). The activity of LPFC neurons is context dependent; they show differential activity to identical visual cues depending on the task requirement (Niki and Watanabe, 1976; Asaad et al., 2000; Wallis et al., 2001), or they show different patterns of activity changes depending on the way cues are presented in a delayed response task (Watanabe, 1996).

Although the LPFC has been investigated mostly in relation to its executive functions (Roberts et al., 1998; Schneider et al., 2000), the LPFC is also involved in motivational operations. The orbitofrontal cortex (OFC) is considered to be more concerned with motivational operations than the LPFC (Stuss and Benson, 1986; Damasio, 1994; Fuster, 1997), but a noninvasive study has shown activations of the LPFC, as well as of the OFC, in relation to motivational operations, e.g., delivery of monetary reward (Thut et al., 1997). Neuronal activity related to reward and/or expectancy of reward is observed in the LPFC, as well as the OFC, of the monkey (Niki and Watanabe, 1979; Rosenkilde et al., 1981; Watanabe, 1996; Leon and Shadlen, 1999; Tremblay and Schultz, 1999, 2000; Hikosaka and Watanabe, 2000). Thus, given that the LPFC represents task-relevant context information, as well as information about rewards, we hypothesized that these two types of processes may be integrated in the LPFC to generate motivational context.

To test this hypothesis, we devised a paradigm in which the monkey could expect different kinds of rewards depending on the context. We trained the monkey in a delayed reaction task in which the instruction cue indicated whether a reward would be delivered rather than which response the monkey should make. In random alternation with no reward, the same one of several different kinds of rewards was repeatedly delivered in a block of ∼50 trials. In this way, the monkey would have context information concerning which reward could or could not be expected in any given trial within a block.

We wished to determine how such context information is represented in neuronal activities of the LPFC. In response to a cue presentation indicating future no reward, we found that LPFC neurons not only predicted the absence of reward but also represented more specifically which kind of reward would be omitted. These neurons seem to code contextual information concerning which reward may be delivered in subsequent trials. We also found LPFC neurons showing tonic baseline activity that may be related to monitoring of motivational context.

MATERIALS AND METHODS

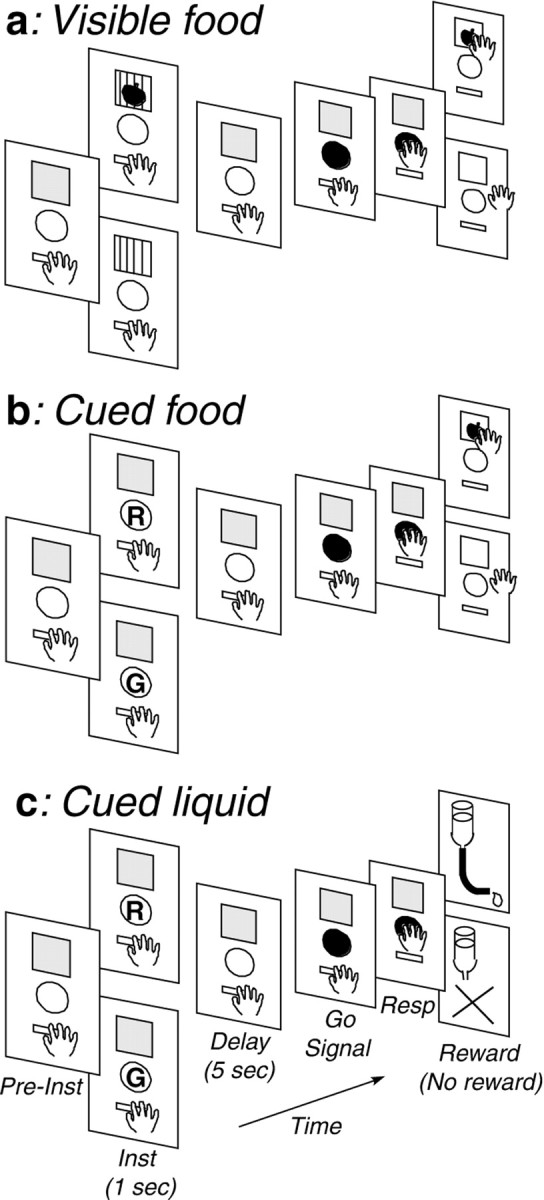

Subjects and behavioral training. Three male Japanese monkeys (Macaca fuscata) weighing 5.5–6.5 kg were used in the experiment. Monkeys were trained on a delayed reaction task. The monkey faced a panel that was placed at 33 cm from the monkey at eye level and on which a rectangular window (6 × 7.5 cm), a circular key (5 cm in diameter), and a holding lever (5 cm wide, protruding 5 cm) were vertically arranged (Fig. 1). The window contained one opaque screen and one transparent screen with thin vertical lines. The monkey first depressed the lever for 10–12 sec (Pre-Inst). Then the opaque screen was raised and revealed a food tray for 1 sec with (rewarded trial) or without (unrewarded trial) a reward behind the transparent screen as an instruction (Inst) (Fig. 1a, Visible food). After a delay of 5 sec (Delay), a white light appeared on the key as a go signal (Go Signal). When the monkey released the hold lever and pressed the key within 2 sec after the go signal, both screens were raised and the monkey either collected the food reward (rewarded trials) or went unrewarded (unrewarded trials) depending on the trial type. Rewarded and unrewarded trials alternated pseudorandomly at a ratio of 3:2. The monkey had to press the key even on unrewarded trials to advance to the next trial. The monkey was also trained in other task situations in which a color instruction (red or green) of 1 sec duration on the key indicated whether a reward would be delivered (Fig. 1b, Cued food; c, Cued liquid). In the cued food task, depending on the instruction, food reward could be collected (rewarded trials) or not (unrewarded trials) behind the screens at the end of the trial. In the liquid reward task, the window was kept closed throughout the trial, and a drop of liquid was delivered through a spout close to the monkey's mouth (rewarded trials) or not (unrewarded trials). Pieces (∼0.5 gm) of raisin, sweet potato, cabbage, or apple were used as food rewards.
Drops (0.3 ml) of water, sweet isotonic beverage, orange juice, or grape juice were used as liquid rewards. The same reward was used for a block of ∼50 trials, so that the used reward would define the motivational context for the current block of trials. The monkey was not required to perform any differential operant action related to differences between rewards. On food reward tasks, both windows were closed when the monkey returned its hand to the holding lever after the key press. The trial was aborted when the monkey released the hold lever before the go signal. From time to time, the meaning of the color cue (red or green) was reversed after a block of ∼100–200 trials in the cued food or cued liquid task. Monkeys were also trained on a right–left delayed response task, and part of the results obtained in this task was presented previously (Watanabe, 1996). The task was controlled by a personal computer (PC9801FA; NEC, Tokyo, Japan). No attempt was made to restrict or control the monkey's eye movements. On weekdays, the monkeys received their daily liquid requirement while performing the task. Water was available ad libitum during weekends. Monkey pellets were available ad libitum at the home cage at all times, whereas more preferable foods were used as rewards in the laboratory.
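The block structure described above (one reward per block of ∼50 trials, rewarded and unrewarded trials mixed pseudorandomly at 3:2) can be illustrated with a short sketch. The text does not specify how the pseudorandom order was generated, so the shuffle used here, the function name `make_block`, and its parameters are assumptions for illustration only.

```python
import random


def make_block(n_trials=50, rewarded_ratio=(3, 2), seed=None):
    """Return a shuffled list of trial types for one reward block.

    Assumption: the 3:2 ratio holds exactly within a block and the order
    is a plain shuffle; the original procedure is not described.
    """
    rng = random.Random(seed)
    r, u = rewarded_ratio
    n_rewarded = round(n_trials * r / (r + u))
    trials = ["rewarded"] * n_rewarded + ["unrewarded"] * (n_trials - n_rewarded)
    rng.shuffle(trials)
    return trials


block = make_block(seed=0)
print(block.count("rewarded"), block.count("unrewarded"))  # 30 20
```

With 50 trials, this yields 30 rewarded and 20 unrewarded trials per block, whatever the seed.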

Fig. 1.

Sequence of events in the three different kinds of delayed reaction tasks (a, Visible food; b, Cued food; c, Cued liquid). For each task, the top panel indicates rewarded trials, whereas the bottom panel indicates unrewarded trials. Inst, Instruction; Resp, response; R, red light cue; G, green light cue.

Reward preference tests. Preferences for different kinds of food rewards by individual monkeys were examined by free choice tests among different kinds of foods and by choice tests between each pair. Preferences for different kinds of food or liquid rewards were also examined by testing the monkey's willingness to perform the task with one kind of reward after refusing to perform it with another kind of reward.

Surgery and recording. On completion of training, the monkey was surgically prepared under sodium pentobarbital anesthesia (Nembutal; 30 mg/kg, i.p.). A stainless steel cylinder (18 mm in diameter) was implanted as a microdrive receptacle at appropriate locations on the skull. A hollow rod (15 mm in diameter) for head fixation was attached to the skull using dental acrylic. Antibiotics were administered for 10 d postoperatively. During recording sessions, the monkey's head was rigidly fixed to the frame of the monkey chair by means of the head holder, and a hydraulic microdrive (MO95-C; Narishige, Tokyo, Japan) was attached to the implanted cylinder. Elgiloy electrodes (Suzuki and Azuma, 1976) were used for recording. Neuronal activity was recorded from the principalis, inferior convexity, and arcuate areas of the LPFC of both hemispheres of three monkeys. Because we were interested in how motivational context is represented in the LPFC, we focused on reward-related neurons. This introduced a sampling bias. Action potentials were passed through a window discriminator and converted into square-wave pulses. The action potentials, shaped pulses, and task events were monitored on a computer display and stored on a digital data recorder (PC208A; Sony, Tokyo, Japan). Data on shaped pulses and task events were also stored on magneto-optical disks for off-line analysis. During the recording, we changed the type of task and/or the type of reward approximately every 50 trials. After having examined each neuron on one kind of reward, we tested the same neuron on the same kind of reward at several separate times to ascertain the stability and consistency of neuronal activity, (1) from time to time by visually inspecting the activity for four to five trials and (2) as far as possible by recording the activity for another 50 trials.
Because we concentrated on examining neuronal activity in relation to changing the reward block rather than in relation to the reversal of the meaning of the instruction cue, we did not extensively examine each neuron in the reversal (green instruction) situation. We monitored the position and movement of the monkey's eyes with an infrared eye-camera system (sampling rate, 4 msec; R-21C-A, RMS, Hirosaki, Japan) during the task performance after the neuronal recording experiment was over. The data were stored on a digital data recorder. We examined how long the monkey looked at the instructional cue during the instruction period by measuring the total time when the eye was located within a vertical window of 5 × 5° around the instruction cue. We also examined the frequency of saccadic eye movements during the delay period by counting the number of saccades whose magnitudes were of >10°.
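The instruction-looking time measure can be sketched as follows: with one gaze sample every 4 msec, the total looking time is the number of samples falling inside the 5 × 5° window multiplied by 4 msec. The function name `looking_time_ms`, the gaze format (pairs of horizontal and vertical position in degrees), and the assumption that the window is centered on the cue are illustrative choices, not details given in the text.

```python
SAMPLE_MS = 4  # sampling interval of the eye-camera system, as stated in the text


def looking_time_ms(gaze, cue=(0.0, 0.0), window_deg=5.0):
    """Total time (msec) that gaze samples fall inside a square window.

    gaze: iterable of (x_deg, y_deg) samples taken during the instruction period.
    Assumption: the 5 x 5 degree window is centered on the cue position.
    """
    half = window_deg / 2
    inside = sum(
        1 for x, y in gaze
        if abs(x - cue[0]) <= half and abs(y - cue[1]) <= half
    )
    return inside * SAMPLE_MS


# Hypothetical 1 sec instruction period (250 samples): 70 on the cue, 180 elsewhere.
samples = [(0.0, 0.0)] * 70 + [(6.0, 0.0)] * 180
print(looking_time_ms(samples))  # 280
```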

Histology. After termination of recording, the monkeys were deeply anesthetized with sodium pentobarbital (45 mg/kg) and perfused with saline, followed by 10% formalin. The brains were frozen and sectioned in the coronal plane (50 μm). Electrode tracks were reconstructed from traces of electrode penetrations and electrolytic lesions that had been made at selected penetration sites at the end of a recording session.

Data analysis. Impulse data were displayed as raster displays and frequency histograms. Nonparametric statistics were used for analysis. The data on the initial few trials after changing the kind of task and/or the kind of reward were omitted from analysis. Magnitudes of neuronal activity in relation to task events (preinstruction, instruction presentation, delay, go signal, monkey's key press response, reward delivery, and no-reward delivery) were first compared with control activity (2–3 sec before the instruction) within the same block of trials, separately for rewarded and unrewarded trials by the Mann–Whitney U test. The criterion for statistical significance was set at p < 0.05 (two tailed). Then the magnitudes of neuronal activity in relation to each task event (including the control period) were compared between rewarded and unrewarded trials by the U test and among different reward blocks by the Kruskal–Wallis H test. The U test served for post hoc analysis after a significant difference was observed on the H test. Reaction time (RT) (the time between the go signal presentation and the monkey's key press) data were also examined by nonparametric U and H tests. All experiments were conducted following the National Institutes of Health guidelines for animal experiments and were approved by the ethics committee of our institute.
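The comparison among reward blocks can be illustrated with a minimal sketch of the Kruskal–Wallis H statistic. This is not the software used in the study; the function names are hypothetical, and the p value uses the large-sample chi-square approximation, which for three blocks (df = 2) reduces to exp(−H/2).

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1..N, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected H statistic for a list of samples (one per reward block)."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in Counter(pooled).values()) / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


def chi2_sf_df2(h):
    """Upper-tail probability of a chi-square variable with 2 df."""
    return math.exp(-h / 2)


# Hypothetical firing rates (impulses/sec) in three reward blocks.
h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(h, 2), round(chi2_sf_df2(h), 4))  # 7.2 0.0273
```

A significant H would then be followed by pairwise two-tailed U tests between blocks, as described above.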

RESULTS

Behavioral results

Reward preference tests revealed that the monkeys consistently preferred cabbage and apple to potato to raisin within food rewards (>95% on free choice tests). Preferences for cabbage and apple did not significantly differ. Among liquid rewards, they invariably preferred grape juice, orange juice, and isotonic beverage to water, and they preferred grape juice to orange juice and isotonic beverage. It was found that RTs of the monkey were influenced by the reward used in each trial. Details of RT data were presented separately (Watanabe et al., 2001). RTs of the monkey were significantly shorter on rewarded than on unrewarded trials on any kind of reward block. RTs were shorter when a highly preferred reward was used compared with a less preferred reward, on rewarded and/or on unrewarded trials. For example, median (50th percentile) RTs ± quartile deviations ((75th − 25th percentiles)/2) observed in one animal for cabbage (most preferred food) and raisin (least preferred food) rewards were 340 ± 15 versus 370 ± 25 msec on rewarded trials and 440 ± 53 versus 535 ± 80 msec on unrewarded trials, respectively (Watanabe et al., 2001). Because the monkey's gaze was not controlled, the monkey moved its eyes spontaneously and randomly during the preinstruction and delay periods. At the time of the instruction presentation, an eye movement was elicited to the instruction cue, but the animal did not continue to fixate it throughout the instruction period. Except for an initial few trials after changing the type of reward, there was no significant difference both in the duration of instruction-looking time during the instruction period and in the frequency of saccadic eye movements during the delay period, between rewarded and unrewarded trials, as well as among different kinds of reward blocks. 
Median instruction-looking time ± quartile deviations during the instructional period in the cued food task on rewarded and unrewarded trials in one monkey, for example, were 288 ± 72 versus 270 ± 50.5 msec with raisin, 277 ± 61.5 versus 280 ± 54 msec with potato, and 290 ± 62.5 versus 287.5 ± 58 msec with cabbage as reward. Median frequency of saccadic eye movements (with amplitudes of >10°) ± quartile deviations during the delay period on rewarded and unrewarded trials were 4.5 ± 3.0 and 5.0 ± 2.0 with raisin, 5.5 ± 3.0 and 4.25 ± 2.0 with potato, and 5.0 ± 1.5 and 4.5 ± 2.0 with cabbage as reward. Neither between rewarded and unrewarded trials (p > 0.05; two-tailed U test) nor among different reward blocks (p > 0.05; H test) were there significant differences in these values.
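The summary statistic used throughout this section, median ± quartile deviation with the quartile deviation defined as (75th − 25th percentiles)/2, can be computed as in the following sketch. The percentile interpolation method is not stated in the text; the "inclusive" method of Python's `statistics.quantiles` is an assumption, and the RT values are hypothetical.

```python
import statistics


def median_and_quartile_deviation(values):
    """Return (median, quartile deviation), where the quartile deviation
    is (75th percentile - 25th percentile) / 2.

    Assumption: percentiles are interpolated with the "inclusive" method.
    """
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, (q3 - q1) / 2


# Hypothetical reaction times in msec.
rts = [320, 330, 340, 350, 360]
print(median_and_quartile_deviation(rts))  # (340.0, 10.0)
```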

Reward-dependent instruction–delay activity

Of 230 task-related LPFC neurons recorded in three monkeys, 179 discriminated between rewarded and unrewarded trials during the instruction and/or delay period. One hundred six of them showed a higher firing rate and 69 a lower firing rate on rewarded compared with unrewarded trials with any type of reward tested. Four of these neurons showed an inconsistent pattern across tasks, in one task firing more and in another task firing less on rewarded trials than on unrewarded trials. Here we focus on 91 of these neurons that were examined on several kinds of reward blocks and could be tested repeatedly on each kind of reward block across time. Of these, 72 (79%) showed instruction and/or delay activity that differed depending on which particular reward was used in a given block of trials, with the reward effect reproducible across repeated tests.

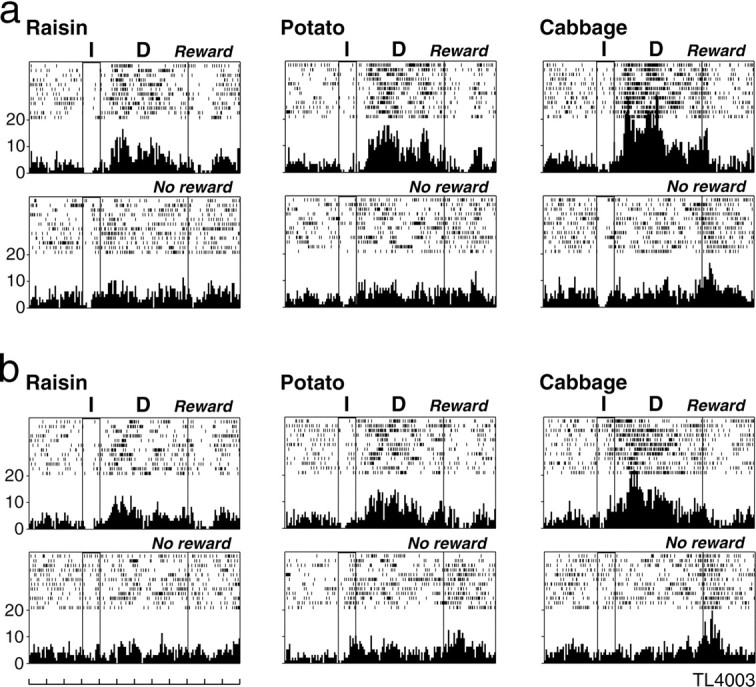

The neuron shown in Figure 2a discriminated between rewarded and unrewarded trials as well as among different reward blocks. This neuron, which was examined in the visible food task, showed a significantly higher firing rate on rewarded than on unrewarded trials during the delay period on any kind of reward block (two-tailed U tests; raisin, p < 0.05; potato and cabbage, p < 0.01). On rewarded trials, this neuron showed significantly different activity changes depending on the reward block, showing the highest firing rate for cabbage, an intermediate firing rate for potato, and the lowest firing rate for raisin as the reward in a block of trials. H test indicated that activity changes of this neuron during the delay period were not significantly different on unrewarded trials, whereas they were significantly different on rewarded trials (χ2 = 31.62; df = 2; p < 0.001) among the three different reward blocks. Paired comparisons using two-tailed U tests indicated that activity changes during the delay period on rewarded trials differed significantly between any pair of reward blocks (all pairs, p < 0.01).

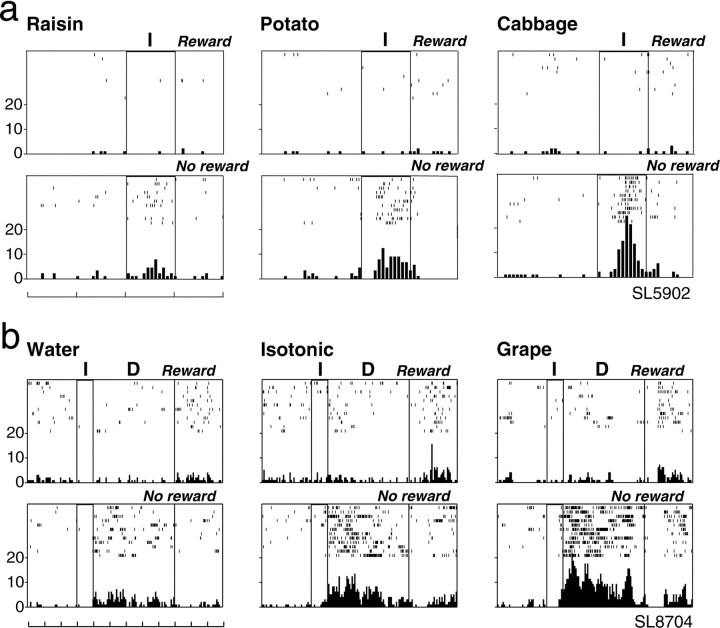

Fig. 2.

An example of an LPFC neuron that responded differently between rewarded and unrewarded trials, as well as showing differential activity during the delay period on rewarded trials depending on the reward block. a shows the activity of this neuron when examined for the first time on each reward block. b shows the activity for the same neuron examined at a later time, after being examined on several kinds of liquid rewards. For both a and b, neuronal activity is shown separately for each reward block in raster and histogram displays, with the top display for rewarded trials and the bottom display for unrewarded trials. For each display, the first two vertical lines from the left indicate instruction onset and offset, and the third line indicates the end of the delay period. Each row indicates one trial. The reward used is stated. Leftmost scales indicate impulses per second, and the time scale at the bottom indicates 1 sec. I, Instruction; D, delay; Reward, rewarded trials; No reward, unrewarded trials.

Figure 2b shows the activity of the same neuron when we repeated the tests in the visible food task after having examined it on several kinds of liquid rewards. On these repeated tests also, this neuron showed a significantly higher firing rate on rewarded than on unrewarded trials during the delay period on any kind of reward block (U tests; raisin, p < 0.05; potato and cabbage, p < 0.01). H test indicated that activity changes of this neuron during the delay period were not significantly different on unrewarded trials, whereas they were significantly different on rewarded trials (χ2 = 14.63; df = 2; p < 0.001) among the three different reward blocks. Paired comparisons indicated that activity changes during the delay period on rewarded trials significantly differ between any pair of reward blocks (raisin vs potato, p < 0.05; raisin vs cabbage and potato vs cabbage, p < 0.01). This figure demonstrates that this neuron consistently discriminated between rewarded and unrewarded trials, as well as among different reward blocks, as confirmed by repeated tests.

The neurons in Figure 3 showed activations only on unrewarded trials during the instruction period (a) or during the delay period (b). Considering only unrewarded trials, the neuron in Figure 3a, which was examined in the visible food task, showed the highest firing rate when cabbage and the lowest firing rate when raisin was used as reward in a block of trials. Statistical tests revealed that this neuron showed a significantly higher firing rate during the instruction period on unrewarded than on rewarded trials on any reward block (all food blocks, p < 0.01). H test indicated that activity changes of this neuron during the instruction period were not significantly different on rewarded trials, whereas they were significantly different on unrewarded trials (χ2 = 15.54; df = 2; p < 0.001) among the three different reward blocks. Paired comparisons using U tests indicated that there were significant differences in activity changes during the instruction period on unrewarded trials between raisin and potato (p < 0.01), as well as between raisin and cabbage (p < 0.01), but not between potato and cabbage reward blocks.

Fig. 3.

Example of LPFC neurons that showed significant activations during the instruction (a) or during the delay (b) period only on unrewarded trials and showed differential activity depending on the reward block. In a, neuronal activity is shown for 4 sec, whereas in b, it is shown for 12 sec. Isotonic, Sweet isotonic beverage reward. Conventions are as in Figure 2.

Similarly, the neuron in Figure 3b, examined in the cued liquid task, was sensitive to the reward context only on unrewarded trials, showing the highest firing rate when grape juice and the lowest firing rate when water was used as reward in a block of trials. Statistical tests indicated that the neuron also showed a significantly higher firing rate during the delay period on unrewarded than on rewarded trials on any reward block (all liquid blocks, p < 0.01). H test indicated that activity changes of this neuron during the delay period were not significantly different on rewarded trials, whereas they were significantly different on unrewarded trials (χ2 = 20.22; df = 2; p < 0.001) among the three different reward blocks. Paired comparisons using U tests indicated that there were significant differences in activity changes during the delay period on unrewarded trials between any pair of reward blocks (water vs isotonic and water vs grape, p < 0.01; isotonic vs grape, p < 0.05).

Reward-discriminative LPFC activity (depending on the type of reward used in a block of trials) appeared to be related to the monkey's preference among different types of reward. Among 72 LPFC neurons examined, eight neurons showed reward-discriminative activity only on rewarded trials (Fig. 2), 14 only on unrewarded trials (Fig. 3), and 50 on both rewarded and unrewarded trials (Fig. 4) (see Fig. 6). A majority of them (55 of 72, 76.4%; 6 of 8, 8 of 14, and 41 of 50) showed a higher firing rate (Figs. 2, 3) (see Fig. 6), whereas 13 of them (2 of 8, 6 of 14, and 5 of 50) showed a lower firing rate on more preferred reward blocks than on less preferred reward blocks. Four neurons (5.6%) showed a complex activity pattern, with different directions on rewarded versus unrewarded trials: on rewarded trials, a higher firing rate and, on unrewarded trials, a lower firing rate for the more preferred reward blocks than for the less preferred reward blocks. Figure 4 shows such an example, which was examined in the visible food task. On rewarded trials, this neuron showed the highest activity in the apple block and the least activity in the raisin block. In contrast, on unrewarded trials, the neuron showed the highest activity in the raisin block and the least activity in the apple block. Statistical tests revealed that this neuron showed a significantly higher firing rate during the instruction and delay periods on rewarded than on unrewarded trials on any reward block (all reward blocks, p < 0.01). H tests revealed that this neuron showed significantly different activity changes among the three different reward blocks both on rewarded (χ2 = 35.61; df = 2; p < 0.001) and unrewarded (χ2 = 9.45; df = 2; p < 0.01) trials.
Paired comparisons among different reward blocks showed that this neuron showed significantly different firing rates between any pair of reward blocks on rewarded trials (all pairs, p < 0.01), as well as on unrewarded trials (raisin vs apple, p < 0.01; raisin vs potato and potato vs apple, p < 0.05). None of the 72 reward-discriminative neurons showed instruction–delay activity changes exclusively in a particular reward block without showing activity changes in other reward blocks.
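The neuron counts reported above can be cross-checked with a short tally. The assignment of the four complex-pattern neurons to the "both" category is inferred here (50 − 41 − 5 = 4, and a pattern that differs between rewarded and unrewarded trials requires discrimination on both); the dictionary layout and key names are illustrative.

```python
# Counts taken from the text; "mixed" in the "both" row is an inferred value.
counts = {
    "rewarded_only": {"higher": 6, "lower": 2, "mixed": 0},
    "unrewarded_only": {"higher": 8, "lower": 6, "mixed": 0},
    "both": {"higher": 41, "lower": 5, "mixed": 4},
}

total = sum(sum(row.values()) for row in counts.values())
higher = sum(row["higher"] for row in counts.values())
lower = sum(row["lower"] for row in counts.values())
mixed = sum(row["mixed"] for row in counts.values())

print(total, higher, lower, mixed)      # 72 55 13 4
print(round(100 * higher / total, 1))   # 76.4
print(round(100 * mixed / total, 1))    # 5.6
```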

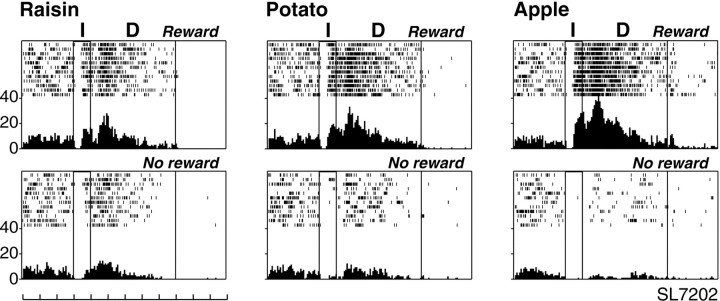

Fig. 4.

An example of an LPFC neuron that showed differential activity depending on the reward block, during both the instruction and delay periods on both rewarded and unrewarded trials. This neuron showed greater activation on rewarded trials and less activation on unrewarded trials as more preferred rewards were used within the block. Conventions are as in Figure 2.

Fig. 6.

An example of an LPFC neuron showing differential preinstruction baseline activity depending on the reward block. This neuron also showed differential activity during the cue and delay periods depending on the reward block on both rewarded and unrewarded trials. For this neuron, the activity is shown from 9 sec before the instruction presentation to 3 sec after the go signal presentation. Other conventions are as in Figure 2. The magnitudes of activity (in impulses per second expressed by median ± quartile deviation) of this neuron during the preinstruction period after rewarded and unrewarded trials were 2 ± 2 and 3 ± 1 for the raisin block, 6 ± 2 and 5 ± 2.5 for the potato block, and 9 ± 2 and 10 ± 4 for the cabbage block, respectively. H test indicated significant differences in preinstruction baseline activity among different reward blocks both for the preinstruction period after rewarded trials (χ2 = 11.0; df = 2; p < 0.01) and for that after unrewarded trials (χ2 = 11.9; df = 2; p < 0.01). Paired comparisons using U test indicated significant differences in preinstruction baseline activity between any pair of reward blocks (all pairs, p < 0.01), except that there was no significant difference between potato and cabbage reward blocks after unrewarded trials. However, there was no significant difference in preinstruction baseline activity after rewarded compared with unrewarded trials within any reward block.

Neither among rewarded trials nor among unrewarded trials within a certain reward block was there significant correlation between RT and the magnitude of neuronal activity during the instruction–delay periods in all 72 neurons examined, whereas there were sometimes significant correlations between them when both rewarded and unrewarded data were combined, probably because of the large differences in both RT and the magnitude of neuronal activity between rewarded and unrewarded trials.

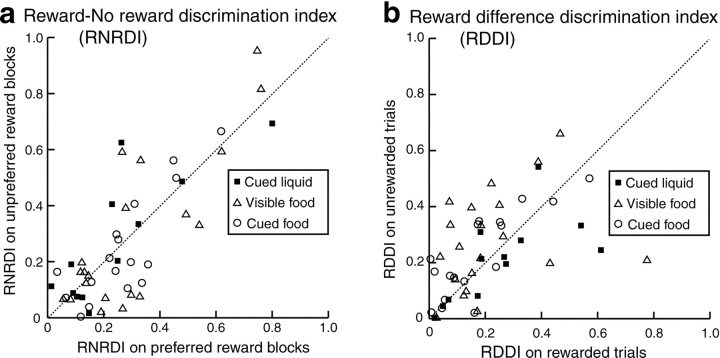

Reward discriminability of prefrontal neurons

We quantitatively examined whether the discriminability of LPFC neurons between rewarded and unrewarded trials differed depending on what reward was used in a block of trials, more specifically depending on whether the reward was the most preferred or the least preferred by the monkey within a task. To do so, we calculated the “reward–no reward discrimination index (RNRDI)” of individual neurons for each kind of reward block using the following formula: RNRDI = |(rewarded − unrewarded)/(rewarded + unrewarded)|, where “rewarded” and “unrewarded” indicate the mean discharge rate during the cue and delay periods in a certain reward block for rewarded and unrewarded trials, respectively. We also examined whether the discriminability of LPFC neurons between two different kinds of reward blocks, more specifically between the monkey's most preferred and the least preferred reward blocks within a task, on rewarded trials differed from that on unrewarded trials. To do so, we calculated the “reward difference discrimination index (RDDI)” of individual neurons, separately for rewarded and unrewarded trials, using the following formula: RDDI = |(preferred − unpreferred)/(preferred + unpreferred)|, where “preferred” and “unpreferred” indicate the mean discharge rate during the cue and delay periods for the monkey's most preferred and the least preferred reward blocks within a task, respectively. Both indices were calculated from data obtained in 29 LPFC neurons that were examined on both the most preferred (cabbage or grape juice) and the least preferred (raisin or water) rewards in one or more of the three kinds of tasks. Both indices ranged from 0 to 1, with the larger value indicating greater discriminability between rewarded and unrewarded trials (RNRDI) for a certain kind of reward block and greater discriminability between preferred and unpreferred reward blocks (RDDI) within a task.
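Both indices share the same form, the absolute difference of two mean discharge rates divided by their sum, so a single helper suffices. The sketch below uses hypothetical firing rates; the function name `discrimination_index` and the choice to return 0 when both rates are zero are assumptions, because the text does not say how silent neurons were handled.

```python
def discrimination_index(rate_a, rate_b):
    """Return |rate_a - rate_b| / (rate_a + rate_b) for non-negative rates.

    RNRDI: rate_a = rewarded, rate_b = unrewarded (within one reward block).
    RDDI:  rate_a = preferred, rate_b = unpreferred (within one trial type).
    Assumption: an index of 0.0 is returned when both rates are zero.
    """
    total = rate_a + rate_b
    if total == 0:
        return 0.0
    return abs(rate_a - rate_b) / total


# Hypothetical mean discharge rates (impulses/sec) during the cue and delay periods.
rnrdi = discrimination_index(12.0, 8.0)  # rewarded vs unrewarded trials
rddi = discrimination_index(15.0, 5.0)   # preferred vs unpreferred blocks
print(round(rnrdi, 3), round(rddi, 3))   # 0.2 0.5
```

Because discharge rates are non-negative, the index is bounded by 0 (identical rates) and 1 (one of the two rates is zero).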

In Figure 5a, RNRDI values of individual LPFC neurons on the unpreferred reward block are plotted against those on the preferred reward block separately for three different kinds of tasks. Median RNRDI values ± quartile deviations on preferred and unpreferred reward blocks were 0.196 ± 0.171 and 0.189 ± 0.088 in the cued liquid, 0.162 ± 0.181 and 0.265 ± 0.139 in the visible food, and 0.190 ± 0.089 and 0.250 ± 0.091 in the cued food task, respectively. There was no significant difference in this value (U test; p > 0.05) between preferred and unpreferred reward blocks for any kind of task. However, there were significant correlations in RNRDI value between preferred and unpreferred reward blocks in LPFC neurons, with correlation coefficients being 0.815 in the cued liquid, 0.812 in the visible food, and 0.831 in the cued food tasks. Thus, discriminability of LPFC neurons between rewarded and unrewarded trials on the preferred reward block did not differ from that on the unpreferred reward block. Also, there was no significant difference in RNRDI value for both preferred and unpreferred food reward blocks between the visible food and cued food tasks, indicating that discriminability of LPFC neurons between rewarded and unrewarded trials was not dependent on whether food itself or a vacant food tray was presented as an instruction or simply a color instruction indicated the presence or absence of a reward.

Fig. 5.

Discriminability of LPFC neurons (a) between rewarded and unrewarded trials observed on preferred and unpreferred reward blocks and that (b) between preferred and unpreferred reward blocks observed on rewarded and unrewarded trials. a, RNRDI values of individual LPFC neurons on the unpreferred reward block are plotted against those on the preferred reward block. b, RDDI values of individual LPFC neurons on unrewarded trials are plotted against those on rewarded trials. For both a and b, filled squares indicate data in the cued liquid task, open triangles in the visible food task, and open circles in the cued food tasks, respectively. Dashed line indicates 45° line.

In Figure 5b, RDDI values of individual LPFC neurons on unrewarded trials are plotted against those on rewarded trials separately for three different kinds of tasks. Median RDDI values ± quartile deviations on rewarded and unrewarded trials were 0.216 ± 0.110 and 0.228 ± 0.097 in the cued liquid, 0.239 ± 0.120 and 0.1615 ± 0.075 in the visible food, and 0.186 ± 0.103 and 0.159 ± 0.101 in the cued food task, respectively. There was no significant difference in this value between rewarded and unrewarded trials for any kind of task. However, there were significant correlations in RDDI value between rewarded and unrewarded trials in LPFC neurons, with correlation coefficients being 0.680 in cued liquid, 0.320 in visible food, and 0.821 in cued food tasks. Thus, discriminability of LPFC neurons between preferred and unpreferred reward blocks on rewarded trials (in which the monkey could expect actual reward delivery) did not differ from that on unrewarded trials (in which the animal could expect no reward). There was also no significant difference in RDDI value for both rewarded and unrewarded trials between the visible food and cued food tasks, indicating that discriminability of LPFC neurons between preferred and unpreferred reward blocks was not dependent on whether food itself or color cue was presented as an instruction.

Reward context monitored in baseline activity

Among different reward blocks, we compared baseline activities of LPFC neurons during the 3 sec preinstruction period, as well as during all periods throughout the trial (from the preinstruction period to the monkey's response). We found that 28 of 72 reward-discriminative instruction–delay LPFC neurons also showed differences in baseline activity, during the preinstruction period and often even throughout the entire trial, among different reward blocks (Fig. 6). The relative magnitude of neuronal activity during the instruction–delay periods compared with the preinstruction period differed significantly among different reward blocks for 11 neurons. However, the relative magnitude was not significantly different for the remaining 17 neurons, whose reward-discriminative activity during the instruction–delay periods directly reflected differences in the preinstruction baseline level (Fig. 6). Statistical tests indicated that the preinstruction activity of the neuron shown in Figure 6, which was examined in the cued food task, differed significantly among the three different reward blocks (χ² = 19.2; df = 2; p < 0.001). Paired comparisons among different reward blocks indicated that there were significant differences in preinstruction activity between any pair of reward blocks (all pairs, p < 0.01). This neuron showed a significantly higher firing rate on unrewarded than on rewarded trials during the instruction and delay periods on any kind of reward block (all reward blocks, p < 0.01). This neuron showed significantly different activity changes during the instruction and delay periods among the three different reward blocks on both rewarded (χ² = 29.44; df = 2; p < 0.001) and unrewarded (χ² = 14.38; df = 2; p < 0.001) trials.
Paired comparisons among different reward blocks revealed that this neuron showed significantly different firing rates between raisin and potato (p < 0.01), as well as between raisin and cabbage (p < 0.01), but not between potato and cabbage reward blocks on rewarded trials, while showing significantly different firing rates between any pair of reward blocks on unrewarded trials (raisin vs potato and raisin vs cabbage, p < 0.01; potato vs cabbage, p < 0.05) during the instruction and delay periods.
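As a consistency check on the statistics reported for this neuron, the following minimal sketch converts each χ2 value with df = 2 into an upper-tail probability. It assumes only that the test statistics are referred to a chi-square distribution with the stated degrees of freedom, for which the survival function has the closed form exp(−x/2) when df = 2. The function name and dictionary labels are hypothetical; the three statistics are those given in the text.

```python
import math


def chi2_sf_df2(x):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-x / 2.0)


# Test statistics quoted in the text (df = 2 in each case).
reported = {
    "preinstruction activity": 19.2,
    "instruction-delay activity, rewarded trials": 29.44,
    "instruction-delay activity, unrewarded trials": 14.38,
}

for label, stat in reported.items():
    p = chi2_sf_df2(stat)
    print(f"{label}: chi2 = {stat}, p = {p:.2e}, below 0.001: {p < 0.001}")
```

Under this assumption, all three statistics fall below the reported threshold of p < 0.001.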

In most of these neurons (24 of 28; 85.7%), the magnitude of activity changes during the preinstruction period after rewarded trials did not significantly differ from that after unrewarded trials. Only two neurons showed higher activations, whereas the remaining two neurons showed lower activations during the preinstruction period after rewarded trials compared with those after unrewarded trials.

Location of prefrontal neurons responsive to reward context

Histological examination revealed that neurons that showed changes in baseline activity depending on the reward block were observed predominantly in and above the upper bank of the principal sulcus (Fig. 7a). Neurons that showed differential activity during the instruction–delay periods depending on the kind of reward used in a block of trials, but did not show differential preinstruction baseline activity, were observed mostly in and below the lower bank of the principal sulcus and in the arcuate area of the LPFC (Fig. 7b). We compared the numbers of both types of neurons located in and above the upper bank of the principal sulcus with those located in and below the lower bank of the sulcus (excluding those in the arcuate area) by χ² test. A significant difference (χ² = 14.60; df = 1; p < 0.001) provided statistical support for differential distribution between neurons with and without differential preinstruction baseline activities.
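The comparison described above amounts to a test of independence on a 2 × 2 table (neuron type by dorsal versus ventral location). A minimal sketch follows, assuming a Pearson χ2 statistic without continuity correction; the text does not say whether a correction was applied. The cell counts are hypothetical placeholders, since the per-cell counts are not given in this section, and the function names are illustrative.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


# Hypothetical counts (NOT the study's data):
# rows = with / without differential baseline activity,
# columns = dorsal / ventral to the principal sulcus.
stat = chi2_2x2(10, 20, 20, 10)
print(f"hypothetical table: chi2 = {stat:.3f}, p = {chi2_sf_df1(stat):.4f}")

# The statistic quoted in the text (chi2 = 14.60, df = 1).
print(f"reported statistic: p = {chi2_sf_df1(14.60):.2e}")
```

With df = 1, the reported statistic of 14.60 corresponds to a tail probability below 0.001, in line with the value stated in the text.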

Fig. 7.

a, Locations of penetrations of LPFC neurons that changed their preinstruction baseline activity, with the relative magnitude of neuronal activity during the instruction–delay periods compared with the baseline level being significantly different (▾) or not (■) among different reward blocks. b, Locations of penetrations of LPFC neurons that showed instruction–delay-related, but not preinstruction baseline-related, differential activity among different reward blocks only on rewarded (○), only on unrewarded (▵), or on both rewarded and unrewarded trials (●). AS, Arcuate sulcus; PS, principal sulcus.

DISCUSSION

This study examined neuronal activity of the primate LPFC in relation to motivational information during a delayed reaction task. We found the following. (1) LPFC neurons showed differential instruction–delay activity between rewarded and unrewarded trials (Figs. 2–4, 6). (2) On rewarded trials, LPFC neurons showed differential instruction–delay activity among different reward blocks (Figs. 2, 4, 6). (3) Most importantly, LPFC neurons coded not only the absence of reward but also which reward would be absent on unrewarded trials (Figs. 3, 4, 6). (4) Thirty-three percent of reward-discriminative neurons showed reward block-dependent differential preinstruction baseline activity (Fig. 6), and these neurons were observed predominantly in and above the upper bank of the principal sulcus (Fig. 7).

We previously reported LPFC neurons that discriminated between juice-rewarded and unrewarded trials (Watanabe, 1990, 1992). We replicated that finding here using several different kinds of food and liquid rewards. We also reported LPFC neurons that showed differential activity depending on which reward was expected during a delayed response task (Watanabe, 1996). We obtained similar neuronal activities in the present task, which did not require working memory.

Coding of motivational context in prefrontal neurons

Of particular interest are the LPFC neurons that showed differential activity during the instruction–delay periods on unrewarded trials depending on the reward block, despite the fact that the monkey could not expect to obtain anything as a reward on unrewarded trials and the monkey was not required to respond differently according to the reward information. Each block implied only one possible kind of reward. With the pseudorandom alternation of rewarded and unrewarded trials, this task provides a context in which neuronal activity in one trial type may be influenced by events in another trial type. Thus, these neurons may be involved in coding and representing the motivational context that is incidentally acquired by the monkey.

Because there are LPFC neurons that retain visual stimuli in working memory (Rosenkilde et al., 1981; Watanabe, 1986; Quintana et al., 1988; Quintana and Fuster, 1992; Miller et al., 1996; Rao et al., 1997; Rainer et al., 1999), the reward-dependent delay activity in the present study could also be related to a mental image of visual, gustatory, and olfactory aspects of the rewarding object in rewarded trials and to that of the absence of a particular reward in unrewarded trials. However, there were no neurons that responded exclusively to the presence or absence of a particular reward, indicating that the activity of LPFC neurons is not sharply tuned to the stimulus properties of the reward. The activity of these neurons was related to the monkey's reward preference as assessed by behavioral tests. Thus, the neuronal activity seems to reflect rather the motivational value of the outcome, such as the degree of pleasure or aversion associated with the presence or absence of a particular reward. A few neurons showed a complex activity pattern with different directions for rewarded versus unrewarded trials (Fig. 4). Considering that the absence of the more preferred reward would be more disappointing for the monkey than the absence of the less preferred reward, the neuronal activity may reflect the degree of desirability of the outcome associated with the presence or absence of a specific reward.

The discriminability of LPFC neurons between rewarded and unrewarded trials did not differ depending on the reward block (Fig. 5a), indicating that the discrimination by LPFC neurons between rewarded and unrewarded trials was not facilitated by using the more preferable reward. The discriminability of LPFC neurons between preferred and unpreferred reward blocks on unrewarded trials did not differ from that on rewarded trials (Fig. 5b). Also, the discriminability did not differ between the visible food and cued food tasks. Thus, activity of reward-discriminative LPFC neurons seems to depend more on the motivational context, i.e., what reward is used in the current block of trials, than on the presence or absence of the reward in each trial or on whether the instruction is the actual food or a color cue.

Both prefrontal neuronal activity and RTs varied depending on the reward block. However, within a given reward block, there was no significant correlation between the magnitude of neuronal activity and the monkey's RT on either rewarded or unrewarded trials. Thus, the reward-discriminative instruction–delay activities are unlikely to be directly associated with differences in behavioral reactions.

There are LPFC neurons that show fixation-related (Suzuki and Azuma, 1977) and saccade-related (Funahashi et al., 1991) activity changes in task situations in which the monkey is required to control eye position. However, when the monkey was not required to control eye position to obtain reward, there were no significant differences in eye movements between rewarded and unrewarded trials nor among different reward blocks. Thus, the observed reward-discriminative neuronal activities do not reflect the monkey's eye movements.

Monitoring of motivational context in prefrontal neurons

Simulation studies indicate that the prefrontal cortex is indispensable for representing and maintaining context information for executive control (Cohen et al., 1996). LPFC neurons show differential baseline activity depending on the task requirement (Sakagami and Niki, 1994; Asaad et al., 2000). To code the motivational context, context information should be monitored continuously during task performance. Our data show that LPFC neurons are involved in this process with their reward-discriminative tonic baseline activities. It might be argued that such differential baseline activity reflects differences among taste stimuli in the mouth. However, there was no significant difference in preinstruction baseline activity after rewarded compared with unrewarded trials in most LPFC neurons in the present experiment, although the taste stimuli were different in these two cases.

Functional differentiation of the lateral prefrontal cortex in relation to the baseline activity

The present study found that neurons with and without preinstruction baseline activities were distributed differently along the dorsoventral extent of the LPFC (Fig. 7). The dorsal and ventral LPFC are proposed to be concerned, respectively, with spatial and object working memory (Wilson et al., 1993; Goldman-Rakic, 1996), although there also exists evidence against this hypothesis (Fuster et al., 1982; Rao et al., 1997; White and Wise, 1999). It has also been proposed that the ventral LPFC is involved in maintaining information in working memory, whereas the dorsal LPFC is concerned with monitoring information in working memory (Petrides, 1994, 1996). The present study suggests a new dimension in the differentiation of LPFC in relation to motivational operations, the dorsal LPFC being more involved in monitoring the motivational context and the ventral LPFC and arcuate area being more concerned with coding and representing the motivational value associated with the presence or absence of a specific reward. Such differentiation may reflect the fact that the ventral and dorsal LPFC have different cortico-cortical and limbic connections (Pandya et al., 1971; Barbas, 1993). Additional studies are needed to clarify how LPFC is functionally differentiated in relation to different kinds of operations.

Functional significance of representation of motivational context in the prefrontal cortex

The level of attention modifies baseline neuronal activity in the LPFC (Lecas, 1995). Hasegawa et al. (2000) reported LPFC neurons whose precue activity reflects the monkey's performance level in the past trial, or predicts the performance level in the future trial, but not in the present trial. Such activity is considered to reflect the monkey's motivational or arousal level. The preinstruction baseline activity observed in the present experiment may also be related to the monkey's attention or motivational level because the monkey may be more attentive to the task situation and more motivated on preferred than on nonpreferred reward blocks.

The OFC plays important roles in motivational operations. Rodent OFC neurons encode the incentive value of expected outcomes (Schoenbaum et al., 1998). Primate OFC neurons code reinforcement–error (Rosenkilde et al., 1981; Tremblay and Schultz, 2000), are related to expectancy of the presence or absence of reward (Hikosaka and Watanabe, 2000), and reflect the relative but not absolute value of the reward (Tremblay and Schultz, 1999). If motivational operations in the PFC are supported mainly by OFC (Fuster, 1997; Rolls, 1999), then what is the functional significance of neuronal activities related to the motivational context in LPFC? Although such activities are not directly associated with correct task performance, they may be essential for detecting the congruency or discrepancy between expectancy and outcome, even in the case in which no reward can be expected, and thus serve for the acquisition–maintenance and modification of behavioral strategies according to the response outcome. Such a neural mechanism may have survival value for an animal seeking the more preferable reward in given circumstances.

Whereas neurons related to spatial working memory are quite rare in the OFC (Tremblay and Schultz, 1999), LPFC neurons are involved in both working memory and reward expectancy (Watanabe, 1996; Leon and Shadlen, 1999). Interestingly, working memory-related activity of primate LPFC neurons is enhanced when a more preferred reward is used (Leon and Shadlen, 1999). Thus, the functional significance of motivational operations of the LPFC may lie in the integration of motivational and cognitive operations for goal-directed behavior.

Footnotes

This study was supported by a Grant-in-Aid for scientific research from the Ministry of Education, Science, Sports, and Culture of Japan and a Grant-in-Aid for target-oriented research and development in brain science from Japan Science and Technology Corporation. We thank W. Schultz and J. Lauwereyns for comments and suggestions and T. Kodama, M. Odagiri, T. Kojima, H. Takenaka, and K. Tsutsui for assistance.

Correspondence should be addressed to Masataka Watanabe, Department of Psychology, Tokyo Metropolitan Institute for Neuroscience, Musashidai 2-6, Fuchu, Tokyo 183-8526, Japan. E-mail: masataka@tmin.ac.jp.

REFERENCES

- 1. Asaad WF, Rainer G, Miller EK. Task-specific neural activity in the primate prefrontal cortex. J Neurophysiol. 2000;84:451–459. doi: 10.1152/jn.2000.84.1.451.

- 2. Barbas H. Architecture and cortical connections of the prefrontal cortex in the rhesus monkey. In: Chauvel P, Delgado-Escueta AV, editors. Advances in neurology, Vol 57. Raven; New York: 1993. pp. 91–115.

- 3. Cohen JD, Braver TS, O'Reilly RC. A computational approach to prefrontal cortex, cognitive control, and schizophrenia: recent developments and current challenges. Philos Trans R Soc Lond B Biol Sci. 1996;351:1515–1527. doi: 10.1098/rstb.1996.0138.

- 4. Damasio AR. Descartes' error: emotion, reason and the human brain. Grosset/Putnam; New York: 1994. pp. 52–79.

- 5. Funahashi S, Bruce CJ, Goldman-Rakic PS. Neuronal activity related to saccadic eye movements in the monkey's dorsolateral prefrontal cortex. J Neurophysiol. 1991;65:1464–1483. doi: 10.1152/jn.1991.65.6.1464.

- 6. Fuster JM. The prefrontal cortex: anatomy, physiology, and neuropsychology of the frontal lobe, Ed 3. Lippincott-Raven; New York: 1997.

- 7. Fuster JM, Bauer RH, Jervey JP. Cellular discharge in the dorsolateral prefrontal cortex of the monkey in cognitive tasks. Exp Neurol. 1982;77:679–694. doi: 10.1016/0014-4886(82)90238-2.

- 8. Goldman-Rakic PS. The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive. Philos Trans R Soc Lond B Biol Sci. 1996;351:1445–1453. doi: 10.1098/rstb.1996.0129.

- 9. Grafman J, Holyoak KJ, Boller F. Structure and functions of the human prefrontal cortex. Ann NY Acad Sci. 1995;769:1–411.

- 10. Hasegawa R, Blitz AM, Geller NL, Goldberg ME. Neurons in monkey prefrontal cortex that track past or predict future performance. Science. 2000;290:1786–1789. doi: 10.1126/science.290.5497.1786.

- 11. Hikosaka K, Watanabe M. Delay activity of orbital and lateral prefrontal neurons of the monkey varying with different rewards. Cereb Cortex. 2000;10:263–271. doi: 10.1093/cercor/10.3.263.

- 12. Lecas JC. Prefrontal neurons sensitive to increased visual attention in the monkey. NeuroReport. 1995;7:305–309.

- 13. Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- 14. Luria AR. Higher cortical functions in man, Ed 2, pp 246–365. Basic Books; New York: 1980.

- 15. Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- 16. Niki H, Watanabe M. Prefrontal unit activity and delayed response: relation to cue location versus direction of response. Brain Res. 1976;105:79–88. doi: 10.1016/0006-8993(76)90924-0.

- 17. Niki H, Watanabe M. Prefrontal and cingulate unit activity during timing behavior in the monkey. Brain Res. 1979;171:213–224. doi: 10.1016/0006-8993(79)90328-7.

- 18. Pandya DN, Dye P, Butters N. Efferent cortico-cortical projections of the prefrontal cortex in the rhesus monkey. Brain Res. 1971;31:35–46. doi: 10.1016/0006-8993(71)90632-9.

- 19. Petrides M. Frontal lobes and working memory: evidence from investigations of the effects of cortical excisions in nonhuman primates. In: Boller F, Grafman J, editors. Handbook of neuropsychology, Vol 9. Elsevier; Amsterdam: 1994. pp. 59–82.

- 20. Petrides M. Specialized systems for the processing of mnemonic information within the primate frontal cortex. Philos Trans R Soc Lond B Biol Sci. 1996;351:1455–1461. doi: 10.1098/rstb.1996.0130.

- 21. Pribram KH. Languages of the brain, pp 332–351. Prentice-Hall; Englewood Cliffs, NJ: 1971.

- 22. Quintana J, Fuster JM. Mnemonic and predictive functions of cortical neurons in a memory task. NeuroReport. 1992;3:721–724. doi: 10.1097/00001756-199208000-00018.

- 23. Quintana J, Yajeya J, Fuster JM. Prefrontal representation of stimulus attributes during delay tasks. I. Unit activity in cross-temporal integration of sensory and sensory-motor information. Brain Res. 1988;474:211–221. doi: 10.1016/0006-8993(88)90436-2.

- 24. Rainer G, Rao SC, Miller EK. Prospective coding for objects in primate prefrontal cortex. J Neurosci. 1999;19:5493–5505. doi: 10.1523/JNEUROSCI.19-13-05493.1999.

- 25. Rao SC, Rainer G, Miller EK. Integration of what and where in the primate prefrontal cortex. Science. 1997;276:821–824. doi: 10.1126/science.276.5313.821.

- 26. Roberts AC, Robbins TW, Weiskrantz L. The prefrontal cortex: executive and cognitive functions. Oxford UP; Oxford: 1998.

- 27. Rolls ET. The brain and emotion, pp 75–147. Oxford UP; Oxford: 1999.

- 28. Rosenkilde CE, Bauer RH, Fuster JM. Single cell activity in ventral prefrontal cortex of behaving monkeys. Brain Res. 1981;209:375–394. doi: 10.1016/0006-8993(81)90160-8.

- 29. Sakagami M, Niki H. Encoding of behavioral significance of visual stimuli by primate prefrontal neurons: relation to relevant task conditions. Exp Brain Res. 1994;97:423–436. doi: 10.1007/BF00241536.

- 30. Schneider WX, Owen AM, Duncan J. Executive control and the frontal lobe: current issues. Springer; Berlin: 2000.

- 31. Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcome during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- 32. Shallice T, Burgess P. The domain of supervisory processes and the temporal organization of behavior. Philos Trans R Soc Lond B Biol Sci. 1996;351:1405–1411. doi: 10.1098/rstb.1996.0124.

- 33. Stuss DT, Benson DF. The frontal lobes. Raven; New York: 1986.

- 34. Suzuki H, Azuma M. A glass-insulated “Elgiloy” microelectrode for recording unit activity in chronic monkey experiments. Electroencephalogr Clin Neurophysiol. 1976;41:93–95. doi: 10.1016/0013-4694(76)90218-2.

- 35. Suzuki H, Azuma M. Prefrontal neuronal activity during gazing at a light spot in the monkey. Brain Res. 1977;126:497–508. doi: 10.1016/0006-8993(77)90600-x.

- 36. Thut G, Schultz W, Roelcke U, Nienhusmeier M, Missimer J, Maguire RP, Leenders KL. Activation of the human brain by monetary reward. NeuroReport. 1997;8:1225–1228. doi: 10.1097/00001756-199703240-00033.

- 37. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- 38. Tremblay L, Schultz W. Reward-related neuronal activity during Go-Nogo task performance in primate orbitofrontal cortex. J Neurophysiol. 2000;83:1864–1876. doi: 10.1152/jn.2000.83.4.1864.

- 39. Wallis JD, Anderson KC, Miller EK. Single neurons in prefrontal cortex encode abstract rules. Nature. 2001;411:953–956. doi: 10.1038/35082081.

- 40. Watanabe M. Prefrontal unit activity during delayed conditional go/no-go discrimination in the monkey. I. Relation to the stimulus. Brain Res. 1986;382:1–14. doi: 10.1016/0006-8993(86)90104-6.

- 41. Watanabe M. Prefrontal unit activity during associative learning in the monkey. Exp Brain Res. 1990;80:296–309. doi: 10.1007/BF00228157.

- 42. Watanabe M. Frontal units of the monkey coding the associative significance of visual and auditory stimuli. Exp Brain Res. 1992;89:233–247. doi: 10.1007/BF00228241.

- 43. Watanabe M. Reward expectancy in primate prefrontal neurons. Nature. 1996;382:629–632. doi: 10.1038/382629a0.

- 44. Watanabe M, Cromwell HC, Tremblay L, Hollerman JR, Hikosaka K, Schultz W. Behavioral reactions reflecting differential reward expectations in monkeys. Exp Brain Res. 2001;140:511–518. doi: 10.1007/s002210100856.

- 45. White IM, Wise SP. Rule-dependent neuronal activity in the prefrontal cortex. Exp Brain Res. 1999;126:315–335. doi: 10.1007/s002210050740.

- 46. Wilson FAW, O Scalaidhe SP, Goldman-Rakic PS. Dissociation of object and spatial processing domains in primate prefrontal cortex. Science. 1993;260:1955–1958. doi: 10.1126/science.8316836.