Abstract

Objective

A clinical decision support system (CDSS) for empirical antibiotic treatment has the potential to increase appropriate antibiotic use. Before such a system is used on a broad scale, it needs to be tailored to the users’ preferred way of working. We have developed a CDSS for empirical antibiotic treatment in hospitalized adult patients. Here we determined in a usability study whether the developed CDSS needed changes.

Methods

Four prespecified patient cases, based on real life clinical scenarios, were evaluated by 8 medical residents in the study. The “think-aloud” method was used, and sessions were recorded and analyzed afterwards. Usability was assessed by 3 evaluators using an augmented classification scheme, which combines the User Action Framework with severity rating of the usability problems and the assessment of the potential impact of these problems on the final task outcomes.

Results

In total, 51 usability problems were identified, which could be grouped into 29 different categories. Most (n = 17/29) of the problem categories were cosmetic or minor problems. Eighteen of the 29 categories could result in an ordering error. Classification of the problems showed that some problems would get a low priority based on their severity rating but a high priority based on their impact on the task outcome. This effectively provided information to prioritize system redesign efforts.

Conclusion

Usability studies improve the layout and functionality of a CDSS for empirical antibiotic treatment, even after development by a multidisciplinary team.

Introduction

Misuse and overuse of antimicrobial drugs have contributed to the selection of resistant bacteria, which occurs worldwide and has been estimated to contribute to an extra mortality of 10 million people by 2050 [1]. Studies have shown that about 30–50% of antibiotics are prescribed inappropriately [2–4], and empirically started antibiotics are considered appropriate in only around 60% of the prescriptions [5–7]. Guideline-adherent empirical therapy is associated with a relative risk reduction for mortality of 35% and is therefore described as one of the most important objectives of antimicrobial stewardship programs [8, 9]. The use of a clinical decision support system (CDSS) is a promising method to improve guideline-adherent empirical therapy [10–14]. As part of antimicrobial stewardship, a CDSS can play an important role in prescribing antimicrobial drugs appropriately and according to the guidelines.

CDSSs to support appropriate use of antibiotics have been developed since 1980 [15] and have increased in number in recent years. These systems combine relevant individual patient information with a computerized knowledge base to support decision-making in individual patients. By integrating relevant clinical data and evidence-based guidelines, these systems can help physicians to effectively manage all relevant information necessary for decision making in an increasingly complex clinical practice environment [16]. These systems are considered potentially highly valuable tools to improve clinical decision making and thereby quality of healthcare [15, 16]. CDSSs to support appropriate use of antibiotics target a variety of aspects, such as optimizing antimicrobial dosing [17–19] or supporting antimicrobial de-escalation [20, 21]. Most of these systems, however, focus on antimicrobial prescribing [15, 22, 23]. It has been shown that a CDSS can increase the confidence of general practitioners in their antibiotic prescriptions [24]. Systems designed to support antimicrobial prescribing in secondary care tend to target a broader population than those in primary care, which often focus on a specific syndrome presentation in adults [15]. We have developed a CDSS for empirical antibiotic treatment in hospitalized adult patients, which combines relevant patient information with relevant local antibiotic treatment guidelines. Several other CDSSs for empirical antibiotic prescription have been developed. These CDSSs differ in several respects. Some systems use expert rules to predict the pathogen’s susceptibility to antibiotics, using antibiotic susceptibility profiles from patients with similar characteristics [11, 13, 25], but do not take into account, for example, the antibiotic resistance history of the patient of interest or the presence of neutropenia [13, 25], as our system does. Others use causal probabilistic networks to predict the probability of a bacterial infection, the site of infection, and the pathogens and their susceptibility to antibiotics. The CDSS we developed generates antibiotic advice based on relevant guidelines. As in many other CDSSs for empirical antibiotic therapy, input from the physician was needed in our system to generate antibiotic advice [10–13, 25].

CDSSs for empirical antibiotic therapy have shown benefits in terms of improving empirical antibiotic prescribing [10–14]. However, in many of these studies the CDSS was not assessed while or after the end-users, the physicians themselves, used the system [10, 12, 13].

An important issue with the implementation of CDSSs is that they are, until now, not frequently used despite their potential benefits [26]. Studies have shown that poor usability negatively affects CDSS acceptance and effectiveness [27, 28]. Poorly designed CDSSs have a negative impact on the use of these systems and can result in medication errors, potentially compromising patient safety [27, 28]. Therefore, the usability of these systems needs to be well tested before they are implemented in clinical practice. For this purpose we used an augmented classification scheme developed by Khajouei et al. [27] to test the usability of our developed CDSS for empirical antimicrobial therapy. This augmented classification scheme combines the User Action Framework (UAF), a standardized validated classification framework, with severity rating of the usability problems and the assessment of the potential (clinical) impact of these problems on the final task outcomes [27]. To our knowledge, no other studies have assessed and described the usability of a developed CDSS for antimicrobial drug prescription using this systematic framework.

The aim of this study was to detect usability problems in our developed CDSS for empirical antimicrobial therapy, to rate the severity of these problems, and to determine the impact on the task outcome.

Materials and methods

Setting

This study was conducted at the Erasmus MC, University Medical Center in Rotterdam, the Netherlands, a tertiary care center with all medical specialties available. The Erasmus MC uses an electronic health record (EHR) with integrated computerized prescriber order entry (CPOE) which was introduced in December 2001.

Clinical decision support system (CDSS)

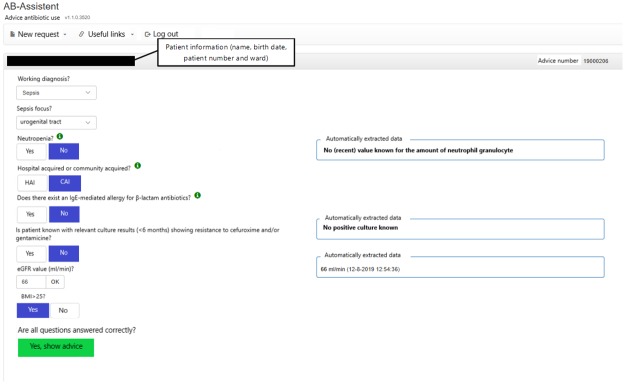

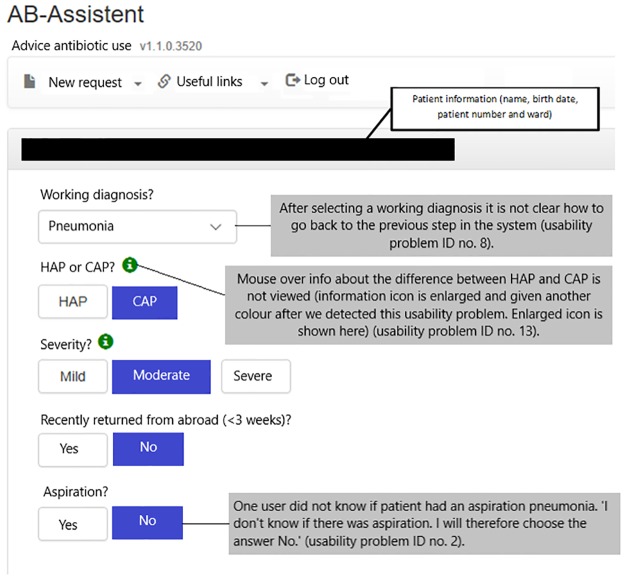

A rule-based CDSS for empirical antibiotic treatment in adult patients was built as a web application by a multidisciplinary team of clinical experts and information and communications technology (ICT) professionals (Fig 1). The system has been developed to give empirical antibiotic treatment advice for the following infections: pneumonia, sepsis, urinary tract infections, meningitis and secondary peritonitis.

Fig 1. The developed CDSS, which combines relevant electronic patient information with relevant local antibiotic treatment guidelines.

The developed CDSS combines relevant electronic patient information derived from the Erasmus MC electronic medical record (such as kidney function, microbiological results from the previous 6 months and presence or absence of neutropenia) with relevant local antibiotic treatment guidelines, which are in line with national guidelines (http://www.swabid.nl). The result is indication-driven advice that is patient specific and in accordance with current guidelines. Relevant patient information was, as far as possible, automatically extracted from our EHR, to which the CDSS was connected. To generate appropriate antibiotic advice, some information that could not be automatically extracted from our hospital information system had to be entered manually by the user (for example the working diagnosis).

Testing the usability of the CDSS

To identify usability problems in the design of a CDSS, different usability evaluation methods can be used. One method is the use of surveys, for example the often used System Usability Scale [29, 30]. This is a validated survey instrument, which consists of 10 items that have to be rated on a 5-point agreement scale [29]. It is a relatively quick and easy instrument to use and it covers areas such as user satisfaction, efficiency of use and system effectiveness [31]. This method has already been used for assessing the usability of a CDSS for antibiotic prescription [24, 32]. We did not use this survey instrument, because it does not provide insight into the details or causes of identified problems. Other frequently used usability evaluation methods are the heuristic evaluation, the cognitive walkthrough and the think aloud method [33]. The first two are expert-based methods, whereas the think aloud method is a user-based method. With the heuristic evaluation, potential usability problems are uncovered using heuristics, which are recognized usability principles [34]. An example of a heuristic is ‘provide help and documentation’. We did not use this method because the heuristics are often described in very general terms, which leaves them open to multiple interpretations and can lead to different outcomes. The results of this method also depend heavily on the skills and experience of the evaluator [33]. With the cognitive walkthrough, a usability expert simulates a new user by walking through the system step by step using typical tasks and details about the user’s background. This is a highly structured approach; however, it is tedious and time consuming, and the results are affected by the task description and the details given about the user’s background [33]. We chose the think aloud method [35], because it is a very rich source of data regarding usability problems. This is a user-based usability evaluation method in which participants verbalize their thoughts while executing a set of specified tasks. It provides detailed insight into usability problems actually experienced by end-users of the system. An added value is that this method provides insight into the causes of the identified problems. The verbal data are used to evaluate the system’s design for usability flaws.

The usability study was performed in 2 steps. During the first step, residents completed tasks using the CDSS; during the second step, the usability of the system was assessed using the data collected during the first step. For the first step, 15 medical and surgical residents were invited by e-mail to participate in the study. Residents were invited because they are the intended users of the CDSS. Selection of residents was based on: I) diversity in discipline, II) prescribing of different antimicrobial drugs, III) years of residency and IV) not being involved in the development or analysis of the CDSS. Eight residents (3 from internal medicine, 2 from surgery, 2 from medical microbiology, 1 from neurology) participated in this study. The residents were on average 31 years old and in their first to sixth year of residency; 4 were female.

The residents were given a short demonstration of the CDSS before the usability test. The CDSS had not been used in the hospital before the study. Four test cases were developed based on real-life clinical scenarios (for a description of these test cases see S1 Table). The test cases were assessed for correctness, completeness and clarity by clinical experts in our study team. During the usability test, participants were asked to complete the tasks of antimicrobial drug prescription while an observer watched, listened with minimum interruption and recorded (audiotaped and videotaped) the entire test session. All participants used the same web browser during the test and completed all four test cases.

Evaluating the usability of the CDSS

During the second step, the usability of the system was assessed using the data collected during the first step. This assessment was done by 3 unblinded evaluators: a physician, a hospital pharmacist experienced in clinical decision support and a researcher in the field of quality. Two of these evaluators assessed the recordings independently of each other. One of these primary evaluators had not been involved in the development of the CDSS. Disagreements in the sets of usability problems were resolved in discussion with the third evaluator. For this assessment, the augmented classification scheme developed by Khajouei et al. was used [27]. This scheme combines the User Action Framework (UAF), a standardized validated classification framework, with severity rating of the usability problems and the assessment of the potential (clinical) impact of these problems on the final task outcomes [27]. Each cycle of the user-system interaction, which contains 4 phases (planning, translation, physical actions and assessment), was assessed. Planning is the phase of the interaction cycle that includes all cognitive actions by users to determine what to do. In the translation phase, users determine how to accomplish the intentions that emerged during the planning phase. The physical actions phase is the phase in which the actions are carried out by manipulating user interface objects. The assessment phase concerns the perception, interpretation and evaluation of the resulting system state by the user. Usability problems were identified using the videotapes of the cases and classified under different subcategories to the most detailed level using the UAF hierarchy [27]. Severity rating of usability problems was performed using Nielsen’s classification [35]. This severity rating is based on the (potential) impact of the problem on the users, the (potential) persistence of the problem and the frequency with which a problem occurred or might occur.

Results

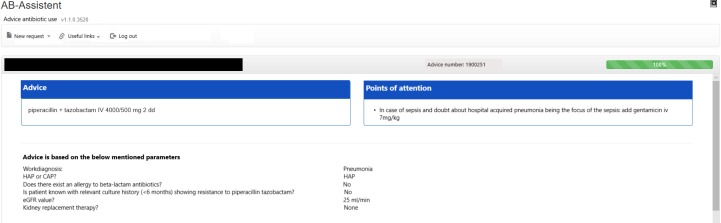

In total, 51 usability problems were identified in the usability evaluation studies, of which 7 in the planning phase (Table 1), 28 in the translation phase (Table 2), 4 in the physical actions phase (Table 3) and 12 in the assessment phase (Table 4). These 51 usability problems could be grouped into 29 different categories. A description and illustration of some of these usability problems can also be found in Figs 2 and 3. Fig 4 shows the final screen with a patient specific antibiotic advice generated.
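As a quick arithmetic check, the distribution of the 51 problems over the four UAF phases can be recomputed from the counts given above. The following is a minimal sketch; the variable names are illustrative and are not part of the CDSS or of the UAF.

```python
# Number of usability problems per UAF phase, as reported in Tables 1 to 4.
problems_per_phase = {
    "planning": 7,
    "translation": 28,
    "physical actions": 4,
    "assessment": 12,
}

total = sum(problems_per_phase.values())

# Share of each phase as a whole-number percentage of all problems.
share_per_phase = {
    phase: round(100 * count / total)
    for phase, count in problems_per_phase.items()
}

for phase, share in share_per_phase.items():
    print(f"{phase}: {problems_per_phase[phase]}/{total} ({share}%)")
```

Because each share is rounded separately, the four percentages need not add up to exactly 100.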

Table 1. Usability problems in the UAF planning phase with their severity and potential effect on task outcome.

| ID No. | Level 1 | Level 2 | Level 3 | Level 4 | Description of usability problem | No. of problems¹ | Severity | Potential effect on task outcome² |
|---|---|---|---|---|---|---|---|---|
| 1 | Planning | User’s model of the system | User’s ability to determine what to do first | | It is not immediately clear for the user which diagnosis has to be chosen in case of urosepsis (two possible pathways). The first information input that had to be entered manually by the user is the diagnosis. This is done by selecting one of the diagnoses in a drop-down menu. For the diagnosis urosepsis the user has two possible pathways, namely the user can select sepsis, with sepsis focus urogenital tract or high urinary tract infection. | 3 | 2 | None (both pathways same result) |
| 2 | Planning | Goal decomposition | User’s ability to determine what to do next | | User has to answer if patient has an aspiration pneumonia. Because user doesn’t know user chooses the answer ‘no’. The option ‘unknown’ does not exist in the system. | 1 | 0 | Wrong antibiotic |
| 3 | Planning | Goal decomposition | User’s ability to determine what to do next | | Information is missing about what has to be filled in when the existence of neutropenia is unknown. | 2 | 2 | Wrong antibiotic |
| 4 | Planning | User’s knowledge of system state, modalities | | | When entering a new patient identification number nothing happens. | 1 | 2 | None |

¹ The number of usability problems with the same classification path, in the interaction of different or the same user with the system.

² The mentioned outcomes are potential and did not have to occur.

Table 2. Usability problems in the UAF translation phase with their severity and potential effect on task outcome.

| ID No. | Level 1 | Level 2 | Level 3 | Level 4 | Description of usability problem | No. of problems¹ | Severity | Potential effect on task outcome² |
|---|---|---|---|---|---|---|---|---|
| 5 | Translation | Existence | Existence of a way | | The user calculates, or even guesses, the BMI with a calculator outside the system. | 8 | 3 | Wrong dosage |
| 6 | Translation | Existence | Existence of a way | | The user calculates the needed dosage of gentamicin with a calculator outside the system. The user mentions that it would be helpful if the dosage is calculated by the system. | 3 | 4 | Wrong dosage |
| 7 | Translation | Existence | Existence of a way | | User has to fill in the weight and body height of the patient. This information is not automatically retrieved from the hospital information system. User expresses the wish that dosage of antibiotic is calculated with automatically retrieved weight and body height. | 1 | 2 | Wrong dosage |
| 8 | Translation | Existence | Existence of a way | | The user has to select a working diagnosis from a drop-down menu. After selecting a working diagnosis it is not clear how to go back to the previous step in the system. | 1 | 3 | None |
| 9 | Translation | Presentation | Perceptual issues | Noticeability | The user does not know (immediately) how to perform a request for another patient. | 4 | 1 | None |
| 10 | Translation | Presentation | Perceptual issues | Noticeability | The user does not use the filter to assemble possible resistant micro-organisms in the resistance overview profile, which shows the culture history (if filter is not being used resistant micro-organisms can be overlooked). | 1 | 0 | Wrong antibiotic |
| 11 | Translation | Presentation | Perceptual issues | Noticeability | User does not view the overview of AST³, and the materials (for example sputum, urine and blood) in the resistance overview profile which shows the culture history. | 2 | 0 | Wrong antibiotic |
| 12 | Translation | Presentation | Perceptual issues | Noticeability | Overview of resistance, which shows the culture history, is not seen immediately. | 1 | 2 | Wrong antibiotic |
| 13 | Translation | Presentation | Perceptual issues | Noticeability | Mouse over info about difference between HAP⁴ and CAP⁴ is not viewed. | 3 | 2 | Wrong antibiotic |
| 14 | Translation | Presentation | Perceptual issues | Noticeability | Mouse over info about severity of pneumonia is not viewed. Argument for severity classification is not correct. | 3 | 2 | Wrong antibiotic |
| 15 | Translation | Presentation | Perceptual issues | Noticeability | User overlooks the information provided about the ESBL⁵ positivity of the patient. | 1 | 0 | Wrong antibiotic |

¹ The number of usability problems with the same classification path, in the interaction of different or the same user with the system.

² The mentioned outcomes are potential and did not have to occur.

³ AST: antibiotic susceptibility tests.

⁴ HAP: hospital acquired pneumonia, CAP: community acquired pneumonia.

⁵ ESBL: extended spectrum betalactamase.

Table 3. Usability problems in the UAF physical action phase with their severity and potential effect on task outcome.

| ID No. | Level 1 | Level 2 | Level 3 | Level 4 | Description of usability problem | No. of problems¹ | Severity | Potential effect on task outcome² |
|---|---|---|---|---|---|---|---|---|
| 16 | Physical actions | Manipulating objects | Physical layout | | To view the complete resistance overview, which shows the culture history, the user has to scroll down in the resistance viewer. The user does not scroll down in this viewer. | 1 | 2 | None |
| 17 | Physical actions | Manipulating objects | Preferences and efficiency | | User wants to review the culture history, when advice is generated, but this is not possible (functionality not available). User thinks this is not convenient, because the user wishes to review this history while consulting an infectious diseases consultant. | 1 | 4³ | None |
| 18 | Physical actions | Manipulating objects | Preferences and efficiency | | Physician mentions that she misses a button (button does not exist in the system). There is only the possibility to answer ‘yes’ or ‘no’ on the question if patient has been abroad. She mentions there has to be a button ‘unknown’. | 1 | 2 | None |
| 19 | Physical actions | Perceiving physical objects | Perceiving objects as they are being manipulated | | The user tries to click through the resistance viewer, which shows the culture history. This is not possible (this functionality is not available in the system). | 1 | 1³ | None |

¹ The number of usability problems with the same classification path, in the interaction of different or the same user with the system.

² The mentioned outcomes are potential and did not have to occur.

³ The difference in severity between these 2 usability problems stands out. The usability problem ‘The user tries to click through the resistance viewer, which is not possible’ was scored as 1 (cosmetic problem) because it has a low impact on the user interaction, occurred only once, and is not persistent.

Table 4. Usability problems in the UAF assessment phase with their severity and potential effect on task outcome.

| ID No. | Level 1 | Level 2 | Level 3 | Level 4 | Description of usability problem | No. of problems¹ | Severity | Potential effect on task outcome² |
|---|---|---|---|---|---|---|---|---|
| 20 | Assessment | Feedback | Content and meaning | Completeness and sufficiency of meaning | The user questions what to do with ‘Advice number’. | 1 | 0 | None |
| 21 | Assessment | Information display | Content and meaning | Error avoidance | The message ‘No relevant cultures known’ is confusing. This message only refers to cultures in this hospital. | 2 | 1 | Wrong antibiotic |
| 22 | Assessment | Information display | Content and meaning | Error avoidance | The user wonders why the resistance overview includes empty fields. | 1 | 3 | Wrong antibiotic |
| 23 | Assessment | Information display | Content and meaning | Error avoidance | The advice does not clearly indicate for what antibiotic the trough level has to be determined. | 2 | 2 | Determining medication dosage for the wrong antibiotic |
| 24 | Assessment | Information display | Content and meaning | Error avoidance | Physician reads essential information accompanying the advice, but prescribes the wrong antibiotic which is contrary to this information. | 1 | 2 | Wrong antibiotic |
| 25 | Assessment | Information display | Content and meaning | Layout and grouping | The final advice already appears earlier under a mouse over (which can be confusing). | 1 | 2 | None |
| 26 | Assessment | Information display | Content and meaning | Layout and grouping | The resistance overview displays the results of a bone marrow biopsy, which confuses the physician. | 1 | 2 | Wrong antibiotic |
| 27 | Assessment | Information display | Existence | Human memory aids | It is not clear whether the resistance viewer also takes resistance into account determined in other hospitals. | 1 | 0 | None |
| 28 | Assessment | Information display | Presentation | Perceptual issues > noticeability | Not clear whether the user realizes the gentamicin dose has to be adjusted. | 1 | 2 | Wrong dosage |
| 29 | Assessment | Information display | Presentation | Perceptual issues > noticeability | The physician does not read the text which states that the gentamicin dose has to be adjusted in case of a too high body mass index. | 1 | 3 | Wrong dosage |

¹ The number of usability problems with the same classification path, in the interaction of different or the same user with the system.

² The mentioned outcomes are potential and did not have to occur.
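The severity and outcome columns of Tables 1 to 4 can be tallied to check the summary figures given in the abstract (17 of 29 categories cosmetic or minor, 18 of 29 with a potential ordering error). The sketch below transcribes the 29 categories by ID. The labels for ratings 0, 2 and 3 are assumed from Nielsen's five-point severity scale, since only ratings 1 (cosmetic) and 4 (catastrophe) are named explicitly in this paper, and the data layout is illustrative.

```python
from collections import Counter

# Assumed labels, following Nielsen's five-point severity scale.
SEVERITY_LABELS = {0: "no problem", 1: "cosmetic", 2: "minor", 3: "major", 4: "catastrophe"}

# (ID, severity, potential outcome); None means no effect on the task outcome.
CATEGORIES = [
    # Table 1, planning
    (1, 2, None), (2, 0, "wrong antibiotic"), (3, 2, "wrong antibiotic"), (4, 2, None),
    # Table 2, translation
    (5, 3, "wrong dosage"), (6, 4, "wrong dosage"), (7, 2, "wrong dosage"), (8, 3, None),
    (9, 1, None), (10, 0, "wrong antibiotic"), (11, 0, "wrong antibiotic"),
    (12, 2, "wrong antibiotic"), (13, 2, "wrong antibiotic"),
    (14, 2, "wrong antibiotic"), (15, 0, "wrong antibiotic"),
    # Table 3, physical actions
    (16, 2, None), (17, 4, None), (18, 2, None), (19, 1, None),
    # Table 4, assessment
    (20, 0, None), (21, 1, "wrong antibiotic"), (22, 3, "wrong antibiotic"),
    (23, 2, "dosage for wrong antibiotic"), (24, 2, "wrong antibiotic"), (25, 2, None),
    (26, 2, "wrong antibiotic"), (27, 0, None), (28, 2, "wrong dosage"), (29, 3, "wrong dosage"),
]

# Number of categories per severity rating.
severity_counts = Counter(severity for _, severity, _ in CATEGORIES)
cosmetic_or_minor = severity_counts[1] + severity_counts[2]

# Categories whose potential outcome is an ordering error of some kind.
with_potential_error = sum(1 for _, _, outcome in CATEGORIES if outcome is not None)

# Categories rated 2 or lower that could nevertheless lead to an ordering error.
low_severity_with_error = sum(
    1 for _, severity, outcome in CATEGORIES if severity <= 2 and outcome is not None
)

for rating in sorted(SEVERITY_LABELS):
    print(f"severity {rating} ({SEVERITY_LABELS[rating]}): {severity_counts[rating]}")
print(f"cosmetic or minor: {cosmetic_or_minor}/{len(CATEGORIES)}")
print(f"potential ordering error: {with_potential_error}/{len(CATEGORIES)}")
print(f"severity <= 2 with potential ordering error: {low_severity_with_error}")
```

The last count makes the point of the augmented scheme concrete: many categories that a severity-only ranking would place near the bottom still carry a potential ordering error.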

Fig 2. Some usability problems in the CDSS for empirical antibiotic therapy.

Fig 3. The resistance viewer in the CDSS for empirical antibiotic therapy and illustration of 2 usability problems.

Fig 4. Final screen of the CDSS for empirical antibiotic therapy with a patient specific antibiotic advice.

Planning

Seven (14%) of the identified usability problems were found in the planning phase of user-system interaction (Table 1). The usability problems in this phase were mainly caused by the user’s difficulties in choosing the correct diagnosis (two possible pathways), lack of a third option such as an ‘unknown’ button, and perceived lack of information (user is not provided with information about the system state, when entering a new patient identification number fails).

Classification of the problems with the augmented scheme showed that some of the problems would get a low priority based on their severity rating, but got a high priority for their impact on the task outcome. For example, the severity of the usability problems leading to the prescription of wrong antibiotics was rated as minor or no problem while the impact of prescribing the wrong antibiotic can be high.
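To illustrate how the two criteria can lead to different priorities, the sketch below ranks five of the problems from Tables 1 to 3 in two ways: by severity alone, and by potential impact on the task outcome first with severity as a tie-breaker. The second ordering rule is a hypothetical example chosen for illustration; the augmented scheme itself does not prescribe a numeric formula, and the class and field names are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Problem:
    id_no: int
    severity: int            # severity rating from 0 to 4
    outcome: Optional[str]   # potential effect on the task outcome, None if none


# A subset of the problems in Tables 1 to 3, in ID order.
PROBLEMS = [
    Problem(2, 0, "wrong antibiotic"),
    Problem(3, 2, "wrong antibiotic"),
    Problem(6, 4, "wrong dosage"),
    Problem(9, 1, None),
    Problem(17, 4, None),
]

# Ranking 1: highest severity first (ties keep their original order).
by_severity = sorted(PROBLEMS, key=lambda p: -p.severity)

# Ranking 2 (hypothetical rule): problems that could cause an ordering error
# come first; within each group, highest severity first.
by_impact_then_severity = sorted(PROBLEMS, key=lambda p: (p.outcome is None, -p.severity))

print([p.id_no for p in by_severity])
print([p.id_no for p in by_impact_then_severity])
```

Under the severity-only ranking, problem 2 (no 'unknown' option for aspiration pneumonia) ends up last, whereas the impact-first ranking moves it ahead of problems 9 and 17, which cannot lead to a wrong order.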

Translation

Twenty-eight (55%) of the usability problems concerned the translation phase (Table 2). The usability problems in this phase were mainly caused by the fact that the mouse over functions were not noticed or not used correctly, and that extra patient information (culture results) was not noticed by users. Also, the required dose of gentamicin and the BMI were calculated with a calculator outside the system or guessed, which could lead to incorrect dosing.

Most usability problems had low severity ratings. Only one usability catastrophe (severity rating of 4) was observed in this phase: when the gentamicin dose had to be calculated, users looked for a calculator, which was not available in the CDSS. The users expressed the need for a calculator. Not only did this usability problem have a high severity rating of 4, but its potential impact on the task outcome is also high.

Physical actions

Four (8%) of the usability problems were encountered in the physical actions phase (Table 3). One of these usability problems was caused by the layout of an object, in this case the scroll-down button that had to be used. Another usability problem in this phase was the lack of user control over screen objects as these objects were being manipulated. For example, the user tried to click through the resistance viewer, but this was not possible. Two usability problems in this phase concerned the failure of the system to meet specific preferences of users for performing physical actions. One of these problems was the inability to review the culture history once the CDSS had generated an advice. This problem was rated as severity 4, although it would not lead to a wrong medication selection. The user indicated that this problem had a great impact on him, because he wanted to review the culture history while consulting an infectious disease specialist after the advice had been generated.

Assessment

In total 12 (24%) of the 51 identified usability problems were classified in the assessment phase (Table 4). These problems concerned the existence, presentation, content and meaning of system feedback about the course of the user-interaction and the display of information resulting from users’ actions.

Not all problems that could influence the outcome were highly severe: three of the problems potentially resulting in wrong antibiotic selection were assigned severity 2, and one was assigned severity 1. The UAF classification showed that 4 (33%) of the problems concerning the assessment phase of interaction were caused by absent or unclear information displayed after the user’s action to avoid errors. The remaining eight (67%) problems in this phase were caused by the absence, poor presentation or poor noticeability of information or feedback displayed after the users’ actions.

A striking general finding was that four users indicated that they would not indiscriminately follow the advice given, because they were aware that the CDSS was recently developed and might contain errors.

Discussion

With the augmented scheme for classifying and prioritizing usability problems described by Khajouei et al. (2011) we found 51 usability problems in different phases of the user-system interaction. Most usability problems were found in the translation phase (55%). Testing the usability of a CDSS with this scheme proved to be a simple but effective way to identify usability problems and prioritize system redesign efforts. With the augmented UAF, usability problems that had not been foreseen were identified. The frequency of the problems, their severity and their potential impact on the task outcome were also determined. Assessing the usability of a CDSS is important to increase the chance of its adoption.

This study is the first to report usability testing of a CDSS for empirical antibiotic treatment in adult patients using the systematic framework developed by Khajouei et al. [27]. A strength of this study is that we used the standardized and validated UAF, augmented with a severity rating based on Nielsen’s classification and an assessment of the potential effect of the problem on the task outcome. This approach enables existing usability problems to be reported in an accurate, complete and consistent way, which is needed for guiding and prioritizing system redesign efforts. Some limitations of this study should also be recognized. Firstly, we could have missed usability problems because of the small group of participants. However, the group of 8 participants was representative, being composed of residents from different disciplines and different years of residency. In addition, about 80% of usability problems can be discovered with only 8 participants, and the more severe a problem is, the more likely it is to be uncovered within the first few subjects [36–38]. Studies to determine the optimal number of participants for a usability study have shown that the complexity of the study itself is an important factor to consider [37, 38]. Because the tasks the user had to perform in our study were simple and straightforward, we think that 8 participants were enough to detect most usability problems. Another limitation is that participants may have modified their behavior and reported thoughts in response to their awareness of being observed during the usability test. This so-called Hawthorne effect is inherent to simulated usability studies and cannot be ruled out [39]. Because all participants were residents, lack of experience could have contributed to the existence of certain usability problems. These problems will probably not be experienced by medical specialists. However, given that residents and specialists without much experience in antibiotic prescribing will be the main end-users of the CDSS, these problems are important to discover and to take into account in the system redesign.
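The relation between the number of participants and the share of problems discovered is often described in the usability literature with a simple cumulative model: if each participant independently uncovers a given problem with probability p, the expected share found after n participants is 1 − (1 − p)^n. The sketch below applies this model. The value p = 0.18 is an illustrative assumption chosen so that 8 participants yield roughly the 80% quoted above; it was not measured in this study, and the function name is hypothetical.

```python
def expected_share_found(p: float, n: int) -> float:
    """Expected share of problems found by n participants.

    Assumes every participant independently detects any given problem
    with the same probability p (a simplifying assumption).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Assumed per-participant detection probability (illustrative only).
ASSUMED_P = 0.18

for participants in (1, 3, 5, 8, 15):
    share = expected_share_found(ASSUMED_P, participants)
    print(f"{participants:2d} participants: {share:.0%} of problems expected")
```

Under this assumption, 8 participants give an expected share of about 80%. Problems with a lower detection probability, for instance those tied to rare clinical situations, would need more participants to surface.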

In this usability study participants completed tasks of antimicrobial drug prescription using four prespecified test cases which were based on real-life clinical scenarios. In a setting with real patients, the physician knows his or her patients and can answer certain questions about a patient, such as whether the patient has neutropenia, better than with a prespecified case. It could therefore be that certain usability problems will not exist, or will occur less often, in a setting with real patients who are known to the user. However, this only applies to usability problems where continuing in the system is not possible without knowing certain information (for example neutropenia or whether the patient has been abroad). In addition, other usability problems could be revealed when this CDSS is used in real clinical conditions.

In this study we found that some of the residents did not follow the advice given by the CDSS without thought. They were aware that the CDSS was recently developed and might contain errors. We also found that time had been invested in developing functionalities that were not (optimally) used. An example is the mouse-over information presented alongside certain questions, which provides relevant information to the user. Our study showed that these help texts were often not used, which prompted us to enlarge the information icon that makes the help text appear when the cursor is moved over it. In addition, simple improvements such as the introduction of a calculator and the automatic retrieval of patient information, such as weight and body height, from the hospital information system are worthwhile investments. Another simple modification we made to the CDSS is the introduction of a new option to review the culture history in the final screen, where the antibiotic advice is generated. With these alterations to the system design we made the CDSS more specific to users’ needs. For optimal system usability, iterative usability evaluation during the development and implementation of a CDSS is important [28, 40, 41].

Conclusion and recommendations

Our study revealed several usability problems in different phases of the interaction between the intended user and a CDSS developed for empirical antibiotic treatment, as well as the severity of these problems and their impact on the task outcome. It shows that even though the CDSS was developed by a multidisciplinary team of clinical experts and ICT professionals, many unforeseen usability problems can exist. Assessing usability before CDSS implementation is recommended for improving CDSS adoption, effectiveness and safety. When designing a CDSS, the following elements should be considered to avoid usability problems:

When a question has to be answered with yes or no, also provide the answer ‘unknown’. If answering with yes or no is necessary for the system to generate an advice, provide users with this information.

Make it easy to do right by providing calculators for everything that has to be calculated (the recommended dosage of an antibiotic drug, BMI etc.).

Retrieve as much information as possible automatically from the hospital information system.

Pay attention to the noticeability of relevant information (for example mouse over info with relevant explanatory information/definitions, resistance overview with information that is relevant for the final antibiotic advice).

Provide users with information that is clear and as specific as possible and avoid reporting of irrelevant, confusing information.
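The first two recommendations can be illustrated with a short sketch. It is a hypothetical example and not the implementation of the CDSS evaluated here: the names `Answer`, `bmi` and `check_required_answer` are assumptions introduced for illustration, and the wording of the message is invented.

```python
from enum import Enum
from typing import Optional


class Answer(Enum):
    """Three-valued answer so that users are never forced to guess."""
    YES = "yes"
    NO = "no"
    UNKNOWN = "unknown"


def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index in kg/m^2, computed for the user instead of by the user."""
    if weight_kg <= 0 or height_cm <= 0:
        raise ValueError("weight and height must be positive")
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


def check_required_answer(question: str, answer: Answer, required: bool) -> Optional[str]:
    """Return a message for the user when an advice cannot be generated
    without a yes/no answer; return None when the system can continue."""
    if required and answer is Answer.UNKNOWN:
        return (f"'{question}' must be answered with yes or no "
                "before an advice can be generated.")
    return None


if __name__ == "__main__":
    print(round(bmi(70, 175), 1))
    print(check_required_answer("Does the patient have neutropenia?",
                                Answer.UNKNOWN, required=True))
```

Keeping ‘unknown’ as an explicit value, rather than leaving the field empty, lets the system tell a deliberate ‘unknown’ apart from a skipped question and tell the user why a yes or no is needed.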

Supporting information

(DOCX)

Acknowledgments

We thank Saloua Akoudad, Ga-Lai Chong, Ante Prkic, Linda van Schinkel, George Sips, Myrte Tielemans, Michiel Voute and Nick Wlazlo for their cooperation in this study. We also thank Conrad van der Hoeven, Arjen van Vliet, Bart Dannis, Michiel Smet and Marius Vogel, who helped develop the CDSS algorithm. Finally, we would like to express our gratitude to Annelies Blom, who helped us with the equipment used to make the video and audio recordings.

Data Availability

All relevant data are within the manuscript and its Supporting Information files.

Funding Statement

This work was supported by the Netherlands Organization for Health Research and Development (ZonMW) [grant number 836021021] to AV. The funding source had no involvement in study design, in the collection, analysis and interpretation of the data or in writing this report. Also there was no involvement in the decision to submit the article for publication.

References

- 1. O'Neill J. Review on Antimicrobial Resistance. Antimicrobial Resistance: Tackling a Crisis for the Health and Wealth of Nations. 2014 [cited 2018 February 2]. https://amr-review.org/sites/default/files/AMR%20Review%20Paper%20-%20Tackling%20a%20crisis%20for%20the%20health%20and%20wealth%20of%20nations_1.pdf.

- 2. Camins BC, King MD, Wells JB, Googe HL, Patel M, Kourbatova EV, et al. Impact of an antimicrobial utilization program on antimicrobial use at a large teaching hospital: a randomized controlled trial. Infect Control Hosp Epidemiol. 2009;30(10):931–8. doi:10.1086/605924.

- 3. Ingram PR, Seet JM, Budgeon CA, Murray R. Point-prevalence study of inappropriate antibiotic use at a tertiary Australian hospital. Intern Med J. 2012;42(6):719–21. doi:10.1111/j.1445-5994.2012.02809.x.

- 4. Akhloufi H, Streefkerk RH, Melles DC, de Steenwinkel JE, Schurink CA, Verkooijen RP, et al. Point prevalence of appropriate antimicrobial therapy in a Dutch university hospital. Eur J Clin Microbiol Infect Dis. 2015;34(8):1631–7. doi:10.1007/s10096-015-2398-6.

- 5. Kerremans JJ, Verbrugh HA, Vos MC. Frequency of microbiologically correct antibiotic therapy increased by infectious disease consultations and microbiological results. J Clin Microbiol. 2012;50(6):2066–8. doi:10.1128/JCM.06051-11.

- 6. Willemsen I, Groenhuijzen A, Bogaers D, Stuurman A, van Keulen P, Kluytmans J. Appropriateness of antimicrobial therapy measured by repeated prevalence surveys. Antimicrob Agents Chemother. 2007;51(3):864–7. doi:10.1128/AAC.00994-06.

- 7. Kerremans JJ, Verboom P, Stijnen T, Hakkaart-van Roijen L, Goessens W, Verbrugh HA, et al. Rapid identification and antimicrobial susceptibility testing reduce antibiotic use and accelerate pathogen-directed antibiotic use. J Antimicrob Chemother. 2008;61(2):428–35. doi:10.1093/jac/dkm497.

- 8. Schuts EC, Hulscher ME, Mouton JW, Verduin CM, Stuart JW, Overdiek HW, et al. Current evidence on hospital antimicrobial stewardship objectives: a systematic review and meta-analysis. The Lancet infectious diseases. 2016;16(7):847–56. Epub 2016/03/08. doi:10.1016/S1473-3099(16)00065-7.

- 9. Kallen MC, Prins JM. A Systematic Review of Quality Indicators for Appropriate Antibiotic Use in Hospitalized Adult Patients. Infect Dis Rep. 2017;9(1):6821. doi:10.4081/idr.2017.6821.

- 10. Leibovici L, Gitelman V, Yehezkelli Y, Poznanski O, Milo G, Paul M, et al. Improving empirical antibiotic treatment: prospective, nonintervention testing of a decision support system. J Intern Med. 1997;242(5):395–400. doi:10.1046/j.1365-2796.1997.00232.x.

- 11. Evans RS, Classen DC, Pestotnik SL, Lundsgaarde HP, Burke JP. Improving empiric antibiotic selection using computer decision support. Arch Intern Med. 1994;154(8):878–84.

- 12. Warner H Jr., Reimer L, Suvinier D, Li L, Nelson M. Modeling empiric antibiotic therapy evaluation of QID. Proc AMIA Symp. 1999:440–4.

- 13. Mullett CJ, Thomas JG, Smith CL, Sarwari AR, Khakoo RA. Computerized antimicrobial decision support: an offline evaluation of a database-driven empiric antimicrobial guidance program in hospitalized patients with a bloodstream infection. Int J Med Inform. 2004;73(5):455–60. doi:10.1016/j.ijmedinf.2004.04.002.

- 14. Paul M, Andreassen S, Tacconelli E, Nielsen AD, Almanasreh N, Frank U, et al. Improving empirical antibiotic treatment using TREAT, a computerized decision support system: cluster randomized trial. J Antimicrob Chemother. 2006;58(6):1238–45. doi:10.1093/jac/dkl372.

- 15. Rawson TM, Moore LSP, Hernandez B, Charani E, Castro-Sanchez E, Herrero P, et al. A systematic review of clinical decision support systems for antimicrobial management: are we failing to investigate these interventions appropriately? Clin Microbiol Infect. 2017;23(8):524–32. doi:10.1016/j.cmi.2017.02.028.

- 16. James BC. Making it easy to do it right. N Engl J Med. 2001;345(13):991–3. doi:10.1056/NEJM200109273451311.

- 17. Vincent WR, Martin CA, Winstead PS, Smith KM, Gatz J, Lewis DA. Effects of a pharmacist-to-dose computerized request on promptness of antimicrobial therapy. J Am Med Inform Assoc. 2009;16(1):47–53. doi:10.1197/jamia.M2559.

- 18. Phillips IE, Nelsen C, Peterson J, Sullivan TM, Waitman LR. Improving aminoglycoside dosing through computerized clinical decision support and pharmacy therapeutic monitoring systems. AMIA Annu Symp Proc. 2008:1093.

- 19. Diasinos N, Baysari M, Kumar S, Day RO. Does the availability of therapeutic drug monitoring, computerised dose recommendation and prescribing decision support services promote compliance with national gentamicin prescribing guidelines? Intern Med J. 2015;45(1):55–62. doi:10.1111/imj.12627.

- 20. Beaulieu J, Fortin R, Palmisciano L, Elsaid K, Collins C. Enhancing clinical decision support to improve appropriate antimicrobial use. Am J Health Syst Pharm. 2013;70(13):1103–4, 13. doi:10.2146/ajhp120589.

- 21. Schulz L, Osterby K, Fox B. The use of best practice alerts with the development of an antimicrobial stewardship navigator to promote antibiotic de-escalation in the electronic medical record. Infect Control Hosp Epidemiol. 2013;34(12):1259–65. doi:10.1086/673977.

- 22. Rodrigues JF, Casado A, Palos C, Santos C, Duarte A, Fernandez-Llimos F. A computer-assisted prescription system to improve antibacterial surgical prophylaxis. Infect Control Hosp Epidemiol. 2012;33(4):435–7. doi:10.1086/664923.

- 23. Filice GA, Drekonja DM, Thurn JR, Rector TS, Hamann GM, Masoud BT, et al. Use of a computer decision support system and antimicrobial therapy appropriateness. Infect Control Hosp Epidemiol. 2013;34(6):558–65. doi:10.1086/670627.

- 24. Tsopra R, Sedki K, Courtine M, Falcoff H, De Beco A, Madar R, et al. Helping GPs to extrapolate guideline recommendations to patients for whom there are no explicit recommendations, through the visualization of drug properties. The example of AntibioHelp(R) in bacterial diseases. J Am Med Inform Assoc. 2019. doi:10.1093/jamia/ocz057.

- 25. Mullett CJ, Thomas JG. Database-driven computerized antibiotic decision support: novel use of expert antibiotic susceptibility rules embedded in a pathogen-antibiotic logic matrix. AMIA Annu Symp Proc. 2003:480–3.

- 26. Khairat S, Marc D, Crosby W, Al Sanousi A. Reasons For Physicians Not Adopting Clinical Decision Support Systems: Critical Analysis. JMIR Med Inform. 2018;6(2):e24. doi:10.2196/medinform.8912.

- 27. Khajouei R, Peute LW, Hasman A, Jaspers MW. Classification and prioritization of usability problems using an augmented classification scheme. J Biomed Inform. 2011;44(6):948–57. doi:10.1016/j.jbi.2011.07.002.

- 28. Yen PY, Bakken S. Review of health information technology usability study methodologies. J Am Med Inform Assoc. 2012;19(3):413–22. doi:10.1136/amiajnl-2010-000020.

- 29. Jordan P.W. T B, Weerdmeester B.A., McClelland I.L. Usability Evaluation in Industry. London: Taylor & Francis; 1996.

- 30. Sauro J. L JR. Quantifying the User Experience: Practical Statistics for User Research. Amsterdam: Elsevier/Morgan Kaufmann; 2012.

- 31. Bangor A, Kortum PT, Miller JT. An Empirical Evaluation of the System Usability Scale. International Journal of Human–Computer Interaction. 2008;24(6):574–94. doi:10.1080/10447310802205776.

- 32. Tsopra R, Jais JP, Venot A, Duclos C. Comparison of two kinds of interface, based on guided navigation or usability principles, for improving the adoption of computerized decision support systems: application to the prescription of antibiotics. J Am Med Inform Assoc. 2014;21(e1):e107–16. doi:10.1136/amiajnl-2013-002042.

- 33. Jaspers MW. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. Int J Med Inform. 2009;78(5):340–53. doi:10.1016/j.ijmedinf.2008.10.002.

- 34. Nielsen J. Heuristic Evaluation. New York: Wiley; 1994.

- 35. Nielsen J. Usability Engineering. Morgan Kaufmann Publishers Inc; 1993.

- 36. Nielsen J. How Many Test Users in a Usability Study? 2012. https://www.nngroup.com/articles/how-many-test-users/.

- 37. Virzi RA. Refining the Test Phase of Usability Evaluation: How Many Subjects Is Enough? Human Factors. 1992;34(4):457–68. doi:10.1177/001872089203400407.

- 38. Macefield R. How To Specify the Participant Group Size for Usability Studies: A Practitioner's Guide. J Usability Stud. 2009;5.

- 39. McCambridge J, Witton J, Elbourne DR. Systematic review of the Hawthorne effect: new concepts are needed to study research participation effects. Journal of clinical epidemiology. 2014;67(3):267–77. doi:10.1016/j.jclinepi.2013.08.015.

- 40. R J. Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests. New York, USA: John Wiley & Sons; 1994.

- 41. Litvin CB, Ornstein SM, Wessell AM, Nemeth LS, Nietert PJ. Adoption of a clinical decision support system to promote judicious use of antibiotics for acute respiratory infections in primary care. International Journal of Medical Informatics. 2012;81(8):521–6. doi:10.1016/j.ijmedinf.2012.03.002.
