Abstract

The complete assessment of vision-related abilities should consider visual function (the performance of components of the visual system) and functional vision (visual task-related ability). Assessment methods are highly dependent upon individual characteristics (e.g. the presence and type of visual impairment). Typical visual function tests assess factors such as visual acuity, contrast sensitivity, color, depth, and motion perception. These properties each represent an aspect of visual function and may impact an individual’s level of functional vision. The goal of any functional vision assessment should be to measure the visual task-related ability under real-world scenarios. Recent technological advancements such as virtual reality (VR) can provide new opportunities to improve traditional vision assessments by providing novel objective and ecologically valid measurements of performance, and allowing for the investigation of their neural basis. In this review, visual function and functional vision evaluation approaches are discussed in the context of traditional and novel acquisition methods.

Introduction

The complete assessment of an individual’s vision-related abilities requires the consideration and characterization of both visual function and functional vision, particularly in the case of visual impairment1. Visual function describes how well the eyes and basic visual system can detect a target stimulus. By varying a single parameter at a time (for example, the size of the target), testing is typically carried out in a repeated fashion under controlled testing conditions until a threshold of performance is obtained. In contrast, functional vision refers to how well an individual performs while interacting with the visual environment. That is to say, how their vision is used in everyday activities. Characterizing functional vision involves the assessment of multiple and varying parameters captured under complex, real-life conditions. In this instance, how well an individual is able to sustain performance is a crucial factor1. The concepts of visual function and functional vision are certainly linked and assessing one can often provide useful information regarding the other. For example, if an individual shows evidence of impaired visual functioning (e.g. reduced acuity), one may predict potential impairments with certain visual tasks (e.g. reading) and possible strategies to help remediate the situation (such as magnification or large print). Observing a patient’s functional visual behaviors in the environment (e.g. trouble descending a flight of stairs) can signal which test of visual function should be carried out in the formal clinical setting (e.g. contrast sensitivity, visual field perimetry), or help identify modifications needed to ensure accurate assessment.

It is important to recognize, however, that there are situations where an individual’s visual function may be assessed as “within normal range”, yet their functional vision is still clearly impaired. This disconnect is often evident when evaluating visual performance in pediatric and adolescent populations with early developmental brain damage, particularly in the case of brain based visual impairment such as cerebral (or cortical) visual impairment (CVI)2. Without comprehensive assessment and careful consideration of both visual function and functional vision, individuals with visual cognitive and higher order perceptual impairments may not be able to secure needed services when determinations are based on visual function measures alone, such as visual acuity3. Furthermore, accurately characterizing visual function and functional vision performance is crucial for generating an appropriate management plan that best suits the needs and developmental goals of an individual4.

In this review, we present a summary of behavioral tests employed for assessing visual function and functional vision, including traditional techniques as well as innovative approaches incorporating virtual reality (VR) environments and eye tracking methodologies. The potential utility of VR technology combined with modern brain imaging techniques for the purposes of identifying the neural correlates related to visual performance will also be discussed.

Behavioral Assessment of Visual Function

The visual system comprises multiple interdependent structures and pathways that are highly functionally specialized and follow a general hierarchical organization5,6. This specialization begins as early as the retina, where light sensitive photoreceptor and ganglion cells selectively respond to different spatial and temporal properties of light. From the eyes, visual information passes via the optic nerves to subcortical structures (e.g. the lateral geniculate nucleus) and then to the visual cortex, where multiple specialized brain areas preferentially process different features of the captured image. The functioning of these structures and pathways may be selectively affected across the lifespan of an individual as a result of different developmental trajectories, degeneration, and vulnerabilities7–9. The complex organization of the visual system means that in order to characterize visual performance in a comprehensive manner, multiple tests must be carried out to assess the functioning of different visual structures and pathways. Practical testing limitations related to instrumentation and the demands placed on a participant, coupled with challenges associated with testing unique clinical populations, have led to an evolution and refinement of the assessment methods employed. In the first section of this review, we consider behavioral methods and test designs that have been developed to measure what we consider pillars of visual function. These visual function outcomes include: visual acuity, contrast sensitivity, color, depth, and motion. Note that visual fields (measured by formal perimetry testing) are also critically important in the assessment of visual function. However, a discussion of visual field function is beyond the scope of this review and the reader is directed to a number of excellent reviews on this topic for further discussion10,11.

1. Visual Acuity

Visual acuity estimates the level of finest detail that can be detected or identified, and remains a very important measure of visual function in both clinical evaluation and research. The classical method of measuring acuity in subjects who are able to report what they perceive is with acuity charts (such as the familiar Snellen acuity chart; see figure 1 left) composed of high contrast black targets (i.e. optotypes such as letters) presented on a white background. By convention, acuity is reported in units relative to a visually healthy observer’s performance at 6 meters (approximately 20 feet). At this distance, normal visual acuity is reported as 6/6 (or 20/20) indicating the subject can resolve optotypes that subtend 5 arcmin on the viewer’s retina with lines or gaps that subtend 1 arcmin. This translates to a minimum angle of resolution (MAR) of 1.0, the logarithm of which (logMAR) is 0.0. All of these reporting standards are used interchangeably. According to the World Health Organization12, moderate visual impairment (or moderate low vision) is defined as best corrected visual acuity in the best seeing eye worse than 6/18, severe visual impairment is acuity worse than 6/60, and profound visual impairment (or blindness) is defined with presenting visual acuity worse than 3/6013.
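The relationship between these reporting conventions can be made concrete with a short worked example. The following is a minimal sketch, assuming that the Snellen fraction is given as test distance over letter distance and that an optotype subtends five times the MAR, as described above. The function names are hypothetical and chosen for illustration only, and the category labels simply restate the cut-offs quoted in this section rather than a complete classification scheme.

```python
import math


def snellen_to_mar(test_distance: float, letter_distance: float) -> float:
    """Return the minimum angle of resolution (arcmin) for a Snellen fraction.

    For 6/18, test_distance is 6 and letter_distance is 18, giving MAR = 3.
    """
    if test_distance <= 0 or letter_distance <= 0:
        raise ValueError("both Snellen terms must be positive")
    return letter_distance / test_distance


def snellen_to_logmar(test_distance: float, letter_distance: float) -> float:
    """Return logMAR, the base-10 logarithm of the MAR in arcmin."""
    return math.log10(snellen_to_mar(test_distance, letter_distance))


def optotype_height_mm(mar_arcmin: float, viewing_distance_m: float) -> float:
    """Height of an optotype subtending 5 x MAR arcmin at the viewing distance."""
    angle_rad = math.radians(5.0 * mar_arcmin / 60.0)
    return 2.0 * viewing_distance_m * 1000.0 * math.tan(angle_rad / 2.0)


def who_category(test_distance: float, letter_distance: float) -> str:
    """Apply the cut-offs quoted in the text (worse than 6/18, 6/60, 3/60)."""
    mar = snellen_to_mar(test_distance, letter_distance)
    if mar > 60 / 3:
        return "profound"
    if mar > 60 / 6:
        return "severe"
    if mar > 18 / 6:
        return "moderate"
    return "better than moderate impairment"


print(snellen_to_logmar(6, 6))     # logMAR for 6/6
print(snellen_to_logmar(20, 200))  # logMAR for 20/200
print(who_category(6, 24))         # worse than 6/18 but not worse than 6/60
```

Because "worse than" is implemented as a strict inequality on the MAR, an acuity of exactly 6/18 is not classified as moderate impairment in this sketch.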

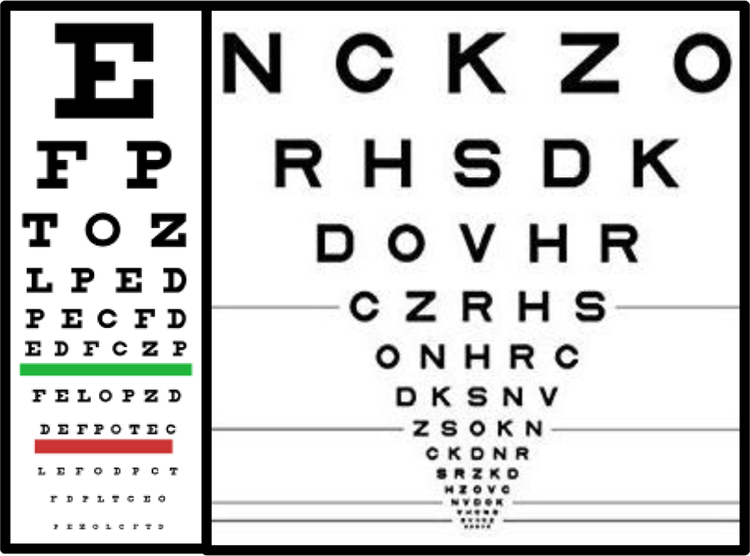

Figure 1.

Example charts and approaches used in measuring visual acuity. (Left) Classic Snellen letter chart. (Right) Early Treatment of Diabetic Retinopathy Study (ETDRS) chart. Note how this chart uses 5 letters per line with decreasing size and with equal logarithmic spacing of the rows and letters.

Many types of letter acuity charts have been designed for the purposes of testing visual acuity (for review see14). The Early Treatment of Diabetic Retinopathy Study (ETDRS) chart15 has emerged as the method of choice for visual acuity testing (see figure 1 right). This chart has a log-scaled layout with 5 letters per line, as opposed to the standard layout of the Snellen chart. Typically, a subset of 10 letters from the English alphabet is used, approximately matched for difficulty16, to discourage guessing and improve test precision17. For participants who do not know the English alphabet, a subset of letters, numbers, symbols of differing orientation (e.g. Landolt Cs or Tumbling Es), or pictograms18 may be employed, allowing the subject to name the symbols or match the target to a template. For subjects who cannot perform matching or identification tasks (e.g. infants), responses may be inferred from eye movements (such as Preferential Looking19), pupillary responses, the optokinetic nystagmus reflex, or electroencephalography based recordings such as a visual evoked potential (VEP)20. It is worth noting, however, that these alternative tests may speak more to detection than to resolution ability with regard to acuity. Nonetheless, they can all be important tools for the early detection of visual acuity impairments in infants.

A range of heuristics has been developed for determining scoring and termination criteria for letter charts, which can lead to different acuity specifications21. Nevertheless, there is fairly good agreement in acuity estimates with different methods, and many studies have shown that best-corrected visual acuity increases monotonically from birth up to around 10 years of age (for review see22) and decreases monotonically after around the age of 60 (for review see23).

2. Contrast Sensitivity

While visual acuity measures the smallest target size that can be identified, testing is generally carried out using full black targets presented on a white high contrast background. However, the natural environment is composed of objects at multiple sizes and intensities. Differences in image intensity are quantified by contrast (typically the difference between the lightest and darkest features in an image divided by the mean intensity), and differences in size are quantified by spatial frequency (the reciprocal of the retinal distance between light or dark image regions in degrees of visual angle) (see figure 2). Contrast sensitivity is the reciprocal of the smallest luminance difference required for target identification, and is highly dependent on spatial frequency24. This relationship between spatial frequency and contrast sensitivity is called the Contrast Sensitivity Function (CSF). It is important to note that contrast sensitivity has been shown to be a better measure than visual acuity in terms of predicting performance on activities of daily living25 and the detection of real objects26.
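The quantities defined above can be illustrated with a few lines of arithmetic. The sketch below uses Michelson contrast, a widely used definition that divides the luminance difference by the sum of the maximum and minimum luminance (that is, by twice their midpoint), so it differs by a factor of two from a difference divided by the mean. This choice, along with the function names, is an assumption made for illustration and is not taken from the studies cited here.

```python
def michelson_contrast(l_max: float, l_min: float) -> float:
    """Contrast of a pattern from its brightest and darkest luminance values."""
    if l_min < 0 or l_max <= 0 or l_max < l_min:
        raise ValueError("expected 0 <= l_min <= l_max with l_max > 0")
    return (l_max - l_min) / (l_max + l_min)


def contrast_sensitivity(threshold_contrast: float) -> float:
    """Sensitivity is the reciprocal of the lowest contrast that can be identified."""
    if not 0 < threshold_contrast <= 1:
        raise ValueError("threshold contrast must lie in (0, 1]")
    return 1.0 / threshold_contrast


def spatial_frequency_cpd(period_deg: float) -> float:
    """Cycles per degree, from the width of one light-dark cycle in degrees."""
    if period_deg <= 0:
        raise ValueError("period must be positive")
    return 1.0 / period_deg


# A grating that swings between 45 and 55 luminance units.
print(michelson_contrast(55.0, 45.0))

# An observer who needs 1% contrast to identify the target.
print(contrast_sensitivity(0.01))

# A grating whose bars are each 1 arcmin wide has a 2 arcmin period.
print(spatial_frequency_cpd(2.0 / 60.0))
```

Repeating the sensitivity calculation at several spatial frequencies gives the points from which a CSF is drawn.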

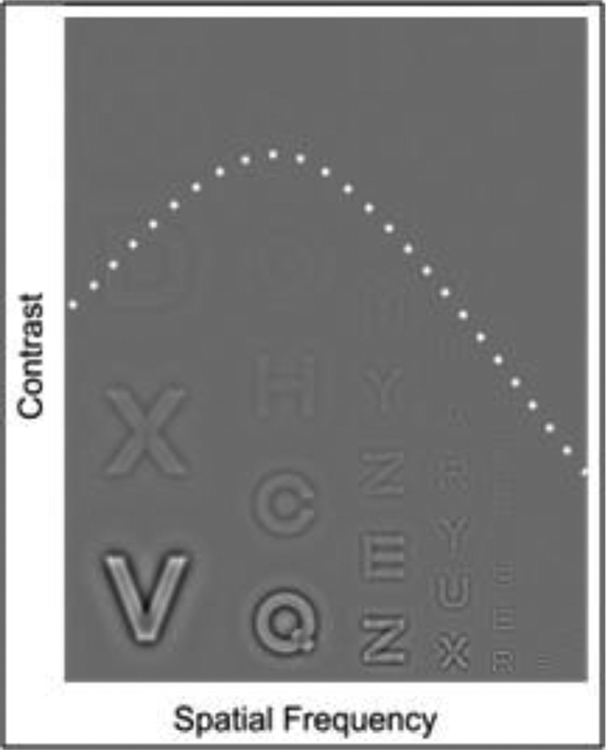

Figure 2.

Example Contrast Sensitivity Function. Contrast increases from top to bottom and spatial frequency increases from left to right. Most observers perceive an inverted U shape that separates visible from non-visible letters, illustrated by the dashed line. Adapted from24.

Contrast sensitivity is impaired in many common clinical conditions even when visual acuity is found to be within normal limits. The assessment of contrast sensitivity is therefore an important complement to visual acuity27. Contrast sensitivity can be assessed for a single target size with charts composed of letters of decreasing contrast (e.g.28,29) with similar scoring principles used for acuity charts. Alternatively, contrast sensitivity can also be measured with sine wave grating patches presented at several spatial frequencies (e.g. Vistech and Vectorvision charts). From a clinical standpoint, it is important to realize that a given type of visual impairment can selectively affect the visibility of only a specific range of spatial frequencies. For example, the detection of high spatial frequencies is impaired in uncorrected refractive error30 and amblyopia31. Medium spatial frequencies are selectively impaired in Parkinson’s Disease32. Finally, cataracts have been shown to selectively impair the detection of low spatial frequencies33. This means that the comprehensive assessment of contrast sensitivity requires measurement at several spatial frequencies in order to detect any potential deficits related to both detection and resolution ability (for review see34).

3. Color

Both acuity and contrast sensitivity measure the limits of perception for black, white, and greyscale stimuli. However, color is also an important signal in the natural environment and can help facilitate object recognition35. Human color vision is referred to as trichromatic, meaning it depends on three classes of cone photoreceptors in the retina. These color sensitive cones have overlapping spectral sensitivities peaking at long (L), medium (M), and short (S) wavelengths corresponding to red, green, and blue cones respectively, based on the color appearance of light at each wavelength. Color selective responses have been reported in many cortical areas, including primary visual cortex (V1) and area V2, but responses in area V4 have been shown to be correlated with color appearance (for review see36). If genes coding for photosensitive pigments are defective, this can lead to the impaired perception of color referred to as anomalous trichromacy (protanomaly, deuteranomaly or tritanomaly depending if the L, M, or S cone pigments are affected, respectively). Detailed color vision assessment can be carried out using an anomaloscope37, or color chip arrangement38 tasks. However, these tests are typically very time consuming and demanding for the participant. Alternative screening tests (e.g. Ishihara) employ pseudoisochromatic stimuli (chromatic targets presented in luminance noise patterns that mask non-chromatic cues to the target’s identity39). These tests have good agreement for screening if a color perception anomaly is present, but limited agreement on quantifying the severity of impairment40. For individuals that cannot provide verbal responses (such as infants), preferential looking methods, color detection41, and color preference42 approaches can be used. In general, the ability to discriminate colors is present in the first few months after birth, and matures to adult levels over the first few years (for review see43).

4. Depth

Acuity, contrast sensitivity, and color tests all measure the limits of perception of stimuli presented in two dimensions. However, the relative position of objects in depth is also a critical cue for interacting with the environment. Depth information is available from monocular and binocular cues. Monocular cues may be exogenous (e.g. occlusion, shadow, size, texture; which are approximately assessed by acuity and contrast sensitivity), or endogenous (e.g. accommodation; which can be estimated from pupillary responses, see44). The interocular separation of human eyes and vergence eye movements that direct the fovea of each eye to an object moving closer or farther away, generate two important endogenous binocular depth cues. The kinesthetic responses of the extraocular muscles produce convergence cues and differences in the retinal positions of objects imaged in each eye produce stereoscopic disparity cues. Binocular depth perception therefore critically depends on eye movement control, which develops into adolescence45. Since stereoscopic disparity requires a comparison between responses from both eyes, depth disparity processing begins in the visual cortex. Visually responsive neurons that are selective for disparity are found in all visual areas of the brain46, although some have argued for specialization of area V3 of primate brains47.

Sensitivity to binocular disparity is generally assessed with random dot stereogram tests that present slightly differing images to each eye (for review see48). The observer’s task is to report an embedded pattern that is defined only by binocular disparity, and cannot be detected monocularly. As with acuity testing, binocular disparity is decreased over several stereogram targets so as to find the smallest disparity the observer is able to detect, termed stereoacuity. Stereoacuity improves with age, from thresholds of around 100 arcsec at age 3 to adult levels of around 40 arcsec at around age 749.
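To give a sense of the scale of these thresholds, the disparity between two points at different distances can be computed from viewing geometry. The sketch below assumes both points lie straight ahead on the midline between the eyes and uses an interocular separation of 63 mm. Both are illustrative assumptions, and the function names are hypothetical.

```python
import math

ARCSEC_PER_RADIAN = 180.0 / math.pi * 3600.0


def vergence_angle_rad(distance_m: float, ipd_m: float) -> float:
    """Angle between the two lines of sight when fixating a midline point."""
    if distance_m <= 0 or ipd_m <= 0:
        raise ValueError("distance and interocular separation must be positive")
    return 2.0 * math.atan(ipd_m / (2.0 * distance_m))


def disparity_arcsec(near_m: float, far_m: float, ipd_m: float = 0.063) -> float:
    """Relative disparity between a nearer and a farther midline point."""
    near_angle = vergence_angle_rad(near_m, ipd_m)
    far_angle = vergence_angle_rad(far_m, ipd_m)
    return (near_angle - far_angle) * ARCSEC_PER_RADIAN


# Two points 1 mm apart in depth, viewed from about 40 cm.
print(disparity_arcsec(0.400, 0.401))
```

Under these assumptions, disparity falls roughly with the square of viewing distance, so a fixed stereoacuity threshold corresponds to a much larger depth difference at far distances than at near.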

5. Motion

All the aforementioned assessments measure the limits of perception for static images. However, there is continuous motion of the images captured on the retina that is generated by relative movements between the observer and surrounding objects. Safe interaction and mobility in the environment critically depend on our ability to detect motion. Selectivity for temporal modulation (e.g. a flickering stimulus) begins at the level of the retina50. However, direction selectivity first appears in area V151. Direction selective visual neurons project to the middle temporal gyrus (area MT/V5), which is specialized for motion processing over large areas of visual space52.

As with acuity, contrast sensitivity, color and depth, motion perception may be measured with perceptual report methods, or with observational methods such as preferential looking and pupillometry. An alternative observational method that is particularly suited to motion perception exploits optokinetic nystagmus (OKN) responses to moving stimuli (for review see53). OKN is an involuntary oculomotor reflex response to moving stimuli while maintaining a steady gaze, composed of slow pursuit eye movements interspersed with fast saccadic eye movements that produce a “sawtooth” pattern of eye positions over time. This reflex is very difficult to suppress and the patterns are elicited only if the stimulus is processed by the visual system. The OKN response can be used to measure visual acuity based on electrooculogram54 and contrast sensitivity55. The OKN is present in the first few months of life (for review see56).

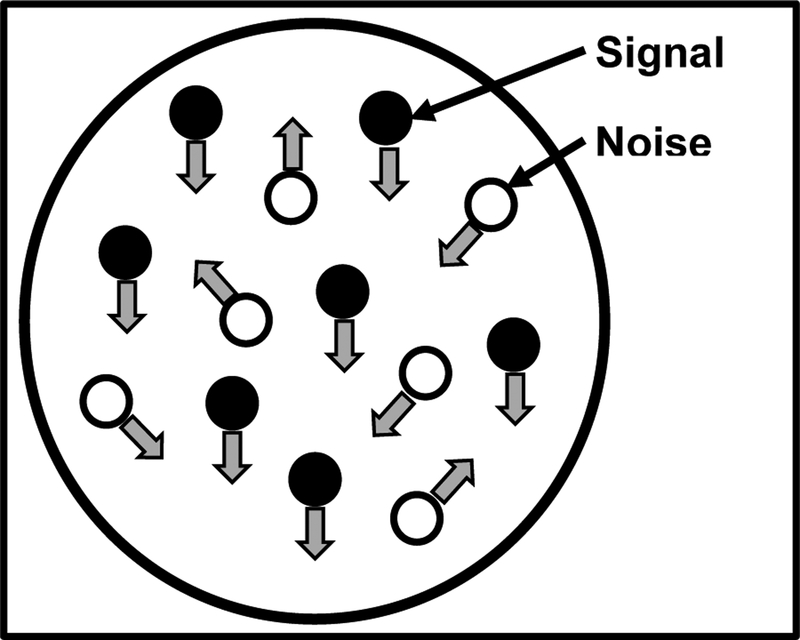

Sensitivity to motion has been measured with a broad range of moving images, including drifting sine wave gratings and preferential looking methods. It has been shown that infants as young as 1 month can detect moving gratings57. However, in a sine grating stimulus, the direction of motion is the same across all areas. Therefore, to study the integration of motion signals, global motion coherence patterns are generally used58. Motion coherence stimuli are composed of a number of independently moving dots. A proportion of the dots move in a single direction, while the remainder move in random directions (noise) (see figure 3 for example). The observer’s task is to report the overall direction of the motion signal (e.g. left or right). This task requires the observer to combine estimates of multiple dot directions across space. Since observing the motion of individual dots is uninformative, these patterns are thought to test the function of higher order stages of motion processing and integration. Reduced motion sensitivity could lead to real-world deficits of visual information integration, impacting daily life tasks (e.g., navigation)59. Testing results can be used to generate a psychometric function (see section below), which can be constructed from the proportion of correct responses at a range of signal levels to determine an individual’s motion coherence threshold. Sensitivity to motion coherence is present in infants as early as 24 weeks60 and continues to develop through to adolescence59.

Figure 3.

Illustration of a random dot kinetogram (RDK). In this pattern, two different types of dot motion are used. Signal dots move coherently in a given direction while noise dots move randomly.
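The construction of a motion coherence stimulus can be sketched in a few lines. The example below is a minimal illustration, assuming a square field with wrap-around at the edges, a fixed dot speed, and noise directions drawn uniformly from the full circle. These choices and the function names are hypothetical and do not describe any specific published implementation.

```python
import math
import random


def make_dot_directions(n_dots, coherence, signal_direction_deg, rng):
    """Assign a direction (radians) to each dot.

    A proportion `coherence` of dots share the signal direction; the
    remainder receive independent random directions (noise).
    """
    if not 0.0 <= coherence <= 1.0:
        raise ValueError("coherence must lie between 0 and 1")
    n_signal = round(n_dots * coherence)
    directions = [math.radians(signal_direction_deg)] * n_signal
    directions += [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_dots - n_signal)]
    rng.shuffle(directions)  # avoid signal dots always being listed first
    return directions


def step_dots(positions, directions, speed, field_size):
    """Move every dot one frame along its direction, wrapping at the edges."""
    return [
        ((x + speed * math.cos(a)) % field_size,
         (y + speed * math.sin(a)) % field_size)
        for (x, y), a in zip(positions, directions)
    ]


rng = random.Random(1)  # seeded so the example is repeatable
field = 10.0            # field width and height, arbitrary units
dots = [(rng.uniform(0.0, field), rng.uniform(0.0, field)) for _ in range(100)]
directions = make_dot_directions(100, coherence=0.3, signal_direction_deg=0.0, rng=rng)
dots = step_dots(dots, directions, speed=0.1, field_size=field)
```

Lowering `coherence` across trials, and recording whether the observer reports the signal direction correctly, yields the proportion-correct data from which a motion coherence threshold is estimated.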

Psychometric Functions and Thresholds

A cornerstone of behavioral assessment concerns the relationship between stimulus intensity and the subject’s perceptual report. At high signal levels (e.g. large or high contrast letters), an observer can easily perceive a target and correctly answer questions about it (e.g. its identity, location, or orientation). At low signal levels, the observer may not perceive anything and would have to guess if forced to give a response. Note that guesses will sometimes be correct, at a rate equal to the reciprocal of the number of choices. For this reason, a large number of choices (e.g. the letters of the English alphabet, where the guessing rate is 1/26) is more efficient than a small number (e.g. left or right position, where the guessing rate is 1/2). These tasks are called “N Alternative Forced Choice” (N-AFC), where N indicates the number of possible responses (e.g. 26 AFC for letters and 2 AFC for left/right preferential looking). A psychometric function describes the profile of response data, and a threshold can be estimated at a criterion performance level that is usually halfway between the guessing rate and 100% correct. Given that thresholds vary across individuals and over time, the signal range spanning guessing to 100% correct is not known before the test begins. The problems of selecting the optimal signal range and the number of trials to present at each signal level have been addressed by adaptive computer algorithms that change the test stimulus on each trial, based on the observer’s responses to previous stimuli (for review see61). These methods have reduced the number of trials required to estimate a threshold to around 3062. This threshold is extremely informative because small differences in visual function (e.g. between groups of subjects or following disease progression or therapy treatment) may change performance near threshold without affecting performance at high signal levels (which are still easy) or low signal levels (which still elicit a guess).
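The link between guessing rate, criterion level, and threshold can be sketched as follows. This example assumes a logistic shape for the psychometric function and estimates the threshold by linear interpolation between tested signal levels. Both are simplifying assumptions for illustration, not the adaptive methods reviewed above, and the function names are hypothetical.

```python
import math


def guess_rate(n_alternatives: int) -> float:
    """Chance performance in an N-AFC task."""
    return 1.0 / n_alternatives


def criterion(n_alternatives: int) -> float:
    """Performance level halfway between guessing and 100% correct."""
    g = guess_rate(n_alternatives)
    return g + (1.0 - g) / 2.0


def psychometric(signal: float, threshold: float, slope: float,
                 n_alternatives: int) -> float:
    """Assumed logistic psychometric function rising from chance to 1.

    With this parameterization, performance at `signal == threshold`
    equals the halfway criterion.
    """
    g = guess_rate(n_alternatives)
    return g + (1.0 - g) / (1.0 + math.exp(-(signal - threshold) / slope))


def interpolate_threshold(levels, prop_correct, n_alternatives):
    """Signal level at which interpolated performance crosses the criterion."""
    target = criterion(n_alternatives)
    points = list(zip(levels, prop_correct))
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if p0 <= target <= p1 and p1 > p0:
            return x0 + (target - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("criterion is not bracketed by the data")


# Hypothetical 2-AFC motion coherence data: signal level and proportion correct.
levels = [0.1, 0.2, 0.3]
correct = [0.5, 0.7, 0.9]
print(criterion(2))
print(interpolate_threshold(levels, correct, 2))
```

In practice, a function is usually fitted to all of the data rather than interpolated between two points, and adaptive procedures concentrate trials near the criterion level. The sketch is intended only to show why the criterion depends on the number of response alternatives.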

Behavioral Assessment of Functional Vision

As mentioned in the previous section, behavioral methods to assess important measures of visual function can be very useful, and many are used routinely in clinical practice. However, it is important to note that assessing visual function does not definitively inform us as to how an individual uses their vision in everyday activities. Furthermore, clinical tests of visual function may fail to detect the full array of possible visual perceptual deficits, and to characterize the broad heterogeneity of performance levels in individuals with visual impairment (either ocular- or brain-based). There are several potential reasons for this. First, assessments of visual function are typically performed in the clinical setting under optimal testing conditions (e.g. good lighting and minimal environmental clutter). Second, traditional assessments (e.g. acuity and color) do not typically characterize the complex higher-order visual processes involved with the analysis of a dynamic and complex visual scene. Finally, visual performance can vary based on task demands and environmental complexity3. These issues are not meant to question the value or the validity of traditional visual function assessments, but rather to highlight the importance of developing more adaptive means of testing in order to fully characterize the visual performance of an individual4,63–65. Moreover, while certain tests may be useful for assessing and characterizing vision in individuals with ocular based conditions, they may be less informative in the case of brain based visual impairment such as in CVI. This is particularly true when considering that many individuals with CVI may show normal or near-normal performance on assessments of acuity, color, and contrast, yet these same individuals will often describe a variety of visual perceptual deficits such as difficulties with visuo-spatial/motion processing, environmental complexity/crowding, and sustaining attention during tasks with high visual demands64,66–68.
Furthermore, because individuals with CVI may present with co-occurring delays in motor, cognitive, and/or other sensory functions, traditional methods of visual testing remain challenging to administer and may not necessarily be appropriate for every child.

The Need for New Techniques

Varying visual task demands and environmental parameters may lead to variable performance levels. For example, differences in visual processing performance between ocular and cerebral based visual impairment have been previously well characterized69–71. The pertinent literature on CVI describes characteristic behaviors such as preference for dynamic versus static objects, interactions between sensory modalities (e.g. turning away when reaching), and variability of visual attention depending upon the familiarity or complexity of the surrounding environment and/or stimuli. Additionally, deficits in visuospatial processing can lead to reduced functional performance66,67. The presence of behavioral and etiological differences between CVI and ocular-based visual impairments means that the testing parameters themselves may influence performance and, consequently, the conclusions drawn from their results. As mentioned previously, although traditional clinical assessments may adequately characterize an individual’s visual function (e.g. acuity and contrast sensitivity), they may fail to capture deficits in functional vision that manifest in real-world settings and situations. Therefore, there is an urgent need to develop novel methods of testing that approach more realistic scenarios (referred to as “ecological validity”) and that will complement traditional clinical assessments. To this end, virtual reality (VR) may serve as an ideal platform for characterizing visual performance in an ecologically valid manner. The technologies required for VR (such as high power computers, rendering of high quality graphics, and eye-tracking systems) have become increasingly cost efficient and readily available, and can now be more easily incorporated for the purposes of testing visual performance72. Thus, there is the opportunity to leverage the benefits of VR as a useful tool for the assessment of functional vision in individuals with visual impairment such as CVI.

VR has long been used in clinical and behavioral neuroscience applications and research73. Indeed, VR has been used not only to assess and test attention, memory, and decision making, but also as a clinical rehabilitation tool for stroke recovery and cognitive rehabilitation in dementia, Attention Deficit Hyperactivity Disorder (ADHD), and Post Traumatic Stress Disorder (PTSD)73–75. VR-based approaches have also been used for training and rehabilitation in various pediatric populations, including individuals with ADHD, autism, and cerebral palsy (see76 for review). There are numerous benefits to using VR as a tool for training and performance assessment, including task flexibility, reproducibility, experimental control, objective data capture, participant engagement and motivation, participant safety, and the ability to mimic real-world scenarios with a high level of ecological validity75,77–79. With these potential benefits in mind, we have attempted to use VR to develop ecologically valid testing platforms to characterize and objectively assess visual perceptual difficulties reported in individuals with visual impairments, with a particular focus on CVI.

Virtual Reality Paradigms

A focus group study (consisting of parents as well as teachers and clinicians working with children with CVI) was carried out to identify key aspects of functional vision assessment that should be incorporated in the design of our VR tasks. This revealed that individuals with CVI often demonstrate difficulties associated with static and dynamic visual search, such as identification of familiar objects or persons in a complex visual environment. The two most commonly described scenarios identified in the focus group study were challenges with finding a favorite toy in a toy box, and locating a specific person in a crowd. Based on this information, two corresponding VR-based simulations were designed and developed. Furthermore, design features were incorporated that enabled the manipulation of important factors of visual environmental complexity (e.g. the number of distractor elements present and level of environmental clutter). Following development, further focus group evaluation confirmed that the realism, engagement, and relevance of the final VR-based simulations were rated as very high, while prompting additional manipulations of interest to be incorporated in subsequent design iterations80,81.

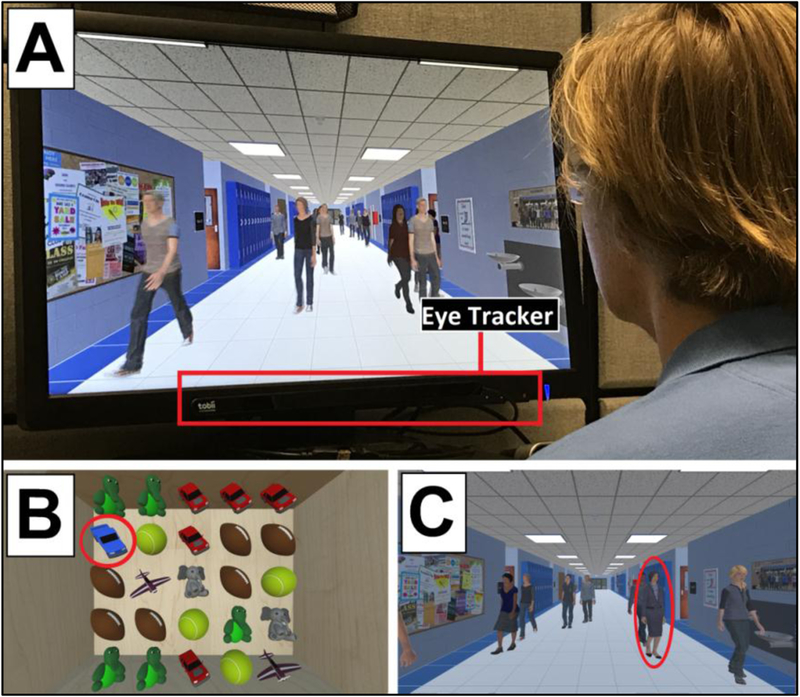

Two VR scenarios were developed to evaluate the ability to perform static and dynamic visual search. These were referred to as the Virtual Toy Box and the Virtual Hallway, respectively. While the general premise behind both simulations is to find a specific target (i.e. a particular toy or person) among surrounding distractor elements, each employs a unique task paradigm. The Virtual Hallway is presented in a dynamic and continuous manner: the participant chooses the person they wish to search for (in this case, the target is a principal of a fictitious school), and then views a video-like scenario of a crowded hallway where the number of people walking and the presence of the target person vary smoothly over time (see80 for a complete explanation of the methodological details). The Virtual Toy Box is presented in a static trial-by-trial fashion where participants choose the toy they want to search for (i.e. the participant chooses between a yellow duck, an orange basketball, or a blue truck as their target of interest). During the task, a participant has 4 seconds to locate that toy before all the objects are covered and shuffled (see81 for a complete explanation of the methodological details). Figure 4 displays a photo of the experimental set up (A) and screenshots from both VR tasks (B and C). An eye tracking system is employed that continuously monitors and collects eye gaze (search pattern) data during the task. Participants are instructed to visually search for and fixate on the chosen target once it is found. Importantly, because responses are based solely on eye-tracking data, the ability to assess performance on the task is not dependent on verbal responses.

Figure 4.

Photo and screenshots from VR Simulations. (A) photo of participant viewing the Virtual Hallway task. The eye-tracking unit (mounted to monitor display) is highlighted. (B) screenshot of the Virtual Toy Box task. In this example, the participant must find the target toy (blue truck, circle) amongst the high number of surrounding distractor elements. (C) screenshot of the Virtual Hallway. In this task, the participant must find the target (principal, circle) walking amongst a high number of distractors (other individuals).

Additional elements have also been built in to assess real-world interaction with the scenarios. For instance, hand tracking can be incorporated into the Virtual Toy Box to mimic the experience of reaching for the toy as well as visually searching for it. Additionally, the perspective in the Virtual Hallway can be presented so as to simulate walking down the hallway while searching for the target. Compensatory and adaptive strategies can also be tested using the virtual simulations. For example, the clothes that the principal wears in the Virtual Hallway can be changed to colors of high contrast compared to the rest of the hallway scene to test the effect of changing target saliency on visual search performance. Similarly, cues to help target identification (e.g. motion such as spinning) can be added to the target toy in the Virtual Toy Box to help draw and focus attention. Overall, these VR-based approaches create a highly flexible, immersive, and realistic platform for testing and collecting objective performance data, as well as monitoring performance over time.

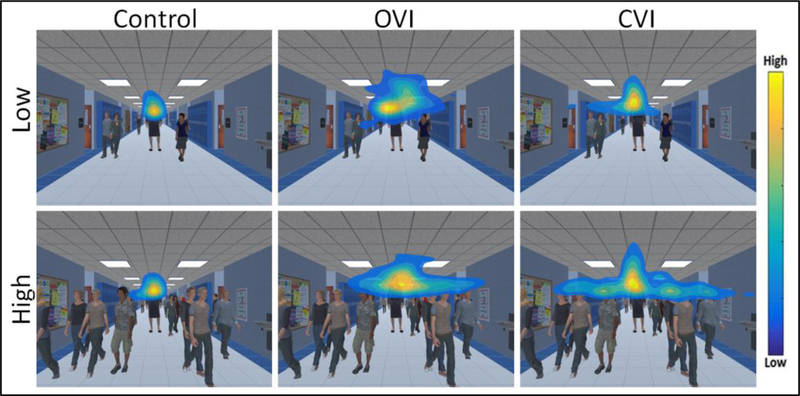

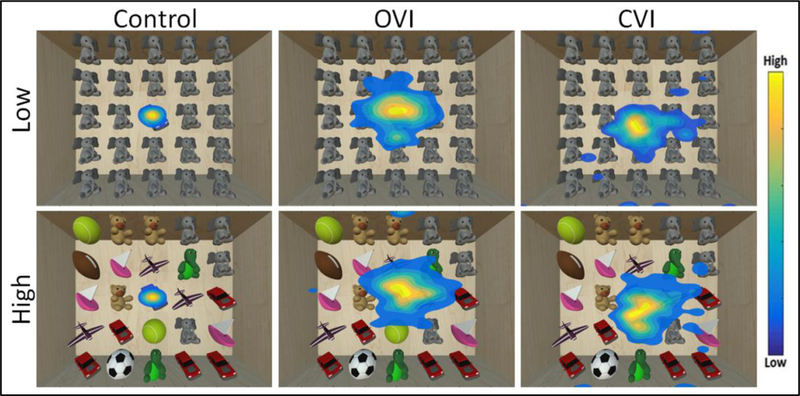

Eye tracking data is acquired at a sampling rate of 90 Hz, ensuring that the precise position of the participant’s eyes on the screen with respect to the target is logged throughout the task. A heat map based on the raw coordinate gaze data is then generated to visualize the overall distribution pattern of eye movements82. The color within the heat map represents the level of gaze data density across spatial regions of the screen, with each color corresponding to a ratio of point density80,81. Using this scheme, warm colors indicate that a participant spent a large portion of time looking in a given area, and cool colors indicate areas where a participant spent less time. Representative data for the Virtual Hallway task obtained from a neuro-typically developed control, an individual with ocular visual impairment (OVI; ocular albinism), and an individual with CVI are shown and compared in Figure 5. For the control subject, the overall distribution of the heat map pattern appears tight around the target principal in the center of the image (note that the data is centered across runs)80. In contrast, the individual with OVI shows a much larger spread in the overall pattern, while it is somewhat intermediate in the individual with CVI. We can also assess how performance changes by increasing the number of surrounding distractor elements (i.e. other individuals walking in the scene). Note that with increasing distractors, the control and OVI subjects show similar distributions of eye movements compared to their respective low distractor condition performance. This suggests that for both control and OVI participants, increasing the number of distractor elements did not greatly influence the overall distribution pattern of visual search. Interestingly, in the CVI participant, the overall extent of the pattern is markedly increased with the presence of increased distractor elements. 
This increase in the overall distribution of the heat map pattern in the individual with CVI can be interpreted as a broader search strategy employed in order to find the target in the presence of multiple distractors. A similar overall pattern of performance is observed for the Virtual Toy Box, shown in Figure 6. Again, a robust clustering of data around the target toy (centered data) is observed in both low and high distractor conditions for the control individual. The OVI participant shows an overall baseline increase in eye gaze scatter across both the low and high distractor number conditions. Similar to what was observed in the Virtual Hallway, the distribution of the heat map pattern in the individual with CVI is in between that of the control and OVI participant for the low distractor condition, but increases greatly for the high distractor condition. Again, this suggests that the individual with CVI employed a broader search pattern to perform a thorough visual search when the environment placed higher demands on vision. These observations are in agreement with previous clinical reports that individuals with CVI tend to exhibit decreased visual performance in the setting of increased visual environmental complexity69,70.
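
As an illustration only, the density heat map described above can be sketched in a few lines of Python. The function name `gaze_density_map`, the grid size, and the screen dimensions are assumptions made for this example and are not taken from the published analysis80,81; the sketch simply bins raw gaze coordinates into a grid and scales each cell by the densest cell, so that values near 1 would map to warm colors and values near 0 to cool colors.

```python
from typing import List, Sequence, Tuple


def gaze_density_map(
    samples: Sequence[Tuple[float, float]],
    screen_w: float,
    screen_h: float,
    n_cols: int = 8,   # assumed grid resolution, chosen for illustration
    n_rows: int = 6,
) -> List[List[float]]:
    """Bin (x, y) gaze samples into a grid and scale counts to the range 0..1."""
    counts = [[0] * n_cols for _ in range(n_rows)]
    for x, y in samples:
        # Ignore samples that fall outside the screen (e.g. looking away).
        if not (0 <= x < screen_w and 0 <= y < screen_h):
            continue
        col = min(int(x / screen_w * n_cols), n_cols - 1)
        row = min(int(y / screen_h * n_rows), n_rows - 1)
        counts[row][col] += 1

    peak = max(max(row) for row in counts)
    if peak == 0:
        # No valid samples: return an empty (all-zero) map.
        return [[0.0] * n_cols for _ in range(n_rows)]
    # Each cell becomes a ratio of point density relative to the densest cell.
    return [[c / peak for c in row] for row in counts]


if __name__ == "__main__":
    demo = [(10, 10), (12, 11), (500, 300), (-5, 20)]
    density = gaze_density_map(demo, screen_w=800, screen_h=600)
    for row in density:
        print(" ".join(f"{v:.1f}" for v in row))
```

A tightly clustered search pattern yields a few cells close to 1 with the remainder near 0, whereas a diffuse pattern spreads intermediate values over many cells.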

Figure 5.

Heat map displays of eye search patterns for the Virtual Hallway. Data from a control, OVI, and CVI participant are shown for both the low and high number of distractor conditions. Note how the search pattern in the CVI participant is markedly more diffuse in response to the high distractor condition. Adapted from80.

Figure 6.

Heat map displays of eye search patterns for the Virtual Toy Box. Data from a control, OVI, and CVI participant are shown for both the low and high number of unique distractor conditions. Note that the search pattern of the CVI participant shows greater spread in response to the high unique distractor condition. Adapted from81.

Additional elements of eye tracking behavior can also be examined to provide a more complete analysis of visual search performance. Gaze error (i.e., the distance between the target and the position of the participant’s eyes on the screen) can be measured to quantify fixation/pursuit eye movements during the search task83,84. It is also possible to calculate two reaction time metrics for each trial. A “hit” is measured as the first moment the participant’s eye gaze reaches the target on the screen, and “fixate” is the first time that the participant’s gaze arrives at and fixates on the target80,81. Finally, because the position of the participant’s eyes on the screen is constantly recorded, it is possible to quantify how often and for how long the participant looks away from the screen in each trial. This provides an index measure of test reliability. Each of these metrics can then be analyzed across different condition levels and manipulation factors.
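
The gaze error and reaction time metrics above can be illustrated with a minimal sketch. The target radius and the minimum dwell time used here to decide that gaze has "fixated" are hypothetical parameters chosen for this example; the source studies80,81 should be consulted for the actual criteria. Only the 90 Hz sampling rate comes from the text.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def gaze_errors(gaze: Sequence[Point], target: Sequence[Point]) -> List[float]:
    """Per-sample distance between gaze position and target position."""
    return [math.hypot(gx - tx, gy - ty) for (gx, gy), (tx, ty) in zip(gaze, target)]


def hit_and_fixate_times(
    errors: Sequence[float],
    radius: float,                 # assumed: gaze counts as on target within this distance
    sample_rate_hz: float = 90.0,  # sampling rate reported in the text
    min_dwell_s: float = 0.1,      # assumed: dwell needed to count as a fixation
) -> Tuple[Optional[float], Optional[float]]:
    """Return (hit, fixate) times in seconds from trial onset, or None if never reached."""
    dwell_samples = max(1, round(min_dwell_s * sample_rate_hz))
    hit: Optional[float] = None
    fixate: Optional[float] = None
    run_start: Optional[int] = None
    for i, err in enumerate(errors):
        if err <= radius:
            if hit is None:
                hit = i / sample_rate_hz          # first sample on the target
            if run_start is None:
                run_start = i                     # start of a continuous on-target run
            if i - run_start + 1 >= dwell_samples:
                fixate = run_start / sample_rate_hz
                break
        else:
            run_start = None                      # gaze left the target; reset the run
    return hit, fixate


if __name__ == "__main__":
    errs = [50, 40, 5, 30, 4, 3, 2, 1]
    print(hit_and_fixate_times(errs, radius=10, sample_rate_hz=10, min_dwell_s=0.3))
```

In this sketch a brief glance that lands on the target and leaves again counts as a hit but not as a fixation, which is one plausible way to separate the two reaction time measures.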

In summary, VR simulations like the Virtual Toy Box and Hallway have been designed and developed to help assess functional vision performance in settings that approach real-world situations. The use of eye-tracking data capture provides an objective and accurate measure of visual performance, even in individuals who cannot verbalize their responses. Given the flexibility and engaging nature of these VR-based environments, these scenarios can be readily modified on an individual basis for monitoring performance as a result of a training program, or for probing the effect of potential environmental modifications and compensatory strategies. Finally, these same VR-based platforms can be coupled with brain imaging techniques such as functional magnetic resonance imaging (fMRI) and electroencephalography (EEG) to investigate the neural correlates associated with visual task performance. Preliminary results employing these same VR-based approaches will be discussed in the following section.

Virtual Reality and Functional Imaging

Combining behavioral assessments and task-based functional neuroimaging can be useful for characterizing brain processes related to visual perception, such as those associated with navigation85,86. In combination with VR, multiple types of neuroimaging techniques can be implemented, each with its own comparative strengths and weaknesses. Functional MRI (fMRI) provides good spatial resolution (on the order of millimeters) and is particularly well-suited for identifying areas of localized brain activity associated with performing a behavioral task. On the other hand, the temporal resolution of fMRI is relatively poor (on the order of seconds)87, making fMRI less optimal for characterizing the timing of brain responses to a behavioral task. In contrast, electroencephalography (EEG) affords high temporal resolution (on the order of milliseconds) and is thus useful in characterizing the temporal profile of task-related brain activity. However, EEG has comparatively poor spatial resolution (on the order of centimeters)88 and is therefore less optimal for identifying a specific area of localized activity. Leveraging the strengths of a given neuroimaging technique, combined with an appropriately designed behavioral task paradigm, strengthens the interpretation of the results that can be obtained.

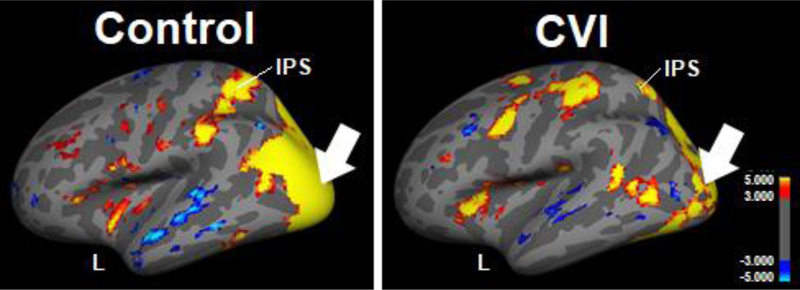

In order to determine the patterns of cortical activation associated with performing a dynamic search task, the Virtual Hallway was adapted as part of an fMRI paradigm. In a pilot study, patterns of activation (i.e., blood oxygenation level-dependent (BOLD) signal) were obtained in a neuro-typically developed control and an individual with CVI, with a particular focus on characterizing activity within visual cortical areas known to be implicated in complex motion, spatial processing, and object recognition89–91. In the control subject (Figure 7, left panel), visual search of the dynamic visual scene was associated with robust activation within a confluence of occipital visual cortical areas as well as within the intraparietal sulcus (IPS), the latter area playing an important role in perceptual-motor coordination (e.g., directing eye movements) and visual attention92–94. In contrast, the overall pattern of activation observed in the individual with CVI was not as robust, both in occipital areas and in the IPS (Figure 7, right panel). These differences in the overall magnitude and extent of activation (as characterized by fMRI) within cortical areas known to be implicated in visual spatial processing may be related to observed deficits in visual search performance associated with CVI.

Figure 7.

fMRI activation patterns resulting from viewing the Virtual Hallway task in a control participant (left) and an individual with CVI (right) (lateral view of the left hemisphere is shown). Robust activation is seen within a confluence of occipital visual areas (arrow) and the intraparietal sulcus (IPS) in the control. Overall occipital area (arrow) and IPS activation is less robust for the individual with CVI as compared to the control.

A second neuroimaging study was designed using EEG combined with the Virtual Toy Box environment to further assess variations in the timing of visual cortical responses during static visual search. Electrodes placed on the surface of the scalp record the electrical activity of underlying cortical neurons as the participant views the toy presented at varying locations on the screen. Cortical activation patterns are measured using event-related potentials (ERPs; see87 for review), which represent an average response signal from repeated trials of the task at different time points during the presentation of the stimulus. In the control participant (Figure 8, top row), cortical activation centered around the occipital and parietal areas is strongest in the 350 to 375 ms window after visual stimulus onset and remains sustained through 400 ms. In comparison, the temporal pattern of cortical responses in the individual with CVI (Figure 8, bottom row) shows an overall reduction in signal magnitude, appearing within the same temporal window (350 to 375 ms) as observed in the control subject. At 400 ms after visual stimulus onset, the occipital signal does not appear as robustly sustained as it is in the control participant. Further quantification of the signal fluctuations can be done by analyzing the magnitude and timing of ERP peaks, as well as by further distinguishing the various frequency bands of each channel. As with the results obtained from the fMRI experiment, observed differences in patterns of cortical activity may help uncover the neurophysiological basis of visual perceptual deficits associated with CVI as well as other visual impairment conditions.
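
To make the ERP computation concrete, the following is a minimal single-channel sketch of trial averaging and peak detection. The function names and the toy amplitude values are hypothetical; the sketch omits steps that a real analysis would normally include (e.g. filtering, baseline correction, artifact rejection) and is not a description of the processing actually used for Figure 8.

```python
from typing import List, Sequence, Tuple


def average_erp(epochs: Sequence[Sequence[float]]) -> List[float]:
    """Average time-locked single-trial epochs (one channel) sample by sample."""
    if not epochs:
        raise ValueError("at least one epoch is required")
    n_samples = len(epochs[0])
    if any(len(e) != n_samples for e in epochs):
        raise ValueError("all epochs must have the same number of samples")
    return [sum(e[i] for e in epochs) / len(epochs) for i in range(n_samples)]


def peak_in_window(
    erp: Sequence[float],
    times_ms: Sequence[float],
    start_ms: float,
    end_ms: float,
) -> Tuple[float, float]:
    """Return (latency_ms, amplitude) of the largest absolute deflection in a window."""
    candidates = [(t, v) for t, v in zip(times_ms, erp) if start_ms <= t <= end_ms]
    if not candidates:
        raise ValueError("no samples fall inside the requested window")
    # max() keeps the earliest sample if several share the same absolute amplitude.
    return max(candidates, key=lambda tv: abs(tv[1]))


if __name__ == "__main__":
    times = [300, 325, 350, 375, 400]        # ms after stimulus onset
    trials = [[1, 2, 6, 5, 3],               # toy amplitudes, arbitrary units
              [1, 2, 6, 3, 1]]
    erp = average_erp(trials)
    print(erp, peak_in_window(erp, times, 300, 400))
```

Averaging across trials attenuates activity that is not time-locked to the stimulus, which is why the peak magnitude and latency are read from the averaged waveform rather than from individual trials.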

Figure 8.

Scalp map plots of 20 channel EEG data obtained from viewing the Virtual Toy Box task in a control and individual with CVI (posterior view, right side). Scalp maps are displayed in 25 ms intervals from 300 ms to 400 ms. The occipital-parietal signal observed in the control (top) appears robust and peaks between 350 and 375 ms. The individual with CVI (bottom) shows an overall reduction in signal with the peak being at a similar time to the control. Note further how the occipital signal does not appear as robustly sustained as it is in the control participant (at 400 ms).

Concluding Remarks

In this review, we discussed how a comprehensive evaluation of an individual’s overall visual abilities includes assessments of both visual function and functional vision performance. Established techniques commonly used in the clinical setting and novel VR-based approaches each have their own strengths in characterizing an individual’s visual abilities and deficits. Combining functional neuroimaging methodologies with VR-based environments such as those described here can provide further insight into the underlying neural correlates and neurophysiological mechanisms associated with impairments in visual processing, such as in the case of CVI. The combination of all these techniques can provide a holistic understanding of an individual’s visual abilities.

Acknowledgements

This work was supported by the Knights Templar Eye Foundation Inc. to C.R.B., as well as the Research to Prevent Blindness / Lions Clubs International Foundation and National Institutes of Health (R01 EY019924–08) to L.B.M.

References

- 1. Colenbrander A. Visual functions and functional vision, International Congress Series 1282:482–486; 2005.

- 2. Hyvärinen L. Considerations in Evaluation and Treatment of the Child With Low Vision, American Journal of Occupational Therapy 49:891–897; 1995.

- 3. Colenbrander A. Aspects of vision loss – visual functions and functional vision, Visual Impairment Research 5:115–136; 2003.

- 4. Kran BS, Mayer DL. Vision Impairment and Brain Damage. In: Bartuccio M, Maino DM, eds. Visual diagnosis and care of the patient with special needs: Lippincott Williams & Wilkins; 2012:135–146.

- 5. Katzner S, Weigelt S. Visual cortical networks: of mice and men, Current Opinion in Neurobiology 23:202–206; 2013.

- 6. Kanwisher N, Chun MM, McDermott J, et al. Functional imaging of human visual recognition, Cognitive Brain Research 5:55–67; 1996.

- 7. Hoffmann MB, Dumoulin SO. Congenital visual pathway abnormalities: a window onto cortical stability and plasticity, Trends in Neurosciences 38:55–65; 2015.

- 8. Fazzi E, Bova S, Giovenzana A, et al. Cognitive visual dysfunctions in preterm children with periventricular leukomalacia, Developmental Medicine & Child Neurology 51:974–981; 2009.

- 9. Dagnelie G. Age-related psychophysical changes and low vision, Investigative Ophthalmology & Visual Science 54:ORSF88–ORSF93; 2013.

- 10. Nouri-Mahdavi K. Selecting visual field tests and assessing visual field deterioration in glaucoma, Canadian Journal of Ophthalmology/Journal Canadien d’Ophtalmologie 49:497–505; 2014.

- 11. Wu Z, Medeiros FA. Recent developments in visual field testing for glaucoma, Current Opinion in Ophthalmology 29:141–146; 2018.

- 12. World Health Organization (WHO). Visual impairment and blindness: Fact Sheet N°282; 2012.

- 13. Bourne RR, Flaxman SR, Braithwaite T, et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: a systematic review and meta-analysis, The Lancet Global Health 5:e888–e897; 2017.

- 14. Bailey IL, Lovie-Kitchin JE. Visual acuity testing. From the laboratory to the clinic, Vision Research 90:2–9; 2013.

- 15. Bailey IL, Lovie JE. New Design Principles for Visual Acuity Letter Charts, Optometry and Vision Science 53:740–745; 1976.

- 16. Ferris FL, Freidlin V, Kassoff A, et al. Relative letter and position difficulty on visual acuity charts from the Early Treatment Diabetic Retinopathy Study, American Journal of Ophthalmology 116:735–740; 1993.

- 17. Hou F, Lesmes L, Bex P, et al. Using 10AFC to further improve the efficiency of the quick CSF method, Journal of Vision 15:2; 2015.

- 18. Hyvärinen L, Nasanen R, Laurinen P. New visual acuity test for pre-school children, Acta Ophthalmologica 58:507–511; 1980.

- 19. Teller DY. The forced-choice preferential looking procedure: A psychophysical technique for use with human infants, Infant Behavior and Development 2:135–153; 1979.

- 20. Sokol S. Measurement of infant visual acuity from pattern reversal evoked potentials, Vision Research 18:33–39; 1978.

- 21. Carkeet A. Modeling logMAR Visual Acuity Scores: Effects of Termination Rules and Alternative Forced-Choice Options, Optometry and Vision Science 78:529–538; 2001.

- 22. Leat SJ, Yadav NK, Irving EL. Development of Visual Acuity and Contrast Sensitivity in Children, Journal of Optometry 2:19–26; 2009.

- 23. Owsley C. Vision and Aging, Annual Review of Vision Science 2:255–271; 2016.

- 24. Campbell F, Robson J. Application of Fourier analysis to modulation response of eye. Paper presented at: Journal of the Optical Society of America; 1964.

- 25. Owsley C. Contrast sensitivity, Ophthalmology Clinics of North America 16:171–177; 2003.

- 26. Owsley C, Sloane ME. Contrast sensitivity, acuity, and the perception of ‘real-world’ targets, British Journal of Ophthalmology 71:791–796; 1987.

- 27. Woods RL, Wood JM. The role of contrast sensitivity charts and contrast letter charts in clinical practice, Clinical and Experimental Optometry 78:43–57; 1995.

- 28. Pelli D, Robson J. The design of a new letter chart for measuring contrast sensitivity. Paper presented at: Clinical Vision Sciences; 1988.

- 29. Arditi A. Improving the Design of the Letter Contrast Sensitivity Test, Investigative Ophthalmology & Visual Science 46:2225; 2005.

- 30. Green DG, Campbell FW. Effect of Focus on the Visual Response to a Sinusoidally Modulated Spatial Stimulus, Journal of the Optical Society of America 55:1154; 1965.

- 31. Freedman RD, Thibos LN. Contrast sensitivity in humans with abnormal visual experience, The Journal of Physiology 247:687–710; 1975.

- 32. Bodis-Wollner I, Marx MS, Mitra S, et al. Visual dysfunction in Parkinson’s disease, Brain 110:1675–1698; 1987.

- 33. Abrahamsson M, Sjöstrand J. Impairment of contrast sensitivity function (CSF) as a measure of disability glare, Investigative Ophthalmology & Visual Science 27:1131–1136; 1986.

- 34. Pelli DG, Bex P. Measuring contrast sensitivity, Vision Research 90:10–14; 2013.

- 35. Albany-Ward K. What do you really know about colour blindness?, British Journal of School Nursing 10:197–199; 2015.

- 36. Roe AW, Chelazzi L, Connor CE, et al. Toward a Unified Theory of Visual Area V4, Neuron 74:12–29; 2012.

- 37. Nagel WA. I. Zwei Apparate für die augenärztliche Funktionsprüfung, Ophthalmologica 17:201–222; 1907.

- 38. Munsell AH. A pigment color system and notation, The American Journal of Psychology 23:236–244; 1912.

- 39. Stilling J. Lehre von den Farbenempfindungen, Klin MBL Augenheilk; 1873.

- 40. National Research Council. Procedures for testing color vision: Report of working group 41. National Academies; 1981.

- 41. Peeles D, Teller D. Color vision and brightness discrimination in two-month-old human infants, Science 189:1102–1103; 1975.

- 42. Pereverzeva M, Teller DY. Infant color vision: Influence of surround chromaticity on spontaneous looking preferences, Visual Neuroscience 21:389–395; 2004.

- 43. Brown AM. Development of visual sensitivity to light and color vision in human infants: A critical review, Vision Research 30:1159–1188; 1990.

- 44. Hainline L. Development of accommodation and vergence in infancy. In: Accommodation and Vergence Mechanisms in the Visual System: Birkhäuser Basel; 2000:161–170.

- 45. Luna B, Velanova K, Geier CF. Development of eye-movement control, Brain and Cognition 68:293–308; 2008.

- 46. Parker AJ. Binocular depth perception and the cerebral cortex, Nature Reviews Neuroscience 8:379–391; 2007.

- 47. Tsao DY, Vanduffel W, Sasaki Y, et al. Stereopsis Activates V3A and Caudal Intraparietal Areas in Macaques and Humans, Neuron 39:555–568; 2003.

- 48. Read JCA. Stereo vision and strabismus, Eye 29:214–224; 2014.

- 49. Birch E, Williams C, Drover J, et al. Randot® Preschool Stereoacuity Test: Normative data and validity, Journal of American Association for Pediatric Ophthalmology and Strabismus 12:23–26; 2008.

- 50. Enroth-Cugell C, Robson JG. The contrast sensitivity of retinal ganglion cells of the cat, The Journal of Physiology 187:517–552; 1966.

- 51. Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex, The Journal of Physiology 195:215–243; 1968.

- 52. Albright TD. Direction and orientation selectivity of neurons in visual area MT of the macaque, Journal of Neurophysiology 52:1106–1130; 1984.

- 53. London R. Optokinetic nystagmus: a review of pathways, techniques and selected diagnostic applications, Journal of the American Optometric Association 53:791–798; 1982.

- 54. Reinecke RD. Standardization of Objective Visual Acuity Measurements, A.M.A. Archives of Ophthalmology 60:418; 1958.

- 55. Dakin SC, Turnbull PRK. Similar contrast sensitivity functions measured using psychophysics and optokinetic nystagmus, Scientific Reports 6; 2016.

- 56. Shupert C, Fuchs AF. Development of conjugate human eye movements, Vision Research 28:585–596; 1988.

- 57. Volkmann FC, Dobson MV. Infant responses of ocular fixation to moving visual stimuli, Journal of Experimental Child Psychology 22:86–99; 1976.

- 58. Williams DW, Sekuler R. Coherent global motion percepts from stochastic local motions, Vision Research 24:55–62; 1984.

- 59. Bogfjellmo LG, Bex PJ, Falkenberg HK. The development of global motion discrimination in school aged children, Journal of Vision 14:19–19; 2014.

- 60. Wattam-Bell J. Coherence thresholds for discrimination of motion direction in infants, Vision Research 34:877–883; 1994.

- 61. Leek MR. Adaptive procedures in psychophysical research, Perception & Psychophysics 63:1279–1292; 2001.

- 62. Watson AB, Pelli DG. Quest: A Bayesian adaptive psychometric method, Perception & Psychophysics 33:113–120; 1983.

- 63. Dutton GN, Calvert J, Ibrahim H, et al. Structured clinical history taking for cognitive and perceptual visual dysfunction and for profound visual disabilities due to damage to the brain in children, Visual impairment in children due to damage to the brain. Mac Keith Press, London: 117–128; 2010.

- 64. Merabet LB, Mayer DL, Bauer CM, et al. Disentangling How the Brain is “Wired” in Cortical (Cerebral) Visual Impairment, Seminars in Pediatric Neurology 24:83–91; 2017.

- 65. Ortibus E, Laenen A, Verhoeven J, et al. Screening for Cerebral Visual Impairment: Value of a CVI Questionnaire, Neuropediatrics 42:138–147; 2011.

- 66. Boot FH, Pel JJM, van der Steen J, et al. Cerebral Visual Impairment: Which perceptive visual dysfunctions can be expected in children with brain damage? A systematic review, Research in Developmental Disabilities 31:1149–1159; 2010.

- 67. Fazzi E, Bova SM, Uggetti C, et al. Visual–perceptual impairment in children with periventricular leukomalacia, Brain and Development 26:506–512; 2004.

- 68. Hoyt C. Brain injury and the eye, Eye 21:1285; 2007.

- 69. Jan J, Groenveld M, Sykanda A, et al. Behavioural characteristics of children with permanent cortical visual impairment, Developmental Medicine & Child Neurology 29:571–576; 1987.

- 70. Jan J, Groenveld M, Anderson D. Photophobia and cortical visual impairment, Developmental Medicine & Child Neurology 35:473–477; 1993.

- 71. Braddick O, Atkinson J. Development of human visual function, Vision Research 51:1588–1609; 2011.

- 72. Bennett CR, Corey RR, Giudice U, et al. Immersive Virtual Reality Simulation as a Tool for Aging and Driving Research. In: Human Aspects of IT for the Aged Population. Healthy and Active Aging: Springer International Publishing; 2016:377–385.

- 73. Tarr MJ, Warren WH. Virtual reality in behavioral neuroscience and beyond, Nature Neuroscience 5:1089–1092; 2002.

- 74. Parsons TD, Rizzo AA. Affective outcomes of virtual reality exposure therapy for anxiety and specific phobias: A meta-analysis, Journal of Behavior Therapy and Experimental Psychiatry 39:250–261; 2008.

- 75. Parsons TD, Phillips AS. Virtual reality for psychological assessment in clinical practice, Practice Innovations 1:197–217; 2016.

- 76. Wang M, Reid D. Virtual Reality in Pediatric Neurorehabilitation: Attention Deficit Hyperactivity Disorder, Autism and Cerebral Palsy, Neuroepidemiology 36:2–18; 2011.

- 77. Loomis JM, Blascovich JJ, Beall AC. Immersive virtual environment technology as a basic research tool in psychology, Behavior Research Methods, Instruments, & Computers 31:557–564; 1999.

- 78. Dickey MD. Teaching in 3D: Pedagogical Affordances and Constraints of 3D Virtual Worlds for Synchronous Distance Learning, Distance Education 24:105–121; 2003.

- 79. Merchant Z, Goetz ET, Cifuentes L, et al. Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis, Computers & Education 70:29–40; 2014.

- 80. Bennett CR, Bailin ES, Gottlieb TK, et al. Assessing Visual Search Performance in Ocular Compared to Cerebral Visual Impairment Using a Virtual Reality Simulation of Human Dynamic Movement. Proceedings of the Technology, Mind, and Society (TechMindSociety ’18); 2018.

- 81. Bennett CR, Bailin ES, Gottlieb TK, et al. Virtual Reality Based Assessment of Static Object Visual Search in Ocular Compared to Cerebral Visual Impairment. In: Universal Access in Human-Computer Interaction. Virtual, Augmented, and Intelligent Environments: Springer International Publishing; 2018:28–38.

- 82. Gibaldi A, Vanegas M, Bex PJ, et al. Evaluation of the Tobii EyeX Eye tracking controller and Matlab toolkit for research, Behavior Research Methods 49:923–946; 2016.

- 83. Kooiker MJG, Pel JJM, van der Steen-Kant SP, et al. A Method to Quantify Visual Information Processing in Children Using Eye Tracking, Journal of Visualized Experiments; 2016.

- 84. Pel JJM, Manders JCW, van der Steen J. Assessment of visual orienting behaviour in young children using remote eye tracking: Methodology and reliability, Journal of Neuroscience Methods 189:252–256; 2010.

- 85. Spiers HJ, Maguire EA. Thoughts, behaviour, and brain dynamics during navigation in the real world, Neuroimage 31:1826–1840; 2006.

- 86. Spiers HJ, Barry C. Neural systems supporting navigation, Current Opinion in Behavioral Sciences 1:47–55; 2015.

- 87. Luck SJ. An introduction to the event-related potential technique. MIT Press, Cambridge, MA: 45–64; 2005.

- 88. Lopez-Calderon J, Luck SJ. ERPLAB: an open-source toolbox for the analysis of event-related potentials, Frontiers in Human Neuroscience 8; 2014.

- 89. Putcha D, Ross RS, Rosen ML, et al. Functional correlates of optic flow motion processing in Parkinson’s disease, Frontiers in Integrative Neuroscience 8; 2014.

- 90. Tootell RBH, Mendola JD, Hadjikhani NK, et al. Functional Analysis of V3A and Related Areas in Human Visual Cortex, The Journal of Neuroscience 17:7060–7078; 1997.

- 91. Vaziri-Pashkam M, Xu Y. Goal-Directed Visual Processing Differentially Impacts Human Ventral and Dorsal Visual Representations, The Journal of Neuroscience 37:8767–8782; 2017.

- 92. Andersen R. Visual and Eye Movement Functions of the Posterior Parietal Cortex, Annual Review of Neuroscience 12:377–403; 1989.

- 93. Colby CL, Goldberg ME. Space and attention in parietal cortex, Annual Review of Neuroscience 22:319–349; 1999.

- 94. Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex, Current Opinion in Neurobiology 11:157–163; 2001.