Abstract

Objective:

The aim of this study was to assess motivation as a factor in mental fatigue using subjective, performance, and physiological measures.

Background:

Sustained performance on a mentally demanding task can decrease over time. This decrement has two possible causes: a decline in available resources, meaning that performance cannot be sustained, and a decrement in motivation, meaning a decline in willingness to sustain performance. However, so far, few experimental paradigms have effectively and continuously manipulated motivation, which is essential to understand its effect on mental fatigue.

Method:

Twenty participants performed a working memory task with 14 blocks, which alternated between reward and nonreward for 2.5 hr. In the reward blocks, monetary rewards could be gained for good performance. Besides reaction time and accuracy, we used physiological measures (heart rate variability, pupil diameter, eyeblink, eye movements with a video distractor) and subjective measures of fatigue and mental effort.

Results:

Participants reported becoming fatigued over time and invested more mental effort in the reward blocks. Even though they reported fatigue, their accuracy in the reward blocks remained constant but declined in the nonreward blocks. Furthermore, in the nonreward blocks, participants became more distractable, invested less cognitive effort, blinked more often, and made fewer saccades. These results showed an effect of motivation on mental fatigue.

Conclusion:

The evidence suggests that motivation is an important factor in explaining the effects of mental fatigue.

Keywords: time-on-task, effort, distraction, heart rate variability, pupil diameter

Introduction

In modern society, where many jobs are demanding and challenging, fatigue is a problem faced by many people. There are two types of fatigue: physical fatigue and mental fatigue. Physical fatigue is the loss of a muscle’s capability to optimally perform a physical task (Gawron, French, & Funke, 2001; Hagberg, 1981). Mental fatigue, on the other hand, is a combined psychological and biological state (Marcora, Staiano, & Manning, 2009) of reduced performance caused by doing a demanding cognitive task for a long time (Boksem, Meijman, & Lorist, 2006; Mizuno et al., 2011; van der Linden, Frese, & Meijman, 2003). Nonetheless, a task does not necessarily have to be long to induce mental fatigue if it requires sustained effort (DeLuca, 2005; Helton et al., 2007).

In general, excluding sleep deprivation (Akerstedt et al., 2004), there are two factors that can cause mental fatigue (Gergelyfi, Jacob, Olivier, & Zenon, 2015; Helton & Russell, 2017). The first factor is thought to be a depletion of limited resources over time and a failure to allocate resources (Grillon, Quispe-Escudero, Mathur, & Ernst, 2015; Helton & Russell, 2015, 2017; Lorist et al., 2000; Warm, Parasuraman, & Matthews, 2008). Moreover, several studies have shown that the performance decrement after doing a cognitive task coincides with a reduction in cerebral blood flow (Shaw et al., 2009; Warm, Matthews, & Parasuraman, 2009), which suggests linkages between resources and mental fatigue. Nevertheless, the specific physiological mechanism of the depletion remains obscure (Helton & Russell, 2017).

The second factor causing mental fatigue is motivation; one is no longer willing to do a particular task (Boksem & Tops, 2008; Earle, Hockey, Earle, & Clough, 2015). More specifically, Hockey (2011) mentioned that “the fatigue state has a metacognitive function, interrupting the currently active goal and allowing others into contention” (p. 173). Rewards have been shown to counteract the effect of mental fatigue (e.g., Hopstaken, van der Linden, Bakker, & Kompier, 2015) by restoring performance to prefatigue levels. In addition, over time, people tend to disengage more from a task and are more easily distracted (Boksem & Tops, 2008; Kurzban, Duckworth, Kable, & Myers, 2013; van der Linden, 2011).

There are still few experimental paradigms that have effectively manipulated motivation before fatigue arises (Gergelyfi et al., 2015), which is essential to understand its effect on mental fatigue. To have a more continuous assessment of the influence of motivation, we conducted a 2.5-hr experiment in which we manipulated motivation by alternating blocks with and without monetary reward to separate the effects of time-on-task from motivation effects.

To assess motivation comprehensively, we used three types of measures in the experiment. First, since mental fatigue is a subjective feeling (Gergelyfi et al., 2015), we used two subjective measures. We used the Visual Analog Scale (VAS) (Mizuno et al., 2011) as a measure of fatigue feeling and the Rating Scale Mental Effort (RSME) (Zijlstra & van Doorn, 1985) as a measure of subjective mental effort. Second, we measured response time (RT) and accuracy as performance measures. Last, to monitor mental fatigue as a biological state (Marcora et al., 2009), we used two physiological measures (i.e., heart rate variability [HRV] and pupillometry).

HRV provides an overview of the autonomic nervous system (Berntson et al., 1997; Evans et al., 2013; Kang, Kim, Hong, Lee, & Choi, 2016). Therefore, HRV is functional and practical in monitoring the physiological condition of participants throughout the experiment. We measured the midfrequency (MF) band of HRV as an indicator of mental effort (Aasman, Mulder, & Mulder, 1987) and the high-frequency (HF) band of HRV as an indicator of parasympathetic activity (Berntson et al., 1997; Task Force of the European Society of Cardiology, 1996) during the experiment. Furthermore, for pupillometry, we used pupil diameter to measure workload (Karatekin, 2004), eyeblink to measure fatigue (Martins & Carvalho, 2015), and eye movements to indicate disengagement by monitoring how often participants were distracted and shifted their attention during the experiment.

We predicted that if motivation were an essential factor in mental fatigue, participants would be able to maintain their performance and attention to the task in the reward blocks over time. On the other hand, if motivation were not essential, performance would decline over time, and they would be susceptible to distractions regardless of rewards.

Method

Participants

The sample size was calculated at the start of the study. The experiment was designed to detect a large effect size (d = .60) with a power of .90 (type II error rate = .10) and a significance level (α) of .05. Based on these parameters, the required sample size was 20.

A total of 25 university students took part in the study and received a monetary reward for their participation. Of these, 4 participants gave up halfway through the experiment, and data from 1 participant were lost due to equipment problems. The final sample consisted of 20 participants (8 male; mean age = 24.95 years, SD = 3.01).

This research complied with the American Psychological Association Code of Ethics. All participants gave written informed consent in accordance with Dutch law.

Experimental Task

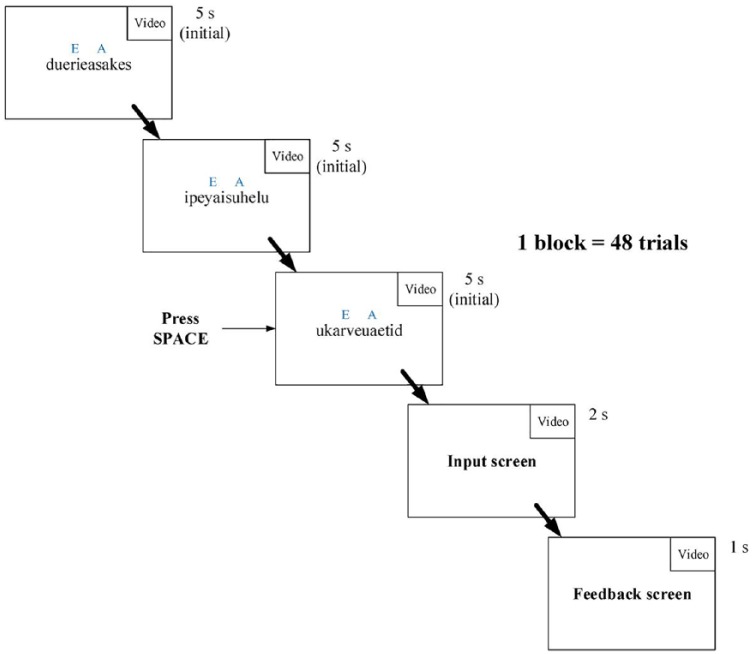

The experiment consisted of 14 blocks of 48 trials each. In each trial, participants were presented with three consecutive 12-letter pseudowords on a computer screen consisting of a randomized sequence of seven vowels (randomly drawn from a, i, u, and e) and five consonants (randomly drawn from all 21 consonants in the English alphabet) (see Figure 1). In each pseudoword, each of the four vowels appeared one to three times. The font for pseudowords was Droid Sans Mono, with 25-point font size.

Figure 1.

The task view of one trial. The first three screens present the stimuli (three consecutive pseudowords) with an initial presentation duration of 5 s. After the third screen, participants proceeded to the answer input screen if they pressed the space bar when they knew the answer or automatically when the last pseudoword presentation duration had elapsed. On the last screen, feedback was presented for 1 s. Note that all screens showed a video distractor in the top right of the screen.

Participants were asked to count how often two vowels, specified at the start of each trial, appeared in the three pseudowords in total. This target vowel set always included the vowel a, while the other vowel was randomly drawn among i, u, or e. At the end of each trial, participants were asked to report the total number of target vowels separately in an answer input screen as fast as possible. If participants knew the answer before the answer input screen appeared (i.e., within the time the last pseudoword was still being presented), they could press the space bar to call up the answer screen. Participants had 2 s to input their answers in the answer screen and received feedback showing the correct answer.
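The stimulus construction and the counting task described above can be sketched as follows. This is a minimal illustration, not the authors’ OpenSesame code: the function names, the way the three extra vowel slots are distributed, and the choice to draw consonants with replacement are all assumptions.

```python
import random

VOWELS = "aiue"
CONSONANTS = "bcdfghjklmnpqrstvwxyz"  # the 21 consonants of the English alphabet


def make_pseudoword(rng):
    """Build one 12-letter pseudoword: 7 vowels (each of a, i, u, e
    appearing 1 to 3 times) plus 5 consonants, in random order."""
    # Start with one of each vowel, then hand out the remaining three
    # vowel slots without letting any vowel exceed three occurrences.
    counts = {v: 1 for v in VOWELS}
    for _ in range(3):
        v = rng.choice([c for c in VOWELS if counts[c] < 3])
        counts[v] += 1
    letters = [v for v, n in counts.items() for _ in range(n)]
    # Assumption: consonants are drawn with replacement.
    letters += [rng.choice(CONSONANTS) for _ in range(5)]
    rng.shuffle(letters)
    return "".join(letters)


def count_targets(pseudowords, targets):
    """Correct answer for a trial: total count of each target vowel
    across the three pseudowords."""
    return {t: sum(w.count(t) for w in pseudowords) for t in targets}


rng = random.Random(0)
words = [make_pseudoword(rng) for _ in range(3)]
targets = ("a", rng.choice("iue"))  # 'a' plus one other vowel
answer = count_targets(words, targets)
```

Because every vowel appears one to three times per pseudoword, the correct count for each target vowel across a trial always lies between 3 and 9.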

At the start of each block, each pseudoword was displayed for 5 s. To counteract practice effects and individual differences, presentation duration was varied according to the participant’s performance to ensure that the task was equally challenging throughout the experiment. If a participant gave a correct answer (correct) and pressed the space bar before the answer screen had appeared (fast), each pseudoword in the next trial would be presented for 0.1 s less; if the answer was incorrect, the presentation duration in the next trial would return to the duration of the last correct and fast trial. Therefore, the presentation would never slow down beyond the duration of the last correct and fast trial, nor beyond 5 s (to ensure participants did not strategically make the task too easy). Participants were naive to this speed manipulation. Because of the speed manipulation, the total length of the experiment varied between participants.
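The adaptive timing rule can be written as a small update function. The sketch below is one reading of the rule, under stated assumptions: the text does not say what happens after a correct but slow answer (assumed here to leave the duration unchanged), and the lower bound `FLOOR_S` is hypothetical.

```python
START_S = 5.0  # presentation duration at the start of each block (s)
STEP_S = 0.1   # speed-up after a correct and fast trial (s)
FLOOR_S = 0.1  # hypothetical lower bound, not stated in the text


def next_duration(current, last_fast_correct, correct, fast):
    """Return (duration for the next trial, duration of the most recent
    correct-and-fast trial). `last_fast_correct` is None until the first
    correct-and-fast trial of the block."""
    if correct and fast:
        # Speed up, and remember the duration this trial was shown at.
        return max(round(current - STEP_S, 3), FLOOR_S), current
    if not correct:
        # Fall back to the last correct-and-fast duration, capped at 5 s.
        fallback = START_S if last_fast_correct is None else last_fast_correct
        return min(fallback, START_S), last_fast_correct
    # Correct but slow: assumed to leave the duration unchanged.
    return current, last_fast_correct


# Example block fragment: two fast correct trials, then an error.
duration, last = START_S, None
history = []
for correct, fast in [(True, True), (True, True), (False, False), (True, False)]:
    duration, last = next_duration(duration, last, correct, fast)
    history.append(duration)
```

In this fragment the duration steps down twice, returns to the duration of the last correct and fast trial after the error, and then stays there.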

In the experiment, reward blocks, in which participants could earn monetary rewards, were alternated with nonreward blocks. Before a block started, participants saw a text for 3 s informing them whether the block was a reward or a nonreward block. In the reward blocks (the even blocks), participants could earn two rewards on each trial. If their answers were correct (both vowels), they received a 2.5 cent accuracy reward. If their answers were both correct and fast (they pressed the space bar while the last pseudoword was still being presented), they received an additional 2.5 cent speed reward, for a total of 5 cents on that trial. In nonreward blocks (the odd blocks), participants did not receive any reward for accuracy or speed. After a block ended, the presentation duration in the first trial of the next block would be reset to 5 s. Each block lasted approximately 11 min, depending on the participant’s performance.
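The reward scheme reduces to a few lines of arithmetic. The sketch below uses hypothetical function names and simply encodes the rules stated above (even blocks rewarded, 2.5 cents for accuracy, a further 2.5 cents for speed).

```python
ACCURACY_REWARD_CENTS = 2.5
SPEED_REWARD_CENTS = 2.5
N_BLOCKS = 14
TRIALS_PER_BLOCK = 48


def is_reward_block(block):
    """Blocks are numbered from 1; even blocks are reward blocks."""
    return block % 2 == 0


def trial_reward(block, correct, fast):
    """Reward in cents for one trial."""
    if not is_reward_block(block) or not correct:
        return 0.0
    return ACCURACY_REWARD_CENTS + (SPEED_REWARD_CENTS if fast else 0.0)


# Upper bound on earnings: every trial in every reward block correct and fast.
max_total_cents = sum(
    trial_reward(block, correct=True, fast=True)
    for block in range(1, N_BLOCKS + 1)
    for _ in range(TRIALS_PER_BLOCK)
)
```

With 7 reward blocks of 48 trials each, the ceiling on task earnings under this scheme is 7 × 48 × 5 = 1,680 cents.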

Apparatus

Participants sat at a distance of 60 cm in front of a 20-in. LCD monitor with a screen resolution of 1,280 × 1,024 pixels. Throughout the experiment, a sequence of distractor videos was shown in the top right of the computer screen with a resolution of 320 × 180 pixels. The videos were Simon’s Cat animations, black-and-white videos of a cat; Simon’s Cat Ltd. had granted permission to use them. The videos played continuously until the experiment ended.

We used the EyeLink 1000 from SR Research as an eye-tracker device positioned in front of the LCD monitor. Participants used a chin rest during the experiment. We presented stimuli using OpenSesame (Mathôt, Schreij, & Theeuwes, 2012), and we used PyGaze (Dalmaijer, Mathôt, & van der Stigchel, 2014) to interact with the EyeLink 1000.

We measured the right eye’s diameter with a sample rate of 250 Hz. Before the experiment started, we performed calibration and drift correction. The EyeLink 1000 recorded the eye’s diameter, gaze positions, saccades, and blinks.

During the experiment, the participants wore a Cortrium C3 Holter Monitor from Cortrium ApS. The device recorded three ECG channels in real time with a sample rate of 250 Hz. We linked the ECG data to an iPad device to save all the data.

Measures

Subjective measures

To measure fatigue in each block, we used VAS, a horizontal rating scale with a fixed length of 100 mm (Lee, Hicks, & Nino-Murcia, 1990). This scale has anchors and ranges from 1 (not at all fatigued) on the far left side to 100 (extremely fatigued) on the opposite side. It has high internal consistency, reliability, and validity to measure fatigue (Mizuno et al., 2011).

To measure participants’ subjective mental effort in each block, we used RSME (Zijlstra & van Doorn, 1985). This scale has good validity to measure mental workload and has been used in many studies (van der Linden et al., 2003). RSME uses a vertical scale from 0 to 150 with some anchors from absolutely no effort to extreme effort. These two measures were printed double-sided on a page, with VAS as the front page.

Performance measures

For each trial, RT was calculated as the time between the presentation onset of the last pseudoword and the moment the participant pressed the space bar. If the participant had not pressed the space bar, RT was equal to the duration of the last pseudoword being presented in that trial (because three consecutive pseudowords were never presented longer than 15 s, the maximum RT for a trial was, therefore, 5 s). To average all RTs per block, we used only the times of correct trials.

A response was considered correct if the reported number was correct for both target vowels; one vowel correct was defined as incorrect (we also used one vowel correct as a measure of accuracy, but because this had no effect on the results, we will report only on both vowels correct). Accuracy was expressed as the percentage of correct responses in a block.
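The two performance measures can be summarized per block as in the sketch below. The trial record layout (`rt_ms`, `correct`) and the function names are assumptions made for illustration.

```python
from statistics import mean


def trial_rt(onset_ms, press_ms, duration_ms):
    """RT measured from the onset of the last pseudoword. If the space bar
    was not pressed (press_ms is None), RT equals the presentation duration."""
    if press_ms is None:
        return duration_ms
    return press_ms - onset_ms


def summarize_block(trials):
    """Return (mean RT over correct trials, accuracy in percent).
    A trial counts as correct only if both vowel counts were right."""
    correct_rts = [t["rt_ms"] for t in trials if t["correct"]]
    accuracy_pct = 100.0 * len(correct_rts) / len(trials)
    mean_rt = mean(correct_rts) if correct_rts else None
    return mean_rt, accuracy_pct


# Toy block of four trials; times are in milliseconds.
trials = [
    {"rt_ms": trial_rt(0, 3000, 5000), "correct": True},
    {"rt_ms": trial_rt(0, None, 5000), "correct": False},
    {"rt_ms": trial_rt(0, 4000, 5000), "correct": True},
    {"rt_ms": trial_rt(0, None, 5000), "correct": False},
]
mean_rt, accuracy_pct = summarize_block(trials)
```

Here the two incorrect trials contribute to accuracy but not to the mean RT, which is computed over the correct trials only.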

Physiological measures

We processed the ECG data derived from the Cortrium device using the PreCAR software (van Roon & Mulder, 2017) to create an R peak event series from the ECG raw data and also to correct missing R peaks or double-triggered R peaks. From the corrected R peak event series, we used CARSPAN (Mulder, Hofstetter, & van Roon, 2009) to determine HRV in the MF band (0.07–0.14 Hz) and HF band (0.15–0.4 Hz) for each experimental block. We used the power in the MF band as an indicator of cognitive mental effort (Aasman et al., 1987; Mulder & Mulder, 1981; Schellekens, Sijtsma, Vegter, & Meijman, 2000) and the HF band as an indicator of parasympathetic activity during the experiment (Berntson et al., 1997; Task Force of the European Society of Cardiology, 1996). Power data for each block were normalized for each participant by expressing power as the proportion of power in a block to the average power across the experiment.
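The within-participant normalization described above amounts to dividing each block’s value by that participant’s mean across blocks. A minimal sketch with a hypothetical function name and made-up power values:

```python
def normalize_by_participant_mean(block_values):
    """Express each block's value as a proportion of the participant's
    average across all blocks of the experiment."""
    average = sum(block_values) / len(block_values)
    return [value / average for value in block_values]


# Made-up MF power values for three blocks of one participant.
mf_power = [2.0, 4.0, 6.0]
normalized = normalize_by_participant_mean(mf_power)
```

A normalized value of 1 therefore means that the block equals the participant’s own average, which makes values comparable across participants with different baseline levels. The same operation applies to the pupil diameter normalization.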

We obtained eyeblink, pupil diameter, eye gaze, and eye saccades data directly from the EyeLink 1000. We converted all pupil measures from the EyeLink 1000 to ASCII format using EDF2ASC (a dedicated program from SR Research). Afterward, we used Eyelinker (Barthelme, 2016), a package from R (R Development Core Team, 2008), to convert the ASCII format into a more structured format. Furthermore, for every trial, we filtered these data sets from the beginning of a stimulus until the onset of the answer input screen.

We used eyeblink data as a further measure of fatigue (Martins & Carvalho, 2015). For each block, we determined eyeblink frequency and calculated the mean eyeblink duration.

We used the pupil diameter as a measure of cognitive effort and cognitive load (Mathôt, 2018). Pupils dilate when the workload increases (Karatekin, 2004). We calculated the mean pupil diameter for each block and normalized it for each participant by dividing it by the participant’s average pupil diameter for the entire experiment.

To measure attention to the main task stimuli, we also measured the frequency of eye saccades within the part of the screen in which the pseudowords were presented; that is, a saccade was counted when both its start and end coordinates fell within the stimulus-screen window and thus outside the video coordinates. The stimulus-screen window that was used for saccade detection was not shown to participants and was located in the center of the screen, as displayed in Figure 1. The window’s size was approximately the same as the size of the pseudowords being displayed. We also calculated the mean amplitude of eye saccades for each block.

We used eye gaze data as a measure of visual distraction. Every time a participant shifted his or her eyes to the video distractor (i.e., when the point of gaze was within the video coordinates) for longer than 200 ms, this was counted as one instance of visual distraction; the number of instances per block gave the visual distraction frequency. We also calculated the mean visual distraction duration for each block.
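The distraction criterion can be illustrated with a simple run-length scan over gaze samples. This is a sketch under assumptions: the video rectangle is taken to occupy the exact top-right corner of the 1,280 × 1,024 screen, samples are assumed evenly spaced at 250 Hz (4 ms), and the function names are hypothetical.

```python
SAMPLE_MS = 4                  # 250 Hz sampling, one sample every 4 ms
MIN_DISTRACTION_MS = 200       # gaze must stay on the video longer than this
VIDEO = (960, 0, 1280, 180)    # assumed x_min, y_min, x_max, y_max (pixels)


def in_video(x, y):
    x_min, y_min, x_max, y_max = VIDEO
    return x_min <= x < x_max and y_min <= y < y_max


def distraction_episodes(gaze):
    """Return the duration (ms) of every uninterrupted run of gaze samples
    inside the video area that lasts longer than MIN_DISTRACTION_MS."""
    durations = []
    run = 0
    # A trailing sentinel sample closes a run that reaches the end of the data.
    for x, y in list(gaze) + [(None, None)]:
        if x is not None and in_video(x, y):
            run += 1
        else:
            if run * SAMPLE_MS > MIN_DISTRACTION_MS:
                durations.append(run * SAMPLE_MS)
            run = 0
    return durations


center, video = (640, 512), (1100, 90)
gaze = [center] * 10 + [video] * 60 + [center] * 5 + [video] * 30 + [center] * 5
episodes = distraction_episodes(gaze)
frequency = len(episodes)
mean_duration_ms = sum(episodes) / frequency if frequency else 0.0
```

In this toy trace the 60-sample look (240 ms) counts as a distraction, while the 30-sample glance (120 ms) falls below the threshold and is ignored.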

Procedure

A few days before the experiment, all participants received an email that explained the experimental procedure, the reward scheme, and other aspects of the study. It also asked them not to drink coffee 24 hr before the study and to have adequate sleep. The email explained that the study was focused on attention and did not mention mental fatigue. Participants were informed that the experiment would last for 2.5 hr. However, participants were not aware of the number of blocks in the experiment.

Upon arrival, participants received further instructions on the experiment and signed informed consent forms. They had to turn off their mobile device(s) and hand over their wristwatches if they had them.

Participants were seated at a distance of 60 cm in front of LCD monitors. Heart rate monitors were then attached to their chests, and they were asked to rest for 5 min so their heart rates could stabilize. After that, participants were asked to put their chins on the chin supports, after which their forehead positions were adjusted if needed. Then, the eye trackers were set up and calibrated. Participants were asked not to move their heads (always maintaining their forehead positions) during the entire course of the experiment but were allowed to move their bodies while remaining seated on the chairs.

After they had received the task instructions, participants practiced on three sets of 10 trials. They were allowed to practice more if desired. After practice, the participants proceeded to the experimental blocks.

At the end of each block, the participants were asked to rate their experienced mental fatigue and subjective mental effort within 10 s before proceeding to the next block. The subjective rating sheets were placed on the left side of the table. When they completed the rating for each block, they were asked to place the sheet on their right side. Consequently, participants could notice when the experiment would end by looking at the remaining sheets.

The experiment lasted for approximately 2.5 hr (not including the setup and the practice session) and ended after 14 blocks. After it ended, or when participants decided to give up, they had a debriefing session wherein they were informed about the purpose of the study.

Statistical Analysis

We used linear mixed-effect models for all measures using the lme4 package (Bates, Mächler, Bolker, & Walker, 2015) in R (Version 3.4.2), except for pupil diameter. For the pupil diameter analysis, we used polynomial regression because the change in pupil diameter over time was not linear. We log-transformed the visual distraction frequency and used a binomial distribution for accuracy.

To determine the best fitting model for each measure, we fitted the models by maximum likelihood and compared them using the Akaike information criterion and the anova function in R, starting from the simplest model and moving to more complex models. The fixed-effect factors of our model were time-on-task and reward, and the random-effect factor was the participants. The interaction between factors was accounted for. The residuals and fitted values were examined for compliance with the assumption of constant variance. To detect influential outliers, we examined Cook’s D for each measure.
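For orientation, the most complex model in this comparison can be written out as below. This is a sketch under assumptions: the text names participants as the random-effect factor but does not specify the random-effects structure, so a random intercept per participant is assumed here; the symbols are introduced for illustration only.

```latex
% y_{ij}: measure for participant j in block i
% u_j: assumed random intercept for participant j
y_{ij} = \beta_0 + \beta_1\,\mathrm{Time}_{ij} + \beta_2\,\mathrm{Reward}_{ij}
       + \beta_3\,(\mathrm{Time}_{ij} \times \mathrm{Reward}_{ij}) + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)

% Akaike information criterion for a model with k estimated parameters
% and maximized likelihood \hat{L}; lower values indicate a better trade-off
\mathrm{AIC} = 2k - 2\ln\hat{L}
```

Simpler candidate models drop the interaction term or one of the main effects, and the model with the lowest AIC that is also supported by the likelihood-ratio comparison is retained.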

We obtained p values using the car package with type-III Wald chi-square tests for linear models and type-III F tests for polynomial models (Fox & Weisberg, 2011). For estimation of effect size, we obtained R² values for the mixed-effect models by calculating Ω₀² (Nakagawa & Schielzeth, 2012). To measure correlations between measures, we used the Hmisc package (Harrell, 2017) in R.

Results

Subjective Measures

Fatigue

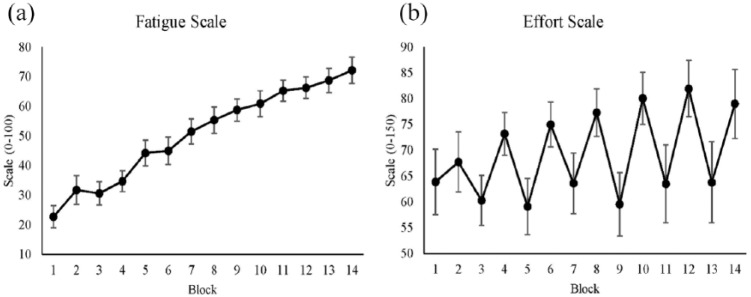

To test whether fatigue manipulation was successful, we used VAS. Figure 2a shows that the feeling of fatigue was successfully induced in our participants. Fatigue ratings increased linearly from the beginning to the last block, which indicates a significant effect of time (see Table 1). However, the effect of reward was not significant.

Figure 2.

(a) Average fatigue rating for each block using the Visual Analog Scale. The y-axis shows the subjective fatigue score, which runs from 0 to 100. (b) Average effort rating for each block using the Rating Scale Mental Effort. The y-axis shows the subjective mental effort score from 0 to 150. Both x-axes show blocks, where even blocks are the reward conditions. Standard errors are represented by the error bars in each block. All figures were plotted with raw values.

Table 1:

The Mixed-Effect Result of Mental Fatigue From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 22.33 | 3.38 | | | | .75 |
| Time | 3.76 | 0.18 | < .001 | 3.41 | 4.12 | |

Mental Effort

We used RSME to measure participants’ subjective mental effort during the experiment and to monitor reward manipulation. The effect of reward on subjective effort was significant (see Table 2), which is shown by an increase in subjective effort in reward blocks (see Figure 2b). However, the effect of time was not significant.

Table 2:

The Mixed-Effect Result of Mental Effort From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 69.14 | 3.48 | | | | .41 |
| Reward | 7.17 | 1.26 | < .001 | 4.69 | 9.66 | |

Performance Measures

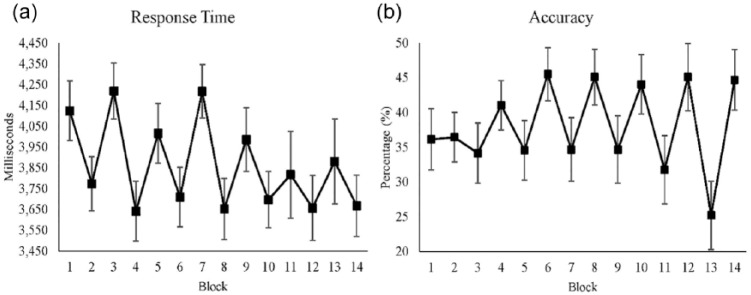

RT

RT decreased significantly over time (see Table 3). RTs were significantly faster in reward conditions. The interaction between time and reward was also significant: Over time, RT decreased in the nonreward conditions, but it did not in reward conditions (see Figure 3a).

Table 3:

The Mixed-Effect Result of Response Time From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 4011.49 | 125.16 | | | | .42 |
| Reward | −225.49 | 20.41 | < .001 | −265.51 | −185.47 | |
| Time | −25.98 | 2.46 | < .001 | −30.81 | −21.15 | |
| Reward × Time | 11.49 | 2.45 | < .001 | 6.69 | 16.28 | |

Figure 3.

(a) Average response time for each block when participants gave correct answers. (b) Average accuracy for each block. Both x-axes show blocks, where even blocks are the reward conditions. Standard errors are represented by the error bars in each block. All figures were plotted with raw values.

Accuracy

Accuracy shows that the task was difficult for the participants; less than 50% of the trials resulted in correct answers. Time and reward had a significant interaction effect on accuracy (see Table 4): accuracy depended on both time and condition (see Figure 3b). In the nonreward blocks, accuracy decreased over time, whereas in the reward blocks, accuracy was maintained over time. Accuracy correlated significantly with subjective mental effort, r(12) = .85, p < .01.

Table 4:

The Mixed-Effect Result of Accuracy From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | −0.535 | 0.172 | | | | .11 |
| Reward | 0.021 | 0.041 | .6 | −0.057 | 0.099 | |
| Time | −0.004 | 0.005 | .38 | −0.013 | 0.005 | |
| Reward × Time | 0.029 | 0.004 | < .001 | 0.021 | 0.039 | |
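Because accuracy was modeled with a binomial distribution, the coefficients in Table 4 are not percentages. Assuming the default logit link (the link function is not stated in the text), a coefficient on that scale can be converted to a probability with the inverse logit. The sketch below converts only the intercept, since the coding of the time and reward predictors is not reported.

```python
import math


def inv_logit(x):
    """Map a value on the log-odds scale to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


# Intercept from Table 4, interpreted as log-odds (assumes a logit link).
intercept_log_odds = -0.535
baseline_accuracy = inv_logit(intercept_log_odds)
```

Under these assumptions the intercept corresponds to a predicted accuracy of roughly 37% when all predictors are at zero, which is in line with the observation that fewer than half of the trials were answered correctly.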

Physiological Measures

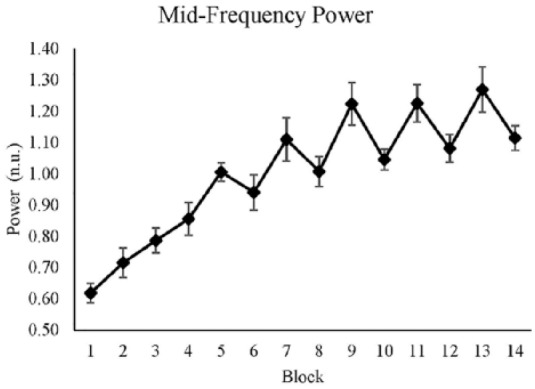

MF band

We used the power in the MF band of HRV as an indicator of cognitive mental effort. A higher value means the participants invested less cognitive effort, whereas a lower value expresses the opposite. Time had a significant effect on MF power, with an increase in MF power over time, while the main effect of reward was not significant (see Table 5). There was a significant interaction effect of time and reward: over time, the difference between reward and nonreward conditions increased (see Figure 4).

Table 5:

The Mixed-Effect Result of Midfrequency Power From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 0.652 | 0.039 | | | | .38 |
| Reward | 0.062 | 0.058 | .28 | −0.052 | 0.177 | |
| Time | 0.054 | 0.004 | < .001 | 0.044 | 0.064 | |
| Reward × Time | −0.023 | 0.006 | < .001 | −0.036 | −0.009 | |

Figure 4.

Average midfrequency power for each block. The y-axis shows the normalized value of the midfrequency power, and the x-axis shows blocks, where even blocks are the reward conditions. Standard errors are represented by the error bars in each block. The figure was plotted with raw values.

HF band

We calculated the power in the HF band as an indicator of parasympathetic activity during the experiment. We did not find any significant effect in the HF band.

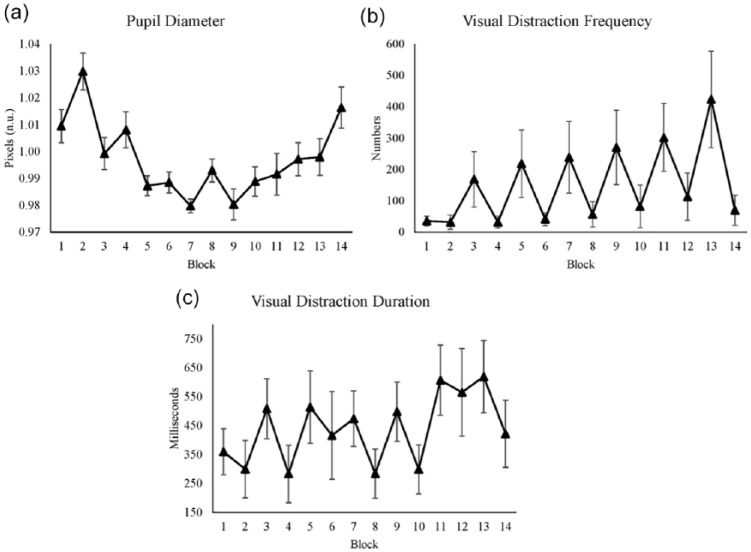

Pupil diameter

We used pupil diameter as a measure of cognitive load and cognitive control. Both time and time² had significant effects on pupil diameter, as did reward (see Table 6). From the first to the seventh block, pupil diameter decreased, and after that it increased until the last block (see Figure 5a). The significant effect of reward is illustrated by a larger pupil diameter in reward blocks.

Table 6:

Polynomial Regression of Pupil Diameter From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 1.0261 | 0.0058 | | | | .2 |
| Reward | 0.0246 | 0.0068 | < .001 | 0.0111 | 0.0382 | |
| Time | −0.0123 | 0.0016 | < .001 | −0.0156 | −0.0091 | |
| Time² | 0.0008 | 0.0001 | < .001 | 0.0006 | 0.0011 | |
| Reward × Time | −0.0017 | 0.0008 | .037 | −0.0033 | −0.0001 | |

Figure 5.

(a) Average pupil diameter for each block. (b) Average visual distraction frequency for each block. (c) Average visual distraction duration for each block. All figures’ y-axes show their value respectively, and x-axes show blocks, where even blocks are the reward conditions. Standard errors are represented by the error bars in each block. All figures were plotted with raw values.

Moreover, time and reward showed a significant interaction effect. While pupil dilation showed a difference between reward and nonreward conditions in the first part of the experiment, this difference disappeared in the last part of the experiment (see Figure 5a).
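The U-shaped course of pupil diameter can be checked against the polynomial coefficients in Table 6. A quadratic a·t² + b·t + c with a > 0 reaches its minimum at t = −b / (2a). The sketch below applies this to the rounded nonreward coefficients, assuming that time was entered as the block number and that reward was coded 0 for nonreward blocks; neither coding is stated in the text.

```python
def quadratic_turning_point(a, b):
    """Location of the extremum of a*t**2 + b*t + c."""
    return -b / (2.0 * a)


# Rounded coefficients from Table 6 (nonreward condition under the
# assumed coding): Time = -0.0123, Time squared = 0.0008.
turning_block = quadratic_turning_point(a=0.0008, b=-0.0123)
```

Under these assumptions the fitted minimum falls between the seventh and eighth blocks, consistent with the description that pupil diameter decreased until about the seventh block and increased afterward. Because the published coefficients are rounded, the exact location is only approximate.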

Visual distraction frequency

We measured visual distraction frequency as an indicator of whether participants were distracted by the video distractor during the experiment. Time had a significant effect on visual distraction frequency, which is shown by an increase in visual distraction frequency over time (see Table 7). Reward had a significant effect on visual distraction frequency, with fewer distractions in reward conditions (see Figure 5b). We found a significant correlation between visual distraction frequency and MF power, r(12) = .71, p < .01. Moreover, there was a significant negative correlation between visual distraction frequency and subjective mental effort, r(12) = −.60, p < .05. This indicates that task disengagement was related to effort investment.

Table 7:

The Mixed-Effect Result of Visual Distraction Frequency From the Best Fitted Model in Logarithmic Scales

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 1.25 | 0.16 | | | | .69 |
| Reward | −0.64 | 0.13 | <.001 | −0.91 | −0.38 | |
| Time | 0.04 | 0.01 | <.001 | 0.02 | 0.07 | |
| Reward × Time | −0.01 | 0.01 | .286 | −0.04 | 0.01 | |

Visual distraction duration

The purpose of this measure was similar to the visual distraction frequency. Visual distraction duration significantly increased over time (see Table 8). Reward also had a significant effect on visual distraction duration. In reward conditions, durations were shorter (see Figure 5c).

Table 8:

The Mixed-Effect Result of Visual Distraction Duration From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 400.43 | 94.97 | | | | .63 |
| Reward | −160.31 | 37.75 | <.001 | −257.28 | −112.53 | |
| Time | 15.84 | 4.68 | <.001 | 5.31 | 23.26 | |

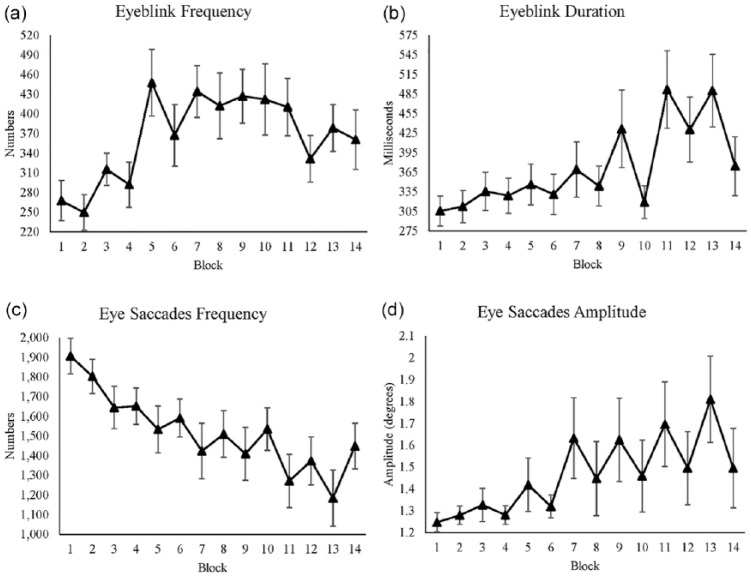

Eyeblink frequency

We used eyeblink frequency as a measure of fatigue. Eyeblink frequency significantly increased over time (see Table 9). In reward blocks, eyeblink frequency was significantly lower (see Figure 6a).

Table 9:

The Mixed-Effect Result of Eyeblink Frequency From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 322.49 | 29.63 | | | | .31 |
| Reward | −43.78 | 19.52 | .024 | −82.19 | −5.36 | |
| Time | 8.65 | 2.42 | < .001 | 3.89 | 13.42 | |

Figure 6.

(a) Average eyeblink frequency for each block. (b) Average eyeblink duration for each block. (c) Average eye saccades frequency for each block. (d) Average eye saccades amplitude for each block. All figures’ y-axes show their value respectively, and x-axes show blocks, where even blocks are the reward conditions. Standard errors are represented by the error bars in each block. All figures are plotted with raw values.

Eyeblink duration

Similar to eyeblink frequency, we used eyeblink duration to measure fatigue. Time had a significant effect on eyeblink duration (see Table 10), which is shown by an increase in eyeblink duration over time (see Figure 6b). However, the effect of reward was not significant. Time and reward had a significant interaction effect on eyeblink duration: In nonreward blocks, eyeblink duration increased over time, while there was no change in duration in reward blocks. We found a significant correlation between visual distraction frequency and eyeblink duration, r(12) = .81, p < .001, which indicates that the more frequently participants shifted their attention to the cat video, the longer their blinks lasted (see Figure 6b).

Table 10:

The Mixed-Effect Result of Eyeblink Duration From the Best Fitted Model

| Effect | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 276.96 | 34.02 | | | | .47 |
| Reward | 18.02 | 35.48 | .61 | −51.77 | 87.83 | |
| Time | 16.99 | 2.94 | < .001 | 11.19 | 22.78 | |
| Reward × Time | −10.27 | 4.16 | .013 | −18.46 | −2.07 | |

Eye saccades frequency

We calculated saccades frequency to measure participants’ attention toward the main stimuli. Time had a significant effect on saccades frequency (see Table 11), with a decrease in saccades frequency over time. The main effect of reward was not significant, but reward and time showed a significant interaction: As the experiment progressed, the difference between reward and nonreward conditions increased, with higher saccades frequency in reward than in nonreward blocks (see Figure 6c).

Table 11:

The Mixed-Effect Result of Eye Saccades Frequency From the Best Fitted Model

| | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 1861.97 | 101.83 | | | | .59 |
| Reward | −62.97 | 91.45 | .49 | −242.88 | 116.92 | |
| Time | −54.24 | 7.59 | < .001 | −69.18 | −39.31 | |
| Reward × Time | 24.29 | 10.74 | .02 | 3.17 | 45.42 | |
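The confidence limits in Table 11 can be related to the estimates and standard errors with a normal-approximation (Wald) interval, estimate ± 1.96 × SE. This is only an illustrative sketch: how the intervals were actually computed is not stated in this section, and the reported limits are slightly wider than the Wald values, as would be expected from a t-based or profile method.

```python
# (estimate, standard error, reported lower, reported upper) from Table 11.
TABLE_11 = {
    "Reward": (-62.97, 91.45, -242.88, 116.92),
    "Time": (-54.24, 7.59, -69.18, -39.31),
    "Reward x Time": (24.29, 10.74, 3.17, 45.42),
}


def wald_ci(estimate: float, se: float, z: float = 1.96) -> tuple:
    """Normal-approximation 95% interval: estimate +/- z * SE."""
    half_width = z * se
    return estimate - half_width, estimate + half_width


# Track the largest absolute gap between Wald and reported limits.
max_gap = 0.0
for name, (est, se, rep_lo, rep_hi) in TABLE_11.items():
    lo, hi = wald_ci(est, se)
    max_gap = max(max_gap, abs(lo - rep_lo), abs(hi - rep_hi))
    print(f"{name}: Wald [{lo:.2f}, {hi:.2f}] vs reported [{rep_lo}, {rep_hi}]")

print(f"largest gap: {max_gap:.2f}")
```

The approximation reproduces every reported limit to within one unit of saccades frequency, so the table is internally consistent under this assumption.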

Eye saccades amplitude

Saccades amplitude is the angular distance (in degrees) that the eyes travel during a rapid eye movement (saccade). Therefore, similar to eye saccades frequency, we used the saccades amplitude to measure participants’ focus toward the main stimuli. Time had a significant effect on eye saccades amplitude (see Table 12), with an increase in the amplitude over time. The effect of reward was not significant. Time and reward had a significant interaction effect on eye saccades amplitude: As the experiment progressed, the difference between reward and nonreward conditions increased, with higher saccades amplitude in nonreward than in reward blocks (see Figure 6d).

Table 12:

The Mixed-Effect Result of Eye Saccades Amplitude From the Best Fitted Model

| | Mean | Standard Error | p Value | 95% CI Lower Limit | 95% CI Upper Limit | R² |
|---|---|---|---|---|---|---|
| (Intercept) | 1.21 | 0.12 | | | | .59 |
| Reward | 0.01 | 0.11 | .88 | −0.21 | 0.23 | |
| Time | 0.04 | 0.01 | < .001 | 0.02 | 0.06 | |
| Reward × Time | −0.02 | 0.01 | .04 | −0.05 | −0.01 | |

Discussion

In this study, we performed a continuous assessment of the contribution of motivation to mental fatigue by conducting a mentally fatiguing experiment for 2.5 hr with two conditions (i.e., reward and nonreward). To do a comprehensive assessment, we used three types of measures: subjective, performance, and physiological measures.

Several measures showed an effect of motivation: Participants maintained their performance and regulated their mental effort by investing more effort in reward blocks than in nonreward blocks. First, the main performance measure (i.e., accuracy) remained stable and did not decline in reward blocks, which was also supported by higher subjective mental effort (RSME) in these blocks. Second, the power of HRV in the MF band was lower in reward blocks than in nonreward blocks, suggesting that in the reward blocks participants invested more cognitive effort. Third, the visual distraction data showed that participants were still engaged with the task in the reward conditions but that in the nonreward conditions, participants were susceptible to distractions. Last, participants showed less blinking (less frequent and of shorter duration) in the reward conditions, which also suggests that participants exerted more cognitive control in the reward blocks (Hockey, 2011; McIntire, McKinley, Goodyear, & McIntire, 2014).

These results are consistent with a number of other studies. Boksem et al. (2006) showed that in a sustained attention task in which performance steadily decreased over time, performance significantly increased after an increase in motivation (monetary reward) at the end of the study. Hopstaken et al. (2015) also showed that in a prolonged task, reward caused performance and subjective task engagement to increase significantly.

Given the increase in the discrepancy between reward and nonreward blocks throughout the experiment, it is evident that reward plays an important role in mental fatigue. Participants were gradually less willing to do the task and only sustained their performance if there was a sufficiently large, extrinsic reward. Although we do not see a decline in performance in reward blocks, participants might have recovered resources during the nonreward blocks, helping them to sustain performance (Helton & Russell, 2017; Szalma & Matthews, 2015).

An interesting measure was RT. Participants responded faster over time, with slower RTs in nonreward blocks than in reward blocks. The task became more challenging, speeding up by 0.1 s every time participants gave a correct and fast answer; it could not be slowed down. Therefore, the task design per se compelled participants to react faster over time, following their performance. Consequently, the better participants did the task (as shown in the accuracy), the more difficult the task became, and the more effort they had to put into the task (subjectively and physiologically). This is reflected by faster RTs in reward blocks and slower RTs in nonreward blocks. Furthermore, the RTs indicate a learning effect and transfer of cognitive skills (see Taatgen, 2013); participants became proficient at doing the task in both conditions, which is shown by a smaller difference in RTs between reward and nonreward blocks over time. Although participants learned how to do the task, we designed the task to be equally challenging throughout the experiment; therefore, over time, the task did not become easier. An alternative explanation for the difference in RTs between the two conditions is that participants hurried their responses in nonreward conditions over time, leading to poorer accuracy in these blocks (see Dang, Figueroa, & Helton, 2018). This is reflected by a decrease in RTs in nonreward conditions over time; in reward conditions, RTs remained stable.
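The one-directional speed-up rule described above can be sketched as a small update function. This is a hypothetical illustration, not the experiment's implementation: the name `next_window`, the starting window of 2.0 s, the floor of 0.1 s, and the treatment of "fast" as a precomputed flag are all assumptions. Only the 0.1 s step and the fact that the task never slows down come from the text.

```python
def next_window(window_s: float, correct: bool, fast: bool,
                step_s: float = 0.1, floor_s: float = 0.1) -> float:
    """Return the time window for the next trial.

    The window shrinks by step_s after a correct and fast answer and
    otherwise stays the same; it never grows (the task cannot slow down).
    floor_s is a hypothetical lower bound to keep the window positive.
    """
    if correct and fast:
        return max(floor_s, round(window_s - step_s, 1))
    return window_s


window = 2.0  # hypothetical starting window in seconds
# (correct, fast) outcomes for four illustrative trials
trials = [(True, True), (True, False), (False, True), (True, True)]

history = []
for correct, fast in trials:
    window = next_window(window, correct, fast)
    history.append(window)

print(history)  # [1.9, 1.9, 1.9, 1.8]
```

Because the window only ever shrinks, good performance early in the session raises the difficulty for all later blocks, which is why RT trends here partly reflect the task design rather than fatigue alone.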

Another interesting measure in this experiment was pupil size, which slowly decreased in the first part and increased in the second part of the experiment. As pupil size increases when workload increases (Karatekin, 2004), our results suggest that in the beginning of the experiment, mental workload decreased (also evident in MF power), which we assume was due to a learning effect. Interesting to note, larger pupil sizes also have been linked to task engagement rather than disengagement (Hopstaken et al., 2015), to an expectation of reward (van der Linden, 2011), and to exploration rather than exploitation (Hopstaken et al., 2015). As a result, at the beginning of the experiment, participants explored how to do the task best, particularly in the reward conditions, resulting in large pupil size, but as they discovered how to do it, their workload decreased, and so did pupil size. Especially in the reward blocks, participants started exploiting the task and expected to obtain rewarding outcomes, which caused the pupils to dilate. However, as the experiment progressed, participants became less engaged in the task and started exploring for more rewarding activities, causing pupil size to increase again.

In summary, motivation appears to be an essential factor in mental fatigue. People can maintain their performance in a particular task as long as they are still motivated to do the task (when the expected trade-off between cost and reward is favorable) by investing more effort in or by allocating more resources to the task. Outside the laboratory, people continuously have to weigh different task goals to decide whether to keep investing effort in the same task or to look for other, potentially more rewarding goals such as eating, watching television, or playing on smartphones.

In future research, it would be interesting to have a control group in the same experiment wherein participants would not be rewarded throughout the study to see a clear comparison between motivational and nonmotivational conditions. Also, since our study was limited to a laboratory experiment, future research should address real-life tasks (e.g., extended surgical procedures, long-distance bus driving, air traffic control). It would be interesting to build a cognitive model of this study to have a picture of how we process information when fatigued. In addition, it will be interesting to study how the mental competition between doing the primary task and other tasks is modulated and controlled.

Key Points

- Participants showed task performance decrements over time in nonreward blocks but not in reward blocks.

- Participants became more distracted and less engaged with the task in nonreward blocks but not in reward blocks.

- Our findings suggest that motivation is essential in explaining the effects of mental fatigue.

Acknowledgments

This research was funded by Lembaga Pengelola Dana Pendidikan (LPDP) from the Indonesian government to M. B. Herlambang.

Biography

Mega B. Herlambang is a PhD student at the University of Groningen. He received his master’s in industrial engineering from Bandung Institute of Technology, Indonesia.

Niels A. Taatgen is a full professor and chair of the Bernoulli Institute of Mathematics, Computer Science and Artificial Intelligence at the University of Groningen. He obtained his PhD in psychology in 1999 from the University of Groningen.

Fokie Cnossen is an assistant professor at the University of Groningen, Bernoulli Institute of Mathematics, Computer Science and Artificial Intelligence. She obtained her PhD in psychology in 2000 from the University of Groningen.

Footnotes

ORCID iD: Mega B. Herlambang  https://orcid.org/0000-0001-8946-2604


References

- Aasman J., Mulder G., Mulder L. J. M. (1987). Operator effort and the measurement of heart-rate variability. Human Factors, 29, 161–170. doi: 10.1177/001872088702900204

- Akerstedt T., Knutsson A., Westerholm P., Theorell T., Alfredsson L., Kecklund G. (2004). Mental fatigue, work and sleep. Journal of Psychosomatic Research, 57(5), 427–433. doi: 10.1016/j.jpsychores.2003.12.001

- Barthelme S. (2016). Eyelinker: Load raw data from EyeLink eye trackers. R package version 0.1. Retrieved from https://CRAN.R-project.org/package=eyelinker

- Bates D., Mächler M., Bolker B., Walker S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67, 1–48. doi: 10.18637/jss.v067.i01

- Berntson G. G., Bigger J. T., Jr., Eckberg D. L., Grossman P., Kaufmann P. G., Malik M., . . . van der Molen M. W. (1997). Heart rate variability: Origins, methods, and interpretive caveats. Psychophysiology, 34, 623–648. doi: 10.1111/j.1469-8986.1997.tb02140.x

- Boksem M. A. S., Meijman T. F., Lorist M. M. (2006). Mental fatigue, motivation and action monitoring. Biological Psychology, 72, 123–132. doi: 10.1016/j.biopsycho.2005.08.007

- Boksem M. A. S., Tops M. (2008). Mental fatigue: Cost and benefits. Brain Research Reviews, 59, 125–139. doi: 10.1016/j.brainresrev.2008.07.001

- Dalmaijer E. S., Mathôt S., van der Stigchel S. (2014). PyGaze: An open-source, cross-platform toolbox for minimal-effort programming of eyetracking experiments. Behavior Research Methods, 46, 913–921. doi: 10.3758/s13428-013-0422-2

- Dang J. S., Figueroa I. J., Helton W. S. (2018). You are measuring the decision to be fast, not inattention: The Sustained Attention to Response Task does not measure sustained attention. Experimental Brain Research, 236, 2255–2266. doi: 10.1007/s00221-018-5291-6

- DeLuca J. (2005). Fatigue, cognition, and mental effort. In DeLuca J. (Ed.), Fatigue as a window to the brain (pp. 37–57). London, UK: MIT Press.

- Earle F., Hockey B., Earle K., Clough P. (2015). Separating the effects of task load and task motivation on the effort-fatigue relationship. Motivation and Emotion, 39(4), 467–476. doi: 10.1007/s11031-015-9481-2

- Evans S., Seidman L., Tsao J., Lung K. C., Zeltzer L., Naliboff B. (2013). Heart rate variability as a biomarker for autonomic nervous system response differences between children with chronic pain and healthy control children. Journal of Pain Research, 6, 449–457. doi: 10.2147/JPR.S43849

- Fox J., Weisberg S. (2011). An R companion to applied regression (2nd ed.). Thousand Oaks, CA: Sage. Retrieved from https://socialsciences.mcmaster.ca/jfox/Books/Companion-2E/index.html

- Gawron V. J., French J., Funke D. (2001). An overview of fatigue. In Hancock P. A., Desmond P. A. (Eds.), Human factors in transportation: Stress, workload, and fatigue (pp. 581–595). Mahwah, NJ: Erlbaum.

- Gergelyfi M., Jacob B., Olivier E., Zenon A. (2015). Dissociation between mental fatigue and motivational state during prolonged mental activity. Frontiers in Behavioral Neuroscience, 9, 176. doi: 10.3389/fnbeh.2015.00176

- Grillon C., Quispe-Escudero D., Mathur A., Ernst M. (2015). Mental fatigue impairs emotion regulation. Emotion, 15, 383–389. doi: 10.1037/emo0000058

- Hagberg M. (1981). Muscular endurance and surface electromyogram in isometric and dynamic exercise. Journal of Applied Physiology, 51, 1–7. doi: 10.1152/jappl.1981.51.1.1

- Harrell F. E. (2017). Hmisc: Harrell miscellaneous. R package version 4.0-3. Retrieved from https://CRAN.R-project.org/package=Hmisc

- Helton W. S., Hollander T. D., Warm J. S., Tripp L. D., Parsons K., Matthews G., . . . Hancock P. A. (2007). The abbreviated vigilance task and cerebral hemodynamics. Journal of Clinical and Experimental Neuropsychology, 29(5), 545–552. doi: 10.1080/13803390600814757

- Helton W. S., Russell P. N. (2015). Rest is best: The role of rest and task interruptions on vigilance. Cognition, 134, 165–173. doi: 10.1016/j.cognition.2014.10.001

- Helton W. S., Russell P. N. (2017). Rest is still best: The role of qualitative and quantitative load of interruptions on vigilance. Human Factors, 59(1), 91–100. doi: 10.1177/0018720816683509

- Hockey G. R. J. (2011). A motivational control theory of cognitive fatigue. In Ackerman P. L. (Ed.), Cognitive fatigue: Multidisciplinary perspective on current research and future application (pp. 167–188). Washington, DC: American Psychological Association.

- Hopstaken J. F., van der Linden D., Bakker A. B., Kompier M. A. J. (2015). The window of my eyes: Task disengagement and mental fatigue covary with pupil dynamics. Biological Psychology, 110, 100–106. doi: 10.1016/j.biopsycho.2015.06.013

- Kang J. H., Kim J. K., Hong S. H., Lee C. H., Choi B. Y. (2016). Heart rate variability for quantification of autonomic dysfunction in fibromyalgia. Annals of Rehabilitation Medicine, 40(2), 301–309. doi: 10.5535/arm.2016.40.2.301

- Karatekin C. (2004). Development of attentional allocation in the dual task paradigm. International Journal of Psychophysiology, 52, 7–21. doi: 10.1016/j.ijpsycho.2003.12.002

- Kurzban R., Duckworth A., Kable J. W., Myers J. (2013). An opportunity cost model of subjective effort and task performance. Behavioral and Brain Sciences, 36, 661–679. doi: 10.1017/S0140525X12003196

- Lee K. A., Hicks G., Nino-Murcia G. (1990). Validity and reliability of a scale to assess fatigue. Psychiatry Research, 36, 291–298. doi: 10.1016/0165-1781(91)90027-M

- Lorist M. M., Klein M., Nieuwenhuis S., de Jong R., Mulder G., Meijman T. F. (2000). Mental fatigue and task control: Planning and preparation. Psychophysiology, 37, 614–625. doi: 10.1111/1469-8986.3750614

- Marcora S. M., Staiano W., Manning V. (2009). Mental fatigue impairs physical performance in humans. Journal of Applied Physiology, 106, 857–864. doi: 10.1152/japplphysiol.91324.2008

- Martins R., Carvalho J. M. (2015). Eye blinking as an indicator of fatigue and mental load—A systematic review. In Arezes P. M., Baptista J. S., Barroso M. P. (Eds.), Occupational safety and hygiene III (pp. 231–235). London, UK: Taylor & Francis.

- Mathôt S. (2018). Pupillometry: Psychology, physiology, and function. Journal of Cognition, 1(1), 16. doi: 10.5334/joc.18

- Mathôt S., Schreij D., Theeuwes J. (2012). OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods, 44, 314–324. doi: 10.3758/s13428-011-0168-7

- McIntire L. K., McKinley R. A., Goodyear C., McIntire J. P. (2014). Detection of vigilance performance using eye blinks. Applied Ergonomics, 14, 354–362. doi: 10.1016/j.apergo.2013.04.020

- Mizuno K., Tanaka M., Yamaguti K., Kajimoto O., Kuratsune H., Watanabe Y. (2011). Mental fatigue caused by prolonged cognitive load associated with sympathetic hyperactivity. Behavioral and Brain Functions, 7, 17. doi: 10.1186/1744-9081-7-17

- Mulder B., Hofstetter H., van Roon A. M. (2009). Carspan for windows user’s manual (Version 0.0.1.36). Groningen, the Netherlands: Author.

- Mulder G., Mulder L. J. M. (1981). Information processing and cardiovascular control. Psychophysiology, 18, 392–402. doi: 10.1111/j.1469-8986.1981.tb02470.x

- Nakagawa S., Schielzeth H. (2012). A general and simple method for obtaining R2 from generalized linear mixed-effects models. Methods in Ecology and Evolution, 4, 133–142. doi: 10.1111/j.2041-210x.2012.00261.x

- R Development Core Team. (2008). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from http://www.R-project.org

- Schellekens J. M. H., Sijtsma G. J., Vegter E., Meijman T. F. (2000). Immediate and delayed after-effects of long lasting mentally demanding work. Biological Psychology, 53, 37–56. doi: 10.1016/s0301-0511(00)00039-9

- Shaw T. H., Warm J. S., Finomore V., Tripp L., Matthews G., Weiler E., Parasuraman R. (2009). Effects of sensory modality on cerebral blood flow velocity during vigilance. Neuroscience Letters, 461, 207–211. doi: 10.1016/j.neulet.2009.06.008

- Szalma J. L., Matthews G. (2015). Motivation and emotion. In Hoffman R. R., Hancock P. A., Scerbo M. W., Parasuraman R., Szalma J. L. (Eds.), The Cambridge handbook of applied perception research (pp. 218–240). New York, NY: Cambridge University Press.

- Taatgen N. A. (2013). The nature and transfer of cognitive skills. Psychological Review, 120(3), 439–471. doi: 10.1037/a0033138

- Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. (1996). Heart rate variability: Standards of measurement, physiological interpretation and clinical use. Circulation, 93, 1043–1065. doi: 10.1161/01.CIR.93.5.1043

- van der Linden D. (2011). The urge to stop: The cognitive and biological nature of acute mental fatigue. In Ackerman P. L. (Ed.), Cognitive fatigue: Multidisciplinary perspectives on current research and future applications (pp. 149–164). Washington, DC: American Psychological Association.

- van der Linden D., Frese M., Meijman T. F. (2003). Mental fatigue and the control of cognitive processes: Effects on perseveration and planning. Acta Psychologica, 113, 45–65. doi: 10.1016/S0001-6918(02)00150-6

- van Roon A. M., Mulder L. J. M. (2017). PreCAR version 3.7 user’s manual. Groningen, the Netherlands: Author.

- Warm J. S., Matthews G., Parasuraman R. (2009). Cerebral hemodynamics and vigilance performance. Military Psychology, 21, S75–S100. doi: 10.1080/08995600802554706

- Warm J. S., Parasuraman R., Matthews G. (2008). Vigilance requires hard mental work and is stressful. Human Factors, 50, 433–441. doi: 10.1518/001872008X312152

- Zijlstra F., van Doorn L. (1985). The construction of a scale to measure subjective effort (Technical report). Delft, the Netherlands: Delft University of Technology.