Abstract

Background:

Rapid advancements in biomedical research have accelerated the growth in the number of relevant electronic documents published online, ranging from scholarly articles to news, blogs, and user-generated social media content. Nevertheless, much of this information is poorly organized, making it difficult to navigate. Emerging technologies such as ontologies and knowledge bases (KBs) could help organize and track the information associated with biomedical research developments. A major challenge in the automatic construction of ontologies and KBs is the identification of words with their respective sense(s) from a free-text corpus. Word-sense induction (WSI) is the task of automatically inducing the different senses of a target word in different contexts. In the last two decades, there have been several efforts on WSI. However, few methods are effective in biomedicine and the life sciences.

Methods:

We developed a framework for biomedical entity sense induction using a mixture of natural language processing, supervised, and unsupervised learning methods with promising results. It is composed of three main steps: (1) a polysemy detection method to determine if a biomedical entity has more than one possible meaning; (2) a clustering quality index-based approach to predict the number of senses for the biomedical entity; and (3) a method to induce the concept(s) (i.e., senses) of the biomedical entity in a given context.

Results:

To evaluate our framework, we used the well-known MSH WSD polysemic dataset, which contains 203 annotated ambiguous biomedical entities, each linked to 2–5 concepts. First, our polysemy detection method obtained an F-measure of 98%. Second, our approach for predicting the number of senses achieved an F-measure of 93%. Finally, we induced the concepts of the biomedical entities based on a clustering algorithm and then extracted the keywords of each cluster to represent the concept.

Conclusions:

We have developed a framework for biomedical entity sense induction with promising results. Our study results can benefit a number of downstream applications, for example, by helping to resolve concept ambiguities when building Semantic Web KBs from biomedical text.

Keywords: Word sense induction, Polysemy detection, Biomedicine, BioNLP, Clustering, Classification, Number of clusters prediction

1. Introduction

The World Wide Web is by far the most extensive information source, growing exponentially every day with inputs from a large number of Internet users. Much of the information on the web is textual and covers a wide range of domains. In particular, recent advances in biomedical research have accelerated the rate at which health information is published on the web, ranging from scholarly articles and news to blogs and user-generated social media content. The increasing capability and sophistication of biomedical tools and instruments have also contributed to the build-up of large volumes of biomedical data (e.g., electronic health records storing patient information).

Ontologies and knowledge bases (KBs) can help organize and track the information associated with biomedical research developments. The last few years have witnessed significant research efforts in automating ontology enrichment and KB construction by leveraging the vast amount of electronic free-text data on the web. Nevertheless, one of the major challenges in automating KB and ontology construction is the identification of words or phrases (entities) with their respective sense(s), which has received attention only very recently [44,23,29].

Word-sense induction (WSI) is a task to automatically induce the different senses of a target word in a piece of text. The output of WSI is a sense inventory (a set of senses for the target word). Most existing WSI approaches are based on unsupervised learning algorithms with senses represented as clusters of tokens (e.g., words or phrases). There have been very few studies that use WSI in the context of information retrieval [74,58].

In general, existing WSI approaches only consider sense induction for individual words, such as verbs, nouns, and adjectives [2,53]. However, biomedical entities (or biomedical terms) are often composed of more than one word. Indeed, more than 80% of biomedical entities are composed of two or more words in the Unified Medical Language System (UMLS) metathesaurus.1

Another issue with existing WSI methods is that they do not first check whether a target word is polysemic (i.e., ambiguous). Thus, a significant amount of computing time is wasted on identifying the different senses of non-polysemic words. Reducing the runtime of WSI algorithms is crucial for real-world applications. A subsequent challenge is to determine the number of senses (i.e., the number of clusters) of an entity. Further, for a new entity (i.e., one that does not exist in reference KBs or ontologies), there is no a priori knowledge about the candidate entity, which makes it even more challenging to determine the exact number of clusters. Thus, clustering algorithms for WSI often suffer from poor performance [25].

To address these challenges associated with applying WSI in biomedicine, we propose a novel framework for biomedical entity sense induction. Our framework is composed of three main steps: (1) a polysemy detection method to determine if a biomedical entity is ambiguous; (2) a clustering quality index-based approach to predict the number of senses for a biomedical entity; and (3) a method to induce the concept(s) (i.e., senses) of a biomedical entity.

The primary contributions of our work in comparison to our previous studies [50,52] are detailed below:

- We conducted a series of new evaluation experiments of our polysemy detection method (i.e., a supervised learning method based on 23 novel features we proposed in [50]) following best practices in machine learning; the method achieved an F-measure of 98%. We compared our polysemy detection approach with other similar methods (i.e., word classification tasks), which we adapted for the polysemy detection task.

- We presented in detail how we used a set of new clustering quality indexes and associated objective functions to predict the number of senses. Our method obtained an F-measure of 93%. We compared our method with 8 state-of-the-art clustering quality indexes that have been widely used for finding the number of clusters of a dataset. We also explored the 23 features [50] we used in polysemy detection for predicting the number of senses, which achieved an F-measure of 91%. Through these comprehensive evaluation experiments, our clustering quality index-based method outperformed all other benchmark methods significantly.

- We presented our methods based on clustering and keyword extractions for concept induction to complete the proposed biomedical entity sense induction framework. We evaluated the concept induction methods against the gold-standard definitions of the senses in UMLS. Our concept induction method performed reasonably well in our evaluations.

We used a gold-standard dataset based on 203 ambiguous biomedical entities extracted from MEDLINE article abstracts, where each entity is annotated with one or more MeSH concepts2 [37] and linked to the abstracts from which it was extracted. We have also experimented with a wide range of well-known supervised and unsupervised learning algorithms for the three steps in our framework.

The rest of the paper is organized as follows. Section 2 discusses existing work related to WSI and its applications in the biomedical domain. We detail our biomedical entity sense induction framework in Section 3. We present and discuss our evaluation results in Section 4. We conclude the work and discuss future directions in Section 5.

2. Related work

2.1. Word-sense induction

WSI is a natural language processing (NLP) task that aims to automatically identify the different senses (i.e., meanings) of a word in a piece of text. WSI is closely related to word-sense disambiguation (WSD), which is the task of determining the correct sense of a word in a context [57]. Unsupervised WSD methods are considered WSI techniques that aim to discover senses automatically from unlabeled corpora [57]. The output of a WSI algorithm is a set of senses (i.e., a sense inventory) for each target entity, derived from the text corpora without any other knowledge resources (e.g., existing terminology services). WSI methods are mostly based on clustering algorithms, where each cluster represents a distinct sense of the word. Thus, a major problem of WSI is to determine the number of clusters (i.e., senses) within a given context, which most clustering algorithms take as a given input parameter.

Existing WSI approaches can be categorized into four types [57,83]: (1) context clustering, whose main idea is that the distributional profile of words in a corpus implicitly expresses their semantics [69,79,14,66,73,74,64,65,80,34,6,7], e.g., SenseClusters3 [68]; (2) word clustering, which seeks to cluster words that are semantically similar, with each cluster representing a specific sense [48,63,19,80,67,60,46]; (3) co-occurrence graphs, where the semantics of a word are deduced by building and analyzing a word co-occurrence graph [58,85,81,82,1,26,3,38,42,39]; and (4) probabilistic clustering, which can discover latent topic structures from the contexts of the words without involving feature engineering [15,12,88,78,45,20,77,83].

Despite the number of WSI studies mentioned above, very few methods have been developed specifically for the biomedical domain. In [61], a network of word co-occurrences was defined to induce both word senses and word contexts. Another work [27] presented an efficient graph-based algorithm to cluster words into groups for WSI. Graph-based and topic modeling approaches were compared to evaluate state-of-the-art WSI methods in the clinical domain [18]. Another study [72] used agglomerative clustering on the context vectors of a target word to find semantic ambiguities in biomedical texts.

2.2. Polysemy detection

Polysemy detection seeks to detect whether an entity has more than one meaning (i.e., polysemic, true or false) based on the context of the entity. Term ambiguity detection is a task related to polysemy detection, as proposed in [10]: given a term and a corresponding topic domain, determining whether the term uniquely references a member of that topic domain. In [41], the authors proposed a rank-based distance measure to explore the vector-spatial properties of the ambiguous entity and to decide if a German preposition is polysemous or not. Polysemy detection is also related to other well-studied NLP topics such as named-entity disambiguation and WSD. However, named-entity disambiguation and WSD both assume that the number of senses for a target word is given. This assumption is inappropriate for enriching KBs, ontologies, and terminologies because the number of senses of a new candidate entity is not known.

In general, polysemy detection approaches (as well as WSI methods) discussed above characterize the text data into a vector of features, e.g., using the most well-known “bag-of-words” language model, and then use a learning algorithm (either supervised or unsupervised) to capture the polysemousness and the senses of a word.

2.3. Sense number prediction

In WSI, the number of senses (clusters) of ambiguous words is normally treated as a priori knowledge, and most popular clustering methods require the number of clusters to be defined as an input parameter. Nevertheless, in cluster analysis, a major problem is to determine the most appropriate number of clusters, which can significantly affect the clustering results and often leads to poor performance [25]. Hence, learning the appropriate number of senses for ambiguous words is crucial for WSI tasks [56]. Klapaftis et al. [39] used Hierarchical Dirichlet Processes [78] to predict the number of senses of a target word. More recently, Lau et al. [45] combined Hierarchical Dirichlet Processes with a non-parametric Bayesian method for the same purpose. Niu et al. [60] applied a cluster validation method to estimate the number of senses, with the number of clusters ranging from $k_{min} = 2$ to $k_{max} = 5$. However, all these approaches produced large numbers of word senses (up to 89) on the gold-standard SEMEVAL-2010 WSI dataset [45].

Several other strategies for estimating the optimal number of clusters have also been proposed [40,89,47,8,87]. In 1985, Milligan et al. conducted an extensive comparative evaluation of 30 methods for determining the number of clusters [55]. A more recent study [9] showed that Calinski and Harabasz’s index was the most effective measure for determining the most appropriate number of clusters, followed by Duda and Hart’s method [35]. There is also an R package, NbClust, that computes a number of the measures discussed in [55] for determining the number of clusters.

Although many algorithms have been suggested for determining the number of clusters, no single method appears to be the most reliable, possibly due to the high complexity of real-world datasets. Thus, a task-specific method for determining the number of clusters is preferable.

3. A new framework for biomedical entity sense induction

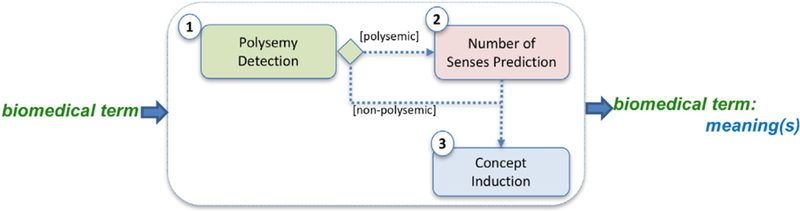

Our framework is composed of three steps tackling two specific problems in automating the biomedical entity sense induction task, as depicted in Fig. 1.

Fig. 1.

The proposed framework for biomedical entity sense induction.

- Polysemy detection is modeled as a binary classification task: true if a biomedical entity is associated with more than one sense in the specific context expressed in the source text, and false otherwise. This step is important because it narrows down the targets and reduces the number of options the downstream steps have to explore;

- Number of senses prediction predicts the number of concepts (k) associated with a biomedical entity, based on a set of clustering quality measures; and

- Concept induction induces the concepts of a biomedical entity according to its context, based on the extracted keywords of the clusters.

Our dataset contained 203 ambiguous biomedical entities extracted from MEDLINE4 abstracts as part of the word sense disambiguation (WSD) test collections published by the National Library of Medicine [37]. Each biomedical entity in the dataset was annotated with one or more MeSH concepts and linked to the abstracts from which it was extracted. To construct the classification models for polysemy detection, we manually curated a dataset of 203 non-ambiguous biomedical entities as negative samples. The curation process of this dataset is described in Section 4.1.2.

In the following sections, we will describe each step of the framework in detail.

3.1. Polysemy detection

In [50], we presented a set of statistical measures as features to characterize a piece of text. These features were extracted either directly from the text (i.e., direct features, e.g., the number of UMLS terms in the text) or from an undirected co-occurrence graph induced from the text (i.e., graph-based features, e.g., the unweighted degree of the target entity). A total of 23 features was proposed; a detailed description of these features is presented in the supplemental material. We also used two terminology resources, UMLS (biomedical) and AGROVOC (agronomic),5 to derive these features. These two dictionaries share a number of concepts, which can be considered polysemic entities belonging to both the biomedical and agricultural domains. For instance, the entity “cold” can refer either to a disease (i.e., the common cold) or to the sensation of low temperature in UMLS, as well as to the weather temperature in AGROVOC. Thus, we hypothesized that new entities (i.e., those not appearing in these two dictionaries) that co-occurred with existing polysemic entities were more likely to be polysemic as well.
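To make the graph-based features more concrete, the following Python sketch builds an undirected co-occurrence graph and computes the unweighted degree of a target entity. It is a minimal sketch under our own assumptions, not the implementation from [50]: the co-occurrence window is one sentence, multi-word entities are pre-joined into single tokens (e.g., `yellow_fever`), and `polysemic_neighbor_ratio` is a hypothetical feature illustrating the hypothesis above rather than one of the 23 published features.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def cooccurrence_graph(sentences: Iterable[List[str]]) -> Dict[str, Set[str]]:
    """Undirected graph: two tokens are linked if they appear in the same sentence."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for tokens in sentences:
        unique = set(tokens)
        for u in unique:
            for v in unique:
                if u != v:
                    graph[u].add(v)
    return graph


def unweighted_degree(graph: Dict[str, Set[str]], target: str) -> int:
    """Number of distinct neighbors of the target entity (0 if it never occurs)."""
    return len(graph.get(target, set()))


def polysemic_neighbor_ratio(graph: Dict[str, Set[str]], target: str,
                             known_polysemic: Set[str]) -> float:
    """Hypothetical feature: share of neighbors already known to be polysemic."""
    neighbors = graph.get(target, set())
    if not neighbors:
        return 0.0
    return len(neighbors & known_polysemic) / len(neighbors)


sentences = [
    ["yellow_fever", "virus", "vaccine"],
    ["yellow_fever", "vaccine", "mosquito"],
    ["virus", "cell"],
]
g = cooccurrence_graph(sentences)
print(unweighted_degree(g, "yellow_fever"))  # 3
print(polysemic_neighbor_ratio(g, "yellow_fever", {"cold", "vaccine"}))
```

A real feature extractor would also weight edges by co-occurrence counts and look entities up in UMLS and AGROVOC; both are omitted here.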

Based on these 23 features, we then experimented with a wide range of learning algorithms to determine whether an entity is polysemic.

3.2. Number of senses prediction

One of the most essential problems in WSI is to determine the number of senses k. Many algorithms have been proposed to identify k, but none was tailored for biomedical text [57,59,54,17]. Further, one limitation of these approaches is that they tend to predict a high number of senses, possibly due to the nature of the text they were targeting. In contrast, in the biomedical domain, according to UMLS version 2015 AA, polysemic terms were mostly linked to 2–5 senses. Thus, as we aim to identify possible senses for a new biomedical candidate term, we limit the number of senses to between 2 and 5, a range also used in [60].

Table 1 shows the descriptive statistics of polysemic entities in UMLS version 2015 AA for English, French, and Spanish. The English version of UMLS contained about 9,919,000 distinct entities, 65,546 of which were polysemic (i.e., ~0.66%; roughly one out of every 150 UMLS entities was polysemic). Similarly, Table 2 shows the descriptive statistics of polysemic entities in MeSH6 (Medical Subject Headings). The English version of MeSH contained 179 polysemic entities, i.e., ~0.02% (roughly one out of every 5000 MeSH entities). In short, non-polysemic (monosemous) entities far outnumber polysemic entities in the biomedical domain for all three languages: English, French, and Spanish.

Table 1.

Polysemic entities in UMLS.

| # of Senses | English | French | Spanish |
|---|---|---|---|
| 2 | 54,257 | 1,292 | 10,906 |
| 3 | 7,770 | 36 | 414 |
| 4 | 1,842 | 1 | 56 |
| 5+ | 1,677 | 1 | 18 |

Table 2.

Polysemic entities in MeSH.

| # of Senses | English | French | Spanish |
|---|---|---|---|
| 2 | 178 | 11 | 0 |
| 3 | 1 | 0 | 0 |
| 4 | 0 | 0 | 0 |
| 5+ | 0 | 0 | 0 |
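The share of polysemic entities quoted above can be re-derived from Table 1 with a few lines of Python. The total of about 9,919,000 English UMLS entities is taken from the text, and the `5+` row is treated as a single bucket.

```python
# English polysemic entities in UMLS 2015AA, by number of senses (Table 1).
umls_english = {"2": 54_257, "3": 7_770, "4": 1_842, "5+": 1_677}
total_entities = 9_919_000  # approximate number of distinct English entities

polysemic = sum(umls_english.values())
rate = polysemic / total_entities

print(polysemic)        # 65546
print(f"{rate:.2%}")    # 0.66%
print(round(1 / rate))  # 151, i.e., roughly one in 150
for senses, count in umls_english.items():
    # Share of each sense-count bucket among the polysemic entities.
    print(senses, f"{count / polysemic:.1%}")
```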

To determine the number of senses, we executed a number of different clustering algorithms with k (i.e., the number of clusters) varying from 2 to 5, evaluated the quality indexes of the resulting clusters, and picked the k with the best clustering quality index.7 We used the CLUTO8 application, a flexible software program for clustering and analyzing the characteristics of the clusters, to conduct our experiments. We experimented with 5 different clustering algorithms (i.e., rb, rbr, direct, agglo, and graph) of three different types: partitional, agglomerative, and graph-partitioning.
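The selection procedure described above, repeated for every clustering algorithm and objective function, amounts to a small grid search. The sketch below assumes a hypothetical callback `score(algorithm, objective, k)` that runs one clustering (e.g., with CLUTO) and returns one internal quality index for the resulting solution; invoking CLUTO itself is not shown. Ties are resolved in favor of the smaller k, which is our own choice.

```python
from itertools import product
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical hook: runs one clustering and returns an internal quality index.
ScoreFn = Callable[[str, str, int], float]


def predict_k_grid(score: ScoreFn,
                   algorithms: Iterable[str] = ("rb", "rbr", "direct", "agglo", "graph"),
                   objectives: Iterable[str] = ("I1", "I2"),
                   ks: Iterable[int] = range(2, 6),
                   maximize: bool = True) -> Dict[Tuple[str, str], int]:
    """Return the predicted k for every (algorithm, objective function) pair."""
    ks = sorted(ks)  # ascending order, so max/min resolve ties to the smaller k
    pick = max if maximize else min
    predictions = {}
    for algorithm, objective in product(algorithms, objectives):
        values = {k: score(algorithm, objective, k) for k in ks}
        predictions[(algorithm, objective)] = pick(values, key=values.get)
    return predictions


def toy_index(algorithm: str, objective: str, k: int) -> float:
    """Toy index that peaks at k = 3 for every algorithm and objective function."""
    return -abs(k - 3)


print(predict_k_grid(toy_index)[("rb", "I2")])  # 3
```

Passing `maximize=False` covers indexes that should be minimized, such as an average of ESIM values.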

Two common types of quality indexes [30], external and internal, are often used to evaluate clustering results. External indexes rely on pre-labelled datasets with known cluster configurations, whereas internal indexes evaluate the goodness of a cluster configuration without any prior knowledge of the clusters. In this project, we proposed 5 new internal clustering quality indexes, as shown in Table 4, built upon the intra-cluster similarity (i.e., the internal similarity, ISIM, of the objects within a cluster) and inter-cluster similarity (i.e., the external similarity, ESIM, between clusters) measures offered by CLUTO. To find the optimal number of clusters, we also need an objective function to rank the quality of a clustering solution based on a quality measure [13]. We obtain the optimal clustering result by optimizing (i.e., maximizing or minimizing) the objective function, which indicates whether the obtained clusters are homogeneous. CLUTO has a set of built-in objective functions, as shown in Table 3. Nevertheless, each quality measure and each objective function has its own strengths and weaknesses. Thus, we took an ensembling approach and examined the combinations of the different quality measures and objective functions.

Table 4.

New internal indexes for choosing the best k.

(1) Average ISIM, $a_{k,OF}$: the average of the ISIM values of the clusters of a clustering solution with $k$ clusters. We choose the maximal value:

$$a_{k,OF} = \frac{1}{k}\sum_{i=1}^{k}\mathrm{ISIM}_i, \qquad \max(a_{k,OF}) = \max(a_{2,OF}, a_{3,OF}, a_{4,OF}, a_{5,OF})$$

(2) Average ESIM, $b_{k,OF}$: the average of the ESIM values of the clusters of a clustering solution with $k$ clusters. We choose the minimal value:

$$b_{k,OF} = \frac{1}{k}\sum_{i=1}^{k}\mathrm{ESIM}_i, \qquad \min(b_{k,OF}) = \min(b_{2,OF}, b_{3,OF}, b_{4,OF}, b_{5,OF})$$

(3) Average of the difference between ISIM and ESIM, $c_{k,OF}$: the average over clusters of the difference between ISIM and ESIM, multiplied by the number of objects $|S_i|$ in each cluster. We choose the maximal value, as a good clustering solution should have a large difference between ISIM and ESIM, showing that each cluster is compact and the clusters are well separated:

$$c_{k,OF} = \frac{1}{k}\sum_{i=1}^{k}|S_i|\,(\mathrm{ISIM}_i - \mathrm{ESIM}_i)$$

(4) Ratio of the ISIM sum to the ESIM sum, $e_{k,OF}$: the sum of ISIM weighted by the number of objects in each cluster, divided by the sum of ESIM weighted in the same way. We choose the maximal value, because a good clustering solution should have a high ratio of ISIM to ESIM, showing that each cluster is compact and the clusters are well separated:

$$e_{k,OF} = \frac{\sum_{i=1}^{k}|S_i|\,\mathrm{ISIM}_i}{\sum_{i=1}^{k}|S_i|\,\mathrm{ESIM}_i}$$

(5) Global objective function divided by the logarithm, $f_{k,OF}$: the value of the objective function (OF) for the $k$-cluster solution divided by the base-10 logarithm of $k$. We choose the maximal value. In general, the value of the objective function increases with the number of clusters, so we compensate for this by dividing by the logarithm of the number of clusters:

$$f_{k,OF} = \frac{\mathrm{OF}}{\log_{10} k}$$

Notation: $|S_i|$ is the number of objects assigned to the $i$th cluster, and $OF \in \{I1, I2, E1, H1, H2\}$ is the objective function used by the clustering algorithm.
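The five indexes in Table 4 can be computed directly from the per-cluster statistics that CLUTO reports. The following sketch takes, for each candidate k, a list of (|S_i|, ISIM_i, ESIM_i) triples plus the objective-function value, and selects k by minimizing b and maximizing a, c, e, and f. The data layout and function names are our own. On the “yellow fever” values of Table 9, it selects k = 5 with a and k = 2 with c, and the same data give k = 4 for min(b) and k = 5 for max(e), matching the rb column of Table 10 for I2.

```python
import math
from typing import Dict, List, Tuple

# Per-cluster statistics of one clustering solution: (|S_i|, ISIM_i, ESIM_i).
Cluster = Tuple[int, float, float]


def internal_indexes(clusters: List[Cluster], of_value: float) -> Dict[str, float]:
    """Indexes a, b, c, e, f for a solution with k = len(clusters); assumes some ESIM > 0."""
    k = len(clusters)
    weighted_isim = sum(n * isim for n, isim, _ in clusters)
    weighted_esim = sum(n * esim for n, _, esim in clusters)
    return {
        "a": sum(isim for _, isim, _ in clusters) / k,
        "b": sum(esim for _, _, esim in clusters) / k,
        "c": sum(n * (isim - esim) for n, isim, esim in clusters) / k,
        "e": weighted_isim / weighted_esim,
        "f": of_value / math.log10(k),  # k >= 2, so log10(k) > 0
    }


def best_k(solutions: Dict[int, Tuple[List[Cluster], float]], index: str) -> int:
    """Pick k by minimizing b and maximizing every other index."""
    scores = {k: internal_indexes(cl, of)[index] for k, (cl, of) in sorted(solutions.items())}
    pick = min if index == "b" else max
    return pick(scores, key=scores.get)


# "yellow fever" with rb and I2 (Table 9). The I2 value itself is not reported
# there, so 1.0 is a placeholder that only affects index f.
table9 = {
    2: ([(110, 0.058, 0.025), (73, 0.048, 0.025)], 1.0),
    3: ([(43, 0.087, 0.029), (67, 0.074, 0.030), (73, 0.048, 0.025)], 1.0),
    4: ([(16, 0.118, 0.008), (43, 0.087, 0.029), (67, 0.074, 0.030),
         (57, 0.063, 0.028)], 1.0),
    5: ([(16, 0.118, 0.008), (26, 0.105, 0.025), (43, 0.087, 0.029),
         (31, 0.086, 0.032), (67, 0.074, 0.030)], 1.0),
}
print(best_k(table9, "a"), best_k(table9, "c"))  # 5 2
```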

Table 3.

Objective functions for finding the number of clusters (k) in CLUTO.

Where k is the number of clusters, S is the total number of objects to be clustered, S_i is the set of objects assigned to the ith cluster, n_i is the number of objects in the ith cluster, v and u represent two objects, and sim(v, u) is the similarity between the two objects v and u.

Note that when optimizing the objective functions, we aim to maximize the internal similarity (ISIM), and minimize the external similarity (ESIM).
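To make the quantities behind Table 3 concrete, the sketch below recomputes per-cluster ISIM and ESIM from a pairwise similarity matrix, together with two of CLUTO's criterion functions as we understand them from the CLUTO documentation: $I1 = \sum_{i} \frac{1}{n_i}\sum_{v,u \in S_i}\mathrm{sim}(v,u)$ and $I2 = \sum_{i}\sqrt{\sum_{v,u \in S_i}\mathrm{sim}(v,u)}$. The assumptions are ours: ISIM averages over distinct pairs only, ESIM averages over all pairs with one object inside and one outside the cluster, and every label in 0..k-1 is used. CLUTO's own implementation may differ in these details.

```python
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def members_of(labels: Sequence[int], cluster: int) -> List[int]:
    """Indices of the objects assigned to `cluster`."""
    return [i for i, label in enumerate(labels) if label == cluster]


def isim_esim(sim: Matrix, labels: Sequence[int]) -> Tuple[List[float], List[float]]:
    """Per-cluster ISIM (mean similarity of distinct pairs inside the cluster) and
    ESIM (mean similarity between the cluster's objects and all other objects)."""
    isim, esim = [], []
    for c in range(max(labels) + 1):
        inside = members_of(labels, c)
        outside = [i for i in range(len(labels)) if labels[i] != c]
        pairs = [sim[i][j] for i in inside for j in inside if i != j]
        cross = [sim[i][j] for i in inside for j in outside]
        isim.append(sum(pairs) / len(pairs) if pairs else 0.0)
        esim.append(sum(cross) / len(cross) if cross else 0.0)
    return isim, esim


def criterion_i1_i2(sim: Matrix, labels: Sequence[int]) -> Tuple[float, float]:
    """I1 and I2 criterion values; the inner sums include the v == u terms."""
    i1 = i2 = 0.0
    for c in range(max(labels) + 1):
        inside = members_of(labels, c)
        total = sum(sim[i][j] for i in inside for j in inside)
        i1 += total / len(inside)
        i2 += math.sqrt(total)
    return i1, i2


sim = [
    [1.0, 0.8, 0.1, 0.2],
    [0.8, 1.0, 0.3, 0.0],
    [0.1, 0.3, 1.0, 0.6],
    [0.2, 0.0, 0.6, 1.0],
]
labels = [0, 0, 1, 1]
print(isim_esim(sim, labels))
print(criterion_i1_i2(sim, labels))
```

Both criterion functions are maximized, in line with the goal of high internal similarity.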

3.3. Concept induction

Following common practice for concept induction, we used the rb clustering algorithm, which has been shown to perform well on text data, together with the number of senses k predicted in the previous step. We then extracted the most relevant keywords of each cluster to represent the concept of that cluster. If a candidate entity was non-polysemic, then k = 1; in that case, no clustering was needed, and we directly extracted the most relevant keywords to represent the concept.
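As an illustration of the keyword step, the sketch below swaps in a simple TF-IDF-style score that treats each cluster as a single document; it is an assumption for illustration, not necessarily the keyword extractor used in our experiments. With k = 1 (a non-polysemic entity), every term appears in the only cluster, so the score reduces to ranking terms by frequency.

```python
import math
from collections import Counter
from typing import Dict, List


def cluster_keywords(docs: List[List[str]], labels: List[int],
                     top_n: int = 3) -> Dict[int, List[str]]:
    """Top terms per cluster, scored by tf * log(1 + k / df), where tf is the term's
    count in the cluster and df the number of clusters that contain it."""
    clusters = sorted(set(labels))
    term_counts = {c: Counter() for c in clusters}
    for tokens, c in zip(docs, labels):
        term_counts[c].update(tokens)
    cluster_freq = Counter()
    for c in clusters:
        cluster_freq.update(term_counts[c].keys())  # each term counted once per cluster
    k = len(clusters)
    keywords = {}
    for c in clusters:
        scores = {t: n * math.log(1 + k / cluster_freq[t]) for t, n in term_counts[c].items()}
        # Highest score first; ties broken alphabetically for reproducibility.
        keywords[c] = sorted(scores, key=lambda t: (-scores[t], t))[:top_n]
    return keywords


docs = [["virus", "infection", "virus"], ["virus", "mosquito"],
        ["vaccine", "dose"], ["vaccine", "dose", "virus"]]
labels = [0, 0, 1, 1]
print(cluster_keywords(docs, labels, top_n=2))  # {0: ['virus', 'infection'], 1: ['dose', 'vaccine']}
```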

4. Results and discussion

We first discuss our datasets and then the results of our experiments for the proposed biomedical entity sense induction framework in this section. Each step of the framework was evaluated independently to show its effectiveness.

4.1. Datasets

4.1.1. Polysemic dataset

We extracted 203 ambiguous entities in English from the MSH WSD9 [37] dataset, which contained 106 ambiguous abbreviations, 88 ambiguous terms, and 9 entities that were combinations of both ambiguous abbreviations and terms. Each ambiguous entity was linked to an average of 180 titles/abstracts obtained from MEDLINE. The MSH WSD dataset is a well-known benchmark dataset in the biomedical word sense disambiguation literature [36,71,62,84].

Table 5 shows a few example entities in the dataset and their respective numbers of senses.

Table 5.

Details of the polysemic dataset.

| Term | Number of Senses |
|---|---|
| Ca | 4 |
| Cold | 3 |
| Cortical | 3 |
| Yellow Fever | 2 |
| … | … |

4.1.2. Non-polysemic dataset

We needed negative samples (i.e., non-ambiguous biomedical entities) to build the classifiers for polysemic detection. Therefore, we constructed a non-polysemic dataset using the MEDLINE MeSH terms in two steps: (i) selecting non-polysemic terms from MeSH, and (ii) extracting a set of titles and abstracts containing those terms from MEDLINE. We made this annotated dataset publicly available online at http://simbig.org/NotPolysemicCorpus.zip.

Table 6 summarizes our polysemic and non-polysemic datasets.

Table 6.

Summary of the polysemic and non-polysemic datasets.

| Description | Value |
|---|---|
| Number of Entities | 406 |
| Number of Ambiguous Entities | 203 |
| Number of Non-ambiguous Entities | 203 |
| Number of Tokens of the Context of Ambiguous Entities | 7,597,337 |
| Number of Tokens of the Context of Non-ambiguous Entities | 8,294,378 |
| Mean Number of Tokens for each Ambiguous Entity | 37,425 |
| Mean Number of Tokens for each Non-ambiguous Entity | 40,859 |

4.2. Experiments for polysemy detection

We proposed 23 novel features and conducted a preliminary analysis with these features for detecting polysemic biomedical entities in our previous work [50]. In this paper, we conducted a comprehensive evaluation and in-depth analysis of these 23 features. Following best practices in machine learning, we split the dataset into a training set (70%), used to build the model, and a hold-out test set (30%). Both types of biomedical terms, i.e., polysemic and non-polysemic terms, are important in the biomedical entity sense induction framework, since we seek to build or enrich dictionaries with new polysemic and non-polysemic biomedical entities. Therefore, it was important to evaluate the performance of our model on each class (polysemic as P and non-polysemic as NP). We report the classification performance in terms of the F-measure (F) for each class on the hold-out test set, as well as the average of the two F-measure values. We experimented with a set of well-known supervised algorithms implemented in the Weka10 software, using the default parameters for each algorithm.
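The per-class evaluation can be sketched in pure Python as below; the classifiers themselves were run in Weka and are not reproduced, and the 70/30 split is not shown. The test-set size of 61 examples per class in the usage example is hypothetical. On a balanced test set, a classifier that always predicts P has a precision of 0.5 and a recall of 1 for P, hence F_P = 2/3 and F_NP = 0, which is consistent with the ZeroR row of Table 7.

```python
from typing import Dict, Sequence


def f_measure(y_true: Sequence[str], y_pred: Sequence[str], positive: str) -> float:
    """F-measure of one class, treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # precision or recall is zero (or undefined)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def per_class_report(y_true: Sequence[str], y_pred: Sequence[str],
                     classes: Sequence[str] = ("P", "NP")) -> Dict[str, float]:
    """F-measure for each class plus their unweighted average."""
    report = {c: f_measure(y_true, y_pred, c) for c in classes}
    report["average"] = sum(report[c] for c in classes) / len(classes)
    return report


# A ZeroR-style baseline that always predicts "P" on a balanced test set.
y_true = ["P"] * 61 + ["NP"] * 61
y_pred = ["P"] * 122
print(per_class_report(y_true, y_pred))
```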

Table 7 shows our experiment results. The Naive Bayes (NB) obtained the best results, with an average F-measure of 98.4%. Excluding ZeroR and OneR, the average F-measures of the other classifiers were between 94.25% (i.e., MCC) and 98.4% (i.e., NB). These results show that our novel features performed well in the polysemy detection task.

Table 7.

Evaluating classifiers with both direct and graph-based features.

| Classifier | FP | FNP | FAverage |
|---|---|---|---|
| Zero Rule (ZeroR) | 66.7% | 0.0% | 33.35% |
| One Rule (OneR) | 85.2% | 85.2% | 85.20% |
| Naive Bayes (NB) | 98.4% | 98.4% | 98.40% |
| AdaBoost (AB) | 96.0% | 95.8% | 95.90% |
| Decision Tree (DT) | 97.6% | 97.5% | 97.55% |
| Support Vector Machine (SVM) | 97.5% | 97.6% | 97.55% |
| Meta Bagging (MB) | 98.4% | 98.3% | 98.35% |
| k-nearest neighbors (k-NN), k = 1 | 98.3% | 98.4% | 98.35% |
| Multilayer Perceptron (NN) | 95.8% | 96.0% | 95.90% |
| MultiClassClassifier Logistic (MCC) | 94.3% | 94.2% | 94.25% |

Where FP is the F-measure of the polysemic class and FNP the F-measure of the non-polysemic class.

4.2.1. Discussion

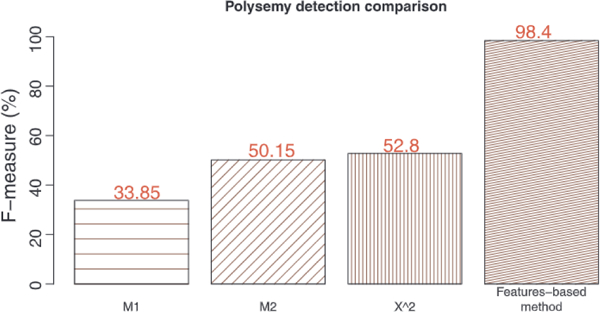

To the best of our knowledge, no previous study has addressed polysemy detection as a binary classification task to determine whether a biomedical term is polysemic or not. However, a few studies have used machine learning methods to classify words or phrases. For instance, in [4], Al-Mubaid et al. built a binary classification model to classify ambiguous entities into two semantic categories: genes or proteins. In a follow-up study, the authors used similar methods for biomedical word-sense disambiguation [5]. In both studies, the authors built support vector machines (SVMs) and used the neighborhood context words of the target word as features. In addition, they used a number of feature selection methods, such as chi-square (χ2) [4], mutual information (MI) [5], and M2 [5]. Since these studies were framed as word classification tasks similar to our polysemy detection task, we adapted their methods as baselines to evaluate our polysemy detection approach.

We followed the same process presented in [4,5] to build the polysemy detection classifier. From the labeled training examples of the target biomedical entity, we built feature vectors from their neighborhood context words. The top 20, 30, 50, 100, and 200 context words were selected using the feature selection methods χ2, MI, and M2. We then built SVMs11 with the selected features. In our experiments, the best results were obtained with the top 200 context words. Table 8 summarizes the performance of these SVMs in terms of F-measure. The χ2 feature selection method obtained the best results, with an average F-measure of 52.8% across the polysemic and non-polysemic classes. These results show that using neighboring words as features with SVMs is suboptimal for classifying biomedical terms as polysemic or not.
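The χ2 selection step of this baseline can be sketched as follows, assuming each training example has already been reduced to the set of context words around the target entity; the SVM training is not shown, and the toy documents are hypothetical. The statistic is computed from the 2x2 contingency table of term presence versus class membership. Because it is symmetric, words indicative of either class rank highly.

```python
from typing import List, Sequence, Set


def chi_square(term: str, docs: Sequence[Set[str]], labels: Sequence[str],
               positive: str) -> float:
    """2x2 chi-square statistic between term presence and membership in `positive`."""
    a = b = c = d = 0
    for tokens, label in zip(docs, labels):
        has_term, in_class = term in tokens, label == positive
        if has_term and in_class:
            a += 1  # term present, positive class
        elif has_term:
            b += 1  # term present, other class
        elif in_class:
            c += 1  # term absent, positive class
        else:
            d += 1  # term absent, other class
    n = a + b + c + d
    denominator = (a + c) * (b + d) * (a + b) * (c + d)
    return n * (a * d - c * b) ** 2 / denominator if denominator else 0.0


def top_context_words(docs: Sequence[Set[str]], labels: Sequence[str],
                      positive: str, top_n: int) -> List[str]:
    """Rank context words by chi-square score (ties broken alphabetically)."""
    vocabulary = set().union(*docs)
    scores = {t: chi_square(t, docs, labels, positive) for t in vocabulary}
    return sorted(vocabulary, key=lambda t: (-scores[t], t))[:top_n]


docs = [{"gene", "protein"}, {"gene", "cell"}, {"disease", "cell"}, {"disease", "patient"}]
labels = ["P", "P", "NP", "NP"]
print(top_context_words(docs, labels, "P", 2))  # ['disease', 'gene']
```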

Table 8.

Results of using neighborhood context words as features with support vector machines for polysemy detection.

| Feature selection | FP | FNP | FAverage |
|---|---|---|---|
| χ2 | 40.1% | 65.5% | 52.80% |
| MI | 1.7% | 66.0% | 33.85% |
| M2 | 35.6% | 64.7% | 50.15% |

Where FP is the F-measure of the polysemic class and FNP the F-measure of non-polysemic class.

As shown in Fig. 2, our method achieved better performance than the baseline studies.

Fig. 2.

Comparing our method with the baseline studies.

4.3. Predicting the number of senses

We evaluated our approach to induce the possible number of senses of an entity. Note that we only need to consider the entities that have been classified as polysemic. Thus, we only used the polysemic dataset.

Our evaluation experiments consisted of three parts: (1) applying clustering algorithms to the bag-of-words (BoW) representation and evaluating the proposed internal quality indexes of the clusters; (2) applying clustering algorithms to a graph representation of the titles/abstracts associated with each entity and evaluating the proposed internal quality indexes of the clusters; and (3) evaluating the direct and graph-based features used for polysemy detection (see Section 3.1) with supervised algorithms to predict the number of senses.

For the clustering tasks, we used five well-known clustering algorithms implemented in the CLUTO software, namely rb, rbr, direct, agglo, and graph.

4.3.1. Bag-of-words representation

We used the cosine similarity measure for our clustering experiments with the BoW representation. We tested various sizes of the feature vectors and found that the best results were obtained with 3,000 BoW features. The BoW features were extracted with the BioTex application12 [49,51].
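The sketch below shows how BoW vectors and cosine similarity fit together. The features here were extracted with BioTex; the sketch instead assumes the simplest possible vocabulary, the `max_features` most frequent tokens, so it illustrates the representation rather than reproducing our feature extraction. Ties in frequency are resolved by first occurrence, as `Counter.most_common` does.

```python
import math
from collections import Counter
from typing import Dict, List


def bow_vectors(docs: List[List[str]], max_features: int = 3000) -> List[Counter]:
    """Term-count vectors restricted to the `max_features` most frequent terms."""
    frequencies = Counter(token for doc in docs for token in doc)
    vocabulary = {term for term, _ in frequencies.most_common(max_features)}
    return [Counter(t for t in doc if t in vocabulary) for doc in docs]


def cosine(u: Dict[str, int], v: Dict[str, int]) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(weight * v.get(term, 0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


abstracts = [["virus", "fever", "virus"], ["virus", "vaccine"], ["dose", "vaccine"]]
vectors = bow_vectors(abstracts, max_features=3)  # keeps virus, vaccine, fever
print(round(cosine(vectors[1], vectors[2]), 3))  # 0.707
```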

Table 9 illustrates the process of determining the number of clusters (i.e., the number of possible senses) for the entity “yellow fever” according to $a_{k,I2}$ and $c_{k,I2}$, where k is the number of clusters, a and c are two quality indexes, I2 is the objective function, and the clustering algorithm is partitional (rb). In our dataset, “yellow fever” was linked to 2 senses (“virus” and “vaccine”); therefore, the correct number of clusters is 2. As shown in Table 9, we varied the number of clusters from 2 to 5, applied the clustering algorithm, and computed the quality indexes.

Table 9.

Choosing k according to $a_{k,I2}$ and $c_{k,I2}$ values (algorithm: partitional, rb).

| k | Cluster ID | Size | ISIM | ESIM | $a_{k,I2}$ | $c_{k,I2}$ |
|---|---|---|---|---|---|---|
| 2 | Cluster-1 | 110 | 0.058 | 0.025 | 0.053 | 2.655 |
| | Cluster-2 | 73 | 0.048 | 0.025 | | |
| 3 | Cluster-1 | 43 | 0.087 | 0.029 | 0.070 | 2.374 |
| | Cluster-2 | 67 | 0.074 | 0.030 | | |
| | Cluster-3 | 73 | 0.048 | 0.025 | | |
| 4 | Cluster-1 | 16 | 0.118 | 0.008 | 0.085 | 2.299 |
| | Cluster-2 | 43 | 0.087 | 0.029 | | |
| | Cluster-3 | 67 | 0.074 | 0.030 | | |
| | Cluster-4 | 57 | 0.063 | 0.028 | | |
| 5 | Cluster-1 | 16 | 0.118 | 0.008 | 0.094 | 2.191 |
| | Cluster-2 | 26 | 0.105 | 0.025 | | |
| | Cluster-3 | 43 | 0.087 | 0.029 | | |
| | Cluster-4 | 31 | 0.086 | 0.032 | | |
| | Cluster-5 | 67 | 0.074 | 0.030 | | |
| Chosen k | | | | | k = 5 | k = 2 |

k is the number of clusters, Size is the number of objects in cluster i (|S_i|), ISIM is the intra-cluster similarity, ESIM is the inter-cluster similarity, a and c are two quality indexes, and I2 is the objective function.

As shown in Table 9, the quality index c selects k = 2 as the optimal number of clusters, whereas the quality index a selects k = 5.
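Selecting k from Table 9 is an argmax over the candidate values of k. The short Python sketch below copies the $a_{k,I_2}$ and $c_{k,I_2}$ values from Table 9 and reproduces the two choices. The `select_k` helper is hypothetical. The `maximize=False` option is included only for indexes that are minimized, such as the b index in Table 10.

```python
# a_{k,I2} and c_{k,I2} values for "yellow fever" (rb, I2), copied from Table 9.
A_K_I2 = {2: 0.053, 3: 0.070, 4: 0.085, 5: 0.094}
C_K_I2 = {2: 2.655, 3: 2.374, 4: 2.299, 5: 2.191}


def select_k(index_by_k: dict[int, float], maximize: bool = True) -> int:
    """Return the number of clusters k with the best index value."""
    choose = max if maximize else min
    return choose(index_by_k, key=index_by_k.get)


print(select_k(A_K_I2))  # 5, as selected by index a
print(select_k(C_K_I2))  # 2, as selected by index c (the true number of senses)
```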

Note that, for each entity in our dataset, we considered 5 objective functions, 5 clustering algorithms, and 5 quality indexes. Table 10 summarizes the results for “yellow fever” with the two objective functions I1 and I2.

Table 10.

Predicting the number of senses (k) for “yellow fever” with the bag-of-words representation. (The true number of senses is 2.)

| Internal index | rb | rbr | direct | agglo | graph |
|---|---|---|---|---|---|
| $\max(a_{k,I_1})$ | 5 | 5 | 4 | 5 | 2 |
| $\min(b_{k,I_1})$ | 3 | 3 | 3 | 2 | 2 |
| $\max(c_{k,I_1})$ | 3 | 3 | 2 | 2 | 2 |
| $\max(e_{k,I_1})$ | 5 | 5 | 5 | 5 | 2 |
| $\max(f_{k,I_1})$ | 2 | 2 | 2 | 2 | 2 |
| $\max(a_{k,I_2})$ | 5 | 5 | 5 | 5 | 5 |
| $\min(b_{k,I_2})$ | 4 | 4 | 4 | 2 | 2 |
| $\max(c_{k,I_2})$ | 2 | 2 | 2 | 2 | 2 |
| $\max(e_{k,I_2})$ | 5 | 5 | 5 | 5 | 5 |
| $\max(f_{k,I_2})$ | 2 | 2 | 2 | 2 | 2 |

$k \in \{2, 3, 4, 5\}$; rb, rbr, direct, agglo, and graph are the clustering algorithms; a, b, c, e, and f are the quality indexes.

To evaluate the different combinations of quality indexes, clustering algorithms, and objective functions, we ran the experiment on all 203 ambiguous entities. Table 11 summarizes the F-measure for predicting the number of clusters on the entire dataset with the two objective functions I1 and I2. Overall, $\max(f_{k,OF})$, where OF denotes the objective function, gave the best (or tied-best) F-measure, 93.10%, for every clustering algorithm with the objective function I2.
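The text does not specify how the F-measure over the 203 entities is averaged. As an assumption only, the sketch below uses micro-averaging. Under micro-averaging, each wrong prediction counts as one false positive (for the predicted k) and one false negative (for the true k). Micro precision, micro recall, and micro F-measure therefore all equal the fraction of entities whose k is predicted correctly. The function name, the combination labels, and the toy predictions are hypothetical.

```python
def micro_f_measure(true_k: list[int], pred_k: list[int]) -> float:
    """Micro-averaged F-measure for single-label multiclass predictions of k."""
    if not true_k or len(true_k) != len(pred_k):
        raise ValueError("need two non-empty lists of equal length")
    tp = sum(t == p for t, p in zip(true_k, pred_k))
    # Every error is one FP and one FN, so precision == recall == tp / n.
    precision = recall = tp / len(true_k)
    if tp == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical sweep: predictions from two (index, algorithm, OF) combinations.
truth = [2, 2, 2, 3, 5]
predictions = {
    ("max f", "rb", "I2"): [2, 2, 2, 2, 2],
    ("max a", "rb", "I2"): [5, 5, 4, 3, 5],
}
for combo, pred in predictions.items():
    print(combo, round(micro_f_measure(truth, pred), 2))
```

A full sweep would fill `predictions` with one entry per (index, algorithm, objective function) triple, as in Table 11.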

Table 11.

F-measures for predicting the number of senses of the 203 ambiguous entities using the bag-of-words representation.

| Internal index | rb | rbr | direct | agglo | graph |
|---|---|---|---|---|---|
| $\max(a_{k,I_1})$ | 6.40% | 5.42% | 6.90% | 1.97% | 91.63% |
| $\min(b_{k,I_1})$ | 36.45% | 38.92% | 34.98% | 92.12% | 93.10% |
| $\max(c_{k,I_1})$ | 32.02% | 30.54% | 31.53% | 42.86% | 93.10% |
| $\max(e_{k,I_1})$ | 0.99% | 1.48% | 1.48% | 8.87% | 93.10% |
| $\max(f_{k,I_1})$ | 92.12% | 92.12% | 93.10% | 93.10% | 92.61% |
| $\max(a_{k,I_2})$ | 0.99% | 0.99% | 0.49% | 1.97% | 91.63% |
| $\min(b_{k,I_2})$ | 81.77% | 84.73% | 86.21% | 92.12% | 93.10% |
| $\max(c_{k,I_2})$ | 88.67% | 87.68% | 91.63% | 42.86% | 93.10% |
| $\max(e_{k,I_2})$ | 3.45% | 2.96% | 4.93% | 8.87% | 93.10% |
| $\max(f_{k,I_2})$ | 93.10% | 93.10% | 93.10% | 93.10% | 93.10% |

$k \in \{2, 3, 4, 5\}$; rb, rbr, direct, agglo, and graph are the clustering algorithms; a, b, c, e, and f are the quality indexes.

4.3.2. Graph representation

As with the bag-of-words representation, we evaluated the graph representation with all combinations of 5 objective functions, 5 clustering algorithms, and 5 quality indexes.

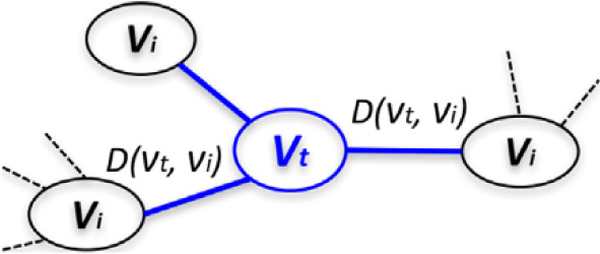

Graph construction:

For each biomedical entity of interest, we built an undirected weighted graph (see Fig. 3). Its vertices were biomedical entities, and its edges denoted co-occurrence relationships between them. Co-occurrence is symmetric, so the graph is undirected. The weight of an edge represented the degree of association between the two entities. Each entity graph contained the entity of interest and the top 1,000 most important entities that co-occurred with it. The co-occurring entities and their importance values were extracted with BioTex from the set of abstracts ($A_t$) that contain the entity of interest (t). To weight the edges, we used the Dice coefficient (D), which measures the degree of co-occurrence between two entities x and y in a set of texts (i.e., titles and abstracts).

Fig. 3.

An example of an entity graph, where t is the entity of interest.

In Fig. 3, vertex $v_t$ represents the entity of interest t, and vertex $v_i$ ($i = 1, \dots, n$) represents an entity i that co-occurred with t. The weight of the edge between $v_t$ and $v_i$ is the Dice coefficient between entities t and i, i.e., $\mathrm{weight}(v_t, v_i) = D(v_t, v_i)$.
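The graph construction can be sketched in Python as follows. The sketch uses the standard Dice coefficient over sets of texts, $D(x, y) = 2\,|T_x \cap T_y| / (|T_x| + |T_y|)$, where $T_x$ is the set of titles/abstracts containing x. This is the textbook form, and the paper's exact counting may differ. The importance scores, which BioTex provides in the paper, are passed in as a plain dictionary. The sketch connects every pair of retained vertices that has a positive Dice weight, not only $v_t$ to each $v_i$; that choice is an assumption. All names and data are hypothetical.

```python
from itertools import combinations


def dice(texts_x: set[int], texts_y: set[int]) -> float:
    """Dice coefficient between the sets of texts containing x and y."""
    total = len(texts_x) + len(texts_y)
    return 2 * len(texts_x & texts_y) / total if total else 0.0


def build_entity_graph(target, occurrences, importance, top_n=1000):
    """Return (vertices, weighted edges) for the entity graph of `target`.

    occurrences: entity -> set of text ids that contain the entity
    importance:  entity -> importance score (assumed precomputed)
    """
    target_texts = occurrences[target]
    # Candidate neighbours: entities sharing at least one text with the target.
    neighbours = [e for e, texts in occurrences.items()
                  if e != target and texts & target_texts]
    neighbours.sort(key=lambda e: importance.get(e, 0.0), reverse=True)
    vertices = [target] + neighbours[:top_n]
    edges = {}
    for x, y in combinations(vertices, 2):
        weight = dice(occurrences[x], occurrences[y])
        if weight > 0:  # keep only pairs that actually co-occur
            edges[(x, y)] = weight
    return vertices, edges


occurrences = {
    "yellow fever": {1, 2, 3, 4},
    "vaccine": {1, 2},
    "mosquito": {3, 4},
    "dengue": {4},
    "insulin": {9},
}
importance = {"vaccine": 0.9, "mosquito": 0.8, "dengue": 0.5}
vertices, edges = build_entity_graph("yellow fever", occurrences, importance, top_n=2)
print(vertices)
print(edges)
```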

Table 12 summarizes the results for the entity “yellow fever” with the two objective functions I1 and I2. In our dataset, the term “yellow fever” has 2 clusters (concepts). We observed that $\max(f_{k,I_1})$ and $\max(f_{k,I_2})$ predicted the correct number of senses for every clustering algorithm.

Table 12.

Predicting the number of senses (k) for “yellow fever” with the graph representation. (The true number of senses is 2.)

| Internal index | rb | rbr | direct | agglo | graph |
|---|---|---|---|---|---|
| $\max(a_{k,I_1})$ | 2 | 2 | 2 | 5 | 2 |
| $\min(b_{k,I_1})$ | 2 | 2 | 2 | 2 | 2 |
| $\max(c_{k,I_1})$ | 2 | 2 | 2 | 5 | 2 |
| $\max(e_{k,I_1})$ | 5 | 5 | 5 | 5 | 2 |
| $\max(f_{k,I_1})$ | 2 | 2 | 2 | 2 | 2 |
| $\max(a_{k,I_2})$ | 5 | 5 | 4 | 5 | 2 |
| $\min(b_{k,I_2})$ | 2 | 2 | 2 | 2 | 2 |
| $\max(c_{k,I_2})$ | 2 | 2 | 2 | 5 | 2 |
| $\max(e_{k,I_2})$ | 5 | 5 | 5 | 5 | 2 |
| $\max(f_{k,I_2})$ | 2 | 2 | 2 | 2 | 2 |

$k \in \{2, 3, 4, 5\}$; rb, rbr, direct, agglo, and graph are the clustering algorithms; a, b, c, e, and f are the quality indexes.

As with the bag-of-words representation, we ran this experiment on all 203 ambiguous entities. Table 13 shows the F-measure results for predicting the number of clusters. $\max(f_{k,OF})$ gives the highest F-measure (93.10%) for every clustering algorithm under both objective functions.

Table 13.

F-measures for predicting the number of senses of the 203 ambiguous entities using the graph representation.

| Internal index | rb | rbr | direct | agglo | graph |
|---|---|---|---|---|---|
| $\max(a_{k,I_1})$ | 1.97% | 1.97% | 1.48% | 1.48% | 9.36% |
| $\min(b_{k,I_1})$ | 77.83% | 77.83% | 75.86% | 93.10% | 64.04% |
| $\max(c_{k,I_1})$ | 76.35% | 74.88% | 76.85% | 85.22% | 64.53% |
| $\min(c_{k,I_1})$ | 8.37% | 7.88% | 7.39% | 0.49% | 21.67% |
| $\max(e_{k,I_1})$ | 3.94% | 4.43% | 4.93% | 47.78% | 3.94% |
| $\max(f_{k,I_1})$ | 93.10% | 93.10% | 93.10% | 93.10% | 93.10% |
| $\max(a_{k,I_2})$ | 0.49% | 0.99% | 0.49% | 1.48% | 2.96% |
| $\min(b_{k,I_2})$ | 82.27% | 82.76% | 86.21% | 93.10% | 80.30% |
| $\max(c_{k,I_2})$ | 91.13% | 91.13% | 90.15% | 85.22% | 87.19% |
| $\min(c_{k,I_2})$ | 0.99% | 0.99% | 0.99% | 0.49% | 1.48% |
| $\max(e_{k,I_2})$ | 4.43% | 3.94% | 3.94% | 47.78% | 2.46% |
| $\max(f_{k,I_2})$ | 93.10% | 93.10% | 93.10% | 93.10% | 93.10% |

$k \in \{2, 3, 4, 5\}$; rb, rbr, direct, agglo, and graph are the clustering algorithms; a, b, c, e, and f are the quality indexes.

4.3.3. Prediction with both the direct and graph-based features

As in our approach to polysemy detection (see Section 3.1), we used both the direct and graph-based features to formulate a multiclass supervised classification task that predicts the number of senses (i.e., 4 classes: 2, 3, 4, and 5). The results are reported in terms of Accuracy (A), Precision (P), Recall (R), and F-measure (F).

Table 14 summarizes the results on an independent hold-out test set. MultiClassClassifier Logistic (MCC) obtained the best results, with an F-measure of 91.2%, followed by Meta Bagging (MB), with an F-measure of 90.9%. These results show that the features are useful for predicting the number of clusters.

Table 14.

Prediction of the number of senses using both the direct and graph-based features.

| Classifier | Average F-measure |
|---|---|
| Naive Bayes (NB) | 76.9% |
| AdaBoost (AB) | 89.3% |
| Decision Tree (DT) | 89.0% |
| Support Vector Machine (SVM) | 89.8% |
| Meta Bagging (MB) | 90.9% |
| k-nearest neighbors (k-NN), k = 1 | 88.6% |
| Multilayer Perceptron (NN) | 89.0% |
| MultiClassClassifier Logistic (MCC) | 91.2% |

4.3.4. Discussion

The bag-of-words and graph representations obtained similar F-measure values in predicting the number of senses. In both cases, the best F-measure is 93.1%. As shown in Tables 11 and 13, the best results are obtained with the f index. This index divides the value of the objective function by the logarithm of k, i.e., $f_{k,OF} = OF_k / \log k$.
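The f index is easy to compute once the objective-function value is known for each candidate k. The Python sketch below assumes a positive objective that is maximized, as I1 and I2 are in CLUTO, and it uses hypothetical objective values. One way to read the design is that dividing by log k penalizes larger k, offsetting the tendency of such objectives to grow as clusters are split further. Because k ≥ 2, log k is positive. The base of the logarithm only rescales every f value by the same positive constant, so the selected k does not depend on it.

```python
import math


def f_index(objective_by_k: dict[int, float], log=math.log) -> dict[int, float]:
    """f_{k,OF} = OF_k / log(k) for each candidate k >= 2."""
    return {k: of / log(k) for k, of in objective_by_k.items()}


def select_k_by_f(objective_by_k: dict[int, float]) -> int:
    """Pick the k that maximizes the f index."""
    f = f_index(objective_by_k)
    return max(f, key=f.get)


# Hypothetical objective values (e.g., I2) for k = 2..5; not taken from the paper.
objective = {2: 10.0, 3: 12.0, 4: 13.0, 5: 14.0}
print({k: round(v, 2) for k, v in f_index(objective).items()})
print(select_k_by_f(objective))
```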

Among the supervised learning algorithms applied to the direct and graph-based features, MultiClassClassifier Logistic (MCC) and Meta Bagging (MB) were the two best models. MB is an ensemble learning algorithm that generates multiple versions of a predictor and aggregates them into a single predictor. MCC is a meta-classifier that handles multiclass datasets by combining multiple binary classifiers. In short, the direct and graph-based features are effective both for determining the polysemy of candidate terms and for predicting the associated number of senses (or concepts). This makes the approach easier to adopt in real-world implementations.

Most existing WSI studies were conducted in the general domain (e.g., Duluth-WSI [65], UoY [42], NMFlib [80], NB [20], RPCL [33]) and used the SemEval-2010 WSI shared task dataset [53] to test their approaches. The SemEval-2010 dataset contains 100 target words: 50 nouns and 50 verbs. The SemEval-2010 WSI task (task 14) [53] and our framework share one step: predicting the number of senses. However, the SemEval-2010 dataset differs significantly from ours in three ways:

(1) Its target words are single-word nouns and verbs, whereas ours are single- and multi-word entities composed mainly of nouns and adjectives.
(2) Its target words have more senses (2 ≤ k ≤ 14), whereas ours have between 2 and 5.
(3) Its texts were extracted from various websites using the Yahoo Search API and directly from additional sources, including the Wall Street Journal, CNN, and ABC, whereas ours were extracted from PubMed.

Nevertheless, we evaluated our number-of-senses prediction method on the SemEval-2010 dataset and obtained F-measures of 13.5% for nouns and 43% for verbs. These poor results are mainly due to the fact that our feature representations (i.e., the bag-of-words and graph-based representations) rely on knowledge from the biomedical domain (i.e., UMLS). In particular, our feature representations use the LIDF-value measure, which was derived from the linguistic patterns of biomedical entities in UMLS. These results suggest that, in such NLP tasks, features tailored to the specific domain of the study can substantially improve model performance.

WSI in the biomedical domain also poses particular challenges compared with the general domain. These stem from the different types of lexical ambiguity that exist and the unique characteristics of biomedical documents. In addition to ambiguous terms (words or phrases), abbreviations occur more frequently in biomedical documents and can have more than one possible expansion [76]. Gene names are also lexically ambiguous, especially because standardized naming conventions are not always followed, and more than one thousand gene terms overlap with general English words [75,86]. Another challenge is the growing adoption of Electronic Health Records (EHRs) and clinical documents. These are created manually under time constraints, so healthcare professionals often use shortened forms of biomedical entities that are frequently ambiguous [11]. Finally, to the best of our knowledge, no biomedical WSI study has focused on predicting the number of senses, so no direct baseline was available. Nevertheless, as mentioned previously, we predict the number of senses (clusters) by evaluating clustering quality.

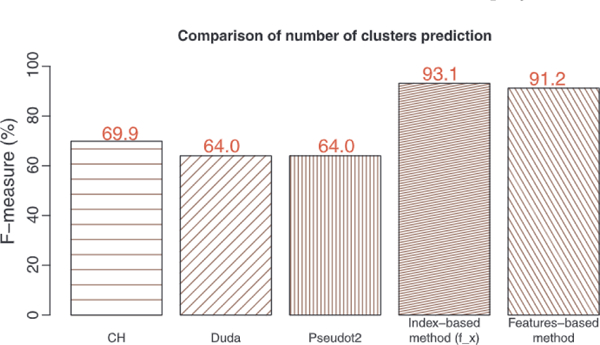

We therefore used our dataset to compare our proposed internal clustering quality indexes with the indexes implemented in the R package NbClust. These include CH [16] (shown to be the most effective in [9]), DB [24], Duda [28] (shown to be the second most effective in [35]), KL [43], Pseudot2 [28], Sdbw [31], Sdindex [32], and Silhouette [70].

Another advantage of NbClust is that it allows the number of clusters to range from $k_{min} = 2$ to $k_{max} = 5$, as in our method. Table 15 reports the average F-measure of eight state-of-the-art indexes for predicting the number of senses of polysemic biomedical terms. The CH index achieved the highest performance, with an F-measure of 69.9%. It was followed by the Duda and Pseudot2 indexes, each with an F-measure of 64.0%, which is consistent with the findings reported in [9,35].
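For reference, the CH index [16], the strongest baseline in Table 15, is the ratio of between-cluster to within-cluster dispersion, each normalized by its degrees of freedom: $CH(k) = \frac{\mathrm{tr}(B_k)/(k-1)}{\mathrm{tr}(W_k)/(n-k)}$. The pure-Python sketch below scores one given partition. In NbClust, or with scikit-learn's `calinski_harabasz_score`, the partition for each k from 2 to 5 would come from a clustering run, and the k with the largest CH value is selected. The function names and toy points are illustrative only.

```python
def squared_distance(p: list[float], q: list[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points: list[list[float]]) -> list[float]:
    # Coordinate-wise mean of a list of points.
    return [sum(coords) / len(points) for coords in zip(*points)]


def calinski_harabasz(points: list[list[float]], labels: list[int]) -> float:
    """CH = [tr(B)/(k-1)] / [tr(W)/(n-k)] for one partition of `points`."""
    n, clusters = len(points), sorted(set(labels))
    k = len(clusters)
    if not 1 < k < n:
        raise ValueError("CH needs 1 < k < n")
    overall = mean_point(points)
    between = within = 0.0
    for c in clusters:
        members = [p for p, label in zip(points, labels) if label == c]
        centroid = mean_point(members)
        # Between-cluster dispersion: size-weighted distance of centroid to overall mean.
        between += len(members) * squared_distance(centroid, overall)
        # Within-cluster dispersion: distances of members to their centroid.
        within += sum(squared_distance(p, centroid) for p in members)
    if within == 0.0:
        return float("inf")  # perfectly compact clusters
    return (between / (k - 1)) / (within / (n - k))


points = [[0.0, 0.0], [0.0, 1.0], [10.0, 0.0], [10.0, 1.0]]
print(calinski_harabasz(points, [0, 0, 1, 1]))  # well-separated split
print(calinski_harabasz(points, [0, 1, 0, 1]))  # poor split
```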

Table 15.

Results of predicting the number of senses using eight state-of-the-art clustering quality indexes.

| Index | Average F-measure |
|---|---|
| CH | 69.9% |
| DB | 21.2% |
| Duda | 64.0% |
| KL | 40.9% |
| Pseudot2 | 64.0% |
| Sdbw | 47.8% |
| Sdindex | 55.7% |
| Silhouette | 55.7% |

As shown in Fig. 4, where the y-axis is the average F-measure (F), our proposed clustering quality index ($f_k$) outperformed the other methods, including our own feature-based method, in predicting the number of senses on our dataset. Although our feature-based method performed slightly worse than our clustering quality index-based method, it outperformed all the other state-of-the-art clustering quality indexes. One possible reason is that our index and novel features were built using domain dictionaries such as UMLS and AGROVOC, which may better capture the characteristics of our dataset.

Fig. 4.

Results of the comparison between three state-of-the-art indexes and our methods.

4.4. Concept induction

In this section, we evaluate our method for inducing the possible concept(s) of an entity. We considered both polysemic and non-polysemic entities. The most difficult challenge is to identify the distinct senses of the entities classified as polysemic.

We evaluated concept induction by applying clustering algorithms over the bag-of-words representation, using the number of senses predicted in the previous section as the input to the clustering algorithms. We used the rb clustering algorithm, which gave the best results according to the CLUTO software. We used it with the two objective functions recommended in CLUTO, I1 and I2 (see Section 3.2). We then extracted the top-ranked 5, 10, and 20 keywords for each generated cluster with the tf-idf, Okapi BM25, and LIDF-value measures.

Finally, we compared the keywords with the UMLS definitions of each entity and measured the overlap between the keywords and each definition. The definitions were extracted from UMLS based on the CUIs provided in both datasets. Because an entity has multiple UMLS definitions and multiple clusters, definitions can be matched to clusters in several ways. For instance, “yellow fever” has two definitions and two clusters, so there are two possible matchings: “Definition 1 to cluster 1, Definition 2 to cluster 2” or “Definition 1 to cluster 2, Definition 2 to cluster 1”. In our evaluation, we automatically selected the matching that maximized the overlap rate between the cluster keywords and the definition words. Table 16 summarizes the overlap of the keywords with the definitions. tf-idf with I2 obtained the best results, with an average overlap rate of 42% (KW@5).
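The matching step can be sketched as a brute-force search over one-to-one assignments of definitions to clusters. This is feasible here because an entity has at most 5 senses, giving at most 5! = 120 assignments. The sketch assumes that the overlap rate of a cluster with a definition is the fraction of the cluster's keywords that occur as words in the definition, averaged over definitions. The paper does not give the exact formula, and the helper names are hypothetical. The example uses abridged keyword lists from Table 17 and the UMLS definitions of “yellow fever”.

```python
import re
from itertools import permutations


def overlap_rate(keywords: list[str], definition: str) -> float:
    """Fraction of keywords that appear as words in the definition (assumed metric)."""
    if not keywords:
        return 0.0
    definition_words = set(re.findall(r"[a-z0-9]+", definition.lower()))
    return sum(kw.lower() in definition_words for kw in keywords) / len(keywords)


def best_matching(cluster_keywords: list[list[str]], definitions: list[str]):
    """Try every one-to-one mapping of definitions to clusters; keep the best mean overlap.

    Returns (score, mapping) where mapping[d] is the cluster assigned to definition d.
    """
    if not definitions or len(cluster_keywords) < len(definitions):
        raise ValueError("need definitions and at least as many clusters")
    best_score, best_mapping = -1.0, None
    for mapping in permutations(range(len(cluster_keywords)), len(definitions)):
        score = sum(
            overlap_rate(cluster_keywords[c], definitions[d])
            for d, c in enumerate(mapping)
        ) / len(definitions)
        if score > best_score:
            best_score, best_mapping = score, mapping
    return best_score, best_mapping


clusters = [
    ["outbreak", "virus", "dengue", "mosquito"],
    ["vaccine", "vaccination", "17d", "response"],
]
definitions = [
    "An acute infectious disease primarily of the tropics, caused by a virus and "
    "transmitted to man by mosquitoes of the genera Aedes and Haemagogus.",
    "Vaccine used to prevent YELLOW FEVER. It consists of a live attenuated 17D "
    "strain of the YELLOW FEVER VIRUS",
]
print(best_matching(clusters, definitions))
```

Exact word matching misses near-synonyms such as “mosquito” versus “mosquitoes”, which illustrates why overlap rates understate semantic agreement.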

Table 16.

Average overlap rates (KW@5, KW@10, KW@20) of the extracted keywords with the UMLS entity definitions.

| Objective function and ranking measure | KW@5 | KW@10 | KW@20 |
|---|---|---|---|
| I1 and LIDF-value | 0.352 | 0.311 | 0.270 |
| I1 and Okapi BM25 | 0.380 | 0.326 | 0.259 |
| I1 and tf-idf | 0.404 | 0.326 | 0.276 |
| I2 and LIDF-value | 0.349 | 0.311 | 0.278 |
| I2 and Okapi BM25 | 0.399 | 0.324 | 0.270 |
| I2 and tf-idf | 0.420 | 0.355 | 0.280 |

4.4.1. Discussion

The overlap rates shown in Table 16 provide meaningful information as a performance metric for concept induction. However, they do not give the full picture: many UMLS entity definitions do not contain the exact keywords but instead contain words that are semantically similar to the extracted keywords.

Table 17 shows an example of keywords extracted per cluster for the entity “yellow fever”, where we can see that every cluster contains keywords strongly related to the concepts of the entity “yellow fever” (i.e., “vaccine” and “virus”).

Table 17.

Samples of keywords extracted per cluster for the entity “yellow fever”.

| CUI | Definition | Keywords |
|---|---|---|
| C0043395 | An acute infectious disease primarily of the tropics, caused by a virus and transmitted to man by mosquitoes of the genera Aedes and Haemagogus. The severe form is characterized by fever, HEMOLYTIC JAUNDICE, and renal damage | outbreak virus news outbreak news dengue disease epidemic mosquito fever fever virus |
| C0301508 | Vaccine used to prevent YELLOW FEVER. It consists of a live attenuated 17D strain of the YELLOW FEVER VIRUS | virus vaccination vaccine disease encephalitis fever vaccination yellow fever vaccination 17d vaccine response fever vaccine |

We also manually evaluated the induced concepts to check whether the extracted keywords conveyed the right semantics of each biomedical entity, using the UMLS definitions of the concepts as references. The evaluation was conducted on 174 entities of the polysemic dataset. Overall, 73.33% of the induced concepts adequately represented their UMLS definitions. We also reviewed the remaining 26.67%, whose induced concepts were judged to represent their UMLS definitions poorly, and made several observations about these low-quality clusters (induced concepts).

First, our framework extracts multiple clusters for each polysemic entity, each of which is supposed to represent a distinct sense. In the low-quality cases, we often observed that only some of the clusters conveyed the UMLS definitions of the corresponding biomedical entity, while the others did not. However, the clusters that did not correspond to any UMLS definition were still internally cohesive. One possibility is that these clusters represent new meanings (i.e., senses) of the biomedical entity that are not captured in UMLS.

Second, some of the UMLS definitions associated with a single entity are semantically close. Table 18 shows an example of a low-quality result automatically induced for the entity “HIV”, where the two distinct concepts defined in UMLS for “HIV” (C0019693 and C0019682) have very similar meanings.

Third, the keywords we extracted were single words rather than phrases, which may not convey the semantic information of a definition.

Fourth, our evaluation may also have been affected by the quality of the terminologies in UMLS. UMLS merges hundreds of terminology resources and contains many types of errors, including semantic [21,90] and lexical errors [90], as well as numerous redundancies [22]. For instance, a quality assurance study of UMLS in 2009 found errors in 81% of the concepts studied [90].

Table 18.

Keywords extracted for the induced concepts of the entity “HIV”.

| CUI | Definition | Keywords |
|---|---|---|
| C0019693 | Includes the spectrum of human immunodeficiency virus infections that range from asymptomatic seropositivity, thru AIDS-related complex (ARC), to acquired immunodeficiency syndrome (AIDS) | virus peptide replication vaccine activity infection antibody immunodeficiency host fusion |
| C0019682 | Human immunodeficiency virus. A non-taxonomic and historical term referring to any of two species, specifically HIV-1 and/or HIV-2. Prior to 1986, this was called human T-lymphotropic virus type III/lymphadenopathy-associated virus (HTLV-III/LAV). From 1986 to 1990, it was an official species called HIV. Since 1991, HIV was no longer considered an official species name; the two species were designated HIV-1 and HIV-2 | treatment prevention testing transmission research infection study youth risk group |

5. Conclusions

In this paper, we presented a framework for biomedical entity sense induction with three steps: (1) predicting whether a biomedical term is polysemic, (2) predicting the number of senses of a biomedical entity, and (3) inducing the concepts (senses) through ranked keyword extraction. The first two steps are novel; existing WSI frameworks have often neglected them.

Through extensive evaluations, we have shown that the novel features enable more effective polysemy classification. We also presented a novel approach to predicting the number of senses (clusters) of candidate biomedical entities. Its core contribution is a set of internal clustering quality indexes, which are used to select the number of senses. In addition, we used the direct and graph-based features from polysemy detection to predict the number of senses, which also yielded promising results. Given the predicted number of senses as a priori input, the clustering task for concept induction becomes straightforward. Keywords can then be easily extracted from the clusters to represent the different senses (concepts) of the biomedical entity.

As future work, different strategies could be considered to improve the proposed framework, such as introducing more features using other dictionaries like WordNet and BabelNet.

Supplementary Material

Acknowledgements

This work was supported in part by the French National Research Agency (ANR) grant ANR-12-JS02-01001, the University of Montpellier, the French National Center for Scientific Research (CNRS), the FINCyT program, Peru, the National Science Foundation (NSF) award #1734134, and the National Institutes of Health (NIH) grant UL1TR001427.

Footnotes

Conflict of interest

The authors declared that there is no conflict of interest.

Appendix A. Supplementary material

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.jbi.2018.06.007.

This paper is a significant extension of our previous studies published in the proceedings of: (1) The 10th International Conference on Language Resources and Evaluation (LREC’2016) titled, “Automatic biomedical term polysemy detection”; and (2) The 19th International Conference on Extending Database Technology (EDBT’2016) titled, “A way to automatically enrich biomedical ontologies”, with new scientific methodologies and results.

UMLS is a large biomedical thesaurus that is organized by concept or meaning and links similar names for the same concept from nearly 200 different ontologies and terminologies: http://www.nlm.nih.gov/research/umls.

MEDLINE is a bibliographic database of life sciences and biomedical information.

AGROVOC is a multilingual controlled vocabulary covering all areas of interest to the Food and Agriculture Organization of the United Nations: http://aims.fao.org/agrovoc.

MeSH is the NLM controlled vocabulary used for indexing articles in PubMed.

This approach was an expansion of our previous study published as a poster in EDBT 2016 [52].

The SVM algorithm uses a polynomial kernel.

BIOTEX is an application we previously built to automatically extract biomedical terms from free text: http://tubo.lirmm.fr/biotex/.

References

- [1].Agirre E, Edmonds P, Word Sense Disambiguation: Algorithms and Applications, first ed., Springer Publishing Company, Incorporated, 2007. [Google Scholar]

- [2].Agirre E, Soroa A, Semeval-2007 task 02: evaluating word sense induction and discrimination systems, Proceedings of the 4th International Workshop on Semantic Evaluations, SemEval ‘07, Stroudsburg, PA, USA, Association for Computational Linguistics, 2007, pp. 7–12. [Google Scholar]

- [3].Agirre E, Soroa A, UBC-AS: a graph based unsupervised system for induction and classification, Proceedings of the 4th International Workshop on Semantic Evaluations, SemEval ‘07, Stroudsburg, PA, USA, Association for Computational Linguistics, 2007, pp. 346–349. [Google Scholar]

- [4].Al-Mubaid H, Chen P, Biomedical term disambiguation: an application to gene-protein name disambiguation, Proceedings of the Third International Conference on Information Technology: New Generations, ITNG ‘06, IEEE Computer Society, Washington, DC, USA, 2006, pp. 606–612. [Google Scholar]

- [5].Al-Mubaid H, Gungu S, A learning-based approach for biomedical word sense disambiguation, Sci. World J (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Albano L, Beneventano D, Bergamaschi S, Word sense induction with multilingual features representation, 2014 IEEE/WIC/ACM International Joint Conferences on Web Intelligence (WI) and Intelligent Agent Technologies (IAT), vol. 2, IEEE, 2014, pp. 343–349. [Google Scholar]

- [7].Albano L, Beneventano D, Bergamaschi S, Multilingual word sense induction to improve web search result clustering, Proceedings of the 24th International Conference on World Wide Web Companion, WWW ‘15, New York, NY, USA, International World Wide Web Conferences Steering Committee, ACM, 2015, pp. 835–839. [Google Scholar]

- [8].Albatineh AN, Niewiadomska-Bugaj M, Mcs: a method for finding the number of clusters, J. Classif 28 (2) (2011) 184–209. [Google Scholar]

- [9].Anderson MJ, A new method for non-parametric multivariate analysis of variance, Austral Ecol. 26 (1) (2001) 32–46. [Google Scholar]

- [10].Baldwin T, Li Y, Alexe B, Stanoi IR, Automatic term ambiguity detection, Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 2013, pp. 804–809. [Google Scholar]

- [11].Blair W, Smith B, Nursing documentation: frameworks and barriers, Contemp. Nurse 41 (2) (2012) 160–168. [DOI] [PubMed] [Google Scholar]

- [12].Blei DM, Ng AY, Jordan MI, Latent Dirichlet allocation, J. Mach. Learn. Res 3 (2003) 993–1022. [Google Scholar]

- [13].Booth JG, Casella G, Hobert JP, Clustering using objective functions and stochastic search, J. Roy. Stat. Soc.: Ser. B (Stat. Methodol.) 70 (1) (2008) 119–139. [Google Scholar]

- [14].Bordag S, Word sense induction: triplet-based clustering and automatic evaluation, Proceedings of the 11th Conference of the European Chapter of the Association for Computational Linguistics, EACL’06, Association for Computational Linguistics, Trento, Italy, 2006, pp. 137–144. [Google Scholar]

- [15].Brody S, Lapata M, Bayesian word sense induction, Proceedings of the 12th Conference of the European Chapter of the Association for Computational Linguistics, Association for Computational Linguistics, 2009, pp. 103–111. [Google Scholar]

- [16].Calinski T, Harabasz J, A dendrite method for cluster analysis, Commun. Stat.-Theory Methods 3 (1) (1974) 1–27. [Google Scholar]

- [17].Camacho-Collados J, Pilehvar MT, Navigli R, Nasari: integrating explicit knowledge and corpus statistics for a multilingual representation of concepts and entities, Artif. Intell 240 (2016) 36–64. [Google Scholar]

- [18].Chasin R, Rumshisky A, Uzuner O, Szolovits P, Word sense disambiguation in the clinical domain: a comparison of knowledge-rich and knowledge-poor unsupervised methods, J. Am. Med. Inform. Assoc 21 (5) (2014) 842–849. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Chen P, Ding W, Bowes C, Brown D, A fully unsupervised word sense disambiguation method using dependency knowledge, Proceedings of Human Language Technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics, NAACL ‘09, Stroudsburg, PA, USA, Association for Computational Linguistics, 2009, pp. 28–36. [Google Scholar]

- [20].Choe DK, Chamiak E, Naive bayes word sense induction, Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, EMNLP ‘13, Seattle, Washington, USA, Association for Computational Linguistics, 2013, pp. 1433–1437. [Google Scholar]

- [21].Cimino JJ, Auditing the unified medical language system with semantic methods, J. Am. Med. Inform. Assoc 5 (1) (1998) 41–51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Cimino JJ, Battling scylla and charybdis: the search for redundancy and ambiguity in the 2001 umls metathesaurus, Proceedings of the AMIA Symposium, American Medical Informatics Association, 2001, p. 120. [PMC free article] [PubMed] [Google Scholar]

- [23].Cook P, Lau JH, McCarthy D, Baldwin T, Novel word-sense identification, Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, Dublin City University and Association for Computational Linguistics, 2014, pp. 1624–1635. [Google Scholar]

- [24].Davies DL, Bouldin DW, A cluster separation measure, IEEE Trans. Pattern Anal. Mach. Intell (2) (1979) 224–227. [PubMed] [Google Scholar]

- [25].Dehkordi MY, Boostani R, Tahmasebi M, A novel hybrid structure for clustering, Advances in Computer Science and Engineering, Springer, 2009, pp. 888–891. [Google Scholar]

- [26].Dorow B, Widdows D, Discovering corpus-specific word senses, Proceedings of the Tenth Conference on European Chapter of the Association for Computational Linguistics, EACL ‘03, vol. 2, Association for Computational Linguistics, Stroudsburg, PA, USA, 2003, pp. 79–82. [Google Scholar]

- [27].Duan W, Song M, Yates A, Fast max-margin clustering for unsupervised word sense disambiguation in biomedical texts, BMC Bioinformat. 10 (Suppl. 3) (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [28].Duda RO, Hart PE, et al. , Pattern Classification and Scene Analysis vol. 3, Wiley, New York, 1973. [Google Scholar]

- [29].Frermann L, Lapata M, A bayesian model of diachronic meaning change, TACL 4 (2016) 31–45. [Google Scholar]

- [30].Gordon AD, Classification, (Chapman & Hall/crc Monographs on Statistics & Applied Probability; ), 1999. [Google Scholar]

- [31].Halkidi M, Vazirgiannis M, Clustering validity assessment: finding the optimal partitioning of a data set, Proceedings of the 2001 IEEE International Conference on Data Mining, ICDM ‘01, Washington, DC, USA, IEEE Computer Society, 2001, pp. 187–194. [Google Scholar]

- [32].Halkidi M, Vazirgiannis M, Batistakis Y, Quality scheme assessment in the clustering process, Proceedings of the 4th European Conference on Principles of Data Mining and Knowledge Discovery, PKDD ‘00, London, UK, UK, Springer-Verlag, 2000, pp. 265–276. [Google Scholar]

- [33].Huang Y, Shi X, Su J, Chen Y, Huang G, Unsupervised word sense induction using rival penalized competitive learning, Eng. Appl. Artif. Intell 41 (C) (2015) 166–174. [Google Scholar]

- [34].Ide N, Erjavec T, Automatic sense tagging using parallel corpora, Natural Language Pacific Rim Symposium (artificial intelligence), NLPRS ‘01, 2001. [Google Scholar]

- [35].Javed O, Shafique K, Rasheed Z, Shah M, Modeling inter-camera space-time and appearance relationships for tracking across non-overlapping views, Comput. Vis. Image Underst 109 (2) (2008) 146–162. [Google Scholar]

- [36].Jimeno-Yepes A, Higher Order Features and Recurrent Neural Networks based on Long-short Term Memory Nodes in Supervised Biomedical Word Sense Disambiguation, CoRR, abs/1604.02506, 2016. [DOI] [PubMed] [Google Scholar]

- [37].Jimeno-Yepes AJ, Mclnnes BT, Aronson AR, Exploiting mesh indexing in medline to generate a data set for word sense disambiguation, BMC Bioinformat. 12 (1) (2011) 223 (bibi). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [38].Klapaftis IP, Manandhar S, Word sense induction using graphs of collocations, Proceedings of the 2008 Conference on ECAI 2008: 18th European Conference on Artificial Intelligence, ECAI ‘08, Amsterdam, The Netherlands, IOS Press, 2008, pp. 298–302. [Google Scholar]

- [39].Klapaftis IP, Manandhar S, Word sense induction & disambiguation using hierarchical random graphs, Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing, EMNLP ‘10, Stroudsburg, PA, USA, Association for Computational Linguistics, 2010, pp. 745–755. [Google Scholar]

- [40]. Kolesnikov A, Trichina E, Kauranne T, Estimating the number of clusters in a numerical data set via quantization error modeling, Pattern Recogn. 48 (3) (2015) 941–952.

- [41]. Koper M, im Walde SS, A rank-based distance measure to detect polysemy and to determine salient vector-space features for German prepositions, Proceedings of the Ninth International Conference on Language Resources and Evaluation, LREC’14, Reykjavik, Iceland, European Language Resources Association (ELRA), 2014, pp. 4459–4466.

- [42]. Korkontzelos I, Manandhar S, UoY: graphs of unambiguous vertices for word sense induction and disambiguation, Proceedings of the 5th International Workshop on Semantic Evaluation, SemEval ’10, Stroudsburg, PA, USA, Association for Computational Linguistics, 2010, pp. 355–358.

- [43]. Krzanowski WJ, Lai Y, A criterion for determining the number of groups in a data set using sum-of-squares clustering, Biometrics (1988) 23–34.

- [44]. Lau JH, Cook P, McCarthy D, Newman D, Baldwin T, Word sense induction for novel sense detection, Proceedings of the 13th Conference of the European Chapter of the Association for Computational Linguistics, Association for Computational Linguistics, 2012, pp. 591–601.

- [45]. Lau JH, Cook P, McCarthy D, Newman D, Baldwin T, Word sense induction for novel sense detection, Proceedings of the 13th Conference of the European Chapter of the Association for Computational Linguistics, EACL ’12, Stroudsburg, PA, USA, Association for Computational Linguistics, 2012, pp. 591–601.

- [46]. Lee YK, Ng HT, An empirical evaluation of knowledge sources and learning algorithms for word sense disambiguation, Proceedings of the ACL-02 Conference on Empirical Methods in Natural Language Processing, EMNLP ’02, vol. 10, Association for Computational Linguistics, Stroudsburg, PA, USA, 2002, pp. 41–48.

- [47]. Liang J, Zhao X, Li D, Cao F, Dang C, Determining the number of clusters using information entropy for mixed data, Pattern Recogn. 45 (6) (2012) 2251–2265.

- [48]. Lin D, Automatic retrieval and clustering of similar words, Proceedings of the 36th Annual Meeting of the Association for Computational Linguistics and 17th International Conference on Computational Linguistics, ACL-COLING ’98, vol. 2, Association for Computational Linguistics, Stroudsburg, PA, USA, 1998, pp. 768–774.

- [49]. Lossio-Ventura JA, Jonquet C, Roche M, Teisseire M, BIOTEX: a system for biomedical terminology extraction, ranking, and validation, Proceedings of the 13th International Semantic Web Conference, Posters & Demonstrations Track, ISWC’14, 2014, pp. 157–160.

- [50]. Lossio-Ventura JA, Jonquet C, Roche M, Teisseire M, Automatic biomedical term polysemy detection, Proceedings of the Tenth International Conference on Language Resources and Evaluation, LREC’2016, Paris, France, European Language Resources Association (ELRA), 2016, pp. 1684–1688.

- [51]. Lossio-Ventura JA, Jonquet C, Roche M, Teisseire M, Biomedical term extraction: overview and a new methodology, Inform. Retrieval J 19 (1–2) (2016) 59–99.

- [52]. Lossio-Ventura JA, Jonquet C, Roche M, Teisseire M, A way to automatically enrich biomedical ontologies, Proceedings of the 19th International Conference on Extending Database Technology, EDBT’2016, New York, NY, USA, ACM, 2016, pp. 676–677.

- [53]. Manandhar S, Klapaftis IP, Dligach D, Pradhan SS, SemEval-2010 task 14: word sense induction & disambiguation, Proceedings of the 5th International Workshop on Semantic Evaluation, SemEval ’10, Stroudsburg, PA, USA, Association for Computational Linguistics, 2010, pp. 63–68.

- [54]. McCarthy D, Apidianaki M, Erk K, Word sense clustering and clusterability, Comput. Linguist 42 (2) (2016) 245–275.

- [55]. Milligan GW, Cooper MC, An examination of procedures for determining the number of clusters in a data set, Psychometrika 50 (2) (1985) 159–179.

- [56]. Mirkin B, Choosing the number of clusters, Wiley Interdiscip. Rev.: Data Min. Knowl. Discov 1 (3) (2011) 252–260.

- [57]. Navigli R, A quick tour of word sense disambiguation, induction and related approaches, SOFSEM 2012: Theory and Practice of Computer Science, Springer, 2012, pp. 115–129.

- [58]. Navigli R, Crisafulli G, Inducing word senses to improve web search result clustering, Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing, EMNLP ’10, Stroudsburg, PA, USA, Association for Computational Linguistics, 2010, pp. 116–126.

- [59]. Navigli R, Vannella D, SemEval-2013 task 11: Word Sense Induction and Disambiguation within an End-user Application, vol. 2, 2013, pp. 167–174.

- [60]. Niu Z-Y, Ji D-H, Tan C-L, I2R: three systems for word sense discrimination, Chinese word sense disambiguation, and English word sense disambiguation, Proceedings of the 4th International Workshop on Semantic Evaluations, SemEval ’07, Stroudsburg, PA, USA, Association for Computational Linguistics, 2007, pp. 177–182.

- [61]. Noh T-G, Park S-B, Lee S-J, Unsupervised word sense disambiguation in biomedical texts with co-occurrence network and graph kernel, Proceedings of the ACM Fourth International Workshop on Data and Text Mining in Biomedical Informatics, DTMBIO ’10, New York, NY, USA, ACM, 2010, pp. 61–64.

- [62]. Pakhomov SV, Finley G, McEwan R, Wang Y, Melton GB, Corpus domain effects on distributional semantic modeling of medical terms, Bioinformatics 32 (23) (2016) 3635–3644.

- [63]. Pantel P, Lin D, Discovering word senses from text, Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ACM, 2002, pp. 613–619.

- [64]. Pedersen T, UMND2: SenseClusters applied to the sense induction task of Senseval-4, Proceedings of the 4th International Workshop on Semantic Evaluations, SemEval ’07, Stroudsburg, PA, USA, Association for Computational Linguistics, 2007, pp. 394–397.

- [65]. Pedersen T, Duluth-WSI: SenseClusters applied to the sense induction task of SemEval-2, Proceedings of the 5th International Workshop on Semantic Evaluation, Stroudsburg, PA, USA, Association for Computational Linguistics, 2010, pp. 363–366.

- [66]. Pedersen T, Bruce R, Distinguishing word senses in untagged text, Second Conference on Empirical Methods in Natural Language Processing, EMNLP ’97, 1997, pp. 197–207.

- [67]. Pinto D, Rosso P, Jimenez-Salazar H, UPV-SI: word sense induction using self term expansion, Proceedings of the 4th International Workshop on Semantic Evaluations, Association for Computational Linguistics, 2007, pp. 430–433.

- [68]. Purandare A, Pedersen T, SenseClusters: finding clusters that represent word senses, Demonstration Papers at HLT-NAACL 2004, Association for Computational Linguistics, 2004, pp. 26–29.

- [69]. Purandare A, Pedersen T, Word sense discrimination by clustering contexts in vector and similarity spaces, Proceedings of the Conference on Computational Natural Language Learning, CoNLL, vol. 72, 2004.

- [70]. Rousseeuw PJ, Silhouettes: a graphical aid to the interpretation and validation of cluster analysis, J. Comput. Appl. Math 20 (1987) 53–65.

- [71]. Sabbir AKM, Jimeno-Yepes A, Kavuluru R, Knowledge-based Biomedical Word Sense Disambiguation with Neural Concept Embeddings and Distant Supervision, CoRR, abs/1610.08557, 2016.

- [72]. Savova G, Pedersen T, Resolving Ambiguities in Biomedical Text with Unsupervised Clustering Approaches, University of Minnesota Supercomputing Institute Research Report, 2005.

- [73]. Schütze H, Dimensions of meaning, Proceedings of Supercomputing’92, IEEE, 1992, pp. 787–796.

- [74]. Schütze H, Automatic word sense discrimination, Comput. Linguist 24 (1) (1998) 97–123.

- [75]. Sehgal AK, Srinivasan P, Bodenreider O, Gene terms and English words: an ambiguous mix, Proc. of the ACM SIGIR Workshop on Search and Discovery for Bioinformatics, Sheffield, UK, Citeseer, 2004.

- [76]. Stevenson M, Guo Y, Disambiguation in the biomedical domain: the role of ambiguity type, J. Biomed. Inform 43 (6) (2010) 972–981.

- [77]. Tang G, Xia Y, Sun J, Zhang M, Zheng TF, Statistical word sense aware topic models, Soft. Comput 19 (2014) 1–15.

- [78]. Teh YW, Jordan MI, Beal MJ, Blei DM, Hierarchical Dirichlet processes, J. Am. Stat. Assoc 101 (476) (2006) 1566–1581.

- [79]. Udani G, Dave S, Davis A, Sibley T, Noun sense induction using web search results, Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’05, New York, NY, USA, ACM, 2005, pp. 657–658.

- [80]. Van de Cruys T, Apidianaki M, Latent semantic word sense induction and disambiguation, Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, vol. 1, Association for Computational Linguistics, 2011, pp. 1476–1485.

- [81]. van Dongen S, Graph Clustering by Flow Simulation (Ph.D. Thesis), University of Utrecht, 2000.

- [82]. Veronis J, HyperLex: lexical cartography for information retrieval, Comput. Speech Lang 18 (3) (2004) 223–252.

- [83]. Wang J, Bansal M, Gimpel K, Ziebart B, Yu C, A sense-topic model for word sense induction with unsupervised data enrichment, Trans. Assoc. Comput. Linguist 3 (2015) 59–71.

- [84]. Wang Y, Zheng K, Xu H, Mei Q, Clinical word sense disambiguation with interactive search and classification, AMIA Annual Symposium Proceedings, vol. 2016, American Medical Informatics Association, 2016, p. 2062.

- [85]. Widdows D, Dorow B, A graph model for unsupervised lexical acquisition, Proceedings of the 19th International Conference on Computational Linguistics, COLING ’02, Stroudsburg, PA, USA, vol. 1, Association for Computational Linguistics, 2002, pp. 1–7.

- [86]. Xu H, Markatou M, Dimova R, Liu H, Friedman C, Machine learning and word sense disambiguation in the biomedical domain: design and evaluation issues, BMC Bioinformat. 7 (1) (2006) 334.

- [87]. Yan M, Methods of Determining the Number of Clusters in a Data Set and a New Clustering Criterion (Ph.D. Thesis), Virginia Polytechnic Institute and State University, 2005.

- [88]. Yao X, Van Durme B, Nonparametric Bayesian word sense induction, Proceedings of TextGraphs-6: Graph-based Methods for Natural Language Processing, TextGraphs-6, Stroudsburg, PA, USA, Association for Computational Linguistics, 2011, pp. 10–14.

- [89]. Yu H, Liu Z, Wang G, An automatic method to determine the number of clusters using decision-theoretic rough set, Int. J. Approx. Reason 55 (1) (2014) 101–115.

- [90]. Zhu X, Fan J-W, Baorto DM, Weng C, Cimino JJ, A review of auditing methods applied to the content of controlled biomedical terminologies, J. Biomed. Inform 42 (3) (2009) 413–425.
