Abstract

Aberrant expressions of long non-coding RNAs (lncRNAs) are often associated with diseases and identification of disease-related lncRNAs is helpful for elucidating complex pathogenesis. Recent methods for predicting associations between lncRNAs and diseases integrate their pertinent heterogeneous data. However, they failed to deeply integrate topological information of heterogeneous network comprising lncRNAs, diseases, and miRNAs. We proposed a novel method based on the graph convolutional network and convolutional neural network, referred to as GCNLDA, to infer disease-related lncRNA candidates. The heterogeneous network containing the lncRNA, disease, and miRNA nodes, is constructed firstly. The embedding matrix of a lncRNA-disease node pair was constructed according to various biological premises about lncRNAs, diseases, and miRNAs. A new framework based on a graph convolutional network and a convolutional neural network was developed to learn network and local representations of the lncRNA-disease pair. On the left side of the framework, the autoencoder based on graph convolution deeply integrated topological information within the heterogeneous lncRNA-disease-miRNA network. Moreover, as different node features have discriminative contributions to the association prediction, an attention mechanism at node feature level is constructed. The left side learnt the network representation of the lncRNA-disease pair. The convolutional neural networks on the right side of the framework learnt the local representation of the lncRNA-disease pair by focusing on the similarities, associations, and interactions that are only related to the pair. Compared to several state-of-the-art prediction methods, GCNLDA had superior performance. Case studies on stomach cancer, osteosarcoma, and lung cancer confirmed that GCNLDA effectively discovers the potential lncRNA-disease associations.

Keywords: graph convolutional network, convolutional neural network, lncRNA-disease association prediction, attention mechanism at node feature level

1. Introduction

Long non-coding RNAs (lncRNAs) are non-coding RNAs with more than 200nt (nucleotides) in length [1]. There is mounting evidence that lncRNAs participate in the development and progression of numerous diseases [2,3]. Mutations and disorders of lncRNAs are associated with breast and colon cancer, atherosclerosis, and neurodegenerative diseases [4,5,6,7]. Therefore, identification of disease-related lncRNAs may help elucidate pathogenesis.

Computational biology techniques are essential and often used in many fields of biomedicine, ranging from the discovery of biomarkers to the development of drugs [8]. Machine learning and deep learning are being increasingly used to solve the most challenging problems [9,10,11,12,13,14,15]. In recent years, computational methods have been proposed to predict the associations between diseases and lncRNAs. These techniques can reliably screen disease-related lncRNA candidates. One forecasting method is the use of biological information related to the lncRNAs to infer potential lncRNA-disease associations such as genome location and tissue specificity. The lncRNAs near each other in the genome are often associated with similar diseases. Thus, Chen et al. and Li et al. proposed methods for predicting lncRNA-disease associations using genomic location data [16,17]. However, they cannot be applied to lncRNAs without first identifying the adjacent genes. Liu et al. and Biswas et al. used tissue specificity to predict potential disease-related lncRNAs [18,19]. However, this approach does not work for diseases without related tissue-specific gene records and cannot, therefore, predict their potential related lncRNAs.

Another forecasting method is based on machine learning prediction. Chen et al. developed a computational model based on Laplacian regularized least squares (LRLSLDA) to predict lncRNA-disease associations [20]. Chen et al. and Huang et al. optimized the similarity calculation method based on LRSLDA to improve its prediction performance [21,22,23]. However, these methods did not integrate multiple biological data related to the lncRNAs. The bipartite network was constructed using known lncRNA-disease associations to predict the potential lncRNA-disease associations [24,25]. Nevertheless, these methods are ineffective for diseases without known related lncRNAs. Potential lncRNA-disease associations are also inferred from random walk algorithms in heterogeneous networks containing disease and lncRNA nodes [26,27,28,29,30]. On the other hand, these methods depend on network topology data and the prediction results are biased towards disease nodes known to be associated with several lncRNAs.

Forecasting may also be performed by integrating various data sources related to lncRNAs or diseases such as the proteins and micro RNAs (miRNAs) interacting with lncRNAs and proteins associated with disease and so on. Lan et al. used the Karcher mean to merge numerous lncRNA and disease similarities calculated from multiple data sources [31,32]. They then identified potential lncRNA-disease associations based on a bagging support-vector machine (SVM) [32]. Certain matrix factorization-based prediction methods merge various data related to lncRNA, disease, and proteins [33,34]. However, none of the forecasting methods mentioned in this paragraph deeply integrate the topology information of the heterogeneous network.

In this study, we propose a model based on the graph convolution and convolution neural network, named GCNLDA, to predict potential lncRNA-disease associations. GCNLDA makes full use of topological information of lncRNA-disease-miRNA heterogeneous networks and data of similarities, correlations, and interactions among lncRNAs, diseases, and miRNAs. We constructed a heterogeneous network composed of lncRNA, miRNA, and disease nodes. The nodes were connected based on their similarities, associations, and interactions. We also constructed an embedding matrix of lncRNA-disease node pairs based on several biological premises regarding the probable associations between lncRNAs and diseases. A new framework based on a graph convolution and convolution neural network was developed to learn the network—and local representations of lncRNA-disease node pairs. The frame was made of two parts—the left and the right. On the left side of the framework, the autoencoder based on the graph convolution combines the attention mechanism of the node feature level to integrate the topological information of the heterogeneous lncRNA-disease-miRNA network. The right side of the framework focuses on learning the local representation of the lncRNA-disease node via the correlations among similarity, association, and interaction. A fivefold cross-validation showed that GCNLDA performance is significantly superior to other state-of-the-art prediction methods. Case studies on stomach cancer, osteosarcoma, and lung cancer confirmed that GCNLDA may successfully infer potential disease-associated lncRNA candidates.

2. Materials and Methods

2.1. Dataset for lncRNA-Disease Association Prediction

Data of lncRNA disease associations, lncRNA-miRNA interactions, and miRNA-disease correlations were obtained from previous reports [33]. Fu et al. extracted data for 2687 lncRNA-disease associations from LncRNADisease, lnc2cancer, and GeneRIF databases [16,35,36]. The original 1002 lncRNA-miRNA interaction and 5218 miRNA-disease association data were obtained from Starbase and the Human microRNA Disease Database (HMDD), respectively [37,38]. Semantic disease similarities were derived from the Dincrna database [39]. The associations, interrelationships, and similarities were compiled for 240 lncRNAs, 402 diseases, and 495 miRNAs.

2.2. Prediction Method Based on Graph Convolutional Network and Convolutional Neural Network

Our goal was to predict potential lncRNA-disease associations. A heterogeneous node network including lncRNA, disease, and miRNA was constructed. The embedding matrix of the lncRNA-disease node pairs was constructed based on several biological premises. The graph convolutional network module combined with the attention mechanism on the left side of the framework learned the network representation of the lncRNA-disease node pair. The convolutional neural network on the right side of the framework learned the local representation of the lncRNA-disease node pair. A combined strategy was used to obtain the final likelihood score of the association between the lncRNA and the disease. Here, the process is described using the lncRNA and the disease as examples.

2.2.1. Construction of the lncRNA-Disease-miRNA Network

A heterogeneous network was constructed and named LncDisMirNet. It consisted of the nodes lncRNA, miRNA, and disease. The LncDisMirNet comprised the lncRNA network (LncNet), the disease network (DisNet), the miRNA network (MirNet), and three types of connecting edges; which respectively represent the interaction between lncRNAs and miRNAs, the association between lncRNAs and diseases, and the association between miRNAs and diseases.

2.2.2. Construction of the lncRNA, miRNA, and Disease Networks

Two lncRNAs are usually associated with similar diseases if their functions are similar. Chen et al. calculated the functional similarity among lncRNAs [21]. To construct the lncRNA network, the similarity between two lncRNA nodes was determined by Chen’s method and an edge was added to connect them when their similarity was > 0. The weight of the edge was set to the similarity value (Figure 1a). The matrix denotes LncNet, where is the similarity between and and is the number of lncRNAs.

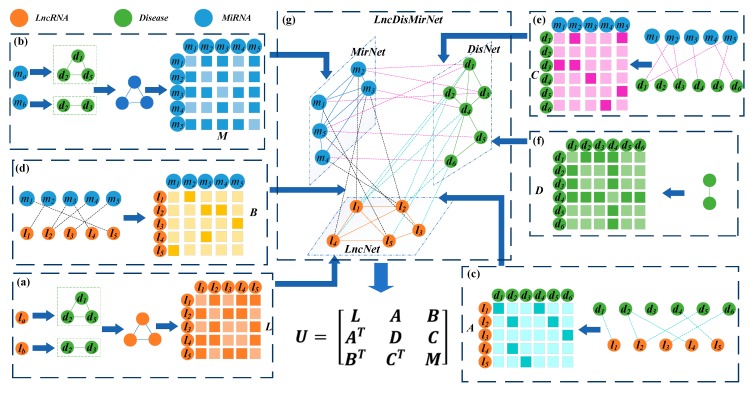

Figure 1.

Construction and representation of a heterogeneous network with three different nodes. (a) LncRNA network (LncNet) and its adjacency matrix L were constructed by calculating the functional similarity of the lncRNAs according to their associated diseases. (b) Calculation of the functional similarity of the lncRNAs based on their related diseases and construction of miRNA network (MirNet) and the adjacency matrix M. (c) Establishment of the connexion between LncNet and disease network (DisNet) based on known lncRNA-disease associations and construction of the adjacency matrix A. (d) Connexion of LncNet and MirNet according to known interactions between lncRNAs and miRNAs and construction of the adjacency matrix B. (e) Connexion of the miRNAs and diseases according to known miRNA-disease associations and construction of the adjacency matrix C. (f) Computation of the similarities based on the DAGs of the diseases and construction of DisNet and the adjacency matrix D. (g) LncNet, DisNet, MirNet, and the connexions among them were used to construct the heterogeneous network LncDisMirNet and its adjacency matrix U.

The same method was applied to determine the similarity between miRNAs and construct the network MirNet composed of miRNA nodes (Figure 1b). The matrix was used to represent the MirNet with miRNA nodes. represents the similarity between miRNA and .

Wang et al. calculated the similarity between two diseases [40]. This method represented a disease by using a directed acyclic graph (DAG) comprising all annotations related to it. Here, disease similarity was used to construct the DisNet network, and the matrix represented it. represents the similarity between disease and disease , and is the number of diseases (Figure 1f).

The connexion between the LncNet and DisNet nodes was established using the known lncRNA-disease correlation data. If the lncRNA node in LncNet is associated with a disease node in DisNet, an edge is added to connect them. The matrix denotes the set of edges. When , there is an association between lncRNA and disease . When , there is no association between them (Figure 1c).

Connexions between LncNet and MirNet and between DisNet and MirNet were established based on the data of the lncRNA-miRNA interaction and the miRNA-disease association. If lncRNA (disease ) in LncNet (DisNet) interacts (associate) with miRNA in MirNet, then . If not, then . The matrices and represented the connexions between LncNet and MirNet and between DisNet and MirNet, respectively (Figure 1d,e).

The heterogeneous network LncDisMirNet was constructed by combining LncNet, DisNet, and MirNet. LncDisMirNet is denoted by the matrix ,

| (1) |

where , and , , are transpose matrices of A, B, and C, respectively (Figure 1g).

2.2.3. Attention Mechanism on the Left Side of the Framework

The attention mechanism in a deep learning technique is similar to the visual attention mechanism in humans. The core goal was to select the information that was more critical to a given task. By applying our proposed attention mechanism, each feature of the nodes is assigned a different weight.

As shown in Figure 1g, the ith row in reflects the topology information between the ith node and all others in the network. For example, contains similarity links between lncRNA and , association links between and disease , and interaction links between and miRNA . Similarly, contains the links of disease to all lncRNAs, diseases, and miRNAs. Therefore, is the topology feature vector of the ith node in LncMirDisNet. The topology feature vector of the node is and that for the node is (Figure 2).

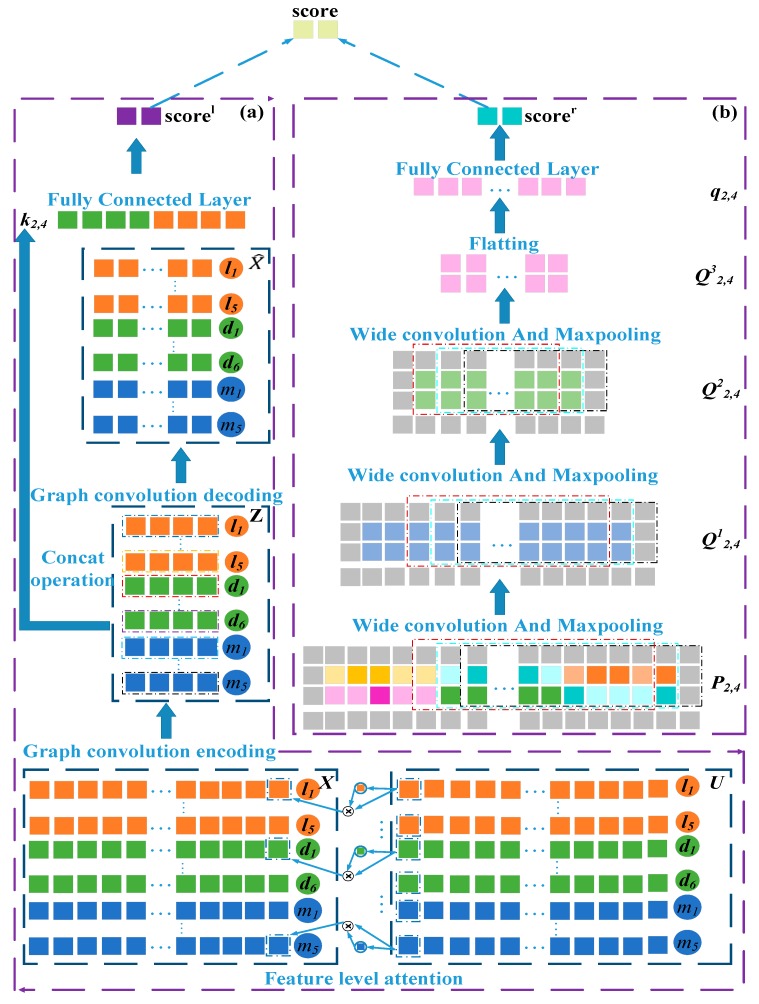

Figure 2.

Overall model structure. (a) Establish the attention mechanism at the feature levels and the autoencoder based on graph convolution. (b) Construct the convolutional and pooling layers.

The various features of the lncRNA and disease nodes contribute differently and uniquely to the association prediction. Thus, an attention mechanism was established at the node feature level to extract the important features of the association prediction. The attention scores of each node feature are defined as follows,

| (2) |

where and are parametric matrices, is a bias vector and is the activation function. The vector is the attention score vector of each feature of , where is the attention score of the jth feature of . was used to normalize the attention scores for all features of ,

| (3) |

where is the feature-level attention weight vector of , and is the weight of the kth feature of . Therefore, the node enhancement vector based on the feature-level attention mechanism is,

| (4) |

where is the element-wise product operator and is the enhancement vector of . The enhancement vectors of the lncRNA node and the disease node are and , respectively.

2.2.4. Graph Convolutional Network Module on the Right Side of the Framework

The graph convolutional network is a multilayer neural network proposed by Tomas Kpif in 2017 [41]. It uses the graph as an input, integrates the neighborhood node feature and structure information of the graph nodes, and represents them as a vector. Graph convolutional networks have been successfully applied towards the prediction of multidrug side effects, social networks, recommendation system and prediction of drug-target interactions [42,43,44,45]. Here, the graph convolutional network was used to predict lncRNA-disease associations. The heterogeneous network LncDisMirNet has connexions based on lncRNA, disease, and miRNA similarity, lncRNA-disease and miRNA-disease associations, and lncRNA-miRNA interactions. These are consistent so the entire heterogeneous network U is used as the input for the graph convolution.

First, is the adjacency matrix with added self-connections, where is the identity matrix. Then a symmetric Laplace normalization was performed on to get ,

| (5) |

where is a diagonal matrix such that , is actually the degree matrix of . The graph convolution autoencoder takes in the structure matrix and the node feature matrix as inputs. And the graph convolution autoencoder encodes the nodes in LncDisMirNet to obtain network representations of the lncRNA, disease, and miRNA nodes,

| (6) |

where is a weight matrix and n is a hyper-parameter. The matrix is multiplied by . This operation can be understood as an aggregation of spatial information. If = , where , the ith row in the matrix can be understood as the feature vector of the ith node. and are multiplied to map the nodes to the low-dimensional vector . As shown in Figure 2, the second row and the ninth row in the matrix are network representations of and , respectively.

Furthermore, we traced back to its original feature space. was subsequently decoded on the basis of the graph convolution,

| (7) |

is a parameter matrix and is the activation function. To make and as consistent as possible, the loss function of the graph convolution autoencoder was defined as MSE (mean-square error),

| (8) |

The network representations of the lncRNA nodes and of the disease nodes obtained by graph convolutional neural networks were then combined to obtain the network representation of the node pairs -,

| (9) |

As shown in Figure 2, the second row and the ninth row in the matrix are network representations of and , respectively. and were concatenated to get and then projected onto a C (C = 2) class association probability distribution using fully connected and softmax layers. In this two-class distribution pl, class 0 means that and are not associated whilst class 1 indicates association between and . The probability of class 1 was taken as the predictive of the association between and ,

| (10) |

where is the parameter matrix of the fully connected layer and is the bias term. measures the likelihood of association between lncRNA and disease , and the greater its value, the more likely they are to be associated. The probability in which and may be correlated can be obtained by the same method.

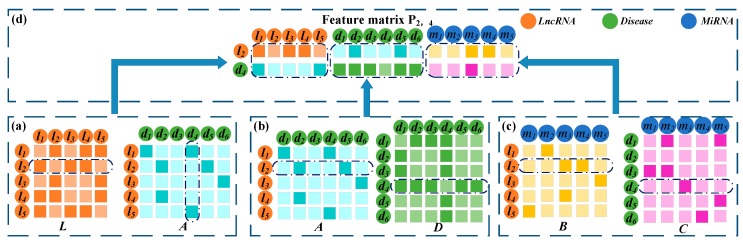

2.2.5. Construction of the Embedding Matrix of lncRNA-Disease Node Pairs

The and serve to illustrate the process of constructing embedding matrix as shown in Figure 3. If and have similarities and associations with common lncRNAs, the likelihood of association between them is high. In the matrices L and A, and have similarities and associations, respectively, with . Thus, there may be an association between them. The second row of L records the similarity between and all lncRNAs. The fourth column of A records the associations between and all lncRNAs. These were spliced together as the first part of the embedding matrix . Similarly, if and have connexions with common miRNAs and diseases, they are more likely to be associated. The second row of A and the fourth row of D were combined as the second part of . Finally, the second row of B and the fourth row of C were combined as the third part of . So far, lncRNA similarity, disease similarity, lncRNA-disease association, lncRNA-miRNA interaction, and disease-miRNA association were integrated to construct the embedding matrix of the node pair -. The same method is used to construct the embedding matrix for the other lncRNA-disease node pairs -.

Figure 3.

Construction of the embedding matrix of - pair. (a) Construction of the first part of the embedding matrix based on the similarity between and the other lncRNAs and the association between and all lncRNAs. (b) The second part of the embedding matrix was constructed based on the similarity between and the other lncRNA and the association between and the other diseases. (c) Construction of the third part using the lncRNA-miRNA interactions and miRNA-disease associations. (d) Construction of the final embedding matrix by combining the representations of the first, second, and third parts.

2.2.6. Convolutional Neural Networks Module on the Left Side of the Framework

The embedding matrix of node pairs - served as the input of the convolutional neural network to learn the local representation of -. To learn the marginal information of during the convolution process, a zero-padding operation was run on to obtain , to be precise, pad zeros around were operated, where and . In the first convolution layer, the filter length and width were set to and , respectively. If the number of filters is , the convolution filter is applied to to obtain the first feature maps . The area and process of convolution are defined as follows,

| (11) |

| (12) |

where is the region covered by the sliding window when filter slides to the mth row and the nth column of . is the activation function, and is the kth bias vector. If convolution filter is applied to the embedding matrix of node pairs -, the first feature map will be obtained.

Robust features can be extracted from feature map by applying max-pooling. In the pooling layer, the max-pooling operation was performed on to obtain the feature representation ,

| (13) |

where and are the length and width of the pooling layer sliding window, respectively. is the region covered by the sliding window when pooling window slides to the mth row and the nth column of . Robust features are extracted from this region. If max-pooling was performed on the feature maps of node pair -, the feature representation will be obtained. Next, we will continue to use node pairs - as an example.

was used as the input of the second convolution layer to obtain the feature representation after the convolution and max-pooling operations. Convolution and max-pooling were also run on in the third convolution layer and the pooling layer to obtain the feature representation . and are respectively the length and width of the feature representation after three convolutions and pooling. was flattened into the vector . Similarly, the fully connected and SoftMax layers served to project onto the C (C = 2)-associated probability distribution pr of class C (C = 2). The probability class 1 was taken as the predictive of the association between and ,

| (14) |

where is the parameter matrix of the fully connected layer and is the bias term. measures the probability of association between lncRNA and disease . The higher its value is, the more likely the association is between them. The probability in which and may be correlated can be obtained by the same method.

2.3. Combination Strategy

The left and right sides of the model analyzed the relationship between lncRNA and disease from different perspectives. To combine their characteristics and improve model performance, a combination strategy was designed for the final prediction.

The cross-entropy loss between the association prediction distribution pl and the real distribution on the left side of the model is defined as follows,

| (15) |

where T is the number of training samples and z is the sample label. The cross-entropy loss on the right side of the model is defined as follows,

| (16) |

The final association prediction of and is the weighted sum of and ,

| (17) |

evaluates the contributions of the left and right sides of the model.

2.4. Reducing Overfitting

There are many parameters in our neural network. The higher the number of parameters, the easier it is to cause over-fitting. The recent technique, “dropout”, consists of setting the output of each hidden neuron to zero with a probability of 0.5. The neurons that are “dropped out” in this way do not participate in the forward pass and back-propagation [46]. Thus, every time an input is presented, the neural network samples a different architecture, but all these architectures share weights. This technique reduces intricate co-adaptation of neurons, because a neuron cannot depend on the existence of other neurons. Therefore, it is forced to learn robust and beneficial features in conjunction with different random subsets of other neurons. During the test, we multiplied the output of all the neurons by 0.5, which reasonably approximates the geometric mean of the predictive distributions produced exponentially by many dropout networks.

3. Results and Discussion

3.1. Performance Evaluation Metrics

We used fivefold cross-validation to evaluate and compare the performance of GCNLDA with other state-of-the-art prediction methods. If there is an association between lncRNA and disease , then the node pair is regarded as a positive example. In contrast, the lack of association indicates that is a negative example. In the whole dataset, there were far fewer positive than negative examples. This discrepancy created a class imbalance affecting the model training. Therefore, we must randomly extract the same number of negative examples as the total number of positive samples from the dataset then randomly divide them into five equal subsets. All positive examples were also partitioned into five subsets of equal size. Four subsets each from the positive and negative examples were used to train the prediction model. All remaining samples were used for testing. Before each cross-validation, we removed the lncRNA-disease associations to be used for testing purposes then recalculated the similarity of the lncRNAs with the remaining associations.

We used the trained model to estimate the association prediction scores of the test samples then ranked them in descending order. When the association prediction score between lncRNA and disease was > θ (a threshold), this example was deemed positive. Otherwise, it was scored as a negative example. We used TP and TN to represent the numbers of correctly identified positive and negative example, respectively. FN and FP represented the numbers of misidentified positive and negative examples, respectively. The TPR (true positive rate), FPR (false positive rate), Precision (precision), and Recall (recall rate) were calculated as follows,

| (18) |

| (19) |

The TPRs, FPRs, Precisions, and Recalls were calculated by changing θ. The TPRs and FPRs were used to plot the receiver operating characteristic (ROC) curve. The area under the ROC curve (AUC) was used to measure the global performance of the prediction method. To improve the assessment of the model performance in the event of class imbalance, we plotted the precision-recall (PR) curve based on the calculated precisions and recalls. The area under the PR curve (AUPR) also quantified the overall performance of the prediction method. GCNLDA’s AUCs and AUPRs during each cross-validation are listed in Supplementary Table S1.

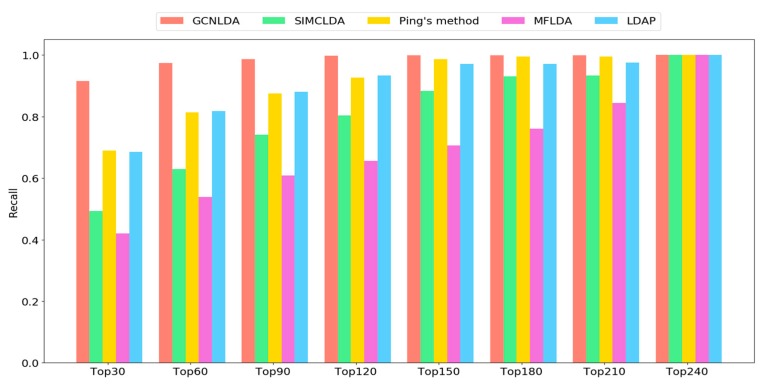

The preceding equation shows that recall is the ratio of correctly identified positive examples to all positive examples. The number of positive examples appearing as top k lncRNA candidates of the disease increases with the corresponding recall. Researchers usually select the top-ranked candidates from the prediction results for experimental verification. Thus, it is reasonable to use high Recall values. Therefore, we also calculated the recall values of the top 30, 60, 90…210, 240 candidates for ten diseases.

3.2. Comparison with Other Methods

GCNLDA’s hyperparameters,, n, , , , , and were tuned. The values of and n were selected from {0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9} and {50, 100, 200, 300, 400, 500}, respectively. The values of , , were selected from {5, 10, 20, 30, 40, 50, 60, 70}. The , values were selected from {1, 2, 3, 5, 7, 9, 11, 12, 14, 16, 18, 20}. GCNLDA’s yielded the best performance when , n = 100, , , , and . The optimal set parameters were obtained using a grid search.

In order to evaluate the ability of our model to predict lncRNA-disease associations, we compared it with other state-of-the-art prediction methods including Ping’s method [25], LDAP [32], MFLDA [33], and SIMCLDA [34]. We adjusted the parameters of GCNLDA based on the cross-validation to optimize its prediction performance. On the left side of the model, network node representations with n = 100 were obtained from the graph convolution encoding operation. The learning rate of the autoencoder was set to 0.001. On the right side of the model, = 20 filters, = 30 filters, and = 40 filters of length = 3 and width = 11 were used in three convolution layers. The learning rate was set to 0.0005. The parameters were updated by the Adam optimization algorithm throughout the training process. ReLu was the activation function for all fully connected layers. The optimal parameters of other methods are obtained through grid search. For SIMCLDA, = 0.8, = 0.6, and = 1; for Ping’s method, α = 0.6; for MFLDA, α = 105; for LDAP, gap open = 10, and gap extend = 0.5.

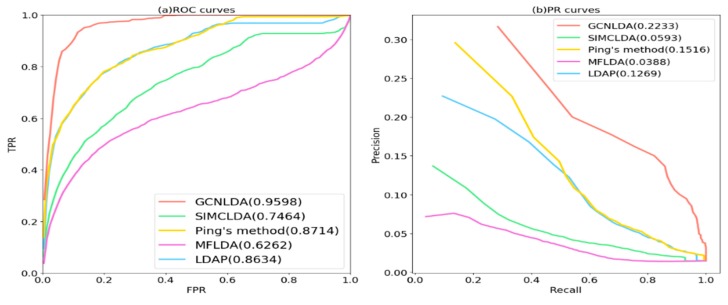

As shown in Figure 4a and Table 1, GCNLDA had the best performance for 405 diseases. The AUC of the ROC curve was 0.959. The performance of GCNLDA was superior to those of SIMCLDA, Ping’s method, MFLDA, and LDAP by 21.34%, 8.84%, 33.36%, and 9.64%, respectively. We listed the AUC of all five methods based on 10 well-characterized diseases. Each of these has > 15 known lncRNAs associated with them. GCNLDA presented with the best performance on these 10 diseases (Table 1). Ping’s method and LDAP fused the similarity of lncRNA and disease which improved the accuracy of their similarity calculations and achieved good performance. Ping’s method also exploited the topology information of the bipartite networks so its performance was slightly superior to that of LDAP. In contrast, SIMCLDA only fused multiple similarities of lncRNA. Consequently, its performance was inferior to those of the aforementioned methods. MFLDA integrates multiple data sources but ignores the similarity of lncRNAs and diseases. As a result, its performance is inferior to those of the other methods. The aforementioned methods focus mainly on lncRNA, disease similarity, and integration of multiple data sources. They make negligible use of network topology information. The advantages of GCNLDA over the other methods include deep learning to extract the local representation of lncRNA-disease node pairs and graph convolution to learn their network representation.

Figure 4.

Receiver operating characteristic (ROC) and precision-recall (PR) curves of GCNLDA and other methods for all diseases. (a) ROC curves of all the methods; (b) PR curves of all the methods.

Table 1.

Area under the ROC curves (AUCs) of GCNLDA and other methods for all the diseases and 10 well-characterized diseases.

| Disease Name | AUC | ||||

|---|---|---|---|---|---|

| GCNLDA | SIMCLDA | Ping’s Method | MFLDA | LDAP | |

| Average AUC on 405 diseases | 0.959 | 0.746 | 0.871 | 0.626 | 0.863 |

| respiratory system cancer | 0.948 | 0.789 | 0.911 | 0.719 | 0.891 |

| organ system cancer | 0.992 | 0.82 | 0.95 | 0.729 | 0.884 |

| intestinal cancer | 0.966 | 0.811 | 0.909 | 0.559 | 0.905 |

| prostate cancer | 0.944 | 0.873 | 0.826 | 0.553 | 0.71 |

| lung cancer | 0.961 | 0.79 | 0.911 | 0.676 | 0.883 |

| breast cancer | 0.963 | 0.742 | 0.871 | 0.517 | 0.83 |

| reproductive organ cancer | 0.962 | 0.707 | 0.818 | 0.74 | 0.742 |

| gastrointestinal system cancer | 0.977 | 0.784 | 0.896 | 0.582 | 0.867 |

| liver cancer | 0.978 | 0.799 | 0.91 | 0.634 | 0.898 |

| hepatocellular carcinoma | 0.983 | 0.765 | 0.903 | 0.688 | 0.902 |

The bold values indicate the higher AUCs.

As shown in Figure 4b and Table 2, GCNLDA had the best performance for 405 diseases (AUPR = 0.2233). It was 16.4% better than SIMCLDA, 7.17% better than Ping’s method, 18.45% better than MFLDA, and 9.64% better than LDAP. GCNLDA achieved the best performance for nine of the ten well-characterized diseases.

Table 2.

AUPRs of GCNLDA and other methods for all the diseases and 10 well-characterized diseases.

| Disease Name | AUPR | ||||

|---|---|---|---|---|---|

| GCNLDA | SIMCLDA | Ping’s Method | MFLDA | LDAP | |

| Average AUC on 405 diseases | 0.223 | 0.166 | 0.219 | 0.095 | 0.066 |

| respiratory system cancer | 0.465 | 0.149 | 0.414 | 0.072 | 0.303 |

| organ system cancer | 0.950 | 0.411 | 0.765 | 0.338 | 0.628 |

| intestinal cancer | 0.697 | 0.141 | 0.252 | 0.042 | 0.246 |

| prostate cancer | 0.594 | 0.176 | 0.333 | 0.095 | 0.297 |

| lung cancer | 0.600 | 0.138 | 0.334 | 0.008 | 0.094 |

| breast cancer | 0.623 | 0.445 | 0.803 | 0.476 | 0.629 |

| reproductive organ cancer | 0.625 | 0.047 | 0.403 | 0.031 | 0.396 |

| gastrointestinal system cancer | 0.812 | 0.130 | 0.271 | 0.104 | 0.238 |

| liver cancer | 0.671 | 0.201 | 0.526 | 0.086 | 0.498 |

| hepatocellular carcinoma | 0.787 | 0.096 | 0.239 | 0.082 | 0.303 |

The bold values indicate the higher AUPRs.

To verify whether the performance of our method was significantly better than those of the other methods, we conducted paired Wilcoxon tests on GCNLDA and the others. In all cases, p < 0.05 (Table 3). Relative to the other methods, then, the performance of GCNLDA in the AUPRs and AUCs was significantly better.

Table 3.

A pairwise comparison with a paired Wilcoxon-test on the prediction results.

| p-Value | SIMCLDA | Ping’s Method | MFLDA | LDAP |

|---|---|---|---|---|

| p-value of ROC curve | 1.131026 × 10−106 | 1.494908 × 10−44 | 4.534043 × 10−124 | 4.291344 × 10−50 |

| p-value of PR curve | 1.342560 × 10−89 | 2.204929 × 10−29 | 1.567472 × 10−112 | 2.844473 × 10−48 |

As shown in Figure 5, the recall rate on the top k ranked lncRNAs increases with the number of correctly identified known lncRNA-disease associations. GCNLDA consistently outperformed other methods at different k values. The average recall rates of the top 30, 60, 90, and 120 lncRNA candidates for GCNLDA were 91.5%, 97.3%, 98.5%, and 99.7%, respectively. For Ping’s method, they were 68.9%, 81.3%, 87.5%, and 92.7%, respectively. For LDAP, they were 68.5%, 81.3%, 88%, and 93.3%, respectively. For SIMCLDA, they were 49.3%, 63%, 74.1%, and 80.3%, respectively. For MFLDA, they were 42%, 53.9%, 61%, and 65.5%, respectively.

Figure 5.

Average recalls across all tested diseases under different top k cutoffs.

3.3. Case Studies on Stomach Cancer, Osteosarcoma, and Lung Cancer

To test the ability of GCNLDA to predict potential lncRNA-disease associations, we conducted a case analysis on stomach cancer, osteosarcoma, and lung cancer. We analyzed in detail the top 15 candidates for related diseases (Table 4). The top 15 candidates for all the 405 diseases were obtained through GCNLDA and are listed in Supplementary Table S2. All known lncRNA-disease associations were treated as training samples and all lncRNA-disease pairs with unknown associations were used as test samples.

Table 4.

The top 15 candidate lncrnas for stomach cancer, osteosarcoma and lung cancer.

| Disease Name | Rank | lncRNA | Evidence | Rank | lncRNA | Evidence |

|---|---|---|---|---|---|---|

| Stomach cancer | 1 | MALAT1 | Lnc2Cancer, LncRNADisease | 9 | HULC | Lnc2Cancer, LncRNADisease |

| 2 | NEAT1 | Lnc2Cancer, LncRNADisease | 10 | CCAT2 | Lnc2Cancer, LncRNADisease | |

| 3 | MIR17HG | Literature [47] | 11 | KCNQ1OT1 | Lnc2Cancer | |

| 4 | HOTTIP | Lnc2Cancer, LncRNADisease | 12 | BCYRN1 | LncRNADisease* | |

| 5 | TUG1 | Lnc2Cancer, LncRNADisease | 13 | CASC2 | Lnc2Cancer, LncRNADisease | |

| 6 | HNF1A-AS1 | Lnc2Cancer, LncRNADisease | 14 | PANDAR | Lnc2Cancer, LncRNADisease | |

| 7 | XIST | Lnc2Cancer, LncRNADisease | 15 | PCAT1 | LncRNADisease* | |

| 8 | AFAP1-AS1 | Lnc2Cancer | ||||

| Osteosarcoma | 1 | H19 | Lnc2Cancer, LncRNADisease | 9 | LINC00675 | LncRNADisease* |

| 2 | GAS5 | Lnc2Cancer | 10 | BCYRN1 | LncRNADisease* | |

| 3 | PVT1 | Lnc2Cancer | 11 | CCAT2 | Lnc2Cancer | |

| 4 | NEAT1 | Lnc2Cancer | 12 | CASC2 | Lnc2Cancer | |

| 5 | EWSAT1 | Lnc2Cancer | 13 | CCAT1 | Lnc2Cancer | |

| 6 | AFAP1-AS1 | Literature [48] | 14 | TP73-AS1 | Lnc2Cancer | |

| 7 | CDKN2B-AS1 | LncRNADisease | 15 | PCA3 | LncRNADisease* | |

| 8 | SPRY4-IT1 | Lnc2Cancer | ||||

| Lung cancer | 1 | KCNQ1OT1 | Lnc2Cancer | 9 | IGF2-AS | Lnc2Cancer |

| 2 | HOTTIP | Lnc2Cancer, LncRNADisease | 10 | PCAT1 | LncRNADisease | |

| 3 | SPRY4-IT1 | Lnc2Cancer, LncRNADisease | 11 | CASC2 | Lnc2Cancer, LncRNADisease | |

| 4 | TP73-AS1 | Lnc2Cancer | 12 | ESRG | LncRNADisease* | |

| 5 | MIAT | Lnc2Cancer | 13 | PCA3 | LncRNADisease* | |

| 6 | MIR155HG | Literature [49] | 14 | SNHG12 | Lnc2Cancer | |

| 7 | LINC00675 | LncRNADisease* | 15 | TUSC7 | Lnc2Cancer | |

| 8 | SOX2-OT | LncRNADisease |

“Lnc2Cancer” means the lncRNA candidate was included in the Lnc2Cancer database. “LncRNADisease” means the candidate was included among the experimentally verified data in LncRNADisease. “LncRNADisease*” means the candidate was included among the predicted data in LncRNADisease. “Literature” means the candidate was supported in published studies.

Lnc2Cancer is an experimentally corroborated database consisting of 4986 lncRNA-disease associations. It includes 1614 human lncRNAs and 165 human cancers. The database LncRNADisease contains lncRNA-disease associations verified by experimentation and predicted by state-of-the-art methods. Twelve of the 15 lncRNA candidates related to stomach cancer were included in the Lnc2Cancer database and 10 of them were included among the experimentally verified data in LncRNADisease. The databases confirmed whether the lncRNAs were associated with stomach cancer. If the disease-related lncRNA candidate was labelled as “Literature”, then it was supported in published studies. As shown in Table 4, candidate MIR17HG (alias mir-17-92) was labelled as “Literature” and proved to be dysregulated in stomach cancer [47].

Among the top 15 lncRNA candidates of osteosarcoma listed in Table 4, ten were included in the Lnc2Cancer database whilst two were queried in LncRNADisease with experimental support. They were confirmed to have definite associations with osteosarcoma. Recently published studies showed that AFAP1-AS1 enhances cell proliferation and invasion in osteosarcoma by regulating miR-4695-5p/TCF4-β-catenin signaling [48]. Nine of the top 15 lncRNA candidates of lung cancer were in Lnc2Cancer and eight appeared in LncRNADisease. Recent reports confirmed that lncRNA MIR155HG promotes lung cancer cell proliferation, migration, and invasion [49].

The remaining eight lncRNA candidates labelled “LncRNADisease*” were included in the predicted lncRNA-disease associations in the LncRNADisease database. These predictions reveal that GCNLDA effectively discovers potential lncRNA-disease associations.

4. Conclusions

GCNLDA predicts potential lncRNA-disease associations and it is based on graph convolutional network and convolutional neural networks. Attention mechanism was constructed at the node feature level to distinguish the various contributions of the node features. The graph convolution autoencoder with an attention mechanism deeply integrates the topological information of lncRNA-disease-miRNA heterogeneous networks. The convolutional neural network module captures various connection relationships related to lncRNA-disease on the node pair embedding. The network and local representations of lncRNA-disease node pairs were learned by the new framework based on graph convolutional network and convolutional neural networks. Cross-validation confirmed that GCNLDA is superior to other state-of-the-art methods in terms of both AUC and AUPR. Case studies on three diseases substantiated the ability of GCNLDA to predict potential disease-associated lncRNAs. GCNLDA may serve as an effective tool to screen reliable candidates for lncRNA-disease association validation with-lab experiment.

Acknowledgments

We would like to thank Editage (www.editage.com) for English language editing.

Supplementary Materials

The following are available online at https://www.mdpi.com/2073-4409/8/9/1012/s1, Table S1: AUC and AUPR of GCNLDA in each cross-validation. Table S2: The top 15 potential lncRNA candidates for 405 diseases.

Author Contributions

P.X. and S.P. conceived the prediction method, and they wrote the paper. Y.L. and S.P. developed the computer programs. T.Z. and H.S. analyzed the results and revised the paper.

Funding

The work was supported by the Natural Science Foundation of China (61972135), the Natural Science Foundation of Heilongjiang Province (LH2019F049, LH2019A029), the China Postdoctoral Science Foundation (2019M650069), the Heilongjiang Postdoctoral Scientific Research Staring Foundation (BHL-Q18104), the Fundamental Research Foundation of Universities in Heilongjiang Province for Technology Innovation (KJCX201805), the Innovation Talents Project of Harbin Science and Technology Bureau (2017RAQXJ094), and the Fundamental Research Foundation of Universities in Heilongjiang Province for Youth Innovation Team (RCYJTD201805).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- 1.Taft R.J., Pang K.C., Mercer T.R., Dinger M.E., Mattick J.S. Non-coding RNAs: Regulators of disease. J. Pathol. 2010;220:126–139. doi: 10.1002/path.2638. [DOI] [PubMed] [Google Scholar]

- 2.Chen X., Yan C.C., Zhang X., You Z.H. Long non-coding RNAs and complex diseases: From experimental results to computational models. Briefings Bioinform. 2017;18:558. doi: 10.1093/bib/bbw060. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Harrow J., Frankish A., Gonzalez J.M., Tapanari E., Diekhans M., Kokocinski F., Aken B.L., Barrell D., Zadissa A., Searle S. GENCODE: The reference human genome annotation for the ENCODE project. Genome Res. 2012;22:1760–1774. doi: 10.1101/gr.135350.111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Marcia G., Danielle M., Buddy S.H., Dorssers L.C.J., Ton V.A. Characterization of BCAR4, a novel oncogene causing endocrine resistance in human breast cancer cells. J. Cell. Physiol. 2011;226:1741–1749. doi: 10.1002/jcp.22503. [DOI] [PubMed] [Google Scholar]

- 5.Hrdlickova B., Almeida R.C.D., Borek Z., Withoff S. Genetic variation in the non-coding genome: Involvement of micro-RNAs and long non-coding RNAs in disease. BBA Mol. Basis Dis. 2014;1842:1910–1922. doi: 10.1016/j.bbadis.2014.03.011. [DOI] [PubMed] [Google Scholar]

- 6.Ada C., Kei K., Ryousuke O., Osamu Y., Keishi M., Eiichiro Y., Tatsuo K., Hiroshi K., Hiroko Y., Yasushi T. Genetic variants at the 9p21 locus contribute to atherosclerosis through modulation of ANRIL and CDKN2A/B. Atherosclerosis. 2012;220:449–455. doi: 10.1016/j.atherosclerosis.2011.11.017. [DOI] [PubMed] [Google Scholar]

- 7.Johnson R. Long non-coding RNAs in Huntington’s disease neurodegeneration. Neurobiol. Dis. 2012;46:245–254. doi: 10.1016/j.nbd.2011.12.006. [DOI] [PubMed] [Google Scholar]

- 8.Mamoshina P., Vieira A., Putin E., Zhavoronkov A. Applications of deep learning in biomedicine. Mol. Pharm. 2016;13:1445–1454. doi: 10.1021/acs.molpharmaceut.5b00982. [DOI] [PubMed] [Google Scholar]

- 9.Zhang T., Wang M., Xi J., Ao L. LPGNMF: Predicting long non-coding RNA and protein interaction using graph regularized nonnegative matrix factorization. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018 doi: 10.1109/TCBB.2018.2861009. [DOI] [PubMed] [Google Scholar]

- 10.Piro R.M., Marsico A. network-based methods and other approaches for predicting lncRNA functions and disease associations. In: Lai X., Gupta S.K., Vera J., editors. Computational Biology of Non-Coding RNA: Methods and Protocols. Springer; New York, NY, USA: 2019. pp. 301–321. [DOI] [PubMed] [Google Scholar]

- 11.Fu L., Peng Q. A deep ensemble model to predict miRNA-disease association. Sci. Rep. 2017;7:14482. doi: 10.1038/s41598-017-15235-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Bressin A., Schulte-Sasse R., Figini D., Urdaneta E.C., Beckmann B.M., Marsico A. TriPepSVM: De novo prediction of RNA-binding proteins based on short amino acid motifs. Nucleic Acids Res. 2019;47:4406–4417. doi: 10.1093/nar/gkz203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Heller D., Krestel R., Ohler U., Vingron M., Marsico A. ssHMM: Extracting intuitive sequence-structure motifs from high-throughput RNA-binding protein data. Nucleic Acids Res. 2017;45:11004. doi: 10.1093/nar/gkx756. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Budach S., Marsico A. pysster: Classification of biological sequences by learning sequence and structure motifs with convolutional neural networks. Bioinformatics. 2018;34:3035–3037. doi: 10.1093/bioinformatics/bty222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Krakau S., Richard H., Marsico A. PureCLIP: Capturing target-specific protein–RNA interaction footprints from single-nucleotide CLIP-seq data. Genome Biol. 2017;18:240. doi: 10.1186/s13059-017-1364-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Chen G., Wang Z., Wang D., Qiu C., Liu M., Chen X., Zhang Q., Yan G., Cui Q.J.N.A.R. LncRNADisease: A database for long-non-coding RNA-associated diseases. Nucleic Acids Res. 2012;41:983–986. doi: 10.1093/nar/gks1099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Li J.W., Gao C., Wang Y.C., Ma W., Tu J., Wang J.P., Chen Z.Z., Kong W., Cui Q.H. A bioinformatics method for predicting long noncoding RNAs associated with vascular disease. Sci. China Life Sci. 2014;57:852–857. doi: 10.1007/s11427-014-4692-4. [DOI] [PubMed] [Google Scholar]

- 18.Ming-Xi L., Xing C., Geng C., Qing-Hua C., Gui-Ying Y. A computational framework to infer human disease-associated long noncoding RNAs. PLoS ONE. 2014;9:e84408. doi: 10.1371/journal.pone.0084408. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Biswas A.K., Zhang B., Wu X., Gao J.X. A multi-label classification framework to predict disease associations of long non-coding RNAs (lncRNAs); Proceedings of the Third International Conference on Communications, Signal Processing, and Systems; Hohot, China. 14–15 July 2014; Basel, Switzerland: Springer; 2015. pp. 821–830. [Google Scholar]

- 20.Chen X., Yan G.-Y. Novel human lncRNA-disease association inference based on lncRNA expression profiles. Bioinformatics. 2013;29:2617–2624. doi: 10.1093/bioinformatics/btt426. [DOI] [PubMed] [Google Scholar]

- 21.Chen X., Yan C.C., Luo C., Ji W., Zhang Y., Dai Q. Constructing lncRNA functional similarity network based on lncRNA-disease associations and disease semantic similarity. Sci. Rep. 2015;5:11338. doi: 10.1038/srep11338. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Xing C., Yuan H., Wang X.S., You Z.H., Chan K.C.C. FMLNCSIM: fuzzy measure-based lncRNA functional similarity calculation model. Oncotarget. 2016;7:45948–45958. doi: 10.18632/oncotarget.10008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Huang Y.A., Chen X., You Z.H., Huang D.S., Chan K.C.C. ILNCSIM: improved lncRNA functional similarity calculation model. Oncotarget. 2016;7:25902–25914. doi: 10.18632/oncotarget.8296. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Xiaofei Y., Lin G., Xingli G., Xinghua S., Hao W., Fei S., Bingbo W. A network based method for analysis of lncRNA-disease associations and prediction of lncRNAs implicated in diseases. PLoS ONE. 2014;9:e87797. doi: 10.1371/journal.pone.0087797. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Ping P., Wang L., Kuang L., Ye S., Mfb I., Pei T. A novel method for lncRNA-disease association prediction based on an lncRNA-disease association network. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018;16:688–693. doi: 10.1109/TCBB.2018.2827373. [DOI] [PubMed] [Google Scholar]

- 26.Jie S., Hongbo S., Zhenzhen W., Changjian Z., Lin L., Letian W., Weiwei H., Dapeng H., Shulin L., Meng Z. Inferring novel lncRNA-disease associations based on a random walk model of a lncRNA functional similarity network. Mol. Biosyst. 2014;10:2074–2081. doi: 10.1039/c3mb70608g. [DOI] [PubMed] [Google Scholar]

- 27.Chen X., You Z.H., Yan G.Y., Gong D.W. IRWRLDA: Improved random walk with restart for lncRNA-disease association prediction. Oncotarget. 2016;7:57919–57931. doi: 10.18632/oncotarget.11141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Gu C., Liao B., Li X., Cai L., Li Z., Li K., Yang J. Global network random walk for predicting potential human lncRNA-disease associations. Sci. Rep. 2017;7:12442. doi: 10.1038/s41598-017-12763-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Yu G., Fu G., Chang L., Ren Y., Wang J. BRWLDA: Bi-random walks for predicting lncRNA-disease associations. Oncotarget. 2017;8:60429–60446. doi: 10.18632/oncotarget.19588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Yao Q., Wu L., Li J., Yang L.G., Sun Y., Li Z., He S., Feng F., Li H., Li Y. Global prioritizing disease candidate lncRNAs via a multi-level composite network. Sci. Rep. 2017;7:39516. doi: 10.1038/srep39516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Pooya Z., Ben J., Raf V., Yves M. Protein fold recognition using geometric kernel data fusion. Bioinformatics. 2014;30:1850–1857. doi: 10.1093/bioinformatics/btu118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Lan W., Li M., Zhao K., Liu J., Wu F.X., Pan Y., Wang J. LDAP: A web server for lncRNA-disease association prediction. Bioinformatics. 2017;33:458–460. doi: 10.1093/bioinformatics/btw639. [DOI] [PubMed] [Google Scholar]

- 33.Fu G., Wang J., Domeniconi C., Yu G. Matrix factorization based data fusion for the prediction of lncRNA-disease associations. Bioinformatics. 2017;34:1529–1537. doi: 10.1093/bioinformatics/btx794. [DOI] [PubMed] [Google Scholar]

- 34.Lu C., Yang M., Luo F., Wu F.X., Li M., Pan Y., Li Y., Wang J. Prediction of lncRNA-disease associations based on inductive matrix completion. Bioinformatics. 2018;34:3357–3364. doi: 10.1093/bioinformatics/bty327. [DOI] [PubMed] [Google Scholar]

- 35.Ning S., Zhang J., Wang P., Zhi H., Wang J., Liu Y., Gao Y., Guo M., Yue M., Wang L. Lnc2Cancer: A manually curated database of experimentally supported lncRNAs associated with various human cancers. Nucleic Acids Res. 2016;44:980–985. doi: 10.1093/nar/gkv1094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Lu Z., Cohen K.B., Hunter L. GeneRIF quality assurance as summary revision; Proceedings of the Pacific Symposium on Biocomputing; Maui, HI, USA. 3–7 January 2007; Bethesda, MD, USA: National Institutes of Health; 2007. pp. 269–280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Li J., Liu S., Zhou H., Qu L., Yang J. starBase v2.0: Decoding miRNA-ceRNA, miRNA-ncRNA and protein–RNA interaction networks from large-scale CLIP-Seq data. Nucleic Acids Res. 2014;42:92–97. doi: 10.1093/nar/gkt1248. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Li Y., Qiu C., Tu J., Geng B., Yang J., Jiang T., Cui Q. HMDD v2.0: A database for experimentally supported human microRNA and disease associations. Nucleic Acids Res. 2014;42:1070–1074. doi: 10.1093/nar/gkt1023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Cheng L., Hu Y., Sun J., Zhou M., Jiang Q. DincRNA: A comprehensive web-based bioinformatics toolkit for exploring disease associations and ncRNA function. Bioinformatics. 2018;34:1953–1956. doi: 10.1093/bioinformatics/bty002. [DOI] [PubMed] [Google Scholar]

- 40.Wang D., Wang J., Lu M., Song F., Cui Q. Inferring the human microRNA functional similarity and functional network based on microRNA-associated diseases. Bioinformatics. 2010;26:1644–1650. doi: 10.1093/bioinformatics/btq241. [DOI] [PubMed] [Google Scholar]

- 41.Kipf T.N., Welling M. Semi-supervised classification with graph convolutional networks; Proceedings of the ICLR 2017; Toulon, France. 24–26 April 2017. [Google Scholar]

- 42.Zitnik M., Agrawal M., Leskovec J. Modeling polypharmacy side effects with graph convolutional networks. Intell. Syst. Mol. Biol. 2018;34:258814. doi: 10.1093/bioinformatics/bty294. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Pan S., Hu R., Fung S., Long G., Jiang J., Zhang C. Learning Graph Embedding with Adversarial Training Methods. [(accessed on 16 June 2019)]; doi: 10.1109/TCYB.2019.2932096. Available online: https://arxiv.org/abs/1901.01250. [DOI] [PubMed]

- 44.Den Berg R.V., Kipf T.N., Welling M. Graph convolutional matrix completion; Proceedings of the KDD’18 Deep Learning Day; London, UK. 20 August 2018. [Google Scholar]

- 45.Torng W., Altman R.B. Graph convolutional neural networks for predicting drug-target interactions. bioRxiv. 2018:473074. doi: 10.1101/473074. [DOI] [PubMed] [Google Scholar]

- 46.Hinton G.E., Srivastava N., Krizhevsky A., Sutskever I., Salakhutdinov R.R. Improving Neural Networks by Preventing Co-Adaptation of Feature Detectors. [(accessed on 16 June 2019)]; Available online: https://arxiv.org/abs/1207.0580v1.

- 47.Bahari F., Emadibaygi M., Nikpour P. miR-17-92 host gene, uderexpressed in gastric cancer and its expression was negatively correlated with the metastasis. Indian J. Cancer. 2015;52:22–25. doi: 10.4103/0019-509X.175605. [DOI] [PubMed] [Google Scholar]

- 48.Li R., Liu S., Li Y., Tang Q., Xie Y., Zhai R. Long noncoding RNA AFAP1-AS1 enhances cell proliferation and invasion in osteosarcoma through regulating miR-4695-5p/TCF4-β-catenin signaling. Mol. Med. Rep. 2018;18:1616–1622. doi: 10.3892/mmr.2018.9131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Sun B., Yang N. Long non-coding RNA MIR155HG promotes proliferation, migration and invasion of A549 human lung cancer cells. J. Chongqing Med. Univ. 2017 In Chinese. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.