Abstract

Single-cell RNA-sequencing technologies suffer from many sources of technical noise, including under-sampling of mRNA molecules, often termed ‘dropout’, which can severely obscure important gene-gene relationships. To address this, we developed MAGIC (Markov Affinity-based Graph Imputation of Cells), a method that shares information across similar cells, via data diffusion, to denoise the cell count matrix and fill in missing transcripts. We validate MAGIC on several biological systems and find it effective at recovering gene-gene relationships and additional structures. MAGIC reveals a phenotypic continuum, with the majority of cells residing in intermediate states that display stem-like signatures, and uncovers known and previously uncharacterized regulatory interactions, demonstrating that our approach can successfully uncover regulatory relations without perturbations.

One Sentence Summary: Graph diffusion-based imputation method recovers missing transcripts in scRNA-seq data, yielding insight into the epithelial-to-mesenchymal transition.

In brief - A new algorithm overcomes limitations of data loss in single cell sequencing experiments

Abstract highlights:

1. MAGIC restores noisy and sparse single-cell data using diffusion geometry.

2. Corrected data is amenable to myriad downstream analyses.

3. MAGIC enables archetypal analysis and inference of gene interactions.

4. Transcription factor targets can be predicted without perturbation after MAGIC.

INTRODUCTION

Single cell RNA-sequencing (scRNA-seq) is fast becoming one of the most widely used technologies in biomedical investigation. However, a vexing challenge in single cell genomics is that the observed expression counts capture a small random sample (typically 5–15%) of the transcriptome of each cell (Grun et al., 2014; Stegle et al., 2015). In the case of lowly expressed genes, this can lead to lack of detection of an expressed gene, a phenomenon called “dropout”. This impacts the signal for every gene, leading to loss of gene-gene relationships in the data, obscuring all but the strongest relationships. To overcome this sparsity, most methods aggregate cells, collapsing thousands of cells into a small number of clusters. Alternatively, other methods aggregate genes (e.g. PCA), creating “meta-genes”. While these approaches cope with sparsity to some extent, they lose single-cell or single-gene resolution.

To address these issues, we develop MAGIC (Markov Affinity-based Graph Imputation of Cells), a computational approach for recovering missing gene expression in single cell data. MAGIC leverages the large sample sizes in scRNA-seq (many thousands of cells) to share information across similar cells via data diffusion. MAGIC imputes likely gene expression in each cell, revealing the underlying biological structure. MAGIC uses signal-processing principles similar to those used to clarify blurry and grainy images. We validate MAGIC on several biological systems and find it effective at recovering gene-gene relationships and additional structures.

RESULTS

The MAGIC algorithm

MAGIC relies on structure in the data; possible cell states are constrained by regulatory mechanisms creating interdependencies between genes (Amir el et al., 2013). While data is observed in a high dimensional measurement space, cell phenotypes can be approximately embedded in a substantially lower dimensional manifold. This manifold can be represented using a nearest neighbor graph, where each node represents a cell, and edges connect most similar cells, based upon gene expression. Nearest neighbor graphs have been used to faithfully recover subpopulations (Levine et al., 2015; Shekhar et al., 2016) and developmental trajectories (Bendall et al., 2014; Haghverdi et al., 2015; Haghverdi et al., 2016; Setty et al., 2016). However, MAGIC uses a diffusion operator (Coifman and Lafon, 2006a) to learn the underlying manifold and map cellular phenotypes to this manifold, restoring missing transcripts in the process.

MAGIC takes an observed count matrix and recovers an imputed count matrix representing the likely expression for each individual cell, based on data diffusion between similar cells. For a given cell, MAGIC first identifies the cells that are most similar and aggregates gene expression across these highly similar cells to impute gene expression that corrects for dropout and other sources of noise. However, due to data sparsity, nearest neighbors in the raw data do not necessarily represent the most biologically similar cells. Therefore, we use data diffusion to construct a weighted affinity matrix representing a more faithful neighborhood of similar cells, and then use this matrix to restore the data. With a sufficient number of cells, this process (illustrated in Figure 1) increases weights on cells that share similarity across a majority of biological processes.

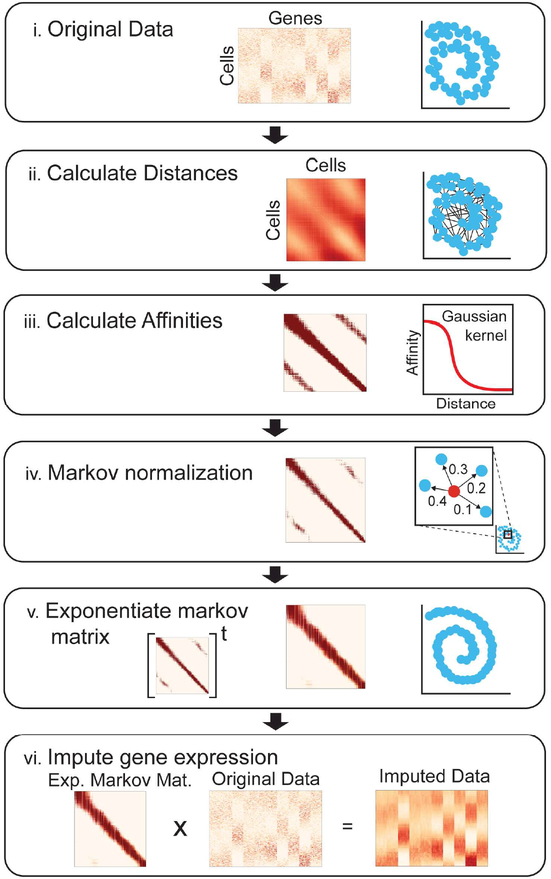

Fig 1: Steps of the MAGIC algorithm:

(i) The input data consists of a matrix of cells by genes (middle) of the data (right). (ii) We compute a cell by cell distance matrix. (iii) The distance matrix is converted to an affinity matrix (middle) using a Gaussian kernel. A graphical depiction of the kernel function is shown (right). (iv) The affinities are normalized, resulting in a Markov matrix (middle). The normalized affinities are shown for a single point as transition probabilities (right). (v) To perform diffusion we exponentiate the Markov matrix to a chosen power t. (vi) We matrix multiply the exponentiated Markov matrix (left) with the original data matrix (middle) to obtain a denoised and imputed data matrix (right). See also Figure S1.

Constructing the affinity matrix proceeds as follows: first, PCA is used as a preprocessing step, similar to other graph-based approaches (Haghverdi et al., 2016; Setty et al., 2016; Shekhar et al., 2016). MAGIC uses an adaptive (width) Gaussian kernel to convert distances into affinities, so that similarity between two cells decreases exponentially with their distance. The adaptive kernel serves to equalize the effective number of neighbors for each cell, which helps recover finer structure in the data, whereas the non-adaptive kernel collapses the data into the densest regions (Figure S1A, B). From the affinity matrix we create a Markov transition matrix, M, representing the probability distribution of transitioning from one cell to another in a single step.
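The construction above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `markov_matrix`, the choice of each cell's kernel width as the distance to its `ka`-th nearest neighbor, the squared-distance exponent, and the symmetrization by adding the transpose are all assumptions made for the example.

```python
import numpy as np

def markov_matrix(data, n_pca=20, ka=4):
    """Cells-by-genes matrix -> row-stochastic cell-to-cell transition matrix."""
    # PCA via SVD of the centered data; keep the leading components.
    centered = data - data.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    n_pca = min(n_pca, s.size)
    pcs = u[:, :n_pca] * s[:n_pca]

    # Pairwise Euclidean distances between cells in PCA space.
    sq = (pcs ** 2).sum(axis=1)
    dist = np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2 * pcs @ pcs.T, 0.0))
    np.fill_diagonal(dist, 0.0)

    # Adaptive width (assumption): sigma_i is the distance from cell i to its
    # ka-th nearest neighbor; column 0 of each sorted row is the cell itself.
    sigma = np.maximum(np.sort(dist, axis=1)[:, ka], 1e-12)
    affinity = np.exp(-(dist / sigma[:, None]) ** 2)

    # Symmetrize (assumption), then row-normalize so each row sums to 1.
    affinity = affinity + affinity.T
    return affinity / affinity.sum(axis=1, keepdims=True)

# Usage on synthetic counts (40 cells, 25 genes).
rng = np.random.default_rng(0)
counts = rng.poisson(2.0, size=(40, 25)).astype(float)
M = markov_matrix(counts, n_pca=10, ka=4)
```

Because the width is set per cell, a cell in a sparse region gets a wider kernel than a cell in a dense region, which is one way to equalize the effective number of neighbors.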

Technical noise makes it difficult to distinguish similarity due to biological correspondence from similarity due to spurious chance. Mimicking scRNA-seq, if we randomly subsample a fraction of the transcripts, the expression observed across identical cells can appear dissimilar. However, these cells likely share many neighbors, whereas spurious edges connect cells that share few neighbors. Raising M to the power t results in a matrix where each entry represents the probability that a random walk of length t starting at cell i will reach cell j (Figure 1v), a process akin to diffusion. While the exponentiated Markov affinity matrix increases the number of cell neighbors, unlike the effect of increasing k in knn-imputation, MAGIC does not bluntly smooth and average over increasingly distant cells. Instead, exponentiation refines cell affinities, increasing the weight of similarity along axes that follow data density; thus, phenotypically similar cells have strongly weighted affinities, whereas spurious neighbors are down-weighted.
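A toy example may make the random-walk reading of the matrix power concrete. The four-cell chain below is hypothetical (the transition values are invented for illustration); it only shows that entry (i, j) of the powered matrix accumulates the probability of all t-step paths from cell i to cell j.

```python
import numpy as np

# Hypothetical 4-cell chain: each cell stays put or steps to an adjacent cell.
M = np.array([
    [0.6, 0.4, 0.0, 0.0],
    [0.2, 0.6, 0.2, 0.0],
    [0.0, 0.2, 0.6, 0.2],
    [0.0, 0.0, 0.4, 0.6],
])

t = 3
M_t = np.linalg.matrix_power(M, t)

# Cells 0 and 3 are not direct neighbors (M[0, 3] == 0), but a 3-step walk can
# connect them through the single path 0 -> 1 -> 2 -> 3: 0.4 * 0.2 * 0.2 = 0.016.
print(M_t[0, 3])

# Rows of M_t are still probability distributions over destination cells.
print(M_t.sum(axis=1))
```

Note that the distant cell receives a small weight (0.016) while nearby cells keep most of the probability mass, which is the sense in which diffusion differs from uniformly averaging over a larger neighborhood.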

In the imputation step, MAGIC learns from cells in each neighborhood by multiplying the exponentiated transition matrix by the original data matrix (Figure 1vi), effectively restoring cells to the underlying manifold. In this data diffusion process, cells share information through local neighbors in a process that is mathematically akin to diffusing heat through the data, where raising the diffusion operator to the t-th power is akin to a t-step random walk through the data. Exponentiation is essentially a low-pass filter on the eigenvalues, which serves to eliminate noise dimensions with small eigenvalues, while simultaneously learning the manifold structure. While we use PCA to gain more robustness for computing the affinity matrix, the imputation is performed using the count matrix before PCA. Thus, while we average data across cells, each individual cell retains a unique neighborhood, resulting in a unique expression vector.
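The imputation step and the low-pass-filter interpretation can both be checked on a small example. The transition matrix and the count matrix below are hypothetical values chosen for illustration; the variable names are not taken from the MAGIC software.

```python
import numpy as np

# Hypothetical transition matrix for four cells arranged in a chain.
M = np.array([
    [0.6, 0.4, 0.0, 0.0],
    [0.2, 0.6, 0.2, 0.0],
    [0.0, 0.2, 0.6, 0.2],
    [0.0, 0.0, 0.4, 0.6],
])

# Cells-by-genes counts; cell 1 has a dropout zero for gene 0.
D = np.array([
    [4.0, 0.0],
    [0.0, 1.0],
    [5.0, 2.0],
    [6.0, 3.0],
])

t = 3
D_imputed = np.linalg.matrix_power(M, t) @ D

# The eigenvalues of M**t are those of M raised to the power t, so components
# with small eigenvalues are damped far more strongly than the dominant ones.
eigenvalues = np.sort(np.linalg.eigvals(M).real)
print(eigenvalues)       # approximately [0.2, 0.4, 0.8, 1.0]
print(eigenvalues ** t)  # approximately [0.008, 0.064, 0.512, 1.0]
```

Each imputed value is a weighted average of the same gene across cells, with weights given by one row of the powered matrix, so the dropout zero in cell 1 is replaced by a positive value borrowed from its neighbors.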

To select an optimal t, we consider the impact of t on the final imputed data. We evaluate the degree of change between the imputed data at time t and time t-1 and stop after this value stabilizes. As t increases, we observe two regimes (Figure S1C,D), a rapidly changing imputation regime and, after convergence, a smoothing regime. In the imputation regime, the first few steps of diffusion learn the manifold structure and remove the noise dimensions. As t increases we rapidly capture relations between cells that are biologically very similar, and only appeared different due to collection artifacts. At larger values of t, the structure of the data has already been recovered and diffusing further would smooth out trends that likely represent real biology. The knee-point (Figure S1C) determines an optimal t. A synthetic dataset demonstrates that the best correspondence between the ground truth and imputed data is achieved at the defined optimal t (Figure S1D). See STAR for more details.
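One simple way to operationalize "stop once the change between t-1 and t stabilizes" is sketched below. The relative Frobenius-norm change and the tolerance `tol` are assumptions made for this example; the exact criterion used by the authors is described in the STAR methods and may differ.

```python
import numpy as np

def choose_t(markov, data, t_max=20, tol=0.05):
    """Return the first t whose imputed data differs from t-1 by less than tol."""
    current = markov @ data  # imputed data at t = 1
    change = np.inf
    for t in range(2, t_max + 1):
        nxt = markov @ current  # imputed data at time t
        # Relative change between consecutive diffusion times (assumed metric).
        change = np.linalg.norm(nxt - current) / np.linalg.norm(current)
        if change < tol:
            return t, change
        current = nxt
    return t_max, change

# Usage with a hypothetical 4-cell transition matrix and count matrix.
M = np.array([
    [0.6, 0.4, 0.0, 0.0],
    [0.2, 0.6, 0.2, 0.0],
    [0.0, 0.2, 0.6, 0.2],
    [0.0, 0.0, 0.4, 0.6],
])
D = np.array([[4.0, 0.0], [0.0, 1.0], [5.0, 2.0], [6.0, 3.0]])
t_opt, last_change = choose_t(M, D)
```

Diffusing one step at a time avoids recomputing a full matrix power for every candidate t.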

MAGIC Enhances Structures in Bone Marrow

We first evaluated MAGIC on a mouse bone marrow dataset (Paul et al., 2015), collected with MARS-seq2 (Jaitin et al., 2014). The data matrix is sparse and cells are missing many canonical genes in their respective cell types (Figure 2A,B). At the transcript level, canonical surface markers typically used to identify immune subsets are lowly expressed and hence detected at low levels. For example, in the monocyte clusters C14 and C15, only 1.6% of cells express CD14 and 5.8% of cells express CD11b, and only 10% of the dendritic cells (cluster C11) express CD32. After MAGIC (npca=100, ka=4, t=7), 94% of monocytes express CD14, 98% express CD11b and 97% of dendritic cells express CD32 at significant levels (Figure S2A).

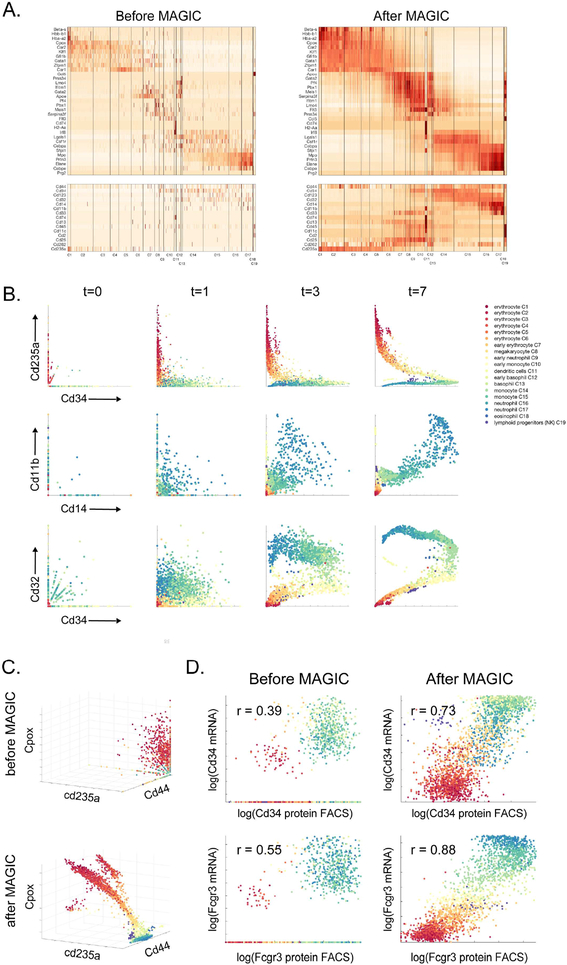

Fig 2: MAGIC applied to mouse myeloid progenitor data:

Mouse bone marrow dataset (Paul et al., 2015). A) Gene expression matrix for hematopoietic genes (top) and characteristic surface markers of immune subsets (bottom) before and after MAGIC. See also Figure S2A. B) Scatter plots of several gene-gene relationships after different amounts of diffusion. In these scatter plots, each dot represents a single cell, plotted according to its expression values (measured at t=0 and imputed for t=1,3,7), and colored based on the clusters identified in (Paul et al., 2015). C) A 3D gene-gene relationship before and after MAGIC (with diffusion time t=7). D) FACS measurements of CD34 and FCGR3 protein levels versus transcript levels, before and after MAGIC. Both FACS measurements and mRNA levels are log-scaled as per FACS conventions.

The sparsity of the data is more evident when viewing the data with biaxial plots (Fig 2B, t=0). It is rare for both genes to be observed simultaneously in any given cell, obscuring relationships between genes. MAGIC restores missing values and relationships, recreating the biaxial plots typically seen in flow cytometry. Figure 2B shows established relationships during hematopoiesis that are undetectable in the raw data. By superimposing the reported clusters onto the biaxial plots we see that cells are grouped by cluster and gene-gene relations gradually change between clusters as the cells mature and differentiate. Also demonstrated are the effects of the diffusion process: a clear and well-formed structure emerges as t (the power to which the matrix is raised) grows. Figure 2C demonstrates gene-gene relationships in 3 dimensions. Little structure is visible in the raw data, yet after MAGIC we observe the emergence of a continuous developmental trajectory.

To provide further validation, we utilize the index sorting available with MARS-seq2 (Paul et al., 2015), providing FACS-based measurements for CD34 and FCGR3. While the data has poor correlation between protein and original mRNA, after MAGIC, this correlation substantially increases for both proteins: FCGR3 from 0.55 to 0.88 and CD34 from 0.39 to 0.73 (Figure 2D). We note that a comparison between protein and transcriptomic data found a correlation of up to 0.6 between mRNA and protein in bulk data (Greenbaum et al., 2003).

MAGIC Retains and Enhances Cluster Structure in Neuronal Data

We next evaluated MAGIC on two datasets measuring neuronal cells (Shekhar et al., 2016; Zeisel et al., 2015) known to have a high degree of functional specificity. Therefore, end-state differentiated neural cells are expected to have well-separated cluster structure.

We analyzed a mouse retina dataset collected with Drop-seq (Shekhar et al., 2016). Following (Shekhar et al., 2016), we clustered the cells (using the original data) with Phenograph (Levine et al., 2015) (k=30). To verify that MAGIC preserves cluster structure, we ran MAGIC (npca=100, ka=10, t=6), re-clustered the post-MAGIC data and computed the Rand index (a measure of similarity between clustering solutions (Rand, 1971)) between the pre-MAGIC and post-MAGIC clusters, resulting in a Rand index of 0.93.
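For readers unfamiliar with the Rand index, the sketch below computes it directly from its definition: the fraction of cell pairs that the two clusterings treat consistently (together in both, or apart in both). The function name and the toy labels are illustrative only; the quadratic loop over pairs is meant for clarity rather than for datasets with many thousands of cells.

```python
from itertools import combinations

def rand_index(labels_a, labels_b):
    """Fraction of item pairs on which two clusterings agree (Rand, 1971)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both clusterings must label the same items")
    agree = 0
    total = 0
    for i, j in combinations(range(len(labels_a)), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        agree += same_a == same_b  # pair is grouped consistently in both
        total += 1
    return agree / total

# Toy example: the second clustering splits one cluster of the first.
before = [0, 0, 1, 1]
after = [0, 0, 1, 2]
score = rand_index(before, after)  # 5 of the 6 pairs agree
```

The index depends only on which cells are grouped together, so it is unaffected by how cluster labels are numbered before and after imputation.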

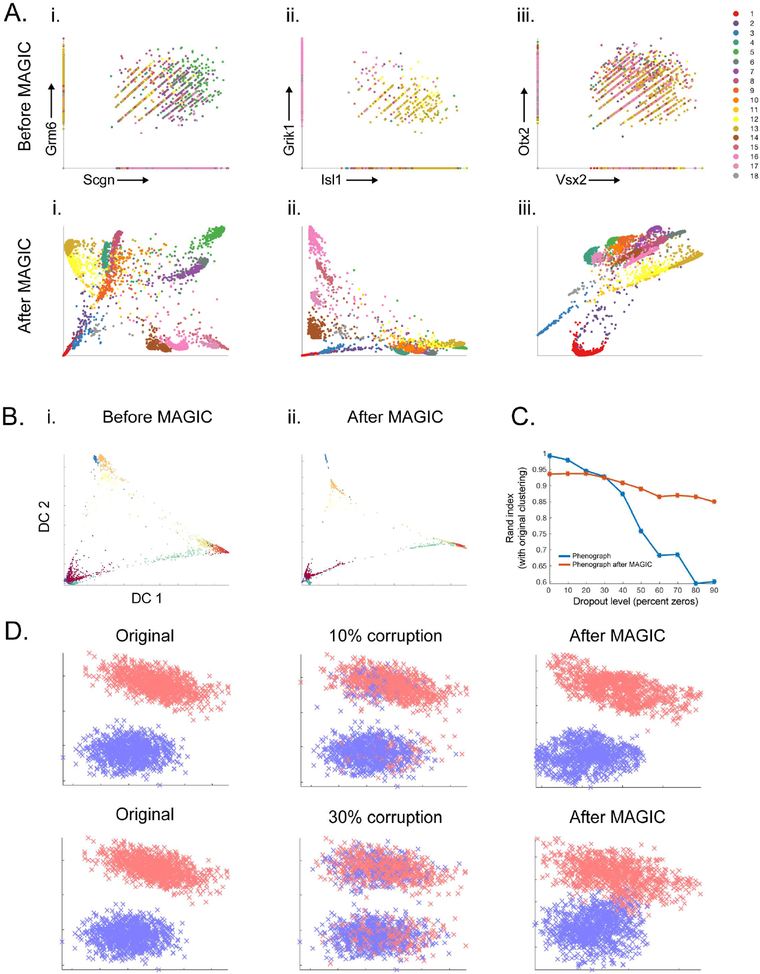

MAGIC extends beyond clustering to highlight heterogeneity and gene-gene relationships within each cluster. We plotted various gene-gene interactions before and after MAGIC, and colored cells by their pre-MAGIC cluster, finding gene-gene relations that vary across clusters (Figure 3A). For example, the ON bipolar cone markers SCGN and GRM6 relate to each other differently in different clusters of cells. In clusters 5–7 SCGN and GRM6 are both highly expressed and show a positive relationship (Figure 3Ai). Clusters 14–17 have high expression of SCGN and low expression of GRM6 and show a negative relationship within the clusters. These trends and distinctions are not detectable prior to MAGIC and would be missed by simple population averaging.

Fig 3: MAGIC preserves cluster structure.

A) Mouse retinal bipolar cells from (Shekhar et al., 2016) showing 2D relationships before and after MAGIC. Cells are colored by Phenograph cluster and show differing trends among clusters. B-C) Mouse cortex and hippocampus cells (Zeisel et al., 2015). B) Diffusion components before MAGIC (i) and after MAGIC (ii), colored by cluster; MAGIC does not merge clusters. C) Rand index (Y-axis) of Phenograph clustering after dropout, with MAGIC (red) or without MAGIC (blue), against Phenograph clustering of the original data. D) Synthetic mixture of two Gaussians embedded in high dimension (original, left); 10% and 30% of the values are corrupted by randomly switching values between the clusters (middle). MAGIC is able to fix the majority of the corruptions (right): 98% recovery for 10% corruption and 81% recovery for 30% corruption.

Next, we assessed MAGIC’s ability to maintain clusters using a deeply sequenced mouse cortex dataset from (Zeisel et al., 2015) collected with smart-seq2 (Islam et al., 2014). MAGIC preserved the discrete nature of the clusters and did not add spurious intermediate states between them; diffusion components remain the same before and after MAGIC (Figure 3B). The relatively deep sampling of this dataset enabled a systematic evaluation, where we drop out transcripts from the original data, cluster, and compare against the original clustering, before and after MAGIC. We dropped out up to 90% of the data and compared clustering solutions. While clustering on the dropped-out data steadily decreases in quality (dipping to a Rand index of 0.6 at 80% dropout), clustering after MAGIC retains a consistent quality (Rand index 0.89–0.94) throughout all levels of dropout, including 90% (Figure 3C).
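A dropout experiment of this kind can be simulated by thinning a count matrix. The sketch below assumes that each observed transcript is removed independently with the given probability (binomial thinning); the text does not specify the exact sampling scheme, so this is one plausible reading rather than the authors' procedure.

```python
import numpy as np

def drop_out(counts, fraction, seed=0):
    """Remove each transcript independently with probability `fraction`."""
    rng = np.random.default_rng(seed)
    # Each entry keeps a Binomial(count, 1 - fraction) number of its transcripts.
    return rng.binomial(counts, 1.0 - fraction)

# Usage: synthetic integer counts for 200 cells and 50 genes, 80% dropout.
rng = np.random.default_rng(1)
original = rng.poisson(20, size=(200, 50))
sparse = drop_out(original, fraction=0.8)
kept = sparse.sum() / original.sum()  # close to 0.2 on average
```

Clustering `original`, `sparse`, and an imputed version of `sparse`, then comparing each to the clustering of `original`, would reproduce the structure of the evaluation described above.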

Evaluating MAGIC’s accuracy and robustness

To illustrate MAGIC’s ability to correct for contamination (e.g. ambient mRNA or cell barcode swapping), we generated a synthetic test case creating two cell clusters (Gaussian mixture in high dimensions) and then randomly selected a fraction of matrix entries and switched their values between clusters (10% and 30% corruption). We used MAGIC (ka=10, t=4, npca=10) to correct this high-frequency noise. Figure 3D shows that while corruption leads to placing cells in the wrong clusters, MAGIC is able to correct this; 98% recovery for 10% corruption and 81% recovery for 30% corruption.
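The synthetic test case can be sketched as follows. The cluster means, dimensions, and the specific swapping rule (each selected entry is overwritten with the value of the same gene in a randomly chosen cell from the other cluster) are assumptions for illustration; the text only states that selected entries had their values switched between clusters.

```python
import numpy as np

def make_corrupted_mixture(n_per_cluster=100, n_genes=50, fraction=0.1, seed=0):
    """Two Gaussian clusters with a random fraction of entries swapped across clusters."""
    rng = np.random.default_rng(seed)
    # Two well-separated Gaussian clusters (hypothetical means 0 and 5).
    cluster_a = rng.normal(0.0, 1.0, size=(n_per_cluster, n_genes))
    cluster_b = rng.normal(5.0, 1.0, size=(n_per_cluster, n_genes))
    clean = np.vstack([cluster_a, cluster_b])
    labels = np.repeat([0, 1], n_per_cluster)

    # Select a random subset of matrix entries to corrupt.
    mask = rng.random(clean.shape) < fraction
    rows, cols = np.nonzero(mask)

    # For each selected entry, pick a donor cell from the other cluster and
    # copy the donor's value for the same gene.
    offset = np.where(labels[rows] == 0, n_per_cluster, 0)
    donors = rng.integers(0, n_per_cluster, size=rows.size) + offset
    corrupted = clean.copy()
    corrupted[rows, cols] = clean[donors, cols]
    return clean, corrupted, labels, mask

clean, corrupted, labels, mask = make_corrupted_mixture(fraction=0.1)
```

Recovery could then be scored by imputing `corrupted` and checking how many masked entries move back toward their own cluster's mean.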

To quantitatively evaluate the accuracy of MAGIC’s imputation, we created two synthetic datasets where ground truth is known. By directly comparing the original and imputed data, we found that MAGIC was able to correctly recover ground truth data both qualitatively and quantitatively (Figures S2B-C, S3A-B). MAGIC can also capture multivariate relations effectively; surprisingly, the agreement between the original and imputed data is even higher in the case of gene-gene correlations (Figure S3Aii), likely because these correlations are part of the structure that MAGIC harnesses for its imputation. We performed systematic robustness analysis on our EMT dataset and found that MAGIC is robust to sub-sampling of cells (Figure S3C) and to different parameters (Figures S3D-E). See STAR for full details.

Characterizing the Epithelial-to-Mesenchymal Transition

We chose to study EMT, a cell state transition during which cells gradually lose epithelial markers (including E-cadherin, Epcam and epithelial cytokeratins), and gain mesenchymal markers (including Vimentin, Fibronectin and N-cadherin) (Nieto et al., 2016). At a transcriptional level, multiple drivers of EMT have been characterized and include the transcription factors ZEB1, SNAIL (SNAI1) and TWIST1. However, knowledge of the EMT process has been largely derived from studies comparing the extreme states of the EMT, i.e. the beginning epithelial state with the endpoint mesenchymal state. Moreover, most studies have been conducted in bulk where the state of individual cells is not resolved. Hence, while the initiation and the final outcome of EMT are well characterized, little is known about intermediary states.

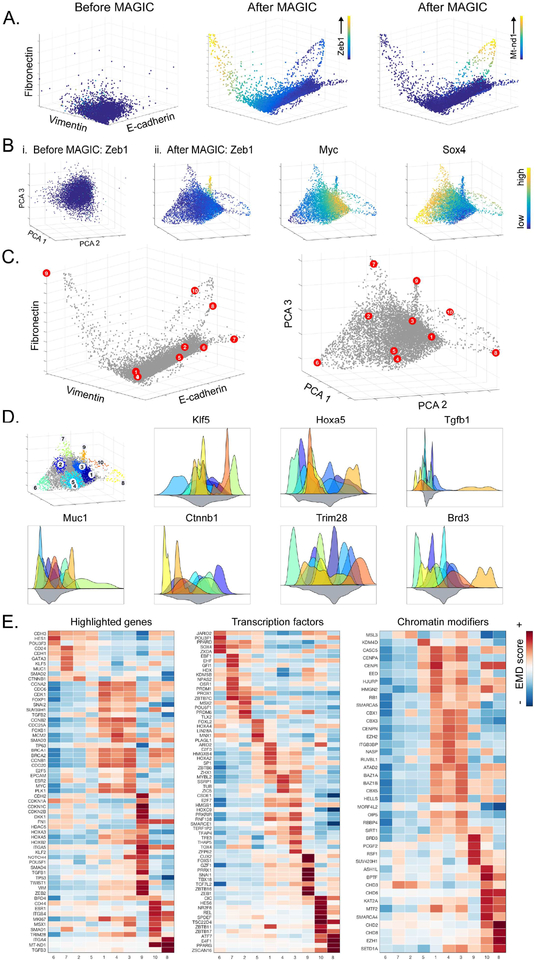

Transformed mammary epithelial cells (HMLE) were induced to undergo the EMT via TGFβ treatment (8 days) and measured using inDrops (Klein et al., 2015). We observe that induction of EMT is asynchronous; each cell progresses along the transition at a different rate. Consequently, on days 8 and 10, we see that cells reside in all phases along the continuum of the EMT. MAGIC unveils a continuum of transitional states that comprise EMT. Before MAGIC, the canonical decrease in CDH1 (E-cadherin) coinciding with an increase in VIM (Vimentin) and FN1 (Fibronectin) is obscured. After MAGIC (npca=20, ka=10, t=6) this relationship is successfully recovered (Figure 4A). ZEB1, a key transcription factor known to induce EMT (Lamouille et al., 2014), progressively increases as VIM and FN1 increase. Another progression revealed by MAGIC involves two branches that deviate from the main structure, which display an increase in mitochondrial RNA, reflecting a progression into apoptosis (Figure 4A). The apoptotic state is supported by the rise of additional apoptotic markers in these cells (data not shown).

Fig 4: MAGIC recovers a state space in EMT data.

EMT data collected 8 and 10 days after TGFβ-stimulation of HMLE breast cancer cells. A) 3D scatterplots between canonical EMT genes CDH1, VIM, and FN1. (Left) Before MAGIC (Middle) after MAGIC with cells colored by the level of ZEB1 and (Right) MT-ND1. See also Figure S3. B) 3D PCA plots before MAGIC (i) and after MAGIC (ii) with cells colored by levels of ZEB1, MYC and SOX4 respectively. C) 3D scatter plots after MAGIC, red dots represent each of the 10 archetypes in the data. Plotted by (Left) CDH1, VIM and FN1, and (right) PCA. D) (Left) most archetypal neighborhoods, cell colored by archetype, grey cells are not associated with any archetype. Histograms represent distributions of genes in archetypal neighborhoods, color-coded by the colors shown in the leftmost plot. E) A subset of differentially expressed genes for each archetype including highlighted genes, transcription factors and chromatin modifiers. Additional differentially expressed genes are shown in table S1. See also Figure S4.

Characterizing Intermediate States during EMT

A surprising revelation is that most of the cells (79%) reside in an intermediate state that is neither epithelial nor mesenchymal. Moreover, the intermediate cells are highly heterogeneous, occupying a multi-dimensional manifold that does not seem to follow a simple one-dimensional progression. Thus, we next characterized this structure and, in particular, its boundaries. We used archetypal analysis (Cutler and Breiman, 1994) to characterize the extreme phenotypic states (Shoval et al., 2012), and states that lie in between these extrema. While archetypal analysis has been used to characterize single-cell data (Korem et al., 2015), MAGIC learns a better-formed structure that is amenable to archetypal analysis (Figures 4A,B).

Archetype analysis identified 10 archetypes (AT) in our data. While these archetypes represent extrema in a higher-dimensional space, Figure 4C shows their projection onto two different 3D plots. We use the neighborhood of cells around each archetype to characterize the gene expression profile for that archetype (see STAR) and find unique gene expression patterns for each AT (Figure 4D). We performed differential gene expression analysis (see STAR) to gain a more comprehensive characterization of each AT (Figures 4E, Supplementary Table 1). These archetypes fall into the following categories: ‘epithelial’ – AT6, AT7, ‘intermediary’ – AT1 to AT5, ‘mesenchymal’ – AT9, and ‘apoptotic’ – AT8, AT10. We performed 100 random sub-samplings of the cells and found that we repeatedly identified a very similar set of ATs, where similarity was quantified by correlating AT gene expression (Figure S4A), demonstrating the ATs are robust.

The epithelial ATs (AT6 and AT7) are defined by strong epithelial marker expression including CDH1, CDH3, MUC1 and CD24. The transcriptional profile of AT7 includes higher ESR2 and GATA3, commonly associated with the luminal mammary epithelial cells, and higher CD24 and CDH1, suggesting a more differentiated epithelial phenotype than AT6. Of note, AT6 and AT7 express high levels of SOX4, recently shown to be a master regulator of a TGFβ-induced EMT (Tiwari et al., 2013). The mesenchymal AT9 is characterized by high expression of core EMT TFs SNAI1, ZEB1, SMAD4, TGFB1, TWIST1 (see Figure 4E). Thus, AT9 may represent a gene expression program of cells that have undergone EMT in response to TGFβ.

Our analysis highlights five intermediate ATs (AT1–5), which reside along a continuous spectrum of phenotypes, supporting recent findings suggesting that cells undergoing the EMT move through a series of partial and/or metastable cell states (Nieto et al., 2016; Tam and Weinberg, 2013). AT2 shows a gene expression profile similar to that of AT7, including upregulation of SOX4, and is closest to the epithelial state. However, AT2 expresses a recently characterized partner in EMT, KLF5 (David et al., 2016). AT3 is closest to the mesenchymal state, with SMAD3 and mesenchymal regulator MSX1 upregulated. AT1, AT3 and AT4 all express a large number of chromatin modifiers, including EZH2 and several CBX genes, suggesting that these might play a role in the reprogramming. AT1, AT4 and AT5 segregate from the other ATs with a concomitant increase in multiple embryonic genes (including TRIM28, FOXB1, HOXA5, HOXB2, HOXA3). Indeed, it has been postulated that epithelial cells undergoing EMT may revert to a more primitive state before acquiring the ability to differentiate into a mesenchymal cell (Ben-Porath et al., 2008). Together these data suggest AT1 and AT4 have entered into a marked reprogramming phase of the EMT, while AT3 is further along this reprogramming phase, further supported by the increasing levels of VIM along this progression. Gene set enrichments for the ATs appear in Table S1.

Applying a similar archetypal analysis to the data prior to MAGIC fails to find distinct archetypes that differ in their expression profiles (Figure S4B-D). Further, genes involved in the EMT process do not vary across the identified archetypes. Thus the structure revealed by MAGIC enabled the characterization of previously unappreciated intermediate states.

MAGIC reveals gene-gene relationships

The core-regulatory circuit defining EMT has been well established, with both ZEB1 and SNAIL1 as potent repressors of the epithelial phenotype. However, the breadth of targets regulated by these EMT-TFs remains largely unknown. Defining the EMT circuitry, and importantly, the timing of different regulatory factors, can shed light upon how this state transition occurs.

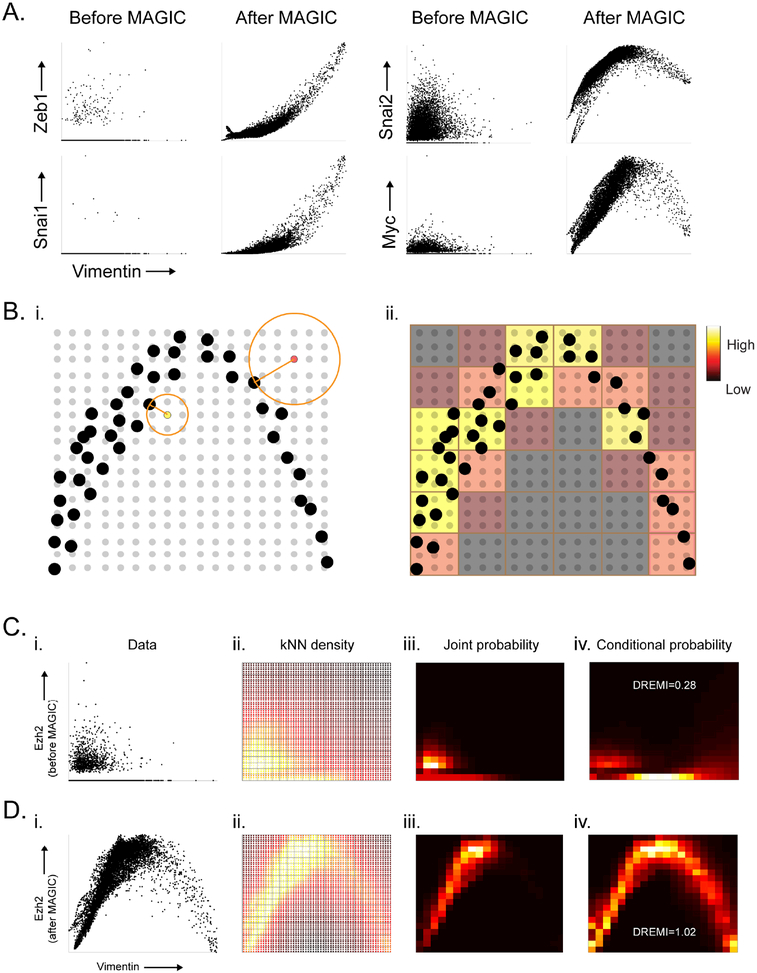

The asynchronous nature of the data allows us to explore temporal trends as cells progress from the epithelial to the mesenchymal state. We organize the cells along a pseudo-time progression, using VIM expression as a proxy for EMT state. Thus, we can observe temporal trends of regulatory factors along this transition. However, TFs are typically expressed at low levels and the signal is obscured. For example, the biaxial plots of both ZEB1 and SNAI1 against VIM lack any discernable trend (Figure 5A). However, after imputation, the rise in these key TFs is revealed, recapitulating their known temporal trends.

Fig. 5: Gene-Gene Relationships and kNN-DREMI.

A) 2D scatterplots before and after MAGIC. B) Illustrates the computation of kNN-based density estimation on an 18 × 18 grid, shown as gray points with data points shown in black. Each grid point (the yellow and red grid points are examples) is given a density inversely proportional to the volume of a circle with radius r equal to the distance to its nearest data neighbor (black point). After density estimation on the grid points, the grid is coarse-grained into a 6 × 6 discrete density estimate (red and yellow squares show coarse-grained partitions) by accumulation of all densities within each square bin. C) The steps for computing kNN-DREMI are shown for EZH2 (Y-axis) and VIM (X-axis) before MAGIC, with (i) a scatter plot, (ii) kNN-based density estimation on a fine grid (60 × 60), (iii) coarse-grained joint probability estimate on a 20 × 20 partition, and (iv) normalization of the joint probability to obtain the conditional probability density, resulting in kNN-DREMI = 0.28. D) Same steps as (C) shown after MAGIC, resulting in kNN-DREMI = 1.02. See also Figure S5.

A considerable number of regulators peak at intermediate levels of VIM (e.g. MYC and SNAI2, Figure 5A). The activity of these genes is restricted to intermediate states, whereas their expression is similarly low in both the epithelial and mesenchymal states and would hence be missed by studies that focus only on end states. To systematically explore gene-gene interactions, we need a quantitative metric to score statistical dependency between genes, which takes into account non-linearity observed in the data (e.g. MYC and SNAI2).

To quantify relationships, we adapted DREMI (Krishnaswamy, 2014), which measures statistical dependency between genes, to scRNA-seq data. DREMI captures the functional relationship between two genes across their entire dynamic range. The key change in our adaptation, kNN-DREMI, is the replacement of the heat diffusion based kernel-density estimator from (Botev et al., 2010) by a k-nearest neighbor based density estimator (Sricharan et al., 2012) (Figure 5B), which has been shown to be an effective method for sparse and high dimensional datasets (STAR). Moreover, we show that kNN-DREMI is highly robust over a wide range of parameters (Figure S5A).
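The steps listed in the Figure 5 caption (kNN density on a fine grid, coarse-graining, conditional normalization, mutual information) can be sketched compactly. This is an illustrative approximation, not the published implementation: the function name, the default `k=10`, the rescaling of both genes to the unit square, and the use of mutual information in bits over equally weighted x-slices are assumptions made here.

```python
import numpy as np

def knn_dremi(x, y, k=10, n_grid=60, n_bins=20):
    """Sketch of a kNN-DREMI-style score for the dependence of y on x."""
    # Rescale both genes to [0, 1] so the grid covers the data range.
    pts = np.column_stack([x, y]).astype(float)
    pts = (pts - pts.min(axis=0)) / np.ptp(pts, axis=0)

    # kNN density on a fine grid: inversely proportional to the area of the
    # circle reaching from each grid point to its k-th nearest data point.
    ticks = (np.arange(n_grid) + 0.5) / n_grid
    gx, gy = np.meshgrid(ticks, ticks, indexing="ij")
    grid = np.column_stack([gx.ravel(), gy.ravel()])
    d2 = ((grid[:, None, :] - pts[None, :, :]) ** 2).sum(axis=2)
    r2 = np.partition(d2, k - 1, axis=1)[:, k - 1]
    density = 1.0 / (np.pi * np.maximum(r2, 1e-12))
    density = density.reshape(n_grid, n_grid)  # axis 0 = x, axis 1 = y

    # Coarse-grain by summing fine-grid densities inside each bin
    # (n_grid must be a multiple of n_bins).
    step = n_grid // n_bins
    joint = density.reshape(n_bins, step, n_bins, step).sum(axis=(1, 3))
    joint /= joint.sum()

    # Normalize each x-slice to P(y | x), then weight all x-slices equally.
    cond = joint / joint.sum(axis=1, keepdims=True)
    resampled = cond / n_bins
    p_y = resampled.sum(axis=0)

    # Mutual information in bits of the resampled joint: H(Y) - H(Y | X).
    h_y = -(p_y * np.log2(p_y)).sum()
    h_y_given_x = -(resampled * np.log2(cond)).sum()
    return h_y - h_y_given_x

rng = np.random.default_rng(0)
x = rng.random(500)
dependent = knn_dremi(x, x + rng.normal(0, 0.02, 500))
independent = knn_dremi(x, rng.random(500))
```

Weighting every x-slice equally is what lets the score reflect the relationship across the full dynamic range of x, rather than being dominated by the densest region of the data.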

We illustrate this computation using the relationship between VIM and EZH2 on the same data before MAGIC (Figure 5C) and after MAGIC (Figure 5D). We note that Figure 5C is representative of almost any pair of genes in the data, even gene-pairs that are known to be related. The kNN-DREMI score between VIM and EZH2 is 0.28 before MAGIC and 1.02 after MAGIC. For perspective, Figure S5B shows a histogram of DREMI scores of 10,000 random gene pairs. Note that there is limited correlation between DREMI before and after MAGIC (Figure S5C), indicating that MAGIC does not simply shift the values. Moreover, DREMI is able to capture gene-gene dependencies beyond correlation (Figure S5D,E).

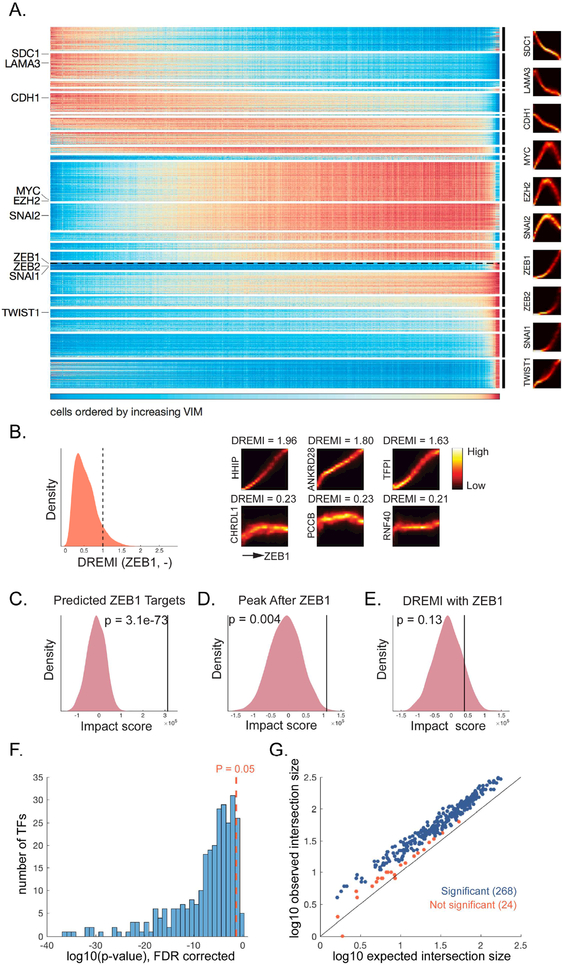

Characterizing Expression Dynamics Underlying EMT

We next constructed a genome-wide view of expression dynamics during the course of EMT to assess which genes change, when and how. We filtered out apoptotic cells (based on MT-ND1 expression) and used the remaining cells to compute kNN-DREMI between VIM and all genes. We found that the majority of the genes demonstrate a temporal trend that follows VIM and selected 13,487 genes having kNN-DREMI > 0.5 with VIM for further study.

We used the DREVI plot (Figure 5Div) to cluster genes based on the shape and timing of their relationship with VIM (see STAR). This resulted in 22 groups of genes with distinct temporal trends. This clustering filters noise by averaging over trends with roughly similar shape and timing. We then fit a spline curve to each cluster, estimate the timing of peak expression, and order the clusters based on this timing.
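The last step, fitting a smooth curve to each cluster and ordering clusters by when they peak, can be illustrated as follows. To keep the example dependent on NumPy only, a low-degree polynomial stands in for the spline used in the text; the function name, the polynomial degree, and the three synthetic trends are all assumptions for illustration.

```python
import numpy as np

def order_by_peak(pseudotime, cluster_means, degree=4):
    """Order gene clusters by the pseudo-time at which their fitted trend peaks."""
    fine = np.linspace(pseudotime.min(), pseudotime.max(), 200)
    peaks = {}
    for name, trend in cluster_means.items():
        # Polynomial fit used here in place of the spline described in the text.
        fit = np.polynomial.Polynomial.fit(pseudotime, trend, degree)
        peaks[name] = fine[np.argmax(fit(fine))]
    return sorted(peaks, key=peaks.get), peaks

# Synthetic trends along a VIM-like axis scaled to [0, 1].
vim = np.linspace(0.0, 1.0, 50)
clusters = {
    "late": vim,                                      # rises monotonically
    "early": 1.0 - vim,                               # falls monotonically
    "transient": np.exp(-((vim - 0.5) / 0.2) ** 2),   # peaks mid-transition
}
order, peak_times = order_by_peak(vim, clusters)
# order == ['early', 'transient', 'late']
```

These three shapes mirror the three modes of behavior described for the EMT data: genes that decrease with VIM, genes that rise and then fall, and genes that increase into the mesenchymal state.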

The result is a global map of the pseudo-temporal gene dynamics leading to the mesenchymal state (Figure 6A), with the majority of the genes (2/3 of the genome) participating in this transition with clear temporal trends. We observe clusters of genes that change expression in waves as VIM rises, with three modes of behavior that vary in their timing. The first set of clusters decrease with VIM, for example, SDC1 and LAMA3, which are both involved with cell adhesion and binding. There are genes that increase and then decrease before entering the mesenchymal state, including MYC and EZH2. Finally, as cells transition into the mesenchymal state, a large number of genes monotonically increase, including the canonical EMT-TFs ZEB1, TWIST, SNAIL and SLUG. A full list of genes and their associated clusters appear in Table S2.

Fig. 6: Gene Expression Dynamics Underlying EMT and TF target predictions.

(A) Expression of genes (Y-axis) ordered by DREVI-based clustering and by peak expression along VIM (X-axis). ZEB1 is highlighted with a dashed line. Representative DREVI plots with VIM are shown to the right. B) (Left) Distribution of kNN-DREMI with ZEB1. The dashed line marks the threshold for genes that we include in the prediction. (Right) DREVI plots and DREMI values for a set of example genes above the threshold (top row) and below threshold (bottom row). C) Impact score of the predicted ZEB1 targets. D) Impact score of all genes that peak after ZEB1. E) Impact score of all genes with kNN-DREMI against ZEB1 >= 1. F) Histogram of 292 FDR-corrected p-values (log transformed) obtained using a hypergeometric test on the overlap of TF-target predictions with targets obtained from ATAC-seq data; 268 out of 292 TFs have p-value < 0.05. G) Expected number of genes in the intersection (log10 scale, X-axis) based on the hypergeometric distribution, versus the observed intersection (log10 scale, Y-axis). For all TFs except one, the observed intersection is higher than expected from random. For 268 TFs (blue points) the difference is significant, and for 24 (red points) it is not significant. See also Figure S6.
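The hypergeometric overlap test mentioned in panels F and G can be written out with the standard library alone. The function and argument names below are illustrative, and the small numbers in the usage example are invented to make the arithmetic easy to follow; FDR correction across TFs is not shown.

```python
from math import comb

def overlap_pvalue(n_genes, n_reference, n_predicted, n_overlap):
    """P(overlap >= n_overlap) when n_predicted genes are drawn at random."""
    upper = min(n_reference, n_predicted)
    # Count the ways to draw i reference targets and (n_predicted - i) others.
    tail = sum(
        comb(n_reference, i) * comb(n_genes - n_reference, n_predicted - i)
        for i in range(n_overlap, upper + 1)
    )
    return tail / comb(n_genes, n_predicted)

def expected_overlap(n_genes, n_reference, n_predicted):
    """Mean of the hypergeometric distribution (expected random intersection)."""
    return n_predicted * n_reference / n_genes

# Toy numbers: 10 genes, 4 reference targets, 3 predictions, 2 in common.
p = overlap_pvalue(10, 4, 3, 2)     # (6*6 + 4*1) / 120 = 1/3
mean = expected_overlap(10, 4, 3)   # 1.2
```

Comparing the observed overlap with `expected_overlap` corresponds to the two axes of panel G, while `overlap_pvalue` corresponds to the per-TF values summarized in panel F.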

To ensure these pseudo-temporal dynamics are robust and representative of EMT, we repeated this analysis with three other canonical markers of the mesenchymal state, CDH2, ITGB4 and CD44. The resulting gene dynamics are both visually and quantitatively similar for all four markers of EMT progression (Figure S6A-B).

Characterization of ZEB1 Targets

We have shown that MAGIC can recover gene-gene relationships, as well as a fine-grained pseudo-time ordering of gene activation. This offers the possibility of directly learning gene regulatory networks at large scales without perturbation. While DREMI only suggests statistical dependency, incorporating pseudo-time can indicate a causal relationship. In the case of activation, we assume that a target’s expression should peak after that of the TF. Thus, we harness the temporally ordered clusters to limit potential targets to those that peak after the regulator. Additionally, the expression of the regulator should be strongly informative of the expression of its targets, meaning we should only consider genes that have strong kNN-DREMI with the TF. Combined, these two criteria predict a set of candidate targets for each TF (see STAR).
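The two criteria can be illustrated with a minimal sketch. The function name, the dictionary inputs and the toy values below are hypothetical and are not taken from the MAGIC code; the sketch assumes that a peak position along pseudo-time and a kNN-DREMI score against the TF have already been computed for every gene, and it uses "along with or after" and a threshold of 1 as in the ZEB1 analysis.

```python
def predict_targets(tf, peak_time, dremi_with_tf, dremi_threshold=1.0):
    """Return candidate activation targets of `tf` (illustrative only).

    peak_time:     dict gene -> pseudo-time position of peak expression
    dremi_with_tf: dict gene -> kNN-DREMI score of the gene against `tf`
    """
    tf_peak = peak_time[tf]
    return sorted(
        gene
        for gene, score in dremi_with_tf.items()
        if gene != tf
        and peak_time[gene] >= tf_peak   # criterion 1: peaks with or after the TF
        and score >= dremi_threshold     # criterion 2: strongly informed by the TF
    )


# Toy values, invented purely for illustration.
peak_time = {"ZEB1": 0.6, "GENE_A": 0.8, "GENE_B": 0.3, "GENE_C": 0.9}
dremi_with_tf = {"GENE_A": 1.4, "GENE_B": 1.6, "GENE_C": 0.2}
candidates = predict_targets("ZEB1", peak_time, dremi_with_tf)
print(candidates)
```

In this toy example GENE_B is excluded because it peaks before the TF, and GENE_C because its dependency on the TF is weak.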

With respect to the transcriptional targets of the EMT-TFs, it is clear that a certain level of redundancy exists. However, a recent study suggests that there are actually profound differences in the transcriptional programs they induce (Ye et al., 2015). We focused on ZEB1, a key regulator of EMT whose transcriptional targets remain poorly defined to date. We found 4,509 genes that changed with EMT and peaked along with or after ZEB1 (Figure 6A), and among these 1,085 genes had DREMI >= 1 with ZEB1 (Figure 6B). We predict that ZEB1 activates these genes, either directly or indirectly. See Table S3 for full list of targets.

To validate our predicted targets of ZEB1, we used a variant of the HMLE cell line, where ZEB1 can be over-expressed using a Dox-inducible promoter. We measured the cells after two days of continuous Dox treatment (see STAR), which is sufficient to induce significant numbers of mesenchymal cells (10% of the cells). In the ZEB1 induction, we expect ZEB1 targets to be up-regulated relative to other genes. For a given set of genes (e.g. list of predicted targets), we define an impact score, which compares the relative ranking of gene expression between the ZEB1 and TGFβ inductions (see STAR).

Our predicted ZEB1 targets are indeed up-regulated in the ZEB1 induction with a significance of p=3.1E-73, against a background of all genes involved in EMT (Figure 6C). Including all 4,509 genes that peak after ZEB1 results in a significant but diminished impact score (Figure 6D, p=0.004), indicating that while ZEB1 is a key regulator of EMT, there are additional regulatory factors at play in the TGFβ-induced EMT, even during late stages of the transition. Predicting targets based on DREMI with ZEB1 alone results in an impact score that is not significant (Figure 6E, p=0.13). We note that our prediction focuses only on genes activated by ZEB1, whereas ZEB1 is also a potent repressor; indeed, among these high DREMI genes, ~1/3 are negatively correlated with ZEB1.

Our top predicted targets include many genes known to be involved in EMT, including SNAI1, ZEB2, BMP (bone morphogenetic protein) antagonist family proteins and MMP (matrix metalloproteinase) family proteins such as MMP3. In addition, we see proteins involved in the cell cycle, remodeling of the cell cytoskeleton, extracellular matrix remodeling, and cell migration. These include CDKN1C, a negative regulator of proliferation; RHOA, which is involved in reorganization of the actin cytoskeleton and regulates cell shape, attachment, and motility; CCBE1, involved in extracellular matrix remodeling and migration; and, interestingly, NTN4, normally involved in neural migration. While these genes are less known for their involvement in EMT, their phenotypic annotations match known phenotypic changes involved in EMT, providing further evidence that ZEB1 is critical in activating a myriad of processes that result in cellular trans-differentiation. Thus we have demonstrated that by combining MAGIC, pseudo-time and kNN-DREMI, we are able to predict regulatory targets without perturbation.

Systematic Validation of an EMT regulatory network

To build a global regulatory network of EMT, we selected all TFs that change considerably along EMT (kNN-DREMI with VIM is >0.5) and predicted targets of each using the analysis applied to ZEB1. This resulted in a large regulatory network consisting of 719 regulators over a total of 11,126 targets (Supplementary Table 3). To systematically validate our target predictions, we used ATAC-seq (Assay for Transposase-Accessible Chromatin using sequencing) (Buenrostro et al., 2013) as an independent and well-accepted approach for target prediction (STAR) (Kundaje et al., 2015). ATAC-seq was carried out on HMLE cells 8 days following TGFb stimulation. Cells were FACS sorted by CD44+ to enrich for the mesenchymal population. We used the ATAC-seq peaks combined with motif analysis to derive a set of targets for each TF using standard approaches (STAR). Note, we do not expect the two approaches to perfectly align: our predictions identify both direct and indirect targets of a TF, whereas ATAC-seq only captures direct targets. ATAC-seq identifies binding of TFs that are activating, poised or inhibiting, whereas our predictions only focus on TF activation. Nevertheless, if our predictions are accurate, we expect a significant overlap between the two sets.

For each of the 292 TFs in our predicted regulatory network for which we also had ATAC-seq based predictions, we used the hypergeometric distribution to assess the significance of the overlap between the two target sets, and applied false discovery rate (FDR) correction to account for multiple hypothesis testing (STAR). We find the overlap is greater than expected for 291/292 TFs, and after FDR correction this overlap is significant for 268/292 TFs (Figures 6F,G). Thus our predictions significantly overlapped with targets derived from ATAC-seq for 92% of the TFs tested.
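As a rough illustration of this kind of test, the sketch below computes an upper-tail hypergeometric p-value for the overlap of two gene sets and then adjusts a list of p-values. The Benjamini-Hochberg procedure is an assumption here, since the text only states that an FDR correction was applied; the function names and toy numbers are likewise illustrative.

```python
from math import comb


def hypergeom_overlap_pvalue(n_universe, n_predicted, n_reference, n_overlap):
    """P(X >= n_overlap) when n_predicted genes are drawn without replacement
    from n_universe genes, of which n_reference are reference targets."""
    total = comb(n_universe, n_predicted)
    upper = min(n_predicted, n_reference)
    tail = sum(
        comb(n_reference, k) * comb(n_universe - n_reference, n_predicted - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / total


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Toy numbers, invented for illustration only.
p = hypergeom_overlap_pvalue(n_universe=10, n_predicted=3, n_reference=4, n_overlap=3)
adjusted = benjamini_hochberg([0.01, 0.04, 0.03])
```

The expected overlap under the null, as plotted against the observed overlap in Figure 6G, is `n_predicted * n_reference / n_universe` in this notation.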

To directly evaluate the gene-gene relationships recovered by MAGIC, we compared DREMI scores between targets and non-targets for each of 418 TFs, comparing the two distributions of DREMI scores using a one-sided Kolmogorov-Smirnov (KS) test (STAR). In this analysis we comprehensively evaluate all TFs with ATAC-seq based predictions (regardless of their relationship to VIM) and all targets, regardless of pseudo-time ordering. We find that 372/418 TFs have significantly higher DREMI scores with their ATAC-seq based targets than with other genes (p < 0.05), whereas many of these are insignificant before MAGIC. Figure S6D shows distributions for ZEB1, SNAI1 and MYC: after MAGIC all have significant KS scores (p=4.7e-25, p=3e-25 and p=e-8 respectively), whereas none of these are significant prior to MAGIC (p=0.16, p=0.99 and p=0.99 respectively).

In summary, we validated a computational approach to build a large-scale regulatory network from scRNA-seq data without genetic perturbations.

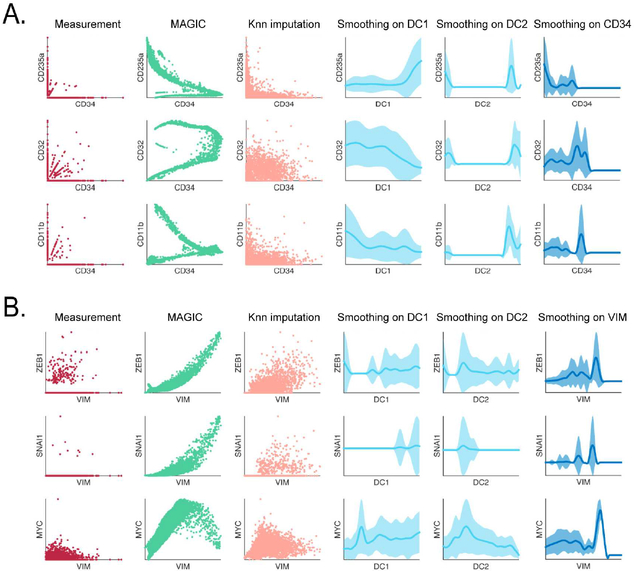

Comparison of MAGIC to Other Methods

We compare MAGIC to kNN-imputation and diffusion maps using a few known gene-gene relationships from the bone marrow (Figure 7A) and EMT (Figure 7B) datasets. Contrary to MAGIC, the simpler kNN-imputation approach fails to recover the known gene-gene relationships (Figure 7, peach). Unlike simple smoothing over a kNN-graph, which is limited to local information, by propagating data using the diffusion operator, MAGIC is able to recover data using longer range, global features. In essence, this pulls in noisy outlier data to the manifold and restores the structure.

Fig. 7: Comparison of MAGIC to other imputation and smoothing methods.

A) Comparison shown on bone marrow data (as in Figure 2), raw data (first column), MAGIC imputed (second column). The other columns show kNN-based imputation, smoothing on diffusion components 1 to 2, and smoothing on CD34, respectively. B) The same as in A but for the EMT data. See also Figure S7.

A popular aggregation approach utilizes diffusion maps (Coifman and Lafon, 2006a), which like MAGIC, compute a diffusion operator that defines similarity between data points along a manifold. However, diffusion maps find diffusion components (DCs), a nonlinear equivalent to a PCA, which have been recently utilized to find pseudotime trends in developmental systems (Haghverdi et al., 2015; Haghverdi et al., 2016; Setty et al., 2016). Moving average approaches have been successfully used to observe gene trends along DCs, smoothing along a single diffusion component, one gene at a time. This performs well when DCs correspond to tight developmental pseudo-time trajectories, and only for developmentally related genes, whose major component of variation is singular. Moreover, because smoothing occurs one gene at a time, the approach cannot be used to reveal gene-gene relationships. MAGIC, by contrast, uses the diffusion operator to propagate gene expression information between similar cells, taking all diffusion components and genes into account simultaneously in its inference.

The difference is illustrated in Figure 7, sky blue: while smoothing along DC1 (corresponding to erythrocytes) results in a roughly correct trend for CD235a (an erythrocyte marker), the relationship is entirely incorrect for markers belonging to other lineages such as CD11B. Moreover, this approach is unable to recover gene-gene relationships even in cases like CD235a and CD34, whose trends both follow DC1 relatively well. Additionally, the EMT dataset does not follow a simple trajectory and therefore diffusion components fail to capture trends for even the most canonical TFs in this process. For instance, ZEB1 or SNAIL vs VIM shows a fluctuating rather than positive trend.

We also compare MAGIC to methods used to fill in missing data: SVD-based low-rank data approximation (LRA) (Achlioptas and McSherry, 2007) and Nuclear-Norm-based Matrix Completion (NNMC) (Candes and Recht, 2012). Both methods have a low-rank assumption, i.e., like MAGIC, they assume that the intrinsic dimensionality of the data is much lower than the measurement space, and both utilize a singular value decomposition (SVD) of the data matrix. We compared the performance of the three techniques on synthetic and real data (Figure S7), where we demonstrate MAGIC is uniquely well suited to handle the dropout rampant in scRNA-seq data (STAR). A likely explanation for NNMC’s poor performance is that it “trusts” non-zero values and only attempts to impute possibly missing zero values, whereas in scRNA-seq, dropout of molecules impacts all genes and even non-zero counts are likely lower than their true values. Hence NNMC is poorly suited to this data type. LRA, a linear method, cannot separate the exact manifold from external noise, likely due to its inability to find non-linear directions in the data.

Discussion

Here, we presented MAGIC, an algorithm to alleviate sparsity and noise due to stochastic mRNA capture and recapitulate gene-gene interactions in single-cell data. The cost of sequencing limits our ability to measure large numbers of cells at depth, ensuring MAGIC’s utility even as scRNA-seq technology improves. Further, MAGIC can be used in newer single-cell technologies such as single-cell ATAC-seq, which suffer from similar sparsity and noise. Unlike other imputation algorithms, which simply fill in “missing values”, MAGIC uses diffusion of values between similar cells along an affinity-based graph structure, to correct the entire data matrix and restore it to its underlying manifold structure. This diffusion is akin to low-pass filtering of the graph spectrum. Previously, low-pass filtering has only been applied to structured data, i.e. data that has a given temporal or spatial ordering such as images or audio signals (Buades et al., 2005). Here, we extend this operation to data without such ordering, by learning a manifold structure de novo via the diffusion operator and filtering on the manifold structure. MAGIC is versatile and is able to denoise and correct a wide range of structures and is particularly well suited for structures underlying cell states and phenotypes.

MAGIC assumes cell phenotypes can be approximately embedded in a substantially lower dimensional structure, which can be of any shape and even comprise well-separated components. Cells are regulated to reside within the boundaries of a restricted portion of the state space, i.e., a subspace. Moreover, gene-gene relationships ensure that these subspaces exist as lower-dimensional objects relative to the full measurement space. MAGIC’s key assumption is that such a subspace corresponds to low frequency trends in the data (technically the affinity graph representing the data) containing biological signals of interest, while noise, including dropout, is high frequency. Thus, low frequency batch effects or artifacts will not be removed and genes behaving in a noisy (high frequency) fashion may be smoothed out.

The diffusion time parameter determines the extent of smoothing performed by MAGIC. We recommend a diffusion time that retains biological signals but removes ‘intrinsic’ noise, such as bursting, as this cannot be distinguished from the large degree of technical noise in scRNA-seq data. Additionally, the number of cells affects the frequency of signals in the data. For instance, the same signal (such as EMT) can be high frequency if only a few cells are undergoing EMT, but this signal is captured as the cell number increases. Our data contained only 1% mesenchymal cells, but with thousands of cells we recovered the process in detail, including its regulatory process. Thus, while MAGIC is able to find gross structures using only hundreds of cells, increasing cell number enables MAGIC to find increasingly fine structures and more signals in the data.

We evaluated MAGIC on four different scRNA-seq datasets from different biological systems and measurement technologies. MAGIC recovers fine phenotypic structure in the data, including well-separated clusters (Figure 3), bifurcating developmental trajectories (Figure 2), as well as heterogeneous state transitions (Figure 4). Additionally, MAGIC refines cluster structure, trajectories and gene-gene relationships, and enables a myriad of subsequent analysis techniques. In the case of EMT, MAGIC recovered a complex structure that is not well represented by a simple trajectory. We applied archetypal analysis to characterize this complex structure and reveal several previously-unappreciated intermediate states.

We expect MAGIC to be broadly applicable to any single-cell genomics dataset, boosting the signal and the interpretability of the data. As with all post-processing, care must be taken when applying downstream tools. For example, most tools to detect differentially expressed genes (DEGs) assume sparsity and would likely over-estimate DEGs post-MAGIC. Thus, we recommend the earth-mover distance (EMD) used in the archetype analysis (STAR). We recommend running diffusion map analysis directly on the raw data (otherwise this could lead to over smoothing). On the other hand, MAGIC imputed data is well-suited to visualize trends along the diffusion components. Most cells no longer have zeros, but instead have very small values that can be interpreted as the probability a cell is expressing the transcript, thus we recommend treating the very low values as zero, i.e. the cell is not expressing that transcript.

Finally, the most important application is MAGIC’s ability to recover gene-gene relationships, which are largely obscured in scRNA-seq data. We validated our approach using: 1) synthetic data, 2) known relationships, 3) a comparison of Zeb1 overexpression-based EMT induction with TGFb-induced EMT, and 4) an extensive systematic validation using ATAC-seq. For network learning, we developed an adaptation of DREMI (Krishnaswamy, 2014), termed kNN-DREMI, to quantify the strength of non-linear and noisy gene-gene relationships. Post-MAGIC, we inferred regulatory relationships and validated predicted targets of a large-scale regulatory network involving hundreds of TFs and over 10,000 target genes. Another approach to learning gene-gene interactions is based on perturbations through the combination of scRNA-seq with CRISPR (Dixit et al., 2016). However, these methods require a preselected set of genes to perturb, often disrupt the system in unintended ways, and require considerable experimental effort that is not always feasible, e.g., in the case of clinical tissue. Our approach requires no perturbations or other experimental manipulations, and can be applied to primary tissue and clinical samples. This offers the possibility of discovering rogue regulatory pathways in cancer, autoimmune disease and developmental disorders in a patient-specific manner, potentially suggesting therapeutic interventions.

STAR Methods

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Dana Pe’er (peerd@mskcc.org).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

We used female HMLE breast cancer cell lines in this study. The cell lines were not authenticated. HMLE and all derived cell lines used in this work were cultured in MEGM (Mammary Epithelial Cell Growth Medium) media (Lonza, USA, CC-3051) at 37 °C. Cells were cultured in round tissue culture dishes 10cm in diameter (Corning, USA) and split to a ratio of 1:7 every 2 to 3 days or once they reached 80% confluence on a plate. All cell dissociations were performed using TrypLE™ (Ambion, USA) reagent.

METHOD DETAILS

TGF-beta and Zeb1 induction of EMT

EMT was induced in HMLE cells by addition of Recombinant Human TGF-β1 (HEK293 cell derived) (PeproTech, USA 100–21) to a final concentration of 5ng/ml. EMT was also induced by overexpression of Zeb1 transcription factor. HMLE cells transfected with FUW plasmid, a tetracycline operator, and minimal CMV promoter were used and Zeb1 gene overexpression was induced by addition of doxycycline (Sigma, D3447) to a final concentration of 1mg/ml. All cells under induction were passaged once they reached 80% confluence.

ATAC-seq profiling of TGF-beta induced EMT

HMLE cells were induced with TGF-beta (5 ng/mL, replenished every day) and grown for 8 days. TGF-beta induced HMLE cells were removed from the cell culture plate with TrypLE treatment, washed twice in 1X PBS buffer, and stained with DAPI dye and Anti-Human/Mouse CD44 (PE-Cyanine 7) antibody. The stained cells were then analyzed by flow cytometry and the top 3% (n=48,000) CD44 positive cells (mesenchymal population) were FACS sorted into a collection tube. FACS sorted cells were first lysed with 10 mM Tris-HCl [pH 7.4], 10 mM NaCl, 3 mM MgCl2 and 0.1% IGEPAL CA-630 buffer. The resulting nuclei suspension was pelleted and fragmented using Tn5 transposase reaction mix (Illumina), purified (Qiagen) and PCR amplified for sequencing following the protocol published previously (Buenrostro et al., 2013).

Single-cell RNA-seq profiling of EMT

Single-cell RNA-seq was performed using the inDrops platform (Klein et al., 2015; Zilionis et al., 2017), a droplet microfluidics based single-cell isolation and mRNA barcoding technology. Briefly, the cell culture flasks containing HMLE cells were treated with 2 mL TrypLE™ Express Enzyme (1X) no-phenol-red for 10 min at 37ºC, washed three times with 1X PBS containing 0.05% (w/v) BSA, and strained through 40 μm size mesh. The resulting suspension of single-cells was supplemented with 16% (v/v) Optiprep and 0.05% (w/v) BSA and encapsulated into 3 nL droplets together with custom-made DNA barcoding hydrogel beads and RT/lysis reagents. The cell encapsulation was set at ~30,000 cells per hour using a cell barcoding chip (v2) (Droplet Genomics), and over 75% of cells entering microfluidics chips were co-encapsulated with one DNA barcoding hydrogel bead. After loading cells, hydrogel beads and RT/lysis reagents into microfluidic droplets, the composition of a RT reaction under which cDNA synthesis was carried out was 155 mM KCl, 50 mM NaCl, 11 mM MgCl2, 135 mM Tris-HCl [pH 8.0], 0.5 mM KH2PO4, 0.85 mM Na2HPO4, 0.35 % (v/v) Igepal-CA630, 0.02 % (v/v) BSA, 4.4% (v/v) Optiprep, 2.4 mM DTT, 0.5 mM dNTPs, 1.3 U/ml RNAsIN Plus, and 11.4 U/ml SuperScript-III RT enzyme. After cell encapsulation the tube containing the emulsified components was exposed to 365 nm light to photo-release DNA barcoding primers attached to the hydrogel beads. The RT reaction was initiated by transferring the tube to 50ºC for 1-hour and terminated by incubating for 15 min at 75ºC. Post-RT droplets were chemically broken to release barcoded cDNA, which was then purified and amplified. 
At the final step, libraries were amplified using trimmed PE Read 1 primer (PE1): 5’-AATGATACGGCGACCACCGAGATCTACACTCTTTCCCTACACGA and indexing PE Read 2 primer (PE2): 5’-CAAGCAGAAGACGGCATACGAGAT[index]GTGACTGGAGTTCAGACGTGTGCTCTTCCGATCT, where [index] encoded one of the following sequences: CGTGAT, ACATCG, GCCTAA, TGGTCA, CACTGT or ATTGGC. Multiplexing of PCR libraries allowed for the pooling of different samples onto one lane of an Illumina HiSeq2500 flow cell when desired. To prepare the cells for scRNA-seq experiments, they were cultured to 70% confluence and dissociated from the plate with the addition of 3ml of trypsin for 5 mins at 37 °C. After dissociation cells were kept at +4 °C at all times in MEGM-complete media. Two 1x PBS (Ambion, USA) washes were performed on the dissociated cells and cell viability was evaluated using trypan blue staining prior to scRNA-seq. All inDrops experiments were performed with cell viability exceeding 90%.

Overview of the MAGIC Algorithm

MAGIC begins with an n-by-m count matrix D, representing the observed transcript counts of m genes in n cells, and returns an imputed count matrix D_imputed. The expression of each individual cell, a row in D, defines a point in the high-dimensional measurement space representing the cell’s observed phenotype. The counts in the imputed data matrix D_imputed represent the likely expression vectors (phenotypes) for each individual cell, based on data diffusion between similar cells.

Key to the success of our graph-based method is a faithful neighborhood of similar cells, based on a good similarity metric. Given the sparsity of the data, finding the k-nearest neighbors in the raw data using a simple similarity metric is unlikely to be sufficient to find cells whose biology is most similar. Therefore, MAGIC builds its affinity matrix in four steps: (i) A data preprocessing step, which is PCA in the case of scRNA-seq. (ii) Converting distances to affinities using an adaptive Gaussian kernel, so that similarity between two cells decreases exponentially with their distance. (iii) Converting the affinity matrix A into a Markov transition matrix M, representing the probability distribution of transitioning from each cell to every other cell in the data in a single step. (iv) Data diffusion through exponentiation of M, to filter out similarity based on high frequencies that typically represent noise and increase the similarity based on strong trends in the data. Once the affinity matrix is constructed, the imputation step of MAGIC involves sharing information between cells in the resulting neighborhoods through matrix multiplication: D_imputed = M^t * D (Figure 1.vi).

Using PCA for data preprocessing

MAGIC can be generally applied to any type of high dimensional single cell data to remove noise and clarify structure in the data. However, before a cell-cell distance matrix is computed, each data-type typically requires specific pre-processing and normalization steps. Pre-processing is particularly important in the case of scRNA-seq to ensure that distances between cells reflect biology rather than experimental artifact. We perform two operations on the data which are typically applied to single-cell RNA-sequencing datasets (Haghverdi et al., 2016; Setty et al., 2016; Shekhar et al., 2016): 1) library size normalization on the cells, and 2) principal component analysis (PCA) on the genes.

ScRNA-seq data entails substantial cell-to-cell variation in library size (number of observed molecules), which is largely technical and arises from multiple enzymatic steps, such as lysis efficiency, mRNA capture efficiency and the efficiency of multiple amplification rounds (Grun et al., 2014). For example, the cell barcode associated with each cell can have a substantial effect on the PCR efficiency and subsequently the number of transcripts in that cell. Therefore, we normalize transcript abundances (library size), so that each cell will have an equal transcript count.

Given an n-by-m data matrix D, the normalized data matrix is defined as follows:
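A form consistent with the surrounding description, in which every cell ends up with the same total count, divides each entry by its cell's library size. The exact expression and the symbol D_norm are assumptions here; i indexes cells (rows of D) and j and k index genes (columns of D):

```latex
D_{\mathrm{norm}}(i,j) = \frac{D(i,j)}{\sum_{k=1}^{m} D(i,k)}
```

Multiplying every row by the same constant afterwards would preserve the equal-library-size property.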

This effectively eliminates cell size as a signal in the measurement for the purposes of constructing the affinity matrix and thus the resulting weighted neighborhood is not biased by cell size.

Second, we apply principal component analysis (PCA) to further increase the robustness and reliability of the constructed affinity matrix. While dropout renders single cell RNA-seq data extremely noisy, the modularity of gene expression provides redundancy in the gene dimensions, which can be exploited. Therefore, we perform PCA dimensionality reduction to retain ~70% of the variation in the data, which typically results in 20 to 100 robust dimensions for each cell.
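To make the ~70% criterion concrete, the sketch below picks the smallest number of leading components whose cumulative explained variance reaches a target fraction. It assumes the PCA eigenvalues (per-component variances) are already available; the function name and the toy eigenvalues are hypothetical.

```python
def components_for_variance(eigenvalues, target=0.70):
    """Smallest number of leading principal components whose cumulative
    explained variance reaches `target` (a fraction of the total)."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    cumulative = 0.0
    for count, value in enumerate(ordered, start=1):
        cumulative += value
        if cumulative >= target * total:
            return count
    return len(ordered)


# Toy eigenvalues, invented for illustration: the first two components
# explain 80% of the variance, so two components are retained.
toy_eigenvalues = [5.0, 3.0, 1.0, 0.5, 0.5]
n_pcs = components_for_variance(toy_eigenvalues)
```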

The cell-cell affinity matrix is computed from these PCA dimensions, but imputation is performed on the full data matrix. While MAGIC still gives reasonable results without PCA preprocessing, PCA gives the diffusion a better starting point, resulting in quicker and more robust computation. We also note that MAGIC is relatively robust to the number of principal components selected, within a reasonable range (Figure S3D).

Constructing MAGIC’s Markov Affinity Matrix

One of the most critical steps in MAGIC is computing the affinity matrix M. M defines the graph structure and cell neighborhoods; MAGIC can only succeed if the affinity matrix faithfully represents the geometry of the data. We compute a similarity matrix by applying a kernel function to the distance matrix using the following steps:

1) Computation of a cell-cell distance matrix Dist (Figure 1.ii).

2) Computation of the affinity matrix A based on Dist, via an adaptive Gaussian kernel (Figure 1.iii).

3) Symmetrization of A using an additive approach.

4) Row-stochastic Markov-normalization of A (so each row sums to 1) into Markov matrix M (Figure 1.iv).

After data processing (in a technology-dependent manner), MAGIC computes a cell-cell distance matrix Dist based on a cell-cell Euclidean distance. Distances are then converted into an affinity matrix A using a Gaussian kernel function that emphasizes close similarities between cells, as follows:
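A standard Gaussian kernel with width σ, written in the notation of this section, takes the form below. The scaling inside the exponent (for example, whether a factor of 2 appears) is an assumption:

```latex
A(i,j) = \exp\!\left( -\left( \frac{\mathrm{Dist}(i,j)}{\sigma} \right)^{2} \right)
```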

Using the Gaussian kernel, similarity between two cells decreases exponentially with the square of their distance. With such a rapidly decaying function, affinities at distances beyond the standard deviation σ rapidly drop off to zero and hence the choice of σ, the kernel width, is a key parameter. If σ is too small, the graph becomes disconnected, leading to noise and instability. If σ is too large, distinct and distant phenotypes will be collapsed and averaged together, losing resolution and structure in the data. However, cell phenotypic space is not uniform: a stem cell can be orders of magnitude less frequent than a mature cell type and transitional cell states are also rare. Therefore, a σ that would be appropriate for a mature cell type would be far too coarse to capture fine details of the differentiation in progenitor cell types.

Without proper care, denser phenotypes can dominate the imputation. Cells in dense areas have more neighbors and therefore exert more influence than cells with fewer neighbors. Moreover, dense phenotypes are further reinforced during diffusion, where dense phenotypes iteratively attract more and more cells towards them and dominate the data (Figure S1A,B). MAGIC uses an adaptive Gaussian kernel to equalize the effective number of neighbors for each cell, thereby diminishing the effect of differences in density. Instead of fixing a single value for the kernel width σ, we adapt this value for each cell, based on its local density. Specifically, to equalize the number of neighbors we set the value σ(i) for each cell i to the distance to its ka-th nearest neighbor:
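In symbols, writing neighbor(i, k_a) for the k_a-th nearest neighbor of cell i (a notation introduced here for illustration), the per-cell kernel width described above is:

```latex
\sigma(i) = \mathrm{Dist}\bigl(i,\ \mathrm{neighbor}(i, k_a)\bigr)
```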

Thus the kernel is wider in sparse areas and smaller in dense areas. To maximize our sensitivity to recover fine structure, we choose ka to be as small as possible, such that the graph remains connected. We note that MAGIC is relatively robust to selection of ka, within a reasonable range (Figure S3D).

Comparing non-adaptive to the adaptive kernel on the EMT data in Figure S1A, we see that the non-adaptive kernel coarsely captures only the single strongest trend in the data, whereas the adaptive kernel does not collapse the data, but rather imputes finer structures. Figure S1B shows this on synthetic data with 3 rotated sinusoidal arms. The adaptive kernel can impute the fine details of the geometry while the fixed bandwidth kernel averages the sinusoidal features into a line.

To improve computational efficiency and robustness, we ensure sparsity in the resulting affinity matrix A and allow each cell to have at most k neighbors. Since the standard deviation of the kernel bandwidth is set locally to the distance to the ka-th neighbor we set k = 3ka to ensure that the kNN graph covers the majority of the Gaussian kernel function. All additional affinities (which are already close to zero) are set to zero.

Another important factor in MAGIC’s success is the quality of the diffusion process that occurs when the affinity matrix is powered. A good process would smooth the data in a manner that follows the shape of the underlying manifold. It has been shown (Coifman and Lafon, 2006b) that to mimic a discretized diffusion that achieves these properties, the affinity matrix must be symmetric and positive semidefinite, with eigenvalues in the range of zero to one. Negative eigenvalues would flip sign at each powering, leading to instability. Eigenvalues greater than one would be amplified without bound by powering, making the result sensitive to outliers.

The adaptive kernel results in an asymmetric affinity matrix where A(i,j) ≠ A(j,i), which we need to symmetrize to achieve these desired properties for A. We take the additive approach to symmetrization, which averages the affinities, helps pull in outliers and denoises the data. We construct the symmetric affinity matrix as:
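One way to write the additive symmetrization is shown below; the symbol A_sym is introduced here for illustration. Adding the two affinities and averaging them differ only by a factor of 2, which the subsequent row normalization cancels:

```latex
A_{\mathrm{sym}}(i,j) = A(i,j) + A(j,i), \qquad \text{i.e.}\quad A_{\mathrm{sym}} = A + A^{\top}
```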

The final step is the row-stochastic normalization that renders the affinity matrix into a Markov transition matrix M. Each row represents a probability distribution, where M(i,j) is the probability of cell i transitioning to cell j. Each row must sum to 1, which we achieve simply by dividing each entry in A by the sum of row affinities.

We note that we want a cell’s own observed values to have the highest impact on the imputation of its own values; thus our transition matrix allows for self-loops and these are the most probable steps in the random walk. The distance between a cell and itself is zero, therefore its weight in the affinity matrix before normalization is 1 (regardless of σ), ensuring the measured values in each cell retain a high weight in its imputation.
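The construction described in this section can be sketched end to end in a few lines. This is a minimal, unoptimized illustration rather than the authors' implementation: it assumes all points are distinct, uses a dense matrix, assumes the Gaussian form exp(-(d/σ)^2), and omits the sparsification to k = 3ka neighbors. The function name and toy coordinates are hypothetical.

```python
import math


def markov_matrix(points, ka):
    """Row-stochastic transition matrix from an adaptive Gaussian kernel.

    points: list of coordinate tuples (e.g. cells in PCA space), all distinct
    ka:     index of the neighbor whose distance sets each cell's kernel width
    """
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    # sigma[i]: distance from cell i to its ka-th nearest neighbor
    # (index 0 of the sorted row is the cell itself, at distance 0).
    sigma = [sorted(row)[ka] for row in dist]
    # Asymmetric affinities: row i uses cell i's own kernel width.
    aff = [[math.exp(-((dist[i][j] / sigma[i]) ** 2)) for j in range(n)]
           for i in range(n)]
    # Additive symmetrization.
    sym = [[aff[i][j] + aff[j][i] for j in range(n)] for i in range(n)]
    # Row normalization so that each row is a probability distribution.
    return [[value / sum(row) for value in row] for row in sym]


# Toy one-dimensional "cells", invented for illustration; the last is an outlier.
points = [(0.0, 0.0), (1.0, 0.0), (2.5, 0.0), (10.0, 0.0)]
M = markov_matrix(points, ka=1)
```

In this toy example every row sums to 1 and the self-loop carries the largest weight in each row, consistent with the description above.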

An adaptive kernel was previously used to handle the lack of uniformity in biological data in (Haghverdi et al., 2016). However, the key differences between approaches involve time-scale of diffusion. The kernel in (Haghverdi et al., 2016) sums up walks of all length scales after removal of the first eigenvector. By contrast, we prescribe a particular time scale of diffusion, based on convergence so as not to over-smooth in the context of imputation.

Markov affinity based graph diffusion

Due to sources of technical noise, such as dropout, one cannot distinguish between similarity due to biological correspondence and similarity due to spurious chance. This is demonstrated using a synthetically generated Swiss roll (with Gaussian noise) presented in Figure 1. While most nearest-neighbor edges follow the spiral, there are many shortcut edges that cut across the spiral (Figure 1.ii), which result in the off-diagonal affinities in Figure 1.iii. Consider the following thought experiment that mimics scRNA-seq: starting with identical cells, if we randomly subsample a small fraction of the transcripts in each one, the expression observed across these cells can appear dissimilar. However, each pair of such cells is still likely to share many neighbors that overlap with both of them. Spurious edges, by contrast, reflect similarity in the raw data that is not supported by shared neighbors. Thus, exponentiation refines cell affinities, increasing the weight of similarity along axes that follow the data manifold. Following the exponentiation of M, phenotypically similar cells should have strongly weighted affinities, whereas spurious neighbors are down-weighted.

Raising M to the power t results in a matrix where each entry M^t(i,j) represents the probability that a random walk of length t starting at cell i will reach cell j; thus we call t the “diffusion time”. While the powered Markov affinity matrix increases the number of cell neighbors, unlike the effect of increasing k in knn-imputation, MAGIC does not bluntly smooth and average over increasingly distant cells. In MAGIC, even as t increases, reweighting also occurs: dense areas of the data result in more possible paths and thus weights are concentrated in these areas. Importantly, the closest neighbors retain the highest probability, for two reasons: (i) the probability of a path is the product of its steps, and hence longer paths are less likely; (ii) many paths linger in the same region, so points that are very close to each other are connected by many circular or back-and-forth paths, including self-loops.

Powering M has the effect of low-pass filtering the eigenvalues of the Markov transition matrix. Markov matrices have nicely structured eigenvalues, in the range [0, 1], with 1 being the highest eigenvalue and 0 the lowest possible eigenvalue. Much like PCA, the magnitude of the eigenvalue is an indication of its importance in explaining (non-linear) variability of the associated eigen-dimension. Thus when a Markov matrix is powered, the magnitude of every eigenvalue besides 1 decreases, which diminishes the importance of noise dimensions with near-zero explanatory power. In this process, the noise is filtered out, leaving the signal. Thus, as t increases, similarity based on high frequency trends (which often correspond to technical noise) decreases and the affinity matrix represents similarity along lower frequency trends that follow data density. As a result, after the powering of M, phenotypically similar cells should have a strongly weighted entry, whereas spurious neighbors are down-weighted. In our toy example, there are no off-diagonal entries in Figure 1.v.
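The low-pass effect of powering can be checked numerically: the eigenvalues of M^t are the eigenvalues of M raised to the power t, so every eigenvalue below 1 shrinks toward zero while the eigenvalue 1 is unchanged. The sketch below uses a fixed-bandwidth Gaussian kernel on random points purely for illustration (an assumption; it is not the adaptive kernel used by MAGIC), because that kernel is symmetric and positive semidefinite, so the spectrum of M lies in [0, 1].

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 2))                 # hypothetical toy data

# Fixed-bandwidth Gaussian kernel: symmetric and positive semidefinite.
dist2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
A = np.exp(-dist2)
M = A / A.sum(axis=1, keepdims=True)         # row-stochastic Markov matrix

t = 4                                        # diffusion time
Mt = np.linalg.matrix_power(M, t)

# Eigenvalues sorted from largest to smallest. They are real here because
# M is similar to a symmetric matrix; .real drops round-off imaginary parts.
eig_M = np.sort(np.linalg.eigvals(M).real)[::-1]
eig_Mt = np.sort(np.linalg.eigvals(Mt).real)[::-1]
```

Comparing `eig_Mt` with `eig_M ** t` shows the filtering directly: the leading eigenvalue stays at 1 and the small ones are driven toward zero.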

Diffusion time for Markov Affinity Matrix

A key parameter in MAGIC is the amount of diffusion, that is, the power t to which the Markov affinity matrix is raised before the imputation step D_imputed = M^t * D. We need a method to determine the optimal value of t for a given dataset: one that removes noise and effectively imputes missing values, without over-smoothing the data. We assume that the data lie on a lower dimensional manifold, which is obscured by dropout and additional sources of noise in the data. The true manifold structure of the data is captured by the top eigenvectors of M, whereas the rest of the eigenvectors likely represent noise. The eigenvalues, which are in the range [0, 1], are gradually reduced by exponentiation.

We divide the possible diffusion times into two regimes, an imputation regime and a smoothing regime. The first few steps of diffusion, which we call the imputation regime, diminish the noise dimensions, bringing their small eigenvalues toward zero and removing most of the noise in the data, including dropout. As t increases, cells learn missing values from their neighbors and we rapidly capture proper relations between cells that are biologically very similar and were only separated by collection artifacts. Thus, in the imputation regime, the imputed matrix changes rapidly from iteration to iteration.

In the smoothing regime, t is sufficiently large to have recovered the manifold with most of the noise removed. Once diffusion creates a common support for cells, diffusing further would smooth out lower frequency trends in the data that likely represent real biology. Therefore, optimal tuning of t relies on quantifying the point where noise removal turns into signal removal. Since noise is typically of a different frequency than the signal itself (i.e., high- versus low-frequency respectively), we initially expect to see a rapid change in the data as high-frequency information is being removed. Then, slower change, or convergence, ensues. We therefore expect a regime change in terms of the convergence, or rate of data change, as a function of t. To quantify the rate of change, we use the coefficient of determination (Rsq) between the imputed data at time t and time t-1, and choose a point after this value stabilizes. So that our metric is not dominated by a few highly expressed genes, we normalize by dividing each gene by its sum. We then compute, for each t:

Rsq(data_t, data_(t-1)) = 1 - SSE / SST

where SSE is the sum of squared error and SST is the sum of squared total. Since Rsq is a normalized measure, between 0 and 1, we reason that the decay has approximately converged once 1 - Rsq has gone below 0.05, i.e., less than 5% change from the previous t. To make this robust, we select the second t after 1 - Rsq has gone below 0.05 as the optimal t. We note that the imputation is robust to a range of values around the optimal t (Figure S3D), further supporting its selection.

In Figure S1C we plot 1 - Rsq(data_t, data_(t-1)) versus t to inspect how the rate of change decreases and converges. We show that there are two regimes: an imputation regime and, following convergence, a smoothing regime.
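The selection rule for t can be sketched as follows. The helper names `one_minus_rsq` and `choose_t`, the use of the earlier time point as the reference for SST, and the reading of “the second t” as the step immediately after the first crossing of the 0.05 threshold are all assumptions made for illustration; the toy Markov matrix and count matrix are likewise hypothetical.

```python
import numpy as np

def one_minus_rsq(prev, curr):
    """1 - Rsq = SSE / SST between two successive imputed matrices."""
    sse = np.sum((curr - prev) ** 2)           # sum of squared error
    sst = np.sum((prev - prev.mean()) ** 2)    # sum of squared total
    return sse / sst

def choose_t(M, D, t_max=12, threshold=0.05):
    """Return (t_opt, changes); t_opt is None if the threshold is never met."""
    def per_gene(Z):
        # Divide each gene (column) by its sum so that highly expressed
        # genes do not dominate the metric.
        return Z / Z.sum(axis=0, keepdims=True)

    changes = []                               # changes[t - 1] compares t to t - 1
    prev = D
    for _ in range(t_max):
        curr = M @ prev                        # one more diffusion step
        changes.append(one_minus_rsq(per_gene(prev), per_gene(curr)))
        prev = curr
    below = [i + 1 for i, c in enumerate(changes) if c < threshold]
    # Assumed reading of "the second t": one step after the first crossing.
    t_opt = below[0] + 1 if below else None
    return t_opt, changes

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 3))
dist2 = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=2)
A = np.exp(-dist2)
M = A / A.sum(axis=1, keepdims=True)
D = rng.poisson(2.0, size=(60, 10)).astype(float) + 1.0   # toy positive counts
t_opt, changes = choose_t(M, D)
```

Plotting `changes` against t reproduces the kind of curve described for Figure S1C, with the chosen t marked one step after the curve first drops below the threshold.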

We created a ground truth dataset to test our approach for selecting t. We generated 2000 points on a random tree structure with 4 branches, built by a random walk process in which points accumulate adjacent to existing points, and rotated it in 1000 dimensions (Figure S1Di). We then simulated dropout on this tree by subtracting random values sampled from an exponential distribution to achieve 0%, 2%, 39% and 79% zeros respectively. For each of these noise levels, we ran MAGIC on the dropped-out data for increasing t values (t=1–8) and computed the convergence, as described in the previous section (Figure S1Dii). As expected, we find that increasing levels of noise cause convergence to occur at higher values of t. The optimal t is selected at t=0, t=3, t=4 and t=6 for the increasing noise levels respectively. To determine whether these values correspond to the actual optimal t, we quantify the Rsq between the imputed data and the original data before dropout. We reason that the Rsq should be relatively low at low t, then increase and peak at the optimal t, after which it decreases for larger t. The closest match between the ground truth and imputed data indeed corresponds very well with the selected optimal t for all tested noise levels (Figure S1Diii), and the imputed data visually resemble the original tree (Figure S1Div). Moreover, the Rsq remains fairly stable as we increase t beyond the optimal value, so the quality of imputation remains good even at larger t (Figure S1Diii,iv).
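The dropout simulation described above (subtracting exponentially distributed values until a target fraction of zeros is reached) can be sketched as below. How the scale of the exponential noise was tuned is not stated in the text, so the bisection search, the function name `simulate_dropout` and the toy gamma-distributed matrix are assumptions of this example.

```python
import numpy as np

def simulate_dropout(D, target_zero_frac, rng, n_iter=60):
    """Subtract scaled exponential noise and clip at zero (sketch)."""
    # One fixed draw of unit-scale exponential noise; only its scale is tuned.
    E = rng.exponential(scale=1.0, size=D.shape)

    def zero_frac(s):
        # Fraction of entries that would be clipped to zero at scale s.
        return np.mean(D - s * E <= 0)

    # Grow the upper bound until it yields at least the target fraction.
    lo, hi = 0.0, 1.0
    while zero_frac(hi) < target_zero_frac:
        hi *= 2.0
    # Bisection: zero_frac is non-decreasing in s for a fixed draw E.
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if zero_frac(mid) < target_zero_frac:
            lo = mid
        else:
            hi = mid
    return np.clip(D - hi * E, 0.0, None)

rng = np.random.default_rng(0)
D = rng.gamma(2.0, 1.0, size=(200, 50))      # hypothetical ground-truth matrix
dropped = simulate_dropout(D, 0.8, rng)
zero_fraction = np.mean(dropped == 0)
```

Because values are only ever reduced, every entry of `dropped` is bounded above by the corresponding ground-truth value, which keeps the comparison between original and imputed data meaningful.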

Imputation after graph diffusion

Once M^t is computed, we have a vector of weighted neighbors associated with each cell in our data. We can now use this robust neighborhood operator to impute and correct data using the library-size normalized count matrix (before PCA). Thus, while we use PCA to gain more robustness for the computation of M, the imputation D_imputed = M^t * D is performed at the resolution of individual genes.

The imputation step of MAGIC involves information transfer from cells in the cell neighborhoods, achieved by right-multiplying M^t by the original data matrix: D_imputed = M^t * D (Figure 1.vi). When a matrix is applied to the right of the Markov affinity matrix, the latter is considered a backward diffusion operator and has the effect of replacing each entry D(i,j), that is, gene j in cell i, with the weighted average of the values of the same gene in other cells (weighted by M^t).

This process effectively restores the missing data to the underlying manifold, which captures the majority of the data.

In the final step of MAGIC, we re-scale the count matrix. The MAGIC process resembles heat diffusion in the graph, which has the effect of spreading out molecules, but keeping the total sum constant. This means that the average value of each non-zero matrix entry decreases after imputation. To match the observed expression levels (per cell), we rescale the values so that the max value for each gene equals the 99th percentile of the original data. Thus cells with high expression of a gene are brought up to similar levels as the original data and all other values are proportionally scaled up with them.
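The imputation and re-scaling steps can be sketched together. The function name `impute_and_rescale` and the small hand-written matrices are hypothetical, and the re-scaling is implemented per gene (column) as one reading of the description above.

```python
import numpy as np

def impute_and_rescale(M, D, t):
    """Compute M^t * D, then rescale each gene to the original 99th percentile."""
    Mt = np.linalg.matrix_power(M, t)
    imputed = Mt @ D                          # weighted average over neighbors
    target = np.percentile(D, 99, axis=0)     # per-gene 99th percentile of D
    current_max = imputed.max(axis=0)         # per-gene maximum after diffusion
    # Scale factor per gene; genes whose imputed maximum is 0 are left as-is.
    scale = np.divide(target, current_max,
                      out=np.ones_like(target), where=current_max > 0)
    return imputed * scale

# Toy example: 3 cells, 2 genes, and a hand-written row-stochastic matrix.
M = np.array([[0.6, 0.3, 0.1],
              [0.3, 0.4, 0.3],
              [0.1, 0.3, 0.6]])
D = np.array([[4.0, 1.0],
              [2.0, 3.0],
              [1.0, 8.0]])
result = impute_and_rescale(M, D, t=2)
```

Multiplying every value of a gene by the same factor preserves the relative ordering of cells for that gene while restoring the expression scale of the original data.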

MAGIC pulls outliers into the data manifold

MAGIC is able to pull outlier data into the manifold due to the properties of diffusion with an adaptive kernel. As the Markov affinity matrix M is an asymmetric matrix, the walking probabilities from a particular cell, i.e., M(i, x) are not the same as the walking probabilities to the cell M(x,i). The gene values for a particular cell i, are the weighted averages of other cells based on the i-th row M(i,:). This row reflects the probability that if you start at cell i, you end up at a cell x in t steps. As the matrix is exponentiated, if cell x is an outlier cell, then there will not be many paths from i to x, and thus this entry in the Markov matrix M(i,x) gets down-weighted. Therefore cell i’s values D(i,j) will not veer towards the values of the outlier cell x. On the other hand cell x’s corrected values come from the x-th row M(x,:), and since cell x is an outlier its nearest neighbors will be on the manifold (and may include cell i). Thus the probability of x walking to the manifold is very high and thus cell x’s values will become closer to its manifold neighbors and cell x gets brought into the manifold as t increases. Due to use of the adaptive bandwidth for the Gaussian kernel, cell x is guaranteed to have k nearest-neighbors (based on our setting the kernel sigma to the distance to the kth neighbor) and those neighbors are likely to be on the manifold to aid the pulling in of outliers. See Figures 2, 4, S1 for examples of denoised manifolds after MAGIC.
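The asymmetry argument can be illustrated on a toy dataset with one outlier. The data (40 cells on the line y = 0 plus one cell at y = 5), the dense all-pairs kernel, and the values k=5 and t=3 are assumptions chosen for the example rather than settings taken from the paper.

```python
import numpy as np

# Manifold: 40 cells on the line y = 0. Outlier: a single cell at (5, 5).
xs = np.linspace(0.0, 10.0, 40)
D = np.column_stack([xs, np.zeros_like(xs)])
D = np.vstack([D, [5.0, 5.0]])
out = len(D) - 1                              # row index of the outlier

# Adaptive Gaussian kernel: sigma is the distance to the k-th nearest neighbor.
k = 5
dist = np.linalg.norm(D[:, None, :] - D[None, :, :], axis=2)
sigma = np.sort(dist, axis=1)[:, k]
A = np.exp(-(dist / sigma[:, None]) ** 2)
A = A + A.T                                   # additive symmetrization
M = A / A.sum(axis=1, keepdims=True)          # row-normalized, hence asymmetric

Mt = np.linalg.matrix_power(M, 3)
imputed = Mt @ D

# Movement along y: the outlier's shift toward the manifold versus the
# largest shift of any manifold cell toward the outlier.
outlier_shift = D[out, 1] - imputed[out, 1]
max_manifold_shift = imputed[:out, 1].max()
```

In this sketch the outlier's wide bandwidth gives it substantial affinity to many manifold cells, so its row of M^t places most of its weight on the manifold, whereas each manifold cell places only a small weight on the outlier. One would therefore expect `outlier_shift` to be much larger than `max_manifold_shift`.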

Evaluation of the Synthetic Worm Dataset

To quantitatively evaluate the accuracy of MAGIC’s imputation, we created a validation dataset that was based on bulk transcriptomic data from 206 developmentally synchronized C. elegans young adults, measured at regular time intervals during a 12-hour developmental time-course using microarrays (Francesconi and Lehner, 2014). Due to the noise prevalent in early microarray experiments, and similar to the analysis performed in the original publication of the data, we select only genes that load to the first two PCA components of the data. This results in a data matrix with 206 worms and 9861 genes.

We down-sampled this data to emulate the sparsity found in scRNA-seq data (Figure S2B-C). The log-scaled expression levels were exponentiated, and then each entry was downsampled using an exponential distribution such that the result had 80% and 90% of the values set to 0. Then the data was log-scaled and normalized based on z-score. We applied MAGIC (with parameters npca=20, ka=3, t=5) to this synthetically “dropped out” data and then compared between the original and imputed data. We note that this dataset is particularly challenging as it only contains 206 samples, whereas MAGIC is primarily intended for datasets consisting of thousands of samples, as is the case for most single cell datasets.

Based on the expression matrix, the imputed data largely matches the original data (Figure S2B). To zoom into finer structure and illustrate MAGIC’s ability to recover key trends in the data, we select 3 genes (C27A7.6, ERD2 and C53D5.2) based on their non-monotonic developmental time trends and compare the original and imputed shapes for each of these trends. For each gene, we find close concordance in the developmental trend between the original and imputed data (Figure S2B).

We quantitatively evaluate MAGIC’s accuracy by directly comparing the original and imputed values. At dropout of 90%, the R^2 increases from 7% to 43%, and for 80% dropout, the R^2 increases from 13% to 53%. The agreement between the original and imputed data is even higher in the case of gene-gene correlations than in the univariate case. For example, the agreement in gene-gene correlations between the original data and data with 90% of the values dropped out is 0.12. MAGIC recovers most of the gene-gene correlations, so that after imputation we have an R^2 of 0.65. With 80% of the values at zero, MAGIC improves the agreement from 0.35 to 0.78.

Validation Using a Synthetic EMT Dataset

We used the MAGIC-imputed count matrix of the EMT data as the “ground truth” of a synthetically created dataset and then re-created synthetic dropout. Starting with data from 7523 HMLE cells 8–10 days after TGFB treatment, we first imputed the data with MAGIC (npca=20, ka=10, t=6) and then induced dropout by down-sampling using an exponential distribution such that 0%, 60%, 80% and 90% of the values were set to 0. We then re-imputed the data using MAGIC. We show that MAGIC can also capture multivariate relations effectively: the agreement between the original and imputed data is even higher in the case of gene-gene correlations than in the univariate case (Figure S3Aii).

With 90% zeros, the R^2 between the original data and the down-sampled data is brought down to 0.04 and MAGIC corrects the data so that the R^2 rises back to 0.7 (Figure S3Ai). We see that with 80% zeros, we have an R^2 of 0.09 after dropout, which is corrected to 0.81 after imputation. An important feature of MAGIC is that it is particularly good at capturing the “shape” of the data (Figure S3B). We note that the imputed data is less noisy and more accurately adheres to a low dimensional manifold. However, MAGIC may additionally remove some stochastic biological variation, as it removes unstructured, high frequency variation.

Robustness of MAGIC to Subsampling