Abstract

Purpose:

To determine if short-term computerized speech-in-noise training can produce significant improvements in speech-in-noise perception by cochlear implant (CI) recipients on standardized audiologic testing measures.

Method:

Five adult postlingually deafened CI recipients participated in 4 speech-in-noise training sessions using the Seeing and Hearing Speech program (Sensimetrics; Malden, MA). Each participant completed lessons concentrating on consonant and vowel recognition at word, phrase, and sentence levels. Speech-in-noise abilities were assessed using the QuickSIN (Killion, Niquette, Gudmundsen, Revit, & Banerjee, 2004) and the Hearing in Noise Test (HINT; Nilsson, Soli, & Sullivan, 1994).

Results:

All listeners significantly improved key word identification on the HINT after training, albeit only at the most favorable signal-to-noise ratio (SNR). Listeners also showed a significant reduction in the degree of SNR loss on the QuickSIN after training.

Conclusion:

Short-term speech-in-noise training may improve speech-in-noise perception in postlingually deafened adult CI recipients.

Keywords: cochlear implant, postlingually deafened, training

For the past decade, cochlear implants (CIs) have been considered the standard method of rehabilitation for postlingually deafened adults with severe to profound hearing loss (e.g., Kos, Deriaz, Guyot, & Pelizzone, 2009). Unilateral CI recipients consistently show improved speech and sound perception, improved likelihood of developing or regaining potentially normal language abilities, improved educational development, and improved tinnitus suppression (Bichey & Miyamoto, 2008). New advancements in CIs now go as far as assisting individuals on the telephone, improving speech recognition in adverse conditions, and providing better music interpretation (e.g., Fu & Galvin, 2008). New sound strategies that maximize the signal-to-noise ratio (SNR) of individual channels have readily improved speech intelligibility in adverse listening conditions (Hu & Loizou, 2008). Recent developments in surgical techniques have allowed surgeons to sometimes preserve residual hearing, and the combination of electric and acoustic stimuli in the ear may lead to improved speech perception scores in both quiet and noise (e.g., Gantz, Turner, & Gfeller, 2006).

Despite the many positive contributions and notable advancements made, specific drawbacks of cochlear implantation persist. Current unilateral CI recipients have difficulties associated with unilateral deafness, including localization issues and difficulty interpreting speech in background noise (e.g., Hochberg, Boothroyd, Weiss, & Hellman, 1992; Nava, Bottari, Bonfioli, Beltrame, & Pavani, 2009; Nelson & Jin, 2004). Some individuals have compensated with an increase in bilateral abilities when wearing a hearing aid in the opposite ear (Potts, Skinner, Litovsky, Strube, & Kuk, 2009). However, for many postlingually deafened adult CI recipients, hearing in the nonimplanted ear also reaches unaidable levels, making the option of a hearing aid untenable (Bichey & Miyamoto, 2008). Bilateral implantation has addressed some of the limitations of unilateral implantation, including speech recognition and sound localization (Tyler, Dunn, Witt, & Noble, 2007). Unfortunately, a second implant remains financially untenable for many candidates (Summerfield et al., 2006), making it unlikely that they will receive the benefits of a second implant.

Fortunately, more efforts are being made to determine ways to eliminate common difficulties among adult CI recipients. The rationale behind many of these approaches is to engage the central auditory system to compensate for the impoverished cues of the CI device, earlier work having shown that central auditory structures are involved when perceiving speech in challenging listening situations (e.g., Lunner, 2003; Lunner & Sundewall-Thorén, 2007; Parbery-Clark, Marmel, Bair, & Kraus, 2011; Perrachione & Wong, 2007; Warrier et al., 2009; Wong, Ettlinger, Sheppard, Gunasekera, & Dhar, 2010) and that training can be effective for improving speech perception abilities (Logan, Lively, & Pisoni, 1991; Peach & Wong, 2004; Perrachione, Lee, Ha, & Wong, 2011). A number of studies have evaluated the effectiveness of short-term—relative to the duration of therapy—computerized training programs on the speech recognition skills of CI recipients. The results of these evaluations are summarized here; as shall be seen, the results are mixed.

The goal of one of the early studies was to determine if short-term computerized training improves speech perception in quiet (Fu, Galvin, Wang, & Nogaki, 2005). Ten adult CI recipients trained 1 hr per day, 5 days per week, for at least 4 weeks using the computer-assisted speech training program developed at the House Ear Institute. The authors administered tests of speech perception before training began and then after every 2 weeks of training. Significant improvement was seen on vowel perception, consonant perception, and sentence recognition.

In a study aimed at improving speech perception in quiet, 11 adult recipients who had used their CIs for more than 3 years completed computerized auditory training for 1 hr per day, 5 days per week, for a period of 3 weeks (Stacey et al., 2010). The authors found a significant improvement on the consonant discrimination test but found no significant improvements on the vowel or sentence recognition tests. Self-reported benefits were also nonsignificant.

Both of the aforementioned studies concentrated on speech-in-quiet abilities; however, speech perception in noise is consistently more difficult than speech in quiet for CI recipients (e.g., Nelson & Jin, 2004). Participants in the study by Stacey et al. (2010) had at least 3 years’ experience using the implants; therefore, they may have reached maximum attainment in quiet before training began, resulting in a lack of training benefit.

Authors of three different studies have attempted to directly address the issue of speech-in-noise perception by CI recipients through training. Fu and Galvin (2008) trained two participants, one on phoneme-in-word identification and one on key-word-in-sentence identification; both participants heard stimuli in the presence of multi-talker babble and were given four response options per trial. After 4 weeks of training, the phoneme-trained participant improved on vowel, consonant, and sentence recognition by about 8 percentage points; the key-word-trained participant improved on vowel recognition by 6 percentage points, on consonant recognition by 12 percentage points, and on sentence recognition by 17 percentage points.

Results of a second 4-week training study also showed improvements on speech-in-noise perception. Oba, Fu, and Galvin (2011) trained 10 CI recipients to identify digits in multitalker babble. All participants showed significant improvement on the training task from pre- to post-test, and they maintained these gains at 1 month post-training. Performance was more variable across the other measures, but overall, the authors found a mean increase in performance on digit recognition in speech-shaped noise at both moderately and extremely difficult SNRs, Hearing in Noise Test (HINT; Nilsson, Soli, & Sullivan, 1994) recognition in multitalker babble, Institute of Electrical and Electronics Engineers (IEEE) sentence recognition in speech-shaped noise, and IEEE sentence recognition in multitalker babble for moderately difficult SNRs. Participants maintained these gains at 1 month post-training. The authors found no improvement in HINT recognition in speech-shaped noise or in IEEE sentence recognition in multitalker babble at extremely difficult SNRs.

A third study involved the use of the commercially available Speech Perception Assessment and Training System (SPATS; Miller, Watson, Kistler, Wightman, & Preminger, 2008). Sixteen CI recipients trained for approximately 24 hr over the course of 6 weeks (two 2-hr sessions per week). After training, listeners showed a mean gain on the CNC test in quiet of 6 percentage points, on the HINT in quiet of 13 percentage points, and on the HINT at +10 dB SNR of 10 percentage points. Conversely, untrained controls showed a decrease in performance on the CNC test in quiet of 4 percentage points and on the HINT in quiet of 7 percentage points, and an increase on the HINT at +10 dB SNR of 1 percentage point. Thus, comparing the trained and untrained listeners shows a significant improvement following training, particularly on the HINT in quiet test.

These three studies demonstrate that training CI recipients can improve their speech-in-noise perception. However, all three training studies placed a heavy burden on the trainee, requiring either daily training or long training sessions for 4 to 6 weeks. It is worth investigating whether short-term training can result in benefits to CI recipients and, if so, how those benefits compare to those found in more intensive training studies.

The purpose of our study was to determine whether short-term speech-in-noise training for postlingually deafened adult CI recipients improves behavioral speech-in-noise abilities. We used a two-baseline design, increasing the likelihood that any post-test effects were the results of training and not of multiple test exposures. We also tested whether such short-term improvements would be detectable by widely used and commercially available speech-in-noise tests. In addition to improving certain listening conditions for adult CI recipients, we hoped to gain a better overall understanding of successful training methods for CI recipients and of how we can accurately measure their improvements using popular clinical testing measures.

Method

Participants

Five postlingually deafened adult CI recipients (three women and two men), ages 50–85 years (M = 71.4 years), participated in the study in exchange for payment. We recruited all patients from the Northwestern University Department of Otolaryngology and Audiology in Chicago or from the Illinois Cochlear Implant Club in Morton Grove, Illinois. Demographic and implant information can be found in Table 1. Participants had warble-tone aided sound-field thresholds that were lower than 30 dB HL at frequencies ranging from 250 Hz to 6000 Hz. All participants of this study had at least 1 year of experience with their device prior to the experiment (range = 2−14 years). Participants continued to report difficulty in noisy environments despite years of experience.

Table 1.

Demographic and implant information for the trained listeners.

| Listener | Gender | Age at HL identification, years (M = 35.80, SD = 12.52) | Age at CI, years (M = 70.25, SD = 6.34) | Age at test, years (M = 71.40, SD = 11.89) | Highest education level | Implant type | Implant side | Processor | Internal device | Secondary device |
|---|---|---|---|---|---|---|---|---|---|---|
| S1 | Female | 31 | 47;49 | 51 | College | Advanced Bionics | Both | Harmony BTEs | 90K | Dual implant |
| S2 | Female | 50 | 70 | 77 | College | Advanced Bionics | Right | Harmony BTE | C2 | |
| S3 | Female | 18 | 68 | 71 | High school | Cochlear Corporation | Left | Freedom BTE | Freedom | HA Phonak BTE |
| S4 | Male | 45 | 64 | 78 | Graduate school | Advanced Bionics | Right | Platinum BTE | C1 | |
| S5 | Male | 35 | 79 | 80 | High school | Advanced Bionics | Right | Harmony body worn | 90K | HA Phonak BTE |

Note. HL = hearing loss; CI = cochlear implant; BTE = behind-the-ear; HA = hearing aid

Materials

Each participant completed the Speech, Spatial, and Qualities of Hearing Scale (SSQ; Gatehouse & Noble, 2004) before any testing measures or training began. We asked participants to answer all questions before their first day of baseline testing and again when the speech-in-noise training was complete.

We assessed speech perception in noise via recorded versions of the QuickSIN (Killion, Niquette, Gudmundsen, Revit, & Banerjee, 2004) and HINT (Nilsson et al., 1994). We presented both tests in sound-attenuated booths over 18-in. Vizio speakers. The listener was seated approximately 30 in. from the speaker at 0° azimuth. We presented the QuickSIN test at 70 dB HL and the HINT at 60 dB HL.

When tested on the QuickSIN, listeners heard three sets of lists, and we averaged their results together to estimate SNR loss (Killion et al., 2004). The administration of the HINT deviated from published guidelines (e.g., Nilsson et al., 1994; Wilson, McArdle, & Smith, 2007). Instead of modulating the signal level as a function of listeners’ response accuracy, we instead presented half of one list (five sentences) at each of seven constant SNR levels: +15 dB, +10 dB, +5 dB, 0 dB, −5 dB, −10 dB, and −15 dB.
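The QuickSIN averaging step can be sketched as follows. This is a minimal illustration that assumes the conventional QuickSIN scoring rule (SNR loss for one list equals 25.5 minus the number of key words repeated correctly, out of 30), which is not spelled out in this article; the function names are hypothetical.

```python
def quicksin_snr_loss(key_words_correct):
    """SNR loss (dB) for one QuickSIN list.

    Assumes the conventional rule: 25.5 minus key words correct (0-30).
    """
    if not 0 <= key_words_correct <= 30:
        raise ValueError("a QuickSIN list has 30 key words")
    return 25.5 - key_words_correct


def mean_snr_loss(list_totals):
    """Average the per-list SNR losses across the lists given in a session."""
    losses = [quicksin_snr_loss(total) for total in list_totals]
    return sum(losses) / len(losses)
```

Calling `mean_snr_loss` with the three per-list totals from one session gives the single SNR-loss estimate for that session.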

To minimize the risk of practice effects, different lists were presented at each testing session. Lists were equivalent (McArdle & Wilson, 2006; Wilson et al., 2007), making it unnecessary to administer the same list at each time point. QuickSIN lists 1, 4, and 7 were presented at the first baseline; lists 10, 13, and 16 were presented at the second baseline; lists 11, 14, and 17 were presented immediately after training; and lists 12, 15, and 18 were presented after the maintenance phase. HINT lists 1 through 4 were presented at the first baseline; lists 5 through 8 were presented at the second baseline; lists 9 through 12 were presented immediately after training; and lists 13 through 16 were presented after the maintenance phase. Half of each list was given at a particular SNR, and the second half of the list was given at the SNR level that was slightly less favorable. We repeated this procedure until we had given all SNR levels (e.g., the first half of list 1 was given in quiet and the second half at +15 dB SNR; the first half of list 2 was given at +10 dB SNR and the second half at +5 dB SNR).
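The assignment of HINT half-lists to listening conditions can be laid out programmatically. The sketch below is illustrative only: the text gives the pattern explicitly for the first two lists of a session, and the extension to the third and fourth lists (0 and −5 dB, then −10 and −15 dB) is inferred from it. The session labels and function name are hypothetical.

```python
# Conditions in presentation order, most to least favorable (SNR in dB).
CONDITIONS = ["Quiet", "+15", "+10", "+5", "0", "-5", "-10", "-15"]

# First HINT list used at each testing session, per the text.
SESSION_FIRST_LIST = {
    "baseline 1": 1,
    "baseline 2": 5,
    "post-training": 9,
    "maintenance": 13,
}


def hint_schedule(session):
    """Return (list number, list half, condition) for one session."""
    first = SESSION_FIRST_LIST[session]
    schedule = []
    for i, condition in enumerate(CONDITIONS):
        list_number = first + i // 2          # two conditions per list
        half = "first" if i % 2 == 0 else "second"
        schedule.append((list_number, half, condition))
    return schedule
```

Under this reading, each session uses four lists (eight half-lists of five sentences), one half-list per condition.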

Listeners completed the training within the Seeing and Hearing Speech program (Sensimetrics; Malden, MA) installed on a desktop computer running Windows. Listeners sat approximately 30 in. from the speakers at 0° azimuth, and they were allowed to choose a preferred loudness level for training stimuli at outset that was maintained throughout training.

The clinician set the program to use only auditory cues for training. The clinician always presented stimuli in the presence of multitalker babble (called the “party noise” parameter and set by the clinician). A visual noise scale, ranging from +3 to −3 (−3 being the least amount of noise), was present at the beginning of each exercise. This scale provided the listener with feedback as to how much noise was present. The center of the scale, 0, corresponded to 0 dB SNR. Each step away from this center point was 3.5 dB.
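The relation between the program's noise scale and SNR follows directly from the description above: the center of the scale is 0 dB SNR, each step is 3.5 dB, and higher scale values mean more noise. A minimal sketch, with a hypothetical function name:

```python
STEP_DB = 3.5  # size of one step on the noise scale, in dB


def noise_level_to_snr(level):
    """Convert a noise-scale setting (-3..+3) to SNR in dB.

    Higher settings mean more noise, so the SNR falls as the level rises.
    """
    if level not in range(-3, 4):
        raise ValueError("noise level must be an integer from -3 to +3")
    return STEP_DB * -level
```

For example, the starting level of −3 corresponds to 10.5 dB SNR and the most difficult level of +3 to −10.5 dB SNR.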

Seeing and Hearing Speech is a collection of lessons designed for the potential improvement of speech perception by means of auditory and visual cues. Each participant completed a series of lessons devoted to specific aspects of speech. Participants started by learning to identify vowels, then progressed to consonants. Vowels and consonants were subdivided into phonemes with similar visual features, such as rounded-lip vowels or bilabial consonants. Within each phoneme grouping, to-be-learned phonemes were first presented in the context of words, then in phrases, and then in whole sentences; each context constituted a lesson, and there were 25 training trials in a lesson. On each trial, listeners made forced-choice identifications of the target sound; the number of response options ranged from 8 to 10 and varied from trial to trial. Participants had to reach 70% accuracy on a given lesson before progressing to the next lesson (i.e., before progressing from words to phrases or from phrases to sentences). Once listeners completed all lessons within a particular phoneme grouping (e.g., rounded-lip vowels), they progressed to the next phoneme grouping (e.g., spread-lip vowels), again starting at the word level. All vowel lessons had to be completed before moving to the consonant lessons.

Procedure

We asked participants to complete the SSQ prior to coming in for testing. We obtained baseline QuickSIN and HINT scores at two time points spaced 1 day apart, maximizing the possibility for test-retest effects. The assessment of two baselines from each listener allowed the listener to serve as his or her own control and limited the possibility that any effects seen following training were the result of the multiple exposures to the testing materials. At both time points, listeners first performed the QuickSIN and then performed the HINT.

Training started immediately following the second baseline assessment. Training consisted of four 1-hr sessions spread over 4 days, and it took place at the Northwestern University Audiology Clinic. The first training session started with a noise level of −3 (i.e., SNR of 10.5 dB). Once the listener completed all 25 trials within a lesson—defined as one presentation context (i.e., word, phrase, or sentence)—for one phoneme subdivision (e.g., spread-lip vowels or labial consonants), accuracy was checked by the clinician. Identification accuracy of 70% or greater was sufficient to progress to the next lesson.

If accuracy was less than 70% on the listener’s first attempt on this lesson, the settings were left unchanged, and the listener was given a second chance. If accuracy was again less than 70%, the noise level was decreased by one step (3.5 dB), and the lesson was repeated. This process continued until the listener’s accuracy on the lesson exceeded 70% or until the listener failed to reach 70% accuracy at a noise level of −3 (10.5 dB SNR). In that event, noise was removed, and the lesson was repeated (because training started at noise level −3 [10.5 dB SNR], noise would be removed after the first two consecutive failures to reach 70% accuracy). Once the lesson was completed with greater than 70% accuracy in the absence of noise, noise was reintroduced at the −3 level (10.5 dB SNR). The listener then had to complete the lesson with 70% accuracy at the −3 noise level (10.5 dB SNR) to progress to the next lesson.

After listeners had successfully completed three lessons (e.g., completed training on spread-lip vowels in word, phrasal, and sentence contexts) at 88% accuracy, the noise level was increased by one level (3.5 dB). Successful completion of three more lessons (e.g., bilabial consonants in word, phrasal, and sentence contexts) at 88% accuracy prompted clinicians to increase the noise level by another level. This metric continued until a noise level of +3 (−10.5 dB SNR) was reached or until the listener’s accuracy dipped below 70%. If accuracy dipped, the clinician used the metric described previously until accuracy exceeded 70%. If a noise level of +3 (−10.5 dB SNR) was reached, this level was maintained for as long as accuracy remained above 70%. The number of lessons each participant completed across all training sessions depended on his or her performance; participants who were more accurate overall completed more lessons, whereas participants who showed lower accuracy levels on particular lessons made less progress. The number of lessons individuals successfully completed did not predict ultimate training success (p > .05).
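The adaptive rules described in the Procedure can be summarized as a small decision sketch. This is one reading of the text, not the clinicians' actual protocol sheet: it assumes that 70% and 88% are inclusive cutoffs, that two failed attempts are allowed at every noise level before noise is reduced, and that a lesson failed in quiet is simply repeated in quiet (the text does not say). All names are hypothetical.

```python
QUIET = None               # noise removed entirely
EASIEST, HARDEST = -3, 3   # ends of the noise scale
PASS_MARK = 70.0           # percent correct needed to pass a lesson
RAISE_MARK = 88.0          # percent correct needed to add noise


def after_attempt(level, accuracy, failures_at_level):
    """Return (next_level, advance) after one 25-trial lesson attempt.

    level: current noise level (-3..+3) or QUIET.
    failures_at_level: consecutive failures at this level, counting
        the attempt just completed.
    advance: True when the listener moves on to the next lesson.
    """
    if accuracy >= PASS_MARK:
        if level is QUIET:
            return EASIEST, False   # reintroduce noise, repeat lesson
        return level, True
    if level is QUIET:
        return QUIET, False         # assumption: repeat in quiet
    if failures_at_level < 2:
        return level, False         # second chance, settings unchanged
    if level == EASIEST:
        return QUIET, False         # remove noise
    return level - 1, False         # one step (3.5 dB) less noise


def after_three_lessons(level, accuracies):
    """Raise the noise one level after three lessons at 88% or better."""
    if len(accuracies) == 3 and all(a >= RAISE_MARK for a in accuracies):
        return min(level + 1, HARDEST)
    return level
```

Keeping the per-attempt rule separate from the three-lesson rule mirrors the text, in which noise is lowered lesson by lesson but raised only after a block of three successful lessons.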

Immediately following their last training session, listeners completed the QuickSIN and HINT tests again, using the same protocol as in the baseline assessments. Clinicians administered these tests again 4 days later to assess maintenance of training. Participants were asked to complete the SSQ a second time at this second post-test assessment.

Results

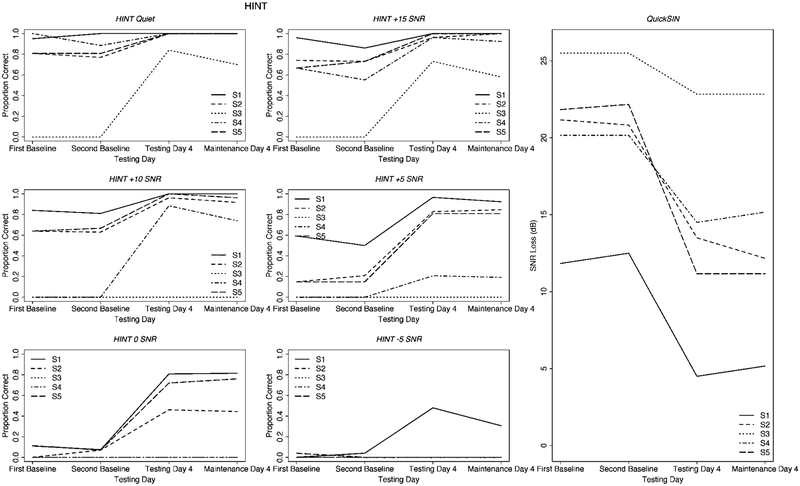

As shown in Figure 1, there was extensive individual variability among the five participants, though all participants appeared to improve as a function of training. We found no significant differences between the two baseline time points using Wilcoxon matched-pairs tests for the HINT (see Figure 1; Quiet, Z = 4.0, p = .79; SNR +15, Z = 8.0, p = .36; SNR +10, Z = 3.0, p = 1.00; SNR +5, Z = 2.0, p = 1.00; SNR 0, Z = 3.0, p = 1.00; SNR −5, Z = 1.5, p = 1.00) or for the QuickSIN (Z = 1.5, p = .59). We did not assess HINT SNR levels −10 dB and −15 dB because there were no correct responses. Thus, we averaged the two QuickSIN baseline scores to obtain one baseline QuickSIN score, and we averaged the 12 HINT baseline scores to obtain six baseline HINT scores (one for each SNR level).

Figure 1.

Individual performance on the Hearing in Noise Test (HINT) and QuickSIN at first baseline, second baseline, testing day 4, and maintenance day 4. HINT conditions −10 SNR and −15 SNR are not shown because all participants were at floor performance for all tests. SNR = signal-to-noise ratio.

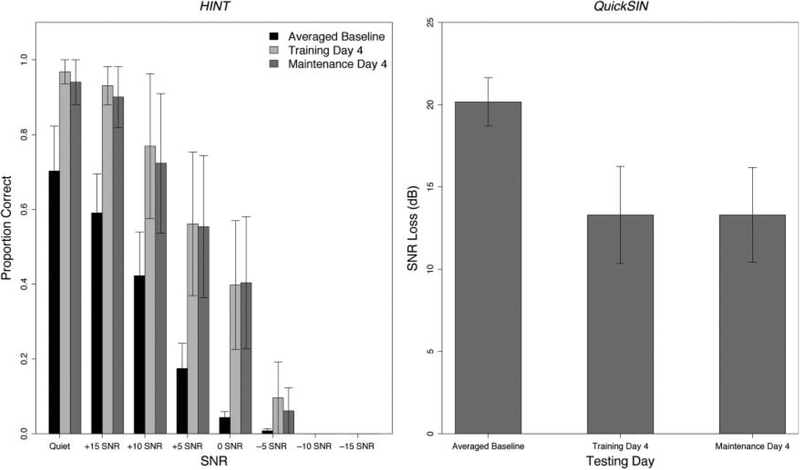

Aggregate performance as a function of testing day is shown in Figure 2. HINT conditions and testing day were entered into a within-subjects analysis of variance (ANOVA). We found a main effect of testing day, F(3, 12) = 138.81, p < .001, and of SNR condition, F(7, 28) = 21.668, p < .001, as well as a significant interaction, F(21, 84) = 2.05, p = .01, indicating that participants improved across test sessions, that listeners found some conditions easier than others, and that listeners showed more improvement in some sessions than in others. Planned Wilcoxon signed-rank tests revealed differences between baseline and training day 4 and between baseline and maintenance day 4 but not between training day 4 and maintenance day 4 (all ps = .03). Tukey’s honestly significant difference (HSD) tests revealed no significant differences among averaged performance for the Quiet, +15 SNR, and +10 SNR conditions, or among the averaged performance for the 0, −5, −10, and −15 SNR conditions (all ps < .001).

Figure 2.

Group mean performance on the HINT and QuickSIN at averaged baseline, testing day 4, and maintenance day 4.

We also found a main effect of day for the QuickSIN, F(3, 12) = 24.02, p < .001. Again, planned Wilcoxon signed-rank tests revealed differences between baseline and training day 4 and between baseline and maintenance day 4 but not between training day 4 and maintenance day 4, indicating improvement following training and maintenance of these gains.

Based on the significant Day × Condition interaction for the HINT and the significant main effect of day for the QuickSIN, we assessed group performance at baseline, training day 4, and maintenance day 4 using Friedman tests for each level of the HINT and the QuickSIN. We found a significant difference among testing days for the HINT in Quiet, χ²(2, N = 5) = 9.50, p = .01, and at +15 SNR, χ²(2, N = 5) = 8.44, p = .01. We also found a significant difference among testing days for the QuickSIN, χ²(2, N = 5) = 7.89, p = .02. We found no significant differences among testing days for the HINT +10, +5, 0, −5, −10, or −15 SNR conditions. We suspect that the lack of a significant training effect at the HINT +10 and HINT +5 levels may be due to the high variability in a small number of participants, in which a lack of improvement by one or two listeners in a five-listener sample is sufficient to mask the aggregate effect.
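For readers unfamiliar with the Friedman test, the statistic for a design like this one (five listeners, three testing days, hence 2 degrees of freedom) can be computed as sketched below. This is a plain-Python illustration, not the software used in the study: it ranks each listener's scores across days, averages tied ranks, and omits the tie correction that statistical packages usually apply. The function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1 upward, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = mean_rank
        i = j + 1
    return ranks


def friedman_chi_square(scores):
    """Friedman statistic without tie correction.

    scores: one row per listener, one column per testing day.
    Compare against a chi-square distribution with (days - 1) df.
    """
    n = len(scores)
    k = len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        for day, rank in enumerate(average_ranks(row)):
            rank_sums[day] += rank
    sum_sq = sum(r * r for r in rank_sums)
    return 12.0 / (n * k * (k + 1)) * sum_sq - 3.0 * n * (k + 1)
```

With five listeners and three days, the statistic reaches its maximum of 10 when every listener's scores are ordered identically across days, which helps put the reported values in context.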

These results indicate that speech-in-noise perception improved as a function of the training regime and that improvement was maintained for 4 days without additional intervention. However, it is worth noting that although improvement was seen, it appears to be reliable only when SNRs are reasonably favorable. This interpretation is supported by the lack of a significant difference from pre- to post-test on the SSQ (Z = 3957, p = .09), suggesting that the listeners perceived minimal benefit to their daily listening lives following training.

Discussion

Speech-in-noise perception continues to be a problem for CI recipients (e.g., Hochberg et al., 1992; Nelson & Jin, 2004). Despite this persistent difficulty, researchers have yet to develop a systematic, successful means of improving speech-in-noise perception in this population. In this study, we aimed to determine the efficacy of a short-term computerized speech-in-noise training in improving speech perception abilities in postlingually deafened adults with CIs. Our data suggest that even a very short-term training regimen may have a positive impact on listeners’ speech-in-noise-perception and that improvements may be maintained beyond the training period.

A possible limitation of the study—aside from the small sample size and short training time—is the heterogeneity of the sample. Participants differed greatly in age, highest level of education, and age at which hearing loss was identified. There were also differences in the type of device being used (i.e., internal device, speech processor, speech coding strategy), participants’ experience before training, and their pre-training discrimination skills. One participant was a bilateral CI recipient; two other participants were bimodal, using a behind-the-ear hearing aid on the contralateral ear; and the remaining two participants were unilateral implant users only. Any one of these factors could have contributed to each listener’s speech-in-noise ability as well as to the progress that he or she made. Future research is needed to determine the exact influence exerted by each factor on speech-in-noise perception and training improvements. However, all listeners made significant gains as a result of training, suggesting the efficacy of training despite demographic differences.

One possibility for our study’s success relative to earlier work may be listeners’ familiarity with the testing procedures. Although different lists were used each time, listeners were tested four times in rapid succession (i.e., four tests in 10 days). It is possible that continuous exposure to the protocol led to practice effects by familiarizing listeners with the talkers and with the noise levels. These repeated exposures, or a possible effect of training, may have altered listeners’ response thresholds by encouraging them to guess rather than to remain silent. A shift to guessing may have resulted in more correct responses on the tests without a true shift in hearing ability, which would also account for the lack of change in listeners’ self-rated speech-in-noise perception abilities. These explanations are consistent with the fact that improvement was only seen at the most favorable SNRs, in which targets may have been sufficiently audible to allow for practice effects or for accurate guessing, whereas listeners may not have been able to detect sufficient information to guess responses accurately in noisier conditions. However, it should be noted that the test items are open-set items, meaning that a high rate of success purely by guessing is unlikely.

Finally, it is possible that the gains noted here are true training gains but that the task of listening to speech in challenging conditions is not sufficiently similar to listening to speech at favorable SNRs in a noise-attenuated booth for listeners to notice a benefit in their daily lives. However, it should be noted that there is a trend toward a perceived improvement. This trend appears to be driven primarily by two of the subscales: (a) Distance and Movement and (b) Segregation of Sounds (see Gatehouse & Akeroyd, 2006, for the division into subscales). The ability to segregate multiple sound streams is related to the task of perceiving speech in noise, in which multiple streams must be separated in order to detect the target speech, suggesting that training may have begun to improve listeners’ self-perceptions of their abilities to separate target speech from background noise. Additional training may have further improved listeners’ self-perceived abilities, as well as their abilities to perceive speech at less favorable SNRs. Future work is needed to determine if longer training durations could result in more real-world benefits, and to rule out the possibility of practice effects.

It should be noted that both the Seeing and Hearing Speech and the computer-assisted speech training program of the House Ear Institute (e.g., Fu & Galvin, 2008; Fu et al., 2005), as well as the SPATS program (Miller et al., 2008), use the listeners’ initial abilities as a starting point and adapt to the listeners’ progress over the course of training. We think that such individually based training will prove to be crucial to the development of an efficacious speech-in-noise rehabilitation program (e.g., Perrachione et al., 2011).

Conclusions

The results from the present study suggest that short-term computerized speech-in-noise training may have the ability to increase speech-perception-in-noise in postlingually deafened adults with CIs. Although it is clear that there is much more work to be done in this arena, we hope that the positive outcomes found here will inspire other researchers to continue the effort to develop efficacious speech perception training programs for CI recipients.

Acknowledgments

This research was funded by National Institute on Deafness and Other Communication Disorders Grant R01-DC008333, NIA Grant K02-AG03582, and National Science Foundation Grant BCS-1125144. We thank Susan Erler and the Northwestern University Doctor of Audiology Program for supporting this study and Pat Zurek of Sensimetrics for answering our questions about the program.

References

- Bichey BG, & Miyamoto RT (2008). Outcomes in bilateral cochlear implantation. Otolaryngology-Head and Neck Surgery, 138, 655–661. [DOI] [PubMed] [Google Scholar]

- Fu Q-J, & Galvin JJ (2008). Maximizing cochlear implant patients’ performance with advanced speech training procedures. Hearing Research, 242(1–2), 198–208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fu Q-J, Galvin J, Wang X, & Nogaki G (2005). Moderate auditory training can improve speech performance of adult cochlear implant patients. Acoustics Research Letters Online, 6, 106–111. [Google Scholar]

- Gantz BJ, Turner C, & Gfeller KE (2006). Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiology & Neurotology, 11(S1), 63–68. [DOI] [PubMed] [Google Scholar]

- Gatehouse S, & Akeroyd M (2006). Two-eared listening in dynamic situations. International Journal of Audiology, 45, S120–S124. [DOI] [PubMed] [Google Scholar]

- Gatehouse S, & Noble W (2004). The Speech, Spatial and Qualities of Hearing Scale (SSQ). International Journal of Audiology, 43, 85–99. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hochberg I, Boothroyd A, Weiss M, & Hellman S (1992). Effects of noise and noise suppression on speech perception by cochlear implant users. Ear and Hearing, 13, 263–271. [DOI] [PubMed] [Google Scholar]

- Hu Y, & Loizou PC (2008). A new sound coding strategy for suppressing noise in cochlear implants. The Journal of the Acoustical Society ofAmerica, 124, 498–509. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Killion MC, Niquette PA, Gudmundsen GI, Revit LJ, & Banerjee S (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America, 116, 2395–2405. [DOI] [PubMed] [Google Scholar]

- Kos M-I, Deriaz M, Guyot J-P, & Pelizzone M (2009). What can be expected from a late cochlear implantation? International Journal of Pediatric Otorhinolaryngology, 73, 189–193. [DOI] [PubMed] [Google Scholar]

- Logan JS, Lively SE, & Pisoni DB (1991). Training Japanese listeners to identify English /r/ and /l/: A first report. The Journal of the Acoustical Society of America, 89, 874–886. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lunner T (2003). Cognitive function in relation to hearing aid use. International Journal of Audiology, 42, S49–S58. [DOI] [PubMed] [Google Scholar]

- Lunner T, & Sundewall-Thoren E (2007). Interactions between cognition, compression, and listening conditions: Effects on speech-in-noise performance in a two-channel hearing aid. Journal of the American Academy of Audiology, 18, 604–617. [DOI] [PubMed] [Google Scholar]

- McArdle RA, & Wilson RH (2006). Homogeneity of the 18 QuickSIN lists. Journal of the American Academy of Audiology, 17, 157–167.

- Miller JD, Watson CS, Kistler DJ, Wightman FL, & Preminger JE (2008). Preliminary evaluation of the speech perception assessment and training system (SPATS) with hearing-aid and cochlear-implant users. Proceedings of Meetings on Acoustics, 2, 1–9.

- Nava E, Bottari D, Bonfioli F, Beltrame MA, & Pavani F (2009). Spatial hearing with a single cochlear implant in late-implanted adults. Hearing Research, 255(1–2), 91–98.

- Nelson PB, & Jin S-H (2004). Factors affecting speech understanding in gated interference: Cochlear implant users and normal-hearing listeners. The Journal of the Acoustical Society of America, 115, 2286.

- Nilsson M, Soli SD, & Sullivan JA (1994). Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. The Journal of the Acoustical Society of America, 95, 1085–1099.

- Oba SI, Fu Q-J, & Galvin JJ (2011). Digit training in noise can improve cochlear implant users’ speech understanding in noise. Ear and Hearing, 32, 573–581.

- Parbery-Clark A, Marmel F, Bair J, & Kraus N (2011). What subcortical-cortical relationships tell us about processing speech in noise. European Journal of Neuroscience, 33, 549–557.

- Peach RK, & Wong PCM (2004). Integrating the message level into treatment for agrammatism using story retelling. Aphasiology, 18, 429–441.

- Perrachione TK, Lee J, Ha LYY, & Wong PCM (2011). Learning a novel phonological contrast depends on interactions between individual differences and training paradigm design. The Journal of the Acoustical Society of America, 130, 461–472.

- Perrachione TK, & Wong PCM (2007). Learning to recognize speakers of a non-native language: Implications for the functional organization of human auditory cortex. Neuropsychologia, 45, 1899–1910.

- Potts LG, Skinner MW, Litovsky RA, Strube MJ, & Kuk F (2009). Recognition and localization of speech by adult cochlear implant recipients wearing a digital hearing aid in the nonimplanted ear (bimodal hearing). Journal of the American Academy of Audiology, 20, 353–373.

- Stacey PC, Raine CH, O’Donoghue GM, Tapper L, Twomey T, & Summerfield AQ (2010). Effectiveness of computer-based auditory training for adult users of cochlear implants. International Journal of Audiology, 49, 347–356.

- Summerfield AQ, Barton GR, Toner J, McAnallen C, Proops D, Harries C, … Ramsden R (2006). Self-reported benefits from successive bilateral cochlear implantation in post-lingually deafened adults: Randomised controlled trial. International Journal of Audiology, 45, S99–S107.

- Tyler RS, Dunn CC, Witt SA, & Noble WG (2007). Speech perception and localization with adults with bilateral sequential cochlear implants. Ear and Hearing, 28, 86S–90S.

- Warrier C, Wong P, Penhune V, Zatorre R, Parrish T, Abrams D, & Kraus N (2009). Relating structure to function: Heschl’s gyrus and acoustic processing. Journal of Neuroscience, 29, 61–69.

- Wilson RH, McArdle RA, & Smith SL (2007). An evaluation of the BKB-SIN, HINT, QuickSIN, and WIN materials on listeners with normal hearing and listeners with hearing loss. Journal of Speech, Language, and Hearing Research, 50, 844–856.

- Wong PCM, Ettlinger M, Sheppard JP, Gunasekera GM, & Dhar S (2010). Neuroanatomical characteristics and speech perception in noise in older adults. Ear and Hearing, 31, 471–479.