Abstract

Although lesion and functional imaging studies have broadly implicated the right hemisphere in the recognition of emotion, neither the underlying processes nor the precise anatomical correlates are well understood. We addressed these two issues in a quantitative study of 108 subjects with focal brain lesions, using three different tasks that assessed the recognition and naming of six basic emotions from facial expressions. Lesions were analyzed as a function of task performance by coregistration in a common brain space, and statistical analyses of their joint volumetric density revealed specific regions in which damage was significantly associated with impairment. We show that recognizing emotions from visually presented facial expressions requires right somatosensory-related cortices. The findings are consistent with the idea that we recognize another individual's emotional state by internally generating somatosensory representations that simulate how the other individual would feel when displaying a certain facial expression. Follow-up experiments revealed that conceptual knowledge and knowledge of the name of the emotion draw on neuroanatomically separable systems. Right somatosensory-related cortices thus constitute an additional critical component that functions together with structures such as the amygdala and right visual cortices in retrieving socially relevant information from faces.

Keywords: emotion, simulation, somatosensory, somatic, empathy, faces, social, human

How do we judge the emotion that another person is feeling? This question has been investigated in some detail using a class of stimuli that is critical for social communication and that contributes significantly to our representation of other persons: human facial expressions (Darwin, 1872; Ekman, 1973; Fridlund, 1994; Russell and Fernandez-Dols, 1997; Cole, 1998). Recognition of facial expressions of emotion has been shown to involve subcortical structures such as the amygdala (Adolphs et al., 1994; Morris et al., 1996; Young et al., 1995), as well as the neocortex in the right hemisphere (Bowers et al., 1985; Gur et al., 1994; Adolphs et al., 1996; Borod et al., 1998). Brain damage can impair recognition of the emotion signaled by a face while sparing the ability to recognize other types of information, such as the identity or gender of the person (Adolphs et al., 1994), and vice versa (Tranel et al., 1988). These findings argue for the existence of neural systems that are relatively specialized to retrieve knowledge about the emotional significance of faces, an idea that also appears congenial from the perspective of evolution.

However, although the functional role of the amygdala in processing emotionally salient stimuli has received considerable attention, the contribution made by cortical regions within the right hemisphere has remained only vaguely specified, both in terms of the anatomical sites and of the cognitive processes involved. Previous studies have found evidence of the importance of visually related right hemisphere cortices in the recognition of facial emotion, but it is unclear how high-level visual processing in such regions ultimately permits retrieval of knowledge regarding the emotion shown in the stimuli. We suggested previously that both visual and somatosensory-related regions in the right hemisphere might be important to recognize facial emotion, but the sample size of that study (Adolphs et al., 1996) was too small to permit statistical analyses or to draw conclusions about more restricted cortical regions. Furthermore, neither our previous study (Adolphs et al., 1996) nor, to our knowledge, any other study of the right hemisphere's role in emotion has volumetrically coregistered lesions from multiple subjects in order to quantify their shared locations.

In the present quantitative study of 108 subjects with focal brain lesions, we used three different tasks to assess the recognition and naming of six basic emotions from facial expressions. For all 108 subjects, we obtained MR or CT scans suitable for three-dimensional reconstruction of their lesions. Lesions were analyzed as a function of task performance by coregistration in a common brain space, and statistical analyses of their joint volumetric density revealed specific regions in which damage was significantly associated with impairment. We show that recognition of facial emotion requires the integrity of the right somatosensory cortices. We provide a theoretical framework for interpreting these data, in which we suggest that recognizing emotion in another person engages somatosensory representations that may simulate how one would feel if making the facial expression shown in the stimulus.

MATERIALS AND METHODS

Subjects

We tested a total of 108 subjects with focal brain damage and 30 normal controls with no history of neurological or psychiatric impairment. All brain-damaged subjects were selected from the patient registry of the Division of Cognitive Neuroscience and Behavioral Neurology (University of Iowa College of Medicine, Iowa City, IA) and had been fully characterized neuropsychologically (Tranel, 1996) and neuroanatomically (Damasio and Frank, 1992) (side of lesion, n = 60 left, 63 right, and 15 bilateral). We included only subjects with focal, chronic (>4 months), and stable (nonprogressive) lesions that were clearly demarcated on MR or CT scans. We sampled the entire telencephalon, and subjects with lesions in different regions of the brain were similar with respect to mean age and visuoperceptual ability (see Table 1).

Table 1.

Background demographics and neuropsychology by lesion location

| Lesion location | n | Age (years) | VIQ | PIQ | FSIQ | Face discrimination |
|---|---|---|---|---|---|---|
| All subjects | 108 | 53.6 | 100.3 | 100.1 | 100.3 | 44.8 |
| Right parietal | 23 | 57.7 | 98.4 | 90.3 | 94.9 | 43.2 |
| Left parietal | 17 | 54.1 | 102.4 | 111.9 | 106.9 | 46.5 |
| Right temporal | 28 | 51.5 | 100.2 | 94.7 | 97.6 | 42.8 |
| Left temporal | 25 | 43.7 | 94.8 | 103.5 | 98.5 | 46.2 |
| Right frontal | 35 | 55.1 | 100.5 | 95.2 | 98.4 | 43.3 |
| Left frontal | 25 | 57.2 | 100.9 | 107.7 | 104.5 | 46.0 |
| Right occipital | 18 | 58.1 | 100.8 | 91.1 | 96.6 | 43.1 |
| Left occipital | 17 | 59.1 | 99.9 | 93.3 | 97.2 | 43.9 |

Values other than n are group means. VIQ, PIQ, FSIQ: verbal, performance, and full-scale IQ, respectively, from the WAIS-R or the WAIS-III. Face discrimination: discrimination of faces on the Benton Facial Recognition Task (normal for all subject groups; a score of 43 corresponds to the 30th percentile). Note that n values add up to >108, because some subjects had lesions that included more than one neuroanatomical region.

All 108 brain-damaged subjects and 18 of the normal subjects participated in rating the intensity of emotions (see Figs. 1-3; Experiment 1). Fifty-five of the 108 participated in the naming task (see Fig. 4a; Experiment 2); 77 of the 108, as well as 12 normal subjects, participated in the pile-sorting task (see Fig. 4b; Experiment 3). All subjects had given informed consent according to a protocol approved by the Human Subjects Committee of the University of Iowa.

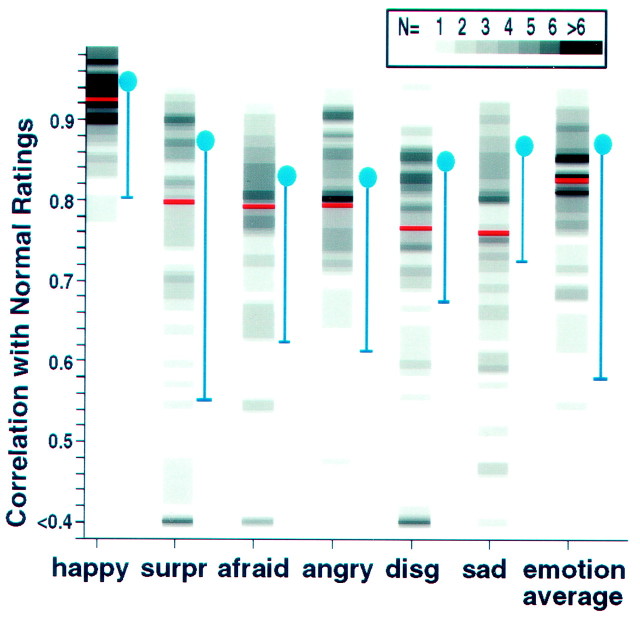

Fig. 1.

Histograms of performances in Experiment 1. Shown is the number of subjects with a given correlation score for each emotion category. The subjects' ratings of 36 emotional facial expressions were correlated with the mean ratings from 18 normal controls for each face stimulus and then averaged for each emotion category. The number of subjects is encoded by the gray scale value (scale at top). Red lines, division into the 54 subjects with the lowest and the 54 with the highest scores. Blue areas, means (circles) and 2 SDs (error bars) of correlations among the 18 normal controls. These correlations were calculated between each normal individual and the remaining 17. disg, disgusted; surpr, surprised.

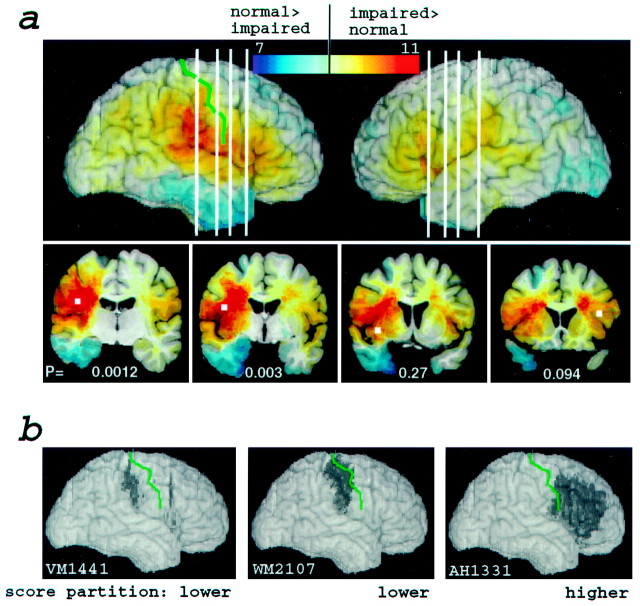

Fig. 2.

Distribution of lesions as a function of mean emotion recognition (Experiment 1). a, Lesion overlaps from all 108 subjects. Color (scale at top) encodes the difference in the density of lesions between the subjects with the lowest and those with the highest scores. Thus, red regions correspond to locations at which lesions resulted in impairment more often than not, and blue regions correspond to locations at which lesions resulted in normal performance more often than not. p values indicating statistical significance are shown in white for voxels in four regions (white squares) on coronal cuts (bottom) that correspond to the white vertical lines in the three-dimensional reconstructions (top). Because adjacent voxels cannot be considered independent, we analyzed the significance of six specific, separate voxels, determined a priori from the density distribution of all 108 lesions, as follows. We selected contiguous regions within which at least nine subjects had lesions and picked the voxel at the centroid of each of these regions. The voxels (white squares in the coronal cuts at the bottom; the two in the temporal lobe are not shown because lesions there did not result in impaired emotion recognition) were located in six regions; details are given in Table 2. Voxels were chosen by one of us (G.C.), who was blind to the outcome of the task data. The central sulcus is shown in green. b, Three examples of individual subjects' lesions in the right frontoparietal cortex. Two lesions (left, middle) are from subjects in the bottom partition who had the smallest lesions; the other lesion (right) is from a subject in the top partition. The data from these three subjects provide further evidence, at the individual subject level, that lesions in somatosensory cortices impair the recognition of facial emotion. The central sulcus is shown in green.

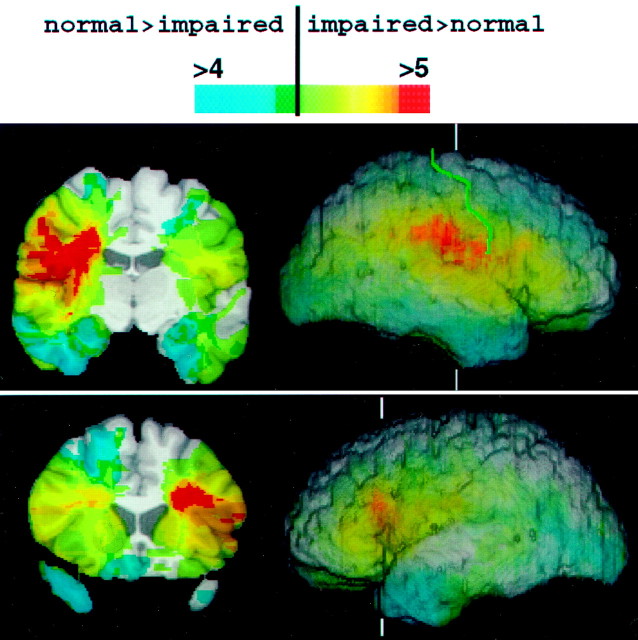

Fig. 3.

Distribution of lesion overlaps from the most-impaired and the least-impaired 25% of subjects. Subjects were again partitioned using the mean emotion correlation from Experiment 1. Lesion overlaps from the 27 least-impaired subjects were subtracted from those of the 27 most-impaired subjects; data from the middle 54 subjects were not used. The resulting images are directly comparable with those in Figure 2 but show more focused regions of difference because of the extremes of performance that are being compared. Coronal cuts are shown on the left, and three-dimensional reconstructions of brains that are rendered partially transparent are shown on the right, indicating the level of the coronal cuts (white vertical lines) and the location of the central sulcus (green).

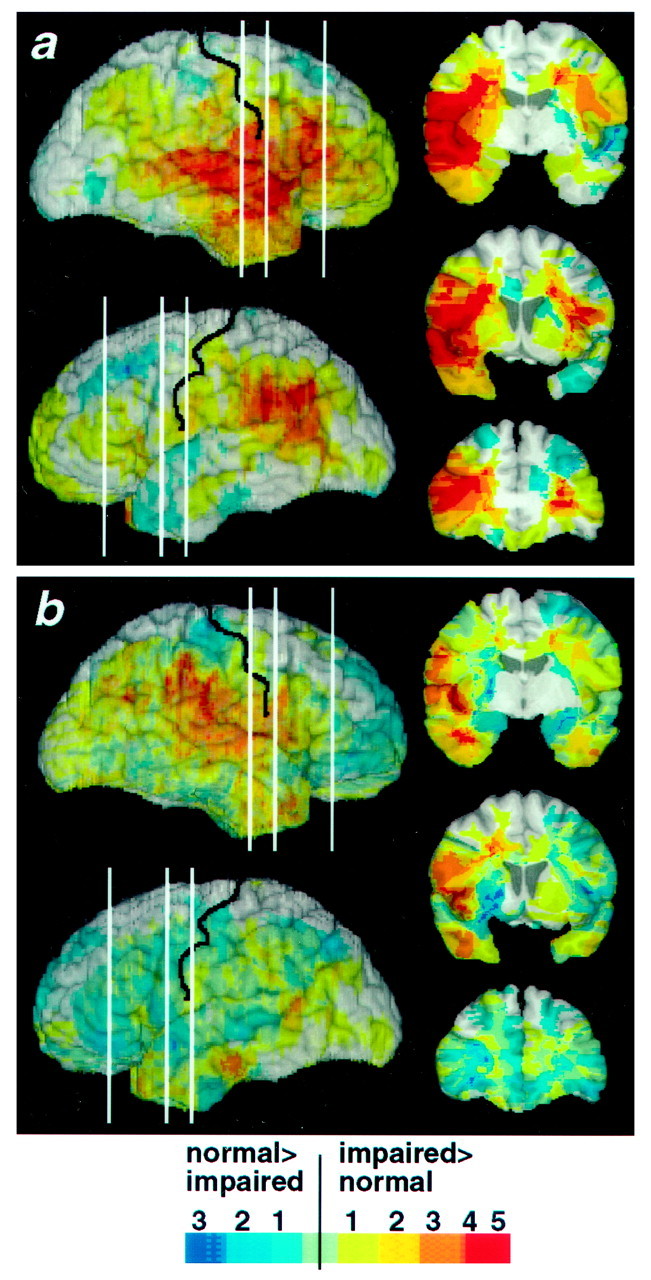

Fig. 4.

Neuroanatomical regions critical for naming or for sorting emotions. Images were calculated as described in Figure 2a. a, Neuroanatomical regions critically involved in choosing the name of an emotion (Experiment 2). b, Neuroanatomical regions critically involved in sorting emotions into categories without requiring naming (Experiment 3).

Tasks

Experiment 1: rating the intensity of basic emotions expressed by faces (see Figs. 1-3). Subjects were shown six blocks of the same 36 facial expressions of basic emotions (Ekman and Friesen, 1976) in randomized order (6 faces each of happiness, surprise, fear, anger, disgust, and sadness). For each block of the 36 faces, subjects were asked to rate all the faces with respect to the intensity of one of the six basic emotions listed above. This is a difficult and sensitive task; for example, subjects are asked to rate an angry face not only with respect to the intensity of anger displayed but also with respect to the intensity of fear, disgust, and all other emotions. We correlated the rating profile each subject produced for each face with the mean ratings given to that face by 18 normal controls (nine males and nine females; age = 56 ± 16 years). The stimuli and the correlation procedure were identical to those we have used previously in studies of facial emotion recognition after brain damage (Adolphs et al., 1994, 1995, 1996).

From the correlation measure obtained for each face, average correlations were calculated. We calculated the average correlation across all 36 stimuli to obtain a mean emotion recognition score (see Figs. 2, 3). We also calculated the correlation for individual emotions by averaging the correlations of the six faces within an emotion category (described in Results but not shown in the figures). All correlations were Pearson product–moment correlations. For averaging multiple correlation values, we calculated the Fisher Z transforms (hyperbolic arctangent) of the correlations to normalize their population distribution (Adolphs et al., 1995).
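A minimal Python sketch of this scoring, assuming each face's ratings are stored as a six-element intensity profile (one value per rated emotion) and that the averaged Fisher Z value is back-transformed with tanh for reporting; the back-transformation, the data layout, and all function names are hypothetical illustrations rather than the authors' code.

```python
import math
from statistics import mean

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length rating profiles."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        # A flat profile (identical ratings on all six scales) has no defined r.
        raise ValueError("correlation undefined for a constant rating profile")
    return sxy / math.sqrt(sxx * syy)

def fisher_mean(rs):
    """Average correlations in Fisher Z space (atanh), then back-transform (tanh)."""
    eps = 1e-9  # keeps atanh finite if some |r| == 1 (an assumption)
    zs = [math.atanh(max(-1 + eps, min(1 - eps, r))) for r in rs]
    return math.tanh(mean(zs))

def emotion_scores(subject, normal_mean, categories):
    """subject, normal_mean: dict face_id -> six intensity ratings.
    categories: dict emotion -> list of face_ids in that category.
    Returns (per-emotion scores, mean emotion recognition score)."""
    per_face = {f: pearson_r(subject[f], normal_mean[f]) for f in subject}
    per_emotion = {e: fisher_mean([per_face[f] for f in faces])
                   for e, faces in categories.items()}
    overall = fisher_mean(list(per_face.values()))
    return per_emotion, overall
```

Because atanh stretches values near ±1, the Fisher average of r = 0.9 and r = 0.0 is about 0.63 rather than the arithmetic mean of 0.45.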

Experiment 2: matching facial expressions with the names of basic emotions (see Fig. 4a). Subjects were shown 36 facial expressions (the same as above) and asked to choose a label from a printed list of the six basic emotion words that best matched the face. This task is essentially a six-alternative forced-choice face–label matching task and has been used previously to assess emotion recognition in subjects with bilateral amygdala damage (Young et al., 1995; Calder et al., 1996). Performances were scored as correct if they matched the intended emotion and incorrect otherwise.

Experiment 3: sorting facial expressions into emotion categories (see Fig. 4b). Subjects were asked to sort photographs of 18 facial expressions (3 of each emotion, a subset of the ones used in the above tasks) into 3, 4, 7, and 10 piles (in random order) on the basis of the similarity of the emotion expressed. A measure of similarity between each pair of faces was calculated from this task by summing the co-occurrences of that pair of faces in a pile, each weighted by the number of piles in that sort, to yield a number between 0 and 24 (cf. Russell, 1980). For all pairwise combinations of faces from two different emotion categories, we calculated the subject's Z score on this similarity measure relative to the data obtained on this task from 12 normal controls.
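The similarity measure can be sketched as follows, assuming each sort is recorded as a list of piles of face identifiers, keyed by the number of piles requested. A pair that shares a pile in a k-pile sort gains k points, so a pair grouped together in all four sorts scores 3 + 4 + 7 + 10 = 24. Function names are hypothetical, and because the text does not specify how per-pair Z scores were combined, the sketch stops at the per-pair values.

```python
from itertools import combinations
from statistics import mean, stdev

def similarity(sorts):
    """sorts: dict n_piles -> list of piles (each pile a list of face ids).
    Returns dict frozenset({a, b}) -> weighted co-occurrence score."""
    sim = {}
    for n_piles, piles in sorts.items():
        for pile in piles:
            for a, b in combinations(pile, 2):
                key = frozenset((a, b))
                # Co-occurrence in a finer sort (more piles) carries more weight.
                sim[key] = sim.get(key, 0) + n_piles
    return sim

def cross_category_pairs(category):
    """category: dict face_id -> emotion label. Pairs of faces from different emotions."""
    return [frozenset((a, b)) for a, b in combinations(sorted(category), 2)
            if category[a] != category[b]]

def pair_z_scores(subject_sim, control_sims, pairs):
    """Z score of the subject's similarity for each pair against the control group."""
    z = {}
    for p in pairs:
        ctrl = [c.get(p, 0) for c in control_sims]
        m, s = mean(ctrl), stdev(ctrl)
        # Zero control variance leaves Z undefined; 0.0 is a placeholder choice.
        z[p] = (subject_sim.get(p, 0) - m) / s if s > 0 else 0.0
    return z
```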

Neuroanatomical analysis

We obtained all images using a method called “MAP-3” (Frank et al., 1997). The lesion visible on each brain-damaged subject's MR or CT scan was manually transferred onto the corresponding sections of a single, normal reference brain. Lesions from multiple subjects were summed to obtain the lesion density at a given voxel and corendered with the reference brain to generate a three-dimensional reconstruction (Frank et al., 1997) of all the lesions in a group of subjects. We divided our sample of 108 subjects into two groups: the 54 with the lowest and the 54 with the highest mean emotion recognition scores (for Fig. 3, the 27 with the lowest and the 27 with the highest scores). A lesion density image was generated for each group, and the two images were then subtracted from one another to produce images such as those shown (see Figs. 2a, 3). Similar partitions that divided the subject sample in half were made for the other tasks described (see Fig. 4). In each case, we produced a difference image that showed, for all subjects who had lesions at a given voxel, the difference between the number of subjects in the bottom half of the partition and the number of subjects in the top half (or bottom and top quarter; see Fig. 3). By chance, one would expect this difference to be close to zero (an equal number of subjects from each group would be expected to have lesions at a given location), yet our analysis showed that, in certain regions of the brain, it was far from zero. To assign statistical significance to our results, we calculated the probability that a given difference in lesion density (resulting from a given partition of subjects) could have arisen by chance. Probability was calculated using a rerandomization approach (Monte Carlo simulation), in which two partitions of equal size were created from each of one million random permutations of the entire data set (Sprent, 1998). Probabilities were summed over all differences in lesion density equal to or greater than the one observed with our partition to obtain the cumulative probability that the results could have arisen by chance. Such a rerandomization approach makes no assumptions about the underlying distributions from which samples were drawn and gives an unbiased estimate of the probability that the observed result could have arisen by chance. All p values given in Results are Bonferroni corrected for multiple comparisons.
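The partition, subtraction, and rerandomization steps can be sketched in Python as below. This is an illustration under simplifying assumptions, not the MAP-3 pipeline: each lesion is a set of voxel coordinates already transferred to the reference brain, the number of subjects is even, and the Monte Carlo step draws only the positions that the lesioned subjects occupy in a random ordering, which is equivalent to reshuffling the whole sample when a single voxel is tested. All names are hypothetical.

```python
import random
from collections import Counter

def partition(scores, frac=0.5):
    """scores: dict subject -> task score. Returns (bottom, top) sets of
    int(frac * N) subjects each (frac=0.5 for halves, 0.25 for quartiles)."""
    ranked = sorted(scores, key=scores.get)
    k = int(len(ranked) * frac)
    return set(ranked[:k]), set(ranked[-k:])

def density_difference(lesions, bottom, top):
    """lesions: dict subject -> set of voxel coordinates in the reference brain.
    Returns voxel -> (# bottom-group lesions) - (# top-group lesions)."""
    diff = Counter()
    for subj, voxels in lesions.items():
        if subj in bottom:
            sign = 1
        elif subj in top:
            sign = -1
        else:
            continue  # e.g., middle quartiles in a top-vs-bottom 25% contrast
        for v in voxels:
            diff[v] += sign
    return diff

def voxel_p_value(n_subjects, n_lesioned, observed, n_iter=1_000_000, seed=0):
    """Monte Carlo probability that a random half/half split gives a
    bottom-minus-top difference >= observed at a voxel lesioned in n_lesioned subjects."""
    rng = random.Random(seed)
    half = n_subjects // 2  # assumes an even number of subjects
    hits = 0
    for _ in range(n_iter):
        # Positions of the lesioned subjects in a random ordering; the first
        # half of the ordering plays the role of the low-scoring group.
        positions = rng.sample(range(n_subjects), n_lesioned)
        in_bottom = sum(pos < half for pos in positions)
        if in_bottom - (n_lesioned - in_bottom) >= observed:
            hits += 1
    return hits / n_iter

def bonferroni(p, n_tests):
    """Bonferroni-corrected p value, capped at 1."""
    return min(1.0, p * n_tests)
```

For a voxel lesioned in 14 subjects, 13 of whom fall in the bottom half, the observed difference is 13 - 1 = 12, so `bonferroni(voxel_p_value(108, 14, 12), 6)` applies the same logic; a Monte Carlo estimate will vary slightly with the seed and the number of iterations.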

Other statistical analyses

Correlation with other tasks. The possible dependence of performance in Experiment 1 (see Figs. 1-3; mean emotion recognition score) on background neuropsychology and demographics was examined with an interactive stepwise multiple linear regression model (DataDesk 5.0; DataDescription Inc.), as described previously (Adolphs et al., 1996). Gender, age, education, verbal IQ and performance IQ (from the WAIS-R or WAIS-III), the Benton facial recognition task, the judgment of line orientation, the Rey-Osterrieth complex figure (Copy), depression (from the Beck Depression Inventory or the MMPI-D scale), and visual neglect were entered as factors into the regression model on the basis of the Pearson correlation of a candidate factor with the regression residuals. Of particular relevance is the Benton facial recognition task, a standard neuropsychological instrument that assesses the ability to distinguish among faces of different individuals and that controls for any global impairments in face perception.
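A bare-bones version of this forward selection can be sketched in plain Python: at each step, the candidate factor that correlates most strongly with the current residuals enters the model, and the model is refitted by ordinary least squares. The stopping threshold `min_abs_r` is a hypothetical stand-in for the significance judgments made interactively in DataDesk, and the sketch assumes no missing values and no perfectly collinear factors.

```python
import math
from statistics import mean

def pearson(x, y):
    """Pearson correlation; returns 0.0 when either input has no variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)

def ols_residuals(y, columns):
    """Residuals of y on an intercept plus the predictor columns, solving the
    normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))]
         for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(A[r][c]))  # partial pivoting
        A[c], A[piv] = A[piv], A[c]
        for r in range(p):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return [y[i] - sum(b * v for b, v in zip(beta, X[i])) for i in range(n)]

def forward_stepwise(y, candidates, min_abs_r=0.2):
    """candidates: dict factor name -> values in the same subject order as y.
    Returns the factors in the order they entered the model."""
    my = mean(y)
    sst = sum((v - my) ** 2 for v in y)
    resid = [v - my for v in y]
    selected = []
    while len(selected) < len(candidates):
        if sum(e * e for e in resid) <= 1e-12 * sst:
            break  # the current model already fits y exactly
        # Candidate most strongly correlated with what the model leaves unexplained
        name, r = max(((k, pearson(v, resid)) for k, v in candidates.items()
                       if k not in selected), key=lambda kv: abs(kv[1]))
        if abs(r) < min_abs_r:
            break
        selected.append(name)
        resid = ols_residuals(y, [candidates[k] for k in selected])
    return selected
```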

Neuroanatomical separation of naming and recognition. ANOVAs were performed for the labeling and pile-sorting tasks (see Fig. 4a, b; Experiments 2, 3), using as factors the side of lesion and neuroanatomically defined regions of interest that had been specified a priori and within which lesions might be expected to result in emotion recognition impairment. We chose five such regions in each hemisphere on the basis of previous studies and theoretical considerations. (1) The anterior supramarginal gyrus (Adolphs et al., 1996), (2) the ventral precentral and postcentral gyrus [somatomotor cortex primarily involving representation of the face (Adolphs et al., 1996)], and (3) the anterior insula (Phillips et al., 1997) were all chosen on the basis of the hypothesis that emotion recognition requires somatosensory knowledge (Damasio, 1994; Adolphs et al., 1996). (4) The frontal operculum was chosen because we anticipated that impairments on our task might also result from an impaired ability to name emotions. (5) The anterior temporal lobe, including the amygdala, was chosen on the basis of the amygdala's demonstrated importance in recognizing facial emotion (Adolphs et al., 1994; Young et al., 1995; Morris et al., 1996).

RESULTS

Experiment 1: mean correlations with normal ratings

In the first study, we investigated the recognition of emotion in 108 subjects with focal brain lesions that sampled the entire telencephalon, using a sensitive, standardized task that asked subjects to rate the intensity of the emotion expressed (Adolphs et al., 1994, 1995, 1996). Sampling density in different brain regions, as well as the background demographics and neuropsychology of the subjects, is summarized in Table 1. Figure 1 shows histograms of the distribution of subjects' correlations with normal ratings for each emotion, as well as their mean correlation score across all emotions (higher correlations indicate more normal ratings; lower correlations indicate more abnormal ratings). To investigate how performance might vary systematically with brain damage in specific regions, we first partitioned the entire sample of 108 subjects into two groups: the 54 with the lowest scores and the 54 with the highest scores (Fig. 1, red lines). We computed the density of lesions at each voxel in the brain for each of the two groups of subjects and then subtracted the two data sets from each other, yielding images that showed regions in which lesions were systematically associated with either a low or a high performance score.

Low performance scores, averaged across all emotions (Fig. 1, histogram on the right), were associated with lesions in right somatosensory-related cortices, including the right anterior supramarginal gyrus, the lower sector of S-I and S-II, and the insula, as well as with lesions in the left frontal operculum and, to a lesser extent, with lesions in right visual-related cortices, as reported previously (Adolphs et al., 1996). Several of these regions had overlaps of lesions from 11 or more different individual subjects who were impaired (Fig. 2a, red regions; compare with scale at top). We tested the statistical significance of these results with a very conservative approach using rerandomization (Sprent, 1998), which confirmed that lesions in several of the above regions significantly impair the recognition of facial emotion (Fig. 2a, Table 2; see Materials and Methods for further details). In particular, the right somatosensory cortex yielded a highly significant result with this analysis: out of a total of 14 subjects with lesions at the voxel in right S-I that we sampled, 13 were in the group with the lowest scores, and only 1 subject was in the group with the highest scores (Table 2). It would be extremely unlikely for such a partition to have arisen by chance (resampling, p < 0.005, Bonferroni corrected).
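As a cross-check on the resampling estimate, the chance probability at a single voxel can also be written in closed form: if 54 of the 108 subjects are assigned at random to the low-scoring group, the number of lesioned subjects who land there follows a hypergeometric distribution. The sketch below swaps in this exact calculation for the Monte Carlo procedure used in the study and assumes a Bonferroni factor of 6 (one per tested voxel); it is not expected to reproduce the p values in Table 2 exactly.

```python
from math import comb

def hypergeom_upper_tail(n_total, n_bottom, n_lesioned, n_impaired):
    """P(at least n_impaired of the n_lesioned subjects fall in a randomly
    chosen low-scoring group of size n_bottom, out of n_total subjects)."""
    n_top = n_total - n_bottom
    ways = sum(comb(n_bottom, i) * comb(n_top, n_lesioned - i)
               for i in range(n_impaired, min(n_lesioned, n_bottom) + 1))
    return ways / comb(n_total, n_lesioned)

def corrected(p, n_tests=6):
    """Bonferroni correction, capped at 1."""
    return min(1.0, p * n_tests)

# Right S-I voxel: 13 of the 14 lesioned subjects were in the bottom half.
p_s1 = corrected(hypergeom_upper_tail(108, 54, 14, 13))
```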

Table 2.

Emotion recognition in subjects with lesions at six specific voxels

| Side | Region | Total n | Impaired n | p (corrected) |
|---|---|---|---|---|
| Right | Anterior SMG | 12 | 12 | 0.0012 |
| Right | Insula | 15 | 10 | 0.268 |
| Right | Temporal pole | 11 | 3 | NS |
| Right | S-I | 14 | 13 | 0.0032 |
| Left | Temporal pole | 10 | 5 | NS |
| Left | Frontal operculum | 9 | 7 | 0.094 |

Detailed information on the performances (mean emotion recognition on Experiment 1) of all 108 subjects for each of six neuroanatomically specified voxels (4 of which are shown as the white boxes in Figure 2a; see the Fig. 2a legend for a description of how the voxels were chosen). Shown are voxel location (Region), number of subjects with lesions at the voxel (Total n), number of subjects in the bottom 50% score partition with lesions at the voxel (Impaired n), and the p value of the probability of obtaining the observed distribution of impaired/total subjects at that voxel from rerandomization. Bonferroni-corrected p values are given for those distributions in which there was a majority of impaired subjects. SMG, supramarginal gyrus; NS, not significant.

The above analysis takes advantage of our large sample size in revealing focal “hot spots” that result from additive superposition of lesions from multiple impaired subjects, together with subtractive superposition of lesions from multiple subjects who were not impaired. Although many subjects had lesions that extended beyond the somatosensory cortices, some subjects had relatively restricted lesions that corroborated our group findings. Figure 2b shows examples of right cortical lesions from two individual subjects who were in the group with the lowest performance scores and who had small lesions, as well as an example of a subject with a large lesion who was in the group with the highest scores. Both of the subjects from the low-score group had circumscribed damage restricted to the somatosensory cortices, whereas the subject with the higher score had a larger lesion that spared the somatosensory cortices.

Although the analysis shown in Figure 2a provides superior statistical power because of the large sample of subjects, we wished to complement it with a more stringent approach that did not simply divide subjects in half. We repeated the analysis shown in Figure 2a but with only half of our subjects: the bottom quartile (27 subjects) compared with the top quartile (27 subjects); the middle 54 subjects were omitted from the analysis. The results, shown in Figure 3, confirm that subjects with the lowest scores tended to have lesions involving right somatosensory-related cortices and the left frontal operculum. In the right hemisphere, the maximal overlap of impaired subjects (Fig. 3, red region) was confined to somatosensory cortices in S-I and S-II and the anterior supramarginal gyrus.

We examined the extent to which demographics or the neuropsychological profile might account for impaired emotion recognition. A multiple regression analysis showed that the only significant factor was performance IQ (t = 3.07; p < 0.003; adjusted R² = 14.5%). Importantly, verbal IQ and several measures of visuoperceptual ability (including the discrimination of face identity; compare Materials and Methods and Table 1) did not account for any significant variance on our experimental task, indicating that the impairments we report in recognizing emotion cannot be attributed to impaired language or visuoperceptual function alone.

Experiment 1: correlations for individual basic emotions

To investigate emotion recognition with respect to individual basic emotions, we examined lesion density as a function of performance for each emotion category, using the red lines shown in Figure 1 to partition the subject sample. Our procedure here was identical to that described above for the mean emotion performance: difference overlap images contrasting the top 54 with the bottom 54 subjects, like those shown in Figure 2, were obtained for each of the six basic emotions (data not shown). We found that lesions that included right somatosensory-related cortices were associated with impaired recognition for every individual emotion. Thus, visually based recognition of emotion appears to draw in part on a common set of right hemisphere processes related to somatic information. However, some emotions also appeared to rely on specific additional regions, the details of which will be described in a separate report. Notably, damage to the right, but not to the left, anterior temporal lobe resulted in impaired recognition of fear, an effect that was statistically significant (p = 0.036 by rerandomization) (Anderson et al., 1996).

Experiment 2: naming emotions

The above recognition task (Experiment 1) requires both conceptual knowledge of the emotion shown in the face and lexical knowledge necessary for providing the name of the emotion. Given the above findings, it seems plausible that right somatosensory-related cortices are most important for the former and the left frontal operculum for the latter. We directly investigated the possible dissociation of conceptual and lexical knowledge with two additional tasks that draw predominantly on one or the other of these two processes. To assess lexical knowledge, subjects chose, from a list of the names of the six emotions, the name that best matched each of the 36 faces (Young et al., 1995; Calder et al., 1996). Examination of lesion density as a function of performance on this task revealed critical regions in the bilateral frontal cortex, in the right superior temporal gyrus, and in the left inferior parietal cortex, as well as in S-I, S-II, and insula (Fig. 4a), structures also important to recognize emotions in Experiment 1 (compare Fig. 2). Performances in Experiments 1 and 2 were correlated (r = 0.46; p < 0.002; n = 44; Pearson correlation).

Experiment 3: conceptual knowledge of emotions

To investigate conceptual knowledge, we asked subjects to sort photographs of 18 of the faces (3 of each basic emotion, a subset of the 36 used above) into piles according to the similarity of the emotion displayed. Examination of lesion density as a function of performance on this task showed that right somatosensory-related cortices, including S-I, S-II, insula, and supramarginal gyrus, were important to retrieve the conceptual knowledge that is required to sort facial expressions into emotion categories (Fig. 4b). Again, these regions were also important to recognize emotions in Experiment 1, and performances in Experiments 1 and 3 were significantly correlated (r = 0.61; p < 0.0001; n = 64).

Neuroanatomical separation of performance in Experiments 2 and 3

The neuroanatomical separation of lexical knowledge (Experiment 2) and conceptual knowledge (Experiment 3) was confirmed by ANOVAs for both of these tasks, using hemisphere (right or left) and the neuroanatomical site of lesion (five regions) as factors (see Materials and Methods for details). Impaired naming (Experiment 2) was associated with lesions in the right temporal lobe, in either the right or left frontal operculum, or in the right or left supramarginal gyrus (all p values < 0.05). Impaired sorting (Experiment 3) was associated with lesions in the right insula (p < 0.001). Thus, lesions in the frontal operculum, in the supramarginal gyrus, or in the right temporal lobe (Rapcsak et al., 1993) may impair recognition of facial emotion by interfering primarily with lexical processing. Lesions in the right insula (Phillips et al., 1997) may impair recognition of facial emotion by interfering with the retrieval of conceptual knowledge related to the emotion independent of language.

Impairments in emotion recognition correlate with impaired somatic sensation

The salient finding that the recognition of emotions requires the integrity of somatosensory-related cortices predicts that emotion recognition impairments should correlate with the severity of somatosensory impairment. We found preliminary support for such a correlation in a retrospective analysis of the subjects' medical records. We examined the sensorimotor impairments of all subjects in our study who had damage to the precentral and/or postcentral gyrus (n = 17 right, 11 left). For each of these subjects, somatosensory and motor impairments were each classified on a scale of 0–3 (absent to extensive) from the neurological examination in the patient's medical record by one of us (D.T.), who was blind to the patient's performance on the experimental tasks. Subjects with right hemisphere lesions showed a significant correlation between facial emotion recognition (mean score on Experiment 1) and impaired touch sensation (r = 0.44; p < 0.05) and a trend correlation with motor impairment (r = 0.33; p < 0.1; Kendall tau correlations). When emotion recognition scores (from Experiment 1) were corrected to remove the effect of performance IQ (using the residuals of the regression of emotion recognition on performance IQ), the correlation with somatosensory impairments increased further (r = 0.48; p < 0.01), whereas the correlation with motor impairment decreased (r = 0.19; p > 0.2). There were no significant correlations in subjects with left hemisphere lesions (r values < 0.2; p values > 0.4).
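The two computations in this paragraph, removing the linear effect of performance IQ and then computing a Kendall rank correlation, can be sketched as follows. We assume the tau-b variant, which adjusts for the many ties expected on a 0–3 rating scale, and a simple one-predictor least-squares fit; the text does not state which tau variant was used, and the function names are hypothetical.

```python
import math
from statistics import mean

def residualize(y, x):
    """Residuals of y after least-squares regression on a single predictor x
    (e.g., emotion recognition score regressed on performance IQ)."""
    mx, my = mean(x), mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    intercept = my - slope * mx
    return [b - (intercept + slope * a) for a, b in zip(x, y)]

def kendall_tau_b(x, y):
    """Kendall's tau-b: (concordant - discordant) pairs, normalized for ties."""
    conc = disc = ties_x = ties_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied on both variables: excluded from all terms
            if dx == 0:
                ties_x += 1
            elif dy == 0:
                ties_y += 1
            elif (dx > 0) == (dy > 0):
                conc += 1
            else:
                disc += 1
    denom = math.sqrt((conc + disc + ties_x) * (conc + disc + ties_y))
    return (conc - disc) / denom if denom else 0.0
```

In this sketch, the IQ-corrected analysis would be `kendall_tau_b(touch_ratings, residualize(emotion_scores, piq))`, where the three argument lists are hypothetical per-subject vectors.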

DISCUSSION

Summary of findings

The findings indicate that an ostensibly visually based performance can be severely impaired by dysfunction in right hemisphere regions that process somatosensory information, even in the absence of damage to visual cortices. Specifically, lesions in right somatosensory-related cortices, notably including S-I, S-II, anterior supramarginal gyrus, and, to a lesser extent, insula, were associated with impaired recognition of emotions from human facial expressions. The significance, specificity, and robustness of the findings were addressed in three analyses: (1) a statistical analysis of all 108 patients using rerandomization showed that the neuroanatomical partitions observed were highly unlikely to have arisen by chance; (2) a more stringent comparison of subjects using only the 25% who were most impaired and the 25% who were least impaired confirmed these findings; and (3) some individual subjects had lesions restricted to somatosensory cortices and were impaired.

Furthermore, we found a significant correlation between impaired somatic sensation and impaired recognition of facial emotion; such a correlation held only for lesions in the right hemisphere and was not found in relation to motor impairments. Although this analysis relied on retrospective data from the patients' medical records, which only provided an approximate index of somatosensory cortex function, the positive finding corroborates our neuroanatomical analyses and lends further support to the hypothesis that somatosensory representation plays a critical role in recognizing facial emotion.

Finally, two follow-up tasks demonstrated a partial neuroanatomical separation between the regions critical for retrieving conceptual knowledge about the emotions signaled by facial expressions, independent of naming them, and the regions critical for linking a facial expression to the name of the emotion. A similar separation was already evident in our first task (compare Fig. 3), which showed two clearly separate hot spots: the left frontal operculum (more important for naming) and the right somatosensory-related cortices (more important for concept retrieval).

Caveats and future extensions

Both the investigation of the neural underpinnings of emotion recognition and the lesion method carry caveats (cf. Adolphs, 1999a). Recognizing an emotion from a stimulus engages multiple cognitive processes, depending on the demands and constraints of the particular task used to assess such recognition. In our primary recognition task, subjects need to perceive visual features of the stimuli, reconstruct conceptual knowledge about the emotion that those stimuli signal, link this conceptual knowledge with the emotion word used for the rating, and generate a numerical rating that reflects the intensity of the emotion with respect to that word. Our three tasks provide some further dissociation of the processes involved in emotion recognition, but future experiments could provide additional constraints, for example, by measuring reaction times or using briefly presented stimuli, to begin dissecting the component processes in more detail. Imaging methods with high temporal resolution, such as evoked potential measurements, could also shed light on the temporal sequence of these processes.

The lesion method can reveal critical roles for structures only when lesions are confined to those structures. Classically, associations between impaired performance and single structures have relied on relatively rare single-case or multiple-case studies. Our principal approach here was to combine data from a large number of patients with lesions, but most of those subjects did not have lesions confined to the somatosensory cortex. Although our statistical analysis shows that the somatosensory cortex is critical for recognizing facial emotion, it remains possible that impaired performance generally results from damage to somatosensory cortex combined with damage to surrounding regions. We addressed this issue in part by providing data from three individual subjects (Fig. 2b), but future cases with lesions restricted to somatosensory cortices will be necessary to map out the precise extent of the critical somatosensory-related sectors. The present findings provide a strong hypothesis that could be tested further with additional lesion studies or with functional imaging studies in normal subjects.

Somatosensory representation and emotion

The finding that somatosensory regions are critical for a visual recognition task might be considered counterintuitive outside of a theoretical framework in which somatosensory representations are seen as integral to processing emotions (Damasio, 1994). For subjects to retrieve knowledge regarding the association of certain facial configurations with certain emotions, we presume it is necessary to reactivate circuits that had been involved in learning about past emotional situations of a comparable category. Such situations would have been defined both by visual information from observing the faces of others and by somatosensory information corresponding to the observing subject's own emotional state. (It is also possible that certain facial expressions are linked to innate somatosensory knowledge of the emotional state, without requiring extensive learning. Furthermore, later in development, observing emotional expressions in others need not always trigger in the subject the overt emotional state with which the expression has been associated; the circuits engaged in earlier learning may be engaged covertly in the adult.)

But in addition to retrieval of visual and somatic records, the difficulty of the particular task we used may make it necessary for subjects to construct an on-line somatosensory representation by internal simulation (Goldman, 1992; Rizzolatti et al., 1996; Gallese and Goldman, 1999). Such an internally constructed somatic activity pattern would simulate components of the emotional state depicted by the target face (Adolphs, 1999a,b). Although this interpretation is speculative at this point, it is intriguing to note that a recent functional-imaging study (Iacoboni et al., 1999) found that imitation of another person from visual observation resulted in activation in the left frontal operculum and in the right parietal cortex. These two sites bear some similarity to the two main regions identified in our study. We note again that no conscious feeling need occur in the subject during simulation; the state could be either overt or covert.

We thus suggest that visually recognizing facial expressions of emotion will engage regions in the right hemisphere that subserve both (1) visual representations of the perceptual properties of facial expressions and (2) somatosensory representations of the emotion associated with such expressions, as well as (3) additional regions (and white matter) that serve to link these two components. This composite visuosomatic system could permit attribution of mental states to other individuals, in part, by simulation of their visually observed body state. Our interpretation is consonant with the finding that producing facial expressions by volitional contraction of specific muscles induces components of the emotional state normally associated with that expression (Adelmann and Zajonc, 1989; Levenson et al., 1990), and it is consonant also with the finding of spontaneous facial mimicry in infants (Meltzoff and Moore, 1983). However, it is important to note that the comprehensive evocation of emotional feelings could be achieved without the need for actual facial mimicry, by the central generation of a somatosensory image, a process we have termed the “as-if loop” (Damasio, 1994). The finding of a disproportionately critical role for right somatosensory-related cortices in emotion recognition may also be related to the observation that damage to those regions can result in anosognosia, an impaired knowledge of one's own body state, often accompanied by a flattening of emotion (Babinski, 1914; Damasio, 1994). It remains unclear precisely which components of the somatosensory cortex would be most important in our task: those regions that represent the face or additional regions that could provide more comprehensive knowledge about the state of the entire body associated with an emotion. Our data suggest a disproportionate role for the face representation within S-I (compare Figs. 2, 3) but also implicate additional somatic representations in S-II, insula, and anterior supramarginal gyrus.

The present findings extend our understanding of the structures important for emotion recognition in humans; the right somatosensory-related cortices, together with the amygdala, may function as two indispensable components of a neural system for retrieving knowledge about the emotions signaled by facial expressions. The details of how these two structures interact during development and their relative contributions to the retrieval of particular aspects of knowledge, or knowledge of specific emotions, remain important issues for future investigations. Of equal importance are the theoretical implications of our interpretation; the construction of knowledge by simulation has been proposed as a general evolutionary solution to predicting and understanding other people's actions (Gallese and Goldman, 1999), but future studies will need to address to what extent such a strategy might be of disproportionate importance specifically for understanding emotions.

Footnotes

This research was supported by a National Institute of Neurological Disorders and Stroke program project grant (A.R.D., Principal Investigator), a FIRST award from the National Institute of Mental Health (R.A.), and research fellowships from the Sloan Foundation and the EJLB Foundation (R.A.). We thank Robert Woolson for statistical advice, Jeremy Nath for help with testing subjects, and Denise Krutzfeldt for help in scheduling their visits.

Correspondence should be addressed to Dr. Ralph Adolphs, Department of Neurology, University Hospitals and Clinics, 200 Hawkins Drive, Iowa City, IA 52242. E-mail: ralph-adolphs@uiowa.edu.

REFERENCES

- 1. Adelmann PK, Zajonc RB. Facial efference and the experience of emotion. Annu Rev Psychol. 1989;40:249–280. doi: 10.1146/annurev.ps.40.020189.001341.

- 2. Adolphs R. Neural systems for recognizing emotions in humans. In: Hauser M, Konishi M, editors. Neural mechanisms of communication. MIT; Cambridge, MA: 1999a. pp. 187–212.

- 3. Adolphs R. Social cognition and the human brain. Trends Cognit Sci. 1999b;3:469–479. doi: 10.1016/s1364-6613(99)01399-6.

- 4. Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- 5. Adolphs R, Tranel D, Damasio H, Damasio AR. Fear and the human amygdala. J Neurosci. 1995;15:5879–5892. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- 6. Adolphs R, Damasio H, Tranel D, Damasio AR. Cortical systems for the recognition of emotion in facial expressions. J Neurosci. 1996;16:7678–7687. doi: 10.1523/JNEUROSCI.16-23-07678.1996.

- 7. Anderson AK, LaBar KS, Phelps EA. Facial affect processing abilities following unilateral temporal lobectomy. Soc Neurosci Abstr. 1996;22:1866.

- 8. Babinski J. Contribution à l'étude des troubles mentaux dans l'hémiplégie organique cérébrale (anosognosie). Rev Neurol. 1914;27:845–847.

- 9. Borod JC, Obler LK, Erhan HM, Grunwald IS, Cicero BA, Welkowitz J, Santschi C, Agosti RM, Whalen JR. Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology. 1998;12:446–458. doi: 10.1037//0894-4105.12.3.446.

- 10. Bowers D, Bauer RM, Coslett HB, Heilman KM. Processing of faces by patients with unilateral hemisphere lesions. Brain Cogn. 1985;4:258–272. doi: 10.1016/0278-2626(85)90020-x.

- 11. Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, Etcoff NL. Facial emotion recognition after bilateral amygdala damage: differentially severe impairment of fear. Cognit Neuropsychology. 1996;13:699–745.

- 12. Cole J. About face. MIT; Cambridge, MA: 1998.

- 13. Damasio AR. Descartes' error: emotion, reason, and the human brain. Grosset/Putnam; New York: 1994.

- 14. Damasio H, Frank R. Three-dimensional in vivo mapping of brain lesions in humans. Arch Neurol. 1992;49:137–143. doi: 10.1001/archneur.1992.00530260037016.

- 15. Darwin C. The expression of the emotions in man and animals. University of Chicago; Chicago: 1965. Reprint of the 1872 edition.

- 16. Ekman P. Darwin and facial expression: a century of research in review. Academic; New York: 1973.

- 17. Ekman P, Friesen W. Pictures of facial affect. Consulting Psychologists; Palo Alto, CA: 1976.

- 18. Frank RJ, Damasio H, Grabowski TJ. Brainvox: an interactive, multi-modal visualization and analysis system for neuroanatomical imaging. NeuroImage. 1997;5:13–30. doi: 10.1006/nimg.1996.0250.

- 19. Fridlund AJ. Human facial expression. Academic; New York: 1994.

- 20. Gallese V, Goldman A. Mirror neurons and the simulation theory of mind-reading. Trends Cognit Sci. 1999;2:493–500. doi: 10.1016/s1364-6613(98)01262-5.

- 21. Goldman A. In defense of the simulation theory. Mind Lang. 1992;7:104–119.

- 22. Gur RC, Skolnick BE, Gur RE. Effects of emotional discrimination tasks on cerebral blood flow: regional activation and its relation to performance. Brain Cogn. 1994;25:271–286. doi: 10.1006/brcg.1994.1036.

- 23. Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–2528. doi: 10.1126/science.286.5449.2526.

- 24. Levenson RW, Ekman P, Friesen WV. Voluntary facial action generates emotion-specific autonomic nervous system activity. Psychophysiology. 1990;27:363–384. doi: 10.1111/j.1469-8986.1990.tb02330.x.

- 25. Meltzoff AN, Moore MK. Newborn infants imitate adult facial gestures. Child Dev. 1983;54:702–709.

- 26. Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–815. doi: 10.1038/383812a0.

- 27. Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SCR, Gray JA, David AS. A specific neural substrate for perceiving facial expressions of disgust. Nature. 1997;389:495–498. doi: 10.1038/39051.

- 28. Rapcsak SZ, Comer JF, Rubens AB. Anomia for facial expressions: neuropsychological mechanisms and anatomical correlates. Brain Lang. 1993;45:233–252. doi: 10.1006/brln.1993.1044.

- 29. Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Cognit Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

- 30. Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39:1161–1178.

- 31. Russell JA, Fernandez-Dols JM. The psychology of facial expression. Cambridge UP; Cambridge, MA: 1997.

- 32. Sprent P. Data-driven statistical methods. Chapman and Hall; New York: 1998.

- 33. Tranel D. The Iowa-Benton school of neuropsychological assessment. In: Grant I, Adams KM, editors. Neuropsychological assessment of neuropsychiatric disorders. Oxford UP; New York: 1996. pp. 81–101.

- 34. Tranel D, Damasio AR, Damasio H. Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology. 1988;38:690–696. doi: 10.1212/wnl.38.5.690.

- 35. Young AW, Aggleton JP, Hellawell DJ, Johnson M, Broks P, Hanley JR. Face processing impairments after amygdalotomy. Brain. 1995;118:15–24. doi: 10.1093/brain/118.1.15.