Abstract

Health care report cards are intended to address information asymmetries and enable consumers to choose providers of better quality. However, the form of the information may matter to consumers. Nursing Home Compare, a website that publishes report cards for nursing homes, went from publishing a large set of indicators to a composite rating in which nursing homes are assigned one to five stars. We evaluate whether the simplified ratings motivated consumers to choose better-rated nursing homes. We use a regression discontinuity design to estimate changes in new admissions six months after the publication of the ratings. Our main results show that nursing homes that obtained an additional star gained more admissions, with heterogeneous effects depending on baseline number of stars. We conclude that the form of quality reporting matters to consumers, and that the increased use of composite ratings is likely to increase consumer response.

Keywords: Report cards, quality, star rating, nursing home, regression discontinuity

JEL classification: L15, I11, I18

I. Introduction

Public reporting of quality information through provider report cards is regarded as a potentially compelling tool to address information asymmetries and to enable consumers to choose providers of better quality. This change in consumer behavior may in turn motivate providers to improve quality of care. Public reporting for nursing homes certified by Medicare and Medicaid was first implemented in 1998 and then expanded in 2002 as Nursing Home Compare, a web-based portal that publishes quality information. As in other health care sectors, however, the empirical evidence on the effects of quality reporting on Nursing Home Compare has been mixed. Because research to date indicates a small and somewhat inconsistent response of consumers to public reporting, policy efforts are now focused on finding ways to increase consumer use of report cards.

While there are numerous required steps for report cards to be effective (Hibbard et al. 2002, Faber et al. 2009) and potential barriers associated with each step, much attention has focused on making report card information more understandable to consumers. Insights from the fields of cognitive psychology and decision science indicate that presenting consumers with less information may increase its salience and thus its impact on decision making (Peters et al. 2007). These principles have been supported by experimental studies evaluating the impact of public reporting formats (Peters et al. 2007, Schultz et al. 2001, Hibbard et al. 2002, Palsbo and Kroll 2007, Sibbald et al. 1996). Research has also found that consumers have difficulty both processing the large number of quality metrics that are often included in report cards (Peters et al. 2007, Schultz et al. 2001) and understanding the relationship between these quality metrics and a provider’s overall quality (Sibbald et al. 1996). Additional research has found that patients often prefer composite scores (summary measures) which both decrease the cognitive burden of using report cards and are easier to interpret (Hibbard et al. 2002, Peters et al. 2007).

In recent years, policymakers started using composite measures to display providers’ overall quality information in a simpler manner. Composite scores have the advantage of presenting less information while representing a large range of quality attributes. They may thus increase consumer comprehension and use of report cards to identify and choose high-quality providers. Furthermore, if providers expect or observe that consumers are more likely to use the simplified report cards, they may have more incentive to make investments that improve quality of care, particularly those aspects of quality that are captured in the composite ratings.

Accordingly, the Centers for Medicare and Medicaid Services (CMS) made significant changes to Nursing Home Compare in December 2008. It went from publishing sets of clinical outcome measures, staffing measures, and health inspection deficiency citations to a composite rating in which nursing homes are assigned stars, from one star indicating quality “much below average” to five stars indicating quality “much above average.” The five-star rating system gives consumers a simplified look at nursing home quality using a visual representation (stars) to describe overall performance. It summarizes nursing home performance for both long-stay and post-acute patients in a single measure. Although it is still possible to find the individual measures on the Nursing Home Compare site, the star ratings now overshadow the individual measures they combine, appearing first and much more prominently. Prior work finds that when consumers use report cards with summary measures such as star ratings, most report card viewers visit the summary page with the star ratings but do not click through to the more detailed pages (Rainwater et al. 2005). Furthermore, in two qualitative studies about Nursing Home Compare’s five-star ratings, both consumers and providers stated that rating users mostly pay attention to the overall number of stars (Konetzka et al. 2015, Perraillon, Brauner, and Konetzka 2017). This evidence suggests that once the five-star rating system was implemented, consumers focused primarily on the overall star rating rather than on the individual measures.

Evidence on the effects of the Nursing Home Compare system on consumer behavior prior to the introduction of the five-star ratings was mixed, in part due to the challenge of identifying causal effects when policies are implemented on a national basis without a control group. Grabowski and Town (2011) found no meaningful effect on market share, suggesting that consumers did not choose nursing homes based on the publication of quality measures. On the other hand, Werner et al. (2012) found that patients were more likely to choose facilities with higher reported post-acute care quality after the initial publication of the quality measures but the magnitude of the effect was small. Because of the complexity of the initial Nursing Home Compare web portal, these results may reflect consumers’ lack of knowledge about Nursing Home Compare or a limited understanding of the information reported (Konetzka et al. 2015). Physicians and other hospital employees, particularly hospital discharge planners, are potentially more likely than consumers to use Nursing Home Compare when suggesting nursing homes for their clients. One survey (Bearing 2004) found that 28 percent of hospital discharge planners used Nursing Home Compare as part of their discharge planning.

Evidence on response to the five-star system is still sparse. To our knowledge, just one paper has examined consumer response to the five-star system (Werner, Konetzka, and Polsky 2016). That paper used a discrete choice conditional logit model to compare consumers’ choice of nursing homes by star rating before and after the five-star system was implemented, calculating five-star scores prior to implementation to control for prior consumer knowledge. Werner and colleagues found that one-star facilities lost market share and five-star facilities gained market share under the five-star system. However, like prior work on Nursing Home Compare, the analysis was limited in its ability to draw causal inference due to the pre-post design with no natural experiment to rely on for a control group. In addition, the design made it difficult to assess consumer choice in the middle groups of quality, since those nursing homes could be gaining market share relative to lower-rated homes and losing market share to higher-rated homes, and only the net effect could be measured.

The objective of this paper is to evaluate whether reporting simplified and composite ratings causes consumers to choose higher-quality nursing homes. To estimate causal effects, we employ a regression discontinuity design comparing nursing homes that are close to one of the four cut-off points in the score used to assign stars, exploiting the fact that star assignment close to a cut-off point can be viewed as random. Thus, we ask a different question than the paper by Werner and colleagues; rather than assessing effects on overall market share, we assess consumer choice between nursing homes of adjacent star levels to evaluate whether an additional star makes a difference in choices at the margin. We evaluate the change in the number of admissions six months after the launch of the new ratings and find that gaining an extra star generally leads to an increase in new admissions. However, we find that effects are heterogeneous by baseline level of quality in that obtaining 2 stars instead of 1 star has no effect on admissions, but admissions increase for nursing homes receiving 4 stars (instead of 3) or 5 stars (instead of 4). These effects are larger in nursing homes that are not fully occupied and in more competitive markets. We present evidence that the assumptions of the regression discontinuity design are met and conduct multiple robustness checks of our results. Although our primary interest in the regression discontinuity estimation is to assess reaction to the reported ratings, regardless of whether the ratings reflect actual quality of care, we also present evidence that suggests that the new five-star ratings have some face validity. We find that the five-star ratings are correlated with factors known to be associated with the quality of care in nursing homes. In particular, we find that low-rated nursing homes have a larger proportion of Medicaid patients, are larger, are located in areas of lower household income, and tend to be for-profit. 
Thus, a switch to more highly rated nursing homes may be welfare-improving. We also discuss how these characteristics may help to explain the heterogeneity of our estimated effects.

II. Institutional Details and Background

In this section, we describe the details of the Nursing Home Compare system and the algorithms used to assign the new star ratings, an understanding of which is important to evaluate the plausibility of our regression discontinuity approach.

A. NURSING HOME COMPARE

In 1998 CMS introduced a website portal called Nursing Home Compare to provide consumers with quality information for all CMS-certified nursing homes, which account for over 95 percent of all facilities in the country (National Center for Health Statistics 2004). The initial release of Nursing Home Compare published information on the characteristics of nursing homes, such as ownership status, size, Medicare/Medicaid participation, and health-related deficiencies cited at on-site inspections. Over the years, more information was added. Nurse and nurse aide staffing levels were added in 2000 as part of a Nursing Home Quality Initiative by the Department of Health and Human Services. Clinical quality measures were added in 2002 after an initial pilot test in six states. The quality measures are expressed as the percent of residents in a nursing home experiencing particular adverse events, calculated separately for long- and short-stay residents. By 2002, Nursing Home Compare included a total of ten quality measures across the long- and short-stay populations. These measures included the percent of residents with pressure ulcers, loss of ability in basic daily activities, urinary tract infections, excessive weight loss, and moderate to severe pain, among others (Grabowski and Town 2011, Werner et al. 2012). By 2008, the number of clinical quality measures had expanded to include 19 measures (Mukamel et al. 2008).

B. FIVE-STAR COMPOSITE RATINGS

In December 2008, recognizing that the numerous and disparate measures on the Nursing Home Compare website might present an obstacle to use by consumers, CMS released the new composite summary rating for nursing homes. The new Nursing Home Compare website (Centers for Medicare and Medicaid Services 2014) reports composite star ratings at the nursing home level; thus, the new ratings are not calculated separately for long- and short-stay residents. From the start of the development of the new rating system, the intention was not to change the underlying content used for ratings but to incorporate all the available dimensions into summary measures to make the information easier to use (Williams et al. 2010).

In addition to the overall number of stars, the new website presents star ratings for the subcategories of staffing, health inspections, and quality measures. The number of stars a nursing home is assigned in each domain is based on continuous scores and cut-off points that determine the number of stars. Stars in each domain are then aggregated into a single overall five-star rating. In determining the overall number of stars, the health inspection rating is the most important domain. The health inspection score is based on a modified version of the Special Focus Facility (SFF) algorithm developed to identify nursing homes that have a greater number of quality problems, more serious problems than average, and demonstrated pattern of quality problems (Centers for Medicare and Medicaid Services 2012, Castle and Engberg 2010). The algorithm assigns points to health inspection deficiencies (violations). To be certified to admit Medicare or Medicaid residents, nursing homes must meet requirements set by Congress, which are enforced at the state level. On-site health inspections certify that nursing homes comply with hundreds of regulatory standards, from the handling and storage of food to ensuring that residents are protected from physical or mental abuse. Each nursing home is required to have an initial inspection to be certified, with additional unannounced visits carried out approximately once a year, although poor-performing nursing homes may receive additional visits and some visits are conducted to investigate consumer complaints. The total number of points a nursing home receives for the inspections domain is based on the scope of the violation (isolated, pattern, or widespread) and its severity (immediate jeopardy to resident health or safety, actual harm that is not immediate jeopardy, no actual harm with potential for more than minimal harm, and no actual harm with potential for minimal harm). 
The total number of points is calculated using the three most recent inspection surveys, with the most recent surveys given larger weights. Stars are then assigned using cut-off points in the percentiles of the distribution of health inspection scores at the state level, since inspection standards, and therefore total points, may vary substantially between states. Within a state, the 10 percent of nursing homes with the lowest scores receive five stars, while the 20 percent of nursing homes with the highest scores receive one star. The remaining 70 percent are equally divided among two, three, and four stars. Thus, for the inspection domain, the cut-off points separating 5 from 4, 4 from 3, 3 from 2, and 2 from 1 stars were set to percentiles 10, 33.33, 56.66, and 80, respectively. If a nursing home is exactly at the threshold, that nursing home receives the higher star.
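The mapping from a within-state percentile to inspection-domain stars can be sketched in a few lines. This is a minimal illustration of the rule described above, not CMS code; the function name `inspection_stars` and the constant `CUTOFFS` are hypothetical, and the percentile is assumed to be already computed so that lower values correspond to fewer deficiency points.

```python
# Cut-off percentiles from the text, paired with the star level awarded
# at or below each cut-off (lower percentile = fewer deficiency points).
CUTOFFS = [(10.0, 5), (33.33, 4), (56.66, 3), (80.0, 2)]


def inspection_stars(percentile):
    """Return inspection-domain stars for a within-state percentile (0-100).

    A home exactly at a threshold receives the higher star, hence `<=`.
    """
    for cutoff, stars in CUTOFFS:
        if percentile <= cutoff:
            return stars
    return 1  # worst 20 percent of scores within the state


if __name__ == "__main__":
    for p in (5.0, 10.0, 10.01, 56.66, 80.0, 95.0):
        print(p, inspection_stars(p))
```

Because the cut-offs are percentiles of the state distribution, a home's star level depends on the scores of every other home in its state, which matters for the manipulation argument made later.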

Stars for the staffing domain are based on case-mix-adjusted measures of staffing levels. At the time of the on-site health inspection, nursing homes self-report their total staffing hours over the two weeks prior. CMS does not verify the accuracy of the reported data, although it uses algorithms to identify facilities with unreliable staffing or resident census data which are then omitted from reporting (Williams et al. 2010). Total staffing hours are converted to staff hours per resident per day and adjusted for case-mix of patients, relying upon the case-mix system developed for prospective payment by Medicare and Medicaid. The final staffing score a nursing home receives depends on its reported staffing levels compared to the expected national average. Stars are assigned based on both the distribution of this score and thresholds determined by a study of staffing levels and quality of care (Kramer 2001).

For the quality measures domain during the time period of our study, a score is calculated using ten of the quality measures published on Nursing Home Compare (seven for long-stay and three for short-stay residents). A weighted average of the last three quarters of data is calculated, where the weights are a function of the number of residents who are eligible for each of the measures. Points are calculated based on quintiles of the weighted quality measure and summed across the ten measures. Stars are then assigned based on the distribution of summed points, much like the health inspection stars.

The overall number of stars a nursing home ultimately receives follows a hierarchical set of rules. The starting point is the number of stars a nursing home obtains on the health inspection domain. One star is added if the staffing rating is four or five stars and greater than the health inspection rating, while one star is subtracted if the staffing rating is one star. To the resulting number of stars, one is added if the quality measure rating is five stars, while one is subtracted if the quality rating is one star. Furthermore, nursing homes in the SFF program cannot receive more than three stars on the overall rating. If the health inspection rating is only one star, a facility cannot be upgraded by more than one star based on ratings on the quality and staffing domains. A consequence of this algorithm is that the health inspection rating is the most important domain, followed by staffing and quality measures ratings. As explained by Williams et al. (2010), “CMS wanted the health inspection domain to play a predominant role in determining the overall [five-star] rating” because “independent surveyors carry out the health inspection; thus, the data should be more objective and unbiased.” In contrast, staffing and quality measures are based on data self-reported by nursing homes with little or no verification by CMS. Detailed information on how the ratings were calculated is available in several technical guides published by CMS (Centers for Medicare and Medicaid Services 2008, 2012, Williams et al. 2010).
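The hierarchical rules above can be written as a short function. This is a sketch under stated assumptions rather than the CMS implementation: the name `overall_stars` and its arguments are hypothetical, and the sketch assumes that the rating is kept within the one-to-five range after each step, which the text implies but does not spell out.

```python
def clamp(stars):
    """Keep a rating inside the 1-5 range of the scale (assumed at every step)."""
    return max(1, min(5, stars))


def overall_stars(inspection, staffing, quality, sff=False):
    """Combine domain ratings (each 1-5) into an overall rating.

    Follows the rules as described in the text; `sff` flags a Special
    Focus Facility.
    """
    stars = inspection  # starting point: health inspection rating

    # Staffing step: +1 if staffing is 4 or 5 and above the inspection
    # rating, -1 if staffing is one star.
    if staffing >= 4 and staffing > inspection:
        stars += 1
    elif staffing == 1:
        stars -= 1
    stars = clamp(stars)

    # Quality measure step: +1 for five stars, -1 for one star.
    if quality == 5:
        stars += 1
    elif quality == 1:
        stars -= 1
    stars = clamp(stars)

    # A one-star inspection rating can be upgraded by at most one star.
    if inspection == 1:
        stars = min(stars, 2)

    # Special Focus Facilities cannot exceed three stars overall.
    if sff:
        stars = min(stars, 3)
    return stars


if __name__ == "__main__":
    print(overall_stars(4, 3, 5))            # inspection 4, high quality rating
    print(overall_stars(1, 5, 5))            # upgrade capped for one-star inspection
    print(overall_stars(5, 5, 5, sff=True))  # SFF cap
```

In this sketch the staffing and quality domains can move the overall rating by at most one star each, which is why the inspection domain dominates the result.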

III. Conceptual Framework

In a standard utility framework, we assume that consumers (residents and their agents) decide whether to use nursing home care as a function of need (health status), availability of alternatives such as family care or home care, and price relative to the alternatives. Contingent on a decision to receive nursing home care, the choice of a particular nursing home is a function of that nursing home’s perceived quality, price, proximity to home/family, and bed availability relative to other homes in the local market (including bed availability specific to a payer, such as Medicaid). Because the majority of nursing home residents pay for their nursing home care with public insurance at administratively set prices, price is not relevant to many prospective residents. In this context, nursing home quality should be positively related to demand.

However, as in other health care markets, the nursing home market is subject to imperfect and asymmetric information (Arrow 1963). Consumers are not able to accurately determine the quality of care they would receive before they choose a nursing home. In the framework proposed by Dranove and Satterthwaite (1992), nursing homes select both price and quality to maximize profits, and consumers make decisions that maximize utility, which also depends on price and the quality of care. Because consumers cannot accurately determine true quality a priori, nursing homes face a demand that is relatively inelastic with respect to quality. Under this framework, interventions such as public reporting increase the precision with which consumers observe the quality of care in nursing homes and lead to more elastic demand with respect to quality. Thus, public reporting should lead to consumer choice of higher-quality providers and to a higher optimal level of quality provided.

Similarly, consumer responsiveness should increase with the release of the five-star ratings given that it was a significant change in the way CMS reports quality of care. The new ratings allow consumers to directly rank and compare nursing homes in a simpler manner. Previously, consumers were able to compare a selected quality measure to those of other nursing homes within a state. Consumers, however, were not able to compare all the information available on the website at the same time in a systematic way, and no attempt was made at ranking nursing homes. Because the new star ratings are prominently displayed on Nursing Home Compare and evidence indicates that consumers prefer summary ratings, we expect that better rated nursing homes will gain admissions after the release of the ratings, even though public reporting, in a more complicated manner, previously existed.

We do not expect, however, a uniform response across star levels. Increased consumer responsiveness to the simplified ratings could be heterogeneous because low- and high-quality nursing homes operate in markets with different types of consumers. Because Medicaid residents are generally less educated than private-pay and Medicare residents, they may be less likely to access web-based quality ratings and thus may be less likely to choose nursing homes of better quality (Konetzka and Perraillon 2016, Perraillon, Brauner, and Konetzka 2017). Other important factors limiting choice for some consumers may be the limited supply of high-quality nursing homes in low-income areas or some nursing homes in these areas being at full capacity (He and Konetzka 2015, Konetzka et al. 2015). On the other hand, a simplified summary rating based on stars may be helpful to less educated individuals because it is easier to understand.

IV. Methods

A. DATA

Data for this study come from several sources. The first is the nursing home Minimum Data Set (MDS), which contains detailed clinical data collected at regular intervals for every resident in a Medicare and/or Medicaid certified nursing home. Since 1998, data on residents’ mental status, psychological well-being, and physical functioning have been collected electronically. Data from the MDS are used by CMS to calculate Medicare prospective reimbursement rates and are the basis for the quality measures reported by Nursing Home Compare. The MDS is also used by nursing homes to develop a care plan that considers residents’ comorbidities, strengths, and residual capacity (Mor 2005). We use the MDS to calculate the total number of new admissions for each nursing home before and after the release of the five-star ratings. In addition, we use MDS data to obtain demographic and clinical characteristics of residents in each nursing home, including age, race, ethnicity, educational attainment, Cognitive Performance Score (CPS) (Morris et al. 1994), and difficulties with Activities of Daily Living (ADL). We use the number of total admissions in 2008 to calculate the Herfindahl–Hirschman Index (HHI) at the county level. The second source of data is the Online Survey Certification and Reporting (OSCAR) database, maintained by CMS in cooperation with state surveying agencies. OSCAR contains all the data elements from the on-site state inspections. We use data from 2007 and 2008 to obtain baseline information at the nursing home level, including total number of beds, number of residents, ownership status (for-profit, non-profit, or government-owned), chain status, urban indicator, and whether a nursing home is based in a hospital. The last source of data contains the unpublished underlying scores and thresholds that were used to determine the number of stars, obtained through a special request to CMS.
These data include both the continuous scores for the inspection domain and the continuous percentiles based on those scores, which were calculated to several decimal places. We merged all three datasets by nursing home identifier and time.
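For readers unfamiliar with the HHI, the county-level calculation from admission counts can be sketched as follows. This is an illustrative sketch only: the function name `county_hhi` and the input layout (county, facility identifier, number of admissions) are hypothetical, and the index is expressed on a 0 to 1 scale, where 1 indicates a single provider in the county.

```python
from collections import defaultdict


def county_hhi(admissions):
    """Compute the Herfindahl-Hirschman Index per county.

    `admissions` is an iterable of (county, facility_id, n_admissions)
    tuples. The HHI is the sum of squared market shares, where a
    facility's share is its admissions over total county admissions.
    """
    totals = defaultdict(float)
    for county, _facility, n in admissions:
        totals[county] += n

    hhi = defaultdict(float)
    for county, _facility, n in admissions:
        if totals[county] > 0:  # skip counties with no admissions
            hhi[county] += (n / totals[county]) ** 2
    return dict(hhi)


if __name__ == "__main__":
    data = [("A", "h1", 50), ("A", "h2", 50), ("B", "h3", 10)]
    print(county_hhi(data))
```

Two equally sized facilities give an HHI of 0.5, and a monopoly county gives 1.0, so lower values indicate more competition.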

B. EMPIRICAL STRATEGY

Our empirical strategy takes advantage of quasi-randomization close to the cut-off points of the score used to assign stars. Because nursing homes could not precisely manipulate how close their scores were to one of the four cut-off points, the number of stars received (i.e., treatment) close to a cut-off point can be viewed as randomly assigned. Close to a cut-off point, nursing homes should be similar in all their pre-intervention characteristics. Accordingly, we use a regression discontinuity design (Lee and Lemieux 2009, Imbens and Lemieux 2008) to estimate the effect of the new five-star rating on subsequent new admissions at each of the four cut-off points, thus estimating the causal effect of obtaining an additional star (relative to not obtaining that additional star).

As with all regression discontinuity designs, the assumption of no precise manipulation is particularly important because if nursing homes could determine with precision on which side of a cut-off point they would fall, some nursing homes could have made small improvements to obtain an extra star. A consequence of this form of self-selection is that nursing homes above and below a cut-off point would not be similar. However, there are several reasons to believe that this assumption is satisfied in our study. First, CMS did not make public the complex algorithm it used to create the ratings before the ratings were posted on the Nursing Home Compare portal for the first time. Second, to manipulate their relative position, nursing homes not only needed to accurately calculate their own score, they also needed to precisely calculate the scores of all other nursing homes in their state, since star ratings depend on the distribution of scores within a state. Third, the data that are required to replicate the ratings were not available to nursing homes before the ratings were published. Finally, even if nursing homes knew their relative score with precision, they could not take any action to exceed a particular cut-off point before a subsequent health inspection, which occurs approximately once a year. Therefore, the identifying assumption of no precise manipulation near a cut-off point is plausible in our study.

To evaluate consumers’ response while accounting for the structure and form of the data, we estimate local random-effects (also known as mixed-effects) Poisson models for each of the four cut-off points (Cameron and Trivedi 2013; Rabe-Hesketh and Skrondal 2008). For each cut-off point, we estimate the following model separately:

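$$
\log \mathrm{E}\left[adm_{ist} \mid T_{ist}, year_t, X_{is}, Z_{ist}, u_s, v_{is}\right] = \beta_0 + \beta_1 T_{ist} + \beta_2\, year_t + X_{is}'\gamma + Z_{ist}'\delta + u_s + v_{is} \qquad (1)
$$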

where $adm_{ist}$ is the count of new admissions for nursing home $i$ in state $s$ at time $t$. When $t=0$, $adm_{is0}$ is the count of admissions from January to June 2008, and when $t=1$, $adm_{is1}$ is the number of admissions in the same period in 2009 (after the release of the five-star ratings). $year$ is a dummy variable equal to 1 after the release of the five-star ratings. $T_{ist}$ is a dummy variable equal to 1 if a nursing home received an additional star at $t=1$ and zero if it did not receive an additional star in that period. In the pre-period, $T$ is equal to zero for all nursing homes. For example, when analyzing nursing homes that received either 4 or 5 stars, $T$ equals 1 for those nursing homes that received 5 stars and is zero otherwise. Consequently, the causal effect (in the log scale) of obtaining an additional star is given by the coefficient $\beta_1$, holding other factors constant. Note that this specification is analogous to a difference-in-differences model in which the pre-period number of admissions is the same in treated and control nursing homes. Vector $Z_{ist}$ includes key control variables that are time-variant (payer mix and the demographic and clinical characteristics of residents), and vector $X_{is}$ includes those that are, in the short run, time-invariant (ownership, size, chain status, HHI, and number of stars in the quality and staffing domains). The variables $u_s$ and $v_{is}$ are random effects at the state and nursing home level, respectively, which take into account the within-state and within-nursing-home correlation as well as overdispersion of the count outcome.
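Because the Poisson model is estimated on the log scale, the coefficient on the treatment indicator is easier to read once it is converted into a percent change in expected admissions. The helper below is a small illustrative sketch; the function name is hypothetical, and the coefficient values shown are made up for illustration and are not estimates from this study.

```python
import math


def percent_change_in_admissions(beta1):
    """Convert a log-scale Poisson coefficient into a percent change.

    exp(beta1) is the ratio of expected admissions with versus without
    the additional star, holding other covariates fixed.
    """
    return (math.exp(beta1) - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical coefficient values, for illustration only.
    for b in (0.0, 0.05, -0.05):
        print(b, round(percent_change_in_admissions(b), 2))
```

For small coefficients the percent change is close to 100 times the coefficient, but the exact conversion matters as effects get larger.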

In our main models, we also include the “assignment” or “running” variable; that is, the variable that CMS used to assign the number of stars, which we explain in more detail below. As is standard practice in regression discontinuity models, we centered the assignment variable at each of the four cut-off points by subtracting the threshold value from the assignment variable. In this way, the treatment effect is estimated at each of the thresholds or discontinuities (since the centered assignment variable equals zero at the threshold). Following Gelman and Imbens (2014), we considered polynomials up to the second degree in the specification of the assignment variable. We present results controlling for the running variable linearly (in the log scale) because in most models a quadratic term neither improved fit, as measured by the Bayesian Information Criterion, nor affected our estimates of treatment effects.
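The centering step and the treatment indicator can be illustrated with a short sketch. It assumes the running variable is the within-state inspection percentile, where lower values are better and a home exactly at the threshold receives the higher star, as described in Section II; the function names are hypothetical.

```python
def centered_running_variable(percentile, cutoff):
    """Subtract the threshold so the centered variable is zero at the cut-off."""
    return percentile - cutoff


def received_additional_star(percentile, cutoff):
    """Treatment indicator: at or below the cut-off earns the higher star.

    Lower inspection percentiles indicate fewer deficiency points, so the
    treated side of the discontinuity is where the centered variable is <= 0.
    """
    return centered_running_variable(percentile, cutoff) <= 0


if __name__ == "__main__":
    cutoff = 10.0  # cut-off between 4 and 5 stars
    for p in (8.5, 10.0, 12.0):
        print(p, centered_running_variable(p, cutoff),
              received_additional_star(p, cutoff))
```

With this centering, the coefficient on the treatment indicator is read as the jump in the outcome exactly at the threshold.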

In sharp regression discontinuity designs, treatment assignment is known and depends on an observable and continuous variable. Even though percentiles of the inspection score determine the number of stars (i.e. the assignment or running variable) for the inspection domain, which is the starting point for the overall number of stars, the overall number of stars a nursing home ultimately receives may be affected by the number of stars in the staffing and quality measures domains if nursing homes receive very low or very high ratings on those domains. To accommodate this feature of the ratings, we use two strategies. First, when the overall number of stars is the same as the stars in the health inspection domain, the assignment variable is solely based on the percentiles of the health inspection score. Our main analyses use this sub-sample of nursing homes, prioritizing the internal validity of our empirical strategy in that the assumptions of the regression discontinuity design are met with most confidence in this subset of observations. This sub-sample accounts for about 60 percent of nursing homes. Second, as use of the subsample may sacrifice some external validity, we also present results using the full set of nursing homes. To do so, we created an adjusted assignment variable that preserves the distance to an inspection cut-off point but changes the treatment level (i.e. the overall number of stars) if ratings in the staffing and quality domains change the overall number of stars. For example, a nursing home that obtained four stars based on the inspection domain and was 2 percentiles above the 3–4 cut-off point could have actually obtained five overall stars if it received a high rating in the quality domain. Therefore, this nursing home’s overall “treatment” level is five stars versus four stars, but in the adjusted assignment variable, the distance above the 4–5 cut-off point is still 2 percentile points. 
This method reflects the fact that the number of stars in the staffing and quality domains does not change the distance to a cut-off point but may change the overall number of stars. In these models, we do not control for the running variable, as adjusted assignment is based on a mix of the inspection-based percentile and these shifts for staffing and clinical quality. Models using the full sample are intended to shed light on whether the results from the main sub-sample may be generalizable to the broader population of nursing homes.
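One possible reading of the adjusted assignment variable is sketched below. It is an interpretation for illustration, not the authors' code: the function name, the choice of the nearest inspection cut-off, and the handling of homes whose shifted comparison falls outside the one-to-five scale (returned as `None`) are all assumptions. Distance is signed so that positive values lie on the higher-star side of the cut-off.

```python
# Inspection cut-offs from the text: (percentile, lower star, higher star).
CUTOFFS = [(10.0, 4, 5), (33.33, 3, 4), (56.66, 2, 3), (80.0, 1, 2)]


def adjusted_assignment(percentile, inspection_stars, overall_stars):
    """Keep the distance to the nearest inspection cut-off, shift the stars.

    Returns a dict with the adjusted star comparison, the signed distance
    (positive = higher-star side, since lower percentiles are better), and
    whether the home is on the treated (higher overall star) side.
    """
    cutoff, low, high = min(CUTOFFS, key=lambda c: abs(percentile - c[0]))
    distance = cutoff - percentile

    # Staffing and quality ratings move the star levels, not the distance.
    shift = overall_stars - inspection_stars
    low, high = low + shift, high + shift
    if low < 1 or high > 5:
        return None  # assumption: no valid comparison on the 1-5 scale

    return {
        "comparison": (low, high),
        "distance": distance,
        "treated": overall_stars == high,
    }


if __name__ == "__main__":
    # The example from the text: four inspection stars, 2 percentile
    # points above the 3-4 cut-off, upgraded to five overall stars.
    print(adjusted_assignment(31.33, inspection_stars=4, overall_stars=5))
```

Under this reading, the example home enters the 4 versus 5 comparison as treated, still 2 percentile points from the threshold.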

We first estimate models using a bandwidth of 3 percentile points around a cut-off point and check whether nursing homes around a cut-off point have similar baseline characteristics. In addition to our main specification, we conduct multiple sub-analyses and robustness checks. First, we run the analysis on two subsamples where we expect the results to be stronger: 1) highly competitive counties (HHI < 0.25), because consumers have more choice in these counties; and 2) nursing homes with less than 95 percent occupancy, because nursing homes at full or near-full occupancy are less able to accept more admissions even if demand increases. Second, we repeat our main analysis separating the outcome, count of new admissions, into post-acute and long-stay admissions, because we expect potentially different responses by type of care needs. Since we cannot directly observe whether an admission is post-acute or long-stay, we use payment source (Medicare versus non-Medicare) as a proxy, since most post-acute stays are paid by Medicare. Using MDS 2.0 admissions assessments, Medicare admissions can be identified reliably, but non-Medicare admissions include a mix of Medicaid, private-pay, and other sources, which cannot be reliably distinguished. Finally, we conduct sensitivity analyses using both narrower and wider bandwidths. A narrower bandwidth compares a smaller set of nursing homes, with the advantage that these nursing homes are more likely to be similar in both observed and unobserved characteristics. On the other hand, a wider bandwidth increases the sample size at the risk of comparing nursing homes that differ in important characteristics related to the outcome.
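The sample restrictions described above amount to a few filters applied around each cut-off. The sketch below is illustrative only: the `NursingHome` record, its field names, and the choice to include homes exactly at the edge of the bandwidth are assumptions, with HHI and occupancy expressed on a 0 to 1 scale.

```python
from dataclasses import dataclass


@dataclass
class NursingHome:
    percentile: float  # within-state inspection-score percentile
    hhi: float         # county HHI, 0-1 scale
    occupancy: float   # share of certified beds occupied, 0-1 scale


def analysis_sample(homes, cutoff, bandwidth=3.0,
                    competitive_only=False, below_capacity_only=False):
    """Select homes within `bandwidth` percentile points of `cutoff`.

    Optional flags apply the two sub-analyses: highly competitive
    counties (HHI < 0.25) and homes under 95 percent occupancy.
    """
    sample = []
    for home in homes:
        if abs(home.percentile - cutoff) > bandwidth:
            continue
        if competitive_only and not home.hhi < 0.25:
            continue
        if below_capacity_only and not home.occupancy < 0.95:
            continue
        sample.append(home)
    return sample


if __name__ == "__main__":
    homes = [
        NursingHome(9.0, 0.10, 0.80),
        NursingHome(12.5, 0.40, 0.97),
        NursingHome(20.0, 0.10, 0.80),
    ]
    print(len(analysis_sample(homes, cutoff=10.0)))
    print(len(analysis_sample(homes, cutoff=10.0, competitive_only=True)))
```

Changing `bandwidth` reproduces the sensitivity analyses: a smaller value trades sample size for comparability of the homes on either side of the cut-off.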

V. RESULTS

Table 1 shows baseline characteristics for all the nursing homes (N=15,584) that received a Nursing Home Compare star rating in December 2008 and had matching information in both the MDS and OSCAR datasets (99 percent of rated facilities). Top-rated (five-star) nursing homes tend to have a larger share of Medicare and other payers (mostly private payers) than low-rated facilities, which tend to have a larger share of Medicaid residents. Top-rated facilities are smaller in both baseline census of residents and number of certified beds, while having a higher occupancy rate. More top-rated nursing homes are hospital-based than low-rated nursing homes. The payer mix, occupancy, and hospital-based statistics reflect the fact that top-rated nursing homes are more likely to have a higher proportion of post-acute than long-stay patients. Low-rated nursing homes are more likely to be part of a chain, with a difference of 24 percentage points between one-star and five-star nursing homes. Low-rated nursing homes are also much more likely to be for-profit than top-rated facilities (82.3 versus 51.2 percent for one-star and five-star nursing homes, respectively). In terms of demographic characteristics, top-rated facilities have a smaller proportion of black residents and a higher proportion of residents with more than high school completed. No appreciable differences are observed in terms of case-mix variables, such as CPS and ADL.

Table 1.

Baseline characteristics by star, all nursing homes

| Characteristic | 1 star: Mean | 1 star: SD | 2 stars: Mean | 2 stars: SD | 3 stars: Mean | 3 stars: SD | 4 stars: Mean | 4 stars: SD | 5 stars: Mean | 5 stars: SD |
|---|---|---|---|---|---|---|---|---|---|---|
| N | 3560 | | 3187 | | 3350 | | 3657 | | 1830 | |
| Payer | | | | | | | | | | |
| Medicaid | 0.66 | 0.16 | 0.64 | 0.20 | 0.59 | 0.22 | 0.55 | 0.27 | 0.55 | 0.29 |
| Medicare | 0.14 | 0.10 | 0.14 | 0.12 | 0.16 | 0.17 | 0.18 | 0.22 | 0.16 | 0.22 |
| Other | 0.20 | 0.13 | 0.22 | 0.15 | 0.24 | 0.17 | 0.27 | 0.20 | 0.29 | 0.24 |
| Residents | 103.04 | 51.30 | 98.29 | 60.52 | 89.98 | 56.58 | 80.62 | 61.55 | 71.22 | 53.67 |
| Beds | 125.72 | 59.15 | 118.14 | 68.06 | 108.86 | 63.99 | 96.46 | 67.86 | 84.80 | 61.29 |
| Occupancy | 0.82 | 0.15 | 0.83 | 0.15 | 0.83 | 0.16 | 0.83 | 0.17 | 0.84 | 0.19 |
| Occupancy > 0.95 | 0.17 | 0.37 | 0.21 | 0.40 | 0.22 | 0.41 | 0.23 | 0.42 | 0.31 | 0.46 |
| HHI | 0.20 | 0.23 | 0.20 | 0.24 | 0.21 | 0.24 | 0.22 | 0.25 | 0.19 | 0.22 |
| HHI < 0.25 | 0.72 | 0.45 | 0.72 | 0.45 | 0.69 | 0.46 | 0.69 | 0.46 | 0.73 | 0.45 |
| Urban | 0.69 | 0.46 | 0.67 | 0.47 | 0.64 | 0.48 | 0.62 | 0.49 | 0.64 | 0.48 |
| Special focus facility | 0.03 | 0.16 | 0.01 | 0.10 | 0.00 | 0.06 | - | | - | |
| Area income | 40.32 | 13.10 | 41.32 | 15.08 | 41.70 | 14.86 | 41.82 | 15.19 | 43.47 | 17.20 |
| Chain | 0.65 | 0.48 | 0.56 | 0.50 | 0.55 | 0.50 | 0.47 | 0.50 | 0.40 | 0.49 |
| Hospital based | 0.03 | 0.17 | 0.05 | 0.21 | 0.07 | 0.25 | 0.13 | 0.33 | 0.13 | 0.34 |
| Ownership | | | | | | | | | | |
| Profit | 0.82 | 0.38 | 0.75 | 0.44 | 0.70 | 0.46 | 0.57 | 0.50 | 0.51 | 0.50 |
| Non-profit | 0.16 | 0.36 | 0.22 | 0.41 | 0.26 | 0.44 | 0.37 | 0.48 | 0.42 | 0.49 |
| Government | 0.02 | 0.14 | 0.04 | 0.19 | 0.04 | 0.20 | 0.06 | 0.23 | 0.07 | 0.25 |
| Total admissions 2008 | 230.02 | 174.63 | 221.66 | 198.58 | 224.00 | 215.12 | 216.37 | 218.55 | 173.47 | 215.16 |
| Medicare admissions | 166.89 | 130.63 | 159.06 | 149.88 | 163.68 | 160.94 | 157.64 | 164.49 | 123.21 | 152.37 |
| Age | 78.18 | 5.30 | 78.79 | 6.58 | 80.25 | 6.01 | 80.83 | 6.65 | 80.12 | 10.60 |
| Female | 0.65 | 0.10 | 0.66 | 0.11 | 0.68 | 0.10 | 0.69 | 0.11 | 0.69 | 0.15 |
| Race | | | | | | | | | | |
| White | 0.79 | 0.23 | 0.81 | 0.23 | 0.84 | 0.22 | 0.86 | 0.21 | 0.85 | 0.23 |
| Black | 0.15 | 0.20 | 0.12 | 0.19 | 0.10 | 0.17 | 0.08 | 0.15 | 0.08 | 0.15 |
| Other | 0.06 | 0.13 | 0.07 | 0.14 | 0.06 | 0.13 | 0.06 | 0.14 | 0.07 | 0.17 |
| Hispanic | 0.04 | 0.10 | 0.04 | 0.10 | 0.04 | 0.10 | 0.03 | 0.10 | 0.04 | 0.11 |
| Education | | | | | | | | | | |
| Less than HS | 0.31 | 0.14 | 0.31 | 0.15 | 0.30 | 0.15 | 0.29 | 0.16 | 0.28 | 0.18 |
| HS | 0.38 | 0.12 | 0.38 | 0.12 | 0.38 | 0.13 | 0.38 | 0.13 | 0.35 | 0.14 |
| More than HS | 0.19 | 0.10 | 0.21 | 0.11 | 0.23 | 0.13 | 0.25 | 0.15 | 0.28 | 0.19 |
| Marital status | | | | | | | | | | |
| Married | 0.18 | 0.07 | 0.17 | 0.08 | 0.18 | 0.09 | 0.19 | 0.10 | 0.17 | 0.11 |
| Widowed | 0.33 | 0.12 | 0.33 | 0.14 | 0.35 | 0.13 | 0.36 | 0.15 | 0.34 | 0.17 |
| Other | 0.20 | 0.12 | 0.19 | 0.13 | 0.16 | 0.11 | 0.15 | 0.12 | 0.16 | 0.15 |
| Living alone | 0.27 | 0.10 | 0.27 | 0.11 | 0.28 | 0.11 | 0.30 | 0.12 | 0.28 | 0.14 |
| Case-mix | | | | | | | | | | |
| CPS | 2.33 | 0.57 | 2.35 | 0.62 | 2.30 | 0.69 | 2.25 | 0.77 | 2.33 | 0.90 |
| ADL | 11.93 | 1.47 | 11.70 | 1.74 | 11.89 | 1.62 | 11.78 | 1.72 | 11.44 | 2.18 |

Notes: HHI: Herfindahl–Hirschman Index. HS: High school. CPS: Cognitive performance scores. ADL: Activities of Daily Living. Area income is mean household income (median) in thousands at the Census 2000 ZIP-code level.

In contrast to the overall relationship between baseline characteristics and the five-star ratings, for the regression discontinuity design to be valid, nursing home characteristics should be similar on both sides of each cut-off point. Table 2 shows baseline characteristics within a bandwidth of 3 percentile points, restricting the sample to nursing homes in which the overall number of stars is the same as the stars in the inspection domain, our preferred analysis sample. For most of the variables and cut-off points, there is excellent balance between treated and control nursing homes, corroborating that nursing homes close to a cut-off point are comparable. Table 3 shows baseline characteristics of all nursing homes (including those that shifted cut-off points due to their staffing or quality scores) within a bandwidth of 3 percentile points of the adjusted inspection score. For most of the variables and cut-off points, there is excellent balance between treatment and control nursing homes. A notable exception is the 4–5 cut-off point. In Table 3, nursing homes that received five stars had fewer admissions in 2008, were less likely to be hospital-based, and had a higher occupancy rate than four-star nursing homes. As mentioned above, while the inspection score largely determines the overall number of stars, nursing homes may gain or lose overall stars if they receive high or low ratings in the quality and staffing domains, which in turn may affect the balance close to a cut-off point.
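A balance comparison of this kind can be approximated from the summary statistics alone. The sketch below is illustrative only: the text does not state which test the authors used, so the unequal-variance z-test with a normal approximation is an assumption, and the function names are hypothetical. The inputs are the CPS means, standard deviations, and sample sizes reported in Table 2 for the 3–4 cut-off point.

```python
import math


def welch_z(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Unequal-variance z statistic and two-sided p-value (normal approximation)."""
    se = math.sqrt(sd_t ** 2 / n_t + sd_c ** 2 / n_c)
    z = (mean_t - mean_c) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p


def stars(p):
    """Map a p-value to the significance flags used in the tables."""
    if p < 0.01:
        return "***"
    if p < 0.05:
        return "**"
    if p < 0.10:
        return "*"
    return ""


if __name__ == "__main__":
    # CPS around the 3-4 cut-off: treated 2.33 (0.72), n=271; control 2.16 (0.64), n=194
    z, p = welch_z(2.33, 0.72, 271, 2.16, 0.64, 194)
    print(f"z = {z:.2f}, p = {p:.4f}, flag = {stars(p)}")
```

Under these assumptions the CPS difference comes out significant at the 99% level, in line with the flag shown in Table 2. Because the published means and standard deviations are rounded, results for borderline rows may not match the table exactly.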

Table 2.

Baseline characteristics for nursing homes close to cut-off points in the sub-sample with overall stars same as inspection stars

| Characteristic | 1–2: Treated | 1–2: Control | 2–3: Treated | 2–3: Control | 3–4: Treated | 3–4: Control | 4–5: Treated | 4–5: Control |
|---|---|---|---|---|---|---|---|---|
| N | 194 | 424 | 189 | 174 | 271 | 194 | 403 | 298 |
| Payer | | | | | | | | |
| Medicaid | 0.65 (0.18) | 0.65 (0.17) | 0.61 (0.21) | 0.61 (0.22) | 0.58 (0.21) | 0.57 (0.24) | 0.54 (0.27) | 0.55 (0.26) |
| Medicare | 0.13 (0.12) | 0.15 (0.10) | 0.15 (0.14) | 0.16 (0.17) | 0.15 (0.16) | 0.18 (0.19) | 0.16 (0.19) | 0.18 (0.20) |
| Other | 0.22 (0.13) | 0.21 (0.14) | 0.24 (0.16) | 0.23 (0.15) | 0.26 (0.18) | 0.24 (0.17) | 0.29 (0.23) | 0.27 (0.19) |
| Residents | 108.43 (73.03) | 101.54 (53.65) | 96.06 (57.76) | 93.82 (44.45) | 96.28 (99.13) | 88.26 (54.08) | 74.29 (51.42) | 75.94 (57.17) |
| Beds | 130.07 (79.01) | 121.85 (56.40) | 114.08 (0.61) | 114.31 (53.17) | 111.20 (101.88) | 108.21 (65.86) | 88.50 (60.25) | 90.02 (62.45) |
| Occupancy | 0.83 (0.15) | 0.83 (0.14) | 0.83 (0.15) | 0.83 (0.15) | 0.85 (0.14) | 0.83* (0.17) | 0.85 (0.19) | 0.85 (0.16) |
| Occupancy > 0.95 | 0.19 (0.39) | 0.17 (0.38) | 0.20 (0.40) | 0.23 (0.42) | 0.24 (0.43) | 0.23 (0.42) | 0.29 (0.45) | 0.28 (0.45) |
| HHI | 0.18 (0.24) | 0.19 (0.23) | 0.19 (0.22) | 0.18 (0.23) | 0.21 (0.22) | 0.19 (0.23) | 0.20 (0.23) | 0.22 (0.24) |
| HHI < 0.25 | 0.76 (0.43) | 0.76 (0.43) | 0.72 (0.45) | 0.75 (0.43) | 0.71 (0.46) | 0.72 (0.45) | 0.71 (0.45) | 0.67 (0.47) |
| Special focus facility | 0.01 (0.10) | 0.01 (0.10) | 0.02 (0.01) | 0.01 (0.08) | - | - | - | - |
| Urban | 0.77 (0.42) | 0.71 (0.45) | 0.66 (0.48) | 0.72 (0.45) | 0.62** (0.49) | 0.71 (0.46) | 0.62 (0.49) | 0.61 (0.49) |
| Chain | 0.59 (0.49) | 0.63 (0.48) | 0.60 (0.49) | 0.51 (0.50) | 0.52 (0.50) | 0.59 (0.49) | 0.43 (0.50) | 0.41 (0.49) |
| Hospital based | 0.04 (0.20) | 0.04 (0.20) | 0.07 (0.25) | 0.05 (0.21) | 0.08 (0.28) | 0.07 (0.26) | 0.11 (0.32) | 0.10 (0.31) |
| Ownership | | | | | | | | |
| Profit | 0.77 (0.42) | 0.80 (0.40) | 0.70** (0.46) | 0.81 (0.40) | 0.65 (0.48) | 0.70 (0.46) | 0.60 (0.49) | 0.61 (0.49) |
| Non-profit | 0.19 (0.39) | 0.18 (0.38) | 0.28** (0.45) | 0.17 (0.38) | 0.29 (0.45) | 0.25 (0.43) | 0.35 (0.48) | 0.33 (0.47) |
| Government | 0.05** (0.21) | 0.02 (0.14) | 0.02 (0.13) | 0.02 (0.15) | 0.06 (0.25) | 0.05 (0.21) | 0.05 (0.22) | 0.06 (0.23) |
| Total admissions 2008 | 254.54 (226.95) | 247.94 (213.81) | 224.45 (186.92) | 247.91 (274.36) | 218.98 (207.21) | 225.68 (184.92) | 188.81 (205.47) | 200.51 (211.82) |
| Medicare admissions 2008 | 176.12 (157.66) | 179.89 (160.88) | 167.39 (152.64) | 176.38 (180.52) | 159.13 (161.58) | 167.83 (150.15) | 135.53 (151.67) | 144.20 (157.55) |
| Age | 78.63 (5.75) | 78.16 (6.49) | 79.69 (7.46) | 79.63 (5.26) | 81.14** (5.42) | 80.19 (150.15) | 81.1 (8.14) | 81.36 (7.04) |
| Female | 0.66 (0.11) | 0.65 (0.10) | 0.68 (0.11) | 0.67 (0.10) | 0.70*** (0.10) | 0.67 (0.11) | 0.70 (0.13) | 0.70 (0.12) |
| Race | | | | | | | | |
| White | 0.80 (0.23) | 0.79 (0.24) | 0.84 (0.22) | 0.81 (0.21) | 0.86 (0.21) | 0.83 (0.26) | 0.87 (0.21) | 0.88 (0.20) |
| Black | 0.13 (0.18) | 0.15 (0.20) | 0.11 (0.17) | 0.13 (0.18) | 0.09 (0.17) | 0.11 (0.21) | 0.07 (0.14) | 0.06 (0.11) |
| Other | 0.08 (0.14) | 0.07 (0.14) | 0.06 (0.12) | 0.06 (0.11) | 0.06 (0.12) | 0.06 (0.15) | 0.06 (0.15) | 0.07 (0.15) |
| Hispanic | 0.05 (0.12) | 0.04 (0.10) | 0.04 (0.10) | 0.04 (0.08) | 0.03 (0.08) | 0.04 (0.12) | 0.04 (0.12) | 0.12 (0.11) |
| Education | | | | | | | | |
| Less than HS | 0.31 (0.14) | 0.31 (0.14) | 0.32 (0.15) | 0.30 (0.15) | 0.29 (0.15) | 0.30 (0.16) | 0.28 (0.18) | 0.29 (0.16) |
| HS | 0.38 (0.11) | 0.38 (0.12) | 0.37 (0.13) | 0.39 (0.13) | 0.39 (0.13) | 0.39 (0.13) | 0.36*** (0.13) | 0.38 (0.13) |
| More than HS | 0.21 (0.09) | 0.20 (0.10) | 0.21 (0.11) | 0.22 (0.11) | 0.23 (0.13) | 0.22 (0.14) | 0.28** (0.19) | 0.25 (0.16) |
| Marital status | | | | | | | | |
| Married | 0.17** (0.07) | 0.18 (0.07) | 0.17 (0.08) | 0.18 (0.08) | 0.18 (0.09) | 0.19 (0.09) | 0.17 (0.10) | 0.19 (0.10) |
| Widowed | 0.32 (0.12) | 0.33 (0.13) | 0.34 (0.13) | 0.344 (0.13) | 0.37* (0.14) | 0.34 (0.13) | 0.36 (0.15) | 0.37 (0.15) |
| Other | 0.19 (0.12) | 0.20 (0.12) | 0.17 (0.12) | 0.18 (0.11) | 0.15 (0.10) | 0.17 (0.12) | 0.15 (0.15) | 0.15 (0.12) |
| Living alone | 0.26 (0.09) | 0.27 (0.10) | 0.29* (0.10) | 0.27 (0.11) | 0.29 (0.11) | 0.29 (0.11) | 0.29 (0.13) | 0.30 (0.12) |
| Case-mix | | | | | | | | |
| CPS | 2.34 (0.66) | 2.30 (0.58) | 2.34 (0.72) | 2.33 (0.62) | 2.33*** (0.72) | 2.16 (0.64) | 2.29 (0.78) | 2.35 (0.79) |
| ADL | 11.76 (1.59) | 11.93 (1.29) | 12.07 (1.55) | 11.86 (1.57) | 11.97 (1.52) | 12.01 (1.68) | 11.66 (1.93) | 11.83 (1.70) |

Notes: Standard deviation in parentheses.

*, **, and *** indicate significant differences between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

Bandwidth of 3 percentile points. HHI: Herfindahl–Hirschman Index. HS: High school. CPS: Cognitive performance scores. ADL: Activities of Daily Living.

Table 3.

Baseline characteristics close to cut-off point for the full sample of nursing homes

| Characteristic | 1–2: Treated | 1–2: Control | 2–3: Treated | 2–3: Control | 3–4: Treated | 3–4: Control | 4–5: Treated | 4–5: Control |
|---|---|---|---|---|---|---|---|---|
| N | 408 | 611 | 436 | 432 | 497 | 402 | 588 | 548 |
| Payer | | | | | | | | |
| Medicaid | 0.64 (0.19) | 0.64 (0.17) | 0.59** (0.23) | 0.63 (0.21) | 0.57 (0.25) | 0.58 (0.24) | 0.55 (0.28) | 0.53 (0.29) |
| Medicare | 0.14 (0.12) | 0.15 (0.10) | 0.17 (0.15) | 0.16 (0.15) | 0.17 (0.20) | 0.17 (0.19) | 0.15*** (0.20) | 0.21 (0.26) |
| Other | 0.22 (0.15) | 0.21 (0.13) | 0.25** (0.16) | 0.26 (0.15) | 0.26 (0.20) | 0.25 (0.17) | 0.30*** (0.23) | 0.26 (0.19) |
| Residents | 101.78 (60.28) | 99.45 (48.7) | 94.01 (57.07) | 93.63 (52.88) | 87.17 (81.96) | 87.2 (65.19) | 75.53 (54.15) | 71.69 (54.81) |
| Beds | 123.6 (65.79) | 119.29 (51.27) | 112.87 (63.98) | 113.54 (59.36) | 103.22 (88.27) | 106.67 (72.58) | 90.80 (63.86) | 86.67 (60.42) |
| Occupancy | 0.82 (0.16) | 0.83 (0.14) | 0.84 (0.15) | 0.83 (0.15) | 0.84 (0.16) | 0.82 (0.18) | 0.85** (0.19) | 0.82 (0.17) |
| Occupancy > 0.95 | 0.20 (0.40) | 0.17 (0.38) | 0.21 (0.41) | 0.22 (0.41) | 0.22 (0.41) | 0.24 (0.43) | 0.29** (0.45) | 0.23 (0.42) |
| HHI | 0.20 (0.24) | 0.20 (0.24) | 0.23 (0.25) | 0.21 (0.23) | 0.22 (0.24) | 0.21 (0.24) | 0.19** (0.22) | 0.22 (0.25) |
| HHI < 0.25 | 0.72 (0.45) | 0.74 (0.44) | 0.68 (0.47) | 0.7 (0.46) | 0.7 (0.46) | 0.71 (0.45) | 0.73* (0.44) | 0.68 (0.47) |
| Special focus facility | 0.03*** (0.17) | 0.01 (0.24) | 0.00 (0.05) | 0.01 (0.08) | - | - | - | - |
| Urban | 0.71 (0.45) | 0.69 (0.46) | 0.61* (0.49) | 0.66 (0.47) | 0.63 (0.48) | 0.68 (0.47) | 0.64 (0.48) | 0.62 (0.49) |
| Chain | 0.62 (0.49) | 0.64 (0.48) | 0.57 (0.5) | 0.52 (0.5) | 0.5 (0.5) | 0.55 (0.5) | 0.43 (0.5) | 0.41 (0.49) |
| Hospital based | 0.03 (0.17) | 0.03 (0.18) | 0.07 (0.26) | 0.06 (0.23) | 0.12** (0.32) | 0.07 (0.26) | 0.12** (0.32) | 0.17 (0.38) |
| Ownership | | | | | | | | |
| Profit | 0.78 (0.41) | 0.81 (0.4) | 0.68** (0.47) | 0.74 (0.44) | 0.58 (0.49) | 0.67 (0.47) | 0.55 (0.5) | 0.56 (0.5) |
| Non-profit | 0.18 (0.38) | 0.17 (0.38) | 0.30** (0.46) | 0.22 (0.41) | 0.35** (0.48) | 0.28 (0.45) | 0.39 (0.49) | 0.37 (0.48) |
| Government | 0.04 (0.19) | 0.02 (0.15) | 0.03 (0.16) | 0.04 (0.19) | 0.07** (0.26) | 0.05 (0.22) | 0.07 (0.25) | 0.07 (0.26) |
| Total admissions 2008 | 235.18 (211.03) | 235.56 (191.07) | 239.15 (234.03) | 221.85 (218.05) | 211.84 (202.6) | 213.62 (183.98) | 181.75*** (197.53) | 217.86 (224.4) |
| Medicare admissions 2008 | 168.59 (161.94) | 173.62 (146.68) | 177.37 (174.18) | 160.79 (153.7) | 154.67 (160.15) | 157.85 (145.43) | 128.79*** (147.04) | 161.24 (175.52) |
| Age | 78.61 (5.88) | 78.42 (5.96) | 80.2 (5.97) | 79.33 (6.17) | 80.5 (7.01) | 80.33 (5.35) | 80.25 (9.95) | 80.72 (7.11) |
| Female | 0.66 (0.1) | 0.66 (0.09) | 0.68* (0.09) | 0.67 (0.11) | 0.68 (0.12) | 0.67 (0.1) | 0.69 (0.15) | 0.69 (0.11) |
| Race | | | | | | | | |
| White | 0.81 (0.22) | 0.8 (0.23) | 0.84* (0.21) | 0.82 (0.22) | 0.85 (0.22) | 0.83 (0.24) | 0.84 (0.24) | 0.86 (0.22) |
| Black | 0.12 (0.17) | 0.14 (0.19) | 0.1 (0.18) | 0.12 (0.18) | 0.09 (0.17) | 0.1 (0.19) | 0.08 (0.15) | 0.08 (0.15) |
| Other | 0.07 (0.14) | 0.06 (0.13) | 0.06 (0.11) | 0.07 (0.13) | 0.06 (0.13) | 0.06 (0.15) | 0.08 (0.17) | 0.07 (0.15) |
| Hispanic | 0.05 (0.12) | 0.04 (0.1) | 0.03 (0.09) | 0.03 (0.09) | 0.03 (0.07) | 0.04 (0.11) | 0.04 (0.13) | 0.04 (0.1) |
| Education | | | | | | | | |
| Less than HS | 0.31 (0.14) | 0.31 (0.14) | 0.32 (0.15) | 0.31 (0.15) | 0.29 (0.16) | 0.3 (0.15) | 0.29 (0.18) | 0.28 (0.16) |
| HS | 0.38 (0.11) | 0.38 (0.12) | 0.37 (0.12) | 0.38 (0.12) | 0.38 (0.13) | 0.38 (0.12) | 0.35*** (0.14) | 0.38 (0.14) |
| More than HS | 0.21* (0.1) | 0.2 (0.1) | 0.22 (0.12) | 0.22 (0.11) | 0.24 (0.14) | 0.23 (0.13) | 0.27* (0.19) | 0.26 (0.16) |
| Marital status | | | | | | | | |
| Married | 0.17* (0.08) | 0.18 (0.07) | 0.18* (0.08) | 0.17 (0.08) | 0.18 (0.1) | 0.19 (0.09) | 0.17*** (0.1) | 0.2 (0.11) |
| Widowed | 0.32* (0.13) | 0.34 (0.13) | 0.35 (0.14) | 0.34 (0.14) | 0.35 (0.15) | 0.35 (0.13) | 0.34*** (0.16) | 0.37 (0.15) |
| Other | 0.19 (0.12) | 0.2 (0.12) | 0.17 (0.12) | 0.18 (0.12) | 0.16 (0.12) | 0.16 (0.13) | 0.16 (0.15) | 0.16 (0.12) |
| Living alone | 0.26 (0.09) | 0.27 (0.1) | 0.29* (0.1) | 0.27 (0.11) | 0.28 (0.12) | 0.29 (0.11) | 0.28 (0.14) | 0.29 (0.12) |
| Case-mix | | | | | | | | |
| CPS | 2.31 (0.65) | 2.32 (0.57) | 2.30 (0.69) | 2.31 (0.64) | 2.33** (0.78) | 2.21 (0.68) | 2.33* (0.86) | 2.23 (0.85) |
| ADL | 11.73** (1.65) | 11.98 (1.48) | 12.01** (1.56) | 11.76 (1.65) | 11.86 (1.73) | 11.83 (1.73) | 11.56 (2.05) | 11.74 (1.73) |

Notes: Standard deviation in parentheses.

*, **, and *** indicate significant differences between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

Bandwidth of 3 percentile points. HHI: Herfindahl–Hirschman Index. HS: High school. CPS: Cognitive performance scores. ADL: Activities of Daily Living.

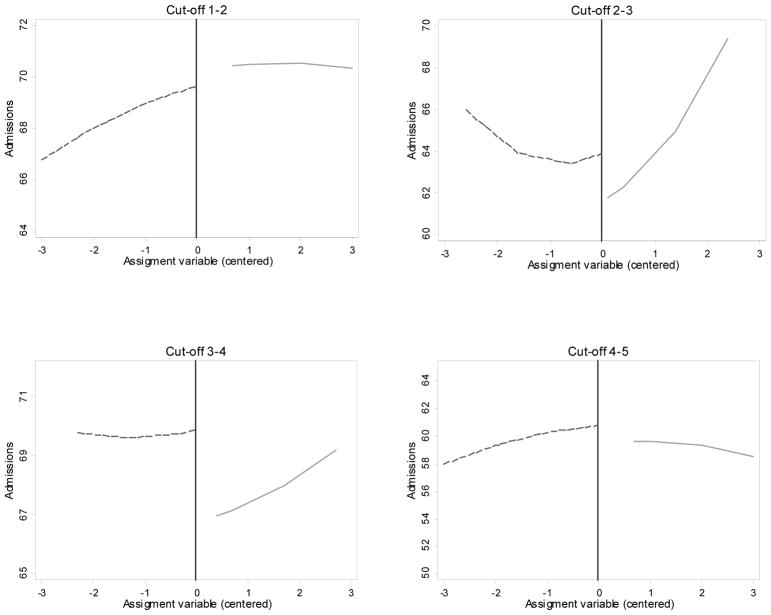

Table 4 shows results from the local random-effects Poisson models for each of the four cut-off points (Equation 1) using our preferred sample of nursing homes with overall stars equal to the stars in the inspection domain. Nursing homes that obtained an extra star gained more admissions six months after the release of the new ratings, with the exception of lower-rated nursing homes, for which the effect is close to zero and not statistically significant (Table 4, first row). The effects are larger for nursing homes that received a rating of better than average instead of average (four versus three stars) and average instead of below average (three versus two stars). In terms of admission rate ratios ($e^{\beta_1}$) between treated and control nursing homes, the effects are 0.993, 1.036, 1.047, and 1.021 for cut-off points 1–2, 2–3, 3–4, and 4–5, respectively. In other words, admissions at nursing homes receiving 3 stars increased by 3.6 percent relative to nursing homes receiving 2 stars; admissions at nursing homes with 4 stars increased by 4.7 percent relative to those with 3 stars; and admissions at homes with 5 stars increased by 2.1 percent relative to those with 4 stars. Thus, for most cut-off points, nursing homes that obtained an extra star gained admissions. These gains are largest in the middle of the quality distribution; at the lowest end of the quality ratings, the rate ratio is close to one and not statistically significant. Figure 1 depicts local, smoothed predictions based on Poisson models for each of the cut-off points for the sample in which the overall rating is the same as the rating in the inspection domain (Table 4, row 1).
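The conversion from Poisson coefficients to rate ratios quoted above is a simple exponentiation. As a short worked example (the dictionary and function names are illustrative, and the coefficients are the first-row estimates reported in Table 4):

```python
import math

# First-row coefficients from Table 4 (sample: overall stars = inspection stars)
coefficients = {"1-2": -0.007, "2-3": 0.035, "3-4": 0.046, "4-5": 0.021}


def rate_ratio(beta):
    """Rate ratio implied by a Poisson regression coefficient."""
    return math.exp(beta)


def percent_change(beta):
    """Percent change in expected admissions implied by the coefficient."""
    return 100.0 * (math.exp(beta) - 1.0)


if __name__ == "__main__":
    for cutoff, beta in coefficients.items():
        print(f"{cutoff}: rate ratio {rate_ratio(beta):.3f}, "
              f"change {percent_change(beta):+.1f}%")
    # 1-2: rate ratio 0.993, change -0.7%
    # 2-3: rate ratio 1.036, change +3.6%
    # 3-4: rate ratio 1.047, change +4.7%
    # 4-5: rate ratio 1.021, change +2.1%
```

For small coefficients the percent change is close to 100 times the coefficient, which is why the rate ratios track the Table 4 estimates so closely.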

Table 4.

Estimates of treatment effect (β1) by cut-off point

| Sample | Cut-off 1–2 | Cut-off 2–3 | Cut-off 3–4 | Cut-off 4–5 |
|---|---|---|---|---|
| Overall = Inspection | −0.007 (0.011) | 0.035** (0.014) | 0.046*** (0.013) | 0.021* (0.011) |
| Occupancy < 0.95 | −0.031*** (0.012) | 0.069*** (0.016) | 0.048*** (0.015) | 0.044*** (0.013) |
| Ti | 0.0040 (0.029) | 0.054* (0.026) | −0.012 (0.023) | −0.007 (0.018) |
| HHI < 0.25 | 0.229*** (0.046) | 0.225*** (0.060) | 0.132* (0.063) | 0.018 (0.055) |
| Ti*(HHI < 0.25) | −0.0120 (0.030) | −0.023 (0.026) | 0.069*** (0.022) | 0.036** (0.018) |

Notes: Standard errors in parentheses.

*, **, and *** indicate treatment effects significantly different between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

Outcome is number of admissions. All models control for the running variable (centered at cut-off point), payer mix, chain status, hospital-based indicator, type of ownership, Herfindahl–Hirschman Index (except for models controlling for HHI < 0.25), Cognitive performance scores, occupancy rate, number of beds, demographic characteristics (education and race), and ratings in the quality and staffing domains. HHI: Herfindahl–Hirschman Index.

Figure 1.

Admissions Close to Cut-off Points

Predicted number of admissions using local Poisson regression controlling for the assignment variable centered at each threshold. Dashed line is for nursing homes that received an additional star. Vertical line denotes the threshold values, which were set to the 10th, 33.33rd, 56.66th, and 80th percentile of the total inspection score for cut-off points 4–5 to 1–2, respectively. The models include a quadratic term for the centered assignment variable. Sample consists of nursing homes for which the overall rating is the same as the inspection domain rating. Model-based estimates of treatment effects using this sample are presented in Table 4 (row 1).

As expected, the treatment effects are larger in magnitude and statistically significant when we restrict the sample to nursing homes with occupancy less than 95 percent (Table 4, second row). In terms of admission rate ratios, the effects are 0.969, 1.071, 1.049, and 1.045 for cut-off points 1–2, 2–3, 3–4, and 4–5, respectively. Models interacting the treatment variable with an indicator of more competitive markets at the county level (HHI < 0.25) show that the effect is larger in competitive areas for cut-off points 3–4 and 4–5.
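To read the interaction rows of Table 4, the treatment effect in a competitive county is the sum of the main treatment coefficient and the interaction coefficient, since both enter the log of the expected count. The sketch below combines the reported point estimates; variable names are illustrative, and standard errors for the combined effect are not computed because they would require the covariance between the two coefficients, which is not reported.

```python
import math

# Point estimates from the interaction model in Table 4
treatment = {"1-2": 0.0040, "2-3": 0.054, "3-4": -0.012, "4-5": -0.007}
interaction = {"1-2": -0.0120, "2-3": -0.023, "3-4": 0.069, "4-5": 0.036}


def effect_by_market(cutoff):
    """Return (less competitive, competitive) log rate ratios for one cut-off."""
    less_competitive = treatment[cutoff]
    competitive = treatment[cutoff] + interaction[cutoff]
    return less_competitive, competitive


if __name__ == "__main__":
    for cutoff in treatment:
        low, high = effect_by_market(cutoff)
        print(f"{cutoff}: HHI >= 0.25 rate ratio {math.exp(low):.3f}, "
              f"HHI < 0.25 rate ratio {math.exp(high):.3f}")
```

At the 3–4 cut-off, for example, the combined coefficient in competitive counties is −0.012 + 0.069 = 0.057, a rate ratio of about 1.059.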

Table 5 shows results for the same subset of nursing homes for models in which the outcome is the count of new admissions by payer source upon admission. Nursing homes that received four stars instead of three gained more Medicare admissions, which are, at least initially, post-acute admissions. On the other hand, nursing homes that received five stars instead of four or four stars instead of three obtained more non-Medicare admissions, an effect that may be driven by private-pay residents since they are generally seen as more responsive to quality than Medicaid residents. At the lower end of the quality spectrum, nursing homes that received two stars instead of one saw no increase in Medicare admissions, which is consistent with low-end nursing homes being dominated by Medicaid and perhaps not seen as desirable locations for post-acute care whether they received one or two stars. Nursing homes that received two stars versus one star also saw a decline in non-Medicare admissions. This negative effect is possibly the result of supply constraints or perhaps heterogeneous reactions by private-pay and Medicaid residents that make up the non-Medicare group. Few private-pay residents are found in one-star nursing homes, and rather than choosing two-star homes over one-star homes, private-pay residents on this margin may have sought out even higher levels of quality. Combined with a lack of response on the part of Medicaid residents who would go to one-star nursing homes, a negative effect could result. Due to data limitations, we cannot test this explanation directly.

Table 5.

Estimates of treatment effect (β1) by cut-off point and payer

| Sample | Cut-off 1–2 | Cut-off 2–3 | Cut-off 3–4 | Cut-off 4–5 |
|---|---|---|---|---|
| Medicare | 0.010 (0.013) | 0.048*** (0.016) | 0.037** (0.015) | 0.006 (0.013) |
| Non-Medicare | −0.047** (0.021) | −0.005 (0.026) | 0.073*** (0.025) | 0.059*** (0.021) |

Notes: Standard errors in parentheses.

*, **, and *** indicate treatment effects significantly different between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

Outcome is number of admissions by payer. Payer source based on 5-day Minimum Data Set assessment. All models control for the running variable (centered at cut-off point), chain status, hospital-based indicator, type of ownership, Herfindahl–Hirschman Index, Cognitive performance scores, occupancy rate, number of beds, demographic characteristics (education and race), and ratings in the quality and staffing domains. HHI: Herfindahl–Hirschman Index.

Table 6 shows results of sensitivity analyses using different bandwidths around cut-off points. Using a narrower bandwidth substantially reduces the sample size, but the positive effect on admissions is still evident, particularly at the 2–3 and 3–4 cut-off points. Using a wider bandwidth increases the sample size, but treatment effects become smaller and, at the widest bandwidth, mostly lose statistical significance. In none of these models is the effect for lower-rated nursing homes (cut-off point 1–2) large or statistically significant.

Table 6.

Estimates of treatment effect (β1) for different bandwidths

| Sample | Cut-off 1–2 | Cut-off 2–3 | Cut-off 3–4 | Cut-off 4–5 |
|---|---|---|---|---|
| Bandwidth 1 | | | | |
| N (Control – Treated) | 201–65 | 59–72 | 65–111 | 110–200 |
| Treatment effect | 0.003 | 0.074*** | 0.023 | 0.007 |
| SE | (0.018) | (0.024) | (0.022) | (0.017) |
| Bandwidth 2 | | | | |
| N (Control – Treated) | 308–125 | 119–134 | 120–176 | 203–298 |
| Treatment effect | −0.015 | 0.060*** | 0.079*** | −0.0160 |
| SE | (0.013) | (0.016) | (0.016) | (0.013) |
| Bandwidth 4 | | | | |
| N (Control – Treated) | 547–252 | 231–250 | 257–333 | 388–518 |
| Treatment effect | −0.004 | 0.023* | 0.052*** | 0.023** |
| SE | (0.010) | (0.012) | (0.011) | (0.010) |
| Bandwidth 5 | | | | |
| N (Control – Treated) | 654–321 | 294–308 | 330–405 | 455–623 |
| Treatment effect | 0.002 | 0.009 | 0.033*** | 0.023** |
| SE | (0.009) | (0.011) | (0.010) | (0.009) |
| Bandwidth 6 | | | | |
| N (Control – Treated) | 764–384 | 357–377 | 385–514 | 547–744 |
| Treatment effect | 0.001 | −0.007 | 0.007 | 0.016* |
| SE | (0.008) | (0.010) | (0.009) | (0.008) |

Notes: SE: standard error.

*, **, and *** indicate treatment effects significantly different between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

Outcome is number of admissions. All models control for the running variable (centered at cut-off point), payer mix, chain status, hospital-based indicator, type of ownership, Herfindahl–Hirschman Index, Cognitive performance scores, occupancy rate, number of beds, demographic characteristics (education and race), and ratings in the quality and staffing domains.

Finally, Table 7 shows results of models for the full set of nursing homes using a bandwidth of 3 percentile points around the adjusted assignment score. The results are qualitatively similar to those reported in Table 4 but the magnitudes of the effects are smaller. Because there were significant differences in baseline characteristics around the 4–5 cut-off point, identification of the models presented in Table 7 for the high end of the quality rating relies more on controlling for covariates than the base model.

Table 7.

Estimates of treatment effect (β1) by cut-off point, full sample

| Model | Cut-off 1–2 | Cut-off 2–3 | Cut-off 3–4 | Cut-off 4–5 |
|---|---|---|---|---|
| All | −0.005 (0.008) | 0.016* (0.009) | 0.018* (0.009) | 0.010 (0.008) |
| Occupancy < 0.95 | −0.019** (0.009) | 0.026*** (0.010) | 0.021** (0.011) | 0.018* (0.010) |
| Ti | −0.002 (0.017) | 0.022 (0.015) | −0.028* (0.016) | 0.008 (0.015) |
| HHI < 0.25 | 0.15*** (0.035) | 0.180*** (0.041) | 0.173*** (0.048) | 0.048 (0.046) |
| Ti*(HHI < 0.25) | −0.003 (0.017) | −0.007 (0.015) | 0.056*** (0.016) | 0.004 (0.015) |

Notes: Standard errors in parentheses.

*, **, and *** indicate treatment effects significantly different between treatment and control nursing homes at the 90%, 95%, and 99% confidence levels, respectively.

All models control for payer mix, chain status, hospital-based indicator, type of ownership, Herfindahl–Hirschman Index (except for models controlling for HHI < 0.25), Cognitive performance scores, occupancy rate, number of beds, demographic characteristics (education and race), and ratings in the quality and staffing domains.

The magnitudes of our treatment effect estimates are relatively small but not trivial in terms of gains in the number of admissions after the release of the ratings. Based on the effect we found for nursing homes that received four instead of three stars in our base models (Table 4), an increase of 5 percent in admissions between treatment and control nursing homes is approximately equivalent to 9 extra admissions per year (assuming an average of 180 new admissions). However, assuming that the cost structure remains unchanged, this change could be interpreted as a net increase of 5 percent in profits as a consequence of receiving a better star rating. Furthermore, the true effect on profits could be larger because some of the extra admissions caused by a better rating could be long-term.
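The back-of-the-envelope figure above can be reproduced in a few lines. This sketch uses the 180-admission baseline stated in the text; the function name is illustrative, and the second call simply swaps the rounded 5 percent for the rate ratio implied by the 3–4 coefficient in Table 4 (0.046).

```python
import math


def extra_admissions(relative_increase, baseline_admissions):
    """Additional admissions per year implied by a relative increase."""
    return relative_increase * baseline_admissions


if __name__ == "__main__":
    # Rounded 5 percent effect on a baseline of 180 admissions: about 9 per year
    print(extra_admissions(0.05, 180))
    # Same calculation with the exact rate ratio for the 3-4 cut-off
    print(extra_admissions(math.exp(0.046) - 1, 180))
```

Using the unrounded rate ratio gives a slightly smaller figure, between 8 and 9 extra admissions per year.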

In additional robustness checks, we estimated linear models (OLS) including nursing home fixed effects. Our estimates of treatment effects did not change in a meaningful way, as nursing homes close to the threshold appear to be comparable, including on unobserved time-invariant endogenous variables.

VI. DISCUSSION

A primary purpose of public reporting of quality information is to motivate consumers to choose providers of better quality. In this paper, we found that simplified, composite ratings of nursing home quality caused an increase in admissions to better-rated nursing homes, with heterogeneous effects depending on baseline ratings. Importantly, we find evidence of increased demand for higher-rated facilities after the composite score was published in late 2008, despite the fact that the individual components of the star ratings had been published since 2002. Thus, our results reflect response to reporting of the composite ratings relative to individual measures. Consumers and their agents (e.g., family members, discharge planners, and/or referring physicians) appear to have found the simplified five-star ratings useful over and above the information that was previously reported in a more complex manner. The finding that consumers and providers respond to composite ratings of quality is supported by two qualitative studies (Konetzka and Perraillon 2016, Perraillon, Brauner, and Konetzka 2017).

The identification of causal effects relies on the quasi-randomization near cut-off points of the assignment variable. Our results corroborate that observed baseline characteristics of nursing homes and residents are comparable close to cut-off points. The balance is even stronger when the assignment mechanism is solely determined by the health inspection score. These findings, and the fact that nursing homes could not manipulate the assignment score with precision, indicate that the main assumptions of the regression discontinuity design are satisfied, providing strong causal evidence that better rated nursing homes gained new admissions because of the release of the composite ratings.

Importantly, our results do not depend on whether the new composite ratings actually reflect quality of care, even though the clear differences in baseline characteristics by star rating for the full sample of nursing homes lend some face validity to the new composite ratings, as factors such as ownership, chain status, size, and payer mix have been previously shown to be associated with quality of resident care (Grabowski et al. 2013, Hillmer et al. 2005, Kamimura et al. 2007, Grabowski et al. 2014, Rahman et al. 2014, Hirth et al. 2014, Mor et al. 2010). These findings also suggest that low- and high-rated nursing homes tend to serve different types of patients in different geographic areas. Other research has also found that in some cities top-rated nursing homes tend to be located in areas with higher median household income (Konetzka et al. 2015).

The overall welfare effects of the new composite ratings of quality, however, depend on whether the ratings truly reflect the quality of resident care. Although we found that these ratings are correlated with factors associated with quality of care, press reports have cast doubts on the validity of the ratings and their evolution over time, particularly in the domains that are based on self-reported data (Thomas 2014, Perraillon, Brauner, and Konetzka 2017). While making quality information easier to understand is an important policy objective, ensuring the validity of the ratings should also be a priority.

In most of our model specifications, nursing homes that received a rating of above average instead of average and average instead of below average gained admissions after the release of the new ratings. The effect for nursing homes that received a top rating (five stars instead of four) was also positive but smaller in magnitude. In most of our models, there are no statistically or practically significant effects for lower-rated nursing homes (cut-off 1–2). There are several plausible explanations for the lack of effect at the lower end of the quality spectrum. First, one- and two-star nursing homes have a larger proportion of Medicaid residents, who may be less likely to respond to quality information, perhaps due to lack of access to the online information, although, if accessed, the new rating may be easier to understand (Konetzka and Perraillon 2016). Second, consumers who are contemplating admission to a one-star or a two-star facility may see little difference in the actual quality of the homes at this level and may put more weight on other considerations such as distance to family. Finally, supply constraints may play a role. Whether due to heterogeneity by type of resident or lack of meaningful distinction in quality, it seems clear that the Nursing Home Compare system in its current form may be limited in its ability to help consumers avoid the lowest-quality nursing homes. A qualitative study found evidence suggesting that nursing homes operating at full capacity and with a high proportion of Medicaid patients do not consider the five-star ratings important for their business (Perraillon, Brauner, and Konetzka 2017).

Our stratification and interaction models support our main results. Effects are stronger among nursing homes that are not fully occupied and also in more competitive markets. Overall, our results by payer source are consistent with evidence suggesting that education improves the response of Medicare-funded post-acute patients to public reporting in nursing homes (Werner et al. 2012), as well as evidence suggesting that the likelihood of choosing the highest-rated nursing homes was substantially smaller for dual-eligibles (dually enrolled in Medicare and Medicaid) than for non-dual eligibles (Konetzka et al. 2015).

As in all regression discontinuity designs, a consequence of estimating treatment effects for nursing homes that are comparable close to cut-off points is that treatment estimates are local average treatment effects (LATE) and apply only to nursing homes that are close to one of the cut-off points. In particular, we cannot estimate treatment effects for nursing homes that are of extremely poor reported quality or extremely high reported quality since these nursing homes are not close to cut-off points. Our results are also limited to those nursing homes that receive one additional star relative to one fewer star, although it would be reasonable to expect that differences of several stars would elicit an even larger consumer response. For this reason, our results are not directly comparable to studies that estimated the effects of public reporting in nursing homes on market shares (Werner et al. 2012, Grabowski and Town 2011), as we do not examine overall shifts in market share but rather admission decisions at the margin of each star level. Although we found that nursing homes close to cut-off points are comparable in almost all measured baseline characteristics, this method remains a quasi-experimental design and it is not possible to verify that balance was achieved in unmeasured characteristics that could be correlated with the number of admissions.
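The local nature of the estimand can be stated compactly. The notation below is introduced only for illustration and does not appear elsewhere in the text: $S_i$ is the assignment score of nursing home $i$, $c$ is one of the cut-off points, $Y_i$ is the number of new admissions, and $Y_i(1)$ and $Y_i(0)$ are potential admissions with and without the additional star. The expression assumes a sharp design in which scores at or above $c$ receive the additional star, and continuity of the conditional expectations of the potential outcomes at $c$.

```latex
\tau_c
  = \lim_{s \downarrow c} E\left[ Y_i \mid S_i = s \right]
  - \lim_{s \uparrow c} E\left[ Y_i \mid S_i = s \right]
  = E\left[ Y_i(1) - Y_i(0) \mid S_i = c \right]
```

Because $\tau_c$ conditions on $S_i = c$, it is informative only about nursing homes at the margin of a given star level, and a separate $\tau_c$ applies at each cut-off.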

Overall, we contribute to the literature on consumer response to public reporting of quality using a plausible regression discontinuity design to assess the effects of reporting a composite measure of quality over and above sets of individual measures of quality. Our results show that the form of quality reporting matters to consumers. Although there are certainly trade-offs to consider, the current momentum toward use of simplified, composite rating systems may be warranted in order to improve consumer response to public reporting. Additional policies may also be warranted to improve the choices of consumers who may be less able, for a variety of reasons, to benefit from even a simplified rating system.

Acknowledgments

Funding was provided in part by AHRQ (R21HS021877, R21HS021861, and T32HS000084) and NIA (R21AG040498 and K24AG047908). Funders did not have a role in the study design, data collection, analysis, interpretation, writing, or manuscript submission. We are grateful for substantive input from Ron Thisted, Tina Shih, Robert Gibbons and David Cutler. We are also grateful to Rich Lindrooth and participants of the 2014 American Society of Health Economists and 2014 Academy Health Annual Meetings for helpful comments.

Contributor Information

Marcelo Coca Perraillon, University of Colorado, Department of Health Systems, Management & Policy.

R. Tamara Konetzka, University of Chicago, Department of Public Health Sciences.

Daifeng He, Swarthmore College, Department of Economics.

Rachel M. Werner, University of Pennsylvania.

References

- Arrow Kenneth J. Uncertainty and the Welfare Economics of Medical Care. The American Economic Review. 1963;53(5):941–973.

- Bearing Point. A National Survey of Hospital Discharge Planners. McLean, VA: Health Services Research & Management Group; 2004.

- Cameron A Colin, Trivedi Pravin K. Regression Analysis of Count Data. Vol. 53. Cambridge University Press; 2013.

- Castle Nicholas G, Engberg John. An Examination of Special Focus Facility Nursing Homes. The Gerontologist. 2010;50(3):400–407. doi: 10.1093/geront/gnq008.

- Centers for Medicare and Medicaid Services, CMS. Improving the Nursing Home Compare Web site: The Five-Star Nursing Home Quality Rating System. 2008.

- Centers for Medicare and Medicaid Services, CMS. Design for Nursing Home Compare Five-Star Quality Rating System: Technical Users Guide. 2012.

- Centers for Medicare and Medicaid Services, CMS. Nursing Home Compare. 2014.

- Dranove David, Satterthwaite Mark A. Monopolistic Competition when Price and Quality are Imperfectly Observable. The RAND Journal of Economics. 1992;23(4):518–534.

- Faber Marjan, Bosch Marije, Wollersheim Hub, Leatherman Sheila, Grol Richard. Public reporting in health care: how do consumers use quality-of-care information? A systematic review. Medical Care. 2009;47(1):1–8. doi: 10.1097/MLR.0b013e3181808bb5.

- Gelman Andrew, Imbens Guido. Why high-order polynomials should not be used in regression discontinuity designs. National Bureau of Economic Research; 2014.

- Grabowski David C, Elliot Amy, Leitzell Brigitt, Cohen Lauren W, Zimmerman Sheryl. Who are the innovators? Nursing homes implementing culture change. The Gerontologist. 2014;54(Suppl 1):S65–75. doi: 10.1093/geront/gnt144.

- Grabowski David C, Feng Zhanlian, Hirth Richard, Rahman Momotazur, Mor Vincent. Effect of Nursing Home Ownership on the Quality of Post-Acute Care: An Instrumental Variables Approach. Journal of Health Economics. 2013;32(1):12–21. doi: 10.1016/j.jhealeco.2012.08.007.

- Grabowski David C, Town Robert J. Does Information Matter? Competition, Quality, and the Impact of Nursing Home Report Cards. Health Services Research. 2011;46(6pt1):1698–1719. doi: 10.1111/j.1475-6773.2011.01298.x.

- He Daifeng, Konetzka R Tamara. Public Reporting and Demand Rationing: Evidence from the Nursing Home Industry. Health Economics. 2015;24(11):1437–51. doi: 10.1002/hec.3097.

- Hibbard Judith H, Berkman Nancy, McCormack Lauren A, Jael Elizabeth. The impact of a CAHPS report on employee knowledge, beliefs, and decisions. Medical Care Research and Review. 2002;59(1):104–16. doi: 10.1177/107755870205900106.

- Hillmer Michael P, Wodchis Walter P, Gill Sudeep S, Anderson Geoffrey M, Rochon Paula A. Nursing Home Profit Status and Quality of Care: Is There Any Evidence of an Association? Medical Care Research and Review. 2005;62(2):139–66. doi: 10.1177/1077558704273769.

- Hirth Richard A, Grabowski David C, Feng Zhanlian, Rahman Momotazur, Mor Vincent. Effect of Nursing Home Ownership on Hospitalization of Long-Stay Residents: An Instrumental Variables Approach. International Journal of Health Care Finance and Economics. 2014;14(1):1–18. doi: 10.1007/s10754-013-9136-3.

- Imbens Guido W, Lemieux Thomas. Regression Discontinuity Designs: A Guide to Practice. Journal of Econometrics. 2008;142(2):615–35.

- Kamimura Akiko, Banaszak-Holl Jane, Berta Whitney, Baum Joel AC, Weigelt Carmen, Mitchell Will. Do Corporate Chains Affect Quality of Care in Nursing Homes? The Role of Corporate Standardization. Health Care Management Review. 2007;32(2):168–78. doi: 10.1097/01.HMR.0000267794.55427.52.

- Konetzka R Tamara, Grabowski DC, Perraillon MC, Werner RM. Nursing Home 5-Star Rating System Exacerbates Disparities In Quality, By Payer Source. Health Affairs. 2015;34(5):819–27. doi: 10.1377/hlthaff.2014.1084.

- Konetzka R Tamara, Perraillon Marcelo Coca. Use Of Nursing Home Compare Website Appears Limited By Lack Of Awareness And Initial Mistrust Of The Data. Health Affairs. 2016;35(4):706–713. doi: 10.1377/hlthaff.2015.1377.

- Kramer AM, Fish R. The Relationship between Nurse Staffing Levels and the Quality of Nursing Home Care, Report to Congress, Phase 2. Washington, DC: 2001. Appropriateness of Minimum Nurse Staffing Ratios in Nursing Homes; pp. 1–26.

- Lee David S, Lemieux Thomas. Regression Discontinuity Designs in Economics. National Bureau of Economic Research; 2009.

- Mor Vincent. Improving the Quality of Long-Term Care with Better Information. The Milbank Quarterly. 2005;83(3):333–64. doi: 10.1111/j.1468-0009.2005.00405.x.

- Mor Vincent, Intrator Orna, Feng Zhanlian, Grabowski David C. The Revolving Door Of Rehospitalization From Skilled Nursing Facilities. Health Affairs. 2010;29(1):57–64. doi: 10.1377/hlthaff.2009.0629.

- Morris JN, Fries BE, Mehr DR, Hawes C, Phillips C, Mor V, Lipsitz LA. MDS Cognitive Performance Scale. Journal of Gerontology. 1994;49(4):M174–82. doi: 10.1093/geronj/49.4.m174.

- Mukamel Dana B, Weimer David L, Spector William D, Ladd Heather, Zinn Jacqueline S. Publication of Quality Report Cards and Trends in Reported Quality Measures in Nursing Homes. Health Services Research. 2008;43(4):1244–62. doi: 10.1111/j.1475-6773.2007.00829.x.

- National Center for Health Statistics. National Nursing Home Survey. Hyattsville, MD: 2004.

- Palsbo Susan E, Kroll Thilo. Meeting information needs to facilitate decision making: report cards for people with disabilities. Health Expectations: An International Journal of Public Participation in Health Care and Health Policy. 2007;10(3):278–285. doi: 10.1111/j.1369-7625.2007.00453.x.

- Perraillon MC, Brauner Daniel J, Konetzka R Tamara. Nursing Homes Response to Nursing Home Compare: The Provider Perspective. Medical Care Research and Review. 2017. doi: 10.1177/1077558717725165. Forthcoming.

- Peters Ellen, Dieckmann Nathan, Dixon Anna, Hibbard Judith H, Mertz CK. Less is more in presenting quality information to consumers. Medical Care Research and Review. 2007;64(2):169–90. doi: 10.1177/10775587070640020301.

- Rabe-Hesketh Sophia, Skrondal Anders. Multilevel and Longitudinal Modeling using Stata. Stata Press; 2008.