Abstract

Objectives

To describe and evaluate two different models of a clinical informaticist service.

Design

A case study approach, using various qualitative methods to illuminate the complexity of the project groups' experiences.

Setting

UK primary health care.

Interventions

Two informaticist projects to provide evidence based answers to questions arising in clinical practice and thereby support high quality clinical decision making by practitioners.

Results

The projects took contrasting and complementary approaches to establishing the service. One was based in an academic department of primary health care. The service was academically highly rigorous, remained true to its original proposal, included a prominent research component, and involved relatively little personal contact with practitioners. This group achieved the aim of providing general information and detailed guidance to others intending to set up a similar service. The other group was based in a service general practice and took a much more pragmatic, flexible, and facilitative approach. They achieved the aim of a credible, acceptable, and sustainable service that engaged local practitioners beyond the innovators and enthusiasts and secured continued funding.

Conclusion

An informaticist service should be judged on at least two aspects of quality—an academic dimension (the technical quality of the evidence based answers) and a service dimension (the facilitation of questioning behaviour and implementation). This study suggests that, while the former may be best achieved within an academic environment, the latter requires a developmental approach in which pragmatic service considerations are addressed.

What is already known about this topic

Many clinicians lack the skills or time to practise evidence based health care (that is, develop focused questions, search electronic databases, evaluate research papers, and extract a “clinical bottom line”)

A potential solution is an informaticist service in which clinicians submit questions by telephone, fax, or email and receive a structured response based on a thorough search and appraisal of the relevant literature

Preliminary descriptive studies of informaticist services suggest that some general practitioners will use them and that those who do generally find them useful

What this study adds

The study described two contrasting models of an informaticist service—an academically focused project that aimed to provide a central, highly rigorous answering service (a “laboratory test for questions”) and a service focused project (“friendly local facilitator”) that aimed to engage local general practices, promote questioning behaviour, and link with other local initiatives to support evidence based care

Both models had important strengths and notable limitations, from which general recommendations about the design of informaticist services could be drawn

Introduction

Evidence based health care involves deriving focused questions from clinical problems, searching systematically and thoroughly for best relevant evidence, critically appraising the evidence, and applying new knowledge in the clinical context. But, although most clinicians support the notion of evidence based health care in principle and wish to use evidence based information generated by others, only a tiny fraction seek to acquire all the requisite skills themselves.1 A study in British general practice found that the commonest reason cited for not practising evidence based health care was lack of time, followed by “personal and organisational inertia.”1 Acknowledging that this resonated with their own experience, Guyatt and colleagues recently formally withdrew their call that all practitioners should become fully competent in evidence based medicine,2 and others have called for the development of pragmatic, as well as systematic, approaches to supporting best practice.3

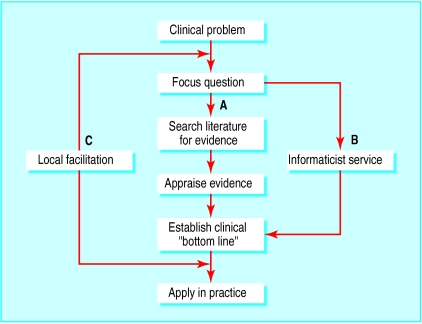

One such pragmatic approach might be to provide an informaticist service, in which a specialist individual (informaticist) or group could assist general practitioners, nurses, and other health professionals to answer questions arising in day to day practice (see fig 1).4 Preliminary research from the United States suggests that such services are effective and cost effective in improving practice in the hospital setting.5,6 However, despite similar theoretical benefits in primary care,7 the feasibility, acceptability, and impact on patient outcomes of such a service are yet to be demonstrated in this setting. This paper describes and contrasts two projects to establish an informaticist service for primary care staff.

Figure 1.

Evidence based health care involves deriving focused questions from clinical problems, searching and appraising the evidence, and applying the knowledge in practice (A). An informaticist service could perform this function, helping health professionals to answer questions arising in practice (B). A local facilitation service can help clinicians formulate questions and apply evidence to routine practice (C)

Background and methods

The projects

The projects were funded for two years from 1998 by North Thames Regional Office R & D Implementation Group. They were part of a programme of five projects exploring different ways of getting evidence into practice in primary care, which was evaluated by the Research into Practice in Primary Care in London Evaluation (RiPPLE) group at University College London. The RiPPLE group supported the projects' own evaluative activities and carried out a qualitative evaluation of the programme as a whole, with the aim of understanding more about the processes involved in changing clinical practice in primary care.

The brief for the two projects reported here was to test the value of a clinical informaticist, whose role would be “to find, critically appraise, and summarise evidence to inform clinical decision making.” Two groups were funded. One was based in a university department of primary care, at Imperial College London, with various local stakeholders having signed their support. The other, based in the new town of Basildon, Essex, was led by a general practitioner who had retired from clinical practice but was still active in education, research, and development locally, and it worked in collaboration with the community healthcare trust and the health authority. In both cases, participants (that is, those invited to send questions to the service) were general practitioners, practice nurses, and nurse practitioners based in practices that had joined the study.

Evaluation methods

The RiPPLE team adopted a holistic approach, using multiple methods. The role of the researcher in this approach has been described as that of a “bricoleur” or jack of all trades, who deploys whatever strategies, methods, or empirical materials are at hand in a pragmatic and self reflexive manner in order to secure an in depth understanding of the phenomenon in question from as many angles as possible.8 Data collection involved documentary analysis, participant and non-participant observation, and semistructured interviews. We had access to all minutes, reports, and other relevant documents generated by the projects. We participated in all steering group and other ad hoc meetings for both projects. At various stages over the two year funded period, we interviewed staff on both projects and local primary care practitioners receiving the informaticist services. (See box A on bmj.com for further details of methods used and analysis of data.)

Results

Aims and objectives

The original proposal from the Imperial College group gave 12 objectives (the main ones are listed in box B on bmj.com), all with a research or academic focus—that is, the project explicitly aimed to use rigorous methods to generate descriptive and evaluative data that would inform the design of informaticist services more generally. The Basildon project effectively limited its aims to setting up the service locally, seeing if it worked, and helping practice and community staff implement the answers to questions.

The informaticist's role

For the Imperial College group, the role of the informaticist was to provide answers from the clinical research literature to questions submitted by post or fax by primary care staff. The goal of the project was to achieve technical excellence and rapid turnaround for this service; to address specific research questions such as whether the service changed clinicians' knowledge of, or attitudes to, evidence based health care; and to document which resources were used in answering the questions. The expected standard of work was to provide rigorously researched answers to all questions submitted, even if that meant waiting for obscure references and appraising lengthy papers. Personal visits by project team workers were offered when practices enrolled in the project. The box gives details of the methods used by the project teams.

Methods used by the two informaticist service projects

Imperial College project

Metaphor for the service

“Like a laboratory test service”

Methods used to encourage questioning behaviour

Research assistant “reminded” participants. Project worker saw priority as maintaining good turnaround time for questions

In later stages, project website (with limited access) and newsletter to raise awareness of the service

Method for dealing with questions

Participants encouraged to submit, using fax or email, an answerable, three part clinical question (such as relating to course and outcome of disease, risk factors, efficacy of treatment, etc) plus details of how the question arose

If question needed refining, discussion was sought with questioner by telephone or fax, but this rarely occurred in practice; the reformulated question was sent with the answer

Question was addressed via thorough search of literature and appraisal of all high quality, relevant secondary and primary sources, even if this required a lengthy wait for obscure papers

Answer sent by fax or post on a form that included the original question and the question on which the search was based plus questionnaire to seek feedback and evaluation

Additional services offered

Towards end of project, training offered to small cadre of local general practitioners in searching and critical appraisal by informaticist

Basildon project

Metaphor for the service

“Friendly local facilitator”

Methods used to encourage questioning behaviour

Personal contact seen as the key to stimulating participants to ask questions. Informaticist visited practices regularly or invited general practitioners to lunchtime meetings where examples of clinical questions asked (and answers) were presented and discussed

Specific focus on engaging nurses, with involvement of nursing hierarchies and visits to nursing teams; this task was difficult and took time to bear fruit—eventually a nurse facilitator was recruited

Method for dealing with questions

Participants encouraged to ask “any questions,” including clinical and organisational ones

Questions refined through face to face dialogue

Searches limited to mainstream, easily accessible journals and reliable secondary sources; no attempt was made to produce an exhaustive answer: philosophy was to “find out what we can and then share it”

Answer sent to questioner by fax or post, with follow up by telephone or in person to ask if it was helpful, but this was not done systematically or evaluated formally

Previous questions shared with other interested participants

Additional services offered

Making information accessible (such as production and distribution of desk mat for assessing and managing cardiovascular risk)

Aimed to provide help in applying answer in practice

In contrast, the Basildon group wanted their informaticist to “identify the important questions in primary care” by talking to general practitioners about their problems in general, and to offer information and help that had a bearing on these. They called their project worker a “facilitator,” as they thought clinicians would not understand the term informaticist. His training included presentation skills as well as searching and critical appraisal. From the outset, he was expected to make face to face contact with clinicians to explain the service, elicit questions, and feed back information (ensuring that the client had got what he or she wanted). The Basildon facilitator worked to an explicit policy of using trusted secondary sources for most questions and not spending large amounts of time selecting or appraising primary sources. Quality control was provided by the project leader, who closely supervised his work.

Establishing local links

Both projects were meant in principle to become locally embedded. At the time bids were submitted, it was felt that this would involve working with newly emerging primary care groups and linking in with local developments in research and postgraduate education. Both groups found this more difficult than anticipated. They had assumed, for example, that the informaticist post would be filled by a local general practitioner, but, in the absence of suitable local applicants, both posts were filled from outside the area.

Both groups adopted a strategy of starting with a small group of innovators and building on local enthusiasm. The idea for the Imperial College project grew out of a research club that had been started for local general practitioners two years previously; one member had shown enthusiasm for the suggested informaticist service and offered two health centres as pilot practices. These were believed to have good practice information systems, an interest in research and clinical effectiveness, a willingness to innovate, and a culture of working together. Unfortunately, because of competing demands, the volunteer “product champion” did not deliver the anticipated contacts and commitment.

After a disappointing response from the pilot practices, the Imperial College group decided to “work with volunteers rather than conscripts,” meaning committed and reflective individuals who would spontaneously submit questions for which evidence based answers could be prepared. Although they visited practices at the outset to introduce the project, they did not see it as a priority to provide an “outreach” service or to teach questioning behaviour, relying largely on the production of answers as a stimulus to further questioning behaviour.

The Basildon project was sited within a two-practice health centre which had strong research and educational interests. Expanding beyond these innovators was part of the plan from the outset, but it was recognised that this would be challenging. The group stuck to their aim of offering the service to all primary care and community staff, despite initial difficulties with engaging nurses. They adopted a dual strategy of working intensively with practices within the local primary care group and responding to enthusiasts across the whole health authority.

An important difference between the research focused Imperial College project and the service focused Basildon project was that the Imperial College group abandoned their pilot practices at the end of the pilot phase, partly because of a perceived problem of “contamination” and partly because of the disappointing uptake of the service by these participants. In contrast, the Basildon group, who also experienced a slow start even among the so called enthusiasts, were able to “snowball” from a small local base.

How the project groups viewed themselves

As might be expected from an academic department with an international research reputation, the Imperial College project was designed, implemented, and internally evaluated as a research study with distinct phases. The group aimed to develop a rigorous, efficient, and reproducible service to doctors and nurses who sought evidence based answers to clinical questions; to describe the nature of those questions; and to develop a database of answers. In their original proposal, the group used the analogy of the laboratory test to describe their vision: questions would be sent off to a central processing service that would have highly trained staff and high quality procedures, “results” would be sent out within a reasonable time, and the quality of the service would be expressed in terms of the accuracy and timeliness of responses and clients' satisfaction.

Because the Imperial College project was designed as a traditional research study, an inability to adapt or evolve was almost a defining feature from the outset. Research requires a predefined question and an agreed study protocol, which should be followed through without “violation.”9 The downside of conventional research rigour is therefore rigidity and inflexibility in the face of a changing external environment. The goal of the Imperial College project was delivering the research; what happened afterwards was, in an academic sense, less important. It was undoubtedly a disadvantage that none of the Imperial group was a service general practitioner in the study catchment area.

The Basildon group saw their initiative not as a discrete research project into the role of the informaticist but as a starting impetus for wider cultural change towards high quality, evidence based care in a locality with a reputation for variable standards and where the project leader was an established service general practitioner. Their focus was on achieving a shared vision and integrating seamlessly into the “business as usual” of primary health care.

The adaptability and tenacity of the Basildon group, helped by a small and committed steering group, were crucial elements in establishing the service. For example, it was initially the project administrator's job to make contact with practices and arrange meetings between clinicians and the informaticist, but she had little success as reception staff were very protective of clinicians' time. The project leader therefore took on the task of “fronting” the project for the first year, with much greater success. Doctors who were initially reluctant to attend lunchtime meetings often became involved in discussions and stayed for two hours or more. The group noted the importance of face to face contacts and social interaction and gave them increased priority in their dissemination strategy.

The original plan in Basildon was to engage nurses via their management hierarchy, but this was unsuccessful. A different strategy was therefore devised, which involved face to face contact with individual nurses. A district nurse attended the project steering group and became an enthusiastic convert, engaging many of her colleagues. The facilitator also spent a day accompanying one district nurse on her rounds and helping her generate questions from clinical cases.

The Basildon group's initial aim was to align the new service with the newly formed primary care group, but this largely failed. However, the project was sufficiently adaptable to link in with a new clinical effectiveness unit being established within the health authority. The determined work of the project leader in spreading the vision for the service led to several new applicants for the expanding facilitator post, which is now split among four local general practitioners and a nurse, each working two sessions a week in his or her locality.

Questions and questioning

The quantity and nature of the questions in the two projects are described in separate reports10,11 and are summarised in the table on bmj.com. Both project groups found that relatively few primary care staff spontaneously submitted questions to the service.

The Imperial College group preferred focused, single topic, “three part” questions (population-intervention-outcome or population-exposure-outcome) that could be answered from research literature. Questions not phrased in this way were reformulated before being answered. The Imperial College informaticist commented that many of the questions were relatively idiosyncratic (such as queries about vitamins or alternative remedies featured in the popular press) and not related to what she described as “the burning questions of primary care” such as how to manage diabetes or cardiovascular risk.
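As an illustration only, the three part structure described above can be pictured as a small record with a check that all three parts are present. The sketch below is in Python; the class name, field names, helper methods, and the example question are hypothetical and are not taken from either project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ThreePartQuestion:
    """Hypothetical record for a focused, single topic clinical question."""

    population: str
    intervention_or_exposure: str
    outcome: str
    context: Optional[str] = None  # free text on how the question arose

    def is_answerable(self) -> bool:
        # Assumption for this sketch: a question counts as "answerable"
        # only when all three parts contain some non-blank text.
        parts = (self.population, self.intervention_or_exposure, self.outcome)
        return all(part.strip() for part in parts)

    def as_sentence(self) -> str:
        # Render the three parts as one plain-language question.
        return (
            f"In {self.population}, does {self.intervention_or_exposure} "
            f"affect {self.outcome}?"
        )


# Invented example, not a question submitted to either service.
example = ThreePartQuestion(
    population="adults with type 2 diabetes",
    intervention_or_exposure="structured dietary advice",
    outcome="glycaemic control",
)
```

A question with a blank part would fail the check and, on the Imperial College model, would be reformulated before a search was run.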

The Imperial College group included as part of their research the link between questioning behaviour and clinical practice. For each question submitted, they collected data via a questionnaire on how the question arose, what the practitioner would otherwise have done, and whether the answer supplied was useful, relevant, and likely to be incorporated into practice (though they did not attempt to verify this). Initially, they assumed that participants would have little interest in questions asked by others and had not considered a mechanism for sharing or disseminating questions along with their evidence based answers. The most enthusiastic of the pilot practices posted their questions and answers on a practice intranet; subsequently, the project group established a website with a question and answer library, but unfortunately, most local practices were unable to access it.

The Basildon group invited questions on any topic and welcomed questions about the organisation of care. They even took seriously a question about the effect of music in the waiting room. The project leader identified a need for tools to assess risk of coronary heart disease and, using the New Zealand guidelines, produced laminated desk mats of colour risk charts, which the facilitator distributed in person as a kind of “icebreaker” for further dialogue. This example illustrates the difference in focus: having identified an important question with the help of the project leader, the Basildon facilitator developed an aid to implementation and used his social skills and contacts to disseminate the answer (and the tool) as widely as possible.

Evaluation and outputs

The Imperial College group invested heavily in documenting, monitoring, and asking participants to assess the service. This enabled them to provide a detailed and valuable written report on the frequency of questioning, the turnaround time for responding, the nature of the questions asked, and the extent to which the responses were considered to have affected patient care. While they took these research responsibilities seriously, they did not view it as their key objective to ensure that the initiative continued beyond the funded project. Rather, they felt their main task was to pass on information and skills to those motivated and able to benefit from them.

In contrast, the Basildon group were, from the outset, focused on establishing a sustainable local initiative and were wary of becoming “diverted” into evaluation. The project leader felt that this would require skills not available in the group and that detailed, systematic evaluation was inappropriate in the early, “fluid” phase of the project. Instead, the project's resources were put into promoting the repeated personal contact between the facilitator and potential participants, which they felt was the key to promoting questioning behaviour. The Basildon group's final report to the funder was described as a “log of problems, solutions, successes and failures” and was essentially the project leader's reflections on the experience supported by some quantitative data and examples of questions.

Different implicit models of change

The Imperial College group followed the conventional approach taken in evidence based medicine, viewing questioning as an individual psychological process.4,12–15 Clinical questions were considered to arise from a doctor's (or nurse's) thoughts during a consultation. Hence, this group oriented their informaticist service towards individual doctors (and nurse practitioners) rather than practices or teams.

In contrast, the Basildon initiative was predicated on a complexity approach to change. Primary health care teams were viewed as complex systems, and changes in practice were seen as the result of interplay between individual reflection, social interaction, team relations, and organisational and professional culture. The project group were conscious of their own need to grow, adapt, and respond sensitively to feedback in order to survive and integrate. They were resistant to the use of the word “project” or associated terms such as stages, phases, timetables, or boundaries. Practices, they recognised, are suspicious of time limited and often high profile projects that appear in response to politically or academically driven funding and which create dependency before disappearing with little to show except descriptive reports.9

Discussion

Estimates of the frequency of questioning in clinical practice vary considerably. One study in secondary care found about five new questions generated per patient seen.16 One study in primary care found one question asked for every four patients,13 and another found 0.5 per half day.17 Other studies suggest that most questions in primary care, especially those not considered urgent or easily answerable, go unanswered.14 Only one study has been published on community nurses' questioning behaviour in primary care, and no firm conclusions were drawn.18 A small qualitative study found that practice nurses said they needed clinical trial evidence not primarily for immediate clinical decision making but in order to understand the rationale behind national or local guidelines or protocols on particular topics and, perhaps more importantly, to support their role as information providers to patients.19

The published literature on informaticist services is sparse. One small Australian study ran for a month and drew 20 questions from nine general practitioners; no general conclusions about the transferability of the service could be drawn.15 Preliminary data from the Welsh ATTRACT study showed that a fast (6 hour turnaround) questioning service using a pragmatic search protocol and fronted by a librarian informaticist was popular and led to (or supported) changes in practice in about half the cases; the general practitioners in this study did not seem to be concerned that the informaticist was not clinically qualified.20 Most questions submitted by general practitioners to distant informaticist services concern the choice or dose of a drug, the cause of a symptom, or the selection of a diagnostic test.20 We are not aware of any studies that addressed initiatives to promote questioning behaviour or help practitioners apply the results in practice.

The Imperial College group's approach was academically oriented—that is, their work in developing the informaticist service was systematic, focused, thorough, consistent, and rigorous. Their main intended output was to provide general information and detailed guidance to others intending to set up a similar service, and they have achieved this aim. In contrast, the Basildon group's approach was service oriented—that is, their work was locally directed, pragmatic, flexible, emergent, and based to a high degree on personal contact. Their main intended output was a credible, acceptable, and sustainable service that engaged local practitioners beyond the innovators and enthusiasts and secured continued funding; and they, in turn, achieved this aim.

Given their different emphases from the outset, it would be invidious to compare these projects with a view to stating the “correct” way to proceed. When judged by academic criteria, the informaticist service at Imperial College scores highly, but the group might be criticised for providing an “ivory tower” service with which only those with prior understanding of evidence based medicine can fully engage. When judged by service criteria, the Basildon project scores highly, but this group might be criticised for lacking a clear internal quality standard for the evidence they provide.

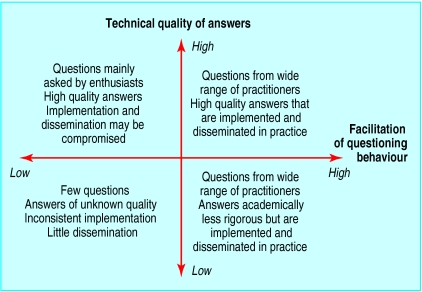

We believe that the two approaches to information support in primary health care (and probably beyond it) are not mutually exclusive but are two essential dimensions of a high quality service. To promote questioning behaviour among clinicians, address those questions competently, disseminate the results to others likely to benefit, and ensure that the results are applied in practice requires both an academic connection (the “laboratory test” service) and a service connection (the “friendly local facilitator”) (see fig 1). Figure 2 shows their complementary nature.

Figure 2.

Two dimensions of a clinical informaticist service

The evidence on getting research findings into practice is diffuse and conflicting, but some consistent messages are emerging. Sustained behaviour change among clinicians occurs more readily when interventions are locally driven, multifaceted, perceived as relevant, personalised (such as through social influence and local opinion leaders), supported by high quality evidence, and delivered via interactive educational methods, and when they include a prompt relating to the individual patient encounter.21 Potentially, an informaticist service that took the best elements of both projects described here (the top right quadrant of fig 2) could combine many of these known requirements for success.

So far, the published literature has focused exclusively on describing and evaluating the academic dimension, and there is now an urgent need to address and refine the service dimension.4,13–17,20 We suggest that such initiatives should not be undertaken as conventional research projects but should take a developmental approach in which the pragmatic service considerations are given validity and voice.

Acknowledgments

We thank our colleagues on the project teams (Mary Pierce, Ayyaz Kauser, Mandy Cullen, and Joan Fuller); the steering group members; and the general practitioners, nurses, and practice staff involved for their contributions to the primary care informaticist services and for generously giving their time to undertake and evaluate this work.

Footnotes

Funding: The projects and the evaluation were funded by North Thames Region R & D Implementation Group.

Competing interests: None declared.

Further details of the study's methods and results appear on bmj.com

References

- 1. McColl A, Smith H, White P, Field J. General practitioners' perceptions of the route to evidence based medicine: a questionnaire survey. BMJ. 1998;316:361–365. doi: 10.1136/bmj.316.7128.361.

- 2. Guyatt GH, Meade MO, Jaeschke RZ, Cook DJ, Haynes RB. Practitioners of evidence based care. Not all clinicians need to appraise evidence from scratch but all need some skills [see comments]. BMJ. 2000;320:954–955. doi: 10.1136/bmj.320.7240.954.

- 3. Clarke J, Wentz R. Pragmatic approach is effective in evidence based health care [letter]. BMJ. 2000;321:566–567.

- 4. Davidoff F, Florance V. The informationist: a new health profession? Ann Intern Med. 2000;132:996–998. doi: 10.7326/0003-4819-132-12-200006200-00012.

- 5. Scura G, Davidoff F. Case-related use of the medical literature. Clinical librarian services for improving patient care. JAMA. 1981;245:50–52.

- 6. Veenstra RJ, Gluck EH. A clinical librarian program in the intensive care unit. Crit Care Med. 1992;20:1038–1042. doi: 10.1097/00003246-199207000-00023.

- 7. Swinglehurst DA, Pierce M. Questioning in general practice—a tool for change. Br J Gen Pract. 2000;50:747–750.

- 8. Denzin NK, Lincoln YS, editors. Handbook of qualitative research. London: SAGE; 1994.

- 9. Harries J, Gordon P, Plamping D, Fischer M. Projectitis: spending lots of money and the trouble with project bidding. Whole systems thinking working paper series. London: King's Fund; 1998.

- 10. Martin P, Kauser A. An informaticist working in primary care. A descriptive study. Health Inf J. 2001;7:66–70.

- 11. Swinglehurst DA, Pierce M, Fuller JC. A clinical informaticist to support primary care decision making. Qual Health Care. 2001;10:245–249. doi: 10.1136/qhc.0100245...

- 12. Ely JW, Osheroff JA, Gorman PN, Ebell MH, Chambliss ML, Pifer EA, et al. A taxonomy of generic clinical questions: classification study. BMJ. 2000;321:429–432. doi: 10.1136/bmj.321.7258.429.

- 13. Barrie AR, Ward AM. Questioning behaviour in general practice: a pragmatic study. BMJ. 1997;315:1512–1515. doi: 10.1136/bmj.315.7121.1512.

- 14. Gorman PN, Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995;15:113–119. doi: 10.1177/0272989X9501500203.

- 15. Hayward JA, Wearne SM, Middleton PF, Silagy CA, Weller DP, Doust JA. Providing evidence-based answers to clinical questions. A pilot information service for general practitioners. Med J Aust. 1999;171:547–550.

- 16. Osheroff JA, Forsythe DE, Buchanan BG, Bankowitz RA, Blumenfeld BH, Miller RA. Physicians' information needs: analysis of questions posed during clinical teaching. Ann Intern Med. 1991;114:576–581. doi: 10.7326/0003-4819-114-7-576.

- 17. Chambliss ML, Conley J. Answering clinical questions. J Fam Pract. 1996;43:140–144.

- 18. Buxton V, Janes T, Harding W. Using research in community nursing. Nurs Times. 1998;94:55–59.

- 19. Greenhalgh T, Douglas H-R. Experiences of general practitioners and practice nurses of training courses in evidence-based health care: a qualitative study. Br J Gen Pract. 1999;49:536–540.

- 20. Brassey J, Elwyn G, Price C, Kinnersley P. Just in time information for clinicians: a questionnaire evaluation of the ATTRACT project. BMJ. 2001;322:529–530. doi: 10.1136/bmj.322.7285.529.

- 21. Greenhalgh T. Implementing research findings. In: Greenhalgh T, editor. How to read a paper: the basics of evidence based medicine. London: BMJ Books; 2000. pp. 179–199.
