Abstract

Speech-in-noise (SIN) comprehension deficits in older adults have been linked to changes in both subcortical and cortical auditory evoked responses. However, older adults’ difficulty understanding SIN may also be related to an imbalance in signal transmission (i.e., functional connectivity) between brainstem and auditory cortices. By modeling high-density scalp recordings of speech-evoked responses with sources in brainstem (BS) and bilateral primary auditory cortices (PAC), we show that beyond attenuating neural activity, hearing loss in older adults compromises the transmission of speech information between subcortical and early cortical hubs of the speech network. We found that the strength of afferent BS→PAC neural signaling (but not the reverse efferent flow; PAC→BS) varied with mild declines in hearing acuity and this “bottom-up” functional connectivity robustly predicted older adults’ performance in a SIN identification task. Connectivity was also a better predictor of SIN processing than unitary subcortical or cortical responses alone. Our neuroimaging findings suggest that in older adults (i) mild hearing loss differentially reduces neural output at several stages of auditory processing (PAC > BS), (ii) subcortical-cortical connectivity is more sensitive to peripheral hearing loss than top-down (cortical-subcortical) control, and (iii) reduced functional connectivity in afferent auditory pathways plays a significant role in SIN comprehension problems.

Keywords: Aging, auditory evoked potentials, auditory cortex, frequency-following response (FFR), functional connectivity, source waveform analysis, neural speech processing

INTRODUCTION

Difficulty perceiving speech in noise (SIN) is a hallmark of aging. Hearing loss and reduced cognitive flexibility may contribute to speech comprehension deficits that emerge after the fourth decade of life (Humes, 1996; Humes et al., 2012). Notably, older adults’ SIN difficulties are present even without substantial hearing impairments (Gordon-Salant and Fitzgibbons, 1993; Schneider et al., 2002), suggesting robust speech processing requires more than audibility.

Emerging views of aging suggest that in addition to peripheral changes (i.e., cochlear pathology) (Humes, 1996), older adults’ perceptual SIN deficits might arise due to poorer decoding and transmission of speech sound features within the brain’s central auditory pathways (Anderson et al., 2013a; Caspary et al., 2008; Peelle et al., 2011; Schneider et al., 2002; Wong et al., 2010). Although “central presbycusis” offers a powerful framework for studying the perceptual consequences of aging (Humes, 1996), few studies have explicitly investigated how the auditory system extracts and transmits features of the speech signal across different levels of the auditory neuroaxis. Senescent-related changes have been observed in pontine, midbrain, and cortical neurons (Peelle and Wingfield, 2016). Yet, such insight into brainstem-cortex interplay has been limited to animal models.

Age-related changes in hierarchical auditory processing can be observed in scalp-recorded frequency-following responses (FFR) and event-related brain potentials (ERPs), dominantly reflecting activity of midbrain and cerebral structures, respectively (Bidelman et al., 2013). Both speech-FFRs (Anderson et al., 2013b; Bidelman et al., 2014b) and ERPs (Alain et al., 2014; Bidelman et al., 2014b; Tremblay et al., 2003) reveal age-related changes in the responsiveness (amplitude) and precision (timing) of how subcortical and cortical stages of the auditory system represent complex sounds. In our studies we record these potentials simultaneously and show that aging is associated with increased redundancy (higher shared information) between brainstem and cortical speech representations (Bidelman et al., 2014b; Bidelman et al., 2017). Our previous findings imply that SIN problems in older listeners might result from aberrant transmission of speech signals from brainstem en route to auditory cortex, a possibility that has never been formally tested.

A potential candidate for these central encoding/transmission deficits in aging (Humes, 1996) could be the well-known afferent and efferent (corticofugal) projections that carry neural signals bidirectionally between brainstem and primary auditory cortex (BS↔PAC) (Bajo et al., 2010; Suga et al., 2000). Descending corticocollicular (PAC→BS) fibers have been shown to calibrate sound processing of midbrain neurons by fine tuning their receptive fields in response to behaviorally relevant stimuli (Suga et al., 2000). Germane to our studies, corticofugal efferents drive learning-induced plasticity in animals (Bajo et al., 2010) and may also account for the neuroplastic enhancements observed in human FFRs across the age spectrum (Anderson et al., 2013b; Musacchia et al., 2007; Wong et al., 2007). While assays of olivocochlear (peripheral efferent) function are well-established (e.g., otoacoustic emissions; de Boer and Thornton, 2008) there have been no direct measurements of corticofugal (central efferent) system function in humans, despite its assumed role in complex listening skills like SIN (Slee and David, 2015).

To elucidate brainstem-cortical reciprocity in humans, we recorded neuroelectric FFR and ERP responses during active speech perception. Examining older adults with normal or mild hearing loss for their age allowed us to investigate how hierarchical coding changes with declining sensory input. We used source imaging and functional connectivity analyses to parse activity within and directed transmission between sub- and neo-cortical levels. To our knowledge, this is the first study to document afferent and corticofugal efferent function in human speech processing. We hypothesized (i) hearing loss would alter the relative strengths of afferent (BS→PAC) and/or corticocollicular efferent (PAC→BS) signaling and more importantly, (ii) poorer connectivity would account for older adults’ performance in SIN identification. Beyond aging, such findings would also establish a biological mechanism to account for the pervasive, parallel changes in brainstem and cortical speech-evoked responses previously observed in highly skilled listeners (e.g., musicians) and certain neuropathologies (Bidelman and Alain, 2015; Bidelman et al., 2017; Musacchia et al., 2008).

METHODS

Participants

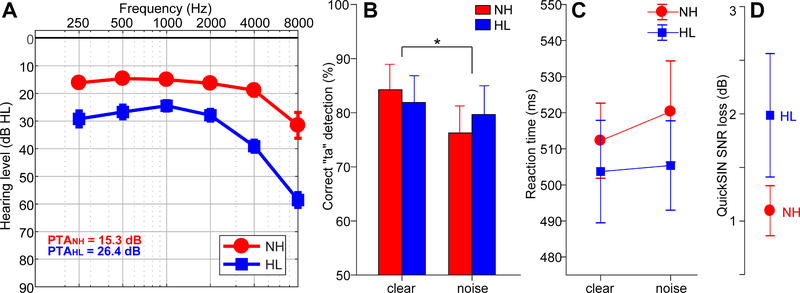

Thirty-two older adults aged 52–75 years were recruited from the Greater Toronto Area to participate in our ongoing studies on aging and the auditory system. None reported history of neurological or psychiatric illness. Pure-tone audiometry was conducted at octave frequencies between 250 and 8000 Hz. Based on listeners’ hearing thresholds, the cohort was divided into normal and hearing-impaired groups (Fig. 1A). In this study, normal-hearing (NH; n=13) listeners had average thresholds (250 to 8000 Hz) better than 25 dB HL across both ears, whereas listeners with hearing loss (HL; n=19) had average thresholds poorer than 25 dB HL. This division resulted in pure-tone averages (PTAs) (i.e., mean of 500, 1000, 2000 Hz) that were ~10 dB better in NH compared to HL listeners (mean ±SD; NH: 15.3±3.27 dB HL, HL: 26.4±7.1 dB HL; t2.71=−5.95, p<0.0001; NH range = 8.3–20.83 dB HL, HL range = 15.8–45 dB HL). PTA between ears was symmetric in both the NH [t12=−0.15, p=0.89] and HL [t18=−2.02, p=0.06] groups. Our definition of hearing impairment further helped the post hoc matching of NH and HL listeners on other demographic variables while maintaining adequate sample sizes per group. It also allows for a direct comparison to published full-brain functional connectivity data reported for this cohort (Bidelman et al., 2019). Both groups had signs of age-related presbycusis at very high frequencies (8000 Hz), which is typical in older adults. However, it should be noted that the audiometric thresholds of our NH listeners were better than the hearing typically expected based on the age range of our cohort, even at higher frequencies (Cruickshanks et al., 1998; Pearson et al., 1995). Importantly, besides hearing, the groups were otherwise matched in age (NH: 66.2±6.1 years, HL: 70.4±4.9 years; t2.22=−2.05, p = 0.052) and gender balance (NH: 5/8 male/female; HL: 11/8; Fisher’s exact test, p=0.47). Age and hearing loss were not correlated in our sample (Pearson’s r=0.29, p=0.10).
The study was carried out in accordance with relevant guidelines and regulations and was approved by the Baycrest Hospital Human Subject Review Committee. Participants gave informed written consent before taking part in the study and received a small honorarium for their participation.
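
For illustration only, the audiometric grouping rule and the PTA definition given above can be sketched as follows. The function names and the example audiogram are hypothetical, and assigning a listener whose average threshold is exactly 25 dB HL to the HL group is an assumption, since the text does not specify that boundary case.

```python
from statistics import mean

OCTAVE_FREQS_HZ = (250, 500, 1000, 2000, 4000, 8000)  # audiometric test frequencies
PTA_FREQS_HZ = (500, 1000, 2000)                       # frequencies entering the PTA
HL_CUTOFF_DB = 25.0                                    # group cutoff in dB HL


def pure_tone_average(audiogram):
    """Mean threshold (dB HL) at 500, 1000, and 2000 Hz for one ear."""
    return mean(audiogram[f] for f in PTA_FREQS_HZ)


def classify_listener(left_ear, right_ear):
    """Return 'NH' if the mean threshold (250-8000 Hz, both ears) is
    better (lower) than 25 dB HL, otherwise 'HL'.
    Assumption: a value of exactly 25 dB HL is labeled 'HL'."""
    avg = mean(ear[f] for ear in (left_ear, right_ear) for f in OCTAVE_FREQS_HZ)
    return "NH" if avg < HL_CUTOFF_DB else "HL"


# Hypothetical audiogram (dB HL), used for both ears
example_ear = {250: 10, 500: 10, 1000: 15, 2000: 20, 4000: 30, 8000: 45}
example_group = classify_listener(example_ear, example_ear)
example_pta = pure_tone_average(example_ear)
```

Note that under this rule a listener can show elevated thresholds at 8000 Hz and still fall in the NH group, as described for the cohort above.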

Figure 1: Audiometric and perceptual results.

(A) Audiograms for listeners with normal hearing (NH) and hearing loss (HL) pooled across ears. Hearing was ~10 dB better in NH vs. HL listeners. (B) Behavioral accuracy for detecting infrequent /ta/ tokens in clear and noise-degraded conditions. Noise-related declines in behavioral performance were prominent but no group differences were observed. (C) Reaction times (RTs) for speech detection were similar between groups and speech SNRs. (D) HL listeners showed more variability and marginally poorer QuickSIN performance than NH listeners. errorbars = ± s.e.m., *p< 0.05.

Stimuli and task

Three tokens from the standardized UCLA version of the Nonsense Syllable Test were used in this study (Dubno and Schaefer, 1992). These tokens were naturally produced English consonant-vowel phonemes (/ba/, /pa/, and /ta/), spoken by a female talker. Each phoneme was 100 ms in duration and matched in terms of average root mean square sound pressure level (SPL). Each had a common voice fundamental frequency (mean F0=150 Hz) and first and second formants (F1=885, F2=1389 Hz). This relatively high F0 ensured that FFRs would be of dominantly subcortical origin and cleanly separable from cortical activity (Bidelman, 2018), since PAC phase-locking (cf. “cortical FFRs”; Coffey et al., 2016) is rare above ~100 Hz (Bidelman, 2018; Brugge et al., 2009). CVs were presented in both clear (i.e., no noise) and noise-degraded conditions. For each noise condition, the stimulus set included a total of 3000 /ba/, 3000 /pa/, and 210 /ta/ tokens (spread evenly over three blocks to allow for breaks).

For each block, speech tokens were presented back-to-back in random order with a jittered interstimulus interval (95–155 ms, 5 ms steps, uniform distribution). Frequent (/ba/, /pa/) and infrequent (/ta/) tokens were presented according to a pseudo-random schedule such that at least two frequent stimuli intervened between target /ta/ tokens. Listeners were asked to respond each time they detected the target (/ta/) via a button press on the computer. Reaction time (RT) and detection accuracy (%) were logged. These procedures were then repeated using an identical speech triplet mixed with eight-talker noise babble (cf. Killion et al., 2004) at a signal-to-noise ratio (SNR) of 10 dB. Thus, in total, there were 6 blocks (3 clear, 3 noise). The babble was presented continuously so that it was not time-locked to the stimulus, providing a constant backdrop of interference in the noise condition (e.g., Alain et al., 2012; Bidelman, 2016; Bidelman and Howell, 2016). Comparing behavioral performance between clear and degraded stimulus conditions allowed us to assess the impact of acoustic noise and differences between normal and hearing-impaired listeners in speech perception. Importantly, our task ensured that FFRs/ERPs were recorded online, during active speech perception. This helps circumvent issues in interpreting waveforms recorded across different attentional states or task demands (for discussion, see Bidelman, 2015b).
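
One way to generate a block that satisfies the constraints described above (at least two frequent tokens between targets; interstimulus intervals drawn uniformly from 95–155 ms in 5 ms steps) is sketched below. This is not the presentation code used in the study. The function name `make_block` is hypothetical, and the per-block counts (1000 /ba/, 1000 /pa/, 70 /ta/) assume the stated totals were split evenly over three blocks.

```python
import random

ISI_STEPS_MS = list(range(95, 160, 5))  # 95, 100, ..., 155 ms


def make_block(n_ba=1000, n_pa=1000, n_ta=70, min_gap=2, seed=None):
    """Return (tokens, isis_ms) for one block.

    Targets ('ta') are inserted into a shuffled stream of frequent tokens
    so that at least `min_gap` frequent tokens separate successive targets.
    """
    rng = random.Random(seed)
    frequent = ["ba"] * n_ba + ["pa"] * n_pa
    rng.shuffle(frequent)
    n_freq = len(frequent)

    # Draw sorted distinct offsets, then stretch them so that the number of
    # frequent tokens preceding successive targets differs by >= min_gap.
    span = n_freq - (n_ta - 1) * (min_gap - 1)
    offsets = sorted(rng.sample(range(span + 1), n_ta))
    n_before = {s + i * (min_gap - 1) for i, s in enumerate(offsets)}

    tokens = []
    for k, tok in enumerate(frequent):
        if k in n_before:          # a target preceded by exactly k frequents
            tokens.append("ta")
        tokens.append(tok)
    if n_freq in n_before:         # a target at the very end of the block
        tokens.append("ta")

    isis_ms = [rng.choice(ISI_STEPS_MS) for _ in tokens]
    return tokens, isis_ms


tokens, isis_ms = make_block(seed=1)
```

Sampling the target positions first and then stretching them guarantees the spacing constraint by construction, which avoids rejection sampling over whole sequences.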

Stimulus presentation was controlled by MATLAB (The Mathworks, Inc.; Natick, MA) routed to a TDT RP2 interface (Tucker-Davis Technologies; Alachua, FL) and delivered binaurally through insert earphones (ER-3; Etymotic Research; Elk Grove Village, IL). The speech stimuli were presented at an intensity of 75 dBA SPL (noise at 65 dBA SPL) using alternating polarity and FFRs/ERPs were derived by summing an equal number of condensation and rarefaction responses. This approach helps minimize stimulus artifact and cochlear microphonic from scalp recordings (which flip with polarity) and accentuates portions of the FFR related to signal envelope, i.e., fundamental frequency (F0) (Aiken and Picton, 2008; Skoe and Kraus, 2010a; Smalt et al., 2012).
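
The logic of the alternating-polarity approach can be illustrated with a toy simulation. The sketch below assumes, purely for demonstration, that a recorded response is the sum of an envelope-following component (identical for both stimulus polarities) and a polarity-following component (which inverts with the stimulus, as stimulus artifact and cochlear microphonic do). The amplitudes and the 885 Hz artifact frequency are arbitrary choices, and the two polarities are averaged (a sum scaled by 1/2) for readability.

```python
import math

FS_HZ = 20000        # sampling rate used for the recordings
F0_HZ = 150.0        # voice fundamental of the speech tokens
N_SAMPLES = 2000     # 100 ms at 20 kHz


def simulated_response(polarity):
    """Toy response: an envelope-following term that ignores polarity plus
    a polarity-following term that flips sign with the stimulus."""
    out = []
    for n in range(N_SAMPLES):
        t = n / FS_HZ
        envelope_following = math.sin(2 * math.pi * F0_HZ * t)
        polarity_following = polarity * 0.5 * math.sin(2 * math.pi * 885.0 * t)
        out.append(envelope_following + polarity_following)
    return out


condensation = simulated_response(+1)
rarefaction = simulated_response(-1)

# Adding the two polarities cancels the sign-flipping term and keeps the
# envelope-following (F0) term.
combined = [(c + r) / 2 for c, r in zip(condensation, rarefaction)]
envelope_only = [math.sin(2 * math.pi * F0_HZ * n / FS_HZ) for n in range(N_SAMPLES)]
residual = max(abs(a - b) for a, b in zip(combined, envelope_only))
```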

QuickSIN test

We measured listeners’ speech reception thresholds in noise using the QuickSIN test (Killion et al., 2004). Participants were presented lists of six sentences with five key words per sentence embedded in four-talker babble noise. Sentences were presented at 70 dB SPL using pre-recorded SNRs that decreased in 5 dB steps from 25 dB (very easy) to 0 dB (very difficult). Correctly recalled keywords were logged for each sentence and “SNR loss” (in dB) was determined as the SNR required to correctly identify 50% of the key words (Killion et al., 2004). Larger scores reflect worse performance in SIN recognition. We averaged SNR loss from four list presentations per listener.
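
As a worked example of the scoring, the sketch below uses the standard QuickSIN scoring rule from the published test (SNR loss = 25.5 − total key words correct per six-sentence list). That rule is not stated in the text above and is included here as an assumption, and the function names and example scores are hypothetical.

```python
from statistics import mean


def snr_loss(keywords_correct):
    """SNR loss (dB) for one QuickSIN list.

    `keywords_correct` holds the number of key words (0-5) repeated
    correctly for each of the six sentences (25 dB down to 0 dB SNR).
    Assumption: standard scoring, SNR loss = 25.5 - total correct.
    """
    if len(keywords_correct) != 6:
        raise ValueError("a QuickSIN list has six sentences")
    if any(not 0 <= k <= 5 for k in keywords_correct):
        raise ValueError("each sentence has five key words")
    return 25.5 - sum(keywords_correct)


def mean_snr_loss(lists):
    """Average SNR loss over several lists (four per listener here)."""
    return mean(snr_loss(scores) for scores in lists)


# Hypothetical listener: performance falls as SNR decreases
example_lists = [
    [5, 5, 5, 4, 3, 1],
    [5, 5, 4, 4, 3, 2],
    [5, 5, 5, 4, 2, 1],
    [5, 5, 5, 5, 3, 1],
]
example_score = mean_snr_loss(example_lists)
```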

Electrophysiological recordings and analysis

EEG acquisition and preprocessing

During the primary behavioral task, neuroelectric activity was recorded from 32 channels at standard 10–20 electrode locations on the scalp (Oostenveld and Praamstra, 2001). Recording EEGs during the active listening task allowed us to control for attention and assess the relative influence of brainstem and cortex during online speech perception. The montage included electrode coverage over frontocentral (Fz, Fp1/2, F3/4, F7/8, F9/10, C3/4), temporal (T7/8, TP7/9, TP8/10), parietal (Pz, P3/4, P7/8), and occipital-cerebellar (Oz, O1/2, CB1/2, Iz) sites. Electrodes placed along the zygomatic arch (FT9/10) and the outer canthi and superior/inferior orbit of the eye (IO1/2, LO1/2) monitored ocular activity and blink artifacts. Electrode impedances were maintained at ≤ 5 kΩ. EEGs were digitized at a sampling rate of 20 kHz using SynAmps RT amplifiers (Compumedics Neuroscan; Charlotte, NC). Data were re-referenced off-line to a common average reference for further analyses.

Subsequent pre-processing was performed in BESA® Research v6.1 (BESA, GmbH). Ocular artifacts (saccades and blinks) were first corrected in the continuous EEG using a principal component analysis (PCA) (Picton et al., 2000). Cleaned EEGs were then epoched (−10–200 ms), baseline corrected to the pre-stimulus period, and subsequently averaged in the time domain to obtain compound evoked responses, containing both brainstem and cortical activity (Bidelman et al., 2013), for each stimulus condition per participant.
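
The epoching, baseline correction, and time-domain averaging steps were carried out in BESA. For readers unfamiliar with the procedure, a minimal single-channel sketch is given below. The function name, the list-based data layout, and the toy signal are illustrative assumptions and do not reproduce BESA's implementation.

```python
def epoch_average(signal, onsets, fs_hz, tmin_s=-0.010, tmax_s=0.200):
    """Cut epochs around each stimulus onset (sample index), subtract the
    mean of the pre-stimulus interval, and average across epochs."""
    pre = round(-tmin_s * fs_hz)    # samples before onset
    post = round(tmax_s * fs_hz)    # samples after onset
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue                # skip epochs that run off the recording
        segment = signal[start:stop]
        baseline = sum(segment[:pre]) / pre
        epochs.append([v - baseline for v in segment])
    if not epochs:
        raise ValueError("no complete epochs available")
    return [sum(col) / len(epochs) for col in zip(*epochs)]


# Toy recording: constant 3.0 offset with a unit deflection 5 samples
# after each of two stimulus onsets (fs = 1000 Hz for simplicity)
toy_signal = [3.0] * 700
toy_onsets = [50, 400]
for onset in toy_onsets:
    toy_signal[onset + 5] += 1.0
evoked = epoch_average(toy_signal, toy_onsets, fs_hz=1000)
```

Baseline correction removes the constant offset, so only the stimulus-locked deflection survives in the average.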

Source waveform derivations

Scalp potentials (sensor-level recordings) were transformed to source space using BESA. We seeded three dipoles located in (i) midbrain (inferior colliculus, IC) of the brainstem (BS) and (ii-iii) bilateral primary auditory cortex (PAC) (Bidelman, 2018). Dipole orientations for the PAC sources were set using the tangential component of BESA’s default auditory evoked potential (AEP) montage (Scherg et al., 2002). The tangential component was selected given that it dominantly explains the auditory cortical ERPs (Picton et al., 1999). Orientation of the BS source followed the oblique, fronto-centrally directed dipole of the FFR (Bidelman, 2015a). Focusing on BS and PAC source waveforms allowed us to reduce the dimensionality of the scalp data from 32 sensors to 3 source channels and allowed specific hypothesis testing regarding hearing-induced changes in brainstem-cortical connectivity. We did not fit dipoles at the individual subject level in favor of using a published leadfield that robustly models the EEG-based FFR (Bidelman, 2018). While simplistic, this model’s average goodness of fit (GoF) across groups and stimuli was 88.1±3.8%, meaning that residual variance (RV) between recorded and source-modeled data was low (RV= 11.9±3.9%). For further details of this modelling approach, the reader is referred to Bidelman (2018).

To extract individuals’ source waveforms within each region of interest (ROI), we transformed scalp recordings into source-level responses using a virtual source montage (Scherg et al., 2002). This digital re-montaging applies a spatial filter to all electrodes (defined by the foci of our three-dipole configuration). Relative weights were optimized in BESA to image activity within each brain ROI while suppressing overlapping activity stemming from other active brain regions (for details, see Scherg and Ebersole, 1994; Scherg et al., 2002). For each participant, the model was held fixed and was used as a spatial filter to derive their source waveforms (Alain et al., 2009; Zendel and Alain, 2014), reflecting the neuronal current (in units nAm) as seen within each anatomical ROI. Compound source waveforms were then bandpass filtered into high (100–1000 Hz) and low (1–30 Hz) frequency bands to isolate the periodic brainstem FFR vs. slower cortical ERP waves from each listener’s compound evoked response (Bidelman, 2015b; Bidelman et al., 2013; Musacchia et al., 2008). Comparing FFR and ERP source waveforms allowed us to assess the relative contributions of brainstem and cortical activity to SIN comprehension in normal and hearing-impaired listeners. Results reported herein were collapsed across /ba/ and /pa/ tokens to reduce the dimensionality of the data. Infrequent /ta/ responses were not analyzed given the limited number of trials for this condition and to avoid mismatch negativities in our analyses.

FFR source waveforms

We measured the magnitude of the source FFR F0 to quantify the degree of neural phase-locking to the speech envelope rate, a neural correlate of “voice pitch” encoding (Bidelman and Krishnan, 2010; Bidelman and Alain, 2015; Parbery-Clark et al., 2013). F0 was the most prominent spectral component in FFR spectra (see Fig. 4) and is highly replicable both within and between listeners (Bidelman et al., 2018b). F0 was taken as the peak amplitude in response spectra nearest the 150 Hz bin, the expected F0 based on our speech stimuli. Previous studies assessing (scalp-level) FFRs have shown F0 is highly sensitive to age- and hearing-related changes (Anderson et al., 2011; Bidelman et al., 2014b; Bidelman et al., 2017; Clinard et al., 2010) as well as noise degradation (Song et al., 2011; Yellamsetty and Bidelman, 2019).
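
A simple way to extract such an F0 measure from a waveform is sketched below. The ±20 Hz search window, the function names, and the synthetic test signal are assumptions made for illustration. The text specifies only that the peak nearest 150 Hz was taken.

```python
import cmath
import math


def dft_amplitude(x, fs_hz, freq_hz):
    """Single-sided amplitude of the DFT of `x` evaluated at `freq_hz`."""
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * freq_hz * k / fs_hz)
              for k, v in enumerate(x))
    return 2 * abs(acc) / n


def f0_amplitude(x, fs_hz, target_hz=150.0, half_width_hz=20.0):
    """Return (frequency, amplitude) of the largest spectral peak within
    `half_width_hz` of the expected F0 (search window is an assumption)."""
    df = fs_hz / len(x)                          # DFT bin spacing in Hz
    k_min = math.ceil((target_hz - half_width_hz) / df)
    k_max = math.floor((target_hz + half_width_hz) / df)
    amps = {k: dft_amplitude(x, fs_hz, k * df) for k in range(k_min, k_max + 1)}
    best = max(amps, key=amps.get)
    return best * df, amps[best]


# Synthetic 200 ms "FFR": a 150 Hz sinusoid of amplitude 0.8
fs = 2000
ffr = [0.8 * math.sin(2 * math.pi * 150.0 * n / fs) for n in range(400)]
peak_freq, peak_amp = f0_amplitude(ffr, fs)
```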

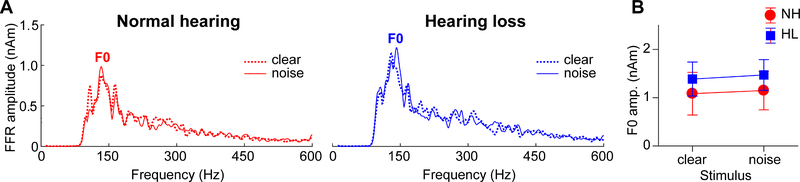

Figure 4: Brainstem speech processing as a function of noise and hearing loss.

(A) Source FFR spectra for response to clear and degraded speech. Strong energy is observed at the voice fundamental frequency (F0) but much weaker energy tagging the upper harmonics of speech, consistent with age-related declines in high-frequency spectral coding. Group and noise-related effects in FFRs were less apparent than in the cortical ERPs (cf. Fig. 3). errorbars = ± s.e.m.

ERP source waveforms

Prominent components of the ERP source responses were quantified in latency and amplitude using BESA’s automated peak analysis for both left and right PAC waveforms in each participant. Appropriate latency windows were first determined by manual inspection of grand averaged traces. For each participant, the P1 wave was then defined as the point of maximum upward deflection from baseline between 40 and 70 ms; N1 as the negative-going deflection within 90 and 145 ms; P2 as the maximum positive deflection between 145 and 175 ms (Hall, 1992). These measures allowed us to evaluate the effects of noise and hearing loss on the magnitude and efficiency of cortical speech processing. Additionally, differentiation between hemispheres enabled us to investigate the relative contributions of each auditory cortex to SIN processing.
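
Peak quantification was performed with BESA's automated peak analysis. The windowed search it describes can be approximated as follows, where the function name and the synthetic waveform are hypothetical and only the latency windows are taken from the text.

```python
import math

# (start ms, end ms, polarity): +1 seeks a maximum, -1 seeks a minimum
WINDOWS_MS = {"P1": (40, 70, +1), "N1": (90, 145, -1), "P2": (145, 175, +1)}


def pick_peaks(waveform, times_ms):
    """Return {component: (latency_ms, amplitude)} by locating the extreme
    value of the source waveform inside each component's latency window."""
    peaks = {}
    for name, (lo, hi, sign) in WINDOWS_MS.items():
        idx = [i for i, t in enumerate(times_ms) if lo <= t <= hi]
        best = max(idx, key=lambda i: sign * waveform[i])
        peaks[name] = (times_ms[best], waveform[best])
    return peaks


def bump(t, center, height, width=8.0):
    """Gaussian deflection used to build a synthetic ERP."""
    return height * math.exp(-((t - center) ** 2) / (2 * width ** 2))


# Synthetic ERP sampled every 1 ms from -10 to 200 ms
times = list(range(-10, 201))
erp = [bump(t, 55, 2.0) + bump(t, 110, -1.5) + bump(t, 160, 1.0) for t in times]
peaks = pick_peaks(erp, times)
```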

Functional connectivity

We measured directed information flow between nodes of the brainstem-cortical network using phase transfer entropy (PTE) (Lobier et al., 2014). We have previously shown age- and noise-related differences in full-brain connectivity within the auditory-linguistic-motor loop using a similar connectivity approach (Bidelman et al., 2019). For data reduction purposes, responses were collapsed across left and right hemispheres and stimuli prior to connectivity analysis. PTE is a non-parametric, information theoretic measure of directed signal interaction. It is ideal for measuring functional connectivity between regions because it can detect nonlinear associations between signals and is robust against the volume conducted cross-talk in EEG (Hillebrand et al., 2016; Vicente et al., 2011). PTE was estimated using the time series of the instantaneous phases of pairwise signals (i.e., BS and PAC waveforms) (Hillebrand et al., 2016; Lobier et al., 2014). PTE was computed according to Eq. 1:

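| $\mathrm{PTE}_{X \to Y} = \sum p\left(Y_{t+\tau}, Y_t^{m}, X_t^{n}\right) \log\left(\dfrac{p\left(Y_{t+\tau} \mid Y_t^{m}, X_t^{n}\right)}{p\left(Y_{t+\tau} \mid Y_t^{m}\right)}\right)$ | (Eq. 1) |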

where X and Y are the ROI signals and the log(.) term is the conditional probabilities between signals at time t+τ for sample m and n. The probabilities were obtained by building histograms of occurrences of pairs of phase estimates in the epoch window (Lobier et al., 2014). Following Hillebrand et al. (2016), the number of histogram bins was set to $e^{0.626 + 0.4\ln(N_s - \tau - 1)}$ (Otnes and Enochson, 1972). The delay parameter τ accounts for the number of times the phase flips across time and channels (here sources). PTE can be implemented in a frequency-specific manner, e.g., to assess connectivity in individual EEG bands (Lobier et al., 2014). However, since our source signals (BS, PAC) were filtered into different frequency bands we set τ = 100 ms to include coverage of the entirety of the FFR and cortical ERP phase time courses (see Fig. 2). Although this τ was based on a priori knowledge of the ERP time course, it should be noted that PTE yields similar results with comparable sensitivity across a wide range of analysis lags (Lobier et al., 2014). PTE was implemented using the PhaseTE_MF function as distributed in Brainstorm (Tadel et al., 2011)1.

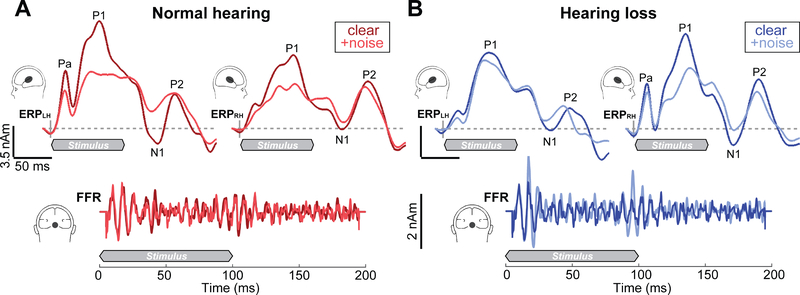

Figure 2: ERP (top traces) and FFR (bottom) source waveforms reflect the simultaneous encoding of speech within cortical and brainstem tiers of the auditory system.

(A) NH listeners show a leftward asymmetry in PAC responses compared to HL listeners (B), who show stronger activation in right PAC. Noise weakens the cortical ERPs to speech across the board, particularly in the timeframe of P1 and N1, reflecting the initial registration of sound in PAC. In contrast to cortical responses, BS FFRs are remarkably similar between groups and noise conditions. Shaded regions demarcate the 100 ms speech stimulus. BS, brainstem; PAC, primary auditory cortex.

Intuitively, PTE can be understood as the reduction in information (units bits) necessary to describe the present ROI_Y signal using both the past of ROI_X and ROI_Y. PTE cannot be negative and has no upper bound. Higher values indicate stronger connectivity, whereas PTE_X→Y = 0 implies no directed signaling, as would be the case for two random variables. In this sense, it is similar to the definition of Granger Causality (Barnett et al., 2009), which states that ROI_X has a causal influence on the target ROI_Y if knowing the past of both signals improves the prediction of the target’s future compared to knowing only its past. Yet, PTE has several important advantages over other connectivity metrics (Lobier et al., 2014): (i) PTE is more robust to realistic amounts of noise and mixing in the EEG that can produce false-positive connections; (ii) PTE can detect nonlinear associations between signals; (iii) PTE relaxes assumptions about data normality and is therefore model-free; (iv) unlike correlation or covariance measures, PTE is asymmetric so it can be computed bi-directionally between pairs of sources (X→Y vs. Y→X) to infer causal, directional flow of information between interacting brain regions. Computing PTE in both directions between BS and PAC allowed us to quantify the relative weighting of information flowing between subcortical and cortical ROIs in both feedforward (afferent; BS→PAC) and feedback (efferent; PAC→BS) directions2.
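
To make the computation concrete, a minimal histogram-based estimator of PTE is sketched below. It is not the Brainstorm PhaseTE_MF code. It assumes the instantaneous phases have already been extracted (e.g., via a Hilbert transform), expresses the delay in samples, rounds the bin-count rule to the nearest integer, and uses a plug-in entropy estimate. All function names are hypothetical.

```python
import math
import random
from collections import Counter


def n_bins(n_samples, delay):
    """Bin-count rule exp(0.626 + 0.4 ln(Ns - delay - 1)); rounding to the
    nearest integer is an assumption."""
    return max(2, round(math.exp(0.626 + 0.4 * math.log(n_samples - delay - 1))))


def _entropy_bits(counts, total):
    """Plug-in Shannon entropy (bits) from a Counter of occurrences."""
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def phase_transfer_entropy(phase_x, phase_y, delay, bins=None):
    """Estimate PTE from X to Y (bits) using phase series in radians.

    PTE = H(Yf, Yp) + H(Yp, Xp) - H(Yp) - H(Yf, Yp, Xp), where Yf is the
    phase of Y `delay` samples ahead and Yp, Xp are the current phases.
    """
    n = len(phase_x)
    bins = bins or n_bins(n, delay)
    width = 2 * math.pi / bins

    def digitize(p):
        return min(int((p % (2 * math.pi)) / width), bins - 1)

    bx = [digitize(p) for p in phase_x]
    by = [digitize(p) for p in phase_y]
    y_future, y_past, x_past = by[delay:], by[:n - delay], bx[:n - delay]
    total = n - delay

    h_yp = _entropy_bits(Counter(y_past), total)
    h_yf_yp = _entropy_bits(Counter(zip(y_future, y_past)), total)
    h_yp_xp = _entropy_bits(Counter(zip(y_past, x_past)), total)
    h_all = _entropy_bits(Counter(zip(y_future, y_past, x_past)), total)
    return h_yf_yp + h_yp_xp - h_yp - h_all


# Toy example: Y is a copy of X delayed by 5 samples, so information
# flows from X to Y but not from Y to X.
rng = random.Random(0)
lag = 5
x = [rng.uniform(-math.pi, math.pi) for _ in range(5000)]
y = [rng.uniform(-math.pi, math.pi) for _ in range(lag)] + x[:-lag]
pte_xy = phase_transfer_entropy(x, y, delay=lag, bins=4)
pte_yx = phase_transfer_entropy(y, x, delay=lag, bins=4)
```

In the toy example the X→Y estimate is large while the Y→X estimate stays near zero, which is the asymmetry that motivates computing PTE in both directions. Plug-in estimates carry a small positive bias with finite data, so the reverse direction is close to, but not exactly, zero.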

Statistical analysis

Unless otherwise noted, two-way mixed model ANOVAs were conducted on all dependent variables (GLIMMIX, SAS® 9.4, SAS Institute; Cary, NC). Degrees of freedom were estimated using PROC GLIMMIX’s containment option. Group (2 levels; NH, HL) and stimulus SNR (2 levels; clear, noise) functioned as fixed effects; participants served as a random factor. With the exception of ERP amplitude measures, initial diagnostics confirmed normality and homogeneity of variance assumptions for parametric statistics. Tukey–Kramer adjustments controlled Type I error inflation. An a priori significance level was set at α = 0.05 for all statistical analyses. Effect sizes are reported as Cohen’s-d (Wilson, 2018). Independent samples t-tests (un-pooled variance, two-tailed) were used to contrast demographic variables. One sample t-tests (against a null PTE=0) were used to confirm significant (above chance) connectivity between BS and PAC sources prior to group comparisons.
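
For reference, the un-pooled (Welch) t statistic and a pooled-SD Cohen's d can be computed as in the sketch below. The function names and sample data are hypothetical, and the pooled-SD definition of d is an assumption, since the effect sizes reported here may instead have been derived from the test statistics.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for
    two independent samples with un-pooled variances."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a, b):
    """Cohen's d using the pooled standard deviation (an assumption)."""
    na, nb = len(a), len(b)
    pooled_sd = math.sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b))
                          / (na + nb - 2))
    return (mean(a) - mean(b)) / pooled_sd


# Hypothetical samples
group_a = [1, 2, 3, 4, 5]
group_b = [2, 4, 6, 8, 10]
t_stat, dof = welch_t(group_a, group_b)
d = cohens_d(group_a, group_b)
```

Because the Welch degrees of freedom depend on the two sample variances, they are generally non-integer.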

Correlational analyses (Pearson’s-r) and robust regression (bisquare weighting) were used to evaluate relationships between neural and behavioral measures. Robust fitting was achieved using the ‘fitlm’ function in MATLAB. We used an efficient, bootstrapping implementation of the Sobel statistic (Preacher and Hayes, 2004; Sobel, 1982) (N=1000 resamples) to test for mediation effects between demographic, neural connectivity, and behavioral measures.
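
The Sobel statistic underlying the mediation test can be written compactly. The sketch below shows only the closed-form statistic, not the bootstrapped implementation used in the analysis, and the path coefficients in the example are hypothetical values.

```python
import math


def sobel_z(a, se_a, b, se_b):
    """Sobel z for an indirect effect a*b, where `a` is the path from the
    predictor to the mediator and `b` the path from the mediator to the
    outcome (controlling for the predictor)."""
    return (a * b) / math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)


# Hypothetical path estimates and standard errors
z = sobel_z(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
```

A bootstrapped variant resamples participants with replacement, re-estimates a and b on each resample, and derives the confidence interval of a·b from the resulting distribution.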

RESULTS

We recorded EEGs in N=32 older adults (aged 52–75 years) with and without mild hearing loss during a rapid speech detection task (see Methods). In both a clear and noise-degraded listening condition, participants monitored a continuous speech stream consisting of several thousand consonant-vowel tokens (/ba/, /pa/) and indicated their detection of infrequent /ta/ target sounds during online EEG recording.

Behavioral data

Behavioral accuracy and reaction time for target speech detection are shown for each group and noise condition in Figure 1. An ANOVA revealed a main effect of SNR on /ta/ detection accuracy, which was lower for noise-degraded compared to clear speech [F1,30=5.66, p=0.024, d=0.88; Fig. 1B]. However, groups differed neither in their accuracy [F1,30=0.01, p=0.94; d=0.04] nor speed [F1,30=0.47, p=0.49; d=0.26; Fig. 1C] of speech identification. On average, HL individuals achieved QuickSIN performance within ~1 dB of NH listeners, and scores did not differ between groups [t2.35=−1.43, p=0.16] (Fig. 1D). Nevertheless, HL listeners showed more inter-subject variability in SIN performance compared to NH listeners [Equal variance test (two-sample F-test): F18,12=8.81, p=0.0004]. Collectively, these results suggest that the hearing loss in our sample was not yet egregious enough to yield substantial deficits in speech perception and/or no clear differentiation of listeners into different clinical categories.

Electrophysiological data

Speech-evoked brainstem FFR and cortical ERP source waveforms are shown in Figure 2. FFR and ERP waveforms were not correlated for either group (t-test against zero mean correlation: NH: t12=0.11, p=0.92; HL t18=1.51, p=0.15), indicating that brainstem and cortical neural activity were not dependent on one another, per se. Cortical activity appeared as a series of obligatory waves developing over ~200 ms after the initiation of speech that were modulated by noise and cerebral hemisphere. The unusually large P1 and reduced N1-P2 are likely due to the fast stimulus presentation rate and the differential effect of habituation on each component wave (Crowley and Colrain, 2004). Noise-related changes in the ERPs were particularly prominent in the earlier P1 and N1 deflections reflecting the initial registration of sound in medial portions of PAC and secondary auditory cortex (Liegeois-Chauvel et al., 1994; Picton et al., 1999; Scherg and von Cramon, 1986).

These observations were confirmed via quantitative analysis of source ERP latency and amplitude. ANOVA diagnostics indicated positive skew in ERP amplitude measures. Thus, we used a natural log transform in analyses of the cortical amplitude data. An ANOVA conducted on log-transformed ERP amplitudes revealed a main effect of SNR for both P1 and N1 with stronger responses for clear compared to noise-degraded speech [P1 amp: F1, 94=12.67, p<0.001, d=1.28; N1 amp: F1,94=6.70, p=0.01, d=0.93; data not shown]. These results replicate the noise-related degradation in speech-evoked activity observed in previous studies (Alain et al., 2014; Bidelman and Howell, 2016). Unlike the early ERP waves, P2 amplitude varied between hemispheres [F1,94=9.38, p=0.003, d=1.10], with greater activation in right PAC. There was also a main effect of group with larger P2 responses in NH listeners [F1,30=4.74, p=0.038, d=0.78] (Fig. 3A and 3B). The group x condition x hemisphere (p=0.66) as well as all other constituent two-way interactions were not significant (ps > 0.09). The P2 deflection is thought to reflect the signal’s identity, recognition of perceptual objects, and perceptual-phonetic categories of speech (Alain et al., 2007; Bidelman and Lee, 2015; Bidelman and Yellamsetty, 2017; Bidelman et al., 2013; Eulitz et al., 1995; Wood et al., 1971). The effects of hearing loss and noise on the ERP wave could indicate deficits in mapping acoustic details into a more abstract phonemic representation.

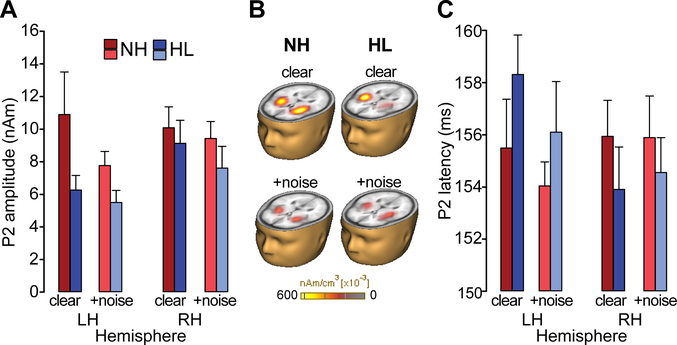

Figure 3: Cortical speech processing is modulated by noise interference, hearing status, and cerebral hemisphere.

(A) P2 amplitudes are stronger in NH listeners regardless of SNR. (B) Brain volumes show distributed source activation maps using Cortical Low resolution electromagnetic tomography Analysis Recursively Applied (CLARA; BESA v6.1) (Iordanov et al., 2014). Functional data are overlaid on the BESA brain template (Richards et al., 2016). (C) P2 latency revealed a group x hemispheric interaction. In HL listeners, responses were ~3 ms earlier in right compared to left hemisphere (R<L) whereas no latency differences were observed in NH ears (L=R). errorbars = ± s.e.m.

For latency, no effects were observed at P1. However, hemispheric differences were noted for N1 latencies [F1,94=9.49, p=0.003, d=1.11], where responses were ~4 ms earlier in the right compared to left hemisphere across both groups. P2 latency also showed a group x hemisphere interaction [F1,93=5.27, p=0.02, d=0.82] (Fig. 3C). Post hoc analyses revealed a significant asymmetry for the HL group: P2 latencies were ~3 ms earlier in right relative to left PAC whereas no hemispheric asymmetry was observed in the NH listeners. These results indicate an abnormal hemispheric asymmetry beginning as early as N1 extending through P2 (~150 ms) in listeners with mild hearing impairment.

In contrast to slow cortical activity, brainstem FFRs showed phase-locked neural activity to the periodicities of speech (Fig. 2, bottom traces). Analysis of response spectra revealed strong energy at the voice fundamental frequency (F0) and weaker energy tagging the upper harmonics of speech (Fig. 4). Previous FFR studies have shown that older adults have limited coding of the high-frequency harmonics of speech (e.g., Anderson et al., 2013c; Bidelman et al., 2014b; Bidelman et al., 2017; Clinard and Cotter, 2015). The latter is particularly susceptible to noise (Bidelman, 2016; Bidelman and Krishnan, 2010) and hearing loss (Henry and Heinz, 2012) and reduced amplitudes may be attributable to age- and hearing-related changes in brainstem phase-locking (Parthasarathy et al., 2014). Weaker harmonic energy of the F0 may also be due to the relatively short duration of vowel periodicity (< 40 ms) of our stimuli. Group and noise-related effects in FFRs were not apparent as they were in the ERPs. An ANOVA conducted on F0 amplitudes showed that FFRs in older adults were not modulated by hearing loss [main effect of group: F1,30=0.38, p=0.54, d=0.22] or background noise [main effect of SNR: F1,30=0.41, p=0.53, d=0.23] (Fig. 4B). These results suggest that neither the severity of noise nor mild hearing impairment had an appreciable effect on the fidelity of brainstem F0 coding in our listeners. Yet, comparing across levels of the neuraxis, age-related hearing loss had a differential effect on complex sound coding across levels, exerting an effect at cortical but not subcortical stages of the auditory system (cf. Bidelman et al., 2014b).

Lastly, we did not observe correlations between FFRs and the QuickSIN for either clear or noise-degraded responses (ps > 0.76). Of the cortical PAC measures, only P2 latency (in noise) correlated with QuickSIN scores (r=0.47, p=0.0068), where earlier responses predicted better performance on the QuickSIN test. P2 latency was not, however, correlated with PTA (r=0.31, p=0.09). The lack of strong correspondence between FFR/ERP measures and the QuickSIN might be expected given differences in task complexity between the neural recordings (rapid speech detection task) and the QuickSIN (sentence-level recognition).

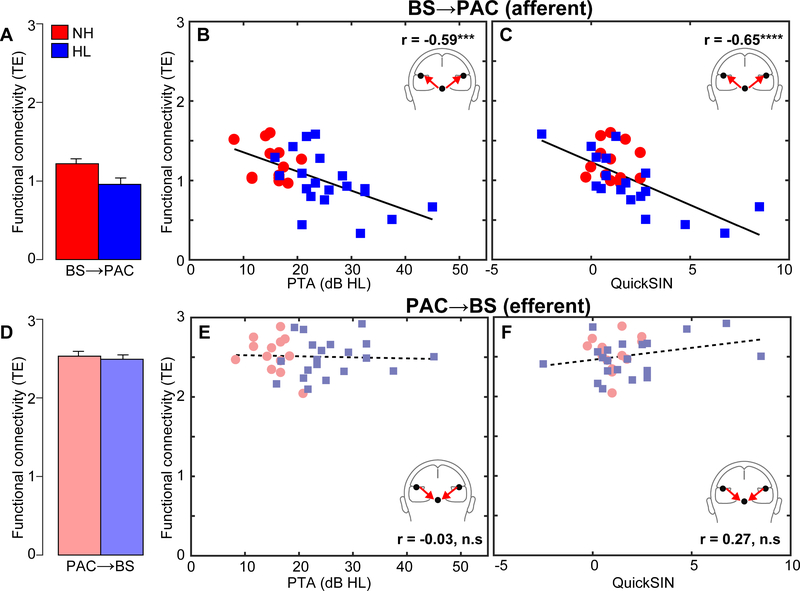

Brainstem-cortical functional connectivity

Phase transfer entropy (PTE), quantifying the feedforward (afferent) and feedback (efferent) functional connectivity between BS and PAC, is shown in Figure 5. All afferent (BS→PAC) and efferent (PAC→BS) PTE values were well above zero (t-tests against PTE=0; ps < 0.001), confirming significant (non-random) neural signaling in both directions. We found that afferent BS→PAC signaling was stronger in NH vs. HL listeners [F1,30=5.52, p=0.0256, d=0.84] (Fig. 5A) and negatively correlated with the degree of listeners’ hearing impairment based on their PTAs (Fig. 5B) [r=−0.59, p=0.0004]. Individuals with poorer hearing acuity showed reduced neural signaling directed from BS to PAC. More interestingly, we found afferent connectivity also predicted behavioral QuickSIN scores (Fig. 5C) [r=−0.65, p<0.0001], such that listeners with weaker BS→PAC transmission showed poorer SIN comprehension (i.e., higher QuickSIN scores)³. Given that nearly all (cf. P2 latency in noise) FFR/ERP measures by themselves did not predict QuickSIN scores, these findings indicate that afferent connectivity is a unique predictor of SIN processing (Bidelman et al., 2018a), above and beyond the responsivity in BS or PAC alone.

Figure 5: Functional connectivity between auditory brainstem and cortex varies with hearing loss and predicts SIN comprehension.

Neural responses are collapsed across hemispheres and SNRs. (A) Transfer entropy reflecting directed (causal) afferent neural signaling from BS→PAC. Afferent connectivity is stronger in normal-hearing compared to hearing-impaired listeners. (B) Afferent connectivity is weaker in listeners with poorer hearing (i.e., worse PTA thresholds) and predicts behavioral SIN performance (C). Individuals with stronger BS→PAC connectivity show better (i.e., lower) scores on the QuickSIN. (D) Efferent neural signaling from PAC→BS does not vary between NH and HL listeners, suggesting similar top-down processing between groups. Similarly, efferent connectivity did not covary with hearing loss (E) nor did it predict SIN comprehension (F). Solid lines = significant correlations; dotted lines = n.s. relationships. Error bars = ± s.e.m., ***p<0.001, ****p<0.0001.

In contrast to afferent flow, efferent connectivity directed from PAC→BS did not differentiate groups [F1,30=0.21, p=0.65, d=0.16] (Fig. 5D). Furthermore, while efferent connectivity was generally stronger than afferent connectivity [t31=2.52, p=0.0171], PAC→BS transmission was not correlated with hearing thresholds (Fig. 5E) [r=−0.03, p=0.86] nor behavioral QuickSIN scores (Fig. 5F) [r=0.27, p=0.14]. Collectively, connectivity results suggest that mild hearing loss alters the afferent-efferent balance of neural communication between auditory brainstem and cortical structures. However, in the aging auditory system, bottom-up (BS→PAC) transmission appears more sensitive to peripheral hearing loss (as measured by pure tone thresholds) and is more predictive of perceptual speech outcomes than top-down signaling (PAC→BS).

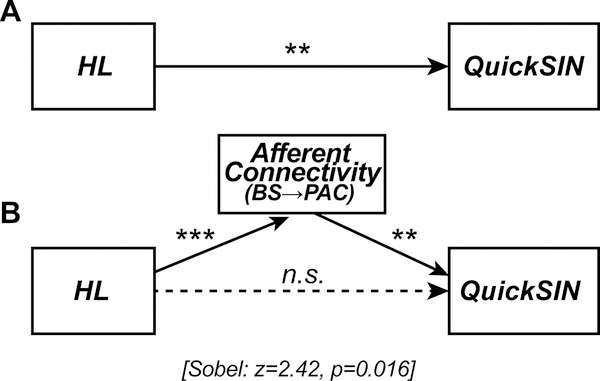

BS→PAC connectivity was correlated with both mild hearing loss and behavioral QuickSIN measures, which suggests that neural signaling could mediate SIN comprehension in older adults in addition to peripheral hearing loss. To test this possibility, we used Sobel mediation analysis (Preacher and Hayes, 2004; Sobel, 1982) to tease apart the contributions of hearing loss (PTA) and afferent connectivity (PTE) to listeners’ QuickSIN scores (among the entire sample). The Sobel test contrasts the strength of regression between a pairwise vs. a triplet (mediation) model (i.e., X→Y vs. X→M→Y). M is said to mediate the relation between X and Y if (i) X first predicts Y on its own, (ii) X predicts M, and (iii) the functional relation between X and Y is rendered insignificant after controlling for the mediator M (Baron and Kenny, 1986; Preacher and Hayes, 2004).

PTA by itself was a strong predictor of QuickSIN scores (Fig. 6A) [b=0.13; t=3.23, p=0.0030]; reduced hearing acuity was associated with poorer SIN comprehension. However, when introducing BS→PAC afferent connectivity into the model, the direct relation between PTA and QuickSIN was no longer significant (Fig. 6B) [Sobel mediation effect: z=2.42, p=0.016]. PTA predicted the strength of afferent connectivity [b=−0.02; t=−4.01, p=0.0004] and, in turn, connectivity predicted QuickSIN scores [b=−3.28; t=−3.12, p=0.0041]; thus, the effect of hearing loss on SIN comprehension was indirectly mediated by BS→PAC connectivity strength⁴. In contrast to afferent signaling, efferent connectivity was not a mediator of SIN comprehension [Sobel z=−0.16, p=0.87]. However, this result might be anticipated given the lack of group differences in efferent PAC→BS connectivity. These results indicate that while hearing status is correlated with perception, the underlying afferent flow of neural activity from BS→PAC best predicts older adults’ SIN listening skills.
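
The Sobel statistic for such an X→M→Y model can be illustrated with a minimal sketch. It assumes the standard first-order form, z = ab / sqrt(b²·SE_a² + a²·SE_b²), where a is the X→M slope and b is the M→Y slope. The function name `sobel_test` is hypothetical, and the inputs are the rounded coefficients and t values quoted above, with each standard error recovered as coefficient / t. Whether the reported connectivity slope is the partial coefficient (with PTA in the model) is an assumption, so this is an illustration of the arithmetic rather than a reproduction of the analysis.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """First-order Sobel test for the indirect effect a*b.

    a, se_a: slope and standard error for X -> M
    b, se_b: slope and standard error for M -> Y
    Returns (z, two-sided p) under a normal approximation.
    """
    indirect = a * b
    se_indirect = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = indirect / se_indirect
    # Two-sided p-value from the standard normal distribution
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


# Illustrative inputs only: rounded values taken from the text (SE = b / t).
a, t_a = -0.02, -4.01   # PTA -> BS->PAC connectivity
b, t_b = -3.28, -3.12   # BS->PAC connectivity -> QuickSIN
z, p = sobel_test(a, a / t_a, b, b / t_b)
print(f"Sobel z = {z:.2f}, p = {p:.3f}")
```

With these rounded inputs the sketch gives z of about 2.46, close to but not identical to the reported z=2.42; the gap presumably reflects rounding of the published coefficients and the assumption noted above.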

Figure 6: Afferent neural signaling from BS to PAC mediates the relation between hearing loss and SIN comprehension.

Sobel mediation analysis (Sobel, 1982) between listeners’ hearing loss (PTA thresholds), neural connectivity (BS→PAC signaling), and SIN comprehension (QuickSIN scores). Edges show significant relations between pairwise variables identified via linear regression. (A) Hearing loss by itself strongly predicts QuickSIN scores such that reduced hearing is associated with poorer SIN comprehension. (B) Accounting for BS→PAC afferent connectivity renders this relation insignificant (Sobel test: z=2.42, p=0.016; Sobel, 1982), indicating the strength of neural communication between BS and PAC, rather than hearing loss per se, mediates older adults’ SIN comprehension. **p <0.01, ***p<0.001.

DISCUSSION

By examining functional connectivity between brainstem and cortical sources of speech-evoked responses, we demonstrate a critical dissociation in how hearing loss impacts speech representations and the transfer of information between functional levels of the auditory pathway. We show that afferent (BS→PAC), but not efferent (PAC→BS), neural transmission during active speech perception weakens with declining hearing and this connectivity predicts listeners’ SIN comprehension/identification. These findings reveal that while age-related hearing loss alters neural output within various tiers of the auditory system (PAC>BS), (i) bottom-up subcortical-cortical connectivity is more sensitive to diminished hearing than top-down (cortical-subcortical) connectivity, and (ii) afferent BS→PAC neural transmission accounts for reduced speech understanding in the elderly.

Comparisons between source-level FFRs and ERPs revealed that age-related hearing loss had a differential impact on brainstem vs. cortical speech processing. This finding is reminiscent of animal work demonstrating that online changes in neurons’ receptive fields within the inferior colliculus are smaller and in the opposite direction of changes in auditory cortex for the same task (Slee and David, 2015). In our own EEG studies, we showed that hearing loss weakens brainstem encoding of speech (e.g., F0 pitch and formant cues) whereas both age and hearing loss exert negative effects at the cortical level (Bidelman et al., 2014b). Here, we show that age-related hearing loss reduces amplitude and prolongs the latency of cortical speech-evoked responses, indicative of weaker and less efficient neural processing. In contrast, FFRs showed negligible group differences. The lack of significant difference related to age-related hearing loss in lower-level (BS) compared to higher-level (PAC) auditory sources suggests that declines in hearing acuity associated with normal aging exert a differential effect on neural encoding across functional stages of the auditory hierarchy. These findings contrast those of prior FFR studies on aging (Anderson et al., 2013c; Bidelman et al., 2014b; Bidelman et al., 2017; Clinard and Cotter, 2015). The discrepancy may be due to the fact that our FFR analyses focused on source responses (a more “pure” measurement of midbrain activity) rather than scalp potentials (previous studies), which can blur the contributions of various subcortical and cortical FFR generators (Bidelman, 2018; Coffey et al., 2016). Previous studies documenting hearing-related changes in sensor space (i.e., scalp electrodes) would have included a mixture of activity from more peripheral auditory structures known to dominate the FFR (e.g., auditory nerve; Bidelman, 2015a; Bidelman, 2018), which are also highly sensitive to age-related neurodegeneration in hearing (Kujawa and Liberman, 2006). Thus, it is possible that in more pure assays of rostral midbrain activity (present study), sensory, synaptic, and neural loss degrade brainstem representations of speech in ways that are not reflected in the wideband speech responses at the scalp. Moreover, we have found that changes in speech-FFRs only become apparent when hearing impairments exceed PTAs of 30–40 dB HL (Bidelman et al., 2014b), which are greater than those observed in the present study. Nevertheless, our results support the notion that brainstem and auditory cortex provide functionally distinct contributions enabling speech representation (Bidelman et al., 2013), which are differentially susceptible to the various insults of the aging process (Bidelman et al., 2014b; Bidelman et al., 2017). Future studies with a wider range of hearing losses and stimuli are needed to test these possibilities.

The lines between peripheral vs. central function and impaired sensory encoding vs. signal transmission issues are difficult to disentangle in humans (Bidelman et al., 2014b; Humes, 1996; Marmel et al., 2013). Functional changes may result from an imbalance of excitation and inhibition in brainstem (Parthasarathy and Bartlett, 2012), cortex (Caspary et al., 2008; Chao and Knight, 1997), or both structural levels (Bidelman et al., 2014b). Conversely, neurodegeneration at peripheral sites may partially explain our findings (Makary et al., 2011). Under this interpretation, observed changes in evoked activity might reflect maladaptive plasticity in response to deficits in the ascending auditory pathway. However, we would expect that degeneration due to age alone would produce similar effects between groups since both cohorts were elderly listeners. Instead, it is likely that listeners’ hearing loss (whether central or peripheral in origin) is what produces the cascade of functional changes that alter the neural encoding of speech at multiple stages of the auditory system. In this sense, our data corroborate evidence in animals that more central (i.e., cortical) gain helps restore auditory coding following hearing loss (Chambers et al., 2016). Interestingly, such neural rebound is stronger at cortical compared to brainstem levels (Chambers et al., 2016), consistent with the more extensive changes we find in human PAC relative to BS responses.

Our ERP data further imply that hearing loss might reorganize functional asymmetries at the cortical level (Du et al., 2016; Pichora-Fuller et al., 2017). Source waveforms from left and right PAC revealed that the normal hearing listeners showed bilateral symmetric cortical activity (Figs. 2–3). This pattern was muted in listeners with mild hearing impairment, who showed faster responses in the right hemisphere. These differences imply that the hemispheric laterality of speech undergoes a functional reorganization following sensory loss where processing might be partially reallocated to the right hemisphere in a compensatory manner. Similar shifts in cortical activity have been observed in sudden onset, idiopathic hearing loss (He et al., 2015), implying that our results might reflect central reorganization following longer-term sensory declines. Previous studies have also shown that hemispheric asymmetry is correlated with SIN perception (Bidelman and Howell, 2016; Javad et al., 2014; Thompson et al., 2016). Conceivably, the reduction in left hemisphere speech processing we find in hearing-impaired listeners, along with reduced BS→PAC connectivity, might reflect a form of aberrant cortical function that could exacerbate SIN comprehension difficulties behaviorally.

Our cortical ERP data contrast recent reports on senescent changes in the cortical encoding of speech. Previous studies have shown larger ERP amplitudes to speech and non-speech stimuli among older relative to younger listeners (Bidelman et al., 2014b; Herrmann et al., 2013; Presacco et al., 2016), possibly resulting from the peripheral auditory filter widening (Herrmann et al., 2013) and/or decreased top-down (frontal) gating of sensory information (Bidelman et al., 2014b; Chao and Knight, 1997; Peelle et al., 2011). However, studies reporting larger ERP amplitudes in older, hearing-impaired adults focus nearly entirely on sensor (i.e., electrode-level) responses, which mix temporal and frontal source contributions that are involved in SIN processing in younger (Alain et al., 2018; Bidelman and Dexter, 2015; Bidelman and Howell, 2016; Bidelman et al., 2018a; Du et al., 2014) and especially older adults (Du et al., 2016). A parsimonious explanation of our source ERP data, then, is that weaker auditory cortical responses reflect reduced sensory encoding (within PAC) secondary to the diminished stimulus input from hearing loss.

Our results extend previous brainstem and cortical studies by demonstrating age-related changes not only in the neural representations within certain auditory areas but also in how information is communicated between functional levels. Notably, we found that robust feedforward neural transmission between brainstem and cortex is necessary for successful SIN comprehension in older adults, particularly those with mild hearing loss. To our knowledge, this is the first direct quantification of auditory brainstem-cortical connectivity in humans and how this functional reciprocity relates to complex listening skills.

Despite ample evidence for online subcortical modulation in animals (Bajo et al., 2010; Slee and David, 2015; Suga et al., 2000; Vollmer et al., 2017), demonstrations of corticofugal effects in human brainstem responses have been widely inconsistent and loosely inferred through manipulations of task-related attention (Forte et al., 2017; Picton et al., 1971; Rinne et al., 2007; Skoe and Kraus, 2010b; Varghese et al., 2015; Woods and Hillyard, 1978). Theoretically, efferent modulation of brainstem should occur only for behaviorally relevant stimuli in states of goal-directed attention (Slee and David, 2015; Suga et al., 2002; Vollmer et al., 2017), and should be stronger in more taxing listening conditions (e.g., difficult SIN tasks; Krishnan and Gandour, 2009). In this regard, our assay of central connectivity during online SIN identification should have represented optimal conditions to detect possible afferent-efferent BS-PAC communication most relevant to behavior.

Our findings revealed that corticofugal (PAC→BS) efferent signaling was stronger than afferent connectivity overall, implying considerable top-down processing in older adults. These results converge with theoretical frameworks of aging that posit higher-level brain regions are recruited to aid speech perception in older adults (Reuter-Lorenz and Cappell, 2008; Wong et al., 2009). Behaviorally, older adults tend to expend more listening effort during SIN recognition than younger individuals (Gosselin and Gagne, 2011). Consequently, one interpretation of our data is that the elevated, invariant PAC→BS efferent connectivity we observe across the board reflects an increase in older adults’ listening effort or deployment of attentional resources. However, we note that efferent connectivity was not associated with hearing loss or SIN performance, despite our use of an active listening task. Without concomitant data from younger adults (and passive tasks) it remains unclear how (if) the magnitude of corticofugal connectivity might change across the lifespan or with more egregious hearing impairments. Alternatively, the weaker connectivity observed in the forward direction (BS→PAC) might result from the decreased fidelity of representation of auditory signals observed in our elderly listeners (e.g., Fig. 4). This in turn would weaken the prediction of the cortical response and result in a lower afferent compared to efferent PTE (as in Fig. 5). Indeed, we have recently shown that younger normal hearing adults have slightly higher BS→PAC connectivity magnitudes during SIN processing than those reported here in older listeners. Additionally, mild cognitive impairment is known to alter brainstem and cortical speech processing (Bidelman et al., 2017). As we did not measure cognitive function, it is possible that at least some of the group differences we observe in BS→PAC connectivity reflect undetected cognitive dysfunction, which is often comorbid with declines in auditory processing (Humes et al., 2013).

In stark contrast, afferent directed communication (BS→PAC) differentiated normal-hearing and hearing-impaired listeners and was more sensitive to hearing loss than corticofugal signaling. More critically, afferent transmission was a strong predictor of listeners’ reduced speech understanding at the behavioral level and was a mediating variable for speech-in-noise (QuickSIN) performance, above and beyond hearing loss, per se. Said differently, our data suggest that afferent connectivity is necessary to explain the link between hearing loss (i.e., a marker of peripheral cochlear integrity) and SIN perception (behavior). This notion is supported by our correlational data, which showed that BS→PAC connectivity (but not FFR/ERP measures alone) predicted QuickSIN performance. This suggests afferent connectivity is a unique predictor of SIN processing (Bidelman et al., 2018a), above and beyond responsivity in individual auditory brain regions. Simplicity of our task notwithstanding, neurophysiological changes in cross-regional communication seem to precede behavioral SIN difficulties since groups showed similar levels of performance in SIN detection despite neurological variations. This agrees with notions that sensory coding deficits in brainstem-cortical circuitry mark the early decline of hearing and other cognitive abilities resulting from biological aging or neurotrauma (Bidelman et al., 2017; Kraus et al., 2017).

Our data align with previous neuroimaging studies suggesting that age-related hearing loss is associated with reduced gray matter volume in auditory temporal regions (Eckert et al., 2012; Lin et al., 2014), PAC volume (Eckert et al., 2012; Husain et al., 2011; Peelle et al., 2011), and compromised integrity of auditory white matter tracts (Chang et al., 2004; Lin et al., 2008). Accelerated neural atrophy from hearing impairment is larger in right compared to left temporal lobe (Lin et al., 2014; Peelle et al., 2011). Such structural changes and/or rebalancing in excitation/inhibition might account for the functional declines and redistribution of cortical speech processing among our hearing-impaired cohort. Diffusion tensor imaging also reveals weaker fractional anisotropy (implying reduced white matter integrity) in the vicinity of inferior colliculus in listeners with sensorineural hearing loss (Lin et al., 2008). These structural declines in brainstem could provide an anatomical basis for the reduced functional connectivity (BS→PAC) among our hearing-impaired cohort.

Collectively, our findings provide a novel link between (afferent) subcortical-cortical functional connectivity and individual differences in auditory behavioral measures related to cocktail party listening (SIN comprehension). We speculate that similar individual differences in BS↔PAC connectivity strength might account more broadly for the pervasive and parallel neuroplastic changes in brainstem and cortical activity observed among highly experienced listeners, certain neuropathologies, and successful auditory learners (Bidelman and Alain, 2015; Bidelman et al., 2014a; Bidelman et al., 2017; Chandrasekaran et al., 2012; Kraus et al., 2017; Musacchia et al., 2008; Reetzke et al., 2018). Our findings underscore the importance of brain connectivity in understanding the biological basis of age-related hearing deficits in real-world acoustic environments and pave the way for new avenues of inquiry into the biological basis of auditory skills.

Highlights

Measured source brainstem and cortical speech-evoked potentials in older adults

Hearing loss alters functional connectivity from brainstem to auditory cortex

Afferent (not efferent) BS→PAC signaling predicts speech-in-noise perception

Subcortical-cortical connectivity more sensitive to hearing insult than top-down signaling

Acknowledgements

This work was supported by grants from the Canadian Institutes of Health Research (MOP 106619) and the Natural Sciences and Engineering Research Council of Canada (NSERC, 194536) awarded to C.A., The Hearing Research Foundation awarded to S.R.A., and the National Institutes of Health (NIDCD) R01DC016267 awarded to G.M.B.

Footnotes

Competing interests: The authors declare no competing interests.

¹ Available at https://github.com/brainstorm-tools/brainstorm3/blob/master/external/fraschini/PhaseTE_MF.m

² By referring to “efferent function” we mean the corticocollicular component of the corticofugal system. Still, connectivity via EEG cannot adjudicate the relative roles of sub-nuclei among the PAC-BS loop that compose the auditory afferent-efferent pathways. The main afferent pathway from BS to PAC is tonotopic [“core” central nucleus of the IC (ICC)→MGBv→PAC], which is not reciprocal with the corticocollicular projections. PAC-BS efferent projections primarily innervate “belt” regions of the IC, including the dorsal and external cortex (ICx). Though not tonotopically organized itself, ~70% of cells in the ICx do show phase-locked responses to periodic sounds (Liu et al., 2006; p.1930). In addition to intrinsic feedforward connections directly from the ICC (Aitkin et al., 1978; Vollmer et al., 2017), corticocollicular axons monosynaptically innervate (Xiong et al., 2015) and excite neurons in the ICx, which in turn inhibit ICC units (Jen et al., 1998). This neural organization effectively forms a feedback loop. Consequently, one putative function of the corticofugal (corticocollicular) system is to provide cortically-driven gain control that also reshapes sensory coding in the IC (Suga et al., 2000). Moreover, while source analysis allows us to interpret our ROI signals as stemming from gross anatomical levels (midbrain vs. auditory cortex), our PTE analysis should be taken as a broad measure of causal signal interactions between two evoked responses rather than stemming definitively from unitary nuclei locations.

³ An identical pattern of results was observed when considering correlations with listeners’ average audiometric thresholds (from 250–8000 Hz), which defined the NH and HL group membership (see Methods). BS→PAC afferent (but not efferent) connectivity was negatively correlated with average hearing thresholds (r=−0.63, p=0.0001; data not shown).

⁴ Although the causality would be questionable, we also could treat PTA as a mediator between afferent connectivity and QuickSIN scores (i.e., BS→PAC connectivity→PTA→QuickSIN). Importantly, this arrangement was not significant [Sobel z=−13.04, p=0.29]. This (i) indicates hearing loss (PTA) does not mediate the relation between afferent BS→PAC connectivity and SIN and (ii) strengthens the causality of the relation between neural afferent signaling and QuickSIN performance reported in the text.

References

- Aiken SJ, Picton TW 2008. Envelope and spectral frequency-following responses to vowel sounds. Hear. Res 245, 35–47.

- Aitkin LM, Dickhaus H, Schult W, Zimmermann M 1978. External nucleus of inferior colliculus: auditory and spinal somatosensory afferents and their interactions. J. Neurophysiol 41, 837–47.

- Alain C, McDonald K, Van Roon P 2012. Effects of age and background noise on processing a mistuned harmonic in an otherwise periodic complex sound. Hear. Res 283, 126–135.

- Alain C, Roye A, Salloum C 2014. Effects of age-related hearing loss and background noise on neuromagnetic activity from auditory cortex. Front. Sys. Neurosci 8, 8.

- Alain C, Snyder JS, He Y, Reinke KS 2007. Changes in auditory cortex parallel rapid perceptual learning. Cereb. Cortex 17, 1074–84.

- Alain C, McDonald KL, Kovacevic N, McIntosh AR 2009. Spatiotemporal analysis of auditory “what” and “where” working memory. Cereb. Cortex 19, 305–314.

- Alain C, Du Y, Bernstein LJ, Barten T, Banai K 2018. Listening under difficult conditions: An activation likelihood estimation meta-analysis. Hum. Brain Mapp 39, 2695–2709.

- Anderson S, Parbery-Clark A, Yi HG, Kraus N 2011. A neural basis of speech-in-noise perception in older adults. Ear Hear. 32, 750–7.

- Anderson S, White-Schwoch T, Parbery-Clark A, Kraus N 2013a. A dynamic auditory-cognitive system supports speech-in-noise perception in older adults. Hear. Res 300C, 18–32.

- Anderson S, White-Schwoch T, Parbery-Clark A, Kraus N 2013b. Reversal of age-related neural timing delays with training. Proc. Natl. Acad. Sci. USA 110, 4357–62.

- Anderson S, Parbery-Clark A, White-Schwoch T, Drehobl S, Kraus N 2013c. Effects of hearing loss on the subcortical representation of speech cues. J. Acoust. Soc. Am 133, 3030–3038.

- Bajo VM, Nodal FR, Moore DR, King AJ 2010. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat. Neurosci 13, 253–260.

- Barnett L, Barrett AB, Seth AK 2009. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys Rev Lett 103, 238701.

- Baron RM, Kenny DA 1986. The moderator-mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. J. Pers. Soc. Psychol 51, 1173–82.

- Bidelman GM 2015a. Multichannel recordings of the human brainstem frequency-following response: Scalp topography, source generators, and distinctions from the transient ABR. Hear. Res 323, 68–80.

- Bidelman GM 2015b. Towards an optimal paradigm for simultaneously recording cortical and brainstem auditory evoked potentials. J. Neurosci. Meth 241, 94–100.

- Bidelman GM 2016. Relative contribution of envelope and fine structure to the subcortical encoding of noise-degraded speech. J. Acoust. Soc. Am 140, EL358–363.

- Bidelman GM 2018. Subcortical sources dominate the neuroelectric auditory frequency-following response to speech. Neuroimage 175, 56–69.

- Bidelman GM, Krishnan A 2010. Effects of reverberation on brainstem representation of speech in musicians and non-musicians. Brain Res. 1355, 112–125.

- Bidelman GM, Lee C-C 2015. Effects of language experience and stimulus context on the neural organization and categorical perception of speech. Neuroimage 120, 191–200.

- Bidelman GM, Dexter L 2015. Bilinguals at the “cocktail party”: Dissociable neural activity in auditory-linguistic brain regions reveals neurobiological basis for nonnative listeners’ speech-in-noise recognition deficits. Brain Lang. 143, 32–41.

- Bidelman GM, Alain C 2015. Musical training orchestrates coordinated neuroplasticity in auditory brainstem and cortex to counteract age-related declines in categorical vowel perception. J. Neurosci 35, 1240–1249.

- Bidelman GM, Howell M 2016. Functional changes in inter- and intra-hemispheric auditory cortical processing underlying degraded speech perception. Neuroimage 124, 581–590.

- Bidelman GM, Yellamsetty A 2017. Noise and pitch interact during the cortical segregation of concurrent speech. Hear. Res 351, 34–44.

- Bidelman GM, Moreno S, Alain C 2013. Tracing the emergence of categorical speech perception in the human auditory system. Neuroimage 79, 201–212.

- Bidelman GM, Davis MK, Pridgen MH 2018a. Brainstem-cortical functional connectivity for speech is differentially challenged by noise and reverberation. Hear. Res. 367, 149–160.

- Bidelman GM, Weiss MW, Moreno S, Alain C 2014a. Coordinated plasticity in brainstem and auditory cortex contributes to enhanced categorical speech perception in musicians. Eur. J. Neurosci 40, 2662–2673.

- Bidelman GM, Villafuerte JW, Moreno S, Alain C 2014b. Age-related changes in the subcortical-cortical encoding and categorical perception of speech. Neurobiol. Aging 35, 2526–2540.

- Bidelman GM, Lowther JE, Tak SH, Alain C 2017. Mild cognitive impairment is characterized by deficient hierarchical speech coding between auditory brainstem and cortex. J. Neurosci 37, 3610–3620. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bidelman GM, Pousson M, Dugas C, Fehrenbach A 2018b. Test-retest reliability of dual-recorded brainstem vs. cortical auditory evoked potentials to speech. J. Am. Acad. Audiol 29, 164–174. [DOI] [PubMed] [Google Scholar]

- Bidelman GM, Mahmud MS, Yeasin M, Shen D, Arnott S, Alain C 2019. Age-related hearing loss increases full-brain connectivity while reversing directed signaling within the dorsal-ventral pathway for speech. Brain Structure and Function 10.1007/s00429-019-01922-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brugge JF, Nourski KV, Oya H, Reale RA, Kawasaki H, Steinschneider M, Howard MA 3rd. 2009. Coding of repetitive transients by auditory cortex on Heschl’s gyrus. J. Neurophysiol 102, 2358–74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Caspary DM, Ling L, Turner JG, Hughes LF 2008. Inhibitory neurotransmission, plasticity and aging in the mammalian central auditory system. Journal of Experimental Biology and Medicine 211, 1781–1791. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambers AR, Resnik J, Yuan Y, Whitton JP, Edge AS, Liberman MC, Polley DB 2016. Central gain restores auditory processing following near-complete cochlear denervation. Neuron 89, 867–79.

- Chandrasekaran B, Kraus N, Wong PC 2012. Human inferior colliculus activity relates to individual differences in spoken language learning. J. Neurophysiol 107, 1325–36.

- Chang Y, Lee SH, Lee YJ, Hwang MJ, Bae SJ, Kim MN, Lee J, Woo S, Lee H, Kang DS 2004. Auditory neural pathway evaluation on sensorineural hearing loss using diffusion tensor imaging. Neuroreport 15, 1699–703.

- Chao LL, Knight RT 1997. Prefrontal deficits in attention and inhibitory control with aging. Cereb. Cortex 7, 63–69.

- Clinard CG, Cotter CM 2015. Neural representation of dynamic frequency is degraded in older adults. Hear. Res 323, 91–98.

- Clinard CG, Tremblay KL, Krishnan AR 2010. Aging alters the perception and physiological representation of frequency: Evidence from human frequency-following response recordings. Hear. Res 264, 48–55.

- Coffey EB, Herholz SC, Chepesiuk AM, Baillet S, Zatorre RJ 2016. Cortical contributions to the auditory frequency-following response revealed by MEG. Nat. Commun 7, 11070.

- Crowley KE, Colrain IM 2004. A review of the evidence for P2 being an independent component process: Age, sleep and modality. Clin. Neurophysiol 115, 732–744.

- Cruickshanks KJ, Wiley TL, Tweed TS, Klein BE, Klein R, Mares-Perlman JA, Nondahl DM 1998. Prevalence of hearing loss in older adults in Beaver Dam, Wisconsin. The epidemiology of hearing loss study. Am. J. Epidemiol 148, 879–86.

- de Boer J, Thornton AR 2008. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. J. Neurosci 28, 4929–37.

- Du Y, Buchsbaum BR, Grady CL, Alain C 2014. Noise differentially impacts phoneme representations in the auditory and speech motor systems. Proc. Natl. Acad. Sci. USA 111, 1–6.

- Du Y, Buchsbaum BR, Grady CL, Alain C 2016. Increased activity in frontal motor cortex compensates impaired speech perception in older adults. Nat. Commun 7, 1–12.

- Dubno JR, Schaefer AB 1992. Comparison of frequency selectivity and consonant recognition among hearing-impaired and masked normal-hearing listeners. J. Acoust. Soc. Am 91, 2110–21.

- Eckert MA, Cute SL, Vaden KI, Kuchinsky SE, Dubno JR 2012. Auditory cortex signs of age-related hearing loss. J. Assoc. Res. Oto 13, 703–713.

- Eulitz C, Diesch E, Pantev C, Hampson S, Elbert T 1995. Magnetic and electric brain activity evoked by the processing of tone and vowel stimuli. J. Neurosci 15, 2748–55.

- Forte AE, Etard O, Reichenbach T 2017. The human auditory brainstem response to running speech reveals a subcortical mechanism for selective attention. Elife 6.

- Gordon-Salant S, Fitzgibbons PJ 1993. Temporal factors and speech recognition performance in young and elderly listeners. J. Speech Hear. Res 36, 1276–1285.

- Gosselin PA, Gagne JP 2011. Older adults expend more listening effort than young adults recognizing speech in noise. J. Speech. Lang. Hear. Res 54, 944–58.

- Hall JW 1992. Handbook of Auditory Evoked Responses. Allyn and Bacon, Needham Heights.

- He W, Goodkind D, Kowal P 2015. An Aging World: 2015 - International Population Reports, https://www.census.gov/content/dam/Census/library/publications/2016/demo/p95-16-1.pdf.

- Henry KS, Heinz MG 2012. Diminished temporal coding with sensorineural hearing loss emerges in background noise. Nat. Neurosci 15, 1362–1364.

- Herrmann B, Henry MJ, Scharinger M, Obleser J 2013. Auditory filter width affects response magnitude but not frequency specificity in auditory cortex. Hear. Res 304, 128–136.

- Hillebrand A, Tewarie P, van Dellen E, Yu M, Carbo EWS, Douw L, Gouw AA, van Straaten ECW, Stam CJ 2016. Direction of information flow in large-scale resting-state networks is frequency-dependent. Proc. Natl. Acad. Sci. USA 113, 3867–3872.

- Humes LE 1996. Speech understanding in the elderly. J. Am. Acad. Audiol 7, 161–167.

- Humes LE, Busey TA, Craig J, Kewley-Port D 2013. Are age-related changes in cognitive function driven by age-related changes in sensory processing? Atten. Percept. Psychophys 75, 508–524.

- Humes LE, Dubno JR, Gordon-Salant S, Lister JJ, Cacace AT, Cruickshanks KJ, Gates GA, Wilson RH, Wingfield A 2012. Central presbycusis: A review and evaluation of the evidence. J. Am. Acad. Audiol 23, 635–666.

- Husain FT, Medina RE, Davis CW, Szymko-Bennett Y, Simonyan K, Pajor NM, Horwitz B 2011. Neuroanatomical changes due to hearing loss and chronic tinnitus: A combined VBM and DTI study. Brain Res. 1369, 74–88.

- Iordanov T, Hoechstetter K, Berg P, Paul-Jordanov I, Scherg M 2014. CLARA: classical LORETA analysis recursively applied, OHBM 2014.

- Javad F, Warren JD, Micallef C, Thornton JS, Golay X, Yousry T, Mancini L 2014. Auditory tracts identified with combined fMRI and diffusion tractography. Neuroimage 84, 562–574.

- Jen PH-S, Chen QC, Sun XD 1998. Corticofugal regulation of auditory sensitivity in the bat inferior colliculus. Journal of Comparative Physiology A 183, 683–697.

- Killion MC, Niquette PA, Gudmundsen GI, Revit LJ, Banerjee S 2004. Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. J. Acoust. Soc. Am 116, 2395–405.

- Kraus N, Lindley T, Colegrove D, Krizman J, Otto-Meyer S, Thompson EC, White-Schwoch T 2017. The neural legacy of a single concussion. Neurosci. Lett 646, 21–23.

- Krishnan A, Gandour JT 2009. The role of the auditory brainstem in processing linguistically-relevant pitch patterns. Brain Lang. 110, 135–48.

- Kujawa SG, Liberman MC 2006. Acceleration of age-related hearing loss by early noise exposure: Evidence of a misspent youth. J. Neurosci 26, 2115–2123.

- Liegeois-Chauvel C, Musolino A, Badier JM, Marquis P, Chauvel P 1994. Evoked potentials recorded from the auditory cortex in man: Evaluation and topography of the middle latency components. Electroencephalogr. Clin. Neurophysiol 92, 204–214.

- Lin FR, Ferrucci L, An Y, Goh JO, Doshi J, Metter EJ, Davatzikos C, Kraut MA, Resnick SM 2014. Association of hearing impairment with brain volume changes in older adults. Neuroimage 90, 84–92.

- Lin Y, Wang J, Wu C, Wai Y, Yu J, Ng S 2008. Diffusion tensor imaging of the auditory pathway in sensorineural hearing loss: Changes in radial diffusivity and diffusion anisotropy. J. Magn. Reson. Imaging 28, 598–603.

- Liu LF, Palmer AR, Wallace MN 2006. Phase-locked responses to pure tones in the inferior colliculus. J. Neurophysiol 95, 1926–35.

- Lobier M, Siebenhuhner F, Palva S, Palva JM 2014. Phase transfer entropy: a novel phase-based measure for directed connectivity in networks coupled by oscillatory interactions. Neuroimage 85 Pt 2, 853–72.

- Makary CA, Shin J, Kujawa SG, Liberman MC, Merchant SN 2011. Age-related primary cochlear neuronal degeneration in human temporal bones. J. Assoc. Res. Oto 12, 711–717.

- Marmel F, Linley D, Carlyon RP, Gockel HE, Hopkins K, Plack CJ 2013. Subcortical neural synchrony and absolute thresholds predict frequency discrimination independently. J. Assoc. Res. Oto 14, 755–766.

- Musacchia G, Strait D, Kraus N 2008. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hear. Res 241, 34–42.

- Musacchia G, Sams M, Skoe E, Kraus N 2007. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc. Natl. Acad. Sci. USA 104, 15894–8.

- Oostenveld R, Praamstra P 2001. The five percent electrode system for high-resolution EEG and ERP measurements. Clin. Neurophysiol. 112, 713–719.

- Otnes R, Enochson L 1972. Digital Time Series Analysis. Wiley.

- Parbery-Clark A, Anderson S, Kraus N 2013. Musicians change their tune: How hearing loss alters the neural code. Hear. Res. 302, 121–131.

- Parthasarathy A, Bartlett E 2012. Two-channel recording of auditory-evoked potentials to detect age-related deficits in temporal processing. Hear. Res 289, 52–62.

- Parthasarathy A, Datta J, Torres JA, Hopkins C, Bartlett EL 2014. Age-related changes in the relationship between auditory brainstem responses and envelope-following responses. J. Assoc. Res. Oto 15, 649–61.

- Pearson JD, Morrell CH, Gordon-Salant S, Brant LJ, Metter EJ, Klein LL, Fozard JL 1995. Gender differences in a longitudinal study of age-associated hearing loss. J. Acoust. Soc. Am 97, 1196–1205.

- Peelle JE, Wingfield A 2016. The neural consequences of age-related hearing loss. Trends in Neurosciences 39, 486–497.

- Peelle JE, Troiani V, Grossman M, Wingfield A 2011. Hearing loss in older adults affects neural systems supporting speech comprehension. J. Neurosci 31, 12638–12643.

- Pichora-Fuller MK, Alain C, Schneider B 2017. Older adults at the cocktail party. In: Middlebrooks JC, Simon JZ, Popper AN, Fay RR, (Eds.), Springer Handbook of Auditory Research: The Auditory System at the Cocktail Party. Springer-Verlag; pp. 227–259.

- Picton TW, Hillyard SA, Galambos R, Schiff M 1971. Human auditory attention: A central or peripheral process? Science 173, 351–3.

- Picton TW, van Roon P, Armilio ML, Berg P, Ille N, Scherg M 2000. The correction of ocular artifacts: A topographic perspective. Clin. Neurophysiol 111, 53–65.

- Picton TW, Alain C, Woods DL, John MS, Scherg M, Valdes-Sosa P, Bosch-Bayard J, Trujillo NJ 1999. Intracerebral sources of human auditory-evoked potentials. Audiol. Neurootol. 4, 64–79.

- Preacher KJ, Hayes AF 2004. SPSS and SAS procedures for estimating indirect effects in simple mediation models. Behavior Research Methods, Instruments, & Computers 36, 717–731.

- Presacco A, Simon JZ, Anderson S 2016. Evidence of degraded representation of speech in noise, in the aging midbrain and cortex. J. Neurophysiol 116, 2346–2355.

- Reetzke R, Xie Z, Llanos F, Chandrasekaran B 2018. Tracing the trajectory of sensory plasticity across different stages of speech learning in adulthood. Curr. Biol 28, 1419–1427.e4.

- Reuter-Lorenz PA, Cappell KA 2008. Neurocognitive aging and the compensation hypothesis. Curr. Dir. Psychol. Sci 17, 177–182.

- Richards JE, Sanchez C, Phillips-Meek M, Xie W 2016. A database of age-appropriate average MRI templates. Neuroimage 124, 1254–9.

- Rinne T, Stecker GC, Kang X, Yund EW, Herron TJ, Woods DL 2007. Attention modulates sound processing in human auditory cortex but not the inferior colliculus. Neuroreport 18, 1311–1314.

- Scherg M, von Cramon DY 1986. Evoked dipole source potentials of the human auditory cortex. Electroencephalogr. Clin. Neurophysiol 65, 344–360.

- Scherg M, Ebersole JS 1994. Brain source imaging of focal and multifocal epileptiform EEG activity. Neurophysiol. Clin 24, 51–60.

- Scherg M, Ille N, Bornfleth H, Berg P 2002. Advanced tools for digital EEG review: virtual source montages, whole-head mapping, correlation, and phase analysis. J. Clin. Neurophysiol 19, 91–112.

- Schneider BA, Daneman M, Pichora-Fuller MK 2002. Listening in aging adults: From discourse comprehension to psychoacoustics. Can. J. Exp. Psychol 56, 139–152.

- Skoe E, Kraus N 2010a. Auditory brain stem response to complex sounds: A tutorial. Ear Hear. 31, 302–24.

- Skoe E, Kraus N 2010b. Hearing it again and again: On-line subcortical plasticity in humans. PLoS ONE 5, e13645.

- Slee SJ, David SV 2015. Rapid task-related plasticity of spectrotemporal receptive fields in the auditory midbrain. J. Neurosci 35, 13090–13102.