Abstract

Speech comprehension difficulties are ubiquitous in aging and hearing loss, particularly in noisy environments. Older adults’ poorer speech-in-noise (SIN) comprehension has been related to abnormal neural representations within various nodes (regions) of the speech network, but how senescent changes in hearing alter the transmission of brain signals remains unspecified. We measured electroencephalograms in older adults with and without mild hearing loss during a SIN identification task. Using functional connectivity and graph-theoretic analyses, we show that hearing-impaired (HI) listeners have more extended (less integrated) communication pathways and less efficient information exchange among widespread brain regions (larger network eccentricity) than their normal-hearing (NH) peers. Parameter-optimized support vector machine classifiers applied to EEG connectivity data showed that hearing status could be decoded (> 85% accuracy) solely using network-level descriptions of brain activity, and classification was particularly robust using left hemisphere connections. Notably, we found a reversal in directed neural signaling in the left hemisphere dependent on hearing status among specific connections within the dorsal-ventral speech pathways. NH listeners showed an overall net “bottom-up” signaling directed from auditory cortex (A1) to inferior frontal gyrus (IFG; Broca’s area), whereas the HI group showed the reverse signal (i.e., “top-down” Broca’s → A1). A similar flow reversal was noted between left IFG and motor cortex. Our full-brain connectivity results demonstrate that even mild forms of hearing loss alter how the brain routes information within the auditory-linguistic-motor loop.

Keywords: EEG, Hearing loss, Functional connectivity, Graph theory, Machine learning, Global and nodal network features

Introduction

Age-related hearing loss is associated with declines in speech comprehension, which can lead to social isolation, confusion, and poorer quality of life in the elderly (Betlejewski 2006). Older adults’ speech comprehension issues are more prevalent in adverse (noisy) listening environments and occur even when hearing thresholds are controlled (Bilodeau-Mercure et al. 2015) or compensated using hearing aids (Ricketts and Hornsby 2005). Difficulties with speech-in-noise (SIN) comprehension persist even without measurable hearing loss (Cruickshanks et al. 1998; Gordon-Salant and Fitzgibbons 1993; Strouse et al. 1998; Hutka et al. 2013; Konkle et al. 1977; Schneider et al. 2002; van Rooij and Plomp 1992), suggesting that speech understanding is not simply a matter of audibility (Gordon-Salant and Fitzgibbons 1993), but also involves central auditory processes (Wong et al. 2010; Bidelman et al. 2014; Presacco et al. 2016; Martin and Jerger 2005) as well as interactions between perceptual and cognitive systems (Humes et al. 2013; Anderson et al. 2013b; Pichora-Fuller et al. 2017).

Physiological evidence suggests that aging produces a cascade of changes in central auditory processing from the cochlea to cortex in both humans and animal models (e.g., Bidelman et al. 2014; Kujawa and Liberman 2006; Abdala and Dhar 2012; Presacco et al. 2016; Parthasarathy et al. 2014; Alain et al. 2014; Peelle and Wingfield 2016). Scalp recordings of event-related brain potentials (ERPs) have shown both decreases (Goodin et al. 1978; Brent et al. 1976; Tremblay et al. 2003b) and increases in neural activity (Woods and Clayworth 1986; Pfefferbaum et al. 1980; Alain and Snyder 2008; Bidelman et al. 2014; Tremblay et al. 2003a) within various auditory-linguistic brain regions of old listeners. However, changes in the responsivity of any one brain region might be expected given the pervasive changes in neural excitation/inhibition (Caspary et al. 2008; Parthasarathy and Bartlett 2011; Makary et al. 2011; Kujawa and Liberman 2006) and compensatory processing (Peelle et al. 2011; Du et al. 2016) that accumulate during the aging process. In our recent ERP studies, we showed that in addition to overall differences in the strength of neural encoding, aging is associated with increased redundancy (higher shared information) between brainstem and cortical representations for speech (Bidelman et al. 2014, 2017, 2019). These findings implied that SIN problems in older listeners might result from aberrant transmission of speech signals among auditory-linguistic brain regions in addition to diminished (or over-exaggerated) neural encoding. Relatedly, age-related deficits in interhemispheric processing are thought to account for some of the listening problems among seniors (Martin and Jerger 2005). However, few studies to date have investigated how age-related hearing loss alters functional brain connectivity, so these premises remain largely untested.

Several lines of research imply that age-related hearing loss might be associated with widespread changes in brain connectivity. Still, most studies have focused on structural changes (e.g., tractography, volumetrics). Diffusion tensor imaging suggests both sensorineural hearing loss and aging are associated with reduced white matter integrity along the brainstem-cortical pathways, including the inferior colliculus, auditory radiations, and superior temporal gyri (Lutz et al. 2007; Chang et al. 2004; Lin et al. 2008; Liu et al. 2015). Similar neuroanatomical findings have been reported in children with hearing loss and may be related to functional deficits during development (Propst et al. 2010). In older adults with normal hearing, functional connectivity analysis of fMRI data reveals reduced coherence within the auditory-linguistic-motor loop during complex speech perception tasks (Peelle et al. 2010). This suggests that the aging brain might have limited ability to coordinate the exchange of neural information between brain regions, ultimately leading to difficulty in speech comprehension. Still, in testing links between structural changes and speech perception, most studies have used tasks that require relatively complex mental processes (e.g., semantic/syntactic processing, memory retrieval), faculties which are known to diminish with age (Schneider et al. 2005; Cohen 1979; Martin and Jerger 2005; Schneider et al. 2002). Age-related deficits in attention and/or memory are probably more egregious for sentence recognition than for simple word recognition (Schneider et al. 2002). Thus, it is unclear whether and how previously observed structural changes (1) carry functional consequences and (2) might account for older listeners’ difficulties merely encoding or recognizing individual speech sounds without the assistance of context or lexical cues that aid comprehension (e.g., Schneider et al. 2002).

Increasingly, neuroimaging studies are probing functional connectomics including the default mode network and long-range cortical connections outside the central auditory system that accompany tinnitus and related sensorineural hearing loss (Schlee et al. 2008; Schmidt et al. 2013; Boyen et al. 2014). In relation to SIN processing, we recently reported that in younger normal-hearing adults, functional connectivity (neural signaling measured via EEG) directed between Broca’s area (inferior frontal gyrus, IFG) and primary auditory cortex (A1) is stronger in individuals with better SIN perception (i.e., “good perceivers”; Bidelman et al. 2018). Presumably, auditory deprivation (e.g., hearing loss) may exacerbate such effects, leading to widespread deficits in the capacity to process information even beyond lemniscal auditory brain regions (Kral et al. 2016; Schlee et al. 2008). Under this notion, sensory impairments could alter or reduce effective connectivity between auditory and high-order brain regions subserving neurocognitive functions (e.g., memory, attention). This has led to speculations that certain forms of hearing loss might manifest as a “connectome disease” (Kral et al. 2016). Receptive speech function is affected by multiple aspects of the aging process including age itself (Bidelman et al. 2014; Anderson et al. 2012, 2013a), hearing loss (Bidelman et al. 2014), and comorbid cognitive impairment (Bidelman et al. 2017; Khatun et al. 2019). Given the multifactored nature of aging and speech perception, it is reasonable to postulate that subtle (even pre-clinical) decline in hearing acuity may lead to complex changes in the brain networks supporting speech perception.

Extending previous fMRI work (Zhang et al. 2018), we examined different global and nodal graph-theoretic properties of the brain’s functional connectome during speech processing. We hypothesized that age-related hearing loss would be associated with altered cortical signaling within the dorsal-ventral pathways, and more specifically, the auditory-linguistic-motor loop (Rauschecker and Scott 2009; Du et al. 2014, 2016). These hubs are heavily implicated in SIN perception and may be particularly sensitive to the effects of age (e.g., Bidelman and Howell 2016; Bidelman et al. 2018; Alain et al. 2018; Bidelman and Dexter 2015; Du et al. 2016). To assess modulations in brain connectivity that accompany senescent changes in speech processing, we measured high-density neuroelectric responses (EEG) to speech in older adults with and without mild hearing loss during a speech identification task. Task difficulty was varied by presenting speech in clear and noise-degraded conditions. Neural classifiers were applied to EEG data to determine if hearing status could be decoded using solely functional patterns of brain connectivity to speech. Our findings demonstrate that hearing-impaired (HI) listeners have more extended communication pathways and less efficient information exchange among brain regions than their normal-hearing (NH) peers.

Methods

Analyses of the scalp/source-level ERPs and behavioral data associated with this dataset are reported in Bidelman et al. (2019). Here, we present new analyses of older adults’ functional brain connectivity during SIN perception using graph-theoretic and machine learning techniques.

Participants

Thirty-two older adults aged 52–75 years were recruited from the Greater Toronto Area to participate in our ongoing studies on aging and the auditory system (Bidelman et al. 2019; Mahmud et al. 2018). None reported history of neurological or psychiatric illness. Pure-tone audiometry was conducted at octave frequencies between 250 and 8000 Hz. Based on listeners’ hearing thresholds, the cohort was divided into normal and hearing-impaired groups (Fig. 1a). In this study, normal-hearing (NH; n = 13) listeners had average thresholds (250–8000 Hz) better than 25 dB HL across both ears, whereas listeners with hearing loss (HL; n = 19; hereafter also referred to as hearing-impaired (“HI”) listeners) had average thresholds poorer than 25 dB HL. This division resulted in pure-tone averages (PTAs) (i.e., mean of 500, 1000, 2000 Hz) that were ~ 10 dB better in NH compared to HL listeners (mean ± SD; NH: 15.3 ± 3.27 dB HL, HL: 26.4 ± 7.1 dB HL; t2.71 = − 5.95, p < 0.0001; NH range = 8.3–20.83 dB HL, HL range = 15.8–45 dB HL). This definition of hearing impairment further helped the post hoc matching of NH and HL listeners on other demographic variables while maintaining adequate sample sizes per group. Both groups had signs of age-related presbycusis at very high frequencies (8000 Hz), which is typical in older adults (Cruickshanks et al. 1998). However, it should be noted that the audiometric thresholds of our NH listeners were better than the hearing typically expected based on the age range of our cohort, even at higher frequencies (Pearson et al. 1995; Cruickshanks et al. 1998). Importantly, besides hearing, the groups did not differ statistically in age (NH: 66.2 ± 6.1 years, HL: 70.4 ± 4.9 years; t2.22 = − 2.05, p = 0.052) or gender distribution (NH: 5/8 male/female; HL: 11/8; Fisher’s exact test, p = 0.47). Age and hearing loss were also not correlated in our sample (Pearson’s r = 0.29, p = 0.10). Cognitive function was not screened. However, we note that mild cognitive impairment (without hearing loss) actually increases brainstem and cortical speech-evoked potentials (Bidelman et al. 2017; Khatun et al. 2019). Older adults in this cohort showed weaker neural responses to speech than in our previous study (Bidelman et al. 2019), which is more consistent with hearing loss (Bidelman et al. 2014; Presacco et al. 2019). Moreover, cognitively impaired older adults show more variable reaction times (RTs) in speeded speech identification tasks (Bidelman et al. 2017), which we do not observe in this cohort (see Fig. 1c). None of the participants had prior experience with hearing aids. All gave informed written consent in accordance with a protocol approved by the Baycrest Hospital Human Subject Review Committee.

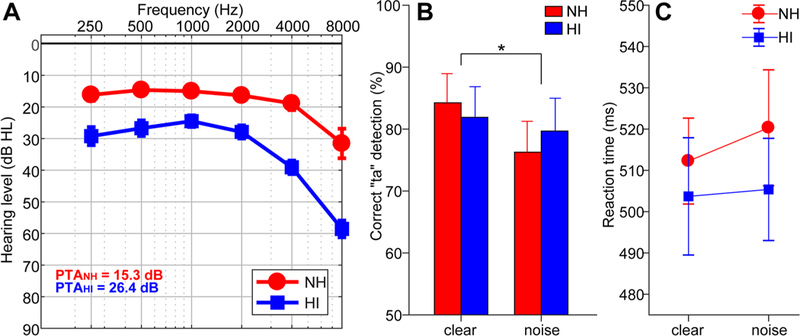

Fig. 1.

Behavioral data. a Audiograms of normal-hearing (NH) and hearing-impaired (HI) listeners. b Behavioral accuracy for detecting infrequent /ta/ tokens in clear and noise-degraded conditions. Noise-related decline in behavioral performance was prominent but no group differences in speech perception were observed. c Reaction times (RTs) for speech detection were similar between groups and speech SNRs. Error bars = ± s.e.m., *p < 0.05

Stimuli and task

Three tokens from the standardized UCLA version of the Nonsense Syllable Test were used in this study (Dubno and Schaefer 1992). These tokens were naturally produced English consonant-vowel phonemes (/ba/, /pa/, and /ta/), spoken by a female talker. Each phoneme was 100 ms in duration and matched in terms of average RMS sound pressure level (SPL). Each had a common voice fundamental frequency (mean F0 = 150 Hz) and first and second formants (F1 = 885, F2 = 1389 Hz). CVs were presented in both clear (i.e., no noise) and noise conditions. For each condition, the stimulus set included a total of 3000 /ba/, 3000 /pa/, and 210 /ta/ tokens (spread evenly over three blocks to allow for breaks).

For each block, speech tokens were presented back to back in random order with a jittered interstimulus interval (95–155 ms, 5 ms steps, uniform distribution). Frequent (/ba/, /pa/) and infrequent (/ta/) tokens were presented according to a pseudo-random schedule such that at least two frequent stimuli intervened between target /ta/ tokens. Listeners were asked to respond each time they detected the target (/ta/) via a button press on the computer. Reaction time (RT) and detection accuracy (%) were logged. These procedures were then repeated using an identical speech triplet mixed with eight-talker noise babble (cf. Killion et al. 2004) at a signal-to-noise ratio (SNR) of + 10 dB. Thus, there were six blocks in total (three clear and three noise). The babble was presented continuously so that it was not time locked to the stimulus, providing a constant backdrop of interference in the noise condition (e.g., Alain et al. 2012; Bidelman and Howell 2016). Comparing behavioral performance between clear and degraded stimulus conditions allowed us to assess the impact of acoustic noise and differences between normal and hearing-impaired listeners in speech perception. This task ensured that EEGs were recorded online, during active speech perception rather than mere passive exposure.
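
The sequencing constraints described above can be illustrated with a minimal sketch. It assumes 1000 /ba/, 1000 /pa/, and 70 /ta/ tokens per block (the stated totals divided evenly over three blocks); the function name `make_block`, the seed, and the slot-sampling strategy are hypothetical choices for illustration and are not taken from the original stimulus-presentation code.

```python
import random

def make_block(n_ba=1000, n_pa=1000, n_ta=70, min_gap=2, seed=0):
    """Return (tokens, isis_ms) for one hypothetical stimulus block."""
    rng = random.Random(seed)
    frequent = ["ba"] * n_ba + ["pa"] * n_pa
    rng.shuffle(frequent)

    # Slot i means "insert a target just before frequent token i";
    # slot len(frequent) means "after the last frequent token".
    n_slots = len(frequent) + 1
    # Sample from a compressed range, then stretch by (min_gap - 1) per
    # target so that consecutive target slots differ by at least min_gap.
    compressed = sorted(
        rng.sample(range(n_slots - (n_ta - 1) * (min_gap - 1)), n_ta)
    )
    target_slots = {s + i * (min_gap - 1) for i, s in enumerate(compressed)}

    tokens = []
    for i, tok in enumerate(frequent):
        if i in target_slots:
            tokens.append("ta")
        tokens.append(tok)
    if len(frequent) in target_slots:
        tokens.append("ta")

    # Jittered interstimulus interval: 95-155 ms in 5 ms steps, uniform.
    isis_ms = [rng.choice(range(95, 160, 5)) for _ in tokens]
    return tokens, isis_ms

tokens, isis_ms = make_block(seed=1)
```

Sampling the target slots jointly, rather than rejecting invalid sequences after shuffling, guarantees the minimum spacing in a single pass.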

Stimulus presentation was controlled by MATLAB (The MathWorks, Inc.; Natick, MA) routed to a TDT RP2 interface (Tucker-Davis Technologies; Alachua, FL), and stimuli were delivered binaurally through insert earphones (ER-3; Etymotic Research; Elk Grove Village, IL). The speech stimuli were presented at an intensity of 75 dBA SPL (noise at 65 dBA SPL) using alternating polarity.

Electrophysiological recordings and data pre-processing

During the behavioral task, neuroelectric activity was recorded from 32 channels at standard 10–20 electrode locations on the scalp (Oostenveld and Praamstra 2001). The montage included electrode coverage over fronto-central (Fz, Fp1/2, F3/4, F7/8, F9/10, C3/4), temporal (T7/8, TP7/9, TP8/10), parietal (Pz, P3/4, P7/8), and occipital-cerebellar (Oz, O1/2, CB1/2, Iz) sites. Electrodes placed along the zygomatic arch (FT9/10) and the outer canthi and superior/inferior orbit of the eye (IO1/2, LO1/2) monitored ocular activity and blink artifacts. Electrode impedances were maintained at ≤ 5 kΩ. EEGs were digitized at a sampling rate of 20 kHz using SynAmps RT amplifiers (Compumedics Neuroscan; Charlotte, NC). Data were re-referenced offline to a common average reference for analyses.

Subsequent pre-processing was performed in BESA® Research (v6.1) (BESA, GmbH). Ocular artifacts (saccades and blinks) were first corrected in the continuous EEG using a principal component analysis (PCA) (Picton et al. 2000). Cleaned EEGs were then filtered (1–40 Hz), epoched (− 10–200 ms), baseline corrected to the pre-stimulus period, and averaged in the time domain to derive ERPs for each stimulus condition per participant. Connectivity results reported herein were collapsed across /ba/ and /pa/ tokens to reduce the dimensionality of the data. Infrequent /ta/ responses were not analyzed given the limited number of trials for this condition and to avoid time-locked mismatch (e.g., MMN) and target-related activity (e.g., N2b and P3b waves) from confounding our data (Bidelman et al. 2019).

EEG source localization

Functional connectivity (described below) can be spurious when computed on scalp EEG, which is a volume-conducted mixture of brain signals (Bastos and Schoffelen 2016; Lai et al. 2018); it is only meaningful on “unmixed” source-reconstructed responses. Hence, we analyzed our data in source space by performing a distributed source analysis to more directly assess the neural generators underlying speech-evoked neural activity (Bidelman and Howell 2016; Alain et al. 2017; Bidelman and Dexter 2015). Source reconstruction was implemented in the MATLAB package Brainstorm (Tadel et al. 2011). We used a realistic, boundary element model (BEM) volume conductor (Fuchs et al. 1998, 2002) standardized to the MNI brain (Mazziotta et al. 1995). The BEM head model was created using OpenMEEG (Gramfort et al. 2010) as implemented in Brainstorm (Tadel et al. 2011). A BEM is less prone to spatial errors than other head models (e.g., concentric spherical conductor) (Fuchs et al. 2002). Essentially, the BEM model parcellates the cortical surface into 15,000 vertices and assigns a dipole at each vertex with orientation perpendicular to the cortical surface. The noise covariance matrix was estimated from the pre-stimulus interval. We then used standardized low-resolution brain electromagnetic tomography (sLORETA) to create inverse solutions (Pascual-Marqui et al. 2002) as implemented in Brainstorm (Tadel et al. 2011). We used Brainstorm’s default regularization parameters (regularized noise covariance = 0.1; SNR = 3.00). sLORETA provides a smoothness constraint that ensures the estimated current changes little between neighboring neural populations (Michel et al. 2004; Picton et al. 1999). sLORETA was chosen over other inverse solutions because of its smaller average localization error. While higher channel counts improve source localization, for a 32-ch electrode array as used here, best-case estimates of localization error for sLORETA are as low as 1.45 mm (Song et al. 2015).1 From each sLORETA map, we extracted the time course of source activity within regions of interest (ROI) defined by the Desikan-Killiany (DK) atlas parcellation (Desikan et al. 2006). This atlas has 68 ROIs, with 34 in each of the left (LH) and right (RH) hemispheres. Time-series data from each ROI were then used for full-brain functional (effective) connectivity analyses, where each ROI was considered a “node” in a graph-theoretic description of the evoked brain activity (Friston 2011).

Undirected functional connectivity

We investigated weighted, undirected brain connectivity via a graph-theoretic approach (de Haan et al. 2009). Undirected functional connectivity reflects the statistical dependence of inter-regional brain activity. Graphs can be characterized by global (full-brain) and nodal (regional) measures. Each approach allows for different hypothesis testing of the data. Mathematically, a graph consists of vertices (nodes) and edges (connections among the nodes). We computed graphs of each participant’s ROI source data using the BRain Analysis using graPH theory (BRAPH) toolbox (Mijalkov et al. 2017). For a given pair of ROIs, the bivariate Pearson correlation was computed using the entire time-series signals (all time samples). This yielded an index of the similarity (i.e., undirected connectivity) between the two ROIs. This procedure was then repeated for all pairwise ROIs, resulting in a 68 × 68 adjacency matrix describing the connectivity across all sources in the brain network.2 This described the signal dependences between all ROIs as a weighted sum between nodes [self-node weightings (e.g., A↔A) are not meaningful and were set to zero]. Because Pearson correlations are bi-directional, this estimated undirected functional connectivity between all ROIs, similar to approaches in fMRI (e.g., Peelle et al. 2010). Note that the temporal dimension of the data is discarded in this analysis, since correlations only consider signal correspondence over the entire epoch window. Connectivity matrices were visualized on the template brain anatomy in BRAPH (Mijalkov et al. 2017), resulting in a network graph of the functional connectivity strength (paths) between all pair-wise nodes (ROIs).3
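
As a rough illustration of the adjacency-matrix construction described above, the following sketch computes pairwise Pearson correlations over whole ROI time series and zeroes the diagonal. It uses NumPy rather than BRAPH, and the random input data, the number of time samples, and the function name `correlation_adjacency` are assumptions made only for the example.

```python
import numpy as np

def correlation_adjacency(roi_timeseries):
    """Pearson correlation between every pair of ROI time courses.

    roi_timeseries: array of shape (n_rois, n_samples).
    Returns an (n_rois, n_rois) symmetric matrix with a zero diagonal.
    """
    ts = np.asarray(roi_timeseries, dtype=float)
    adjacency = np.corrcoef(ts)        # rows are treated as variables
    np.fill_diagonal(adjacency, 0.0)   # self-connections are not meaningful
    return adjacency

# Hypothetical stand-in for one participant's source waveforms:
# 68 DK-atlas ROIs, 500 time samples of random noise.
rng = np.random.default_rng(0)
demo_timeseries = rng.standard_normal((68, 500))
adjacency = correlation_adjacency(demo_timeseries)
```

Because the correlation is computed once over the entire epoch, the resulting matrix is symmetric and carries no information about timing or direction.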

Graph-theoretic analysis of brain networks

Global (full-brain) graph properties.

From the connectivity graphs, we used well-established graph-theoretic analysis to describe group differences in full-brain connectivity (Bassett and Bullmore 2006; Kaiser 2011). We measured 15 different global measures from the full-brain connectivity graphs using the BRAPH software (Mijalkov et al. 2017). These included global eccentricity, radius, diameter, characteristic path length, clustering, transitivity, modularity, assortativity and small-worldness. Only global graph metrics showing significant differences between normal and hearing-impaired adults are reported hereafter. These included characteristic path length and eccentricity. Characteristic path length is the average shortest path length when considering all edges of the entire graph. Nodal eccentricity is the maximal shortest path length between a given node and any other node, whereas global eccentricity is the average of nodal eccentricities across the graph. Diameter is the maximum eccentricity, whereas radius is the minimum eccentricity. Detailed explanations and mathematical equations of these graph measures are reported elsewhere (Harris et al. 2008; Rubinov and Sporns 2010).
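
The path-based measures defined above can be made concrete with a small sketch. It assumes that connection length is the reciprocal of connection weight, a common convention for weighted graphs that may differ from BRAPH's internal settings, and it uses two hypothetical five-node toy graphs (a chain and a star) rather than EEG data.

```python
import numpy as np

def shortest_paths(weights):
    """All-pairs shortest path lengths (Floyd-Warshall).

    Assumption: edge length = 1 / weight for positive weights;
    zero weights mean "no edge".
    """
    w = np.asarray(weights, dtype=float)
    n = w.shape[0]
    dist = np.full((n, n), np.inf)
    mask = w > 0
    dist[mask] = 1.0 / w[mask]
    np.fill_diagonal(dist, 0.0)
    for k in range(n):
        dist = np.minimum(dist, dist[:, [k]] + dist[[k], :])
    return dist

def global_metrics(weights):
    dist = shortest_paths(weights)
    n = dist.shape[0]
    off_diag = ~np.eye(n, dtype=bool)
    # Nodal eccentricity: longest shortest path from each node.
    nodal_ecc = np.where(off_diag, dist, -np.inf).max(axis=1)
    return {
        "characteristic_path_length": float(dist[off_diag].mean()),
        "global_eccentricity": float(nodal_ecc.mean()),
        "radius": float(nodal_ecc.min()),
        "diameter": float(nodal_ecc.max()),
    }

# Toy graphs with unit weights: a chain 0-1-2-3-4 and a star centered on node 0.
chain = np.diag(np.ones(4), 1) + np.diag(np.ones(4), -1)
star = np.zeros((5, 5))
star[0, 1:] = 1.0
star[1:, 0] = 1.0

chain_metrics = global_metrics(chain)
star_metrics = global_metrics(star)
```

In these toy graphs the chain has the larger diameter, eccentricity, and characteristic path length, which is the sense in which a chain-like configuration is less integrated than a star-like one.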

To test for differences in connectivity between groups, we used non-parametric permutation tests (Benjamini and Hochberg 1995). The data were reshuffled N = 1000 times, resulting in a p value describing the statistical contrast between groups. In each iteration, the difference between groups was computed, and the resulting empirical distribution was then tested against a null hypothesis of zero mean group difference to compute the p value. BRAPH does not provide a straightforward means to correct for multiple comparisons on global measures, so these measures were left uncorrected.
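
A generic two-sample permutation test of this kind can be sketched as follows. This is one standard formulation (shuffling group labels and comparing the observed mean difference with the shuffled differences) and is not necessarily the exact procedure BRAPH implements; the group values are made up, and only the group sizes (19 and 13) follow the cohort described above.

```python
import numpy as np

def permutation_test(group_a, group_b, n_permutations=1000, seed=0):
    """Two-sided permutation test on the difference of group means."""
    rng = np.random.default_rng(seed)
    a = np.asarray(group_a, dtype=float)
    b = np.asarray(group_b, dtype=float)
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])

    n_extreme = 0
    for _ in range(n_permutations):
        shuffled = rng.permutation(pooled)  # reassign group labels at random
        diff = shuffled[:a.size].mean() - shuffled[a.size:].mean()
        if abs(diff) >= abs(observed):
            n_extreme += 1
    # Add-one correction so the estimated p value is never exactly zero.
    p_value = (n_extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical graph-measure values for 19 HI and 13 NH listeners.
hi_values = np.linspace(2.5, 3.5, 19)
nh_values = np.linspace(1.0, 2.0, 13)
observed_diff, p_value = permutation_test(hi_values, nh_values)
```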

Focal (nodal) graph properties.

Nodal measures represent different properties of each node of a graph. Using BRAPH, we computed 12 nodal measures (degree, strength, triangles, eccentricity, path length, global efficiency nodes, local efficiency nodes, clustering nodes, betweenness centrality, closeness centrality, within module degree z score, and participation) for each node. We investigated nodal graph properties within the full-brain network to test specific hypotheses regarding physiological changes in auditory-linguistic brain regions that might be sensitive to cognitive aging (e.g., Du et al. 2016) and degraded speech perception (e.g., Bidelman et al. 2018; Bidelman and Howell 2016; Du et al. 2014; Scott and Johnsrude 2003; Alain et al. 2018). These included four specific ROIs within each hemisphere (LH and RH) associated with speech processing including primary auditory cortex (A1; transverse temporal gyrus), primary motor cortex (M1; precentral gyrus), and Broca’s area [defined here as the pars opercularis (B1) and pars triangularis (B2)]. These DK regions roughly correspond to Brodmann areas 41 (A1), BA 4 (M1), and BA 44/45 (B1, B2). As with global measures, permutation tests were used to assess group differences. Nodal measures were corrected for multiple comparisons using FDR correction (Benjamini and Hochberg 1995).
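
For reference, the Benjamini-Hochberg step-up procedure used for FDR correction can be sketched as below. The p values in the example are hypothetical, and the function name `benjamini_hochberg` and the 0.05 threshold are assumptions for illustration.

```python
import numpy as np

def benjamini_hochberg(p_values, alpha=0.05):
    """Return (adjusted p values, rejection mask) under BH FDR control."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Scale each sorted p value by m / rank.
    scaled = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, working down from the largest p value.
    adjusted_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted_sorted = np.clip(adjusted_sorted, 0.0, 1.0)
    adjusted = np.empty(m)
    adjusted[order] = adjusted_sorted
    return adjusted, adjusted <= alpha

# Hypothetical p values, e.g. one per nodal comparison.
raw_p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]
adjusted_p, significant = benjamini_hochberg(raw_p)
```

In this made-up example, only the two smallest p values remain below 0.05 after adjustment, even though five of the eight raw values fall below that threshold.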

Directed functional connectivity (PTE): auditory-linguistic-motor loop

Our full-brain approach considered undirected connectivity, which is limited to quantifying only the strength of communication within different neural channels. To test whether the direction (causality) of neural signaling changes with hearing impairment within the auditory-linguistic-motor loop, we measured directed functional connectivity using phase transfer entropy (PTE) (Lobier et al. 2014; Bidelman et al. 2018). PTE is advantageous because it is asymmetric so it can be computed bi-directionally (X→Y and Y→X) to identify causal, directional flow of information between interacting brain regions. PTE is also more powerful than other connectivity metrics (e.g., Granger causality), because it is model free and thus relaxes assumptions about data normality, which are often violated in EEG. Lastly, we have shown that PTE is sensitive to differences in directed neural signaling within auditory-linguistic pathways that account for individual differences in SIN perception in young adults (Bidelman et al. 2018). Higher values of PTE indicate stronger connectivity, whereas PTE = 0 reflects no directed connectivity.

We measured PTE among the LH and RH ROIs that constitute the auditory-linguistic-motor loop (A1, M1, B1, B2) as implemented in Brainstorm (Tadel et al. 2011). PTE was computed between all pairwise ROIs using full bandwidth (1–40 Hz) source waveforms. Values were normalized in the range − 0.5 to + 0.5 for each participant, where positive values denote information flow from A → B, and negative values B → A.4
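
To make the sign convention concrete, the sketch below estimates PTE from binned instantaneous phases and then rescales it to the − 0.5 to + 0.5 range. Several details are assumptions rather than a description of Brainstorm's implementation: the histogram-based entropy estimator, the fixed lag and bin count, the normalization PTE(A→B) / [PTE(A→B) + PTE(B→A)] − 0.5, and the synthetic test signals (a noisy 10 Hz oscillation at a hypothetical 500 Hz sampling rate).

```python
import numpy as np

def instantaneous_phase(signal):
    """Phase of the analytic signal (FFT-based Hilbert transform)."""
    x = np.asarray(signal, dtype=float)
    n = x.size
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1:n // 2] = 2.0
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.angle(np.fft.ifft(np.fft.fft(x) * gain))

def entropy(variables, bins):
    """Plug-in joint entropy (nats) of binned phase variables."""
    sample = np.column_stack(variables)
    counts, _ = np.histogramdd(
        sample, bins=bins, range=[(-np.pi, np.pi)] * sample.shape[1]
    )
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log(p)))

def phase_transfer_entropy(x, y, lag=5, bins=8):
    """PTE from x to y: information x's past phase adds about y's present."""
    px, py = instantaneous_phase(x), instantaneous_phase(y)
    y_now, y_past, x_past = py[lag:], py[:-lag], px[:-lag]
    pte = (entropy([y_now, y_past], bins) + entropy([y_past, x_past], bins)
           - entropy([y_past], bins) - entropy([y_now, y_past, x_past], bins))
    return max(pte, 0.0)  # guard against tiny negative rounding errors

def directed_pte(x, y, lag=5, bins=8):
    """Normalized PTE in [-0.5, 0.5]; positive values mean net x -> y flow."""
    xy = phase_transfer_entropy(x, y, lag, bins)
    yx = phase_transfer_entropy(y, x, lag, bins)
    total = xy + yx
    return 0.0 if total == 0 else xy / total - 0.5

# Synthetic example: y is a noisy copy of x delayed by `lag` samples.
rng = np.random.default_rng(0)
lag = 5
phase = np.cumsum(2 * np.pi * 10 / 500 + 0.2 * rng.standard_normal(4000 + lag))
source = np.cos(phase)
x = source[lag:]
y = source[:-lag] + 0.05 * rng.standard_normal(4000)
d_xy = directed_pte(x, y, lag=lag)
d_yx = directed_pte(y, x, lag=lag)
```

By construction the normalized measure is antisymmetric, so the value for B → A is the negative of the value for A → B and a single signed number summarizes the net direction of flow for each ROI pair.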

SVM classification: predicting hearing loss from brain connectivity

We used parameter-optimized support vector machine (SVM) classifiers to determine if group membership (i.e., NH vs. HI hearing status) could be determined solely from functional brain connectivity measures (cf. Bidelman et al. 2017). SVM is a popular classifier used to categorize non-linearly separable data. We extracted global measures that exhibited significant group differences and used them as a feature vector to segregate groups. This resulted in a variable space that included four global measures (diameter, eccentricity, characteristic path length, and radius; see Fig. 2) as well as nodal eccentricity that defined each ROI (Fig. 4). These variables were then fed to an SVM classifier as an input feature vector. Separate SVM classifiers were trained and tested using different sets of connectivity features including global, LH nodal, RH nodal, and combinations of these features (global + nodal features).

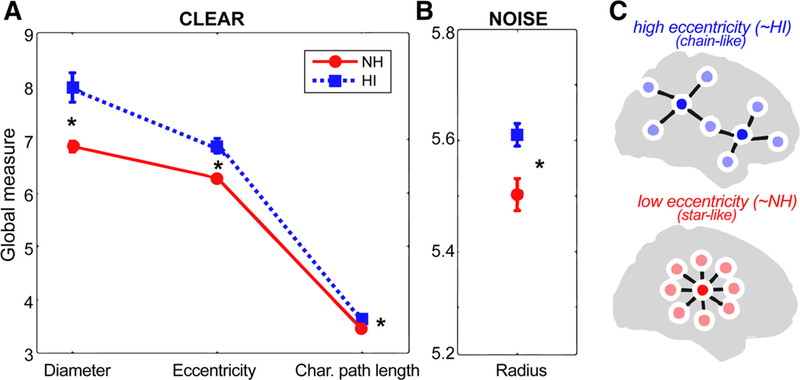

Fig. 2.

Group comparison of mean global brain connectivity measures during clear and noise-degraded speech processing. a Graph measures of diameter, eccentricity, and characteristic path length were larger in HI compared to NH listeners when processing clear speech. b For noise-degraded speech, only radius measures of the network differed between groups. c Schematic of two functional brain networks varying in eccentricity (see Fig. 3 for raw data). High eccentricity networks (like that of HI listeners) have more chain-like global configuration reflecting less integration and more long-range neural signaling; low eccentricity networks (like that of NH listeners) have configurations that are more integrated and “star-like.” After He et al. (2018). See text for definitions of graph metrics. *p < 0.05, error bars = ± s.e.m

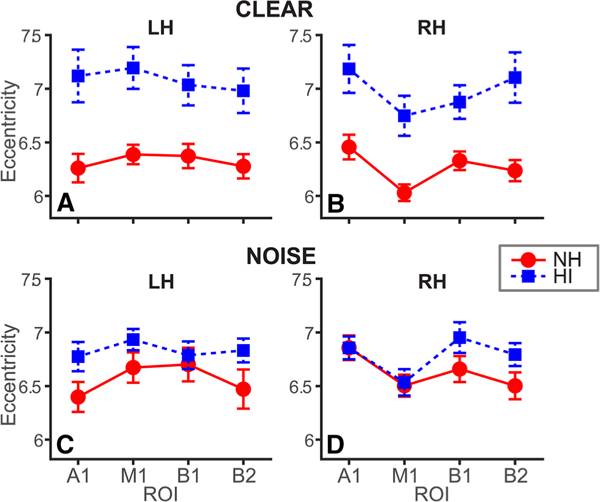

Fig. 4.

Age-related hearing loss is associated with more extended pathways of neural communication, particularly in the left hemisphere. Average eccentricity within specific nodes of the auditory-linguistic-motor loop in left (LH) and right (RH) hemispheres. a, b Clear speech. c, d Noise-degraded speech. While HI listeners show increased network eccentricity, group differences are largest in LH. A1, primary auditory cortex; M1, primary motor cortex; B1-B2, Broca’s area (pars opercularis and pars triangularis). Error bars = ± s.e.m

Following previous studies (Park et al. 2011; Zhiwei and Minfen 2007), the data were split into a training and test set (80% and 20%, respectively). The choice of SVM kernel and tunable parameters (ɣ and C) affect classifier performance. Thus, during model training, we carried out a grid search to identify optimized parameters for the classifier that maximally distinguished groups in the test data. This resulted in the following parameter set for our data: C = 100, ɣ = 0.003, and kernel = “rbf.” Applying these parameters, SVM was then used to derive the support vectors from the training dataset based on learning the features (e.g., graph measures) and class labels (e.g., NH vs. HI) from the data. Once the model was trained, the resulting vectors were used to predict the unseen test data. Classifier performance was evaluated using fivefold cross-validation and was quantified using standard formulae for binary classification (accuracy, precision, and recall; Saito and Rehmsmeier 2015). Several SVMs were constructed using different combinations of global connectivity features, LH nodal features, RH nodal features and the combined nodal and global features. This allowed us to compare how full-brain versus specific hemispheric connectivity might differentially predict the presence of hearing impairment.
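
The general workflow described above (an 80/20 split, a grid search over kernel, C, and ɣ, and evaluation by accuracy, precision, and recall) can be sketched with scikit-learn. The feature matrix here is synthetic, the grid values are illustrative, and the sketch tunes hyperparameters by fivefold cross-validation within the training split, which is one common arrangement and may differ from the exact procedure used in this study.

```python
import numpy as np
from sklearn.metrics import accuracy_score, precision_score, recall_score
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for connectivity features: 13 NH (label 0) and
# 19 HI (label 1) listeners, 8 hypothetical graph measures each.
rng = np.random.default_rng(0)
n_nh, n_hi, n_features = 13, 19, 8
X = np.vstack([
    rng.normal(0.0, 1.0, (n_nh, n_features)),
    rng.normal(1.0, 1.0, (n_hi, n_features)),
])
y = np.array([0] * n_nh + [1] * n_hi)

# 80% training / 20% test, preserving the group proportions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Illustrative grid over the kernel and the tunable parameters C and gamma.
param_grid = {
    "kernel": ["linear", "rbf"],
    "C": [0.1, 1, 10, 100],
    "gamma": [0.001, 0.003, 0.01, 0.1],
}
search = GridSearchCV(
    SVC(),
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X_train, y_train)

# Evaluate the tuned classifier on the held-out test set.
y_pred = search.predict(X_test)
metrics = {
    "accuracy": accuracy_score(y_test, y_pred),
    "precision": precision_score(y_test, y_pred, zero_division=0),
    "recall": recall_score(y_test, y_pred, zero_division=0),
}
best_params = search.best_params_
```

Keeping the test set out of the parameter search gives a performance estimate that is not biased by the tuning itself, which matters with samples as small as the one simulated here.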

Results

Behavioral data

Audiograms and behavioral responses (%, RTs) for the target speech detection task are shown per group in Fig. 1. Full analysis of these data is reported in Bidelman et al. (2019). Briefly, an ANOVA revealed a main effect of SNR on /ta/ detection accuracy, which was lower for noise-degraded compared to clear speech [F1,30 = 5.66, p = 0.024; Fig. 1b]. However, groups differed neither in their accuracy [F1,30 = 0.01, p = 0.94] nor speed [F1,30 = 0.47, p = 0.49; Fig. 1c] of speech identification.

Functional connectivity

We calculated various global and nodal graph measures from each listener’s full-brain network. Global graph features that survived group-level permutation statistics are illustrated in Fig. 2. For clear speech, only three global measures reliably distinguished the two groups: diameter, eccentricity, and characteristic path length were all larger for HI relative to NH listeners (Fig. 2a). For noise-degraded speech, only the radius (i.e., minimum eccentricity) of the connectivity network showed group differences (Fig. 2b), which was again larger for HI listeners. Characteristic path length is defined as the average shortest path length in the network. The eccentricity of a graph node is interpreted as the maximal shortest path length between a node and any other node and is related to the degree of integration in a network (van Lutterveld et al. 2017; He et al. 2018). Larger eccentricity values reflect less integration and thus more long-range neural signaling. Networks with high eccentricity have more chain-like configuration reflecting less integration and more long-range neural signaling (Fig. 2c). Low eccentricity networks have more star-like configurations (He et al. 2018). Relatedly, radius and diameter are the minimum and maximum eccentricity, respectively.

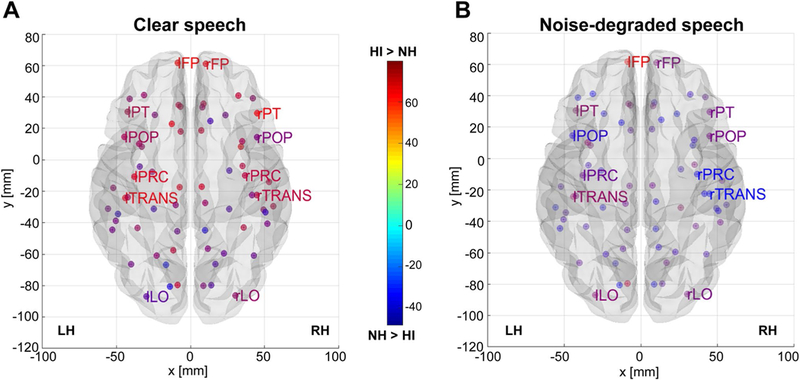

Notable group differences were found in graph eccentricity (Fig. 3). While larger eccentricity was observed in HI listeners across a majority of nodes, group differences were most prominent in frontal and temporal areas. Higher values of these network properties suggest that HI listeners have more extended communication pathways and less efficient information exchange with the full-brain connectome than NH listeners.

Fig. 3.

Group contrast of full-brain nodal eccentricity as a function of speech SNR. a Clear speech. b Noise-degraded speech. Hotter colors denote nodes of the DK atlas (Desikan et al. 2006) where HI listeners showed larger interconnectivity (eccentricity) between network nodes (i.e., HI > NH); cooler colors, NH > HI. Note the stronger eccentricity in the HI group in frontal and temporal sites. Functional data are projected onto the BRAPH glass brain template (Mijalkov et al. 2017). For clarity, only select DK ROIs are labeled. FP, frontal pole; LO, lateral occipital; POP, pars opercularis (i.e., “B1”); PT, pars triangularis (i.e., “B2”); PC, precentral gyrus (i.e., “M1”); TRANS, transverse temporal gyrus (i.e., “A1”)

Nodal eccentricity measured in specific auditory (A1), motor (M1), and inferior frontal (Broca’s, B1/B2) ROIs of the left and right hemispheres is shown in Fig. 4. These regions were selected given their well-known role in degraded speech processing and cognitive aging (Alain et al. 2018). A four-way ANOVA (group × SNR × hemisphere × ROI) conducted on nodal eccentricity revealed a main effect of group [F1,30 = 11.77, p = 0.002] as well as group × SNR [F1,450 = 37.56, p < 0.0001] and hemisphere × ROI [F3,450 = 7.60, p < 0.0001] interactions. To understand these interactions, we conducted separate analyses by hemisphere and group. In both LH and RH, eccentricity was modulated by both group and SNR [group × SNR, LH: F1,210 = 15.80, p < 0.0001; RH: F1,210 = 24.62, p < 0.0001]. Tukey-Kramer corrected multiple comparisons revealed left/right hemispheric differences in nodal eccentricity among the NH group for A1 and M1. In contrast, only A1 showed hemispheric differences in the HI group. While all LH nodes showed larger eccentricity in the HI group across the board (Fig. 4a), these group differences were smaller in the RH and for noise-degraded speech (Fig. 4c, d). Contrasts by group showed that in HI listeners, clean speech was associated with larger eccentricity than noisy speech but only in A1. In contrast, in NH listeners, noisy speech produced greater eccentricity across all ROIs. Critically, eccentricity did not differ between groups in a control region seeded in lateral occipital (visual) cortex [clean: t30 = − 2.03, p = 0.051; noise: t30 = − 1.17, p = 0.25]. This provides converging evidence that group differences in neural connectivity were restricted to the auditory-linguistic networks for speech. Paralleling global connectivity results, these findings imply less efficient neural signaling among the auditory-linguistic-motor loop in individuals with mild age-related hearing loss.
Moreover, while it is tempting to suggest HI listeners’ noise-related reduction in A1 eccentricity reflects increased neural efficiency in noise, another interpretation is that noise forced a more integrated (“star-like”, Fig. 2c) network arrangement surrounding auditory cortex in these listeners, perhaps due to increased listening effort (Lopez et al. 2014). The star-like network architecture could reflect neural compensation, whereby HI listeners tap any source of information available. This type of undifferentiated strategy is analogous to connectivity observed in children, where neural networks are still in nascent stages of maturation (Gozzo et al. 2009). Still, some caution is warranted for this latter interpretation given this effect was only observed in A1 and HI listeners had larger eccentricity than NH across the board.

SVM classification of groups based on brain connectivity

Having established that properties of the brain’s functional connectome vary with hearing loss, we next aimed to predict (classify) group membership based on connectivity measures alone. Among the ROIs, nodal eccentricity showed the most widespread group differences (e.g., Figs. 3, 4). Separate SVM classifiers were trained and tested using different sets of connectivity features including global, LH nodal, RH nodal, and combinations of these features (global + nodal features). SVM classification performance is shown in Table 1.

Table 1.

SVM classifier performance (%) distinguishing hearing status (NH vs. HI) using brain connectivity measures

| Speech stimulus | Measure | Global features | Nodal and global features | Nodal features of LH | Nodal features of RH |
|---|---|---|---|---|---|
| Clear | Accuracy | 71.4 | 85.7 | 85.7 | 71.4 |
| | AUC | 75.0 | 87.5 | 87.5 | 70.8 |
| | F1 score | 70.0 | 86.0 | 86.0 | 71.0 |
| Noise | Accuracy | 57.1 | 57.1 | 85.7 | 57.1 |
| | AUC | 50.0 | 50.0 | 83.3 | 50.0 |
| | F1 score | 42.0 | 42.0 | 85.0 | 42.0 |

Classification is based on nodal eccentricity measured within ROIs of the auditory-linguistic-motor loop. AUC area under the receiver operating characteristic (ROC) curve. F1 score = 2(precision × recall)/(precision + recall). Values are averaged between groups. Chance level is 50%

LH left hemisphere, RH right hemisphere

For clear speech, the classifier obtained maximum accuracy (86%) using the LH’s nodal measures as well as the combined feature set. Classification was less successful using RH measures (71%). Similarly, classification was generally poorer using connectivity data in the noise condition, regardless of which feature set was submitted to the classifier. Noise-related changes in classifier performance were not observed using LH nodal features, indicating LH was robust in identifying groups regardless of speech SNR. These findings suggest that while changes in neural signaling occur in both hemispheres, LH connectivity dominates the differentiation of NH and HI groups based on patterns of brain connectivity.
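To make the performance measures in Table 1 concrete, the sketch below computes accuracy and a class-averaged F1 score from predicted and true group labels. It is an illustration only: the labels are hypothetical (not the study's data), the function names are invented for this example, and it assumes that "averaged between groups" means an unweighted (macro) average of the per-class F1 scores.

```python
def per_class_scores(y_true, y_pred, positive):
    """Precision, recall, and F1 when `positive` is treated as the target class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    # F1 = 2(precision x recall)/(precision + recall), as in the Table 1 note
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def summarize(y_true, y_pred, classes=("NH", "HI")):
    """Overall accuracy and the unweighted mean of the per-class F1 scores."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    f1_scores = [per_class_scores(y_true, y_pred, c)[2] for c in classes]
    return accuracy, sum(f1_scores) / len(f1_scores)


# Hypothetical held-out labels and classifier outputs (for illustration only).
y_true = ["NH", "NH", "NH", "NH", "HI", "HI", "HI", "HI"]
y_pred = ["NH", "NH", "NH", "NH", "HI", "HI", "NH", "NH"]

accuracy, macro_f1 = summarize(y_true, y_pred)
print(f"accuracy = {accuracy:.3f}, macro F1 = {macro_f1:.3f}")
```

In this example the classifier is biased toward the NH label, so accuracy (0.75) and the class-averaged F1 score (about 0.73) diverge, which is why both are worth reporting alongside AUC.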

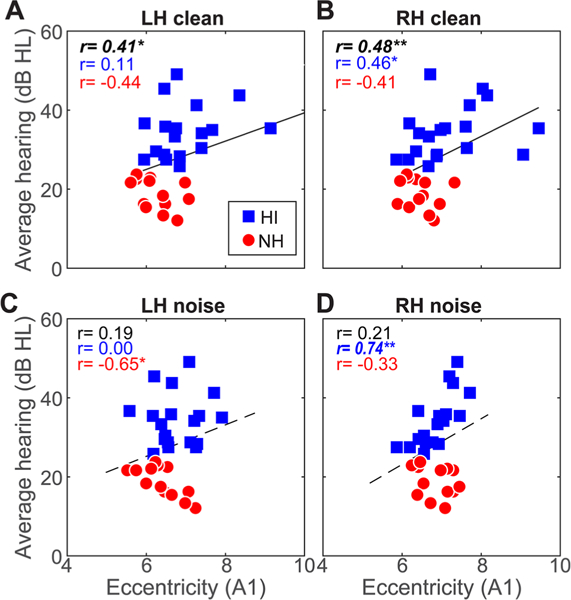

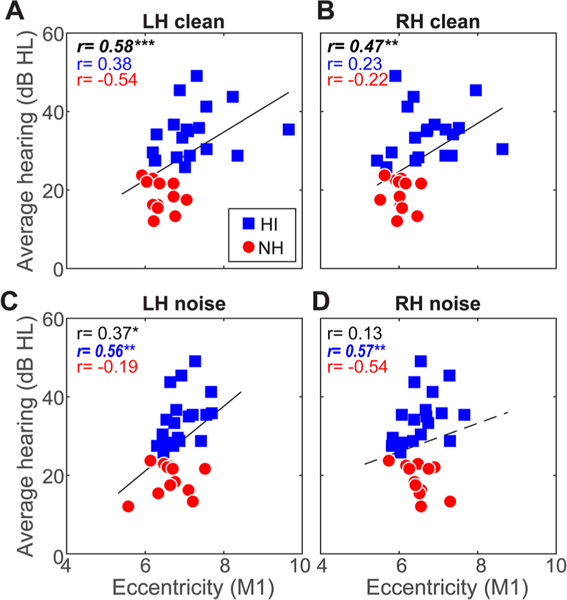

Relations between functional connectivity and hearing loss

To assess whether brain connectivity was predicted by the severity of listeners’ hearing loss, we conducted Spearman correlations between behavioral audiometric thresholds (average 250–8000 Hz) and ROI-specific connectivity. Correlations are shown for the entire sample as well as per group in Figs. 5 and 6. Overall across our sample, we found strong associations between bilateral A1 eccentricity evoked by clear speech and behavioral thresholds (Fig. 5a, b; r = 0.41–0.48, ps < 0.02), whereby larger eccentricity was linked with poorer hearing. As might be expected from the lack of age difference among groups, this effect was independent of age, which did not correlate with A1 eccentricity (p > 0.071). The correlation for noise responses across the sample was not significant (Fig. 5c, d). Bilateral M1 eccentricity was also associated with thresholds for clear speech (Fig. 6a, b; r = 0.47–0.58, ps < 0.01). Interestingly, for noise-degraded speech, we found links between M1 responses in the LH and hearing thresholds (Fig. 6c; r = 0.37, p = 0.0379), which again was not predicted by age (r = 0.31, p = 0.08). Again, more severe hearing impairment predicted increased eccentricity, implying increased inter-regional communication (see Fig. 2c). Similarly, regional network eccentricity in Broca’s area and its RH homologue was also associated with hearing status (r = 0.42–0.48, ps < 0.0161; FDR corrected) but not age (ps > 0.07) (data not shown), implying that changes in inferior prefrontal connectivity were associated with age-related hearing loss. No other correlations were significant. Collectively, these results provide a link between graph-theoretic measures of dorsal-ventral connectivity and the severity of listeners’ hearing impairment.
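The two statistical steps in this analysis, rank correlation followed by FDR correction, can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the threshold, eccentricity, and p-values are hypothetical, the function names are invented, and the correction is assumed to be the Benjamini-Hochberg procedure (Benjamini and Hochberg 1995).

```python
def average_ranks(values):
    """Rank values from 1..n, assigning tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def benjamini_hochberg(pvals, q=0.05):
    """Flag which hypotheses are rejected while controlling the FDR at level q."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if pvals[idx] <= rank * q / m:
            cutoff = rank  # largest rank whose p-value passes its threshold
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= cutoff
    return rejected


# Hypothetical hearing thresholds (dB HL) and nodal eccentricity values.
thresholds = [15, 20, 25, 30, 40]
eccentricity = [2.0, 2.1, 2.5, 2.4, 3.0]
rho = spearman(thresholds, eccentricity)

# Hypothetical uncorrected p-values from four separate correlations.
survives = benjamini_hochberg([0.01, 0.04, 0.03, 0.20], q=0.05)
print(f"rho = {rho:.2f}; survives FDR: {survives}")
```

With these toy inputs the rank correlation is 0.9, and only the smallest p-value survives correction even though three of the four fall below 0.05 uncorrected.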

Fig. 5.

Connectivity in/outflow of auditory cortex is associated with hearing acuity. a, b Correlations between A1 connectivity during clear speech processing and hearing thresholds. Both LH and RH connectivity covaries with hearing acuity; larger eccentricity is linked with more severe hearing loss. c, d A1 eccentricity in the noise condition is not related to audiometric thresholds when considering all listeners. Solid lines, significant correlations; dotted lines, n.s. Bold italics = correlations surviving FDR correction across SNR and hemisphere. *p < 0.05, **p < 0.01, ***p < 0.001

Fig. 6.

Connectivity in/outflow of motor cortex is associated with hearing acuity for both clear and noise-degraded speech. Hearing loss is predicted by M1 connectivity in both LH and RH for clear and noise-degraded speech. Otherwise as in Fig. 5

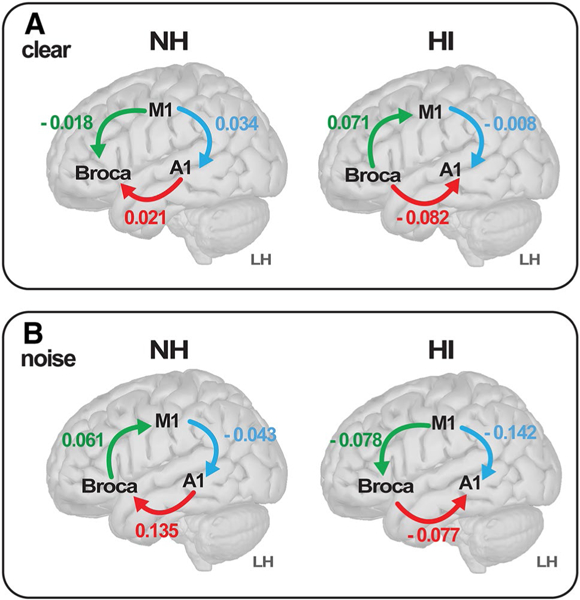

Directed connectivity within the auditory-linguistic-motor loop

Our prior analyses focused on undirected measures of neural signaling, which cannot reveal directional flow (causality) of neural signaling within the auditory-linguistic-motor loop that might be important for understanding mechanistic changes from hearing loss. Consequently, we measured directed connectivity within the auditory-linguistic-motor loop using PTE (Lobier et al. 2014) (Fig. 7). This analysis was limited to LH nodes since those were the most distinct regions in segregating groups via SVM classification (e.g., Table 1). Within the A1↔Broca’s (B1) connection, a two-way ANOVA (group × SNR) revealed a main effect of group [F1,30 = 7.50, p = 0.0103], with stronger connectivity in NH listeners across the board. The NH listeners showed “bottom-up” signaling directed from A1→Broca’s, whereas the HI group showed the reverse, i.e., “top-down” Broca’s→A1 signaling. Connectivity also was modulated by both SNR and group within the Broca’s→M1 pathway [SNR × group interaction: F1,30 = 3.96, p = 0.05]. Tukey-Kramer adjusted contrasts revealed this was attributable to a noise-related change in neural signaling within the HI group; the NH group did not show a noise decrement. This suggests that in HI listeners, with added noise, communication between linguistic and motor areas reverses from Broca’s driving M1 to M1 driving Broca’s activation.
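The logic of a signed, directed connectivity measure can be sketched with transfer entropy on discrete symbols, the quantity that PTE evaluates on binned instantaneous phase. The example below is a simplified illustration and not the analysis pipeline of the study: the symbol sequences stand in for binned phases, the function names are hypothetical, and the normalization to ± 0.5 shown in `directed_index` is an assumed form chosen only so that the sign indicates the dominant direction.

```python
import math
import random
from collections import Counter


def entropy(samples):
    """Plug-in Shannon entropy (bits) of a sequence of hashable symbols."""
    counts = Counter(samples)
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def transfer_entropy(x, y, lag=1):
    """Transfer entropy from x to y at the given lag.

    TE = H(Y_t, Y_past) + H(Y_past, X_past) - H(Y_past) - H(Y_t, Y_past, X_past)
    """
    y_now, y_past, x_past = y[lag:], y[:-lag], x[:-lag]
    return (
        entropy(list(zip(y_now, y_past)))
        + entropy(list(zip(y_past, x_past)))
        - entropy(y_past)
        - entropy(list(zip(y_now, y_past, x_past)))
    )


def directed_index(x, y, lag=1):
    """Signed index in [-0.5, 0.5]; positive means x -> y dominates.

    Assumed normalization for illustration, not necessarily the study's.
    """
    te_xy = transfer_entropy(x, y, lag)
    te_yx = transfer_entropy(y, x, lag)
    return te_xy / (te_xy + te_yx) - 0.5


# Hypothetical signals: `follower` copies `driver` one sample later,
# so information flows from driver to follower.
rng = random.Random(0)
driver = [rng.randrange(4) for _ in range(2000)]  # 4 symbols ~ 4 phase bins
follower = [0] + driver[:-1]

forward = directed_index(driver, follower)
backward = directed_index(follower, driver)
print(f"driver -> follower: {forward:+.3f}, follower -> driver: {backward:+.3f}")
```

Swapping the two signals flips the sign of the index, which mirrors how a reversal from A1→Broca’s to Broca’s→A1 would appear as a change of sign for the same connection.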

Fig. 7.

Directional flow of neural signaling within the dorsal–ventral stream reverses with age-related hearing loss. a Clear speech. b Noise-degraded speech. Values represent the strength of connectivity within LH computed via phase transfer entropy (Lobier et al. 2014), whereas the direction (causality) of communication is determined by sign. Arrows denote flow from region A → B. The NH listeners show signaling directed from A1 → Broca’s (pars opercularis), whereas the HI group shows the reverse (Broca’s → A1), suggesting bottom-up versus top-down configurations within the same pathway dependent on hearing status. During noise-degraded speech, communication between linguistic and motor areas reverses from Broca’s driving M1 to M1 driving Broca’s, but only in HI listeners (cf. green connection, A vs. B)

As a control, we assessed PTE between lateral occipital (visual) cortex and M1, since this pathway should not be engaged in our auditory-based task. As expected, left hemisphere LO→M1 connectivity did not differ between groups for either SNR [clean: t30 = − 0.55, p = 0.59; noise: t30 = 1.32, p = 0.195]. This confirms group differences in directed connectivity were largely restricted to the auditory-linguistic-motor loop, pathways necessary for our SIN listening task.

Discussion

By measuring functional connectivity via EEG during difficult SIN perception tasks, we demonstrate that age-related hearing loss is associated with changes in neural signaling at both the full-brain and regional (local) levels. Our results imply that HI listeners’ communication pathways supporting cocktail party speech perception are less efficient in routing information among auditory-linguistic, frontal, and motor regions compared to normal-hearing older adults. Among the dorsal-ventral pathways for speech, we found a surprising reversal in directed functional connectivity dependent on hearing status. Within the very same pathways, we observed a reorganization in the directional flow of neural signaling that changes states from a “bottom-up” to “top-down” configuration with hearing loss.

At the micro- and mesoscopic levels, several mechanisms might contribute to senescent changes in SIN perception including reduced neural inhibition and increased “sluggishness” of the system (Caspary et al. 2008; Anderson et al. 2010), neural deafferentation (Kujawa and Liberman 2006), cortical thinning (Salat et al. 2004; Bilodeau-Mercure et al. 2015), and increased “neural noise” (Salthouse and Lichty 1985; Bidelman et al. 2014). From a macroscopic perspective, tinnitus-inducing hearing loss has been associated with a reduction in functional connectivity between brainstem inferior colliculus and cortical auditory relays, suggesting a failure of thalamic gating between subcortical and cortical auditory processing (Boyen et al. 2014). Similarly, in the same listeners as reported here, we have shown that brainstem-cortical afferent signaling directed from the inferior colliculus to primary auditory cortex is modulated by mild hearing loss and predicts older adults’ SIN perception (Bidelman et al. 2019). Aging has also been associated with decreased entropy (more commonality) between brain states that leads to less perceptual-cognitive flexibility (Bidelman et al. 2014; Garrett et al. 2013) as well as global shifts in brain dynamics from a more posterior to anterior functioning system (Zhang et al. 2017).

Global and regional changes in functional connectivity with age-related hearing loss

We extend previous neuroimaging studies by demonstrating pervasive group differences in full-brain functional connectivity that covary with hearing loss. Graph-theoretic measures of undirected global connectivity revealed that HI listeners had larger network eccentricity, diameter, radius, and characteristic path length than NH listeners. While brain-wide, these differences were largest in frontotemporal areas (Fig. 3). Given the definitions of these graph measures (van Lutterveld et al. 2017; Mijalkov et al. 2017; He et al. 2018), our results imply that HI listeners have longer communication pathways and less efficient or integrated information exchange than NH listeners (see Fig. 2c). In other words, HI brains have increased in/outflow of functional connectivity characterized by more inter-regional signaling brainwide, but particularly in LH. Although our speech detection task was simplistic, we note that neural effects were observed absent of any group differences in perception (Fig. 1). Thus, our global connectivity results suggest that even mild degrees of age-related hearing loss produce broad neural reorganization at the full-brain level, which may occur prior to the emergence of perceptual SIN deficits.

We found similar connectivity effects when considering specific dorsal-ventral hubs of the auditory-linguistic-motor loop. Auditory (A1), motor (M1), and inferior frontal regions (Broca’s; B1/B2) showed larger nodal eccentricity in HI compared to NH listeners, particularly in LH (Fig. 4a). Group differences in connectivity were smaller in these same regions in RH and for noise-degraded speech (Fig. 4c, d). Both behavioral (Fig. 1a) and neural (e.g., ERPs; Bidelman et al. 2019) responses to speech are weaker in the noise condition, which could result in poorer estimates of connectivity and more muted group effects for noise-degraded speech. Moreover, neural SVM classifiers showed functional connectivity among left A1-M1-Broca’s nodes was superior at distinguishing NH and HI listeners (Table 1). The leftward bias in the predictive value of our connectivity data is perhaps expected given the well-known left lateralization of speech processing, particularly for SIN perception (Bidelman and Howell 2016; Alain et al. 2018). Still, results suggest that in addition to global changes in brain connectomics (Fig. 3), inter-regional signaling within the dorsal-ventral speech pathways differs in older adults with hearing loss. Previous studies have shown that hemispheric asymmetry is correlated with SIN perception (Javad et al. 2014; Bidelman and Howell 2016; Thompson et al. 2016). Our connectivity data extend these findings by revealing that in addition to diminished (or over-exaggerated) neural encoding within individual brain regions (Bidelman et al. 2014, 2017; Presacco et al. 2016), hearing loss in older adults is associated with aberrant transmission of information within the auditory-linguistic pathways. This notion is further supported by our correlational analyses, which showed A1, M1, and IFG (Broca’s) connectivity was associated with hearing status, even when considering the HI group alone (Figs. 5, 6).
This indicates that the severity of hearing loss (not just presence or absence) is predicted by functional connectivity measures. Given that regional eccentricity was larger in HI listeners, our findings suggest that reduced hearing acuity is associated with increased in/outflow of information within the auditory-linguistic-motor loop. This may reflect a form of neural compensation and over-recruitment of additional resources to achieve successful speech perception (Wong et al. 2009; Du et al. 2016).

Our data converge with studies demonstrating aging is associated with increased activation of frontal and motor cortex that helps compensate for impaired SIN perception in older adults (Du et al. 2016; Bilodeau-Mercure et al. 2015). Using fMRI, Du et al. (2016) showed that older listeners have greater specificity of phoneme representations in frontal articulatory regions compared to auditory brain areas. Age-dependent intelligibility effects were also observed in sensorimotor and left dorsal anterior insula by Bilodeau-Mercure et al. (2015). Similarly, we find that in older HI listeners, frontal-motor signaling is routed from motor to linguistic brain areas (M1→Broca’s; Fig. 7b). Relatedly, the only link between M1 connectivity and hearing for noise-degraded speech was in the HI listeners’ LH (Fig. 6c). Assuming motor representations are more accurate depictions of the speech signal in older adults (Du et al. 2016), increased M1→Broca’s connectivity in HI listeners may reflect the need to transmit speech motor representations to linguistic brain areas to better decode impoverished speech representations in adverse listening situations.

Age-related hearing loss reverses neural signaling within the dorsal-ventral pathway

Directional connectivity analysis revealed unique patterns of neural communication between groups. Among the dorsal-ventral pathways, we found a reversal in directed neural signaling that depended on both the clarity (SNR) of the speech signal and hearing status of the listener (Fig. 7). We have previously shown that functional connectivity within the A1↔IFG pathway predicts SIN perception in young, normal-hearing adults (Bidelman et al. 2018). However, our previous study examined A1↔IFG connectivity only under passive listening and in young adults, so a direct comparison to the current findings can only be loosely made. In the present study, under active SIN perception in older listeners, we found this frontotemporal connection showed stronger connectivity in NH listeners across the board. However, whereas NH listeners showed “bottom-up” signaling directed from A1→Broca’s, HI listeners showed the reverse pattern (i.e., “top-down” Broca’s→A1 signaling). Similar effects were observed between IFG and motor cortex. With added noise, signaling between linguistic and motor areas reversed from Broca’s driving M1 to M1 driving Broca’s, but only in HI listeners (cf. Du et al. 2016). This is not to imply that neural signaling is only unidirectional within these pathways. Rather, our results imply that within the very same neural circuits, the predominant flow of signaling during SIN perception changes states from a “bottom-up” to “top-down” system with hearing loss. This finding is also reminiscent of the apparent posterior-anterior shift in resting-state connectivity that occurs with auditory aging (Zhang et al. 2017). New experiments are currently underway in our laboratory to directly compare how directed connectivity between A1↔IFG during SIN processing is modulated by age and attention.

Our data align with several models of cognitive aging, which suggest a rebalancing of bottom-up to top-down processing depending on age-related deterioration of sensory cues and the need to maintain goal-oriented actions (West 1996; Zhuravleva et al. 2014). Decreased sensory input (i.e., hearing loss) would tend to deliver impoverished speech representations to higher-order brain areas upon which to make behavioral selections (Grady et al. 1994; Tay et al. 2006). This may necessitate additional top-down control to compensate for poorer speech input, thereby changing the relative weighting of auditory afferent versus efferent signaling (e.g., Bidelman et al. 2019). This notion further converges with the common cause hypothesis, which states that neither bottom-up nor top-down processing is disproportionately affected by aging, per se (Christensen et al. 2001). Rather, age-related deterioration occurs across all levels of the nervous system, including neurophysiological function regulating sensory-perceptual, motor, and cognitive processing. Indeed, the spared speech perception performance we find in HI listeners despite robust changes in their functional brain connectivity may reflect a form of widespread neural compensation that emerges with age-related decline in hearing.

More broadly, the neural dynamics we observe within the auditory-linguistic-motor loop could reflect an ongoing (re)balance between feedforward and feedback modes of this circuit that maintain a quasi-homeostasis to enable robust speech perception. In related studies on working memory, we showed that the directional flow of information between sensory and frontal brain areas reverses when encoding versus maintaining items in the mind (encoding: sensory→frontal; maintenance: frontal→sensory), revealing feedforward and feedback modes in the same underlying brain network dependent on task demands (Bashivan et al. 2014, 2017). The current results offer parallels within the auditory-linguistic-motor network during speech perception by suggesting a reversal of neural signaling within this circuit depending on the clarity (SNR) of the speech signal and characteristic of the listener (i.e., degree of hearing loss).

Our results are also consistent with the decline compensation hypothesis (Wong et al. 2009) or related compensation-related utilization of neural circuits hypothesis (Reuter-Lorenz and Cappell 2008). Both frameworks posit that with low levels of task complexity, brain regions are over-recruited in older adults, reflecting neural processing inefficiencies farther downstream. Under this interpretation, the minimal differences we find in HI listeners’ behavioral performance (Fig. 1) could result from the overactivation of speech-relevant networks to compensate for the pathophysiological declines related to diminished hearing and maintain a computational output similar to NH individuals. Previous studies have indeed suggested higher functional connectivity in older adults with reduced cognitive function (Lopez et al. 2014). Under this interpretation, older adults with lower cognitive reserve, perhaps catalyzed by reduced hearing acuity (Lin et al. 2011, 2013), may require greater “effort” to achieve the same level of performance, thereby necessitating increased brainwide connectivity as observed here (Lopez et al. 2014). However, an argument against a general listening effort explanation is the fact that the speed of perceptual decisions (RT) did not vary across groups (Fig. 1c). Similarly, dedifferentiation frameworks (Li and Lindenberger 1999) suggest that age-related impairments stem from reductions in the fidelity of neural representations. In ongoing studies, we have found that age-related hearing loss (of similar degree as in the present study) reduces the fidelity of neural speech representations at both subcortical and cortical levels (Bidelman et al. 2014, 2017, 2019) (see also Presacco et al. 2016).

In sum, our findings suggest strong links between age-related changes in hearing and how information is (or is not) broadcast between sensory-perceptual, linguistic, and cognitive systems across the brain. Future studies might explore the extent and robustness of full-brain connectomics as potential biomarkers of early stages of (subclinical) hearing loss.

Acknowledgments

Funding This work was funded by grants from the Canadian Institutes of Health Research (MOP 106619) and the Natural Sciences and Engineering Research Council of Canada (NSERC, 194536) awarded to C.A., The Hearing Research Foundation awarded to S.R.A., and the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under award number NIH/NIDCD R01DC016267 (G.M.B.).

Ethical approval Research involving human subjects for this study was approved by the Baycrest Hospital Human Subject Review Committee and participants gave written informed consent in accordance with protocol REB# 06–31.

Footnotes

An array of 32 ch was used since our dataset included recording of brainstem potentials (Bidelman et al. 2019), which require a very high sample rate that taxes available throughput of our EEG system. Estimates indicate that the accuracy of LORETA for 32 ch is ~ 1.5 × less accurate than 64 ch (Michel et al. 2004). Still, inverse methods were applied uniformly across all listeners/groups so while overall localization precision might be underestimated, this would not account for group differences.

BRAPH does not implement directed measures of connectivity (Mijalkov et al. 2017) so our full-brain (global) analysis used undirected measures. As such, our full-brain analysis offers a somewhat qualitative view of hearing-related changes in brain connectivity. Consequently, we used directed connectivity metrics (PTE) for specific hypothesis testing and to evaluate hearing-related changes in specific connections within the auditory-linguistic-motor loop (e.g., Fig. 7). Together, our undirected and directed analyses at the full-brain and circuit level offer two complementary approaches that provide different, yet converging evidence for hearing-related changes in brain connectivity.

Our connectivity matrices were derived from source signals, which yield the most veridical estimate of functional brain connectivity (Bastos and Schoffelen 2016; Lai et al. 2018). Nevertheless, effects of field spread can never be fully abolished in EEG, even at the source level (Schoffelen and Gross 2009; Zhang et al. 2016) and correlated activity of adjacent sources reduces the accuracy of functional connectivity analysis (for an excellent review of this tradeoff, see Schoffelen and Gross 2009). However, proper interpretation of source connectivity results can be achieved by analyzing the relative changes in connectivity caused by experimental manipulations (Schoffelen and Gross 2009). Thus, because field spread effects are identical across our experimental conditions (i.e., groups; noise levels) they subtract out and are unlikely to account for group differences. Moreover, our characterizations of topographic connectivity patterns (Figs. 2, 3, 4, 5, 6) reflect the organizational properties of the connectome at the full-brain level and these measures show good reliability in the face of residual correlated activity (Hardmeier et al. 2014).

This differs from Bidelman et al. (2019), where PTE values were not normalized between ± 0.5.

Compliance with ethical standards

Publisher’s Note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Conflict of interest The authors declare they have no conflict of interest.

References

- Abdala C, Dhar S (2012) Maturation and aging of the human cochlea: a view through the DPOAE looking glass. J Assoc Res Otolaryngol 13(3):403–421 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alain C, Snyder JS (2008) Age-related differences in auditory evoked responses during rapid perceptual learning. Clin Neurophysiol 119(2):356–366 [DOI] [PubMed] [Google Scholar]

- Alain C, McDonald K, Van Roon P (2012) Effects of age and back-ground noise on processing a mistuned harmonic in an otherwise periodic complex sound. Hear Res 283:126–135 [DOI] [PubMed] [Google Scholar]

- Alain C, Roye A, Salloum C (2014) Effects of age-related hearing loss and background noise on neuromagnetic activity from auditory cortex. Front Syst Neurosci 8:8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alain C, Arsenault JS, Garami L, Bidelman GM, Snyder JS (2017) Neural correlates of speech segregation based on formant frequencies of adjacent vowels. Sci Rep 7(40790):1–11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alain C, Du Y, Bernstein LJ, Barten T, Banai K (2018) Listening under difficult conditions: an activation likelihood estimation meta-analysis. Hum Brain Mapp 39(7):2695–2709 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson S, Skoe E, Chandrasekaran B, Kraus N (2010) Neural timing is linked to speech perception in noise. J Neurosci 30(14):4922–4926 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson S, Parbery-Clark A, White-Schwoch T, Kraus N (2012) Aging affects neural precision of speech encoding. J Neurosci 32(41):14156–14164 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson S, White-Schwoch T, Choi HJ, Kraus N (2013a) Training changes processing of speech cues in older adults with hearing loss. Front Syst Neurosci 7(97):1–9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson S, White-Schwoch T, Parbery-Clark A, Kraus N (2013b) A dynamic auditory-cognitive system supports speech-in-noise perception in older adults. Hear Res 300C:18–32 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bashivan P, Bidelman GM, Yeasin M (2014) Modulation of brain connectivity by cognitive load in the working memory network. In: Proceedings on the IEEE symposium series on computational intelligence (IEEE SSCI), Orlando, FL, December 9–12 [Google Scholar]

- Bashivan P, Yeasin M, Bidelman GM (2017) Temporal progression in functional connectivity determines individual differences in working memory capacity. In: Proceedings of the international joint conference on neural networks (IJCNN 2017) (Anchorage, AK, May 14–19). pp 2943–2949 [Google Scholar]

- Bassett DS, Bullmore E (2006) Small-world brain networks. Neuro-scientist 12(6):512–523 [DOI] [PubMed] [Google Scholar]

- Bastos AM, Schoffelen J-M (2016) A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Front Syst Neurosci 9:175

- Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B (Methodol) 57(1):289–300

- Betlejewski S (2006) Age connected hearing disorders (presbyacusis) as a social problem. Otolaryngol Pol 60(6):883–886

- Bidelman GM, Dexter L (2015) Bilinguals at the “cocktail party”: dissociable neural activity in auditory-linguistic brain regions reveals neurobiological basis for nonnative listeners’ speech-in-noise recognition deficits. Brain Lang 143:32–41

- Bidelman GM, Howell M (2016) Functional changes in inter- and intra-hemispheric auditory cortical processing underlying degraded speech perception. Neuroimage 124:581–590

- Bidelman GM, Villafuerte JW, Moreno S, Alain C (2014) Age-related changes in the subcortical-cortical encoding and categorical perception of speech. Neurobiol Aging 35(11):2526–2540

- Bidelman GM, Lowther JE, Tak SH, Alain C (2017) Mild cognitive impairment is characterized by deficient hierarchical speech coding between auditory brainstem and cortex. J Neurosci 37(13):3610–3620

- Bidelman GM, Davis MK, Pridgen MH (2018) Brainstem-cortical functional connectivity for speech is differentially challenged by noise and reverberation. Hear Res 367:149–160

- Bidelman GM, Price CN, Shen D, Arnott S, Alain C (2019) Afferent-efferent connectivity between auditory brainstem and cortex accounts for poorer speech-in-noise comprehension in older adults. bioRxiv [preprint]: 10.1101/568840

- Bilodeau-Mercure M, Lortie CL, Sato M, Guitton MJ, Tremblay P (2015) The neurobiology of speech perception decline in aging. Brain Struct Funct 220(2):979–997

- Boyen K, de Kleine E, van Dijk P, Langers DRM (2014) Tinnitus-related dissociation between cortical and subcortical neural activity in humans with mild to moderate sensorineural hearing loss. Hear Res 312:48–59

- Brent GA, Smith DBD, Michalewski HJ, Thompson LW (1976) Differences in the evoked potential in young and old subjects during habituation and dishabituation procedures. Psychophysiology 14:96–97

- Caspary DM, Ling L, Turner JG, Hughes LF (2008) Inhibitory neurotransmission, plasticity and aging in the mammalian central auditory system. J Exp Biol Med 211:1781–1791

- Chang Y, Lee SH, Lee YJ, Hwang MJ, Bae SJ, Kim MN, Lee J, Woo S, Lee H, Kang DS (2004) Auditory neural pathway evaluation on sensorineural hearing loss using diffusion tensor imaging. NeuroReport 15(11):1699–1703

- Christensen H, Mackinnon AJ, Korten A, Jorm AF (2001) The “common cause hypothesis” of cognitive aging: evidence for not only a common factor but also specific associations of age with vision and grip strength in a cross-sectional analysis. Psychol Aging 16(4):588–599

- Cohen G (1979) Language comprehension in old age. Cognit Psychol 11(4):412–429

- Cruickshanks KJ, Wiley TL, Tweed TS, Klein BE, Klein R, Mares-Perlman JA, Nondahl DM (1998) Prevalence of hearing loss in older adults in Beaver Dam, Wisconsin: the epidemiology of hearing loss study. Am J Epidemiol 148(9):879–886

- de Haan W, Pijnenburg YAL, Strijers RLM, van der Made Y, van der Flier WM, Scheltens P, Stam CJ (2009) Functional neural network analysis in frontotemporal dementia and Alzheimer’s disease using EEG and graph theory. BMC Neurosci 10(1):101

- Desikan RS, Segonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, Albert MS, Killiany RJ (2006) An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31(3):968–980

- Du Y, Buchsbaum BR, Grady CL, Alain C (2014) Noise differentially impacts phoneme representations in the auditory and speech motor systems. Proc Natl Acad Sci USA 111(19):1–6

- Du Y, Buchsbaum BR, Grady CL, Alain C (2016) Increased activity in frontal motor cortex compensates impaired speech perception in older adults. Nat Commun 7(12241):1–12

- Dubno JR, Schaefer AB (1992) Comparison of frequency selectivity and consonant recognition among hearing-impaired and masked normal-hearing listeners. J Acoust Soc Am 91(4 Pt 1):2110–2121

- Friston KJ (2011) Functional and effective connectivity: a review. Brain Connect 1(1):13–36

- Fuchs M, Drenckhahn R, Wischmann H-A, Wagner M (1998) An improved boundary element method for realistic volume-conductor modeling. IEEE Trans Biomed Eng 45(8):980–997

- Fuchs M, Kastner J, Wagner M, Hawes S, Ebersole J (2002) A standardized boundary element method volume conductor model. Clin Neurophysiol 113(5):702–712

- Garrett DD, Samanez-Larkin GR, MacDonald SWS, Lindenberger U, McIntosh AR, Grady CL (2013) Moment-to-moment brain signal variability: a next frontier in human brain mapping? Neurosci Biobehav Rev 37:610–624

- Goodin DS, Squires KC, Henderson BH, Starr A (1978) Age-related variations in evoked potentials to auditory stimuli in normal human subjects. Electroencephalogr Clin Neurophysiol 44(4):447–458

- Gordon-Salant S, Fitzgibbons PJ (1993) Temporal factors and speech recognition performance in young and elderly listeners. J Speech Hear Res 36:1276–1285

- Gozzo Y, Vohr B, Lacadie C, Hampson M, Katz KH, Maller-Kesselman J, Schneider KC, Peterson BS, Rajeevan N, Makuch RW, Constable RT, Ment LR (2009) Alterations in neural connectivity in preterm children at school age. Neuroimage 48(2):458–463

- Grady CL, Maisog JM, Horwitz B, Ungerleider LG, Mentis MJ, Salerno JA, Pietrini P, Wagner E, Haxby JV (1994) Age-related changes in cortical blood flow activation during visual processing of faces and location. J Neurosci 14(3 Pt 2):1450–1462

- Gramfort A, Papadopoulo T, Olivi E, Clerc M (2010) OpenMEEG: opensource software for quasistatic bioelectromagnetics. Biomed Eng Online 9(1):45

- Hardmeier M, Hatz F, Bousleiman H, Schindler C, Stam CJ, Fuhr P (2014) Reproducibility of functional connectivity and graph measures based on the phase lag index (PLI) and weighted phase lag index (wPLI) derived from high resolution EEG. PLoS One 9(10):e108648

- Harris JM, Hirst JL, Mossinghoff MJ (2008) Combinatorics and graph theory, vol 2. Springer, New York

- He W, Sowman PF, Brock J, Etchell AC, Stam CJ, Hillebrand A (2018) Topological segregation of functional networks increases in developing brains. bioRxiv [preprint]:378562

- Humes LE, Busey TA, Craig J, Kewley-Port D (2013) Are age-related changes in cognitive function driven by age-related changes in sensory processing? Atten Percept Psychophys 75:508–524

- Hutka S, Alain C, Binns M, Bidelman GM (2013) Age-related differences in the sequential organization of speech sounds. J Acoust Soc Am 133(6):4177–4187

- Javad F, Warren JD, Micallef C, Thornton JS, Golay X, Yousry T, Mancini L (2014) Auditory tracts identified with combined fMRI and diffusion tractography. Neuroimage 84(Supplement C):562–574

- Kaiser M (2011) A tutorial in connectome analysis: topological and spatial features of brain networks. Neuroimage 57(3):892–907

- Khatun S, Morshed BI, Bidelman GM (2019) A single-channel EEG-based approach to detect mild cognitive impairment via speech-evoked brain responses. IEEE Trans Neural Syst Rehabilit Eng 27(5):1063–1070

- Killion MC, Niquette PA, Gudmundsen GI, Revit LJ, Banerjee S (2004) Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. J Acoust Soc Am 116(4 Pt 1):2395–2405

- Konkle DF, Beasley DS, Bess FH (1977) Intelligibility of time-altered speech in relation to chronological aging. J Speech Hear Res 20:108–115

- Kral A, Kronenberger WG, Pisoni DB, O’Donoghue GM (2016) Neurocognitive factors in sensory restoration of early deafness: a connectome model. Lancet Neurol 15(6):610–621

- Kujawa SG, Liberman MC (2006) Acceleration of age-related hearing loss by early noise exposure: evidence of a misspent youth. J Neurosci 26(7):2115–2123

- Lai M, Demuru M, Hillebrand A, Fraschini M (2018) A comparison between scalp- and source-reconstructed EEG networks. Sci Rep 8(1):12269

- Li S-C, Lindenberger U (1999) Cross-level unification: a computational exploration of the link between deterioration of neurotransmitter systems and dedifferentiation of cognitive abilities in old age. In: Nilsson LG, Markowitsch HJ (eds) Cognitive neuroscience of memory. Hogrefe & Huber, Kirkland, pp 103–146

- Lin Y, Wang J, Wu C, Wai Y, Yu J, Ng S (2008) Diffusion tensor imaging of the auditory pathway in sensorineural hearing loss: changes in radial diffusivity and diffusion anisotropy. J Magn Reson Imaging 28(3):598–603

- Lin FR, Metter EJ, O’Brien RJ, Resnick SM, Zonderman AB, Ferrucci L (2011) Hearing loss and incident dementia. Arch Neurol 68(2):214–220

- Lin FR, Yaffe K, Xia J, Xue QL, Harris TB, Purchase-Helzner E, Satterfield S, Ayonayon HN, Ferrucci L, Simonsick EM (2013) Hearing loss and cognitive decline in older adults. JAMA Intern Med 173(4):293–299

- Liu B, Feng Y, Yang M, Chen JY, Li J, Huang ZC, Zhang LL (2015) Functional connectivity in patients with sensorineural hearing loss using resting-state MRI. Am J Audiol 24(2):145–152

- Lobier M, Siebenhuhner F, Palva S, Palva JM (2014) Phase transfer entropy: a novel phase-based measure for directed connectivity in networks coupled by oscillatory interactions. Neuroimage 85(Pt 2):853–872

- Lopez ME, Aurtenetxe S, Pereda E, Cuesta P, Castellanos NP, Bruna R, Niso G, Maestu F, Bajo R (2014) Cognitive reserve is associated with the functional organization of the brain in healthy aging: a MEG study. Front Aging Neurosci 6:125

- Lutz J, Hemminger F, Stahl R, Dietrich O, Hempel M, Reiser M, Jager L (2007) Evidence of subcortical and cortical aging of the acoustic pathway: a diffusion tensor imaging (DTI) study. Acad Radiol 14(6):692–700

- Mahmud MS, Yeasin M, Shen D, Arnott S, Alain C, Bidelman GM (2018) What brain connectivity patterns from EEG tell us about hearing loss: a graph theoretic approach. In: Proceedings of the 10th international conference on electrical and computer engineering (ICECE 2018), Dhaka, Bangladesh, Dec. 20–22, pp 205–208

- Makary CA, Shin J, Kujawa SG, Liberman MC, Merchant SN (2011) Age-related primary cochlear neuronal degeneration in human temporal bones. J Assoc Res Otolaryngol 12(6):711–717

- Martin JS, Jerger JF (2005) Some effects of aging on central auditory processing. J Rehabilit Res Dev 42(4 Suppl 2):25–44

- Mazziotta JC, Toga AW, Evans A, Lancaster JL, Fox PT (1995) A probabilistic atlas of the human brain: theory and rationale for its development. Neuroimage 2:89–101

- Michel CM, Murray MM, Lantz G, Gonzalez S, Spinelli L, Grave de Peralta R (2004) EEG source imaging. Clin Neurophysiol 115(10):2195–2222

- Mijalkov M, Kakaei E, Pereira JB, Westman E, Volpe G (2017) BRAPH: a graph theory software for the analysis of brain connectivity. PLoS One 12(8):e0178798

- Oostenveld R, Praamstra P (2001) The five percent electrode system for high-resolution EEG and ERP measurements. Clin Neurophysiol 112:713–719

- Park Y, Luo L, Parhi KK, Netoff T (2011) Seizure prediction with spectral power of EEG using cost-sensitive support vector machines. Epilepsia 52(10):1761–1770

- Parthasarathy A, Bartlett E (2011) Age-related auditory deficits in temporal processing in F-344 rats. Neuroscience 192:619–630

- Parthasarathy A, Datta J, Torres JA, Hopkins C, Bartlett EL (2014) Age-related changes in the relationship between auditory brainstem responses and envelope-following responses. J Assoc Res Otolaryngol 15(4):649–661

- Pascual-Marqui RD, Esslen M, Kochi K, Lehmann D (2002) Functional imaging with low-resolution brain electromagnetic tomography (LORETA): a review. Methods Find Exp Clin Pharmacol 24(Suppl C):91–95

- Pearson JD, Morrell CH, Gordon-Salant S, Brant LJ, Metter EJ, Klein LL, Fozard JL (1995) Gender differences in a longitudinal study of age-associated hearing loss. J Acoust Soc Am 97:1196–1205

- Peelle JE, Wingfield A (2016) The neural consequences of age-related hearing loss. Trends Neurosci 39(7):486–497

- Peelle JE, Troiani V, Wingfield A, Grossman M (2010) Neural processing during older adults’ comprehension of spoken sentences: age differences in resource allocation and connectivity. Cereb Cortex 20(4):773–782

- Peelle JE, Troiani V, Grossman M, Wingfield A (2011) Hearing loss in older adults affects neural systems supporting speech comprehension. J Neurosci 31(35):12638–12643

- Pfefferbaum A, Ford JM, Roth WT, Kopell BS (1980) Age-related changes in auditory event-related potentials. Electroencephalogr Clin Neurophysiol 49(3–4):266–276

- Pichora-Fuller KM, Alain C, Schneider B (2017) Older adults at the cocktail party. In: Middlebrooks JC, Simon JZ, Popper AN, Fay RR (eds) Springer handbook of auditory research: the auditory system at the cocktail party. Springer, Berlin, pp 227–259

- Picton TW, Alain C, Woods DL, John MS, Scherg M, Valdes-Sosa P, Bosch-Bayard J, Trujillo NJ (1999) Intracerebral sources of human auditory-evoked potentials. Audiol Neurootol 4(2):64–79

- Picton TW, van Roon P, Armilio ML, Berg P, Ille N, Scherg M (2000) The correction of ocular artifacts: a topographic perspective. Clin Neurophysiol 111(1):53–65

- Presacco A, Simon JZ, Anderson S (2016) Evidence of degraded representation of speech in noise, in the aging midbrain and cortex. J Neurophysiol 116(5):2346–2355

- Presacco A, Simon JZ, Anderson S (2019) Speech-in-noise representation in the aging midbrain and cortex: effects of hearing loss. PLoS One 14(3):e0213899