Abstract

We develop a robust and efficient iterative method for hyper-elastodynamics based on a novel continuum formulation recently developed in [1]. The numerical scheme is constructed based on the variational multiscale formulation and the generalized-α method. Within the nonlinear solution procedure, a block factorization is performed for the consistent tangent matrix to decouple the kinematics from the balance laws. Within the linear solution procedure, another block factorization is performed to decouple the mass balance equation from the linear momentum balance equations. A nested block preconditioning technique is proposed to combine the Schur complement reduction approach with the fully coupled approach. This preconditioning technique, together with the Krylov subspace method, constitutes a novel iterative method for solving hyper-elastodynamics. We demonstrate the efficacy of the proposed preconditioning technique by comparing with the SIMPLE preconditioner and the one-level domain decomposition preconditioner. Two representative examples are studied: the compression of an isotropic hyperelastic cube and the tensile test of a fully-incompressible anisotropic hyperelastic arterial wall model. The robustness with respect to material properties and the parallel performance of the preconditioner are examined.

Keywords: Variational multiscale method, Saddle-point problem, Nested iterative method, Block preconditioner, Anisotropic incompressible hyperelasticity, Arterial wall model

1. Introduction

In our recent work [1], a unified continuum modeling framework was developed. In this framework, hyperelastic solids and viscous fluids are distinguished only through the deviatoric part of the Cauchy stress, in contrast to prior modeling approaches. In our derivation, the Gibbs free energy, rather than the Helmholtz free energy, is chosen as the thermodynamic potential, resulting in a unified model for compressible and incompressible materials. A beneficial outcome of the modeling framework is that it naturally allows one to apply a computational fluid dynamics (CFD) algorithm to solid dynamics, or vice versa. In our work [1], the variational multiscale (VMS) analysis, a mature numerical modeling approach in CFD [2], is taken to design the spatial discretization for solid dynamics. This numerical model provides a stabilization mechanism that circumvents the Ladyzhenskaya-Babuška-Brezzi (LBB) condition for equal-order interpolations. In particular, it allows one to use low-order tetrahedral elements, even for fully incompressible materials. This gives us the maximum flexibility in geometrical modeling and mesh generation.

In this work, we build upon the proposed unified formulation to develop a robust and efficient iterative method. Traditional black-box preconditioners are non-robust, and the convergence rate of the linear solver drops significantly under certain conditions. The lack of robustness may be attributed to the saddle-point nature of the problem. Algebraic preconditioners based on incomplete factorizations are prone to fail due to zero pivots; one-level domain decomposition preconditioners do not perform well due to their locality. In this work, we design a preconditioning technique tailored for the VMS formulation for hyper-elastodynamics [1]. The design of the preconditioner is based on a nested block factorization of the consistent tangent matrix in the Newton-Raphson iteration. A block factorization is performed in the nonlinear solution procedure to decouple the kinematics from the balance laws [3]. The resulting 2×2 block matrix is further factorized in the linear solution procedure. This strategy is, in part, related to the classical projection method [4, 5] and the block preconditioning technique [6, 7, 8] that have been widely used in the CFD community. We examine the solver performance for both isotropic and anisotropic hyperelastic models. The significance of this work is that it paves the way towards robust, efficient, and scalable implicit solver technology for biomechanics and monolithic fluid-solid interaction (FSI) simulations [1]. In the remainder of this section, we give an overview of the background and an outline of the work.

1.1. Projection method and block preconditioners

The development of efficient solver techniques for multiphysics problems has been an active area of research in recent years [9]. One simple but important prototype multiphysics problem is the Stokes or the Navier-Stokes equations, representing the coupling between the mass conservation and the balance of the linear momentum for incompressible flows. In the late 1960s, the Chorin-Temam projection method [4, 5] was proposed to solve for the pressure and the velocity separately based on the Helmholtz decomposition. Since then, the projection method and its variants have attracted concentrated research and led to a voluminous literature [10, 11, 12, 13]. The projection method is attractive because the nonlinear system of equations is decomposed into a series of linear elliptic equations. Although this method has attracted significant attention, it still poses several major challenges. One critical issue is that the physics-based splitting necessitates the introduction of an artificial boundary condition for the pressure. There is no general theory to guide the choice of the artificial boundary conditions, and most likely this artificial boundary condition limits the solution accuracy. For an overview of the projection method, the reader is referred to the review article [14].

In recent years, it has been realized that one can invoke an arbitrary time stepping scheme (e.g., fully implicit) and achieve the decoupling of physics within the linear solver. Indeed, in each iteration of the Krylov subspace method, one only needs to solve with a preconditioner and perform a matrix-vector multiplication to construct the new search direction. Therefore, if the preconditioner is endowed with a block structure, one may sequentially solve each block matrix with less cost. It has been pointed out that the Chorin-Temam projection method is closely related to a block preconditioner [15]. Consider a matrix problem with a 2×2 block structure,

$$ \boldsymbol{\mathcal{A}} := \begin{bmatrix} A & B \\ C & D \end{bmatrix}. $$

This matrix can be factored into lower triangular, diagonal, and upper triangular matrices as follows,

$$ \boldsymbol{\mathcal{A}} = \begin{bmatrix} I & O \\ C A^{-1} & I \end{bmatrix} \begin{bmatrix} A & O \\ O & S \end{bmatrix} \begin{bmatrix} I & A^{-1} B \\ O & I \end{bmatrix}, $$

wherein I is the identity matrix, and O is the zero matrix. The diagonal block matrix contains a Schur complement $S := D - C A^{-1} B$, which acts as an algebraic analogue of the Laplacian operator for the pressure field [16]. To construct a preconditioner for the block matrix, one needs to provide approximations of A and S that are inexpensive to solve with. The new formulation for hyper-elastodynamics we consider here is similar to the generalized Stokes equations, in which the operator A arises from the discretization of a combination of zeroth-order and second-order differential operators. Thus, A is amenable to approximation by a standard preconditioning technique. Due to the presence of $A^{-1}$, S is a dense matrix. When the matrix A represents a discretization of a zeroth-order differential operator, an effective choice is to replace S by $\hat{S} := D - C \, \mathrm{diag}(A)^{-1} B$ to construct the preconditioner for the block matrix. This choice is closely related to the SIMPLE scheme commonly used in CFD [17, 18]. When the matrix A represents a discretization of a second-order differential operator, a scaled mass matrix is often effective [19]. For more complicated problems, designing a spectrally equivalent preconditioner for the Schur complement is challenging and, in a broad sense, remains an open question. In recent years, progress has been made for problems where A is dominated by a discrete convection operator. Notable examples include the BFBT preconditioner [20], the pressure convection diffusion preconditioner [21], and the least squares commutator (LSC) preconditioner [22]. Based on the Sherman-Morrison formula, a different preconditioner for the Schur complement can be designed for problems with significant contributions from the boundary conditions [23]. In all, the block preconditioner, as an algebraic interpretation of the projection method, has become increasingly popular, since it does not necessitate ad hoc pressure boundary conditions and allows fully implicit time stepping schemes.
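As a quick numerical sanity check of the block factorization described above, the following NumPy sketch assembles the lower triangular, diagonal, and upper triangular factors for small random blocks and compares their product with the original 2×2 block matrix. The block sizes, the random data, and the helper name `block_ldu` are illustrative assumptions; dense inverses are used only because the example is tiny.

```python
import numpy as np

def block_ldu(A, B, C, D):
    """Return the factors L, Dg, U of [[A, B], [C, D]] and the Schur complement S."""
    n, m = A.shape[0], D.shape[0]
    A_inv = np.linalg.inv(A)                      # dense inverse: illustration only
    S = D - C @ A_inv @ B                         # Schur complement of A
    L = np.block([[np.eye(n), np.zeros((n, m))], [C @ A_inv, np.eye(m)]])
    Dg = np.block([[A, np.zeros((n, m))], [np.zeros((m, n)), S]])
    U = np.block([[np.eye(n), A_inv @ B], [np.zeros((m, n)), np.eye(m)]])
    return L, Dg, U, S

rng = np.random.default_rng(0)
n, m = 6, 3                                       # hypothetical block sizes
A = rng.standard_normal((n, n)) + 2 * n * np.eye(n)   # shifted to be well conditioned
B = rng.standard_normal((n, m))
C = rng.standard_normal((m, n))
D = rng.standard_normal((m, m))

K = np.block([[A, B], [C, D]])
L, Dg, U, S = block_ldu(A, B, C, D)
factorization_error = np.linalg.norm(L @ Dg @ U - K)
print(f"||L Dg U - K|| = {factorization_error:.2e}")
```

The product reproduces the block matrix up to round-off, which is the algebraic identity that both the segregated and the coupled approaches build on.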

If one can solve with the sub-matrices A and S to a prescribed tolerance, the block system is solved in one pass without generating a Krylov subspace. This is commonly known as the Schur complement reduction (SCR) or segregated approach [6, 24, 25, 26]. In contrast, the aforementioned strategy, where the block system is solved by a preconditioned iterative method, is referred to as the coupled approach [6]. For many problems, it is impractical to explicitly construct the Schur complement. Still, the action of the Schur complement on a vector can be obtained in a “matrix-free” manner (see Algorithm 2 in Section 4.2). Thus, one can still solve with the Schur complement by iterative methods. To achieve high accuracy, a sufficiently large Krylov subspace for S needs to be generated, and this procedure can be prohibitively expensive.

1.2. Nested preconditioning technique

The difference between the coupled approach and the segregated approach can be viewed as follows. In the coupled approach, S is replaced by a sparse approximation to generate a preconditioner for the full block matrix $\boldsymbol{\mathcal{A}}$. In the segregated approach or SCR, one strives to solve directly with S. The distinction between the two approaches is blurred by using the SCR procedure as a preconditioner. In doing so, one does not need to solve with S to a high precision, thus alleviating the computational burden. In comparison with the coupled approach, the information of the Schur complement is maintained in the preconditioner (up to the tolerances of SCR), which improves the robustness. Therefore, in solving with $\boldsymbol{\mathcal{A}}$, there are three nested levels. In the outer level, a Krylov subspace method is applied to $\boldsymbol{\mathcal{A}}$ with a block preconditioner. In the intermediate level, the block preconditioner is applied by solving with the matrices A and S. In the inner level, a solver for A is invoked to approximate the action of S on a vector. Two mechanisms guarantee and accelerate the convergence. In the outer level, the Krylov subspace method for $\boldsymbol{\mathcal{A}}$ minimizes the residual of the coupled problem. In the intermediate and inner levels, the SCR procedure is utilized as the preconditioner, which itself can be viewed as an inexact solver for $\boldsymbol{\mathcal{A}}$.

Using SCR as a preconditioner was first proposed within a Richardson iteration scheme [27]. Due to the symmetry property of that problem, a conjugate gradient method is applied to solve the Schur complement equation. Later, the nested iterative scheme was investigated for CFD problems [28, 29], and the reported results indicate that using the SCR procedure as a preconditioner in a Richardson iteration outperforms the coupled approach with a Krylov subspace method. The nested algorithm was then further investigated using the biconjugate gradient stabilized method (BiCGStab) as the outer solver [30]. The nested iterative scheme in [30] uses rather crude stopping criteria for the intermediate and inner solvers. Still, its performance is superior to that of BiCGStab preconditioned by a BFBT preconditioner.

Our investigation of the VMS formulation for hyper-elastodynamics starts with a SIMPLE-type block preconditioner using our in-house code [31]. As will be shown in Section 5, the Krylov subspace method with a block preconditioner like SIMPLE is not always robust. This can be attributed to the neglect of the off-diagonal entries in A. Because of that, it is appealing to consider preconditioners like LSC, since the off-diagonal information of A is maintained. However, non-convergence has been reported for LSC when solving the Navier-Stokes equations with stabilized finite element schemes [32]. We therefore ruled out this option, since our VMS formulation involves a similar pressure stabilization term. Consequently, we consider using SCR with relaxed tolerances as a preconditioner. In doing so, the Schur complement is approximated by means of an inner solver. In contrast to the nested iterative approaches introduced above, we adopt the following techniques in our study: (1) we use GMRES [33] and its variant [34] as the Krylov subspace method in all three levels to leverage their robustness in handling non-symmetric matrix problems; (2) we apply the algebraic multigrid (AMG) preconditioner [35] for problems at the intermediate level to enhance the robustness of the overall algorithm; (3) we use the sparse approximation of the Schur complement as a preconditioner when solving with S. We demonstrate the application of this method to hyper-elastodynamics; however, we anticipate its general use in CFD and FSI problems in future work.

1.3. Structure and content of the paper

The remainder of the study is organized as follows. In Section 2, we state the governing equations of hyper-elastodynamics [1]. In Section 3, the numerical scheme is presented. A block factorization for the consistent tangent matrix is performed to reduce the size of the linear algebra problem. In Section 4, the nested block preconditioning technique is discussed in detail. In Section 5, we present two representative examples to demonstrate the efficacy of the proposed solver technology. The first example is the compression of an isotropic elastic cube [36], and the second is the tensile test of a fully incompressible anisotropic hyperelastic arterial wall model [37]. Comparisons with other preconditioners are made. We draw conclusions in Section 6.

2. Hyper-elastodynamics

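In this section, we state the initial-boundary value problem for hyper-elastodynamics, following the derivation in [1]. Let ΩX and Ωx be bounded open sets in $\mathbb{R}^{n_{sd}}$ with Lipschitz boundaries, where nsd represents the number of space dimensions. They represent the initial and the current configurations of the body, respectively. The motion of the body is described by a family of diffeomorphisms, parametrized by the time coordinate t,

$$ \varphi_t(\cdot) = \varphi(\cdot, t) : \Omega_X \rightarrow \Omega_x = \varphi(\Omega_X, t), \qquad X \mapsto x = \varphi(X, t). $$

In the above, x is the current position of a material particle originally located at X. This requires that φ(X, 0) = X. The displacement and velocity of the material particle are defined as

$$ U := \varphi(X, t) - \varphi(X, 0) = \varphi(X, t) - X, \qquad V := \left. \frac{\partial \varphi}{\partial t} \right|_{X} = \frac{dU}{dt}. $$

In the definition of V and in what follows, d(·)/dt designates a total time derivative. The spatial velocity is defined as $v := V \circ \varphi_t^{-1}$. Analogously, we define $u := U \circ \varphi_t^{-1}$. The deformation gradient, the Jacobian determinant, and the right Cauchy-Green tensor are defined as

$$ F := \frac{\partial \varphi}{\partial X}, \qquad J := \det(F), \qquad C := F^T F. $$

We define $\tilde{F}$ and $\tilde{C}$ as

$$ \tilde{F} := J^{-1/3} F, \qquad \tilde{C} := J^{-2/3} C, $$

which represent the distortional parts of F and C, respectively. We denote the thermodynamic pressure of the continuum body as p. The mechanical behavior of an elastic material can be described by a Gibbs free energy $G(\tilde{C}, p)$. In [1], it is shown that the Gibbs free energy enjoys a decoupled structure,

$$ G(\tilde{C}, p) = G_{ich}(\tilde{C}) + G_{vol}(p), $$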

where Gich and Gvol represent the isochoric and volumetric elastic responses. Under the isothermal condition, the energy equation is decoupled from the system, and it suffices to consider the following equations for the motion of the continuum body,

| (2.1) |

| (2.2) |

| (2.3) |

In the above, β(p) is the isothermal compressibility coefficient, ρ(p) denotes the density in the current configuration, and σdev represents the deviatoric part of the Cauchy stress. Equations (2.1) describe the kinematic relation between the displacement and the velocity, and equations (2.2) and (2.3) describe the balance of linear momentum and mass. The constitutive relations of the elastic material are represented in terms of the Gibbs free energy as follows,

| (2.4) |

wherein the projector and the fictitious second Piola-Kirchhoff stress are defined as

Here, $\mathbb{I}$ denotes the fourth-order identity tensor, and ρ0 is the density in the referential configuration. Interested readers are referred to [1] for a detailed derivation of the governing equations and the constitutive relations. The boundary Γx = ∂Ωx can be partitioned into two non-overlapping subdivisions, namely the Dirichlet part and the Neumann part of the boundary. Boundary conditions can be stated as

| (2.5) |

Given the initial data u0, v0, and p0, the initial conditions can be stated as

| (2.6) |

The equations (2.1)–(2.6) constitute an initial-boundary value problem for hyper-elastodynamics.

3. Numerical formulation

In this section, we present the numerical formulation for the strong-form problem. The spatial discretization is based on a VMS formulation [1, 2], and the temporal scheme is based on the generalized-α scheme [1, 38]. A block factorization, originally introduced in [3], is performed to consistently reduce the size of the linear algebra problem in the Newton-Raphson iterative algorithm.

3.1. Variational multiscale formulation

We consider a partition of the domain into nel non-overlapping, shape-regular elements. The diameter of an element is denoted by he, and the maximum diameter of the elements is denoted by h. We consider the space of complete polynomials of order k on each element. The finite element trial solution spaces for the displacement, velocity, and pressure are defined as

and the corresponding test function spaces are defined as

The semi-discrete formulation can be stated as follows. Find $y_h(t) := \{u_h(t), v_h(t), p_h(t)\}^T \in \mathcal{S}_{u_h} \times \mathcal{S}_{v_h} \times \mathcal{S}_{p_h}$ such that for $t \in [0, T]$,

| (3.1) |

| (3.2) |

| (3.3) |

for all test functions in the corresponding test function spaces, with $y_h(0) := \{u_{h0}, v_{h0}, p_{h0}\}^T$. Here uh0, vh0, and ph0 are the projections of the initial data onto the finite-dimensional trial solution spaces. In the above and henceforth, the formulations for the kinematic equations, the linear momentum equations, and the mass equation are indicated by the subscripts k, m, and p, respectively.

The stabilization terms in (3.3) arise from the subgrid-scale modeling [1]. These terms improve the stability of the Galerkin formulation without sacrificing the consistency. The choice of the stabilization parameter is the crux of the VMS formulation. In this work, the following choices are made,

In the above, I is the second-order identity tensor; cm is a dimensionless parameter; c is the maximum wave speed in the solid body. For compressible materials, c is given by the bulk wave speed. Under the isotropic small-strain linear elastic assumption, $c = \sqrt{(\lambda + 2\mu)/\rho_0}$, where λ and μ are the Lamé parameters. For incompressible materials, $c = \sqrt{\mu/\rho_0}$ is the shear wave speed. We point out that, although the choices made above are based on a simplified material model, the stabilization terms still provide an effective pressure stabilization mechanism for a range of elastic and inelastic problems [1, 3, 39, 40, 41]. In this work, we fix cm to be $10^{-3}$ and restrict our discussion to the low-order finite element method (i.e., k = 1).

3.2. Temporal discretization

Based on the semi-discrete formulation (3.1)–(3.3), we invoke the generalized-α method [38] for time integration. The time interval [0, T] is divided into a set of nts subintervals of size $\Delta t_n := t_{n+1} - t_n$ delimited by a discrete time vector $\{t_n\}_{n=0}^{n_{ts}}$. The solution vector and its first-order time derivative evaluated at the time step tn are denoted as $y_n$ and $\dot{y}_n$; the basis function for the discrete function spaces is denoted as NA. With these notations, the residual vectors can be represented as

The fully discrete scheme can be stated as follows. At time step tn, given $\dot{y}_n$, $y_n$, the time step size Δtn, and the parameters αm, αf, and γ, find $\dot{y}_{n+1}$ and $y_{n+1}$ such that

| (3.4) |

| (3.5) |

| (3.6) |

| (3.7) |

| (3.8) |

| (3.9) |

The choice of the parameters αm, αf, and γ determines the accuracy and stability of the temporal scheme. Importantly, the high-frequency dissipation can be controlled via a proper choice of these parameters, while maintaining second-order accuracy and unconditional stability (for linear problems). For first-order dynamic problems, the parameters are chosen as

$$ \alpha_m = \frac{1}{2} \left( \frac{3 - \varrho_\infty}{1 + \varrho_\infty} \right), \qquad \alpha_f = \frac{1}{1 + \varrho_\infty}, \qquad \gamma = \frac{1}{2} + \alpha_m - \alpha_f, $$

wherein $\varrho_\infty \in [0, 1]$ denotes the spectral radius of the amplification matrix at the highest mode [38]. We adopt a fixed value of $\varrho_\infty$ for all computations presented in this work.
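For concreteness, the parametrization for first-order systems can be evaluated with a few lines of Python. The sketch assumes the commonly used formulas from [38], namely $\alpha_m = \frac{1}{2}(3 - \varrho_\infty)/(1 + \varrho_\infty)$, $\alpha_f = 1/(1 + \varrho_\infty)$, and $\gamma = \frac{1}{2} + \alpha_m - \alpha_f$; the function name and the sample values of $\varrho_\infty$ are hypothetical and are not the setting used in the computations of this work.

```python
def generalized_alpha_parameters(rho_inf):
    """Generalized-alpha parameters for a first-order system (assumed formulas from [38]).

    rho_inf is the spectral radius of the amplification matrix at the highest mode.
    """
    if not 0.0 <= rho_inf <= 1.0:
        raise ValueError("rho_inf must lie in [0, 1]")
    alpha_m = 0.5 * (3.0 - rho_inf) / (1.0 + rho_inf)
    alpha_f = 1.0 / (1.0 + rho_inf)
    gamma = 0.5 + alpha_m - alpha_f        # condition for second-order accuracy
    return alpha_m, alpha_f, gamma

for rho_inf in (0.0, 0.5, 1.0):            # sample values, for illustration only
    alpha_m, alpha_f, gamma = generalized_alpha_parameters(rho_inf)
    print(f"rho_inf={rho_inf:.1f}: alpha_m={alpha_m:.4f}, "
          f"alpha_f={alpha_f:.4f}, gamma={gamma:.4f}")
```

With $\varrho_\infty = 1$ all three parameters equal 1/2, which corresponds to no high-frequency dissipation; $\varrho_\infty = 0$ annihilates the highest mode in one step.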

Remark 1. Interested readers are referred to [42] for the choice of the parameters for second-order structural dynamics. A recent study shows that using the generalized-α method for the first-order structural dynamics enjoys improved dissipation and dispersion properties and does not suffer from overshoot [43]. Moreover, using a first-order structural dynamic model is advantageous for the design of an FSI scheme [1].

3.3. A segregated predictor multi-corrector algorithm

One may apply the inverse of the mass matrix to both sides of the equations (3.4) and obtain the following simplified kinematic equations,

| (3.10) |

This procedure can be regarded as the application of a left preconditioner on the nonlinear algebraic equations. The new equations (3.10), together with (3.6) and (3.5), constitute the system of nonlinear algebraic equations to be solved in each time step. The Newton-Raphson method with consistent linearization is invoked to solve the nonlinear system of equations. At the time step tn+1, the solution vector yn+1 is solved by means of a predictor multi-corrector algorithm. We denote yn+1,(l) :={un+1,(l), vn+1,(l), pn+1,(l)}T as the solution vector at the Newton-Raphson iteration step l = 0,⋯,lmax. The residual vectors evaluated at the iteration stage l are denoted as

The consistent tangent matrix associated with the above residual vectors is

wherein

As was realized in [3], this special block structure in the first row of K(l) can be utilized for a block factorization,

| (3.11) |

With (3.11), the solution procedure of the linear system of equations in the Newton-Raphson method can be consistently reduced to a two-stage algorithm [1, 3, 44]. In the first stage, one obtains the increments of the pressure and velocity at the iteration step l by solving the following linear system,

| (3.12) |

In the second stage, one obtains the increments for the displacement by

| (3.13) |

To simplify notations in the following discussion, we denote

| (3.14) |

| (3.15) |

Remark 2. In [1], it was shown that the residual of the kinematic equations vanishes for l ≥ 2 for general predictor multi-corrector algorithms; in [44], a special predictor is chosen so that it vanishes for l ≥ 1.

Remark 3. In Appendix A, the detailed formulas for the block matrices are given, and it can be observed that A(l) consists primarily of a mass matrix and a stiffness matrix; B(l) is a discrete gradient operator; C(l) is dominated by a discrete divergence operator; D(l) contains a mass matrix scaled with β and contributions from the stabilization terms.

Based on the above discussion, a predictor multi-corrector algorithm for solving the nonlinear algebraic equations in each time step can be summarized as follows.

Predictor stage: Set:

Multi-corrector stage: Repeat the following steps for l = 1, …, lmax:

1. Evaluate the solution vectors at the intermediate stages.

2. Assemble the residual vectors Rm,(l) and Rp,(l) using the solution evaluated at the intermediate stages.

3. Let $\|R_{(l)}\|$ denote the $l^2$-norm of the residual vector. If either one of the following stopping criteria

$$ \frac{\|R_{(l)}\|}{\|R_{(0)}\|} \leq tol_R, \qquad \|R_{(l)}\| \leq tol_A, $$

is satisfied for two prescribed tolerances tolR, tolA, set the solution vector at time step tn+1 as $\dot{y}_{n+1} = \dot{y}_{n+1,(l-1)}$ and $y_{n+1} = y_{n+1,(l-1)}$, and exit the multi-corrector stage; otherwise, continue to step 4.

4. Solve the following linear system of equations for the increments of the pressure and the velocity,

| (3.16) |

and obtain the increment of the displacement from the relation (3.13).

5. Update the solution vector with the computed increments and return to step 1.

For all the numerical simulations presented in this work, we adopt the tolerances for the nonlinear iteration as $tol_R = tol_A = 10^{-6}$ and the maximum number of iterations as lmax = 20.
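The stopping logic of the multi-corrector stage can be illustrated on a toy nonlinear system. The sketch below is a plain Newton-Raphson loop, not the segregated algorithm itself: the residual function, its Jacobian, and the names `newton_solve`, `tol_r`, and `tol_a` are assumptions made for illustration, and the relative and absolute criteria are taken to be $\|R_{(l)}\| / \|R_{(0)}\| \leq tol_R$ and $\|R_{(l)}\| \leq tol_A$.

```python
import numpy as np

def newton_solve(residual, jacobian, y0, tol_r=1.0e-6, tol_a=1.0e-6, l_max=20):
    """Newton-Raphson iteration with relative and absolute residual tolerances."""
    y = np.asarray(y0, dtype=float)
    r0_norm = np.linalg.norm(residual(y))        # residual of the predictor
    for l in range(1, l_max + 1):
        r = residual(y)
        r_norm = np.linalg.norm(r)
        if r_norm <= tol_a or r_norm <= tol_r * r0_norm:
            return y, l - 1                      # converged after l - 1 corrections
        y = y + np.linalg.solve(jacobian(y), -r) # consistent tangent solve and update
    return y, l_max

def residual(y):
    # Toy problem: intersection of a circle of radius 2 with the line y0 = y1.
    return np.array([y[0] ** 2 + y[1] ** 2 - 4.0, y[0] - y[1]])

def jacobian(y):
    return np.array([[2.0 * y[0], 2.0 * y[1]], [1.0, -1.0]])

y, n_corrections = newton_solve(residual, jacobian, [1.0, 2.0])
print(y, n_corrections)
```

In the actual algorithm the dense solve is replaced by the linear system (3.16), which is where the iterative solver of Section 4 enters.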

4. Iterative linear solver

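In the predictor multi-corrector algorithm presented above, the linear system of equations (3.16) is solved repeatedly, and this step constitutes the major cost for implicit dynamic calculations. In this section, we design an iterative solution procedure for the linear problem $\boldsymbol{\mathcal{A}} x = r$, in which the matrix and vectors adopt the following block structure,

$$ \boldsymbol{\mathcal{A}} := \begin{bmatrix} A & B \\ C & D \end{bmatrix}, \qquad x := \begin{bmatrix} x_v \\ x_p \end{bmatrix}, \qquad r := \begin{bmatrix} r_v \\ r_p \end{bmatrix}. $$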

Since its inception, GMRES has been among the most popular iterative methods for solving sparse nonsymmetric matrix problems. With a proper preconditioner $\boldsymbol{\mathcal{P}}$, the convergence of iterative methods like GMRES can be significantly accelerated. Roughly speaking, in the GMRES iteration, one constructs the Krylov subspace by the Arnoldi algorithm and searches for the solution that minimizes the residual in this Krylov subspace [33, 34]. To enlarge the Krylov subspace, one applies $\boldsymbol{\mathcal{A}} \boldsymbol{\mathcal{P}}^{-1}$ to the latest basis vector. This procedure corresponds to first solving a linear system of equations associated with $\boldsymbol{\mathcal{P}}$ and then performing a matrix-vector multiplication associated with the system matrix $\boldsymbol{\mathcal{A}}$. Oftentimes, to reduce the computational burden, the GMRES algorithm is restarted every m steps. Within this work, this algorithm is denoted as GMRES(m).

In Section 4.1, we perform a diagonal scaling of the system matrix $\boldsymbol{\mathcal{A}}$ with the purpose of improving the condition number [24, 31, 45]. In Section 4.2, we introduce the block factorization of $\boldsymbol{\mathcal{A}}$ and present the SCR algorithm. In Section 4.3, we present the coupled approach with a particular focus on the SIMPLE preconditioner. In Section 4.4, the nested block preconditioning technique is introduced as a combination of the SCR approach and the coupled approach.

4.1. Symmetric diagonal scaling

Before constructing an iterative method, we first apply a symmetric diagonal scaling to the system matrix $\boldsymbol{\mathcal{A}}$. This approach is adopted to improve the condition number of the matrix problem and is sometimes referred to as a “pre-preconditioning” technique [45]. We introduce $W$ as a diagonal matrix defined as follows,

$$ W_{ii} := \begin{cases} |\boldsymbol{\mathcal{A}}_{ii}|^{-1/2}, & \text{if } |\boldsymbol{\mathcal{A}}_{ii}| \geq \epsilon_{diag}, \\ 1, & \text{otherwise}. \end{cases} $$

In the above definition, ϵdiag is a user-specified tolerance to avoid undefined or unstable numerical operations. In this work, we set $\epsilon_{diag} = 1.0 \times 10^{-15}$. Applying $W$ as a left and right preconditioner simultaneously, we obtain an altered system as

$$ \boldsymbol{\mathcal{A}}^{*} x^{*} = r^{*}, \tag{4.1} $$

wherein $\boldsymbol{\mathcal{A}}^{*} := W \boldsymbol{\mathcal{A}} W$, $x^{*} := W^{-1} x$, and $r^{*} := W r$. The iterative methods discussed in the subsequent sections are applied to the above system. Once x* is obtained from (4.1), one has to compute $x = W x^{*}$ to recover the true solution. In the remainder of Section 4, we focus on solving (4.1), and for notational simplicity, the superscript * is omitted.
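The scaling step is simple to prototype. The sketch below assumes that the diagonal scaling matrix has entries $|a_{ii}|^{-1/2}$ whenever $|a_{ii}| \geq \epsilon_{diag}$ and 1 otherwise, which is one common convention and is stated here as an assumption; the function name and the 3×3 test matrix (with one zero diagonal entry, as may occur in a pressure block) are hypothetical.

```python
import numpy as np

def symmetric_diagonal_scaling(K, r, eps_diag=1.0e-15):
    """Return the scaled matrix W K W, the scaled right-hand side W r, and diag(W)."""
    d = np.abs(np.diag(K))
    # Entries with a (near-)zero diagonal are left unscaled to avoid dividing by zero.
    w = np.where(d >= eps_diag, 1.0 / np.sqrt(np.maximum(d, eps_diag)), 1.0)
    K_star = w[:, None] * K * w[None, :]
    r_star = w * r
    return K_star, r_star, w

K = np.array([[4.0e6, 2.0, 0.0],
              [1.0,   9.0, 3.0],
              [0.0,   5.0, 0.0]])
r = np.array([1.0, 2.0, 3.0])

K_star, r_star, w = symmetric_diagonal_scaling(K, r)
x_star = np.linalg.solve(K_star, r_star)   # stands in for the iterative solver
x = w * x_star                             # recover the solution of the original system

print("cond(K)      =", np.linalg.cond(K))
print("cond(W K W)  =", np.linalg.cond(K_star))
```

For this example the scaled matrix has unit entries on the nonzero part of its diagonal and a much smaller condition number, while the recovered vector solves the original system.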

4.2. Schur complement reduction

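Recall that the system matrix $\boldsymbol{\mathcal{A}}$ adopts the block factorization

$$ \boldsymbol{\mathcal{A}} = \boldsymbol{\mathcal{L}} \boldsymbol{\mathcal{D}} \boldsymbol{\mathcal{U}} = \begin{bmatrix} I & O \\ C A^{-1} & I \end{bmatrix} \begin{bmatrix} A & O \\ O & S \end{bmatrix} \begin{bmatrix} I & A^{-1} B \\ O & I \end{bmatrix}, \tag{4.2} $$

wherein $S := D - C A^{-1} B$ is the Schur complement of A. Applying $\boldsymbol{\mathcal{L}}^{-1}$ on both sides of the equation $\boldsymbol{\mathcal{A}} x = r$, one obtains

$$ \begin{bmatrix} A & B \\ O & S \end{bmatrix} \begin{bmatrix} x_v \\ x_p \end{bmatrix} = \begin{bmatrix} r_v \\ r_p - C A^{-1} r_v \end{bmatrix}. $$

The upper triangular block matrix problem can be solved by a back substitution. Consequently, the solution procedure for $\boldsymbol{\mathcal{A}} x = r$ can be summarized as the following segregated algorithm [24, 25, 26].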

Algorithm 1. Solution procedure for $\boldsymbol{\mathcal{A}} x = r$ based on SCR.

1: Solve for an intermediate velocity $\hat{x}_v$ from the equation

$$ A \hat{x}_v = r_v. \tag{4.3} $$

2: Update the continuity residual by $r_p \leftarrow r_p - C \hat{x}_v$.

3: Solve for $x_p$ from the equation

$$ S x_p = r_p. \tag{4.4} $$

4: Update the momentum residual by $r_v \leftarrow r_v - B x_p$.

5: Solve for $x_v$ from the equation

$$ A x_v = r_v. \tag{4.5} $$
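The five steps of the SCR procedure can be checked on a small dense problem. In the sketch below, direct solves stand in for the GMRES solves of the sub-problems with A and S, and the Schur complement is formed explicitly, which is only feasible because the example is tiny. The function name `scr_solve`, the block sizes, and the random data are illustrative assumptions.

```python
import numpy as np

def scr_solve(A, B, C, D, r_v, r_p):
    """Solve [[A, B], [C, D]] [x_v; x_p] = [r_v; r_p] by Schur complement reduction."""
    S = D - C @ np.linalg.solve(A, B)     # explicit Schur complement (small dense case)
    x_v_hat = np.linalg.solve(A, r_v)     # step 1: intermediate velocity
    r_p = r_p - C @ x_v_hat               # step 2: update the continuity residual
    x_p = np.linalg.solve(S, r_p)         # step 3: Schur complement equation
    r_v = r_v - B @ x_p                   # step 4: update the momentum residual
    x_v = np.linalg.solve(A, r_v)         # step 5: velocity
    return x_v, x_p

rng = np.random.default_rng(1)
n, m = 8, 4                               # hypothetical block sizes
A = rng.standard_normal((n, n)) + 2 * n * np.eye(n)
B = rng.standard_normal((n, m))
C = rng.standard_normal((m, n))
D = 0.5 * rng.standard_normal((m, m)) + m * np.eye(m)
r_v, r_p = rng.standard_normal(n), rng.standard_normal(m)

x_v, x_p = scr_solve(A, B, C, D, r_v, r_p)

# Reference: monolithic direct solve of the full block system.
K = np.block([[A, B], [C, D]])
x_ref = np.linalg.solve(K, np.concatenate([r_v, r_p]))
scr_error = np.linalg.norm(np.concatenate([x_v, x_p]) - x_ref)
print(f"difference to the monolithic solve: {scr_error:.2e}")
```

The segregated result agrees with the monolithic solve to round-off, at the price of two solves with A and one with S.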

For hyper-elastodynamics problems, it is reasonable to apply GMRES preconditioned by AMG for (4.3) and (4.5). The stopping condition for solving with A consists of a tolerance for the relative error, a tolerance for the absolute error, and a maximum number of iterations. In (4.4), the Schur complement is a dense matrix due to the presence of $A^{-1}$ in its definition. It is expensive and often impossible to directly compute with S. Recall that in a Krylov subspace method, the search space is iteratively expanded by performing matrix-vector multiplications. Although the algebraic form of S is impractical to obtain, its action on a vector is readily available through the following “matrix-free” algorithm [24, 26].

Algorithm 2. The multiplication of S with a vector $x_p$.

1: Compute the matrix-vector multiplication $\hat{x}_p \leftarrow D x_p$.

2: Compute the matrix-vector multiplication $\bar{x}_p \leftarrow B x_p$.

3: Solve for $\tilde{x}_p$ from the linear system

$$ A \tilde{x}_p = \bar{x}_p. \tag{4.6} $$

4: Compute the matrix-vector multiplication $\bar{x}_p \leftarrow C \tilde{x}_p$.

5: return $\hat{x}_p - \bar{x}_p$.

In Algorithm 2, the action of S on a vector is realized through a series of matrix-vector multiplications, and the action of $A^{-1}$ on a vector is achieved by solving the linear system (4.6). This solver is located inside the solution procedure of (4.4), and we call it the inner solver. The stopping condition of the inner solver likewise consists of a tolerance for the relative error, a tolerance for the absolute error, and a maximum number of iterations.
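A matrix-free application of the Schur complement takes only a few lines. In this sketch the argument `solve_A` plays the role of the inner solver; it defaults to a dense direct solve, which is an assumption made to keep the example short, and in practice it would be a preconditioned GMRES solve with its own tolerances. The function name and the random test data are hypothetical.

```python
import numpy as np

def schur_matvec(A, B, C, D, x_p, solve_A=np.linalg.solve):
    """Return S @ x_p with S = D - C A^{-1} B, without ever forming S."""
    x_hat = D @ x_p                 # step 1
    x_bar = B @ x_p                 # step 2
    x_tilde = solve_A(A, x_bar)     # step 3: inner solve with A
    x_bar = C @ x_tilde             # step 4
    return x_hat - x_bar            # step 5

rng = np.random.default_rng(2)
n, m = 8, 4                         # hypothetical block sizes
A = rng.standard_normal((n, n)) + 2 * n * np.eye(n)
B = rng.standard_normal((n, m))
C = rng.standard_normal((m, n))
D = rng.standard_normal((m, m))
x_p = rng.standard_normal(m)

# Reference: explicit (dense) Schur complement, affordable only for tiny problems.
S_explicit = D - C @ np.linalg.inv(A) @ B
matvec_error = np.linalg.norm(schur_matvec(A, B, C, D, x_p) - S_explicit @ x_p)
print(f"difference to the explicit product: {matvec_error:.2e}")
```

Each call costs one solve with A, which is why the number of Krylov iterations on S dominates the cost of the segregated approach.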

With Algorithm 2, one can construct a Krylov subspace for S and solve the equation (4.4). However, without preconditioning, GMRES may stagnate or even break down. More importantly, each matrix-vector multiplication given in Algorithm 2 involves solving a linear system (4.6), and this inevitably makes the matrix-vector multiplication quite expensive. To reduce the number of these expensive matrix-vector multiplications, we solve (4.4) with the sparse approximation $\hat{S} := D - C \, \mathrm{diag}(A)^{-1} B$ as a right preconditioner [46]. If the time step size is small, A is dominated by the mass matrix, and $\hat{S}$ acts as an effective preconditioner for solving (4.4). On the other hand, if the time step size is large, A is dominated by the stiffness matrix. The situation then is analogous to the Stokes problem, where the Schur complement is spectrally equivalent to an identity matrix. We may reasonably expect that an unpreconditioned GMRES using Algorithm 2 is sufficient for solving (4.4). Still, using $\hat{S}$ may accelerate the convergence. Therefore, we solve (4.4) by preconditioned GMRES, where the stopping criteria consist of a tolerance for the relative error, a tolerance for the absolute error, and a maximum number of iterations.

4.3. Coupled approach with block preconditioners

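The block factorization (4.2) also inspires the design of a preconditioner for the system matrix. Following the nomenclature used in [16], we use H1 and H2 to denote the approximations of $A^{-1}$ in the Schur complement and the upper triangular factor, respectively. This results in a block preconditioner expressed as

$$ \boldsymbol{\mathcal{P}} := \begin{bmatrix} A & O \\ C & D - C H_1 B \end{bmatrix} \begin{bmatrix} I & H_2 B \\ O & I \end{bmatrix}. \tag{4.7} $$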

The two approximated sparse matrices are introduced so that the spectrum of the preconditioned matrix $\boldsymbol{\mathcal{A}} \boldsymbol{\mathcal{P}}^{-1}$ clusters around {1}. With the block preconditioner, one can apply the Krylov subspace method directly to solve the coupled system, and the bases of the Krylov subspace are constructed by applying $\boldsymbol{\mathcal{A}} \boldsymbol{\mathcal{P}}^{-1}$ to a vector. The action of $\boldsymbol{\mathcal{P}}^{-1}$ is achieved through a procedure similar to Algorithm 1. The differences are that the inner solver is not needed and one does not need to solve the equations associated with the sub-matrices to a high precision. The Krylov subspace method is typically used with a multigrid [17, 32] or a domain decomposition [47] preconditioner to solve with the sub-matrices. Consequently, the algebraic definition of $\boldsymbol{\mathcal{P}}$ varies over iterations, and one has to apply a flexible method, like the Flexible GMRES (FGMRES) [34], as the iterative method for the coupled system. Choosing $H_1 = H_2 = \mathrm{diag}(A)^{-1}$ leads to the SIMPLE preconditioner [16, 17],

$$ \boldsymbol{\mathcal{P}}_{SIMPLE} := \begin{bmatrix} A & O \\ C & D - C \, \mathrm{diag}(A)^{-1} B \end{bmatrix} \begin{bmatrix} I & \mathrm{diag}(A)^{-1} B \\ O & I \end{bmatrix}. $$

The SIMPLE preconditioner is an algebraic analogue of the Semi-Implicit Method for Pressure Linked Equations (SIMPLE) [18]. It introduces a perturbation to the pressure operator in the linear momentum equation. This preconditioner and its variants are among the most popular choices for problems in CFD [32, 48], FSI [47], and multiphysics problems [49, 50].
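To make the action of a SIMPLE-type preconditioner concrete, the sketch below applies its inverse to a vector and verifies the result against the explicitly assembled preconditioner. It assumes the standard form obtained with $H_1 = H_2 = \mathrm{diag}(A)^{-1}$, i.e. a block lower triangular factor containing $\hat{S} = D - C \, \mathrm{diag}(A)^{-1} B$ followed by an upper triangular correction; direct solves stand in for the multigrid-preconditioned sub-solves, and all names and data are illustrative.

```python
import numpy as np

def apply_simple_inverse(A, B, C, D, r_v, r_p):
    """Apply the inverse of a SIMPLE-type preconditioner to the vector [r_v; r_p]."""
    h = 1.0 / np.diag(A)                      # H1 = H2 = diag(A)^{-1}, stored as a vector
    S_hat = D - C @ (h[:, None] * B)          # sparse approximation of the Schur complement
    x_v_hat = np.linalg.solve(A, r_v)         # solve with A
    x_p = np.linalg.solve(S_hat, r_p - C @ x_v_hat)   # solve with S_hat
    x_v = x_v_hat - h * (B @ x_p)             # cheap diagonal correction, no second A solve
    return x_v, x_p

rng = np.random.default_rng(5)
n, m = 8, 4                                   # hypothetical block sizes
A = rng.standard_normal((n, n)) + 2 * n * np.eye(n)
B = rng.standard_normal((n, m))
C = rng.standard_normal((m, n))
D = 0.5 * rng.standard_normal((m, m)) + m * np.eye(m)
r_v, r_p = rng.standard_normal(n), rng.standard_normal(m)

x_v, x_p = apply_simple_inverse(A, B, C, D, r_v, r_p)

# Multiplying out the two factors gives [[A, A diag(A)^{-1} B], [C, D]]:
# only the (1,2) block of the original matrix is perturbed.
H = np.diag(1.0 / np.diag(A))
P_simple = np.block([[A, A @ H @ B], [C, D]])
simple_error = np.linalg.norm(P_simple @ np.concatenate([x_v, x_p])
                              - np.concatenate([r_v, r_p]))
print(f"||P x - r|| = {simple_error:.2e}")
```

The assembled form shows that only the block multiplying the pressure in the momentum equation differs from the original matrix, which is why the quality of the preconditioner hinges on how well diag(A) represents A.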

Remark 4. There are cases when the symmetry of A is broken, and using the SIMPLE-type preconditioner leads to poor performance. This is the case in CFD with large Reynolds numbers. To account for the off-diagonal entries of A, sophisticated preconditioners, like the LSC preconditioner [22], have been developed. Those preconditioners have been shown to be robust with respect to the Reynolds number using inf-sup stable discretizations of the CFD problem (i.e., D = O). Note that, for stabilized methods, the LSC preconditioner may not converge [32].

4.4. Flexible GMRES algorithm with a nested block preconditioner

The SIMPLE preconditioner can be viewed as the SCR approach built on an inexact block factorization. Its main advantage is that the application of this preconditioner is inexpensive. However, for certain problems, this inexact factorization misses some key information of the original matrix, and stagnation of the solver is observed. We want to leverage the robustness of the SCR approach built from the exact block factorization by using it as a right preconditioner, denoted as $\boldsymbol{\mathcal{P}}_{SCR}$. The action of $\boldsymbol{\mathcal{P}}_{SCR}^{-1}$ on a vector is given by Algorithm 1, in which the equations (4.3)–(4.5) are solved with prescribed tolerances. The algebraic form of $\boldsymbol{\mathcal{P}}_{SCR}$ is defined implicitly through the solvers in Algorithm 1 and varies over iterations. Assuming that the three equations (4.3)–(4.5) are solved exactly, the spectrum of the preconditioned matrix will be {1}, and the solver will converge in one iteration. Because the preconditioner varies over iterations, we invoke FGMRES as the iterative method for the coupled system. The stopping condition of the FGMRES algorithm includes the tolerance for the absolute error δa, the tolerance for the relative error δr, and the maximum number of iterations nmax.

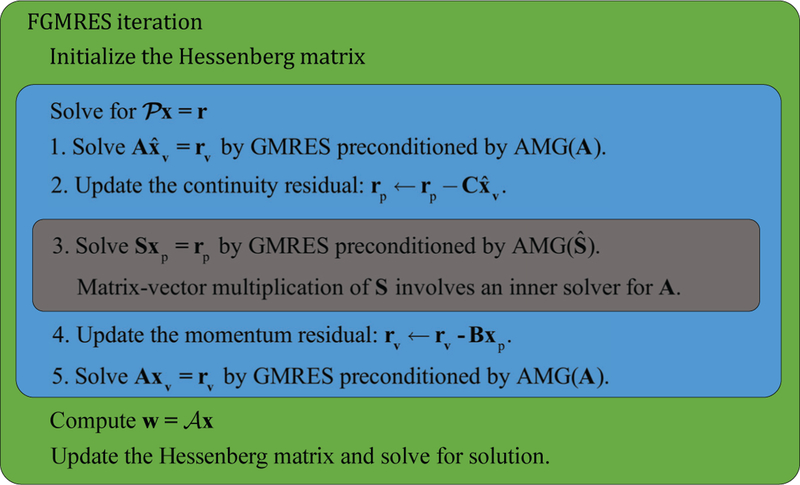

The FGMRES iteration serves as the outer solver, which minimizes the residual of the fully coupled linear system. Inside this FGMRES iteration, the application of the preconditioner is achieved through Algorithm 1, and one needs to solve with the block matrices A and S at this stage. We call this the intermediate solver. When solving with the Schur complement, its action on a vector is defined by Algorithm 2, which requires the inner solver to solve with A. The three levels of solvers are illustrated in Figure 1 with different colors.
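The application of the block factorization can be sketched on a small dense example. The code below is an illustration under stated assumptions, not the authors' Algorithm 1: it assumes the coupled matrix has the 2×2 block form [[A, B], [C, D]] with S = D − C A⁻¹ B, uses exact dense solves in place of the intermediate Krylov solvers, and the function name `apply_block_factorization` is hypothetical.

```python
import numpy as np

def apply_block_factorization(A, B, C, D, r_u, r_p, solve=np.linalg.solve):
    """Solve [[A, B], [C, D]] [x_u; x_p] = [r_u; r_p] via the block factorization.

    The Schur complement is formed explicitly only because this is a toy case;
    in a matrix-free setting only its action on a vector would be available.
    """
    S = D - C @ solve(A, B)
    x_u_hat = solve(A, r_u)              # first solve with A
    r_p_hat = r_p - C @ x_u_hat          # update the second residual block
    x_p = solve(S, r_p_hat)              # solve with the Schur complement
    x_u = solve(A, r_u - B @ x_p)        # second solve with A
    return x_u, x_p

# Hypothetical test data.
rng = np.random.default_rng(1)
n_u, n_p = 6, 3
A = 5.0 * np.eye(n_u) + 0.2 * rng.standard_normal((n_u, n_u))
B = 0.5 * rng.standard_normal((n_u, n_p))
C = 0.5 * rng.standard_normal((n_p, n_u))
D = 4.0 * np.eye(n_p)
r_u = rng.standard_normal(n_u)
r_p = rng.standard_normal(n_p)

x_u, x_p = apply_block_factorization(A, B, C, D, r_u, r_p)
x_ref = np.linalg.solve(np.block([[A, B], [C, D]]), np.concatenate([r_u, r_p]))
```

The sequence makes the cost structure visible: two solves with A and one solve with S per application, which is what the intermediate solver has to deliver.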

Figure 1:

Implementation of the FGMRES with the nested block preconditioner. The green color represents the outer solver; the blue color represents the intermediate solver; the grey color represents the inner solver.
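The innermost level can be illustrated by a matrix-free Schur complement action in which the solve with A is only approximate. This is a sketch under assumptions: a plain Jacobi iteration stands in for the AMG-preconditioned GMRES inner solver, the names `jacobi_solve` and `schur_action` are hypothetical, and A is made strictly diagonally dominant so that the Jacobi iteration converges.

```python
import numpy as np

def jacobi_solve(A, b, rel_tol, max_iter=500):
    """Stand-in inner solver: Jacobi iteration stopped on the relative residual."""
    d = np.diag(A)
    x = np.zeros_like(b)
    for k in range(max_iter):
        r = b - A @ x
        if np.linalg.norm(r) <= rel_tol * np.linalg.norm(b):
            return x, k
        x = x + r / d
    return x, max_iter

def schur_action(A, B, C, D, x_p, inner_tol):
    """Return S x_p = D x_p - C A^{-1} (B x_p) with an inexact solve for A."""
    y, inner_its = jacobi_solve(A, B @ x_p, inner_tol)
    return D @ x_p - C @ y, inner_its

# Hypothetical data; A is strictly diagonally dominant by construction.
rng = np.random.default_rng(2)
n_u, n_p = 8, 3
A = 10.0 * np.eye(n_u) + rng.uniform(-0.5, 0.5, (n_u, n_u))
B = rng.uniform(-1.0, 1.0, (n_u, n_p))
C = rng.uniform(-1.0, 1.0, (n_p, n_u))
D = 2.0 * np.eye(n_p)
x_p = rng.standard_normal(n_p)

exact = D @ x_p - C @ np.linalg.solve(A, B @ x_p)
loose, its_loose = schur_action(A, B, C, D, x_p, inner_tol=1e-2)
tight, its_tight = schur_action(A, B, C, D, x_p, inner_tol=1e-10)
err_loose = np.linalg.norm(loose - exact)
err_tight = np.linalg.norm(tight - exact)
```

A tighter inner tolerance costs more inner iterations and yields a more accurate Schur complement action, which is the trade-off the nested design exposes.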

Remark 5. In the construction of the proposed block preconditioner, the full block factorization is utilized. One can also use only part of the factorization to devise different preconditioners. For example, the diagonal part is an efficient candidate for the Stokes equations [19, 51]. Assuming exact arithmetic, it gives convergence within 4 iterations. Using the upper triangular part often gives a good balance between the convergence rate and the computational cost [52], as it leads to convergence within 2 iterations [53, 54], assuming exact arithmetic. In our case, the full block factorization gives the fastest convergence rate. We prefer this because the solution of the Schur complement equation is often the most expensive part of the overall algorithm. Therefore, in comparison with an upper triangular block preconditioner, we pay the price of solving with A twice in order to reduce the number of solves with the Schur complement.
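The two-iteration bound for the upper triangular variant can be checked numerically. The sketch below assumes the block form [[A, B], [C, D]] and right preconditioning with the exact upper triangular factor [[A, B], [0, S]]; the data are hypothetical. The right-preconditioned matrix T then satisfies (T − I)² = 0, so its minimal polynomial has degree two.

```python
import numpy as np

rng = np.random.default_rng(3)
n_u, n_p = 6, 3
A = 5.0 * np.eye(n_u) + 0.2 * rng.standard_normal((n_u, n_u))
B = 0.5 * rng.standard_normal((n_u, n_p))
C = 0.5 * rng.standard_normal((n_p, n_u))
D = 4.0 * np.eye(n_p)

S = D - C @ np.linalg.solve(A, B)                      # exact Schur complement
K = np.block([[A, B], [C, D]])                         # coupled matrix
P_upper = np.block([[A, B], [np.zeros((n_p, n_u)), S]])

T = K @ np.linalg.inv(P_upper)                         # right-preconditioned matrix
N = T - np.eye(n_u + n_p)                              # equals [[0, 0], [C A^{-1}, 0]]

is_identity = np.allclose(N, 0.0, atol=1e-10)          # False: T is not the identity
is_nilpotent = np.allclose(N @ N, 0.0, atol=1e-10)     # True: (T - I)^2 = 0
```

Because (T − I)² vanishes while T − I does not, a Krylov method such as GMRES needs at most two iterations in exact arithmetic, whereas the full factorization reduces T to the identity.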

Remark 6. In the above algorithm, the nested block preconditioner can be regarded as the result of an inexact factorization of the coupled matrix. The inexactness is due to the approximation made by the solvers in the intermediate and inner levels. The preconditioner is thus defined by the tolerances of these solvers. Using strict tolerances makes the preconditioner closer to the exact factorization. However, this is impractical, since it makes the algorithm as expensive as the SCR approach. At the other extreme, one may solve (4.4) by applying the preconditioner once without invoking the inner solver. This makes the algorithm as simple as the coupled approach with the SIMPLE preconditioner and potentially endangers the robustness. We adjust the tolerances to tune the preconditioner, noting that there is considerable leeway in the choice of the tolerance values, ranging from strict to loose. The effect of the tolerances of the intermediate and inner solvers will be studied in Section 5.

Remark 7. Choosing a good preconditioner for the Schur complement is critical for the performance of the proposed nested block preconditioner. In our experience, using a scaled pressure mass matrix gives satisfactory results as well [55]. For compressible materials, this preconditioner does not need to be explicitly assembled, and one can use D directly (see Appendix A). In this work, we focus on $D - C\,(\mathrm{diag}(A))^{-1} B$, since this choice is a better approximation of S. In [56], a sparse approximate inverse is utilized to construct the preconditioner for the Schur complement, which is worthy of future study.
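The Schur complement approximation named in this remark is simple to form. The following sketch uses dense NumPy arrays for brevity (a practical code would use sparse matrices), and the function name `simple_schur_approximation` and the test data are hypothetical.

```python
import numpy as np

def simple_schur_approximation(A, B, C, D):
    """Return D - C diag(A)^{-1} B, scaling the rows of B by 1/diag(A)."""
    inv_diag = 1.0 / np.diag(A)
    return D - C @ (inv_diag[:, None] * B)

# When A is diagonal the approximation coincides with the exact Schur complement.
A_diag = np.diag([2.0, 4.0, 5.0, 10.0])
B = np.arange(8.0).reshape(4, 2)
C = B.T.copy()
D = np.eye(2)
S_exact = D - C @ np.linalg.solve(A_diag, B)
S_hat = simple_schur_approximation(A_diag, B, C, D)

# With off-diagonal coupling in A the two differ.
A_full = A_diag + 0.1 * np.ones((4, 4))
S_exact_full = D - C @ np.linalg.solve(A_full, B)
S_hat_full = simple_schur_approximation(A_full, B, C, D)
gap = np.linalg.norm(S_hat_full - S_exact_full) / np.linalg.norm(S_exact_full)
```

The approximation stays sparse whenever B and C are sparse, which is what makes it suitable as the matrix from which an algebraic preconditioner is built.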

5. Numerical Results

In our work, the outer solver is FGMRES(200) with nmax = 200 and δa = $10^{-50}$. In the intermediate level, (4.3) and (4.5) are solved by GMRES(500) preconditioned by AMG with and . The equation (4.4) is solved by GMRES(200), with and . We use the AMG preconditioner constructed from the Schur complement approximation discussed in Remark 7. In the inner level, the linear system is solved via GMRES(500) preconditioned by AMG with and . We use BoomerAMG [57] from the Hypre package [58] as the parallel AMG implementation. The settings of BoomerAMG are summarized in Table 1. With the above settings, the accuracy of the solution is dictated by δr, and the convergence rate is controlled by the three tolerances of the intermediate and inner solvers.

Table 1:

Settings of the BoomerAMG preconditioner [58].

| Setting | Value |
|---|---|
| Cycle type | V-cycle |
| Coarsening method | HMIS |
| Interpolation method | Extended method (ext+i) |
| Truncation factor for the interpolation | 0.3 |
| Threshold for being strongly connected | 0.5 |
| Maximum number of elements per row for interp. | 5 |
| The number of levels for aggressive coarsening | 2 |
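For readers who access BoomerAMG through PETSc, the settings in Table 1 could be expressed as runtime options. This is an assumption: the text states that BoomerAMG from Hypre is used but does not say how it is configured, so the mapping below onto PETSc's Hypre option names is only a plausible sketch, and the helper `to_command_line` is hypothetical.

```python
# Table 1 expressed with PETSc's option names for the Hypre BoomerAMG interface.
# Assumption: BoomerAMG is driven through PETSc; the paper does not state this.
BOOMERAMG_OPTIONS = {
    "pc_type": "hypre",
    "pc_hypre_type": "boomeramg",
    "pc_hypre_boomeramg_cycle_type": "V",          # cycle type
    "pc_hypre_boomeramg_coarsen_type": "HMIS",     # coarsening method
    "pc_hypre_boomeramg_interp_type": "ext+i",     # interpolation method
    "pc_hypre_boomeramg_truncfactor": 0.3,         # interpolation truncation factor
    "pc_hypre_boomeramg_strong_threshold": 0.5,    # strong-connection threshold
    "pc_hypre_boomeramg_P_max": 5,                 # max elements per row for interp.
    "pc_hypre_boomeramg_agg_nl": 2,                # levels of aggressive coarsening
}

def to_command_line(options, prefix=""):
    """Render an options dictionary as a PETSc-style command-line string."""
    return " ".join(f"-{prefix}{key} {value}" for key, value in options.items())

option_string = to_command_line(BOOMERAMG_OPTIONS)
```

A solver-specific prefix (for example one per block solver) can be passed through `prefix` so that the blocks A and S receive separate settings; the prefixes themselves would be an implementation choice.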

To provide baseline examples, we solve the system of equations (4.1) with two different preconditioners. As the first example, we solve the system of equations by FGMRES(200) using the SIMPLE preconditioner with nmax = 200 and δa = $10^{-50}$. In this preconditioner, the settings of the linear solver (including the Krylov subspace method, the preconditioners, and the stopping criteria) associated with A and are exactly the same as the ones used in the nested block preconditioner. The accuracy of the solver is determined by δr, and the performance of the preconditioner is controlled by and . Notice that, in this preconditioner, is the tolerance for solving with the matrix .

As another baseline example, we solve the linear system by GMRES(200) preconditioned by a one-level additive Schwarz domain decomposition preconditioner [59]. The maximum number of iterations is fixed at 10000, and the tolerance for the absolute error is fixed at $10^{-50}$. In this preconditioner, each processor is assigned a single subdomain, and an incomplete LU factorization (ILU) with a fill-in ratio of 1.0 is invoked to solve the problem on each subdomain. This preconditioner is purely algebraic and is usually very competitive for medium-size parallel simulations. However, as will be shown in the numerical examples, the one-level domain decomposition preconditioner is not a robust option. Also, as the problem size and the number of subdomains grow, more iterations are needed to propagate information across the whole domain. In our implementation, the restricted additive Schwarz method from PETSc [60] is utilized as the domain decomposition preconditioner; the PILUT routine from Hypre [58] is used as the solver for the subdomain algebraic problem.
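The idea behind a one-level additive Schwarz preconditioner can be shown in a few lines. The sketch below is a simplification and not the configuration described above: it uses non-overlapping subdomains and exact dense subdomain solves instead of the restricted variant with ILU, and the function name `additive_schwarz_apply` and the one-dimensional Laplacian test matrix are hypothetical.

```python
import numpy as np

def additive_schwarz_apply(K, r, subdomains):
    """Apply z = sum_i R_i^T (R_i K R_i^T)^{-1} R_i r for index sets R_i."""
    z = np.zeros_like(r)
    for idx in subdomains:
        K_local = K[np.ix_(idx, idx)]                  # subdomain block of K
        z[idx] += np.linalg.solve(K_local, r[idx])     # local solve, added back
    return z

# One-dimensional Laplacian as a toy problem.
n = 12
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
r = np.ones(n)

one_subdomain = [np.arange(n)]
two_subdomains = [np.arange(0, 6), np.arange(6, 12)]

z_one = additive_schwarz_apply(K, r, one_subdomain)    # equals the exact solve
z_two = additive_schwarz_apply(K, r, two_subdomains)   # ignores cross-subdomain coupling
z_ref = np.linalg.solve(K, r)
```

With a single subdomain the preconditioner is exact; once the domain is split, the coupling between subdomains is dropped, which is why information needs more Krylov iterations to travel across the domain as the number of subdomains grows.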

All numerical simulations are performed on the Stampede2 supercomputer at the Texas Advanced Computing Center (TACC), using Intel Xeon Platinum 8160 nodes. Each node contains 48 cores with a 2.1 GHz nominal clock rate and 192 GB RAM (4 GB RAM per core).

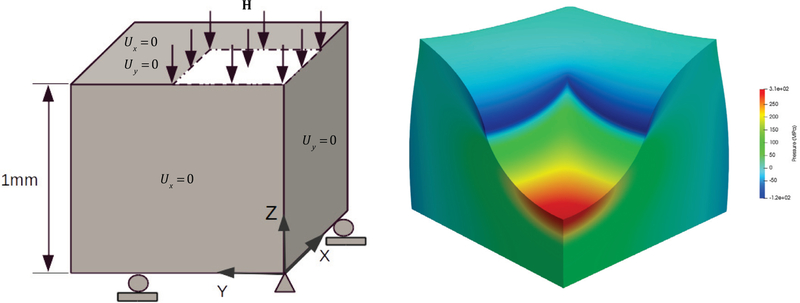

5.1. Compression of a block

The compression of a unit block was proposed as a benchmark problem for nearly incompressible solids [36]. The geometrical configuration and the boundary conditions are illustrated in Figure 2. The problem is discretized in space by a uniform structured tetrahedral mesh generated by Gmsh [61], and we use Δx to denote the edge length of the mesh. The original benchmark problem was proposed in the quasi-static setting, and a ‘dead’ surface load H is applied on a quarter portion of the top surface, pointing in the negative z-direction with magnitude |H| = 320 MPa. In this work, the problem is investigated in the dynamic setting by gradually increasing the load force as a linear function of time. The material is described by a Neo-Hookean model, whose Gibbs free energy function takes the form

Following [36], the material parameters are chosen as μ = 80.194 MPa, κ = 400889.806 MPa, and ρ0 = $1.0 \times 10^{3}$ kg/m$^3$. The corresponding Poisson’s ratio is 0.4999. In Section 5.1.3, we examine the robustness of the preconditioner with regard to varying material moduli. In the following discussion, the governing equations have been non-dimensionalized using centimetre-gram-second units. Note that the edge length of the cube is 1 mm = 0.1 cm. The number of elements in each direction of the cube is then given by 1/(10Δx).
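The quoted Poisson's ratio and the element count can be checked with a short calculation. The sketch assumes the standard small-strain relation between the bulk modulus, the shear modulus, and Poisson's ratio, ν = (3κ − 2μ) / (2(3κ + μ)); the function names are hypothetical.

```python
def poisson_ratio(kappa, mu):
    """Poisson's ratio from the bulk modulus kappa and the shear modulus mu."""
    return (3.0 * kappa - 2.0 * mu) / (2.0 * (3.0 * kappa + mu))

def elements_per_direction(dx):
    """Number of elements along one edge of the 0.1 cm cube, 1 / (10 dx)."""
    return round(1.0 / (10.0 * dx))

nu = poisson_ratio(kappa=400889.806, mu=80.194)   # moduli in MPa
n_elem = elements_per_direction(1.0 / 640.0)
```

With the parameters above, ν agrees with the quoted value 0.4999 to within rounding, and a mesh size of Δx = 1/640 corresponds to 64 elements per direction.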

Figure 2:

Three-dimensional compression of a block: (left) geometry of the referential configuration and the boundary conditions; (right) pressure profile in the current configuration with Δx = 1/3840.

5.1.1. Performance with varying inner solver accuracy

In this test, we investigate the impact of the accuracy of the inner solver on the overall iterative method. We fix the mesh size to be Δx = 1/640 and the time step size to be Δt = $10^{-1}$. The simulation is performed with 8 CPUs, with approximately 131072 equations assigned to each CPU. In this study, we choose δr = $10^{-8}$, and we consider two settings for the intermediate solver: and . We collect the statistics of the solver in the first time step with varying values of the inner solver tolerance (Table 2). The results associated with the tolerance $10^{0}$ are obtained by solving with the approximate Schur complement in step 3 of Algorithm 1. This choice corresponds to choosing $H_1 = \mathrm{diag}(A)^{-1}$ and $H_2 = A^{-1}$ in (4.7), making it similar to the SIMPLE preconditioner.
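The problem sizes quoted in this section follow a simple pattern. The sketch below assumes four unknowns per grid cell of the structured mesh (three velocity components plus pressure); this factor is an inference that happens to reproduce the quoted counts, not a statement from the text, and the function name `estimated_equations` is hypothetical.

```python
def estimated_equations(inv_dx, unknowns_per_point=4):
    """Estimate the number of equations for a mesh size dx = 1 / inv_dx.

    Assumption: the count scales as unknowns_per_point * (1 / (10 dx))**3.
    """
    per_direction = inv_dx // 10
    return unknowns_per_point * per_direction ** 3

per_cpu_640 = estimated_equations(640) // 8     # 8 CPUs in this test
total_1280 = estimated_equations(1280)          # strong-scaling mesh
per_cpu_480 = estimated_equations(480) // 8     # coarsest weak-scaling mesh
```

These estimates match the figures quoted for the 8-CPU test (131072 equations per CPU), for the strong-scaling study (about 8.39 million degrees of freedom), and for the weak-scaling study (about 5.53 × 10⁴ equations per CPU).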

Table 2:

The impact of the accuracy of the inner solver on the performance of the linear solver. The CPU time is collected for the linear solver only. The columns report the inner solver tolerance, the CPU time, the total number of nonlinear iterations, the total number of FGMRES iterations n, the averaged number of iterations for solving with A in (4.3) and (4.5), the averaged number of iterations for solving (4.4), and the averaged number of iterations for solving (4.6). The two groups of rows correspond to the two settings of the intermediate solver.

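| Intermediate setting | Inner solver tolerance | CPU time (sec.) | Nonlinear iterations | n | Avg. iterations for A | Avg. iterations for (4.4) | Avg. iterations for (4.6) |
|---|---|---|---|---|---|---|---|
| first | $10^{0}$ | $4.86 \times 10^{3}$ | 4 | 477 | 74.52 | 33.89 | - |
| first | $10^{-2}$ | $9.02 \times 10^{2}$ | 4 | 17 | 75.62 | 22.29 | 29.31 |
| first | $10^{-4}$ | $8.08 \times 10^{2}$ | 4 | 11 | 75.30 | 22.27 | 45.00 |
| first | $10^{-6}$ | $6.97 \times 10^{2}$ | 4 | 8 | 75.19 | 23.13 | 55.82 |
| first | $10^{-8}$ | $8.11 \times 10^{2}$ | 4 | 8 | 75.19 | 22.13 | 65.15 |
| first | $10^{-10}$ | $8.47 \times 10^{2}$ | 4 | 7 | 74.86 | 23.29 | 74.62 |
| second | $10^{0}$ | $4.87 \times 10^{3}$ | 4 | 664 | 55.60 | 21.29 | - |
| second | $10^{-2}$ | $6.30 \times 10^{2}$ | 4 | 18 | 56.68 | 13.74 | 30.35 |
| second | $10^{-4}$ | $5.10 \times 10^{2}$ | 4 | 11 | 56.30 | 13.73 | 46.14 |
| second | $10^{-6}$ | $5.12 \times 10^{2}$ | 4 | 9 | 56.06 | 14.11 | 56.91 |
| second | $10^{-8}$ | $6.01 \times 10^{2}$ | 4 | 9 | 56.17 | 14.44 | 65.62 |
| second | $10^{-10}$ | $6.82 \times 10^{2}$ | 4 | 9 | 56.17 | 14.56 | 74.87 |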

In our numerical experiments, we observe that with the choice of , the outer solver converges in fewer than two iterations on average. In fact, we also experimented with stricter tolerances and observed convergence of the outer solver in one iteration. (We do not report this because the stricter choice requires a larger Krylov subspace, which is incompatible with our current settings.) This result corroborates the fact that the full block preconditioner gives convergence in one iteration with exact arithmetic.

In the literature, the choice of the inner solver accuracy is under debate. In [24], it is suggested that the inner solver should be more accurate than its upper-level counterpart (i.e., in our case) to guarantee an accurate representation of the Schur complement. Meanwhile, it is shown in [62] that Krylov methods are in fact very robust in the presence of inexact matrix-vector multiplications. In our test, as we gradually relax the inner solver tolerance, we observe that the inner solver converges with fewer iterations while the outer solver requires more iterations to compensate for the inaccurate evaluations of the Schur complement. As gets larger than , initially the overhead is low. As the tolerance further increases, the outer solver requires more iterations and the overall cost of the solver grows correspondingly. For the two cases, the break-even points are achieved with inner tolerances of $10^{-6}$ and $10^{-4}$, respectively. Examining the number of iterations for the outer solver, we observe a steady growth of n once grows larger than . Though it is hard to predict the optimal choice of δrI for general cases, we observe that a choice of is safe for robust performance; a slightly relaxed tolerance for the inner solver (e.g. ) is beneficial for efficiency. We also note that is insensitive to the inner solver accuracy as long as .

For comparison, we also examined the solver performance without the inner solver. We solve with instead of S in (4.4) directly. This corresponds to a highly inaccurate evaluation of the Schur complement. We see that the iteration number n, the averaged iteration number for the intermediate solver , and the CPU time of the outer solver increase significantly. The severe degradation of solver performance signifies the importance of an accurate evaluation of the Schur complement.

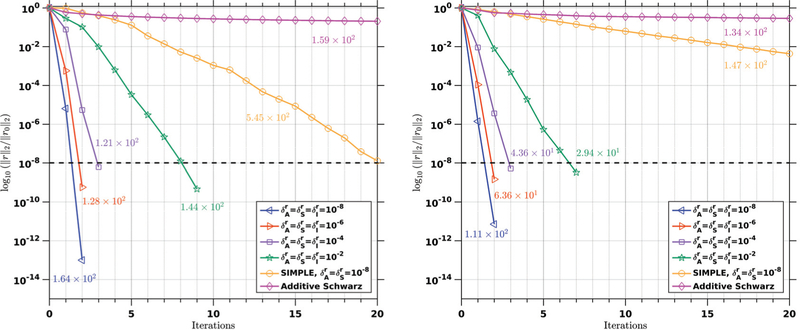

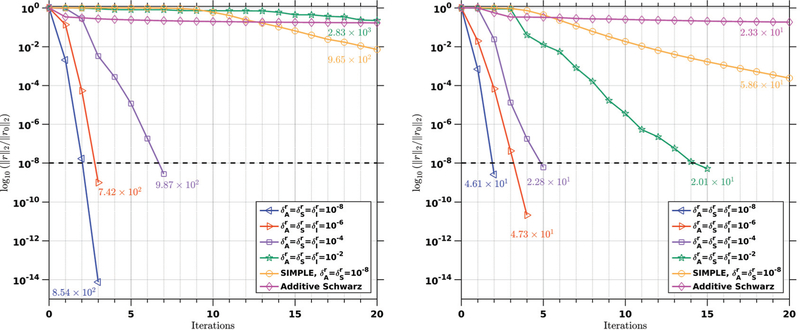

5.1.2. Performance with varying intermediate solver accuracy

In this example, we examine the effect of varying intermediate solver tolerances on the solver performance. We consider a uniform mesh with Δx = 1/640, with two time step sizes: Δt = $10^{-1}$ and $10^{-5}$. We choose δr = $10^{-8}$ for the outer solver. We set the tolerances of the intermediate and inner solvers to a common value and vary it from $10^{-8}$ to $10^{-2}$. For comparison, the same problem is simulated with the SIMPLE preconditioner and the additive Schwarz preconditioner. In the SIMPLE preconditioner, we solve the equations associated with A and with . The convergence is monitored for the first time step, which is usually the most challenging part of dynamic calculations. The convergence history of the linear solver in the first nonlinear iteration is plotted in Figure 3. It can be seen that the accuracy of the intermediate solvers affects the convergence rate of the linear solver. When the equations in the intermediate level are solved to a high precision, the convergence rate of the outer solver is steep. As one loosens the tolerances for the intermediate solvers, the proposed algorithm requires more iterations for convergence. Yet, even for a tolerance as loose as $10^{-2}$, the convergence rate is still much steeper than that of the SIMPLE preconditioner. The average time for solving the matrix problem per nonlinear iteration is reported in the figures as well. We observe that when choosing a strict tolerance for the intermediate and inner solvers, although convergence is achieved with fewer iterations, the cost per iteration is high and the overall time to solution is correspondingly high. A looser tolerance renders the application of the nested block preconditioner more cost-effective, and the overall algorithm is faster. In comparison with the SIMPLE and additive Schwarz methods, the proposed nested block preconditioning technique is competitive.

Figure 3:

Convergence history for Δt = $10^{-1}$ (left) and $10^{-5}$ (right). The horizontal dashed black line indicates the prescribed stopping criterion for the relative error, which is $10^{-8}$ here. In the case of Δt = $10^{-1}$, the SIMPLE method converges in 21 iterations, and the additive Schwarz method converges in 2644 iterations. In the case of Δt = $10^{-5}$, the SIMPLE method converges in 71 iterations, and the additive Schwarz method converges in 2030 iterations. The numbers indicate the averaged time per nonlinear iteration in seconds.

5.1.3. Performance with varying material properties

In this example, we vary the material properties and study the robustness of the proposed preconditioner. The Poisson’s ratio varies from 0.0 to 0.5, spanning the range relevant to most engineering and biological materials. The shear modulus μ is taken as 80.194 × η MPa, wherein η is a non-dimensional number. Correspondingly, the compression force is adjusted by multiplying it by the scaling factor η, for values of $10^{-2}$, $10^{0}$, and $10^{2}$. The stopping condition for the linear solver is δr = $10^{-8}$, and we choose . The mesh size is fixed to be Δx = 1/480, and the problem is simulated with 8 CPUs. The time step size is Δt = $10^{-1}$, and we integrate the problem up to T = 1.0. We use a relatively large time step size here to make the matrix A dominated by the stiffness matrix. The statistics of the solver performance are collected over ten time steps (Table 3).

Table 3:

The performance of the linear solver with varying material properties. Each entry reports the averaged number of FGMRES iterations, followed in square brackets by the averaged number of iterations for solving with A in (4.3) and (4.5) and the averaged number of iterations for solving (4.4), and in parentheses by the averaged CPU time for one nonlinear iteration in seconds. The rows correspond to the Poisson’s ratio; η is a non-dimensional scaling factor for the shear modulus.

| Poisson’s ratio | η = $10^{-2}$ | η = $10^{0}$ | η = $10^{2}$ |
|---|---|---|---|
| 0.0 | 2.0 [46.9, 15.9] (46.6) | 2.0 [48.1, 16.0] (48.3) | 2.0 [47.9, 15.3] (46.2) |
| 0.1 | 2.0 [48.5, 19.0] (49.2) | 2.0 [48.4, 17.9] (50.3) | 2.0 [48.1, 15.5] (46.5) |
| 0.2 | 2.0 [48.3, 20.2] (52.8) | 2.0 [48.0, 19.9] (52.9) | 2.0 [48.3, 16.8] (47.0) |
| 0.3 | 2.0 [47.9, 23.1] (56.4) | 2.0 [41.1, 21.5] (57.9) | 2.0 [48.5, 17.7] (48.6) |
| 0.4 | 2.0 [47.4, 28.5] (66.4) | 2.0 [48.2, 25.8] (65.5) | 2.0 [48.6, 19.2] (50.6) |
| 0.5 | 2.2 [47.1, 36.3] (101.2) | 2.0 [47.4, 24.6] (66.3) | 2.0 [46.5, 20.3] (48.6) |

For all cases, the number of iterations for the outer solver remains around two. In fact, it is only for the case of a Poisson’s ratio of 0.5 and η = $10^{-2}$ that the outer solver needs slightly more than two iterations. The number of iterations for solving with A in (4.3) and (4.5) remains around 47, and hence can be regarded as independent of the material properties. The number of iterations for solving (4.4) increases with increasing Poisson’s ratio. This can be explained by looking at $S = D - C A^{-1} B$. The matrix D is dominated by the mass matrix scaled with a factor of β. As the Poisson’s ratio approaches 0.5, the isothermal compressibility coefficient β goes to zero. Consequently, the well-conditioned matrix D diminishes, and the condition number of the Schur complement gets larger. This is reflected in the increase of the iteration count for (4.4) as the Poisson’s ratio goes from 0.0 to 0.5 for all three shear moduli. On the other hand, this iteration count increases as the material gets softer, and the trend is more pronounced as the Poisson’s ratio gets larger. This can be explained by looking at $A^{-1}$ in the Schur complement. For large time steps, A contains a significant contribution from the stiffness matrix, and the inverse of the stiffness matrix is proportional to 1/μ. It is known that diag(A) is not a good candidate for approximating the stiffness matrix, and this is magnified for softer materials due to the factor 1/μ.

5.1.4. Parallel performance

We investigate the efficiency of the method by evaluating its fixed-size (strong) scalability. The spatial mesh size is Δx = 1/1280, with about $8.39 \times 10^{6}$ degrees of freedom. The time step size is fixed at $10^{-5}$, and we integrate the problem in time up to T = $10^{-4}$. The stopping criterion for the FGMRES iteration is δr = $10^{-3}$; the tolerances for the intermediate and inner solvers are . The communication speed of MPI messages between nodes is typically slower than that within a single node. To rule out this discrepancy in communication speed, we run the test by assigning only one CPU per node. This means that the MPI messages are communicated purely through the cluster network. We observe that the parallel efficiency stays between 81% and 99% relative to the two-processor run, and the average number of FGMRES iterations remains at 2.0 for a wide range of processor counts (Table 4).

Table 4:

The strong scaling performance. The second column reports the averaged number of FGMRES iterations for solving (3.16). TA and TL represent the timings for matrix assembly and the linear solver, respectively. The efficiency is computed based on the total time.

| Proc. | Avg. FGMRES iterations | TA (sec.) | TL (sec.) | Total (sec.) | Efficiency |
|---|---|---|---|---|---|
| 2 | 2.0 | $3.13 \times 10^{3}$ | $2.16 \times 10^{4}$ | $2.49 \times 10^{4}$ | 100% |
| 4 | 2.0 | $1.57 \times 10^{3}$ | $1.09 \times 10^{4}$ | $1.26 \times 10^{4}$ | 99% |
| 8 | 2.0 | $8.49 \times 10^{2}$ | $5.58 \times 10^{3}$ | $6.48 \times 10^{3}$ | 96% |
| 16 | 2.0 | $4.38 \times 10^{2}$ | $2.96 \times 10^{3}$ | $3.43 \times 10^{3}$ | 91% |
| 32 | 2.0 | $2.33 \times 10^{2}$ | $1.62 \times 10^{3}$ | $1.87 \times 10^{3}$ | 83% |
| 64 | 2.0 | $1.10 \times 10^{2}$ | $8.37 \times 10^{2}$ | $9.56 \times 10^{2}$ | 81% |
| 128 | 2.0 | $5.65 \times 10^{1}$ | $3.84 \times 10^{2}$ | $4.49 \times 10^{2}$ | 87% |
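The efficiency column of Table 4 can be reproduced from the total times. The sketch assumes the usual fixed-size definition, in which the efficiency on P processors is the reference processor-time product divided by P times the measured time, with the two-processor run as the reference; the function name is hypothetical.

```python
def strong_scaling_efficiency(procs, times):
    """Efficiency relative to the first entry: (P0 * T0) / (P * T)."""
    reference = procs[0] * times[0]
    return [reference / (p * t) for p, t in zip(procs, times)]

# Processor counts and total times (seconds) taken from Table 4.
procs = [2, 4, 8, 16, 32, 64, 128]
total_time = [2.49e4, 1.26e4, 6.48e3, 3.43e3, 1.87e3, 9.56e2, 4.49e2]

efficiency = strong_scaling_efficiency(procs, total_time)
efficiency_percent = [round(100.0 * e) for e in efficiency]
```

Rounded to whole percentages, the computed values agree with the tabulated efficiencies, including the slight recovery at 128 processors.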

To compare the performance of different preconditioners, we also perform a weak scaling test of the solver, with δr = $10^{-3}$. Tolerances are set to for the nested block preconditioner and to for the SIMPLE preconditioner. The computational mesh is progressively refined, and each CPU is assigned approximately $5.53 \times 10^{4}$ equations. We simulate the problem with two different time step sizes: Δt = $10^{-1}$ and $10^{-5}$. The statistics of the solver performance are collected for ten time steps (Table 5). At large time steps, A is dominated by the stiffness matrix and its solution procedure requires more iterations. At the same time, the Schur complement has a better condition number and converges with fewer iterations. At small time steps, the situation is reversed: the matrix A is dominated by the mass matrix and can be solved with fewer iterations. Mesh refinement has an impact on the intermediate solvers, and we observe an increase in the number of iterations for both A and the Schur complement. For the outer solver using the nested block preconditioner, the iteration count stays around a constant value, suggesting that the outer solver is insensitive to mesh refinement. The averaged CPU time for the linear solver grows with mesh refinement, which is primarily attributed to the AMG preconditioner adopted. Indeed, there are known bottlenecks of the parallel AMG preconditioner [35, 63], which prohibit ideal weak scalability. For the SIMPLE preconditioner, the iteration counts and the CPU time grow faster than those of the nested block preconditioner. The additive Schwarz method is cheaper per iteration; however, the number of iterations needed for convergence is much higher. For the finest mesh, the additive Schwarz method fails to converge in 10000 iterations. The proposed nested block preconditioner gives the most robust and efficient performance.

Table 5:

Comparison of the averaged iteration counts and CPU time in seconds for the nested block preconditioner, the SIMPLE preconditioner, and the additive Schwarz preconditioner. For the nested block preconditioner, the iteration counts of the outer solver and of the intermediate solvers for A and S are listed. NC stands for no convergence. For the Δx = 1/3840 case, the additive Schwarz preconditioner failed to converge in 10000 iterations.

| 1/Δx | Proc. | Nested: outer iterations | Nested: iterations for A | Nested: iterations for S | Nested: CPU time | SIMPLE: iterations | SIMPLE: CPU time | Additive Schwarz: iterations | Additive Schwarz: CPU time |
|---|---|---|---|---|---|---|---|---|---|
| Δt = $10^{-1}$ | | | | | | | | | |
| 480 | 8 | 2.3 | 31.7 | 6.7 | 19.7 | 13.3 | 21.6 | 1114.4 | 20.4 |
| 960 | 64 | 2.5 | 43.1 | 7.4 | 50.2 | 17.9 | 63.6 | 3368.9 | 106.8 |
| 1920 | 512 | 2.7 | 55.4 | 9.1 | 108.0 | 25.0 | 153.6 | 8642.4 | 305.4 |
| 3840 | 4096 | 2.9 | 68.8 | 9.6 | 220.8 | 47.6 | 504.2 | NC | NC |
| Δt = $10^{-5}$ | | | | | | | | | |
| 480 | 8 | 2.3 | 4.6 | 16.1 | 5.3 | 22.7 | 6.3 | 916.0 | 17.0 |
| 960 | 64 | 2.0 | 6.9 | 26.4 | 18.5 | 38.6 | 31.6 | 2133.9 | 67.4 |
| 1920 | 512 | 2.0 | 9.1 | 34.3 | 52.8 | 65.7 | 71.0 | 9669.1 | 315.0 |
| 3840 | 4096 | 2.2 | 11.3 | 42.0 | 139.0 | 101.2 | 221.4 | NC | NC |

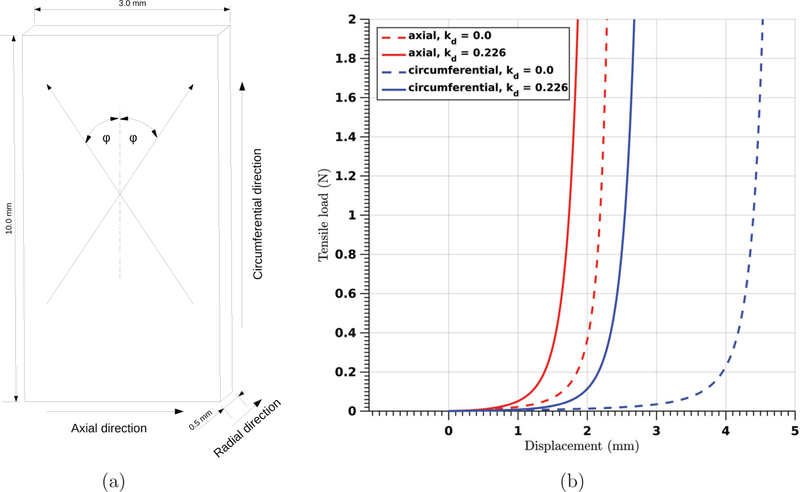

5.2. Tensile test of an anisotropic fibre-reinforced hyperelastic soft tissue model

In this example, we apply the proposed preconditioning technique to an anisotropic hyperelastic material model, which has been used to describe arterial tissue layers with distributed collagen fibres. The isochoric and volumetric parts of the free energy are

In the above, models the groundmatrix via an isotropic Neo-Hookean material, with μ being the shear modulus; models the ith family of collagen fibres by an exponential function. In , ai is a unit vector that describes the mean orientation of the ith family of fibres in the reference configuration. The parameter kd ∈ [0, 1/3] is a structural parameter that characterizes the dispersion of the collagen fibres. For ideally aligned fibres, the dispersion parameter kd is 0, while for isotropically distributed fibres, it takes the value 1/3. The parameter k1 is a material parameter that describes the stiffness of the fibre, and k2 is a non-dimensional parameter. The volumetric energy Gvol indicates that the model is fully incompressible. Interested readers are referred to [37] for detailed discussions of the histology and constitutive modeling of the arterial layers. In the numerical study, we perform a tensile test for the tissue model. Following [37], the geometry of the specimen has length 10.0 mm, width 3.0 mm, and thickness 0.5 mm. The material parameters are μ = 7.64 kPa, k1 = 996.6 kPa, k2 = 524.6. Assuming that the fibre orientation has no radial component, the unit vector is characterized completely by φ, the angle between the circumferential direction and the mean fibre orientation direction (see Figure 4 (a)). For the circumferential specimen, φ = 49.98°; for the axial specimen, φ = 40.02°. On the loading surface, traction force is applied and the face is constrained to move only in the loading direction. Symmetry boundary conditions are properly applied, and we only consider one-eighth of the specimen in the simulations.

Figure 4:

Three-dimensional tensile test of an iliac adventitial strip: (a) geometry of the referential configuration; (b) computed load-displacement curves of the circumferential (blue) and axial (red) specimens with (kd = 0.226, solid curves) and without (kd = 0.0, dashed curves) dispersion of the collagen fibres.

Before studying the solver performance, we perform a simulation with 3.5 million unstructured linear tetrahedral elements to examine the VMS formulation for this material model. In this study, the tensile test is performed dynamically. The loading force is applied as a linear function of time and reaches 2 N in 100 seconds. We set the density of the tissue to 1.0 g/cm$^3$. The tensile load-displacement curves for the circumferential and axial specimens with kd = 0.0 and 0.226 are plotted in Figure 4 (b). We observe that before the fibres align along the loading direction, the groundmatrix provides the load-carrying capacity and the material response is very soft. When the fibres rotate to align with the loading direction, they take over the load, the material becomes stiffer, and the stiffness grows exponentially. For the axial specimen, the mean orientation of the fibres is closer to the loading direction, and hence it stiffens earlier than the circumferential specimen. Compared with the dispersed case, the specimen with perfectly aligned fibres (i.e., kd = 0.0) needs a significant amount of rotation before the fibres can carry load. In Figure 5 (a) and (b), the Cauchy stresses in the tensile direction for the circumferential and axial specimens with kd = 0.226 at the tensile load of 1.0 N are illustrated. The value of $a_1 \cdot C a_2 / (\|F a_1\| \|F a_2\|)$ characterizes the current fibre alignment, and it is illustrated in Figure 5 (c) and (d) for the circumferential and axial specimens. The maximum values in these specimens are 0.347 and 0.459, respectively. Correspondingly, the angles between the current mean fibre direction and the circumferential direction are 34.85° and 31.34°, respectively. In the following discussion, the problem has been non-dimensionalized using centimetre-gram-second units. Except for the study performed in Section 5.2.3, we adopt the axial specimen with the dispersion parameter kd = 0.226 as the model problem for the study of the solver performance.
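The reported fibre angles follow from the alignment measure by a short calculation. The sketch assumes that the two fibre families remain symmetric about the reference direction, so that the measure is the cosine of the angle between the two deformed fibre directions and the angle of each family is half of that; the function name is hypothetical.

```python
import math

def fibre_angle_deg(cos_between_families):
    """Half of the angle between the two deformed fibre families, in degrees.

    Assumption: the families stay symmetric about the reference direction.
    """
    return 0.5 * math.degrees(math.acos(cos_between_families))

angle_circumferential = fibre_angle_deg(0.347)
angle_axial = fibre_angle_deg(0.459)
```

Under this assumption the maximum values 0.347 and 0.459 give angles that agree with the reported 34.85° and 31.34° to within the rounding of the inputs.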

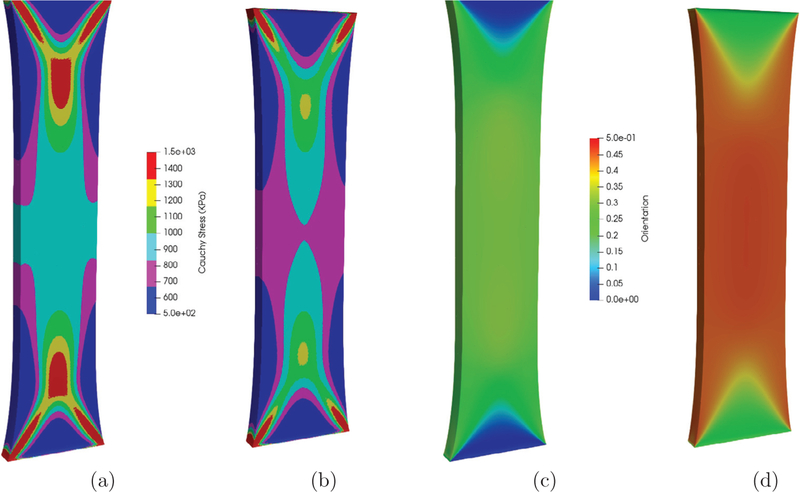

Figure 5:

Three-dimensional tensile test: the Cauchy stress in the loading direction is plotted for the circumferential (a) and axial (b) specimens. The mean orientation of the collagen fibres in the current configuration is plotted for the circumferential (c) and axial (d) specimens.

5.2.1. Performance with varying inner solver accuracy

In this test, we study the impact of the inner solver accuracy on the iterative solution algorithm. We fix the mesh size to be Δx = 1/400 and the time step size to be $10^{-5}$. The simulation is performed with 8 CPUs. In this test, the settings of the linear solver are identical to those of the study performed in Section 5.1.2. The statistics of the solver are collected for the first time step of the simulation with varying values of the inner solver tolerance (Table 6). The impact of the inner solver accuracy is similar to what was observed in Section 5.1.1. It is confirmed that using the inner solver may significantly improve the convergence rate of the linear solver. In both cases, the optimal performance in terms of time to solution is achieved by setting .

Table 6:

The impact of the accuracy of the inner solver on the performance of the linear solver. The CPU time is collected for the linear solver only. The columns report the inner solver tolerance, the CPU time, the total number of nonlinear iterations, the total number of FGMRES iterations n, the averaged number of iterations for solving with A in (4.3) and (4.5), the averaged number of iterations for solving (4.4), and the averaged number of iterations for solving (4.6). The two groups of rows correspond to the two settings of the intermediate solver.

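| Intermediate setting | Inner solver tolerance | CPU time (sec.) | Nonlinear iterations | n | Avg. iterations for A | Avg. iterations for (4.4) | Avg. iterations for (4.6) |
|---|---|---|---|---|---|---|---|
| first | $10^{0}$ | $7.56 \times 10^{1}$ | 1 | 47 | 16.81 | 19.81 | - |
| first | $10^{-2}$ | $7.19 \times 10^{1}$ | 1 | 9 | 15.78 | 58.56 | 1.95 |
| first | $10^{-4}$ | $6.52 \times 10^{1}$ | 1 | 5 | 15.30 | 58.80 | 3.75 |
| first | $10^{-6}$ | $6.20 \times 10^{1}$ | 1 | 3 | 14.33 | 58.33 | 7.81 |
| first | $10^{-8}$ | $5.83 \times 10^{1}$ | 1 | 2 | 13.75 | 59.50 | 11.85 |
| first | $10^{-10}$ | $7.55 \times 10^{1}$ | 1 | 2 | 13.75 | 60.00 | 15.19 |
| second | $10^{0}$ | $4.38 \times 10^{1}$ | 1 | 47 | 7.83 | 12.02 | - |
| second | $10^{-2}$ | $4.20 \times 10^{1}$ | 1 | 9 | 8.17 | 34.22 | 1.97 |
| second | $10^{-4}$ | $3.67 \times 10^{1}$ | 1 | 5 | 7.90 | 34.40 | 3.40 |
| second | $10^{-6}$ | $4.73 \times 10^{1}$ | 1 | 4 | 7.50 | 35.75 | 7.37 |
| second | $10^{-8}$ | $6.87 \times 10^{1}$ | 1 | 4 | 7.00 | 35.75 | 11.50 |
| second | $10^{-10}$ | $8.23 \times 10^{1}$ | 1 | 4 | 7.00 | 35.75 | 15.47 |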

5.2.2. Performance with varying intermediate solver accuracy

We examine the solver performance for anisotropic hyperelastic materials with varying tolerances for the intermediate solvers. The mesh size is fixed to be Δx = 1/400, and two time step sizes are considered: Δt = $10^{-1}$ and $10^{-5}$. The simulations are performed with 8 CPUs. We choose δr = $10^{-8}$ and vary the tolerances of the intermediate and inner solvers from $10^{-8}$ to $10^{-2}$. The SIMPLE preconditioner and the additive Schwarz preconditioner are also tested for comparison. In the SIMPLE preconditioner, the block matrices A and are solved with . The convergence history of the linear solver in the first nonlinear iteration is plotted in Figure 6. We observe that the nested block preconditioner performs robustly with a strict choice of the intermediate and inner solver tolerances. When the tolerances for the intermediate and inner solvers are loose ($10^{-2}$) and the time step is large (Δt = $10^{-1}$), the convergence of the nested block preconditioner slows dramatically and is slower than that of the SIMPLE preconditioner. It should be emphasized that the SIMPLE preconditioner uses a very strict tolerance here. We also note that the additive Schwarz preconditioner fails to converge to the prescribed tolerance in 10000 iterations when Δt = $10^{-1}$.

Figure 6:

Convergence history for Δt = $10^{-1}$ (left) and $10^{-5}$ (right). The horizontal dashed black line indicates the prescribed stopping criterion for the relative error, which is $10^{-8}$ here. In the case of Δt = $10^{-1}$, the block preconditioner with tolerance $10^{-2}$ converges in 90 iterations, the SIMPLE method converges in 45 iterations, and the additive Schwarz method fails to converge. In the case of Δt = $10^{-5}$, the SIMPLE method converges in 46 iterations, and the additive Schwarz method converges in 1070 iterations. The numbers indicate the averaged time per nonlinear iteration in seconds.

5.2.3. Performance with varying fibre orientations and dispersions

In this test, we examine the robustness of the solver with different collagen fibre orientations and dispersions. The structure of the arterial wall is described by the collagen fibre mean orientation φ and the dispersion parameter kd. We vary the value of φ from 20° to 80°, and the value of kd from 0.1 to 0.3. The remaining material properties are kept the same as the ones used in the previous studies. The simulations are performed with Δx = 1/100 on 8 CPUs. The time step size is Δt = $10^{-1}$, and we simulate the problem up to T = 1.0 to collect statistics of the solver performance. The stopping condition for the FGMRES iteration is δr = $10^{-8}$, and we choose . The averaged number of iterations and the averaged CPU time for one nonlinear iteration are reported in Table 7.

Table 7:

The performance of the nested block preconditioner with varying fibre orientations and dispersions. Each entry lists the averaged number of FGMRES iterations, followed in square brackets by the averaged number of iterations for solving with A in (4.3) and (4.5) and for solving (4.4), and in parentheses by the averaged CPU time for one nonlinear iteration in seconds. φ is the collagen fibre mean orientation; kd is the dispersion parameter.

| | kd = 0.1 | kd = 0.2 | kd = 0.3 |
|---|---|---|---|
| φ = 20° | 3.0 [214.1, 17.6] (4.8 × 10¹) | 3.0 [161.2, 18.0] (3.4 × 10¹) | 3.0 [103.4, 19.7] (2.1 × 10¹) |
| φ = 40° | 3.0 [241.3, 17.7] (5.8 × 10¹) | 3.0 [176.8, 18.4] (4.1 × 10¹) | 2.9 [105.7, 20.5] (2.1 × 10¹) |
| φ = 60° | 2.8 [221.4, 17.8] (4.6 × 10¹) | 2.9 [169.1, 19.2] (3.7 × 10¹) | 2.9 [104.5, 20.9] (2.1 × 10¹) |
| φ = 80° | 2.9 [220.8, 18.1] (5.3 × 10¹) | 3.0 [168.2, 19.8] (4.1 × 10¹) | 3.0 [103.0, 20.9] (2.2 × 10¹) |

We observe that the outer solver converges in around three iterations regardless of the structural properties. At the intermediate level, the linear solver for S is not sensitive to the two structural parameters, whereas the linear solver for A is affected by both. The dispersion parameter kd has a significant impact on the performance of the solver associated with A. For kd = 0.1, the solver for A requires slightly more than 200 iterations for convergence; for kd = 0.3, the number of iterations drops to around 100. As the dispersion parameter grows, there are more fibres providing stiffness. Thus, the trend of the iteration count for A is in agreement with the observations made in Section 5.1.3.

5.2.4. Parallel performance

We compare the performance of different preconditioners by performing a weak scaling test. The tolerance for the linear solver is set to δr = 10⁻³. In the nested block preconditioner, we set , and we use for the SIMPLE preconditioner. The computational mesh is progressively refined so that each CPU is assigned approximately 6.0 × 10⁴ equations. We simulate the problem with two different time step sizes: Δt = 10⁻¹ and 10⁻⁵. The statistics of the solver performance are collected for five time steps, and the results are reported in Table 8. The number of iterations at the intermediate level shows a similar trend to the isotropic case studied in Section 5.1.4. The difference is that, for the anisotropic material, the solver for A requires more iterations to converge when the time step size is large. The degradation of the AMG preconditioner for anisotropic problems is known, and using a higher-complexity coarsening, such as the Falgout method, will improve the performance [57]. Notably, for the large time step, the additive Schwarz preconditioner cannot deliver converged solutions within 10000 iterations, regardless of the spatial mesh size. Overall, the results show that the proposed nested block preconditioner gives the most robust and efficient performance for most of the cases considered.
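As a quick consistency check on the weak scaling setup, the following Python sketch verifies that the processor counts grow in proportion to the cube of the mesh resolution, so that the load per CPU stays fixed. It assumes that the leading column of Table 8 (200, 400, 600) is the mesh resolution 1/Δx and that the processor counts are 8, 64, and 216; the variable names are illustrative only.

```python
# Assumed inputs: mesh resolutions 1/dx and processor counts read from Table 8.
resolutions = [200, 400, 600]
procs = [8, 64, 216]
eqs_per_proc = 6.0e4  # approximate load per CPU stated in the text

rows = []
for n, p in zip(resolutions, procs):
    mesh_growth = (n / resolutions[0]) ** 3  # a 3D mesh grows cubically under uniform refinement
    proc_growth = p / procs[0]               # growth of the processor count
    total_eqs = p * eqs_per_proc             # approximate global problem size
    rows.append((n, p, mesh_growth, proc_growth, total_eqs))
    print(f"1/dx = {n}: procs = {p}, mesh growth = {mesh_growth:.0f}x, "
          f"proc growth = {proc_growth:.0f}x, about {total_eqs:.2e} equations")
```

Under these assumptions the two growth factors coincide at every refinement level, which is what a weak scaling test requires.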

Table 8:

Comparison of the averaged iteration counts and CPU time in seconds for the nested block preconditioner, the SIMPLE preconditioner, and the additive Schwarz preconditioner. NC stands for no convergence. For the Δt = 10⁻¹ case, the additive Schwarz preconditioner failed to achieve convergence in 10000 iterations.

| 1/Δx | Proc. | Nested block: FGMRES iter. | Nested block: A iter. | Nested block: S iter. | Nested block: CPU time (s) | SIMPLE: iter. | SIMPLE: CPU time (s) | Additive Schwarz: iter. | Additive Schwarz: CPU time (s) |
|---|---|---|---|---|---|---|---|---|---|
| Δt = 10⁻¹ | | | | | | | | | |
| 200 | 8 | 6.2 | 207.1 | 10.3 | 579.3 | 54.1 | 888.5 | NC | NC |
| 400 | 64 | 7.8 | 331.3 | 10.5 | 2062.7 | 102.5 | 6262.8 | NC | NC |
| 600 | 216 | 10.3 | 389.4 | 11.1 | 3204.1 | 140.5 | 8051.3 | NC | NC |
| Δt = 10⁻⁵ | | | | | | | | | |
| 200 | 8 | 4.0 | 2.3 | 15.5 | 9.7 | 22.6 | 9.4 | 485.6 | 10.8 |
| 400 | 64 | 4.1 | 3.1 | 18.6 | 29.2 | 45.3 | 53.3 | 986.3 | 47.76 |
| 600 | 216 | 5.9 | 3.9 | 20.3 | 119.8 | 71.3 | 202.5 | 1453.0 | 431.6 |

6. Conclusions

In this work, we designed a preconditioning technique based on the novel hyper-elastodynamics formulation [1]. This preconditioning technique is based on a series of block factorizations in the Newton-Raphson solution procedure [1, 3, 44] and is inspired by the preconditioning techniques developed in the CFD community [27, 28, 29, 30]. It uses the Schur complement reduction with relaxed tolerances as the preconditioner inside a Krylov subspace method. This strategy enjoys the merits of both the SCR approach and the fully coupled approach. It shows better robustness and efficiency in comparison with the SIMPLE and the additive Schwarz preconditioners. Tuning the intermediate and the inner solvers allows the user to adjust the nested algorithm for specific problems to attain a balance between robustness and efficiency. In this work, to make the presentation coherent, we adopted the same solver at the intermediate and the inner levels. In practice, one is advised to flexibly apply the most efficient solver at the inner level. For example, one may symmetrize the matrix in (4.6) [30] and use the conjugate gradient method as the inner solver. In our experience, this will further reduce the computational cost. In all, the methodology developed in this work provides a sound basis for the design of effective preconditioning techniques for hyper-elastodynamics.
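The three-level structure summarized above can be illustrated with a small dense example. The following Python sketch is not the implementation used in this work: the helper names (`fgmres`, `make_scr_preconditioner`), the random test matrices, and the tolerance values are assumptions made for illustration, and unpreconditioned GMRES stands in for the AMG-preconditioned solvers. It applies the standard three-step Schur complement reduction for a 2×2 block system [[A, B], [C, D]] with relaxed tolerances as the preconditioner of an outer flexible GMRES iteration; the action of S = D − C A⁻¹ B is evaluated matrix-free through an inner solve with A.

```python
import numpy as np

def fgmres(matvec, b, precond=None, rtol=1e-8, maxiter=200):
    """Flexible GMRES without restart for matvec(x) = b, starting from x = 0.

    `precond` may vary between calls (e.g. an inexact nested solve).
    Returns the approximate solution and the number of iterations taken.
    """
    n = b.size
    beta = np.linalg.norm(b)
    if beta == 0.0:
        return np.zeros(n), 0
    m = min(maxiter, n)
    V = np.zeros((n, m + 1))  # orthonormal Arnoldi basis
    Z = np.zeros((n, m))      # preconditioned search directions
    H = np.zeros((m + 1, m))  # upper Hessenberg matrix
    V[:, 0] = b / beta
    for j in range(m):
        Z[:, j] = V[:, j] if precond is None else precond(V[:, j])
        w = matvec(Z[:, j])
        for i in range(j + 1):  # modified Gram-Schmidt orthogonalization
            H[i, j] = V[:, i] @ w
            w = w - H[i, j] * V[:, i]
        H[j + 1, j] = np.linalg.norm(w)
        # Minimize || beta * e1 - H y || over the current search space.
        rhs = np.zeros(j + 2)
        rhs[0] = beta
        Hj = H[: j + 2, : j + 1]
        y = np.linalg.lstsq(Hj, rhs, rcond=None)[0]
        if np.linalg.norm(rhs - Hj @ y) <= rtol * beta or H[j + 1, j] == 0.0:
            break
        V[:, j + 1] = w / H[j + 1, j]
    return Z[:, : j + 1] @ y, j + 1

def make_scr_preconditioner(A, B, C, D, tol_mid=1e-2, tol_in=1e-2):
    """Return a function r -> approximate solution of [[A, B], [C, D]] z = r."""
    n_u = A.shape[0]

    def solve_A(r, tol):
        return fgmres(lambda v: A @ v, r, rtol=tol)[0]

    def apply_S(q):
        # S q = D q - C A^{-1} B q, with A^{-1} replaced by an inner solve.
        return D @ q - C @ solve_A(B @ q, tol_in)

    def apply(r):
        r_u, r_p = r[:n_u], r[n_u:]
        u_hat = solve_A(r_u, tol_mid)                          # A u_hat = r_u
        p = fgmres(apply_S, r_p - C @ u_hat, rtol=tol_mid)[0]  # S p = r_p - C u_hat
        u = solve_A(r_u - B @ p, tol_mid)                      # A u = r_u - B p
        return np.concatenate([u, p])

    return apply

# Hypothetical test problem (not taken from the paper).
rng = np.random.default_rng(0)
n_u, n_p = 30, 10
A = 4.0 * np.eye(n_u) - np.eye(n_u, k=1) - np.eye(n_u, k=-1)
B = 0.5 * rng.standard_normal((n_u, n_p))
C = B.T
D = -0.1 * np.eye(n_p)
K = np.block([[A, B], [C, D]])
b = rng.standard_normal(n_u + n_p)

precond = make_scr_preconditioner(A, B, C, D, tol_mid=1e-2, tol_in=1e-2)
x, n_outer = fgmres(lambda v: K @ v, b, precond=precond, rtol=1e-8)
rel_res = np.linalg.norm(K @ x - b) / np.linalg.norm(b)
print(f"outer FGMRES iterations: {n_outer}, relative residual: {rel_res:.2e}")
```

In this sketch, loosening `tol_mid` and `tol_in` makes each application of the preconditioner cheaper at the price of more outer iterations, which mirrors the trade-off between robustness and efficiency discussed in Section 5.2.2. A flexible outer method is used because the preconditioner, being an inexact iterative solve, is not a fixed linear operator.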

There are several promising directions for future work. (1) Improvements will be made to design a better preconditioner for the Schur complement. It is tempting to consider using the sparse approximate inverse method to construct this preconditioner [64]. (2) Geometric multigrid preconditioners will be developed to replace the AMG preconditioner. This is expected to further improve the scalability of the proposed solution method. (3) This preconditioning technique will be extended to inelastic calculations [39, 40] as well as FSI problems [1].

Highlights.

A nested block preconditioning technique is developed for hyper-elastodynamics.

The Schur complement reduction is used as a block preconditioner.

The intermediate and inner solvers can be tuned for robustness and efficiency.

An anisotropic fibre-reinforced arterial wall model is studied with the method.

Acknowledgements

This work is supported by the National Institutes of Health under the award numbers 1R01HL121754 and 1R01HL123689, the National Science Foundation (NSF) CAREER award OCI-1150184, and computational resources from the Extreme Science and Engineering Discovery Environment (XSEDE) supported by the NSF grant ACI-1053575. The authors acknowledge TACC at the University of Texas at Austin for providing computing resources that have contributed to the research results reported within this paper.

Appendix A. Consistent linearization

We report the explicit formulas of the residual vectors and tangent matrices used in the Newton-Raphson solution procedure at the iteration step l. For notational simplicity, the subscript (l) is omitted in the following discussion.

| (A.1) |

| (A.2) |

| (A.3) |

| (A.4) |

In the above, we used the following notation conventions,

Note that ρ = ρ(p) and β = β(p) are given by the constitutive relations, and Hi := hi ∘ φt.

| (A.5) |

| (A.6) |

| (A.7) |

| (A.8) |

| (A.9) |

| (A.10) |

In and , we used the following notation,


Contributor Information

Ju Liu, Email: liuju@stanford.edu.

Alison L. Marsden, Email: amarsden@stanford.edu.

References

- [1] Liu J, Marsden A, A unified continuum and variational multiscale formulation for fluids, solids, and fluid-structure interaction, Computer Methods in Applied Mechanics and Engineering 337 (2018) 549–597.

- [2] Hughes T, Multiscale phenomena: Green’s functions, the Dirichlet-to-Neumann formulation, subgrid scale models, bubbles and the origins of stabilized methods, Computer Methods in Applied Mechanics and Engineering 127 (1995) 387–401.

- [3] Scovazzi G, Carnes B, Zeng X, Rossi S, A simple, stable, and accurate linear tetrahedral finite element for transient, nearly, and fully incompressible solid dynamics: a dynamic variational multiscale approach, International Journal for Numerical Methods in Engineering 106 (2016) 799–839.

- [4] Chorin A, Numerical solution of the Navier-Stokes equations, Mathematics of Computation 22 (1968) 745–762.

- [5] Temam R, Sur l’approximation de la solution des équations de Navier-Stokes par la méthode des pas fractionnaires (II), Archive for Rational Mechanics and Analysis 33 (1969) 377–385.

- [6] Benzi M, Golub G, Liesen J, Numerical solution of saddle point problems, Acta Numerica 14 (2005) 1–137.

- [7] Elman H, Silvester D, Wathen A, Finite Elements and Fast Iterative Solvers, 2nd Edition, Oxford University Press, 2014.

- [8] Turek S, Efficient Solvers for Incompressible Flow Problems: An Algorithmic and Computational Approach, Springer Science & Business Media, 1999.

- [9] Keyes D, et al., Multiphysics simulations: Challenges and opportunities, The International Journal of High Performance Computing Applications 27 (2013) 4–83.

- [10] Kim J, Moin P, Application of a fractional-step method to incompressible Navier-Stokes equations, Journal of Computational Physics 59 (1985) 308–323.

- [11] van Kan J, A second-order accurate pressure-correction scheme for viscous incompressible flow, SIAM Journal on Scientific and Statistical Computing 7 (1986) 870–891.

- [12] Karniadakis G, Israeli M, Orszag S, High-order splitting methods for the incompressible Navier-Stokes equations, Journal of Computational Physics 97 (1991) 414–443.

- [13] Guermond J, Shen J, Velocity-correction projection methods for incompressible flows, SIAM Journal on Numerical Analysis 41 (2003) 112–134.

- [14] Guermond J, Minev P, Shen J, An overview of projection methods for incompressible flows, Computer Methods in Applied Mechanics and Engineering 195 (2006) 6011–6045.

- [15] Perot J, An analysis of the fractional step method, Journal of Computational Physics 108 (1993) 51–58.

- [16] Quarteroni A, Saleri F, Veneziani A, Factorization methods for the numerical approximation of Navier-Stokes equations, Computer Methods in Applied Mechanics and Engineering 188.

- [17] Elman H, Howle V, Shadid J, Shuttleworth R, Tuminaro R, A taxonomy and comparison of parallel block multi-level preconditioners for the incompressible Navier-Stokes equations, Journal of Computational Physics 227 (2008) 1790–1808.

- [18] Patankar S, Spalding D, A calculation procedure for heat, mass and momentum transfer in three-dimensional parabolic flows, in: Numerical Prediction of Flow, Heat Transfer, Turbulence and Combustion, Elsevier, 1983, pp. 54–73.

- [19] Silvester D, Wathen A, Fast iterative solution of stabilised Stokes systems Part II: using general block preconditioners, SIAM Journal on Numerical Analysis 31 (1994) 1352–1367.

- [20] Elman H, Preconditioning for the steady-state Navier-Stokes equations with low viscosity, SIAM Journal on Scientific Computing 20 (1999) 1299–1316.

- [21] Kay D, Loghin D, Wathen A, A preconditioner for the steady-state Navier-Stokes equations, SIAM Journal on Scientific Computing 24 (2002) 237–256.

- [22] Elman H, Howle V, Shadid J, Shuttleworth R, Tuminaro R, Block preconditioners based on approximate commutators, SIAM Journal on Scientific Computing 27 (2006) 1651–1668.

- [23] Moghadam M, Bazilevs Y, Marsden A, A new preconditioning technique for implicitly coupled multidomain simulations with applications to hemodynamics, Computational Mechanics 52 (2013) 1141–1152.

- [24] May D, Moresi L, Preconditioned iterative methods for Stokes flow problems arising in computational geodynamics, Physics of the Earth and Planetary Interiors 171 (2008) 33–47.

- [25] Lun L, Yeckel A, Derby J, A Schur complement formulation for solving free-boundary, Stefan problems of phase change, Journal of Computational Physics 229 (2010) 7942–7955.

- [26] Furuichi M, May D, Tackley P, Development of a Stokes flow solver robust to large viscosity jumps using a Schur complement approach with mixed precision arithmetic, Journal of Computational Physics 230 (2011) 8835–8851.

- [27] Bank R, Welfert B, Yserentant H, A class of iterative methods for solving saddle point problems, Numerische Mathematik 56 (1990) 645–666.

- [28] Baggag A, Sameh A, A nested iterative scheme for indefinite linear systems in particulate flows, Computer Methods in Applied Mechanics and Engineering 193 (2004) 1923–1957.

- [29] Manguoglu M, Sameh A, Tezduyar T, Sathe S, A nested iterative scheme for computation of incompressible flows in long domains, Computational Mechanics 43 (2008) 73–80.