Abstract

Optical coherence tomography (OCT) has become an established clinical routine for the in vivo imaging of the optic nerve head (ONH) tissues, which is crucial in the diagnosis and management of various ocular and neuro-ocular pathologies. However, the presence of speckle noise affects the quality of OCT images and their interpretation. Although recent frame-averaging techniques have been shown to enhance OCT image quality, they require longer scanning durations, resulting in patient discomfort. Using a custom deep learning network trained with 2,328 ‘clean B-scans’ (multi-frame B-scans; signal averaged), and their corresponding ‘noisy B-scans’ (clean B-scans + Gaussian noise), we were able to successfully denoise 1,552 unseen single-frame (without signal averaging) B-scans. The denoised B-scans were qualitatively similar to their corresponding multi-frame B-scans, with enhanced visibility of the ONH tissues. The mean signal to noise ratio (SNR) increased from 4.02 ± 0.68 dB (single-frame) to 8.14 ± 1.03 dB (denoised). For all the ONH tissues, the mean contrast to noise ratio (CNR) increased from 3.50 ± 0.56 (single-frame) to 7.63 ± 1.81 (denoised). The mean structural similarity index (MSSIM) increased from 0.13 ± 0.02 (single-frame) to 0.65 ± 0.03 (denoised) when compared with the corresponding multi-frame B-scans. Our deep learning algorithm can denoise a single-frame OCT B-scan of the ONH in under 20 ms, thus offering a framework to obtain superior quality OCT B-scans with reduced scanning times and minimal patient discomfort.

Subject terms: Machine learning, Translational research, Biomedical engineering

Introduction

In recent years, optical coherence tomography (OCT) imaging has become a well-established clinical tool for assessing optic nerve head (ONH) tissues, and for monitoring many ocular1,2 and neuro-ocular pathologies3. However, despite several advancements in OCT technology4, the quality of B-scans is still hampered by speckle noise5–11, low signal strength12, blink12,13 and motion artefacts12,14.

Specifically, the granular pattern of speckle noise deteriorates the image contrast, making it difficult to resolve small and low-intensity structures (e.g., sub-retinal layers)5–7, thus affecting the clinical interpretation of OCT data. Also, poor image contrast can lead to automated segmentation errors14–16, and incorrect tissue thickness estimation17, potentially affecting clinical decisions. For instance, segmentation errors for the retinal nerve fiber layer (RNFL) thickness can lead to over/under estimation of glaucoma18.

Currently, there exist many hardware19–27 and software schemes27–29 to denoise OCT B-scans. Hardware approaches offer robust noise suppression through frequency compounding24–27 and multi-frame averaging (spatial compounding)19–23. While multi-frame averaging techniques have been shown to enhance image quality and presentation28,29, they are sensitive to registration errors29 and require longer scanning times30. Moreover, elderly patients often face discomfort and strain31 when they remain fixated for long durations31,32. Software techniques, on the other hand, attempt to denoise through numerical algorithms5–11 or filtering techniques33–35. However, registration errors36, computational complexity5,37–39, and sensitivity to the choice of parameters40 limit their usage in the clinic.

While deep learning has shown promising segmentation41–44, classification45–47, and denoising48–50 applications in the field of medical imaging for modalities such as magnetic resonance imaging (MRI), its application to OCT imaging is still in its infancy51–62. Although recent deep learning studies have shown successful segmentation51–59 and classification applications60–62 in OCT imaging, to the best of our knowledge no study exists yet to assess the success of denoising OCT B-scans.

In this study, we propose a deep learning approach to denoise OCT B-scans. We aimed to obtain multi-frame quality B-scans (i.e. signal-averaged) from single-frame (without signal averaging) B-scans of the ONH. We hope to offer a denoising framework to obtain superior quality B-scans, with reduced scanning duration and minimal patient discomfort.

Results

When trained on 23,280 pairs (2,328 B-scans after extensive data augmentation resulted in 23,280 B-scans) of ‘clean’ (multi-frame) and their corresponding ‘noisy’ B-scans (clean B-scans + Gaussian noise), our deep learning network was able to successfully denoise the unseen single-frame B-scans. An independent test set of 1,552 single-frame B-scans was used to evaluate the denoising performance of the proposed network qualitatively and quantitatively.

Denoising performance – qualitative analysis

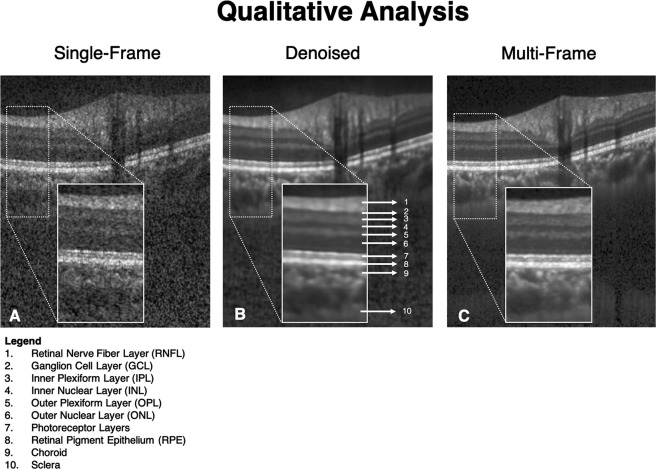

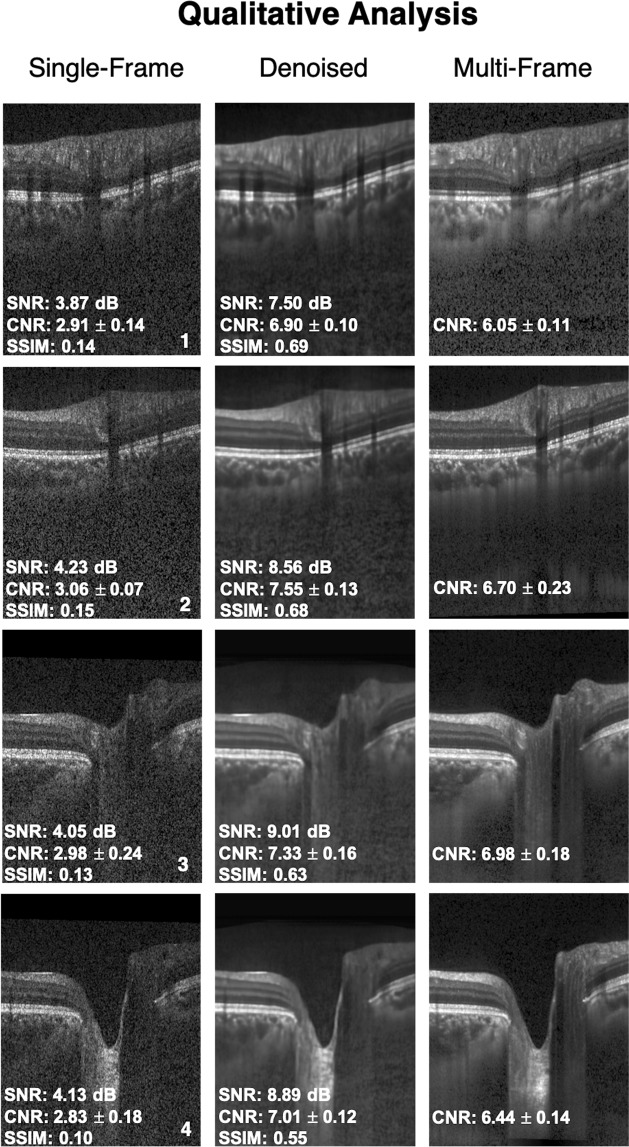

The single-frame, denoised and multi-frame B-scans for a healthy subject are shown in Fig. 1. In all cases, the denoised B-scans were qualitatively similar to their corresponding multi-frame B-scans (Fig. 2). Specifically, in all the denoised B-scans, we observed no deep learning induced image artifacts, and the overall visibility of all the ONH tissues was prominently enhanced (Fig. 2; 2nd column).

Figure 1.

Single-frame (A), denoised (B), and multi-frame (C) B-scans for a healthy subject are shown. The denoised B-scan can be observed to be qualitatively similar to its corresponding multi-frame B-scan. Specifically, the visibility of the retinal layers, the choroid, and the lamina cribrosa was prominently improved. Sharp and clear boundaries were also obtained for the retinal layers and the choroid-scleral interface.

Figure 2.

Single-frame, denoised and multi-frame B-scans for four healthy subjects (1–4) are shown. The signal to noise ratio (SNR), contrast to noise ratio (CNR; mean of all tissues) and the structural similarity index (SSIM) for the respective B-scans are shown as well. In all cases, the denoised B-scans (2nd column) were consistently similar (qualitatively) to their corresponding multi-frame B-scans (3rd column).

Denoising performance – quantitative analysis

When evaluated on the independent test set of 1,552 B-scans, on average, we observed a two-fold increase in SNR upon denoising. Specifically, the mean SNR for the unseen single-frame and denoised B-scans was 4.02 ± 0.68 dB and 8.14 ± 1.03 dB, respectively, when computed against their respective multi-frame B-scans. The two-fold reduction in the noise levels resulted in the enhanced overall visibility of the ONH tissues.

In all cases, the multi-frame B-scans offered a higher CNR than their corresponding single-frame B-scans. Further, the denoised B-scans consistently offered a higher CNR than the single-frame B-scans, for all tissues (Table 1). Specifically, the mean CNR (mean of all tissues) increased from 3.50 ± 0.56 (single-frame) to 7.63 ± 1.81 (denoised). For each tissue, the mean CNR in the single-frame, denoised and multi-frame B-scans can be found in Table 1. The increased CNR values for each ONH tissue in the denoised images implied sharper and improved visibility of the tissue boundaries.

Table 1.

Mean CNR for all ONH tissues computed for the single-frame, denoised and multi-frame B-scans.

| Tissue | Single-frame | Denoised | Multi-frame |
|---|---|---|---|
| RNFL | 2.97 ± 0.42 | 7.28 ± 0.63 | 5.18 ± 0.76 |
| GCL + IPL | 3.83 ± 0.43 | 12.09 ± 4.22 | 11.62 ± 1.85 |
| All other retinal layers | 2.71 ± 0.33 | 5.61 ± 1.46 | 4.62 ± 0.86 |
| RPE | 5.62 ± 0.72 | 9.25 ± 2.25 | 8.10 ± 1.44 |
| Choroid | 2.99 ± 0.43 | 5.99 ± 0.45 | 5.75 ± 0.63 |
| Sclera | 2.42 ± 0.39 | 6.40 ± 1.68 | 6.00 ± 0.96 |
| LC | 4.02 ± 1.23 | 6.81 ± 1.99 | 6.46 ± 1.81 |

Finally, on average, our denoising approach offered a five-fold increase in MSSIM. Specifically, the mean MSSIM for the single-frame and denoised B-scans was 0.13 ± 0.02 and 0.65 ± 0.03, respectively, when computed against their respective multi-frame B-scans. Thus, the denoised B-scans were five times more structurally similar to the multi-frame B-scans than the single-frame B-scans were.

Denoising performance – effect of data augmentation

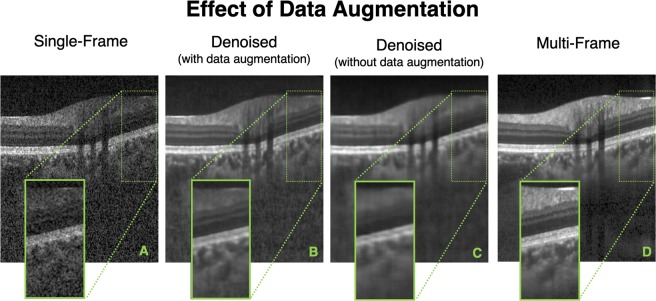

When trained without data augmentation, in all the test cases, while the network was able to reduce the speckle noise primarily, the denoised B-scans appeared to be over-smoothened (blurred). Specifically, the tissue boundaries (especially for the retinal layers) appeared smudged and unclear (Fig. 3).

Figure 3.

Single-frame (A), denoised (with data augmentation) (B), denoised (without data augmentation) (C), and multi-frame (D) B-scans for a healthy subject are shown. The denoised B-scan (B) obtained from a network trained with data augmentation can be observed to be qualitatively similar to its corresponding multi-frame B-scan (D). However, when trained with limited training data (without data augmentation), although the network is able to reduce the speckle noise primarily, the denoised B-scan is over-smoothened (C) with smudged and unclear tissue boundaries.

While the network trained with the baseline dataset (without data augmentation) offered a slightly higher mean SNR of 10.11 ± 2.13 dB (vs. 8.14 ± 1.03 dB with data augmentation), the higher SNR can be attributed to the over-smoothened (blurred) denoised B-scans, and not to improved image quality and reliability.

Further, we would like to clarify that we were unable to obtain reliable CNR values for the individual ONH tissues in all the denoised images (network trained without data augmentation) due to smudged and unclear tissue boundaries.

Finally, while the network trained with the baseline dataset resulted in denoised B-scans with nearly two-fold increased mean MSSIM (0.25 ± 0.07; ‘noisy’ B-scans mean MSSIM: 0.13 ± 0.02), the use of data augmentation helped retrieve structural information (mean MSSIM: 0.65 ± 0.03; five-fold increase compared to ‘noisy’ B-scans).

Overall, we observed that a 10-fold increase in the dataset size through extensive data augmentation did improve the overall quality and reliability of the denoised B-scans.

Denoising performance: clinical reliability

For all the three structural parameters, there were no significant (p > 0.05) differences (means) in the measurements when obtained from the denoised or the multi-frame radial B-scans.

The percentage errors (denoised vs. multi-frame radial B-scans; mean ± standard deviation) in the measurements of the p-RNFLT, the p-GCCT, and the p-CT were 3.07 ± 0.75%, 2.95 ± 1.02%, and 3.90 ± 2.85% respectively.
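As an illustration of how such a percentage error could be computed for a single measurement pair, here is a minimal sketch. It assumes the error is the absolute difference relative to the multi-frame measurement, which the text does not state explicitly; the function name and the thickness values are hypothetical.

```python
def percentage_error(denoised_um, multi_frame_um):
    """Absolute difference relative to the multi-frame value, in percent.

    Assumption: the multi-frame measurement is treated as the reference.
    """
    return abs(denoised_um - multi_frame_um) / multi_frame_um * 100.0


# Hypothetical thickness pair (micrometres), for illustration only.
example_error = percentage_error(97.0, 100.0)
print(f"percentage error: {example_error:.2f}%")
```

Averaging this quantity over all radial B-scan pairs would give a mean ± standard deviation of the kind reported above.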

Discussion

In this study, we present a custom deep learning approach to denoise single-frame OCT B-scans of the ONH. When trained with the ‘clean’ (multi-frame) and the corresponding ‘noisy’ B-scans, our network denoised unseen single-frame B-scans. The proposed network leveraged the inherent advantages of U-Net, residual learning, and dilated convolutions58. Further, the multi-scale hierarchical feature extraction63 pathway helped the network recover ONH tissue boundaries degraded by speckle noise. Having successfully trained, tested and validated our network on 1,552 single-frame OCT B-scans of the ONH, we observed a consistently higher SNR and CNR for all ONH tissues, and a consistent five-fold increase in MSSIM in all the denoised B-scans. Thus, we may be able to offer a robust deep learning framework to obtain superior quality OCT B-scans with reduced scanning duration and minimal patient discomfort.

Using the proposed network, we obtained denoised B-scans that were qualitatively similar to their corresponding multi-frame B-scans (Figs 1 and 2), owing to the reduction in noise levels. The mean SNR for the denoised B-scans was 8.14 ± 1.03 dB, a two-fold improvement (reduction in noise level) from the 4.02 ± 0.68 dB obtained for the single-frame B-scans. Given the significance of the neural (retinal layers)64–68 and connective tissues (sclera and LC)69–73 in ocular pathologies such as glaucoma2 and age-related macular degeneration74, their enhanced visibility is critical in a clinical setting. Furthermore, reduced noise levels would likely increase the robustness of aligning/registration algorithms used to monitor structural changes over time17. This is crucial for the management of multiple ocular pathologies75,76.

In denoised B-scans (vs single-frame B-scans), we consistently observed higher CNRs. Our approach enhanced the visibility of small (e.g. RPE and photoreceptors) and low-intensity tissues (e.g. GCL and IPL; Fig. 2: 2nd column). For all tissues, the mean CNR increased from 3.50 ± 0.56 (single-frame) to 7.63 ± 1.81 (denoised). Since existing automated segmentation algorithms rely on high contrast, we believe that our approach could potentially reduce the likelihood of segmentation errors that are relatively common in commercial algorithms14–16,77. For instance, the incorrect segmentation of the RNFL can lead to inaccurate thickness measurements, leading to under-/over- estimation of the glaucomatous damage18. By using the denoising framework as a precursor to automated segmentation/thickness measurement, we could increase the reliability78 of such clinical tools.

Upon denoising, we observed a five-fold increase in MSSIM (single-frame/denoised: 0.13 ± 0.02/0.65 ± 0.03), when validated against the multi-frame B-scans. The preservation of features and structural information plays an important role in accurately measuring cellular level disruption to determine retinal pathology. For instance, the measurement of the ellipsoid zone (EZ) disruption79 provides an insight into the photoreceptor structure, which is significant in pathologies such as diabetic retinopathy80, macular hole81, macular degeneration82, and ocular trauma83. Existing multi-frame averaging techniques29 significantly enhance and preserve the integrity of the structural information by suppressing speckle noise30,39–41. However, they are limited by a major clinical challenge: the inability of the patients to remain fixated for long scanning times31,32, and the resultant discomfort31.

In this study, we propose a methodology to significantly reduce scanning time while enhancing OCT signal quality. In our healthy subjects, it took on average 3.5 min to capture a ‘clean’ (multi-frame) volume, and 25 s for a ‘noisy’ (single-frame) volume. Since we can denoise a single B-scan in 20 ms (or about 2 s for a volume of 97 B-scans), we can theoretically generate a denoised OCT volume in about 27 s (time of acquisition of the ‘noisy’ volume [25 s] + denoising processing [2 s]). Thus, we may be able to reduce the scanning duration more than seven-fold, while maintaining superior image quality.
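The timing argument above can be checked with a few lines of arithmetic. The sketch below only restates the figures quoted in the paragraph (3.5 min, 25 s, 20 ms, 97 B-scans); the variable names are illustrative.

```python
# Figures quoted in the text
multi_frame_volume_s = 3.5 * 60     # 'clean' (multi-frame) volume acquisition
single_frame_volume_s = 25.0        # 'noisy' (single-frame) volume acquisition
denoise_per_bscan_s = 0.020         # upper bound on denoising time per B-scan
bscans_per_volume = 97

# Denoising a whole volume takes just under 2 s
denoise_volume_s = denoise_per_bscan_s * bscans_per_volume

# Acquisition of the noisy volume plus denoising: about 27 s
total_s = single_frame_volume_s + denoise_volume_s

# Reduction relative to the multi-frame acquisition: roughly 7.8-fold
speedup = multi_frame_volume_s / total_s
print(f"denoise volume: {denoise_volume_s:.2f} s, total: {total_s:.2f} s, "
      f"speed-up: {speedup:.1f}x")
```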

Besides speckle noise, patient dependent factors such as cataract84–87 and/or lack of tear film in dry eyes can significantly diminish OCT scan quality12,84–88. While lubricating eye drops and frequent blinking can instantly improve image quality for patients with corneal drying88,89, the detrimental effects of cataract on OCT image quality might be reduced only if cataract surgery is performed12,84,85. Moreover, pupillary dilation may be needed especially in subjects with small pupil sizes to obtain acceptable quality B-scans12,90, which is highly crucial in the monitoring of glaucoma90. Pupillary dilation is also time consuming and may cause patient discomfort91. It is plausible that the proposed framework, when extended, could be a solution to the afore-mentioned factors that limit image quality, avoiding the need for any additional clinical procedure.

In this study, several limitations warrant further discussion. First, the proposed network was trained and tested only on B-scans from one device (Spectralis). Every commercial OCT device has its own proprietary algorithm to pre-process the raw OCT data, potentially presenting a noise distribution different from what our network was trained with. Hence, we are unsure of our network’s performance on other devices. Nevertheless, we offer a proof of concept which could be validated by other groups on multiple commercial OCT devices.

Second, we were unable to train our network with a speckle noise model representative of the Spectralis device. Such a model is currently not provided by the manufacturer and would be extremely hard to reverse-engineer because information about all pre- and post-processing done to the OCT signal is also not provided. While there exist a number of OCT denoising studies that assume a Rayleigh8/Generalized Gamma distribution to describe speckle noise39, we observed that they were ill-suited for our network. From our experiments, the best denoising performance was obtained when our network was trained with a simple Gaussian noise model (μ = 0, σ = 1). It is possible that a thorough understanding of the raw noise distribution prior to the custom pre-processing on the OCT device could improve the performance of our network. We aim to test this hypothesis with a custom-built OCT system in our future works.

Third, while we have discussed the need for reliable clinical information from poor quality OCT scans, which could be critical for the diagnosis and management of ocular pathology (e.g., glaucoma), we have yet to test the network’s performance on pathological B-scans.

Fourth, we observed that the CNR was higher for the denoised B-scans than for the corresponding multi-frame B-scans. This could be attributed to over-smoothening (or blurring) of tissue textures that was consistently present in the denoised B-scans. We are currently exploring other deep learning techniques to improve the B-scan sharpness that is lost during denoising.

Fifth, we were unable to provide further validation of our algorithm by comparing our outputs to histology data. Such a validation would be extremely difficult, as one would need to first image a human ONH with OCT, process with histology, and register both datasets. Furthermore, while we believe our algorithm is able to restore tissue texture accurately (when comparing denoised B-scans with multi-frame B-scans), an exact validation of our approach is not possible. Long fixation times in obtaining the multi-frame B-scans lead to subtle motion artifacts (eye movements caused by microsaccades or unstable fixation)92, displaced optic disc center93, and axial misalignment12, causing minor registration errors between the single-frame and multi-frame B-scans, thus preventing an exact comparison between the denoised B-scans and the multi-frame B-scans.

Finally, although we observed no significant differences in the measurements of the p-RNFLT, p-GCCT and the p-CT when measured on the denoised or the multi-frame B-scans, it must be noted that the measurements were obtained on a small testing cohort (8 subjects) of healthy subjects only. Further studies on larger and more diverse cohorts (including subjects with pathology) are necessary to assert and robustly validate the clinical relevance of the proposed technique.

In conclusion, we have developed a custom deep learning approach to denoise single-frame OCT B-scans. With the proposed network, we were able to denoise a single-frame OCT B-scan in under 20 ms. We hope that the proposed framework could resolve the current trade-off in obtaining reliable and superior quality scans, with reduced scanning times and minimal patient discomfort. Finally, we believe that our approach may be helpful for low-cost OCT devices, whose noisy B-scans may be enhanced by artificial intelligence (as opposed to expensive hardware) to the same quality as in current commercial devices.

Methods

Patient recruitment

A total of 20 healthy subjects were recruited at the Singapore National Eye Centre. All subjects gave written informed consent. This study was approved by the SingHealth Centralized Institutional Review Board and adhered to the tenets of the Declaration of Helsinki. The inclusion criteria for healthy subjects were: an intraocular pressure (IOP) less than 21 mmHg, and healthy optic nerves with a vertical cup-disc ratio (VCDR) less than or equal to 0.5.

Optical coherence tomography imaging

The subjects were seated and imaged under dark room conditions by a single operator (TAT). A spectral-domain OCT (Spectralis, Heidelberg Engineering, Heidelberg, Germany) was used to image both eyes of each subject. Each OCT volume consisted of 97 horizontal B-scans (32-μm distance between B-scans; 384 A-scans per B-scan), covering a rectangular area of 15° × 10° centered on the ONH. For each eye, single-frame (without signal averaging), and multi-frame (75x signal averaging) volume scans were obtained. Enhanced depth imaging (EDI)94 and eye tracking92,95 modalities were used during the acquisition. From all the subjects, we obtained a total of 3,880 B-scans for each type of scan (single-frame or multi-frame).

Volume registration

The multi-frame volumes were reoriented to align with the single-frame volumes through rigid translation/rotation transformations using 3D software (Amira, version 5.6; FEI). This registration was performed using a voxel-based algorithm that maximized mutual information between two volumes96. Registration was essential to quantitatively validate the corresponding regions between the denoised and multi-frame B-scans. Note that Spectralis follow-up mode was not used in this study. Although the follow-up mode allows a new scanning of the same area by identifying previous scan locations, in many cases, it can distort B-scans and thus provide unrealistic tissue structures in the new scan.

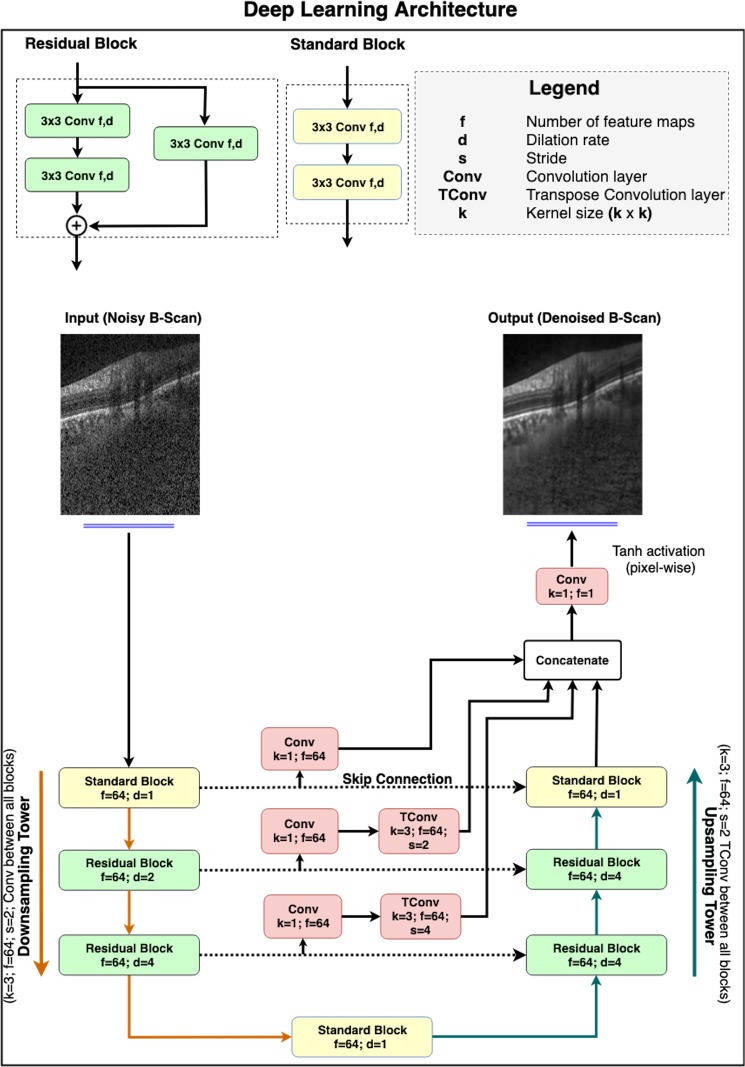

Deep learning based denoising

In this study, we developed a fully-convolutional neural network, inspired by our earlier DRUNET architecture58, to denoise single-frame OCT B-scans of the ONH. It leverages the inherent advantages of U-Net97, residual learning98, dilated convolutions99, and multi-scale hierarchical feature extraction63 to obtain multi-frame quality B-scans. Briefly, the U-Net and its skip connections helped the network learn both the local (tissue texture) and contextual information (spatial arrangement of tissues). The contextual information was further exploited using dilated convolution filters. Residual connections improved the flow of the gradient information through the network, and multi-scale hierarchical feature extraction helped restore tissue boundaries in the B-scans.

Network architecture

The proposed network consisted of two types of feature extraction units: (1) standard block; and (2) residual block. While each block consisted of two subsequent dilated convolutional layers (64 filters; size = 3 × 3) for feature extraction, the residual block consisted of an additional 3 × 3 convolutional layer (identity connection)98 that improved the flow of the gradient information throughout the depth of the network. The use of dilated convolution filters99 helped the network better understand the contextual information (i.e., the spatial arrangement of tissues), that is crucial for obtaining reliable tissue boundaries.

Inspired by the U-Net97, the network was composed of a downsampling and an upsampling tower, connected via skip-connections (Fig. 4). By sequentially halving the dimensionality of the B-scan after each feature extraction unit, the downsampling tower extracted the contextual information (i.e., the spatial arrangement of tissues). On the other hand, in the process of sequentially restoring the B-scan to its original dimensions, the upsampling tower extracted local information (i.e., tissue texture). Skip connections between both towers helped the network learn the extracted contextual and local information jointly.

Figure 4.

The architecture comprised two towers: (1) a downsampling tower, to capture the contextual information (i.e., spatial arrangement of the tissues), and (2) an upsampling tower, to capture the local information (i.e., tissue texture). Each tower consisted of two types of blocks: (1) a standard block, and (2) a residual block. The latent space was implemented as a standard block. The multi-scale hierarchical feature extraction unit helped better recover tissue edges eroded by speckle noise. The network consisted of 900 k trainable parameters.

In the downsampling tower, an input B-scan (size: 496 × 384) was first passed on to a standard block (dilation rate: 1) followed by two residual blocks (dilation rate: 2 and 4, respectively). A convolution layer (64 filters; size = 3 × 3; stride = 2) after every block sequentially halved the dimensionality of the B-scan.
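To make the dimensionality changes concrete, the following sketch tracks the spatial size of a 496 × 384 B-scan through the three stride-2 convolutions of the downsampling tower, and lists the effective kernel size of a 3 × 3 filter at each dilation rate. It assumes 'same' padding, which the text does not specify; the function names are illustrative.

```python
import math


def downsampled_shapes(shape, n_levels, stride=2):
    """Spatial size after each stride-2 convolution ('same' padding assumed)."""
    h, w = shape
    shapes = [(h, w)]
    for _ in range(n_levels):
        h, w = math.ceil(h / stride), math.ceil(w / stride)
        shapes.append((h, w))
    return shapes


def effective_kernel_size(kernel, dilation):
    """Extent covered by a dilated filter: k + (k - 1) * (d - 1)."""
    return kernel + (kernel - 1) * (dilation - 1)


# One standard block and two residual blocks, each followed by a stride-2 convolution
shapes = downsampled_shapes((496, 384), n_levels=3)

# Dilation rates 1, 2 and 4 as used in the downsampling tower
extents = {d: effective_kernel_size(3, d) for d in (1, 2, 4)}
print(shapes, extents)
```

Under these assumptions the feature maps reaching the latent space are 62 × 48, and a 3 × 3 filter with dilation rate 4 spans a 9 × 9 neighbourhood, which is one way to see how dilation widens the context without adding parameters.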

A standard block (dilation rate: 1) was then used to transfer the contextual feature maps from the downsampling to the upsampling tower.

The upsampling tower consisted of two residual blocks (dilation rate: 4) and a standard block (dilation rate: 1). After each block, a transpose convolution layer (64 filters; size = 3 × 3; stride = 2) was used to restore the B-scan sequentially to its original dimension.

The use of multi-scale hierarchical feature extraction63 improved the recovery of tissue boundaries eroded by speckle noise in the single-frame B-scans. It was implemented by passing the feature maps at each downsampling level through a convolution layer (64 filters; size = 1 × 1), followed by a transpose convolution layer (64 filters; size = 3 × 3) to restore the original B-scan resolution. The restored maps were then concatenated with the output feature maps from the upsampling tower.

Finally, the concatenated feature maps were fed to the output convolution layer (1 filter; size = 1 × 1), followed by pixel-wise hyperbolic tangent (tanh) activation to produce a denoised B-scan.

In both towers, all layers except the last output layer, were activated by an exponential linear unit (ELU)100 function. In addition, in each residual block, the feature maps were batch normalized101 and ELU activated before addition.

The proposed network comprised 900,000 trainable parameters. The network was trained end-to-end using the Adam optimizer102, and we used the mean absolute error as the loss function. We trained and tested the proposed network on an NVIDIA GTX 1080 Founders Edition GPU with CUDA v8.0 and cuDNN v5.1 acceleration. With the given hardware configuration, each single-frame B-scan was denoised in under 20 ms.

Training and testing of the network

From the dataset of 3,880 B-scans, 2,328 of them (from both eyes of 12 subjects) were used as a part of the training dataset. The training set consisted of ‘clean’ B-scans and their corresponding ‘noisy’ versions. The ‘clean’ B-scans were simply the multi-frame (75x signal averaging) B-scans. The ‘noisy’ B-scans were generated by adding Gaussian noise (μ = 0, σ = 1) to the respective ‘clean’ B-scans (Fig. 5).
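A training pair of this kind could be generated along the following lines. This is a minimal NumPy sketch, not the authors' code: the intensity scale of the B-scans and any clipping after noise addition are not stated in the text, so none is applied here, and the function name is hypothetical.

```python
import numpy as np


def make_noisy(clean, sigma=1.0, rng=None):
    """Return a 'noisy' copy of a 'clean' B-scan by adding zero-mean Gaussian noise.

    Assumption: noise is added in whatever intensity units `clean` is stored in,
    with no rescaling or clipping afterwards.
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(loc=0.0, scale=sigma, size=clean.shape)
    return clean + noise


# Placeholder 'clean' B-scan with the dimensions used in this study (496 x 384)
rng = np.random.default_rng(0)
clean = np.zeros((496, 384))
noisy = make_noisy(clean, sigma=1.0, rng=rng)
print(noisy.shape, float(noisy.mean()), float(noisy.std()))
```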

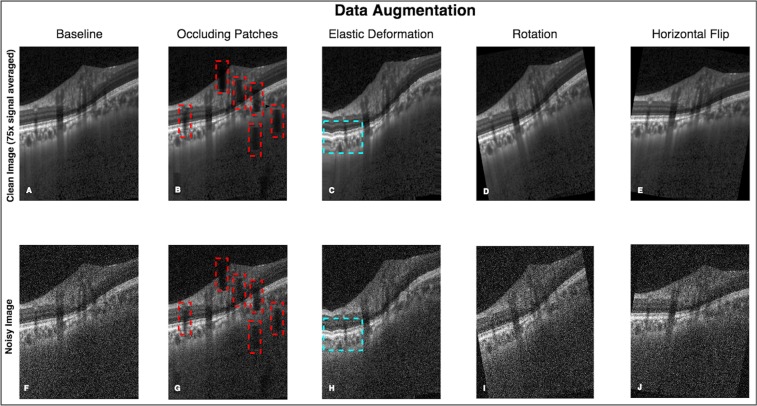

Figure 5.

An exhaustive offline data augmentation was done to circumvent the scarcity of training data. (A–E) represent the original and the data augmented ‘clean’ B-scans (multi-frame). (F–J) Represent the same for the corresponding ‘noisy’ B-scans. The occluding patches (B,G; red boxes) were added to make the network robust in the presence of blood vessel shadows. Elastic deformations (C,H; cyan boxes) were used to make the network invariant to atypical morphologies104. A total of 23,280 B-scans of each type (clean/noisy) were generated from 2,328 baseline B-scans.

The testing set consisted of 1,552 single-frame B-scans (from both eyes of 8 subjects) to be denoised. We ensured that scans from the same subject were not used in both the training and testing sets.
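The subject-wise split can be sketched as follows. The counts (20 subjects, both eyes, 97 B-scans per volume, 12 training subjects) come from the text; the subject identifiers and the choice of which subjects go to training are hypothetical.

```python
from itertools import product

N_SUBJECTS = 20
N_TRAIN_SUBJECTS = 12
BSCANS_PER_VOLUME = 97

# One record per B-scan: (subject id, eye, B-scan index). IDs are placeholders.
records = list(product(range(N_SUBJECTS), ("OD", "OS"), range(BSCANS_PER_VOLUME)))

# Assumption: the first 12 subject IDs are used for training; the text does not
# say how subjects were assigned.
train_subjects = set(range(N_TRAIN_SUBJECTS))

train = [r for r in records if r[0] in train_subjects]
test = [r for r in records if r[0] not in train_subjects]

print(len(records), len(train), len(test))
```

Splitting on the subject identifier, rather than on individual B-scans, is what keeps both eyes of a subject on the same side of the split.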

Data augmentation

An exhaustive offline data augmentation was done to circumvent the scarcity of training data. We used elastic deformations58,103, rotations (clockwise and anti-clockwise; 10°), occluding patches58, and horizontal flipping for both ‘clean’ and ‘noisy’ B-scans. Briefly, elastic deformations were used to produce the combined effects of shearing and stretching in an attempt to make the network invariant to atypical morphologies (as seen in glaucoma104). Ten occluding patches of size 60 × 20 pixels were added at random locations to non-linearly reduce (pixel intensities multiplied by a random factor between 0.2 and 0.8) the visibility of the ONH tissues. This was done to make the network invariant to blood vessel shadows that are common in OCT B-scans105. Note that a full description of our data augmentation approach can be found in our previous paper58.
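Two of the augmentations described above, occluding patches and horizontal flipping, can be sketched in NumPy as below. Several details are assumptions: the patch is taken as 60 rows × 20 columns, one random factor is drawn per patch, and overlapping patches simply compound. Elastic deformations and rotations are omitted; the function names are hypothetical.

```python
import numpy as np


def add_occluding_patches(bscan, n_patches=10, patch_shape=(60, 20),
                          factor_range=(0.2, 0.8), rng=None):
    """Darken random rectangular patches to mimic blood vessel shadows.

    Assumptions: patch_shape is (rows, cols) and each patch gets a single
    random multiplicative factor drawn uniformly from factor_range.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = bscan.astype(np.float64, copy=True)
    ph, pw = patch_shape
    h, w = out.shape
    for _ in range(n_patches):
        top = rng.integers(0, h - ph + 1)
        left = rng.integers(0, w - pw + 1)
        out[top:top + ph, left:left + pw] *= rng.uniform(*factor_range)
    return out


def flip_horizontal(bscan):
    """Mirror the B-scan left to right."""
    return bscan[:, ::-1]


image = np.ones((496, 384))
occluded = add_occluding_patches(image, rng=np.random.default_rng(0))
flipped = flip_horizontal(image)
```

Seeding the generator identically for a ‘clean’ B-scan and its ‘noisy’ counterpart would place the patches at the same locations in both, keeping the pair aligned.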

Using data augmentation, we were able to generate a total of 23,280 ‘clean’ and 23,280 corresponding ‘noisy’ B-scans that were added to the training dataset. An example of data augmentation performed on a single ‘clean’ and corresponding ‘noisy’ B-scan is shown in Fig. 5.

Denoising performance – qualitative analysis

All denoised single-frame B-scans were manually reviewed by expert observers (S.K.D. & G.S.) and qualitatively compared against their corresponding multi-frame B-scans to assess the following: 1) presence of deep learning induced image artifacts in the denoised B-scans; and 2) overall visibility of the ONH tissues.

Denoising performance – quantitative analysis

The following image quality metrics were used to assess the denoising performance of the proposed algorithm: (1) signal to noise ratio (SNR); (2) contrast to noise ratio (CNR); and (3) mean structural similarity index measure (MSSIM)106. These metrics were computed for the single-frame, multi-frame, and denoised B-scans (all from the independent testing set; 1,552 B-scans of each type).

The SNR (expressed in dB) was a measure of signal strength relative to noise. It was defined as:

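$$\mathrm{SNR} = -10 \times \log_{10}\left(\frac{\lVert f_{out} - f_{in} \rVert^{2}}{\lVert f_{out} \rVert^{2}}\right) \tag{1}$$

where $f_{out}$ is the pixel-intensity values of the ‘clean’ (multi-frame) B-scan, and $f_{in}$ is the pixel-intensity values of the B-scan (either the ‘noisy’ [single-frame] or the denoised B-scan) to be compared with $f_{out}$. A high SNR value indicates low noise in the given B-scan with respect to the ‘clean’ B-scan.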
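A reference-based SNR of this kind can be computed in a few lines of NumPy. The sketch assumes the SNR is −10·log10 of the ratio between the energy of the difference image and the energy of the ‘clean’ reference; the function and argument names are illustrative.

```python
import numpy as np


def snr_db(reference, candidate):
    """SNR in dB of `candidate` measured against the 'clean' `reference`.

    Assumed form: -10 * log10(||reference - candidate||^2 / ||reference||^2).
    """
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    residual_energy = np.sum((reference - candidate) ** 2)
    reference_energy = np.sum(reference ** 2)
    return -10.0 * np.log10(residual_energy / reference_energy)


# Toy example: a candidate that is uniformly 10% darker than the reference
reference = np.ones((4, 4))
candidate = 0.9 * reference
print(f"{snr_db(reference, candidate):.2f} dB")
```

In the toy example the residual energy is 1% of the reference energy, which corresponds to 20 dB; a candidate closer to the reference yields a higher value.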

The CNR was a measure of contrast difference between different tissue layers. It was defined as:

$$\mathrm{CNR}_i = \frac{\lvert \mu_r - \mu_b \rvert}{\sqrt{0.5\,(\sigma_r^{2} + \sigma_b^{2})}} \tag{2}$$

where $\mu_r$ and $\sigma_r^{2}$ denoted the mean and variance of pixel intensity for a chosen ROI within the tissue ‘i’ in a given B-scan, while $\mu_b$ and $\sigma_b^{2}$ represented the same for the background ROI. The background ROI was chosen as a 20 × 384 (in pixels) region at the top of the image (within the vitreous). A high CNR value suggested enhanced visibility of the given tissue.

The CNR was computed for the following tissues: (1) RNFL; (2) ganglion cell layer + inner plexiform layer (GCL + IPL); (3) all other retinal layers; (4) retinal pigment epithelium (RPE); (5) peripapillary choroid; (6) peripapillary sclera; and (7) lamina cribrosa (LC). Note that the CNR was computed only in the visible portions of the peripapillary sclera and LC. For each tissue, the CNR was computed as the mean of twenty-five ROIs (8 × 8 pixels each) in a given B-scan. All the ROIs were manually chosen in each tissue by an expert observer (G.S.) using a custom MATLAB (R2015a, MathWorks Inc., Natick, MA) graphical user interface.
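The per-tissue CNR could be computed as sketched below. The code assumes the CNR is the absolute difference of the ROI and background means divided by the square root of the average of their variances, uses the top 20 rows as background, and averages over a list of 8 × 8 ROIs; the ROI coordinates and function names are hypothetical (in the study the ROIs were chosen manually).

```python
import numpy as np


def cnr(tissue_roi, background_roi):
    """CNR of one tissue ROI against the background ROI.

    Assumed form: |mu_r - mu_b| / sqrt(0.5 * (var_r + var_b)).
    """
    mu_r, var_r = np.mean(tissue_roi), np.var(tissue_roi)
    mu_b, var_b = np.mean(background_roi), np.var(background_roi)
    return float(abs(mu_r - mu_b) / np.sqrt(0.5 * (var_r + var_b)))


def mean_tissue_cnr(bscan, roi_corners, roi_size=8, background_rows=20):
    """Mean CNR over several square ROIs given by their top-left (row, col) corners."""
    background = bscan[:background_rows, :]
    values = [cnr(bscan[r:r + roi_size, c:c + roi_size], background)
              for r, c in roi_corners]
    return float(np.mean(values))


# Toy check: means 5 and 1, both variances 1
tissue = np.array([[4.0, 6.0], [4.0, 6.0]])
background = np.array([[0.0, 2.0], [0.0, 2.0]])
toy_cnr = cnr(tissue, background)
print(toy_cnr)
```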

The structural similarity index measure (SSIM)106 was computed to assess the changes in tissue structures (i.e., edges) between the single-frame/denoised B-scans and the corresponding multi-frame B-scans (ground-truth). The SSIM was defined between −1 and +1, where −1 represented ‘no similarity’, and +1 ‘perfect similarity’. It was defined as:

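$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^{2} + \mu_y^{2} + C_1)(\sigma_x^{2} + \sigma_y^{2} + C_2)} \tag{3}$$

where x and y represented the denoised and multi-frame B-scan respectively; $\mu_x$, $\sigma_x$ denoted the mean intensity and standard deviation of the chosen ROI in B-scan x, while $\mu_y$, $\sigma_y$ represented the same for B-scan y; $\sigma_{xy}$ represented the cross-covariance of the ROIs in B-scans x and y. $C_1$ and $C_2$ (constants to stabilize the division) were chosen as 6.50 and 58.52, as recommended in a previous study106.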

The MSSIM was computed as the mean of SSIM from ROIs (8 × 8 pixels each) across a B-scan (stride = 1; scanned horizontally). It was defined as:

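$$\mathrm{MSSIM}(x, y) = \frac{1}{N}\sum_{i=1}^{N} \mathrm{SSIM}(x_i, y_i) \tag{4}$$

where $x_i$ and $y_i$ denoted the i-th pair of corresponding ROIs in B-scans x and y, and N the number of ROIs.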

Note that the SNR and MSSIM were computed for an entire B-scan, as opposed to the CNR, which was computed for individual tissues.
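The MSSIM over sliding 8 × 8 ROIs can be sketched with NumPy as follows. The code assumes the standard SSIM form with the constants quoted above (6.50 and 58.52), population (biased) variance and covariance within each window, and a stride of 1 in both directions; these details, like the function name, are assumptions rather than the authors' implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

C1, C2 = 6.50, 58.52  # stabilizing constants quoted in the text


def mssim(x, y, win=8):
    """Mean SSIM over all win x win windows (stride 1) of two equally sized images."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    xw = sliding_window_view(x, (win, win))
    yw = sliding_window_view(y, (win, win))
    mu_x = xw.mean(axis=(-2, -1))
    mu_y = yw.mean(axis=(-2, -1))
    var_x = xw.var(axis=(-2, -1))
    var_y = yw.var(axis=(-2, -1))
    cov_xy = (xw * yw).mean(axis=(-2, -1)) - mu_x * mu_y
    ssim = ((2 * mu_x * mu_y + C1) * (2 * cov_xy + C2)) / (
        (mu_x ** 2 + mu_y ** 2 + C1) * (var_x + var_y + C2))
    return float(ssim.mean())


rng = np.random.default_rng(0)
reference = rng.uniform(0, 255, size=(32, 32))
degraded = reference + rng.normal(0, 50, size=reference.shape)
print(mssim(reference, reference), mssim(reference, degraded))
```

An image compared with itself gives a value of 1, and adding noise lowers the score, which mirrors the single-frame versus denoised comparison reported in the Results.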

Denoising performance: effect of data augmentation

In an attempt to understand the significance of data augmentation, the entire process of training and testing was performed on two datasets: (1) baseline dataset (without data augmentation; 2,328 pairs of ‘clean’ and corresponding ‘noisy’ B-scans); and (2) data augmented dataset (23,280 pairs of ‘clean’ and corresponding ‘noisy’ B-scans).

Denoising performance: clinical reliability

The clinical reliability of the denoised B-scans was assessed by comparing the measurements of three clinically relevant ONH structural parameters obtained from the denoised radial B-scans and their corresponding multi-frame radial B-scans.

For each of the eight subjects in the testing set, we obtained 12 radial B-scans passing through the center of the Bruch’s membrane opening (BMO) from both the denoised volumes (single-frame volumes denoised using the proposed network) and their corresponding multi-frame volumes. A total of 192 pairs (8 subjects; both eyes; 12 B-scans per volume) of radial B-scans (denoised B-scans and their corresponding multi-frame B-scans) were obtained.

In all the radial B-scans, the peripapillary retinal nerve fiber layer thickness (p-RNFLT), the peripapillary ganglion cell complex layer thickness (ganglion cell layer + inner plexiform layer; p-GCCT), and the peripapillary choroidal thickness (p-CT) were manually measured by an expert observer (S.K.D.) using ImageJ107.

In each radial B-scan, the BMO points were defined as the extreme tips of the RPE. The BMO reference line was obtained by joining the two BMO points.

The p-RNFLT was computed as the distance between the inner limiting membrane and the posterior RNFL boundary taken at 1.7 mm on either side from the center of the BMO reference line.

The p-GCCT was computed as the distance between the posterior RNFL boundary and the posterior inner plexiform layer boundary taken at 1.7 mm on either side from the center of the BMO reference line.

The p-CT was computed as the distance between the posterior RPE boundary and the choroid-sclera interface taken at 1.7 mm on either side from the center of the BMO reference line.

All three structural parameters were calculated as the average of the measurements taken on either side of the BMO.
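The geometry of these measurements can be sketched as follows. In the study the thicknesses were measured manually in ImageJ; the code below only illustrates how the two measurement locations and the averaged value could be derived, assuming the BMO points are already expressed in millimetres as (x, z) coordinates. The function names are hypothetical.

```python
import math


def measurement_points(bmo_left, bmo_right, offset_mm=1.7):
    """Return the two points lying offset_mm from the BMO centre,
    measured along the BMO reference line on either side.

    bmo_left and bmo_right are (x, z) coordinates in mm.
    The two BMO points must be distinct (non-zero line length).
    """
    cx = (bmo_left[0] + bmo_right[0]) / 2
    cz = (bmo_left[1] + bmo_right[1]) / 2
    dx = bmo_right[0] - bmo_left[0]
    dz = bmo_right[1] - bmo_left[1]
    length = math.hypot(dx, dz)
    ux, uz = dx / length, dz / length  # unit vector along the reference line
    left = (cx - offset_mm * ux, cz - offset_mm * uz)
    right = (cx + offset_mm * ux, cz + offset_mm * uz)
    return left, right


def mean_thickness(left_mm, right_mm):
    """Average of the thickness values measured on either side of the BMO."""
    return (left_mm + right_mm) / 2


if __name__ == "__main__":
    # Hypothetical BMO points and thickness values, in mm.
    print(measurement_points((1.0, 2.0), (2.5, 2.0)))
    print(mean_thickness(0.10, 0.12))
```

The same two locations serve for all three parameters (p-RNFLT, p-GCCT and p-CT); only the pair of boundaries between which the distance is taken changes.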

For each structural parameter, paired t-tests were used to assess the difference in mean measurements obtained from the denoised radial B-scans and their corresponding multi-frame radial B-scans.

Finally, we also computed the percentage error (mean) in measurements (between denoised and multi-frame radial B-scans) for each parameter.
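A minimal sketch of these two statistics is given below. It assumes the percentage error is the mean absolute difference relative to the multi-frame measurement, which the text does not state explicitly. The function names and the sample values are hypothetical. Only the paired t statistic and its degrees of freedom are computed; obtaining the p-value requires the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
from statistics import fmean, stdev


def paired_t_statistic(a, b):
    """Paired t statistic and degrees of freedom for two matched samples.

    Requires at least two pairs and non-identical differences
    (the sample standard deviation must be non-zero).
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def mean_percentage_error(measured, reference):
    """Mean absolute percentage error of `measured` relative to `reference`."""
    return fmean(abs(m - r) / r * 100 for m, r in zip(measured, reference))


if __name__ == "__main__":
    # Hypothetical thickness values in micrometres, for illustration only.
    denoised = [101.0, 99.0, 102.0, 98.0]
    multi_frame = [100.0, 100.0, 100.0, 100.0]
    print(paired_t_statistic(denoised, multi_frame))
    print(mean_percentage_error(denoised, multi_frame))
```

A t statistic close to zero, as in this toy example, is consistent with no systematic difference between the two sets of measurements.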

Acknowledgements

Singapore Ministry of Education Academic Research Funds Tier 1 (R-155-000-168-112 [A.H.T.]; R-397-000-294-114 [M.J.A.G.]); National University of Singapore (NUS) Young Investigator Award Grant (NUSYIA_FY16_P16, R-155-000-180-133; [A.H.T.]); Singapore Ministry of Education Academic Research Funds Tier 2 (R-397-000-280-112, R-397-000-308-112 [M.J.A.G.]; MOE2016-T2-2-135 [A.H.T.]); National Medical Research Council (Grant NMRC/STAR/0023/2014 [T.A.]).

Author Contributions

S.K.D. and G.S. conceived the study, designed the experiments, and wrote the paper; T.H.P. assisted in the design of the algorithm; X.W. and T.A.T. assisted in imaging all the subjects and edited the manuscript; S.P., T.A., and L.S. edited the manuscript; A.H.T. and M.J.A.G. supervised the study and edited the manuscript.

Data Availability

The dataset used for the training, validation, and testing of the proposed deep learning network was obtained from the Singapore National Eye Center and transferred to the National University of Singapore in a de-identified format. The study was approved by the SingHealth Centralized Institutional Review. Due to regulations, SingHealth does not authorize the dataset to be shared publicly.

Code Availability

The authors wish to license the code to another party and thus are unable to release the entire codebase publicly at this stage. However, all the experiments, network descriptions, and data augmentations are described in sufficient detail to enable independent replication with non-proprietary libraries.

Competing Interests

Dr. Michaël J. A. Girard and Dr. Alexandre H. Thiéry are co-founders of Abyss Processing Pte Ltd.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Sripad Krishna Devalla, Giridhar Subramanian, Alexandre H. Thiéry and Michaël J. A. Girard contributed equally.

Contributor Information

Alexandre H. Thiéry, Email: a.h.thiery@nus.edu.sg

Michaël J. A. Girard, Email: mgirard@nus.edu.sg

References

- 1.Adhi M, Duker JS. Optical coherence tomography – current and future applications. Current opinion in ophthalmology. 2013;24:213–221. doi: 10.1097/ICU.0b013e32835f8bf8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Bussel II, Wollstein G, Schuman JS. OCT for glaucoma diagnosis, screening and detection of glaucoma progression. British Journal of Ophthalmology. 2014;98:ii15. doi: 10.1136/bjophthalmol-2013-304326. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Maldonado RS, Mettu P, El-Dairi M, Bhatti MT. The application of optical coherence tomography in neurologic diseases. Neurology: Clinical Practice. 2015;5:460–469. doi: 10.1212/CPJ.0000000000000187. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.van Velthoven ME, Faber DJ, Verbraak FD, van Leeuwen TG, de Smet MD. Recent developments in optical coherence tomography for imaging the retina. Progress in retinal and eye research. 2007;26:57–77. doi: 10.1016/j.preteyeres.2006.10.002. [DOI] [PubMed] [Google Scholar]

- 5.Du Yongzhao, Liu Gangjun, Feng Guoying, Chen Zhongping. Speckle reduction in optical coherence tomography images based on wave atoms. Journal of Biomedical Optics. 2014;19(5):056009. doi: 10.1117/1.JBO.19.5.056009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Bashkansky M, Reintjes J. Statistics and reduction of speckle in optical coherence tomography. Optics letters. 2000;25:545–547. doi: 10.1364/OL.25.000545. [DOI] [PubMed] [Google Scholar]

- 7.Schmitt, J. M., Xiang, S. H. & Kin Man, Y. Speckle in optical coherence tomography. Journal of Biomedical Optics 4 (January 1999). [DOI] [PubMed]

- 8.Baghaie A, Yu Z, D’Souza RM. State-of-the-art in retinal optical coherence tomography image analysis. Quantitative Imaging in Medicine and Surgery. 2015;5:603–617. doi: 10.3978/j.issn.2223-4292.2015.07.02. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Szkulmowski M, et al. Efficient reduction of speckle noise in Optical Coherence Tomography. Optics express. 2012;20:1337–1359. doi: 10.1364/oe.20.001337. [DOI] [PubMed] [Google Scholar]

- 10.Esmaeili M, Dehnavi AM, Rabbani H, Hajizadeh F. Speckle Noise Reduction in Optical Coherence Tomography Using Two-dimensional Curvelet-based Dictionary Learning. Journal of Medical Signals and Sensors. 2017;7:86–91. doi: 10.4103/2228-7477.205592. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Jian Z, et al. Speckle attenuation in optical coherence tomography by curvelet shrinkage. Optics letters. 2009;34:1516–1518. doi: 10.1364/OL.34.001516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Hardin, J. S., Wong, G. T. & Seth, C. N., Chao, D., and Vizzeri, G. Factors Affecting Cirrus-HD OCT Optic Disc Scan Quality: A Review with Case Examples. Journal of Ophthalmology 2015 (2015). [DOI] [PMC free article] [PubMed]

- 13.Mansouri K, Medeiros FA, Tatham AJ, Marchase N, Weinreb RN. Evaluation of Retinal and Choroidal Thickness by Swept-Source Optical Coherence Tomography: Repeatability and Assessment of Artifacts. American journal of ophthalmology. 2014;157:1022–1032. doi: 10.1016/j.ajo.2014.02.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Asrani S, Essaid L, Alder BD, Santiago-Turla C. Artifacts in spectral-domain optical coherence tomography measurements in glaucoma. JAMA ophthalmology. 2014;132:396–402. doi: 10.1001/jamaophthalmol.2013.7974. [DOI] [PubMed] [Google Scholar]

- 15.Liu Y, et al. Patient characteristics associated with artifacts in Spectralis optical coherence tomography imaging of the retinal nerve fiber layer in glaucoma. American journal of ophthalmology. 2015;159:565–576.e562. doi: 10.1016/j.ajo.2014.12.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Kim KE, Jeoung JW, Park KH, Kim DM, Kim SH. Diagnostic classification of macular ganglion cell and retinal nerve fiber layer analysis: differentiation of false-positives from glaucoma. Ophthalmology. 2015;122:502–510. doi: 10.1016/j.ophtha.2014.09.031. [DOI] [PubMed] [Google Scholar]

- 17.Balasubramanian M, Bowd C, Vizzeri G, Weinreb RN, Zangwill LM. Effect of image quality on tissue thickness measurements obtained with spectral-domain optical coherence tomography. Optics express. 2009;17:4019–4036. doi: 10.1364/OE.17.004019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Mansberger SL, Menda SA, Fortune BA, Gardiner SK, Demirel S. Automated Segmentation Errors When Using Optical Coherence Tomography to Measure Retinal Nerve Fiber Layer Thickness in Glaucoma. American journal of ophthalmology. 2017;174:1–8. doi: 10.1016/j.ajo.2016.10.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Iftimia N, Bouma BE, Tearney GJ. Speckle reduction in optical coherence tomography by “path length encoded” angular compounding. J Biomed Opt. 2003;8:260–263. doi: 10.1117/1.1559060. [DOI] [PubMed] [Google Scholar]

- 20.Desjardins AE, et al. Angle-resolved optical coherence tomography with sequential angular selectivity for speckle reduction. Optics express. 2007;15:6200–6209. doi: 10.1364/OE.15.006200. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Bajraszewski T, et al. Improved spectral optical coherence tomography using optical frequency comb. Optics express. 2008;16:4163–4176. doi: 10.1364/OE.16.004163. [DOI] [PubMed] [Google Scholar]

- 22.Kennedy BF, Hillman TR, Curatolo A, Sampson DD. Speckle reduction in optical coherence tomography by strain compounding. Optics letters. 2010;35:2445–2447. doi: 10.1364/ol.35.002445. [DOI] [PubMed] [Google Scholar]

- 23.Klein T, Andre R, Wieser W, Pfeiffer T, Huber R. Joint aperture detection for speckle reduction and increased collection efficiency in ophthalmic MHz OCT. Biomed Opt Express. 2013;4:619–634. doi: 10.1364/boe.4.000619. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Pircher M, Gotzinger E, Leitgeb R, Fercher AF, Hitzenberger CK. Speckle reduction in optical coherence tomography by frequency compounding. J Biomed Opt. 2003;8:565–569. doi: 10.1117/1.1578087. [DOI] [PubMed] [Google Scholar]

- 25.Schmitt JM. Array detection for speckle reduction in optical coherence microscopy. Physics in medicine and biology. 1997;42:1427–1439. doi: 10.1088/0031-9155/42/7/015. [DOI] [PubMed] [Google Scholar]

- 26.Schmitt JM. Restoration of Optical Coherence Images of Living Tissue Using the CLEAN Algorithm. J Biomed Opt. 1998;3:66–75. doi: 10.1117/1.429863. [DOI] [PubMed] [Google Scholar]

- 27.Schmitt JM, Xiang SH, Yung KM. Speckle in optical coherence tomography. J Biomed Opt. 1999;4:95–105. doi: 10.1117/1.429925. [DOI] [PubMed] [Google Scholar]

- 28.Behar V, Adam D, Friedman Z. A new method of spatial compounding imaging. Ultrasonics. 2003;41:377–384. doi: 10.1016/S0041-624X(03)00105-7. [DOI] [PubMed] [Google Scholar]

- 29.Wu W, Tan O, Pappuru RR, Duan H, Huang D. Assessment of frame-averaging algorithms in OCT image analysis. Ophthalmic surgery, lasers & imaging retina. 2013;44:168–175. doi: 10.3928/23258160-20130313-09. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Chen C-L, et al. Virtual Averaging Making Nonframe-Averaged Optical Coherence Tomography Images Comparable to Frame-Averaged Images. Translational Vision Science & Technology. 2016;5:1. doi: 10.1167/tvst.5.1.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Chitchian S, Mayer MA, Boretsky AR, van Kuijk FJ, Motamedi M. Retinal optical coherence tomography image enhancement via shrinkage denoising using double-density dual-tree complex wavelet transform. Journal of Biomedical Optics. 2012;17:116009. doi: 10.1117/1.JBO.17.11.116009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.R. Daniel Ferguson, D. X. H., Lelia Adelina Paunescu, Siobahn Beaton, Joel S. Schuman. Tracking Optical Coherence Tomography. Optics letters 29 (2004). [DOI] [PMC free article] [PubMed]

- 33.Ozcan A, Bilenca A, Desjardins AE, Bouma BE, Tearney GJ. Speckle reduction in optical coherence tomography images using digital filtering. Journal of the Optical Society of America. A, Optics, image science, and vision. 2007;24:1901–1910. doi: 10.1364/JOSAA.24.001901. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Bernardes R, et al. Improved adaptive complex diffusion despeckling filter. Optics express. 2010;18:24048–24059. doi: 10.1364/oe.18.024048. [DOI] [PubMed] [Google Scholar]

- 35.Wong A, Mishra A, Bizheva K, Clausi DA. General Bayesian estimation for speckle noise reduction in optical coherence tomography retinal imagery. Optics express. 2010;18:8338–8352. doi: 10.1364/oe.18.008338. [DOI] [PubMed] [Google Scholar]

- 36.Bian, L., Suo, J., Chen, F. & Dai, Q. Multiframe denoising of high-speed optical coherence tomography data using interframe and intraframe priors. Journal of Biomedical Optics 20, 1–11, 11 (2015). [DOI] [PubMed]

- 37.Grzywacz NM, et al. Statistics of optical coherence tomography data from human retina. IEEE transactions on medical imaging. 2010;29:1224–1237. doi: 10.1109/tmi.2009.2038375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Hossein Rabbani, M. S. & Abramoff, M. D. Optical Coherence Tomography Noise Reduction Using Anisotropic Local Bivariate Gaussian Mixture Prior in 3D Complex Wavelet Domain. International Journal of Biomedical Imaging 2013 (2013). [DOI] [PMC free article] [PubMed]

- 39.Li M, Idoughi R, Choudhury B, Heidrich W. Statistical model for OCT image denoising. Biomedical Optics Express. 2017;8:3903–3917. doi: 10.1364/BOE.8.003903. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Mayer MA, et al. Wavelet denoising of multiframe optical coherence tomography data. Biomedical Optics Express. 2012;3:572–589. doi: 10.1364/BOE.3.000572. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Agravat, R. R. & Raval, M. S. In Soft Computing Based Medical Image Analysis (eds Nilanjan Dey, Amira S. Ashour, Fuqian Shi, & Valentina E. Balas) 183–201 (Academic Press, 2018).

- 42.Akkus Z, Galimzianova A, Hoogi A, Rubin DL, Erickson BJ. Deep Learning for Brain MRI Segmentation: State of the Art and Future Directions. Journal of Digital Imaging. 2017;30:449–459. doi: 10.1007/s10278-017-9983-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Cui, Z., Yang, J. & Qiao, Y. In 2016 35th Chinese Control Conference (CCC). 7026–7031.

- 44.Liu J, et al. Applications of deep learning to MRI images: A survey. Big Data Mining and Analytics. 2018;1:1–18. doi: 10.26599/BDMA.2018.9020001. [DOI] [Google Scholar]

- 45.Mohsen H, El-Dahshan E-SA, El-Horbaty E-SM, Salem A-BM. Classification using deep learning neural networks for brain tumors. Future Computing and Informatics Journal. 2018;3:68–71. doi: 10.1016/j.fcij.2017.12.001. [DOI] [Google Scholar]

- 46.Viktor Wegmayr, S. A. & Buhmann, J. Classification of brain MRI with big data and deep 3D convolutional neural networks. Proceedings Volume 10575, Medical Imaging 2018: Computer-Aided Diagnosis; 105751S (2018).

- 47.Bertrand, H., Perrot, M., Ardon, R. & Bloch, I. Classification of MRI data using Deep Learning and Gaussian Process-based Model Selection. Preprint at, https://arxiv.org/abs/1701.04355 (2017).

- 48.Benou A, Veksler R, Friedman A, Riklin Raviv T. Ensemble of expert deep neural networks for spatio-temporal denoising of contrast-enhanced MRI sequences. Medical image analysis. 2017;42:145–159. doi: 10.1016/j.media.2017.07.006. [DOI] [PubMed] [Google Scholar]

- 49.Jiang, D. et al. Denoising of 3D magnetic resonance images with multi-channel residual learning of convolutional neural network. Preprint at, https://arxiv.org/abs/1712.08726 (2017).

- 50.Gondara, L. in 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW). 241–246 (2016).

- 51.Sui X, et al. Choroid segmentation from Optical Coherence Tomography with graph-edge weights learned from deep convolutional neural networks. Neurocomputing. 2017;237:332–341. doi: 10.1016/j.neucom.2017.01.023. [DOI] [Google Scholar]

- 52.Al-Bander, B., Williams, B. M., Al-Taee, M. A., Al-Nuaimy, W. & Zheng, Y. In 2017 10th International Conference on Developments in eSystems Engineering (DeSE). 182–187 (2017).

- 53.Zhang, Q., Cui, Z., Niu, X., Geng, S. & Qiao, Y. In Neural Information Processing. (eds Derong Liu et al.) 364-372 (Springer International Publishing).

- 54.Venhuizen FG, et al. Robust total retina thickness segmentation in optical coherence tomography images using convolutional neural networks. Biomedical Optics Express. 2017;8:3292–3316. doi: 10.1364/BOE.8.003292. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Fang L, et al. Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search. Biomedical Optics Express. 2017;8:2732–2744. doi: 10.1364/BOE.8.002732. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Lu Donghuan, Heisler Morgan, Lee Sieun, Ding Gavin Weiguang, Navajas Eduardo, Sarunic Marinko V., Beg Mirza Faisal. Deep-learning based multiclass retinal fluid segmentation and detection in optical coherence tomography images using a fully convolutional neural network. Medical Image Analysis. 2019;54:100–110. doi: 10.1016/j.media.2019.02.011. [DOI] [PubMed] [Google Scholar]

- 57.Roy Abhijit Guha, Conjeti Sailesh, Karri Sri Phani Krishna, Sheet Debdoot, Katouzian Amin, Wachinger Christian, Navab Nassir. ReLayNet: retinal layer and fluid segmentation of macular optical coherence tomography using fully convolutional networks. Biomedical Optics Express. 2017;8(8):3627. doi: 10.1364/BOE.8.003627. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Devalla SK, et al. DRUNET: a dilated-residual U-Net deep learning network to segment optic nerve head tissues in optical coherence tomography images. Biomedical Optics Express. 2018;9:3244–3265. doi: 10.1364/BOE.9.003244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Devalla SK, et al. A Deep Learning Approach to Digitally Stain Optical Coherence Tomography Images of the Optic Nerve Head. Investigative ophthalmology & visual science. 2018;59:63–74. doi: 10.1167/iovs.17-22617. [DOI] [PubMed] [Google Scholar]

- 60.Awais, M., Müller, H., Tang, T. B. & Meriaudeau, F. In 2017 IEEE International Conference on Signal and Image Processing Applications (ICSIPA). 489–492 (2017).

- 61.Prahs P, et al. OCT-based deep learning algorithm for the evaluation of treatment indication with anti-vascular endothelial growth factor medications. Graefe’s Archive for Clinical and Experimental Ophthalmology. 2018;256:91–98. doi: 10.1007/s00417-017-3839-y. [DOI] [PubMed] [Google Scholar]

- 62.Lee CS, Baughman DM, Lee AY. Deep Learning is Effective for Classifying Normal versus Age-Related Macular Degeneration OCT Images. Ophthalmology Retina. 2017;1:322–327. doi: 10.1016/j.oret.2016.12.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Liu, Y., Cheng, M. M., Hu, X., Wang, K. & Bai, X. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 5872–5881.

- 64.Al-Mujaini A, Wali UK, Azeem S. Optical Coherence Tomography: Clinical Applications in Medical Practice. Oman Medical Journal. 2013;28:86–91. doi: 10.5001/omj.2013.24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Bowd C, Weinreb RN, Williams JM, Zangwill LM. The retinal nerve fiber layer thickness in ocular hypertensive, normal, and glaucomatous eyes with optical coherence tomography. Archives of ophthalmology (Chicago, Ill.: 1960) 2000;118:22–26. doi: 10.1001/archopht.118.1.22. [DOI] [PubMed] [Google Scholar]

- 66.McLellan GJ, Rasmussen CA. Optical Coherence Tomography for the Evaluation of Retinal and Optic Nerve Morphology in Animal Subjects: Practical Considerations. Veterinary ophthalmology. 2012;15:13–28. doi: 10.1111/j.1463-5224.2012.01045.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Miki A, et al. Rates of retinal nerve fiber layer thinning in glaucoma suspect eyes. Ophthalmology. 2014;121:1350–1358. doi: 10.1016/j.ophtha.2014.01.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Ojima T, et al. Measurement of retinal nerve fiber layer thickness and macular volume for glaucoma detection using optical coherence tomography. Japanese journal of ophthalmology. 2007;51:197–203. doi: 10.1007/s10384-006-0433-y. [DOI] [PubMed] [Google Scholar]

- 69.Downs JC, et al. Posterior scleral thickness in perfusion-fixed normal and early-glaucoma monkey eyes. Investigative ophthalmology & visual science. 2001;42:3202–3208. [PubMed] [Google Scholar]

- 70.Lee KM, et al. Anterior lamina cribrosa insertion in primary open-angle glaucoma patients and healthy subjects. PLoS One. 2014;9:e114935. doi: 10.1371/journal.pone.0114935. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Park SC, et al. Lamina cribrosa depth in different stages of glaucoma. Investigative ophthalmology & visual science. 2015;56:2059–2064. doi: 10.1167/iovs.14-15540. [DOI] [PubMed] [Google Scholar]

- 72.Quigley HA, Addicks EM. Regional differences in the structure of the lamina cribrosa and their relation to glaucomatous optic nerve damage. Archives of ophthalmology (Chicago, Ill.: 1960) 1981;99:137–143. doi: 10.1001/archopht.1981.03930010139020. [DOI] [PubMed] [Google Scholar]

- 73.Quigley HA, Addicks EM, Green WR, Maumenee AE. Optic nerve damage in human glaucoma. II. The site of injury and susceptibility to damage. Archives of ophthalmology (Chicago, Ill.: 1960) 1981;99:635–649. doi: 10.1001/archopht.1981.03930010635009. [DOI] [PubMed] [Google Scholar]

- 74.Regatieri CV, Branchini L, Duker JS. The Role of Spectral-Domain OCT in the Diagnosis and Management of Neovascular Age-Related Macular Degeneration. Ophthalmic surgery, lasers & imaging: the official journal of the International Society for Imaging in the Eye. 2011;42:S56–S66. doi: 10.3928/15428877-20110627-05. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Health Quality, O. Optical Coherence Tomography for Age-Related Macular Degeneration and Diabetic Macular Edema: An Evidence-Based Analysis. Ontario Health Technology Assessment Series 9, 1–22 (2009). [PMC free article] [PubMed]

- 76.Srinivasan VJ, et al. High-Definition and 3-dimensional Imaging of Macular Pathologies with High-speed Ultrahigh-Resolution Optical Coherence Tomography. Ophthalmology. 2006;113:2054.e2051–2054.2014. doi: 10.1016/j.ophtha.2006.05.046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Alshareef RA, et al. Prevalence and Distribution of Segmentation Errors in Macular Ganglion Cell Analysis of Healthy Eyes Using Cirrus HD-OCT. PLOS ONE. 2016;11:e0155319. doi: 10.1371/journal.pone.0155319. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Stankiewicz A., Marciniak T., Dąbrowski A., Stopa M., Rakowicz P., Marciniak E. Denoising methods for improving automatic segmentation in OCT images of human eye. Bulletin of the Polish Academy of Sciences Technical Sciences. 2017;65(1):71–78. doi: 10.1515/bpasts-2017-0009. [DOI] [Google Scholar]

- 79.Scoles D, et al. Assessing Photoreceptor Structure Associated with Ellipsoid Zone Disruptions Visualized with Optical Coherence Tomography. Retina (Philadelphia, Pa.) 2016;36:91–103. doi: 10.1097/IAE.0000000000000618. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Kern TS, Berkowitz BA. Photoreceptors in diabetic retinopathy. Journal of Diabetes Investigation. 2015;6:371–380. doi: 10.1111/jdi.12312. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Baba T, et al. Correlation of visual recovery and presence of photoreceptor inner/outer segment junction in optical coherence images after successful macular hole repair. Retina. 2008;28:453–458. doi: 10.1097/IAE.0b013e3181571398. [DOI] [PubMed] [Google Scholar]

- 82.Hayashi H, et al. Association between Foveal Photoreceptor Integrity and Visual Outcome in Neovascular Age-related Macular Degeneration. American journal of ophthalmology. 2009;148:83–89.e81. doi: 10.1016/j.ajo.2009.01.017. [DOI] [PubMed] [Google Scholar]

- 83.Flatter JA, et al. Outer retinal structure after closed-globe blunt ocular trauma. Retina. 2014;34:2133–2146. doi: 10.1097/iae.0000000000000169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Bambo MP, et al. Influence of cataract surgery on repeatability and measurements of spectral domain optical coherence tomography. British Journal of Ophthalmology. 2014;98:52. doi: 10.1136/bjophthalmol-2013-303752. [DOI] [PubMed] [Google Scholar]

- 85.Kok PHB, et al. The relationship between the optical density of cataract and its influence on retinal nerve fibre layer thickness measured with spectral domain optical coherence tomography. Acta Ophthalmologica. 2012;91:418–424. doi: 10.1111/j.1755-3768.2012.02514.x. [DOI] [PubMed] [Google Scholar]

- 86.Mwanza JC, et al. Effect of cataract and its removal on signal strength and peripapillary retinal nerve fiber layer optical coherence tomography measurements. Journal of glaucoma. 2011;20:37–43. doi: 10.1097/IJG.0b013e3181ccb93b. [DOI] [PubMed] [Google Scholar]

- 87.Savini G, Zanini M, Barboni P. Influence of pupil size and cataract on retinal nerve fiber layer thickness measurements by Stratus OCT. Journal of glaucoma. 2006;15:336–340. doi: 10.1097/01.ijg.0000212244.64584.c2. [DOI] [PubMed] [Google Scholar]

- 88.Stein DM, et al. Effect of Corneal Drying on Optical Coherence Tomography. Ophthalmology. 2006;113:985–991. doi: 10.1016/j.ophtha.2006.02.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Nicola G. Ghazi, J. W. M. The effect of lubricating eye drops on optical coherence tomography imaging of the retina. Digital Journal of Ophthalmology 15 (2009). [DOI] [PMC free article] [PubMed]

- 90.Smith M, Frost A, Graham CM, Shaw S. Effect of pupillary dilatation on glaucoma assessments using optical coherence tomography. The British Journal of Ophthalmology. 2007;91:1686–1690. doi: 10.1136/bjo.2006.113134. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Moisseiev E, et al. Pupil dilation using drops vs gel: a comparative study. Eye. 2015;29:815. doi: 10.1038/eye.2015.47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Ferguson RD, Hammer DX, Paunescu LA, Beaton S, Schuman JS. Tracking optical coherence tomography. Optics letters. 2004;29:2139–2141. doi: 10.1364/OL.29.002139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Shin JW, et al. The Effect of Optic Disc Center Displacement on Retinal Nerve Fiber Layer Measurement Determined by Spectral Domain Optical Coherence Tomography. PLOS ONE. 2016;11:e0165538. doi: 10.1371/journal.pone.0165538. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Wong IY, Koizumi H, Lai WW. Enhanced depth imaging optical coherence tomography. Ophthalmic surgery, lasers & imaging: the official journal of the International Society for Imaging in the Eye. 2011;42(Suppl):S75–84. doi: 10.3928/15428877-20110627-07. [DOI] [PubMed] [Google Scholar]

- 95.Hammer D, et al. Advanced scanning methods with tracking optical coherence tomography. Optics express. 2005;13:7937–7947. doi: 10.1364/OPEX.13.007937. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 96.Wells WM, 3rd, Viola P, Atsumi H, Nakajima S, Kikinis R. Multi-modal volume registration by maximization of mutual information. Medical image analysis. 1996;1:35–51. doi: 10.1016/S1361-8415(01)80004-9. [DOI] [PubMed] [Google Scholar]

- 97.O. Ronneberger PF, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2015;9351:234–241. [Google Scholar]

- 98.He, K., Zhang, X., Ren, S. & Sun, J. in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 770–778 (2016).

- 99.Yu, F. & Koltun, V. in 4th International Conference on Learning Representations, ICLR 2016 (Poster) (2016).

- 100.Clevert, D.-A., Unterthiner, T. & Hochreiter, S. in 4th International Conference on Learning Representations, ICLR 2016 (Poster) (Feb 2016).

- 101.Sergey Ioffe, C. S. In Proceedings of the 32nd International Conference on Machine Learning - Volume 37 448–456 (JMLR.org, Lille, France, 2015).

- 102.Kingma, D. P. & Ba, J. in 3rd International Conference for Learning Representations, ICLR 2015 (Poster) (2015).

- 103.Simard, P. Y. & John, D. S. C. Platt Best Practices for Convolutional Neural Networks Applied to Visual Document Analysis Proceedings of the Seventh International Conference on Document Analysis and Recognition (ICDAR 2003) (2003).

- 104.Wu Z, Xu G, Weinreb RN, Yu M, Leung CK. Optic Nerve Head Deformation in Glaucoma: A Prospective Analysis of Optic Nerve Head Surface and Lamina Cribrosa Surface Displacement. Ophthalmology. 2015;122:1317–1329. doi: 10.1016/j.ophtha.2015.02.035. [DOI] [PubMed] [Google Scholar]

- 105.Girard MJ, Strouthidis NG, Ethier CR, Mari JM. Shadow removal and contrast enhancement in optical coherence tomography images of the human optic nerve head. Investigative ophthalmology & visual science. 2011;52:7738–7748. doi: 10.1167/iovs.10-6925. [DOI] [PubMed] [Google Scholar]

- 106.Zhou W, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing. 2004;13:600–612. doi: 10.1109/TIP.2003.819861. [DOI] [PubMed] [Google Scholar]

- 107.Rueden, C. T. S. et al. ImageJ2: ImageJ for the next generation of scientific image data. BMC Bioinformatics 18 (2017). [DOI] [PMC free article] [PubMed]
