Abstract

The increasing prevalence of antimicrobial-resistant bacteria drives the need for advanced methods to identify antimicrobial-resistance (AMR) genes in bacterial pathogens. With the availability of whole genome sequences, best-hit methods can be used to identify AMR genes by comparing unknown sequences with known AMR sequences in existing online repositories. Nevertheless, these methods may not perform well when identifying resistance genes whose sequences have low identity to known sequences. We present a machine learning approach that uses protein sequences, with sequence identity ranging between 10% and 90%, as an alternative to conventional DNA sequence alignment-based approaches to identify putative AMR genes in Gram-negative bacteria. By using game theory to choose which protein characteristics to use in our machine learning model, we can predict AMR protein sequences for Gram-negative bacteria with an accuracy ranging from 93% to 99%. In order to obtain similar classification results, identity thresholds as low as 53% were required when using BLASTp.

Subject terms: Bioinformatics, Machine learning, Applied microbiology, Computational science, Computer science

Introduction

Bacteria can cause bloodstream infections, and with the increasing prevalence of antimicrobial resistance (AMR) in bacteria, treatment can become complicated1–7. AMR results in increased mortality and longer hospitalization. Every year, millions of people in the United States are infected by AMR bacteria, and thousands of people die8,9. Hence, accurate identification of AMR in bacteria is essential for the proper administration of appropriate antibacterial agents. To detect AMR in bacteria, in vitro cultures are used to monitor the growth of bacteria for different concentrations of drugs and may require several days to obtain accurate antibiotic susceptibility results10. In addition, many bacteria cannot be cultured, and a large number of these are becoming available via metagenomic studies11,12.

With breakthroughs in whole genome sequencing (WGS) methods, it is possible to apply sequence alignment approaches such as best-hit methods to identify AMR genes using sequence similarity in public databases4,13,14. These methods show good performance in identifying known and highly conserved AMR genes and produce a small number of false positives, i.e., non-AMR genes detected as AMR genes15. However, they may not be able to find AMR sequences that have high dissimilarity with known AMR genes, producing unacceptable numbers of false negatives13,16,17. A machine learning approach can be used as an alternative solution for identifying putative AMR genes. To train a machine learning algorithm to detect AMR sequences, training data are needed in the form of protein sequences for known AMR genes (positive training data) and protein sequences that are known not to be AMR genes (negative training data). From these training data, we must determine what protein characteristics distinguish AMR genes from non-AMR genes. These characteristics are known as features. Numerous features exist for protein sequences, and a goal of any accurate machine learning model is to determine which features provide the most useful information. Recently, two studies have proposed machine learning approaches for predicting AMR genes. Arango-Argoty et al. discuss a deep learning approach, DeepARG18, to identify novel antimicrobial resistance genes from metagenomic data. DeepARG employs an artificial neural network based classifier, taking into account the similarity distribution of sequences to all known AMR genes. The other approach is pairwise comparative modelling (PCM)19, which leverages protein structure information for AMR sequence identification. 
PCM builds two structural models for each candidate sequence with respect to AMR and non-AMR sequences, and a machine learning model is applied to find the best structural model for determining whether the sequence belongs to an AMR or non-AMR family. In contrast to these earlier works, we consider all possible candidate features for protein sequences based on the composition, physicochemical, evolutionary, and structural characteristics of protein sequences whose sequence identity ranges between 10% and 90%.

The earlier works discussed above did not apply any feature reduction strategy to find the most relevant, non-redundant, and interdependent features. Identifying important features from a set of features to attain high classification accuracy is a challenging problem in machine learning because irrelevant or redundant features can compromise accuracy. Several feature selection techniques can be used to obtain an optimal feature set, and these are broadly classified into three categories: embedded, wrapper, and filter approaches. Both embedded and wrapper methods20–22 are tightly coupled with a particular learning algorithm, and both achieve good classification accuracy. However, these approaches have a high computational overhead, and the features they select generalize less well. Alternatively, filter methods measure feature relevance by considering the intrinsic properties of the data23–25. In addition, the filter approach has a lower computational cost than the other methods, and it provides comparable accuracy for most classifiers26. Thus, we considered only filter methods for our feature selection approach.

Most filter methods reject features that are poorly predictive when used alone, even though they may work well when combined with other variables26,27. In contrast, in this paper we introduce a game theoretic dynamic weighting based feature evaluation (GTDWFE) approach in which features are selected one at a time based on relevance and redundancy measurements with dynamic re-weighting of candidate features based on their interdependency with the current selected features. Re-weighting is determined using a Banzhaf power index28, and features are not necessarily rejected because they are poorly predictive as single variables. Instead we consider how features work together as a whole using a game theory approach. In simple terms, game theory is the study of mathematical models for determining how the behavior of one participant depends on the behavior of other participants. We consider features from the protein sequences—both AMR and non-AMR—of the Gram-negative bacterial genera Acinetobacter, Klebsiella, Campylobacter, Salmonella, and Escherichia for acetyltransferase (aac), β-lactamase (bla), and dihydrofolate reductase (dfr). Next we apply our game theory approach to select a small subset of features from the bacterial protein sequences, and finally we utilize this small feature subset to predict AMR genes using a support vector machine (SVM)29. We use protein sequences from different Gram-negative bacteria Pseudomonas spp., Vibrio spp., and Enterobacter spp. to test our classifier. We also make a performance comparison between our classifier and BLASTp.

Results

Interdependent group size based comparative analysis

We compared the classification performance of our method using an SVM with interdependent group sizes of δ = 1, 2, and 3, where δ is used in the computation of the Banzhaf power index. Using the top k features and 30 different feature subsets (k = 1, 2, …, 30) of Acinetobacter, Klebsiella, Campylobacter, Salmonella, and Escherichia and dividing the dataset into 70%/30% training/test samples, we tuned the SVM based on an equal number of positive and negative samples to determine the best parameters for the SVM models. We selected the radial basis function kernel for each SVM30,31 but different C and γ values for each feature subset. C is used to control the cost of misclassification in the SVM, and γ is the kernel parameter. We used the resulting models, trained using 70% of the dataset, to identify putative AMR genes in the remaining 30%. The number of features in the best feature subset obtained with oversampling and undersampling for each δ value is shown in Table 1, with the classification accuracy achieved for that subset in parentheses. Oversampling and undersampling are methods in data analytics for balancing sets of data for which there are inherently more samples of one class than another. For this work, the number of positive samples (AMR) is smaller than the number of negative samples (non-AMR). To compensate, we duplicated the positive training samples (oversampling) or removed some of the negative training samples (undersampling) to achieve balanced datasets.

Table 1.

Classification performance for different δ values (corresponding classification accuracy in parentheses).

| AMR | Oversampling, δ = 1 | Oversampling, δ = 2 | Oversampling, δ = 3 | Undersampling, δ = 1 | Undersampling, δ = 2 | Undersampling, δ = 3 |
|---|---|---|---|---|---|---|
| acetyltransferase (aac) | 6 (0.97) | 6 (0.97) | 6 (0.97) | 5 (0.97) | 5 (0.97) | 5 (0.97) |
| β-lactamase (bla) | 15 (1) | 19 (1) | 18 (1) | 9 (0.97) | 9 (0.97) | 11 (0.97) |
| dihydrofolate reductase (dfr) | 5 (1) | 5 (1) | 5 (1) | 18 (0.96) | 28 (1) | 25 (1) |

The classification accuracies achieved for aac, bla, and dfr vary from 96% to 100%. We also determined δ = 3 to be the overall best interdependent group size, so we set δ to 3 for the remainder of our analyses. The C and γ parameter values for the SVM radial model for selecting each best feature subset with δ = 3 are listed in a supplementary table (Table S1).

Feature selection method comparative analysis

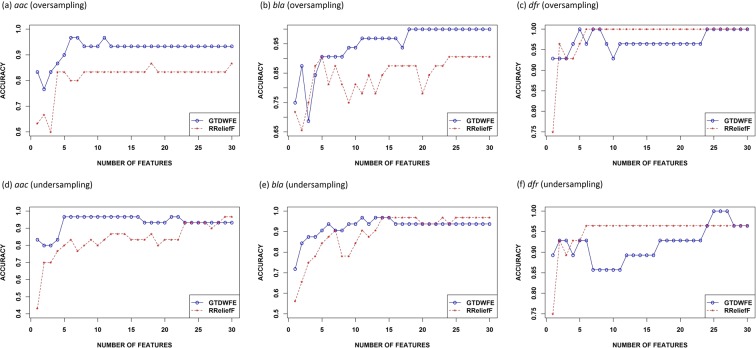

To assess the performance of our GTDWFE feature evaluation method, we compared it with a popular feature selection algorithm, RReliefF32. RReliefF is an updated version of Relief and ReliefF33,34, a filter-based method that uses the distance between instances to find the relevance weight of each feature and rank the features. For RReliefF, we considered 5 neighbors and 30 instances as suggested in a previous study35. We used δ = 3 in our feature selection approach. Comparisons of the results for the two methods are shown in Fig. 1 for oversampling and undersampling. As can be seen in these results, the maximum accuracies achieved by GTDWFE with respect to the number of features are at least as high as those of RReliefF in all cases. In the cases where RReliefF matched the maximum accuracy of GTDWFE, GTDWFE required fewer features.

Figure 1.

Comparison between GTDWFE and RReliefF accuracies for oversampling and undersampling. Accuracies are given as a function of the number of features used.

Identification of antimicrobial-resistance proteins from Pseudomonas, Vibrio, and Enterobacter

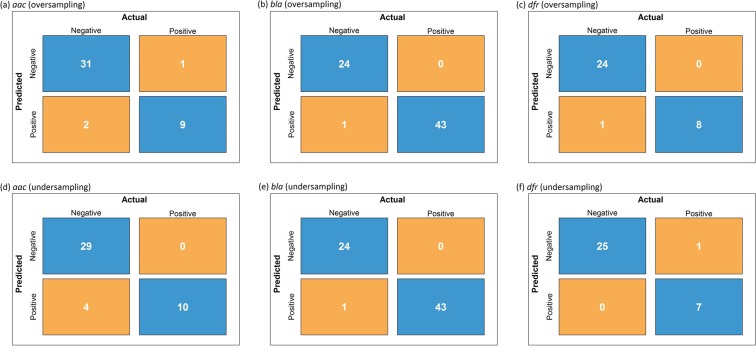

To acquire a better understanding of whether the GTDWFE method can identify putative AMR genes in Gram-negative bacteria, we trained SVM classifiers using 100% of the positive and negative samples for Acinetobacter, Klebsiella, Campylobacter, Salmonella, and Escherichia. We used the same features obtained previously for δ = 3 to train the SVM model. We then tested the SVM classifiers using the same features for positive and negative samples for Pseudomonas, Vibrio, and Enterobacter. Importantly, for acetyltransferase, we used eight non-AMR samples of acetyltransferase to ascertain whether the SVM classifier was able to distinguish between resistant and non-resistant samples of acetyltransferase. The confusion matrices for both oversampling and undersampling cases are shown in Fig. 2. In a confusion matrix, ‘Positive’ and ‘Negative’ indicate AMR (positive) and non-AMR (negative) classes, respectively, and falling diagonal entries indicate correctly identified instances. For oversampling, the GTDWFE method achieved accuracies of 0.93, 0.99, and 0.97 for aac, bla, and dfr, respectively. Moreover, 6 of the 8 non-AMR samples of acetyltransferase were correctly predicted to be negative. For undersampling, the GTDWFE approach had accuracies of 0.91, 0.99, and 0.97 for aac, bla, and dfr, respectively. Of the 8 non-AMR samples, 5 were correctly predicted to be negative samples. Here, as for our test cases in the previous section, the GTDWFE method gives better results when oversampling is used.

Figure 2.

Confusion matrices for oversampling and undersampling.

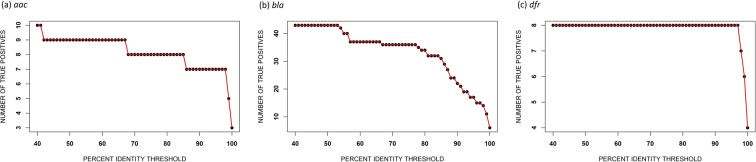

Based on the results above, we conclude that our game theory approach for determining protein features for use in a machine learning algorithm can be used with high accuracy to predict AMR in Gram-negative bacteria. We used training data from five different Gram-negative bacterial genera and predicted AMR in three different Gram-negative bacterial genera. The results based on oversampling were the most accurate with 93% accuracy for acetyltransferase resistance, 97% accuracy for dihydrofolate reductase resistance, and 99% accuracy for β-lactamase resistance. The performance of our game theory method was also compared with the BLASTp results considering default parameter settings (https://blast.ncbi.nlm.nih.gov/Blast.cgi?PAGE=Proteins). The outcomes shown in Fig. 3 are the number of matched AMR protein sequences as a function of percent identity for Pseudomonas, Vibrio, and Enterobacter using the AMR genes from Acinetobacter, Klebsiella, Campylobacter, Salmonella, and Escherichia. For example, considering percent identity ≥90 for a sequence from Pseudomonas, Vibrio, or Enterobacter to be matched (true positive) with an AMR sequence from Acinetobacter, Klebsiella, Campylobacter, Salmonella, or Escherichia, we obtain eight true positives out of ten aac sequences, 22 true positives out of 43 bla sequences, and eight true positives out of eight dfr sequences. The results for dfr are much better, but this may in part be due to the limited overall diversity of available dfr sequences. Note that the percent identity threshold has to be as low as 41% to obtain accurate results for aac and bla, but this results in an increased number of false positives. As an example, when the percent identity threshold is set to 41%, six out of eight histone acetyltransferases are incorrectly detected as AMR samples, indicating a large false positive rate. Therefore, using an appropriate threshold setting for BLASTp compromises the accuracy of the results. 
To obtain the same number of true positives as for our oversampling cases (Fig. 2), the percent identity threshold of BLASTp needs to be 67% for aac and 53% for bla; however, these sequence identity thresholds produce three false positives for aac (two of them are histone acetyltransferases) and six false positives for bla. Thus, false positive rates using BLASTp classification for aac and bla are still high compared to our GTDWFE algorithm. For these highly diverse protein sequences, our machine learning approach provides greater accuracy than the conventional best-hit method.
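The trade-off between identity threshold and false positives can be illustrated with a small sketch. The Python snippet below is not the authors' code; it assumes each test sequence has already been reduced to its best BLASTp percent identity against the training AMR set, and the `Hit` records are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Hit:
    """Best BLASTp hit of one test sequence against the known AMR set."""
    query_id: str            # hypothetical identifier
    is_amr: bool             # ground-truth label of the test sequence
    percent_identity: float  # identity of the best hit, in percent


def count_calls(hits: Iterable[Hit], threshold: float) -> Tuple[int, int]:
    """Return (true positives, false positives) when every hit with
    percent identity >= threshold is called an AMR sequence."""
    tp = fp = 0
    for hit in hits:
        if hit.percent_identity >= threshold:
            if hit.is_amr:
                tp += 1
            else:
                fp += 1
    return tp, fp


# Illustrative values only; these are not results from the paper.
example_hits = [
    Hit("amr_1", True, 95.0),
    Hit("amr_2", True, 58.0),
    Hit("amr_3", True, 43.0),
    Hit("non_amr_1", False, 45.0),
    Hit("non_amr_2", False, 30.0),
]

for threshold in (90, 50, 40):
    tp, fp = count_calls(example_hits, threshold)
    print(f"identity >= {threshold}%: TP={tp}, FP={fp}")
```

In this toy data, lowering the threshold recovers the more divergent AMR sequences but eventually admits a non-AMR sequence as well, which mirrors the behaviour reported for aac and bla.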

Figure 3.

Identification of AMR sequences in Pseudomonas, Vibrio, or Enterobacter using BLASTp as a function of percent identity using AMR sequences from Acinetobacter, Klebsiella, Campylobacter, Salmonella, and Escherichia.

Discussion

In this paper we presented a machine learning method for prediction of antimicrobial-resistance genes for three antibiotic classes. The strength of a machine learning algorithm is that it uses features based on the structural, physicochemical, evolutionary, and compositional properties of protein sequences rather than simply their sequence similarity. The novel game theory approach we used to determine protein features for our machine learning algorithm has not been used previously for such a purpose and is especially powerful because features are chosen on the basis of how well they work together as a whole to identify putative antimicrobial-resistance genes by taking into account both the relevance and interdependency of features. As such, we were able to use protein sequences to train the machine learning algorithm using functionally-equivalent amino acid sequences with shared identity that ranged from 10% to 90%. The algorithm was then able to correctly identify genes from an independent data set with 93% to 99% accuracy. The only way this can be achieved by means of a best-hit approach such as BLASTp is by considering sequence matches with as low as 53% similarity. Compared to our approach, this then leads to a greater number of false positives, that is, sequences incorrectly identified as antimicrobial-resistance genes.

Our work included collection of resistance and non-resistance protein sequences, feature extraction, feature evaluation for dimension reduction, handling of imbalanced data sets, and comparison of our method with an existing feature selection approach. The RReliefF algorithm for selecting features is well-known for its accuracy and ability to rank features by their importance, but it does not account for feature interdependence. This was made clear by comparison between results obtained using the game theory algorithm, GTDWFE, and RReliefF. GTDWFE achieved the highest accuracy for all cases using fewer features than RReliefF because of its reduction in irrelevant and/or redundant information. The results of our approach using oversampling were better than those using undersampling.

With growth in both antimicrobial resistance and the number of sequenced genomes available, implementation of machine learning models for accurate prediction of AMR genes represents a significant development toward new and more accurate tools in the field of predictive antimicrobial resistance. In future work, we will create a user-friendly and publicly available program for predicting AMR in bacteria based on the method presented in this paper.

Methods

Data collection

Amino acid sequences for antimicrobial-resistance genes were retrieved from the Antibiotic Resistance Genes Database (ARDB)36, and non-AMR sequences were obtained from the Pathosystems Resource Integration Center (PATRIC)37. A BLASTp search using default parameter settings was performed to find all matching sequences. Initial AMR sequences for the Gram-negative bacteria Acinetobacter spp., Klebsiella spp., Campylobacter spp., Salmonella spp., and Escherichia spp. numbered 387 for aac, 1113 for bla, and 804 for dfr; there were 159 randomly chosen non-AMR sequences (73 essential genes and 86 histone acetyltransferases38). Because of the number of duplicate sequences, we used CD-HIT39,40 to find the unique sequences. Sequences having ≥90% similarity were removed from further consideration. The final numbers of unique sequences obtained were 33 aac, 43 bla, and 28 dfr AMR sequences and 71 non-AMR sequences (64 essential genes and 7 histone acetyltransferases). This data set was used as the training and test set for our model. The histone acetyltransferases together with the essential sequences were used as negative training data only for the aac classifier. For bla and dfr, only essential sequences were used as negative training data. In addition to the training/test data set, 199 aac, 588 bla, and 66 dfr AMR sequences and 82 non-AMR sequences (35 essential genes and 47 histone acetyltransferases) for the Gram-negative bacteria Pseudomonas spp., Vibrio spp., and Enterobacter spp. were collected from the data sources indicated above. After application of CD-HIT, 10 aac, 43 bla, and 8 dfr AMR sequences and 33 non-AMR sequences (25 essential genes and 8 histone acetyltransferases) were retained. These were used to test the accuracy of the final classifier. Again, histone acetyltransferases were used only in the aac model. Note that sequence similarity for the AMR sequences could be quite low.

Protein features

A literature search was used to identify the composition, physicochemical characteristics, and secondary structure properties of protein sequences41–46. As a result of this search, we created 20D feature vectors based on amino acid composition with each of the 20 feature values in a vector representing one of the 20 amino acids. Next the composition, transition, and distribution (CTD) model proposed by Dubchak et al.47,48 was used to retrieve global physicochemical features from protein sequences. The CTD model results in a 3D feature vector for composition, a 3D feature vector for transition, and a 15D feature vector for distribution. As there are 8 physicochemical amino acid properties, the CTD paradigm provides a total of 8 × (3 + 3 + 15) = 168 features. We obtained evolutionary-relevant features using a position-specific scoring matrix (PSSM). After producing a PSSM for a protein sequence by applying PSI-BLAST49, we computed transition scores between adjacent amino acids, which resulted in a 400D feature vector for each protein sequence.
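The 20D amino acid composition vector is the simplest of these feature groups and can be sketched in a few lines. The snippet below is a minimal Python illustration rather than the authors' implementation; the residue ordering, the decision to ignore non-standard symbols, and the toy sequence are all assumptions.

```python
from typing import List

# The 20 standard residues as one-letter codes (ordering is an assumption).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def amino_acid_composition(sequence: str) -> List[float]:
    """Return the 20D composition vector: the fraction of each standard residue."""
    sequence = sequence.upper()
    # Count only standard residues so ambiguous symbols (e.g. X) are ignored.
    counts = [sequence.count(aa) for aa in AMINO_ACIDS]
    total = sum(counts)
    if total == 0:
        raise ValueError("sequence contains no standard amino acids")
    return [count / total for count in counts]


vector = amino_acid_composition("MKKLLA")  # toy sequence, not from the data set
print(len(vector))
```

Each entry is a fraction between 0 and 1, and the entries sum to 1, so sequences of different lengths are directly comparable.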

Finally, features were obtained for the secondary structure of proteins which provides relevant information in protein fold recognition. PSIPRED50 was applied to sequences to predict their secondary structures. These were used as described in previous studies43,44,51–53 to obtain our secondary structure features. Location-oriented features were produced from the spatial arrangements of the α-helix, β-strand, γ-coil states. Normalized maximum spatially consecutive states in the secondary structure sequences were also calculated. Additionally, we retrieved features from segment sequences by disregarding the coil portions in the secondary structure. In such a way, a total of six features were generated from the protein structure information.

Three global information features were generated from the structure probability matrix (SPM) produced by PSIPRED. Local information features were acquired by dividing the SPM into submatrices. Using eight submatrices, we generated a 3D feature vector for each submatrix with the same approach used for the global information features. Hence, we obtained 3 × 8 = 24 local information features. In total, 3 + 24 = 27 features with global and local information were generated.

By combining all the features, we obtained a 621D high-dimensional feature vector. Detailed descriptions of all the extracted candidate features together with the formulas used to calculate values can be found in our previous work54. The objective of this work was to then reduce the dimension of our feature vector in such a way as to produce accurate machine learning predictions for AMR.
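As a consistency check, the sizes of the feature groups described in this section add up to the stated dimension, taking the CTD block as 8 properties times (3 + 3 + 15) values per property:

$$\underbrace{20}_{\text{composition}} + \underbrace{8 \times (3 + 3 + 15)}_{\text{CTD}\,=\,168} + \underbrace{400}_{\text{PSSM}} + \underbrace{6}_{\text{secondary structure}} + \underbrace{3 + 24}_{\text{SPM}\,=\,27} = 621$$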

Feature evaluation

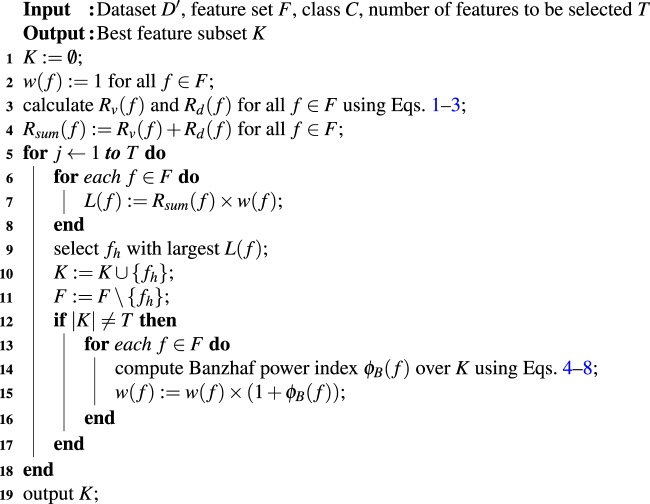

We adopted a feature evaluation method to select a small number of features based on a relevance, redundancy, and interdependency estimation of all the features. In our approach, we calculated the weight of a feature based on the features selected earlier, and the weight of a feature was readjusted dynamically whenever a newly selected feature was added to the previously selected feature subset. Here, the weight of a feature reflects its interdependence relationship with the features previously selected. The details of our feature selection approach, called the game theoretic dynamic weighting based feature evaluation (GTDWFE) algorithm, are presented in Algorithm 1.

Algorithm 1.

Game theoretic dynamic weighting based feature evaluation (GTDWFE) algorithm.

Algorithm 1 takes data set D′, feature set F, binary classes C, and number of features to be selected T and outputs the best feature subset K. To implement this algorithm we first initialize the parameters, setting the same weight to each feature, i.e., weight w(f) of a feature f is set to 1 initially (line 2). Relevance to the target class Rv(f) and similarity value of a feature Rd(f) are calculated for all features (line 3). The greater the relevance of a feature to the target (AMR or non-AMR sequence), the more it can contribute to the prediction by sharing information with the target class. Also, the greater the distance of a feature from all other features, the lower the similarity of the feature with the remaining features, which indicates lower redundancy. We computed Pearson’s correlation coefficient between a feature and class using Eq. 1, that is, we estimated the linear correlation between feature f and class C. Pearson’s correlation coefficient (denoted by ρ(f, c)) is computed using

$$\rho(f, c) = \frac{\operatorname{cov}(f, c)}{\sigma_f \, \sigma_c} = \frac{E\left[(f - \mu_f)(c - \mu_c)\right]}{\sigma_f \, \sigma_c} \tag{1}$$

where the expectation is represented by E, μf and μc are the means, and σf and σc correspond to the standard deviations for f and c, respectively. We used the absolute value |ρ(f, c)| as the value of Rv(f) for the feature f. To find the Rd(f) value of a feature f, we measured the average distance of a feature f with all other features using the Tanimoto coefficient given in Eqs. 2 and 3. Here, d is the total number of features; in our case, d = 621.

| 2 |

| 3 |
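The relevance and redundancy scores described above can be sketched as follows. This is a hedged Python illustration, not the authors' R implementation (which used the stats and proxy packages): the real-valued form of the Tanimoto coefficient, the conversion to a distance as one minus the similarity, and the averaging over the other d − 1 features are assumptions, and all function names are hypothetical.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumption: a constant column carries no linear relevance
    return cov / math.sqrt(var_x * var_y)


def relevance(feature: Sequence[float], labels: Sequence[float]) -> float:
    """Rv(f): absolute Pearson correlation between a feature column and the class."""
    return abs(pearson(feature, labels))


def tanimoto_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Real-valued Tanimoto coefficient: a.b / (|a|^2 + |b|^2 - a.b) (assumed form)."""
    dot = sum(p * q for p, q in zip(a, b))
    denom = sum(p * p for p in a) + sum(q * q for q in b) - dot
    return dot / denom if denom else 1.0  # two all-zero columns are treated as identical


def mean_tanimoto_distance(index: int, features: List[Sequence[float]]) -> float:
    """Rd(f): average of (1 - similarity) between one feature column and all others."""
    others = [f for j, f in enumerate(features) if j != index]
    target = features[index]
    return sum(1.0 - tanimoto_similarity(target, f) for f in others) / len(others)
```

A feature that correlates strongly with the class labels receives a relevance near 1, while a feature whose column is far from every other column receives a large average distance and is therefore treated as less redundant.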

After summing the Rv( f ) and Rd( f ) values for all features (line 4), the algorithm iterates until the required T features have been selected. For every iteration the value of L( f ) is computed (lines 6–8), and the feature with the largest L( f ) is selected, added into subset K, and then eliminated from feature set F (lines 9–11).

The weights of the remaining candidate features are recalculated in each iteration to determine the impact of the candidate features on the features selected earlier (lines 13–16). We used the Banzhaf power index28 to readjust the weight w( f ) of a feature f. The Banzhaf power index is widely used in game theory approaches to measure the power of a player to form a coalition with a set of other players S. Winning and losing coalitions in a game are those coalitions with v(S) = 1 and v(S) = 0, respectively, where v denotes the characteristic function of the game. For every winning coalition S ∪ {r}, if S would lose without player r, then r is crucial to winning the game. Because player r is a feature, we made a slight modification to the original Banzhaf power index, and the updated definition of the Banzhaf power index for a player r is given in Eq. 4.

| 4 |

where the marginal contribution of the feature r to all coalitions is where . δ is the upper bound of the cardinality of S, and gives the total number of subsets of F\r bounded by δ. This means that , and g is the cardinality of a feature subset with .
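The bound δ matters because the number of coalitions grows quickly with the coalition size. As a rough Python sketch, if the bounded family is taken to be every non-empty subset of the remaining features with at most δ members (an assumption about how the bound is applied, including the exclusion of the empty set), its size is a sum of binomial coefficients. The value 620 below is illustrative, corresponding to 621 candidate features minus the feature r itself.

```python
from math import comb


def count_bounded_subsets(n_features: int, delta: int) -> int:
    """Number of non-empty subsets with at most `delta` members
    drawn from `n_features` candidate features."""
    return sum(comb(n_features, g) for g in range(1, delta + 1))


# Illustrative pool size: 621 candidate features minus the feature r itself.
for delta in (1, 2, 3):
    print(delta, count_bounded_subsets(620, delta))
```

Under this assumption the count rises by orders of magnitude with each unit increase in δ, which is one practical reason to keep the interdependent group size small.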

If we consider two features r and t as two players, we can calculate their interdependence using Eq. 5 where C represents the binary classes 1 (AMR or positive class) and −1 (non-AMR or negative class).

| 5 |

We can formulate as

| 6 |

From these equations we see that a feature is important if it increases the relevance of the subset S with the binary classes (i.e., 1 and −1), and it should be interdependent with 50% or more of the members. The I’s in these equations are the mutual information and conditional mutual information and are calculated using Eqs. 7 and 8, respectively, where U, V, and Z are random variables.

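$$I(U; V) = \sum_{u \in U} \sum_{v \in V} p(u, v) \log \frac{p(u, v)}{p(u)\, p(v)} \tag{7}$$

$$I(U; V \mid Z) = \sum_{z \in Z} \sum_{u \in U} \sum_{v \in V} p(u, v, z) \log \frac{p(z)\, p(u, v, z)}{p(u, z)\, p(v, z)} \tag{8}$$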
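Mutual information and conditional mutual information can be estimated from discrete samples by counting. The Python sketch below is illustrative only (the authors used the R infotheo package); it assumes the variables are already discrete, so real-valued features would first need to be binned, and it uses base-2 logarithms, so the results are in bits.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(u: Sequence[Hashable], v: Sequence[Hashable]) -> float:
    """Empirical I(U; V) in bits from paired discrete samples."""
    n = len(u)
    joint = Counter(zip(u, v))
    count_u, count_v = Counter(u), Counter(v)
    return sum(
        (c / n) * math.log2((c / n) / ((count_u[a] / n) * (count_v[b] / n)))
        for (a, b), c in joint.items()
    )


def conditional_mutual_information(
    u: Sequence[Hashable], v: Sequence[Hashable], z: Sequence[Hashable]
) -> float:
    """Empirical I(U; V | Z) in bits from discrete sample triples."""
    n = len(u)
    joint = Counter(zip(u, v, z))
    count_uz, count_vz, count_z = Counter(zip(u, z)), Counter(zip(v, z)), Counter(z)
    return sum(
        (c / n)
        * math.log2(
            (count_z[k] / n) * (c / n)
            / ((count_uz[(a, k)] / n) * (count_vz[(b, k)] / n))
        )
        for (a, b, k), c in joint.items()
    )


# Toy XOR data: the class is the exclusive-or of two binary features.
x = [0, 0, 1, 1]
y = [0, 1, 0, 1]
label = [0, 1, 1, 0]
print(mutual_information(x, label))
print(conditional_mutual_information(x, label, y))
```

In the XOR example, feature `x` alone shares no information with the label, yet it becomes fully informative once `y` is known. This is the kind of interdependence that a filter scoring features one at a time would miss.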

The algorithm terminates when T features have been selected from the feature set F. The output feature subset K is the resulting feature subset, selected to provide maximum relevance, minimum redundancy, and informative interdependence relations (line 19).
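The overall selection loop can be sketched as a greedy procedure with dynamic re-weighting. The Python code below is a schematic under stated assumptions and is not a transcription of Algorithm 1: it assumes the per-iteration score is the current weight multiplied by the summed relevance and redundancy value, and it leaves the Banzhaf-based weight update as a caller-supplied function. The names `greedy_select`, `base_score`, and `reweight` are hypothetical.

```python
from typing import Callable, Dict, List, Optional

# Signature of the assumed weight update: (candidate, selected so far, old weight) -> new weight.
Reweight = Callable[[str, List[str], float], float]


def greedy_select(
    base_score: Dict[str, float],
    n_select: int,
    reweight: Optional[Reweight] = None,
) -> List[str]:
    """Pick features one at a time by weight * base score, re-weighting after each pick.

    base_score maps each feature name to its summed relevance and redundancy value.
    """
    weights = {name: 1.0 for name in base_score}  # every weight starts at 1
    candidates = set(base_score)
    selected: List[str] = []
    while candidates and len(selected) < n_select:
        # Sorting makes tie-breaking deterministic.
        best = max(sorted(candidates), key=lambda f: weights[f] * base_score[f])
        selected.append(best)
        candidates.remove(best)
        if reweight is not None:
            # Adjust remaining weights in light of the features chosen so far.
            for name in candidates:
                weights[name] = reweight(name, selected, weights[name])
    return selected


scores = {"a": 0.9, "b": 0.5, "c": 0.7}  # toy values for illustration
print(greedy_select(scores, 2))
print(greedy_select(scores, 2, lambda f, chosen, w: w * 2 if f == "b" else w))
```

With no re-weighting the loop simply returns the top-scoring features. With re-weighting, a feature that scores poorly on its own (here `b`) can overtake a stronger individual feature once its weight reflects how well it works with the features already selected.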

Imbalanced data

An imbalanced data set has significantly more of one class of training data than the other. Such a data set leads a classifier to predict the majority class more accurately while lowering the accuracy of the minority class predictions. This happens because of over-training of the majority class and under-training of the minority class. To avoid this, a data set can be balanced via sampling techniques55,56. There are two major sampling methods, namely oversampling and undersampling. In oversampling, we duplicate data from the minority class to balance the data set. In undersampling, we remove data from the majority class to balance the data set. In this study, we applied both over-sampling and under-sampling techniques to measure the performance of our prediction model.
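Both balancing strategies can be sketched with simple random resampling. The Python code below is a minimal illustration and not necessarily the procedure applied by the ROSE package used in this study; the class sizes of 33 and 71 follow the aac training counts reported in the data collection section, while the sample identifiers and the fixed seed are assumptions.

```python
import random
from typing import List, Sequence, Tuple


def oversample(
    minority: Sequence[str], majority: Sequence[str], seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Duplicate randomly chosen minority samples until both classes have equal size."""
    rng = random.Random(seed)
    pool = list(minority)
    extra = [rng.choice(pool) for _ in range(len(majority) - len(pool))]
    return pool + extra, list(majority)


def undersample(
    minority: Sequence[str], majority: Sequence[str], seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Keep a random subset of the majority class equal in size to the minority class."""
    rng = random.Random(seed)
    return list(minority), rng.sample(list(majority), len(minority))


positives = [f"amr_{i}" for i in range(33)]      # placeholder identifiers
negatives = [f"non_amr_{i}" for i in range(71)]  # placeholder identifiers

over_pos, over_neg = oversample(positives, negatives)
under_pos, under_neg = undersample(positives, negatives)
print(len(over_pos), len(over_neg), len(under_pos), len(under_neg))
```

Oversampling keeps every original sample at the cost of repeated positives, whereas undersampling discards negative samples, which leaves less data to learn from.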

Support Vector Machine

A Support Vector Machine (SVM)29 is a supervised machine learning algorithm that represents each data item as a point in p-dimensional space and constructs a hyperplane (decision boundary) to separate data points into two groups. The core set of vectors identifying the hyperplane are known as support vectors. An SVM mitigates overfitting by regularizing its parameters. Overfitting occurs when a classifier models the training data so closely that its accuracy on new data suffers. The SVM has proven to be a good classifier for protein sequences, classifying them with high accuracy. As predicting AMR is a binary classification problem, we chose an SVM for this work. A radial basis function30,31 was used as its kernel.
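The radial basis function kernel measures the similarity of two feature vectors as a decaying function of their squared Euclidean distance, with γ controlling how quickly the similarity falls off. A minimal Python sketch of the standard form follows; the function name is hypothetical, and this shows only the kernel, not a full SVM.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], y: Sequence[float], gamma: float) -> float:
    """Radial basis function kernel: exp(-gamma * ||x - y||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


print(rbf_kernel([0.2, 0.4], [0.2, 0.4], gamma=0.5))
print(rbf_kernel([0.0, 0.0], [1.0, 1.0], gamma=0.5))
```

Identical vectors give a kernel value of 1, and a larger γ makes the value drop faster with distance, which is why γ is tuned together with the misclassification cost C.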

R Scripts and Packages

R (https://cran.r-project.org/mirrors.html), a popular programming language for statistical analysis, provides many built-in packages for data analysis and machine learning. We wrote scripts in R to implement our GTDWFE feature evaluation algorithm and SVM classifier. We utilized the R stats (v3.5.0) package to perform the Pearson’s correlation measurements, the proxy (v0.4-22) package for calculating the Tanimoto coefficients, and infotheo (v1.2.0) for finding the mutual information and conditional mutual information. We applied the ROSE (v0.0-3) package to balance the data set and the tune() function in the e1071 (v1.6-8) package to find the best SVM parameters. The caret (v4.20) package was used to generate confusion matrices. Finally, we utilized the FSelector (v0.31) package to implement RReliefF32.

Performance measurement

We measured the performance of the SVM classifier with our optimized feature set by generating confusion matrices which were used to calculate classification accuracies. Table 2 shows the structure of a confusion matrix, where TP, TN, FP, and FN are true positives (positives accurately classified), true negatives (negatives accurately classified), false positives (negatives classified as positives), and false negatives (positives classified as negatives), respectively. Classification accuracy is calculated from these values as given in Eq. 9.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$

Table 2.

Confusion matrix for classification performance.

| Predicted (rows) / Actual (columns) | Negative | Positive |
|---|---|---|
| Negative | TN | FN |
| Positive | FP | TP |
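The accuracy calculation from the four confusion-matrix counts is a one-line formula. The Python sketch below uses made-up counts purely for illustration; they are not taken from the confusion matrices in Fig. 2.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Classification accuracy: correctly classified samples over all samples."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return (tp + tn) / total


# Illustrative counts only: 8 + 9 correct out of 20 samples.
print(accuracy(tp=8, tn=9, fp=1, fn=2))
```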

Accession codes

NCBI57 accession numbers for all proteins used in this work are listed in Supplementary Tables S2–S11.

Acknowledgements

This work was supported in part by the Carl M. Hansen Foundation.

Author Contributions

A.S.C. collected the data, designed the method, performed the experiments, analyzed the experimental results, and prepared the initial manuscript. D.R.C. and S.L.B. analyzed the collected data, approved the method, guided the experiments, edited the manuscript, and further interpreted the experimental results. All authors reviewed and approved the manuscript.

Code Availability

The R scripts written to implement our method are available at https://github.com/abu034004/GTDWFE.

Data Availability

All experimental data are available at https://github.com/abu034004/GTDWFE.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

1/30/2020

An amendment to this paper has been published and can be accessed via a link at the top of the paper.

Supplementary information

Supplementary information accompanies this paper at 10.1038/s41598-019-50686-z.

References

- 1. Hsueh P-R, Chen W-H, Luh K-T. Relationships between antimicrobial use and antimicrobial resistance in Gram-negative bacteria causing nosocomial infections from 1991–2003 at a university hospital in Taiwan. International journal of antimicrobial agents. 2005;26:463–472. doi: 10.1016/j.ijantimicag.2005.08.016.

- 2. Chopra I, et al. Treatment of health-care-associated infections caused by Gram-negative bacteria: a consensus statement. The Lancet infectious diseases. 2008;8:133–139. doi: 10.1016/S1473-3099(08)70018-5.

- 3. Slama TG. Gram-negative antibiotic resistance: there is a price to pay. Critical Care. 2008;12:S4. doi: 10.1186/cc6820.

- 4. Davis JJ, et al. Antimicrobial resistance prediction in PATRIC and RAST. Scientific reports. 2016;6:27930. doi: 10.1038/srep27930.

- 5. Kang C-I, et al. Bloodstream infections caused by antibiotic-resistant Gram-negative bacilli: risk factors for mortality and impact of inappropriate initial antimicrobial therapy on outcome. Antimicrobial agents and chemotherapy. 2005;49:760–766. doi: 10.1128/AAC.49.2.760-766.2005.

- 6. Davies J, Davies D. Origins and evolution of antibiotic resistance. Microbiology and molecular biology reviews. 2010;74:417–433. doi: 10.1128/MMBR.00016-10.

- 7. El Chakhtoura NG, et al. Therapies for multidrug resistant and extensively drug-resistant non-fermenting Gram-negative bacteria causing nosocomial infections: a perilous journey toward ‘molecularly targeted’ therapy. Expert review of anti-infective therapy. 2018;16:89–110. doi: 10.1080/14787210.2018.1425139.

- 8. Centers for Disease Control and Prevention (US). Antibiotic resistance threats in the United States, 2013 (Centers for Disease Control and Prevention, US Department of Health and Human Services, 2013).

- 9. Navon-Venezia S, Kondratyeva K, Carattoli A. Klebsiella pneumoniae: a major worldwide source and shuttle for antibiotic resistance. FEMS microbiology reviews. 2017;41:252–275. doi: 10.1093/femsre/fux013.

- 10. Didelot X, Bowden R, Wilson DJ, Peto TE, Crook DW. Transforming clinical microbiology with bacterial genome sequencing. Nature Reviews Genetics. 2012;13:601. doi: 10.1038/nrg3226.

- 11. Thomas T, Gilbert J, Meyer F. Metagenomics-a guide from sampling to data analysis. Microbial informatics and experimentation. 2012;2:3. doi: 10.1186/2042-5783-2-3.

- 12. Oulas A, et al. Metagenomics: tools and insights for analyzing next-generation sequencing data derived from biodiversity studies. Bioinformatics and biology insights. 2015;9:BBI–S12462. doi: 10.4137/BBI.S12462.

- 13. Yang Y, et al. ARGs-OAP: online analysis pipeline for antibiotic resistance genes detection from metagenomic data using an integrated structured ARG-database. Bioinformatics. 2016;32:2346–2351. doi: 10.1093/bioinformatics/btw136.

- 14. Kleinheinz KA, Joensen KG, Larsen MV. Applying the ResFinder and VirulenceFinder web-services for easy identification of acquired antibiotic resistance and E. coli virulence genes in bacteriophage and prophage nucleotide sequences. Bacteriophage. 2014;4:e27943. doi: 10.4161/bact.27943.

- 15. Forsberg KJ, et al. Bacterial phylogeny structures soil resistomes across habitats. Nature. 2014;509:612. doi: 10.1038/nature13377.

- 16. McArthur AG, Tsang KK. Antimicrobial resistance surveillance in the genomic age. Annals of the New York Academy of Sciences. 2017;1388:78–91. doi: 10.1111/nyas.13289.

- 17. Xavier, B. B. et al. Consolidating and exploring antibiotic resistance gene data resources. Journal of clinical microbiology JCM–02717 (2016).

- 18. Arango-Argoty G, et al. DeepARG: a deep learning approach for predicting antibiotic resistance genes from metagenomic data. Microbiome. 2018;6:23. doi: 10.1186/s40168-018-0401-z.

- 19. Ruppé E, et al. Prediction of the intestinal resistome by a three-dimensional structure-based method. Nature microbiology. 2019;4:112. doi: 10.1038/s41564-018-0292-6.

- 20. Lal, T. N., Chapelle, O., Weston, J. & Elisseeff, A. Embedded methods. In Feature extraction, 137–165 (Springer, 2006).

- 21. Kohavi R, John GH. Wrappers for feature subset selection. Artificial intelligence. 1997;97:273–324.

- 22. Chowdhury, A. S., Alam, M. M. & Zhang, Y. A biomarker ensemble ranking framework for prioritizing depression candidate genes. In Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), 2015 IEEE Conference on, 1–6 (IEEE, 2015).

- 23. He, X., Cai, D. & Niyogi, P. Laplacian score for feature selection. In Advances in neural information processing systems, 507–514 (2006).

- 24. Talavera, L. An evaluation of filter and wrapper methods for feature selection in categorical clustering. In International Symposium on Intelligent Data Analysis, 440–451 (Springer, 2005).

- 25. Dash, M., Choi, K., Scheuermann, P. & Liu, H. Feature selection for clustering-a filter solution. In Data Mining, 2002. ICDM 2002. Proceedings. 2002 IEEE International Conference on, 115–122 (IEEE, 2002).

- 26. Guyon I, Elisseeff A. An introduction to variable and feature selection. Journal of machine learning research. 2003;3:1157–1182.

- 27. Kotsiantis, S. Feature selection for machine learning classification problems: a recent overview. Artificial Intelligence Review 1–20 (2011).

- 28. Banzhaf JF III. Weighted voting doesn’t work: A mathematical analysis. Rutgers L. Rev. 1964;19:317.

- 29. Cortes C, Vapnik V. Support-vector networks. Machine learning. 1995;20:273–297.

- 30. Chang Y-W, Hsieh C-J, Chang K-W, Ringgaard M, Lin C-J. Training and testing low-degree polynomial data mappings via linear SVM. Journal of Machine Learning Research. 2010;11:1471–1490.

- 31. Vert J-P, Tsuda K, Schölkopf B. A primer on kernel methods. Kernel methods in computational biology. 2004;47:35–70.

- 32. Robnik-Šikonja, M. & Kononenko, I. An adaptation of Relief for attribute estimation in regression. In Machine Learning: Proceedings of the Fourteenth International Conference (ICML’97), 296–304 (1997).

- 33. Kira, K. & Rendell, L. A. A practical approach to feature selection. In Machine Learning Proceedings 1992, 249–256 (Elsevier, 1992).

- 34. Kononenko, I. Estimating attributes: analysis and extensions of RELIEF. In European conference on machine learning, 171–182 (Springer, 1994).

- 35. Robnik-Šikonja M, Kononenko I. Theoretical and empirical analysis of ReliefF and RReliefF. Machine learning. 2003;53:23–69.

- 36. Liu B, Pop M. ARDB–antibiotic resistance genes database. Nucleic acids research. 2008;37:D443–D447. doi: 10.1093/nar/gkn656.

- 37. Wattam AR, et al. Improvements to PATRIC, the all-bacterial bioinformatics database and analysis resource center. Nucleic acids research. 2016;45:D535–D542. doi: 10.1093/nar/gkw1017.

- 38. Favrot L, Blanchard JS, Vergnolle O. Bacterial GCN5-related N-acetyltransferases: from resistance to regulation. Biochemistry. 2016;55:989–1002. doi: 10.1021/acs.biochem.5b01269.

- 39. Li W, Godzik A. CD-HIT: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 2006;22:1658–1659. doi: 10.1093/bioinformatics/btl158.

- 40. Fu L, Niu B, Zhu Z, Wu S, Li W. CD-HIT: accelerated for clustering the next-generation sequencing data. Bioinformatics. 2012;28:3150–3152. doi: 10.1093/bioinformatics/bts565.

- 41. Liu B, et al. Combining evolutionary information extracted from frequency profiles with sequence-based kernels for protein remote homology detection. Bioinformatics. 2013;30:472–479. doi: 10.1093/bioinformatics/btt709.

- 42. Ding CH, Dubchak I. Multi-class protein fold recognition using support vector machines and neural networks. Bioinformatics. 2001;17:349–358. doi: 10.1093/bioinformatics/17.4.349.

- 43. Zhang S, Ding S, Wang T. High-accuracy prediction of protein structural class for low-similarity sequences based on predicted secondary structure. Biochimie. 2011;93:710–714. doi: 10.1016/j.biochi.2011.01.001.

- 44. Wei L, Liao M, Gao X, Zou Q. Enhanced protein fold prediction method through a novel feature extraction technique. IEEE transactions on nanobioscience. 2015;14:649–659. doi: 10.1109/TNB.2015.2450233.

- 45. Cai C, Han L, Ji ZL, Chen X, Chen YZ. SVM-Prot: web-based support vector machine software for functional classification of a protein from its primary sequence. Nucleic acids research. 2003;31:3692–3697. doi: 10.1093/nar/gkg600.

- 46. Li YH, et al. SVM-Prot 2016: a web-server for machine learning prediction of protein functional families from sequence irrespective of similarity. PloS one. 2016;11:e0155290. doi: 10.1371/journal.pone.0155290.

- 47. Dubchak I, Muchnik I, Holbrook SR, Kim S-H. Prediction of protein folding class using global description of amino acid sequence. Proceedings of the National Academy of Sciences. 1995;92:8700–8704. doi: 10.1073/pnas.92.19.8700.

- 48. Dubchak I, Muchnik I, Mayor C, Dralyuk I, Kim S-H. Recognition of a protein fold in the context of the SCOP classification. Proteins: Structure, Function, and Bioinformatics. 1999;35:401–407.

- 49. Altschul SF, et al. Gapped BLAST and PSI-BLAST: a new generation of protein database search programs. Nucleic acids research. 1997;25:3389–3402. doi: 10.1093/nar/25.17.3389.

- 50. Jones DT. Protein secondary structure prediction based on position-specific scoring matrices. Journal of molecular biology. 1999;292:195–202. doi: 10.1006/jmbi.1999.3091.

- 51. Kurgan LA, Homaeian L. Prediction of structural classes for protein sequences and domains: impact of prediction algorithms, sequence representation and homology, and test procedures on accuracy. Pattern Recognition. 2006;39:2323–2343.

- 52. Kurgan L, Cios K, Chen K. SCPRED: accurate prediction of protein structural class for sequences of twilight-zone similarity with predicting sequences. BMC bioinformatics. 2008;9:226. doi: 10.1186/1471-2105-9-226.

- 53. Liu T, Jia C. A high-accuracy protein structural class prediction algorithm using predicted secondary structural information. Journal of theoretical biology. 2010;267:272–275. doi: 10.1016/j.jtbi.2010.09.007.

- 54. Chowdhury, A. S., Khaledian, E. & Broschat, S. L. Capreomycin resistance prediction in two species of Mycobacterium using a stacked ensemble method. Journal of Applied Microbiology (2019).

- 55. Lin W-C, Tsai C-F, Hu Y-H, Jhang J-S. Clustering-based undersampling in class-imbalanced data. Information Sciences. 2017;409:17–26.

- 56. Junsomboon, N. & Phienthrakul, T. Combining over-sampling and under-sampling techniques for imbalance dataset. In Proceedings of the 9th International Conference on Machine Learning and Computing, 243–247 (ACM, 2017).

- 57. National Center for Biotechnology Information. NCBI accession number, https://www.ncbi.nlm.nih.gov/ (Last accessed on August 17, 2018).
