Abstract

Study design

Prospective study.

Objective

The purpose of this study was to determine the feasibility and usability of a home evaluation process completed by a family member and a clinician using an adapted commercial MagicPlan mobile application (MPMA) and a laser distance measurer (LDM).

Setting

An acute inpatient rehabilitation unit and homes in the community.

Methods

The MPMA allows users to create floor plans (FPs), which may include virtually inserted pieces of durable medical equipment (DME). Used with an LDM, the MPMA allows users to demonstrate virtually that ordered DME will fit within the measured environments. After training with an online educational module, a family member of a patient on the acute inpatient rehabilitation unit (lay participant) went home to measure room dimensions and create FPs using the MPMA and LDM. After completing the task, lay participants returned the FPs for review by the clinicians responsible for ordering DME.

Results

Forty-three lay participants and nine clinicians finished the study. Clinicians were satisfied with 75% of the FPs created by lay participants trained with version 2 of the educational module. Both lay participants and clinicians considered the MPMA useful and recommended its use.

Conclusion

The MPMA with an LDM can be used to complete a home evaluation virtually and to evaluate the appropriateness of the various DME under consideration. This method of performing home evaluations may lead to more appropriate DME recommendations that improve functional independence for individuals with disabilities.

Sponsorship

This work was supported by Craig H. Neilsen Foundation, QOL Sustainable Impact Projects (431146).

Subject terms: Rehabilitation, Patient education, Quality of life

Introduction

Appropriate prescription of durable medical equipment (DME), defined as functional equipment that can be used for medical purposes (e.g., wheelchairs and commodes), significantly improves quality of life, community participation, and activity level for persons with disabilities and for their families [1, 2]. Conversely, inappropriate DME prescription wastes medical resources [2–4]. To ensure that ordered DME fits within a particular environment, and will therefore be used, that environment must be evaluated.

A home evaluation is an assessment of an individual’s home that involves measuring room dimensions, furniture, and door widths to ensure accessibility and DME fit. Home evaluations play an important role in wheeled mobility service delivery [3] and are a key component of the process needed to justify to a payor the necessity of a particular piece of DME. A report from the U.S. Department of Health and Human Services found that about 52% of Medicare claims for power wheelchairs were insufficiently documented, including a lack of evidence that the beneficiary could safely use the power wheelchair at home [5]. This finding underscores the need for accurate and detailed home evaluations so that clinicians can make appropriate DME ordering decisions.

Historically, clinicians visited individuals’ homes to perform home evaluations directly, a method that requires extensive clinician time that is not typically reimbursed by payors. Some rehabilitation facilities instead give a patient’s designated person a tape measure and a paper form listing the measurements clinicians need for a home evaluation, such as doorway and hallway widths and bathroom layout and space. Having lay persons with minimal training perform measurements introduces the risk of inaccurate measurements, and measurements written on a paper form cannot give clinicians a clear visual picture of the home setup.

With the goal of creating an efficient and visually representative home evaluation, the study team adapted the MagicPlan mobile application (MPMA), developed by Sensopia Inc., and used a laser distance measurer (LDM) to assist in performing home evaluations. Both the MPMA and the LDM are commercially available. The MPMA fulfills the requirements thought necessary by the lay persons and clinicians who perform home evaluations. It is freely available on both iOS and Android platforms and enables an individual to use a smartphone or tablet to create a floor plan (FP) showing room dimensions, door widths, and the placement of objects in the home. The MPMA also allows users to add photos and annotations to FPs, which could facilitate the home evaluation process by providing visual context and supporting discussion. An LDM is an electronic tape measure that projects a laser beam onto a target wall or object and calculates the distance from the reflected beam. The LDM is easy to set up and can be used by one person to take accurate measurements, unlike a tape measure, which requires two people to measure longer distances such as room dimensions. The LDM used in this study (Leica DISTO™ E7100i) complies with International Organization for Standardization standard ISO 16331-1, which supports consistent quality and reliability, and has a typical measuring tolerance of ±1.5 mm (±0.06 in.) [6]. The purpose of this study was to determine the feasibility and usability of the MPMA and an LDM for lay participants and clinicians completing clinical home evaluations.

We hypothesized that most people could use the MPMA with an LDM to create FPs with detailed home measurements (feasibility) that would give clinicians accurate home evaluations from which to make appropriate DME recommendations, and that both lay participants and clinicians would find the MPMA and LDM useful and easy to use (usability).

Methods

Participants

The study was conducted on an acute inpatient rehabilitation unit (AIR) and in homes in the community. Participants were eligible if they spoke English and had never used the MPMA. People who could not understand the study instructions or were unwilling to use the MPMA were excluded. Once the care team identified a patient who required DME and obtained permission to share more information about the study, our research team recruited the patient’s family member or other designated person (lay participant) who could go to the home and use the MPMA to create an FP. A clinician responsible for ordering DME was recruited to evaluate the FP as a home evaluation. The Icahn School of Medicine at Mount Sinai Institutional Review Board waived the requirement for a signed consent form because the study involved only questionnaires that did not solicit participant identifiers or protected health information. We certify that all applicable institutional and governmental regulations concerning the ethical use of human volunteers were followed during the course of this research.

Creating FPs with the MPMA and an LDM

Creating a virtual FP with the MPMA involves four steps. First, participants draw rooms whose shapes mirror the actual room shapes. Second, participants measure the room dimensions with an LDM and either upload them wirelessly or enter them manually into the MPMA; each room is then resized proportionally to the entered values, and the rooms are assembled into an FP. Third, the rooms are digitally furnished with objects (e.g., doors, couches, and beds) that mirror the items in the home and are inserted from the MPMA object databases through dropdown menus. Finally, participants measure the actual objects with a tape measure and enter these dimensions for the corresponding virtual objects in the MPMA.
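To make the resizing and assembly step concrete, here is a minimal Python sketch of how a roughly drawn room could be rescaled to a measured wall length and then positioned next to another room. It is not the MPMA’s implementation, which is proprietary; the `Point` representation, the helper names (`wall_length`, `scale_to_measurement`, `translate`), and the choice of uniform scaling about the first vertex are hypothetical assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # hypothetical (x, y) vertex, in inches once scaled


def wall_length(room: List[Point], wall: int) -> float:
    """Length of wall `wall`: the edge from vertex `wall` to the next vertex (wrapping)."""
    (x1, y1), (x2, y2) = room[wall], room[(wall + 1) % len(room)]
    return math.hypot(x2 - x1, y2 - y1)


def scale_to_measurement(room: List[Point], wall: int, measured: float) -> List[Point]:
    """Uniformly rescale a sketched room so that one wall equals its measured length.

    Every coordinate is scaled about the first vertex by the same factor, so the
    room keeps its sketched proportions while taking on a real-world size.
    """
    factor = measured / wall_length(room, wall)
    ox, oy = room[0]
    return [(ox + (x - ox) * factor, oy + (y - oy) * factor) for x, y in room]


def translate(room: List[Point], dx: float, dy: float) -> List[Point]:
    """Shift a room so it can be placed against a neighbor when assembling the FP."""
    return [(x + dx, y + dy) for x, y in room]


# Hypothetical bedroom sketched as a 4 x 3 rectangle; the LDM reads 144 in. for wall 0.
sketch = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
bedroom = scale_to_measurement(sketch, wall=0, measured=144.0)

# Hypothetical hallway sketched 1 x 3, scaled to 36 in. wide, placed along the bedroom's right wall.
hall_sketch = [(0.0, 0.0), (1.0, 0.0), (1.0, 3.0), (0.0, 3.0)]
hall = translate(scale_to_measurement(hall_sketch, wall=0, measured=36.0), 144.0, 0.0)
```

Uniform scaling lets a single measured wall set the size of the whole room; an app that accepts a measurement for every wall would instead adjust each wall independently.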

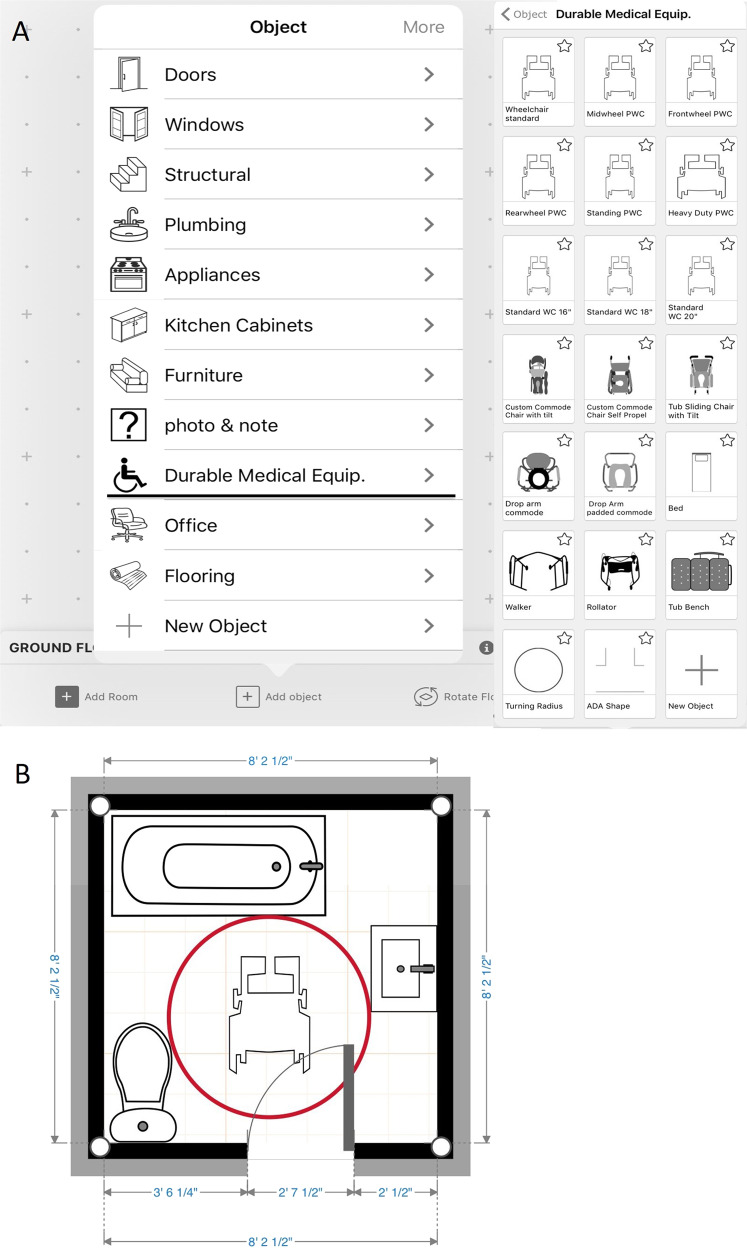

The research team worked with the MPMA developer, Sensopia Inc., to build and incorporate a database containing the major categories of DME, including power wheelchairs, manual wheelchairs, commodes, shower chairs, and hospital beds (Fig. 1a). Object sizes within the MPMA were customizable, starting from actual DME specifications: users could change each object’s width, depth, and height to match its actual dimensions. The standardized Americans with Disabilities Act (ADA) circular and T-shaped turning spaces, which represent the space needed to maneuver wheeled DME through the home, were added as attributes of wheeled mobility devices (Fig. 1b) [7]. The database allows users to place several DME items within the FP at the same time and to determine whether the environment is accessible and whether a device can turn safely without hitting other embedded objects.

Fig. 1.

a The durable medical equipment database is incorporated into the MPMA. b Clinicians could perform a virtual home evaluation by interpreting the floor plans generated by lay participants and by inserting potential DME (a wheelchair is shown) that they wished to evaluate in the space
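The turning-space check that this attribute supports can be approximated in a few lines. The Python sketch below assumes axis-aligned rectangular footprints in inches and models only the 60-in. circular turning space from the 2010 ADA Standards (the T-shaped alternative is omitted). It scans a grid of candidate centers and returns the first spot where the circle stays inside the room without overlapping furniture. The `Rect` type, the `find_turning_space` function, the 1-in. grid step, and the room and bed sizes are hypothetical; this is not how the MPMA performs the check.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# 2010 ADA Standards, Section 304.3.1: circular turning space, 60 in. diameter minimum.
TURNING_DIAMETER_IN = 60.0


@dataclass(frozen=True)
class Rect:
    """Axis-aligned footprint in inches, from corner (x0, y0) to corner (x1, y1)."""
    x0: float
    y0: float
    x1: float
    y1: float


def circle_overlaps(cx: float, cy: float, r: float, rect: Rect) -> bool:
    """True if the circle intrudes into the rectangle (just touching its edge is allowed)."""
    nx = min(max(cx, rect.x0), rect.x1)  # point of the rectangle closest to the center
    ny = min(max(cy, rect.y0), rect.y1)
    return (cx - nx) ** 2 + (cy - ny) ** 2 < r * r


def find_turning_space(room: Rect, furniture: List[Rect],
                       step: float = 1.0) -> Optional[Tuple[float, float]]:
    """Scan candidate centers on a grid; return the first center where the turning
    circle lies inside the room and overlaps no furniture, or None if none fits."""
    r = TURNING_DIAMETER_IN / 2
    y = room.y0 + r
    while y <= room.y1 - r:
        x = room.x0 + r
        while x <= room.x1 - r:
            if not any(circle_overlaps(x, y, r, f) for f in furniture):
                return (x, y)
            x += step
        y += step
    return None


bedroom = Rect(0, 0, 120, 100)  # hypothetical 10 ft x about 8 ft room
bed = Rect(0, 0, 60, 80)        # hypothetical hospital bed footprint in one corner
print(find_turning_space(bedroom, [bed]))           # (90.0, 30.0)
print(find_turning_space(Rect(0, 0, 50, 100), []))  # None: room narrower than 60 in.
```

A grid scan is simple but coarse; a smaller `step` trades speed for precision, and irregular rooms or rotated furniture would need a general polygon intersection test.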

Home evaluation protocol

Lay participants were given an iPad with the MPMA installed, an LDM, and educational modules developed by the research team to help them understand how to complete the study. Educational module version 1 (EMv1) included: (1) an introduction to the study and online educational videos, (2) a sample FP printed on paper, and (3) a checklist of the steps needed to complete the project. EMv1 comprised nine separate videos, each lasting 30 to 90 s. After the introduction, the lay participant went to the residence where the patient intended to stay after discharge and used the MPMA and LDM to measure the rooms the patient would use. After the lay participant returned the devices and FPs, the FPs were provided to the clinicians, who read the measurements to determine whether doorways, hallways, and pathways between objects were wide enough for the needed DME to pass through, and who inserted equipment from the DME database into the FP to check whether the DME would fit in the space (Fig. 1b).
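The clinicians’ doorway and hallway check amounts to comparing each measured opening with the device width plus some clearance. Below is a minimal Python sketch, assuming a worst-case subtraction of the LDM’s quoted ±0.06 in. tolerance and an illustrative 2-in. clearance margin; both values are assumptions, not clinical criteria from this study, and the function name, opening names, and widths are hypothetical.

```python
from typing import Dict, List

# Assumption: the ±1.5 mm (about ±0.06 in.) tolerance quoted for the study's LDM.
# Tape-measured objects would warrant a larger value.
LDM_TOLERANCE_IN = 0.06


def narrow_openings(openings_in: Dict[str, float], device_width_in: float,
                    clearance_in: float = 2.0,
                    tolerance_in: float = LDM_TOLERANCE_IN) -> List[str]:
    """Return the openings that may be too narrow for a device.

    Each measured width is reduced by the measurement tolerance (worst case) and
    compared with the device width plus a clearance margin for hands and steering.
    Both margins are illustrative assumptions, not clinical rules.
    """
    required = device_width_in + clearance_in
    return sorted(name for name, width in openings_in.items()
                  if width - tolerance_in < required)


# Hypothetical measurements read from a lay participant's FP, in inches.
openings = {"bathroom door": 27.5, "bedroom door": 32.0, "hallway": 36.0}
print(narrow_openings(openings, device_width_in=26.0))  # ['bathroom door']
```

Keeping the tolerance and clearance as parameters lets a clinician tighten or relax the check per device rather than relying on a single fixed rule.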

Midway through the study, the research team modified the educational modules based on feedback from lay participants and from participants in an advisory meeting with the MPMA developer and a consumer advisory board. The updated module, termed version 2 (EMv2), contained a single educational video lasting 4 min 45 s, including a final 1-min segment designed specifically for clinicians. When introducing the study to lay participants, a study team member played the video and ensured that lay participants watched it at least once.

Questionnaires

Two questionnaires were used in the study to gather feedback from lay participants and clinicians and to rate the feasibility and usability of the MPMA for performing home evaluations. The questionnaire for lay participants covered (1) age, (2) sex, (3) level of education, (4) experience using mobile applications, (5) time taken to finish an FP using the MPMA, (6) usability, and (7) additional comments on the MPMA. The questionnaire for clinicians included questions rating their satisfaction with the FPs they received, their use of the DME database, the time needed to complete an evaluation, and usability, along with additional comments on the MPMA. The criteria clinicians used to rate an FP as satisfactory were: (1) it displayed the areas of the home the individual would use; (2) it contained measurements that would allow clinicians to judge whether the DME would be usable in the space; and (3) objects such as the toilet, sink, and doors were placed appropriately within the FP.

Lay participants and clinicians rated the usability of the MPMA on a 5-point Likert scale, where 1 is poor, 2 fair, 3 good, 4 very good, and 5 excellent. The questions for the five mobile application (MA) usability concepts [8, 9] were: (1) visibility: “Please rate the user interface”; (2) ease of use: “Please rate the ease of use”; (3) error prevention: “Please rate the clarity of error messages”; (4) usefulness: “Please rate how useful the MA is overall”; and (5) satisfaction: “How likely would you recommend using this MA for home evaluation?”
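Ratings on this scale can be summarized per usability concept as sketched below in Python, returning the mean and median for each concept while ignoring skipped items. The concept keys mirror the five questions above, but the data structure, the function name `summarize`, and the two respondents are hypothetical and are not study data.

```python
from statistics import mean, median
from typing import Dict, List, Optional, Tuple

CONCEPTS = ("visibility", "ease of use", "error prevention", "usefulness", "satisfaction")
SCALE = {1: "poor", 2: "fair", 3: "good", 4: "very good", 5: "excellent"}


def summarize(responses: List[Dict[str, int]]) -> Dict[str, Optional[Tuple[float, float]]]:
    """Mean and median rating per usability concept; skipped items are ignored.

    A concept that nobody answered maps to None instead of raising an error.
    """
    summary: Dict[str, Optional[Tuple[float, float]]] = {}
    for concept in CONCEPTS:
        ratings = [r[concept] for r in responses if concept in r]
        if any(x not in SCALE for x in ratings):
            raise ValueError(f"rating outside 1-5 for {concept!r}")
        summary[concept] = (mean(ratings), median(ratings)) if ratings else None
    return summary


# Two hypothetical respondents (not study data); the second skipped "error prevention".
responses = [
    {"visibility": 4, "ease of use": 3, "error prevention": 4, "usefulness": 5, "satisfaction": 5},
    {"visibility": 2, "ease of use": 3, "usefulness": 4, "satisfaction": 4},
]
for concept, stats in summarize(responses).items():
    print(concept, stats)
```

Reporting the median alongside the mean is a common choice for ordinal Likert data, where the spacing between categories is not guaranteed to be equal.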

Data analysis

Data analysis was performed using SPSS 22.0 software. Descriptive statistics were calculated for lay participants’ demographic data and for all questionnaire results. The additional comments on the MPMA’s usability in the lay participant and clinician questionnaires were summarized. The satisfaction rate of the FPs was defined as the number of FPs judged satisfactory by the clinicians divided by the total number of FPs the study received. The satisfaction rate of FPs, the time lay participants took to finish FPs using the MPMA, and the time clinicians needed to complete a home evaluation using FPs in the MPMA were analyzed to determine the feasibility of the MPMA for performing home evaluations. The results of the usability questionnaires and comments were used to evaluate the usability of the MPMA for performing home evaluations. The χ² test was used to determine whether lay participants’ age and level of education were associated with the satisfaction rate of the FPs.
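As a cross-check on the χ² analysis, the Python sketch below computes the Pearson χ² statistic for a 2 × 2 table, its P value for 1 degree of freedom, and the odds ratio, using the counts reported in Table 1. It assumes the uncorrected Pearson statistic (no Yates continuity correction); the study itself used SPSS, and the function names are hypothetical.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (statistic, P). With 1 degree of freedom the chi-square survival
    function equals erfc(sqrt(x / 2)), so no statistics library is needed.
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return stat, math.erfc(math.sqrt(stat / 2))


def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Odds of the first outcome in row 1 relative to row 2: (a / b) / (c / d)."""
    return (a * d) / (b * c)


# Rows: aged <=45, aged >45; columns: satisfactory, unsatisfactory FPs (Table 1).
age = (12, 3, 6, 11)
# Rows: below a bachelor's degree, bachelor's or higher; same columns (Table 1).
education = (9, 7, 10, 7)

for label, table in (("age", age), ("education", education)):
    stat, p = chi_square_2x2(*table)
    print(f"{label}: chi2(1) = {stat:.2f}, P = {p:.2f}")
print(f"odds ratio, age <=45 vs. >45: {odds_ratio(*age):.1f}")
```

With these counts, the sketch reproduces the values in Table 1 (χ²(1) = 6.47, P = 0.01 for age; χ²(1) = 0.02, P = 0.88 for education) and the odds ratio of about 7.3 for age.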

Results

Participants

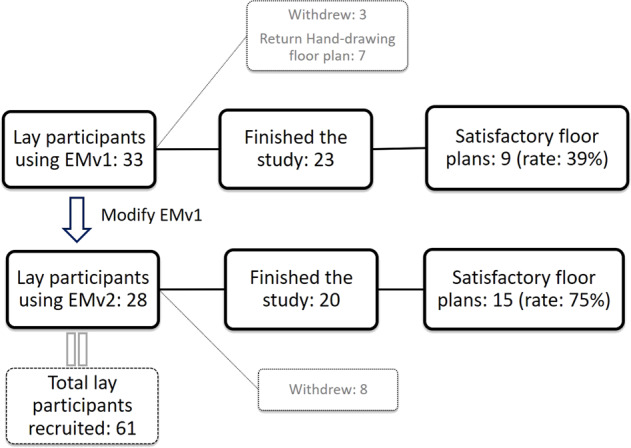

Thirty-three lay participants were trained using EMv1. Three withdrew from the study (one because the patient was discharged from the AIR before the FP was completed, one because the patient was discharged to a skilled nursing facility where a home evaluation was not immediately needed, and one because a professionally completed FP was already available), and seven returned only hand-drawn FPs. The remaining 23 participants created full FPs using the MPMA and returned them (Fig. 2).

Fig. 2.

The number of lay participants who were recruited, who finished the study, and who created floor plans thought to be satisfactory by clinician participants

Twenty-eight lay participants were trained using EMv2. Eight withdrew from the study for the same reasons as the three EMv1 participants who withdrew, and the remaining 20 participants created full FPs using the MPMA and returned them (Fig. 2).

Thirty-eight lay participants returned questionnaires (Table 1). Half of the lay participants were aged 26 to 55 years. The number of lay participants with a bachelor’s degree or higher (N = 19) equaled the number with other educational levels (N = 19). Ninety-two percent of the lay participants had experience using other mobile applications.

Table 1.

Number (%) of lay participants with certain demographic characteristics who created satisfactory and unsatisfactory FPs

| Demographic category | Subgroup | N (%) | Satisf. FP | Unsatisf. FP | χ² |
|---|---|---|---|---|---|
| Age (years) (five missing participant questionnaires; one preferred not to answer) | 18–25 | 4 (11%) | 3 | 1 | Satisf. FPs, ≤45 vs. >45 years: χ²(1) = 6.47, P = 0.01* |
| | 26–35 | 10 (26%) | 8 | 2 | |
| | 36–45 | 1 (3%) | 1 | 0 | |
| | Subtotal, ≤45 | 15 | 12 | 3 | |
| | 46–55 | 9 (24%) | 2 | 6 | |
| | 56–65 | 6 (16%) | 1 | 4 | |
| | 66–75 | 6 (16%) | 3 | 1 | |
| | Over 75 | 1 (3%) | 0 | 0 | |
| | Subtotal, >45 | 22 | 6 | 11 | |
| Educational level (five missing participant questionnaires) | Vocational | 1 (3%) | 1 | 0 | Satisf. FPs, bachelor’s degree or higher vs. other levels: χ²(1) = 0.02, P = 0.88 |
| | Associate’s degree | 6 (16%) | 2 | 3 | |
| | High school diploma or GED | 12 (32%) | 6 | 4 | |
| | Subtotal, below bachelor’s | 19 | 9 | 7 | |
| | Bachelor’s degree | 10 (26%) | 6 | 4 | |
| | Master’s degree | 8 (21%) | 3 | 3 | |
| | Doctorate degree | 1 (3%) | 1 | 0 | |
| | Subtotal, bachelor’s or higher | 19 | 10 | 7 | |

FP floor plan, vs. versus, GED General Educational Development, Satisf satisfactory, Unsatisf unsatisfactory

*P < 0.05

Nine clinicians, seven occupational therapists and two physical therapists, participated in the study. They reviewed the 43 FPs created using the MPMA and returned the clinician questionnaires.

Lay participant analysis

There was a significant association between lay participants’ age and whether they created FPs deemed satisfactory by clinicians (χ²(1) = 6.47, P = 0.01; Table 1). The odds of creating an FP judged satisfactory by the clinicians were 7.3 times higher for lay participants aged ≤45 years than for those older than 45 years. There was no significant association between lay participants’ education level and the satisfaction rate of FPs (χ²(1) = 0.02, P = 0.88; Table 1).

Feasibility

A total of 43 FPs were created and returned by the lay participants. Of these, 24 were judged satisfactory by the clinicians in the questionnaires (satisfaction rate: 24/43 = 56%).

Twenty-three of the 43 FPs were created by lay participants trained with EMv1 (Fig. 2), and only 9 of these 23 were judged satisfactory by the clinicians (satisfaction rate: 9/23 = 39%). The remaining 20 FPs were created by lay participants trained with EMv2 (Fig. 2), and 15 of these 20 were judged satisfactory by the clinicians (satisfaction rate: 15/20 = 75%).
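These satisfaction rates follow directly from the definition given under Data analysis (FPs judged satisfactory divided by FPs received). A minimal Python sketch using the counts reported in this paragraph; the function name `satisfaction_rate` is hypothetical.

```python
def satisfaction_rate(satisfactory: int, total: int) -> float:
    """Percentage of returned FPs that clinicians judged satisfactory."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * satisfactory / total


# (satisfactory FPs, FPs returned) for each educational module, as reported above.
counts = {"EMv1": (9, 23), "EMv2": (15, 20), "overall": (24, 43)}

# Sanity check: the two modules together account for every returned FP.
assert counts["EMv1"][0] + counts["EMv2"][0] == counts["overall"][0]
assert counts["EMv1"][1] + counts["EMv2"][1] == counts["overall"][1]

for module, (ok, total) in counts.items():
    print(f"{module}: {ok}/{total} = {satisfaction_rate(ok, total):.0f}%")
```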

With EMv2, training lay participants, including introducing the MPMA and LDM and watching the educational video, took about 10 min. Of the lay participants whose FPs were judged satisfactory by the clinicians, 77% spent <60 min completing them (15% spent 61–90 min and 8% spent >90 min). All clinician participants except one, who did not attempt it, were able to use the DME database to insert DME objects into the satisfactory FPs for home evaluations. With satisfactory FPs, the clinician participants could complete the virtual home evaluations, including determining the DME patients needed and evaluating whether it would fit in the designated place, within 5 min in 73% of cases; the evaluation took 6–10 min in 15% of cases and 11–20 min in the remainder.

Usability

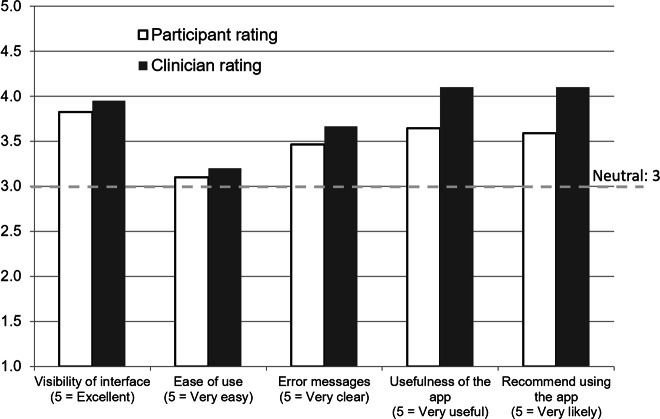

Figure 3 shows the results of the usability questionnaires from lay and clinician participants. Both groups thought the MPMA had a good user interface and delivered clear error messages, found the MPMA useful for home evaluations, and recommended it. However, both groups gave neutral ratings for the MPMA’s ease of use. Overall, clinician participants tended to rate every item more positively than lay participants.

Fig. 3.

The results of the usability questionnaires from lay and clinician participants. The horizontal dashed line indicates a neutral opinion

Discussion

Inappropriate DME, such as a manual wheelchair of the wrong size, not only threatens the quality of life of people with mobility impairments (for example, by increasing environmental barriers and the risk of falls) but also misallocates medical resources (for example, through equipment abandonment) [3]. DME must not only be appropriate for the person’s needs and diagnosis but must also fit in that person’s home; otherwise, it will not be useful [10]. Technology has the potential to improve the utilization and quality of health care [11]. The MPMA approach is feasible and visually demonstrates whether DME will fit within a home. Using the MPMA DME database, clinicians can try different objects to find the combination of equipment that allows the patient to live as independently and functionally as possible at home. A virtual home evaluation may save the time and effort previously spent procuring and trying out equipment that may not fit within the environment. This may reduce the waste of limited resources on equipment that ultimately will not fit, while also expediting the procurement of beneficial devices that previously might not have been ordered. Although training users on the MPMA and LDM initially takes time, this is similar to what has been documented for the adoption of other new health care systems and technologies [12, 13].

Online educational modules are easy to access because users can learn to use the MPMA on their own [14, 15]. Online training has been shown to have effects similar to those of in-person training, potentially decreasing training time and staff burden [14, 15]. Because the quality of the FPs produced with the original EMv1 was unsatisfactory, in EMv2 we simplified and combined the educational videos and instructed participants to watch the resulting video at least once before using the MPMA [16]. This modification of the educational module improved the satisfaction rate of the FPs from 39% to 75%.

In the questionnaire, some lay participants commented that the MPMA was not very intuitive and that the learning curve felt too steep given that they needed to create an FP only once, for discharge planning. Despite these comments, about three quarters of the participants who created satisfactory FPs did so in <1 h.

Some clinicians commented in the questionnaire that the MPMA was a very useful tool for recommending appropriate DME and that it gave family members or other designated persons a good way to take measurements of the home and make them readily available to the rehabilitation team. The study clinicians also remarked that the MPMA facilitated interaction between family members and clinicians and provided a realistic view of anticipated mobility barriers. One clinician said, “when I have had family members who feel like everything will be okay and they will figure it out, but they really require visual feedback regarding the fact that DME is needed or home modifications are required.” Another benefit of virtual FPs is that they can be stored electronically: when clinicians want to follow up on the use of ordered DME, or when patients need new DME in the future, the earlier FPs can be reviewed again.

The MPMA and LDM are both technologies that we adapted for this clinical application. Studies have shown that age strongly influences technology use. Older users appear to have more technical problems using an iPad [17]. After controlling for education and employment, people younger than 56 years are more likely to use mobile technologies than people older than 69 years [18]. People aged 35 years and younger are more likely to use, and more enthusiastic about trying, health-related mobile applications [19, 20]. It is therefore plausible that younger participants adapted to this new method faster and were more likely to produce meaningful FPs.

Study limitations

The study was conducted on an AIR unit, where it is relatively easy to meet with family members, ask them to create FPs, and have them return the devices and FPs. This approach may be more difficult in other settings where family members have difficulty traveling between home and hospital. Because a mobile application can be installed on any compatible mobile device, finished FPs could instead be returned by e-mail or uploaded to a cloud server. This latter method could be used in different clinical settings but needs further testing. The sample size in this study was also small, and the results may not generalize to other locations and populations. Finally, the study used only questionnaires to obtain lay and clinician participants’ feedback and had no follow-up component to objectively confirm that the DME ordered as a result of the MPMA-assisted home evaluation was appropriate for the home. A multisite study with a larger sample and longitudinal follow-up of DME use is needed to further clarify the feasibility and efficacy of using the MPMA method to replace the traditional home evaluation.

In conclusion, the MPMA with an LDM can feasibly be used to complete a home evaluation, and most people are able to use this approach to create a satisfactory FP. Performing home evaluations with this method may help clinicians and patients select the correct DME for home use and discuss home modifications, which will in turn help individuals with disabilities live more independently wherever they reside.

Acknowledgements

The authors would like to acknowledge all the occupational and physical therapists and research coordinators, Andrew Delgado and Kristell Taylor, in the Department of Rehabilitation and Human Performance at the Mount Sinai Hospital for helping conduct this study. This work was supported by Craig H. Neilsen Foundation, QOL Sustainable Impact Projects (431146).

Author contributions

All authors listed above have met all of the following authorship criteria: (1) Conceived and/or designed the work that led to the submission, acquired data, and/or played an important role in interpreting the results. (2) Drafted or revised the manuscript. (3) Approved the final version. (4) Agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Compliance with ethical standards

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Salminen A-L, Brandt Å, Samuelsson K, Töytäri O, Malmivaara A. Mobility devices to promote activity and participation: a systematic review. J Rehabil Med. 2009;41:697–706. doi: 10.2340/16501977-0427.

- 2.Sund T, Iwarsson S, Anttila H, Brandt Å. Effectiveness of powered mobility devices in enabling community mobility-related participation: a prospective study among people with mobility restrictions. PM R. 2015;7:859–70. doi: 10.1016/j.pmrj.2015.02.001.

- 3.Greer N, Brasure M, Wilt TJ. Wheeled mobility (wheelchair) service delivery: scope of the evidence. Ann Intern Med. 2012;156:141–6. doi: 10.7326/0003-4819-156-2-201201170-00010.

- 4.Hammel J, Lai J-S, Heller T. The impact of assistive technology and environmental interventions on function and living situation status with people who are ageing with developmental disabilities. Disabil Rehabil. 2002;24:93–105. doi: 10.1080/09638280110063850.

- 5.Levinson DR. Most power wheelchairs in the Medicare program did not meet medical necessity guidelines. Washington, DC: Office of Inspector General, U.S. Department of Health and Human Services; 2011. www.oig.hhs.gov/oei/reports/oei-04-09-00260.pdf. Accessed 12 Oct 2011.

- 6.Leica Geosystems. Owner’s FAQ DISTO E7100i (D110). Leica Geosystems; 2019. https://lasers.leica-geosystems.com/blog/owners-faq-disto-e7100i-d110.

- 7.ADA Standards for Accessible Design. Chapter 3: Building Blocks, Section 304: Turning Space. Department of Justice. 2010.

- 8.Nielsen J. Usability inspection methods. Conference companion on Human factors in computing systems. Boston, Massachusetts, USA: ACM; 1994.

- 9.Barnum CM. Usability testing essentials: ready, set… test!. Burlington, MA, USA: Morgan Kaufmann; 2010.

- 10.LaPlante MP, Kaye HS. Demographics and trends in wheeled mobility equipment use and accessibility in the community. Assist Technol. 2010;22:3–17. doi: 10.1080/10400430903501413.

- 11.Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:742–52. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 12.Valdes I, Kibbe DC, Tolleson G, Kunik ME, Petersen LA. Barriers to proliferation of electronic medical records. Inform Prim Care. 2004;12:3–9. doi: 10.14236/jhi.v12i1.102.

- 13.Ash JS, Stavri PZ, Kuperman GJ. A consensus statement on considerations for a successful CPOE implementation. J Am Med Inform Assoc. 2003;10:229–34. doi: 10.1197/jamia.M1204.

- 14.Wantland DJ, Portillo CJ, Holzemer WL, Slaughter R, McGhee EM. The effectiveness of web-based vs. non-web-based interventions: a meta-analysis of behavioral change outcomes. J Med Internet Res. 2004;6:e40. doi: 10.2196/jmir.6.4.e40.

- 15.Worobey LA, Rigot SK, Hogaboom NS, Venus C, Boninger ML. Investigating the efficacy of web-based transfer training on independent wheelchair transfers through randomized controlled trials. Arch Phys Med Rehabil. 2018;99:9–16 e0. doi: 10.1016/j.apmr.2017.06.025.

- 16.Mayer RE. Learning with animation: research implications for design. New York, NY, US: Cambridge University Press; 2008. Research-based principles for learning with animation; pp. 30–48.

- 17.Shem K, Sechrist SJ, Loomis E, Isaac L. SCiPad: effective implementation of telemedicine using iPads with individuals with spinal cord injuries, a case series. Front Med. 2017;4:58. doi: 10.3389/fmed.2017.00058.

- 18.Gallagher R, Roach K, Sadler L, Glinatsis H, Belshaw J, Kirkness A, et al. Mobile technology use across age groups in patients eligible for cardiac rehabilitation: survey study. JMIR Mhealth Uhealth. 2017;5:e161. doi: 10.2196/mhealth.8352.

- 19.Naszay M, Stockinger A, Jungwirth D, Haluza D. Digital age and the public eHealth perspective: prevailing health app use among Austrian Internet users. Inform Health Soc Care. 2018;43:390–400. doi: 10.1080/17538157.2017.1399131.

- 20.Lella A, Lipsman A. The 2017 U.S. mobile app report. Reston, VA, USA: comScore, Inc; 2017.