Abstract

Objective. To characterize use of the Pharmacy Curriculum Outcomes Assessment (PCOA) in terms of timing, manner of delivery, and application of the results by accredited colleges of pharmacy.

Methods. Accredited pharmacy programs were surveyed regarding PCOA administration, perceived benefits, and practical application of score reports. The survey comprised new items developed from a literature review and items from prior studies. It addressed five domains: program demographics, administration, student preparation, use of results, and recommendations to improve the utility of the PCOA.

Results. Responses were received from 126 of 139 (91%) surveyed programs. The majority of respondent programs administered PCOA in one session on a single campus. Most indicated PCOA results had limited use for individual student assessment. Almost half reported that results were or could be useful in curriculum review and benchmarking. Considerable variability existed in the preparation and incentives for PCOA performance. Some results differed by prior PCOA experience and between new and older programs. Open-ended responses provided suggestions to enhance the application and utility of PCOA.

Conclusion. The intended uses of PCOA results, such as for student assessment, curricular review, and programmatic benchmarking, are not being implemented across the academy. Streamlining examination logistics, providing additional examination-related data, and clarifying the purpose of the examination to faculty members and students may increase the utility of PCOA results.

Keywords: Pharmacy Curriculum Outcomes Assessment, PCOA, curriculum assessment, standardized testing, benchmarking

INTRODUCTION

Revised accreditation standards from the Accreditation Council for Pharmacy Education (ACPE) were implemented in 2016.1 The new standards require that all pharmacy programs administer the Pharmacy Curriculum Outcomes Assessment (PCOA), a standardized test created and administered by the National Association of Boards of Pharmacy (NABP), to all third-year equivalent Doctor of Pharmacy (PharmD) students before they begin their advanced pharmacy practice experiences (APPEs). Both ACPE and NABP state that PCOA results are useful for curricular assessment, review of student performance in the curriculum, longitudinal assessment of students, and benchmarking of either individual student or program level performance.1-3

The NABP provides programs with PCOA results that offer information about student and program performance in four domains: basic biomedical sciences, pharmaceutical sciences, social/administrative sciences, and clinical sciences.3 Schools are provided with individual student and program results that include scaled scores and percentile ranks indicating performance relative to a normed reference sample.3 In 2016, all pharmacy programs were required to administer the PCOA to third-year equivalent pharmacy students as an accreditation requirement. At that time, several anecdotal reports from pharmacy education leaders and school administrators within the American Association of Colleges of Pharmacy (AACP) suggested significant limitations with regard to PCOA examination items, administration, interpretability, and usefulness. The purpose of this project was to characterize the timing and manner in which the PCOA is administered and how the results are used across pharmacy programs.

METHODS

In February 2017, AACP charged a Council of Deans (COD) Task Force to work with ACPE to identify and clarify the intended uses of PCOA and to collect evidence to assess its usefulness for those purposes. The task force then formed a workgroup of assessment experts chosen to represent a diverse mix of public and private programs from different geographical regions and with differing curricular structures. The assessment workgroup was charged with studying and reporting how PCOA is administered and used nationally, program perceptions about the usefulness of PCOA, and program recommendations regarding strategies to enhance the usefulness of PCOA results. To accomplish these goals, the workgroup designed a prospective study to survey all accredited pharmacy programs about their experiences with administering the PCOA and using PCOA results. This project was deemed not regulated by the institutional review board at the University of Michigan.

At the time the survey was administered, there were 142 accredited pharmacy programs. To be eligible for this study, programs had to have had students enrolled with a third-professional-year (or equivalent) status during or before the 2015-2016 academic year. New programs that had no experience with administering the PCOA were excluded; three programs met this exclusion criterion. Thus, there were 139 eligible programs at the time of the survey. To maximize the survey response rate, the deans of the eligible programs were asked in advance to identify the person who was most familiar with PCOA administration at their respective institutions. All deans identified their PCOA contact.

The survey assessed how the PCOA was administered in pharmacy programs, the ways in which programs used PCOA results, perceived benefits and challenges with administering the examination, and the potential practical applications of the results. Items and response options were derived from a review of the literature and other survey instruments that were used regionally to collect information about schools’ experiences with the PCOA. Issues that had been adequately addressed by a recent national survey were excluded to avoid redundancy.4 Items addressed five domains: program demographics, factors related to test administration, opportunities for test preparation for students, program use of PCOA results, and program recommendations for how PCOA could be more useful. Items designed to learn about program experience with PCOA asked respondents to focus on their experience with the most recent administration of the PCOA. The survey was beta-tested through review by the COD Task Force members and assessment experts at six institutions prior to launch. It was then distributed to the 139 program contacts on October 18, 2017. Recipients were asked to respond with regard to third-year (or equivalent) students from the class of 2018. Following three reminders, the survey was closed on October 30, 2017.

Data were summarized using descriptive statistics and organized around the following three themes, which were based on the broad uses defined in the Guidance for Standards 2016: individual student learning assessment, curricular assessment, and student and program-level benchmarking.2 In addition to the overall analysis, the data were compared across two pairs of subgroups: programs that administered the PCOA prior to the 2016 ACPE accreditation requirement vs those that administered it only after the requirement (prior use vs no prior use); and new programs (graduated fewer than five cohorts) vs established programs (graduated more than five cohorts). Responses to open-ended questions were independently reviewed by at least two investigators to identify and quantify the frequency of common themes.

RESULTS

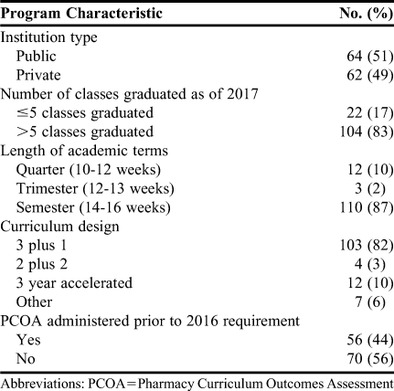

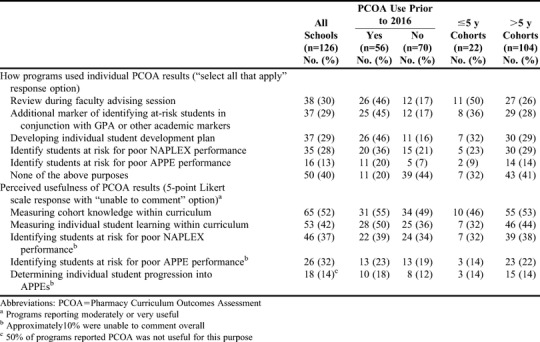

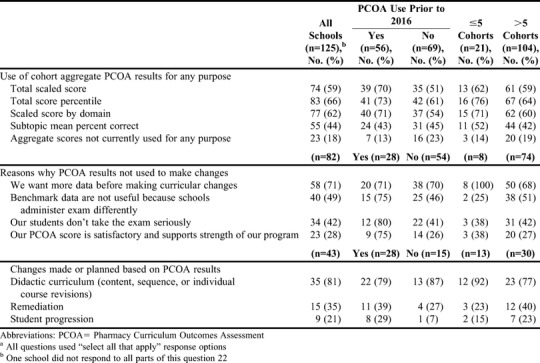

Responses were received from 126 of 139 programs (91% response rate, Table 1). Respondents included a similar number of public and private schools and those who administered the PCOA prior to 2016 versus only after the 2016 requirement. The majority of programs had graduated more than five cohorts, and most had traditional PharmD programs consisting of three years of didactic instruction followed by one year of APPEs (“3+1” programs).

Of the 56 programs that administered PCOA prior to the 2016 requirement, 29 (52%) administered it only to the third-year (or equivalent) cohort. Of those 29 programs, 14 (48%) administered the examination only once prior to 2016. Since the 2016 requirement, 96 of 125 programs (77%) administered it only to the third-year (or equivalent) cohort; one respondent indicated that their program had not yet administered the examination.

The majority of programs administered the PCOA as a one-time event on either a single campus (67%) or on multiple campuses (17%). The remaining programs (16%) administered the PCOA over multiple sessions. Ninety-two percent of respondent programs indicated that a faculty administrator (eg, associate or assistant dean or director of assessment) was responsible for planning PCOA logistics; 31% also had administrative assistant support. The majority of programs indicated ease with the process of registering (55%) and assigning (69%) students to a given examination window. While the individual registration steps were not difficult, several respondents indicated that they were time consuming to complete, particularly when changes arose (eg, student name changes). There were no notable differences in logistical challenges based on the program type (ie, traditional “3+1,” accelerated, etc) or number of examination administrations. Twenty-five percent of programs indicated difficulty securing rooms for the examination.

The ways in which respondents used individual student PCOA results and how useful they felt the PCOA results were for individual student assessment are shown in Table 2. Individual PCOA results were used by 76 (60%) respondents. Programs that administered the examination prior to the 2016 requirement reported using individual PCOA results more frequently than those that administered the examination after the 2016 requirement. However, the perceived usefulness of the PCOA results for individual student assessment was similar between these two subgroups. Fifty percent of respondents indicated that they believed PCOA scores were not useful in determining individual student readiness for progression into APPEs. This result aligns with the finding that fewer than 13% of programs used individual PCOA scores to identify students at risk for poor APPE performance, and only 32% of respondents felt scores were applicable for identifying at-risk students (Table 2). Fifty-two percent of respondents perceived that PCOA results, as currently provided, could be useful in measuring cohort knowledge in the curriculum, and 42% perceived that individual PCOA results could be useful for measuring individual student learning in the curriculum. These findings were relatively consistent across those who administered PCOA prior to vs after the 2016 requirement and for new vs established programs. Twenty-nine percent of respondents indicated that PCOA results were an additional marker to identify at-risk students when used in conjunction with grade point average or other academic markers. Few programs appear to use the PCOA for longitudinal assessment of individual students, as the majority of the programs that administered PCOA prior to 2016 (30/56, 54%) only administered PCOA to one cohort. Nearly all programs (97%) provided individual scores to students; 60% of programs also provided the class/cohort score to students. Poor performers were required to meet with an advisor/administrator in 29% of programs, with an additional 11% of programs requiring all students to meet with an advisor.

Nearly half (47%) of respondents agreed or strongly agreed that PCOA aggregate results are or could be useful for curricular quality improvement, with responses being consistent across those who administered the PCOA prior to vs after the 2016 requirement. The aggregate total scaled PCOA score, total score percentile, and scaled score by domain are each used by approximately 60% of programs (Table 3); 18% of programs did not use the aggregate PCOA scores for any purpose. The use of aggregate PCOA scores was more common among newer schools and those that administered PCOA prior to 2016. Subtopic mean percent scores were used for decisions by 44% of programs despite NABP stating that these scores should not be used for strict score comparisons or to make inferences about specific performance outcomes.3 The most common reason reported by the 82 programs not using the PCOA results was that they wanted more data before using the results to make curricular changes (Table 3). Curricular changes based on PCOA results were made by 81% of the 43 programs that reported using PCOA results for curriculum assessment (Table 3). Reported changes that were made or were planned included remediation and, to a lesser extent, student progression policies and procedures.

Table 1. Program Demographics of Accredited US Pharmacy Schools and Colleges Included in a Study on the Use of the Pharmacy Curriculum Outcomes Assessment (N=126)

Table 2. Responses From Accredited US Schools and Colleges of Pharmacy Regarding the Use of Scores on the Pharmacy Curriculum Outcomes Assessment for Individual Student Assessment

Table 3. Responses From Accredited US Schools and Colleges of Pharmacy Regarding the Use of Results From the Pharmacy Curriculum Outcomes Assessment for Curriculum Assessment

To assess the usefulness of the PCOA results to support individual and curricular benchmarking, programs were asked about how they administer the examination. There was considerable variability in how the PCOA was administered to third-year (or equivalent) students across programs. One variation related to the percentage of the curriculum completed at the time PCOA was administered: in 45% of programs, students had completed less than 84% of the curriculum, whereas in 34% of programs they had completed more than 90%. Additionally, some programs had administered the PCOA to their third-year (or equivalent) students at an earlier point in their studies, so not all students were taking the test for the first time.

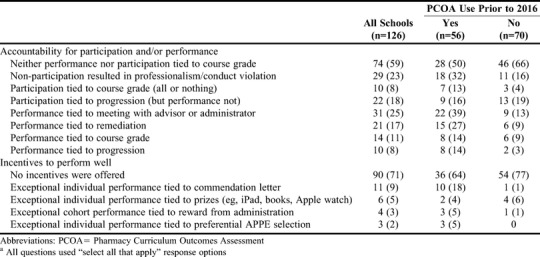

Student accountability for performance and/or participation and incentives used to enhance performance differed across programs, and included tying either performance or participation to a course grade, progression, or remediation (Table 4). While the majority of programs (71%) offered no incentives for students who did well, a variety of incentives were used by a handful of programs. Sixty percent of programs provided no preparation or resources to help students prepare for the examination. Students were directed to existing library resources in 23% of programs, a commercial or school-based practice examination was provided by 6% of programs, a NAPLEX preparation resource was provided by 6%, and a school-based PCOA review session was provided by 5%.

Table 4. Responses From Accredited US Pharmacy Schools and Colleges Regarding Student Accountability and Incentives for Performance on the Pharmacy Curriculum Outcomes Assessment

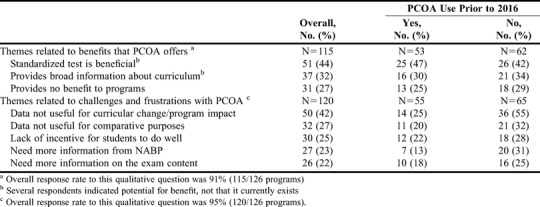

Two open-ended survey questions asked respondents to identify perceived benefits and challenges with the PCOA as it currently exists. These questions were answered by over 90% of respondents, and the responses were organized into general themes (Table 5). Respondents also indicated the types of information or additional data programs might want in order to better interpret or use PCOA results. Ninety-one percent of respondents wanted to see a correlation between PCOA and NAPLEX scores, over 70% wanted to see benchmarking data from a peer cohort and/or from programs that administer the examination in a similar manner (ie, incentives, preparation, stakes, etc), and 45% wanted information about the time students spent taking the examination as a measure of how important students perceived the examination to be. Schools also expressed interest in better defining the intended purpose of the PCOA and determining whether it is valid for predicting student success in APPEs.

Table 5. Summary of Qualitative Themes Identified in Responses From Accredited US Pharmacy Schools and Colleges Regarding the Pharmacy Curriculum Outcomes Assessment

DISCUSSION

The NABP recommends PCOA as a measure of individual student learning and promotes the examination as an independent, objective, external measure of student performance in pharmacy curricula.3 The ACPE indicates in the guidance document (Standard 24g) that the PCOA is a valid and reliable measure of student competence in the four domains defined in ACPE Appendix 1, and that the examination can be used to track student learning in the program over time.2 Both the NABP and the ACPE suggest that the PCOA can be used to determine whether the curriculum is producing the desired program outcomes or to evaluate the effectiveness of quality-improvement initiatives in the curriculum.1-3 Both agencies also mention the ability to use PCOA results for benchmarking against a nationally representative sample to compare program-level results or student performance. The results of this national survey indicate that while many programs in the academy believe the PCOA has the potential to meet some of these goals, it is not effectively doing so.

Respondents reported that the individual PCOA results could be useful for assessing cohort knowledge in a given curriculum, yet 40% of programs were not using individual student results for any purpose. Previous studies found that program grade-point average (GPA) correlates with PCOA scores. Scott and colleagues found a correlation between third-year GPA and PCOA scores, although they noted several limitations related to student motivation to do well.5 Meszaros and colleagues found that their Triple Jump Examination (a “homegrown” APPE-readiness examination) was a stronger predictor of APPE grades than GPA or PCOA scores (r=0.60 vs 0.45 vs 0.33, respectively).6 Multivariate analysis showed that GPA was a significant predictor of APPE performance, and the addition of PCOA did not increase predictive power. Published data thus suggest that PCOA scores do not provide additional information for assessing individual student performance beyond GPA, which is readily available to programs. For this reason, PCOA may be a more useful tool for those programs that do not assign grades.

The relationship between PCOA and NAPLEX performance has also been explored. If PCOA is an accurate indicator of individual or cohort student performance, it could be a predictor for successful NAPLEX outcomes. Garavalia and colleagues found a correlation between PCOA score and both grade point average (GPA; r=0.47) and NAPLEX score (r=0.51).7 However, GPA explained a larger portion of unique variance on the NAPLEX than did the PCOA score (14% vs 8%, respectively). Hein and colleagues found a relationship specifically between third-year pre-APPE GPA (r=0.60) and PCOA score as well as NAPLEX score (r=0.64).8 Correlations between PCOA total scaled score and NAPLEX total score (r=0.59) and for nearly all areas of NAPLEX were found by Naughton and Friesner.9 At this time, however, programs are unable to readily explore the relationship between student PCOA performance and NAPLEX pass rates beyond their own program data because national data for all programs are not currently made available by the NABP.
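As a reading aid for the coefficients cited in the preceding paragraph, squaring a correlation coefficient gives the proportion of variance two measures share. The sketch below applies this to the values reported by Garavalia and colleagues and by Naughton and Friesner; the dictionary labels and the function name are illustrative and do not come from the cited studies. These shared-variance figures are not the same quantity as the unique variance (14% vs 8%) reported by Garavalia and colleagues, which comes from a model that accounts for both predictors.

```python
# Correlation coefficients as quoted in the text above.
# The labels are illustrative shorthand, not terms used by the cited authors.
reported_r = {
    "Garavalia: PCOA vs GPA": 0.47,
    "Garavalia: PCOA vs NAPLEX": 0.51,
    "Naughton and Friesner: PCOA vs NAPLEX": 0.59,
}


def shared_variance(r: float) -> float:
    """Return r squared, the proportion of variance two measures share."""
    return r * r


# Round to two decimals, matching the precision of the reported coefficients.
shared = {label: round(shared_variance(r), 2) for label, r in reported_r.items()}

for label, value in shared.items():
    print(f"{label}: r={reported_r[label]:.2f}, r^2={value:.2f}")
```

Read this way, even the strongest coefficient quoted here leaves roughly two-thirds of the variance in NAPLEX total score unshared with PCOA score.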

While 82% (102/125) of programs indicated they used aggregate cohort results, programs do not seem to find PCOA results useful for curricular assessment or student performance, and two-thirds of programs (82/125) have not made data-driven changes based on PCOA results. One-third of programs (43/125) reported using PCOA results to make changes, a practice that may be undermined by the lack of student incentives for performance. Most programs are not incentivizing students or holding them accountable for optimal performance, thereby placing programs at risk of acting on data that may not accurately reflect student knowledge. This raises the question of whether the PCOA is meeting its goal of being an objective measure of student performance. Waskiewicz found that PCOA scores of those who were incentivized to do well were more likely to be an accurate reflection of content knowledge, and that scores of those not motivated to do well should be filtered out.10
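The proportions in the preceding paragraph can be checked directly from the counts it reports. The following is a minimal sketch, assuming the denominator of 125 programs used in the text; the variable and function names are illustrative.

```python
# Counts as stated in the text; 125 is the denominator used there.
TOTAL_PROGRAMS = 125
counts = {
    "used aggregate cohort results": 102,
    "made no changes based on PCOA results": 82,
    "made changes based on PCOA results": 43,
}


def percent(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest whole number."""
    return round(100 * count / total)


percentages = {label: percent(n, TOTAL_PROGRAMS) for label, n in counts.items()}

for label, pct in percentages.items():
    print(f"{label}: {counts[label]}/{TOTAL_PROGRAMS} = {pct}%")
```

The two groups describing changes (82 and 43) sum to the full 125 programs, which is consistent with the "two-thirds" and "one-third" wording.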

The PCOA has value to some schools for curriculum review. As a formative examination, it may be best suited as an optional tool for those implementing a new curriculum or when making curriculum revisions. The PCOA results for only one point in time per cohort are unlikely to provide sufficient specificity to inform meaningful curricular quality assurance. Not surprisingly, fewer than half of survey respondents believe PCOA results, as they currently exist, are useful for curricular quality improvement. The variability in when and how PCOA is administered across programs, including prior exposure to the examination, use of incentives or accountability measures, and examination preparation, interferes with meaningful benchmarking. In addition, benchmarking across similar programs or peer groups is not currently possible.

In a summary of past research on PCOA, Mok and Romanelli indicated that PCOA results may assist in determining student knowledge but that additional research is needed to ascertain the utility of PCOA results overall and as a predictive factor for licensing examination performance.11 Those conclusions are consistent with the results of this current survey as reflected in the marked inconsistencies across programs in the actual and perceived utility of individual and aggregate PCOA results. Those inconsistencies are most likely related to lack of clarity regarding the intended purposes for PCOA, beyond meeting an accreditation requirement, and the resulting differences in student preparation, use of incentives, examination logistics, and outcomes related to PCOA. A clearly defined purpose combined with consistent processes related to PCOA and its administration should be established if PCOA administration continues to be required for all PharmD students prior to advancing to APPEs.

From the standpoint of examination logistics, the currently available testing windows do not work for approximately a third of schools. Several respondents recommended extended or modified testing windows (eg, monthly examination options, March option, Saturday testing times) and a summer testing window for accelerated programs. All schools would like a faster turnaround time for the results, as evidenced by many schools planning to select an earlier testing window in order to receive results prior to students beginning APPEs. There is a high administrative burden for administering the PCOA in all pharmacy programs. This, coupled with the administrative burden of holding individual advisor/administrator meetings with students, as 40% of programs reported doing, makes the examination considerably labor intensive for programs. Our results do not account for the cost to NABP of developing and maintaining a validated examination intended for one-time use by all programs. Fewer than half of programs believed that PCOA results were or could be useful for curricular improvement (47%). Even fewer (40%) reported using individual scores for any specific purpose, suggesting the need to clearly define the purpose of the PCOA and ensure it is effectively meeting that purpose.

The evidence-based design of the survey instrument and response options, coupled with an extremely high response rate, ensures that the findings from this study accurately reflect the perspectives and experiences of programs to date with the PCOA. Selection bias was addressed by surveying all accredited pharmacy programs. Recall bias was minimized by ensuring the survey was sent to the individuals who were most familiar with the PCOA process in their program. Our instrument was not validated, so future qualitative research (eg, standardized interviews or focus groups) may help validate the findings reported here.

CONCLUSION

The results of this national survey, which are representative of the diversity of programs in the academy, provide useful information about the strengths and limitations of the PCOA as it currently exists. The stated purposes of the PCOA are to provide an objective measure of individual student performance in the didactic curriculum, provide data useful for curriculum assessment, and allow for individual and programmatic benchmarking across the academy. Based on responses from 91% of pharmacy programs, these intended uses are not currently implemented across the academy. Schools across demographics (new vs old, prior PCOA users vs new users, public vs private) are requesting more data before they can do so. Universally, schools share the same concerns regarding challenges in implementing the PCOA requirement. Opportunities for improvement exist in the area of examination logistics, including expanding testing windows to potentially include monthly offerings or weekend examination options. A shortened turnaround time for results would allow programs to receive and potentially act on individual results before students proceed to APPEs. Providing additional data on PCOA results (eg, individual student time spent testing, standard deviation around the scaled scores, program demographics such as new versus older programs, correlation of individual and/or aggregate PCOA scores with NAPLEX results) may increase the utility of PCOA results to inform curricular quality assurance efforts and the ability to benchmark to similar programs. In conclusion, a clear purpose for the examination and additional study of the predictive value of PCOA as a measure of curricular performance and/or individual student performance within a program is needed, particularly given the high administrative burden borne by schools and their students.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree (Standards 2016). https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Published February 2015. Accessed March 21, 2018.

- 2. Accreditation Council for Pharmacy Education. 2016. Guidance for the Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. https://www.acpe-accredit.org/pdf/GuidanceforStandards2016FINAL.pdf. Accessed March 21, 2018.

- 3. Pharmacy curriculum outcomes assessment. Registration and administration guide for schools and colleges of pharmacy. https://nabp.pharmacy/wp-content/uploads/2018/01/PCOA-Registration-Administration-School-Guide-2018.pdf. Accessed March 13, 2018.

- 4. Gortney J, Rudolph MJ, Augustine JM, et al. National trends in the adoption of PCOA for student assessment and remediation. Am J Pharm Educ. doi: 10.5688/ajpe6796. http://www.ajpe.org/doi/abs/10.5688/ajpe6796. Accessed March 21, 2018.

- 5. Scott DM, Bennett LL, Ferrill MJ, Brown DL. Pharmacy curriculum outcomes assessment for individual student assessment and curricular evaluation. Am J Pharm Educ. 2010;74(10):Article 183. doi: 10.5688/aj7410183.

- 6. Meszaros K, Barnett MJ, McDonald K, et al. Progress examination for assessing students' readiness for advanced pharmacy practice experiences. Am J Pharm Educ. 2009;73(6):Article 109. doi: 10.5688/aj7306109.

- 7. Garavalia LS, Prabhu S, Chung E, Robinson DC. An analysis of the use of Pharmacy Curriculum Outcomes Assessment (PCOA) scores within one professional program. Curr Pharm Teach Learn. 2017;9:178–184. doi: 10.1016/j.cptl.2016.11.008.

- 8. Hein B, Messinger NJ, Penm J, Wigle PR, Buring S. Correlation of the pharmacy curriculum outcomes assessment (PCOA) and selected pre-pharmacy and pharmacy performance variables. Am J Pharm Educ. 2019;83(3):Article 6579. doi: 10.5688/ajpe6579. http://www.ajpe.org/doi/pdf/10.5688/ajpe6579. Accessed March 22, 2018.

- 9. Naughton C, Friesner DL. Correlation of P3 PCOA scores with future NAPLEX scores. Curr Pharm Teach Learn. 2014;6(6):877–883.

- 10. Waskiewicz RA. Pharmacy students' test-taking motivation-effort on a low-stakes standardized test. Am J Pharm Educ. 2011;75(3):Article 41. doi: 10.5688/ajpe75341.

- 11. Mok TY, Romanelli F. Identifying best practices for and utilities of the Pharmacy Curriculum Outcome Assessment Examination. Am J Pharm Educ. 2016;80(10):Article 163. doi: 10.5688/ajpe8010163.