Abstract

The Affordable Care Act provides opportunities to reimburse non-medical “enabling services” that promote the delivery of medical care for patients with social barriers. However, limited evidence exists to guide delivery of these services. We addressed this gap by convening community health center patients, providers and other stakeholders in two panels that developed a framework for defining and evaluating these services. We adapted a group consensus method where the panelists rated services for effectiveness in increasing access to, use, and understanding of medical care. Panelists defined 6 broad categories, 112 services, and 21 variables including the type of provider delivering the service. We identified sixteen highest-rated services and found that the service provider’s level of training affected effectiveness for some but not all services. In a field with little evidence, these findings provide guidance to decision-makers for the targeted spread of services that enable patients to overcome social barriers to care.

Keywords: Group processes, healthcare disparities, health policy, community health centers, underserved, Health Services Research, Health Services Accessibility

Community health centers (CHCs) are safety net health practices that serve a predominantly low-income and minority patient population at over 9000 sites across the United States 1,2. CHCs have a long history of delivering non-medical services intended to reduce the social barriers to care faced by many of their low-income patients 3. CHCs must deliver these ‘enabling services’ in order to receive designation as a Federally Qualified Health Center 4. These include providing eligibility assistance, performing community outreach, and delivering case management 5,6. Each of these services aims to reduce a barrier to care and thereby enable a patient to attend a medical appointment and to complete any prescribed follow-up steps.

There have been two major impediments to the widespread and systematic delivery of enabling services: payment models and evidence of effectiveness. Many enabling service programs are funded by short-term grants or philanthropy and less commonly through a patient’s health insurance plan. This lack of reliable funding has limited the reach and sustainability of these programs 7. The Affordable Care Act (ACA) 8 creates several mechanisms that could potentially deliver reliable funding. First, the expansion of Medicaid extends eligibility to millions of previously uninsured, low-income patients who currently receive their healthcare from CHCs, representing a potential shift in reimbursement source for these organizations 9. Second, the shared savings payments of the accountable care organization (ACO) model may allow health systems to fund enabling services at the population level 10–12. An ACO may calculate that enabling services improve access to primary care, resulting in better chronic disease management and cost savings from fewer emergency visits and hospitalizations. However, for the Centers for Medicare & Medicaid Services (CMS) and state Medicaid offices to include enabling services as covered services, and for ACOs to implement these services, the second impediment, the lack of evidence of effectiveness, needs to be addressed. We began to address this gap in evidence by developing a research framework for analyzing this field of health care services and by starting the initial evaluation of these services.

Enabling services are unlike other healthcare services because they touch the fabric of patients’ social lives 13 and not just discrete disease conditions; consequently, it has been challenging to define and measure their effectiveness. We used a patient-centered research approach 14,15 that adapted a validated group judgment method to synthesize input from people who receive, provide, or make policy on enabling services. Based on the RAND/UCLA appropriateness method 16,17, we elicited input from underserved patients and their representatives to build the evidence base on all the different ways these services are delivered. Next, we developed an instrument cataloguing this list of services and the variables key to their delivery. Expert panelists including patients, providers, and policy-makers then rated these services for their effectiveness in increasing access to, use, and understanding of medical care. The resulting categorization and prioritization provide initial guidance to researchers and operational decision-makers about how to approach testing effectiveness in clinical trials and developing patient-facing programs.

Methods

Expert panel planning and process

Group consensus methods 18 elicit the opinion of experts on a particular issue where little definitive science exists 19–21. The validated RAND/UCLA appropriateness method is widely used to determine the appropriateness of medical interventions weighing risks and benefits for defined patient scenarios. The experts review the medical literature, independently rate a particular intervention’s appropriateness for numerous scenarios, come together as a group to revise the scenarios and discuss their initial ratings, and then independently rerate the final list of scenarios. This efficient process utilizes individual expert opinion and structured discussion to produce a list of interventions quantitatively determined by the group to be either appropriate, inappropriate, or equivocally appropriate for a particular patient scenario 22,23. In applying an expert group’s opinion to evaluating the effectiveness of non-medical services, we maintained the core of the traditional validated method but certain adaptations were necessary. We tested the validity of our adapted method by measuring convergence of panelist ratings between the two rounds. There were statistically significant levels of convergence indicating acceptable validity, and these results are described elsewhere (A Escaron, personal communication).

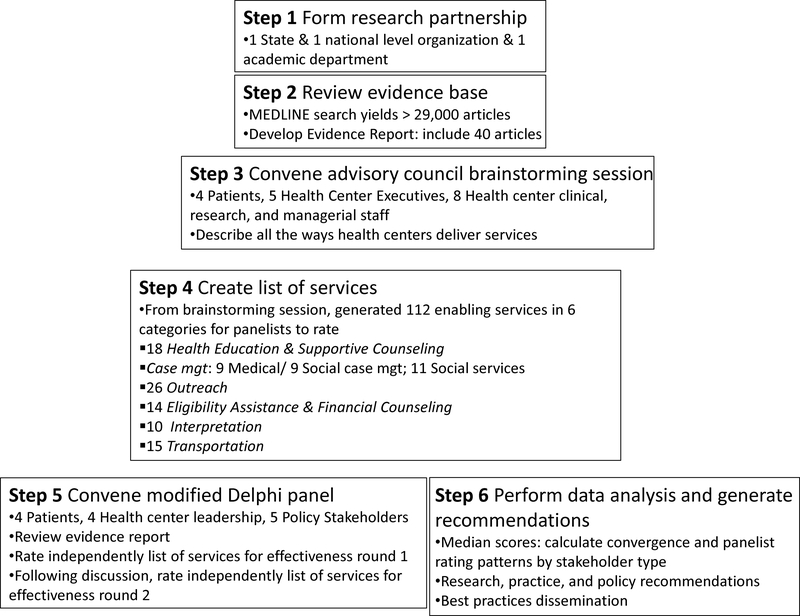

The method included six steps (Figure 1). In step 1, an academic health services research department formed a research partnership with two organizations engaged in CHC advocacy and research. In step 2, we performed a literature review for English-language, peer-reviewed publications on enabling services. Over 27,000 articles were identified in Medline (Appendix Table 1), a number that was beyond the scope of this project to systematically review. Instead, we selected the most highly cited articles that used experimental, quasi-experimental, or observational research designs to evaluate effectiveness. Only 40 articles met these criteria, underscoring the dearth of scientific studies in this area. We incorporated these articles into the evidence report used by the panels and organized them into six distinct categories of enabling services. This categorization schema was adapted from established, pre-existing definitions in the field 4,5.

Figure 1.

Steps followed in the multiple stakeholder engagement process to develop research, practice, and policy recommendations on delivery of enabling services for low-income patients are presented. Relying on existing evidence, an expert panel rated a list of these services for effectiveness.

In step 3, the first of two groups, the advisory council, was convened for a day-long, in-person meeting. Seventeen people who were active providers or recipients of enabling services were recruited. The council used the evidence report and their own experiences to brainstorm all the different types of enabling services and the variable ways these services can be delivered (e.g. by personnel with different training).

In step 4, our research team used the brainstormed ideas from the advisory council to develop a list of 112 discrete services. For each category, we also identified variables that represented the intensity of service delivery. This intensity manifested as the provider type who delivered that service or whether it was offered directly by the CHC or in partnership with an external organization. Modeled on what the RAND/UCLA method used, the list of services and variable ways of delivering each service were made into an instrument that the expert panel used to rate effectiveness.

In step 5, we convened the second group, the modified Delphi expert panel. This panel was composed of thirteen people: 4 patients, 4 enabling service providers, and 5 CHC leaders and policy-makers. The task of the expert panel was to draw on the evidence report and their heterogeneous experiences to rate the list of services and variables captured in the instrument. The panel first agreed on a definition of “effectiveness” for enabling services: the degree to which a service increases a patient’s access to, use, and understanding of his or her medical care. This panel process allowed for both qualitative and quantitative data collection regarding the panelists’ expert opinions. The qualitative data are reflected in how the panelists agreed to refine the category definitions and added or deleted services and variables. Panelists agreed that the primary variable that they should rate was the type of provider who delivered the service. We used the traditional method’s standardized rating system to collect and analyze the quantitative results. Panelists rated each service from low to high using a 9-point scale where 1–3 = ineffective, 4–6 = uncertain, 7–9 = effective. The panelists rated the instrument twice: first individually 2 weeks before the in-person meeting, and then again after the in-person meeting.

Lastly, in step 6, we analyzed the expert panelist ratings 24–27 from the second round and identified the group’s recommendations. For each service, we calculated a median effectiveness score across the 13 panelists using the technique established by the traditional method 17. Based on these median effectiveness scores, we then analyzed for patterns that would identify outlying services or differences in effectiveness based on the provider type.
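The scoring described in steps 5 and 6 can be sketched in a few lines. This is a minimal illustration rather than the study's analysis code: the function names and the example ratings are hypothetical, and the handling of a median that falls between two bands (possible only with an even number of raters) is an assumption, since the panel had 13 members and its medians were always whole numbers.

```python
from statistics import median


def effectiveness_band(score):
    """Return the band for a score on the 9-point scale described above."""
    if not 1 <= score <= 9:
        raise ValueError("score must lie between 1 and 9")
    if score < 4:
        return "ineffective"  # 1-3
    if score < 7:
        return "uncertain"    # 4-6 (assumption: 6.5 also counts as uncertain)
    return "effective"        # 7-9


def summarize_service(ratings):
    """Median rating across panelists and the band that median falls in."""
    med = median(ratings)
    return med, effectiveness_band(med)


# Hypothetical second-round ratings from 13 panelists (not study data).
example_ratings = [8, 9, 7, 8, 8, 6, 9, 8, 7, 8, 9, 8, 7]
print(summarize_service(example_ratings))  # (8, 'effective')
```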

Data analyses

Analyses were conducted at three levels: category, service, and provider type. For the category-level analysis, for each category rated by the panel, we took the average of the median panelist effectiveness ratings by variable for each service. The range of these median scores is also presented. For the service-level analysis, we defined a priori those services that fell in the top quartile as most effective. The cutoff needed to identify the top quartile was an average median effectiveness rating of 8 or higher. We then tested whether a service’s effectiveness varied based on the provider type who delivered it by calculating the median and interquartile range (IQR) for each provider type within a particular service. Then, a Friedman test was used to analyze whether the distribution of ratings for one type of provider differed significantly from the other types (p-value < 0.05 indicates a significant difference). All analyses were performed using SAS 9.3 (SAS Institute Inc, North Carolina).
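To make the provider-type comparison concrete, the following is a minimal pure-Python sketch of a Friedman test with the usual tie correction and a chi-square approximation for the p-value. It is not the SAS procedure the study ran, and results may differ in detail from SAS output. The function names and the ratings matrix are hypothetical; each row stands for one panelist and each column for one provider type.

```python
import math


def average_ranks(values):
    """Rank values from 1 to k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = mean_rank
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Closed form for even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: normal tail plus a finite series in sqrt(x).
    chi = math.sqrt(x)
    density = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    term, total = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return math.erfc(chi / math.sqrt(2)) + 2 * density * total


def friedman_test(blocks):
    """Return (statistic, p-value); rows are panelists, columns provider types."""
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in blocks:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
        for value in set(row):
            t = row.count(value)
            tie_term += t ** 3 - t
    stat = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    correction = 1.0 - tie_term / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("every panelist gave identical ratings to all provider types")
    stat /= correction
    return stat, chi2_sf(stat, k - 1)


# Hypothetical ratings (not study data): 5 panelists x 3 provider types.
hypothetical_ratings = [[9, 6, 5], [9, 7, 4], [8, 6, 5], [9, 7, 3], [9, 6, 5]]
statistic, p_value = friedman_test(hypothetical_ratings)
print(f"Friedman statistic = {statistic:.2f}, p = {p_value:.4f}")
```

Because the test works on within-panelist ranks, it compares how each panelist ordered the provider types rather than the raw scores, which suits repeated ratings from the same 13 people.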

Results

Category-level analysis

Consistent with the traditional method, panelists used the literature and their experiences to rewrite the list of categories and services prepared by the research team. Definitions for each category and the service intensity variables were agreed upon (Table 1). Two categories in particular were substantially revised by the panel. Panelists modified the scope of the Outreach category definition by including those services that bring new patients from the community into primary care—rather than just limiting to those services delivered on-site at the health center. Transportation was the second substantially changed category, as the panel decided it could not be rated using the modified Delphi approach. Instead, panelists defined three essential dimensions for effective services.

Table 1.

Expert panelist refined definitions and median effectiveness scores for enabling services categories

| Category (Average Median Effectiveness Score) | Definition |
|---|---|
| Case management (7.1) | Pairing comprehensive assessment and support of non-medical needs – socioeconomic status, wellness, or other non-medical health status – with the provision of medical services. 3 Provider types: 1) Licensed professional, 2) Non-licensed professional, 3) Promotora/CHW |
| Outreach (7.5) | Services that build awareness of available health center medical, preventive, and social services which ultimately bring new patients into primary care; health center departments communicate accurate CHC medical and social service selection and are prepared to communicate this information to community members. 2 Provider types: 1) Paid employee, 2) Volunteer |
| Health Education & Supportive Counseling (6.6) | Teaching patients how to stay well, improve their health, and/or manage their disease(s). Example topics include taking medications and changing diet. Health Education focuses on educational activities that take place either through individual or group visits. Supportive Counseling focuses on therapeutic services that tend to be delivered one-on-one. 2 Provider types: 1) Licensed professional, 2) Promotora/community health worker (CHW) |
| Eligibility Assistance & Financial Counseling (7.4) | Counseling a patient with financial limitations with the goal of a completed application to sliding fee scale, health insurance program – Medicaid, Medicare, or pharmaceutical benefits program – or other public assistance programs (e.g. food assistance, housing). 2 Provider types: 1) Non-dedicated CHC staff, 2) Trained, dedicated personnel |
| Interpretation (6.9) | Provision of linguistic translation services and cultural competency in addressing the medical and social needs of patients (includes sign language and Braille). 4 Provider types: 1) Self-attested fluency, 2) Internal health center fluency test, 3) Certified Medical Interpreter (CMI) credentialed by external program, 4) CMI in linguistic and cultural fluency (i.e. dual certification) |
| Transportation (Not rated) | For patients who would not receive healthcare because they lack transportation, the health center provides services to transport patients or delivers care off-site. 3 Variables [a]: 1) Time, 2) Mode, 3) Cost |

[a] 1) Time: patient satisfaction with travel time to needed medical care; 2) Mode: accessibility issues for those with physical challenges, disabilities, and children boarding vehicles; 3) Cost: cost of the transportation service to the payer.

For each category rated by the panel, we took the average of the median panelist effectiveness ratings by variable for each service.

Outreach was the highest-rated category, with an average median effectiveness score of 7.5 (range 5–9). The remaining categories followed in descending order: eligibility assistance & financial counseling, 7.4 (5–9); social case management, 7.1 (5–9); interpretation services, 6.9 (3–9); and health education & supportive counseling, 6.6 (5–8).
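The category-level summary is an average of per-service medians plus their range, followed by a ranking of categories. The sketch below illustrates both steps. The helper name and the list of medians for a single category are hypothetical, while the category scores in the ranking step are the averages reported in Table 1.

```python
from statistics import mean


def category_summary(median_scores):
    """Average (one decimal), minimum, and maximum of a category's medians."""
    return round(mean(median_scores), 1), min(median_scores), max(median_scores)


# Hypothetical medians for the service-provider combinations in one category.
hypothetical_medians = [5, 7, 8, 9, 7, 8]
print(category_summary(hypothetical_medians))  # (7.3, 5, 9)

# Average median effectiveness scores as reported in Table 1.
reported_scores = {
    "Case management": 7.1,
    "Outreach": 7.5,
    "Health education & supportive counseling": 6.6,
    "Eligibility assistance & financial counseling": 7.4,
    "Interpretation": 6.9,
}

# Rank categories from highest to lowest average median score.
ranked = sorted(reported_scores, key=reported_scores.get, reverse=True)
for name in ranked:
    print(f"{name}: {reported_scores[name]}")
```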

Service-level analysis

The panelists rated all 112 services developed from the brainstorming of the advisory council. A full list of the rated services and median effectiveness ratings is provided (Appendix Table 2). During the expert panel session, panelists reached consensus on revising service definitions and on electing not to rate certain services. Panelists modified 11 service descriptions to better capture services delivered by CHCs. Because the panel had limited time for discussion, it decided to rate only those services delivered directly by CHCs, so nine services provided in partnership with community organizations were not rated. The panel focused case management on social services, so nine services involving only medical case management were not rated. As described above, none of the 15 transportation services were rated.

Therefore, expert panelists rated 77 enabling services. Each service was rated for one or more provider types who could deliver that service. There were 13 different provider types, varying by category, resulting in 181 different service-provider type combinations being rated. The expert panel discussed and rated services in the following categories: health education & supportive counseling (14 services); case management (9); outreach (22); interpretation (9); and eligibility assistance & financial counseling (12). The results from these ratings are described below. A secondary section within case management included 11 services that were repeated from other categories but were rated based on the variable of whether they were provided by the CHC or an external organization. Presenting these results was beyond the scope of this article, so the results of this section are only displayed in Appendix 2.

Overall, sixteen services were identified in the highest quartile of effectiveness by having a median effectiveness score of 8 or 9 (Table 2). At least one service from each category was included on the highly rated list. Outreach had the largest number of highly rated services, with 10 of 22 services rated very effective. In contrast, the categories of health education & supportive counseling and interpretation each had only one highly rated service.

Table 2.

Expert panel highest-rated services across all categories based on median effectiveness score of 8 or 9 (out of 9)

| By Category, Services with a Median Effectiveness Score = 8 or 9 (Total # services in category) |
|---|
| Social case management services (9) |
| • Meets regularly with patient to review/assess progress towards goals |
| • Tracks patient’s use of programs |
| • Reviews patients’ use of social services with medical providers |
| Outreach (22) |
| • Offers screenings to community members in a setting outside of the health center |
| • Contacts patients with positive screening tests in a setting outside of the health center to make a primary care appointment |
| • Works with patients to obtain medications/assist with health care related needs [a] |
| • Travels with patient to off-site specialty appointments |
| • Through repeated off-site encounters, CHC staff provide hard-to-reach patients with resources such as meals for homeless and HIV testing for substance abusers |
| • Staff reaches out to consumer patient leadership council group, community leaders, members, and organizations to build rapport with community members [a] |
| • Staff perform home visits to assess a person’s need and to educate about health center services |
| • Conducts local community needs assessment to identify those at risk in the population and links these groups with community resources |
| Health education & Supportive counseling services delivered by health center (14) |
| • In one-on-one visit, delivers supportive counseling and adapts the treatment plan as necessary over time |
| Eligibility assistance & Financial counseling (12) |
| • Screens/initiates and tracks application for public health care coverage |
| • Checks patients’ insurance eligibility before appointment and resolves eligibility issues ahead of appointment |
| • Initiates and completes patients’ application to programs to pay for medications |
| Interpretation (9) |
| • Provider speaks patient’s language |

Expert panelists used the following scale to rate the effectiveness of non-medical services: 1 = Definitely not effective; 5 = Neither effective nor ineffective; 9 = Extremely effective

[a] Due to the elevated number of highly rated outreach services, two related services were merged to succinctly summarize the panel’s recommendations.

Provider type analysis

The ratings instrument allowed the panelists to rate each service as delivered by different provider types using a variable construct. Consistent with the traditional method, the panelists substantially modified these variables. Across the five categories, the panelists identified thirteen provider roles at the CHC or levels of training that might affect a service’s effectiveness (Table 1). For many services, ratings were similar regardless of the provider type. But, for some services, there was statistically significant variation in effectiveness based on the provider’s level of training. Some of the most pronounced effects were found in social case management, health education & supportive counseling, and interpretation.

Three social case management services had statistically different ratings based on what type of personnel delivered the service (Table 3). For the two services related to assessing needs and developing patient goals, panelists rated licensed professionals as highly effective and community health workers (CHWs) with equivocal effectiveness. In contrast, panelists rated CHWs as more effective than licensed professionals at performing home visits.

Table 3.

Provider type analysis of social case management services

| Social case management service | Overall, median (IQR) | Licensed Prof, median (IQR) | Non-licensed Prof, median (IQR) | Promotora, median (IQR) | Friedman test P-value |
|---|---|---|---|---|---|
| 1. Assesses patient needs through psychosocial assessment | 7 (6–9) | 9 (9–9) | 6 (6–7) | 5 (3–7) | < 0.001 |
| 2. In a one-time meeting sets patient goals including meeting basic needs | 7 (6–8) | 8 (7–8) | 7 (7–8) | 6 (5–7) | 0.02 |
| 3. Meets regularly with patient to review goals and progress towards meeting them | 8 (7–9) | 8 (8–9) | 8 (7–9) | 8 (7–8) | 0.69 |
| 4. Provides contact information like a phone number | 5 (4–7) | 6 (4–7) | 5 (4–7) | 5 (4–7) | 0.81 |
| 5. Provides description of services available to patient, e.g. offers informational pamphlet | 7 (4–7) | 6 (5–7) | 7 (4–7) | 7 (4–7) | 1.0 |
| 6. Ensures initial enrollment in programs but does not track use | 7 (6–7) | 7 (6–7) | 7 (6–8) | 7 (6–7) | 0.18 |
| 7. Tracks patient’s use of programs over time and adapts social treatment plan as needed | 8 (8–9) | 8 (8–9) | 8 (7–9) | 8 (6–9) | 0.15 |
| 8. Reviews patients’ use of social services with medical providers | 8 (7–9) | 8 (7–9) | 8 (7–8) | 8 (7–8) | 0.41 |
| 9. Performs home visits | 8 (7–8) | 7 (6–8) | 7 (7–8) | 8 (8–9) | 0.02 |

The score is the Median effectiveness rating completed by 13 expert panelists using a 9 point scale: 1–3 = ineffective, 4–6 = uncertain, 7–9 = effective. For the provider type analysis, we compared the distribution of panelist effectiveness ratings between different provider types for a particular service using a Friedman test. A resulting P-value < 0.05 indicates a significant difference in the distribution of the ranked median effectiveness scores by provider type for a particular service. The median effectiveness score and interquartile range are presented to describe what drives the difference in ranks. The interquartile range is between the first and third quartiles.

Abbreviations: Licensed Prof, Licensed professional; Non-Licensed Prof, non-licensed professional; Promotora, promotora/community health worker.
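Each cell in Table 3 pairs a median with the first and third quartiles. The sketch below shows one way to produce a cell in that format. The ratings are hypothetical, and the quartile rule (Python's "inclusive" method) is an assumption: SAS uses its own default percentile definition, so quartiles can differ slightly from the published values when they fall between two ratings.

```python
from statistics import median, quantiles


def median_iqr(ratings):
    """Return (median, first quartile, third quartile) of the ratings."""
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return median(ratings), q1, q3


def format_cell(ratings):
    """Format ratings as 'median (Q1–Q3)', the layout used in Table 3."""
    med, q1, q3 = median_iqr(ratings)
    return f"{med:g} ({q1:g}–{q3:g})"


# Hypothetical ratings from 13 panelists for one provider type (not study data).
hypothetical_ratings = [8, 9, 7, 8, 8, 6, 9, 8, 7, 8, 9, 8, 7]
print(format_cell(hypothetical_ratings))  # 8 (7–8)
```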

In the health education & supportive counseling category, two services had statistically different effectiveness rating distributions by provider type (Appendix Table 2). Licensed professionals delivering one-time supportive counseling in a one-on-one visit were rated more highly than CHWs. However, CHWs delivering a longitudinal education program in a group visit format were rated as more effective than licensed professionals.

All interpretation services had statistically different ratings based on level of linguistic and cultural credential. For an encounter with the clinical team, self-attested fluency was rated less effective than the three other credentials. Panelists rated non-clinical staff as highly effective if they had passed an external rather than internal certification program (Appendix Table 2). In contrast, a provider speaking a patient’s language was rated highly effective even if the provider had passed only the internal health center fluency test. For additional services outside the clinic visit, dual certification was rated more effective than internal health center testing.

Discussion

In this study, our community-academic research team used a group consensus method to bring patient-centered definition and initial prioritization to the field of enabling services. The strength of this approach was the synthesis of perspectives from the direct recipients and providers of these services to fill a knowledge gap where little scientific evidence exists. The two patient and stakeholder panels defined three constructs – six broad categories, 112 granular-level services, and various provider types – as a framework for evaluating the effectiveness of these services. While not exhaustive, this list can serve as an important, initial classification scheme to guide further research. In addition, we used this framework to begin to evaluate and prioritize amongst these constructs based on panelists’ rating of service effectiveness.

While expert group consensus methods are efficient and targeted in contributing to knowledge in a field where little scientific evidence exists, the approach had some limitations. First, the stakeholder groups only permitted a limited number of people to participate, and therefore, the ratings of the panel may not be representative of what would be valued, identified, and assessed had additional panelists been included. Second, the panel developed a definition of effectiveness that was multi-dimensional, but the rating method only produced a single value. The assessment might vary if the metrics were isolated by dimension – that is, if the panelists rated individually the increase to access, use, or understanding. Third, standardization of social programs is difficult as frequently multiple types of services are needed to overcome a particular barrier to care, and there is significant customization required for patient subpopulations 28. A more specified context may be needed to determine how services may be complementary or additive. Lastly, the reliability of the traditional panel process has been shown to be dependent on the quality of the evidence 22. As the evidence for enabling services is limited, discussion on this subject was particularly dependent on panelists’ perspectives.

Despite these limitations, this is the first report of multiple stakeholders’ perspectives on enabling services and provides guidance for community health center leadership seeking input from underserved patients and their representatives. Some of the high-priority services were described by the panelists as being in widespread use, but others were seen as more innovative. The identification of these potentially novel services that the literature has not been able to describe and define was an advantage of this expert opinion-driven method. An example of a less widely used yet highly rated service was dedicating staff time to integrating the coordination of services to meet a patient’s basic needs with the clinical team’s medical care. The panel endorsed performing outreach to at-risk and hard-to-reach populations (e.g. homeless and migrant patients) through free blood sugar screening tests and contacting those with positive results to make a primary care appointment. These strategies extend the reach of primary care preventive services and suggest the important role CHCs can play in optimizing regional population health for vulnerable community members.

This panel rating process also allows for the identification of likely lower-yield services. These include several widely used services such as referring patients to health education information on the web, referring patients to needed services by providing a telephone number, and CHC staff distributing materials at health fairs. This finding is consistent with the panel prioritizing services that more actively support patients in overcoming their barriers to care.

The distributions of ratings by provider type identified opportunities to target resources to services where they are most needed to improve effectiveness. For case management, licensed personnel are an integral resource to the effective delivery of some but not all services. Panelists favored licensed professionals for services that rely on their clinical training whereas other – less highly trained – personnel types can be equally effective in delivering services that execute or maintain a plan of care. Panelists rated the contributions of community health workers highly because these peers build trust with patients through shared experiences and background.

Reducing social barriers to health care through targeted non-medical services has tremendous potential to improve population health at the societal level, especially now that possible mechanisms for sustainably funding these services are emerging 13. Organizations beginning to deliver comprehensive population health management within the ACO model could use evidence about which enabling services, and at what level of intensity, are most effective to inform their decisions about service implementation 11,12,29,30. This study provides policy-makers, providers, and researchers with structured guidance for understanding the effectiveness of enabling services by harnessing the experience of CHCs and the knowledge of the stakeholders who deliver and receive these services. Based on this expert opinion-defined framework and initial prioritization, further research is needed to test enabling services’ effectiveness in clinical settings using both medical and patient-reported outcomes.

Acknowledgments

This work was partially supported through a Patient-Centered Outcomes Research Institute (PCORI) Pilot Project Program Award (1IP2PI000240-01). All statements in this report, including its findings and conclusions, are solely those of the authors and do not necessarily represent the views of the PCORI, its Board of Governors or Methodology Committee. PCORI’s Pilot Projects Program supports the collection of preliminary data on evidence-based methods and strategies that can be used to advance the field of patient-centered outcomes research. This research contributes to PCORI’s overall research agenda by identifying gaps where methodological research needs further development, particularly around effectively involving patients in the entire research process, from the selection of research topics to the dissemination of results.

This work was also supported through CTSI Grant UL1TR000124. We thank participants from both panels and Arleen Brown, M.D., Ph.D., and acknowledge Sitaram S. Vangala and Tristan R. Grogan for contributing statistical analyses.

Grant support

PCORI Pilot project

CTSI Grant UL1TR000124

Appendix Table 1.

MeSH terms included in the MEDLINE search to assess the evidence base on enabling services

| MeSH terms |
|---|
| Outcome and Process Assessment (Health Care); Health Services Research; Cost-Benefit Analysis; Observation; Quality of Health Care; Health Services Accessibility; Patient-Centered Care; Physician-Patient Relations; Continuity of Patient Care; Medically Underserved Area; Poverty; Ethnic Groups; Community Health Centers; Mobile Health Units; Transportation; Transportation of Patients; Delivery of Health Care, Integrated; Case Management; Financing, Personal; Eligibility Determination; Translating; Social Work; Social Welfare; Community Health Workers; Health Fairs |

References

- 1. Politzer RM, Yoon J, Shi L, Hughes RG, Regan J, Gaston MH. Inequality in America: The Contribution of Health Centers in Reducing and Eliminating Disparities in Access to Care. Med Care Res Rev. 2001;58(2):234–248.

- 2. National Association of Community Health Centers. America’s Health Centers. Bethesda, MD: National Association of Community Health Centers. Available at: http://www.nachc.com/client/documents/America’s_Health_Centers_2013.pdf. Accessed July 11, 2013.

- 3. Dievler A, Giovannini T. Community Health Centers: Promise and Performance. Med Care Res Rev. 1998;55(4):405–431.

- 4. The Medical Group Management Association Center for Research. Health Center Enabling Services: A Validation Study of the Methodology Used To Assign a Coding Structure and Relative Value Units To Currently Non-billable Services. 2000.

- 5. The New York Academy of Medicine; Association of Asian Pacific Community Health Organizations. Handbook for Enabling Services. The New York Academy of Medicine; Association of Asian Pacific Community Health Organizations; 2010. Available at: http://www.aapcho.org/resources_db/handbook-for-enabling-services-data-collection/. Accessed May 30, 2013.

- 6. Weir RC, Emerson HP, Tseng W, et al. Use of enabling services by Asian American, Native Hawaiian, and other Pacific Islander patients at 4 community health centers. Am J Public Health. 2010;100(11):2199–2205. doi: 10.2105/AJPH.2009.172270.

- 7. Clarke RMA, Tseng C, Brook RH, Brown AF. Tool used to assess how well community health centers function as medical homes may be flawed. Health Aff (Millwood). 2012;31(3):627–635. doi: 10.1377/hlthaff.2011.0908.

- 8. Patient Protection and Affordable Care Act.

- 9. Gomez O, Day L, Artiga S. Connecting Eligible Immigrant Families to Health Coverage and Care: Key Lessons from Outreach and Enrollment Workers. Washington DC: Kaiser Commission on Medicaid and the Uninsured; 2011. Available at: http://kff.org/disparities-policy/issue-brief/connecting-eligible-immigrant-families-to-health-coverage/. Accessed May 30, 2013.

- 10. Fisher ES, Staiger DO, Bynum JPW, Gottlieb DJ. Creating accountable care organizations: the extended hospital medical staff. Health Aff (Millwood). 2007;26(1):w44–57. doi: 10.1377/hlthaff.26.1.w44.

- 11. McClellan M, McKethan AN, Lewis JL, Roski J, Fisher ES. A national strategy to put accountable care into practice. Health Aff (Millwood). 2010;29(5):982–990. doi: 10.1377/hlthaff.2010.0194.

- 12. Berwick DM. Launching Accountable Care Organizations — The Proposed Rule for the Medicare Shared Savings Program. N Engl J Med. 2011;364(16):e32. doi: 10.1056/NEJMp1103602.

- 13. Doran KM, Misa EJ, Shah NR. Housing as Health Care — New York’s Boundary-Crossing Experiment. N Engl J Med. 2013;369(25):2374–2377. doi: 10.1056/NEJMp1310121.

- 14. Selby JV, Beal AC, Frank L. The Patient-Centered Outcomes Research Institute (PCORI) National Priorities for Research and Initial Research Agenda. JAMA. 2012;307(15):1583–1584.

- 15. Concannon T, Meissner P, Grunbaum J, et al. A New Taxonomy for Stakeholder Engagement in Patient-Centered Outcomes Research. J Gen Intern Med. 2012;27(8):985–991. doi: 10.1007/s11606-012-2037-1.

- 16. Fitch K, Bernstein SJ, Aguilar MD, et al. The RAND/UCLA Appropriateness Method User’s Manual. Santa Monica, CA: RAND; 2001.

- 17. Park RE, Fink A, Brook RH, et al. Physician ratings of appropriate indications for six medical and surgical procedures. Am J Public Health. 1986;76(7):766–772.

- 18. Linstone HA, Turoff M, eds. The Delphi Method: Techniques and Applications. Reading, Massachusetts: Addison-Wesley Publishing Company, Inc.; 1975.

- 19. Shaneyfelt TM, Mayo-Smith MF, Rothwangl J. Are guidelines following guidelines? The methodological quality of clinical practice guidelines in the peer-reviewed medical literature. JAMA. 1999;281(20):1900–1905.

- 20. Kötter T, Blozik E, Scherer M. Methods for the guideline-based development of quality indicators--a systematic review. Implement Sci. 2012;7:21. doi: 10.1186/1748-5908-7-21.

- 21. Turner T, Misso M, Harris C, Green S. Development of evidence-based clinical practice guidelines (CPGs): comparing approaches. Implement Sci. 2008;3:45. doi: 10.1186/1748-5908-3-45.

- 22. Shekelle PG, Kahan JP, Bernstein SJ, Leape LL, Kamberg CJ, Park RE. The reproducibility of a method to identify the overuse and underuse of medical procedures. N Engl J Med. 1998;338(26):1888–1895. doi: 10.1056/NEJM199806253382607.

- 23. Shekelle PG, Kravitz RL, Beart J, Marger M, Wang M, Lee M. Are nonspecific practice guidelines potentially harmful? A randomized comparison of the effect of nonspecific versus specific guidelines on physician decision making. Health Serv Res. 2000;34(7):1429–1448.

- 24. Ayanian JZ, Landrum MB, Normand SL, Guadagnoli E, McNeil BJ. Rating the appropriateness of coronary angiography--do practicing physicians agree with an expert panel and with each other? N Engl J Med. 1998;338(26):1896–1904. doi: 10.1056/NEJM199806253382608.

- 25. McDonnell J, Meijler A, Kahan JP, Bernstein SJ, Rigter H. Panellist consistency in the assessment of medical appropriateness. Health Policy. 1996;37(3):139–152.

- 26. Herrin J, Etchason JA, Kahan JP, Brook RH, Ballard DJ. Effect of panel composition on physician ratings of appropriateness of abdominal aortic aneurysm surgery: elucidating differences between multispecialty panel results and specialty society recommendations. Health Policy. 1997;42(1):67–81.

- 27. Kahan JP, Park RE, Leape LL, et al. Variations by specialty in physician ratings of the appropriateness and necessity of indications for procedures. Med Care. 1996;34(6):512–523.

- 28. Cook WK, Weir RC, Ro M, et al. Improving Asian American, Native Hawaiian, and Pacific Islander health: national organizations leading community research initiatives. Prog Community Health Partnersh. 2012;6(1):33–41. doi: 10.1353/cpr.2012.0008.

- 29. Putre L. Caring for Health Care’s Costliest Patients: Communities Find Ways to Identify and Treat ER “Super-Utilizers.” Robert Wood Johnson Foundation; 2014. Available at: http://www.rwjf.org/content/dam/farm/reports/issue_briefs/2014/rwjf409911. Accessed January 28, 2014.

- 30. Shier G, Ginsburg M, Howell J, Volland P, Golden R. Strong Social Support Services, Such As Transportation And Help For Caregivers, Can Lead To Lower Health Care Use And Costs. Health Aff (Millwood). 2013;32(3):544–551. doi: 10.1377/hlthaff.2012.0170.
