Abstract

Background: For several years now, research teams worldwide have been conducting high-tech research on the development of acupuncture robots. In this article, the design of such an acupuncture robot is presented. Methods: Robot-controlled acupuncture (RCA) equipment consists of three components: (a) acupuncture point localization, (b) acupuncture point stimulation through a robotic arm, and (c) automated detection of a deqi event to assess the efficacy of acupuncture point stimulation. Results: This system is under development at the Department of Computer Science and Information Engineering, National Cheng Kung University, Tainan. Acupuncture point localization and acupuncture point stimulation through a robotic arm work well; however, automated detection of a deqi sensation is still under development. Conclusions: RCA has become a reality and is no longer a distant vision.

Keywords: robot-controlled acupuncture (RCA), robot-assisted acupuncture (RAA), computer-controlled acupuncture (CCA), high-tech acupuncture

1. Introduction

Robots are an indispensable part of healthcare today. They perform services quietly in the background: transporting medical equipment, materials or laundry, shaking test tubes, or filling samples. However, should a robot perform an operation or make a diagnosis? Most of us would have strong reservations about this; we all want an experienced person to conduct operations. The use of robots in the operating room, however, does not exclude the experienced physician; on the contrary, robots are ideal for supporting physicians in their work. A robot cannot and should not replace a doctor, but it can be an important asset. Like other tools, it can take a variety of shapes. For example, surgical robotic systems can be more flexible than a human and can have more than two arms, moved via a console controlled by the physician. Thus, the various robots in medicine are special tools that can support the doctor's work and help ensure better results. The robot's role as a team member in the operating room will certainly grow in the future. Because a highly specialized robot can assist people and work tirelessly, it can make medicine safer. So why forgo the support of such a helper in acupuncture and miss out on its many advantages?

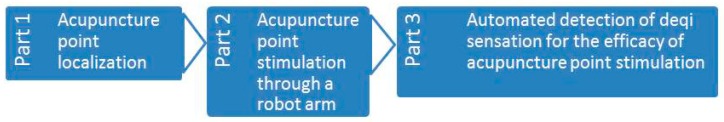

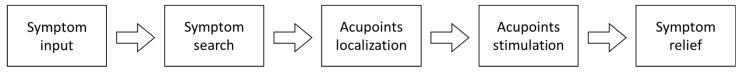

In this article, the design of an automated robotic acupuncture system is presented. The system consists of three main components: (1) acupuncture point localization, (2) acupuncture point stimulation through a robotic arm, and (3) automated detection of a possible deqi event to assess the efficacy of acupuncture point stimulation (still under development). The overall system design is shown schematically in Figure 1.

Figure 1.

Schematic presentation of the robot-controlled acupuncture system. Note: Part 3 is still under development.

Parts 1 and 2 of the system were developed at the Department of Computer Science and Information Engineering at National Cheng Kung University in Tainan. The first measurements will take place at the Traditional Chinese Medicine (TCM) Research Center, Medical University of Graz.

2. Acupuncture Point Localization

TCM is a system of medicine, dating back several thousand years, that seeks to prevent, diagnose and cure illnesses. In TCM, qi is the vital energy circulating through channels called meridians, which connect various parts of the body. Acupuncture points (or acupoints) are special locations on the meridians, and diseases can be treated or relieved by stimulating these points. However, it is not easy to remember all of the acupoint locations and their corresponding therapeutic effects without extensive training.

Traditionally, people use a map or dummy to learn the locations of acupuncture points. Given that more than one-third of the world's population now owns a smartphone [1], a system based on augmented reality (AR) is proposed to localize the acupuncture points. The proposed system includes three stages: symptom input, database search and acupuncture point visualization.

(1) Symptom input: The user interacts with a chatbot on the smartphone to describe her/his symptoms.

(2) Database search: According to the symptom described by the user, a TCM database is searched and symptom-related acupuncture points are retrieved.

(3) Acupoint visualization: Symptom-related acupoints are visualized in an image of the human body.

Unlike traditional acupoint probe devices, which work by measuring skin impedance, the presented system requires no additional hardware and is purely software-based. For mild symptoms (e.g., headache, sleep disorder), the proposed system helps patients quickly locate the corresponding acupuncture points for acupuncture or massage and relieve her/his symptoms without special help from TCM physicians.

While there are studies [2,3] that propose similar ideas (the use of AR for acupoint localization), they did not consider the effect of different body shapes and changes in viewing angle on the accuracy of acupoint localization. In this project, several techniques, including facial landmark points, image deformation and a 3D morphable model (3DMM), are combined to resolve these issues. For clarity, the discussion of acupoint localization focuses on the face, which is one of the most challenging parts of the human body with regard to RCA.

2.1. Image-Based Acupoint Localization

Jiang et al. proposed Acu Glass [2] for acupoint localization and visualization. They created a reference acupoint model for the frontal face, in which the acupoint coordinates are expressed as ratios of the face bounding box returned by the face detector. The reference acupoints are rendered on top of the input face based on the height and width of the subject's face and the distance between the eyes, relative to the reference model. They used Oriented FAST and Rotated BRIEF (ORB) feature descriptors to match feature points between the current frame and the reference frame and thereby estimate the transformation matrix. Instead of scaling the reference acupoints as in [2], Chang et al. [3] implemented a localization system based on cun measurement. Cun is a traditional Chinese unit of length: traditionally, 1 cun is the width of a person's thumb at the knuckle, the width of the two forefingers is 1.5 cun, and the width of four fingers side by side is 3 cun. They used the relative distance between landmarks to convert pixels into cun, assuming that the distance between the hairline and the eyebrow is 3 cun. An acupoint can then be located by its relative distance (in cun) from a landmark point. However, these approaches to acupoint localization have several issues, including:

(i) Differences in face shape between people are not considered.

(ii) A pixel-to-cun conversion based on the frontal face cannot be directly applied to locating acupoints on the side of the face.
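A minimal sketch of the cun-based idea attributed to Chang et al. [3] above: the image scale is taken from the assumed 3-cun hairline-to-eyebrow distance, and a point is then placed a given number of cun from a landmark. The landmark coordinates, the direction vector and the function names are hypothetical and serve only as an illustration. Because one scale measured on a frontal view is applied in every direction, the sketch also makes limitation (ii) easy to see.

```python
import math

# Assumption taken from Chang et al. [3]: hairline-to-eyebrow distance = 3 cun.
HAIRLINE_TO_EYEBROW_CUN = 3.0


def pixels_per_cun(hairline, eyebrow):
    """Image scale in pixels per cun, from two (x, y) landmark positions."""
    return math.dist(hairline, eyebrow) / HAIRLINE_TO_EYEBROW_CUN


def locate_by_cun(landmark, direction, offset_cun, scale):
    """Point lying offset_cun cun from `landmark` along the 2D `direction`."""
    norm = math.hypot(direction[0], direction[1])
    ux, uy = direction[0] / norm, direction[1] / norm
    return (landmark[0] + ux * offset_cun * scale,
            landmark[1] + uy * offset_cun * scale)


# Hypothetical frontal-face landmarks in pixels (the image y axis points down).
hairline, eyebrow = (200.0, 100.0), (200.0, 190.0)
scale = pixels_per_cun(hairline, eyebrow)                # 90 px / 3 cun = 30 px per cun
point = locate_by_cun(eyebrow, (0.0, -1.0), 1.0, scale)  # 1 cun above the eyebrow
print(scale, point)
```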

Since landmark points contain shape information, all landmark points should be used to estimate the location of an acupoint, rather than just a few (as in [3]). In the current project, landmark points are used as the control points for image deformation, and the locations of acupoints are estimated by deforming a reference acupuncture model. In addition, the reference acupuncture model can change dynamically to match different viewing angles. More specifically, a 3D model with labeled acupoints is created so that it can be rotated to match the current viewing angle. Finally, a 3D morphable model for different face shapes is provided: the 3D morphable model is deformed to best fit the input face, and its acupoints are projected to 2D to serve as the reference acupuncture model. A summary of previous studies on acupoint localization is shown in Table 1.

Table 1.

Comparison of related work.

| Method | Angle-aware | Shape-aware | Reference model | Estimation | Limitations |
|---|---|---|---|---|---|
| Chang et al. [3] | No | No | 2D | Cun measurement system, after first converting pixels into cun | (1) Assumes that the hairline is not covered by hair; (2) does not work for the side of the face |
| Jiang et al. [2] | Partially | No | 2D | Scaling of the reference model | (1) The scaling factor is based on the bounding-box ratio returned by the face detector, which can be unreliable; (2) does not work for the side of the face |
| Proposed system | Yes | Yes | 3D | 3DMM, weighted deformation, landmarks | Requires landmark points |

2.2. Landmark Detection

Markers are often used in AR for localization. Instead of placing markers on the user, markerless AR commonly relies on landmark points and landmark detection. Because our application must run in real time, the processing overhead of the landmark detection algorithm is critical, and the complexity of the algorithm must therefore be considered. Real-time face alignment algorithms have been developed [4,5], and the Dlib [6] implementation of [4] is used in the present work.

2.3. Image Deformation

Image deformation maps one image onto another, where the transformed image is obtained by rotating, scaling, shearing or translating the original image. A scaling factor can be estimated from two sets of corresponding points. Jiang et al. [2] used the distance between the eyes and the height of the face as the scaling factors in the x and y directions, and then applied the same transformation to every pixel of the reference acupoint model. However, because these scaling factors are based on a frontal face model, they cannot be applied to the side of the face. In addition, they did not consider different face shapes. In the present work, we use a weighted transformation based on moving least squares [7], in which each control point is weighted according to its distance from the point being deformed, so that nearby control points have more influence. Here, the control points are the landmark points.
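The weighted transformation can be sketched as follows. This is a minimal NumPy version of the affine variant of moving least squares from Schaefer et al. [7], applied to individual points (such as acupoints) rather than to every pixel; `p` holds the control points of the reference model and `q` the corresponding detected landmarks. The function name and the default fall-off exponent `alpha` are our own illustrative choices, not details taken from this work.

```python
import numpy as np


def mls_affine(points, p, q, alpha=1.0, eps=1e-8):
    """Affine moving-least-squares deformation (Schaefer et al. [7]) of 2D points.

    points: (N, 2) points to deform, e.g. reference acupoints.
    p:      (K, 2) source control points, e.g. landmarks of the reference model.
    q:      (K, 2) target control points, e.g. landmarks detected in the image.
    alpha:  fall-off exponent of the weights w_i = 1 / |p_i - v|^(2 * alpha).
    """
    points = np.atleast_2d(np.asarray(points, dtype=float))
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    out = np.empty_like(points)
    for k, v in enumerate(points):
        d2 = np.sum((p - v) ** 2, axis=1)
        if np.any(d2 < eps):
            # MLS interpolates the control points: a control point maps to its target.
            out[k] = q[np.argmin(d2)]
            continue
        w = 1.0 / d2 ** alpha                 # nearby control points weigh more
        p_star = w @ p / w.sum()              # weighted centroids
        q_star = w @ q / w.sum()
        p_hat, q_hat = p - p_star, q - q_star
        wp = p_hat * w[:, None]
        # Best affine matrix for this point, in row-vector form (x -> x M).
        M = np.linalg.solve(wp.T @ p_hat, wp.T @ q_hat)
        out[k] = (v - p_star) @ M + q_star
    return out
```

If the detected landmarks differ from the reference landmarks by one global affine map, the deformation reduces to exactly that map; otherwise each acupoint follows mainly the landmarks closest to it, which is what lets the model adapt to individual face shapes.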

2.4. 3D Morphable Model (3DMM)

Given that the acupoints are defined in 3D space, a 3D face model is useful for acupoint localization. 3D morphable models (3DMMs) [8,9,10,11] are commonly used for face analysis because the intrinsic properties of 3D faces provide a representation that is immune to intra-personal variations. Given a single facial input image, a 3DMM can recover the 3D face via a fitting process. More specifically, a large dataset of 3D face scans is needed to create the morphable face model, which is essentially a multidimensional 3D morphing function based on linear combinations of these scans. By computing the average face and the main modes of variation in the dataset, a parametric face model is created that can generate almost any face.

After the 3DMM is created, a fitting process is required to reconstruct the corresponding 3D face from a new image. This fitting process seeks to minimize the difference between a new input face and its reconstruction by the face model function. Most fitting algorithms for 3DMMs require several minutes to fit a single image. Recently, Huber et al. [12] proposed a fitting method using local image features that leverages cascaded regression, which is widely used in pose estimation and 2D face alignment. Paysan et al. [13] published the Basel Face Model (BFM), which can be obtained under a license agreement; however, they did not provide a fitting framework. Huber et al. [14], on the other hand, released the Surrey Face Model (SFM), which is built from 169 racially diverse scans. SFM is used in the present work, as it provides both the face model and the fitting framework.

2.5. Face Detection (FD) and Tracking

Haar-like features [15] with the AdaBoost learning algorithm [16,17] have been commonly used for object detection, but they tend to have a high false positive rate. Zondag et al. [18] showed that the histogram-of-oriented-gradients (HOG) [19] feature consistently achieves lower average false positive rates than the Haar feature for face detection. In the present work, a face detector based on HOG features and a support vector machine (SVM) was implemented. However, the computational overhead of combining HOG and SVM can be significant when the face detector runs on a smartphone. In addition, the detection rate may drop for a fast-moving or partly occluded subject; for example, during acupuncture or acupressure, part of the face may be covered by the hand so that the face detector misses it. Therefore, a face-tracking mechanism has been implemented to mitigate these problems.

Correlation-based trackers are known for their high speed, which is vital for this application. Bolme et al. proposed the minimum output sum of squared error (MOSSE) correlation filter [20]. Danelljan et al. introduced the discriminative scale space tracker (DSST) [21], which builds on the MOSSE tracker. DSST additionally estimates the target scale and can use multidimensional features such as PCA-HOG [22], which make the tracker more robust to intensity changes. The Dlib [6] implementation of DSST [21] is used in this work.
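To illustrate why correlation-filter tracking is cheap, the sketch below implements a bare single-channel MOSSE filter in the Fourier domain, following the filter form of Bolme et al. [20]: training and detection each cost only a few FFTs. It omits the preprocessing used in practice (log transform, normalization, cosine window) as well as the scale estimation of DSST [21], so it is not the Dlib tracker used in this work; the class name, method names, regularizer and learning rate are illustrative assumptions.

```python
import numpy as np


def gaussian_target(shape, center, sigma=2.0):
    """Desired correlation output: a 2D Gaussian peaked at center = (row, col)."""
    rows, cols = np.indices(shape)
    return np.exp(-((rows - center[0]) ** 2 + (cols - center[1]) ** 2) / (2.0 * sigma ** 2))


class MosseFilter:
    """Bare single-channel MOSSE correlation filter, computed in the Fourier domain."""

    def __init__(self, lam=1e-3, rate=0.125):
        self.lam = lam    # regularizer: avoids dividing by near-zero spectral energy
        self.rate = rate  # learning rate of the running averages in update()
        self.num = None   # running sum of G * conj(F)
        self.den = None   # running sum of F * conj(F)

    def initialize(self, patch, target):
        F, G = np.fft.fft2(patch), np.fft.fft2(target)
        self.num = G * np.conj(F)
        self.den = F * np.conj(F)

    def update(self, patch, target):
        """Online adaptation to a new training patch, done frame by frame."""
        F, G = np.fft.fft2(patch), np.fft.fft2(target)
        self.num = self.rate * G * np.conj(F) + (1.0 - self.rate) * self.num
        self.den = self.rate * F * np.conj(F) + (1.0 - self.rate) * self.den

    def respond(self, patch):
        """Correlation response; its peak marks the predicted target position."""
        h_conj = self.num / (self.den + self.lam)
        return np.real(np.fft.ifft2(np.fft.fft2(patch) * h_conj))
```

Shifting the patch moves the response peak by the same amount, which is how the tracker follows the face between detections.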

2.6. Method—Acupuncture Point Localization

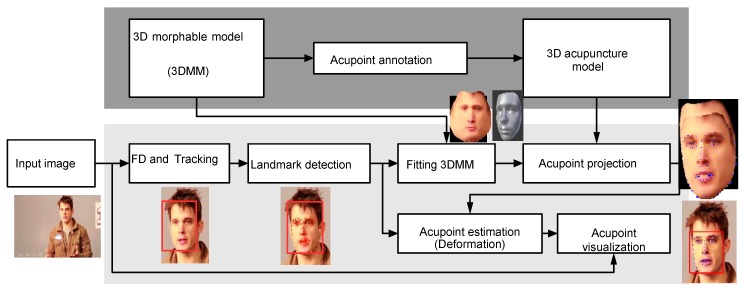

A flow chart of the 3D acupuncture point localization is shown in Figure 2. The offline process is shown in dark gray and the online process in light gray. The acupoints are labeled in the offline process. During the online process, face detection is performed on the input image, and landmark detection is then applied to the face region to extract facial landmark points.

Figure 2.

Flow chart of 3D acupuncture point estimation.

The landmark points are then used to fit the 3D morphable model (3DMM) to the input face, and the fitted 3D model is rotated to the same angle as the input face. Next, the landmark points and acupoints on the 3D model are projected onto 2D. During the acupoint estimation process, the projected acupoints are deformed using the control points, which consist of the projected landmark points and the input landmark points. Finally, the estimated acupoints are warped into the input image to visualize them.

2.6.1. 3D Morphable Model

A facial 3D morphable model (3DMM) is a static model generated from multiple 3D scans of human faces. The 3DMM can be used to reconstruct a 3D model from a 2D image. In this work, the Surrey Face Model (SFM) [14] is used to implement our 3DMM. The generated model is fitted using the landmark points on the 2D image, so that different face shapes can be taken into account.

The generated 3D model is expressed as a shape vector $S = [x_1, y_1, z_1, \ldots, x_n, y_n, z_n]^{\mathsf T}$, where $[x_k, y_k, z_k]^{\mathsf T}$ is the $k$-th of the $n$ vertices. The 3DMM is obtained by applying principal component analysis (PCA) to the training scans, which yields $m$ principal components. A 3D face can then be approximated by a linear combination of the basis vectors:

$$S = \bar{v} + \sum_{i=1}^{m} \alpha_i v_i,$$

where $\bar{v}$ is the mean mesh, $\alpha_i$ are the shape coefficients, $v_i$ are the basis vectors and $m$ is the number of principal components.
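The PCA model above can be built and evaluated in a few lines. This sketch assumes that the training scans are already in dense vertex correspondence and flattened in the $[x_1, y_1, z_1, \ldots]$ order; it keeps the basis orthonormal, whereas some published models additionally store or apply per-component variances. The function names are hypothetical.

```python
import numpy as np


def build_pca_model(scans, m):
    """scans: (num_scans, 3n) array, one flattened mesh [x1, y1, z1, ..., xn, yn, zn] per row.
    Returns the mean mesh v_bar (3n,) and the first m basis vectors v_i as rows of V (m, 3n)."""
    v_bar = scans.mean(axis=0)
    # The right singular vectors of the centred data are the principal directions.
    _, _, vt = np.linalg.svd(scans - v_bar, full_matrices=False)
    return v_bar, vt[:m]


def synthesize(v_bar, V, alpha):
    """S = v_bar + sum_i alpha_i * v_i."""
    return v_bar + alpha @ V


def project_to_model(v_bar, V, S):
    """Shape coefficients of a mesh S; valid because the rows of V are orthonormal."""
    return V @ (S - v_bar)
```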

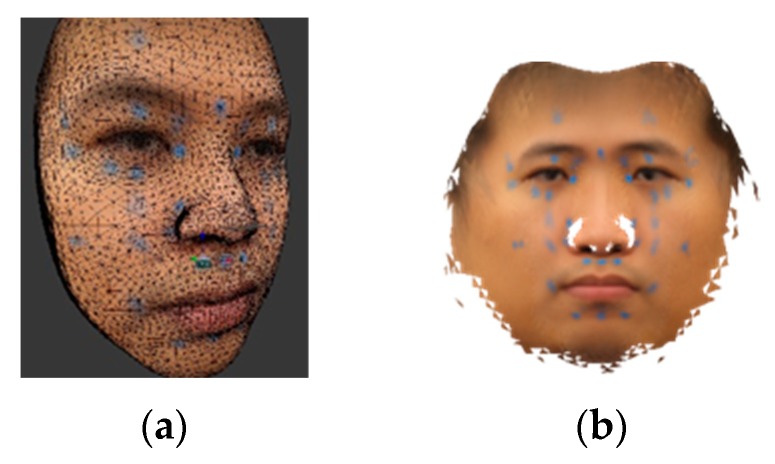

2.6.2. Acupoint Annotation

Face images of a large number of subjects should be captured at different face angles, with blue stickers placed on their faces to annotate the acupoints. To define an acupoint reference model, a mean face will be built for each of thirteen face angles, and all mean faces will then be merged into an isomap texture, as shown in Figure 3b. The texture and the mean model of the 3DMM are then loaded into 3D graphics software. For every acupoint, the 3D coordinates of the center point of the blue sticker and its three nearest vertices are marked. The coordinates of the landmark points are marked as well.

Figure 3.

3D acupoint annotation. (a) acupoint annotation, (b) isomap texture.

2.6.3. 3D Acupuncture Model

The 3D acupuncture model contains the metadata labeled during acupoint annotation, including the indices of the landmark vertices (denoted $L_{\mathrm{index}}$), the 3D coordinates of the acupoints (denoted $A_{\mathrm{ref}}^{\mathrm{3D}}$), and the indices of the three vertices nearest to each acupoint (denoted $A_{\mathrm{nei}}^{\mathrm{index}}$).

2.6.4. Face Detection and Tracking

We plan to implement a face detector using an SVM with HOG-based features. In addition, a DSST-based tracker will be employed to speed up the system and to reduce face detection failures when the face is occluded by the hand during acupuncture or acupressure. Once the face has been detected, it is followed by the tracker.

The peak-to-sidelobe ratio (PSR) [23] measures the sharpness of the peak of the correlation plane and is commonly used to evaluate tracking quality. To estimate the PSR, the peak is located first. Then the mean μ and standard deviation σ of the sidelobe region, i.e., the correlation plane excluding a mask centered at the peak, are computed, and the PSR is given by (peak - μ)/σ. In this work, an 11 × 11 pixel area is used as the central mask.
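The PSR computation described above translates directly into NumPy. The sketch locates the peak, excludes an 11 × 11 window around it, and compares the peak with the statistics of the remaining sidelobe; the function name and the small constant guarding against a zero standard deviation are our additions.

```python
import numpy as np


def peak_to_sidelobe_ratio(response, mask_size=11):
    """PSR = (peak - mean(sidelobe)) / std(sidelobe), where the sidelobe is the
    response map minus a mask_size x mask_size window centred on the peak."""
    r0, c0 = np.unravel_index(np.argmax(response), response.shape)
    peak = response[r0, c0]
    half = mask_size // 2
    sidelobe = np.ones(response.shape, dtype=bool)
    # Exclude the central window (clipped at the top/left image border).
    sidelobe[max(r0 - half, 0):r0 + half + 1, max(c0 - half, 0):c0 + half + 1] = False
    values = response[sidelobe]
    return (peak - values.mean()) / (values.std() + 1e-12)
```

A sharp, isolated peak gives a large PSR, while a flat or noisy response gives a small one, which is what makes the PSR usable as a tracking-failure signal.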

Once the face is detected, the face area is used to calculate the filter $H_l$, which is then used to track the face in subsequent frames. If the PSR falls below a defined threshold, the face is re-detected to avoid losing track of it. For every face detection (FD) frame, a search region slightly larger than the current face region is selected, and the face is re-located within this region to keep the face region tightly constrained. A constrained face region improves the accuracy of landmark detection.
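The control logic of this paragraph can be sketched as two small helpers: one chooses between tracking and re-detection from the PSR, and one enlarges the current face box into the search region used to re-locate the face. The threshold value (7.0), the enlargement factor in the usage example and all names are hypothetical, since the values used in this work are not given here.

```python
from dataclasses import dataclass

PSR_THRESHOLD = 7.0  # hypothetical value; the threshold used in this work is not stated


@dataclass
class Box:
    x: int  # left
    y: int  # top
    w: int  # width
    h: int  # height


def choose_action(psr):
    """Keep the tracker while its peak is sharp; otherwise fall back to detection."""
    return "track" if psr >= PSR_THRESHOLD else "redetect"


def expand_box(box, scale, img_w, img_h):
    """Enlarge a face box about its center by `scale`, clipped to the image."""
    cx, cy = box.x + box.w / 2, box.y + box.h / 2
    half_w, half_h = box.w * scale / 2, box.h * scale / 2
    x0 = max(int(round(cx - half_w)), 0)
    y0 = max(int(round(cy - half_h)), 0)
    x1 = min(int(round(cx + half_w)), img_w)
    y1 = min(int(round(cy + half_h)), img_h)
    return Box(x0, y0, x1 - x0, y1 - y0)
```

For example, `expand_box(Box(100, 100, 50, 50), 1.2, 640, 480)` yields a 60 × 60 search region with the same center; near the image border the region is clipped rather than shifted.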

2.6.5. Landmark Detection

For computational speed, an ensemble of regression trees [4] is used for landmark detection. Differences in pixel intensity between pairs of pixels, indexed relative to the current landmark estimates, are used as the features to train the regression trees. Each tree-based regressor is learned using the gradient boosting tree algorithm [24].
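A simplified sketch of such shape-indexed pixel-difference features: each pixel is defined by an offset from one landmark, so the sampled locations move with the current shape estimate. Unlike Kazemi and Sullivan [4], it does not warp the offsets with a similarity transform from the mean shape, and the pair list is hypothetical; in [4], candidate pairs are sampled at random and the tree splits select among them.

```python
import numpy as np


def pixel_difference_features(image, landmarks, pairs):
    """Shape-indexed pixel-difference features (simplified).

    image:     2D grayscale array.
    landmarks: (L, 2) array of current landmark estimates as (x, y).
    pairs:     list of ((la, dxa, dya), (lb, dxb, dyb)); each pixel is an offset
               (dx, dy) from landmark index la or lb.
    """
    h, w = image.shape

    def sample(idx, dx, dy):
        # Round to the nearest pixel and clamp to the image borders.
        x = min(max(int(round(landmarks[idx][0] + dx)), 0), w - 1)
        y = min(max(int(round(landmarks[idx][1] + dy)), 0), h - 1)
        return float(image[y, x])

    return np.array([sample(*a) - sample(*b) for a, b in pairs])
```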

2.6.6. Fitting 3DMM

2D landmarks are used to fit the 3DMM, i.e., to find the optimal PCA shape coefficients. The goal is to minimize the distance between the detected 2D landmarks and the re-projected landmark vertices of the model.
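If the camera pose is held fixed, this minimization is a linear least-squares problem in the PCA coefficients, which the following sketch solves directly. It assumes an affine camera (a 2 × 3 matrix `P` plus a 2D offset `t`) and adds a small ridge term λ‖α‖² to keep the coefficients plausible. Practical fitters, including the framework accompanying SFM [14], also estimate the pose, often alternating it with the shape; the names and the value of λ here are illustrative.

```python
import numpy as np


def fit_shape_coefficients(x2d, v_bar, V, landmark_idx, P, t, lam=1e-3):
    """Least-squares PCA coefficients for a fixed affine camera.

    x2d:          (L, 2) detected 2D landmarks.
    v_bar, V:     mean mesh (3n,) and basis (m, 3n) in [x1, y1, z1, ...] order.
    landmark_idx: (L,) model vertex index for each detected landmark.
    P, t:         (2, 3) affine projection and (2,) image offset.
    Minimizes sum_j |x_j - (P (v_bar_j + V_j alpha) + t)|^2 + lam * |alpha|^2.
    """
    m = V.shape[0]
    mean_pts = v_bar.reshape(-1, 3)[landmark_idx]             # (L, 3)
    basis = V.reshape(m, -1, 3)[:, landmark_idx, :]           # (m, L, 3)
    # Each landmark gives two equations (x and y) that are linear in alpha.
    A = np.einsum("ij,klj->lik", P, basis).reshape(-1, m)     # (2L, m)
    b = (x2d - (mean_pts @ P.T + t)).reshape(-1)              # (2L,)
    return np.linalg.solve(A.T @ A + lam * np.eye(m), A.T @ b)
```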

2.6.7. Acupoint Projection

The pose estimation problem is often referred to as the perspective-n-point (PnP) problem [25,26]. Given a set of 2D points and their corresponding 3D points, a pose estimation function estimates the pose by minimizing the re-projection error. Its output can transform a 3D model from model space to camera space. We plan to use the pose estimation algorithm included in the SFM fitting framework [14]. In our case, the 2D points are obtained from landmark detection, while the 3D points are labeled in the offline stage.
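Once a PnP solver has produced the rotation R and translation t (OpenCV's `cv2.solvePnP` is one widely used implementation, not necessarily the one in the SFM framework), projecting the labeled 3D acupoints into the image is a plain pinhole projection, sketched below. The intrinsic matrix K, the yaw-only rotation helper and the example numbers are assumptions for illustration; the same projection is what the re-projection error compares against the detected 2D points.

```python
import numpy as np


def rotation_y(yaw_rad):
    """Rotation about the vertical (y) axis, e.g. a head turned left or right."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def project_points(X, R, t, K):
    """Pinhole projection of (N, 3) model-space points to (N, 2) pixel coordinates,
    using the model-to-camera rotation R, translation t and intrinsic matrix K."""
    Xc = X @ R.T + t          # model space -> camera space
    uvw = Xc @ K.T            # camera space -> homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]
```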

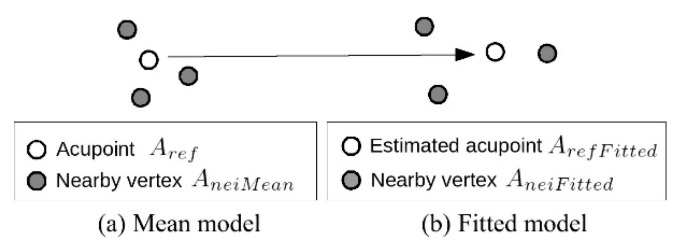

2.6.8. Acupoint Estimation

The acupoint estimation problem is treated as an image deformation process, using image deformation by moving least squares [7]. The basic affine transform of the deformation is defined as $l_v(x) = Mx + T$, where $M$ is a matrix that controls scaling, rotation and shear, $x$ is a 2D point, and $T$ controls the translation. Let $f$ be the image deformation function, such that $f(p) = q$ transforms a set of control points $p$ to new positions $q$.
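For reference, the affine variant of moving least squares in Schaefer et al. [7] chooses, separately for every point $v$ to be deformed, the $M$ and $T$ that minimize $\sum_i w_i \lVert M p_i + T - q_i \rVert^2$, where the weights decay with the distance between $v$ and each control point and $\alpha > 0$ sets how fast. Transcribed to the column-vector form $l_v(x) = Mx + T$ used here (the paper itself uses row vectors), the closed-form solution is:

$$
w_i = \frac{1}{\lVert p_i - v \rVert^{2\alpha}}, \qquad
p_* = \frac{\sum_i w_i p_i}{\sum_i w_i}, \qquad
q_* = \frac{\sum_i w_i q_i}{\sum_i w_i}
$$

$$
\hat{p}_i = p_i - p_*, \qquad \hat{q}_i = q_i - q_*, \qquad
M = \Big(\sum_j w_j \hat{q}_j \hat{p}_j^{\mathsf T}\Big)\Big(\sum_i w_i \hat{p}_i \hat{p}_i^{\mathsf T}\Big)^{-1}
$$

$$
T = q_* - M p_*, \qquad f(v) = l_v(v) = M (v - p_*) + q_*
$$

When the $q_i$ are an exact affine image of the $p_i$, this reduces to that single global affine map.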

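As shown in Figure 4, the reference acupoints $A_{\mathrm{ref}}$ are only available in the mean model, so the acupoint locations $A_{\mathrm{ref}}^{\mathrm{fitted}}$ in the fitted model are estimated using the neighboring vertices as control points (Equation (1)). The fitted acupoints are then mapped onto the input face using the fitted and input landmarks as control points (Equation (2)).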

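$$A_{\mathrm{ref}}^{\mathrm{fitted}} = \operatorname{deform}\left(A_{\mathrm{nei}}^{\mathrm{mean}},\ A_{\mathrm{nei}}^{\mathrm{fitted}},\ A_{\mathrm{ref}}\right) \tag{1}$$

$$A_{\mathrm{in}} = \operatorname{deform}\left(L_{\mathrm{fitted}},\ L_{\mathrm{in}},\ A_{\mathrm{ref}}^{\mathrm{fitted}}\right) \tag{2}$$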

Figure 4.

Visualized acupoint during the estimation process. (a) Mean model, (b) Fitted model.

2.6.9. Acupoint Visualization

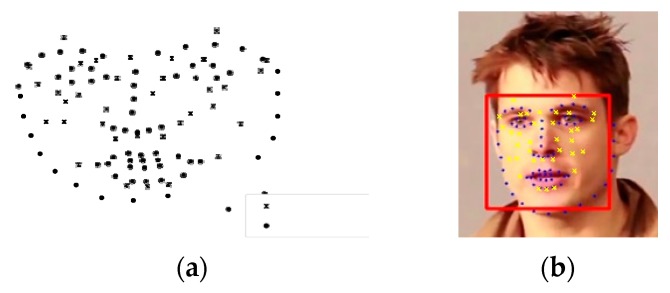

Landmark points (shown as dots) and acupoints (shown as crosses) are illustrated in Figure 5a. The landmark points in the input image are used as the destination control points, while the landmark points in the reference model (i.e., the fitted 3DMM) are used as the source control points to deform the reference acupoints. The deformed acupoints are then visualized on the input image, as shown in Figure 5b. The warping not only visualizes the acupoints but also accounts for non-rigid parts of the face, such as facial expressions or an opening mouth.

Figure 5.

Acupoint visualization. (a) acupuncture model; (b) warped acupoints.

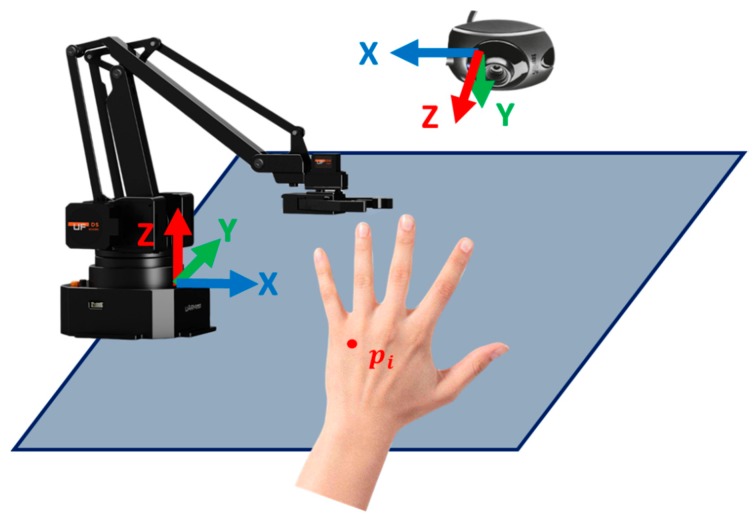

3. Automated Acupoint Stimulation

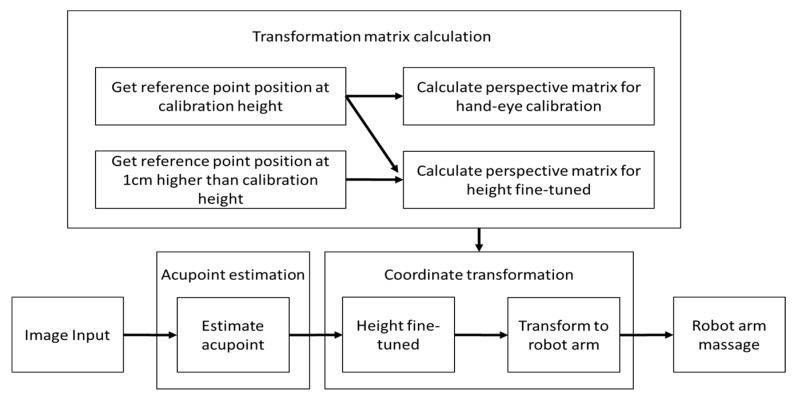

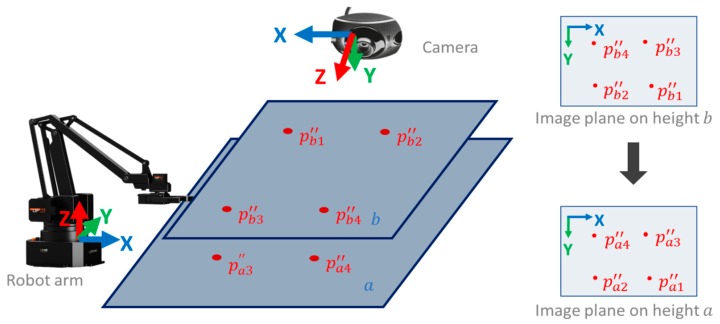

The second part of this future-oriented work is to build a system for automated acupuncture. Because depth cameras are still quite expensive (8–10 times the price of a 2D camera), this project plans to build an automated acupuncture system with an inexpensive 2D monocular camera and a robot arm. The main research question is how to map acupoint locations from image space to robot arm space. The system architecture is shown in Figure 6.

Figure 6.

System architecture.

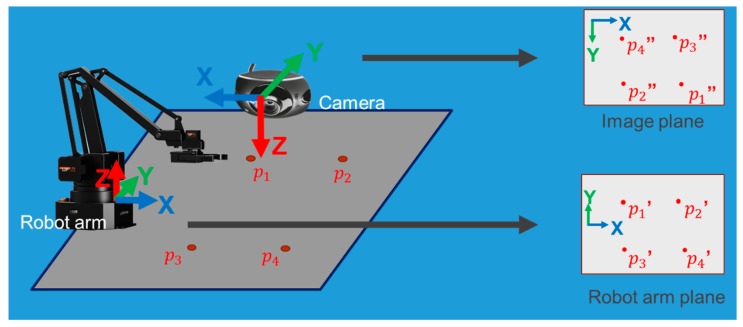

3.1. Hand-Eye Calibration

Determining the relationship between the camera and the robot arm is referred to as the hand-eye calibration problem; here, it amounts to transforming a target position in the image plane into the corresponding position in the robot arm plane. Because a 2D camera is used, we limit the conversion to mapping from the 2D image plane to the X-Y plane of the robot arm. In this project, a perspective coordinate transformation, also called an 8-parameter coordinate transformation [27], is used; unlike an affine coordinate transformation, it does not have to preserve parallelism.

The present system can be divided into three main parts: acupoint estimation, transformation matrix calculation and coordinate transformation. Transformation matrix calculation estimates the matrix used by the coordinate transformation and is performed only once, when the system is first set up (Figure 7). The camera first captures an input image, which is passed to the acupoint estimation part (described in the acupoint localization section above) to obtain the image coordinates of the acupuncture points. These image coordinates are then passed to the coordinate transformation part to obtain the robot arm coordinates of the acupoints.

Figure 7.

System components for robot arm acupuncture or massage.

3.2. Transformation Matrix Calculation

A perspective transformation was used here to transform coordinates between the image plane and the robot arm plane:

| (3) |

Equation (3) transforms a point from the original coordinates (x, y) to the destination coordinates (X, Y). The eight parameters (a–h) control in-plane rotation, out-of-plane rotation, scaling, shearing and translation. The goal is to calculate the eight unknowns (a–h), so that Equation (5) can be used to transform coordinates from the origin to the destination. Because each point correspondence yields two equations, at least four points known in both coordinate systems are required to determine the eight unknowns. Intuitively, using more known reference positions to solve the equations can reduce errors and increase accuracy. With more than four correspondences, the system is overdetermined, and the method of least squares, a standard approach in regression analysis, approximates its solution. Balancing calibration time against the spatial distribution of the points, we selected 13 reference points, giving 13 points known in both systems, and solved the perspective transformation equation. The least squares solution can be found with linear algebra (4):

| (4) |

| (5) |

Since the original Equation (3) is nonlinear, it must be linearized, as in Equation (5), before it can be solved with linear algebra.

Finally, the linearized equations can be written as a linear system in the eight unknowns, and Equation (4) is used to find the least squares solution of the perspective transformation. We apply the perspective transformation to the camera and the robot arm to perform hand-eye calibration, as shown in Figure 8. The image plane is taken as the original coordinate system and the robot arm plane as the destination coordinate system to calculate the perspective matrix (Equations (6) and (7)).

| (6) |

| (7) |

Figure 8.

Diagram of hand-eye calibration.
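The calibration step can be sketched in Python with NumPy. It uses the common form X = (ax + by + c)/(gx + hy + 1), Y = (dx + ey + f)/(gx + hy + 1); the exact assignment of the letters a–h may differ from Equation (3). Multiplying out the denominator turns each point pair into two equations that are linear in a–h, so four pairs determine the parameters and more pairs (13 in this work) give an overdetermined system solved by least squares. We call `np.linalg.lstsq` rather than forming the normal equations explicitly; for a full-rank system both give the same least-squares solution, but the former is numerically better behaved. The function names and test data are hypothetical.

```python
import numpy as np


def fit_perspective(src, dst):
    """Least-squares estimate of the 8 parameters (a..h) of
        X = (a x + b y + c) / (g x + h y + 1),  Y = (d x + e y + f) / (g x + h y + 1)
    from N >= 4 point pairs (src: image coordinates, dst: robot arm coordinates)."""
    rows, rhs = [], []
    for (x, y), (X, Y) in zip(np.asarray(src, float), np.asarray(dst, float)):
        # X (g x + h y + 1) = a x + b y + c, rearranged to be linear in a..h.
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * X, -y * X])
        rhs.append(X)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -x * Y, -y * Y])
        rhs.append(Y)
    params, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return params


def to_matrix(params):
    """3x3 perspective matrix with bottom-right entry fixed to 1."""
    a, b, c, d, e, f, g, h = params
    return np.array([[a, b, c], [d, e, f], [g, h, 1.0]])


def apply_perspective(H, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    hom = np.c_[pts, np.ones(len(pts))] @ H.T
    return hom[:, :2] / hom[:, 2:3]
```

With pixel coordinates in the hundreds, the columns of the linear system differ by orders of magnitude; normalizing both point sets before fitting, and undoing the normalization afterwards, is a common way to improve conditioning.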

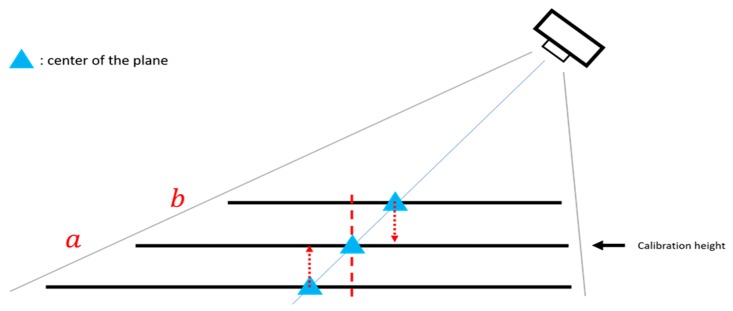

Keep in mind that the camera may be placed to the side and that different acupoints may lie at different heights. The transformation is calibrated at a single height, so when the coordinates of an acupoint at its actual height are projected onto the calibration height, the resulting point may not be the acupoint position seen in the image; this causes an estimation error, as shown in Figure 9.

Figure 9.

Errors caused by different heights.
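A simplified geometric model indicates how large this error can become. Assume that the camera's center of projection lies at height $H$ above the calibration plane, and that an acupoint at height $h$ lies at horizontal distance $d$ from the point directly below the camera center. The camera ray through the acupoint meets the calibration plane at distance $d'$, so by similar triangles:

$$
d' = d\,\frac{H}{H-h}, \qquad \Delta = d' - d = d\,\frac{h}{H-h}
$$

With illustrative values $H = 500$ mm, $h = 50$ mm and $d = 100$ mm (not measurements from this system), the error is $\Delta = 100 \times 50 / 450 \approx 11.1$ mm. The error grows with both the acupoint height and its lateral distance from the camera, which motivates a height-dependent correction.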

Therefore, a perspective transformation is applied again, between image planes at different heights, to obtain a height fine-tuning matrix. The image plane at the calibration height (plane a) is taken as the original coordinate system and the image plane at a height above the calibration height (plane b) as the destination coordinate system, from which the corresponding perspective matrix is calculated, as shown in Figure 10.

Figure 10.

Diagram of the fine-tuned height.

Combining Equations (7) and (8), we get Equation (9), which converts the acupoint from the image space to the robot arm space, as shown in Figure 11.

| (8) |

| (9) |

Figure 11.

Diagram of the practical application.
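Combining two perspective matrices, as in Equation (9), amounts to a 3 × 3 matrix product in homogeneous coordinates, so the calibration mapping and the height correction can be applied as a single transform at run time. The sketch below checks this numerically; which matrix plays which role follows Figures 8 to 11, while the function names and the matrices used in the check are arbitrary illustrations.

```python
import numpy as np


def apply_h(H, pts):
    """Apply a 3x3 perspective matrix to (N, 2) points via homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    hom = np.c_[pts, np.ones(len(pts))] @ H.T
    return hom[:, :2] / hom[:, 2:3]


def compose(h_second, h_first):
    """One matrix equivalent to applying h_first and then h_second,
    rescaled so that its bottom-right entry is 1 (the 8-parameter form)."""
    H = h_second @ h_first
    return H / H[2, 2]
```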

4. Automated Detection of Deqi Event

The arrival of qi (deqi) is considered to be related to acupuncture efficacy [28]. Deqi can be assessed through the acupuncturist's sensation and the patient's reaction.

Although a deqi sensation scale is considered an important qualitative and quantitative measuring tool, there is still no standardized or reliable deqi scale, owing to a lack of sufficient evidence [29,30,31,32]. In 1989, the Vincent deqi scale was developed based on the McGill pain questionnaire. The Park deqi scale and the MacPherson deqi scale followed [33,34].

The Massachusetts General Hospital acupuncture sensation scale (MASS) [35] is composed of various needling sensations and includes a system for measuring the spread of deqi and the patient's anxiety level during needling. These scales mainly focus on the patient's sensations and are rather subjective. Ren et al. [36] conducted a survey on acupuncturists' perspectives on deqi; again, their results are based on subjective questionnaires collected from a small number of acupuncturists [36].

Recent reports [37,38,39] show that the effects of acupuncture are reflected in electroencephalogram (EEG) changes. Therefore, the EEG can also be used as an objective indicator of deqi, as EEG changes are significantly associated with autonomic nervous functions. Observing EEG changes in relation to acupuncture is more objective than using a questionnaire.

While observing the deqi effect via EEG is considered a more objective measure, the results of prior studies are not consistent. Yin et al. [40] observed significant changes in alpha band EEG power during deqi periods, while Streitberger et al. [41] only found significant changes in the alpha1 band. Other studies [37,39,42,43] have shown that EEG power increases in all frequency bands, including the alpha (8–13 Hz), beta (13–30 Hz), theta (4–8 Hz) and delta (0.5–4 Hz) bands, in the frontal, temporal and occipital lobes when subjects report a deqi effect. Note that the number of subjects in these studies is generally small (10–20 people) and that different acupuncture points are used in different studies. Furthermore, although deqi sensation is commonly believed to be the most important factor in clinical efficacy during acupuncture treatment, the detection of deqi mostly relies on the participant's subjective sensations and sometimes on the acupuncturist's perceptions. Differences have been found between a patient's actual experience and the acupuncturist's sensation [44]. In clinical practice, many acupuncturists can only detect deqi effects from the patient's report or by observing the patient's reaction.

In this context, we plan to design a portable device that collects EEG signals to detect deqi effects automatically. Such a device could be useful in clinical practice and in acupuncture training at schools. It should detect deqi events automatically and report them wirelessly to the acupuncturist.
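As an illustration of the kind of processing such a device would need, the sketch below computes the relative power of one EEG channel in the four bands listed above from a Hann-windowed periodogram. It is not a deqi detector: the band edges follow the text, while the estimator, the function name and the normalization to relative power are our assumptions (Welch's method is a common, lower-variance alternative to a single periodogram).

```python
import numpy as np

# Band edges in Hz, as listed in the text.
EEG_BANDS = {"delta": (0.5, 4.0), "theta": (4.0, 8.0),
             "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def relative_band_powers(signal, fs):
    """Share of the summed delta-to-beta power that falls in each band (one channel)."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                  # remove the DC offset
    window = np.hanning(len(x))                       # taper to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(x * window)) ** 2   # unscaled periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    power = {name: spectrum[(freqs >= lo) & (freqs < hi)].sum()
             for name, (lo, hi) in EEG_BANDS.items()}
    total = sum(power.values())
    return {name: float(value / total) for name, value in power.items()}
```

Comparing these shares between a baseline period and the needling period is one simple way to quantify the band-specific changes reported in the studies cited above.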

5. Discussion

Reports on medical robotics [45] describe applications in the field of acupuncture. In the humor section of the online publication Science-Based Medicine, Jones [46] depicts a patient being treated for fatigue syndrome by an acupuncturist and an acupuncture anesthetist who use robotic acupuncture.

It is interesting, as Jones states, that one of the greatest strengths of acupuncture, namely the direct connection between the doctor and the acupuncture needle, is also one of its major weaknesses. As mentioned in the introduction, surgeons use robotic technology to perform an increasing number of minimally invasive procedures [45].

The healing art of acupuncture is deeply rooted in ancient Eastern culture, but the modern technology now being used to extend it comes from Western medicine [45,46]. Jones notes that state-of-the-art medical robotic technologies feature high-resolution 3D magnification systems and instruments that can maneuver with far greater precision than the human wrist and fingers. This allows specially trained acupuncturists to locate and precisely target hard-to-reach acupuncture points (e.g., those next to the eye or in the genital area), which could expand the range of indications suitable for acupuncture [45].

However, robot-assisted acupuncture is not the only high-tech therapeutic option in complementary medicine. Other methods have been incorporating modern technology into their protocols for years. Chiropractors, for example, use the latest high-tech electronic devices to help localize subluxations in the spine; once a reliable diagnosis has been made, these can be treated using traditional hands-on techniques [45,46].

6. Conclusions

In 1997, our research team at the Medical University of Graz showed how acupuncture worked without the aid of an acupuncturist, and we introduced the term “computer-controlled acupuncture” [47]. However, we did not imply that the computer would replace the acupuncturist; rather, we sought to highlight the quantification of the measurable effects of acupuncture. This vision of “robot-controlled acupuncture” is now a reality [47,48,49,50], and the research work in this article clearly shows its present status. Further modernization of acupuncture, such as automatic, artificial-intelligence-based detection of dynamic pulse reactions for robot-controlled ear acupuncture, is under development.

Author Contributions

K.-C.L. created and built the acupuncture robot, G.L. designed the manuscript with the help of colleagues in Tainan, and both authors read the final manuscript.

Funding

This joint publication was supported by funds from the MOST (Ministry of Science and Technology) by a MOST Add-on Grant for International Cooperation (leader in Taiwan: Prof. Kun-Chan Lan (K.-C.L.), leader in Austria: Prof. Gerhard Litscher (G.L.)). Robot-Controlled Acupuncture – Demo video: https://www.youtube.com/watch?v=NvCV0dSq7TY.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Statista, Smartphone user penetration as percentage of total global population from 2014 to 2021. [(accessed on 22 May 2019)]; Available online: https://www.statista.com/statistics/203734/global-smartphone-penetration-per-capita-since-2005.

- 2.Jiang H., Starkman J., Kuo C.H., Huang M.C. Acu glass: Quantifying acupuncture therapy using google glass; Proceedings of the 10th EAI International Conference on Body Area Networks; Sydney, Australia. 28–30 September 2015; pp. 7–10. [Google Scholar]

- 3.Chang M., Zhu Q. Automatic location of facial acupuncture-point based on facial feature points positioning; Proceedings of the 5th International Conference on Frontiers of Manufacturing Science and Measuring Technology (FMSMT); Taiyuan, China. 24–25 June 2017. [Google Scholar]

- 4. Kazemi V., Sullivan J. One millisecond face alignment with an ensemble of regression trees; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA. 23–28 June 2014; pp. 1867–1874.

- 5. Ren S., Cao X., Wei Y., Sun Y. Face alignment at 3000 fps via regressing local binary features; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Columbus, OH, USA. 23–28 June 2014; pp. 1685–1692.

- 6. King D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009;10:1755–1758.

- 7. Schaefer S., McPhail T., Warren J. Image deformation using moving least squares. ACM Trans. Graph. 2006;25:533–540. doi: 10.1145/1141911.1141920.

- 8. Kim J., Kang D.I. Partially automated method for localizing standardized acupuncture points on the heads of digital human models. Evid. Based Complement. Altern. Med. 2015:1–12. doi: 10.1155/2015/483805.

- 9. Zheng L., Qin B., Zhuang T., Tiede U., Höhne K.H. Localization of acupoints on a head based on a 3D virtual body. Image Vis. Comput. 2005;23:1–9. doi: 10.1016/j.imavis.2004.03.005.

- 10. Kim J., Kang D.I. Positioning standardized acupuncture points on the whole body based on X-ray computed tomography images. Med. Acupunct. 2014;26:40–49. doi: 10.1089/acu.2013.1002.

- 11. Blanz V., Vetter T. A morphable model for the synthesis of 3D faces; Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH); Los Angeles, CA, USA. 8–13 August 1999; pp. 187–194.

- 12. Huber P., Feng Z.H., Christmas W., Kittler J., Rätsch M. Fitting 3D morphable models using local features; Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP); Quebec City, QC, Canada. 27–30 September 2015; pp. 1195–1199.

- 13. Paysan P., Knothe R., Amberg B., Romdhani S., Vetter T. A 3D face model for pose and illumination invariant face recognition; Proceedings of the 6th IEEE International Conference on Advanced Video and Signal based Surveillance (AVSS) for Security, Safety and Monitoring in Smart Environments; Genova, Italy. 2–4 September 2009.

- 14. Huber P., Hu G., Tena R., Mortazavian P., Koppen P., Christmas W., Ratsch M.J., Kittler J. A multiresolution 3D morphable face model and fitting framework; Proceedings of the 11th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications; Rome, Italy. 27–29 February 2016.

- 15. Viola P., Jones M. Rapid object detection using a boosted cascade of simple features; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); Kauai, HI, USA. 8–14 December 2001; pp. 511–518.

- 16. Wilson P.I., Fernandez J. Facial feature detection using Haar classifiers. J. Comput. Sci. Coll. 2006;21:127–133.

- 17. Chen Q., Georganas N.D., Petriu E.M. Hand gesture recognition using Haar-like features and a stochastic context-free grammar. IEEE Trans. Instrum. Meas. 2008;57:1562–1571. doi: 10.1109/TIM.2008.922070.

- 18. Zondag J.A., Gritti T., Jeanne V. Practical study on real-time hand detection; Proceedings of the 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops; Amsterdam, The Netherlands. 10–12 September 2009; pp. 1–8.

- 19. Dalal N., Triggs B. Histograms of oriented gradients for human detection; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); San Diego, CA, USA. 20–25 June 2005; pp. 886–893.

- 20. Bolme D., Beveridge J.R., Draper B.A., Lui Y.M. Visual object tracking using adaptive correlation filters; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR); San Francisco, CA, USA. 13–18 June 2010; pp. 2544–2550.

- 21. Danelljan M., Häger G., Khan F., Felsberg M. Accurate scale estimation for robust visual tracking; Proceedings of the British Machine Vision Conference (BMVC); Nottingham, UK. 1–5 September 2014.

- 22. Felzenszwalb P.F., Girshick R.B., McAllester D., Ramanan D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010;32:1627–1645. doi: 10.1109/TPAMI.2009.167.

- 23. Chen J., Gu H., Wang H., Su W. Mathematical analysis of main-to-sidelobe ratio after pulse compression in pseudorandom code phase modulation CW radar; Proceedings of the 2008 IEEE Radar Conference; Rome, Italy. 26–30 May 2008; pp. 1–5.

- 24. Jiang J., Jiang J., Cui B., Zhang C. Tencent Boost: A Gradient Boosting Tree System with Parameter Server; Proceedings of the 2017 IEEE 33rd International Conference on Data Engineering (ICDE); San Diego, CA, USA. 19–22 April 2017; pp. 281–284.

- 25. Fischler M.A., Bolles R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM. 1981;24:381–395. doi: 10.1145/358669.358692.

- 26. Grest D., Petersen T., Krüger V. A comparison of iterative 2D-3D pose estimation methods for real-time applications; Proceedings of the 16th Scandinavian Conference on Image Analysis; Oslo, Norway. 15–18 June 2009; pp. 706–715.

- 27. The Homography transformation. Available online: http://www.corrmap.com/features/homography_transformation.php (accessed on 23 May 2019).

- 28. Liang F.R., Zhao J.P. Acupuncture and Moxibustion. People’s Medical Publishing House; Beijing, China: 2012.

- 29. Xu S., Huang B., Zhang C.Y., Du P., Yuan Q., Bi G.J., Zhang G.B., Xie M.J., Luo X., Huang G.Y., et al. Effectiveness of strengthened stimulation during acupuncture for the treatment of Bell palsy: A randomized controlled trial. CMAJ. 2013;185:473–479. doi: 10.1503/cmaj.121108.

- 30. Park J.E., Ryu Y.H., Liu Y. A literature review of de qi in clinical studies. Acupunct. Med. 2013;31:132–142. doi: 10.1136/acupmed-2012-010279.

- 31. Chen J., Li G., Zhang G., Huang Y., Wang S., Lu N. Brain areas involved in acupuncture needling sensation of de qi: A single-photon emission computed tomography (SPECT) study. Acupunct. Med. 2012;30:316–323. doi: 10.1136/acupmed-2012-010169.

- 32. Sandberg M., Lindberg L., Gerdle B. Peripheral effects of needle stimulation (acupuncture) on skin and muscle blood flow in fibromyalgia. Eur. J. Pain. 2004;8:163–171. doi: 10.1016/S1090-3801(03)00090-9.

- 33. Park J., Lee H., Lim S. Deqi sensation between the acupuncture-experienced and the naive: A Korean study II. Am. J. Chin. Med. 2005;33:329–337. doi: 10.1142/S0192415X0500293X.

- 34. MacPherson H., Asghar A. Acupuncture needle sensations associated with De Qi: A classification based on experts’ ratings. J. Altern. Complement. Med. 2006;12:633–637. doi: 10.1089/acm.2006.12.633.

- 35. Kong J., Fufa D.T., Gerber A.J. Psychophysical outcomes from a randomized pilot study of manual, electro, and sham acupuncture treatment on experimentally induced thermal pain. J. Pain. 2005;6:55–64. doi: 10.1016/j.jpain.2004.10.005.

- 36. Ren Y.L., Guo T.P., Du H.B., Zheng H.B., Ma T.T. A survey of the practice and perspectives of Chinese acupuncturists on deqi. Evid. Based Complement. Altern. Med. 2014. doi: 10.1155/2015/684708.

- 37. Kim M.S., Kim H.D., Seo H.D., Sawada K., Ishida M. The effect of acupuncture at the PC-6 on the electroencephalogram and electrocardiogram. Am. J. Chin. Med. 2008. doi: 10.1142/S0192415X08005928.

- 38. Kim S.Y., Kim S.W., Park H.J. Different responses to acupuncture in electroencephalogram according to stress level: A randomized, placebo-controlled, cross-over trial. Korean J. Acupunct. 2014;31:136–145. doi: 10.14406/acu.2014.022.

- 39. Kim M.S., Cho Y.C., Moon J.H., Pak S.C. A characteristic estimation of bio-signals for electro-acupuncture stimulations in human subjects. Am. J. Chin. Med. 2009;37:505–517. doi: 10.1142/S0192415X09007016.

- 40. Yin C.S., Park H.J., Kim S.Y., Lee J.M., Hong M.S., Chung J.H., Lee H.J. Electroencephalogram changes according to the subjective acupuncture sensation. Neurol. Res. 2010;32:31–36. doi: 10.1179/016164109X12537002793841.

- 41. Streitberger K., Steppan J., Maier C., Hill H., Backs J., Plaschke K. Effects of verum acupuncture compared to placebo acupuncture on quantitative EEG and heart rate variability in healthy volunteers. J. Altern. Complement. Med. 2008;14:505–513. doi: 10.1089/acm.2007.0552.

- 42. Sakai S., Hori K., Umeno K., Kitabayashi N., Ono T., Nishijo H. Specific acupuncture sensation correlates with EEGs and autonomic changes in human subjects. Auton. Neurosci. Basic Clin. 2007;133:158–169. doi: 10.1016/j.autneu.2007.01.001.

- 43. Tanaka Y., Koyama Y., Jodo E. Effects of acupuncture to the sacral segment on the bladder activity and electroencephalogram. Psychiatry Clin. Neurosci. 2002;56:249–250. doi: 10.1046/j.1440-1819.2002.00976.x.

- 44. Zhou K., Fang J., Wang X. Characterization of De Qi with electroacupuncture at acupoints with different properties. J. Altern. Complement. Med. 2011;17:1007–1013. doi: 10.1089/acm.2010.0652.

- 45. Litscher G. From basic science to robot-assisted acupuncture. Guest editorial. Med. Acupunct. 2019;31:139–140. doi: 10.1089/acu.2019.29117.glt.

- 46. Jones C. Robotically-Assisted Acupuncture Brings Ancient Healing Technique into the 21st Century; Science-Based Satire. Science-Based Medicine; New Haven, CT, USA: 2016.

- 47. Litscher G., Cho Z.H. Computer-Controlled Acupuncture. Pabst Science Publishers; Lengerich, Germany: 2000.

- 48. Litscher G., Schwarz G., Sandner-Kiesling A. Computer-controlled acupuncture. AKU. 1998;26:133–142.

- 49. Litscher G., Schwarz G., Sandner-Kiesling A., Hadolt I. Transcranial Doppler sonography robotic-controlled sensors for quantification of the effects of acupuncture. DZA. 1998;3:3–70.

- 50. Litscher G. Robot-assisted acupuncture a technology for the 21st century? Acupunct. Auricular Med. 2017;4:9–10.