Abstract

Background

New electronic cohort (e-Cohort) study designs provide resource-effective methods for collecting participant data. It is unclear whether an e-Cohort study without direct, in-person participant contact can achieve high participation rates.

Objective

The objective of this study was to compare 2 distinct enrollment methods for setting up mobile health (mHealth) devices and to assess the ongoing adherence to device use in an e-Cohort pilot study.

Methods

We coenrolled participants from the Framingham Heart Study (FHS) into the FHS–Health eHeart (HeH) pilot study, a digital cohort with infrastructure for collecting mHealth data. FHS participants who had an email address and a smartphone were randomized to 1 of 2 study arms of our FHS-HeH pilot study: remote versus on-site support. We oversampled older adults (age ≥65 years), with a target of enrolling 20% of our sample as older adults. In the remote arm, participants received an email containing a link to the enrollment website and, upon enrollment, were sent 4 smartphone-connectable sensor devices. Participants in the on-site arm were invited to visit an in-person FHS facility and were provided in-person support for enrollment and connecting the devices. Device data were tracked for at least 5 months.

Results

Compared with the individuals who declined, individuals who consented to our pilot study (on-site, n=101; remote, n=93) were more likely to be women, highly educated, and younger. In the on-site arm, the connection and initial use of devices were ≥20% higher than in the remote arm (mean percent difference was 25% [95% CI 17-35] for the activity monitor, 22% [95% CI 12-32] for the blood pressure cuff, 20% [95% CI 10-30] for the scale, and 43% [95% CI 30-55] for the electrocardiogram), with device connection rates in the on-site arm of 99%, 95%, 95%, and 84%, respectively. Once connected, continued device use over the 5-month study period was similar between the study arms.

Conclusions

Our pilot study demonstrated that the deployment of mobile devices among middle-aged and older adults in the context of an on-site clinic visit was associated with higher initial rates of device use as compared with offering only remote support. Once connected, the device use was similar in both groups.

Keywords: wearable electronic devices, cell phone, fitness trackers, electrocardiography, epidemiology

Introduction

Background

Recent advances in mobile health (mHealth) technology have improved the feasibility of collecting digital data and have the potential to revolutionize both research and health care delivery [1-4]. The term mHealth technology refers to the use of smartphones and other mobile devices for personal health monitoring, health care delivery, or research [5]. Expert recommendations from the National Institutes of Health (NIH) National Heart, Lung, and Blood Institute (NHLBI) advocated for using innovative approaches, such as study designs that utilize mHealth technology, to provide new opportunities for population science [6]. Innovative electronic cohort (e-Cohort) study designs that incorporate mHealth technology into traditional cohort studies have been proposed; they minimize physical resource requirements by collecting data remotely (reducing or completely eliminating in-person clinical examinations) [7-10]. In 2015, the NIH funded a national resource to mobilize research by creating an infrastructure for conducting research using mHealth technology and has recently initiated the All of Us Research Program (formerly called the Precision Medicine Initiative) [11]. The All of Us program is a large, national study with the goal of recruiting 1 million participants; it differs from other national cohorts, such as the United Kingdom Biobank Study [12], by allowing for electronic (remote) enrollment. Successful recruitment in previous e-Cohort studies that do not require on-site visits, such as the Health eHeart (HeH) Study and MyHeart Counts [13,14], has paved the way for new, large e-Cohorts such as All of Us.

The e-Cohort approach may provide a cost-effective methodology to remotely collect population-level data outside of standard research clinic settings, using mHealth devices and internet-based questionnaires [7-10], but may introduce substantial selection bias beyond that of typical research studies [13,15]. Investigators from HeH reported that HeH participants are more likely to be female, white/non-Hispanic, college-educated, nonsmokers, in excellent general health, but are also more likely to have cardiovascular disease and risk factors, compared with a national research study with more traditional recruitment practices [13]. Moreover, the level of technical support that may be required by participants for mHealth device data collection is unclear, especially with regard to middle-aged and older adults who may have less familiarity and require more support with mHealth technology [16]. Finally, despite several theoretical advantages of merging these newer remote studies (lacking on-site visits) with established conventional cohorts, this practice has not yet been carefully studied [6].

Objectives

We conducted a 5-month pilot study in the well-characterized Framingham Heart Study (FHS) cohort to test the feasibility of incorporating mHealth technology into a long-standing epidemiologic cohort study using remote versus in-person device setup. Our approach to pilot testing and scaling up the use of mHealth technology and electronic surveys (e-surveys) within FHS [17] leveraged the committed study participants and infrastructure of FHS. For the pilot study, we partnered with the HeH Study, which had an established protocol and infrastructure for collecting mHealth data.

The main purpose of our FHS-HeH pilot study was to assess whether remote mHealth data collection supported by email was equivalent to a strategy that involved in-person support on-site at the FHS Research Center, as measured by the rates of mHealth device setup and continued use over the 5-month study. In addition to testing the feasibility and optimal data collection strategy, we also assessed the clinical characteristics of enrolled versus declined participants, completion rates of internet-based self-report data, and study design acceptability among participants.

Methods

Study Design

The FHS began enrolling participants for the Original cohort in 1948 [18]. In 1971, the offspring of the Original cohort and the spouses of these offspring were enrolled in the Offspring study [19]. In 1994 and 2002, ethnic/racial minority Omni cohorts were recruited to increase the diversity represented in FHS to better reflect the contemporary diversity of the town of Framingham, Massachusetts. In addition, in 2002, Third Generation participants were recruited from a sample of individuals that had at least 1 parent in the Offspring cohort [20]. These participants have been followed at 2- to 8-year intervals in the subsequent years and the study is ongoing. The most recent Offspring examination (including Omni cohort 1) occurred between 2011 and 2014 and the last Third Generation (including Omni cohort 2) examination was conducted during 2008 to 2011. Previous FHS examinations primarily used phone calls to recruit participants to return to the FHS Research Center.

FHS Offspring, Third Generation, and Omni participants [19,20] who had an email address, lived within a 1-hour drive of the FHS Research Center, and owned an iPhone [21] were eligible for participation in this investigation. The iPhone requirement was included because, at the time, not all study devices were compatible with Android. A previous FHS report, the Framingham Digital Connectedness Survey, allowed us to identify participants reporting iPhone ownership and internet use for recruitment purposes [21]. During recruitment (May-October 2015), 363 participants were sent an email invitation (Figure 1). Our goal was to recruit 100 participants in each study arm (remote vs on-site support) and to ensure that at least 20% of the sample were older adults (age ≥65 years). Our study protocol followed the Zelen design [22], in which participants were randomized to one of the following 2 groups before invitations were sent and consent was obtained:

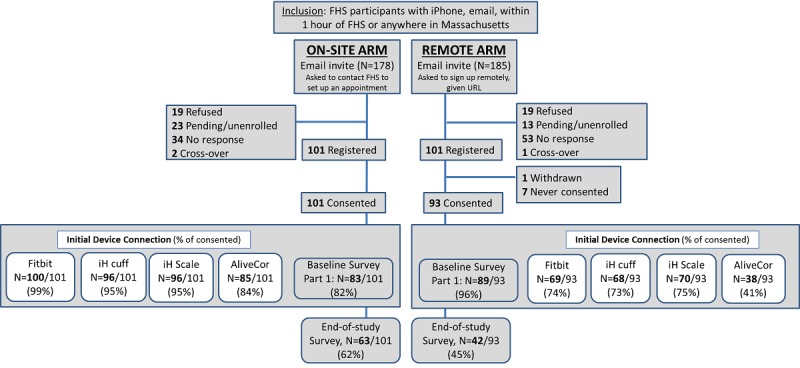

Flow chart of recruitment and initial device connection for the Framingham Heart Study–Health eHeart pilot study. Pending/unenrolled participants responded to the initial email invitation, but they did not respond to further communications. FHS: Framingham Heart Study; iH: iHealth; AliveCor: electrocardiogram device.

Remote support: Participants randomized to the remote support group received an email invitation with an explanation about the FHS-HeH pilot study and a URL they could follow to learn more and register for the study (first figure, Multimedia Appendix 1). For those who did not register within 1 week of the initial email, a second email was sent. After a second week of no response, a phone call was placed to their home. No more than 3 phone calls were placed to any individual for recruitment purposes.

On-site support: Participants randomized to the on-site support group were contacted by the same email/phone call protocol to register for the study and set up a study visit (second figure, Multimedia Appendix 1). Trained FHS staff members assisted the participants in-person to register with the FHS-HeH pilot study, sign the Web-based consent, and connect the devices to their iPhones and the study website. If requested, participants were able to return to the FHS Center if they required additional in-person support.
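To make the Zelen (pre-randomization) step concrete, the sketch below assigns every eligible invitee to an arm before any email is sent or consent is obtained. It is a minimal illustration, not the study's procedure: the `Invitee` record, the fixed seed, and stratification by age group (≥65 vs <65 years) are assumptions; the source states only that participants were randomized before invitation and consent.

```python
import random
from dataclasses import dataclass


@dataclass
class Invitee:
    participant_id: str  # hypothetical identifier
    age: int


def prerandomize(invitees, seed=2015):
    """Assign each eligible invitee to an arm BEFORE any contact (Zelen-style).

    Assumption: randomization is stratified by age group so that older adults
    are split evenly between arms; the source does not say whether
    stratification was used.
    """
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible
    strata = {
        "older": [p for p in invitees if p.age >= 65],
        "younger": [p for p in invitees if p.age < 65],
    }
    assignment = {}
    for group in strata.values():
        ids = [p.participant_id for p in group]
        rng.shuffle(ids)
        half = len(ids) // 2
        # First half of each shuffled stratum goes on-site, the rest remote.
        for pid in ids[:half]:
            assignment[pid] = "on-site"
        for pid in ids[half:]:
            assignment[pid] = "remote"
    return assignment
```

Only after this allocation would the arm-specific contact protocol (email, reminder email, up to 3 phone calls) begin, which is what distinguishes a Zelen design from randomizing after consent.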

After the study termination (March 2016), all participants were emailed an end-of-study survey, through an internet link, to assess the participant burden and the overall FHS-HeH experience. The survey went out after 98% of the participants had completed the 5-month study (4 participants had not yet completed 5 months). The FHS-HeH study was approved by the Institutional Review Board (IRB) at the University of California, San Francisco, and the participants provided written informed consent. The Boston University Medical Center had an approved IRB authorization agreement.

Covariates

The following demographic and clinical information was collected from the most recent FHS examination attended: age, sex, body mass index (BMI), physical activity index [23], history of smoking (defined as former or current smokers, having at least 1 cigarette per day in the past year), hyperlipidemia (total cholesterol ≥200 mg/dL or being on lipid treatment), education, diabetes mellitus (defined as fasting glucose ≥126 mg/dL or treatment with a hypoglycemic agent or insulin), hypertension (defined as systolic BP ≥140 mm Hg or diastolic BP ≥90 mm Hg or being on treatment), atrial fibrillation, and cardiovascular disease (including myocardial infarction, coronary insufficiency, atherothrombotic brain infarct, transient ischemic attack, intermittent claudication, and heart failure). Participants with missing demographic data (detailed in the Results section) either did not attend their last FHS examination cycle or did not complete that part of the examination. Participants with missing covariate data were included in all tables.
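The threshold-based covariate definitions above can be written as simple rules. This is a hypothetical sketch: the `ExamRecord` field names are assumed, and returning `None` when the answer depends on a missing value (rather than treating it as negative) is our assumption about how missing data might be handled. Smoking, atrial fibrillation, and cardiovascular disease are not encoded.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class ExamRecord:
    # Hypothetical field names; values would come from the most recent FHS exam.
    systolic_bp: Optional[float] = None        # mm Hg
    diastolic_bp: Optional[float] = None       # mm Hg
    on_bp_treatment: bool = False
    fasting_glucose: Optional[float] = None    # mg/dL
    on_glucose_treatment: bool = False         # hypoglycemic agent or insulin
    total_cholesterol: Optional[float] = None  # mg/dL
    on_lipid_treatment: bool = False


def _rule(values: Sequence[Tuple[Optional[float], float]], treated: bool) -> Optional[bool]:
    """True if treated or any value meets its cutoff; None if the answer
    hinges on a missing value; otherwise False."""
    if treated:
        return True
    if any(v is not None and v >= cutoff for v, cutoff in values):
        return True
    if any(v is None for v, _ in values):
        return None
    return False


def hypertension(r: ExamRecord) -> Optional[bool]:
    # Systolic >=140 mm Hg or diastolic >=90 mm Hg or on treatment
    return _rule([(r.systolic_bp, 140), (r.diastolic_bp, 90)], r.on_bp_treatment)


def diabetes(r: ExamRecord) -> Optional[bool]:
    # Fasting glucose >=126 mg/dL or hypoglycemic agent/insulin
    return _rule([(r.fasting_glucose, 126)], r.on_glucose_treatment)


def hyperlipidemia(r: ExamRecord) -> Optional[bool]:
    # Total cholesterol >=200 mg/dL or lipid treatment
    return _rule([(r.total_cholesterol, 200)], r.on_lipid_treatment)
```

For example, a record with systolic BP 142 mm Hg and no cholesterol measurement would be classified as hypertensive, while its hyperlipidemia status would remain unknown unless lipid treatment was recorded.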

Statistical Analysis

Demographic information was reported as mean (SD) or n (%) for each study arm and for FHS participants who declined to participate in this investigation. Study adherence was defined conservatively as taking at least 1 measurement per month, to provide a broad assessment of continued device use. Study adherence and survey responses were compared between the 2 study arms in the total study sample by calculating mean percent differences and 95% CIs. All statistical analyses were performed using SAS, version 8 (SAS Institute Inc). Statistical significance was set at P<.05.
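The adherence definition (at least 1 measurement in a given month) can be sketched as follows. This is an illustration under stated assumptions, not the study's code: the function name is hypothetical, and defining a study "month" as consecutive 30-day windows from the participant's start date is our assumption, because the source does not define month boundaries.

```python
from datetime import date


def monthly_adherence(start, measurement_dates, n_months=5, days_per_month=30):
    """Return one boolean per study month: True if the participant took at
    least one measurement in that month.

    Assumption: a 'month' is a consecutive 30-day window starting at `start`.
    """
    used = [False] * n_months
    for d in measurement_dates:
        offset = (d - start).days
        if offset < 0:
            continue  # measurements before the start date are ignored
        month_index = offset // days_per_month
        if month_index < n_months:
            used[month_index] = True  # one reading is enough for the month
    return used
```

Under this sketch, "fifth month device use" for a participant is simply the last element of the returned list, and a single reading in a month counts the same as daily use, which is why the measure gives a broad rather than detailed picture of engagement.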

Results

Study Enrollment

Of the 363 participants invited, 87 did not respond to the initial recruitment efforts, 38 declined to participate, and 36 communicated an intent to participate but did not follow through with enrollment (Figure 1). There were 101 participants who completed enrollment in each of the randomized study arms (n=202 total). Owing to 2 early withdrawals (1 in each study arm), additional participants were allowed to enroll to replace them. In the on-site arm, a study technician was available to answer questions, and we observed 100% completion of the consent process. In contrast, individuals in the remote arm were emailed a link to initiate the consent process; only 93/101 (92%) completed consent. In total, 82 participants responded to the invitation but did not complete consent (38 declined, 36 were pending/not enrolled, and 8 enrolled but did not complete consent). Consenting participants were more likely to be women, tended to be younger, were less likely to smoke or have diabetes mellitus, and were more likely to have attended at least some college (Table 1). The rates of missing demographic data in Table 1 were low (BMI, missing [m]=11; physical activity index, m=13; history of smoking, m=3; hyperlipidemia, m=11; education, m=5; diabetes mellitus, m=15; and hypertension, m=11). Missing data resulted from either nonattendance at the most recent FHS examination or missing questionnaire/biomarker data at that examination. None of the participants missing diabetes mellitus data had a diagnosis of diabetes mellitus at FHS examinations that occurred before the most recent FHS examination.
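The enrollment counts in this paragraph can be checked for internal consistency with a few lines of arithmetic. The variable names are illustrative; every number comes from the paragraph above.

```python
invited = 363
no_response = 87
declined = 38
pending_not_enrolled = 36
enrolled_per_arm = 101
consented = {"on-site": 101, "remote": 93}

enrolled = 2 * enrolled_per_arm  # 202 enrolled across both arms
# Every invitee falls into exactly one of these categories.
assert no_response + declined + pending_not_enrolled + enrolled == invited

responded = invited - no_response                                 # 276
enrolled_not_consented = enrolled - sum(consented.values())       # 8 (all remote)
not_consented = declined + pending_not_enrolled + enrolled_not_consented  # 82
remote_consent_rate = consented["remote"] / enrolled_per_arm      # 93/101
```

The 82 nonconsenting responders plus the 194 consenting participants account for all 276 individuals who responded to the invitation.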

Table 1.

Demographic information from study participants collected at their last Framingham Heart Study examination.

| Demographics | Consented, on-site arm (n=101) | Consented, remote arm (n=93) | Responded to invitation, but not consentedᵃ (n=82) | P value for difference between consented and not consentedᵇ |
|---|---|---|---|---|
| Age (years), mean (SD) | 55 (11) | 53 (10) | 58 (12) | .009 |
| Women, n (%) | 60 (59) | 57 (61) | 38 (46) | .04 |
| Cohort, n (%) | | | | |
| Offspring | 19 (19) | 12 (13) | 30 (37) | —ᶜ |
| Third Generation | 76 (75) | 75 (81) | 49 (60) | — |
| Omni 1 | 2 (2) | — | — | — |
| Omni 2 | 4 (4) | 6 (6) | 3 (4) | — |
| Education, n (%) | | | | |
| Less than high school | — | — | — | — |
| High school | 6 (6) | 3 (3) | 14 (17) | — |
| Some college | 10 (10) | 19 (20) | 17 (21) | — |
| College and higher | 85 (84) | 71 (76) | 51 (62) | — |
| Body mass index (kg/m²), mean (SD) | 27 (5) | 29 (6) | 28 (6) | .48 |
| Physical Activity Index, mean (SD) | 35 (5) | 35 (7) | 36 (5) | .26 |
| History of smoking, n (%) | 15 (15) | 21 (23) | 29 (35) | .002 |
| Hyperlipidemia, n (%) | 47 (46) | 47 (53) | 40 (50) | .99 |
| Diabetes mellitus, n (%) | 5 (5) | 2 (2) | 8 (10) | .09 |
| Hypertension, n (%) | 20 (20) | 22 (25) | 19 (24) | .87 |
| Cardiovascular disease, n (%) | 2 (2) | 7 (8) | 4 (5) | .99 |
| Atrial fibrillation, n (%) | 2 (2) | 1 (1) | 1 (1) | .99 |

ᵃThe not consented column includes 38 participants who declined, 36 pending/not enrolled, and 8 enrolled but did not complete consent.

ᵇP values were not calculated for differences in cohort and education because of low numbers in some groups.

ᶜNot applicable.

Importantly, recruitment of older adults (age ≥65 years) for this e-Cohort study was less efficient (50% consented: 27 of the 54 individuals who responded to the email invitation) than recruitment of adults aged <65 years (75% consented: 167 of the 222 individuals who responded to the email invitation), as calculated from Table 1 and the first table of Multimedia Appendix 1. Older adults who chose to participate had completed more education (100% had completed at least some college) than those who chose not to participate, of whom 26% (7/27) had not continued on to college after high school.
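The age-stratified consent rates follow directly from the counts above. A minimal sketch; the dictionary keys are illustrative labels, and the counts are those reported in the paragraph.

```python
# Counts reported for individuals who responded to the email invitation.
responders = {"age >=65": 54, "age <65": 222}
consented = {"age >=65": 27, "age <65": 167}

consent_rate = {group: consented[group] / responders[group] for group in responders}

# Older adults who did not consent, and the share without any college.
older_not_consented = responders["age >=65"] - consented["age >=65"]  # 27
share_no_college = 7 / older_not_consented
```

The two strata together recover the overall totals of 276 responders and 194 consenting participants, which is a quick way to confirm the stratified figures are consistent with the enrollment flow.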

Device Use

In the on-site arm, 99% (100/101) of consenting participants initially connected to the Fitbit device, 95% (96/101) to the iHealth BP cuff and scale, and 84% (85/101) to the AliveCor ECG. In the remote arm, 74% (69/93) of consenting participants initially connected to the Fitbit device, 73% (68/93) to the iHealth BP cuff, 75% (70/93) to the iHealth scale, and 41% (38/93) to the AliveCor ECG (Figure 1 and Table 2). The on-site arm had 20 to 43 percentage points more participants initially connected to the devices at baseline (mean percent difference was 25% [95% CI 17-35] for the activity monitor, 22% [95% CI 12-32] for the BP cuff, 20% [95% CI 10-30] for the scale, and 43% [95% CI 30-55] for the ECG).
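The between-arm differences above are differences in 2 independent proportions. The paper does not state which CI method was used, so the sketch below assumes a simple Wald (normal-approximation) interval; `diff_in_proportions` is a hypothetical helper, and the counts are the baseline connection counts from Table 2.

```python
from math import sqrt
from statistics import NormalDist


def diff_in_proportions(x1, n1, x2, n2, level=0.95):
    """Difference p1 - p2 with a Wald (normal-approximation) CI."""
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    return diff, diff - z * se, diff + z * se


# Baseline connections: (on-site, remote), from Table 2.
baseline = {
    "Fitbit": (100, 69),
    "iHealth BP cuff": (96, 68),
    "iHealth scale": (96, 70),
    "AliveCor": (85, 38),
}
N_ONSITE, N_REMOTE = 101, 93

for device, (x_on, x_rem) in baseline.items():
    d, lo, hi = diff_in_proportions(x_on, N_ONSITE, x_rem, N_REMOTE)
    print(f"{device}: {100 * d:.0f} ({100 * lo:.0f} to {100 * hi:.0f})")
```

With these inputs, the Wald interval reproduces the reported point estimates and the intervals for the BP cuff (12 to 32) and scale (10 to 30); for the Fitbit and AliveCor, its bounds land about 1 point away from the published ones, so the authors may have used a different interval method.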

Table 2.

Primary analysis: Rate of device connection at baseline and continued use at 5 months.

| Device | On-site (n=101): baseline connection, n (% consent) | On-site (n=101): fifth month device useᵃ, n (% consent) | Remote (n=93): baseline connection, n (% consent) | Remote (n=93): fifth month device use, n (% consent) | Mean percent difference between study arms in baseline connection rate (95% CI) | Mean percent difference between study arms in fifth month device use rate (95% CI) |
|---|---|---|---|---|---|---|
| Fitbit device | 100 (99) | 79 (78) | 69 (74) | 54 (58) | 25 (17 to 35) | 20 (7 to 33) |
| iHealth blood pressure cuff | 96 (95) | 54 (53) | 68 (73) | 40 (43) | 22 (12 to 32) | 10 (−4 to 24) |
| iHealth scale | 96 (95) | 57 (56) | 70 (75) | 40 (43) | 20 (10 to 30) | 13 (−1 to 27) |
| AliveCor | 85 (84) | 54 (53) | 38 (41) | 33 (35) | 43 (30 to 55) | 18 (4 to 31) |

ᵃA total of 4 participants in the on-site arm did not have the opportunity to participate for the full 5 months owing to study termination.

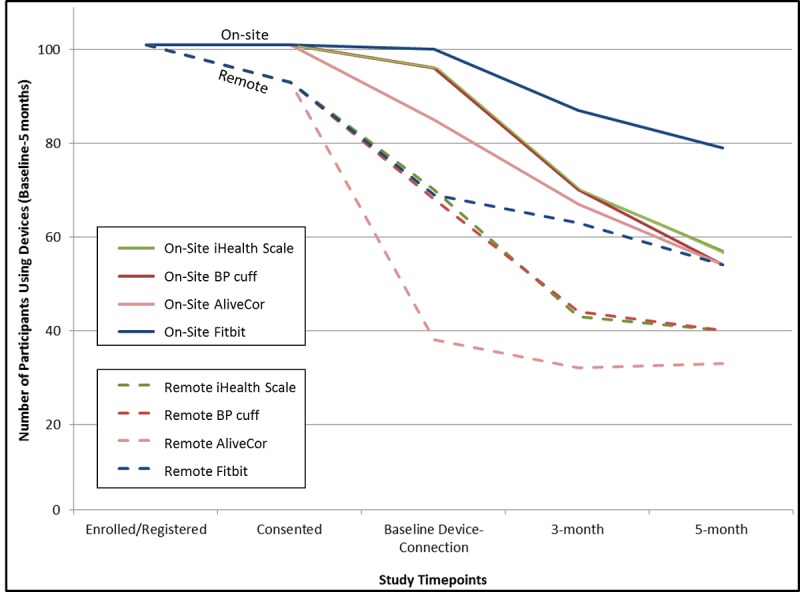

After the initial connection, the proportion of participants who continued to use the devices declined steadily in both arms of the study (Table 3 and Figure 2). Although 4 study participants in the on-site arm did not have the opportunity to participate in the full 5-month study, removing these participants in sensitivity analyses did not change the results considerably (second and third tables of Multimedia Appendix 1).

Table 3.

Secondary analysis: Continued use of devices for participants who were initially able to connect to the devices during the first month. The n (%) values are given with regard to baseline device connection.

| Device | On-site (N=101): baseline connection, n | On-site: third month device use, n (% baseline) | On-site: fifth month device use, n (% baseline)ᵃ | Remote (N=93): baseline connection, n | Remote: third month device use, n (% baseline) | Remote: fifth month device use, n (% baseline) | Mean percentage difference between study arms in third month device use rate (95% CI) | Mean percentage difference between study arms in fifth month device use rate (95% CI) |
|---|---|---|---|---|---|---|---|---|
| Fitbit device | 100 | 87 (86) | 79 (79) | 69 | 63 (91) | 54 (78) | −4 (−14 to 6) | 1 (−12 to 14) |
| iHealth blood pressure cuff | 96 | 70 (69) | 54 (56) | 68 | 44 (65) | 40 (59) | 8 (−6 to 23) | −3 (−18 to 13) |
| iHealth scale | 96 | 70 (69) | 57 (59) | 70 | 43 (61) | 40 (57) | 11 (−3 to 26) | 2 (−13 to 17) |
| AliveCor | 85 | 67 (66) | 54 (64) | 38 | 32 (84) | 33 (87) | −5 (−9 to 11) | −23 (−37 to −6) |

ᵃA total of 4 participants in the on-site arm did not have the opportunity to participate for the full 5 months owing to study termination.

Figure 2. Number of participants using devices throughout the study from study enrollment through the 5-month follow-up period. BP: blood pressure.

Survey Data

All consenting participants were sent links to 2 internet-based surveys: a baseline core survey and an end-of-study survey after study termination. The baseline core survey comprised 34 separate parts assessing self-reported health outcomes that could be completed in any order, and it was well attended by participants in both arms. The first survey was completed by 83 (82%) participants from the on-site arm and 89 (96%) participants from the remote arm (Figure 1). After study completion, all participants were sent an end-of-study survey, which was completed by only 63% of the on-site arm and 45% of the remote arm (fourth table, Multimedia Appendix 1). Overall, the participants endorsed positive statements about their study participation. At least 95% of the participants in both study arms agreed with the statement, “I would participate in this type of study again in the future.” Over 85% of the participants in the on-site arm agreed with almost all the survey questions (as shown by the shaded boxes in the fourth table of Multimedia Appendix 1), whereas agreement was slightly lower in the remote arm.

Discussion

Principal Findings

Our FHS-HeH pilot study was conducted in collaboration with the HeH Study to test feasibility of mHealth and digital data collection in FHS participants using remote versus in-person support for device set up and use over a 5-month period. Participants in our on-site study arm had the opportunity to visit the FHS Research Center for consent and mobile device set up. We observed that the on-site participants were more likely to consent and had better success with initial device connection and use compared with the individuals who received only remote support by phone or email. However, once connected to the devices, the rates of continued device use were similar in both groups. Our findings suggest that it is possible to maximize participation by leveraging in-person support for e-Cohort studies. Furthermore, we observed reasonable adherence with mHealth technology by older adults.

In both study arms combined, almost 79% of the participants who successfully initialized the Fitbit device at the beginning of the study continued to use the device for the full 5-month study, representing 69% of the total sample of consenting study participants. We defined continued use very conservatively, as 1 measurement per month, to get a broad assessment of continued device use. Preliminary data from a new FHS initiative separate from FHS-HeH, called eFHS, showed that 76% of participants (306 of 402 given an Apple Watch device) wore the device at least weekly over 3 months; these participants received reminder messages if no data were sent for 14 days [17].
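The pooled Fitbit percentages can be reproduced from the arm-level counts in Tables 2 and 3. A minimal sketch; variable names are illustrative.

```python
# Fitbit counts by arm: baseline connections and fifth month use.
fitbit = {
    "on-site": {"connected": 100, "month5": 79},
    "remote": {"connected": 69, "month5": 54},
}
consented_total = 101 + 93  # all consenting participants

connected = sum(arm["connected"] for arm in fitbit.values())  # 169
month5 = sum(arm["month5"] for arm in fitbit.values())        # 133

pct_of_connected = 100 * month5 / connected        # share of those who connected
pct_of_consented = 100 * month5 / consented_total  # share of all consenting
efhs_weekly = 100 * 306 / 402                      # eFHS comparison figure
```

The two denominators answer different questions: the first describes persistence among participants who got the device working, the second describes yield relative to everyone who consented.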

In 2 other studies that recruited participants using snowball (social network/internet-based) sampling strategies specifically to enroll participants into e-Cohort studies, device use, surprisingly, did not appear to be more frequent, and may even have been less frequent, than in FHS-HeH or eFHS, which enrolled from within the ongoing FHS cohort [14,24]. In the MyHeart Counts study, investigators reported that 47% of their >48,000 consented study participants completed just 2 consecutive days of fitness monitor data as measured by a smartphone app in the first week, and adherence only declined from there [14]. In the mPower substudy of HeH, a 6-month smartphone-based study, 87% of 9520 study participants completed at least one task on the smartphone app after consenting to the study, but only 9% contributed data on ≥5 separate days, confirming that consistency in device or app use is one of the major challenges of this type of research [24]. Physical activity intervention studies provide additional comparative data, with considerable drop-off in device use over the short term (3-6 months) and over longer periods (6 months to 1 year), especially after participant incentives are terminated [25-27]. Unfortunately, because our study ended after at least 5 months of follow-up, we are unable to test whether the device setup strategy (on-site vs remote) affects continued device use over longer follow-up. It is also unclear what type of communication, support, or incentives might maximize adherence with mHealth devices. In our study, participants were sent reminders to sync their devices only briefly, at midstudy. Our study was not designed to assess whether these reminders affected device use. However, there is a burgeoning field of study testing communication methods/strategies to increase and sustain health behavior [28-31]. Messaging may need to be tailored to participants based on their current adherence, and investigators should take care that the language does not imply that data are not being received, unless that is the intended message.

Overall use of the BP cuff, scale, and AliveCor ECG was somewhat lower than continued use of the Fitbit device in our FHS-HeH on-site arm, but generally, once connected, use was similar in both study arms. Across both arms, 56% to 59% of the participants who successfully connected the BP cuff or scale at baseline continued to use it through the 5-month study. For comparison, in a meta-analysis, rates of adherence to BP self-monitoring in hypertensive patients participating in an intervention to lower BP varied widely by study, but a true comparison with our study is difficult, as most of our FHS-HeH participants were not hypertensive [32]. In addition, most studies in the meta-analysis used traditional nonconnected BP devices instead of mHealth devices with smartphone apps.

Connection rates for the AliveCor ECG device were lower than for the other devices. Our technical staff reported that the AliveCor was typically the last device they connected during the in-person visit. Another contributing factor could be the more complex instructions for setting up the AliveCor device, including multiple steps in which participants were required to log in to their email. Beyond these reasons, it is unclear why connection rates were much lower in the remote arm (41%) than in the on-site arm (84%).

In contrast to the diminishing rates of BP cuff and scale use over time, continued adherence among those who were initially able to connect to the AliveCor ECG remained relatively high at 5 months (especially in the remote arm, 87%). However, enthusiasm about this apparently high AliveCor adherence should be tempered by the fact that only a small number of participants connected to the device at baseline. Participants who successfully connected to the AliveCor at baseline may therefore differ from those who connected to other devices. We hypothesize that AliveCor users may be more interested in their health, more motivated study participants, and/or more technologically savvy. However, one limitation of our study is that we did not measure the reasons for differences in device use, so we are not able to determine the facilitators of and barriers to the use of specific devices [13,14,16,33,34].

Internet-Based Survey Data Can Be Successfully Administered Via Different Strategies

In addition to answering important questions about device connection and use, our study was able to assess the rate of internet-based survey initiation in our 2 study arms. Until recently, the FHS has conducted most questionnaires in person and has administered only short health history updates between examinations, by phone or by postal mail. Although consent and device connection appeared more successful in the on-site arm, participation in the baseline core survey was higher in the remote arm (82% vs 96% in the on-site and remote arms, respectively). Therefore, in-person contact may not be an important part of a study designed only to survey participants. Rather, the higher survey participation rate in the remote arm may reflect the lower burden initially imposed in the remote arm before devices were shipped. These results provide some evidence that internet-based surveys may be an effective means of administering a health history questionnaire to FHS participants.

Other e-Cohort studies have had variable success with participant engagement in e-surveys, which may depend on the timing and strategies used to present surveys to participants. In MyHeart Counts, 41% of the study participants completed a cardiovascular health survey, whereas 73% completed a physical activity survey, and only 17% provided race/ethnicity [14]. The HeH Study (with >210,000 participants) reported that 86% of participants completed at least one survey, but only 37% provided complete survey data [13]. Another traditional cardiovascular epidemiology cohort, the Coronary Artery Risk Development in Young Adults study, has also explored the electronic administration of surveys through the internet (eCARDIA), reporting 52% survey completion [35]. On the basis of the results from these studies, it may be important to prioritize survey administration in e-Cohort studies to ensure that the most important surveys have strong adherence.

In contrast to the high participation rates in the baseline core survey, the end-of-study survey was completed less frequently (62% vs 45% of on-site and remote participants, respectively). Study design and communication with participants are important not only for baseline device connection and use but also for good adherence to device use at follow-up. These considerations are especially important for longitudinal studies that engage participants over a long follow-up period, as poor communication and participant frustration may affect future participation. On the basis of data from the approximately half of study participants who provided feedback, approximately 96% said that they would participate in this type of study again, regardless of study arm. Although participation bias limits our ability to interpret the end-of-study survey results, the on-site participants do appear to have responded more favorably overall.

Strengths, Bias, and Limitations of Our Study Design

The strengths of our study lie in our study design, which leveraged infrastructure and the strengths of FHS and HeH, including a recruitment sample of committed study participants across middle and older age. Our design not only enabled the examination of different methodologies for incorporating consumer-facing mHealth technology into an epidemiological study, but may also provide insight for other study designs, including clinical trials.

Important limitations to consider include the limited exploration of participation bias by demographic factors other than age. The study was small, and we had limited power to examine subgroup findings. The FHS primarily comprises white individuals residing in New England; therefore, we were unable to analyze how the study design influenced participation by racial/ethnic group or region. Certain demographic groups, such as those who do not have a smartphone, may be less likely to be eligible for participation in mHealth studies [21]. In our FHS Digital Connectedness Survey, administered during 2014 to 2015, we reported that smartphone users in FHS were younger and more highly educated and had fewer cardiovascular risk factors than individuals without a smartphone [21]. However, even among the participants who were eligible for our study (ie, had an iPhone and email address), those who agreed to participate were more likely to have attended at least some college (95% vs 82% among participants who were eligible but declined to participate) and were less likely to be smokers. Both trends are similar to those seen in other e-Cohort studies [35,36], including the preliminary HeH recruitment analysis, in which participants were less likely to smoke and more likely to be women, to have higher educational attainment, to report excellent general health, and to be white (rather than black, Hispanic, or Asian) when compared with participants in the traditional National Health and Nutrition Examination Survey study design [13]. Although issues of generalizability affect all epidemiological studies, they may be a particular concern in e-Cohort studies.

Previous studies in minority communities in the United States cited participants' concerns and misconceptions about mHealth studies, such as the type of information that would be tracked by mobile technology, legal risks that might be introduced through participation, a lack of familiarity with certain devices, and unwanted attention from others when wearing or using devices in public [37,38]. These concerns can affect both study participation and adherence and may require cultural (or age/generational) sensitivity, creativity, and patience from the study team. The study team must weigh the cost-effectiveness of potential adaptations against the goals of limiting selection bias and maximizing equity in research across diverse populations [37,38]. We did not analyze costs in either study arm, so we cannot compare the arms on cost. Personal communication with knowledgeable study coordinators and the research team may help to overcome some of the barriers mentioned above. The introduction of mHealth technology raised some concerns even among FHS participants, who are familiar with research studies. We observed a barrier to consent that was partly overcome through the on-site study design, in which participants spoke with study coordinators who could explain the study, answer questions, and provide in-person support for setting up the mHealth devices. Future studies should assess whether other forms of participant engagement, such as text messaging, influence mobile device use and study adherence.

We also acknowledge that our measure of study adherence (device use at least once per month) was conservative, as we were most interested in assessing overall adherence as a primary study aim. In future studies, it will also be important to understand the barriers to study adherence and to investigate the factors that contribute to frequency of use and how to improve these metrics. We acknowledge that providing 4 devices might have been burdensome for some participants, especially because participants needed to visit 3 different consumer-facing websites/apps (iHealth, Fitbit, and AliveCor) to create an account for each device and connect it to the HeH platform, which added complexity to the initial user experience. Using a single app to connect multiple devices may improve connection rates, especially for participants connecting remotely. Another key next step will be testing different methods of supporting and engaging participants, including assessing how participants engage with the websites/apps using Web analytics tools. Providing in-person support, as we showed in our FHS-HeH pilot study, has the potential to increase study efficiency and may also minimize participation bias.
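To make the once-per-month adherence criterion concrete, the following is a minimal Python sketch under stated assumptions, not the study's analysis code: it assumes each participant's device data reduce to a list of dates on which a reading was recorded, and it approximates each study month as a fixed 30-day window counted from enrollment. The function names `monthly_use_flags` and `adherence_proportion` are hypothetical, and the dates in the usage example are made up.

```python
from datetime import date


def monthly_use_flags(use_dates, start, n_months=5, days_per_month=30):
    """Return one flag per study month: True if the device was used at least once.

    Hypothetical helper: study months are approximated as consecutive
    fixed-length windows of `days_per_month` days counted from `start`.
    """
    flags = [False] * n_months
    for d in use_dates:
        offset = (d - start).days
        if offset < 0:
            continue  # readings before enrollment are ignored
        month_index = offset // days_per_month
        if month_index < n_months:
            flags[month_index] = True  # a single use marks the month as adherent
    return flags


def adherence_proportion(flags):
    """Fraction of study months with at least one device use."""
    return sum(flags) / len(flags)


if __name__ == "__main__":
    # Made-up example: enrollment on Jan 1, uses in study months 1, 2, and 4
    start = date(2016, 1, 1)
    uses = [date(2016, 1, 3), date(2016, 1, 20), date(2016, 2, 15), date(2016, 4, 10)]
    flags = monthly_use_flags(uses, start)
    print(flags)                        # [True, True, False, True, False]
    print(adherence_proportion(flags))  # 0.6
```

Fixed 30-day windows were chosen only for simplicity; a calendar-month definition would instead group the dates by (year, month).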

Conclusions

Our feasibility study demonstrated that offering on-site support for studies involving mHealth technology was associated with higher participation and initial rates of device use compared with offering only remote support. However, once devices were connected, drop-off rates were similar in both groups. Future studies may find it cost-effective to provide in-person support for studies involving mHealth technology in middle-aged and older populations.

Acknowledgments

The FHS is supported by the following grants from the NIH and the US Department of Health and Human Services (HHS): NHLBI-N01-HC25195, HHSN268201500001I, and NHLBI Intramural Research Program. EJB, JMM, and DDM are supported by Robert Wood Johnson award 74624. DDM is supported by R01HL126911, R01HL136660, R15HL121761, and UH2TR000921. EJB is supported by R01HL128914, 2R01 HL092577, 2U54HL120163, American Heart Association, and 18SFRN34110082. JEO, MJP, and GMM are supported by U2CEB021881. LT is supported by 18SFRN34110082. HL is also supported by the Boston University Digital Health Initiative; Boston University Alzheimer’s Disease Center Pilot Grant; and the National Center for Advancing Translational Sciences, NIH, through BU-CTSI grant number 1UL1TR001430. The views expressed in this paper are those of the authors and do not necessarily represent the views of the NIH or the US Department of HHS.

Abbreviations

- BMI: body mass index
- BP: blood pressure
- ECG: electrocardiogram
- e-Cohort: electronic cohort
- e-survey: electronic survey
- FHS: Framingham Heart Study
- HeH: Health eHeart
- HHS: Health and Human Services
- IRB: Institutional Review Board
- mHealth: mobile health
- NHLBI: National Heart, Lung, and Blood Institute
- NIH: National Institutes of Health

Appendix

Supplemental material containing the participant recruitment emails and tables including demographics of participants at least 65 years of age, sensitivity analyses, and "end of study" survey responses.

CONSORT‐EHEALTH checklist (V 1.6.1).

Footnotes

Conflicts of Interest: GMM receives research support from Jawbone Health. CSF is currently employed by Merck Research Laboratories and owns stock in the company. DDM discloses equity stakes or consulting relationships with Flexcon, Bristol-Myers Squibb, Mobile Sense, ATRIA, Pfizer, Boston Biomedical Associates, and Rose consulting group, and research funding from Sanofi Aventis, Flexcon, Otsuka Pharmaceuticals, Philips Healthcare, Biotronik, Bristol-Myers Squibb, and Pfizer. No other authors have relevant disclosures.

Editorial notice: This randomized study was not prospectively registered. The editor granted an exception to the ICMJE rules for prospective registration of randomized trials because the risk of bias appears low and the study was considered formative. However, readers are advised to carefully assess the validity of any potential explicit or implicit claims related to primary outcomes or effectiveness.

References

- 1. Lauer MS. Time for a creative transformation of epidemiology in the United States. J Am Med Assoc. 2012 Nov 7;308(17):1804–5. doi: 10.1001/jama.2012.14838.

- 2. Sorlie PD, Bild DE, Lauer MS. Cardiovascular epidemiology in a changing world--challenges to investigators and the National Heart, Lung, and Blood Institute. Am J Epidemiol. 2012 Apr 1;175(7):597–601. doi: 10.1093/aje/kws138. http://europepmc.org/abstract/MED/22415032.

- 3. Manolio TA, Weis BK, Cowie CC, Hoover RN, Hudson K, Kramer BS, Berg C, Collins R, Ewart W, Gaziano JM, Hirschfeld S, Marcus PM, Masys D, McCarty CA, McLaughlin J, Patel AV, Peakman T, Pedersen NL, Schaefer C, Scott JA, Sprosen T, Walport M, Collins FS. New models for large prospective studies: is there a better way? Am J Epidemiol. 2012 May 1;175(9):859–66. doi: 10.1093/aje/kwr453. http://europepmc.org/abstract/MED/22411865.

- 4. Khoury MJ, Lam TK, Ioannidis JP, Hartge P, Spitz MR, Buring JE, Chanock SJ, Croyle RT, Goddard KA, Ginsburg GS, Herceg Z, Hiatt RA, Hoover RN, Hunter DJ, Kramer BS, Lauer MS, Meyerhardt JA, Olopade OI, Palmer JR, Sellers TA, Seminara D, Ransohoff DF, Rebbeck TR, Tourassi G, Winn DM, Zauber A, Schully SD. Transforming epidemiology for 21st century medicine and public health. Cancer Epidemiol Biomarkers Prev. 2013 Apr;22(4):508–16. doi: 10.1158/1055-9965.EPI-13-0146. http://cebp.aacrjournals.org/cgi/pmidlookup?view=long&pmid=23462917.

- 5. Burke LE, Ma J, Azar KM, Bennett GG, Peterson ED, Zheng Y, Riley W, Stephens J, Shah SH, Suffoletto B, Turan TN, Spring B, Steinberger J, Quinn CC; American Heart Association Publications Committee of the Council on Epidemiology and Prevention, Behavior Change Committee of the Council on Cardiometabolic Health, Council on Cardiovascular and Stroke Nursing, Council on Functional Genomics and Translational Biology, Council on Quality of Care and Outcomes Research, Stroke Council. Current science on consumer use of mobile health for cardiovascular disease prevention: a scientific statement from the American Heart Association. Circulation. 2015 Sep 22;132(12):1157–213. doi: 10.1161/CIR.0000000000000232.

- 6. Roger VL, Boerwinkle E, Crapo JD, Douglas PS, Epstein JA, Granger CB, Greenland P, Kohane I, Psaty BM. Strategic transformation of population studies: recommendations of the working group on epidemiology and population sciences from the National Heart, Lung, and Blood Advisory Council and Board of External Experts. Am J Epidemiol. 2015 Mar 15;181(6):363–8. doi: 10.1093/aje/kwv011. http://europepmc.org/abstract/MED/25743324.

- 7. Steinhubl SR, Muse ED, Topol EJ. Can mobile health technologies transform health care? J Am Med Assoc. 2013 Dec 11;310(22):2395–6. doi: 10.1001/jama.2013.281078.

- 8. Topol EJ, Steinhubl SR, Torkamani A. Digital medical tools and sensors. J Am Med Assoc. 2015 Jan 27;313(4):353–4. doi: 10.1001/jama.2014.17125. http://europepmc.org/abstract/MED/25626031.

- 9. Powell AC, Landman AB, Bates DW. In search of a few good apps. J Am Med Assoc. 2014 May 14;311(18):1851–2. doi: 10.1001/jama.2014.2564.

- 10. Kaiser J. Epidemiology. Budget woes threaten long-term heart studies. Science. 2013 Aug 16;341(6147):701. doi: 10.1126/science.341.6147.701.

- 11. Sankar PL, Parker LS. The precision medicine initiative's All of Us research program: an agenda for research on its ethical, legal, and social issues. Genet Med. 2017 Jul;19(7):743–50. doi: 10.1038/gim.2016.183.

- 12. Sudlow C, Gallacher J, Allen N, Beral V, Burton P, Danesh J, Downey P, Elliott P, Green J, Landray M, Liu B, Matthews P, Ong G, Pell J, Silman A, Young A, Sprosen T, Peakman T, Collins R. UK biobank: an open access resource for identifying the causes of a wide range of complex diseases of middle and old age. PLoS Med. 2015 Mar;12(3):e1001779. doi: 10.1371/journal.pmed.1001779. http://dx.plos.org/10.1371/journal.pmed.1001779.

- 13. Guo X, Vittinghoff E, Olgin JE, Marcus GM, Pletcher MJ. Volunteer participation in the health eheart study: a comparison with the US population. Sci Rep. 2017 May 16;7(1):1956. doi: 10.1038/s41598-017-02232-y.

- 14. McConnell MV, Shcherbina A, Pavlovic A, Homburger JR, Goldfeder RL, Waggot D, Cho MK, Rosenberger ME, Haskell WL, Myers J, Champagne MA, Mignot E, Landray M, Tarassenko L, Harrington RA, Yeung AC, Ashley EA. Feasibility of obtaining measures of lifestyle from a smartphone app: the MyHeart Counts cardiovascular health study. JAMA Cardiol. 2017 Jan 1;2(1):67–76. doi: 10.1001/jamacardio.2016.4395.

- 15. Wirth KE, Tchetgen EJ. Accounting for selection bias in association studies with complex survey data. Epidemiology. 2014 May;25(3):444–53. doi: 10.1097/EDE.0000000000000037. http://europepmc.org/abstract/MED/24598413.

- 16. Anderson M, Perrin A. Pew Research Center. 2017. Tech Adoption Climbs Among Older Adults. https://www.pewinternet.org/2017/05/17/tech-adoption-climbs-among-older-adults/

- 17. McManus DD, Trinquart L, Benjamin EJ, Manders ES, Fusco K, Jung LS, Spartano NL, Kheterpal V, Nowak C, Sardana M, Murabito JM. Design and preliminary findings from a new electronic cohort embedded in the Framingham heart study. J Med Internet Res. 2019 Mar 1;21(3):e12143. doi: 10.2196/12143. http://www.jmir.org/2019/3/e12143/

- 18. Dawber TR, Kannel WB. The Framingham study. An epidemiological approach to coronary heart disease. Circulation. 1966 Oct;34(4):553–5. doi: 10.1161/01.cir.34.4.553.

- 19. Kannel WB, Feinleib M, McNamara PM, Garrison RJ, Castelli WP. An investigation of coronary heart disease in families. The Framingham offspring study. Am J Epidemiol. 1979 Sep;110(3):281–90. doi: 10.1093/oxfordjournals.aje.a112813.

- 20. Splansky GL, Corey D, Yang Q, Atwood LD, Cupples LA, Benjamin EJ, D'Agostino Sr RB, Fox CS, Larson MG, Murabito JM, O'Donnell CJ, Vasan RS, Wolf PA, Levy D. The third generation cohort of the National Heart, Lung, and Blood Institute's Framingham heart study: design, recruitment, and initial examination. Am J Epidemiol. 2007 Jun 1;165(11):1328–35. doi: 10.1093/aje/kwm021.

- 21. Fox CS, Hwang SJ, Nieto K, Valentino M, Mutalik K, Massaro JM, Benjamin EJ, Murabito JM. Digital connectedness in the Framingham heart study. J Am Heart Assoc. 2016 Apr 13;5(4):e003193. doi: 10.1161/JAHA.116.003193. http://www.ahajournals.org/doi/full/10.1161/JAHA.116.003193?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

- 22. Torgerson DJ, Roland M. What is Zelen's design? Br Med J. 1998 Feb 21;316(7131):606. doi: 10.1136/bmj.316.7131.606. http://europepmc.org/abstract/MED/9518917.

- 23. Kannel WB, Belanger A, D'Agostino R, Israel I. Physical activity and physical demand on the job and risk of cardiovascular disease and death: the Framingham study. Am Heart J. 1986 Oct;112(4):820–5. doi: 10.1016/0002-8703(86)90480-1.

- 24. Bot BM, Suver C, Neto EC, Kellen M, Klein A, Bare C, Doerr M, Pratap A, Wilbanks J, Dorsey ER, Friend SH, Trister AD. The m-power study, Parkinson disease mobile data collected using ResearchKit. Sci Data. 2016 Mar 3;3:160011. doi: 10.1038/sdata.2016.11. http://europepmc.org/abstract/MED/26938265.

- 25. Finkelstein EA, Haaland BA, Bilger M, Sahasranaman A, Sloan RA, Nang EE, Evenson KR. Effectiveness of activity trackers with and without incentives to increase physical activity (TRIPPA): a randomised controlled trial. Lancet Diabetes Endocrinol. 2016 Dec;4(12):983–95. doi: 10.1016/S2213-8587(16)30284-4.

- 26. Cheatham SW, Stull KR, Fantigrassi M, Motel I. The efficacy of wearable activity tracking technology as part of a weight loss program: a systematic review. J Sports Med Phys Fitness. 2018 Apr;58(4):534–48. doi: 10.23736/S0022-4707.17.07437-0.

- 27. Patel MS, Benjamin EJ, Volpp KG, Fox CS, Small DS, Massaro JM, Lee JJ, Hilbert V, Valentino M, Taylor DH, Manders ES, Mutalik K, Zhu J, Wang W, Murabito JM. Effect of a game-based intervention designed to enhance social incentives to increase physical activity among families: the BE FIT randomized clinical trial. JAMA Intern Med. 2017 Nov 1;177(11):1586–93. doi: 10.1001/jamainternmed.2017.3458. http://europepmc.org/abstract/MED/28973115.

- 28. Adams MA, Hurley JC, Todd M, Bhuiyan N, Jarrett CL, Tucker WJ, Hollingshead KE, Angadi SS. Adaptive goal setting and financial incentives: a 2 × 2 factorial randomized controlled trial to increase adults' physical activity. BMC Public Health. 2017 Mar 29;17(1):286. doi: 10.1186/s12889-017-4197-8. https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-017-4197-8.

- 29. Scott-Sheldon LA, Lantini R, Jennings EG, Thind H, Rosen RK, Salmoirago-Blotcher E, Bock BC. Text messaging-based interventions for smoking cessation: a systematic review and meta-analysis. JMIR Mhealth Uhealth. 2016 May 20;4(2):e49. doi: 10.2196/mhealth.5436. http://mhealth.jmir.org/2016/2/e49/

- 30. Thakkar J, Kurup R, Laba TL, Santo K, Thiagalingam A, Rodgers A, Woodward M, Redfern J, Chow CK. Mobile telephone text messaging for medication adherence in chronic disease: a meta-analysis. JAMA Intern Med. 2016 Mar;176(3):340–9. doi: 10.1001/jamainternmed.2015.7667.

- 31. Long H, Bartlett YK, Farmer AJ, French DP. Identifying brief message content for interventions delivered via mobile devices to improve medication adherence in people with type 2 diabetes mellitus: a rapid systematic review. J Med Internet Res. 2019 Jan 9;21(1):e10421. doi: 10.2196/10421. http://www.jmir.org/2019/1/e10421/

- 32. Fletcher BR, Hartmann-Boyce J, Hinton L, McManus RJ. The effect of self-monitoring of blood pressure on medication adherence and lifestyle factors: a systematic review and meta-analysis. Am J Hypertens. 2015 Oct;28(10):1209–21. doi: 10.1093/ajh/hpv008.

- 33. Deloitte US. 2017. [2019-07-30]. Global Mobile Consumer Survey: US Edition. A New Era in Mobile Continues. https://www2.deloitte.com/us/en/pages/technology-media-and-telecommunications/articles/global-mobile-consumer-survey-us-edition.html.

- 34. Levine DM, Lipsitz SR, Linder JA. Changes in everyday and digital health technology use among seniors in declining health. J Gerontol A Biol Sci Med Sci. 2018 Mar 14;73(4):552–9. doi: 10.1093/gerona/glx116.

- 35. Kershaw KN, Liu K, Goff Jr DC, Lloyd-Jones DM, Rasmussen-Torvik LJ, Reis JP, Schreiner PJ, Garside DB, Sidney S. Description and initial evaluation of incorporating electronic follow-up of study participants in a longstanding multisite cohort study. BMC Med Res Methodol. 2016 Sep 23;16(1):125. doi: 10.1186/s12874-016-0226-z. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-016-0226-z.

- 36. Andreeva VA, Deschamps V, Salanave B, Castetbon K, Verdot C, Kesse-Guyot E, Hercberg S. Comparison of dietary intakes between a large online cohort study (etude nutrinet-santé) and a nationally representative cross-sectional study (etude nationale nutrition santé) in France: addressing the issue of generalizability in e-epidemiology. Am J Epidemiol. 2016 Nov 1;184(9):660–9. doi: 10.1093/aje/kww016. http://europepmc.org/abstract/MED/27744386.

- 37. Murray KE, Ermias A, Lung A, Mohamed AS, Ellis BH, Linke S, Kerr J, Bowen DJ, Marcus BH. Culturally adapting a physical activity intervention for Somali women: the need for theory and innovation to promote equity. Transl Behav Med. 2017 Mar;7(1):6–15. doi: 10.1007/s13142-016-0436-2. http://europepmc.org/abstract/MED/27558245.

- 38. Nebeker C, Murray K, Holub C, Haughton J, Arredondo EM. Acceptance of mobile health in communities underrepresented in biomedical research: barriers and ethical considerations for scientists. JMIR Mhealth Uhealth. 2017 Jun 28;5(6):e87. doi: 10.2196/mhealth.6494. http://mhealth.jmir.org/2017/6/e87/