Abstract

Cortical neurons exhibit tremendous variability in the number and temporal distribution of spikes in their discharge patterns. Furthermore, this variability appears to be conserved over large regions of the cerebral cortex, suggesting that it is neither reduced nor expanded from stage to stage within a processing pathway. To investigate the principles underlying such statistical homogeneity, we have analyzed a model of synaptic integration incorporating a highly simplified integrate and fire mechanism with decay. We analyzed a “high-input regime” in which neurons receive hundreds of excitatory synaptic inputs during each interspike interval. To produce a graded response in this regime, the neuron must balance excitation with inhibition. We find that a simple integrate and fire mechanism with balanced excitation and inhibition produces a highly variable interspike interval, consistent with experimental data. Detailed information about the temporal pattern of synaptic inputs cannot be recovered from the pattern of output spikes, and we infer that cortical neurons are unlikely to transmit information in the temporal pattern of spike discharge. Rather, we suggest that quantities are represented as rate codes in ensembles of 50–100 neurons. These column-like ensembles tolerate large fractions of common synaptic input and yet covary only weakly in their spike discharge. We find that an ensemble of 100 neurons provides a reliable estimate of rate in just one interspike interval (10–50 msec). Finally, we derived an expression for the variance of the neural spike count that leads to a stable propagation of signal and noise in networks of neurons—that is, conditions that do not impose an accumulation or diminution of noise. The solution implies that single neurons perform simple algebra resembling averaging, and that more sophisticated computations arise by virtue of the anatomical convergence of novel combinations of inputs to the cortical column from external sources.

Keywords: noise, rate code, temporal coding, correlation, interspike interval, spike count variance, response variability, visual cortex, synaptic integration, neural model

Since the earliest single-unit recordings, it has been apparent that the irregularity of the neural discharge might limit the sensitivity of the nervous system to sensory stimuli (for review, see Rieke et al., 1997). In visual cortex, for example, repeated presentations of an identical stimulus elicit a variable number of action potentials (Schiller et al., 1976; Dean, 1981; Tolhurst et al., 1983; Vogels et al., 1989; Snowden et al., 1992; Britten et al., 1993), and the time between successive action potentials [interspike interval (ISI)] is highly irregular (Tomko and Crapper, 1974; Softky and Koch, 1993). These observations have led to numerous speculations on the nature of the neural code (Abeles, 1991; Konig et al., 1996; Rieke et al., 1997). On the one hand, the irregular timing of spikes could convey information, imparting broad information bandwidth on the neural spike train, much like a Morse code. Alternatively, this irregularity may reflect noise, relegating the signal carried by the neuron to a crude estimate of spike rate.

In principle we could ascertain which view is correct if we knew how neurons integrate synaptic inputs to produce spike output. One possibility is that specific patterns or coincidences of presynaptic events give rise to precisely timed postsynaptic spikes. Accordingly, the output spike train would reflect the precise timing of relevant presynaptic events (Abeles, 1982, 1991; Lestienne, 1996). Alternatively, synaptic input might affect the probability of a postsynaptic spike, whereas the precise timing is left to chance. Then presynaptic inputs would determine the average rate of postsynaptic discharge, but spike times, patterns, and intervals would not convey information.

In this paper we propose that the irregular ISI arises as a consequence of a specific problem that cortical neurons must solve: the problem of dynamic range or gain control. Cortical neurons receive 3000–10,000 synaptic contacts, 85% of which are asymmetric and hence presumably excitatory (Peters, 1987; Braitenberg and Schüz, 1991). More than half of these contacts are thought to arise from neurons within a 100–200 μm radius of the target cell, reflecting the stereotypical columnar organization of neocortex. Because neurons within a cortical column typically share similar physiological properties, the conditions that excite one neuron are likely to excite a considerable fraction of its afferent input as well (Mountcastle, 1978; Peters and Sethares, 1991), creating a scenario in which saturation of the neuron’s firing rate could easily occur. This problem is exacerbated by the fact that EPSPs from individual axons appear to exert substantial impact on the membrane potential (Mason et al., 1991; Otmakhov et al., 1993; Thomson et al., 1993b; Matsumura et al., 1996). An individual EPSP depolarizes the membrane by 3–10% of the necessary excursion from resting potential to spike threshold, and this seems to hold for synaptic contacts throughout the dendrite regardless of the distance between synapse and soma (Hoffman et al., 1997), suggesting that a large fraction of the synapses are capable of influencing somatic membrane potential. Absent inhibition, a neuron ought to produce an action potential whenever 10–40 input spikes arrive within 10–20 msec of each other.

These findings begin to reveal the full extent of the cortical neuron’s problem. The neuron computes quantities from large numbers of synaptic input, yet the excitatory drive from only 10–40 inputs, discharging at an average rate of 100 spikes/sec, should cause the postsynaptic neuron to discharge near 100 spikes/sec. If as few as 100 excitatory inputs are active (of the ≥3000 available), the postsynaptic neuron should discharge at a rate of ≥200 spikes/sec. It is a wonder, then, that the neuron can produce any graded spike output at all. We need to understand how cortical neurons can operate in a regime in which many (e.g., ≥100) excitatory inputs arrive for every output spike. We will refer to this as a “high-input regime” to distinguish it from situations common in subcortical structures in which the activity of a few inputs determines the response of the neuron. We emphasize that we refer only to the active inputs of a neuron, which may be as few as 5–10% of its afferent synapses, although our arguments apply to all larger fractions as well. The actual fraction active is not known for any cortical neuron, but most cortical physiologists realize that large numbers of neurons are activated by simple stimuli (McIlwain, 1990) and would probably estimate the fraction as considerably greater than 5–10%.

In this paper we analyze a simple model of synaptic integration that permits presynaptic and postsynaptic neurons to respond over the same dynamic range, solving the gain control problem. The model is a variant of the random walk model proposed by Gerstein and Mandelbrot (1964) and others (for review, see Tuckwell, 1988). Although constrained by neural membrane biophysics, the model is not a biophysical implementation. There are no synaptic or voltage-gated conductances, etc. Instead, we have chosen to attack the problem of synaptic integration as a counting problem, focusing on the consequences of counting input spikes to produce output spikes. We show in Appendix , however, that a more realistic, conductance-based model undergoes the same statistical behavior.

The paper is divided into three main parts. The first concerns the problem of synaptic integration in the high-input regime. Given a plethora of synaptic input, how do neurons achieve an acceptable dynamic range of response? It turns out that the solution to this problem imposes a high degree of irregularity on the pattern of action potentials—the price of a reasonable dynamic range is noise. The rest of the paper concerns implications of this noise on the reliability of neural signals. Part 2 explores the consequences of shared connections among neurons. Redundancy is a natural strategy for encoding information in noisy neurons and is a well established principle of cortical organization (Mountcastle, 1957). We examine its implications for correlation, synchrony, and coding fidelity. In part 3 we consider how neurons can receive variable inputs, compute with them, and produce a response with variability that is, on average, neither greater nor less than its inputs. We find a stable solution to the propagation of noise in networks of neurons and in so doing gain insight into the nature of neural computation itself. Together the exercise supports a view of neuronal coding and computation that requires large numbers of connections, much redundancy, and, consequentially, a great deal of noise.

BACKGROUND: THE OBSERVED VARIABILITY OF SINGLE NEURONS

The variability of the neuronal response is characterized in two ways: interval statistics and count statistics. Interval statistics refer to the time between successive action potentials, known as the ISI. For cortical neurons, the ISI is highly irregular. Because this interval is simply the reciprocal of the discharge rate at any instant, a neuron that modulates its discharge rate over time must exhibit variability in its ISIs. Yet even a neuron that fires at a constant rate over some epoch will exhibit considerable variability among its ISIs. In fact the distribution of ISIs resembles the exponential probability density of a random (Poisson) point process. To a first approximation, the time to the next spike depends only on the expected rate and is otherwise random.
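The interval statistics described above can be illustrated with a short sketch. It is not taken from the paper: it simply draws intervals from an exponential distribution (the interarrival times of a Poisson process) and compares their coefficient of variation (SD/mean) with that of a perfectly regular train. The rate of 50 spikes/sec, the sample size, and the helper name `isi_cv` are assumptions chosen for illustration.

```python
import math
import random


def isi_cv(isis):
    """Coefficient of variation (SD / mean) of a list of interspike intervals."""
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return math.sqrt(var) / mean


rng = random.Random(0)          # fixed seed so the sketch is repeatable
rate_hz = 50.0                  # assumed constant firing rate
n_intervals = 20000             # assumed sample size

# Poisson process: intervals are exponential with mean 1 / rate.
poisson_isis = [rng.expovariate(rate_hz) for _ in range(n_intervals)]
# Perfectly regular train at the same rate, for contrast.
regular_isis = [1.0 / rate_hz] * n_intervals

poisson_cv = isi_cv(poisson_isis)
regular_cv = isi_cv(regular_isis)
print("CV of exponential ISIs:", round(poisson_cv, 3))
print("CV of regular ISIs:", round(regular_cv, 3))
```

For exponential intervals the CV is expected to be close to 1, whereas a clock-like train has a CV near 0; both trains have the same mean rate, which is why rate alone does not capture irregularity.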

Count statistics refer to the number of spikes produced in an epoch of fixed duration. Under experimental conditions it is possible to estimate the mean and variability of the spike count by repeating the measurement many times. A typical example is the number of spikes produced by a neuron in the primary visual cortex when a bar of light is passed through its receptive field. For cortical neurons, repeated presentations of the identical stimulus yield highly variable spike counts. The variance of spike counts over repeated trials has been measured in several visual cortical areas in monkey and cat. The relationship between the count variance and the count mean is linear when plotted on log–log graph, with slope just greater than unity. A reasonable approximation is that the response variance is about 1.5 times the mean response (Dean, 1981; Tolhurst et al., 1983; Bradley et al., 1987; Scobey and Gabor, 1989; Vogels et al., 1989; Snowden et al., 1992; Britten et al., 1993; Softky and Koch, 1993; Geisler and Albrecht, 1997).
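As a reference point for the count statistics above, the following sketch estimates the mean and variance of the spike count over repeated trials for a homogeneous Poisson train. It is an illustration under assumed parameters (50 spikes/sec, 200 msec epochs, 5000 trials), not an analysis of the recorded data; the function name `poisson_count` is hypothetical.

```python
import random


def poisson_count(rate_hz, duration_s, rng):
    """Number of spikes of a homogeneous Poisson train within one epoch."""
    t = rng.expovariate(rate_hz)
    n = 0
    while t < duration_s:
        n += 1
        t += rng.expovariate(rate_hz)
    return n


rng = random.Random(0)
rate_hz = 50.0       # assumed firing rate
duration_s = 0.2     # assumed epoch duration (200 msec)
n_trials = 5000      # assumed number of repeated trials

counts = [poisson_count(rate_hz, duration_s, rng) for _ in range(n_trials)]
mean_count = sum(counts) / n_trials
var_count = sum((c - mean_count) ** 2 for c in counts) / (n_trials - 1)
variance_to_mean = var_count / mean_count

print("mean count:", round(mean_count, 2))
print("count variance:", round(var_count, 2))
print("variance / mean:", round(variance_to_mean, 2))
```

A Poisson process gives a variance-to-mean ratio near 1. The cortical measurements cited above report a ratio of about 1.5, so the Poisson case serves only as a baseline for comparison.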

What is particularly striking about both interval and counting statistics is that they seem to be fairly homogeneous throughout the cerebral cortex (Softky and Koch, 1993; Lee et al., 1998). Measurements of ISI variability are difficult, because any measured variation is only meaningful if the rate is a constant. Nevertheless, the sound of a neural spike train played through a loudspeaker is remarkably similar in most regions of the neocortex and contrasts remarkably with subcortical spike trains, whose regularity often evokes tones. Such gross homogeneity among cortical areas implies that the inputs to, and the outputs from, a typical cortical neuron conform to common statistical principles. To the electrophysiologist recording from neurons in cortical columns, it is clear that nearby neurons respond under similar conditions and that their response magnitudes are roughly similar. Neurons encountered within the column are fairly representative of the inputs of any one neuron and, in a rough sense, the targets of any one neuron (Braitenberg and Schüz, 1991). Again, we emphasize that it is only the neuron’s active inputs to which we refer.

Table 1 lists properties of the neural response that apply more or less equivalently to a neuron as well as to its inputs and its targets. These properties are to be interpreted as rough rules of thumb, but they pose important constraints for the flow of impulses and information through networks of cortical neurons.

Table 1.

Properties of statistical homogeneity for cortical neurons

| Response property | Approximate value |
|---|---|
| Dynamic range of response | 0–200 spikes/sec |
| Distribution of interspike intervals | Approximately exponential |
| Spike count variance | Variance ∼1–1.5 times the mean count |
| Spike rate modulation | Expected rate can vary in ∼1 ISI, 5–10 msec |

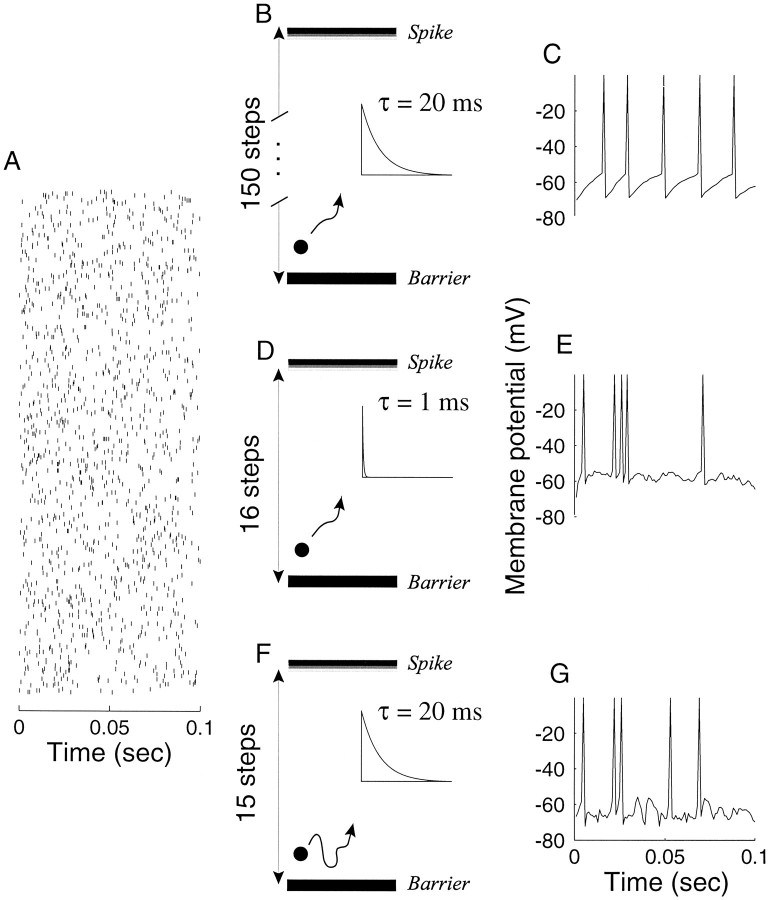

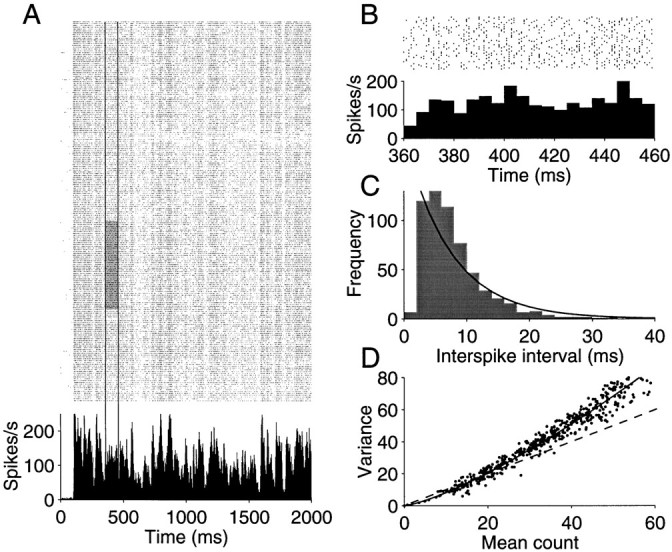

Figure 1 illustrates these properties for a neuron recorded from the middle temporal visual area (MT or V5) of a behaving monkey, a visual area that is specialized for processing motion information (for review, see Albright, 1993). Figure 1 shows 210 repetitions of the spike train produced by this neuron when an identical sequence of random dots was displayed dynamically in the receptive field of the neuron. The stimulus contained rapid fluctuations of luminance and random motion, which produced similarly rapid fluctuations in the neural discharge. The fluctuations in discharge appear stereotyped from trial to trial, as is evident from the vertical stripe-like structure in the raster and from the peristimulus time histogram (PSTH) below. The PSTH shows the average response rate calculated in 2 msec epochs. The spike rate varied between 15 and 220 impulses/sec (mean ± 2σ). A power spectral density analysis of this rate function reveals significant modulation at 50 Hz, suggesting that the neuron is capable of expressing a change in its rate of discharge every 10 msec or less (Bair and Koch, 1996). Thus the neuron is capable of computing quantities over an interval comparable to the average ISI of an active neuron.

Fig. 1.

Response variability of a neuron recorded from area MT of an alert monkey. A, Raster and peristimulus time histogram (PSTH) depicting response for 210 presentations of an identical random dot motion stimulus. The motion stimulus was shown for 2 sec. Raster points represent the occurrence of action potentials. The PSTH plots the spike rate, averaged in 2 msec bins, as a function of time from the onset of the visual stimulus. The response modulates between 15 and 220 impulses/sec. Vertical lines delineate a period in which spike rate was fairly constant. The gray region shows 50 trials from this epoch, which were used to construct B and C. B, Magnified view of the shaded region of the raster in A. The spike rate, computed in 5 msec bins, is fairly constant. Notice that the magnified raster reveals substantial variability in the timing of individual spikes. C, Frequency histogram depicting the spike intervals in B. The solid line is the best fitting exponential probability density function. D, Variance of the spike count is plotted against the mean number of spikes obtained from randomly chosen rectangular regions of the raster in A. Each point represents the mean and variance of the spikes counted from 50 to 200 adjacent trials in an epoch from 100 to 500 msec long. The shaded region of A would be one such example. The best fitting power law is shown by the solid curve. The dashed line is the expected relationship for a Poisson point process.

At first glance, the pattern of spike arrival times appears fairly consistent from trial to trial, but this turns out to be illusory. A closer examination of any epoch reveals considerable variability in both the time of spikes and their counts. Figure 1B magnifies the spikes occurring between 360 and 460 msec after stimulus onset for 50 consecutive trials, corresponding to the shaded region of Figure 1A. We selected this subset of the raster, because the discharge rate was fairly constant during this epoch and because it represents one of the more consistent patterns of spiking in the record. Nevertheless, the ISIs show marked variability. The mean is 7.35 msec, and the SD is 5.28 msec. We will frequently refer to the ratio, SD/mean, as the coefficient of variation of the ISI distribution (CV_ISI). The value from these intervals is 0.72. The ISI frequency histogram (Fig. 1C) is fit reasonably well by an exponential distribution (solid curve), the expected distribution of interarrival times for a random (Poisson) point process. Although some of the variability in the ISIs may be attributable to fluctuations in spike rate, the pattern of spikes is clearly not the same from trial to trial.

This point is emphasized further by simply counting the spikes produced during the epoch. If the pattern of spikes were at all reproducible, we would expect consistency in the spike count. The mean for the highlighted epoch was 12.8 spikes, and its variance was 8.22. We made similar calculations of the mean count and its associated variance for randomly selected epochs lasting from 100 to 500 msec, including from 50 to 200 consecutive trials. The mean and variance for 500 randomly chosen epochs are shown by the scatter plot in Figure 1D. The main diagonal in this graph (Var = mean) is the expected relationship for a Poisson process. Notice that the measured variance typically exceeds the mean count. The example illustrated above (highlighted region of Fig. 1A) is one of the rare exceptions. The variance is commonly described by a power law function of the mean count. The solid curve depicts the fit, Var = 0.4 × mean^1.3, but the fit is only marginally better than a simple proportional rule: Var ≈ 1.3 × mean. Both the timing and count analyses suggest that the structured spike discharge apparent in the raster could be explained as a random point process with varying rate. The process is not exactly Poisson (e.g., the variance is too large), a point to which we shall return in detail. However, the key point is that the structure evident in the raster of Figure 1 is only a manifestation of a time-varying spike rate. The visual stimulus causes the neuron to modulate its spike rate consistently from trial to trial, whereas the timing of individual spikes (their intervals and patterns) is effectively random, hence best regarded as noise.
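A power law of the form Var = a × mean^b is a straight line in log–log coordinates, so its parameters can be estimated by ordinary least squares on the logarithms. The sketch below shows one way this could be done; it is not the authors' fitting procedure, and the (mean, variance) pairs are synthetic, noise-free values generated from the reported fit (a = 0.4, b = 1.3) purely to check that the procedure recovers them. The function name `fit_power_law` is hypothetical.

```python
import math


def fit_power_law(means, variances):
    """Least-squares line in log-log coordinates: log(var) = log(a) + b*log(mean)."""
    xs = [math.log(m) for m in means]
    ys = [math.log(v) for v in variances]
    n = len(xs)
    xbar = sum(xs) / n
    ybar = sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b = sxy / sxx                      # exponent (slope on the log-log plot)
    a = math.exp(ybar - b * xbar)      # coefficient (from the intercept)
    return a, b


# Synthetic mean counts and variances; NOT the recorded data.
means = [2.0, 5.0, 10.0, 20.0, 50.0, 100.0]
variances = [0.4 * m ** 1.3 for m in means]

a, b = fit_power_law(means, variances)
print("coefficient a:", round(a, 3), "exponent b:", round(b, 3))

# Mean count at which 0.4 * mean**1.3 equals 1.3 * mean.
crossover = (1.3 / 0.4) ** (1.0 / 0.3)
print("the two rules agree near a mean count of", round(crossover, 1))
```

By this arithmetic the power law and the proportional rule coincide at a mean count of roughly 51 spikes, with the power law predicting smaller variance below that count and larger variance above it.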

The neuron in Figure 1 illustrates the four properties of statistical homogeneity listed in Table 1: dynamic range, irregularity of the ISI, spike count variance, and the time course of spike rate modulation. As suggested above, it seems likely that these properties are characteristic of the neuron’s afferent inputs and its output targets alike. Its dynamic range is typical of MT neurons, as well as of V1 neurons that project to MT (Movshon and Newsome, 1996) and neurons in MST (Celebrini and Newsome, 1994), a major target of MT projections. Indeed, neurons throughout the neocortex appear to be capable of discharging over a dynamic range of ∼0–200 impulses/sec. Second, the ISIs from this neuron are characteristic of other neurons in its column and elsewhere in the visual cortex (Softky and Koch, 1993). Where it has been examined, the distribution of ISIs has a long “exponential” tail that is suggestive of a Poisson process. Third, the variance of the spike count of this neuron exceeds the mean by a margin that is typical of neurons throughout the visual cortex. Finally, the rapid modulation of the discharge rate occurs at a time scale that is on the order of an ISI of any one of its inputs. Our goal is to understand the basis of these statistical properties in single neurons and their conservation in networks of interconnected neurons.

MATERIALS AND METHODS

Physiology. Electrophysiological data (as in Fig. 1) were obtained by standard extracellular recording of single neurons in the alert rhesus monkey (Macaca mulatta). A full description of methods can be found in Britten et al. (1992). Experiments were in compliance with the National Institutes of Health guidelines for care and treatment of laboratory animals. The unit in Figure 1 was recorded from the middle temporal visual area (MT or V5). These trials were extracted from an experiment in which the monkey judged the net direction of motion of a dynamic random dot kinematogram, which was displayed for 2 sec in the receptive field while the monkey fixated a small spot. In the particular trials shown in Figure 1, dots were plotted at high speed at random locations on the screen, resulting in a stochastic motion display with no net motion in any direction. Importantly, however, the exact pattern of random dots was repeated for each of the trials shown.

Model neuron. We performed computer simulations of neural discharge using a simple counting model of synaptic integration. Both excitatory and inhibitory inputs to the neuron are modeled as simple time series. With a few key exceptions, they are constructed as a sequence of spike arrival times with intervals that are drawn from an exponential distribution. The model neuron counts these inputs; when the count exceeds some threshold barrier, it emits an output spike and resets the count to zero. Each excitatory synaptic input increments the count by one unit step. The count decays to zero with a time constant, τ, representing the membrane time constant or integration time constant. Each inhibitory input decrements the count by one unit. If the count reaches a negative barrier, however, it can go no further. Thus inhibition subtracts from any accumulated count, but it does not hyperpolarize the neuron beyond this barrier. Except where noted, we placed this reflecting barrier at the resting potential (zero) or one step below it.
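The counting rule described above can be written as a single update step. The following is a minimal sketch under stated assumptions, not the authors' implementation: the count is treated as a real number that decays exponentially between events, the threshold test uses "greater than or equal", the reflecting barrier is placed at zero, and the function name `update_count` and its default parameters (τ = 20 msec, threshold of 15 steps) are chosen for illustration.

```python
import math


def update_count(count, dt_s, event, tau_s=0.020, threshold=15.0,
                 lower_barrier=0.0):
    """Apply dt_s seconds of decay, then one synaptic event.

    event is "E" (excitatory, +1 step) or "I" (inhibitory, -1 step).
    Returns (new_count, spiked).
    """
    # Decay toward zero with time constant tau_s since the previous event.
    count *= math.exp(-dt_s / tau_s)
    if event == "E":
        count += 1.0
        if count >= threshold:         # assumed threshold test
            return 0.0, True           # emit a spike and reset the count
        return count, False
    # Inhibition subtracts one step but cannot pass the reflecting barrier.
    return max(count - 1.0, lower_barrier), False


# A count of 2 decays for one time constant, then receives one EPSP.
print(update_count(2.0, 0.020, "E"))
# An EPSP that brings the count to threshold triggers a spike and a reset.
print(update_count(14.0, 0.0, "E"))
# Inhibition at the barrier has no further effect.
print(update_count(0.0, 0.0, "I"))
```

Because the only state is the current count, a full simulation reduces to calling this step once per input spike in order of arrival.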

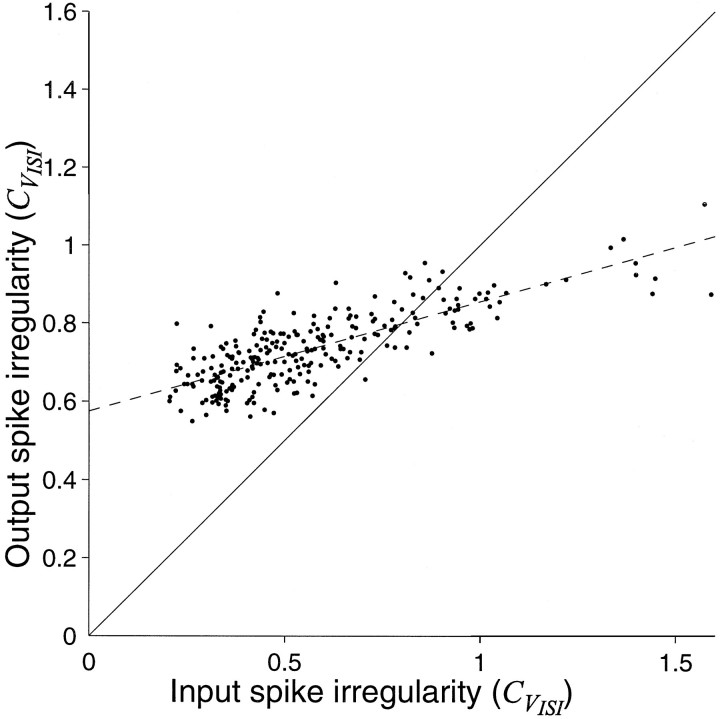

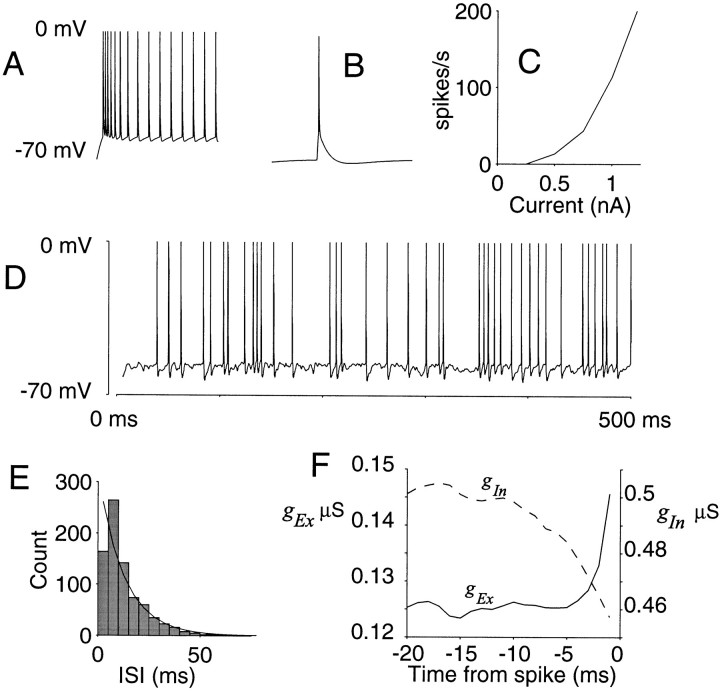

Figure 2, B, D, and F, represents the count by the height of a particle. Excitation drives the particle toward an absorbing barrier at spike threshold, whereas inhibition drives the particle toward a reflecting barrier (represented by the thick solid line) just below zero. The particle represents the membrane voltage or the integrated current arriving at the axon hillock. The height of the absorbing barrier is inversely related to the size of an excitatory synaptic potential. It is the number of synchronous excitatory inputs necessary to depolarize the neuron from rest to spike threshold.

Fig. 2.

Three counting models for synaptic integration in the high-input regime. The diagrams (B, D, F) depict three strategies that would permit a neuron to count many input spikes and yet produce a reasonable spike output. For each of the strategies, model parameters were adjusted to produce an output spike count that is the same, on average, as any one input. The membrane state is represented by a particle that moves between a lower barrier and spike threshold (top bar). The height of the particle reflects the input count. Each EPSP drives the particle toward spike threshold, but the height decays to the ground state with time constant, τ (insets). When the particle reaches the top barrier, an action potential occurs, and the process begins again with the count reset to 0. A, Excitatory input to the model neurons. The 300 input spike trains are depicted as rows of a raster. Each input is modeled as a Poisson point process with a mean rate of 50 spikes/sec. The simulated epoch is 100 msec. C, E, G, Model response. The particle height is interpreted as a membrane voltage that is plotted as a function of time. These outputs were obtained using input spikes in A and the model illustrated in the middle column (B, D, F). B, C, Integrate-and-fire model with negligible inhibition and 20 msec time constant. To achieve an output of five spikes in the 100 msec interval, the spike threshold was set to 150 steps above the resting/reset state. Notice the regular interspike intervals in C. D, E, Coincidence detector. The spike threshold is only 16 steps above rest/reset, but the time constant must be 1 msec to achieve five spikes out. The coincidence detector fires if and only if there is sufficient synchronous excitation. F, G, Balanced excitation–inhibition. A second set of inputs, like the ones shown in A, provide inhibitory input. Each inhibitory event moves the particle toward the lower barrier. The spike threshold is 15 steps above rest/reset, and the time constant is 20 msec. The particle follows a random walk, constrained by the lower barrier and the absorption state at spike threshold. This model is most consistent with known properties of cortical neurons. A more realistic implementation is described in Appendix .

The model makes a number of simplifying assumptions, which are known to be incorrect. There are no active or passive electrical components in the model. We have ignored electrochemical gradients or any other factor that would influence the impact of a synaptic input on membrane polarization—with one exception. The barrier to hyperpolarization at zero is a crude implementation of the reversal potential for the ionic species that mediate inhibition. We have intentionally disregarded any variation in synaptic efficacy. All excitatory synaptic events count the same amount, and the same can be said of inhibitory inputs. Thus we are considering only those synaptic events that influence the postsynaptic neuron (no failures). We have ignored any variation in synaptic amplitude that would affect spikes arriving from the same input—because of adaptation, facilitation, potentiation, or depression—and we have ignored any differences in synaptic strength that would distinguish inputs. In this sense we have ignored the geometry of the neuron. We will justify this simplification in Discussion but state here that our strategy is conservative with respect to our aims and the conclusions we draw. Finally, we did not impose a refractory period or any variation that would occur on reset after a spike (e.g., afterhyperpolarization). The model rarely produces a spike within 1 msec of the one preceding, so we opted for simplicity. Appendix describes a more realistic model with several of the biophysical properties omitted here.

We have used this model to study the statistics of the output spike discharge. It is important to note that there is no noise intrinsic to the neuron itself. Consistent with experimental data (Calvin and Stevens, 1968; Mainen and Sejnowski, 1995; Nowak et al., 1997), all variability is assumed to reflect the integration of synaptic inputs. Because there are no stochastic components in the modeled postsynaptic neuron, the variability of the spike output reflects the statistical properties of the input spike patterns and the simple integration process described above.

A key advantage to the model is its computational simplicity. It enables large-scale simulations of synaptic integration under the assumption of dense connectivity. Thus a unique feature of the present exercise is to study the numerical properties of synaptic integration in a high-input regime, in which one to several hundred excitatory inputs arrive at the dendrite for every action potential the neuron produces.

RESULTS

1.1: Problem posed by high-input regime

Figure 2 illustrates three possible strategies for synaptic integration in the high-input regime. Figure 2A depicts the spike discharge from 300 excitatory input neurons over a 100 msec epoch. Each input is modeled as a random (Poisson) spike train with an average discharge rate of 50 impulses/sec (five spikes in the 100 msec epoch shown). The problem we wish to consider is how the postsynaptic neuron can integrate this input and yet achieve a reasonable spike rate. To be concrete, we seek conditions that allow the postsynaptic neuron to discharge at 50 impulses/sec. There is nothing special about the number 50, but we would like to conceive of a mechanism that produces a graded response to input over a range of 0–200 spikes/sec. One way to impose this constraint is to identify conditions that would allow the neuron to respond at the average rate of any one of its inputs (that is, output spike rate should approximate the number of spikes per active input neuron per time).

A counting mechanism can achieve this goal through three types of parameter manipulations: a high absorption barrier (spike threshold), a short integration time (membrane time constant), or a balancing force on the count (inhibition). Figure 2 shows how each of these manipulations can lead to an output spike rate that is approximately the same as the average input. The simplest way to get five spikes out of the postsynaptic neuron is to impose a high spike threshold. Figure 2B depicts the output from a simple integrate-and-fire mechanism when the threshold is set to 150 steps. Each synaptic input increments the count toward the absorption barrier, but the count decays with an integration time constant of 20 msec. The counts might be interpreted as voltage steps of 50–100 μV, pushing the membrane voltage from its resting potential (−70 mV) to spike threshold (−55 mV). This textbook integrate-and-fire neuron (Stein, 1965; Knight, 1972) responds at approximately the same rate as any one of its 300 excitatory inputs. There are problems, however, that render this solution untenable. The mechanism grossly underestimates the impact of individual excitatory synaptic inputs (Mason et al., 1991; Otmakhov et al., 1993; Thomson et al., 1993a, b; Thomson and West, 1993), and it produces a periodic output spike train. The regularity of the spike output in Figure 2C contrasts markedly with the random ISIs that constitute the inputs in Figure 2A. As suggested by Softky and Koch (1993), these observations are clear enough indication to jettison this mechanism.

If relatively few counts are required to reach the absorption barrier, then the synaptic integration process must incorporate an elastic force that pulls the count back toward the ground state. This can be accomplished by shortening the integration time constant or by incorporating a balancing inhibitory force that diminishes the count. Figure 2D depicts a particle that steps toward the absorption barrier with each excitatory event. It takes only 16 steps to reach spike threshold, but the count decays according to an exponential with a short time constant (τ = 1 msec). There is no appreciable inhibitory input. The resulting output is shown in Figure 2E. The simulated spike train is quite irregular, reflecting occasional coincidences of spikes among the inputs. Because of the short time constant, the coincidences are sensed with precision well below the average interspike interval. Again, had we chosen a higher threshold, we could have achieved a proper spike output with a longer time constant, but only at the price of a regular ISI (even 3 msec is too long). The mechanism illustrated in Figure 2, D and E, detects coincidental synaptic input such that only the synchronous excitatory events are represented in the output spike train. Although the coincidence detector produces an irregular ISI, it requires an unrealistically short membrane time constant (Mason et al., 1991; Reyes and Fetz, 1993). This requirement can be relaxed somewhat when spike rates are low and the inputs are sparse (Abeles, 1982), but the mechanism is probably incompatible with the high-input regime considered in this paper. This is disappointing because this model would effectively time stamp presynaptic events that are sufficient to produce a spike, providing the foundation for propagation of a precise temporal code in the form of spike intervals (Abeles, 1991; Engel et al., 1992; Abeles et al., 1993; Softky, 1994; Konig et al., 1996; Meister, 1996).

The third strategy is to balance the excitation with inhibitory input. This is illustrated in the bottom panels of Figure 2. For each of the 300 excitatory inputs shown in Figure 2A, there is an equivalent amount of inhibitory drive (data not shown). Each excitatory synaptic input drives the particle toward the absorption barrier, as in Figure 2, B and C; each inhibitory input moves the particle toward the ground state. The accumulated count decays with a time constant of 20 msec. The particle follows a random walk to the absorption barrier situated 15 steps away. The lower barrier just below the reset value crudely implements a synaptic reversal potential for the inhibitory current. The membrane potential is not permitted to fall below this value. In other words, inhibitory synaptic input is only effective when the membrane is depolarized from rest.

This model is an integrate-and-fire neuron with balanced excitation and inhibition. It implies that the neuron varies its discharge rate as a consequence of harmonious changes in its excitatory and inhibitory drive. Conditions that lead to greater excitation also lead to greater inhibition. This idea is reasonable because most of the inhibitory input to neurons arises from smooth stellate cells within the same cortical column (Somogyi et al., 1983a; DeFelipe and Jones, 1985; Somogyi, 1989; Beaulieu et al., 1992). Thus excitatory and inhibitory inputs are activated by the same stimuli; e.g., they share the same preference for orientation (Ferster, 1986), or they are affected similarly by somatosensory stimulation (Carvell and Simons, 1988; McCasland and Hibbard, 1997). Contrast this idea with the standard concept of a push–pull arrangement in which the neural response reflects the degree of imbalance between excitation and inhibition. In the high-input regime, more inhibition is needed to balance the excitatory drive. The balance confers a proper firing rate without diminishing the impact of single EPSPs or the membrane time constant (but see Appendix). The cost, however, is an irregular ISI. Gerstein and Mandelbrot (1964) first proposed that such a process would give rise to an irregular ISI, and numerous investigators have implemented similar strategies, termed random walk or diffusion models (Ricciardi and Sacerdote, 1979; Lansky and Lanska, 1987; for review see Tuckwell, 1988). What is novel in our analysis is that the same idea allows the neuron to respond over the same dynamic range as any one of its many inputs. That is, it allows the neuron to operate in a high-input regime. This simple idea has important implications for the propagation of signal and noise through neural networks of the neocortex.

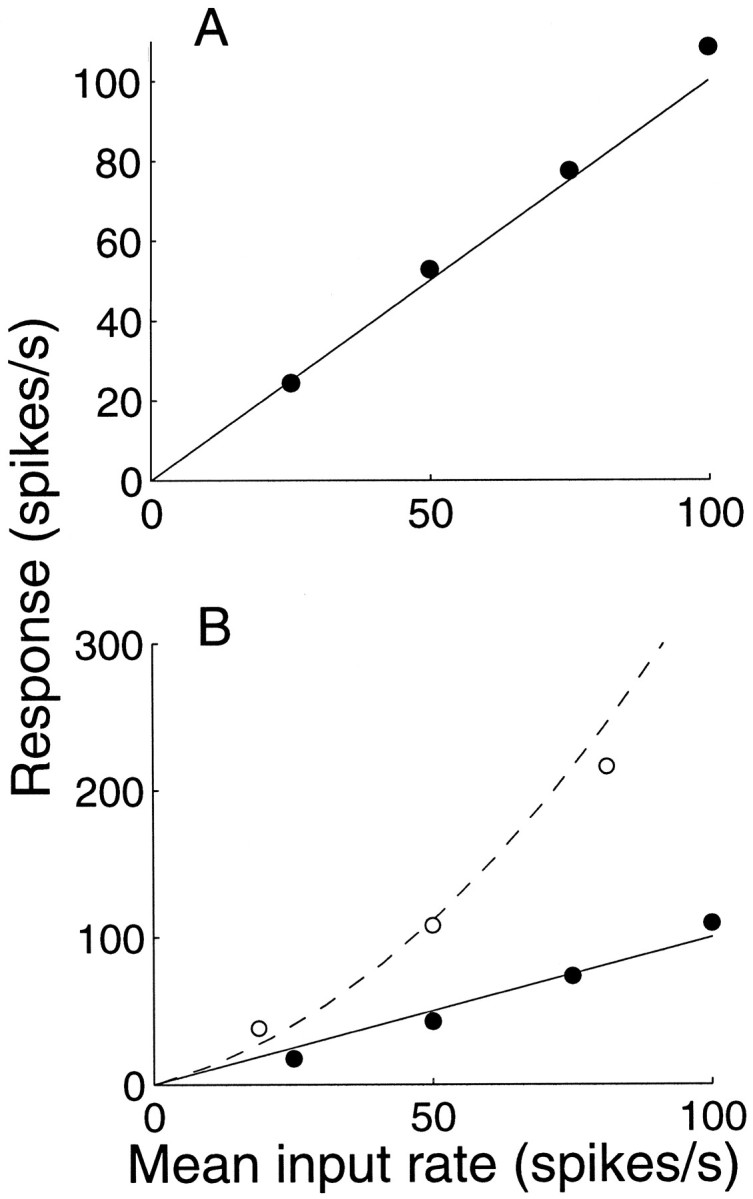

1.2: Dynamic range

The counting model with balanced excitation and inhibition achieves a proper dynamic range of response using reasonable parameters. Figure 3A shows the response of a model neuron as a function of the average response of the inputs. We used 300 excitatory and 300 inhibitory inputs in these simulations. The output response is nearly identical to the response of any one input, on average. This neuron is performing a very simple calculation, averaging, but it is doing so in a high-input regime. Consider that there are ∼300 excitatory synaptic inputs for every spike, yet each excitatory input delivers 1/15 of the depolarization necessary to reach spike threshold. By balancing the surfeit of excitation with a similar inhibitory drive, the neuron effectively compresses a large number of presynaptic events into a more manageable number of spikes. Sacrificed are details about the input spike times; they are only reflected in the tiny bumps and wiggles that describe the membrane voltage during the interspike interval. This capacity for compression permits the neuron to integrate inputs from its dendrites and thus to perform calculations on large numbers of inputs.

The mechanism should also allow the neuron to adapt to a broad range of activation in which more or fewer inputs are active. Figure 3B shows the results of simulations using twice the number of excitatory and inhibitory inputs. The dashed curve depicts the model response using the identical parameters to those in Figure 3A. The output response is now a little larger than the average input, and the relationship is approximately quadratic. The departure from linearity is attributable to the membrane time constant. At higher input rates, the count frequently accumulates toward spike threshold before there is any time to decay. Although the range of response is reasonable, it is not a sustainable solution. If every neuron were to exhibit such amplification, the response would exceed the observed dynamic range in very few synapses. Imagine a chain of neurons, each squaring the response of its averaged input.

A small adjustment to the model repairs this. The solid curve in this graph was obtained after changing the height of the threshold barrier from 15 to 25. The neuron can now accommodate a doubling of the number of inputs. With what amounts to a few millivolts of hyperpolarization, the neuron can achieve substantial control of its gain. Such a mechanism has been shown to underlie the phenomenon of contrast adaptation in visual cortical neurons (Carandini and Ferster, 1997). In addition, the curves in Figure 3B raise the possibility that a neuron could compute the square of a quantity by a small adjustment in its resting membrane potential or conductance. This observation may be relevant to computational models that use squaring-type nonlinearities (Adelson and Bergen, 1985; Heeger, 1992a).

Our central point is that a simple counting model can accommodate large numbers of inputs with relatively modest adjustment of parameters. It is essential, however, that a balance of inhibition holds. Because excitatory synapses typically outnumber inhibitory inputs by about 6:1 for cortical neurons (Somogyi, 1989; Braitenberg and Schüz, 1991), it is possible that the control of excitation (e.g., presynaptic release probability or synaptic depression) may play a role in maintaining the balance (Markram and Tsodyks, 1996; Abbott et al., 1997).

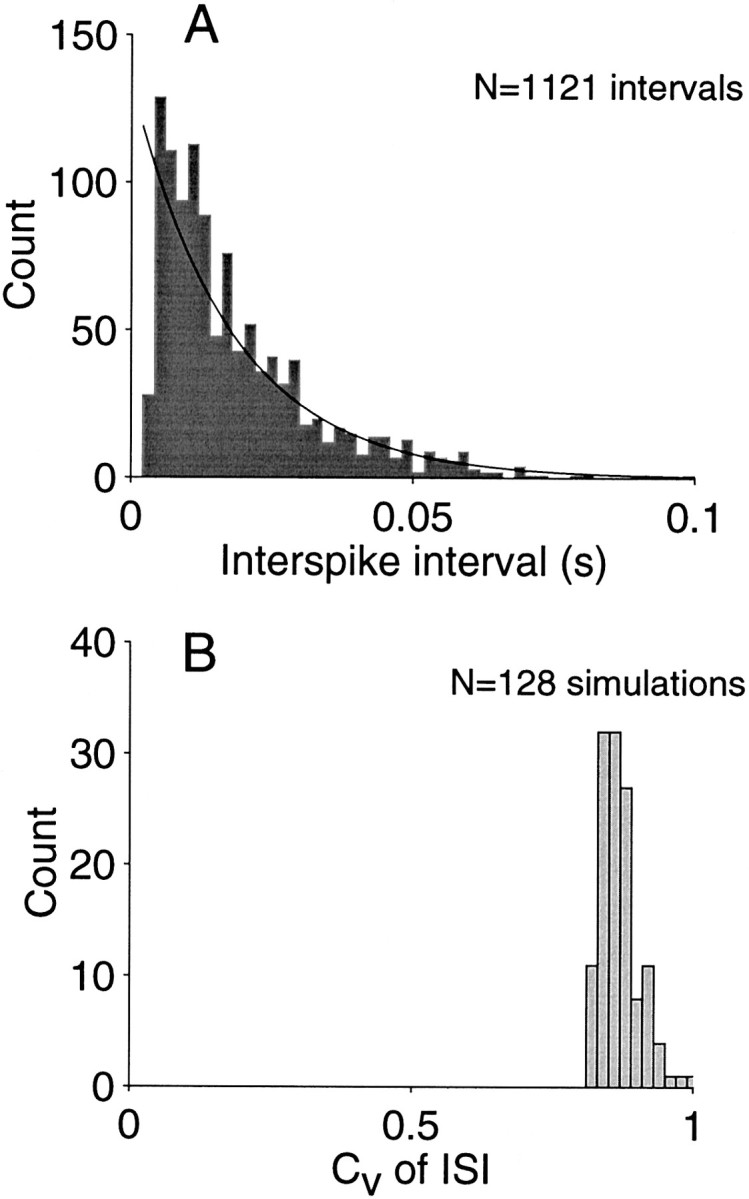

1.3: Irregularity of the interspike interval

As indicated in the preceding section, a consequence of the balanced excitation–inhibition model is an irregular ISI. Figure 4A shows a representative interval histogram for one simulation. The intervals were collated from 20 sec of simulated response at a nominal rate of 50 spikes/sec. The solid curve is the best fitting exponential probability density function.

The variability of the ISI is commonly measured by its coefficient of variation (CVISI = SD/mean). The value for the example in Figure 4A is 0.9, just less than the value expected of a random process (for an exponential distribution, CVISI = 1). The value is typical for these simulations, appearing impervious to spike rate or the number of inputs. Figure 4B shows the distribution of CVISI obtained for 128 simulations incorporating a variety of parameters including those used to produce Figure 3 (solid symbols). The simulations encompassed a broad range of spike rates, but all produced an irregular spike output. The CVISI of 0.8–0.9 reflects a remarkable degree of variation in the ISI. Because the model is effectively integrating the response from a very large number of neurons, one might expect such a process to effectively “clean up” the irregularity of the inputs, as in Figure 2C (Softky and Koch, 1993). The irregularity is a consequence of the balance between excitation and inhibition, suggesting an analogy between the ISI and the distribution of first passage times of random-walk (diffusion) processes.

Fig. 3.

Conservation of response dynamic range. The spike rate of the model neuron is plotted as a function of the average input spike rate. A, Simulations with 300 excitatory inputs and 300 inhibitory inputs; parameters are the same as in Figure 2, F and G (barrier height, 15 steps; τ = 20 msec). The balanced excitation–inhibition model produces a response that is approximately the same as one of its many inputs. B, Simulations with 600 excitatory and inhibitory inputs. Open symbols and dashed curve show the response obtained using the same model parameters as in A. Solid symbols and curve show the response when the barrier height is increased to 25 steps. These simulations suggest that a small hyperpolarization could be applied to enforce a unity gain input–output relationship when the number of active inputs is large.

In our simple counting model, the relationship between input and output spikes is entirely deterministic. All inputs affect the neuron with the same strength, and there is no chance for an input to fail. The only source of irregularity in the model is the time of the input spikes themselves. We simulated the input spike trains as random point processes, and the counting mechanism nearly preserved the exponential distribution of ISIs in its output. But it did not do so completely; the CVISI was slightly <1. This raises a possible concern. To what extent does the output spike irregularity depend on our choice of inputs? Suppose the input spike trains are more regular than Poisson spike trains; suppose they are only as irregular as the spike trains produced by the model. Would the counting mechanism reduce the irregularity further?

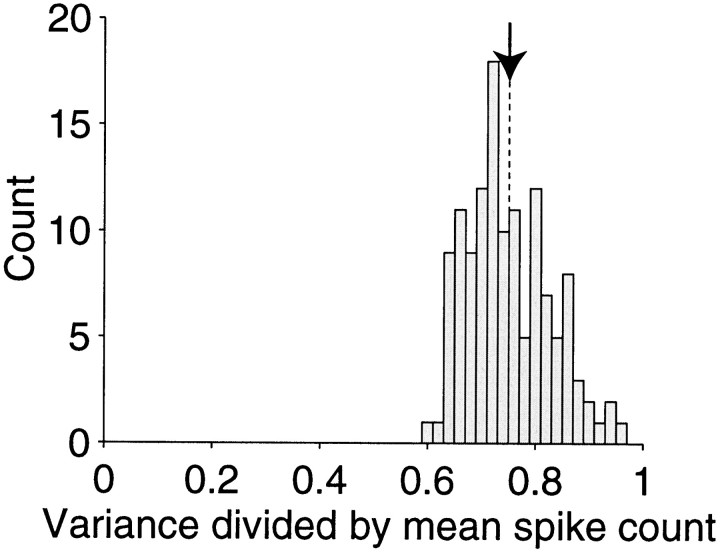

Figure 5 shows the results of a series of simulations in which we varied the statistics of the input spike trains. We used the same simulation parameters as in Figure 3A but constructed the input spike trains by drawing ISIs from families of gamma distributions that lead to more or less irregular intervals than the Poisson case (Mood et al., 1963). By varying the parameters of the distribution we maintained the same input rate while affecting the degree of irregularity of the spike intervals. Figure 5 plots the CVISI of our model neuron as a function of the CVISI for the inputs. The Poisson-like inputs would have a CVISI of 1. Notice that for a wide range of input CVISI, the output of the counting model attains a CVISI that is quite restricted and relatively large. The fit intersects the main diagonal at CVISI = 0.8. This implies that the mechanism would effectively randomize more structured input and tend to regularize (slightly) a more irregular input. Most importantly, the result indicates that the output irregularity is not merely a reflection of input spike irregularity. The irregular ISI is a consequence of the balanced excitation–inhibition model.
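One way to construct input intervals with a fixed rate and an adjustable CVISI is sketched below. It relies on two standard properties of the gamma distribution: the mean equals shape × scale, and the coefficient of variation equals 1/√shape. The function name `gamma_isis` and its arguments are hypothetical, and the parameterization is an assumption; the text does not say how the families of distributions were actually parameterized.

```python
import math
import random

def gamma_isis(rate, cv, n, seed=0):
    """Draw n interspike intervals (sec) with mean 1/rate and the requested CV.

    Assumed parameterization: shape = 1/cv**2, scale = 1/(rate * shape).
    cv = 1 gives exponential (Poisson-like) intervals, cv < 1 more regular
    intervals, and cv > 1 more irregular intervals.
    """
    shape = 1.0 / cv ** 2
    scale = 1.0 / (rate * shape)
    rng = random.Random(seed)
    return [rng.gammavariate(shape, scale) for _ in range(n)]

def sample_cv(intervals):
    """Coefficient of variation (SD/mean) of a list of intervals."""
    mean = sum(intervals) / len(intervals)
    var = sum((x - mean) ** 2 for x in intervals) / len(intervals)
    return math.sqrt(var) / mean
```

Calling `gamma_isis(50.0, 0.5, 1000)` would, for instance, give intervals for a 50 spikes/sec train that is more regular than Poisson; the rate stays fixed because the scale is rescaled whenever the shape changes.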

Fig. 5.

Irregularity of the spike discharge is not merely a reflection of input spike irregularity. The graph compares the irregularity of the ISI produced by the balanced excitation–inhibition model with the irregularity of the intervals constituting the 300 excitatory and inhibitory input spike trains. The input spike trains were constructed by drawing intervals randomly from a gamma distribution. By varying the parameters of the gamma distribution, the input CVISI was adjusted from relatively regular to highly irregular (abscissa). Each point represents the results of one simulation, using different parameters for the input interval distribution. Notice that the degree of input irregularity has only a weak effect on the distribution of output interspike intervals. Points above the main diagonal represent simulations in which the counting model produced a more irregular discharge than the input spike trains. Points below the main diagonal represent simulations in which the output is less irregular than the input spike trains. The dashed line is the least squares fit to the data. This line intersects the main diagonal at CVISI = 0.8. The best fitting line does not extrapolate to the origin, because the inputs are not necessarily synchronous.

These ideas are consistent with Calvin and Stevens’ (1968) seminal observations in motoneurons that the noise affecting spike timing is attributable to synaptic input rather than stochastic properties of the neuron itself (e.g., variable spike threshold) (Calvin and Stevens, 1968; Mainen and Sejnowski, 1995; Nowak et al., 1997). Nonetheless, if the random walk to a barrier offers an adequate explanation of ISI variability, then it is natural to view the irregular ISI as a signature of noise and to reject the notion that it carries a rich temporal code. The important insight is that the irregular ISI may be a consequence of synaptic integration and yet may reflect little if any information about the temporal structure of the synaptic inputs themselves (Shadlen and Newsome, 1994; van Vreeswijk and Sompolinsky, 1996).

1.4: Variance of spike count

It is important to realize that the coefficient of variation that we have calculated is an idealized quantity. It rests on the assumption that the input rates are constant and that the input spike trains are uncorrelated. Under these assumptions the number of input spikes arriving in any epoch would be quite precise. For example, at an average input rate of 100 spikes/sec, the number of spikes arising over the ensemble of 300 inputs varies by only ∼2% in any 100 msec interval. Variability produced by the model is therefore telling us how much noise the neuron would add to a simple computation (e.g., the mean) when the solution ought to be the same in any epoch. This will turn out to be a useful concept (see section 3 below), but it is not anything that we can actually measure in a living brain. In reality, inputs are not independent, and the number of spikes among the population of inputs would be expected to be more variable. We will attach numbers to these caveats in subsequent sections. For now, it is interesting to calculate one more idealized quantity.
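The ∼2% figure can be checked with a short calculation, under the simplifying assumption that the 300 inputs behave as independent Poisson processes, so that the pooled count in a window has a variance equal to its mean. The symbols N (number of inputs), λ (rate per input), and T (window length) are introduced here only for illustration:

$$
\mu = N \lambda T = 300 \times 100\ \text{s}^{-1} \times 0.1\ \text{s} = 3000,
\qquad
\frac{\sigma}{\mu} = \frac{\sqrt{\mu}}{\mu} = \frac{1}{\sqrt{3000}} \approx 0.018
$$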

If, over repeated epochs, the number of input spikes were indeed identical (or nearly so), how would the spike count of the output neuron vary over repeated measures? Using the same simulations as in Figures 3 and 4, we divided each 20 sec simulation into 200 epochs of 100 msec. We computed the mean and variance of the spikes counted in these epochs and calculated the ratio: variance/mean. Figure 6 shows the distribution of variance/mean ratios for a variety of spike output rates and model parameters. The ratios are concentrated between 0.7 and 0.8, just slightly less than the value expected for a random Poisson point process.

Fig. 4.

Variability of the interspike interval. A, Frequency histogram of ISIs from one simulation using 300 inputs at 50 spikes/sec. Notice the substantial variability. The SD divided by the mean interval is known as the coefficient of variation of the interspike interval (CVISI). The value for this simulation is 0.9. The distribution is approximated by an exponential probability density (solid curve), which would predict CVISI = 1. B, Coefficient of variation of the interspike interval (CVISI) from 128 simulations using 300 and 600 inputs and a variety of spike rates. Each simulation generated 20 sec of spike discharge using parameters that led to a similar rate of discharge for input and output neurons (i.e., a common dynamic range). The average CVISI was 0.87.

Fig. 6.

Frequency histogram of the spike count variance-to-mean ratios obtained from the same simulations as in Figure 4B. For each of the simulations, the spikes were counted in 200 epochs of 100 msec duration. The variance in the number of spikes produced by the model in each of these epochs is proportional to the mean of the counts obtained for these epochs. Spike count variability is therefore conveniently summarized by the variance-to-mean ratio. The average ratio is 0.75 (arrow).

There are two salient points. First, notice that the histogram of variance/mean ratios appears similar to the histogram of CVISI from the same simulations (Fig. 4B). In fact, the ratios in Figure 6 are approximated by squaring the values for CVISI in Figure 4B. This is a well known property of interval and count statistics for a class of stochastic processes known as renewals (Smith, 1959). We will elaborate this point in section 3. Second, the variance/mean ratios fall short of the value measured in visual cortex (i.e., 1–1.5). Clearly the variability observed in vivo reflects sources of noise beyond the mechanisms we have considered. In contrast to our simulations, a real neuron does not receive an identical number of input spikes in each epoch; the input is itself variable. A key part of this variability arises from correlation among the inputs. In the next section we turn attention to properties of cortical neurons that lead to correlated discharge. We will return to the issue of spike count variance in section 3.
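The relationship between interval and count statistics can be illustrated numerically. The sketch below is not the analysis code behind Figures 4 and 6; it uses a gamma renewal process as an assumed stand-in for the model output, and the function names (`renewal_train`, `cv_isi`, `variance_to_mean`) are hypothetical. The epoch length of 100 msec follows the text. For a renewal process, the variance/mean ratio of the counts approaches the squared CVISI when the counting window is long relative to the mean interval, so with only a handful of spikes per epoch the agreement is approximate.

```python
import math
import random

def renewal_train(rate, cv, duration, seed=0):
    """Spike times (sec) of a gamma renewal process with the given rate and CV."""
    rng = random.Random(seed)
    shape = 1.0 / cv ** 2
    scale = 1.0 / (rate * shape)
    t, spikes = 0.0, []
    while True:
        t += rng.gammavariate(shape, scale)   # next interval
        if t >= duration:
            return spikes
        spikes.append(t)

def cv_isi(spikes):
    """Coefficient of variation (SD/mean) of the interspike intervals."""
    isis = [b - a for a, b in zip(spikes, spikes[1:])]
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return math.sqrt(var) / mean

def variance_to_mean(spikes, duration, epoch=0.100):
    """Variance/mean ratio of spike counts in consecutive epochs."""
    n_epochs = int(round(duration / epoch))
    counts = [0] * n_epochs
    for t in spikes:
        i = int(t / epoch)                    # index of the epoch containing t
        if i < n_epochs:
            counts[i] += 1
    mean = sum(counts) / n_epochs
    var = sum((c - mean) ** 2 for c in counts) / n_epochs
    return var / mean

if __name__ == "__main__":
    train = renewal_train(rate=50.0, cv=0.87, duration=200.0, seed=3)
    print("CV squared:   ", cv_isi(train) ** 2)
    print("variance/mean:", variance_to_mean(train, 200.0))
```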

2: Redundancy, correlation, and signal fidelity

The preceding considerations lead us to depict the neuronal spike train as a nearly random realization of an underlying rate term reflecting the average input spike rate (i.e., the number of input spikes per input neuron per time), or some calculation thereon. Whether or not we accept this argument on principle, there is little doubt that many cortical neurons indeed transmit information via changes in their rate of discharge. Yet, the irregular ISI precludes single neurons from transmitting a reliable estimate of this very quantity. Because the spike count from any one neuron is highly variable, several ISIs would be required to estimate the mean firing rate accurately (Konig et al., 1996). The irregular ISI therefore poses an important constraint on the design of cortical architecture: to transmit rate information rapidly (say, within a single ISI), several copies of the signal must be transmitted. In other words, the cortical design must incorporate redundancy. In this section we will quantify the notion of redundancy and explore its implications for the propagation of signal and noise in the cortex.

2.1: Redundancy necessitates shared connections

By redundancy we refer to a group of neurons, each of which encodes essentially the same signal. Ideally, each neuron would transmit an independent estimate of the signal through its rate of discharge. If the variability of the spike trains were truly uncorrelated (independent), then an ensemble of neurons could convey, in its average, a high-fidelity signal in a very short amount of time (e.g., a fraction of an ISI; see below). Although this is a desirable objective, the assumption of independence is unlikely to hold in real neural systems. Redundancy implies that cortical neurons must share connections and thus a certain amount of common variability.

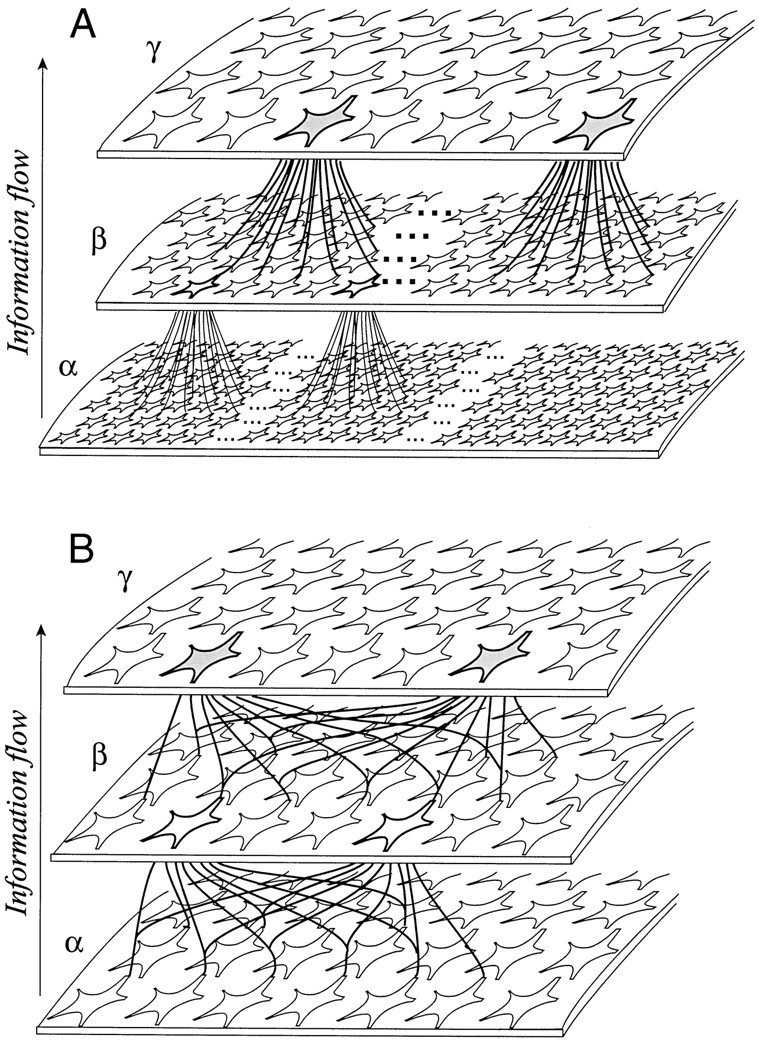

The need for shared connections is illustrated in Figure 7A. The flow of information in this figure is from the bottom layer of neurons to the top. The neurons at the top of the diagram represent some quantity, γ. Many neurons are required to represent γ accurately, because the discharge from any one neuron is so variable. To compute its estimate of γ, each neuron in the upper layer requires an estimate of some other quantity, β, supplied by the neurons in the middle tier of the diagram. To compute γ rapidly, however, each neuron at the top of the diagram must receive many samples of β. Note, however, that to compute β, each of the neurons in the middle of the diagram needs an estimate of some other quantity, α. What was said of the neurons at the top applies to those in the middle tier as well. Thus each β neuron must receive inputs from many α neurons. The chain of processing resembles a pyramid and is clearly untenable as a model for how neurons deep in the CNS come to encode any particular quantity. We cannot insist on geometrically large numbers of independent, lower-order neurons to sustain the responses of a higher-order neuron positioned a few synaptic links away. From this perspective, shared connectivity is necessary to achieve redundancy, and hence rapid processing, in a system of noisy neurons.

In Figure 7B, the same three tiers are illustrated, but the neurons encoding γ receive some input in common. Each neuron projects to many neurons at the next stage. Viewed from the top, some fraction of the inputs to any pair of neurons is shared. In principle, a shared input scheme, such as the one in Figure 7B, would permit the cortex to represent quantities in a redundant fashion without requiring astronomical numbers of neurons. There is, however, a cost. If the neurons at the top of the diagram receive too much input in common, the trial-to-trial variation in their responses will be similar; hence the ensemble response will be little more reliable than the response of any single neuron.

We therefore wish to explore the influence of shared inputs on the responses of two cortical neurons such as the ones shaded at the top of Figure 7B. How much correlated variability results from differing amounts of shared input? How does correlated variability among the input neurons themselves (as in the middle tier of Fig. 7B) influence the estimate of γ at the top tier? To what extent does shared connectivity lead to synchronous action potentials among neurons at a given level? How does synchrony among inputs influence the outputs of neurons in higher tiers? We can use the counting model developed in the previous section to explore these questions. Our goal is to clarify the relationship among common input, synchronous spikes, and noise covariance. A useful starting point is to consider the effect of shared inputs on the correlation in spike discharge from pairs of neurons.

2.2: Shared connections lead to response correlation

We simulated the responses from a pair of neurons like the ones shaded at the top of Figure 7B. Each neuron received 100–600 excitatory inputs and the same number of inhibitory inputs. A fraction of these inputs were identical for the pair of neurons. We examined the consequences of varying the fraction of shared inputs on the output spike trains. Except for this manipulation, the model is the same one used to produce the results in Figures 3 and 4. Thus each neuron responded approximately at the average rate of its inputs. We now have a pair of spike trains to analyze, and once again we are interested in interval and count statistics. For a pair of neurons, interval statistics are commonly summarized by the cross-correlation spike histogram (or cross-correlogram); count statistics have their analogy in measures of response covariance. We will proceed accordingly.

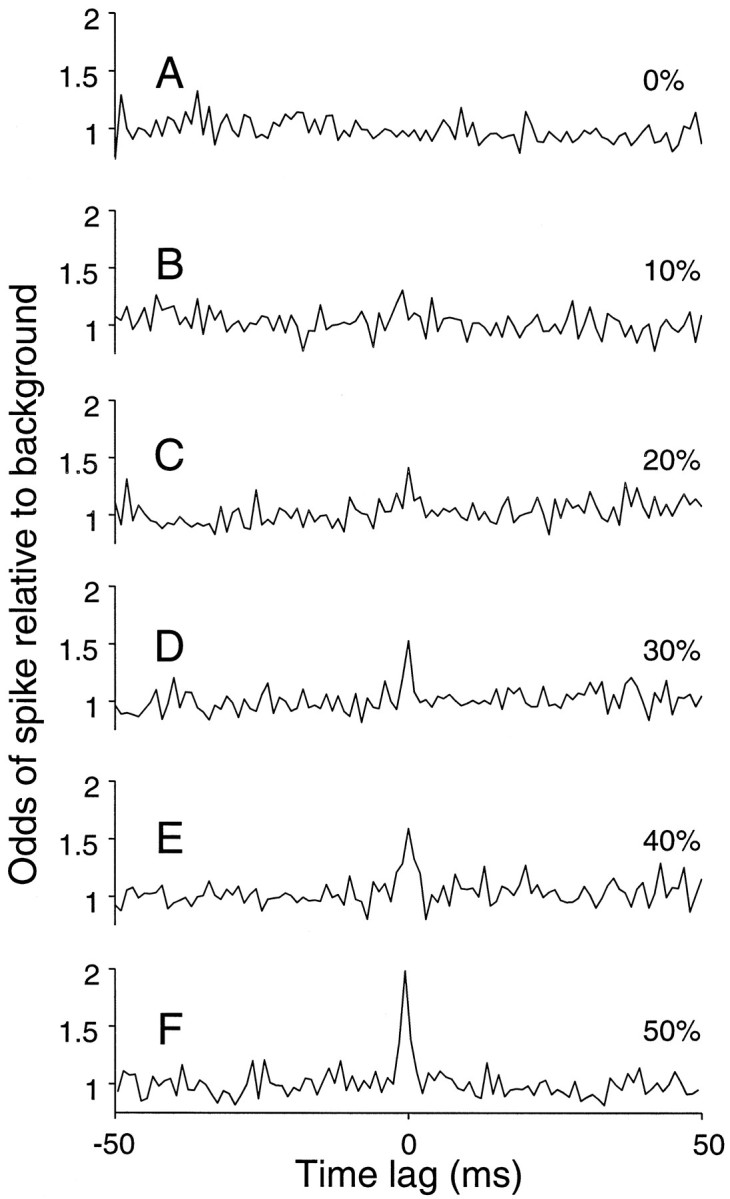

Figure 8 depicts a series of cross-correlograms (CCGs) obtained for a variety of levels of common input. We obtained these functions from 20 sec of simulated spike trains using the same parameters as in Figure 3A. The normalized cross-correlogram depicts the relative increase in the probability of obtaining a spike from one neuron, given a spike from the second neuron, at a time lag represented along the abscissa (Melssen and Epping, 1987; Das and Gilbert, 1995b). The probabilities are normalized to the expectation given the base firing rate for each neuron. Two observations are notable. First, the narrow central peak in the CCG reflects the amount of shared input to a neuron, as previously suggested (Moore et al., 1970; Fetz et al., 1991; Nowak et al., 1995). Second, no structure is visible in the correlograms until a rather substantial fraction of the inputs are shared. This is despite several simplifications in the model that should boost the effectiveness of correlation. For example, introducing variation in synaptic amplitude attenuates the correlation. Thus it is likely that the modest peak in the correlation obtained with 40% shared excitatory and inhibitory inputs represents an exaggeration of the true state of affairs.

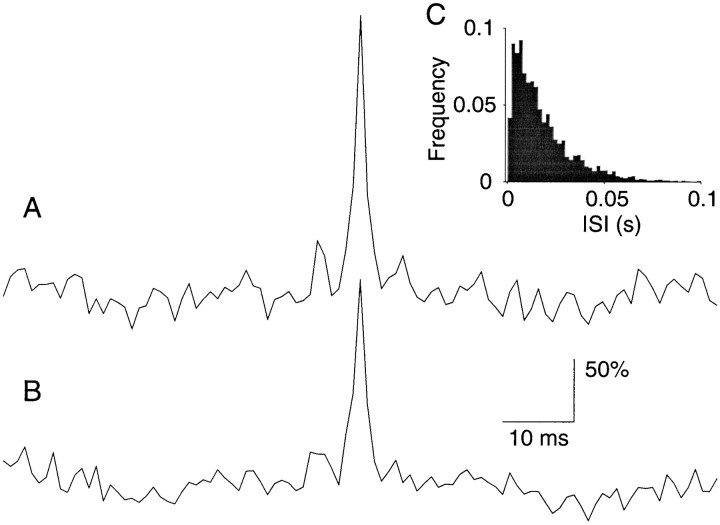

Fig. 8.

Cross-correlation response histograms from a pair of simulated neurons. The correlograms represent the relative change in response from one neuron when a spike has occurred in the other neuron at a time lag indicated along the abscissa. The spike train for each neuron was simulated using the random walk counting model with 300 excitatory and 300 inhibitory inputs. Plots A–F differ in the amount of common input that is shared by the simulated pair. A small central peak in the correlogram is apparent when the pair of neurons share 20–50% of their inputs.

Rather than viewing the entire CCG for each combination of shared excitation and inhibition, we have integrated the area above the baseline and used it to derive a simpler scalar value:

$$r_c = \frac{A_{12}}{\sqrt{A_{11}\,A_{22}}} \qquad (1)$$

where A11 and A22 represent the area under the normalized autocorrelograms for neurons 1 and 2, respectively, and A12 is the area under the normalized cross-correlogram (the autocorrelogram is the cross-correlogram of one neural spike train with itself). The value of rc reflects the strength of the correlation between the two neurons on a scale from −1 to 1. This value is equivalent to the correlation coefficient that would be computed from pairs of spike counts obtained from the two neurons across many stimulus repetitions (W. Bair, personal communication). It provides a much simpler measure of correlation than the entire CCG function.
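The spike-count version of this measure is simple to compute. The sketch below shows the ordinary Pearson correlation coefficient applied to paired spike counts, one pair per stimulus repetition; it does not implement the correlogram-area calculation itself. The function name `count_correlation` and the example counts are hypothetical.

```python
import math

def count_correlation(counts_a, counts_b):
    """Pearson correlation of paired spike counts from two neurons.

    counts_a[i] and counts_b[i] are the counts from neurons 1 and 2 on
    repetition i. Returns a value between -1 and 1.
    """
    n = len(counts_a)
    mean_a = sum(counts_a) / n
    mean_b = sum(counts_b) / n
    cov = sum((a - mean_a) * (b - mean_b)
              for a, b in zip(counts_a, counts_b)) / n
    var_a = sum((a - mean_a) ** 2 for a in counts_a) / n
    var_b = sum((b - mean_b) ** 2 for b in counts_b) / n
    return cov / math.sqrt(var_a * var_b)

if __name__ == "__main__":
    # Made-up counts from five repetitions, for illustration only.
    print(count_correlation([4, 6, 3, 5, 7], [5, 5, 2, 6, 6]))
```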

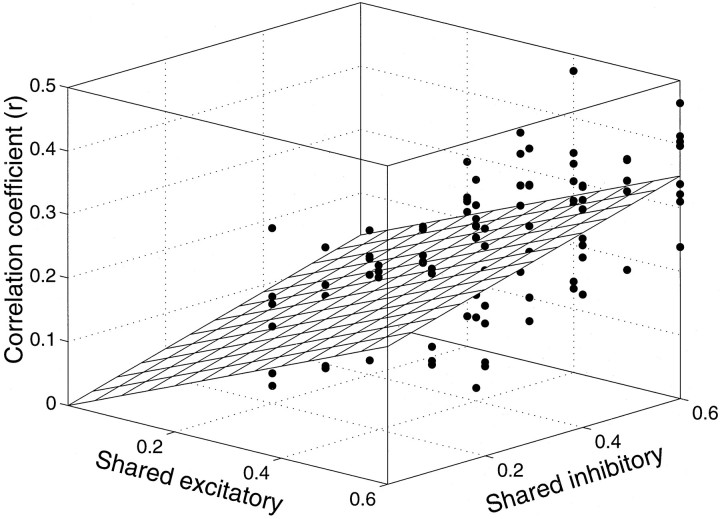

Using rc we can summarize the effect of shared excitation and inhibition in a single graph. Figure 9 is a plot of rc as a function of the fraction of shared excitatory and shared inhibitory inputs. The points represent correlation coefficients from simulations using 100, 300, and 600 excitatory and inhibitory inputs and a variety of spike rates. The threshold barrier was adjusted to confer a reasonable dynamic range of response (input spike rate divided by output spike rate was 0.75–1.5). Over the range of simulated values, the correlation coefficient is approximated by the plane:

| Equation 2 |

where φE and φI are the fraction of shared excitatory and inhibitory inputs, respectively. The graph shows that both the fractions of shared excitatory and shared inhibitory connections affect the correlation coefficient. Shared excitation has a greater impact, because it can lead directly to a spike from both neurons.

Fig. 9.

Effect of common input on response covariance. The correlation coefficient is plotted as a function of the fraction of shared excitatory and shared inhibitory input to a pair of model neurons. Each point was obtained from 20 sec of simulated spike discharge using a variety of model parameters (input spike rate, number of inputs, and barrier height). In each simulation, the output spike rate was approximately the same as the average of any one input (within a factor of ±0.25). The best fitting plane through the origin is shown. A substantial degree of shared input is required to achieve even modest correlation.

Over the range of counting model parameters tested, we find this planar approximation to be fairly robust (the fraction of variance of rc accounted for by Eq. 2 is 42%). We can improve the fit with a more complicated model (e.g., spike rate has a modest effect), but such detail is unimportant for the exercise at hand. Of course, Equation 2 must fail as the fraction of shared input approaches 1; the two neurons will follow identical random walks to spike threshold, and the correlation coefficient must therefore approach 1.

The most striking observation from Figure 9 is that only modest correlation is obtained when nearly half of the inputs are identical. The counting model is impressively resilient to common input, especially from inhibitory neurons. Electrophysiological recordings in visual cortex indicate that adjacent neurons covary weakly from trial to trial on repeated presentations of the same visual stimulus, with measured correlation coefficients typically ranging from 0.1 to 0.2 (van Kan et al., 1985; Gawne and Richmond, 1993; Zohary et al., 1994). The counting model suggests that such modest correlation might entail rather substantial common input, ∼30% shared connections, by Equation 2. This is larger than the amount of common input that might be expected from anatomical considerations. The probability that a pair of nearby neurons receive an excitatory synapse from the same axon is believed to be ∼0.09 (Braitenberg and Schüz, 1991; Hellwig et al., 1994). Comparable estimates are not known for the axons from inhibitory neurons, although the probability is likely to be considerably larger (Thomson et al., 1996), because there are fewer inhibitory neurons to begin with. Still, it is unlikely that pairs of neurons share 50% of their inhibitory input; yet this is the value for φI needed to attain a correlation of 0.15 (when φE = 0.09, Eq. 2). We suspect that this discrepancy arises in part because the covariation measured electrophysiologically exists not only because of common input to a pair of neurons at the anatomical level, but also because the signals actually transmitted by the input neurons are contaminated by common noise arising at earlier levels of the system. As an extreme example, small eye movements could introduce shared variability among all neurons performing similar visual computations (Gur et al., 1997).

2.3: Response correlation limits fidelity

Why should we care about such modest correlation? The reason is that even weak correlation severely limits the ability of the neural population to represent a particular quantity reliably (Johnson, 1980; Britten et al., 1992; Seung and Sompolinsky, 1993; Abbott, 1994; Zohary et al., 1994; Shadlen et al., 1996). Importantly for our present purposes, developing an intuition for this principle will help us understand a major component of the variability in the discharge of a cortical neuron.

Consider one of the shaded neurons shown in the top tier of Figure 7B. Its rate of discharge is supposed to represent the result of some computation involving the quantity β. For present purposes we need not worry about exactly what the neuron is computing with this value. What is important is that in any epoch, all that the shaded neuron knows about β is the number of spikes it receives from neurons in the middle tier of Figure 7B. Clearly, the variability of the shaded neuron’s spike output depends to some extent on the variability of the number of input spikes, no matter what the neuron is calculating. If the shaded neuron receives input from hundreds of neurons, each contributing an independent estimate of β, then the number of input spikes per neuron per unit time would vary minimally. For example, suppose that some visual stimulus contains a feature represented by the quantity β = 40 spikes/sec. Each of the neurons representing this quantity would be expected to produce four spikes in a 100 msec epoch, but because the spike train of any neuron is highly variable, each produces from zero to eight spikes. This range reflects the 95% confidence interval for a Poisson process with an expected count of four. We might say that the number of spikes from any one neuron is associated with an uncertainty of 50% (because the SD is two spikes; we use the term uncertainty here, rather than coefficient of variation, to avoid confusion with CVISI). In contrast, the average number of spikes from 100 independent neurons should almost always fall between 3.6 and 4.4 spikes per input (i.e., ± 2 SE of the mean). The shaded neuron would receive a fairly reliable estimate of β, which it would incorporate into its calculation of γ. In this example, the uncertainty associated with the average input spike count is 5%, that is, a 10-fold reduction because of averaging from 100 neurons. With more neurons, the uncertainty can be further reduced, as illustrated by the gray line (r = 0) in Figure 10.

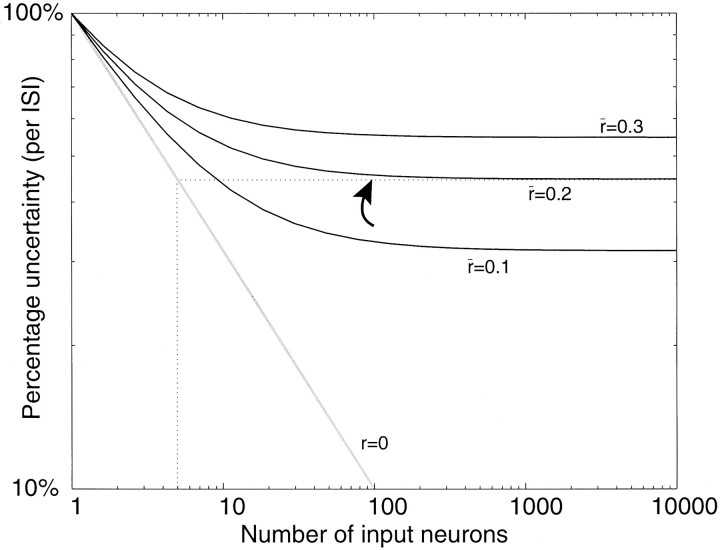

Fig. 10.

Weak correlation limits the fidelity of a neural code. The plot shows the variability in the number of spikes that arrive in an average ISI from a pool of input neurons modeled as Poisson point processes. Pool size is varied along the abscissa. In one ISI, the expected number of input spikes equals the number of neurons. Uncertainty is the SD of the input spike count divided by the mean. For one input neuron, the uncertainty is 100%. The diagonal gray line shows the expected relationship for independent Poisson inputs; uncertainty is reduced by the square root of the number of neurons. If the input neurons are weakly correlated, then uncertainty approaches an asymptote of $\sqrt{\bar{r}}$ (see Appendix). For an average correlation of 0.2, the uncertainty from a pool of 100 neurons (arrow) is approximately the same as for five independent neurons or, equivalently, the count from one neuron in an epoch of five average ISIs.

Unfortunately, the neurons representing β, or any other quantity, do not respond independently of each other. Some covariation in response is inevitable, because any pair of neurons receive a fraction of their inputs in common, a necessity illustrated by Figure 7. The preceding section suggests that the amount of shared input necessary to elicit a small covariation in spike discharge may be quite substantial, but even a small departure from independence turns out to be important. It is easy to see why; any noise that is transmitted via common inputs cannot be averaged away. This is true even when the number of inputs is very large. For example, Zohary et al. (1994) showed that the signal-to-noise ratio of the averaged response cannot exceed $\bar{r}^{-1/2}$, where $\bar{r}$ is the average correlation coefficient among pairs of neurons.
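The numbers quoted in this section can be reproduced with a short calculation. The sketch below assumes that each input neuron's count is Poisson (variance equal to the mean) and that every pair of neurons has the same correlation coefficient r; it then applies the standard expression for the variance of a sum of equally correlated variables. The function name `pool_uncertainty` is hypothetical, and the Appendix may state the result in a different form.

```python
import math

def pool_uncertainty(n_neurons, mean_count, r=0.0):
    """SD/mean of the summed spike count from a pool of input neurons.

    Assumptions: each neuron's count has variance equal to mean_count,
    and all pairs share the same correlation coefficient r.
    Var(sum) = n * mean_count * (1 + (n - 1) * r)
    """
    variance_factor = 1.0 + (n_neurons - 1) * r
    return math.sqrt(variance_factor / (n_neurons * mean_count))

if __name__ == "__main__":
    print(pool_uncertainty(1, 4.0))          # one neuron, four expected spikes
    print(pool_uncertainty(100, 4.0))        # 100 independent neurons
    print(pool_uncertainty(100, 1.0, 0.2))   # 100 neurons, r = 0.2, one ISI
    print(pool_uncertainty(5, 1.0))          # five independent neurons, one ISI
```

With r > 0 the factor (1 + (n − 1)r)/n tends to r as the pool grows, so under these assumptions the uncertainty in one ISI cannot fall below √r however many neurons are added.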

Fig. 7.

Redundancy necessitates shared connections. Three ensembles of neurons represent the quantities α, β, and γ. Each neuron that represents γ receives input from many neurons that represent β, and each neuron that represents β receives input from many neurons that represent α. A, There are no shared connections; each neuron receives a distinct set of inputs from its neighbor. The shaded neurons receive no common input, and the same can be said of any pair of neurons in the ensemble that represents β. The scheme would require an inordinately large number of neurons. B, Neurons share a fraction of their inputs. The shaded neurons receive some of the same inputs from the ensemble that represents β. Likewise, any pair of neurons in the β ensemble receive some common input from the neurons that represent α. This architecture allows for redundancy without necessitating immense numbers of neurons. Neither the number of neurons nor the number of connections is drawn accurately. Simulations suggest that the pair of shaded neurons might receive as much as 40% common input, and each needs about 100 inputs to compute with the quantity β.

We would like to know how correlation among input neurons affects the variability of neural responses at the next level of processing. We can start by asking how variable are the quantities that a neuron inherits to incorporate in its own computation. From the perspective of one of the neurons in the top tier of Figure 7B, what is the variability in the number of spikes that it receives from neurons in the middle layer? In other words, how unreliable is the estimate of β?

The answer is shown in Figure 10. We have calculated the uncertainty in the number of spikes arriving from an ensemble of neurons in the middle layer. Each curve in Figure 10 shows uncertainty as a function of the number of neurons in the input ensemble, where uncertainty is expressed as the percentage variation (SD/mean) in the number of spikes that a neuron in the top layer would receive from the middle layer in an epoch lasting one typical ISI. This characterization of variability is appealing, because it bears directly on neural computation at a natural time scale.

If there is just one neuron, then the mean number of spikes arriving in an average ISI is one, of course, and so is the SD, assuming a Poisson spike train. Hence the uncertainty is 100%. If there are 100 inputs from the middle layer, then the expected number of spikes is 100: one spike per neuron. If each spike train is an independent Poisson process (Fig. 10, gray line), then the SD is 10 spikes (0.1 spikes per neuron), for a percentage uncertainty of 10%. If the spike trains are weakly correlated, however, then the percentage uncertainty is given by:

$$\text{percentage uncertainty} = 100\,\sqrt{\frac{1 + \bar{r}\,(m - 1)}{m}} \qquad \text{(Equation 3)}$$

where m is the number of neurons, and $\bar{r}$ is the average correlation coefficient among all pairs of input neurons (see Appendix). Each of the curves in Figure 10 was calculated using a different value for $\bar{r}$. The solid curves indicate the approximate level of correlation that is believed to be present among pairs of cortical neurons and that is consistent with our simulations using a large fraction of common input ($\bar{r}$ = 0.1–0.3). Even at the lower end of this range, there is a substantial amount of variability that cannot be averaged away. For an average correlation coefficient of 0.2, the percentage uncertainty for 100 neurons is 45%; only a twofold improvement (approximately) over a single neuron!
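The numbers quoted in this passage can be reproduced with a minimal sketch. It assumes that the percentage uncertainty takes the form 100·sqrt((1 + r̄(m − 1))/m), which is what follows from summing m unit-mean, unit-variance counts with average pairwise correlation r̄; the function names are illustrative and not taken from the original analysis.

```python
import math

def percent_uncertainty(m, r_bar):
    """SD/mean (in percent) of the summed spike count from m Poisson inputs.

    Assumes each input contributes one expected spike (variance 1) and that
    the average pairwise correlation coefficient is r_bar, so that
    Var(sum) = m + m * (m - 1) * r_bar and mean(sum) = m.
    """
    return 100.0 * math.sqrt((1.0 + r_bar * (m - 1)) / m)

def asymptote(r_bar):
    """Limiting percentage uncertainty as the pool size grows without bound."""
    return 100.0 * math.sqrt(r_bar)

print(percent_uncertainty(1, 0.2))     # single neuron: 100%
print(percent_uncertainty(100, 0.0))   # 100 independent neurons: 10%
print(percent_uncertainty(100, 0.2))   # 100 weakly correlated neurons: about 45%
print(percent_uncertainty(5, 0.0))     # five independent neurons: about 45%
print(asymptote(0.2))                  # floor for r_bar = 0.2: about 45%
```

Under this assumed form, 100 neurons with r̄ = 0.2 sit almost exactly on the asymptote, which is why enlarging the pool further buys so little.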

Three important points follow from this analysis. First, modest amounts of correlated noise will indeed lead to substantial uncertainty in the quantity β, received by the top tier neurons in Figure 7B that compute γ, even if ≥100 neurons provide the ensemble input. This variability in the input quantity will influence the variance of the responses of the top tier neurons, an issue to which we shall return in section 3. Second, the modest reduction in uncertainty achieved by pooling hardly seems worth the trouble until one recalls that what is gained by this strategy is the capacity to transmit information quickly. For example, using 100 neurons with an average correlation of 0.2, the brain transmits information in one ISI with the same fidelity as would be achieved from one neuron for five times this duration. This fact is shown by the dotted lines in Figure 10. If we interpret the abscissa for the r = 0 curve as m ISIs from one neuron (instead of one ISI from m input neurons), we can appreciate that the uncertainty reduction achieved in five ISIs is approximately the same as the uncertainty achieved by about 100 weakly correlated neurons ($\bar{r}$ = 0.2; Fig. 10, arrow). Third, the fidelity of signal transmission approaches an asymptote at 50–100 input neurons; there is little to be gained by adding more inputs. This observation holds for any realistic amount of correlation, suggesting that 50–100 neurons might constitute a minimal signaling unit in cortex. Here lies an important design principle for neocortical circuits. Returning to Figure 7, we can appreciate that the more neurons that are used to transmit a signal, the more common inputs the brain is likely to use. The strategy pays off until an asymptotic limit in speed and accuracy is approached: ∼50–100 neurons.

A most surprising finding of sensory neurophysiology in recent years is that single neurons in visual cortex can encode near-threshold stimuli with a fidelity that approximates the psychophysical fidelity of the entire organism (Parker and Hawken, 1985; Hawken and Parker, 1990; Britten et al., 1992; Celebrini and Newsome, 1994; Shadlen et al., 1996). This finding is understandable, however, in light of Equation 3, which implies that psychophysical sensitivity can exceed neural sensitivity by little more than a factor of 2, given a modest amount of correlation in the pool of sensory neurons.

2.4: Synchrony among input neurons

If pairs of neurons carrying similar signals are indeed correlated, it is natural to inquire whether such correlation influences the spiking interval statistics considered earlier. How do synchronous spikes such as those reflected in the cross-correlograms of Figure 8 influence the postsynaptic neuron? What is the effect on ISI variability of weak correlation and synchronization in the input spike trains themselves (recall that the inputs in the simulations of Fig. 8 were independent)?

We tested this by simulating the response of two neurons using inputs with pairwise correlation that resembles Figure 8E. We generated a large pool of spike trains using our counting model with 300 excitatory and 300 inhibitory inputs. Each spike train was generated by drawing 300 inputs from a common pool of 750 independent Poisson spike trains representing excitation and another 300 inputs from a common pool of 750 Poisson spike trains, which represented the inhibitory input. The strategy ensures that, on average, any pair of spike trains was produced using 40% common excitatory input and 40% common inhibitory input. Thus any pair of spike trains has an expected correlation of 0.25–0.3 and a correlogram like the one in Figure 8E. We simulated several thousand responses in this fashion and used these as the input spike trains for a second round of simulations. The correlated spike trains now served as inputs to a pair of neurons using the identical model. Again, 40% of the inputs to the pair were identical. By using the responses from the first round of simulations as input to the second, we introduced numerous synchronized spikes to the input ensemble.
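The 40% figure follows from the pool sizes: two independent draws of 300 inputs from a pool of 750 are expected to overlap in 300 × 300 / 750 = 120 inputs. The sketch below checks this by sampling. It illustrates only the sampling scheme, not the counting model itself, and the function name and defaults are hypothetical.

```python
import random

def mean_shared_fraction(pool_size=750, n_inputs=300, n_pairs=2000, seed=0):
    """Average fraction of inputs shared by two neurons that each draw
    n_inputs distinct inputs at random from a common pool of pool_size."""
    rng = random.Random(seed)
    pool = range(pool_size)
    total = 0.0
    for _ in range(n_pairs):
        inputs_a = set(rng.sample(pool, n_inputs))
        inputs_b = set(rng.sample(pool, n_inputs))
        total += len(inputs_a & inputs_b) / n_inputs
    return total / n_pairs

print(mean_shared_fraction())  # close to 300 / 750 = 0.4
```

The same draw applies separately to the excitatory and the inhibitory pools, so a pair of simulated neurons shares about 40% of each input type on average.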

The result is summarized in Figure 11. The cross-correlogram among the output neurons (Fig. 11A) resembles the correlogram obtained from the inputs (Fig. 11B). We failed to detect an increase in synchrony. In fact the correlation coefficient among the pair of outputs was 0.29, compared with 0.30 for the inputs. The synchronized spikes among the input ensemble did not lead to more synchronized spikes in the two output neurons. Nor did input correlation boost the spike rate or cause any detectable change in the pattern of spiking. As in our earlier simulations, the output response was approximately the same as any one of the 600 inputs. Moreover, the spike trains were highly irregular, the distribution of ISIs approximating an exponential probability density function (Fig. 11, inset; $\mathrm{CV}_{\mathrm{ISI}}$ = 0.94). We detected no structure in the output response or in the unit autocorrelation functions.

Fig. 11.

Homogeneity of synchrony among input and output ensembles of neurons. A, Normalized cross-correlogram (CCG) from a pair of neurons receiving 300 excitatory and 300 inhibitory inputs, the typical pairwise cross-correlogram of which is shown in B. The pair share 40% common excitatory and inhibitory input. The CCG was computed from 80 epochs of 1 sec each. The simulation produced a correlation coefficient of 0.29. B, The average correlogram for pairs of neurons serving as input to the pair of neurons whose CCG is shown in A. The correlogram was obtained from 80 epochs of 1 sec each using randomly selected pairs of input neurons. The mean correlation coefficient, $\bar{r}$, was 0.3. Vertical scale reflects percent change in the odds of a spike, relative to background. C, Spike interval histogram for the output neurons. Synchrony among input neurons does not lead to detectable structure in the output spike trains ($\mathrm{CV}_{\mathrm{ISI}}$ = 0.94).

The finding contradicts the common assumption that synchronous spikes must exert an exaggerated influence on networks of neurons (Abeles, 1991; Singer, 1994; Aertsen et al., 1996; Lumer et al., 1997). This idea only holds practically when input is relatively sparse so that a few presynaptic inputs are likely to yield a postsynaptic spike (Abeles, 1982; Kenyon et al., 1990; Murthy and Fetz, 1994). The key insight here is that the cortical neuron is operating in a high-input regime in which the majority of inputs are effectively synchronous. Given the number of input spikes that arrive within a membrane time constant, there is little that distinguishes the synchronous spikes that arise through common input. If as few as 5% of the ∼3000 inputs to a neuron are active at an average rate of 50 spikes/sec, the neuron receives an average of 75 input spikes every 10 msec. The random walk mechanism effectively buffers the neuron from the detailed rhythms of the input spike trains just as it allows the neuron to discharge in a graded fashion over a limited dynamic range.
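The input count in this estimate is simple arithmetic, sketched below with the figures from the text. The function and parameter names are illustrative only.

```python
def input_spikes_per_window(n_inputs, active_fraction, rate_hz, window_ms):
    """Expected number of input spikes arriving within one time window."""
    n_active = n_inputs * active_fraction         # 5% of 3000 = 150 active inputs
    spikes_per_sec = n_active * rate_hz           # 150 * 50 = 7500 spikes/sec
    return spikes_per_sec * (window_ms / 1000.0)  # scaled to the window length

print(input_spikes_per_window(3000, 0.05, 50.0, 10.0))  # about 75 spikes per 10 msec
```

With this many arrivals in a window comparable to the membrane time constant, the handful of spikes synchronized through common input are a small fraction of the total.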

3: Noise propagation and neural computation

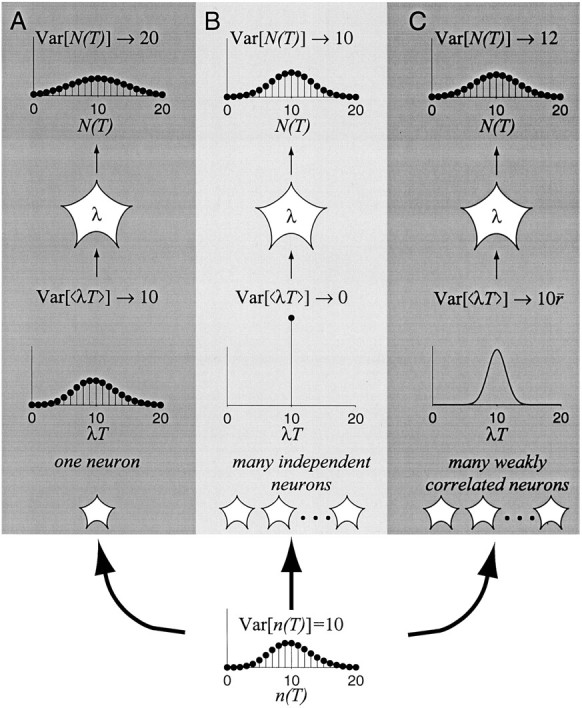

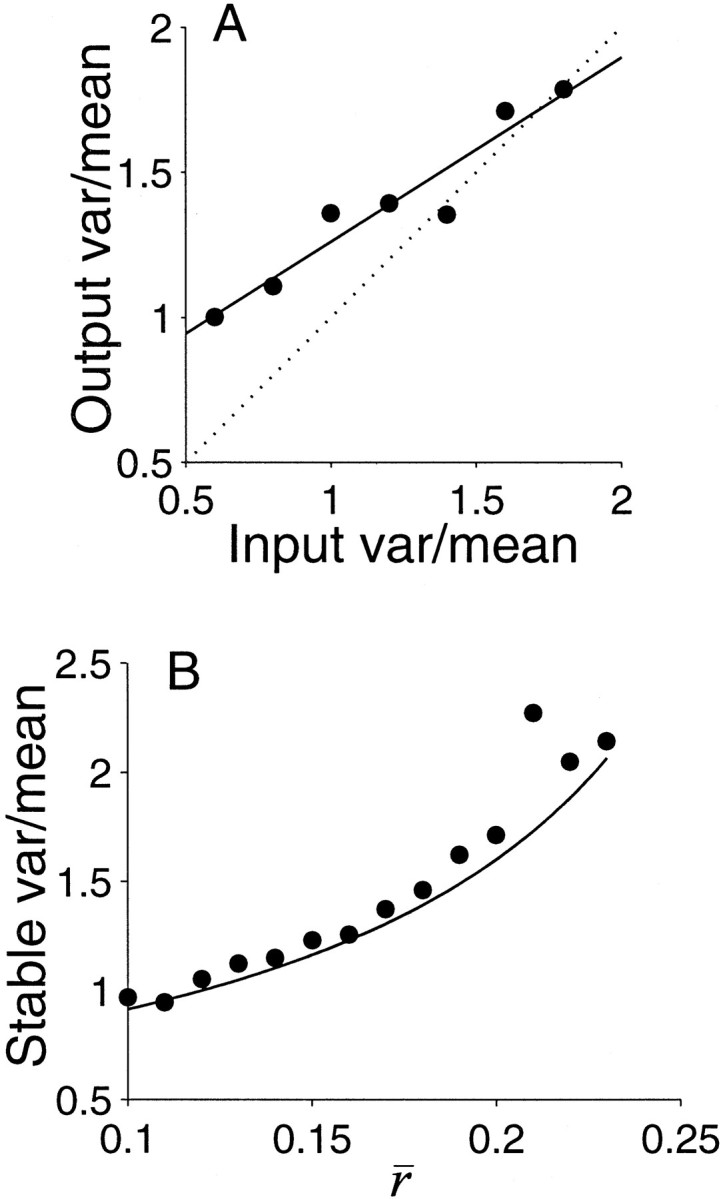

As indicated previously, a remarkable property of the neocortex is that neurons display similar statistical variation in their spike discharge at many levels of processing. For example, throughout the primary and extrastriate visual cortex, neurons exhibit comparable irregularity of their ISIs and spike count variability. When an identical visual stimulus is presented for several repetitions, the variance of the neural spike count has been found to exceed the mean spike count by a factor of ∼1–1.5 wherever it has been measured (see Background). The apparent consistency implies that neurons receive noisy synaptic input, but they neither compound this noise nor average it away. Some balancing equilibrium is at play.

Recall that our simulations led to a spike count variance that was considerably less than the mean count (Fig. 6), in striking contrast to real cortical neurons. Because the variance of the spike count affects signal reliability, it is important to gain some understanding of this fundamental property of the response. In this section we will develop a framework for understanding the relationship between the mean response and its variance under experimental conditions involving repetitions of nominally identical stimuli. Why does the variance exceed the mean spike count, and how is the ratio of variance to mean count preserved across levels of processing? The elements of the variance turn out to be the very quantities we have enumerated in the preceding sections: irregular ISIs and weak correlation.

3.1: Background and terminology

At first glance it may seem odd that investigators have measured the variance of the spike count; after all it is the SD that bears on the fidelity of the neural discharge. However, a linear relationship between the mean count and its variance would be expected for a family of stochastic point processes known as renewal processes. A stochastic point process is a random sequence of stereotyped events (e.g., spikes) that are only distinguishable on the basis of their time of occurrence. In a renewal process the intervals from one event to the next (e.g., ISIs) are independent of one another and drawn from a common distribution (i.e., they are independent and identically distributed; Karlin and Taylor, 1975). The Poisson process (e.g., radioactive decay) is a well known example. Recall that the intervals of a Poisson process are described by the exponential probability density function:

$$f(x) = \lambda e^{-\lambda x} \qquad \text{(Equation 4)}$$

where λ is the average event rate, and $\lambda^{-1}$ is both the mean interval and its SD (i.e., CV = 1). The number of events observed in an epoch of duration T is also a random number. The count, which we shall denote N(T), obeys a Poisson distribution; the mean count is λT, as is the variance. We will use angled brackets to indicate the mean of many repetitions and summarize the Poisson case by writing $\mathrm{Var}[N(T)] = \langle N(T) \rangle = \lambda T$. For any renewal process (not just the Poisson process), the number of events counted in an epoch, N(T), is a random number with variance that scales linearly with the mean count. The constant of proportionality is the squared coefficient of variation (CV) of the interval distribution: $\mathrm{Var}[N(T)] = \mathrm{CV}^2 \langle N(T) \rangle$ (Smith, 1959).
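The scaling of count variance with the mean can be illustrated by simulation. The sketch below is our own construction, not part of the original analysis: it uses gamma-distributed intervals, for which CV² = 1/shape, and compares the variance-to-mean ratio of the count with CV². The relation is asymptotic for a general renewal process, so the epoch is made long relative to the mean interval; shape = 1 recovers the Poisson case.

```python
import random
import statistics

def spike_count(rng, shape, mean_isi, duration):
    """Count events of a renewal process with gamma-distributed intervals
    that fall within [0, duration]."""
    scale = mean_isi / shape          # gamma mean = shape * scale
    t = rng.gammavariate(shape, scale)
    n = 0
    while t <= duration:
        n += 1
        t += rng.gammavariate(shape, scale)
    return n

def variance_to_mean(shape, mean_isi=1.0, duration=50.0, trials=4000, seed=1):
    """Ratio Var[N(T)] / <N(T)> estimated over repeated epochs."""
    rng = random.Random(seed)
    counts = [spike_count(rng, shape, mean_isi, duration) for _ in range(trials)]
    return statistics.variance(counts) / statistics.mean(counts)

for shape in (1.0, 4.0):
    cv_squared = 1.0 / shape          # squared CV of the gamma interval density
    print(shape, cv_squared, round(variance_to_mean(shape), 3))
```

For shape = 1 the ratio should be near 1, and for shape = 4 near 0.25, up to sampling error and a small finite-epoch correction.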