Abstract

Human cortex comprises specialized networks that support functions such as visual motion perception and language processing. How do genes and experience contribute to this specialization? Studies of plasticity offer unique insights into this question. In congenitally blind individuals, “visual” cortex responds to auditory and tactile stimuli. Remarkably, recent evidence suggests that occipital areas participate in language processing. We asked whether, in blindness, occipital cortices (1) develop domain-specific responses to language and (2) respond to a highly specialized aspect of language: syntactic movement. Nineteen congenitally blind and 18 sighted participants took part in two fMRI experiments. We report that in congenitally blind individuals, but not in sighted controls, “visual” cortex is more active during sentence comprehension than during a sequence memory task with nonwords, or a symbolic math task. This suggests that areas of occipital cortex become selective for language, relative to other similar higher-cognitive tasks. Crucially, we find that these occipital areas respond more to sentences with syntactic movement but do not respond to the difficulty of math equations. We conclude that regions within the visual cortex of blind adults are involved in syntactic processing. Our findings suggest that the cognitive function of human cortical areas is largely determined by input during development.

SIGNIFICANCE STATEMENT Human cortex is made up of specialized regions that perform different functions, such as visual motion perception and language processing. How do genes and experience contribute to this specialization? Studies of plasticity show that cortical areas can change function from one sensory modality to another. Here we demonstrate that input during development can alter cortical function even more dramatically. In blindness, a subset of “visual” areas becomes specialized for language processing. Crucially, we find that the same “visual” areas respond to a highly specialized and uniquely human aspect of language: syntactic movement. These data suggest that human cortex has broad functional capacity during development, and input plays a major role in determining functional specialization.

Keywords: blindness, language, plasticity, syntax

Introduction

The human brain consists of distinct functional networks that support language processing, face perception, and motor control. How do genes and experience produce this functional specialization? Studies of experience-based plasticity provide unique insights into this question. In blindness, the visual system responds to auditory and tactile stimuli (e.g., Hyvärinen et al., 1981). Visual cortices are active when blind adults localize sounds, hear auditory motion, and discriminate tactile patterns (Weeks et al., 2000; Merabet et al., 2004; Saenz et al., 2008). Analogously, the auditory cortex of deaf individuals responds to visual and somatosensory stimuli (Finney et al., 2001, 2003; Karns et al., 2012).

If intrinsic physiology narrowly constrains cortical function, we would expect a close correspondence between the cortical area's new cross-modal function and its typical function. For example, in deaf cats, visual localization of objects in space is in part supported by auditory areas that typically perform sound localization (Lomber et al., 2010). In blind humans, the middle temporal visual motion complex responds to moving sounds (Saenz et al., 2008; Wolbers et al., 2011). Similarly, the visual word form area is recruited during Braille reading (Büchel et al., 1998b; Reich et al., 2011). Such findings are consistent with a limited role for experience in shaping cortical function.

One case of cross-modal plasticity seems to break from this pattern. In blind humans, the visual cortex is recruited during language processing. Occipital areas are active when blind people read Braille, generate verbs to heard nouns, and listen to spoken sentences (Sadato et al., 1996, 1998; Büchel et al., 1998a, 1998b; Röder et al., 2000, 2002; Burton et al., 2002a, b; Amedi et al., 2003; Reich et al., 2011; Watkins et al., 2012). Occipital cortex responds more to lists of words than meaningless sounds, and more to sentences than unconnected lists of words (Bedny et al., 2011). Responses to language are observed both in secondary visual areas and in primary visual cortex (Amedi et al., 2003; Burton, 2003; Bedny et al., 2012). Occipital plasticity contributes to behavior. Transcranial magnetic stimulation to the occipital pole impairs blind individuals' ability to read Braille and produce semantically appropriate verbs to aurally presented nouns (Cohen et al., 1997; Amedi et al., 2004).

Visual cortex plasticity for language is striking in light of the cognitive and evolutionary differences between vision and language. A key open question that we address in this study is whether visual cortex supports language-specific operations or domain general operations that contribute to language (Makuuchi et al., 2009; Fedorenko et al., 2011, 2012; Monti et al., 2012).

We also test the hypothesis that visual cortex processes aspects of language that are uniquely human and highly specialized. Language contains many levels of representation, including phonology, morphology, semantics, and syntax. One possibility is that intrinsic physiology restricts the kinds of information occipital cortex can process within language. In particular, syntactic structure building is thought to require specialized cortical circuitry (Pinker and Bloom, 1990; Hauser et al., 2002; Fitch and Hauser, 2004). Does occipital cortex participate in syntactic structure building?

Evidence for this possibility comes from a study by Röder et al. (2002) who found larger occipital responses to German sentences with noncanonical word orders (with scrambling), as well as larger occipital responses to sentences than matched jabberwocky speech. A key question left open by this study is whether the responses to syntactic complexity are specific to language. Previous studies have shown that a subset of areas within prefrontal and lateral temporal cortex are sensitive to linguistic content, but not to difficulty of working memory tasks (Fedorenko et al., 2011). Does this form of selectivity exist within visual cortex?

To address these questions, we conducted two experiments with congenitally blind participants. We compared occipital activity during sentence comprehension with activity during verbal sequence memory and symbolic math. Like sentences, the control tasks involve familiar symbols, tracking order information, and hierarchical structures. We predicted that occipital areas would respond more during sentence comprehension than the control tasks. Crucially, we manipulated the syntactic complexity of the sentences: half of the sentences contained syntactic movement. We also manipulated the difficulty of math equations. We predicted that regions of occipital cortex would respond more to syntactically complex sentences but would be insensitive to math difficulty.

Materials and Methods

Participants.

Nineteen congenitally blind individuals (13 females; 3 left-handed, 2 ambidextrous) and 18 age- and education-matched controls (8 females; 2 left-handed, 2 ambidextrous) contributed data to Experiments 1 and 2. All blind participants had at most minimal light perception since birth. Blindness was due to an abnormality anterior to the optic chiasm and not due to brain damage (Table 1).

Table 1.

Participant demographic information

| Participant | Gender | Age (yr) | Cause of blindness | Light perception | Education |
|---|---|---|---|---|---|
| B1 | M | 22 | Leber's congenital amaurosis | Minimal | BA in progress |
| B2 | F | 32 | Retinopathy of prematurity | Minimal | BA |
| B3 | F | 70 | Retinopathy of prematurity | Minimal | High school |
| B4 | M | 43 | Unknown eye condition | None | JD |
| B5 | M | 67 | Retinopathy of prematurity | Minimal | High school |
| B6 | F | 67 | Retinopathy of prematurity | None | MA |
| B7 | F | 26 | Retinopathy of prematurity | Minimal | MA |
| B8 | F | 64 | Retinopathy of prematurity | None | MA |
| B9 | F | 35 | Leber's congenital amaurosis | Minimal | MA |
| B10 | M | 47 | Leber's congenital amaurosis | None | JD |
| B11 | F | 39 | Retinopathy of prematurity | None | PhD in progress |
| B12 | F | 49 | Leber's congenital amaurosis | Minimal | MA |
| B13 | F | 25 | Leber's congenital amaurosis | Minimal | MA |
| B14 | F | 62 | Retinopathy of prematurity | None | MA |
| B15 | M | 36 | Congenital glaucoma and cataracts | None | MA |
| B16 | M | 62 | Retinopathy of prematurity | None | BA |
| B17 | F | 60 | Retinopathy of prematurity | None | JD |
| B18 | F | 46 | Retinopathy of prematurity | None | BA |
| B19 | F | 61 | Retinopathy of prematurity | None | High school |
| Average | | | | | |
| Blind (n = 19) | 13F | 48 | n/a | n/a | BA |
| Sighted (n = 18) | 8F | 47 | n/a | n/a | BA |

Participants were between 21 and 75 years of age (Table 1). Three additional sighted participants were scanned but excluded due to lack of brain volume coverage during the scan. We also excluded participants who failed to perform above chance on sentence comprehension in either of the two experiments. For both experiments, we set the performance criterion to be the 75th percentile of the binomial “chance performance” distribution (Experiment 1, 53.7% correct; Experiment 2, 54.2% correct). This resulted in three blind participants and zero sighted participants being excluded from further analyses. None of the participants suffered from any known cognitive or neurological disabilities. All participants gave written informed consent and were compensated $30 per hour.
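The performance criterion can be illustrated with a short calculation. The sketch below is not the authors' code; it assumes that chance on each yes/no or same/different trial is 0.5, that the criterion was computed over all sentence trials (54 +MOVE plus 54 −MOVE = 108 in Experiment 1, 48 in Experiment 2), and that the percentile is the smallest count whose cumulative probability reaches 0.75. The function name `binomial_quantile` is hypothetical.

```python
from math import comb


def binomial_quantile(n_trials: int, p_chance: float, q: float) -> int:
    """Smallest k such that P(X <= k) >= q for X ~ Binomial(n_trials, p_chance)."""
    cumulative = 0.0
    for k in range(n_trials + 1):
        cumulative += (
            comb(n_trials, k) * p_chance**k * (1 - p_chance) ** (n_trials - k)
        )
        if cumulative >= q:
            return k
    return n_trials


# Assumed trial counts (see lead-in): 108 sentence trials in Experiment 1,
# 48 sentence trials in Experiment 2; chance level assumed to be 0.5.
for label, n in [("Experiment 1", 108), ("Experiment 2", 48)]:
    k = binomial_quantile(n, 0.5, 0.75)
    print(f"{label}: {k}/{n} correct = {100 * k / n:.1f}%")
```

Under these assumptions the sketch yields 58 of 108 and 26 of 48 correct responses, which correspond to the reported 53.7% and 54.2%.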

Stimuli and procedure.

In both experiments, participants listened to stimuli presented over Sensimetrics MRI-compatible earphones (http://www.sens.com/products/model-s14/). The stimuli were presented at the maximum comfortable volume for each participant (average sound pressure level 76–84 dB). All participants were blindfolded for the duration of the study.

In Experiment 1, participants heard sentences and sequences of nonwords. On sentence trials, participants heard a sentence, followed by a yes/no question. Comprehension questions required participants to attend to thematic relations of words in the sentence (i.e., who did what to whom), and could not be answered based on recognition of individual words. Participants indicated their responses by pressing buttons on a button pad. We measured response time to the question and comprehension accuracy.

We manipulated the syntactic complexity of the sentences in Experiment 1. The manipulation focused on an aspect of syntactic representation that has received special attention in linguistic theory: syntactic movement (Chomsky, 1957, 1995). Sentences with syntactic movement dependencies require distant words or phrases to be related during comprehension. For example, in the sentence “The farmer that the teacher knew __ bought a car,” the verb “knew” and its object “the farmer” are separated by “that the teacher.” Sentences with movement are more difficult to process as measured by comprehension accuracy, reading times, and eye movements during reading (King and Just, 1991; Gibson, 1998; Gordon et al., 2001; Chen et al., 2005; Staub, 2010).

The sentence in each trial appeared in one of two conditions. In the +MOVE condition, sentences contained a syntactic movement dependency in the form of an object-extracted relative clause (e.g., “The actress [that the creator of the gritty HBO crime series admires __] often improvises her lines.”) In the −MOVE condition, sentences contained identical content words, and had similar meanings, but did not contain any movement dependencies. The −MOVE condition contained an embedded sentential complement clause, so that the number of clauses was identical in +MOVE and −MOVE conditions (e.g., “The creator of the gritty HBO crime series admires [that the actress often improvises her lines].”) The sentences were counterbalanced across two lists, such that each participant saw only one version of the sentence. Some of the sentences were adapted from a published set of stimuli (Gordon et al., 2001).

On nonword sequence memory trials, participants heard a sequence of nonwords (the target) followed by a shorter sequence (the probe), made up of some of the nonwords from the original set. Participants judged whether the nonwords in the probe were in the same order as in the target. On “match” trials, the probe consisted of consecutive nonwords from the target. On “nonmatch” trials, the nonwords in the probe were chosen from random positions in the target and presented in a shuffled order. On nonmatch trials, no two nonwords that occurred consecutively in the target sequence appeared in the same order in the probe sequence.
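The probe construction rules can be sketched as follows. This is an illustration rather than the stimulus-generation code used in the study: the probe length, the rejection-sampling strategy, and the function names are assumptions, and the nonmatch constraint is read as "no two adjacent probe items were adjacent, in that order, in the target."

```python
import random


def has_consecutive_pair(probe_positions):
    """True if two adjacent probe items were adjacent, in that order, in the target."""
    return any(b - a == 1 for a, b in zip(probe_positions, probe_positions[1:]))


def make_probe(target, probe_len, match, rng):
    """Return a probe (list of nonwords) for a match or nonmatch trial."""
    if match:
        # Match: a run of consecutive nonwords taken from the target.
        start = rng.randrange(len(target) - probe_len + 1)
        return target[start:start + probe_len]
    # Nonmatch: draw random positions in random order and reject any draw
    # that preserves a consecutive target pair.
    while True:
        positions = rng.sample(range(len(target)), probe_len)
        if not has_consecutive_pair(positions):
            return [target[i] for i in positions]


rng = random.Random(0)
target = [f"nonword{i}" for i in range(18)]  # placeholder items, not real stimuli
print(make_probe(target, 6, match=True, rng=rng))
print(make_probe(target, 6, match=False, rng=rng))
```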

There were a total of 54 trials of each type: +MOVE, −MOVE, and nonword sequence, divided evenly into 6 runs. The items of each trial type were presented in a random order for each participant. Condition order was counterbalanced within each run. The sentence and nonword trials were both 16 s long. Each trial began with a tone, followed by a 6.7 s sentence or nonword sequence and a 2.9 s probe/question. Participants had until the end of the 16 s period to respond. The sentences and target nonword sequences were matched in number of words (sentence = 17.9, nonword = 17.8; p > 0.3), number of syllables per word (sentence = 1.61, nonword = 1.59; p > 0.3), and mean bigram frequency per word (sentence = 2342, nonword = 2348; p > 0.3) (Duyck et al., 2004).

Experiment 2 contained two primary conditions: sentences and math equations (Monti et al., 2012). There were a total of 48 sentence trials and 96 math trials in the experiment. On sentence trials, participants heard pairs of sentences. The task was to decide whether the two sentences had the same meaning. One of the sentences in each pair was in active voice (e.g., “The receptionist that married the driver brought the coffee.”), whereas the other was in passive voice (“The coffee was brought by the receptionist that married the driver.”) On “same” trials, the roles and relations were maintained across both sentences (as in the previous example). On “different” trials, the roles of the people in the sentences were reversed in the second sentence (e.g., “The coffee was brought by the driver that married the receptionist.”)

On math trials, participants heard pairs of spoken subtraction equations involving two numbers and a variable X. The task was to decide whether the value of X was the same in both equations. Across trials, X could occur either as an operand (e.g., X − 5 = 3) or as the answer (e.g., 8 − 5 = X). Equations occurred in one of two conditions: difficult or easy. Difficult equations involved double-digit operands and answers (e.g., 28 − 14 = X), whereas easy equations involved single-digit numbers (e.g., 8 − 4 = X). Both conditions appeared equally often.

The sentence and math trials were both 13.5 s long. The pairs of stimuli were each 3.5 s long and were separated by a 2.5 s interstimulus interval. Participants had 4 s after the offset of the second stimulus to enter their response.

MRI data acquisition and cortical surface analysis.

MRI structural and functional data of the whole brain were collected on a 3 Tesla Philips scanner. T1-weighted structural images were collected in 150 axial slices with 1 mm isotropic voxels. Functional BOLD images were collected in 36 axial slices with 2.4 × 2.4 × 3 mm voxels and TR = 2 s. Data analyses were performed using FSL, Freesurfer, the HCP workbench, and custom software (Dale et al., 1999; Smith et al., 2004; Glasser et al., 2013).

All analyses were surface-based. Cortical surface models were created for each subject using the standard Freesurfer pipeline. During preprocessing, functional data were motion corrected, high pass filtered with a 128 s cutoff, and resampled to the cortical surface. Once on the surface, the data were smoothed with a 10 mm FWHM Gaussian kernel. The data from the cerebellum and subcortical structures were not analyzed.

A GLM was used to analyze BOLD activity as a function of condition for each subject. Fixed-effects analyses were used to combine runs within subject. Data were prewhitened to remove temporal autocorrelation. Covariates of interest were convolved with a standard hemodynamic response function. Covariates of no interest included a single regressor to model trials on which the participant did not respond and individual regressors to model time points with excessive motion (blind: 1.7 drops per run, SD = 2.7; sighted: 1.3 drops per run, SD = 2.8). Temporal derivatives for all but the motion covariates were also included in the model.

Because of lack of coverage, one blind and three sighted subjects were missing data in a small subset of occipital vertices. For vertices missing data in some but not all runs, a 4-D “voxelwise” regressor was used to model out the missing runs during fixed-effects analysis. The remaining missing vertices were filled in from neighboring vertices within a 10 mm radius (area filled in for each subject: 5, 39, 11, and 82 mm²).

Group-level random-effects analyses were corrected for multiple comparisons using a combination of vertex-wise and cluster-based thresholding. p value maps were first thresholded at the vertex level p < 0.05 false discovery rate (FDR) (Genovese et al., 2002). Nonparametric permutation testing was then used to cluster-correct at p < 0.05 family-wise error rate (FWE).
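The vertex-level step can be illustrated with the Benjamini-Hochberg procedure, which is the FDR method described for neuroimaging by Genovese et al. (2002). The sketch below is a simplified illustration under that assumption, with a hypothetical function name and made-up p values; it does not cover the subsequent permutation-based cluster correction.

```python
def fdr_threshold(p_values, q=0.05):
    """Benjamini-Hochberg cutoff: largest p with p <= q * rank / m.

    Returns 0.0 when no test survives at FDR level q.
    """
    ranked = sorted(p_values)
    m = len(ranked)
    cutoff = 0.0
    for rank, p in enumerate(ranked, start=1):
        if p <= q * rank / m:
            cutoff = p
    return cutoff


# Made-up p values for ten vertices, for illustration only.
p_map = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
cutoff = fdr_threshold(p_map, q=0.05)
surviving = [p <= cutoff for p in p_map]
print(cutoff, sum(surviving))
```

Vertices whose p value is at or below the returned cutoff are retained before cluster-level correction is applied.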

Visual inspection of the data suggested that language-related activity was less likely to be left lateralized among blind participants (for similar observations, see Röder et al., 2000, 2002). To account for this difference between groups, cortical surface and ROI analyses were conducted in each subject's language dominant hemisphere. For Experiment 1 analyses, Experiment 2 (sentence > math) data were used to determine language laterality. For Experiment 2 analyses, Experiment 1 (sentence > nonword) was used. Laterality indices were calculated using the formula (L − R)/(L + R), where L and R denote the sum of positive z-statistics >2.3 (p < 0.01 uncorrected) in the left and right hemisphere, respectively. For the purposes of analyses, participants with laterality indices >0 were operationalized as left hemisphere language dominant, and right hemisphere dominant otherwise. On this measure, 12 of 19 blind and 15 of 18 sighted participants were left hemisphere dominant for the sentence > nonword contrast. For the sentence > math contrast, 11 of 19 blind and 16 of 18 sighted participants were left hemisphere dominant. To align data across subjects, cortical surface analyses were conducted in the left hemisphere with data for right-lateralized subjects reflected to the left hemisphere. For ROI analyses, we extracted percent signal change (PSC) from either the left or right hemisphere for each subject, depending on which hemisphere was dominant for language in that participant. The laterality analysis procedure was orthogonal to condition and group and thus could not bias the results. To ensure that results do not depend on the laterality procedure, all analyses were also conducted in the left and right hemisphere separately.
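The laterality index and the dominance rule described above can be written compactly. This is a minimal sketch with hypothetical function names and made-up z-statistics; the handling of a subject with no suprathreshold vertices in either hemisphere is an assumption, since the text does not specify it.

```python
def laterality_index(z_left, z_right, z_threshold=2.3):
    """(L - R) / (L + R), where L and R sum the z-statistics above threshold."""
    left = sum(z for z in z_left if z > z_threshold)
    right = sum(z for z in z_right if z > z_threshold)
    if left + right == 0:
        return 0.0  # assumption: no suprathreshold vertices gives an index of 0
    return (left - right) / (left + right)


def dominant_hemisphere(index):
    """Indices > 0 count as left dominant; all other values as right dominant."""
    return "left" if index > 0 else "right"


# Made-up z-statistics for a handful of vertices in each hemisphere.
li = laterality_index([3.0, 2.5, 1.0], [2.4, 0.5])
print(round(li, 3), dominant_hemisphere(li))
```

Because the index for one experiment is computed from the other experiment's contrast, the hemisphere assignment stays independent of the data being tested.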

ROI analyses.

We used orthogonal group functional ROIs to test for effects of syntactic movement in the visual cortex in the blind and sighted groups. Group ROIs were used because individual subject ROIs could not be defined in occipital cortex of sighted subjects.

Group ROIs were based on the Experiment 2 sentence > math contrast. We defined four ROIs, one for each activation peak in the blind average map: lateral occipital, fusiform, cuneus, and lingual. We first created a probabilistic overlap map across blind participants, where the value at each vertex is the fraction of blind participants who show activity at that vertex (p < 0.01, uncorrected) (Fedorenko et al., 2010). The overlap map was then smoothed at 5 mm FWHM. We divided the overlap map into four search spaces, one surrounding each activation peak, by manually tracing the natural boundaries between peaks. The top 20% of vertices with highest overlap were selected as the ROI for each search space.
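The overlap-map and top-20% selection steps can be sketched as below. This is an illustration with hypothetical function names and toy data; it omits the 5 mm smoothing and the manual tracing of search spaces, and the tie-breaking and rounding rules are assumptions.

```python
def overlap_map(active_by_subject):
    """Fraction of subjects with suprathreshold activity at each vertex.

    active_by_subject: one list of booleans per subject, one entry per vertex.
    """
    n_subjects = len(active_by_subject)
    n_vertices = len(active_by_subject[0])
    return [
        sum(subject[v] for subject in active_by_subject) / n_subjects
        for v in range(n_vertices)
    ]


def top_fraction(values, search_space, fraction=0.2):
    """Indices of the top `fraction` of vertices in `search_space`, ranked by value."""
    n_keep = max(1, round(fraction * len(search_space)))
    ranked = sorted(search_space, key=lambda v: values[v], reverse=True)
    return sorted(ranked[:n_keep])


# Toy example: 3 subjects, 5 vertices, one search space covering all vertices.
active = [
    [True, True, False, False, False],
    [True, False, False, True, False],
    [True, True, False, False, False],
]
overlap = overlap_map(active)
roi = top_fraction(overlap, search_space=[0, 1, 2, 3, 4], fraction=0.2)
print(overlap, roi)
```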

We additionally defined a separate group functional ROI within V1. The anatomical boundaries of V1 were based on previously published retinotopic data that were aligned with the brains of the current participants using cortical folding patterns (Hadjikhani et al., 1998; Van Essen, 2005). This alignment procedure has previously been shown to accurately identify V1 in sighted adults (Hinds et al., 2008). As with the other ROIs, the top 20% of vertices within V1 showing greatest intersubject overlap for the sentence > math contrast were selected as the group ROI.

Orthogonal individual subject functional ROIs were defined in left dorsolateral prefrontal cortex (LPFC) to test for effects of math difficulty. The LPFC ROIs were defined as the 20% most active vertices for the math > sentences contrast of Experiment 2. ROIs were defined within a prefrontal region that was activated for math > sentences across blind and sighted participants (p < 0.01 FDR corrected).

In perisylvian cortex, we defined two sets of individual subject ROIs, located in the inferior frontal gyrus and middle-posterior lateral temporal cortex. Following Fedorenko et al. (2010), we defined the ROIs using a combination of group-level search spaces and individual subject functional data. For search spaces, we selected two parcels from the published set of functional areas (Fedorenko et al., 2010), previously shown to respond to linguistic content (inferior frontal gyrus, middle-posterior lateral temporal cortex). Within each search space, ROIs were defined based on each subject's Experiment 2 sentence > math activation map, in their language dominant hemisphere. The individual ROIs were defined as the 20% most active vertices in each search space.

PSC in each ROI was calculated relative to rest after averaging together each vertex's time-series across the ROI. Only trials on which the participant made a response contributed to the PSC calculation. Statistical comparisons were performed on the average PSC responses during the predicted peak window for each experiment (6–12 s for Experiment 1, 8–14 s for Experiment 2).
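The PSC computation can be sketched as follows. This is a simplified illustration, not the authors' pipeline: it assumes PSC is 100 × (signal − rest) / rest, that sample i is acquired i × TR seconds after trial onset, and that the peak window is inclusive at both ends. Function names and values are hypothetical.

```python
def roi_timecourse(vertex_timeseries):
    """Average the time series of all vertices in an ROI, time point by time point."""
    n_vertices = len(vertex_timeseries)
    return [sum(samples) / n_vertices for samples in zip(*vertex_timeseries)]


def percent_signal_change(trial_signal, rest_baseline):
    """PSC at each time point of a trial, relative to the mean rest signal."""
    return [100.0 * (s - rest_baseline) / rest_baseline for s in trial_signal]


def mean_in_window(psc, tr_s, window_start_s, window_end_s):
    """Mean PSC over samples acquired within the window (inclusive bounds)."""
    selected = [
        value for i, value in enumerate(psc)
        if window_start_s <= i * tr_s <= window_end_s
    ]
    return sum(selected) / len(selected)


# Toy ROI with two vertices and eight samples (TR = 2 s); rest signal of 100.
roi = roi_timecourse([
    [100, 100, 100, 101, 102, 102, 101, 100],
    [100, 100, 100, 103, 102, 102, 103, 100],
])
psc = percent_signal_change(roi, rest_baseline=100.0)
print(mean_in_window(psc, tr_s=2.0, window_start_s=6, window_end_s=12))
```

With TR = 2 s, the 6–12 s window for Experiment 1 covers four samples under these assumptions; the 8–14 s window for Experiment 2 would be handled the same way.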

Results

Behavioral performance

In Experiment 1, both blind and sighted participants were more accurate on sentence trials than on nonword sequence memory trials (group × condition ANOVA, main effect of condition, F(1,35) = 93.89, p < 0.001). Accuracy on sentence trials was higher in the blind group, but the group × condition interaction was not significant (main effect of group, F(1,35) = 1.77, p = 0.19, group × condition interaction, F(1,35) = 2.4, p = 0.13) (Fig. 1).

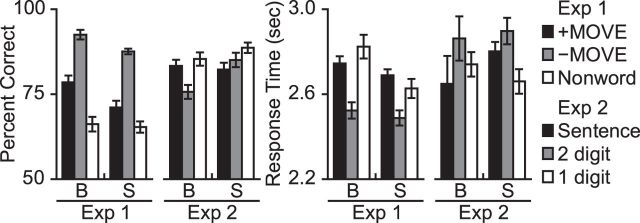

Figure 1.

Behavioral performance. Percent correct and response times for blind (B) and sighted (S) participants in Experiments 1 and 2. Error bars indicate the within-subjects SEM (Morey, 2008).

Blind participants were faster at responding on sentence trials than on nonword sequence trials (t(18) = −2.89, p = 0.01). There was no difference in response time (RT) between conditions in the sighted group (t(17) = −0.88, p = 0.39). In a group × condition ANOVA, there was a main effect of condition (F(1,35) = 7.69, p = 0.009), no effect of group (F(1,35) = 0.98, p = 0.33), and a marginal group × condition interaction (F(1,35) = 2.75, p = 0.11).

Within the sentence condition of Experiment 1, blind and sighted participants made more errors and were slower on +MOVE than −MOVE sentences (group × condition ANOVA, accuracy: main effect of condition, F(1,35) = 94.15, p < 0.001, group × condition interaction, F(1,35) = 0.62, p = 0.44; RT: main effect of condition, F(1,35) = 61.16, p < 0.001, group × condition interaction: F(1,35) = 0.14, p > 0.5). Blind participants were slightly more accurate at answering comprehension questions (main effects of group, accuracy on sentence trials: F(1,35) = 3.96, p = 0.05; RT on sentence trials: F(1,35) = 0.1, p > 0.5).

In Experiment 2, blind participants performed numerically better on sentence than math trials, whereas sighted participants performed better on math than sentence trials (blind: t(18) = 1.22, p = 0.24; sighted: t(17) = −1.81, p = 0.09; group × condition interaction, F(1,35) = 4.7, p = 0.04). Response times were not different between the sentence and math conditions in either group (group × condition ANOVA, main effect of condition, F(1,35) = 0.53, p = 0.47, main effect of group F(1,35) = 0.12, p > 0.5, group × condition interaction, F(1,35) = 0.97, p = 0.33).

Within the math condition of Experiment 2, participants made more errors and were slower to respond on the hard (double-digit) than easy (single-digit) trials (group × condition ANOVA, main effect of condition for accuracy: F(1,35) = 11.11, p = 0.002, main effect of condition for RT: F(1,35) = 7.83, p = 0.008). There were no effects of group or group × condition interactions in either accuracy or RT (accuracy: main effect of group, F(1,35) = 1.72, p = 0.2, group × condition interaction, F(1,35) = 2.33, p = 0.14; RT: main effect of group, F(1,35) = 0.01, p > 0.5, group × condition interaction, F(1,35) = 0.81, p = 0.38).

“Visual” cortex of blind adults responds more to sentences than nonword sequences or math equations

In the blind group, we observed greater responses to sentences than nonword sequences in lateral occipital cortex, the calcarine sulcus, the cuneus, the lingual gyrus, and the fusiform (p < 0.05 FWE corrected). We observed responses in the superior occipital sulcus at a more lenient threshold (p < 0.05 FDR corrected) (Fig. 2; Table 2). Relative to published visuotopic maps (Hadjikhani et al., 1998; Tootell and Hadjikhani, 2001; Van Essen, 2005), responses to sentences were centered on ventral V2, extending into V1 and V3, on the medial surface, V4 and V8 on the ventral surface, and the middle temporal visual motion complex in lateral occipital cortex (Fig. 3).

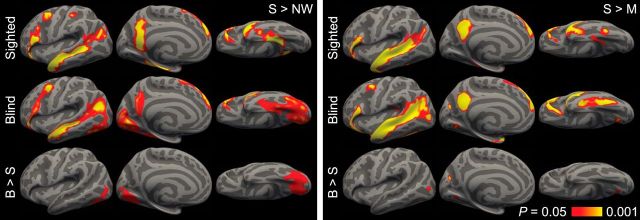

Figure 2.

Language responses in sighted and blind individuals in each subject's language dominant hemisphere. Left, Responses to sentences > nonword sequences. Right, Responses to sentences > math equations. p < 0.05 (FDR corrected; 90 mm² cluster threshold).

Table 2.

Language responsive brain regions in blind and sighted individualsᵃ

| Brain region | x | y | z | Peak t | P_FDR | mm² | P_cluster |
|---|---|---|---|---|---|---|---|
| Sentence > nonword | | | | | | | |
| Sighted | | | | | | | |
| Superior/middle temporal sulcus/gyrus | −46 | 13 | −30 | 14.98 | <0.0001 | 3579 | <0.001 |
| Parahippocampal gyrus | −32 | −36 | −15 | 10.79 | <0.0001 | 1446 | 0.008 |
| Inferior frontal gyrus | −44 | 26 | −14 | 9.34 | <0.0001 | 1350 | 0.009 |
| Precuneus | −5 | −60 | 33 | 8.31 | <0.0001 | 1307 | 0.010 |
| Superior frontal gyrus | −6 | 54 | 31 | 7.97 | <0.0001 | 914 | 0.023 |
| Middle frontal gyrus | −42 | 2 | 50 | 7.21 | <0.0001 | 398 | 0.095 |
| Postcentral sulcus | −46 | −29 | 45 | 5.64 | 0.0006 | 170 | 0.308 |
| Blind | | | | | | | |
| Superior/middle temporal sulcus/gyrus | −39 | −60 | 18 | 10.29 | <0.0001 | 8638 | <0.001 |
| Lingual gyrus | −3 | −75 | 2 | 6.06 | 0.0006 | | |
| Fusiform | −36 | −77 | −15 | 5.58 | 0.0011 | | |
| Lateral occipital cortex | −46 | −71 | −6 | 5.2 | 0.0018 | | |
| Inferior frontal gyrus | −48 | 33 | −9 | 9.67 | <0.0001 | 1476 | 0.016 |
| Middle frontal gyrus | −43 | 3 | 42 | 8.55 | <0.0001 | | |
| Superior frontal gyrus | −7 | 49 | 43 | 7.3 | 0.0002 | | |
| Precuneus | −7 | −60 | 30 | 5.35 | 0.0014 | 665 | 0.059 |
| Superior occipital sulcus | −23 | −83 | 16 | 5.46 | 0.0012 | 565 | 0.072 |
| Blind > sighted | | | | | | | |
| Fusiform | −36 | −77 | −15 | 5.66 | 0.0089 | 2160 | <0.001 |
| Lingual gyrus | −6 | −74 | −2 | 4.95 | 0.0089 | | |
| Superior occipital sulcus | −23 | −83 | 15 | 5.06 | 0.0089 | 310 | 0.021 |
| Lateral occipital cortex | −46 | −71 | −6 | 4.97 | 0.0089 | 221 | 0.047 |
| Sentence > math | | | | | | | |
| Sighted | | | | | | | |
| Superior/middle temporal sulcus/gyrus | −50 | 13 | −21 | 13.56 | <0.0001 | 4792 | <0.001 |
| Inferior frontal gyrus | −52 | 31 | −1 | 8.79 | <0.0001 | 984 | 0.029 |
| Precuneus | −5 | −60 | 33 | 9.63 | <0.0001 | 918 | 0.032 |
| Superior frontal gyrus | −6 | 55 | 32 | 8.41 | <0.0001 | 804 | 0.040 |
| Parahippocampal gyrus | −26 | −35 | −19 | 4.52 | 0.0034 | 316 | 0.163 |
| Fusiform | −41 | −45 | −19 | 6.49 | 0.0001 | 132 | 0.395 |
| Blind | | | | | | | |
| Superior/middle temporal sulcus/gyrus | −51 | −17 | −12 | 14.3 | <0.0001 | 5767 | 0.001 |
| Lateral occipital cortex | −49 | −78 | 8 | 6.53 | <0.0001 | | |
| Inferior frontal gyrus | −55 | 22 | 10 | 10.03 | <0.0001 | 1251 | 0.030 |
| Superior frontal gyrus | −7 | 52 | 40 | 8.53 | <0.0001 | 1124 | 0.036 |
| Fusiform | −39 | −39 | −24 | 7.9 | <0.0001 | 967 | 0.043 |
| Precuneus | −7 | −57 | 23 | 13.6 | <0.0001 | 791 | 0.056 |
| Middle frontal gyrus | −44 | 4 | 48 | 5.91 | 0.0002 | 227 | 0.309 |
| Lingual gyrus | −16 | −66 | −9 | 4.92 | 0.001 | 197 | 0.352 |
| Cuneus | −12 | −74 | 20 | 7.08 | <0.0001 | 138 | 0.479 |
| Blind > sighted | | | | | | | |
| Cuneus | −12 | −74 | 20 | 7.05 | 0.0005 | 141 | 0.019 |
| Lingual gyrus | −16 | −66 | −9 | 4.8 | 0.0143 | 98 | 0.041 |
| Lateral occipital cortex | −49 | −78 | 8 | 5.67 | 0.0028 | 95 | 0.043 |

ᵃCoordinates are reported in MNI space. Peak t, t values for local maxima; P_FDR, FDR adjusted p values for local maxima; mm², area of the cluster on the cortical surface; P_cluster, FWE corrected p value for the entire cluster.

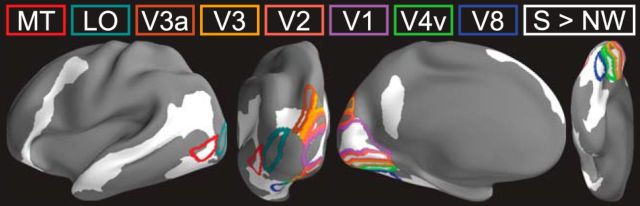

Figure 3.

Activation for sentences > nonword sequences in the blind group, relative to visuotopic boundaries (p < 0.05 FDR corrected; 90 mm² cluster threshold) (Hadjikhani et al., 1998; Tootell and Hadjikhani, 2001; Van Essen, 2005).

Occipital responses to sentences were specific to the blind group. A group × condition interaction analysis (blind > sighted) of the sentences > nonwords contrast identified activity in the fusiform gyrus, the lingual gyrus, the superior occipital sulcus, and lateral occipital cortex (p < 0.05 FWE corrected).

A similar but spatially less extensive pattern of activation was observed for sentences relative to math equations in Experiment 2 (Fig. 2; Table 2). Occipital areas that were more active in blind than sighted participants included the cuneus, anterior lingual gyrus, and lateral occipital cortex (p < 0.05 FWE corrected).

To verify that results were not dependent on the laterality procedure, we conducted the same analyses in the left and right hemisphere separately. The pattern of results was similar to the language dominant hemisphere analyses. We again observed occipital responses to sentences in both experiments in the blind but not sighted subjects. Effects were somewhat weaker, suggesting that the laterality procedure reduced variability across participants. The left/right hemisphere analysis also revealed that, on average, the blind group responses to sentences > nonwords were slightly larger in the left hemisphere, whereas responses to sentences > math were larger in the right hemisphere.

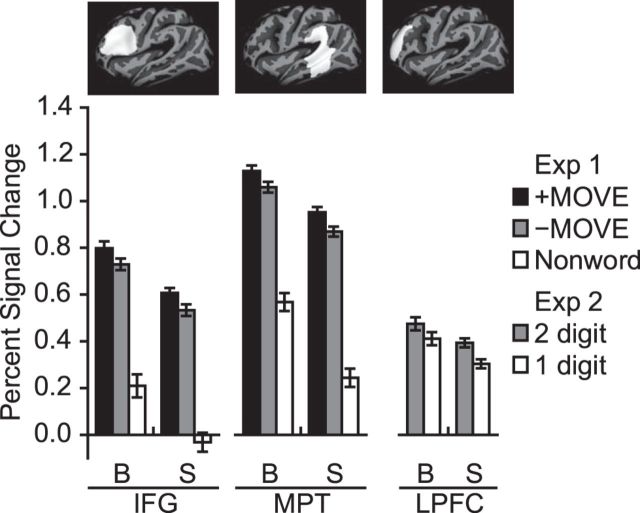

Visual cortex of blind adults responds more to sentences with syntactic movement

Above, we identified occipital areas that respond more to sentences than memory for nonword sequences and symbolic math, including lateral occipital cortex, cuneus, lingual gyrus, and fusiform. The same occipital regions showed a reliable effect of syntactic movement in the blind group, but not in the sighted group (ROI × movement ANOVA in blind group, main effect of syntactic movement, F(1,18) = 10.46, p = 0.005, ROI × movement interaction, F(3,54) = 0.96, p = 0.42, main effect of ROI, F(3,54) = 21.03, p < 0.001; ROI × movement ANOVA in sighted group, main effect of syntactic movement, F(1,17) = 0.01, p > 0.5, ROI × movement interaction, F(3,51) = 0.97, p = 0.41, main effect of ROI, F(3,51) = 10.89, p < 0.001; group × ROI × movement ANOVA, group × movement interaction, F(1,35) = 5.22, p = 0.03) (Fig. 4).

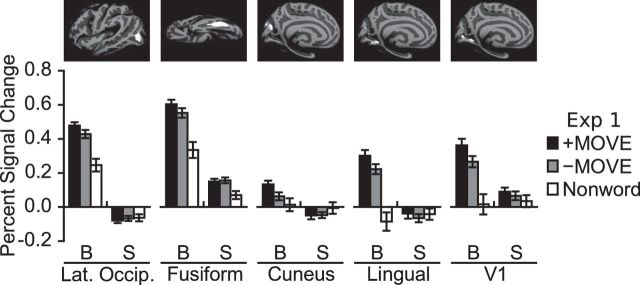

Figure 4.

Responses to syntactic movement in occipital group ROIs in blind (B) and sighted (S) participants. For each subject, PSC was extracted from the left or right hemisphere, depending on which was dominant for language. Error bars indicate the within-subjects SEM (Morey, 2008).
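For readers unfamiliar with within-subjects error bars, the following is a minimal sketch of the normalization approach described by Morey (2008): each subject's values are centered on that subject's mean and shifted to the grand mean, the SEM is computed per condition, and the result is scaled by sqrt(M / (M − 1)), where M is the number of within-subject conditions. The function name `within_subject_sem` and the toy data are assumptions made for illustration and are not taken from the study.

```python
import math


def within_subject_sem(data):
    """Within-subject SEM per condition (normalization plus Morey correction).

    data: list of rows, one row per subject, one value per condition.
    """
    n_subj = len(data)
    n_cond = len(data[0])
    grand_mean = sum(sum(row) for row in data) / (n_subj * n_cond)

    # Remove between-subject offsets: subtract each subject's own mean,
    # then add back the grand mean.
    normalized = [
        [x - sum(row) / n_cond + grand_mean for x in row] for row in data
    ]

    # Morey (2008) correction factor applied to the SEM.
    correction = math.sqrt(n_cond / (n_cond - 1))

    sems = []
    for c in range(n_cond):
        col = [row[c] for row in normalized]
        mean_c = sum(col) / n_subj
        var_c = sum((x - mean_c) ** 2 for x in col) / (n_subj - 1)
        sems.append(correction * math.sqrt(var_c / n_subj))
    return sems


# Toy example (made-up numbers): subjects differ only by an overall offset,
# so the within-subject SEM is zero in both conditions.
toy = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(within_subject_sem(toy))
```

Because between-subject offsets are removed before the SEM is computed, these error bars reflect the variability relevant to within-subject comparisons such as +MOVE versus −MOVE.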

In addition, we conducted the same analysis in the left and right hemispheres separately. Similar to the language dominant hemisphere analysis, we found a significant effect of movement and a movement × group interaction in the left hemisphere, and a weaker but similar trend in the right hemisphere (ROI × movement ANOVA in blind group, main effect of syntactic movement, LH: F(1,18) = 9.19, p = 0.007, RH: F(1,18) = 3.13, p = 0.09; group × ROI × movement ANOVA, group × movement interaction, LH: F(1,35) = 4.94, p = 0.03, RH: F(1,35) = 2.4, p = 0.13).

The language-responsive visual cortex ROIs did not respond more to difficult than simple math equations (blind group paired t tests, p > 0.16). The lack of response to math difficulty in visual cortex was not due to the failure of the math difficulty manipulation to drive brain activity. Left dorsolateral prefrontal cortex responded more to double-digit (difficult) than single-digit (easy) math equations across sighted and blind groups (group × difficulty ANOVA, main effect of difficulty, F(1,35) = 9.38, p = 0.004, main effect of group, F(1,35) = 0.93, p = 0.34, difficulty × group interaction, F(1,35) = 0.27, p > 0.5) (Fig. 5).

Figure 5.

PSC in individual subject frontal and temporal ROIs in blind (B) and sighted (S) groups. Left, Responses during Experiment 1 in inferior frontal gyrus (IFG) and middle-posterior lateral temporal (MPT) language-responsive ROIs. For each subject, PSC was extracted from the left or right hemisphere, depending on which was dominant for language. Right, Responses to math difficulty in a left hemisphere math-responsive dorsolateral prefrontal ROI. Pictured above the graphs are the search spaces used to define the ROIs. Error bars indicate the within-subjects SEM (Morey, 2008).

Visual cortex activity predicts comprehension performance in blind adults

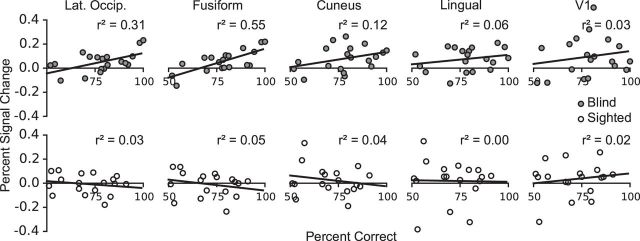

Blind participants who showed greater sensitivity to the movement manipulation in occipital cortex (+MOVE > −MOVE; percent signal change extracted from correct trials only) also performed better at comprehending +MOVE sentences (Fig. 6). We observed the strongest relationship between brain activation and behavior in the fusiform and lateral occipital ROIs (fusiform: r = 0.74, p = 0.001; lateral occipital: r = 0.55, p = 0.03, FDR corrected for the number of ROIs). The correlations with behavior were in the same direction but not reliable for regions on the medial surface (cuneus: r = 0.35, p = 0.19; lingual: r = 0.23, p = 0.33, FDR corrected). The relationship between movement-related occipital activity and comprehension accuracy was specific to the blind group (sighted, r² < 0.06, p > 0.35). The relationship was also specific to visual cortex and was not found in inferior frontal and lateral temporal ROIs (blind and sighted, r² < 0.07, p > 0.3). Finally, the correlation was specific to the +MOVE > −MOVE neural differences. The amount of language activity in visual cortex (sentences > nonwords) was not a good predictor of comprehension accuracy (blind, r² < 0.09, p > 0.22).
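The brain-behavior analysis above combines a per-ROI Pearson correlation with a false discovery rate correction across ROIs. The sketch below illustrates that general procedure under stated assumptions: the text does not name the specific FDR method, so the Benjamini-Hochberg step-up adjustment is used here as one common choice, and the p-values in the example are made up rather than taken from the data. The function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Made-up uncorrected p-values for four ROIs (illustration only).
raw_p = [0.01, 0.04, 0.03, 0.5]
print(benjamini_hochberg(raw_p))
```

In this scheme, the per-subject +MOVE minus −MOVE signal difference in each ROI would be passed to `pearson_r` together with per-subject comprehension accuracy, and the resulting p-values would be adjusted jointly across the ROIs.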

Figure 6.

PSC in occipital group ROIs correlated with sentence comprehension accuracy on the complex +MOVE sentences. Top, Correlations in the blind group. Bottom, Correlations in the sighted group. PSC shown is the difference between +MOVE and −MOVE conditions. PSC was extracted from correct trials only, and in each subject's language dominant hemisphere. Data points indicate individual subjects.

Responses to language in V1

We next asked whether voxels within the boundaries of V1 responded to syntactic movement. We identified a V1 ROI that responded more to sentences than math in the blind group (Fig. 4). In this region, we saw an effect of syntactic movement in the blind but not sighted group (paired t test in the blind group, t(18) = 2.85, p = 0.01; sighted group, t(17) = 0.86, p = 0.4, group × movement ANOVA, group × movement interaction, F(1,35) = 2.59, p = 0.12, main effect of movement F(1,35) = 7.37, p = 0.01). There was no effect of math difficulty in this V1 ROI (blind group paired t test, t(18) = 0.29, p > 0.5). This analysis suggests that in the blind group a region within V1 is sensitive to syntactic movement but not math difficulty. Notably, however, the relationship between neural activity and performance observed in secondary visual areas was not present in V1 (r = 0.18, p = 0.45).
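The paired t tests reported for the V1 ROI compare two conditions within the same subjects. A minimal sketch of that computation is shown below, assuming one percent signal change value per subject and condition; `paired_t` is a hypothetical helper, and the numbers in the example are invented for illustration.

```python
import math


def paired_t(cond_a, cond_b):
    """Paired t statistic and degrees of freedom for cond_a minus cond_b."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)
    t_stat = mean_d / math.sqrt(var_d / n)
    return t_stat, n - 1


# Invented values for three subjects (illustration only).
t_stat, df = paired_t([2.0, 4.0, 6.0], [1.0, 2.0, 3.0])
print(round(t_stat, 3), df)
```

With 19 blind and 18 sighted participants, the same computation yields 18 and 17 degrees of freedom, matching the t(18) and t(17) values reported above.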

Responses to language and syntactic movement in perisylvian cortex are preserved in blindness

In sighted individuals, listening to sentences relative to sequences of nonwords activated a network of areas in prefrontal, lateral temporal, and temporoparietal cortex (Fig. 2; Table 2). We observed sentence-related activity in the inferior and superior frontal gyri, and along the extent of the middle and superior temporal gyri (p < 0.05 FWE corrected). The posterior aspect of the middle frontal gyrus was active at a more lenient threshold (p < 0.05 FDR corrected). A similar pattern of activation was observed for sentences relative to math equations, except with reduced response in the middle frontal gyrus, and more extensive temporal activation (p < 0.05 FWE corrected).

Blind individuals activated the same areas of prefrontal and lateral temporal cortex during sentence comprehension, relative to both nonword sequence memory and math calculation (p < 0.05, FWE corrected). A group × condition interaction analysis did not reveal any brain areas that were more active in sighted than blind participants, for either the sentences > nonwords or sentences > math contrasts (p < 0.05, FDR corrected). However, in blind participants, responses were less likely to be left-lateralized and more likely to be either bilateral or right-lateralized.

Across blind and sighted groups, inferior frontal and mid-posterior lateral temporal ROIs had higher activity for +MOVE than −MOVE sentences (group × movement × ROI ANOVA, main effect of movement, F(1,35) = 35.39, p < 0.001; movement × group interaction, F(1,35) = 0.14, p > 0.5; movement × ROI interaction F(1,35) = 0.15, p > 0.5) (Fig. 5).

Discussion

We find that, within the visual cortices of congenitally blind adults, a subset of regions is selective for linguistic content and sensitive to grammatical structure. Our findings replicate and extend the results of a prior study (Röder et al., 2002) showing responses to syntactic complexity in visual cortex of blind adults. Here we show that such responses are selective to language in that (1) they are observed in cortical areas that respond to language more than sequence memory and symbolic math tasks and (2) these same regions are not sensitive to the complexity of math equations. We further find that responses to syntactic complexity in visual cortex predict sentence comprehension performance across blind participants.

Occipital language areas are sensitive to syntactic structure

Sensitivity to syntactic movement in visual cortex is surprising, in light of evolutionary theories positing specific neural machinery for syntax (Pinker and Bloom, 1990; Hauser et al., 2002; Fitch and Hauser, 2004; Makuuchi et al., 2009). It has been suggested that Broca's area is specialized for processing syntactic movement dependencies (Grodzinsky, 2000; Grodzinsky and Santi, 2008). Understanding sentences with syntactic movement requires maintaining syntactic information in memory in the presence of distractors. It is unlikely that occipital cortex is evolutionarily adapted either for maintaining information over delays or for representing syntactic structure. Our findings suggest that language-specific adaptations are not required for a brain area to participate in syntactic processing.

How are we to reconcile the idea that nonspecialized brain areas participate in syntax with the fact that language is uniquely human (Terrace et al., 1979)? On the one hand, it could be argued that language is a cultural, rather than biological, adaptation, and there are no brain networks that are innately specialized for language processing (Christiansen and Chater, 2008; Tomasello, 2009). On the other hand, there is evidence that evolution enabled the human brain for language (Goldin-Meadow and Feldman, 1977; Enard et al., 2002; Vargha-Khadem et al., 2005). Consistent with this idea, the functional profile of perisylvian cortex is preserved across deafness and blindness (e.g., Neville et al., 1998). One possibility is that biological adaptations are only needed to initiate language acquisition. A subset of perisylvian areas may contain the “seeds” of language-processing capacity. During the course of development, the capacities of these specialized regions may spread to nonspecialized cortical areas. In blindness, this process of colonization expands into occipital territory. On this view, small evolutionary adaptations have cascading effects when combined with uniquely human experience.

An important open question concerns the relative behavioral contribution of occipital and perisylvian cortex to language. Having more cortical tissue devoted to sentence processing could improve sentence comprehension. Consistent with this idea, blind participants were slightly better at sentence comprehension tasks in the current experiments. We also found that blind participants with greater sensitivity to movement in occipital cortex were better at comprehending sentences. This suggests that occipital plasticity for language could be behaviorally relevant. However, multiple alternative possibilities remain open. Sentence processing behavior might depend entirely on perisylvian areas. Although prior work has shown that occipital cortex is behaviorally relevant for Braille reading and verb generation, the relevance of occipital activity for sentence processing is not established. Occipital regions could also be performing redundant computations that have no impact on linguistic behavior. Finally, occipital cortex might actually hinder performance, perhaps because occipital cytoarchitecture and connectivity are suboptimal for language. Future studies could adjudicate among these possibilities using techniques such as transcranial magnetic stimulation. If occipital cortex contributes to sentence comprehension, then transient disruption with transcranial magnetic stimulation should impair performance.

Developmental origins of language responses in occipital cortex

Our findings raise questions about the developmental mechanisms of language-related plasticity in blindness. With regard to timing, there is some evidence that occipital plasticity for language has a critical period. One study found that only individuals who lost their vision before age 9 show occipital responses to language (Bedny et al., 2012). This time course differs from other kinds of occipital plasticity, which occur in late blindness and even within several days of blindfolding (Merabet et al., 2008).

One possibility is that blindness prevents the pruning of exuberant projections from language areas to “visual” cortex. Language information could reach occipital cortex from language regions in prefrontal cortex, lateral temporal cortex, or both. In support of the prefrontal source hypothesis, blind individuals have increased resting state correlations between prefrontal cortex and occipital cortex (Liu et al., 2007; Bedny et al., 2010, 2011; Watkins et al., 2012). Prefrontal cortex is connected with occipital cortex by the fronto-occipital fasciculus (Martino et al., 2010) and to posterior and inferior temporal regions by the arcuate fasciculus (Rilling et al., 2008). In blindness, these projections may extend into visuotopic regions, such as the middle temporal visual motion complex. Alternatively, temporal-lobe language areas may themselves expand posteriorly into visuotopic cortex. Language information could then reach primary visual areas (V1, V2) through feedback projections.

Domain-specific responses to language in occipital cortex

We find that areas within occipital cortex respond to sentence processing demands more than to memory for nonword sequences, or symbolic math. Like the control tasks, sentence processing requires maintaining previously heard items and their order in memory, and building hierarchical structures from symbols. Furthermore, both the nonwords and math tasks were harder than the sentence comprehension tasks. Despite this, regions within visual cortex responded more during the sentence comprehension tasks than the control tasks. This result is consistent with our prior findings that language-responsive regions of visual cortex are sensitive to linguistic content but not task difficulty per se (Bedny et al., 2011). Our findings suggest that occipital cortex develops domain-specific responses to language, mirroring specialization in frontal and temporal cortex (Makuuchi et al., 2009; Fedorenko et al., 2011, 2012; Monti et al., 2012).

Occipital plasticity for language has implications for theories of how domain specificity emerges in the human brain. Domain specificity is often thought to result from an intrinsic match between cortical microcircuitry and cognitive computations. By contrast, our findings suggest that input during development can cause specialization for a cognitive domain in the absence of preexisting adaptations. A similar conclusion is supported by recent studies of development in object-selective occipitotemporal cortex. The ventral visual stream develops responses to object categories that do not have an evolutionary basis. For example, the visual word form area responds selectively to written words and letters (Cohen et al., 2000; McCandliss et al., 2003). Like other ventral stream areas, such as the fusiform face area and the parahippocampal place area, the visual word form area falls in a systematic location across individuals. In macaques, long-term training with novel visual objects (cartoon faces, Helvetica font, and Tetris shapes) leads to the development of cortical patches that are selective for these objects (Srihasam et al., 2014).

The present data go beyond these findings in one important respect. Specialization for particular visual objects is thought to build on existing innate mechanisms for object perception in the ventral visual stream. Even the location of the new object-selective areas within the ventral stream is thought to depend on intrinsic predispositions for processing specific shapes (jagged or curved lines) or particular parts of space (foveal as opposed to peripheral parts of the visual field) (Srihasam et al., 2014). The present findings demonstrate that domain specificity emerges even without such predispositions.

Why would selectivity emerge in the absence of innate predisposition? One possibility is that there are computational advantages to segregating different domains of information into different cortical areas (Cosmides and Tooby, 1994). Some inputs may be particularly effective colonizers of cortex, forcing out other processing. Other cognitive domains might be capable of sharing cortical circuits. Domain specificity could also be a natural outcome of the developmental process, without conferring any computational advantage.

The current results demonstrate that regions within the occipital cortex of blind adults show domain-specific responses to language. However, they leave open the possibility that other regions within visual cortex serve domain general functions. Evidence for this idea comes from a recent study that found increased functional connectivity between visual cortices and domain general working memory areas in blind adults (Deen et al., 2015). Such domain general responses may coexist with domain-specific responses to language.

It is also important to point out that visual cortices of blind individuals are likely to have other functions aside from language. There is ample evidence that the visual cortex is active during nonlinguistic tasks, including spatial localization of sounds, tactile mental rotation, and somatosensory tactile and auditory discrimination tasks (e.g., Rösler et al., 1993; Röder et al., 1996; Collignon et al., 2011). We hypothesize that some of the heterogeneity of responses observed across studies is attributable to different functional profiles across regions within the visual cortices. In sighted individuals, the visual cortices are subdivided into a variety of functional areas. Such subspecialization is also likely present in blindness.

Notes

Supplemental material for this article is available at http://pbs.jhu.edu/research/bedny/publications/Lane_2015_Supplement.pdf. Supplemental material includes a figure showing language responses for sighted and blind individuals in each subject's language dominant and non–language-dominant hemisphere. This material has not been peer reviewed.

Footnotes

This work was supported in part by the Science of Learning Institute at Johns Hopkins University. We thank the Baltimore blind community for making this research possible; and the F.M. Kirby Research Center for Functional Brain Imaging at the Kennedy Krieger Institute for their assistance in data collection.

The authors declare no competing financial interests.

References

- Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early “visual” cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- Amedi A, Floel A, Knecht S, Zohary E, Cohen LG. Transcranial magnetic stimulation of the occipital pole interferes with verbal processing in blind subjects. Nat Neurosci. 2004;7:1266–1270. doi: 10.1038/nn1328.

- Bedny M, Konkle T, Pelphrey K, Saxe R, Pascual-Leone A. Sensitive period for a multimodal response in human visual motion area MT/MST. Curr Biol. 2010;20:1900–1906. doi: 10.1016/j.cub.2010.09.044.

- Bedny M, Pascual-Leone A, Dodell-Feder D, Fedorenko E, Saxe R. Language processing in the occipital cortex of congenitally blind adults. Proc Natl Acad Sci U S A. 2011;108:4429–4434. doi: 10.1073/pnas.1014818108.

- Bedny M, Pascual-Leone A, Dravida S, Saxe R. A sensitive period for language in the visual cortex: distinct patterns of plasticity in congenitally versus late blind adults. Brain Lang. 2012;122:162–170. doi: 10.1016/j.bandl.2011.10.005.

- Büchel C, Price C, Frackowiak RS, Friston K. Different activation patterns in the visual cortex of late and congenitally blind subjects. Brain. 1998a;121:409–419. doi: 10.1093/brain/121.3.409.

- Büchel C, Price C, Friston K. A multimodal language region in the ventral visual pathway. Nature. 1998b;394:274–277. doi: 10.1038/28389.

- Burton H. Visual cortex activity in early and late blind people. J Neurosci. 2003;23:4005–4011. doi: 10.1523/JNEUROSCI.23-10-04005.2003.

- Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME. Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol. 2002a;87:589–607. doi: 10.1152/jn.00285.2001.

- Burton H, Snyder AZ, Diamond JB, Raichle ME. Adaptive changes in early and late blind: a FMRI study of verb generation to heard nouns. J Neurophysiol. 2002b;88:3359–3371. doi: 10.1152/jn.00129.2002.

- Chen E, Gibson E, Wolf F. Online syntactic storage costs in sentence comprehension. J Mem Lang. 2005;52:144–169. doi: 10.1016/j.jml.2004.10.001.

- Chomsky N. Syntactic structures. The Hague, The Netherlands: Mouton; 1957.

- Chomsky N. The minimalist program. Cambridge, MA: Massachusetts Institute of Technology; 1995.

- Christiansen MH, Chater N. Language as shaped by the brain. Behav Brain Sci. 2008;31:489–508. doi: 10.1017/S0140525X08004998. discussion 509–558.

- Cohen LG, Celnik P, Pascual-Leone A, Corwell B, Falz L, Dambrosia J, Honda M, Sadato N, Gerloff C, Catalá MD, Hallett M. Functional relevance of cross-modal plasticity in blind humans. Nature. 1997;389:180–183. doi: 10.1038/38278.

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff MA, Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123:291–307. doi: 10.1093/brain/123.2.291.

- Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F. Functional specialization for auditory-spatial processing in the occipital cortex of congenitally blind humans. Proc Natl Acad Sci U S A. 2011;108:4435–4440. doi: 10.1073/pnas.1013928108.

- Cosmides L, Tooby J. Mapping the mind: domain specificity in cognition and culture. Cambridge, UK: Cambridge UP; 1994. Origins of domain specificity: the evolution of functional organization; pp. 84–116.

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395.

- Deen B, Saxe R, Bedny M. Occipital cortex of blind individuals is functionally coupled with executive control areas of frontal cortex. J Cogn Neurosci. 2015;27:1633–1647. doi: 10.1162/jocn_a_00807.

- Duyck W, Desmet T, Verbeke LP, Brysbaert M. WordGen: a tool for word selection and nonword generation in Dutch, English, German, and French. Behav Res Methods Instrum Comput. 2004;36:488–499. doi: 10.3758/BF03195595.

- Enard W, Przeworski M, Fisher SE, Lai CS, Wiebe V, Kitano T, Monaco AP, Pääbo S. Molecular evolution of FOXP2, a gene involved in speech and language. Nature. 2002;418:869–872. doi: 10.1038/nature01025.

- Fedorenko E, Hsieh PJ, Nieto-Castañón A, Whitfield-Gabrieli S, Kanwisher N. New method for fMRI investigations of language: defining ROIs functionally in individual subjects. J Neurophysiol. 2010;104:1177–1194. doi: 10.1152/jn.00032.2010.

- Fedorenko E, Behr MK, Kanwisher N. Functional specificity for high-level linguistic processing in the human brain. Proc Natl Acad Sci U S A. 2011;108:16428–16433. doi: 10.1073/pnas.1112937108.

- Fedorenko E, Duncan J, Kanwisher N. Language-selective and domain-general regions lie side by side within Broca's area. Curr Biol. 2012;22:2059–2062. doi: 10.1016/j.cub.2012.09.011.

- Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nat Neurosci. 2001;4:1171–1173. doi: 10.1038/nn763.

- Finney EM, Clementz BA, Hickok G, Dobkins KR. Visual stimuli activate auditory cortex in deaf subjects: evidence from MEG. Neuroreport. 2003;14:1425–1427. doi: 10.1097/00001756-200308060-00004.

- Fitch WT, Hauser MD. Computational constraints on syntactic processing in a nonhuman primate. Science. 2004;303:377–380. doi: 10.1126/science.1089401.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Gibson E. Linguistic complexity: locality of syntactic dependencies. Cognition. 1998;68:1–76. doi: 10.1016/S0010-0277(98)00034-1.

- Glasser MF, Sotiropoulos SN, Wilson JA, Coalson TS, Fischl B, Andersson JL, Xu J, Jbabdi S, Webster M, Polimeni JR, Van Essen DC, Jenkinson M. The minimal preprocessing pipelines for the Human Connectome Project. Neuroimage. 2013;80:105–124. doi: 10.1016/j.neuroimage.2013.04.127.

- Goldin-Meadow S, Feldman H. The development of language-like communication without a language model. Science. 1977;197:401–403. doi: 10.1126/science.877567.

- Gordon PC, Hendrick R, Johnson M. Memory interference during language processing. J Exp Psychol Learn Mem Cogn. 2001;27:1411–1423. doi: 10.1037/0278-7393.27.6.1411.

- Grodzinsky Y, Santi A. The battle for Broca's region. Trends Cogn Sci. 2008;12:474–480. doi: 10.1016/j.tics.2008.09.001.

- Grodzinsky Y. The neurology of syntax: language use without Broca's area. Behav Brain Sci. 2000;23:1–21. doi: 10.1017/s0140525x00002399. discussion 21–71.

- Hadjikhani N, Liu AK, Dale AM, Cavanagh P, Tootell RB. Retinotopy and color sensitivity in human visual cortical area V8. Nat Neurosci. 1998;1:235–241. doi: 10.1038/681.

- Hauser MD, Chomsky N, Fitch WT. The faculty of language: what is it, who has it, and how did it evolve? Science. 2002;298:1569–1579. doi: 10.1126/science.298.5598.1569.

- Hinds OP, Rajendran N, Polimeni JR, Augustinack JC, Wiggins G, Wald LL, Diana Rosas H, Potthast A, Schwartz EL, Fischl B. Accurate prediction of V1 location from cortical folds in a surface coordinate system. Neuroimage. 2008;39:1585–1599. doi: 10.1016/j.neuroimage.2007.10.033.

- Hyvärinen J, Carlson S, Hyvärinen L. Early visual deprivation alters modality of neuronal responses in area 19 of monkey cortex. Neurosci Lett. 1981;26:239–243. doi: 10.1016/0304-3940(81)90139-7.

- Karns CM, Dow MW, Neville HJ. Altered cross-modal processing in the primary auditory cortex of congenitally deaf adults: a visual-somatosensory fMRI study with a double-flash illusion. J Neurosci. 2012;32:9626–9638. doi: 10.1523/JNEUROSCI.6488-11.2012.

- King J, Just MA. Individual differences in syntactic processing: the role of working memory. J Mem Lang. 1991;30:580–602. doi: 10.1016/0749-596X(91)90027-H.

- Liu Y, Yu C, Liang M, Li J, Tian L, Zhou Y, Qin W, Li K, Jiang T. Whole brain functional connectivity in the early blind. Brain. 2007;130:2085–2096. doi: 10.1093/brain/awm121.

- Lomber SG, Meredith MA, Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- Makuuchi M, Bahlmann J, Anwander A, Friederici AD. Segregating the core computational faculty of human language from working memory. Proc Natl Acad Sci U S A. 2009;106:8362–8367. doi: 10.1073/pnas.0810928106.

- Martino J, Brogna C, Robles SG, Vergani F, Duffau H. Anatomic dissection of the inferior fronto-occipital fasciculus revisited in the lights of brain stimulation data. Cortex. 2010;46:691–699. doi: 10.1016/j.cortex.2009.07.015.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn Sci. 2003;7:293–299. doi: 10.1016/S1364-6613(03)00134-7.

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A. Feeling by sight or seeing by touch? Neuron. 2004;42:173–179. doi: 10.1016/S0896-6273(04)00147-3.

- Merabet L, Hamilton R, Schlaug G, Swisher J, Kiriakopoulos E, Pitskel N, Kauffman T, Pascual-Leone A. Rapid and reversible recruitment of early visual cortex for touch. PLoS One. 2008;3:e3046. doi: 10.1371/journal.pone.0003046.

- Monti MM, Parsons LM, Osherson DN. Thought beyond language: neural dissociation of algebra and natural language. Psychol Sci. 2012;23:914–922. doi: 10.1177/0956797612437427.

- Morey RD. Confidence intervals from normalized data: a correction to Cousineau (2005). Tutor Quant Methods Psychol. 2008;4:61–64.

- Neville HJ, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, Braun A, Clark V, Jezzard P, Turner R. Cerebral organization for language in deaf and hearing subjects: biological constraints and effects of experience. Proc Natl Acad Sci U S A. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- Pinker S, Bloom P. Natural language and natural selection. Behav Brain Sci. 1990;13:707–727. doi: 10.1017/S0140525X00081061.

- Reich L, Szwed M, Cohen L, Amedi A. A ventral visual stream reading center independent of visual experience. Curr Biol. 2011;22:363–368. doi: 10.1016/j.cub.2011.01.040.

- Rilling JK, Glasser MF, Preuss TM, Ma X, Zhao T, Hu X, Behrens TE. The evolution of the arcuate fasciculus revealed with comparative DTI. Nat Neurosci. 2008;11:426–428. doi: 10.1038/nn2072.

- Röder B, Rösler F, Hennighausen E, Näcker F. Event-related potentials during auditory and somatosensory discrimination in sighted and blind human subjects. Cogn Brain Res. 1996;4:77–93. doi: 10.1016/0926-6410(96)00024-9.

- Röder B, Rösler F, Neville HJ. Event-related potentials during auditory language processing in congenitally blind and sighted people. Neuropsychologia. 2000;38:1482–1502. doi: 10.1016/S0028-3932(00)00057-9.

- Röder B, Stock O, Bien S, Neville H, Rösler F. Speech processing activates visual cortex in congenitally blind humans. Eur J Neurosci. 2002;16:930–936. doi: 10.1046/j.1460-9568.2002.02147.x.

- Rösler F, Röder B, Heil M, Hennighausen E. Topographic differences of slow event-related brain potentials in blind and sighted adult human subjects during haptic mental rotation. Brain Res Cogn Brain Res. 1993;1:145–159. doi: 10.1016/0926-6410(93)90022-W.

- Sadato N, Pascual-Leone A, Grafman J, Ibañez V, Deiber MP, Dold G, Hallett M. Activation of the primary visual cortex by Braille reading in blind subjects. Nature. 1996;380:526–528. doi: 10.1038/380526a0.

- Sadato N, Pascual-Leone A, Grafman J, Deiber MP, Ibañez V, Hallett M. Neural networks for Braille reading by the blind. Brain. 1998;121:1213–1229. doi: 10.1093/brain/121.7.1213.

- Saenz M, Lewis LB, Huth AG, Fine I, Koch C. Visual motion area MT+/V5 responds to auditory motion in human sight-recovery subjects. J Neurosci. 2008;28:5141–5148. doi: 10.1523/JNEUROSCI.0803-08.2008.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Srihasam K, Vincent JL, Livingstone MS. Novel domain formation reveals proto-architecture in inferotemporal cortex. Nat Neurosci. 2014;17:1776–1783. doi: 10.1038/nn.3855.

- Staub A. Eye movements and processing difficulty in object relative clauses. Cognition. 2010;116:71–86. doi: 10.1016/j.cognition.2010.04.002.

- Terrace HS, Petitto LA, Sanders RJ, Bever TG. Can an ape create a sentence? Science. 1979;206:891–902. doi: 10.1126/science.504995.

- Tomasello M. The cultural origins of human cognition. Cambridge, MA: Harvard UP; 2009.

- Tootell RB, Hadjikhani N. Where is “dorsal V4” in human visual cortex? Retinotopic, topographic and functional evidence. Cereb Cortex. 2001;11:298–311. doi: 10.1093/cercor/11.4.298.

- Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Vargha-Khadem F, Gadian DG, Copp A, Mishkin M. FOXP2 and the neuroanatomy of speech and language. Nat Rev Neurosci. 2005;6:131–138. doi: 10.1038/nrn1605.

- Watkins KE, Cowey A, Alexander I, Filippini N, Kennedy JM, Smith SM, Ragge N, Bridge H. Language networks in anophthalmia: maintained hierarchy of processing in “visual” cortex. Brain. 2012;135:1566–1577. doi: 10.1093/brain/aws067.

- Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP. A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci. 2000;20:2664–2672. doi: 10.1523/JNEUROSCI.20-07-02664.2000.

- Wolbers T, Zahorik P, Giudice NA. Decoding the direction of auditory motion in blind humans. Neuroimage. 2011;56:681–687. doi: 10.1016/j.neuroimage.2010.04.266.