Abstract

The cortical reinstatement hypothesis of memory retrieval posits that content-specific cortical activity at encoding is reinstated at retrieval. Evidence for cortical reinstatement has been found in higher-order sensory regions, reflecting reactivation of complex object-based information. However, it remains unclear whether the same detailed sensory, feature-based information perceived during encoding is subsequently reinstated in early sensory cortex, and what role the hippocampus plays in this process. In this study, we used a combination of visual psychophysics, functional neuroimaging, multivoxel pattern analysis, and a well-controlled cued recall paradigm to address this issue. We found that the visual information human participants were retrieving could be predicted from activation patterns in early visual cortex. Importantly, this reinstatement resembled the neural pattern elicited when participants viewed the visual stimuli passively, indicating shared representations between stimulus-driven activity and memory. Furthermore, hippocampal activity covaried with the strength of stimulus-specific cortical reinstatement on a trial-by-trial level during cued recall. These findings provide evidence for reinstatement of unique associative memories in early visual cortex and suggest that the hippocampus modulates the mnemonic strength of this reinstatement.

Keywords: cortical reinstatement, hippocampus, multivariate analysis, visual cortex

Introduction

Retrieving memories of past events is central to adaptive behavior. Tulving's episodic memory theory describes memory retrieval as “mental time travel”: when recalling an event, it is as if one were “transported” back to the situation in which that event happened, thereby reactivating during retrieval the cortical representations that were involved during initial encoding (James, 1890; Tulving and Thomson, 1973). Indeed, associative memory paradigms have revealed reactivation of encoding-related regions throughout the sensory hierarchy (Nyberg et al., 2000; Wheeler et al., 2000; Düzel et al., 2003; Khader et al., 2005; Ranganath et al., 2005; Woodruff et al., 2005; Slotnick and Schacter, 2006; Diana et al., 2013; Rugg and Vilberg, 2013). Studies using multivariate methods showed that reactivation of higher-order sensory cortical areas carries information about the category of the recalled stimulus (Polyn et al., 2005; Lewis-Peacock and Postle, 2008; Johnson et al., 2009; Kuhl et al., 2011; Buchsbaum et al., 2012; Gordon et al., 2013). Notably, this content-specific reactivation has only been observed in higher-order sensory regions, raising the question of whether cortical reinstatement occurs selectively higher up in the sensory hierarchy. Thus, it remains unclear to what level of detail memory reinstatement can occur: what is the mnemonic resolution of neural reinstatement? Is only object-based, higher-order information subject to cortical reinstatement, or are lower-level features reinstated as well?

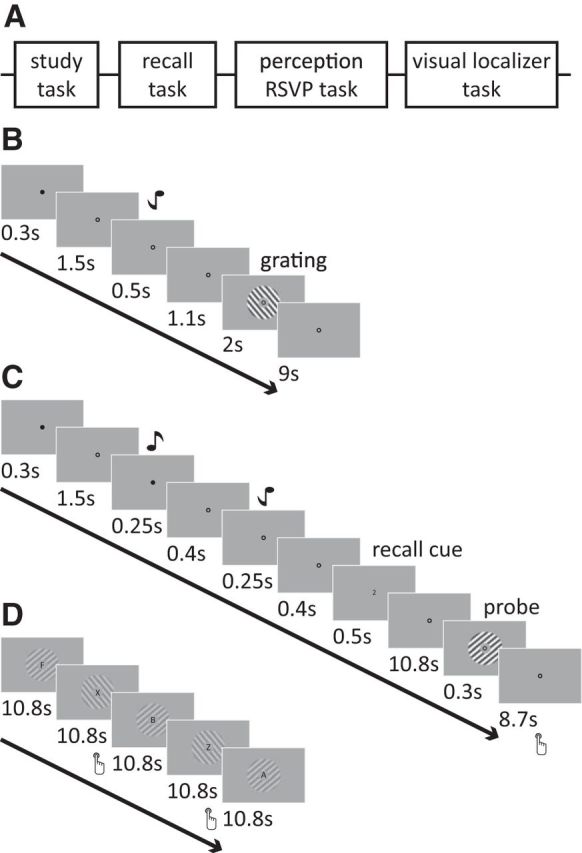

Here, we investigated whether the reinstatement phenomenon generalizes to early sensory cortex. By using basic visual and auditory stimuli, we carefully controlled our paradigm for potential confounding factors, such as nonspecific attentional or stimulus differences, and rigorously examined (1) whether activity patterns in early visual cortex during cued memory recall reflect stimulus-specific mnemonic representations, (2) whether these patterns share common representations with stimulus-driven activity patterns, and (3) what role the hippocampus plays in mnemonic reinstatement in early visual cortex. Participants first learned two audiovisual associations (specific tones paired with the orientation of visual gratings; Fig. 1B). Subsequently, they performed a cued recall task (Fig. 1C), in which they were cued with a tone and covertly recalled the associated grating. After this recall phase, a probe grating was presented, on which participants performed an orientation discrimination task. Crucially, no visual information was present during the covert recall phase, so any information about the orientation of the recalled stimulus must be attributable to retrieval of the associated grating. Participants additionally performed a separate task in which they passively viewed the same visual gratings while performing a rapid serial visual presentation (RSVP) letter task (Fig. 1D). We used standard retinotopic mapping procedures to delineate visual regions V1, V2, and V3 for each participant. We subsequently extracted the signal time courses from V1–V3 during the different tasks and applied a linear classification algorithm to predict the recalled and perceived gratings from the neural patterns in early visual cortex. To investigate the role of the hippocampus during reinstatement, we examined the relationship between classifier decision values and hippocampal signal strength.

Figure 1.

Schematic illustration of session and trial structure. A, Participants completed four experimental tasks in the study. First, they learned tone–grating associations, after which they performed six runs of a cued recall task. Subsequently, participants completed two runs of an RSVP task with unattended gratings and two runs of a visual localizer task. B, Participants learned to associate two tone–grating pairs. During the study task, a tone was presented, after which the associated grating was shown. The grating was presented for 2 s (250 ms on, 250 ms off, with randomized phase). C, During cued recall, a black cue at fixation indicated the start of the trial. Subsequently, two tones were presented briefly, followed by a recall cue (1 or 2, denoting the first or second tone, respectively) that indicated from which tone to recall the associated grating. Presenting both tones on every trial minimized sensory differences between the two recall conditions. After a 10.8 s (6 volumes) recall phase, a probe grating (slightly tilted with respect to the recalled stimulus) was presented. Participants indicated by button press whether the probe was rotated clockwise or counterclockwise with respect to the recalled stimulus. D, In the passive viewing task, participants performed a letter detection task at fixation, with task-irrelevant gratings presented in the background.

Materials and Methods

Participants.

Twelve healthy adult volunteers (aged 22–29 years; average, 26 years; four females) with normal or corrected-to-normal vision gave written informed consent and participated in the experiment. The study was approved by the local ethical review board (Arnhem-Nijmegen, The Netherlands).

Experimental paradigm.

Participants completed four experimental tasks in the study (Fig. 1A). First, they learned tone–grating associations, after which they performed six runs of a cued recall task. Between recall runs 3 and 4, associations were shown again. Subsequently, participants completed two runs of an RSVP task with unattended gratings and two runs of a visual localizer task. Before and after the experimental sessions, short resting-state scans were obtained. Participants were instructed to maintain fixation on the central bull's eye throughout all tasks.

Stimuli.

The stimuli were generated using MATLAB and the Psychophysics Toolbox (Brainard, 1997; Research Resource ID (RRID):rid_000041). Stimuli were displayed on a rear-projection screen using a luminance-calibrated Eiki projector (1024 × 768 resolution, 60 Hz refresh rate) against a uniform gray background. Pure tones (450 or 1000 Hz) were used as auditory stimuli, presented to both ears over MR-compatible in-ear headphones. Visual stimuli comprised sinusoidal annular gratings (55 or 145°; grating outer radius, 7.5°; inner radius, 1.875°; contrast, 20%; spatial frequency, 0.5 cycles/° with randomized spatial phase) that were presented around a central fixation point (radius, 0.25°). Contrast decreased linearly to zero over the outer 0.5° radius of the grating.
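The grating parameters above fully specify the stimulus geometry. The following NumPy sketch shows one way to render such an annular grating; it is not the authors' MATLAB/Psychophysics Toolbox code. The pixels-per-degree value, the orientation convention (bars measured counterclockwise from horizontal), the hard inner edge, and the 8-bit luminance mapping are assumptions made for illustration.

```python
import numpy as np

def annular_grating(orientation_deg, ppd=30.0, sf_cpd=0.5, contrast=0.20,
                    outer_deg=7.5, inner_deg=1.875, ramp_deg=0.5,
                    phase=None, rng=None):
    """Annular sinusoidal grating as a contrast image (0 = mean gray).

    orientation_deg: bar orientation, counterclockwise from horizontal (assumed
    convention). ppd: pixels per degree of visual angle (hypothetical value;
    it depends on the projector geometry and viewing distance).
    """
    if phase is None:  # randomized spatial phase, as in the experiment
        rng = np.random.default_rng() if rng is None else rng
        phase = rng.uniform(0.0, 2.0 * np.pi)
    half = int(np.ceil(outer_deg * ppd))
    coords = np.arange(-half, half + 1) / ppd          # pixel centres in degrees
    x, y = np.meshgrid(coords, -coords)                  # y increases upward
    r = np.hypot(x, y)
    theta = np.deg2rad(orientation_deg)
    # Luminance modulation runs perpendicular to the bars.
    u = -x * np.sin(theta) + y * np.cos(theta)
    carrier = np.sin(2.0 * np.pi * sf_cpd * u + phase)
    # Full contrast inside the annulus, linear fall-off to zero over the outer ramp_deg.
    envelope = np.clip((outer_deg - r) / ramp_deg, 0.0, 1.0)
    envelope[r < inner_deg] = 0.0                        # central hole (hard edge assumed)
    return contrast * envelope * carrier

# Map to 8-bit values around mid-gray (assumes a linearized, gamma-corrected display).
img = annular_grating(55.0, rng=np.random.default_rng(0))
pixels = np.round(127.5 * (1.0 + img)).astype(np.uint8)
```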

Learning task.

Participants learned two associations, each pairing a tone with an oriented grating. Using a counterbalanced design, participants were randomly assigned to one of the two possible pairings of the two tones (450 or 1000 Hz) with the two gratings (55° or 145°). Each trial started with the presentation of a black cue at fixation (300 ms on, 1500 ms off), followed by the tone (500 ms), an interstimulus interval (1100 ms), the associated grating (flashed on and off every 250 ms for 2 s), and ended with an intertrial interval (ITI) of 9 s (Fig. 1B). Each pair of stimuli was presented 10 times.
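As a quick check of the timing arithmetic, the event durations listed for the learning task sum to 14.4 s per trial, which is exactly 8 TRs of 1.8 s, and the 2 s flicker epoch contains four 250 ms "on" frames. A minimal sketch; the event names are hypothetical labels:

```python
# Event durations (s) for one learning trial, in the order given in the text.
LEARNING_TRIAL = [
    ("cue", 0.3), ("post_cue_blank", 1.5), ("tone", 0.5),
    ("isi", 1.1), ("grating", 2.0), ("iti", 9.0),
]
TR = 1.8  # s

def event_onsets(events):
    """Cumulative onset (s) of each event and the total trial duration."""
    t, onsets = 0.0, {}
    for name, dur in events:
        onsets[name] = t
        t += dur
    return onsets, t

def flash_onsets(duration=2.0, on=0.25, off=0.25):
    """Onsets (s) of the 'on' frames within a flickering grating epoch."""
    period = on + off
    return [k * period for k in range(int(round(duration / period)))]

onsets, trial_dur = event_onsets(LEARNING_TRIAL)
print(f"trial = {trial_dur:.1f} s = {trial_dur / TR:.1f} TRs")
print(flash_onsets())
```

With 10 presentations of each of the two pairs, the 20 learning trials would take 20 × 14.4 s = 288 s if run back to back.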

Cued recall task.

In six separate runs (of 16 trials each), participants performed a cued recall task. In this task, each trial started with a central black cue (300 ms on, 1500 ms off), followed by both tones, presented in counterbalanced order (each 250 ms on, 400 ms off), a recall cue consisting of either a “1” or “2” presented at fixation (500 ms), a 10.8 s recall phase (6 TRs, see below), a probe grating (300 ms, 20% contrast), and an ITI of 8.7–12.3 s (Fig. 1C). On each trial, participants performed a two-alternative forced-choice orientation discrimination task and reported via a button press whether the probe grating was rotated clockwise (middle finger of right hand) or counterclockwise (index finger of right hand) relative to the recalled grating (Harrison and Tong, 2009). The orientation difference between the recalled grating and the probe grating on each trial was set by an adaptive staircase procedure targeting 75% accuracy (Watson and Pelli, 1983), which also yielded estimates of each participant's orientation discrimination threshold. The staircase was seeded with an orientation difference of 10° and adapted dynamically based on the participant's accuracy. The maximum orientation difference between the probe and the recalled orientation was set at 20°.
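The staircase described above converges on 75% accuracy, starting at 10° and capped at 20°. The sketch below substitutes a simpler weighted up-down rule, which is a different technique from the Bayesian procedure cited (Watson and Pelli, 1983), to illustrate how such a track behaves. The 1° down-step, the 0.5° floor, and the simulated observer are hypothetical.

```python
import numpy as np

def staircase_update(delta, correct, step_down=1.0, target=0.75,
                     min_delta=0.5, max_delta=20.0):
    """One step of a weighted up-down staircase on the probe offset (deg).

    A correct response makes the next trial harder (smaller offset); an error
    makes it easier. With step_up / step_down = target / (1 - target), the
    track settles where P(correct) = target; for 75% the up-step is 3x larger.
    """
    step_up = step_down * target / (1.0 - target)
    delta = delta - step_down if correct else delta + step_up
    return float(min(max(delta, min_delta), max_delta))

def run_staircase(p_correct, n_trials=96, start=10.0, rng=None):
    """Simulate the track for an observer whose accuracy is p_correct(delta)."""
    rng = np.random.default_rng(0) if rng is None else rng
    delta, track = start, []
    for _ in range(n_trials):
        correct = rng.random() < p_correct(delta)
        track.append(delta)
        delta = staircase_update(delta, correct)
    return np.array(track)

def observer(d):
    """Hypothetical 2AFC observer (Weibull, threshold ~4 deg), for illustration only."""
    return 0.5 + 0.5 * (1.0 - np.exp(-(d / 4.0) ** 2))

track = run_staircase(observer)                        # 96 = 6 runs x 16 trials
threshold_estimate = track[len(track) // 2:].mean()    # average of the second half
```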

Passive viewing task.

After the main experimental tasks, participants performed two runs of an unattended gratings task, in which they reported whenever a “Z” or “X” appeared within a sequence of centrally presented letters (approximately two letters per second; mean ± SEM performance accuracy, 82.0 ± 4.0%), while task-irrelevant gratings around fixation flashed on and off every 250 ms during each 18 s stimulus block (Fig. 1D). There were 18 stimulus blocks per run. The gratings were identical to those used in the cued recall task but presented at lower contrast (4%).

Visual localizer task.

Spatially selective visual regions were identified using a visual localizer task that consisted of blocked presentations of flickering checkerboards (checker size, 0.5°; display rate, 10 Hz; edge, 0.5° linear contrast ramp) presented in the same location as the gratings in the cued recall task but within a slightly smaller annulus (outer radius, 6.5°). This smaller window was used to minimize selection of retinotopic regions corresponding to the edges of the grating stimuli. The checkerboard stimulus was presented in 10.8 s blocks, interleaved between blocks of fixation (seven blocks of fixation, six blocks of stimulation). Participants were instructed to press a button when the contrast of the fixation bull's eye changed (mean ± SEM performance accuracy, 97.8 ± 1.9%).

Eye tracking.

Eye position was successfully monitored in the MRI scanner for all participants using an MR-compatible eye-tracking system (60 Hz; SMI Systems). Analysis of the data confirmed that participants maintained stable fixation throughout the recording sessions. Mean ± SEM eye position deviated by 0.06 ± 0.02° of visual angle between stimulus blocks, and the stability of the eye position did not differ between the orientation conditions (all p > 0.5).

fMRI acquisition.

fMRI data were recorded on a 3 T MR scanner (TIM Trio; Siemens) with a 3D EPI sequence (64 slices; TR, 1.8 s; voxel size, 2 × 2 × 2 mm; TE, 25 ms; flip angle, 15°; field of view, 224 × 224 mm) and a 32-channel head coil. Using the AutoAlign Head software by Siemens, we ensured that the orientation of our field of view was tilted −25° from the transverse plane for each of our participants, resulting in the same tilt relative to the individual participant's head position. In addition, T1-weighted structural images (MPRAGE; voxel size, 1 × 1 × 1 mm; TR, 2.3 s) and a field map (gradient echo; voxel size, 3.5 × 3.5 × 2 mm; TR, 1.02 s) were acquired.

fMRI data preprocessing.

The Automatic Analysis Toolbox (https://github.com/rhodricusack/automaticanalysis/wiki) was used for fMRI data preprocessing, which uses core functions from SPM8 (http://www.fil.ion.ucl.ac.uk/spm/software/spm8/; RRID:nif-0000-00343) and FreeSurfer (http://surfer.nmr.mgh.harvard.edu/; RRID:nif-0000-00304), combined with custom scripts. Multivariate analyses were performed using functions of the Donders Machine Learning Toolbox (https://github.com/distrep/DMLT). Functional imaging data were initially motion corrected and coregistered using SPM functions. No spatial or temporal smoothing was performed. A high-pass filter of 128 s was used to remove slow signal drifts. The T1 structural scan was segmented using FreeSurfer functions.
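The 128 s high-pass filter can be pictured as regressing out a small set of slow cosine drifts. The sketch below builds such a discrete cosine transform (DCT) basis in the spirit of SPM's filter; it is an illustrative reimplementation under that assumption, not the Automatic Analysis Toolbox code.

```python
import numpy as np

def dct_highpass_basis(n_scans, tr, cutoff=128.0):
    """Orthonormal DCT regressors with periods of at least `cutoff` seconds.

    The constant (k = 0) term is excluded, so each voxel's mean is preserved.
    """
    order = int(np.floor(2.0 * n_scans * tr / cutoff + 1.0))
    n = np.arange(n_scans)
    cols = [np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * n + 1) * k / (2 * n_scans))
            for k in range(1, order)]
    return np.column_stack(cols) if cols else np.zeros((n_scans, 0))

def highpass(data, tr, cutoff=128.0):
    """Project slow drifts out of `data` (time x voxels, or a single time course)."""
    data = np.asarray(data, dtype=float)
    X0 = dct_highpass_basis(data.shape[0], tr, cutoff)
    return data - X0 @ (X0.T @ data)
```

For example, a 200-volume run at TR = 1.8 s (360 s) yields five drift regressors with periods from 720 s down to 144 s.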

Multivoxel pattern analyses.

For the cued recall task, fMRI data samples consisted of the activity of individual voxels averaged across time points 5.4–9 s (i.e., TRs 3–5) after the recall cue. We selected the start point of this time window to account for the hemodynamic lag of the BOLD response (4–6 s). We adopted a conservative strategy in selecting the endpoint of the analysis window at 9 s. This procedure prevented the possible inclusion of any BOLD activity associated with the presentation of the probe grating at 10.8 s (which, in principle, could begin to influence fMRI activity partway through the acquisition of TR 6 and beyond). All trials were included in the classification analyses. For classification analysis of individual fMRI time points, no temporal averaging was performed.

For the unattended gratings task, fMRI data samples were created by averaging activity over each 18 s stimulus block, after accounting for a 3-volume (5.4 s) lag in the BOLD response.
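The two sampling schemes can be sketched as follows. This assumes event onsets are given in seconds relative to the first volume and fall on volume boundaries. It also counts lags as volumes after the cue, so lags 3–5 correspond to the time points 5.4, 7.2, and 9.0 s, as in the Results. The function names are hypothetical.

```python
import numpy as np

TR = 1.8  # s

def recall_samples(data, cue_onsets_s, first=3, last=5):
    """Average volumes first..last (inclusive) after each recall cue.

    data: (n_volumes, n_voxels) for one run. Lags 3..5 sample the time points
    5.4, 7.2 and 9.0 s after the cue, ending before the probe at 10.8 s.
    """
    out = []
    for onset in cue_onsets_s:
        v0 = int(round(onset / TR))
        out.append(data[v0 + first: v0 + last + 1].mean(axis=0))
    return np.vstack(out)

def block_samples(data, block_onsets_s, block_dur_s=18.0, lag_vols=3):
    """Average each passive viewing block after shifting it by the BOLD lag."""
    n_vols = int(round(block_dur_s / TR))   # 18 s / 1.8 s = 10 volumes
    out = []
    for onset in block_onsets_s:
        v0 = int(round(onset / TR)) + lag_vols
        out.append(data[v0: v0 + n_vols].mean(axis=0))
    return np.vstack(out)
```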

All fMRI data were transformed from MRI signal intensity to units of percentage signal change, calculated relative to the average level of activity for each voxel across all samples within a given run. In addition, the data were z-normalized across voxels. All fMRI data samples for a given experiment were labeled according to the corresponding orientation and served as input to the orientation classifier. On average, V1 included 396 ± 33 (mean ± SEM), V2 included 236 ± 23, V3 included 166 ± 19, and V1–V3 included 797 ± 60 voxels.
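A minimal sketch of the two normalization steps, assuming that "z-normalized across voxels" means that each sample (one activity pattern) is scaled to zero mean and unit variance across its voxels:

```python
import numpy as np

def to_percent_signal_change(samples):
    """samples: (n_samples, n_voxels) from one run -> % change from each voxel's run mean."""
    mean = samples.mean(axis=0, keepdims=True)
    return 100.0 * (samples - mean) / mean

def zscore_across_voxels(samples):
    """Z-normalize every sample (row) across voxels: zero mean, unit SD per pattern."""
    mu = samples.mean(axis=1, keepdims=True)
    sd = samples.std(axis=1, keepdims=True)
    return (samples - mu) / sd

def normalize_run(samples):
    """Percent signal change within the run, then per-pattern z-scoring."""
    return zscore_across_voxels(to_percent_signal_change(np.asarray(samples, float)))
```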

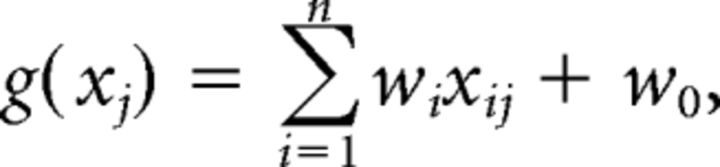

Linear support vector machine.

A linear support vector machine (SVM) classifier was used to obtain a linear discriminant function distinguishing between the two orientations $\theta_1$ and $\theta_2$:

$$g(\mathbf{x}_j) = \sum_{i=1}^{n} w_i x_i + w_0,$$

where $\mathbf{x}_j$ is a vector specifying the BOLD amplitude of all $n$ voxels on block $j$, $x_i$ and $w_i$ are the amplitude of voxel $i$ and its weight, respectively, and $w_0$ is the overall bias. The classifier solved this function so that, for a set of training data, the following relationship was satisfied:

$$\begin{cases} g(\mathbf{x}_j) > 0 & \text{if } \mathbf{x}_j \in \theta_1,\\ g(\mathbf{x}_j) < 0 & \text{if } \mathbf{x}_j \in \theta_2. \end{cases}$$

Patterns in the test data were assigned to orientation $\theta_1$ when the decision value $g(\mathbf{x}_j)$ was >0 and to orientation $\theta_2$ otherwise. The size of the deviation of the decision value from 0 was taken as an index of classification strength. Cross-validation was performed in a leave-one-run-out procedure for all classification analyses. Performance over test iterations was averaged and tested against chance level with paired-sample t tests, with a threshold of p < 0.05.
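To make the decision function and the leave-one-run-out procedure concrete, here is a self-contained NumPy sketch. The SVM is fit in the primal by plain subgradient descent on the hinge loss; this solver, C, the learning rate, and the iteration count are illustrative choices, not those of the Donders Machine Learning Toolbox. The sign of each out-of-sample decision value gives the predicted orientation, and its magnitude gives the classification strength.

```python
import numpy as np

def train_linear_svm(X, y, C=1.0, lr=0.01, n_iter=2000):
    """Soft-margin linear SVM in the primal, fit by full-batch subgradient descent.

    Minimizes 0.5*||w||^2 + C * mean(max(0, 1 - y * (X @ w + w0))), y in {+1, -1}.
    """
    n, d = X.shape
    w, w0 = np.zeros(d), 0.0
    for _ in range(n_iter):
        active = y * (X @ w + w0) < 1          # samples violating the margin
        ya, Xa = y[active], X[active]
        w -= lr * (w - C * (ya[:, None] * Xa).sum(axis=0) / n)
        w0 -= lr * (-C * ya.sum() / n)
    return w, w0

def decision_value(X, w, w0):
    """g(x_j) = sum_i w_i x_i + w0 for every row x_j of X."""
    return X @ w + w0

def leave_one_run_out(X, y, runs, **svm_kw):
    """Mean test accuracy over folds and the out-of-sample decision value of every sample."""
    g_all, accs = np.empty(len(y)), []
    for r in np.unique(runs):
        test = runs == r
        w, w0 = train_linear_svm(X[~test], y[~test], **svm_kw)
        g = decision_value(X[test], w, w0)
        g_all[test] = g
        accs.append(np.mean(np.where(g > 0, 1, -1) == y[test]))
    return float(np.mean(accs)), g_all
```

For a generalization classifier, one would instead call `train_linear_svm` on all samples of one task and `decision_value` on the samples of the other.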

Univariate parametric recall analysis.

The functional data from the recall runs for each participant were also modeled in a general linear model (GLM). Four task regressors were included: (1) one representing the trial cues; (2) one for the audio cues; (3) one for the recall cue and recall phase; and (4) one representing the probe presentations. These regressors were convolved with a canonical hemodynamic response function (HRF), as well as its temporal and dispersion derivatives (Friston et al., 1998). Six movement parameters and the time courses from the white matter and lateral ventricles were modeled as nuisance regressors. In addition, the recall phase regressor was parametrically modulated by an additional regressor. This parametric modulator was constructed from the absolute trial-by-trial decision values of the SVM classifier that was trained on the perception task and tested on the cued recall task, because this generalization classifier was least biased by possible attentional effects during recall. The reported parametric effects were thresholded at p < 0.05, cluster-corrected using threshold-free cluster enhancement (Smith and Nichols, 2009).
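The parametric modulator can be illustrated with a short sketch. Each recall phase contributes a boxcar whose height is that trial's mean-centered absolute decision value, and the result is convolved with a double-gamma HRF. The HRF shape mimics SPM's canonical defaults and the mean-centering follows SPM's usual handling of parametric modulators; both are assumptions here. The regressor is sampled at the start of each TR (a simplification), temporal and dispersion derivatives are omitted, and the onsets, durations, and decision values in the usage lines are hypothetical.

```python
import numpy as np
from math import gamma

def canonical_hrf(dt, length=32.0):
    """Double-gamma HRF (gamma shapes 6 and 16, undershoot ratio 1/6), unit sum."""
    t = np.arange(0.0, length, dt)

    def gpdf(shape):
        return t ** (shape - 1.0) * np.exp(-t) / gamma(shape)

    h = gpdf(6.0) - gpdf(16.0) / 6.0
    return h / h.sum()

def parametric_regressor(onsets, durations, modulator, n_scans, tr=1.8, res=16):
    """Recall-phase boxcars scaled by the mean-centered modulator, convolved with the HRF.

    Built on a fine grid of tr/res seconds, then sampled at the start of every TR.
    """
    dt = tr / res
    n_fine = n_scans * res
    amps = np.asarray(modulator, dtype=float)
    amps = amps - amps.mean()
    u = np.zeros(n_fine)
    for onset, dur, amp in zip(onsets, durations, amps):
        u[int(round(onset / dt)):int(round((onset + dur) / dt))] += amp
    return np.convolve(u, canonical_hrf(dt))[:n_fine][::res]

# Hypothetical decision values of the PR classifier for four recall trials.
dv = np.array([0.8, -0.1, 1.5, -0.6])
pm = parametric_regressor([10.0, 40.0, 70.0, 100.0], [10.8] * 4, np.abs(dv), n_scans=100)
```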

Regions of interest.

FreeSurfer was used to delineate the visual areas using standard retinotopic mapping procedures (Sereno et al., 1995; DeYoe et al., 1996; Engel et al., 1997; Wandell et al., 2007). Retinotopy data were obtained during scan sessions on a separate day. These visual regions of interest (ROIs) were defined on the reconstructed cortical surface for V1 and extrastriate areas V2 and V3, separately for each hemisphere (Sereno et al., 1995; Engel et al., 1997). Within each retinotopic ROI, we identified the stimulus-responsive voxels according to their response to the checkerboard stimulus in the independent functional localizer task. Voxels in the foveal confluence were not selected. For the V1–V3 ROI, we combined the stimulus-responsive voxels from the separate visual ROIs.

The functional data from the localizer runs for each participant were modeled using a block-design approach within a GLM. A regressor representing the visual checkerboard stimulation blocks was created and convolved with the HRF. The same filtering kernel and nuisance regressors were used as described above. The contrast “stimulation” versus “fixation” was thresholded at p < 0.05 (familywise error corrected).
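The localizer contrast and voxel selection can be sketched as an ordinary least-squares GLM followed by thresholding within each retinotopic ROI. The familywise-error threshold itself is not computed here: `t_thresh` is a placeholder for the value that the FWE correction would supply, and the design matrix (HRF-convolved block regressor, nuisance regressors, constant) is assumed to be built elsewhere.

```python
import numpy as np

def ols_tstat(Y, X, c):
    """t statistic of contrast c for every voxel; Y: (n_scans, n_voxels), X: (n_scans, p)."""
    beta, _, rank, _ = np.linalg.lstsq(X, Y, rcond=None)
    resid = Y - X @ beta
    dof = X.shape[0] - rank
    sigma2 = (resid ** 2).sum(axis=0) / dof
    c = np.asarray(c, dtype=float)
    var_c = c @ np.linalg.pinv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(sigma2 * var_c), dof

def select_voxels(tmap, roi_mask, t_thresh):
    """Indices of ROI voxels whose localizer t exceeds t_thresh, strongest first."""
    idx = np.flatnonzero(roi_mask & (tmap > t_thresh))
    return idx[np.argsort(tmap[idx])[::-1]]
```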

Results

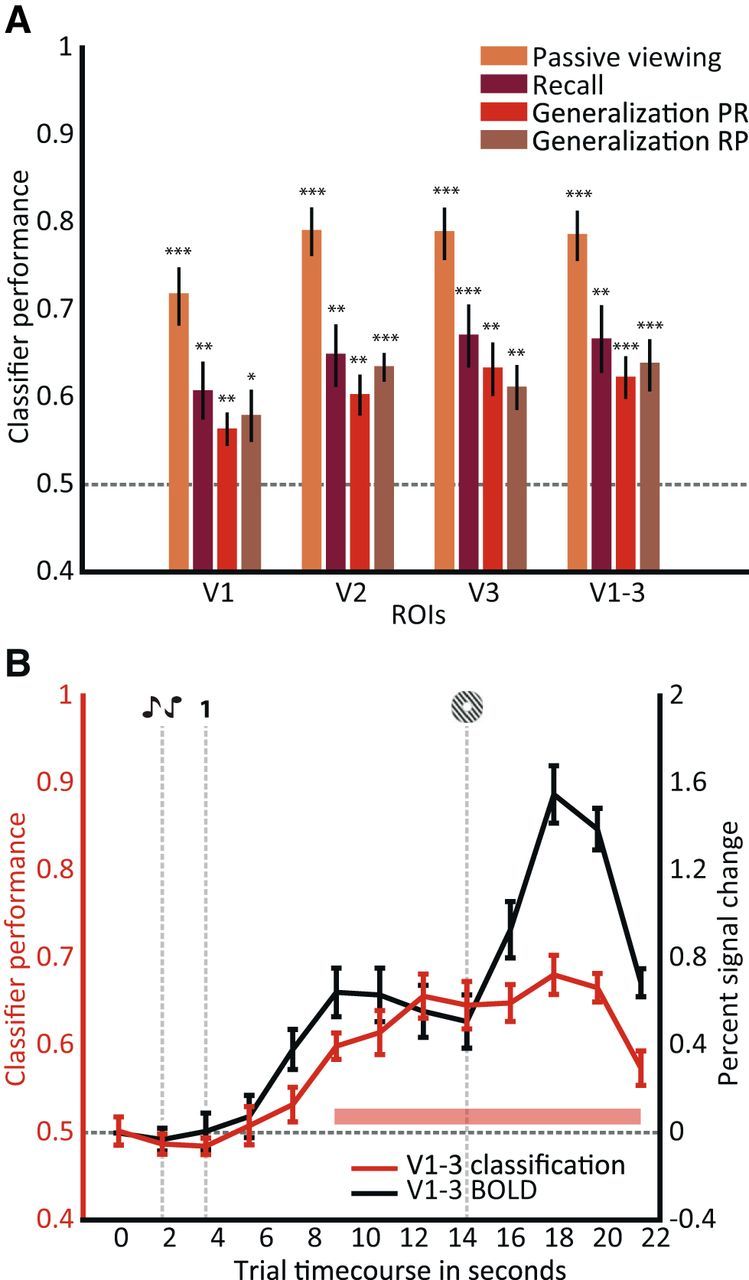

First, we asked whether we could predict the recalled orientation from voxel patterns in visual cortex during the recall phase. A linear SVM classifier was trained and tested on the neural patterns in early visual regions during recall in a leave-one-run-out cross-validation procedure. The time window from 5.4 to 9 s (volumes 3–5) of each recall phase was used for classification. The start of this time window was chosen to allow peak BOLD activity to fully emerge; a conservative endpoint of 9 s was used to exclude any potential activity elicited by the probe grating (at 10.8 s, or 6 volumes, after the recall cue). As illustrated in Figure 2A (purple bars), we could reliably decode the recalled orientation from activity patterns in retinotopically defined early visual areas V1–V3 (decoding accuracy, 67%; chance-level accuracy, 50%; t(11) = 4.29; p < 10⁻³). Note that these patterns in visual cortex could not have been induced by the auditory cues: both tones were presented on every trial, so the auditory input did not differ between the two recall conditions. Moreover, no visual stimulus was presented immediately before or during the recall phases; thus, the information about the decoded stimulus is likely attributable to reinstatement based on memory retrieval.
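The group-level test against chance, reported here as t(11), amounts to a one-sample t statistic on the 12 per-participant accuracies versus 0.5, which is equivalent to a paired test against a constant chance value. A minimal sketch; the p-value could then be obtained from a t distribution, for example via `scipy.stats.ttest_1samp(accuracies, 0.5)`.

```python
import numpy as np

def one_sample_t(accuracies, chance=0.5):
    """t statistic and degrees of freedom for mean accuracy vs. chance across participants."""
    a = np.asarray(accuracies, dtype=float)
    n = a.size
    t = (a.mean() - chance) / (a.std(ddof=1) / np.sqrt(n))
    return float(t), n - 1
```

With 12 participants, the returned degrees of freedom are 11, matching the t(11) values reported in this section.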

Figure 2.

SVM classifier performance for different experimental sessions and visual ROIs. A, For each of the delineated visual ROIs (V1, V2, and V3), as well as a combined ROI (V1–V3), decoding accuracies were calculated over 5.4–9 s (TR 3–TR 5) after onset of the recall phase. Performance of all four classifiers was significantly above chance level (50%, gray dotted line; *p < 0.05; **p < 0.01; ***p < 0.001). The passive viewing classifier was trained and tested on data from the passive viewing task, the cued recall classifier was trained and tested on data from the cued recall task, the generalization PR classifier was trained on passive viewing (P) data and tested on recall (R) data, and the generalization RP classifier was trained on cued recall data and tested on passive viewing data. Error bars indicate ±1 SEM. B, In red (left axis), performance of the generalization PR classifier is depicted for individual fMRI time points. Data for the combined visual ROI (V1–V3) are shown. The pink bar denotes significant decoding performance at p < 0.001. In black (right axis), the percentage signal change across voxels from V1–V3 during the trial is shown. Chance level was 50% (horizontal gray dotted line). The vertical gray dotted lines indicate trial events. Error bars indicate ±1 SEM.

Successful classification of the recalled orientations shows that their activity patterns are distinguishable in early visual cortex. However, this does not necessarily mean that these patterns are similar to those during encoding or perception: reinstatement and perception could be represented differently in early sensory cortex (Nyberg et al., 2000; Wheeler et al., 2000). Cortical reinstatement implies similarity between activity patterns during encoding and retrieval. Yet even during encoding, the memory might be represented in an abstract format shaped by attentional processes, making it difficult to dissociate memory from attention (Vicente-Grabovetsky et al., 2014). Our approach allowed us to test the hypothesis that top-down, retrieval-related patterns in early visual cortex resemble bottom-up, passive viewing-related patterns.

To probe these bottom-up activity patterns, our participants performed a letter-detection task at fixation while task-irrelevant, low-contrast gratings were presented around the fixation bull's eye. The same grating orientations were used as in the recall task. To test classification on this passive viewing task, a classifier was trained and tested on the neural patterns for the two orientations during the passive viewing experiment in a leave-one-run-out procedure. This classifier performed well above chance in regions V1–V3 (t(11) = 9.87; p = 8.4 × 10⁻⁷; Fig. 2A, yellow bars), consistent with previous work (Kamitani and Tong, 2005; Jehee et al., 2011, 2012). To investigate whether the orientation-selective responses for recalled gratings were similar to stimulus-driven activity, a third classifier was trained on activity patterns generated by passive viewing (P) and tested on predicting the recalled orientation from neural patterns during cued recall (R). Performance of this generalization PR classifier was significantly above chance in regions V1–V3 (t(11) = 5.01; p = 4.0 × 10⁻⁴; Fig. 2A, red bars). The fact that classification performance generalizes across these two tasks suggests that early visual cortex shares neural representations for stimulus-driven activity and cued recall. Finally, a classifier trained on data from the cued recall task and tested on passive viewing data (generalization RP; Fig. 2A, brown bars) also performed significantly above chance (t(11) = 4.57; p = 8.1 × 10⁻⁴). Note that this generalization of classification performance across tasks also suggests that our above-chance classification is not attributable to a verbally mediated encoding/retrieval strategy, because the generalization PR classifier was trained on unattended gratings.
A repeated-measures analysis across visual areas and the four classifiers (passive viewing, PP; cued recall, RR; PR; and RP) yielded a main effect of classifier (F(3) = 61.73; p = 2.6 × 10⁻²⁷) but not of visual area (F(3) = 2.45; p = 0.07), and no interaction (F(3) = 0.5; p = 0.87).
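The logic of the cross-task (PR and RP) tests is to fit a decision function on one task and evaluate it on the other. The sketch below uses a ridge-regularized least-squares linear discriminant as a lightweight stand-in for the SVM (a different linear classifier, swapped in for brevity). The regularization strength, and the use of all training-task samples without further cross-validation, are assumptions.

```python
import numpy as np

def fit_linear(X, y, lam=10.0):
    """Ridge least-squares linear discriminant g(x) = w.x + w0 (stand-in for the SVM)."""
    Xb = np.column_stack([X, np.ones(len(X))])
    reg = lam * np.eye(Xb.shape[1])
    reg[-1, -1] = 0.0                       # leave the bias unpenalized
    wb = np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ y)
    return wb[:-1], wb[-1]

def cross_task_accuracy(X_train, y_train, X_test, y_test, lam=10.0):
    """Train on one task (e.g. passive viewing), test on another (e.g. cued recall)."""
    w, w0 = fit_linear(X_train, y_train, lam)
    g = X_test @ w + w0                     # decision values on the other task
    return float(np.mean(np.where(g > 0, 1, -1) == y_test)), g
```

Swapping the roles of the two tasks gives the RP direction; labels are coded as +1 and −1 for the two orientations.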

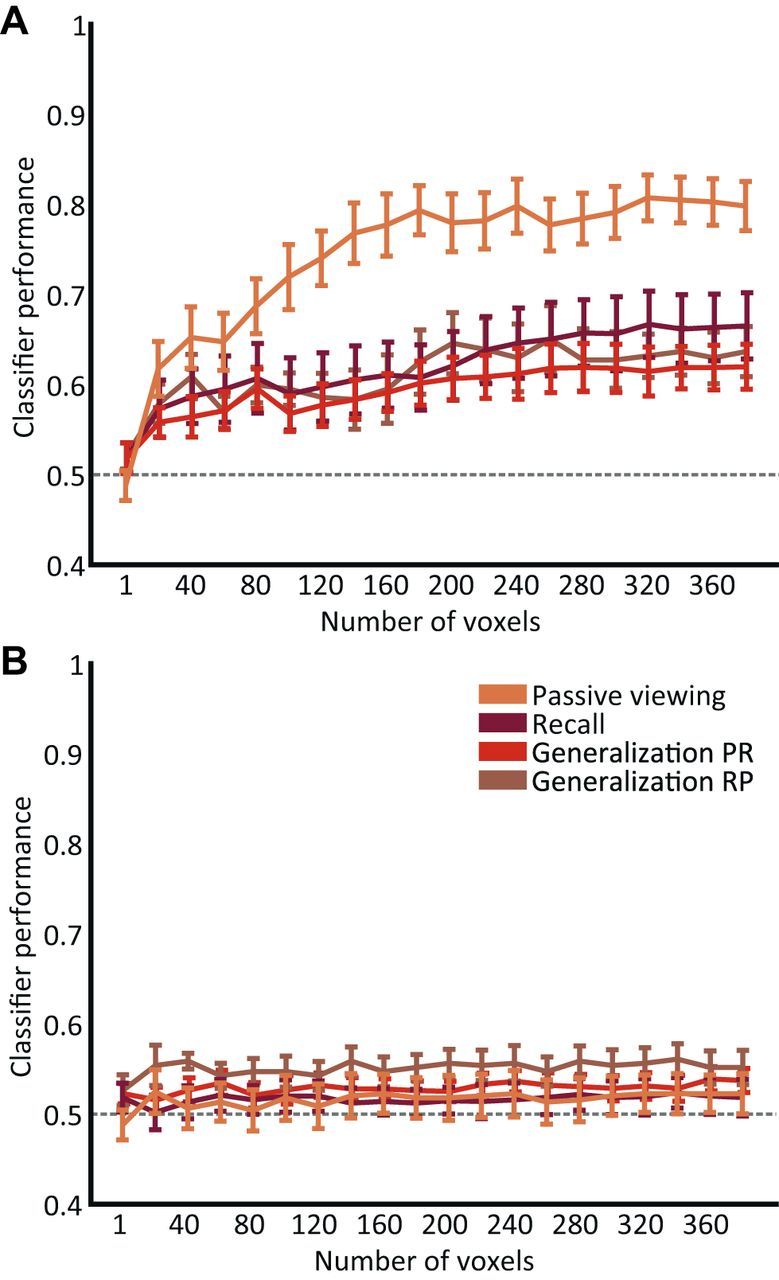

Importantly, classifier performance did not depend critically on the number of visual cortical voxels used: performance rose gradually with voxel number and approached asymptote at ∼200–250 voxels, indicating that the effects were robust and stable (Fig. 3A). To investigate whether global differences in response amplitude elicited by the two orientations could account for the above-chance classification observed in early visual cortex, we trained and tested the four classifiers (passive viewing, cued recall, and the two generalization classifiers) on the average response amplitude of the originally selected voxels. None of the four classifiers achieved above-chance performance (t < 2; p > 0.05) on the averaged response (Fig. 3B), indicating that global BOLD differences cannot account for the observed classification performance in our analyses.
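The two control analyses in Figure 3 can be sketched as feature-construction steps: keep the k voxels with the strongest localizer response (panel A), or collapse them into a single mean amplitude (panel B) before classification. The toy data below are hypothetical and show why the univariate control is informative: two orientations can evoke clearly different voxel patterns with identical mean amplitude.

```python
import numpy as np

def top_k_voxels(X, localizer_t, k):
    """Keep the k voxels (columns of X) with the largest localizer t values."""
    order = np.argsort(localizer_t)[::-1]
    return X[:, order[:k]]

def mean_amplitude(X, localizer_t, k):
    """Univariate control: collapse the top-k voxels into one mean-amplitude feature."""
    return top_k_voxels(X, localizer_t, k).mean(axis=1, keepdims=True)

# Toy illustration: two orientations evoke opposite, zero-mean voxel patterns.
rng = np.random.default_rng(0)
pattern = rng.normal(size=200)
pattern -= pattern.mean()                   # no net amplitude difference across voxels
y = np.tile([1.0, -1.0], 8)
X = y[:, None] * pattern                    # samples x voxels, noise-free for clarity
loc_t = np.ones(200)                        # equal localizer responses: keep all voxels
multivariate = top_k_voxels(X, loc_t, 200)  # patterns differ between the classes
univariate = mean_amplitude(X, loc_t, 200)  # ~0 for every sample: nothing to classify
```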

Figure 3.

Effect of voxel number on classifier performance. A, To investigate the stability of classifier performance, the classifiers (passive viewing, cued recall, and generalization) were applied to different numbers of voxels. The voxels were sorted according to their response to the localizer stimulus. All four classifiers were trained and tested in a leave-one-run-out cross-validation procedure. Classifier performance gradually improved as a function of voxel number for each classifier, reaching near-asymptotic performance at ∼200–250 voxels. Error bars indicate ±1 SEM. B, For different numbers of voxels (sorted according to their response to the localizer stimulus), the responses of the selected voxels were averaged to obtain the mean response amplitude of V1–V3. This average response was used as input for the classifiers. All four classifiers were trained and tested in a leave-one-run-out cross-validation procedure. The absence of above-chance classifier performance indicates that global BOLD differences could not account for the classifier performance obtained in V1–V3 (see Fig. 2).

We subsequently looked at individual volumes during the recall phase to assess how classification performance unfolds over the course of each trial (Fig. 2B, red line and left axis). Classification was at chance level during presentation of the audio cues and the recall cue. From approximately 2–3 volumes (3.6–5.4 s) after the recall cue onward, the classifier predicted the recalled grating with above-chance accuracy. Classifier performance continued to increase after the presentation of the probe grating. This is expected because, on every trial, the orientation of the probe grating deviated only slightly from the recalled orientation: the probe grating, albeit slightly rotated, adds orientation information to the activity pattern that is used for classification. After participants completed the orientation discrimination judgment, classification accuracy dropped back to chance level. The overall BOLD amplitude in early visual cortex followed a time course similar to that of classification performance (Fig. 2B, black line and right axis). Note, however, that there was no overall BOLD difference between the two recalled orientations during the phase between recall cue and probe grating.
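The time-resolved analysis in Figure 2B can be sketched as slicing single volumes at fixed lags from each recall cue and scoring an already-trained classifier lag by lag. The cue volume indices, the lag range, and the `predict` callable are hypothetical inputs; in the analysis described here, the classifier would be the generalization PR classifier trained on passive viewing data.

```python
import numpy as np

def epoch_by_lag(data, cue_vols, lags):
    """Single volumes around each recall cue -> array (n_lags, n_trials, n_voxels).

    data: (n_volumes, n_voxels); cue_vols: volume index of each recall cue.
    """
    cue_vols = np.asarray(cue_vols)
    return np.stack([data[cue_vols + lag] for lag in lags])

def accuracy_time_course(epochs, y, predict):
    """Accuracy of a fixed, already-trained classifier at each lag (no temporal averaging)."""
    return np.array([np.mean(predict(X) == y) for X in epochs])
```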

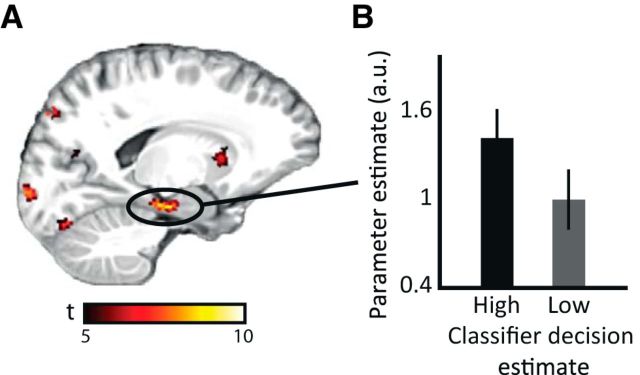

The cortical reinstatement hypothesis predicts that the hippocampus mediates reinstatement in neocortex (Marr, 1971; Tulving and Thomson, 1973). Therefore, we repeated the above classification analyses within a hippocampal mask. None of the classifiers reached above-chance performance. This is not surprising, because the hippocampus is unlikely to represent the associated orientation itself but rather an index of the mnemonic association in cortex (Marr, 1971). We therefore asked whether hippocampal activation was related to the reinstatement we observed in visual cortex. To investigate these putative hippocampal–cortical interactions, we obtained the decision value of the generalization PR classifier for each recall trial. The decision value for a given trial indicates how similar the neural pattern in visual cortex during that recall trial was to the passive viewing patterns; in other words, it reflects the strength of reinstatement. We performed a GLM analysis on the cued recall data, with the absolute trial-by-trial decision value of the visual cortex SVM classifier as a parametric modulator of the recall-related regressor. We found that BOLD fluctuations in left hippocampus, extending into left entorhinal cortex, covaried with cortical reinstatement accuracy in early visual cortex. This finding supports the view that hippocampal activity signals, on a trial-by-trial level, stimulus-specific cortical reinstatement accuracy (as indexed by the classifier decision estimate) in early visual cortex (Fig. 4A; for the full list of regions that showed effects for this parametric modulation, see Table 1). To illustrate this effect, the bars in Figure 4B show that hippocampal activity is higher for trials with the highest absolute classifier decision estimates in V1–V3 than for trials with lower V1–V3 decision estimates (trials split in half according to their absolute decision estimate).
The observed effects were corrected for multiple comparisons (p < 0.05, using threshold-free cluster enhancement). The observed covariation of hippocampal activity with classifier decision estimates suggests crosstalk between hippocampus and visual cortex during cued memory recall.
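The split shown in Figure 4B can be sketched as a median split on the absolute decision values, comparing mean hippocampal responses between the two halves. The function name and inputs (one decision value and one hippocampal response estimate per recall trial) are hypothetical; the statistical inference reported above rests on the voxelwise parametric GLM, not on this split.

```python
import numpy as np

def median_split_means(decision_values, hippo_signal):
    """Mean hippocampal response for trials with high vs. low |decision value|."""
    strength = np.abs(np.asarray(decision_values, dtype=float))
    hippo = np.asarray(hippo_signal, dtype=float)
    order = np.argsort(strength)            # trials from weakest to strongest reinstatement
    half = len(order) // 2
    low, high = order[:half], order[half:]
    return hippo[high].mean(), hippo[low].mean()
```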

Figure 4.

Hippocampal activity correlates with trial-by-trial classifier decision value. A, SPM t map of the parametric modulation of classifier decision value overlaid on a structural template. The map is thresholded at p < 0.05, cluster-corrected, with cluster size of >50 voxels. B, Bar plots show effect size for the hippocampal peak voxel in A, binned by absolute classifier decision value in visual cortex (see Materials and Methods). Error bars indicate ± 1 SEM.

Table 1.

Summary of regions that show a parametric modulation of decision estimate

| Region | MNI x | MNI y | MNI z | z statistic |
|---|---|---|---|---|
| R lateral frontal cortex | 36 | 28 | 19 | 4.55 |
| R insular cortex | 31 | 18 | 5 | 5.40 |
| L insular cortex | −28 | 26 | 5 | 5.52 |
| Posterior cingulate cortex | 4 | 8 | 46 | 5.98 |
| R inferior frontal gyrus | 41 | 7 | 28 | 4.73 |
| L caudate nucleus | −13 | 14 | 3 | 5.14 |
| L hippocampus | −12 | −18 | −14 | 4.19 |
| R thalamus | 10 | −15 | 12 | 4.34 |
| L thalamus | −9 | −18 | 12 | 4.87 |
| L cerebellum | −22 | −41 | −36 | 3.74 |
| Striate cortex | −6 | −94 | −2 | 4.12 |

MNI coordinates and z statistics are shown (from anterior to posterior) for all regions for which activity was significantly modulated by the trial-by-trial classifier decision value (p < 0.05, cluster-corrected; cluster size, >50 voxels) during the recall phase. L, Left; R, right.

Discussion

In this study, we investigated cortical reinstatement in early visual cortex. We used a multivariate analysis approach to assess whether encoded stimulus-specific patterns are reinstated during cued recall, compared the cortical patterns during stimulus reinstatement with those during passive viewing of the stimuli, and related the strength of reinstatement to hippocampal activity. We observed cortical reinstatement of the mnemonic representation of tone–grating associations in early visual cortex. This reinstatement was association- and feature-specific: the orientation of the recalled grating could reliably be predicted from the neural pattern across voxels in visual cortex. In addition, the neural activity patterns during stimulus recall resembled those elicited by physically presented stimuli, indicating shared representations between cued recall and perception. Furthermore, we found that hippocampal activity covaries with the strength of reactivation, consistent with the hypothesis that the hippocampus mediates cortical reinstatement (Marr, 1971; Tulving and Thomson, 1973).

Early neuroimaging work on cortical reinstatement (Nyberg et al., 2000; Wheeler et al., 2000) showed that, during cue word presentation, retrieval of associated pictures and sounds elicited activity in higher-order visual and auditory regions but not in primary sensory cortex. The authors interpreted this as evidence for the dissimilarity of encoding and retrieval mechanisms (Nyberg et al., 2000; Wheeler et al., 2000). We argue that reinstatement involves different sensory regions depending on the nature of the task: if the task requires participants to recall a higher-order sensory representation (as in the previous work: pictures and sounds), those areas reinstate the representation during recall. However, when participants have to recall more detailed representations (as in our paradigm: an orientation), early sensory cortex supports the reinstatement. Our results suggest that reinstatement generalizes across the entire breadth of the sensory hierarchy and indicate that retrieval can entail detailed sensory aspects of the memory representation.

We predicted that the reinstated representations would be association and stimulus specific: the representation should reflect the specific recalled stimulus rather than, for instance, its stimulus category. Most studies on cortical reinstatement pooled recalled stimuli of one category (e.g., faces or objects) to assess category-specific reinstatement (Polyn et al., 2005; Lewis-Peacock and Postle, 2008; Johnson et al., 2009; Gordon et al., 2013; Liang et al., 2013). Some studies have shown evidence for memory-specific episodic reinstatement of different videos or pictures in the medial temporal lobe, specifically in the hippocampus (Chadwick et al., 2010) and parahippocampal cortex (Staresina et al., 2012). Here, we extend these findings by showing that early visual cortex can also support stimulus-specific reinstatement, providing evidence for the predicted specificity of reinstatement even at the lowest levels of the sensory hierarchy.

The core prediction of the cortical reinstatement hypothesis is that the representation reinstated during recall resembles the representation during encoding. However, similarity between encoding and recall does not necessarily indicate that mnemonic representations built during encoding are reinstated at retrieval: factors such as attention or executive strategy could interact with memory at encoding (Chun and Turk-Browne, 2007), at retrieval (Vicente-Grabovetsky et al., 2014), or at both stages (Summerfield et al., 2006). With our well-controlled design, we could rigorously test a stringent reinstatement hypothesis, namely whether stimulus recall resembles passive, unattended perception of the stimulus. We show that the reinstated patterns in early visual cortex are indeed similar to patterns driven by unattended stimuli. This indicates a common representation of bottom-up and top-down signals in these cortical areas. Note that this generalization can rule out certain confounds, such as attention, that might otherwise explain the above-chance classification performance: because the classifier was trained on a task in which participants were not actively attending to the oriented gratings, it is not sensitive to attention-related biases in the cued recall data.
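The cross-condition generalization test described above (train a classifier on patterns from passive viewing, then test it on patterns from cued recall) can be illustrated with a minimal Python sketch. Everything in it is an assumption for illustration: the voxel patterns are synthetic, recall is modeled as a weaker copy of the perceptual pattern plus noise, the two orientation labels (45 and 135 degrees) are hypothetical, and a correlation-based nearest-centroid classifier stands in for whatever classifier the actual analysis used. The function names are hypothetical as well.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def train_centroids(patterns, labels):
    """Average the training patterns (here: passive viewing) per orientation label."""
    sums, counts = {}, {}
    for pattern, label in zip(patterns, labels):
        if label not in sums:
            sums[label] = [0.0] * len(pattern)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], pattern)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(centroids, pattern):
    """Return the label whose centroid correlates most strongly with the pattern."""
    return max(centroids, key=lambda label: pearson(centroids[label], pattern))


def cross_decode_accuracy(train_x, train_y, test_x, test_y):
    """Train on one condition (passive viewing) and test on another (cued recall)."""
    centroids = train_centroids(train_x, train_y)
    hits = sum(classify(centroids, p) == y for p, y in zip(test_x, test_y))
    return hits / len(test_y)


def simulate(templates, labels, gain, noise, rng):
    """Hypothetical data: gain-scaled orientation template plus Gaussian voxel noise."""
    return [[gain * t + rng.gauss(0, noise) for t in templates[label]] for label in labels]


if __name__ == "__main__":
    rng = random.Random(0)
    n_voxels = 50
    templates = {ori: [rng.gauss(0, 1) for _ in range(n_voxels)] for ori in (45, 135)}
    labels = [45, 135] * 40
    passive_x = simulate(templates, labels, gain=1.0, noise=1.0, rng=rng)
    # Recall is modeled (by assumption) as a weaker copy of the perceptual pattern.
    recall_x = simulate(templates, labels, gain=0.3, noise=1.0, rng=rng)
    acc = cross_decode_accuracy(passive_x, labels, recall_x, labels)
    print(f"cross-decoding accuracy (chance = 0.50): {acc:.2f}")
```

Because the centroids come only from the passive-viewing condition, above-chance accuracy on the recall patterns requires that the two conditions share an orientation-specific pattern. A correlation-based rule is used here only because it is simple and insensitive to overall response amplitude; a linear classifier would be an equally plausible stand-in.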

Our findings dovetail with previous neuroimaging work showing that voxel patterns in visual cortex are predictive of bottom-up visual processes, such as specific visual stimulus properties (Kamitani and Tong, 2005) and unconscious perception of a stimulus (Haynes and Rees, 2005), and with the view that visual cortex is also involved in complex, top-down visual computations (Mumford, 1991; Lamme and Roelfsema, 2000). For example, several fMRI studies showed that participants' attentional state (Kamitani and Tong, 2005; Liu et al., 2007; Serences and Boynton, 2007; Jehee et al., 2011) and stimulus expectation (Kok et al., 2012) can be predicted from activity patterns in early visual cortex.

Recent studies have shown that activity patterns in early visual cortex during working memory contain stimulus-specific information about the maintained stimulus. The neural representations of this top-down working memory process were similar to those during bottom-up, passive viewing of the stimuli (Harrison and Tong, 2009; Serences et al., 2009; Xing et al., 2013). Shared neural representations have also been found for perception and imagery, throughout the higher visual hierarchy (Kosslyn et al., 1995; Stokes et al., 2009; Reddy et al., 2010; Cichy et al., 2012; Lee et al., 2012), and recently in early visual cortex (Albers et al., 2013). The converging evidence of shared neural representations between perception and working memory (Harrison and Tong, 2009; Xing et al., 2013), imagery (Reddy et al., 2010; Cichy et al., 2012; Albers et al., 2013), and memory reinstatement suggests that these processes might be implemented in a very similar manner in early visual cortex (Tong, 2013) and may support conscious retrieval of memories (Slotnick and Schacter, 2006; Thakral et al., 2013).

Although working memory and memory retrieval mechanisms might converge in early sensory cortex, differences between the two processes are expected in the medial temporal lobe: reinstatement depends more on the hippocampus than working memory maintenance does (Ranganath et al., 2004). There is little debate that successful memory reinstatement is mediated by the hippocampus (Marr, 1971; Eichenbaum et al., 1992; Eldridge et al., 2000; Squire et al., 2004). Indeed, several previous studies observed stronger hippocampal activity for correct than for incorrect memory reinstatement trials (Davachi et al., 2003; Düzel et al., 2003; Kuhl et al., 2011; Gordon et al., 2013; Liang et al., 2013; Staresina et al., 2013). A recent study found correlations between hippocampal activity and encoding-retrieval pattern similarity in parahippocampal cortex (Staresina et al., 2012). In the current study, we show fine-grained, trial-by-trial interactions between the hippocampus, in conjunction with entorhinal cortex (the hippocampal–cortical interface; van Strien et al., 2009; Doeller et al., 2010), and early visual cortex: hippocampal activity was stronger on recall trials with higher reinstatement strength, i.e., on trials in which the early visual activity patterns during cued recall most closely resembled those during passive perception.
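The trial-by-trial relationship described above can likewise be sketched in Python, under explicit assumptions. Here, the reinstatement strength of a recall trial is defined (hypothetically) as the correlation between its voxel pattern and the passive-viewing template of the recalled orientation, minus its mean correlation with the other template(s); these per-trial scores are then related to a per-trial hippocampal signal with a Pearson correlation and a median split. The data are simulated, and the link between hippocampal signal and reinstatement is built into the simulation through a shared latent “memory strength” variable, so the output only demonstrates the computation and is not evidence for the effect. Function names are hypothetical, and this is not the study's analysis pipeline.

```python
import random
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def reinstatement_strength(pattern, templates, recalled):
    """Similarity to the recalled orientation's template minus mean similarity to the others."""
    target = pearson(pattern, templates[recalled])
    others = [pearson(pattern, t) for label, t in templates.items() if label != recalled]
    return target - statistics.mean(others)


def median_split_means(scores, signal):
    """Mean signal on trials above vs. at-or-below the median reinstatement score."""
    med = statistics.median(scores)
    high = [s for sc, s in zip(scores, signal) if sc > med]
    low = [s for sc, s in zip(scores, signal) if sc <= med]
    return statistics.mean(high), statistics.mean(low)


def simulate_trials(n_trials, n_voxels, rng):
    """Hypothetical data: a latent per-trial memory strength scales the recalled
    template in 'visual cortex' and adds to a noisy 'hippocampal' signal.
    The coupling between the two is built in by construction."""
    templates = {ori: [rng.gauss(0, 1) for _ in range(n_voxels)] for ori in (45, 135)}
    scores, hippocampus = [], []
    for trial in range(n_trials):
        recalled = (45, 135)[trial % 2]
        strength = rng.random()
        pattern = [strength * t + rng.gauss(0, 1) for t in templates[recalled]]
        scores.append(reinstatement_strength(pattern, templates, recalled))
        hippocampus.append(strength + rng.gauss(0, 0.3))
    return scores, hippocampus


if __name__ == "__main__":
    scores, hippocampus = simulate_trials(100, 50, random.Random(0))
    print(f"trial-wise r(reinstatement, hippocampus) = {pearson(scores, hippocampus):.2f}")
    high, low = median_split_means(scores, hippocampus)
    print(f"hippocampal signal, high vs. low reinstatement: {high:.2f} vs. {low:.2f}")
```

Subtracting the similarity to the non-recalled template makes the score stimulus specific: a recall pattern that is merely more active overall, without matching the recalled orientation, does not receive a high score.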

Our findings fit well with human and animal studies that observed crosstalk between the hippocampus and sensory cortex during post-encoding “offline” replay (Ji and Wilson, 2007; van Dongen et al., 2012; Deuker et al., 2013; Tambini and Davachi, 2013). Although we cannot infer the directionality of this hippocampo-cortical crosstalk, our results are consistent with two recent studies suggesting that the hippocampus might drive reinstatement in higher-order regions (Gordon et al., 2013; Staresina et al., 2013).

In conclusion, we observed stimulus-specific reinstatement of neural activity patterns in early visual cortex that resembled stimulus-driven neural activity patterns. These findings provide evidence for feature-level cortical reinstatement at some of the lowest levels of the sensory hierarchy and suggest that the hippocampus modulates the level of mnemonic detail reactivated in early sensory regions.

Footnotes

This work was supported by the European Research Council Starting Grant RECONTEXT 261177 and Netherlands Organisation for Scientific Research VIDI Grant 452-12-009. G.F. is supported by European Research Council Advanced Grant NEUROSCHEMA 268800. We thank Ruben van Bergen for help during retinotopy acquisition and Alexander Backus, Lorena Deuker, Alejandro Vicente Grabovetsky, and Marcel van Gerven for useful discussions.

The authors declare no competing financial interests.

References

- Albers AM, Kok P, Toni I, Dijkerman HC, de Lange FP. Shared representations for working memory and mental imagery in early visual cortex. Curr Biol. 2013;23:1427–1431. doi: 10.1016/j.cub.2013.05.065.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436. doi: 10.1163/156856897X00357.

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. The neural basis of vivid memory is patterned on perception. J Cogn Neurosci. 2012;24:1867–1883. doi: 10.1162/jocn_a_00253.

- Chadwick MJ, Hassabis D, Weiskopf N, Maguire EA. Decoding individual episodic memory traces in the human hippocampus. Curr Biol. 2010;20:544–547. doi: 10.1016/j.cub.2010.01.053.

- Chun MM, Turk-Browne NB. Interactions between attention and memory. Curr Opin Neurobiol. 2007;17:177–184. doi: 10.1016/j.conb.2007.03.005.

- Cichy RM, Heinzle J, Haynes JD. Imagery and perception share cortical representations of content and location. Cereb Cortex. 2012;22:372–380. doi: 10.1093/cercor/bhr106.

- Davachi L, Mitchell JP, Wagner AD. Multiple routes to memory: distinct medial temporal lobe processes build item and source memories. Proc Natl Acad Sci U S A. 2003;100:2157–2162. doi: 10.1073/pnas.0337195100.

- Deuker L, Olligs J, Fell J, Kranz TA, Mormann F, Montag C, Reuter M, Elger CE, Axmacher N. Memory consolidation by replay of stimulus-specific neural activity. J Neurosci. 2013;33:19373–19383. doi: 10.1523/JNEUROSCI.0414-13.2013.

- DeYoe EA, Carman GJ, Bandettini P, Glickman S, Wieser J, Cox R, Miller D, Neitz J. Mapping striate and extrastriate visual areas in human cerebral cortex. Proc Natl Acad Sci U S A. 1996;93:2382–2386. doi: 10.1073/pnas.93.6.2382.

- Diana RA, Yonelinas AP, Ranganath C. Parahippocampal cortex activation during context reinstatement predicts item recollection. J Exp Psychol Gen. 2013;142:1287–1297. doi: 10.1037/a0034029.

- Doeller CF, Barry C, Burgess N. Evidence for grid cells in a human memory network. Nature. 2010;463:657–661. doi: 10.1038/nature08704.

- Düzel E, Habib R, Rotte M, Guderian S, Tulving E, Heinze HJ. Human hippocampal and parahippocampal activity during visual associative recognition memory for spatial and nonspatial stimulus configurations. J Neurosci. 2003;23:9439–9444. doi: 10.1523/JNEUROSCI.23-28-09439.2003.

- Eichenbaum H, Otto T, Cohen NJ. The hippocampus—what does it do? Behav Neural Biol. 1992;57:2–36. doi: 10.1016/0163-1047(92)90724-I.

- Eldridge LL, Knowlton BJ, Furmanski CS, Bookheimer SY, Engel SA. Remembering episodes: a selective role for the hippocampus during retrieval. Nat Neurosci. 2000;3:1149–1152. doi: 10.1038/80671.

- Engel SA, Glover GH, Wandell BA. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex. 1997;7:181–192. doi: 10.1093/cercor/7.2.181.

- Friston KJ, Josephs O, Rees G, Turner R. Nonlinear event-related responses in fMRI. Magn Reson Med. 1998;39:41–52. doi: 10.1002/mrm.1910390109.

- Gordon AM, Rissman J, Kiani R, Wagner AD. Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cereb Cortex. 2013. Advance online publication. Retrieved April 27, 2014. doi: 10.1093/cercor/bht194.

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832.

- Haynes JD, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci. 2005;8:686–691. doi: 10.1038/nn1445.

- James W. The principles of psychology. New York: Holt; 1890.

- Jehee JF, Brady DK, Tong F. Attention improves encoding of task-relevant features in the human visual cortex. J Neurosci. 2011;31:8210–8219. doi: 10.1523/JNEUROSCI.6153-09.2011.

- Jehee JF, Ling S, Swisher JD, van Bergen RS, Tong F. Perceptual learning selectively refines orientation representations in early visual cortex. J Neurosci. 2012;32:16747–16753a. doi: 10.1523/JNEUROSCI.6112-11.2012.

- Ji D, Wilson MA. Coordinated memory replay in the visual cortex and hippocampus during sleep. Nat Neurosci. 2007;10:100–107. doi: 10.1038/nn1825.

- Johnson JD, McDuff SG, Rugg MD, Norman KA. Recollection, familiarity, and cortical reinstatement: a multivoxel pattern analysis. Neuron. 2009;63:697–708. doi: 10.1016/j.neuron.2009.08.011.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Khader P, Burke M, Bien S, Ranganath C, Rösler F. Content-specific activation during associative long-term memory retrieval. Neuroimage. 2005;27:805–816. doi: 10.1016/j.neuroimage.2005.05.006.

- Kok P, Jehee JF, de Lange FP. Less is more: expectation sharpens representations in the primary visual cortex. Neuron. 2012;75:265–270. doi: 10.1016/j.neuron.2012.04.034.

- Kosslyn SM, Thompson WL, Kim IJ, Alpert NM. Topographical representations of mental images in primary visual cortex. Nature. 1995;378:496–498. doi: 10.1038/378496a0.

- Kuhl BA, Rissman J, Chun MM, Wagner AD. Fidelity of neural reactivation reveals competition between memories. Proc Natl Acad Sci U S A. 2011;108:5903–5908. doi: 10.1073/pnas.1016939108.

- Lamme VA, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 2000;23:571–579. doi: 10.1016/S0166-2236(00)01657-X.

- Lee SH, Kravitz DJ, Baker CI. Disentangling visual imagery and perception of real-world objects. Neuroimage. 2012;59:4064–4073. doi: 10.1016/j.neuroimage.2011.10.055.

- Lewis-Peacock JA, Postle BR. Temporary activation of long-term memory supports working memory. J Neurosci. 2008;28:8765–8771. doi: 10.1523/JNEUROSCI.1953-08.2008.

- Liang JC, Wagner AD, Preston AR. Content representation in the human medial temporal lobe. Cereb Cortex. 2013;23:80–96. doi: 10.1093/cercor/bhr379.

- Liu T, Larsson J, Carrasco M. Feature-based attention modulates orientation-selective responses in human visual cortex. Neuron. 2007;55:313–323. doi: 10.1016/j.neuron.2007.06.030.

- Marr D. Simple memory: a theory for archicortex. Philos Trans R Soc Lond B Biol Sci. 1971;262:23–81. doi: 10.1098/rstb.1971.0078.

- Mumford D. On the computational architecture of the neocortex. I. The role of the thalamo-cortical loop. Biol Cybern. 1991;65:135–145. doi: 10.1007/BF00202389.

- Nyberg L, Habib R, McIntosh AR, Tulving E. Reactivation of encoding-related brain activity during memory retrieval. Proc Natl Acad Sci U S A. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120.

- Polyn SM, Natu VS, Cohen JD, Norman KA. Category-specific cortical activity precedes retrieval during memory search. Science. 2005;310:1963–1966. doi: 10.1126/science.1117645.

- Ranganath C, Cohen MX, Dam C, D'Esposito M. Inferior temporal, prefrontal, and hippocampal contributions to visual working memory maintenance and associative memory retrieval. J Neurosci. 2004;24:3917–3925. doi: 10.1523/JNEUROSCI.5053-03.2004.

- Ranganath C, Heller A, Cohen MX, Brozinsky CJ, Rissman J. Functional connectivity with the hippocampus during successful memory formation. Hippocampus. 2005;15:997–1005. doi: 10.1002/hipo.20141.

- Reddy L, Tsuchiya N, Serre T. Reading the mind's eye: decoding category information during mental imagery. Neuroimage. 2010;50:818–825. doi: 10.1016/j.neuroimage.2009.11.084.

- Rugg MD, Vilberg KL. Brain networks underlying episodic memory retrieval. Curr Opin Neurobiol. 2013;23:255–260. doi: 10.1016/j.conb.2012.11.005.

- Serences JT, Boynton GM. Feature-based attentional modulations in the absence of direct visual stimulation. Neuron. 2007;55:301–312. doi: 10.1016/j.neuron.2007.06.015.

- Serences JT, Ester EF, Vogel EK, Awh E. Stimulus-specific delay activity in human primary visual cortex. Psychol Sci. 2009;20:207–214. doi: 10.1111/j.1467-9280.2009.02276.x.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- Slotnick SD, Schacter DL. The nature of memory related activity in early visual areas. Neuropsychologia. 2006;44:2874–2886. doi: 10.1016/j.neuropsychologia.2006.06.021.

- Smith SM, Nichols TE. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage. 2009;44:83–98. doi: 10.1016/j.neuroimage.2008.03.061.

- Squire LR, Stark CE, Clark RE. The medial temporal lobe. Annu Rev Neurosci. 2004;27:279–306. doi: 10.1146/annurev.neuro.27.070203.144130.

- Staresina BP, Henson RN, Kriegeskorte N, Alink A. Episodic reinstatement in the medial temporal lobe. J Neurosci. 2012;32:18150–18156. doi: 10.1523/JNEUROSCI.4156-12.2012.

- Staresina BP, Cooper E, Henson RN. Reversible information flow across the medial temporal lobe: the hippocampus links cortical modules during memory retrieval. J Neurosci. 2013;33:14184–14192. doi: 10.1523/JNEUROSCI.1987-13.2013.

- Stokes M, Thompson R, Cusack R, Duncan J. Top-down activation of shape-specific population codes in visual cortex during mental imagery. J Neurosci. 2009;29:1565–1572. doi: 10.1523/JNEUROSCI.4657-08.2009.

- Summerfield JJ, Lepsien J, Gitelman DR, Mesulam MM, Nobre AC. Orienting attention based on long-term memory experience. Neuron. 2006;49:905–916. doi: 10.1016/j.neuron.2006.01.021.

- Tambini A, Davachi L. Persistence of hippocampal multivoxel patterns into postencoding rest is related to memory. Proc Natl Acad Sci U S A. 2013;110:19591–19596. doi: 10.1073/pnas.1308499110.

- Thakral PP, Slotnick SD, Schacter DL. Conscious processing during retrieval can occur in early and late visual regions. Neuropsychologia. 2013;51:482–487. doi: 10.1016/j.neuropsychologia.2012.11.020.

- Tong F. Imagery and visual working memory: one and the same? Trends Cogn Sci. 2013;17:489–490. doi: 10.1016/j.tics.2013.08.005.

- Tulving E, Thomson DM. Encoding specificity and retrieval processes in episodic memory. Psychol Rev. 1973;80:352–373. doi: 10.1037/h0020071.

- van Dongen EV, Takashima A, Barth M, Zapp J, Schad LR, Paller KA, Fernández G. Memory stabilization with targeted reactivation during human slow-wave sleep. Proc Natl Acad Sci U S A. 2012;109:10575–10580. doi: 10.1073/pnas.1201072109.

- van Strien NM, Cappaert NL, Witter MP. The anatomy of memory: an interactive overview of the parahippocampal-hippocampal network. Nat Rev Neurosci. 2009;10:272–282. doi: 10.1038/nrn2614.

- Vicente-Grabovetsky A, Carlin JD, Cusack R. Strength of retinotopic representation of visual memories is modulated by strategy. Cereb Cortex. 2014;24:281–292. doi: 10.1093/cercor/bhs313.

- Wandell BA, Dumoulin SO, Brewer AA. Visual field maps in human cortex. Neuron. 2007;56:366–383. doi: 10.1016/j.neuron.2007.10.012.

- Watson AB, Pelli DG. QUEST: a Bayesian adaptive psychometric method. Percept Psychophys. 1983;33:113–120. doi: 10.3758/BF03202828.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci U S A. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Woodruff CC, Johnson JD, Uncapher MR, Rugg MD. Content-specificity of the neural correlates of recollection. Neuropsychologia. 2005;43:1022–1032. doi: 10.1016/j.neuropsychologia.2004.10.013.

- Xing Y, Ledgeway T, McGraw PV, Schluppeck D. Decoding working memory of stimulus contrast in early visual cortex. J Neurosci. 2013;33:10301–10311. doi: 10.1523/JNEUROSCI.3754-12.2013.