Abstract

Objectives

The Emergency Medicine Milestone Project, a framework for assessing competencies, has been used as a method of providing focused resident feedback. However, the emergency medicine milestones do not include specific objective data about resident clinical efficiency and productivity, and studies have shown that milestone‐based feedback does not improve resident satisfaction with the feedback process. We examined whether providing performance metric reports to resident physicians improves their satisfaction with the feedback process and their clinical performance.

Methods

We conducted a three‐phase stepped‐wedge randomized pilot study of emergency medicine residents at a single, urban academic site. In phase 1, all residents received traditional feedback; in phase 2, residents were randomized to receive traditional feedback (control group) or traditional feedback with performance metric reports (intervention group); and in phase 3, all residents received monthly performance metric reports and traditional feedback. To assess resident satisfaction with the feedback process, surveys using 6‐point Likert scales were administered at each study phase and analyzed using two‐sample t‐tests. Analysis of variance in repeated measures was performed to compare impact of feedback on resident clinical performance, specifically patient treatment time (PTT) and patient visits per hour.

Results

Forty‐one residents participated in the trial, of whom 21 were randomized to the intervention group and 20 to the control group. Ninety percent of residents liked receiving the report and 74% believed that it better prepared them for the expectations of becoming an attending physician. Additionally, residents randomized to the intervention group reported higher satisfaction (p = 0.01) with the quality of the feedback compared to residents in the control group. However, receiving performance metric reports, regardless of study phase or postgraduate year status, did not affect clinical performance, specifically PTT (183 minutes vs. 177 minutes, p = 0.34) or patient visits per hour (0.99 vs. 1.04, p = 0.46).

Conclusions

While feedback with performance metric reports did not improve resident clinical performance, resident physicians were more satisfied with the feedback process, and a majority of residents liked the reports and felt that the reports better prepared them to become attending physicians. Residency training programs could consider augmenting feedback with performance metric reports to aid in the transition from resident to attending physician.

In 2013, the Accreditation Council for Graduate Medical Education (ACGME) along with the American Board of Emergency Medicine officially launched the emergency medicine milestones as a component of the Next Accreditation System.1 These milestones were created as a framework for assessing competencies within several domains of emergency medicine training. Many programs have adapted these milestones as a method to provide focused resident feedback.2, 3, 4, 5, 6

The annual ACGME survey, a national survey that monitors residency compliance with graduate medical clinical education, includes a question directed to trainees on whether the training program has “provided data about practice habits.”7 While the emergency medicine milestones include competencies in general patient flow and systems‐based management, they do not include specific data about resident practice habits in the emergency department (ED). In addition, studies have shown that milestone‐based feedback has not improved resident perception of the quality of, or satisfaction with, the feedback process.8, 9 Objective data on resident efficiency and productivity provide an opportunity to augment feedback on these specific milestones, address the ACGME annual survey question, and improve resident satisfaction with the feedback process.

Satisfaction with feedback has been linked to improvements in staff motivation, satisfaction with level of responsibility and involvement, and perceived support from managers.10 Providing feedback has also been shown to lower rates of burnout, increase employee engagement, and improve patient safety culture.11 High‐quality feedback is recommended as a tool for program leadership to combat trainee burnout.12

Performance metrics obtained from electronic health records (EHRs) are widely used to gauge emergency medicine physician efficiency and quality in the clinical setting, and these metrics are often tied to physician compensation.13, 14, 15 However, studies on the use of these data as a feedback mechanism to improve resident training and performance have been limited. This study examines whether providing emergency medicine trainees with clinical performance metric‐based feedback improves resident physician 1) satisfaction with the feedback process and 2) performance in the clinical setting, specifically with patient treatment time (PTT) and patient visits per hour (PVHR).

Methods

From July 1, 2015, to June 30, 2016, we conducted a three‐phase stepped‐wedge randomized pilot study of emergency medicine residents at a single, urban academic site with an annual census of approximately 61,000. Informed consent was obtained from resident physicians who were randomized within each postgraduate year (PGY) level to receive either traditional feedback (control group) or monthly performance metric reports in addition to traditional feedback (intervention group). Traditional feedback at this institution included end‐of‐shift milestone‐based assessment and qualitative comments from faculty, peers, and medical students, as well as semiannual data regarding attendance and involvement in educational experiences, performance on in‐service examination, number of procedures completed, and compliance with other requirements of the residency program. Notably, aside from the monthly performance metric report, no additional feedback on resident efficiency in the clinical learning environment was provided during the study period.

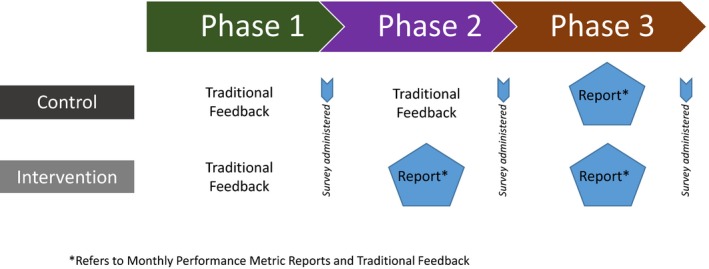

A stepped‐wedge model was employed with each phase lasting 4 months. During phase 1 of the study, all residents received traditional feedback. During phase 2 of the study, residents were randomized to receive traditional feedback (control group) or traditional feedback and performance metric reports (intervention group). During the final phase of the study, all residents received monthly performance metric reports and traditional feedback. Off‐service residents were not included in the study. Surveys were administered to the residents during each phase of the study (Figure 1). This study was approved by our institutional review board.

Figure 1.

Three‐phase stepped‐wedge randomization model with control and intervention group. *Refers to monthly performance metric reports and traditional feedback.
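The allocation and phase schedule described above can be illustrated with a short sketch. The Python below is an illustration only and not the procedure used in this study: the resident identifiers, the random seed, the coin flip used for odd class sizes, and the function names `randomize_within_pgy` and `feedback_for` are all assumptions introduced here.

```python
import random
from collections import defaultdict


def randomize_within_pgy(residents, seed=0):
    """Assign residents to 'control' or 'intervention', balanced within each PGY level.

    residents: list of (resident_id, pgy_level) tuples (hypothetical input format).
    Returns a dict mapping resident_id to its study arm.
    """
    rng = random.Random(seed)
    by_pgy = defaultdict(list)
    for resident_id, pgy in residents:
        by_pgy[pgy].append(resident_id)

    assignment = {}
    for pgy in sorted(by_pgy):
        ids = by_pgy[pgy]
        rng.shuffle(ids)
        half = len(ids) // 2
        # Assumption: with an odd class size, a coin flip decides which arm
        # receives the extra resident.
        if len(ids) % 2 == 1 and rng.random() < 0.5:
            half += 1
        for index, resident_id in enumerate(ids):
            assignment[resident_id] = "control" if index < half else "intervention"
    return assignment


def feedback_for(arm, phase):
    """Return the feedback a resident receives in a given phase of the three-phase design."""
    if phase == 1:
        return "traditional"
    if phase == 2:
        return "traditional" if arm == "control" else "traditional + report"
    if phase == 3:
        return "traditional + report"
    raise ValueError("phase must be 1, 2, or 3")
```

Stratifying by PGY level before shuffling keeps the two arms within one resident of each other in every class, which matches the stated goal of equal representation per year of training.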

Survey

The three surveys were electronically administered through an online survey program, SurveyMonkey, to all study participants at key junctures of the study (Figure 1). Likert scale (1–6 = strongly disagree to strongly agree) questions inquired into satisfaction with the feedback process, subjective experience of receiving data on performance in relation to peers, and perceived accuracy of the reports. A free‐text component was also included, allowing participants to provide general observations and recommend additional performance metrics that should be included in the scorecard (see Data Supplement S1, available as supporting information in the online version of this paper at http://onlinelibrary.wiley.com/doi/10.1002/aet2.10348/full). The survey was piloted through an iterative process with the resident on the study team. Results were anonymous.

Performance Metric Report

The performance metric report was created using data queried from our EHR (Epic System) and our shift scheduling software (ShiftAdmin). Provider‐specific metrics extracted from the EHR included total visits, total PVHR, acuity level of patients seen as measured by the Emergency Severity Index (ESI), median treatment times, and the disposition of patients. Over the course of the year, all resident physicians had worked an equal number and a similar distribution of shifts compared to their colleagues in the same PGY of training. Individual performance metrics were displayed in relation to the mean values of similar PGY level (Table 1). Reports were delivered monthly to the resident physicians via the hospital e‐mail system.

Table 1.

Sample Monthly Performance Scorecard

| Metrics | July | August | September | October | Your class: median | Your class: 25%–75% |
|---|---|---|---|---|---|---|
| Efficiency | | | | | | |
| Total visits | 41 | 188 | 44 | 152 | 107 | 49–145 |
| Visits per hour | 1.3 | 1.41 | 1.22 | 1.22 | 1.08 | 0.97–1.22 |
| Median treatment time (minutes) | 187 | 167 | 161 | 163 | 195 | 118–300 |
| Admitted patients | 187 | 149 | 134.5 | 161 | 171 | 91–280 |
| Discharged patients | 196 | 174 | 161 | 161 | 205 | 129–309 |
| Median time from bed request to CRC approval (minutes) | 25 | 40.5 | 44 | 49 | 25 | 14–47 |
| Median time from bed request to doc‐to‐doc (minutes) | 120 | 145 | 94 | 130 | 102 | 63–159 |
| Median length of stay (minutes) | 468 | 324 | 285 | 342 | 390 | 258–558 |
| ESI level (%) | | | | | | |
| 1 | 2.5 | 1 | 0 | 2.6 | 0.9 | 0.0–1.9 |
| 2 | 57.5 | 35.1 | 29.6 | 38.8 | 44.7 | 39.2–49.4 |
| 3 | 35 | 55.9 | 50 | 50 | 45.7 | 41.7–50.4 |
| 4 | 2.5 | 7.4 | 18.2 | 7.2 | 6.6 | 4.5–8.7 |
| 5 | 2.5 | 0.5 | 2.3 | 1.3 | 1 | 0.0–2.0 |
| Disposition rates (%) | | | | | | |
| Inpatient | 46.3 | 25 | 27.3 | 30.3 | 31.8 | 28.7–36.2 |
| Discharge | 51.2 | 72.9 | 70.5 | 67.1 | 66.4 | 61.5–70.2 |
| Left against medical advice | 2.4 | 2.1 | 2.3 | 2 | 1.5 | 0.4–2.6 |

Definitions: total visits = number of visits each month in which you were the first resident assigned to a patient; visits per hour = total visits divided by hours worked in ShiftAdmin; treatment time = time in minutes from when a patient was placed in a room until a disposition was documented in Epic; time from bed request to CRC approval = time in minutes from bed order placement until the CRC approved the bed for admission; time from bed request to doc‐to‐doc = time in minutes from when a bed order was placed until doc‐to‐doc was completed in Epic; length of stay = time in minutes from registration in triage until the patient left the emergency department.
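As a worked illustration of two of the definitions above, the following Python sketch computes visits per hour and median treatment time from visit records. It is a minimal sketch and not the query used to build the scorecards: the record field names (`roomed_min`, `disposition_min`), the example timestamps, and the hours‐worked figure are hypothetical, since the actual EHR and scheduling data layouts are not described here.

```python
from statistics import median


def visits_per_hour(total_visits, hours_worked):
    """Total visits divided by scheduled hours worked."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return total_visits / hours_worked


def median_treatment_time(visits):
    """Median minutes from rooming to documented disposition.

    visits: list of dicts with hypothetical keys 'roomed_min' and
    'disposition_min', both expressed in minutes on a common clock.
    """
    if not visits:
        raise ValueError("at least one visit is required")
    durations = [v["disposition_min"] - v["roomed_min"] for v in visits]
    return median(durations)


# Hypothetical example: three visits and 125 scheduled hours.
example_visits = [
    {"roomed_min": 0, "disposition_min": 150},
    {"roomed_min": 30, "disposition_min": 193},
    {"roomed_min": 60, "disposition_min": 260},
]
example_rate = visits_per_hour(152, 125)
example_median = median_treatment_time(example_visits)
```

The median is used for treatment time because a few very long visits would otherwise dominate a mean, which is consistent with the nonnormality noted in the data analysis.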

Data Analysis

To examine resident clinical performance, median PTT and mean PVHR were calculated for each resident during the three study phases. The median PTT was chosen over the mean PTT due to nonnormality of the data. In contrast, the mean PVHR was chosen over the median PVHR because that data set had a normal distribution. Mean PVHR was restricted to the PGY‐1 and PGY‐2 residents because PGY‐3 and PGY‐4 resident physicians at the study site were engaged in supervisory roles, so patients could not be accurately attributed to the senior‐level residents during these shifts. Median PTT was calculated for all levels of trainees across the three study phases. The senior‐level residents occasionally saw patients primarily, and as such, the median PTT could be calculated in those instances. If a handoff occurred, the patient was attributed to the initially assigned resident.

To determine differences between the control group and the intervention group with regard to PTT and PVHR, an analysis of variance in repeated measures was performed, with study phase (n = 3) as the repeated measure and feedback group (n = 2) and PGY status (n = 2 [for PGY‐1 and PGY‐2] and n = 4, respectively) as grouping variables. To reduce variability in the data set, mean patient ESI level at triage and percentage of patients admitted during each study phase were added as time‐varying covariates.

Summary statistics (frequencies and percentages) were used to describe feedback on the survey reports. To assess survey data by feedback group, two‐sample t‐tests were performed, as not all participants completed the surveys at all study points. All analyses were performed using SAS statistical software (version 9.4, SAS Institute).
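To make the survey comparison concrete, the sketch below computes a pooled‐variance two‐sample t statistic and its degrees of freedom in plain Python. It is an illustration only: the analyses in this study were run in SAS, the paper does not state whether the pooled or unequal‐variance form was reported, and the Likert responses shown are invented for the example. The function name `pooled_t_statistic` is hypothetical, and the p‐value lookup is omitted because it needs a t distribution from a statistics library.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for a pooled-variance two-sample t-test."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    if pooled_var == 0:
        raise ValueError("both groups have zero variance")
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Invented 6-point Likert responses, for illustration only.
intervention_scores = [4, 5, 3, 4]
control_scores = [2, 3, 3, 2]
t_value, dof = pooled_t_statistic(intervention_scores, control_scores)
```

Treating the two groups as independent samples, rather than pairing responses across phases, fits the situation described above in which not every participant completed every survey.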

Results

Of the 43 emergency medicine residents at our academic institution, 42 were eligible to receive performance metric scorecards (one resident was excluded due to involvement in creation of the study) with 41 (98%) electing to participate. During phase 2 of the study, 20 residents were randomized to the control group and 21 residents to the intervention group, with equal representation per PGY of training.

Survey

Of the 41 residents participating in the study, 28 (68%) completed the survey during study phase 1. At the end of study phase 2 (randomization), 14 (70%) control group residents and 15 (71%) intervention group residents completed the subsequent survey. At the end of the study (phase 3), nine (45%) control group and 12 (57%) intervention group residents completed the final survey.

All of the residents checked their reports. Over 90% of the residents liked receiving the report with 50% believing that the reports helped them identify areas of improvement. A total of 74% believed that the reports better prepared them for understanding the expectations of becoming an attending physician. Several residents noted that the reports were “really helpful [and of] great benefit.” Forty percent of respondents noted that they had some increase in anxiety about their performance compared with their peers because of the reports.

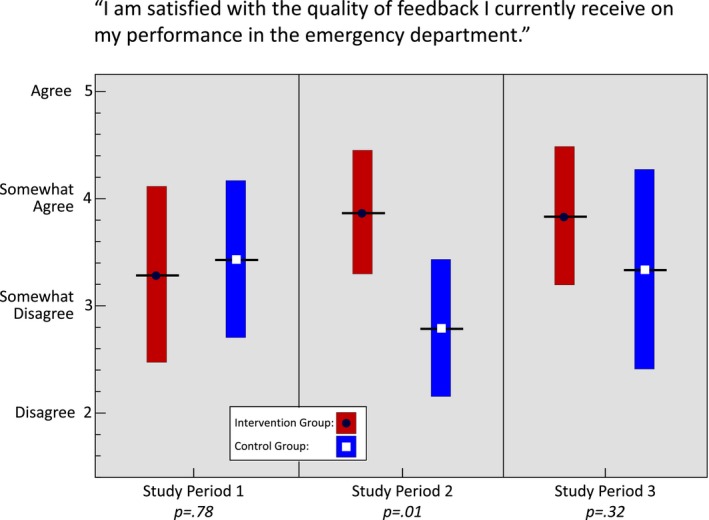

Prior to receiving the performance metric report, the resident physicians reported no difference in their satisfaction with the quality of the feedback they received (intervention vs. control group 3.3 vs. 3.4, p = 0.78; Figure 2). After the intervention, residents who received monthly performance metric scorecards reported a statistically significant increase (3.9 vs. 2.8, p = 0.01) in their satisfaction with the quality of the feedback compared to the control group during phase 2 of the study. During phase 3 of the study, when both groups were receiving the performance scorecards, there was no statistically significant difference in satisfaction with the quality of feedback (3.8 vs. 3.3, p = 0.32; Figure 2). Additional metrics that the residents believed would be of value as a feedback mechanism included patient satisfaction, new patients per hour, hospital capacity at time of patient visit, and the comparable results for the attending physicians to use as a target.

Figure 2.

Resident satisfaction with feedback by feedback group.

Performance Metric Report

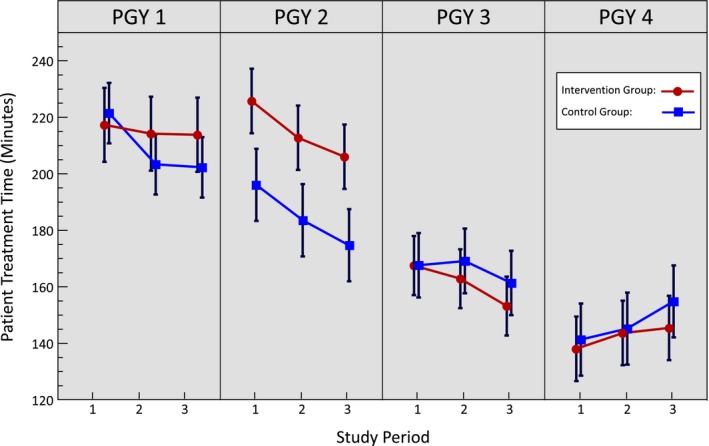

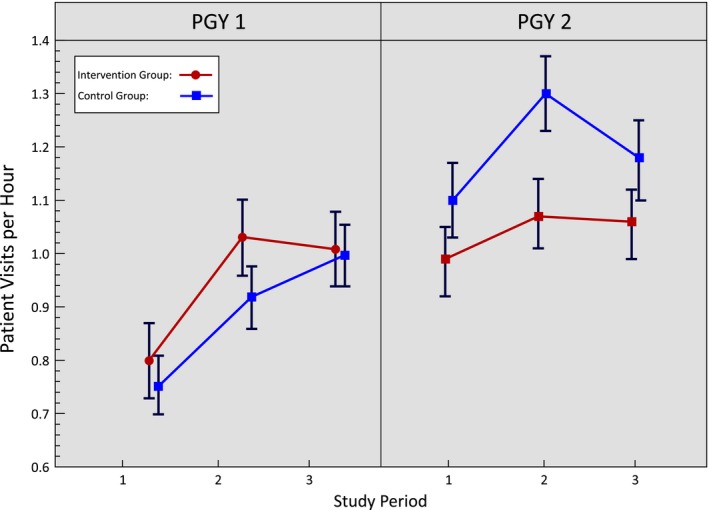

Residents receiving performance metric reports and traditional feedback (intervention group), compared to those receiving traditional feedback (control group), did not differ by median PTT (183 minutes vs. 177 minutes, p = 0.34; Figure 3) or mean PVHR (0.99 vs. 1.04, p = 0.46; Figure 4), regardless of time period or PGY status. For the PGY‐2 level, the control and intervention groups started at different points prior to the intervention but improved their median PTTs at the same rate despite the intervention. For median PTT, there were significant improvements in treatment times as PGY status increased (means = 212, 200, 164, and 145 minutes, for PGY‐1 to PGY‐4, respectively; p < 0.0001) as well as a decrease over the three study phases (means = 184, 179, and 177 minutes, p = 0.10 for PGY‐1 vs. PGY‐2 and p = 0.01 for periods 1 vs. 3; Figure 3). For PVHR, the same pattern was seen. PGY‐2s treated more patients per hour than PGY‐1s (1.1 vs. 0.92, p = 0.01), and both PGY groups increased the number of patients by the second study phase but leveled off by the third (0.91 vs. 1.08 vs. 1.06 PVHR, p < 0.0001; PGY‐1 vs. PGY‐2), again regardless of feedback group (Figure 4). Including ESI and admission percentage to control for variability did not alter the above associations.

Figure 3.

Median patient treatment time in minutes by PGY status and feedback group.

Figure 4.

Mean patient visits per hour by PGY status and feedback group.

Discussion

To our knowledge, this is one of the first studies examining the effect of performance metric reports on emergency medicine resident satisfaction with the feedback process and performance in the clinical setting. Emergency medicine attending physicians are measured on their clinical productivity with their performance on these metrics often tied to financial incentives and compensation. However, there is a dearth of literature on resident physician engagement and awareness of specific clinical productivity metrics.13, 14, 15 Emergency medicine residents may benefit from access to their clinical performance metrics to better inform them of the current expectations of attending clinical practice. We found that approximately three‐quarters of the resident physicians felt that receiving these metrics made them more likely to understand the expectations of becoming an attending physician.

While the vast majority of resident physicians reported that they liked receiving the report and that it better prepared them for the expectations of becoming an attending, 40% of residents noted that they had some increase in anxiety about their performance compared with their peers. These results are important when designing feedback opportunities for learners. A prior study examining feedback in medical students demonstrated that institutional culture was most effective in combating anxiety and resistance through the use of engagement throughout the institution and meaningful feedback to support professional development.16 Standardization of the feedback process has also been shown to decrease the anxiety of feedback delivery to residents.17 As incorporation of performance metric reports in the feedback process has not yet become standardized at our institution, it is not surprising that resident physicians felt anxiety about the process. Prior studies have demonstrated a need for improved quantitative feedback provided to residents beyond the milestone scores, and a recent study found that feedback can improve patient safety culture and lower burnout rates.8, 9, 10, 11 Performance metric reports provide an opportunity to integrate data from the EHR and augment feedback on the competencies of patient flow and systems‐based management to improve feedback opportunities for our learners.

Providing feedback with clinical performance reports did not significantly impact resident clinical productivity and efficiency. A recent Cochrane review revealed that feedback to health care professionals may be more effective when it is offered in multiple methods over multiple sessions and should include explicit targets and action plans.18 We designed our study to provide monthly performance scorecards in a single method without providing additional in‐person feedback regarding resident efficiency in the clinical setting as a method of standardizing the study to measure efficacy. Learners would benefit from coupling performance metric scorecards with in‐person feedback and specific action plans to improve clinical performance. As the goals and objectives for resident physicians differ based on their level of training, resident physicians may also benefit from different types of feedback: senior‐level learners would likely benefit from performance metric scorecards coupled with explicit action plans, whereas junior‐level learners may benefit from awareness of specific clinical productivity metrics and their role within the field of emergency medicine.

Interestingly, we also showed a statistically significant improvement in resident clinical performance with PTTs throughout the year, independent of type of feedback given. This is reassuring given that we would expect resident physicians to improve in clinical performance with experience throughout the year. We also showed a statistically significant improvement in resident productivity with PVHR and PTTs as they progress through each year of residency training, which has been found in prior studies modeling resident productivity in the emergency department.19

Limitations

While we were able to provide treatment time metrics for residents at all levels of training, we were not able to accurately provide PVHR metrics for the senior residents when their shifts included a supervisory role. Arguably, these metrics would be most helpful for the senior residents as they are preparing to become independent practitioners and may have compensation or other incentives tied to their clinical performance. In addition, as a method of standardizing the study, no additional feedback on resident clinical efficiency and productivity was provided during the study period. Providing feedback in a single format may have limited the ability for performance metric scorecards to be as effective as possible in changing resident behavior. Future studies including more robust metrics for senior‐level residents and feedback with explicit action plans to target deficiencies should be performed to determine impact on clinical productivity and satisfaction.

The survey used in our study was not a validated instrument. We created it through an iterative process in our study committee. It is possible that the structure of the survey or wording of questions introduced bias. We attempted to limit this by writing simply worded questions as affirmative statements with agreement assessed on a 6‐point Likert scale. Future studies on resident perceptions of feedback would benefit from a validated instrument.

This pilot study was performed at a single institution and, as such, other institutions may have different ranges for patient visits per hour and PTTs. In addition, learners in other settings may find additional metrics to be of greater value for their specific clinical learning environment.

Conclusions

Our study reveals that residents are more satisfied with the feedback process when receiving monthly performance metric reports. Furthermore, a majority of the residents liked receiving reports and felt that the reports better prepared them for the expectations of being an attending physician. Residency training programs could consider including standardized clinical performance reports as a way of augmenting feedback to aid in the transition from resident to attending physician. Feedback inclusive of performance metrics, however, did not show statistically significant improvement in resident productivity in the clinical setting. Future studies with a larger group of participants are needed to determine which metrics would be of most value to medical trainees and to better understand how to impact learners’ productivity in the clinical learning environment.

Supporting information

Data Supplement S1. Intervention group survey.

AEM Education and Training 2019; 3: 323–330

Presented at the Society for Academic Emergency Medicine Annual Meeting, Orlando, FL, May 2017; Council of Emergency Medicine Residency Directors Academic Assembly, Ft. Lauderdale, FL, April 2017; and the Council of Emergency Medicine Residency Directors Academic Assembly, Nashville, TN, March 2016.

The authors have no relevant financial information or potential conflicts to disclose.

References

- 1. Swing SR, Beeson MS, Carraccio C, et al. Educational milestone development in the first 7 specialties to enter the next accreditation system. J Grad Med Educ 2013;5:98–106.

- 2. Lurie SJ, Mooney CJ, Lyness JM. Measurement of the general competencies of the accreditation council for graduate medical education: a systematic review. Acad Med 2009;84:301–9.

- 3. Rosenbaum ME, Ferguson KJ, Kreiter CD, Johnson CA. Using a peer evaluation system to assess faculty performance and competence. Fam Med 2005;37:429–33.

- 4. Brasel KJ, Bragg D, Simpson DE, Weigelt JA. Meeting the Accreditation Council for Graduate Medical Education competencies using established residency training program assessment tools. Am J Surg 2004;188:9–12.

- 5. Higgins RS, Bridges J, Burke JM, O'Donnell MA, Cohen NM, Wilkes SB. Implementing the ACGME general competencies in a cardiothoracic surgery residency program using 360‐degree feedback. Ann Thorac Surg 2004;77:12–7.

- 6. Reisdorff EJ, Hayes OW, Carlson DJ, Walker GL. Assessing the new general competencies for resident education: a model from an emergency medicine program. Acad Med 2001;76:753–7.

- 7. The Accreditation Council of Graduate Medical Education. Resident Survey Question Content Areas. 2019. Available at: https://www.acgme.org/Data-Collection-Systems/Resident-Fellow-and-Faculty-Surveys. Accessed March 25, 2019.

- 8. Angus S, Moriarty J, Nardino RJ, Chmielewski A, Rosenblum MJ. Internal medicine residents’ perspectives on receiving feedback in milestone format. J Grad Med Educ 2015;7:220–4.

- 9. Yarris LM, Jones D, Kornegay JG, Hansen M. The milestones passport: a learner centered application of the Milestone framework to prompt real‐time feedback in the emergency department. J Grad Med Educ 2014;6:555–60.

- 10. Frampton A, Fox F, Hollowood A, et al. Using real‐time anonymous staff feedback to improve staff experience and engagement. BMJ Qual Improv Rep 2017;6(1).

- 11. Sexton JB, Adair KC, Leonard MW, et al. Providing feedback following Leadership Walk Rounds is associated with better patient safety culture, higher employee engagement and lower burnout. BMJ Qual Saf 2018;27:261–70.

- 12. Gordon EK, Baranaov DY, Fleisher L. The role of feedback in ameliorating burnout. Curr Opin Anaesthesiol 2018;31:361–5.

- 13. Pines JM, Ladhania R, Black BS, Corbit CK, Carlson JN, Venkat A. Changes in reimbursement to emergency physicians after Medicaid expansion under the Patient Protection and Affordable Care Act. Ann Emerg Med 2019;73:213–24.

- 14. Scholle SH, Roski J, Adams JL, et al. Benchmarking physician performance: reliability of individual and composite measures. Am J Manage Care 2008;14:833.

- 15. Scholle SH, Roski J, Dunn DL, et al. Availability of data for measuring physician quality performance. Am J Manage Care 2009;15:67.

- 16. Nofziger AC, Naumburg EH, Davis BJ, Mooney CJ, Epstein RM. Impact of peer assessment on the professional development of medical students: a qualitative study. Acad Med 2010;85:140–7.

- 17. Bing‐You RG. Internal medicine residents’ attitudes toward giving feedback to medical students. Acad Med 1993;68:388.

- 18. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2012;(6):CD000259.

- 19. Joseph JW, Henning DJ, Strouse CS, Chiu DT, Nathanson LA, Sanchez LD. Modeling resident productivity in the emergency department. Ann Emerg Med 2017;70:185–90.
