Abstract

Objective

The objective was to compare attending emergency physician (EP) time spent on direct and indirect patient care activities in emergency departments (EDs) with and without emergency medicine (EM) residents.

Methods

We performed an observational, time–motion study of 25 EPs who worked in both a community‐academic ED and a nonacademic community ED. Each EP was observed twice at each site. The average time spent per 240‐minute observation on each main‐category activity is presented as a percentage. We report descriptive statistics (medians and interquartile ranges) for the number of minutes EPs spent on each subcategory activity, both in total and per patient. We performed a Wilcoxon two‐sample test to assess differences in time spent between the two EDs.

Results

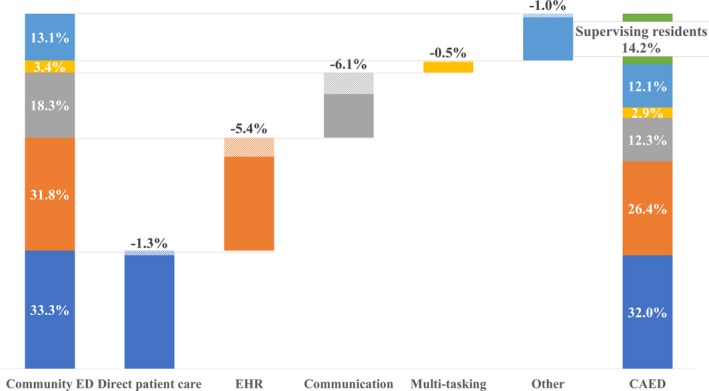

The 25 observed EPs executed 34,358 tasks in the two EDs. At the community‐academic ED, EPs spent 14.2% of their time supervising EM residents. Supervision activities included data presentation, medical decision making, and treatment. The time spent on supervision was offset by a decrease in the time EPs spent on indirect patient care (specifically communication and electronic health record work) at the community‐academic ED compared with the nonacademic community ED. There was no statistically significant difference in direct patient care time between the two EDs. The difference in attending patient load between sites was not statistically significant.

Conclusions

EPs in our study spent 14.2% of their time (8.5 minutes/hour) supervising residents. The time spent supervising residents was largely offset by time savings in indirect patient care activities rather than by reductions in direct patient care.

Background

One of the primary benefits of residency training is the opportunity for residents to observe the work of, and learn from direct interaction with, the attending emergency physician (EP).1 Direct attending–resident interaction is also considered one of the best tools for assessing resident competencies.2

In 2001, DeBehnke wrote, “… [e]ducating and supervising residents and students while simultaneously providing patient care requires quantifiable faculty time and effort. Academic EDs must identify this time and effort accurately since providing this joint product line has the potential to make our emergency care system inefficient.”3

Maintaining effective supervision can be very costly for emergency departments (EDs). First, engaging with residents may limit the time that EPs devote to patient care and ED flow. This additional demand on the EP may also increase stress and anxiety, potentially leading to burnout. Second, the EP is responsible for calibrating the level of supervision to each resident's knowledge and clinical skill. Residents who lack the requisite knowledge for complex cases may struggle and potentially cause patient harm.4 Third, supervising residents consumes resources. Evidence shows that care at academic hospitals is less cost‐effective than care at nonacademic hospitals because of the higher frequency of testing and other resource use in the teaching setting.5

Resident training takes place in a variety of ED settings. EDs can broadly be categorized into three types: academic EDs, defined as those in university‐based teaching hospitals; community‐academic EDs (referred to as “CAEDs” in the remainder of this paper), defined as community EDs with residents and/or students who rotate through and are supervised by EPs; and nonacademic EDs (referred to as “community EDs” in the remainder of this paper), defined as EDs with no learners. In CAEDs, resident supervision is but one of many responsibilities that EPs must skillfully orchestrate, alongside other essential tasks such as direct patient care, communication, and documentation.

There are limited data on the differences in the time and effort spent by EPs at CAEDs compared with community EDs. Chisholm et al.2, 6 tracked EPs’ time expenditures on direct/indirect patient care and personal activities in academic versus community EDs but did not specify the time spent supervising residents or performing other care‐related activities. Other studies assessed only the aggregate effect of residents on departmental throughput.7, 8, 9, 10, 11 To the best of our knowledge, this is the first study to comprehensively quantify and compare the time EPs spend on resident supervision and care‐related activities in CAEDs versus community EDs.

Objective of Investigation

The objective of this study was to compare the time utilization profiles of a group of EPs in a community ED where patients were the only “customers”3 versus that of the same group of EPs in a CAED where patients and residents generate competing demands for EPs’ time. First, we quantified the time EPs “reallocate” to resident supervision at the CAED. Second, we determined the categories of activities from which EPs shaved time to accommodate supervision.

Methods

Study Site

This study was approved by the first and second authors' respective institutional review boards.

We conducted an observational, time–motion study at a 25‐bed CAED and a 15‐bed community ED. Both are Level II trauma centers within a four‐hospital health system in northern Illinois. The health system is the primary affiliate and community training site for a university‐based emergency medicine (EM) residency program. The CAED trains residents from multiple Accreditation Council for Graduate Medical Education (ACGME)‐accredited residency programs. The community ED does not have ACGME trainees. Table 1 shows key performance metrics for both EDs in fiscal year (FY) 2017.

Table 1.

Performance Metrics for the Community‐Academic and Community EDs in FY2017

| | Community‐Academic | Community |
|---|---|---|
| ED visits | 37,042 | 27,555 |
| Observation admissions (%) | 13.3 | 14.5 |
| Inpatient admissions (%) | 14.7 | 13.5 |
| LWBS | 708 | 352 |
| Pediatric volume | 3,993 | 4,881 |
| Median turnaround time, admission (minutes) | 271 | 242 |
| Median turnaround time, discharge (minutes) | 196 | 178 |
| Female (%) | 55.3 | 56.0 |
| Male (%) | 44.7 | 44.0 |
| ESI level 1 (%) | 1.0 | 0.3 |
| ESI level 2 (%) | 28.1 | 20.0 |
| ESI level 3 (%) | 52.5 | 56.4 |
| ESI level 4 (%) | 16.3 | 20.3 |
| ESI level 5 (%) | 0.4 | 0.5 |
| Medicine admissions | 8,609 | 6,363 |
| Surgery admissions | 1,831 | 1,420 |
| Avg. hourly patients seen per shift | 2.8 | 2.2 |

ESI = Emergency Severity Index; FY = fiscal year; LWBS = left without being seen.

The EDs have comparable attending physician staffing ratios despite a difference of roughly 10,000 annual visits between the CAED and the community ED (37,042 vs. 27,555 in FY2017). The same group of EPs staffs both EDs in our study. Both EDs have double EP coverage from 9 am to midnight and single coverage overnight. During the double‐coverage hours, each EP is supported by a separate team of nurses and is responsible for an evenly divided subset of rooms. Chart documentation is completed via dictation or by typing directly into the electronic health record (EHR). Neither ED has scribes.

Most residents in our study were second‐year (PGY‐2) and third‐year (PGY‐3) emergency medicine residents. Consistent with previously described practice,12 every CAED patient evaluated by a resident is also staffed by an EP. In general, a resident takes the patient's history, performs a physical examination, and then presents the findings to the EP. During the presentation, the resident ideally proposes a differential diagnosis and management plan. The EP then provides feedback to the resident (e.g., probing and clarifying questions, nodding, correcting, and using vignettes from related cases as supporting evidence). In this study, we consider such care‐related, direct EP–resident interaction to be the main activity of supervising residents. After the discussion, the EP examines the patient independently, with or without the resident. After this independent evaluation, the EP continues supervision by evaluating the resident's assessment and, if needed, modifying the treatment plan together with the resident. Orders for medications, procedures, and laboratory tests are entered into the EHR and communicated to the nurse, either by the resident or by the EP herself. Residents and EPs both write notes on the patient. The resident notes generally contain the complete history and physical examination findings, as well as the assessment and plan that constitute the medical decision making. EPs generally write an abbreviated supervisory or physicians at teaching hospitals (PATH) note, as stipulated by the Centers for Medicare & Medicaid Services. Importantly, the residents at the CAED do not assume the EP's role in any of these care‐related activities. The EP remains the chief provider of care, with the supervision of residents being an added responsibility.

Data Collection

We followed the activity categorization developed by Tipping et al.,13, 14 which includes the primary categories of “direct” and “indirect” patient care. We also followed their definition of direct patient care as “those activities involving face‐to‐face interaction between the [EP] and the patient.”13 In our study, all time that EPs spent in patient rooms is quantified as direct patient care. Accordingly, bedside charting or teaching counts toward direct patient care because the EPs perform these activities in the presence of patients. Indirect patient care includes activities “relevant to the patient's care but not performed in the presence of the patient.”13 Following Tipping et al.,14 we added customized subcategories to fit the specifics of our sites. During a pilot study, we iteratively refined the subcategories to ensure that they were “easily observable and identifiable without subjective interpretation from the observer.”14 Two experienced EPs on our research team helped finalize the categorization (see Table 2). The multitasking category comprised only instances in which an EP communicated with other nonresident providers while simultaneously working on the EHR. A resident presenting a case while the EP reviewed the related EHR was categorized as supervision.

Table 2.

Categories of Activity

| Primary Category | Main Category | Subcategory | Definition |
|---|---|---|---|
| Direct patient care | Direct patient care | Direct patient care | EPs examine, treat, or communicate with the patient at the bedside or explain treatment to the patient's family. |
| Indirect patient care | EHR | Reviewing | EPs collect information from the EHR (e.g., reviewing patient history, test results, radiology images, and ECGs). |
| Indirect patient care | EHR | Charting and putting orders | EPs enter information into the EHR (e.g., writing patient notes, ordering tests and medicines). |
| Indirect patient care | Communication | Phone calls and consults | EPs communicate with other care providers (e.g., hospitalists and specialists) over the phone for patient care purposes. |
| Indirect patient care | Communication | Face‐to‐face communication with other providers | EPs communicate with other nonresident care providers (e.g., nurses and technicians) for patient care purposes. |
| Indirect patient care | Multitasking | Communicating with other nonresident providers while working on EHR | EPs communicate with other nonresident care providers for patient care purposes, either over the phone or face to face, while working on the EHR. |
| Supervision | Supervision | Supervising residents | EPs interact with residents for patient care purposes without the presence of patients. |
| Other | Other | Personal | EPs get food, eat, visit the restroom, check private phone/e‐mail, or explicitly request privacy; EPs chat with other people for nonclinical purposes. |
| Other | Other | Transit/travel | EPs wait, move, and wash their hands. |

ECG = electrocardiogram; EHR = electronic health record.

Hypothesis Development

Hypothesis 1. A recent study by Hexom et al.15 reported that EM faculty spent a mean of 60.8 minutes supervising residents per 8‐hour observation period. Chisholm et al.2 also demonstrated that EM faculty devoted 11.9% of their time to resident supervision. Therefore, we expected EPs in our study to devote a measurable share of their time at the CAED to resident supervision, fitted into their clinical workflow.

Hypothesis 2. To fulfill the supervisory responsibility, EPs would have to reallocate time from other care‐related activities. We hypothesized that EPs would delegate portions of indirect patient care, such as communication and EHR work, to residents.

Hypothesis 3. It was unclear how EP direct patient care time would differ between ED settings. On the one hand, patient care by residents might reduce the time that EPs spend on direct patient care. On the other hand, bedside teaching of residents would increase this time. It was also unclear which of these effects would dominate in practice: EPs may constrain the autonomy of residents for reasons of efficiency and safety, and previous studies show limited use of bedside teaching in practice, as it may take more time.2, 16 We hypothesized that time spent by EPs on direct patient care at the CAED would be less than that at the community ED.

Pilot Observation

In October 2016, we conducted nine pilot observations totaling 1,764 minutes to design the data collection process and to train the observer (a third‐year PhD candidate in operations). The observer was trained to respond quickly to transitions in the EP's activity and to keep up with the EP as they moved. During the pilot study, the observed EPs described their current activity to the observer (e.g., “I am charting” or “I am going to have lunch”). The observer also asked the EPs about ambiguous situations to avoid misinterpreting the activities being performed. Because we had only a single observer, there was no concern about interobserver reliability or consistency of measurement across observers.

Selection of Participants

We selected a nonrandomized convenience sample from a total of 51 EPs in this health system. The primary inclusion criterion was that the participating EP worked at both EDs during the study. Authors were excluded from the sample. This resulted in 30 eligible EPs, of whom 25 verbally consented. They were informed that the study was about ED workflow but were blinded to the specific objective. Data confidentiality was ensured.

Study Protocol

We observed each EP twice at each ED, for a total of 100 observations (50 at the CAED and 50 at the community ED). Each observation session lasted 240 minutes,6, 17 for a total of 400 observation hours. Importantly, the EPs served as their own controls. The observed shifts were a convenience sample but were evenly distributed over the days of the week and AM/PM shifts.
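As a quick check of the design arithmetic, the observation count and total hours stated above follow directly from the balanced schedule of two observations per EP per ED:

$$
25\ \text{EPs} \times 2\ \text{EDs} \times 2\ \text{observations per ED} = 100\ \text{observations}, \qquad 100 \times 240\ \text{min} = 24{,}000\ \text{min} = 400\ \text{h}
$$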

Residents, nurses, and advanced practice providers were not observed directly, but their interactions with the observed EP were recorded. The observer shadowed an individual EP continuously except when the EP was inside a patient room or requested privacy. Tracked information included the location, the activity category, and the start and end times of each activity. All observational data were recorded using an iPad app called “Eternity.” To minimize the Hawthorne effect, the observer maintained a “safe distance” from the observed EP and did not engage in conversation during the observation periods. To protect patient privacy, the observer did not enter patient rooms. The iPad was held by the observer and was obscured from the EP during observations.

We calculated the patient load and left‐without‐being‐seen (LWBS) rate for each observation using patient‐level turnaround data from the health system's data warehouse. Patient load was calculated by adding the number of new patients who were assigned to and received care from the observed EP during the observation to the number of patients already under the observed EP's care at the beginning of the observation.13 We also calculated the percentage of the patient load at each Emergency Severity Index (ESI) level. ESI provides clinically relevant stratification of patients into five levels, from 1 (most urgent) to 5 (least urgent), based on acuity and resource needs.18 The LWBS rate was defined as the number of LWBS new patients (i.e., new patients who arrived during the observation and were assigned to the observed EP but subsequently left without being seen by this EP)19 divided by the total number of new patients who arrived during the observation and were assigned to the observed EP.
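The two definitions above can be expressed as a short computation. The following Python sketch is illustrative only: the `PatientRecord` class and its fields (`arrival_min`, `departure_min`, `seen_by_ep`, `left_without_being_seen`) are hypothetical stand‐ins for whatever the data warehouse actually provides. We also assume that a patient counts as already under the EP's care if that EP saw the patient, the patient arrived before the observation began, and the patient had not yet departed.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class PatientRecord:
    """Hypothetical patient-level turnaround record; field names are illustrative."""
    ep_id: str                     # attending EP the patient was assigned to
    arrival_min: float             # ED arrival, minutes from an arbitrary origin
    departure_min: float           # ED departure, same time origin
    seen_by_ep: bool               # True if the assigned EP provided care
    left_without_being_seen: bool  # True if the patient left before being seen


def patient_load_and_lwbs(
    records: Iterable[PatientRecord], ep_id: str, obs_start: float, obs_end: float
) -> Tuple[int, Optional[float]]:
    """Return (patient load, LWBS rate) for one observation window [obs_start, obs_end)."""
    mine = [r for r in records if r.ep_id == ep_id]
    # Patients already under this EP's care when the observation begins (assumed rule).
    carried_over = [
        r for r in mine
        if r.seen_by_ep and r.arrival_min < obs_start < r.departure_min
    ]
    # New patients who arrived during the observation and were assigned to this EP.
    new = [r for r in mine if obs_start <= r.arrival_min < obs_end]
    new_seen = [r for r in new if r.seen_by_ep]
    new_lwbs = [r for r in new if r.left_without_being_seen]
    load = len(carried_over) + len(new_seen)
    # The LWBS rate is undefined when no new patients were assigned during the window.
    lwbs_rate = len(new_lwbs) / len(new) if new else None
    return load, lwbs_rate
```

Under this representation, a 240‐minute observation corresponds to `obs_end = obs_start + 240`.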

The supervised residents were surveyed confidentially using an instrument compiled from previously reported surveys.20 Residents were asked to rate “How sufficient is the supervision you received from attending in the past four hours” on a three‐point Likert scale with the descriptors “very sufficient,” “sufficient,” and “not at all/slightly sufficient.”21 The residents were also asked to “Describe your learning outcome in the past four hours” using a three‐point scale with the descriptors “I have learned a significant amount,” “I have learned something,” and “I didn't learn anything.” These surveys were completed within 2 hours after the observations.

Measurements and Primary Data Analysis

The time spent on each activity was measured in seconds. The mean time for each main category at the community ED versus the CAED was presented as a percentage of the 240‐minute observation. We then reported the detailed time EPs spent on each subcategory at the two EDs during observations. We also reported the time an EP spent per patient load on each subcategory during an observation, calculated as the total time the EP spent on the subcategory divided by the patient load during that observation.13
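A minimal Python sketch of these measurements follows, assuming each observation is logged as a list of non‐overlapping `(subcategory, start_s, end_s)` intervals in seconds. The subcategory keys and the `MAIN_CATEGORY` mapping are hypothetical labels modeled on Table 2, not the study's actual data codes or the Eternity app's export format.

```python
from collections import defaultdict

# Hypothetical subcategory keys mapped to the main categories of Table 2.
MAIN_CATEGORY = {
    "direct_patient_care": "Direct patient care",
    "ehr_reviewing": "EHR",
    "ehr_charting_orders": "EHR",
    "phone_calls_consults": "Communication",
    "face_to_face": "Communication",
    "multitasking": "Multitasking",
    "supervising_residents": "Supervision",
    "personal": "Other",
    "transit_travel": "Other",
}

OBSERVATION_SECONDS = 240 * 60  # one 240-minute observation session


def summarize_observation(events, patient_load):
    """Summarize one observation.

    events: iterable of (subcategory, start_s, end_s) tuples in seconds,
            assumed to be mutually exclusive (non-overlapping) intervals.
    Returns (minutes per subcategory, % of session per main category,
             minutes per subcategory per unit of patient load).
    """
    seconds = defaultdict(float)
    for subcategory, start_s, end_s in events:
        if end_s < start_s:
            raise ValueError(f"activity {subcategory!r} ends before it starts")
        seconds[subcategory] += end_s - start_s

    minutes = {sub: s / 60 for sub, s in seconds.items()}
    main_pct = defaultdict(float)
    for sub, s in seconds.items():
        main_pct[MAIN_CATEGORY[sub]] += 100 * s / OBSERVATION_SECONDS
    # Per-patient-load time: total subcategory time divided by the observation's patient load.
    per_patient = {sub: m / patient_load for sub, m in minutes.items()}
    return minutes, dict(main_pct), per_patient
```

Averaging the `main_pct` values over the 50 observations at each site would give per‐site mean percentages of the kind plotted in Figure 1.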

Most of our time‐spent data did not follow a normal distribution (see Data Supplement S1, Tables A.1 and A.2, available as supporting information in the online version of this paper, which is available at http://onlinelibrary.wiley.com/doi/10.1002/aet2.10334/full); we therefore present the medians and interquartile ranges (IQRs) of minutes spent on each subcategory at the CAED versus the community ED. Differences between sites were assessed with a Wilcoxon two‐sample test. To control the false discovery rate across multiple comparisons, we adjusted the p‐values using the Benjamini‐Hochberg procedure with a false discovery rate (FDR) of 0.05.22, 23 Two‐sided FDR‐adjusted p‐values < 0.05 were considered statistically significant. All analyses were run in R version 3.4.3.24
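The following Python sketch illustrates the statistical steps described above using only the standard library. It is not the authors' R code. We assume the normal approximation of the Wilcoxon rank‐sum test with tie and continuity corrections, which is what R's `wilcox.test` uses by default when either sample has 50 or more observations or when ties are present, and the Benjamini‐Hochberg step‐up adjustment as computed by R's `p.adjust(method = "BH")`. The function names are our own.

```python
import math
import statistics
from collections import Counter


def median_iqr(values):
    """Return (median, Q1, Q3); method="inclusive" matches R's default (type 7) quantiles."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, q1, q3


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie + continuity corrections).

    Returns (W, p), where W is the rank sum of x minus n_x(n_x + 1)/2.
    """
    x, y = list(x), list(y)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = x + y
    w = sum(average_ranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    diff = w - n1 * n2 / 2
    correction = math.copysign(0.5, diff) if diff != 0 else 0.0
    z = (diff - correction) / math.sqrt(variance)
    return w, math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=pvalues.__getitem__)
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        idx = order[rank - 1]
        running_min = min(running_min, pvalues[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

In this study's design, `rank_sum_test` would be applied once per subcategory to the 50 CAED and 50 community ED observations, and the resulting p‐values would be passed together to `benjamini_hochberg`, with adjusted values below 0.05 treated as significant.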

Results

During the formal observational study from March 2017 to August 2017, the 25 observed EPs performed a total of 34,358 executions of the subcategorized activities during the 400 observation hours. On average, 355 activities were performed per observation at the CAED versus 332 at the community ED (p = 0.11). The average patient load across observations was 15 at the CAED versus 13 at the community ED (p = 0.40). The ESI levels of these patients differed significantly between the CAED and the community ED for levels 1 to 3: level 1 (1.1% vs. 0.1%, p = 0.008), level 2 (37.7% vs. 20.7%, p < 0.001), and level 3 (47.9% vs. 62.7%, p < 0.001). There was no difference in the average number of patients discharged by the participating EP during the observations (CAED = 7 vs. community = 7, p = 0.59). The average LWBS rates were similar (CAED = 1% vs. community = 0.9%, p = 0.33).

Figure 1 illustrates the time utilization profile of an average EP per 240‐minute observation session. At the CAED, EPs spent 34.2 minutes (14.2%) supervising residents (8.5 minutes/hour). Direct patient care accounted for 76.8 minutes (32.0%) versus 79.9 minutes (33.3%; p = 0.31) at the CAED and community ED, respectively. Indirect patient care accounted for 99.8 minutes (41.6%) and 128.4 minutes (53.5%) at the CAED and community ED, respectively (p < 0.001). Non–care‐related activities (personal time and travel) accounted for 12.1% versus 13.1% (p = 0.96), respectively, and did not differ significantly.
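Using the mean minutes reported above, a back‐of‐the‐envelope decomposition shows where the CAED supervision time came from. This is simple arithmetic on rounded means, not an additional statistical test, and the minutes for non–care‐related activities are back‐calculated from the reported percentages:

$$
\begin{aligned}
\text{Supervision (CAED)} &= 34.2\ \text{min} \approx 14.2\% \text{ of } 240\ \text{min} \;\Rightarrow\; 0.142 \times 60 \approx 8.5\ \text{min/h} \\
\Delta_{\text{indirect}} &= 128.4 - 99.8 = 28.6\ \text{min} \quad (28.6 / 34.2 \approx 84\%) \\
\Delta_{\text{direct}} &= 79.9 - 76.8 = 3.1\ \text{min} \\
\Delta_{\text{other}} &\approx (0.131 - 0.121) \times 240 = 2.4\ \text{min} \\
\Delta_{\text{indirect}} + \Delta_{\text{direct}} + \Delta_{\text{other}} &\approx 34.1\ \text{min} \approx 34.2\ \text{min}
\end{aligned}
$$

Roughly five‐sixths of the supervision time is matched by the reduction in indirect patient care, while the (nonsignificant) difference in direct patient care accounts for under a tenth of it.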

Figure 1.

Mean task percentages for the 240‐minute observations. CAED = community‐academic ED; EHR = electronic health record.

Comparing the CAED with the community ED, significant decreases in median time at the CAED were found in EHR review (community ED vs. CAED: 32.2 vs. 23.9 minutes, FDR‐adjusted p = 0.003), charting and placing orders (41.6 vs. 36.7 minutes, FDR‐adjusted p = 0.029), face‐to‐face communication (25.8 vs. 17.9 minutes, FDR‐adjusted p = 0.002), phone calls/consult communication (17.1 vs. 8.4 minutes, FDR‐adjusted p < 0.001), and multitasking (7.2 vs. 4.32 minutes, FDR‐adjusted p = 0.031). Personal time did not change significantly.

Median minutes spent per patient load on communication, whether face to face or by phone, decreased by almost 40% at the CAED. EPs working at the CAED spent 1.14 fewer median minutes per patient load reviewing the EHR (FDR‐adjusted p = 0.028). No significant change was found in direct patient care, charting and putting orders in the EHR, multitasking, or other non–patient care activities.

Survey Results

Thirty‐one residents completed 47 unique session surveys (response rate 78.3%; 47 of 60 session surveys). All of the responses (100%) described the supervision as “very sufficient.” Forty‐three percent of the responses reported having “learned something” and 57% reported having “learned a significant amount” during the corresponding sessions.

Discussion

Building on DeBehnke's call for a more refined understanding of the time and effort expended on educating and supervising residents, we studied how EPs adjust their clinical practices when resident supervision is added to their responsibilities. The primary strengths of this study are an extensive data set and a subject group that served as its own control. To our knowledge, ours is the first time–motion study to fully map the time utilization profile of EPs working with and without residents. While these data represent two EDs and mostly upper‐level residents, we believe they provide a reasonable starting point for other studies on this important topic.

The key findings are: 1) EPs spent a substantial portion of their clinical time supervising residents, 2) direct patient care accounted for a substantial share (about one‐third) of EPs' clinical time at both sites and was preserved when working with residents, and 3) EPs experienced a significant reduction in indirect patient care when working with residents.

First, our EP cohort spent 14.2% of their time at the CAED supervising residents. This translates to about 68 minutes over an 8‐hour shift, consistent with the supervision time reported by Hexom et al.15 The time residents spend interacting with EPs may shape their perception of educational quality,15 which is consistent with the positive survey responses from our residents.
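The conversion to an 8‐hour shift, and the comparison with the figures cited under Hypothesis 1, are simple proportions:

$$
0.142 \times 480\ \text{min} \approx 68\ \text{min per 8-h shift}, \qquad \frac{60.8\ \text{min}}{480\ \text{min}} \approx 12.7\%\ \text{(Hexom et al.)}, \qquad 11.9\%\ \text{(Chisholm et al.)}
$$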

Second, we confirmed that our EPs spent a substantial share of their time (about one‐third) performing direct patient care.2, 15, 17 More importantly, even though the EPs devoted a substantial amount of their time to resident supervision at the CAED, direct patient care time did not change significantly. Direct patient care, the primary priority for our EPs, was preserved despite substantial effort toward supervision. Consistent with the findings of Chisholm et al.,2 the EP and the resident at the CAED seldom work simultaneously at the patient's bedside, except to verify the resident's history and physical examination findings or to supervise procedures.

Third, we observed that time savings from EPs’ offloading of indirect patient care activities to the residents largely offset the supervision time: EM residents contributed to indirect patient care and expanded EPs’ capacity in addition to the direct patient care they provided, a “win–win situation” of resident supervision. In our setting, adding an intermediate or upper‐level EM resident to the CAED team extended the EPs’ ability to evaluate approximately 10,000 more patients per year (who were arguably sicker, as suggested by their ESI levels) with comparable staffing and the same bedside time.

Finally, residents freed EPs from specific indirect patient care activities such as communication and EHR work. At both the aggregate and per‐patient‐load levels, EPs delegated most of the communication to their residents, spending significantly less time on phone calls and face‐to‐face communication with other health care providers. EPs providing supervision in exchange for release from these tasks can be viewed as an apprenticeship‐type experience: “The resident will perform these indirect patient care tasks under my supervision, and in return, I will provide the resident with diagnostic feedback on how to perform them better and more effectively.” The presence of residents also gives EPs discretion over how and when to expend their efforts, a “currency of resident apprenticeship.” Several of our EPs reported that when working at the CAED, they delegated more of the charting to residents when the patient load was low but completed more of it themselves when the patient load was high, so that residents could provide more direct patient care and accelerate patient throughput. When working at the community ED, their per‐patient charting time was relatively stable and independent of patient load. This anecdotal evidence is consistent with the substantially wider IQR of per‐patient‐load EHR charting time at the CAED (Data Supplement S1, Table S.4).

Limitations

Our results should be interpreted in light of several limitations. First, some possible confounding factors were beyond our control. For example, the CAED had a larger concentration of high‐acuity patients, both anecdotally and as suggested by their ESI levels. This would bias the observed direct patient care time at the CAED upward, as critical patients typically require more direct patient care. Such bias is difficult to avoid without randomizing patients across EDs and EPs. Moreover, ESI has been criticized for its low accuracy and high variability in clinical practice;25, 26 differences in patient ESI distributions therefore may not suffice to confirm a difference in the actual acuity distributions between the two EDs.

Patient load was another potential confounder. With a high patient load, EPs probably deferred documentation to make more time for direct patient care. We tried to balance the distribution of patient loads across the two EDs by spreading the observations over days of the week and AM/PM shifts. The average patient load across observations at the CAED was nonetheless higher than at the community ED (15 vs. 13). This difference was not statistically significant (p = 0.40), probably because of the large variance in patient loads, but anecdotally, the EPs “feel” the difference of simultaneously carrying more patients at the CAED. To further adjust for patient load, we reported the average time spent per patient load on each subcategory in Table S.4. Future studies with more observations may yield more precise estimates and comparisons of patient loads.

A potentially stronger method to eliminate biases introduced by systematic differences between EDs is to compare the same EP at the same ED on shifts with versus without residents. Although no such opportunity arose in our setting, Salazar et al.27 achieved this by using a resident strike period as the control and comparing quality indicators of patient care on days when residents were on duty versus on strike.

Second, we did not separate time spent on bedside teaching from direct patient care time. This observation rubric followed previously reported studies.14 Furthermore, the supervision time we recorded already accounted for a major part of the total EM resident–faculty interaction time, as shown in a previous study.2

Third, we did not capture EPs’ time spent after shifts. EPs might have to stay late after shifts to complete patient notes. More data capturing EPs’ work after shifts would provide a more holistic analysis of resident effect on their time utilization profile.

Fourth, the accuracy of the single nonclinical observer’s interpretation, as well as the Hawthorne effect, may limit the results. However, we believe that the pilot observations, totaling almost 30 hours, provided sufficient practice for the observer to achieve reasonable recording accuracy and to remain as unobtrusive as possible during observations.13 Moreover, any inherent bias in the observer’s assessment would apply to both EDs and thus should not affect the comparison. Having multiple observers may make the study more robust and replicable, but it requires more resources and a more complex study design.28, 29

Fifth, we did not include overnight shifts in our observations because differences in coverage and in the availability of other medical providers may confound the results. Future research specifically focusing on potential differences in the resident effect during overnight shifts is warranted.

Sixth, these results may not be generalizable to other CAEDs or to traditional university‐based teaching hospitals, where staffing, supervision, and institutional culture can be quite different. For example, our resident sample consisted entirely of intermediate and upper‐level residents. The results may not generalize to institutions staffed primarily with junior trainees (i.e., medical students and interns), as senior residents are reported to be more productive than junior trainees.30, 31, 32

Finally, we had a limited number of observations per EP per ED. A larger sample, with a moderate to large number of observations per EP and per ED, possibly spanning different levels of patient load and acuity, would support a more detailed empirical analysis that goes beyond comparing descriptive statistics aggregated at the ED level.

Conclusion

Emergency physicians working with emergency medicine residents in a community‐academic ED reap significant time savings in indirect patient care and remunerate those savings in kind to the residents in the form of supervision, which accounted for 14.2% of their clinical time. These time savings allow them to foster a clinical learning environment “where residents and fellows can interact with patients under the guidance and supervision of qualified faculty members who give value, context, and meaning to those interactions” and to achieve “balance of service and education” in residency training.33, 34 More importantly, this arrangement allows community‐academic emergency physicians to preserve their ability to provide direct, high‐quality, safe patient care, which remains their core mission.

Supporting information

Data Supplement S1. Supplemental material.

AEM Education and Training 2019;3:308–316

Poster was displayed on Medical Education Day, University of Chicago Pritzker School of Medicine, November 16, 2017.

The authors have no relevant financial information or potential conflicts to disclose.

Author contributions: EEW and JVM conceived the study and designed the trial; YY, JVM, and IG designed the data collection instrument; MSK and EEW were responsible for coordinating physician scheduling; CR, JN, JA, CB, and SHB were responsible for manuscript review and coordination of resident participation; YY was primarily responsible for observation, data collection, and managing the data; YY and IG provided statistical oversight and analysis as well as manuscript preparation; and EEW, YY, and JVM were primarily responsible for manuscript generation and take responsibility for the paper as a whole.

References

- 1. Merritt C, Shah B, Santen S. Apprenticeship to entrustment: a model for clinical education. Acad Med 2017;92:1646.

- 2. Chisholm CD, Whenmouth LF, Daly EA, Cordell WH, Giles BK, Brizendine EJ. An evaluation of emergency medicine resident interaction time with faculty in different teaching venues. Acad Emerg Med 2004;11:149–55.

- 3. DeBehnke DJ. Clinical supervision in the emergency department: a costly inefficiency for academic medical centers. Acad Emerg Med 2001;8:827–8.

- 4. Atzema C, Bandiera G, Schull MJ, Coon TP, Milling TJ. Emergency department crowding: the effect on resident education. Ann Emerg Med 2005;45:276–81.

- 5. Pitts SR, Morgan SR, Schrager JD, Berger TJ. Emergency department resource use by supervised residents vs attending physicians alone. JAMA 2014;312:2394.

- 6. Chisholm CD, Weaver CS, Whenmouth L, Giles B. A task analysis of emergency physician activities in academic and community settings. Ann Emerg Med 2011;58:117–22.

- 7. Svirsky I, Stoneking LR, Grall K, Berkman M, Stolz U, Shirazi F. Resident‐initiated advanced triage effect on emergency department patient flow. J Emerg Med 2013;45:746–51.

- 8. Bhat R, Dubin J, Maloy K. Impact of learners on emergency medicine attending physician productivity. West J Emerg Med 2014;15:41–4.

- 9. McGarry J, Krall SP, McLaughlin T. Impact of resident physicians on emergency department throughput. West J Emerg Med 2010;11:333–5.

- 10. Anderson D, Golden B, Silberholz J, Harrington M, Hirshon JM. An empirical analysis of the effect of residents on emergency department treatment times. IIE Trans Healthc Syst Eng 2013;3:171–80.

- 11. Henning DJ, McGillicuddy DC, Sanchez LD. Evaluating the effect of emergency residency training on productivity in the emergency department. J Emerg Med 2013;45:414–8.

- 12. Cobb T, Jeanmonod D, Jeanmonod R. The impact of working with medical students on resident productivity in the emergency department. West J Emerg Med 2013;14:585–9.

- 13. Tipping MD, Forth VE, O'Leary KJ, et al. Where did the day go? A time‐motion study of hospitalists. J Hosp Med 2010;5:323–8.

- 14. Tipping MD, Forth VE, Magill DB, Englert K, Williams MV. Systematic review of time studies evaluating physicians in the hospital setting. J Hosp Med 2010;5:353–9.

- 15. Hexom B, Trueger NS, Levene R, Ioannides KL, Cherkas D. The educational value of emergency department teaching: it is about time. Intern Emerg Med 2017;12:207–12.

- 16. Aldeen AZ, Gisondi MA. Bedside teaching in the emergency department. Acad Emerg Med 2006;13:860–6.

- 17. Füchtbauer LM, Nørgaard B, Mogensen CB. Emergency department physicians spend only 25% of their working time on direct patient care. Dan Med J 2013;60:A4558.

- 18. Gilboy N, Tanabe T, Travers DR. Emergency Severity Index (ESI): a triage tool for emergency department care, version 4. Implementation Handbook 2012 Edition. AHRQ Publication No. 12‐0014. Rockville, MD: Agency for Healthcare Research and Quality, 2011. https://www.ahrq.gov/sites/default/files/wysiwyg/professionals/systems/hospital/esi/esihandbk.pdf. Accessed October 8, 2018.

- 19. Polevoi SK, Quinn JV, Kramer NR. Factors associated with patients who leave without being seen. Acad Emerg Med 2005;12:232–6.

- 20. Holt KD, Miller RS, Philibert I, Heard JK, Nasca TJ. Residents’ perspectives on the learning environment: data from the Accreditation Council for Graduate Medical Education resident survey. Acad Med 2010;85:512–8.

- 21. Jacoby J, Matell MS. Three‐point Likert scales are good enough. J Market Res 1971;8:495.

- 22. Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B 1995;57:289–300.

- 23. Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage 2002;15:870–8.

- 24. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing, 2015, 2018. https://scholar.google.com/scholar?cluster=4702143145247627313&hl=en&oi=scholarr. Accessed September 6, 2018.

- 25. Mistry B, Stewart De Ramirez S, Kelen G, et al. Accuracy and reliability of emergency department triage using the Emergency Severity Index: an international multicenter assessment. Ann Emerg Med 2018;71:581–7.e3.

- 26. Buschhorn HM, Strout TD, Sholl JM, Baumann MR. Emergency medical services triage using the Emergency Severity Index: is it reliable and valid? J Emerg Nurs 2013;39:55–63.

- 27. Salazar A, Corbella X, Onaga H, Ramon R, Pallares R, Escarrabill J. Impact of a resident strike on emergency department quality indicators at an urban teaching hospital. Acad Emerg Med 2001;8:804–8.

- 28. Lopetegui MA, Bai S, Yen PY, Lai A, Embi P, Payne PR. Inter‐observer reliability assessments in time motion studies: the foundation for meaningful clinical workflow analysis. AMIA Annu Symp Proc 2013;2013:889–96.

- 29. Zheng K, Guo MH, Hanauer DA. Using the time and motion method to study clinical work processes and workflow: methodological inconsistencies and a call for standardized research. J Am Med Inform Assoc 2011;18:704–10.

- 30. Jeanmonod R, Jeanmonod D, Ngiam R. Resident productivity: does shift length matter? Am J Emerg Med 2008;26:789–91.

- 31. Lammers RL, Roiger M, Rice L, Overton DT, Cucos D. The effect of a new emergency medicine residency program on patient length of stay in a community hospital emergency department. Acad Emerg Med 2003;10:725–30.

- 32. Brennan DF, Silvestri S, Sun JY, Papa L. Progression of emergency medicine resident productivity. Acad Emerg Med 2007;14:790–4.

- 33. Accreditation Council for Graduate Medical Education. Common Program Requirements. Available at: http://www.acgme.org/What-We-Do/Accreditation/Common-Program-Requirements. Accessed April 25, 2018.

- 34. Accreditation Council for Graduate Medical Education. ACGME Program Requirements for Graduate Medical Education in Emergency Medicine. Available at: http://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/110_emergency_medicine_2017-07-01.pdf. Accessed September 5, 2018.
