Abstract

Multisensory interactions are a fundamental feature of brain organization. Principles governing multisensory processing have been established by varying stimulus location, timing and efficacy independently. Determining whether and how such principles operate when stimuli vary dynamically in their perceived distance (as when looming/receding) provides an assay for synergy among these principles and also a means for linking multisensory interactions between rudimentary stimuli to higher-order signals used for communication and motor planning. Human participants indicated movement of looming or receding versus static stimuli that were visual, auditory, or multisensory combinations while 160-channel EEG was recorded. Multivariate EEG analyses and distributed source estimations were performed. Nonlinear interactions between looming signals were observed at early poststimulus latencies (∼75 ms) in analyses of voltage waveforms, global field power, and source estimations. These looming-specific interactions positively correlated with reaction time facilitation, providing direct links between neural and performance metrics of multisensory integration. Statistical analyses of source estimations identified looming-specific interactions within the right claustrum/insula extending inferiorly into the amygdala and also within the bilateral cuneus extending into the inferior and lateral occipital cortices. Multisensory effects common to all conditions, regardless of perceived distance and congruity, followed (∼115 ms) and manifested as faster transition between temporally stable brain networks (vs summed responses to unisensory conditions). We demonstrate the early-latency, synergistic interplay between existing principles of multisensory interactions. Such findings change how multisensory interactions should be modeled at neural and behavioral/perceptual levels. We also provide neurophysiologic backing for the notion that looming signals receive preferential treatment during perception.

Introduction

Understanding how the brain generates accurate representations of the world requires characterizing both the principles that govern multisensory interactions and the neural substrates that contribute to them (Calvert et al., 2004; Wallace et al., 2004; Ghazanfar and Schroeder, 2006; Stein and Stanford, 2008). Structurally, monosynaptic projections identified between unisensory (including primary) cortices raise the possibility of interactions during early stimulus processing stages (Falchier et al., 2002, 2010; Rockland and Ojima, 2003; Cappe and Barone, 2005; Cappe et al., 2009a; see also Beer et al., 2011). Consistent with this, functional data support the occurrence of multisensory interactions within 100 ms poststimulus onset and within low-level cortical areas (Giard and Peronnet, 1999; Molholm et al., 2002; Martuzzi et al., 2007; Romei et al., 2007, 2009; Cappe et al., 2010; Raij et al., 2010; Van der Burg et al., 2011). Nevertheless, the organizing principles governing such multisensory interactions in human cortex and their links to behavior/perception remain largely unresolved.

Based on single-neuron recordings, Stein and Meredith (1993) formulated several “rules” governing multisensory interactions. The principle of inverse effectiveness states that facilitative multisensory interactions are inversely proportional to the effectiveness of the best unisensory response. The temporal rule stipulates that multisensory interactions depend on the approximate superposition of neural responses to the constituent unisensory stimuli. The “spatial rule” states that multisensory interactions are contingent on stimuli being presented to overlapping excitatory zones of the neuron's receptive field. To date, the spatial rule has faithfully accounted for spatial modulation in azimuth and elevation. However, how spatial information in depth is integrated, and how information that covaries in space, time, and effectiveness is integrated (i.e., “interactions” between the abovementioned principles), remains unresolved and was the focus here.

The investigation of looming (approaching) signals is a particularly promising avenue for addressing synergy between principles of multisensory interactions. Looming signals dynamically increase in their effectiveness and spatial coverage, whereas receding stimuli diminish in effectiveness and spatial coverage. It is also noteworthy that looming cues can indicate both potential threats/collisions and success in acquiring sought-after objects/goals (Schiff et al., 1962; Schiff, 1965; Neuhoff, 1998, 2001; Ghazanfar et al., 2002; Seifritz et al., 2002; Graziano and Cooke, 2006). Evidence in non-human primates further suggests that processing of looming signals may benefit from multisensory conditions (Maier et al., 2004, 2008), a suggestion since confirmed in human performance and consistent with synergistic interplay between principles of multisensory interactions (Cappe et al., 2009b). Parallel evidence at the single-neuron level likewise refines how these principles are understood to cooperate: responses expressing multisensory interactions within subregions of a neuron's receptive field are heterogeneous and give rise to integrative “hot spots” (Carriere et al., 2008).

Within this framework, the present study sought to demonstrate such synergy by identifying the neural mechanisms underlying multisensory integration of depth cues in humans. We used a multivariate signal analysis approach for EEG termed “electrical neuroimaging” that differentiates modulations in response strength, topography, and latency, and also localizes effects using a distributed source model (Michel et al., 2004; Murray et al., 2008).

Materials and Methods

Subjects

Fourteen healthy individuals (aged 18–32 years: mean = 25 years; 7 women and 7 men; 13 right-handed) with normal hearing and normal or corrected-to-normal vision participated. Handedness was assessed with the Edinburgh questionnaire (Oldfield, 1971). No subject had a history of neurological or psychiatric illness. All participants provided written informed consent to the procedures that were approved by the Ethics Committee of the Faculty of Biology and Medicine of the University Hospital and University of Lausanne.

Stimuli and procedure

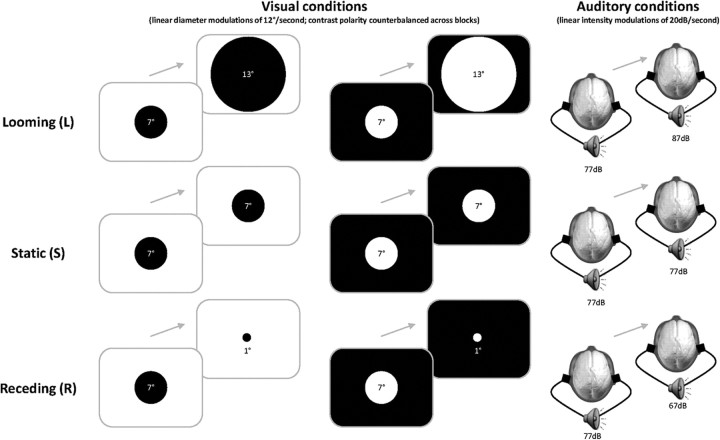

The main experiment involved the go/no-go detection of moving versus static stimuli that could be auditory, visual, or multisensory auditory-visual (A, V, and AV, respectively), as described in our recent report of the behavioral results of this study (Cappe et al., 2009b). To induce the perception of movement, visual stimuli changed in size and auditory stimuli changed in volume so as to give the impression of either looming or receding (denoted by L and R, respectively). Static stimuli (denoted by S) were of constant size/volume. The stimulus conditions are schematized in Figure 1. Specific multisensory conditions were generated using all combinations of movement type (L, R, and S) and congruence between the senses. For convenience, we use shorthand to describe experimental conditions such that, for example, ALVL refers to the multisensory combination of auditory looming and visual looming and ARVL refers to the multisensory combination of auditory receding and visual looming. There were 15 stimulus configurations in total (6 unisensory and 9 multisensory). Go trials (i.e., those on which either or both sensory modalities contained moving stimuli) made up 80% of the trials. Each of the 15 conditions was repeated 252 times across 18 blocks of randomly intermixed trials. Additional details appear in Cappe et al., 2009b.
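The condition counts and the 80% go-trial proportion can be checked by enumerating the design. The sketch below is illustrative only: the function names are hypothetical, and the assumption that trials were split evenly across the 18 blocks is ours rather than a detail stated above.

```python
from itertools import product

MOVES = ("L", "R", "S")   # looming, receding, static
REPS_PER_CONDITION = 252  # from the text
N_BLOCKS = 18             # from the text

def build_conditions():
    """Return (unisensory, multisensory) condition labels, e.g. 'AL', 'ALVR'."""
    unisensory = [f"A{m}" for m in MOVES] + [f"V{m}" for m in MOVES]
    multisensory = [f"A{a}V{v}" for a, v in product(MOVES, MOVES)]
    return unisensory, multisensory

def is_go(condition):
    """Go trial if at least one modality moves (looming or receding)."""
    movements = condition[1::2]  # the character after each 'A' or 'V'
    return any(m in ("L", "R") for m in movements)

uni, multi = build_conditions()
conditions = uni + multi
n_go = sum(is_go(c) for c in conditions)
go_fraction = n_go / len(conditions)
total_trials = REPS_PER_CONDITION * len(conditions)
# Hypothetical: assumes the trials were spread evenly over the blocks.
trials_per_block = total_trials / N_BLOCKS

print(len(uni), len(multi), n_go, go_fraction, total_trials, trials_per_block)
```

Only the doubly static condition (ASVS) and the two unisensory static conditions (AS, VS) are no-go, so 12 of the 15 equally repeated conditions are go trials, which gives the 80% figure; the design totals 3780 trials, or 210 per block under the even-split assumption.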

Figure 1.

Stimuli and paradigm. Participants performed a go/no-go detection of moving (looming, receding) versus static stimuli that could be auditory, visual, or multisensory auditory-visual. All the stimuli were initially of the same size/intensity to ensure that subjects used dynamic information to perform the task. The perception of movement was induced by linearly changing the size of the centrally displayed disk for the visual condition and by changing the intensity of the complex tone for the auditory condition. To control for differences in stimulus energy in the visual modality, opposite contrast polarities were used across blocks of trials.

Auditory stimuli, 10 dB rising-intensity (looming signal) and falling-intensity (receding signal) 1000 Hz complex tones composed of a triangular waveform, were generated with Adobe Audition software (Adobe Systems Inc.). Prior research has shown that tonal stimuli produce more reliable perceptions of looming and receding (Neuhoff, 1998) and may also be preferentially involved in multisensory integration (Maier et al., 2004; Romei et al., 2009). Auditory stimuli were presented over insert earphones (Etymotic model ER4S); they were sampled at 44.1 kHz and had 10 ms onset and offset ramps (to avoid clicks). The visual stimulus consisted of a centrally presented disc (either black on a white background or white on a black background, counterbalanced across blocks of trials to control for differences in contrast and size between these dynamic stimuli) that symmetrically expanded (from 7° to 13° diameter, with the radius increasing linearly at a constant rate) in the case of looming or contracted (from 7° to 1° diameter) in the case of receding. All stimuli were 500 ms in duration, and the interstimulus interval varied from 800 to 1400 ms so that participants could not anticipate the timing of stimulus presentation. Stimulus delivery and response recording were controlled by E-Prime (Psychology Software Tools; www.pstnet.com).
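A minimal sketch of how comparable stimuli could be synthesized is given below. It is not the Adobe Audition procedure used here. It assumes a level change that is linear in dB over the full 500 ms, linear 10 ms ramps, and an arbitrary amplitude of 1 at onset, none of which is specified above. All names are hypothetical.

```python
import numpy as np

FS = 44_100     # audio sampling rate (Hz), from the text
DUR = 0.5       # stimulus duration (s), from the text
F0 = 1000.0     # tone frequency (Hz), from the text
RAMP = 0.010    # onset/offset ramp duration (s), from the text

def triangle_wave(t, f):
    """Unit-amplitude triangular waveform at frequency f (Hz)."""
    phase = t * f - np.floor(t * f + 0.5)  # sawtooth in [-0.5, 0.5)
    return 2.0 * np.abs(2.0 * phase) - 1.0

def make_tone(direction, delta_db=10.0):
    """Hypothetical looming (+10 dB) or receding (-10 dB) triangular tone.

    Assumption: the level changes linearly in dB from 0 dB at onset,
    and the onset/offset ramps are linear.
    """
    n = int(round(FS * DUR))
    t = np.arange(n) / FS
    sign = {"looming": 1.0, "receding": -1.0}[direction]
    level_db = sign * delta_db * t / DUR      # same starting level for both
    gain = 10.0 ** (level_db / 20.0)          # dB to amplitude factor
    ramp_n = int(round(FS * RAMP))
    env = np.ones(n)
    env[:ramp_n] = np.linspace(0.0, 1.0, ramp_n)
    env[-ramp_n:] = np.linspace(1.0, 0.0, ramp_n)
    return triangle_wave(t, F0) * gain * env

def disc_diameter_deg(t, direction):
    """Disc diameter (deg) at time t (s); starts at 7 deg and changes linearly."""
    end = {"looming": 13.0, "receding": 1.0}[direction]
    return 7.0 + (end - 7.0) * np.clip(t / DUR, 0.0, 1.0)
```

Because the diameter is twice the radius, a radius that changes linearly at a constant rate implies a diameter that also changes linearly, which is why the visual sketch interpolates the diameter directly between its start and end values.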

EEG acquisition and analyses

Continuous EEG was acquired at 1024 Hz through a 160-channel Biosemi ActiveTwo AD-box (www.biosemi.com) referenced to the common mode sense (CMS; active electrode) and grounded to the driven right leg (DRL; passive electrode), which functions as a feedback loop driving the average potential across the electrode montage to the amplifier zero (full details, including a diagram of this circuitry, can be found at http://www.biosemi.com/faq/cms&drl.htm). Epochs of EEG from 100 ms prestimulus to 500 ms poststimulus onset were averaged for each of the four stimulus conditions and from each subject to calculate the event-related potential (ERP). Only trials leading to correct responses were included. In addition to the application of an automated artifact criterion of ±80 μV, the data were visually inspected to reject epochs with blinks, eye movements, or other sources of transient noise. Baseline was defined as the 100 ms prestimulus period. For each subject's ERPs, data at artifact electrodes were interpolated (Perrin et al., 1987). Data were baseline corrected using the prestimulus period, bandpass filtered (0.18–60.0 Hz), and recalculated against the average reference.
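The epoching, baseline, artifact-rejection, and re-referencing steps can be sketched as follows. This is a simplified illustration under stated assumptions: it omits filtering, electrode interpolation, visual inspection, and the restriction to correct trials; it applies the ±80 μV criterion after baseline correction (the order is not specified above); and the function names are hypothetical.

```python
import numpy as np

FS = 1024            # EEG sampling rate (Hz), from the text
PRE_MS, POST_MS = 100, 500
REJECT_UV = 80.0     # automated artifact criterion (+/- microvolts)

def epoch_window(fs=FS):
    """Sample offsets of the -100..500 ms epoch relative to stimulus onset."""
    start = -int(round(PRE_MS * fs / 1000))
    stop = int(round(POST_MS * fs / 1000))
    return start, stop

def make_erp(eeg, onsets, fs=FS):
    """Hypothetical sketch of baseline correction, rejection, averaging, re-referencing.

    eeg: (n_channels, n_samples) continuous data in microvolts.
    onsets: stimulus-onset sample indices, assumed far enough from the
    recording edges for a full epoch.
    """
    start, stop = epoch_window(fs)
    kept = []
    for onset in onsets:
        ep = eeg[:, onset + start:onset + stop]
        # Subtract the mean of the 100 ms prestimulus period on each channel.
        ep = ep - ep[:, :-start].mean(axis=1, keepdims=True)
        # Drop the epoch if any channel exceeds the +/-80 microvolt criterion.
        if np.abs(ep).max() <= REJECT_UV:
            kept.append(ep)
    if not kept:
        raise ValueError("all epochs were rejected")
    erp = np.mean(kept, axis=0)
    # Re-reference to the average of all channels at each time point.
    erp = erp - erp.mean(axis=0, keepdims=True)
    return erp, len(kept)
```

At 1024 Hz the 100 ms baseline spans 102 samples and the full epoch 614 samples, so the 11-sample temporal criterion used below corresponds to roughly 11 ms.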

General analysis strategy.

Multisensory effects and effects of spatial congruence were identified with a multistep analysis procedure, which we refer to as electrical neuroimaging and which is implemented in the freeware Cartool (Brunet et al., 2011; http://sites.google.com/site/fbmlab/cartool). Analyses were applied that use both local and global measures of the electric field at the scalp. These so-called electrical neuroimaging analyses allowed us to differentiate effects following from modulations in the strength of responses of statistically indistinguishable brain generators from alterations in the configuration of these generators (viz. the topography of the electric field at the scalp), as well as latency shifts in brain processes across experimental conditions (Michel et al., 2004, 2009; Murray et al., 2005, 2008). In addition, we used the local autoregressive average distributed linear inverse solution (LAURA; Grave de Peralta Menendez et al., 2001, 2004) to visualize and statistically contrast the likely underlying sources of effects identified in the preceding analysis steps.

ERP waveform modulations.

As a first level of analysis, we analyzed waveform data from all electrodes as a function of time poststimulus onset in a series of pairwise comparisons (t tests) between responses to the multisensory pair and summed constituent unisensory responses. Temporal auto-correlation at individual electrodes was corrected through the application of an 11 contiguous data-point temporal criterion (∼11 ms) for the persistence of differential effects (Guthrie and Buchwald, 1991). Similarly, spatial correlation was addressed by considering as reliable only those effects that entailed at least 11 electrodes from the 160-channel montage. Nonetheless, we would emphasize that the number of electrodes exhibiting an effect at a given latency will depend on the reference, and this number is not constant across choices of reference because significant effects are not simply redistributed across the montage (discussed in Tzovara et al., in press). Likewise, the use of an average reference receives support from biophysical laws as well as the implicit recentering of ERP data to such when performing source estimations (discussed by Brunet et al., 2011). Analyses of ERP voltage waveform data (vs the average reference) are presented here to provide a clearer link between canonical ERP analysis approaches and electrical neuroimaging. The results of this ERP waveform analysis are presented as an area plot representing the number of electrodes exhibiting a significant effect as a function of time (poststimulus onset). This type of display was chosen to provide a sense of the dynamics of a statistical effect between conditions as well as the relative timing of effects across contrasts. We emphasize that while these analyses give a visual impression of specific effects within the dataset, our conclusions are principally based on reference-independent global measures of the electric field at the scalp that are described below.
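The statistical criteria above can be illustrated with a short sketch. It is one possible reading of the procedure, not the Cartool implementation. It thresholds paired t values against the two-tailed p < 0.05 critical value for 13 degrees of freedom (valid only for N = 14), keeps runs of at least 11 consecutive significant samples per electrode, and then reports for each time point the number of electrodes passing both criteria (zero when fewer than 11 do), which is the quantity shown in an area plot. All names are hypothetical.

```python
import numpy as np

T_CRIT_DF13 = 2.160   # two-tailed p < .05 critical t for df = 13 (N = 14)
MIN_SAMPLES = 11      # temporal criterion: 11 contiguous data points
MIN_ELECTRODES = 11   # spatial criterion: at least 11 electrodes

def paired_t(pair, summed):
    """Paired t values; inputs are (n_subjects, n_electrodes, n_times)."""
    d = pair - summed
    n = d.shape[0]
    return d.mean(axis=0) / (d.std(axis=0, ddof=1) / np.sqrt(n))

def apply_temporal_criterion(sig, min_len=MIN_SAMPLES):
    """Keep only runs of >= min_len consecutive significant samples per electrode."""
    out = np.zeros_like(sig, dtype=bool)
    for e in range(sig.shape[0]):
        run_start = None
        for i, s in enumerate(np.append(sig[e], False)):  # False closes a final run
            if s and run_start is None:
                run_start = i
            elif not s and run_start is not None:
                if i - run_start >= min_len:
                    out[e, run_start:i] = True
                run_start = None
    return out

def significant_electrode_counts(pair, summed, t_crit=T_CRIT_DF13):
    """Electrodes passing both criteria at each time point (0 if fewer than 11)."""
    sig = np.abs(paired_t(pair, summed)) > t_crit
    sig = apply_temporal_criterion(sig)
    counts = sig.sum(axis=0)
    return np.where(counts >= MIN_ELECTRODES, counts, 0)
```

The first time point with a nonzero count would then be read as the onset of nonlinear interactions for a given pair versus sum contrast.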

Global electric field analyses.

The collective poststimulus group-average ERPs were subjected to a topographic cluster analysis based on a hierarchical clustering algorithm (Murray et al., 2008). This clustering identifies stable electric field topographies (hereafter template maps). The ERP topography is independent of the reference, and modulations in topography necessarily reflect modulations in the configuration of underlying generators (Lehmann, 1987). Additionally, the clustering is exclusively sensitive to topographic modulations, because the data are first normalized by their instantaneous Global Field Power (GFP). The optimal number of temporally stable ERP clusters (i.e., the minimal number of maps that accounts for the greatest variance of the dataset) was determined using a modified Krzanowski-Lai criterion (Murray et al., 2008). The clustering makes no assumption about the orthogonality of the derived template maps (Pourtois et al., 2008; De Lucia et al., 2010). Template maps identified in the group-average ERP were then submitted to a fitting procedure wherein each time point of each single-subject ERP was labeled according to the template map with which it best correlated spatially (Murray et al., 2008), so as to statistically test the presence of each map in the moment-by-moment scalp topography of the ERP and differences in such presence across conditions. Additionally, temporal information about the presence of a given template map was derived, quantifying (among other things) when a given template map was last labeled in the single-subject ERPs. These values were submitted to repeated-measures ANOVA. In addition to testing for modulations in the electric field topography across conditions, this analysis provides a more objective means of defining ERP components: we define an ERP component as a time period of stable electric field topography.
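The single-subject fitting step can be sketched as below. This is a simplified illustration with hypothetical names, not the Cartool procedure. Spatial correlation is computed as a Pearson correlation across electrodes between average-referenced, GFP-normalized maps (which keeps it polarity-sensitive and insensitive to response strength); each time point in an assumed 73–145 ms window is assigned to its best-correlated template; and the function returns the frequency of map presence and the last latency labeled with the first template.

```python
import numpy as np

def spatial_correlation(maps, templates):
    """Correlation across electrodes between every map and every template.

    maps: (n_times, n_electrodes); templates: (n_templates, n_electrodes).
    """
    def normalise(x):
        x = x - x.mean(axis=-1, keepdims=True)     # average reference
        return x / x.std(axis=-1, keepdims=True)    # divide by GFP
    n_el = maps.shape[-1]
    return normalise(maps) @ normalise(templates).T / n_el

def fit_templates(erp, templates, times_ms, window=(73, 145)):
    """Label each time point in the window with its best-correlated template.

    erp: (n_electrodes, n_times) single-subject ERP; times_ms: (n_times,).
    Returns labels, the fraction of time points per template ('frequency of
    map presence'), and the last latency labeled as template 0.
    """
    in_win = (times_ms >= window[0]) & (times_ms <= window[1])
    corr = spatial_correlation(erp[:, in_win].T, templates)
    labels = corr.argmax(axis=1)
    presence = np.bincount(labels, minlength=len(templates)) / labels.size
    t_win = times_ms[in_win]
    first_map = np.flatnonzero(labels == 0)
    last_first_map = t_win[first_map[-1]] if first_map.size else np.nan
    return labels, presence, last_first_map
```

Returning NaN when a subject never shows the first template mirrors the situation described in the Results, where missing maps produce missing values rather than zeros.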

Modulations in the strength of the electric field at the scalp were assessed using GFP (Lehmann and Skrandies, 1980; Koenig and Melie-García, 2010) for each subject and stimulus condition. GFP is calculated as the square root of the mean of the squared value recorded at each electrode (vs the average reference) and represents the spatial SD of the electric field at the scalp. It yields larger values for stronger electric fields. Because GFP is calculated across the entire electrode montage, comparisons across conditions will be identical, regardless of the reference used (though we would note that the above formula uses an average reference). In this way, GFP constitutes a reference-independent measure. GFP modulations were analyzed via ANOVAs over the periods of interest defined by the above topographic cluster analysis (i.e., 73–113 ms and 114–145 ms).
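The GFP definition translates directly into code. The sketch below (hypothetical function name) also checks numerically that GFP computed this way does not change when the data are re-referenced to an arbitrary electrode, which is the sense in which it is reference-independent.

```python
import numpy as np

def global_field_power(erp):
    """GFP at each time point for an (n_electrodes, n_times) ERP.

    Square root of the mean squared voltage after re-referencing to the
    average reference, i.e. the spatial standard deviation across electrodes.
    """
    avg_ref = erp - erp.mean(axis=0, keepdims=True)
    return np.sqrt((avg_ref ** 2).mean(axis=0))

rng = np.random.default_rng(3)
erp = rng.normal(size=(160, 50))
gfp = global_field_power(erp)
gfp_rereferenced = global_field_power(erp - erp[5])  # reference to electrode 5
print(np.allclose(gfp, gfp_rereferenced), np.allclose(gfp, erp.std(axis=0)))
```

Re-referencing subtracts the same value from every electrode at a given time point, and that common offset is removed by the average reference, so both checks hold.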

Source estimations.

We estimated the localization of the electrical activity in the brain using a distributed linear inverse solution applying the LAURA regularization approach, which incorporates biophysical laws as constraints (Grave de Peralta Menendez et al., 2001, 2004; for review, see also Michel et al., 2004; Murray et al., 2008). LAURA selects the source configuration that best mimics the biophysical behavior of electric vector fields (i.e., activity at one point depends on the activity at neighboring points according to electromagnetic laws). In our study, homogeneous regression coefficients in all directions and within the whole solution space were used. LAURA uses a realistic head model, and the solution space included 4024 nodes, selected from a 6 × 6 × 6 mm grid equally distributed within the gray matter of the Montreal Neurological Institute's average brain (courtesy of R. Grave de Peralta Menendez and S. Gonzalez Andino; http://www.electrical-neuroimaging.ch/). Prior basic and clinical research from members of our group and others has documented and discussed in detail the spatial accuracy of the inverse solution model used here (Grave de Peralta Menendez et al., 2004; Michel et al., 2004; Gonzalez Andino et al., 2005; Martuzzi et al., 2009). In general, the localization accuracy is considered to be on the order of the grid size (here 6 mm). The results of the above topographic pattern analysis defined the time period for which intracranial sources were estimated and statistically compared between conditions (here 73–113 ms poststimulus). Before calculation of the inverse solution, the ERP data were down-sampled and affine-transformed to a common 111-channel montage. Statistical analyses of source estimations were performed by first averaging the ERP data across time to generate a single data point for each participant and condition, which increases the signal-to-noise ratio of the data from each participant. The inverse solution was then estimated for each of the 4024 nodes. These data were then submitted to a three-way ANOVA using within-subject factors of pair/sum condition, stimulus congruence/incongruence, and visual looming/receding. A spatial extent criterion of at least 17 contiguous significant nodes was also applied (see also Toepel et al., 2009; Cappe et al., 2010; De Lucia et al., 2010; Knebel et al., 2011; Knebel and Murray, 2012 for a similar spatial criterion). This criterion was determined using the AlphaSim program (available at http://afni.nimh.nih.gov), assuming a spatial smoothing of 6 mm FWHM, and indicates a 3.54% probability of a cluster of at least 17 contiguous nodes, which gives an equivalent node-level p-value of p ≤ 0.0002. The results of the source estimations were rendered on the Montreal Neurological Institute's average brain, with the Talairach and Tournoux (1988) coordinates of the largest statistical differences within each cluster indicated.
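The spatial extent criterion can be illustrated with a simple connected-components pass over the source grid. This is a hedged sketch, not the procedure used with AlphaSim: it assumes that "contiguous" means nodes one 6 mm grid step apart along a single axis (face adjacency), and it takes an already-thresholded node-level significance mask as input. All names are hypothetical.

```python
import numpy as np
from collections import deque

GRID_MM = 6.0      # solution-space grid spacing, from the text
MIN_CLUSTER = 17   # spatial extent criterion, from the text

def clusters_on_grid(coords_mm, significant, spacing=GRID_MM):
    """Group significant nodes into face-adjacent clusters.

    coords_mm: (n_nodes, 3) node positions lying on a regular grid.
    significant: (n_nodes,) boolean mask from the node-level test.
    Assumption: nodes one grid step apart along one axis are neighbours.
    """
    idx = np.round(coords_mm / spacing).astype(int)
    lookup = {tuple(v): i for i, v in enumerate(idx) if significant[i]}
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    seen, clusters = set(), []
    for start in lookup.values():
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:  # breadth-first search over neighbouring significant nodes
            node = queue.popleft()
            members.append(node)
            x, y, z = idx[node]
            for dx, dy, dz in steps:
                nb = lookup.get((x + dx, y + dy, z + dz))
                if nb is not None and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        clusters.append(sorted(members))
    return clusters

def extent_filter(coords_mm, significant, min_size=MIN_CLUSTER):
    """Keep only significant nodes that belong to clusters of >= min_size nodes."""
    keep = np.zeros(len(significant), dtype=bool)
    for members in clusters_on_grid(coords_mm, significant):
        if len(members) >= min_size:
            keep[members] = True
    return keep
```

A different neighbour definition (for example, including diagonal neighbours) would merge more nodes into clusters and therefore make the same 17-node criterion more lenient.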

Results

The behavioral results with this paradigm (Fig. 1) have been published separately (Cappe et al., 2009b). The main finding was a selective facilitation for multisensory looming stimuli (auditory-visual looming, denoted ALVL). When participants were asked to detect stimulus movement, behavior was facilitated for all multisensory conditions compared with unisensory conditions. Interestingly, participants were faster to detect movement of multisensory looming stimuli than of receding (auditory-visual receding, denoted ARVR) or incongruent stimuli (auditory looming with visual receding and auditory receding with visual looming, denoted ALVR and ARVL, respectively). In a movement rating task with the same stimuli, this selective facilitation for looming stimuli was again evident in higher movement ratings for looming than for receding stimuli, particularly under multisensory conditions (Cappe et al., 2009b). Only multisensory looming stimuli resulted in enhancement beyond that induced by the sheer presence of auditory-visual stimuli, as revealed by contrasts with multisensory conditions in which one sensory modality consisted of static (i.e., constant size/volume) information (cf. Cappe et al., 2009b, their Fig. 5). These behavioral results are recapitulated here in Table 1. During the detection task, we recorded ERPs from each subject and analyzed these data as described below and in Materials and Methods.

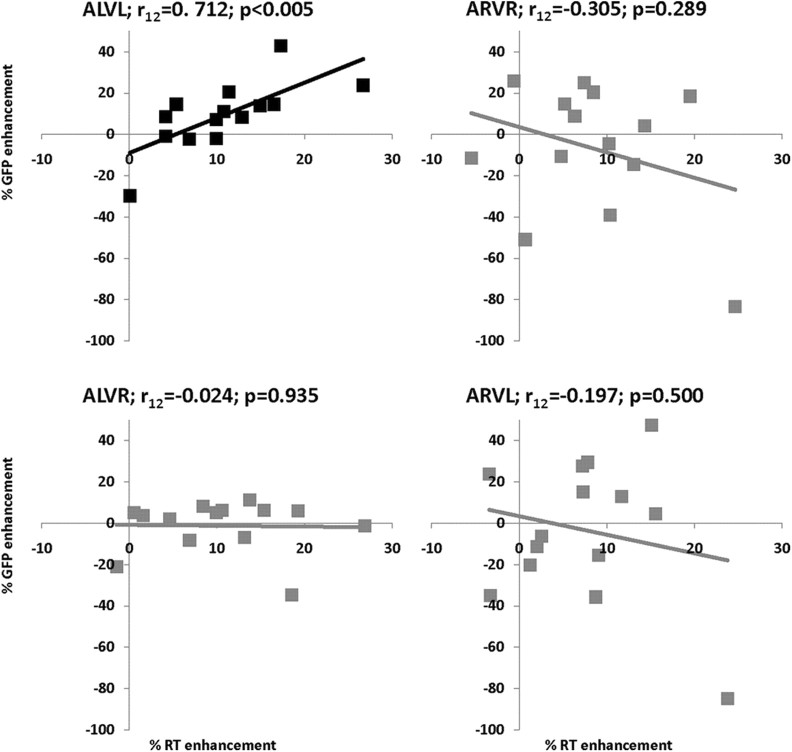

Figure 5.

Relationship between RT and GFP multisensory enhancements. These scatter plots relate the percentage of RT enhancement to the percentage of GFP enhancement over the 73–113 ms period (x-axis and y-axis, respectively) for each of the multisensory conditions. The multisensory enhancement index is defined as the difference between the multisensory condition and the best unisensory condition divided by the best unisensory condition for each participant. A significant, positive, and linear correlation was exhibited only for the multisensory looming condition (ALVL).

Table 1.

Psychophysics results

| Condition | Mean reaction time (ms) ± SEM | Mean movement rating (1–5 scale) ± SEM |
|---|---|---|
| ALVL | 439 ± 19 | 4.20 ± 0.15 |
| ARVR | 457 ± 20 | 3.46 ± 0.17 |
| ALVR | 447 ± 20 | 4.08 ± 0.15 |
| ARVL | 456 ± 21 | 3.28 ± 0.17 |

ERP waveform analyses

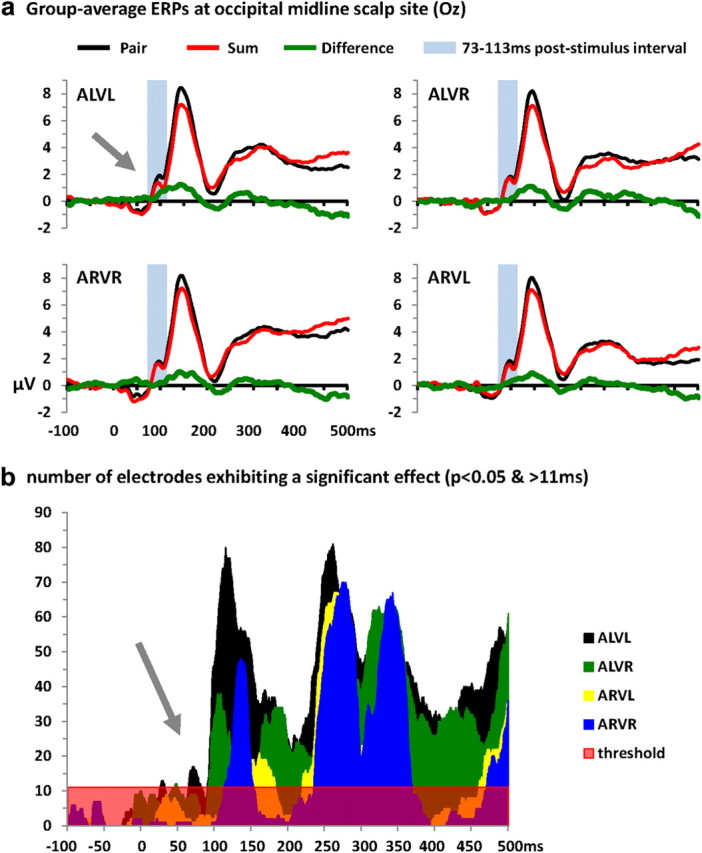

Our analyses here are based on the application of an additive model to detect nonlinear neural response interactions, wherein the ERP in response to the multisensory condition is contrasted with the summed ERPs in response to the constituent auditory and visual conditions (hereafter referred to as “pair” and “sum” ERPs, respectively). The first level of analysis focused on determining the timing of differences between the multisensory pair and the sum of unisensory ERPs. Visual inspection of an exemplar occipital electrode suggests that nonlinear interactions begin earlier for looming conditions (ALVL) than for receding (ARVR) or incongruent conditions (ALVR and ARVL) (Fig. 2a). The group-averaged ERPs from the pair and sum responses were compared statistically by paired t tests. These analyses were applied for each condition (ALVL, ARVR, ALVR, ARVL; for the analyses of static conditions, see Cappe et al., 2010). Statistical analyses of the pair versus sum ERP waveforms as a function of time are displayed in Figure 2b and show significant and temporally sustained nonlinear neural response interactions for each condition, but with different latencies (statistical criteria: p < 0.05 for a minimum of 11 ms duration at a given electrode and a spatial criterion of at least 11 electrodes). Using these criteria, the earliest nonlinear response interactions began at 68 ms poststimulus onset for the multisensory looming condition (similar results were also found for static conditions; see Cappe et al., 2010), whereas such effects were delayed until 119 ms for the multisensory receding condition. For incongruent conditions, these differences began at 95 ms poststimulus for ALVR and at 140 ms poststimulus for ARVL.

Figure 2.

Group-averaged (N = 14) voltage waveforms and ERP voltage waveform analyses. a, Data are displayed at a midline occipital electrode site (Oz) for the response to the multisensory pair (black traces), the summed unisensory responses (red traces), and their difference (green traces). The arrow indicates modulations evident for multisensory looming conditions that were not apparent for any other multisensory combination over the ∼70–115 ms poststimulus interval. b, The area plots show the results of millisecond-by-millisecond paired contrasts (t tests) across the 160 scalp electrodes comparing multisensory and summed unisensory responses. The number of electrodes showing a significant difference is plotted as a function of time (statistical criteria: p < 0.05 for a minimum of 11 consecutive milliseconds and 11 scalp sites). Nonlinear neural response interactions started at 68 ms poststimulus onset for multisensory looming stimuli (ALVL), at 119 ms for the multisensory receding (ARVR) condition, and at 95 and 140 ms for the incongruent multisensory conditions ALVR and ARVL, respectively.

Global electric field analyses

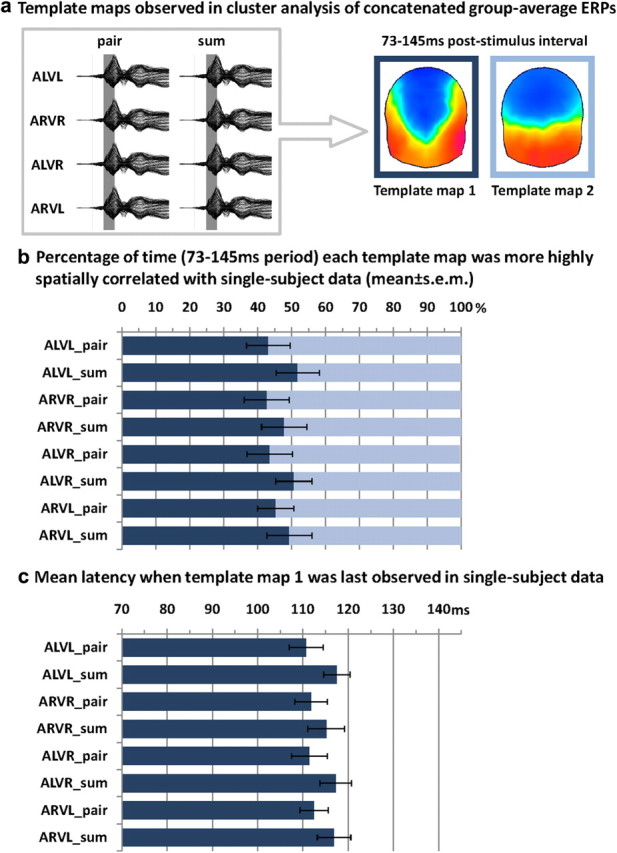

A hierarchical topographic cluster analysis was performed on the group-average ERPs concatenated across the 8 experimental conditions (pair/sum × ALVL, ARVR, ALVR, ARVL) to identify periods of stable electric field topography both within and between experimental conditions. For this concatenated dataset, 9 template maps were identified, with a global explained variance of 95.1%. Over the 73–145 ms poststimulus period, two different maps were identified in the group-average data that appeared to account differently for the pair and sum conditions (Fig. 3a). The first map appeared earlier for the multisensory pair than for the summed unisensory responses in all conditions (i.e., all combinations of looming and receding auditory and visual stimuli). The amount of time each template map yielded a higher spatial correlation with the single-subject data from each condition was quantified over the 73–145 ms poststimulus period as “the frequency of map presence” and submitted to a repeated-measures ANOVA using within-subject factors of pair/sum condition, stimulus congruence/incongruence, visual looming/receding, and map (Fig. 3b). Consistent with a faster transition from one map to another under multisensory conditions, there was a significant interaction between pair/sum condition and template map (F(1,13) = 11.957; p = 0.004; ηp² = 0.479). By extension, such topographic differences argue for a latency shift in the configuration of the underlying intracranial sources. This latency shift was further supported by an analysis of the time at which the first of the two template maps was last observed (i.e., yielded a higher spatial correlation than the other template map) in the single-subject data (Fig. 3c). The same factors as above were used, except for template map. In line with the preceding result, there was a significant main effect of pair/sum condition (F(1,9) = 5.154; p = 0.049; ηp² = 0.364; note the lower degrees of freedom in this specific analysis, because not all maps were observed in all subjects, leading to missing values rather than entries of 0). Specifically, in the case of responses to multisensory stimuli, the transition occurred at 113 ms poststimulus on average; this latency is used below to define time windows of interest for analyses of Global Field Power and source estimations.

Figure 3.

Topographic cluster analyses and single-subject fitting based on spatial correlation. a, The hierarchical clustering analysis was applied to the concatenated group-averaged ERPs from all pair and sum conditions (schematized by the gray box) and identified two template maps accounting for responses over the 73–145 ms poststimulus period that are shown on the right of this panel. b, The spatial correlation between each template map (Template maps 1 and 2) was calculated with the single-subject data from each condition, and the percentage of time a given template map yielded a higher spatial correlation was quantified (mean ± SEM shown) and submitted to ANOVA that revealed a significant interaction between pair versus sum conditions and template map. c, The latency when template map 1 was last observed (measured via spatial correlation) in the single-subject data from each condition was quantified and submitted to ANOVA. There was an earlier transition from template map 1 to template map 2 under multisensory versus summed unisensory conditions.

Distinct topographies were also identified at the group-average level across conditions (pair versus sum) over the 250–400 ms poststimulus period (Fig. 3a). The topographic analysis indicated that the predominant maps differed between the multisensory pair (one map) and summed unisensory conditions (two maps) over this period. However, differences after 250 ms could also arise from the use of the additive model to determine nonlinear interactions (i.e., summation of motor activity; discussed by Cappe et al., 2010). We therefore do not focus on this observation (see also Besle et al., 2004; Murray et al., 2005).

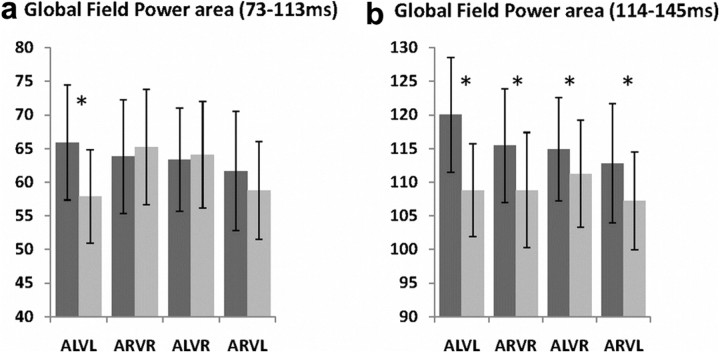

For each pair and sum condition and each subject, the mean Global Field Power was calculated over the 73–113 ms and 114–145 ms periods and submitted to a three-way ANOVA using within-subject factors of pair/sum condition, stimulus congruence/incongruence, and visual looming/receding. Over the 73–113 ms period (i.e., during the first period of stable topography), there were superadditive interactions for the multisensory looming condition that were not observed for other conditions (Fig. 4a; three-way interaction F(1,13) = 4.862; p = 0.046; ηp² = 0.272 and post hoc t test for the ALVL condition p = 0.02). Over the 114–145 ms period (i.e., during the second period of stable topography), there were superadditive interactions for all multisensory conditions (Fig. 4b; main effect of pair vs sum F(1,13) = 4.913; p = 0.045; ηp² = 0.274). The early preferential nonlinear interactions observed here for multisensory looming conditions are consistent with the observations based on voltage ERP waveforms (Fig. 2).
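As a consistency check on the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom via ηp² = F·df_effect / (F·df_effect + df_error), a standard identity that follows from F = (SS_effect/df_effect) / (SS_error/df_error). The short script below (hypothetical names) applies it to the four F values reported in the topographic and GFP analyses.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)

# (F, df_effect, df_error, reported partial eta squared), from the text
reported = {
    "pair/sum x map, frequency of map presence": (11.957, 1, 13, 0.479),
    "pair/sum, last latency of map 1": (5.154, 1, 9, 0.364),
    "three-way interaction, GFP 73-113 ms": (4.862, 1, 13, 0.272),
    "pair/sum main effect, GFP 114-145 ms": (4.913, 1, 13, 0.274),
}

for name, (f, d1, d2, eta) in reported.items():
    print(f"{name}: {partial_eta_squared(f, d1, d2):.3f} (reported {eta})")
```

Each recomputed value agrees with the reported ηp² to three decimals, including the analysis with reduced error degrees of freedom (df = 9).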

Figure 4.

Global field power analyses. a, b, Modulations in response strength were identified using global field power (GFP), which was quantified over the 73–113 ms poststimulus period (a) and 114–145 ms poststimulus period (b) for each multisensory condition and the sum of unisensory conditions (dark and light gray bars, respectively). Mean ± SEM values are displayed, and asterisks indicate significant effects between specific pair and sum conditions. There was a significant three-way interaction over the 73–113 ms period, with evidence of selective nonlinear modulations for multisensory looming conditions. There was a significant main effect of pair versus sum conditions over the 114–145 ms period, indicative of generally stronger responses to multisensory versus summed unisensory conditions.

Excluding accounts based on differences in stimulus energy

We deliberately used an experimental design wherein all stimulus conditions had the same initial volume and/or size (Fig. 1) so that subjects could not perform the task based on initial differences across conditions but instead needed to evaluate the stimuli dynamically. However, a reasonable criticism is that the conditions consequently differ in their total stimulus energy. With regard to the visual modality, we controlled for such differences by counterbalancing the contrast polarity across blocks of trials such that the total number of black and white pixels was equivalent across conditions. With regard to the auditory modality, no such control was implemented. Thus, there is a potential confound between perceived direction and stimulus intensity.

However, it is important to recall that all of the pair versus sum comparisons are fully equated in terms of stimulus energy. Our results also provide an a posteriori argument against this possibility. Neither the main effect of pair/sum condition (or its interaction with map) in the topographic cluster analysis nor the three-way interaction observed in the global field power analysis can be explained by simple differences in acoustic intensity. Moreover, strict application of the principle of inverse effectiveness would predict that receding stimuli yield greater interactions than looming stimuli. Yet there was no evidence of this in our analyses. Rather, only the multisensory looming condition resulted in early-stage global field power (and voltage waveform) modulations, and subsequent effects were common to all multisensory conditions regardless of looming/receding (and therefore regardless of stimulus intensity confounds).

To address this potential confound more directly, we contrasted ERPs in response to unisensory conditions, using the same analysis methods described above for examining multisensory interactions. For responses to looming versus receding sounds, voltage waveform analyses revealed effects beginning at 220 ms poststimulus onset. A millisecond-by-millisecond analysis of the GFP waveforms revealed effects beginning at 234 ms poststimulus onset. Finally, a millisecond-by-millisecond analysis of the ERP topography (normalized by its instantaneous GFP) revealed effects beginning at 264 ms poststimulus onset. These local and global measures of the electric field all indicate that response differences between unisensory looming and receding stimuli emerge substantially later than two other effects: the earliest nonlinear neural response interactions observed for all multisensory conditions, and the preferential interactions between multisensory looming stimuli. This finding provides additional support for the proposition that multisensory interactions facilitate the discrimination/differentiation of motion signals, and it extends this notion to motion across perceived distances. Future work varying the acoustic structure of the stimuli could capitalize on evidence that the impression of looming is limited to harmonic or tonal sounds (Neuhoff, 1998), as are multisensory effects involving looming sounds (Maier et al., 2004; Romei et al., 2009; Leo et al., 2011).
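Onset latencies from millisecond-by-millisecond tests, such as the 220, 234 and 264 ms values above, are commonly protected against isolated false positives by requiring a minimum run of consecutive significant time points (cf. Guthrie and Buchwald, 1991). The sketch below finds the first such sustained run in a series of per-time-point p-values. The α of 0.05 and the 11-sample minimum duration are assumptions for illustration, not necessarily the criteria specified in Materials and Methods.

```python
import numpy as np

def first_sustained_onset(p_values, times_ms, alpha=0.05, min_samples=11):
    """Return the latency at which p < alpha first holds for at least
    `min_samples` consecutive samples, or None if no such run exists.

    p_values: 1-D array of per-time-point p-values.
    times_ms: 1-D array of the matching poststimulus latencies.
    """
    run_start, run_len = None, 0
    for i, p in enumerate(p_values):
        if p < alpha:
            if run_len == 0:
                run_start = i  # a new run of significant samples begins
            run_len += 1
            if run_len >= min_samples:
                return times_ms[run_start]
        else:
            run_len = 0  # run broken; start counting again
    return None

# Simulated p-values at 1 ms resolution (not study data).
times = np.arange(0, 400)
p = np.ones(times.size)
p[150:155] = 0.01   # brief dip of 5 samples: too short, ignored
p[234:300] = 0.001  # sustained effect
print(first_sustained_onset(p, times))  # 234
```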

Relation between behavioral facilitation and GFP enhancement

In a further analysis, we computed indices of multisensory enhancement for reaction times (RTs) and for GFP area over the 73–113 ms period. For each participant, the percentage of multisensory RT enhancement was calculated as the difference between the RT for the multisensory condition and the RT for the best (i.e., fastest) constituent unisensory condition, expressed relative to the best unisensory condition (see also Stein and Meredith, 1993; Cappe et al., 2009b). The percentage of multisensory GFP enhancement was calculated as the difference between the GFP to the multisensory pair and the GFP to the summed unisensory conditions, expressed relative to the multisensory pair. RT and GFP multisensory enhancements were positively correlated for looming conditions over the 73–113 ms period (r(12) = 0.712; p < 0.005; Fig. 5). No other condition showed a reliable correlation (all p-values > 0.05; Fig. 5). These results suggest that early integrative effects are behaviorally relevant, particularly for looming signals, which convey strong ethological significance, and that greater integrative effects result in greater behavioral facilitation. Van der Burg et al. (2011) recently reported similar correlations: participants with greater early-latency interactions showed larger benefits from task-irrelevant sounds in a visual feature detection task. Such findings thus add to a growing literature demonstrating the direct behavioral relevance of early-latency and low-level multisensory interactions (Sperdin et al., 2010).
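The two enhancement indices and their correlation can be sketched as follows. The sketch assumes per-participant mean RTs and window-averaged GFP values as inputs, and it adopts a sign convention in which positive values mean facilitation or enhancement. That convention is our assumption, and the function names are hypothetical. The t statistic uses df = n − 2, the convention behind the reported r(12).

```python
import numpy as np

def rt_enhancement_pct(rt_multi, rt_aud, rt_vis):
    """Percent RT facilitation relative to the faster unisensory condition.

    Inputs are per-participant mean RTs (ms). Positive values mean the
    multisensory response was faster than the best unisensory response.
    """
    best_uni = np.minimum(rt_aud, rt_vis)
    return 100.0 * (best_uni - rt_multi) / best_uni

def gfp_enhancement_pct(gfp_pair, gfp_sum):
    """Percent GFP difference between the multisensory pair and the summed
    unisensory responses, expressed relative to the pair as in the text."""
    return 100.0 * (gfp_pair - gfp_sum) / gfp_pair

def pearson_with_t(x, y):
    """Pearson r, its t statistic and degrees of freedom (n - 2)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    r = np.corrcoef(x, y)[0, 1]
    df = x.size - 2
    t = r * np.sqrt(df / (1.0 - r ** 2))
    return r, t, df

# Made-up values for three participants (not study data).
rt_idx = rt_enhancement_pct(np.array([350.0, 362.0, 340.0]),
                            np.array([400.0, 390.0, 380.0]),
                            np.array([420.0, 410.0, 370.0]))
gfp_idx = gfp_enhancement_pct(np.array([5.0, 4.0, 6.0]), np.array([4.0, 3.9, 4.5]))
print(pearson_with_t(rt_idx, gfp_idx))
```

As a consistency check, r = 0.712 with df = 12 gives t ≈ 3.5, which is in line with the reported p < 0.005.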

Source estimations

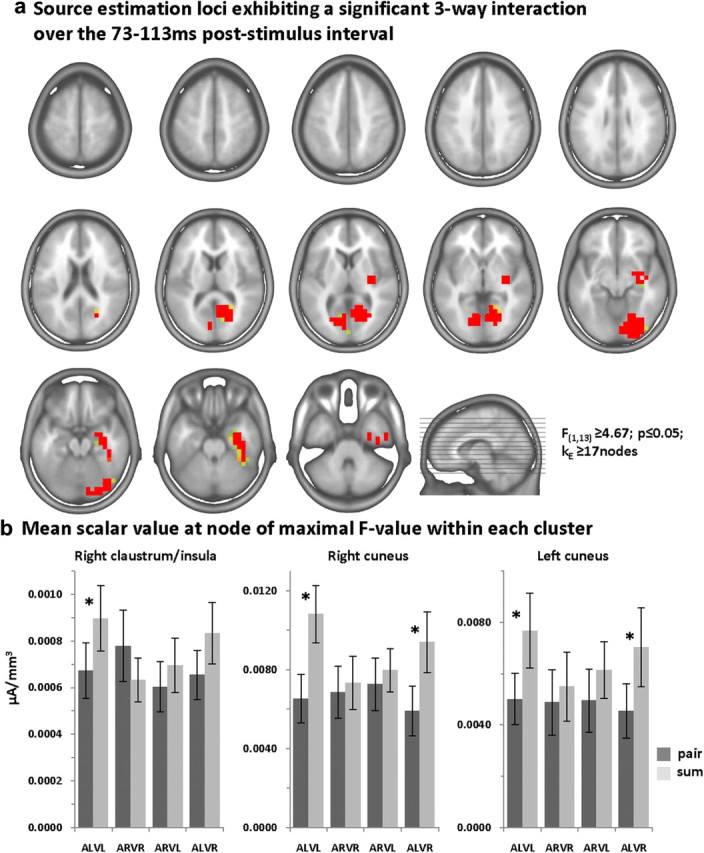

Given the results of the above voltage waveform and GFP analyses, we estimated sources over the 73–113 ms poststimulus period. Scalar values from the source estimations throughout the entire brain volume for each participant and condition were submitted to a three-way ANOVA (spatial criterion described in Materials and Methods). There was evidence for a three-way interaction between pair/sum condition, stimulus congruence/incongruence, and visual looming/receding in two locations. The first was the right claustrum/insula, extending into the anterior inferior temporal lobe and amygdala. The second was the cuneus bilaterally, with the right-hemisphere cluster extending inferiorly into the lingual gyrus and posteriorly into the lateral middle occipital gyrus (Fig. 6a; Table 2). To ascertain the basis for this interaction, group-average scalar values at the node with the maximal F-value within each of these three clusters are shown as bar graphs in Figure 6b. In all three clusters, multisensory looming conditions exhibited subadditive effects. The other conditions failed to exhibit significant nonlinear effects. The exception was the ALVR condition (pair vs sum contrast), which exhibited significant subadditive effects in the cuneus bilaterally. Notably, however, nonlinear effects within the claustrum/insula were limited to multisensory looming conditions. This suggests that this region is particularly sensitive to, and/or itself integrates, information regarding perceived motion direction and congruence across modalities (see also Bushara et al., 2001; Calvert et al., 2001; Naghavi et al., 2007; but see Remedios et al., 2010). How the direction of GFP changes relates to the direction of effects at individual source estimation nodes awaits further investigation. Nonetheless, both levels of analysis indicate effects specific to multisensory looming conditions.
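In a 2 × 2 × 2 fully within-subject design, every main effect and interaction has one numerator degree of freedom. The repeated-measures F for an effect therefore equals the squared one-sample t of the corresponding per-participant contrast. The sketch below uses this identity to compute the three-way interaction F at every source node at once. The array layout (participant, pair/sum, congruent/incongruent, visual looming/receding, node) is an assumption for illustration. This is not presented as the software actually used in the study.

```python
import numpy as np

def three_way_interaction_F(cells):
    """F(1, n-1) for the three-way interaction of a 2x2x2 within-subject design.

    cells: array of shape (n_subjects, 2, 2, 2[, n_nodes]) indexed as
    [subject, pair/sum, congruent/incongruent, visual looming/receding, node].
    With one numerator df, F equals the squared one-sample t of the
    per-subject interaction contrast.
    """
    cells = np.asarray(cells, dtype=float)
    s = np.array([1.0, -1.0])
    w = s[:, None, None] * s[None, :, None] * s[None, None, :]   # +/-1 product codes
    w = w.reshape((1, 2, 2, 2) + (1,) * (cells.ndim - 4))        # broadcast over nodes
    contrast = (cells * w).sum(axis=(1, 2, 3))                   # (n_subjects[, n_nodes])
    n = contrast.shape[0]
    t = contrast.mean(axis=0) / (contrast.std(axis=0, ddof=1) / np.sqrt(n))
    return t ** 2, (1, n - 1)

# Simulated scalar values: 14 participants, 8 cells, 5 nodes (not study data).
rng = np.random.default_rng(3)
F, df = three_way_interaction_F(rng.normal(size=(14, 2, 2, 2, 5)))
print(F.shape, df)  # (5,) (1, 13)
```

Because the contrast weights sum to zero, subject-specific offsets that are common to all eight cells cancel. This reproduces the within-subject logic of the repeated-measures ANOVA.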

Figure 6.

Statistical analyses of source estimations: three-way interaction. Group-averaged source estimations were calculated over the 73–113 ms poststimulus period for each experimental condition and submitted to a three-way ANOVA. Regions exhibiting significant interactions between pair/sum conditions, congruent/incongruent multisensory pairs, and visual looming versus receding stimuli are shown in a on axial slices of the MNI template brain. Only nodes meeting the p ≤ 0.05 α criterion as well as a spatial extent criterion of at least 17 contiguous nodes were considered reliable (see Materials and Methods for details). Three clusters exhibited an interaction, and the mean scalar values (SEM indicated) from the node exhibiting the maximal F-value in each cluster are shown in b. Asterisks indicate significant differences between pair and sum conditions.
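The spatial extent criterion of at least 17 contiguous nodes can be sketched as a flood fill over the nodes that passed the α criterion. This sketch assumes that source nodes lie on a regular 3-D grid with integer indices. It also assumes that nodes are contiguous when they differ by one step along a single axis (6-connectivity). Both are illustrative assumptions; the actual solution space and adjacency definition are given in Materials and Methods.

```python
from collections import deque

def clusters_meeting_extent(sig_nodes, min_size=17):
    """Group significant nodes into contiguous clusters and keep those with
    at least `min_size` nodes.

    sig_nodes: iterable of (i, j, k) integer grid coordinates of nodes that
    passed the per-node alpha criterion. Nodes are treated as contiguous if
    they differ by one step along a single axis (6-connectivity).
    """
    remaining = set(map(tuple, sig_nodes))
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    kept = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = [seed], deque([seed])
        while queue:  # breadth-first flood fill from the seed node
            i, j, k = queue.popleft()
            for di, dj, dk in steps:
                nb = (i + di, j + dj, k + dk)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.append(nb)
                    queue.append(nb)
        if len(cluster) >= min_size:
            kept.append(sorted(cluster))
    return kept

line = [(x, 0, 0) for x in range(20)]      # 20 contiguous nodes: kept
small = [(x, 10, 10) for x in range(5)]    # 5 contiguous nodes: rejected
print([len(c) for c in clusters_meeting_extent(line + small)])  # [20]
```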

Table 2.

Source estimation clusters exhibiting a three-way interaction over the 73–113 ms interval

| Brain area | Talairach and Tournoux (1988) coordinates of maximal F-value (x, y, z; mm) | Maximal F-value | Cluster size (number of nodes) |
|---|---|---|---|
| Right claustrum/insula extending inferiorly into the anterior temporal cortex and amygdala | 35, −17, 2 | 12.071 | 78 |
| Right cuneus extending inferiorly into the lingual gyrus and posteriorly to lateral occipital cortex | 23, −70, −4 | 15.484 | 104 |
| Left cuneus | −17, −75, 11 | 9.157 | 23 |

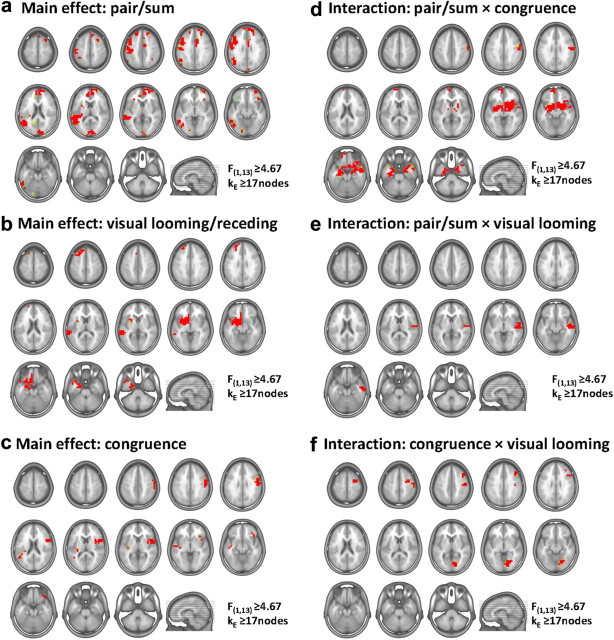

Aside from this three-way interaction, several other main effects and interactions were observed (Fig. 7). There was a main effect of pair versus sum conditions within a widespread network of regions. This network included the bilateral cuneus extending along the calcarine sulcus, the left superior temporal gyrus extending superiorly into the angular gyrus, the left inferior frontal gyrus, and the bilateral medial frontal gyrus (Fig. 7a). This main effect reflected subadditive interactions, consistent with prior EEG and fMRI findings (Bizley et al., 2007; Martuzzi et al., 2007; Besle et al., 2008; Kayser et al., 2009; Cappe et al., 2010; Raij et al., 2010). The main effect of visual looming versus receding stimuli was observed within the left superior temporal gyrus and within the left claustrum/insula extending inferiorly into the amygdala (Fig. 7b). Responses were stronger to visual looming than to receding stimuli, despite the counterbalancing of contrast polarity (and therefore mean stimulus energy) across blocks of trials. This suggests that this main effect is driven by an analysis of the perceived direction of motion. There was also a main effect of congruent versus incongruent multisensory combinations within the right inferior frontal gyrus, with stronger responses to congruent combinations (Fig. 7c). This is consistent with studies implicating this region in processing multisensory object congruence/familiarity (Doehrmann and Naumer, 2008). In addition, there was a significant interaction between pair/sum condition and congruent versus incongruent multisensory combinations within bilateral limbic and subcortical structures, including the amygdala and putamen (Fig. 7d). There was likewise a significant interaction between pair/sum condition and visual looming versus receding stimuli within the right superior and middle temporal gyri (Fig. 7e).
Finally, there was a significant interaction between congruent versus incongruent multisensory combinations and visual looming versus receding stimuli within the right cuneus and right inferior frontal gyrus (Fig. 7f).

Figure 7.

Statistical analyses of source estimations: main effects and two-way interactions. Group-averaged source estimations were calculated over the 73–113 ms poststimulus period for each experimental condition and submitted to a three-way ANOVA. Only nodes meeting the p ≤ 0.05 α criterion as well as a spatial extent criterion of at least 17 contiguous nodes were considered reliable (see Materials and Methods for details). Regions exhibiting significant main effects are shown in a–c on axial slices of the MNI template brain. Regions exhibiting significant two-way interactions are shown in d–f on axial slices of the MNI template brain.

Discussion

This study provides the first demonstration that the human brain preferentially integrates multisensory looming signals. Such findings complement two observations in non-human primates: preferential looking toward multisensory looming stimuli (Maier et al., 2004) and enhanced neural synchrony between auditory and superior temporal cortices (Maier et al., 2008). In the present study, responses to multisensory looming signals showed selective superadditive interactions during early poststimulus periods (73–113 ms), and these interactions were moreover positively correlated with behavioral facilitation. This argues for synergistic interplay in humans between principles of multisensory integration established from single-neuron recordings in animals (Stein and Meredith, 1993). Together with the extant literature, these results highlight the challenge of directly transposing models of multisensory interactions from single-neuron to population-level responses and perception (Krueger et al., 2009; Ohshiro et al., 2011). They likewise suggest that multisensory interactions can facilitate the processing and perception of specific varieties of ethologically significant environmental stimuli, here those signaling potential collisions or dangers.

Synergistic interplay between principles of multisensory integration

Looming and receding stimuli provide an effective means for investigating the interplay between established principles of multisensory interactions. This is because perceived distance and motion direction are higher-order properties that follow from first-order changes in the visual size or auditory intensity of the stimuli. In both senses, a dynamic change in size/intensity (and by extension effectiveness) is interpreted, at least perceptually, and presumably coded neurophysiologically, as a source varying in its distance from the observer. Moreover, because stimulus intensity at trial onset was equated across all conditions, participants necessarily had to process the stimulus dynamics. The task also did not require differentiating looming from receding signals, only moving from stationary stimuli. This allowed the task and response requirements to be identical for congruent and incongruent multisensory conditions. As such, the present looming-specific differences can be considered implicit.

It is likewise essential to consider whether the spatial and inverse effectiveness principles can be directly transposed to studies of multisensory interactions in humans. The temporal principle is not at play here, as the stimuli always covaried synchronously. Direct transposition of the spatial principle, particularly within the auditory modality, is challenged by evidence for population-based coding of sound position rather than a simple spatiotopic mapping (Stecker and Middlebrooks, 2003; Murray and Spierer, 2009). For example, recent single-unit recordings within auditory fields along the superior temporal plane in macaque monkeys indicate that these neurons respond across the full 360° of azimuth (Woods et al., 2006). Regarding the processing of rising- versus falling-intensity sounds, there is evidence for the involvement of core auditory fields. There is also evidence for a general neural response bias for rising-intensity sounds (in spiking rate, but not latency), regardless of their specific frequency or volume (Lu et al., 2001). Such findings suggest that unisensory looming stimuli may receive preferential processing. This may be one basis for the enhanced salience of looming stimuli (Kayser et al., 2005), which in turn cascades into the selective integration of multisensory looming stimuli. The present results also run counter to a simple instantiation of the principle of inverse effectiveness, according to which receding stimuli would have been predicted to yield the largest enhancement of behavior and brain activity (though not necessarily the largest absolute response amplitudes). This was clearly not the case, either for the facilitation of reaction times (Cappe et al., 2009b) or for ERPs (Fig. 4).
In agreement, the extant literature in humans includes several replications from independent laboratories of early-latency (i.e., <100 ms poststimulus onset) nonlinear neural response interactions between high-intensity auditory-visual stimulus pairs (Giard and Peronnet, 1999; Teder-Sälejärvi et al., 2002; Gondan and Röder, 2006; Cappe et al., 2010; Raij et al., 2010; but see Senkowski et al., 2011). It nonetheless remains to be determined under which circumstances these effects are superadditive versus subadditive (cf. Cappe et al., 2010 for discussion).

Evidence for synergy between principles of multisensory interactions is likewise accumulating in studies of single-unit spiking activity within the cat anterior ectosylvian sulcus. The key finding is that firing rates within the receptive fields of individual neurons are heterogeneous and vary with stimulus effectiveness in a spatially and temporally dependent manner (for review, see Krueger et al., 2009). Superadditive and subadditive hotspots are not stationary within a neuron's receptive field, in either cortical (Carriere et al., 2008) or subcortical (Royal et al., 2009) structures. Furthermore, they are not straightforwardly predicted by unisensory response patterns. These features were also evident when the data were analyzed at the population level. In that population of neurons, the percentage of integration was higher along the horizontal meridian than at other positions, even though response profiles were uniformly distributed (Krueger et al., 2009). As for the potential functional consequences of this organization, Wallace and colleagues postulate that such heterogeneity could be efficient for encoding dynamic/moving stimuli. They further propose that it could generate a "normalized" response profile across the receptive field, at least during multisensory conditions (Krueger et al., 2009). The present results may highlight the consequences of such an architecture, to the extent that it manifests in humans, for the discrimination of, and population-level neural responses to, dynamic looming stimuli.

Mechanisms subserving the integration of looming signals

Mechanistically, we show that the selective integration of multisensory looming cues manifests as a superadditive nonlinear interaction in GFP over the 73–113 ms poststimulus period. This occurs in the absence of significant topographic differences between responses to multisensory stimulus pairs and the summed responses to the constituent unisensory conditions. Stronger GFP is consistent with greater overall synchrony of the underlying neural activity. Our finding is therefore in keeping with observations of enhanced inter-regional synchrony between auditory core and STS regions (Maier et al., 2008). However, the limited spatial sampling of that study cannot exclude the involvement of other regions, including the claustrum/insula and cuneus identified in the present study. Prior research suggests that the right claustrum/insula is sensitive to multisensory congruency during object processing (Naghavi et al., 2007) and to multisensory onset (a)synchrony (Bushara et al., 2001; Calvert et al., 2001). Such functions may similarly be at play here. Over the same time interval, significant effects with multisensory looming stimuli were observed bilaterally in the cuneus. This region has previously been implicated in early-latency multisensory interactions (Cappe et al., 2010; Raij et al., 2010) and in multisensory object processing (Stevenson and James, 2009; Naumer et al., 2011). This network of regions is thus consistent with synergistic (and dynamic) processing of the multisensory features present in looming stimuli. It is also consistent with evidence for the differential processing of looming signals in the amygdala (Bach et al., 2008).
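The distinction between response strength and topography can be made concrete. GFP indexes strength. Global dissimilarity (DISS; Lehmann and Skrandies, 1980) compares GFP-normalized maps and is therefore insensitive to a pure change in gain. A GFP effect without a topographic difference thus corresponds to a pair response that is approximately a scaled copy of the summed response. The simulated map and the 1.3 gain below are illustrative assumptions only.

```python
import numpy as np

def gfp(v):
    """GFP of a single map (1-D array over electrodes), average-referenced."""
    v = v - v.mean()
    return np.sqrt((v ** 2).mean())

def dissimilarity(u, v):
    """Global dissimilarity between two maps after average referencing and
    normalization by their GFP (0 = identical topography, 2 = inverted)."""
    u = (u - u.mean()) / gfp(u)
    v = (v - v.mean()) / gfp(v)
    return np.sqrt(((u - v) ** 2).mean())

rng = np.random.default_rng(1)
summed = rng.normal(size=160)  # simulated "sum" map over 160 electrodes
pair = 1.3 * summed            # same configuration of sources, 30% stronger

print(gfp(pair) / gfp(summed))      # 1.3: a difference in strength (GFP)
print(dissimilarity(pair, summed))  # ~0: no difference in topography
```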

Evidence increasingly highlights the role of oscillatory activity in multisensory phenomena (Lakatos et al., 2007, 2008, 2009; Senkowski et al., 2008). However, such signal analysis methods have yet to be optimized for single-trial source estimations based on scalp-recorded EEG (Van Zaen et al., 2010; Ramírez et al., 2011). Nonetheless, our results indicate that phase-locked and stimulus-locked activity at early poststimulus latencies exhibits nonlinear multisensory interactions. It will be particularly informative to ascertain which oscillatory components engender the selective effects observed with multisensory looming stimuli, along with their potential hierarchical interdependencies and prestimulus contingencies. Such investigations must, however, await further analytical developments.

In addition to these selective interactions following multisensory looming stimuli, we also demonstrate a robust positive linear correlation between behavioral and neural indices of multisensory facilitation. This correlation was not evident for any of the other multisensory conditions in this study (Fig. 5). This further highlights the behavioral relevance of early-latency and low-level multisensory interactions in humans (Romei et al., 2007, 2009; Sperdin et al., 2009, 2010; Noesselt et al., 2010; Van der Burg et al., 2011) as well as in monkeys (Wang et al., 2008). Such a linear relationship also provides further support for the suggestion that looming signals are, on the one hand, preferentially processed neurophysiologically (Maier et al., 2008) and, on the other hand, subject to perceptual biases (Maier et al., 2004). Our findings provide a first, correlational line of evidence linking these propositions.

Aside from this looming-selective effect, multisensory stimuli also produced a generally earlier transition from one stable ERP topography to another over the 73–145 ms poststimulus period. By extension, this reflects an earlier transition from one configuration of active brain regions to another. The effect occurred regardless of the direction and congruence of perceived stimulus motion. The overall timing of our effects generally concurs with prior studies using stationary stimuli that were task-relevant, task-irrelevant (but nonetheless attended), or passively presented (Giard and Peronnet, 1999; Molholm et al., 2002; Vidal et al., 2008; Cappe et al., 2010; Raij et al., 2010). In these prior studies, nonlinear neural response interactions were consistently observed over the 50–100 ms poststimulus period, and oftentimes thereafter, within near-primary cortices. The use of dynamic stimuli might conceivably have delayed effects relative to these prior studies, but this was not the case for multisensory looming stimuli.
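An earlier transition between stable topographies is typically quantified by fitting the template maps identified by the topographic cluster analysis to each time point (cf. Murray et al., 2008). One then notes when the best-fitting template changes. The sketch below labels each time point with its best-correlated template (signed spatial correlation, since ERP map polarity is meaningful) and reports the first switch. The winner-take-all labeling, the 1 ms time base and the simulated templates are assumptions for illustration. Published analyses, for example in Cartool, may apply additional criteria that are not modeled here.

```python
import numpy as np

def spatial_correlation(u, v):
    """Pearson correlation between two average-referenced maps."""
    u = u - u.mean()
    v = v - v.mean()
    return float(u @ v / np.sqrt((u @ u) * (v @ v)))

def fit_templates(erp, templates):
    """Label each time point with the index of the best-correlated template.

    erp: (n_electrodes, n_times); templates: (n_templates, n_electrodes).
    """
    labels = []
    for t in range(erp.shape[1]):
        corrs = [spatial_correlation(erp[:, t], tpl) for tpl in templates]
        labels.append(int(np.argmax(corrs)))
    return np.array(labels)

def transition_time(labels, times_ms, from_idx, to_idx):
    """First latency at which the label switches from `from_idx` to `to_idx`."""
    for i in range(1, len(labels)):
        if labels[i - 1] == from_idx and labels[i] == to_idx:
            return times_ms[i]
    return None

# Simulated example: template 0 active before 110 ms, template 1 afterwards.
rng = np.random.default_rng(2)
templates = rng.normal(size=(2, 160))
times = np.arange(73, 146)               # 73-145 ms at 1 ms steps
active = (times >= 110).astype(int)
erp = templates[active].T                # (160 electrodes, n_times)
labels = fit_templates(erp, templates)
print(transition_time(labels, times, 0, 1))  # 110
```

Comparing this latency between the multisensory pair and the summed unisensory ERPs, participant by participant, would index the earlier transition for multisensory conditions.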

Footnotes

This work has been supported by the Swiss National Science Foundation (Grants 3100AO-118419 and 310030B-133136 to M.M.M.) and the Leenaards Foundation (2005 Prize for the Promotion of Scientific Research to M.M.M. and G.T.). The Cartool software (http://sites.google.com/site/fbmlab/cartool) was programmed by Denis Brunet, from the Functional Brain Mapping Laboratory, Geneva, Switzerland, and is supported by the EEG Brain Mapping Core of the Center for Biomedical Imaging (www.cibm.ch) of Geneva and Lausanne. Prof. Lee Miller provided helpful comments on an earlier version of this manuscript.

References

- Bach DR, Schächinger H, Neuhoff JG, Esposito F, Di Salle F, Lehmann C, Herdener M, Scheffler K, Seifritz E. Rising sound intensity: an intrinsic warning cue activating the amygdala. Cereb Cortex. 2008;18:145–150. doi: 10.1093/cercor/bhm040.

- Beer AL, Plank T, Greenlee MW. Diffusion tensor imaging shows white matter tracts between human auditory and visual cortex. Exp Brain Res. 2011;213:299–308. doi: 10.1007/s00221-011-2715-y.

- Besle J, Fort A, Giard MH. Interest and validity of the additive model in electrophysiological studies of multisensory interactions. Cogn Process. 2004;5:189–192.

- Besle J, Fischer C, Bidet-Caulet A, Lecaignard F, Bertrand O, Giard MH. Visual activation and audiovisual interactions in the auditory cortex during speech perception: intracranial recordings in humans. J Neurosci. 2008;28:14301–14310. doi: 10.1523/JNEUROSCI.2875-08.2008.

- Bizley JK, Nodal FR, Bajo VM, Nelken I, King AJ. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb Cortex. 2007;17:2172–2189. doi: 10.1093/cercor/bhl128.

- Brunet D, Murray MM, Michel CM. Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput Intell Neurosci. 2011;2011:813870. doi: 10.1155/2011/813870.

- Bushara KO, Grafman J, Hallett M. Neural correlates of auditory-visual stimulus onset asynchrony detection. J Neurosci. 2001;21:300–304. doi: 10.1523/JNEUROSCI.21-01-00300.2001.

- Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14:427–438. doi: 10.1006/nimg.2001.0812.

- Calvert G, Spence C, Stein BE, editors. The handbook of multisensory processes. Cambridge, MA: MIT; 2004.

- Cappe C, Barone P. Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur J Neurosci. 2005;22:2886–2902. doi: 10.1111/j.1460-9568.2005.04462.x.

- Cappe C, Rouiller EM, Barone P. Multisensory anatomic pathway. Hear Res. 2009a;258:28–36. doi: 10.1016/j.heares.2009.04.017.

- Cappe C, Thut G, Romei V, Murray MM. Selective integration of auditory–visual looming cues by humans. Neuropsychologia. 2009b;47:1045–1052. doi: 10.1016/j.neuropsychologia.2008.11.003.

- Cappe C, Thut G, Romei V, Murray MM. Auditory-visual multisensory interactions in humans: timing, topography, directionality, and sources. J Neurosci. 2010;30:12572–12580. doi: 10.1523/JNEUROSCI.1099-10.2010.

- Carriere BN, Royal DW, Wallace MT. Spatial heterogeneity of cortical receptive fields and its impact on multisensory interactions. J Neurophysiol. 2008;99:2357–2368. doi: 10.1152/jn.01386.2007.

- De Lucia M, Clarke S, Murray MM. A temporal hierarchy for conspecific vocalization discrimination in humans. J Neurosci. 2010;30:11210–11221. doi: 10.1523/JNEUROSCI.2239-10.2010.

- Doehrmann O, Naumer MJ. Semantics and the multisensory brain: how meaning modulates processes of audio-visual integration. Brain Res. 2008;1242:136–150. doi: 10.1016/j.brainres.2008.03.071.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Falchier A, Schroeder CE, Hackett TA, Lakatos P, Nascimento-Silva S, Ulbert I, Karmos G, Smiley JF. Projection from visual areas V2 and prostriata to caudal auditory cortex in the monkey. Cereb Cortex. 2010;20:1529–1538. doi: 10.1093/cercor/bhp213.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Ghazanfar AA, Neuhoff JG, Logothetis NK. Auditory looming perception in rhesus monkeys. Proc Natl Acad Sci U S A. 2002;99:15755–15757. doi: 10.1073/pnas.242469699.

- Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- Gondan M, Röder B. A new method for detecting interactions between the senses in event-related potentials. Brain Res. 2006;1073–1074:389–397. doi: 10.1016/j.brainres.2005.12.050.

- Gonzalez Andino SL, Murray MM, Foxe JJ, de Peralta Menendez RG. How single-trial electrical neuroimaging contributes to multisensory research. Exp Brain Res. 2005;166:298–304. doi: 10.1007/s00221-005-2371-1.

- Grave de Peralta Menendez R, Gonzalez Andino S, Lantz G, Michel CM, Landis T. Noninvasive localization of electromagnetic epileptic activity: I. Method descriptions and simulations. Brain Topogr. 2001;14:131–137. doi: 10.1023/a:1012944913650.

- Grave de Peralta Menendez R, Murray MM, Michel CM, Martuzzi R, Gonzalez Andino SL. Electrical neuroimaging based on biophysical constraints. Neuroimage. 2004;21:527–539. doi: 10.1016/j.neuroimage.2003.09.051.

- Graziano MS, Cooke DF. Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia. 2006;44:845–859. doi: 10.1016/j.neuropsychologia.2005.09.009.

- Guthrie D, Buchwald JS. Significance testing of difference potentials. Psychophysiology. 1991;28:240–244. doi: 10.1111/j.1469-8986.1991.tb00417.x.

- Kayser C, Petkov CI, Lippert M, Logothetis NK. Mechanisms for allocating auditory attention: an auditory saliency map. Curr Biol. 2005;15:1943–1947. doi: 10.1016/j.cub.2005.09.040.

- Kayser C, Petkov CI, Logothetis NK. Multisensory interactions in primate auditory cortex: fMRI and electrophysiology. Hear Res. 2009;258:80–88. doi: 10.1016/j.heares.2009.02.011.

- Knebel JF, Murray MM. Towards a resolution of conflicting models of illusory contour processing in humans. Neuroimage. 2012;59:2808–2817. doi: 10.1016/j.neuroimage.2011.09.031.

- Knebel JF, Javitt DC, Murray MM. Impaired early visual response modulations to spatial information in chronic schizophrenia. Psychiatry Res. 2011;193:168–176. doi: 10.1016/j.pscychresns.2011.02.006.

- Koenig T, Melie-García L. A method to determine the presence of averaged event-related fields using randomization tests. Brain Topogr. 2010;23:233–242. doi: 10.1007/s10548-010-0142-1.

- Krueger J, Royal DW, Fister MC, Wallace MT. Spatial receptive field organization of multisensory neurons and its impact on multisensory interactions. Hear Res. 2009;258:47–54. doi: 10.1016/j.heares.2009.08.003.

- Lakatos P, Chen CM, O'Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- Lakatos P, O'Connell MN, Barczak A, Mills A, Javitt DC, Schroeder CE. The leading sense: supramodal control of neurophysiological context by attention. Neuron. 2009;64:419–430. doi: 10.1016/j.neuron.2009.10.014.

- Lehmann D. Principles of spatial analysis. In: Gevins AS, Remond A, editors. Handbook of electroencephalography and clinical neurophysiology, Vol 1: Methods of analysis of brain electrical and magnetic signals. Amsterdam: Elsevier; 1987. pp. 309–354.

- Lehmann D, Skrandies W. Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalogr Clin Neurophysiol. 1980;48:609–621. doi: 10.1016/0013-4694(80)90419-8. [DOI] [PubMed] [Google Scholar]

- Leo F, Romei V, Freeman E, Ladavas E, Driver J. Looming sounds enhance orientation sensitivity for visual stimuli on the same side as such sounds. Exp Brain Res. 2011;213:193–201. doi: 10.1007/s00221-011-2742-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lu T, Liang L, Wang X. Neural representations of temporally asymmetric stimuli in the auditory cortex of awake primates. J Neurophysiol. 2001;85:2364–2380. doi: 10.1152/jn.2001.85.6.2364. [DOI] [PubMed] [Google Scholar]

- Maier JX, Neuhoff JG, Logothetis NK, Ghazanfar AA. Multisensory integration of looming signals by rhesus monkeys. Neuron. 2004;43:177–181. doi: 10.1016/j.neuron.2004.06.027. [DOI] [PubMed] [Google Scholar]

- Maier JX, Chandrasekaran C, Ghazanfar AA. Integration of bimodal looming signals through neuronal coherence in the temporal lobe. Curr Biol. 2008;18:963–968. doi: 10.1016/j.cub.2008.05.043. [DOI] [PubMed] [Google Scholar]

- Martuzzi R, Murray MM, Michel CM, Thiran JP, Maeder PP, Clarke S, Meuli RA. Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb Cortex. 2007;17:1672–1679. doi: 10.1093/cercor/bhl077. [DOI] [PubMed] [Google Scholar]

- Martuzzi R, Murray MM, Meuli RA, Thiran JP, Maeder PP, Michel CM, Grave de Peralta Menendez R, Gonzalez Andino SL. Methods for determining frequency- and region-dependent relationships between estimated LFPs and BOLD responses in humans. J Neurophysiol. 2009;101:491–502. doi: 10.1152/jn.90335.2008. [DOI] [PubMed] [Google Scholar]

- Michel CM, Murray MM, Lantz G, Gonzalez S, Spinelli L, Grave de Peralta R. EEG source imaging. Clin Neurophysiol. 2004;115:2195–2222. doi: 10.1016/j.clinph.2004.06.001. [DOI] [PubMed] [Google Scholar]

- Michel CM, Koenig T, Brandeis D, Gianotti LR, Wackermann J. Electrical neuroimaging. Cambridge UK: Cambridge UP; 2009. [Google Scholar]

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6. [DOI] [PubMed] [Google Scholar]

- Murray MM, Spierer L. Auditory spatio-temporal brain dynamics and their consequences for multisensory interactions in humans. Hear Res. 2009;258:121–133. doi: 10.1016/j.heares.2009.04.022. [DOI] [PubMed] [Google Scholar]

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197. [DOI] [PubMed] [Google Scholar]

- Murray MM, Brunet D, Michel CM. Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 2008;20:249–264. doi: 10.1007/s10548-008-0054-5.

- Naghavi HR, Eriksson J, Larsson A, Nyberg L. The claustrum/insula region integrates conceptually related sounds and pictures. Neurosci Lett. 2007;422:77–80. doi: 10.1016/j.neulet.2007.06.009.

- Naumer MJ, van den Bosch JJ, Wibral M, Kohler A, Singer W, Kaiser J, van de Ven V, Muckli L. Investigating human audio-visual object perception with a combination of hypothesis-generating and hypothesis-testing fMRI analysis tools. Exp Brain Res. 2011;213:309–320. doi: 10.1007/s00221-011-2669-0.

- Neuhoff JG. Perceptual bias for rising tones. Nature. 1998;395:123–124. doi: 10.1038/25862.

- Neuhoff JG. An adaptive bias in the perception of looming auditory motion. Ecol Psychol. 2001;13:87–110.

- Noesselt T, Tyll S, Boehler CN, Budinger E, Heinze HJ, Driver J. Sound-induced enhancement of low-intensity vision: multisensory influences on human sensory-specific cortices and thalamic bodies relate to perceptual enhancement of visual detection sensitivity. J Neurosci. 2010;30:13609–13623. doi: 10.1523/JNEUROSCI.4524-09.2010.

- Ohshiro T, Angelaki DE, DeAngelis GC. A normalization model of multisensory integration. Nat Neurosci. 2011;14:775–782. doi: 10.1038/nn.2815.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Perrin F, Pernier J, Bertrand O, Giard MH, Echallier JF. Mapping of scalp potentials by surface spline interpolation. Electroencephalogr Clin Neurophysiol. 1987;66:75–81. doi: 10.1016/0013-4694(87)90141-6.

- Pourtois G, Delplanque S, Michel C, Vuilleumier P. Beyond conventional event-related brain potential (ERP): exploring the time-course of visual emotion processing using topographic and principal component analyses. Brain Topogr. 2008;20:265–277. doi: 10.1007/s10548-008-0053-6.

- Raij T, Ahveninen J, Lin FH, Witzel T, Jääskeläinen IP, Letham B, Israeli E, Sahyoun C, Vasios C, Stufflebeam S, Hämäläinen M, Belliveau JW. Onset timing of cross-sensory activations and multisensory interactions in auditory and visual sensory cortices. Eur J Neurosci. 2010;31:1772–1782. doi: 10.1111/j.1460-9568.2010.07213.x.

- Ramírez RR, Kopell BH, Butson CR, Hiner BC, Baillet S. Spectral signal space projection algorithm for frequency domain MEG and EEG denoising, whitening, and source imaging. Neuroimage. 2011;56:78–92. doi: 10.1016/j.neuroimage.2011.02.002.

- Remedios R, Logothetis NK, Kayser C. Unimodal responses prevail within the multisensory claustrum. J Neurosci. 2010;30:12902–12907. doi: 10.1523/JNEUROSCI.2937-10.2010.

- Rockland KS, Ojima H. Multisensory convergence in calcarine visual areas in macaque monkey. Int J Psychophysiol. 2003;50:19–26. doi: 10.1016/s0167-8760(03)00121-1.

- Romei V, Murray MM, Merabet LB, Thut G. Occipital transcranial magnetic stimulation has opposing effects on visual and auditory stimulus detection: implications for multisensory interactions. J Neurosci. 2007;27:11465–11472. doi: 10.1523/JNEUROSCI.2827-07.2007.

- Romei V, Murray MM, Cappe C, Thut G. Pre-perceptual and stimulus-selective enhancement of human low-level visual cortex excitability by sounds. Curr Biol. 2009;19:1799–1805. doi: 10.1016/j.cub.2009.09.027.

- Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp Brain Res. 2009;198:127–136. doi: 10.1007/s00221-009-1772-y.

- Schiff W. Perception of impending collision: a study of visually directed avoidant behavior. Psychol Monogr. 1965;79:1–26. doi: 10.1037/h0093887.

- Schiff W, Caviness JA, Gibson JJ. Persistent fear responses in rhesus monkeys to the optical stimulus of “looming”. Science. 1962;136:982–983. doi: 10.1126/science.136.3520.982.

- Seifritz E, Neuhoff JG, Bilecen D, Scheffler K, Mustovic H, Schächinger H, Elefante R, Di Salle F. Neural processing of auditory looming in the human brain. Curr Biol. 2002;12:2147–2151. doi: 10.1016/s0960-9822(02)01356-8.

- Senkowski D, Schneider TR, Foxe JJ, Engel AK. Crossmodal binding through neural coherence: implications for multisensory processing. Trends Neurosci. 2008;31:401–409. doi: 10.1016/j.tins.2008.05.002.

- Senkowski D, Saint-Amour D, Höfle M, Foxe JJ. Multisensory interactions in early evoked brain activity follow the principle of inverse effectiveness. Neuroimage. 2011;56:2200–2208. doi: 10.1016/j.neuroimage.2011.03.075.

- Sperdin HF, Cappe C, Foxe JJ, Murray MM. Early, low-level auditory somatosensory multisensory interactions impact reaction time speed. Front Integr Neurosci. 2009;3:2. doi: 10.3389/neuro.07.002.2009.

- Sperdin HF, Cappe C, Murray MM. The behavioral relevance of multisensory neural response interactions. Front Neurosci. 2010;4:9. doi: 10.3389/neuro.01.009.2010.

- Stecker GC, Middlebrooks JC. Distributed coding of sound locations in the auditory cortex. Biol Cybern. 2003;89:341–349. doi: 10.1007/s00422-003-0439-1.

- Stein BE, Meredith MA. The merging of the senses. Cambridge, MA: MIT Press; 1993.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Stevenson RA, James TW. Audiovisual integration in human superior temporal sulcus: inverse effectiveness and the neural processing of speech and object recognition. Neuroimage. 2009;44:1210–1223. doi: 10.1016/j.neuroimage.2008.09.034.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain: 3-dimensional proportional system: an approach to cerebral imaging. New York: Thieme; 1988.

- Teder-Sälejärvi WA, McDonald JJ, Di Russo F, Hillyard SA. An analysis of audio-visual crossmodal integration by means of event related potential (ERP) recordings. Brain Res Cogn Brain Res. 2002;14:106–114. doi: 10.1016/s0926-6410(02)00065-4.

- Toepel U, Knebel JF, Hudry J, le Coutre J, Murray MM. The brain tracks the energetic value in food images. Neuroimage. 2009;44:967–974. doi: 10.1016/j.neuroimage.2008.10.005.

- Van der Burg E, Talsma D, Olivers CN, Hickey C, Theeuwes J. Early multisensory interactions affect the competition among multiple visual objects. Neuroimage. 2011;55:1208–1218. doi: 10.1016/j.neuroimage.2010.12.068.

- Van Zaen J, Uldry L, Duchêne C, Prudat Y, Meuli RA, Murray MM, Vesin JM. Adaptive tracking of EEG oscillations. J Neurosci Methods. 2010;186:97–106. doi: 10.1016/j.jneumeth.2009.10.018.

- Vidal J, Giard MH, Roux S, Barthélémy C, Bruneau N. Crossmodal processing of auditory-visual stimuli in a no-task paradigm: a topographic event-related potential study. Clin Neurophysiol. 2008;119:763–771. doi: 10.1016/j.clinph.2007.11.178.

- Wallace MT, Ramachandran R, Stein BE. A revised view of sensory cortical parcellation. Proc Natl Acad Sci U S A. 2004;101:2167–2172. doi: 10.1073/pnas.0305697101.

- Wang Y, Celebrini S, Trotter Y, Barone P. Visuo-auditory interactions in the primary visual cortex of the behaving monkey: electrophysiological evidence. BMC Neurosci. 2008;9:79. doi: 10.1186/1471-2202-9-79.

- Woods TM, Lopez SE, Long JH, Rahman JE, Recanzone GH. Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. J Neurophysiol. 2006;96:3323–3337. doi: 10.1152/jn.00392.2006.