Abstract

To address the longstanding questions of whether the blind-from-birth have an innate face-schema, what plasticity mechanisms underlie non-visual face learning, and whether there are interhemispheric differences in face processing in the blind, we used a unique non-visual drawing-based training in congenitally blind (CB), late-blind (LB) and blindfolded-sighted (BF) groups of adults. This Cognitive-Kinesthetic Drawing approach, previously developed by Likova (e.g., 2010, 2012, 2013), enabled us to rapidly train and study training-driven neuroplasticity in both the blind and sighted groups. The five-day two-hour training taught participants to haptically explore, recognize and memorize raised-line images, and to draw them free-hand from memory, in detail, including the fine facial characteristics of the face stimuli. Such drawings represent an externalization of the formed memory. Functional MRI was run before and after the training. Tactile-face perception activated the occipito-temporal cortex in all groups. However, the training led to a strong, predominantly left-hemispheric reorganization in the two blind groups, in contrast to a right-hemispheric one in the blindfolded-sighted, i.e., the post-training response-change was stronger in the left hemisphere in the blind, but in the right in the blindfolded. This is the first study to discover interhemispheric differences in non-visual face processing. Remarkably, for face perception this learning-based change was positive in the CB and BF groups, but negative in the LB group. Both the lateralization and inversed-sign learning effects were specific to face perception, and absent for the control nonface categories of small objects and houses. The unexpected inversed-sign training effect in CB vs LB suggests different stages of brain plasticity in the ventral pathway specific to the face category.
Importantly, the fact that after only a few days of our training the totally-blind-from-birth CB manifested very good (haptic) face perception, and even developed strong empathy for the explored faces, implies a preexisting face schema that can be “unmasked” and “tuned up” by a proper learning procedure. The Likova Cognitive-Kinesthetic Training is a powerful tool for driving brain plasticity and providing deeper insights into non-visual learning, including the emergence of perceptual categories. A rebound learning model and a neuro-Bayesian economy principle are proposed to explain the multidimensional learning effects. The results provide new insights into the Nature-vs-Nurture interplay in rapid brain plasticity and neurorehabilitation.

Keywords: face learning, plasticity, blindness, lateralization, training, non-visual learning, spatial cognition, tactile memory, drawing training

Introduction and Research Questions

Recognizing and memorizing faces is of vital importance in society. Face perception involves a complex network of brain regions that have been intensively studied over the years by many groups.

It is well established that the occipito-temporal cortex is involved in face perception in the sighted (e.g., Kanwisher et al., 1997; Tarr & Gauthier, 2000; Haxby et al., 2001; Spiridon & Kanwisher, 2002). Although face activation in this part of the cortex has been reported in the late-onset blind (LB), contradictory results have been found in congenitally blind (CB) subjects (e.g., Pietrini et al., 2004; Goyal et al., 2006). Here we directly compared face vs (small) object and house representations in CB and LB subjects. Furthermore, we asked whether training on tactile face perception in adulthood would operate similarly in the two groups; these results were compared to a third group, consisting of sighted but temporarily blindfolded people (BF). Our training paradigm made it possible to address a set of further longstanding questions, such as whether those blind from birth have an innate face-schema or not, whether face processing in the blind is lateralized as in the sighted, and what plasticity mechanisms are engaged in non-visual face learning.

Does the occipito-temporal cortex respond to tactile objects in the sighted?

It has been shown that the occipito-temporal cortex responds to tactile objects in the sighted. For example, a study by Pietrini et al. (2004) investigated this question by using three object categories: faces, bottles, and shoes. When sighted subjects explored these objects haptically, the occipito-temporal cortex was significantly activated. Visual exploration, however, produced a much stronger activation. A comparative analysis showed a significant overlap between the activation patterns for the two sensory modalities. The same study also found high cross-modal correlations between the visual and tactile object-category (bottles and shoes) responses, but not for faces.

How are faces represented in the occipito-temporal cortex of the blind?

Contradictory results have been reported for the occipito-temporal cortex of the blind. Goyal et al. (2006) compared the activation for a plastic doll’s head and a well-matched non-face object, explored for 15 s by touch. This study did not find cortical activation among the congenitally blind or the sighted, but only in the late-onset blind. Only one doll face was used, and there was no behavioral task. In contrast, Pietrini et al. (2004) did observe cortical activations in the congenitally blind as well, using a larger number of face masks in two conditions: one-back repetition detection and simple tactile exploration. The instructions may be a critical factor in these differences.

Learning faces without vision?

To the best of our knowledge, however, no study has compared the congenitally blind and late-onset blind on tactile face perception, nor done so before and after special training. Thus, in this paper, we compare congenitally blind, late-onset blind and temporarily blinded (blindfolded) sighted people. Does the brain of blind individuals have a specialized processing mechanism for faces in spite of the fact that they don’t use faces in social communication? Is the cortical representation the same across groups of different visual status?

Main research questions

To fill the knowledge gap, in this study we address the following questions:

Face perception without vision:

How are faces processed non-visually as a function of visual experience and, correspondingly, of the level of development of the visual system? We compare activation for tactile faces in CB vs. LB, with BF as control.

Face perception vs. nonface perception without vision:

If there are any inter-group differences in face perception, are they specific to the face category or not? We compare faces with nonface categories (small objects and houses).

Learning face perception in late adulthood:

Could an elaborated training, such as the Likova Cognitive-Kinesthetic Training, significantly affect non-visual face processing and provide deeper insights into the effect of perceptual experience on the brain mechanisms underlying ‘learning’ of face perception? To establish the specificity or generality of the potentially involved plasticity mechanisms, we measure the pre/post-training change of BOLD response among the three subject groups for each of the three perceptual categories.

Face-schema innateness:

Do the blind from birth have an innate face-schema? We evaluate and correlate behavioral and fMRI changes before and after training.

Lateralization for face perception in the blind:

Inter-hemispheric asymmetry has been established for face perception in the sighted. Is there such an asymmetry in the blind? We compare pre/post-training BOLD response changes between the two hemispheres across all three perceptual categories and all three visual deprivation groups.

Methods

The Cognitive-Kinesthetic Drawing Training in Blindness

Although neuroimaging studies on blindness go back many years, they typically do not include any training, but instead simply compare the blind with the sighted.

In contrast, the Likova lab takes a different approach. Our studies have shown that a proper training method can effectively and efficiently drive the strong brain reorganization needed in the blind, thus allowing its study in the lab. We employ the Cognitive-Kinesthetic (C-K) Drawing Training, developed by Likova (e.g., 2010, 2012, 2013, 2014, 2017, 2018), which is uniquely based on drawing, although drawing is traditionally considered a ‘visual’ art. The experimental platform includes the first multi-sensory custom Magnetic Resonance Imaging (MRI)-Compatible Drawing Lectern, a Fiber-Optic Motion-Capture System adapted to the high-resolution needs of the drawing, and a battery of custom advanced analyses.

Drawing as a training method

The Cognitive-Kinesthetic Drawing Training is a complex interactive procedure, based on a novel conceptual framework (Likova, 2012, 2013) that unfolds over five two-hour training sessions. It is described in Likova (e.g., 2012, 2013, 2013, 2014). A five-day two-hour C-K training protocol was applied to teach participants to haptically explore, recognize, memorize and reproduce free-hand from memory the explored raised-line configurations, in detail, including the fine facial characteristics in the face stimuli.

Pre/post-training functional MRI (fMRI) assessment

Such reproduction by drawing represents an explicit externalization of the formed memory. Before and after training, fMRI (Siemens 3T scanner) was run while the subjects were performing the following four tasks: (i) “Explore and Memorize”: haptic exploration of a set of raised-line tactile images of faces with the left hand, (ii) “Memory-Draw”: drawing of the explored images from memory with the right hand, (iii) “Control Scribble”: doodling/scribbling as a motor and memory control, and (iv) “Copy”: observational drawing, or ‘copying’ – drawing the image with the right hand while simultaneously ‘looking’ at it with the left hand. Each task lasted 20 s, and tasks were separated by a 20 s rest period. The fMRI experimental design is shown schematically in the left panel of Fig. 1. Tactile images of facial profiles differing from each other in both appearance and expression, plus two control nonface categories – (small) objects and houses – were used as stimuli. The custom MRI-compatible lectern was used for both stimulus presentation and drawing. The group blood-oxygen-level-dependent (BOLD) activation maps were projected on a Montreal Neurological Institute (MNI) average brain. First, a large-scale occipito-temporal (LSOT) region of interest (ROI) was defined (Fig. 2) in the posterior part of the ventral pathway. Then, five sub-regional divisions of the LSOT were delineated (Fig. 4). This paper presents the results from the “Explore and Memorize” task only.
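As an illustration of the block timing described above, the following minimal Python sketch lays out one cycle of the four listed tasks, each lasting 20 s and followed by 20 s of rest. The task order, the choice to start with a task rather than a rest period, the single cycle, and the names `Block` and `build_schedule` are assumptions made for illustration only; the actual sequence is the one shown in Fig. 1.

```python
from dataclasses import dataclass

# Task labels and the 20 s durations are taken from the text;
# their order within a run is an assumption for this sketch.
TASKS = ["Explore and Memorize", "Memory-Draw", "Control Scribble", "Copy"]
TASK_S = 20  # task duration in seconds
REST_S = 20  # rest duration in seconds


@dataclass
class Block:
    label: str
    onset_s: int
    duration_s: int


def build_schedule(tasks, task_s=TASK_S, rest_s=REST_S):
    """Return task blocks, each followed by a rest block, with onsets in seconds."""
    schedule = []
    t = 0
    for task in tasks:
        schedule.append(Block(task, t, task_s))
        t += task_s
        schedule.append(Block("Rest", t, rest_s))
        t += rest_s
    return schedule


schedule = build_schedule(TASKS)
for block in schedule:
    print(f"{block.onset_s:>4d} s  {block.duration_s:>2d} s  {block.label}")
```

A table of this kind (label, onset, duration) is the usual starting point for building the task regressors in a block-design analysis.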

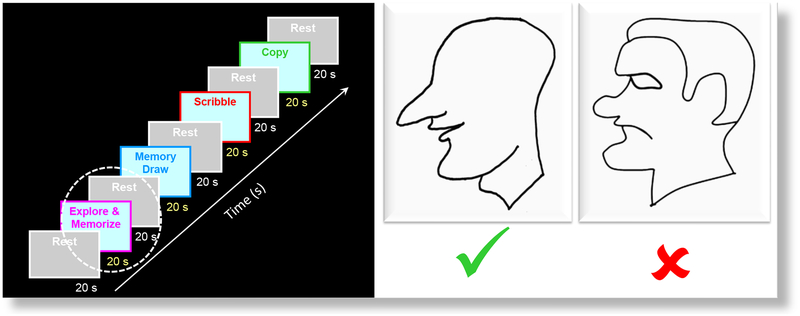

FIG. 1.

Left panel. The fMRI experimental design includes a sequence of five tasks, separated by rest periods. This paper focusses on the “Explore and Memorize” task only (white dashed outline). Right panel. Examples of the raised-line face images. Initially, the congenitally blind usually had no interest in faces and their structural understanding of face images was quite limited; many were not even able to relate the organization of facial parts to their own faces. Some wondered how the sighted can enjoy any drawings, as drawings are “flat” images. Thus, it was remarkable that after only a few days of the Cognitive-Kinesthetic Training, they became able to easily recognize and fully understand faces, their appearance, and even facial expressions. Furthermore, some CB developed such strong empathy that they refused to work with the ‘unhappy’ face (right, red cross) but liked the ‘happy’, smiling face and didn’t at all mind his boldness (left, green checkmark).

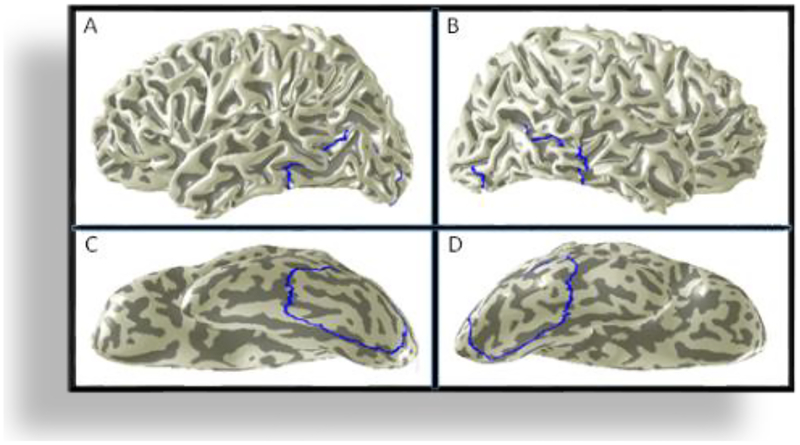

FIG. 2.

To measure the large-scale effect, a large-scale ROI was defined in the occipito-temporal cortex (LSOT; blue outline). The upper panels show the location of the LSOT in the left (A) and right (B) hemispheres. The lower panels show the LSOT on the inflated surfaces of the left (C) and right (D) hemispheres, rotated to show the ventral side. The LSOT comprised ~1000 voxels per hemisphere.

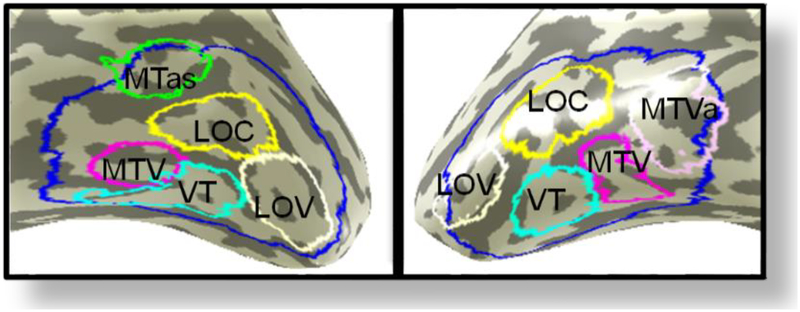

FIG. 4.

Sub-divisions of the LSOT are outlined in different colors. Four ROIs are bilaterally symmetrical: LOC (yellow), LOV (white), VT (cyan), and MTV (magenta). The MTas (green) is unique to the left hemisphere, and the MTVa (violet) to the right hemisphere.

The MRI acquisition and data analyses were as in Likova (2012).

Results

Haptic face perception

Opposite lateralization and inversed-sign learning effects in non-visual face perception

To measure large-scale effects, a large-scale ROI was defined in the occipito-temporal cortex (Fig. 2). Figure 3 clearly demonstrates involvement of the occipito-temporal cortex in non-visual face processing. Remarkably, the five-day two-hour C-K training caused highly significant changes in the initial pre-training responses in all subject groups.

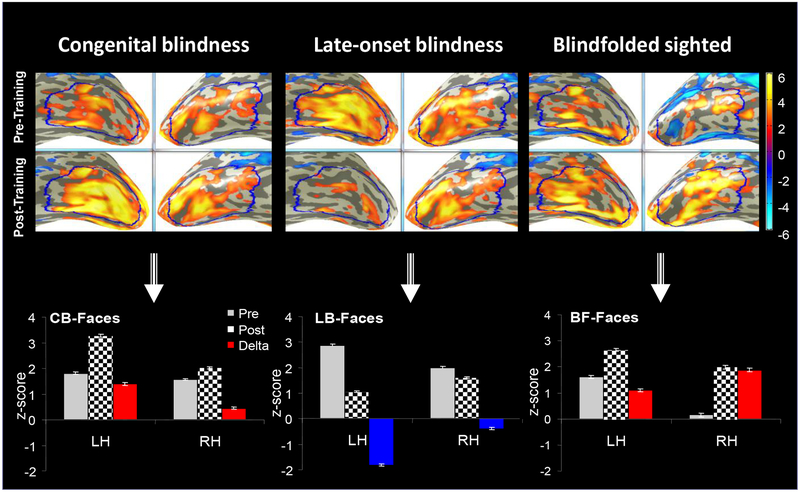

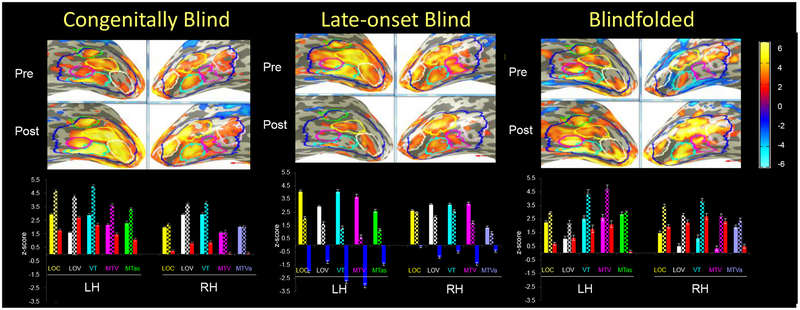

FIG. 3.

Average activation maps of the two blind groups (CB and LB) and of the blindfolded group (BF) in the LSOT area of the visual ventral pathway. LSOT regions in the left (LH) and right (RH) hemispheres (blue) were defined to measure the large-scale effects. The grey bars represent the pre-training BOLD response bilaterally; the checkerboard bars represent the post-training BOLD response. The training effect is measured by the difference between the post-training and pre-training responses. Increased training effects are shown by red bars, and reduced training effects are shown by blue bars. Note the opposite sign effect in the acquired, or late-onset, blindness. The training effect was stronger in the left hemisphere of the blind groups, but in the right hemisphere of the blindfolded-sighted.

Inversed-sign learning effect in congenital blindness vs late-onset blindness

The strongest learning change was in the congenitally blind group: an almost doubled strength of the BOLD response in the left hemisphere after training. In contrast, the training led to a dramatic signal drop in the same hemisphere in the late-onset blind. Thus, the pattern of changes in CB and LB was exactly opposite. What about the blindfolded sighted: would they be more similar to the late-onset blind, as might be expected? The answer is “no”: in terms of training effects, BF were more similar to CB than to LB.

Opposite inter-hemispheric effect in blind vs sighted

Furthermore, a comparison between all three groups showed that, while the two blind groups demonstrated a stronger training effect in the left hemisphere, the BF group demonstrated a stronger training effect in the right hemisphere.
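The two quantities compared in this section, the training effect (post-training minus pre-training response, as defined in the caption of Fig. 3) and the hemisphere in which it is stronger, can be sketched in a few lines of Python. The numbers below are hypothetical values in arbitrary units, chosen only to mimic the qualitative pattern described in the text; they are not the measured BOLD responses. Comparing absolute magnitudes, so that a negative effect can still count as the stronger one, is likewise an assumption of this sketch.

```python
def training_effect(pre, post):
    """Training effect as defined in the text: post-training minus pre-training response."""
    return post - pre


def stronger_hemisphere(effect_lh, effect_rh):
    """Return the hemisphere whose training effect has the larger absolute size."""
    if abs(effect_lh) == abs(effect_rh):
        return "none"
    return "LH" if abs(effect_lh) > abs(effect_rh) else "RH"


# Hypothetical (pre, post) responses in arbitrary units; NOT the study's data.
example = {
    "CB": {"LH": (1.0, 1.9), "RH": (1.0, 1.2)},
    "LB": {"LH": (1.5, 0.6), "RH": (1.0, 0.9)},
    "BF": {"LH": (1.4, 1.6), "RH": (0.8, 1.3)},
}

summary = {}
for group, hemis in example.items():
    lh = training_effect(*hemis["LH"])
    rh = training_effect(*hemis["RH"])
    summary[group] = (lh, rh, stronger_hemisphere(lh, rh))
    print(f"{group}: LH {lh:+.2f}, RH {rh:+.2f}, stronger in {summary[group][2]}")
```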

Sub-divisions of the LSOT region: Differential tactile-face responses

Is the training effect uniform across the whole LSOT region? Looking at the activation across the region (Fig. 3), one can see that this is not the case. Several sub-regional divisions of the LSOT were defined, based on anatomy, its functional pattern, learning-driven change, and Talairach coordinates.

Figure 4 shows the functional sub-divisions of the LSOT. Four ROIs are bilaterally symmetrical: the lateral occipital complex (LOC, yellow), lateral occipital ventral area (LOV, white), posterior ventrotemporal area (PVT, cyan), and mediotemporal ventral area (MTV, magenta). The MTas (green) is unique to the left hemisphere, and the MTVa (pink) to the right hemisphere.

Inverse learning effect on face perception in CB and BF vs LB across all sub-divisions of LSOT

Congenitally blind (Fig. 5, left panel).

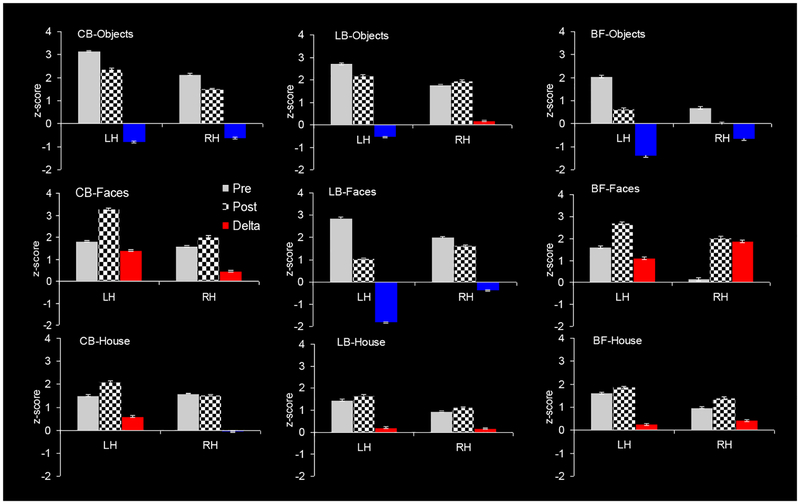

FIG. 5.

For each sub-divisional ROI within LSOT, a triplet of bars is shown, representing the BOLD responses (i) pre-training, (ii) post-training and (iii) the change from pre-to-post. The pre-training responses (the first bar in each group) are shown in filled colors, corresponding to the color coding of the respective ROI (see Fig. 4). The post-training signals (second bar) are shown in checkerboard bars. The training-caused change is shown in either red (positive change, enhancement) or in blue (negative change, reduction). LH - Left hemisphere; RH – Right hemisphere.

In the congenitally blind, all non-visual training changes were positive (red bars). In contrast to the initial bilaterally-balanced activation, however, these changes were significantly stronger in the left hemisphere. More detailed analysis shows that this effect is not uniform but is carried by the more posterior VT and LOV regions, followed by the LOC, while the more anterior MTV and MTa regions show weaker training effects. The same tendency of a stronger enhancement effect in the posterior sub-regions is observed in the right hemisphere, but it is (although significant) much smaller than in the LH; the two anterior regions did not change significantly.

Late-onset blind (Fig. 5, middle panel).

As in the LSOT as a whole (Fig. 3), the training caused a totally inverse effect in the LB group. Instead of increasing, the activation was reduced bilaterally. However, while the reduction was strongly expressed in all left-hemisphere ROIs, it was weak to negligible in the right-hemisphere ROIs.

Another difference from the CB was that in the left hemisphere not only the posterior but also the anterior ROIs were strongly affected. In summary, in both CB and LB, the ROIs in the left hemisphere were strongly changed but in an opposite manner, while the right hemisphere was much less or not at all affected in either of the blind groups.

Blindfolded sighted (Fig. 5, right panel).

As in the congenitally blind, the non-visual post-training responses were enhanced in the blindfolded sighted, though from an initial level that was strongly left-hemisphere dominated (in contrast to the bilaterally balanced initial level in CB).

Opposite lateralization of non-visual face-learning changes:

In both blind groups, the training-driven changes in face perception were larger in the left hemisphere. This was in contrast to the blindfolded-sighted, who showed a larger training-driven change in the right hemisphere. Interestingly, this is consistent with the established right-hemispheric dominance for faces in the sighted, despite the left-hemisphere dominance of pre-training activation. This switch may indicate that the initial BF processing was based on local features rather than the face-specific configuration.

What is the cause of the inverse-sign training effect in non-visual face-perception?

Is it the visual status (CB, LB, BF group), or is it instead the particular stimulus category (faces) that causes these dramatic inverse-sign learning effects?

Alternatively, is it an interaction between subject group and stimulus category? To address these questions, we looked separately at the pre/post-training responses in LSOT for each of the three stimulus categories - faces, objects and houses. These responses were compared across the visual-status groups.

If it is only an effect of the different visual status, “Objects” and “Houses” should show a training pattern similar to that for “Faces” in the respective groups. If, instead, it is an effect of stimulus category, the response changes for the nonface categories will be quite different from that for faces. Here we look at the large-scale effects in the LSOT ROI.
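The logic of these two predictions can be written down as a small decision rule. The Python sketch below takes, for each stimulus category, the sign of the training-driven change in each group and reports in which categories CB and LB changed in opposite directions. The function names and both sign patterns are hypothetical illustrations of the two scenarios, not measured values.

```python
def categories_with_inversion(sign, group_a="CB", group_b="LB"):
    """Return the categories in which the two groups changed in opposite directions."""
    return [
        category
        for category, by_group in sign.items()
        if by_group[group_a] * by_group[group_b] < 0
    ]


def interpret(sign):
    """Map a pattern of change signs onto the two explanations discussed in the text."""
    inverted = categories_with_inversion(sign)
    if not inverted:
        return "no sign inversion"
    if len(inverted) == len(sign):
        return "visual status alone"
    return "category-specific: " + ", ".join(inverted)


# Hypothetical sign patterns (+1 = increase, -1 = decrease); not measured values.
status_only = {
    category: {"CB": +1, "LB": -1, "BF": +1}
    for category in ("faces", "objects", "houses")
}
face_specific = {
    "faces": {"CB": +1, "LB": -1, "BF": +1},
    "objects": {"CB": -1, "LB": -1, "BF": -1},
    "houses": {"CB": +1, "LB": +1, "BF": +1},
}

print(interpret(status_only))
print(interpret(face_specific))
```

If the inversion appears in every category, visual status alone would be sufficient to explain it; if it appears only for some categories, the effect depends on the stimulus category as well.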

Objects (Fig. 6, top row):

FIG. 6.

Non-visual training effect in each hemisphere as a function of stimulus category (objects, faces and houses) and visual-status group (congenitally blind, CB; late-onset blind, LB; and blindfolded, BF). As above, the triplet of bars represents the BOLD responses: (i) pre-training (gray), (ii) post-training (checkerboard), (iii) change from pre-to-post (color). The training-caused change is shown in either red (positive change, enhancement) or in blue (negative change, reduction). LH - Left hemisphere; RH – Right hemisphere. Note that the inverse learning response for LB vs CB is present only in the face category (second row).

Interestingly, the CB, LB and BF groups all show response changes of similar strength and the same direction (reduction) for (small) object perception.

Faces (Fig. 6, middle row):

Fig. 6 shows that faces are the only perceptual category that exhibits the inversed-sign effect we have described in the previous section. This implies a face-specific nature of the inversion. Furthermore, the strongest response changes were produced by face learning.

Houses (Fig. 6, bottom row):

The house category generated a same-direction response change across CB, LB and BF. However, in contrast to the object category, the change was very weak to non-existent, except in the left hemisphere of CB.

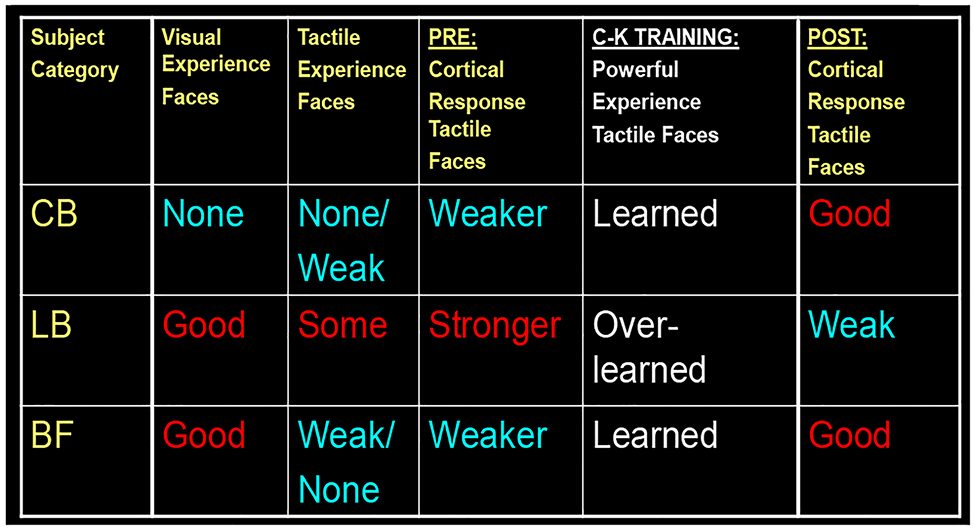

In summary, all three visual-status groups showed similar training effects for the nonface categories (objects and houses), but different effects for the face category. Thus, faces stood out as a special learning case. It is particularly interesting that a seemingly unlikely pair, CB and BF, showed the same type of learning change (response increase), although exhibiting opposite hemispheric asymmetry. CB and BF are an unlikely pair from the viewpoint of visual status: CB have never had any vision, while BF have always had full vision. What they have in common is the lack of experience with non-visual faces: faces are of great importance for BF, but their experience with them is visual; the CB have lifelong non-visual experience, but none or highly limited experience with non-visual faces, which don’t have the high social value for them that they have for the sighted BF. Thus, BF and CB are in a closer experience-based position before the C-K training. In some sense, they both are just starting to learn (non-visual) face perception (see Fig. 7, left and right plots), and are thus at the beginning of its developmental trajectory.

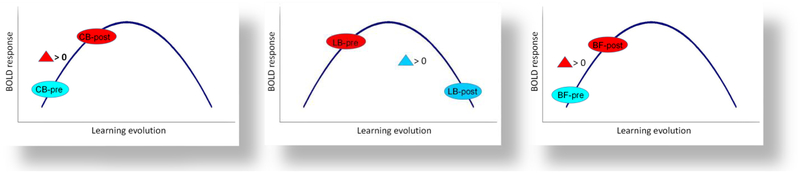

FIG. 7.

We propose a Rebound Learning Model to explain the observed inverted-U curve for learning-driven activation of non-visual face perception. It clearly manifests when comparing (i) a case in which at least one of the variables is new and has not been learned before starting training – e.g., either the perceptual category (as faces in the case of CB) or the use of a particular sensory modality (as the use of the tactile modality for face perception in the BF), vs. (ii) a case in which all these variables have already been learned and used to some degree, so that they have become “overlearned” after training. This model is inspired by our data on brain activation changes driven by the Cognitive-Kinesthetic face learning (see Figs. 5, 6; Table 1).

This is not the case with the LB group. They have had years of visual face perception, they are also used to the importance of faces, have more interest in them, and at the same time they already have had years of tactile experience. Thus, before training LB are at a higher point along the learning curve of (non-visual) face-perception (Fig. 7, middle panel). Table 1 schematically summarizes these points.

Table 1.

Multisensory experience with faces

Rebound Learning Model to reflect inverted U-shaped learning activation

Our data imply that the training-induced changes of neural activation for the (non-visual) learning of face perception follow an inverted ‘U’-shape law. Such an inverted ‘U’-shape would predict the observed increase for CB and BF, and reduction for LB. With training, LB reach neural optimization (an “overlearned” state beyond the peak of the learning curve) and find themselves on the decreasing slope of that inverted-U function (see Discussion and Conclusions section). Thus, although the learned skill continues to improve, the increased efficiency provided by the overlearning allows the same performance to be produced by reduced neural activation.
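The qualitative prediction of the inverted-U account can be illustrated with a toy calculation. In the Python sketch below, activation is modeled as a parabola over a normalized learning stage between 0 and 1, and training moves every group the same distance along that axis. The functional form, the starting stages and the step size are all hypothetical choices made for illustration; the paper proposes the shape of the curve, not these particular values.

```python
def activation(stage):
    """Hypothetical inverted-U: activation peaks at stage 0.5 on a 0..1 learning axis."""
    return 4.0 * stage * (1.0 - stage)


def training_change(start_stage, step):
    """Change in activation when training advances the learner by `step` along the axis."""
    end_stage = min(start_stage + step, 1.0)
    return activation(end_stage) - activation(start_stage)


STEP = 0.3  # hypothetical advance produced by the training, identical for all groups

# Hypothetical starting stages: CB and BF begin near the start of the curve,
# LB begin close to its peak because of prior visual and tactile experience.
starts = {"CB": 0.1, "BF": 0.1, "LB": 0.45}

changes = {group: training_change(start, STEP) for group, start in starts.items()}
for group, change in changes.items():
    direction = "increase" if change > 0 else "decrease"
    print(f"{group}: {direction} ({change:+.2f})")
```

With these assumed values, the same training step yields an activation increase for the groups starting low on the curve and a decrease for the group starting near the peak, which is the sign inversion the model is meant to capture.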

Statistical analysis notes.

Large-scale LSOT ROI on faces (repeated ANOVA and post-hoc analyses):

An overall effect of Group (F = 102, p = 0), Training Session (F=215, p=0), Hemisphere (F=494, p=0).

CB: an increased training effect in LH (F=1032, p=0), and RH (F = 53, p=0)

LB: a reduced training effect in LH (F=1828, p=0), and RH (F=36, p=0)

BF: an increased training effect in LH (F=164, p=0), RH (F=388, p=0)

Sub-divisional level analysis on faces (repeated ANOVA and post-hoc analyses):

LH: except for MT in the BF group, all ROIs showed significant training effects

RH: several ROIs did not show significant training effect

LOC and MTVa did not show training effect in either blind group; MTVa did not show significant training effect in any of the three groups

Training effects on other stimulus categories:

Objects: CB and LB did not show the opposite training effects. BF showed a trend similar to that of CB

Houses: Training effects in the three groups were all weak or non-significant. CB-RH (F=3.26, p<0.07); LB-LH (F=4.54, p<0.03)

Discussion and Conclusions

Our results reveal a number of systematic differences in the evolution of the learning process as a function of visual status and stimulus category.

Occipito-temporal involvement in non-visual face perception

First, all subject groups - congenitally blind, late-onset blind and blindfolded - demonstrated activation for tactile face perception in the occipito-temporal part of the ventral pathway. This initial response underwent training-driven reorganization that was a function of visual status/development and experience.

Hemispheric asymmetry of face-learning effect

To our surprise, the lateralization of haptic face processing was completely different from that known for visual face processing. Before training, haptic processing of the faces in the sighted strongly activated the left hemisphere, in contrast to the usual right-hemisphere bias for visual face processing. A similar bias was evident in the late-onset blind, while the congenitally blind showed no interhemispheric difference. One interpretation of this result is that the initial haptic processing in the left hemisphere is not differentiated with respect to object category. It may therefore be predicted that the training should produce a stronger enhancement of the right hemisphere in the sighted (blindfolded) group, who already use it for higher-level face processing and could now learn to access these mechanisms in the case of non-visual input as well.

Lateralization of face-learning effect

In both blind groups, the Cognitive-Kinesthetic training on tactile face perception led to stronger changes in the left hemisphere, in contrast to the right hemisphere bias for the changes in the sighted (blindfolded). This training-driven lateralization was not evident for the nonface categories, however, suggesting profound functional differences specific to faces between the structurally homologous left and right regions.

To the best of our knowledge, this is the first study to discover interhemispheric differences in face processing without vision. Moreover, these differences were found through the investigation of the development of (non-visual) face perception in late adulthood driven by our Cognitive-Kinesthetic training.

In the sighted, hemispheric differences in (visual) face processing have been found previously (e.g., De Renzi, 1986; De Renzi et al., 1994; McCarthy et al., 1997; Sprengelmeyer et al., 1998; Gazzaniga & Smylie, 1983; Mattson et al., 2000; Rossion et al., 2000; de Gelder & Rouw, 2001; Verstichel, 2001; Barton et al., 2003; Meng et al., 2012). In particular, Meng et al. (2012) presented experimental evidence consistent with “the notion that the left hemisphere might be involved in rapid processing of face features, whereas the right hemisphere might be involved with ‘deep’ cognitive processing of faces” as proposed by the group of Gazzaniga (Miller et al., 2002; Wolford et al., 2004), “revealing the functional lateralization of analyses driven by bottom-up image attributes versus by the perceived category”.

Thus, one possibility is that our findings mean a stronger reorganization of rapid lower-level analysis driven by bottom-up image attributes in both late-onset and blind-from-birth participants (left hemisphere) vs. higher-level, deeper cognitive processing of perceived face category in the sighted.

There is, however, an alternative, based on the fact that in the sighted, the respective left-hemispheric region is very much “taken over” by “grapheme/word-form” processing. In other words, in the sighted, the grapheme/word-form areas have a lateralization opposite to that for faces. In the blind, Braille writing has shown engagement of the homologous word-form area (Likova et al., 2016), but in spite of that, there still is the possibility that in the blind the left-hemisphere face processing regions remain less specialized for such nonface functions, and are thus open to strong developmental changes under our non-visual face learning.

Inversed sign of the face learning effect in the late-onset blind

Importantly, this learning-based change was positive in the congenitally blind group bilaterally, but negative in the late-onset blind group, with this unexpected sign-inversion being specific to face perception but absent for the nonface categories. A further surprise came from the finding that similarly to the congenitally blind, the blindfolded-sighted controls showed increased post-training response for faces (although with opposite hemispheric lateralization). This unique inversed training effect for faces in the late-onset blind vs. congenitally blind and blindfolded suggests different stages of brain plasticity in the ventral pathway specific to the face category.

Rebound Learning Model: Proposal of an inverted-U learning curve to explain the inversed-sign plasticity changes between the blind groups

We propose an Inverted-U activation function for learning (Fig. 7). It clearly manifests when comparing a case in which at least one of two variables is novel before training – e.g., either the perceptual category (as faces for the congenitally blind) or the particular use of sensory modality (as the tactile modality for face perception in the BF), vs a case in which these variables have already been learned to some degree and thus, they become “overlearned” after training. The increased efficiency resulting from the overlearning allows for reduced neural activation as the learning progresses. This model is based on the data we have accumulated on training-driven brain activation changes (see Figs. 5, 6 and Table 1), which are analogous to those reported in other kinds of learning studies (e.g., Jenkins et al., 1994).

This learning model contrasts with the arousal function from the 1908 study of behavioral performance (in “a dancing mouse”) by Robert Yerkes and John Dodson, which resulted in the Inverted-U model known as the Yerkes-Dodson Law. Whereas behavioral performance decreases as the stimulus strength increases beyond the optimal arousal level, the opposite is the case for the Rebound Learning Model, in that the behavioral performance continues to improve while the neural activation signal decreases.

Our data further expand this model by revealing that an Inverted-U curve operates in the realm of brain plasticity and training-based reorganization of functional response of the human brain.

Proposal of a Neuro-Bayesian Economy Principle underlying the inverted-U learning activation curve

We further propose that the Inverted-U function we have found reflects the operation of a “Neuro-Bayesian Economy Principle” throughout the stages of learning. Thus, we postulate that learning implies reaching an optimal “neuro-architectural” solution in terms of minimum neural resources being involved for achieving maximum task performance efficiency. In a simplified, schematic form, this principle implies that during the earlier stages of learning, while the brain is in the mode of searching for the optimal neuro-architectural solution, it engages and “explores” an increased number of neural regions to test different network configurations, which leads to an increase in BOLD response. With overlearning, the optimal configuration is reached and the neural activation required to achieve improved performance is reduced.

The Cognitive-Kinesthetic Training greatly accelerated non-visual face learning, such that over the 5 sessions the neural organization in the CB and BF groups changed significantly. However, these groups did not reach the optimal solution, i.e., the peak of the learning curve. Instead, their brains were still in the mode of ‘testing’ further cortical regions and network configurations, thus producing an increasing BOLD activation (increased response across the LSOT area; Fig. 7, left and right panels, respectively; see Fig. 6 as well). In contrast, the late-onset blind had already had experience both with the perceptual category of faces (while sighted) and with the tactile modality (since becoming blind); most likely, faces remained important to them and they touched faces more often. They were therefore already at a higher point along the inverted-U trajectory of learning before training (Fig. 7, middle panel). Thus, the training ‘pushes’ them over the peak to the downward slope (similar to the tapping-sequence overlearning effect; see Jenkins et al., 1994; and to the evolution of blind drawing learning responses in the temporal lobe; see Likova, 2015). This is the state of overlearning, in which the brain has already found the optimal solution in terms of functional organization with minimal neural resources involved: we see this state manifested by a reduction in activation together with an improvement in performance.

Thus, after Cognitive-Kinesthetic training, “less is more” in the sense that the neural activation is reduced because the system has become more functionally economical for the late-onset blind.
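The qualitative logic of the inverted-U account can be illustrated with a small numerical sketch. Everything in it is an assumption made for illustration only: the Gaussian shape of the activation curve, the saturating performance curve, the parameter values, and the starting stages assigned to each group are hypothetical and are not fitted to the data in Figs. 5–7. The sketch shows only that the same training-induced shift along the learning axis can raise activation for groups starting below the peak and lower it for a group starting near the peak, while performance improves in every case.

```python
import math


def activation(stage, peak=1.0, width=0.6):
    """Hypothetical inverted-U (Gaussian-shaped) activation over learning stage."""
    return math.exp(-((stage - peak) ** 2) / (2 * width ** 2))


def performance(stage, rate=1.5):
    """Hypothetical performance curve that rises monotonically and saturates."""
    return 1.0 - math.exp(-rate * stage)


def training_effect(start, gain=0.6):
    """Return (activation change, performance change) when training
    moves a group from `start` to `start + gain` on the learning axis."""
    end = start + gain
    return (activation(end) - activation(start),
            performance(end) - performance(start))


# Assumed (not measured) pre-training positions on the learning axis:
# CB and BF start far below the peak, LB starts close to it.
groups = {"CB": 0.1, "BF": 0.2, "LB": 0.9}

for name, start in groups.items():
    d_act, d_perf = training_effect(start)
    print(f"{name}: activation change {d_act:+.3f}, "
          f"performance change {d_perf:+.3f}")
```

Under these assumed values, the activation change is positive for CB and BF and negative for LB, whereas the performance change is positive for all three, which is the pattern the Rebound Learning Model is meant to capture.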

Why are faces so special for the sighted?

Why are faces so different from other perceptual categories?

The answer is considered obvious – we are social beings. We interact with each other extensively through facial signaling.

Our results show that faces are special even without vision, at least in terms of learning changes

However, the blind do not see faces or use them as a source of significant social information, so are faces important to them at all, and do they have an innate face-schema? In particular, are faces important for the blind-from-birth, who have never visually perceived any facial characteristics or expressions and thus have never used this kind of information?

“Appreciation of visual images seems such an effortless process that we are not aware of the invisible work of powerful brain mechanisms that provide the artist with the ability to transform 3D objects into their 2D projections by abstracting just the right contours into a line drawing; neither are we aware of how complex is the ‘inverse transformation’ of such 2D drawings into an immediate understanding of the 3D objects that they represent” (Likova, 2012).

In contrast to the sighted or late-onset blind, many of our congenitally blind participants initially wondered how sighted people can enjoy any drawn image at all, given their own 3D haptic experience of the world, and asked “How can you enjoy visual art – it is flat!?” Thus, it was remarkable to see how rapidly, under the Cognitive-Kinesthetic Training, they developed facial understanding and became able to recognize drawn faces and their expressions much more easily. Some of the congenitally blind participants developed such strong face empathy that they refused to work with ‘unhappy’ faces, such as the one in Fig. 1 (right), but liked instead the ‘happy’ smiling face shown in the left panel of Fig. 1 (and didn’t at all mind his boldness). This means that face information can become important even to people who have never seen a face before. If they haptically explore a statue or tactile cartoon books, they would now have a reaction fully comparable to that of somebody exploring these visually.

Face perception is not restricted to the visual modality but is multisensory

These observations suggest that face perception is never visual-only, because of the central role of faces in empathy and embodiment. Face studies neglect the fact that proprioceptive perception of our own faces is always involved to some degree, so we propose that face perception is always multimodal. Neither the sighted nor the blind see their own faces; however, they are aware of their facial expressions, which forms a powerful although neglected form of ‘face perception’ [through proprioceptive encoding of (their own) facial activity]. If we think in terms of a model of “multi-modal integration” in which each modality has a different weight, vision would doubtless have the highest weight, but we suggest that proprioception (including efference copy) would be next.
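The weighted “multi-modal integration” idea can be sketched as a simple weighted average. This is a minimal illustration under stated assumptions, not a model proposed or tested in this study: the numerical weights, the inclusion of touch as a third modality, and the renormalization of the remaining weights when vision is unavailable are all hypothetical. Only the ordering (vision highest, proprioception next) follows the suggestion above.

```python
import math

# Hypothetical relative weights; only the ordering
# vision > proprioception follows the text.
BASE_WEIGHTS = {"vision": 0.6, "proprioception": 0.25, "touch": 0.15}


def effective_weights(available, base=BASE_WEIGHTS):
    """Renormalize the base weights over the modalities that are available."""
    kept = {m: w for m, w in base.items() if m in available}
    total = sum(kept.values())
    if total == 0:
        raise ValueError("no available modality carries any weight")
    return {m: w / total for m, w in kept.items()}


def integrate(evidence, base=BASE_WEIGHTS):
    """Weighted average of per-modality evidence values (each in [0, 1])."""
    weights = effective_weights(evidence.keys(), base)
    return sum(weights[m] * evidence[m] for m in weights)


# With vision available, it dominates the combined estimate.
sighted = effective_weights({"vision", "proprioception", "touch"})
# Without vision (assumed renormalization), proprioception dominates.
blind = effective_weights({"proprioception", "touch"})

print("sighted:", sighted)
print("blind:  ", blind)
```

In this sketch, removing vision leaves proprioception as the most heavily weighted channel, which is one way to express the suggestion that proprioceptive encoding of one's own face contributes to face perception in both the sighted and the blind.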

Insights into face processing modularity?

Our many years of experience show that, before the Cognitive-Kinesthetic training, congenitally blind people usually have no interest in faces. Most surprisingly, many of them don’t even have a proper understanding of facial configuration and the organization of facial parts within the facial structure as a whole. Early in training, when asked to recall and draw from memory, such participants suddenly feel “lost”, start wondering and – remarkably – can end up drawing the nose below the mouth, etc. When asked to use their tactile knowledge of the spatial configuration of their own faces, many were unable to do so. Furthermore, we found that the structure of some facial parts, in particular the mouth, is most challenging for them. The facial profile of the lips forms a pair of convex ‘bumps’, one above the other. However, many blind participants would misremember and draw only one ‘bump’ or even worse - more than two. Such observations provide novel insights into the neural “modules” of face processing and the effect of both visual deprivation and targeted learning.

Interestingly, studies on congenital prosopagnosia have shown behavioral and MEG/EEG evidence for impaired early/configurational processing in that clinical population (e.g., Carbon et al., 2007, 2010; Lueschow et al., 2015).

As described above, before the Cognitive-Kinesthetic training procedure many of our congenitally blind participants manifested a type of early-stage, configurational processing deficit and, as a consequence, higher-level deficits related to recognition, emotional response and, in particular, empathy. These findings support an (at least partial) modularity, but one that is revealed not by a permanent impairment but by a temporarily deprived face-perception functionality, as is evident from the behavioral and neural recovery after our 5-session training. Our findings have implications for future methods for improving face perception in clinical populations beyond blindness.

Is there an innate “face-schema” in people born without vision?

The observations in the CB group before the training might also be interpreted to imply the lack of an inborn “face schema”. However, the fact that after only a few days of the Likova Cognitive-Kinesthetic Training the same people demonstrated very good (haptic) face perception and memory and, moreover, strong empathy to facial expressions, implies otherwise. We propose that the training “unmasked” and “tuned up” a preexisting, unused face schema, similarly to other brain plasticity manifestations.

General Conclusions

The multidimensional findings of this study are of considerable importance for a better understanding of learning, brain lateralization, principles of brain plasticity, effective non-invasive rehabilitation strategies in blindness, and the mechanisms of the ongoing interplay between ‘Nature and Nurture’.

Acknowledgements

Supported by NIH/NEI R01EY024056 and NSF/SL-CN 1640914 grants to LT Likova. We thank Christopher W. Tyler for reviewing the manuscript.

Author Biography

Dr. Lora Likova, Ph.D., has a multidisciplinary background that encompasses studies in cognitive neuroscience and computer science, perception, psychophysics and computational modeling, with patents in the field of magnetic physics and many years of experience in human vision and visual impairment, neurorehabilitation research and human brain imaging. She is the Director of the “Brain Plasticity, Learning & Neurorehabilitation Lab” at The Smith-Kettlewell Eye Research Institute in San Francisco.

References

- Barton JJ, Cherkasova M (2003) Face imagery and its relation to perception and covert recognition in prosopagnosia. Neurology. 61(2): 220–5. doi: 10.1212/01.WNL.0000071229.11658.F8.

- Carbon CC, Grüter T, Weber JE, Lueschow A (2007) Faces as objects of non-expertise: processing of thatcherised faces in congenital prosopagnosia. Perception. 36(11): 1635–45.

- Carbon CC, Grüter T, Grüter M, Weber JE, Lueschow A (2010) Dissociation of facial attractiveness and distinctiveness processing in congenital prosopagnosia. Visual Cognition. 18(5): 641–54. doi: 10.1080/13506280903462471.

- Casey SM (1978) Cognitive mapping by the blind. Journal of Visual Impairment & Blindness. ????

- de Gelder B, Rouw R (2001) Beyond localisation: a dynamical dual route account of face recognition. Acta Psychol. (Amst) 1–3, 183–207.

- De Renzi E (1986) Prosopagnosia in two patients with CT scan evidence of damage confined to the right hemisphere. Neuropsychologia. 24(3): 385–389. doi: 10.1016/0028-3932(86)90023-0.

- De Renzi E, Perani D, Carlesimo GA, Silveri MC, Fazio (1994) Prosopagnosia can be associated with damage confined to the right hemisphere: an MRI and PET study and a review of the literature. Neuropsychologia. 32(8): 893–902. doi: 10.1016/0028-3932(94)90041-8.

- Deibert E, Kraut M, Kremen S, Hart J (1999) Neural pathways in tactile object recognition. Neurology 52: 1413–1417.

- Dial JG, Chan F, Mezger C, Parker HJ (1991) Comprehensive vocational evaluation system for visually impaired and blind persons. Journal of Visual Impairment & Blindness. ???

- Gaunet F, Thinus-Blanc C (1996) Early-blind subjects’ spatial abilities in the locomotor space: Exploratory strategies and reaction-to-change performance. Perception, 25(8), 967–981.

- Gazzaniga MS, Smylie CS (1983) Facial recognition and brain asymmetries: clues to underlying mechanisms. Ann. Neurol 5, 536–540.

- Goyal MS, Hansen PJ, Blakemore CB (2006) Tactile perception recruits functionally related visual areas in the late-blind. NeuroReport 17: 1381–1384.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001) Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293, 2425–2430.

- Jenkins IH, Brooks DJ, Nixon PD, Frackowiak RS, Passingham RE (1994) Motor sequence learning: a study with positron emission tomography. J Neurosci. 14(6): 3775–90.

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 17(11): 4302–11.

- Likova LT, Cacciamani L, Nicholas S, Mineff K (2017) Top-down working memory reorganization of the primary visual cortex: Granger Causality analysis. Journal of Vision 17(10), 594.

- Likova LT (2010) Drawing in the blind and the sighted as a probe of cortical reorganization. In IS&T/SPIE Electronic Imaging (pp. 752708–752720). International Society for Optics and Photonics.

- Likova LT (2012) Drawing enhances cross-modal memory plasticity in the human brain: a case study in a totally blind adult. Frontiers in Human Neuroscience, 6:44. doi: 10.3389/fnhum.2012.00044.

- Likova LT (2013) A cross-modal perspective on the relationships between imagery and working memory. Frontiers in Psychology, 3:561.

- Likova LT (2014) Learning-based cross-modal plasticity in the human brain: insights from visual deprivation fMRI. Advanced Brain Neuroimaging Topics in Health and Disease-Methods and Applications, 327–358.

- Likova LT (2015) Temporal evolution of brain reorganization under cross-modal training: insights into the functional architecture of encoding and retrieval networks. In IS&T/SPIE Electronic Imaging (pp. 939417–939432).

- Likova LT, Cacciamani L, Mineff KN, Nicholas SC (2016) The cortical network for Braille writing in the blind. Electronic Imaging, 2016, 1–6.

- Likova LT (2017) Addressing long-standing controversies in conceptual knowledge representation in the temporal pole: A cross-modal paradigm. Human Vision and Electronic Imaging 2017, 268–272(5). doi: 10.2352/ISSN.2470-1173.2017.14.HVEI-155.

- Likova LT (2018) Brain reorganization in adulthood underlying a rapid switch in handedness induced by training in memory-guided drawing. In Neuroplasticity (ed. Chaban V). doi: 10.5772/intechopen.76317.

- Lueschow A, Weber JE, Carbon CC, Deffke I, Sander T, Grüter T, Grüter M, Trahms L, Curio G (2015) The 170ms response to faces as measured by MEG (M170) is consistently altered in congenital prosopagnosia. PLOS One. 1–22.

- Mattson AJ, Levin HS, Grafman J (2000) A case of prosopagnosia following moderate closed head injury with left hemisphere focal lesion. Cortex. 36(1): 125–37. doi: 10.1016/S0010-9452(08)70841-4.

- McCarthy G, Puce A, Gore JC, Allison T (1997) Face-specific processing in the human fusiform gyrus. J. Cogn. Neurosci 5, 605–610.

- Miller MB, Kingstone A, Gazzaniga MS (2002) Hemispheric encoding asymmetry is more apparent than real. J. Cogn. Neurosci 5, 702–708.

- Joyce A, Dial J, Nelson P, Hupp G (2000) Neuropsychological predictors of adaptive living and work behaviors. Archives of Clinical Neuropsychology, 15(8), 665–665.

- Passini R, Proulx G, Rainville C (1990) The spatio-cognitive abilities of the visually impaired population. Environment and Behavior, 22(1), 91–118.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu W-HC, Cohen L, et al. (2004) Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci USA 101: 5658–5663.

- Rieser JJ, Guth DA, Hill EW (1986) Sensitivity to perspective structure while walking without vision. Perception, 15(2), 173–188.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M, De Gelder B, Zoontjes R (2000) Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. J. Cogn. Neurosci 5, 793–802.

- Spiridon M, Kanwisher N (2002) How distributed is visual category information in human occipital-temporal cortex? An fMRI study. Neuron 35, 1157–1165.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H (1998) Neural structures associated with recognition of facial expressions of basic emotions. Proc. Biol. Sci 265(1409): 1927–31. doi: 10.1098/rspb.1998.0522.

- Tarr MJ, Gauthier I (2000) FFA: a flexible fusiform area for subordinate-level visual processing automatized by expertise. Nat Neurosci. 3(8): 764–9. doi: 10.1038/77666.

- Tinti C, Adenzato M, Tamietto M, Cornoldi C (2006) Visual experience is not necessary for efficient survey spatial cognition: evidence from blindness. The Quarterly Journal of Experimental Psychology, 59(7), 1306–1328.

- Verstichel P (2001) [Impaired recognition of faces: implicit recognition, feeling of familiarity, role of each hemisphere]. Bull. Acad. Natl. Med (in French). 185(3): 537–49, discussion 550–3.

- Wolford G, Miller MB, Gazzaniga MS (2004) Split decisions. In The Cognitive Neurosciences III (ed. Gazzaniga MS), pp. 1189–1199. Cambridge, MA: MIT Press.

- Yerkes RM, Dodson JD (1908) The relation of strength of stimulus to rapidity of habit-formation. Journal of Comparative Neurology, 18(5), 459–482.