Significance

Conventional wisdom in the laboratory sciences suggests that PhD students develop their research skills as a function of mentorship from their faculty advisors (i.e., principal investigators; PIs). However, no prior research has empirically identified a relationship between specific PI practices and the development of research skills. Here we show that PIs’ laboratory and mentoring activities do not significantly predict students’ skill development trajectories, but the engagement of postdocs and senior graduate students in laboratory interactions does. These findings support the practice of “cascading mentorship” as differentially effective and identify a critical but previously unrecognized role for postdocs in the graduate training process. They also illustrate both the importance and the feasibility of identifying evidence-based practices in graduate education.

Keywords: graduate training, mentorship, research skills, postdocs, doctoral education

Abstract

The doctoral advisor, typically the principal investigator (PI), is often characterized as a singular or primary mentor who guides students using a cognitive apprenticeship model. Alternatively, the “cascading mentorship” model describes the members of laboratories or research groups receiving mentorship from more senior laboratory members and providing it to more junior members (i.e., PIs mentor postdocs, postdocs mentor senior graduate students, senior students mentor junior students, etc.). Here we show that PIs’ laboratory and mentoring activities do not significantly predict students’ skill development trajectories, but the engagement of postdocs and senior graduate students in laboratory interactions does. We found that the cascading mentorship model accounts best for doctoral student skill development in a longitudinal study of 336 PhD students in the United States. Specifically, when postdocs and senior doctoral students actively participate in laboratory discussions, junior PhD students are over 4 times as likely to have positive skill development trajectories. Thus, postdocs disproportionately enhance the doctoral training enterprise, despite typically having no formal mentorship role. These findings also illustrate both the importance and the feasibility of identifying evidence-based practices in graduate education.

Developing a highly skilled scientific workforce through doctoral training is critical to the advancement of science, but faculty who supervise these students consistently articulate reliance on their own experiences as students, rather than evidence-based practices, to inform their approaches to training (1–3). The doctoral advisor—typically the principal investigator (PI)—is often characterized as a singular or primary mentor who guides students using a cognitive apprenticeship model (4). Alternatively, the “cascading mentorship” model (5) describes the members of laboratories or research groups receiving mentorship from more senior laboratory members and providing it to more junior members (i.e., PIs mentor postdocs, postdocs mentor senior graduate students, senior students mentor junior students, etc.). However, it is unclear how successful each model may be in fostering the development of doctoral students’ research skills.

Understanding research skill development requires attention to both the growth of specific skills (e.g., experimental design, data analysis) and collective profiles that reflect consistent patterns of growth within and across skills over time. For example, discrete graduate training experiences such as teaching (6) or coauthoring with a faculty mentor (7) are associated with growth in certain research skills. Other analyses have documented differences in rates of skill development overall as a function of small differences in initial skill level (8). However, little is known about the sustained effects of programmatic features of doctoral training that predict collective skill development over the course of multiple years.

Despite the popularity of the classic, single-mentor model in characterizations of graduate training and its positive association with scholarly productivity (9), no studies to date have linked the quality of mentorship to differential learning or skill outcomes. Likewise, the cascading mentorship model has been described as a “signature pedagogy” of laboratory-based sciences (5), but no studies have tested the efficacy of that structure.

In this 4-y longitudinal study, we measured research skills of a cohort of 336 PhD students in the biological sciences who began their programs of study in fall 2014, drawn from 53 universities across the United States (see Materials and Methods for details regarding participant recruitment). Specific subdisciplines included cellular and molecular biology, developmental biology, microbiology, and genetics. Research skills were measured annually using sole-authored writing samples (e.g., draft manuscripts, qualifying or comprehensive examinations, dissertation proposals) that proposed or reported the results of empirical studies. Each writing sample was scored on all target skills by 2 blind raters using a validated rubric (0.818 ≤ intraclass correlation [ICC] ≤ 0.969; SI Appendix, Table S1).

The specific research skills measured for this study were: introducing/setting the study in context (INT), appropriately integrating primary literature (LIT), establishing testable hypotheses (HYP), using appropriate controls and replication (CTR), experimental design (EXP), selecting data for analysis (SEL), data analysis (ANA), presenting results (PRE), basing conclusions on results (CON), identifying alternative explanations of findings (ALT), identifying limitations of the study (LIM), and discussing implications of the findings (IMP). These skills were selected through a review of relevant literature and iterative development of criteria (10) as well as analyses from previous studies (6–8). We acknowledge that there are other important skills that likely contribute to expertise in the biological sciences, which were not measured in this study (e.g., bench skills). Written research products that make a scientific argument are an end goal of research. Thus, they represent authentic and ecologically valid embodiments of skill with the potential to directly impact scientific career trajectories (11, 12).

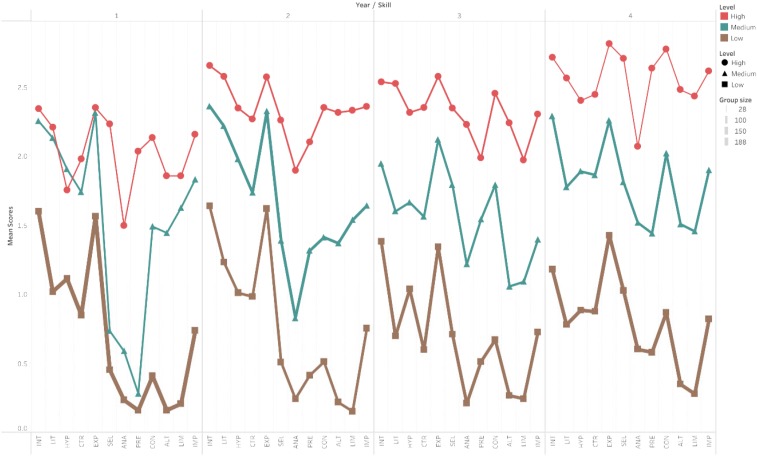

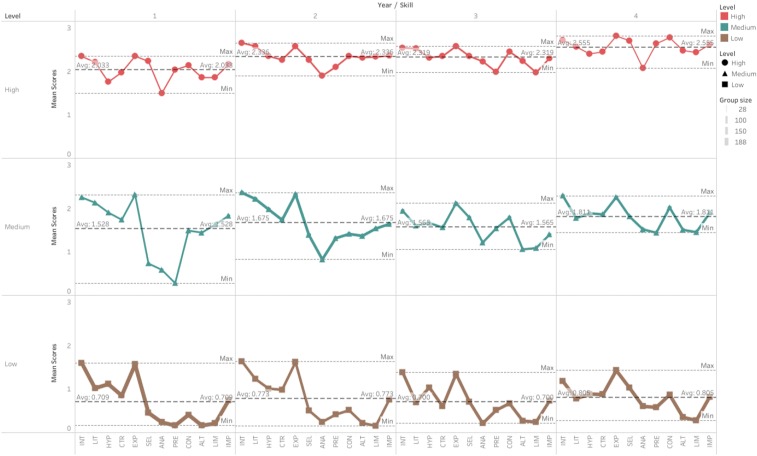

We applied latent profile transition analysis (13) (LPTA) to examine year-over-year growth in all research skills by identifying discrete patterns of performance among the target skills that were common across subgroups of participants within and across years. LPTA is a longitudinal, person-oriented technique for modeling change and stability in subgroup membership (i.e., latent class) across time. Following cross-sectional latent profile analyses that identified discrete participant subgroups of the sample based on constellations of the 12 research skills within each year, LPTA estimated the transitions of participants between these subgroups from year to year. Based on a comparison of model fit criteria (see full descriptions in Materials and Methods and SI Appendix, Table S7), results showed 3 latent subgroups among our sample of doctoral students with regard to their scores in a set of research skills: low-, medium-, and high-skill groups within each year (Fig. 1). The collective scores within each subgroup increased their average skill mean values over time in differing amounts on a 3-point scale: The low group increased its mean by 0.096, the medium group increased by 0.283, and the high group increased by 0.522 (Fig. 2), reflecting similar patterns to those reported in ref. 8. Students transitioned among these subgroups across time with the following transitions being considered positive: low- to medium-skill, low- to high-skill, medium- to high-skill, and high- to high-skill. From year 1 to 2, year 2 to 3, and year 3 to 4, 37, 24, and 7% of students had positive transitions, respectively.

Fig. 1.

High-, medium-, and low-skill latent profiles, showing estimated mean scores for each research skill within each year according to the final LPTA model.

Fig. 2.

Latent profiles by time, showing minimum, maximum, means, and number of participants in each profile.

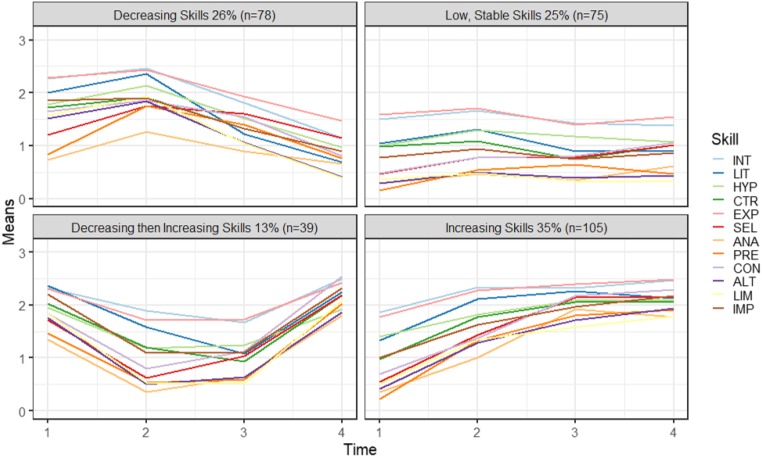

To capture the overall trajectories of skill growth across the 4 y of data collection, we examined latent growth curves [LGCs (14)] within a structural equation modeling framework that included all measured skills. We identified 4 distinct LGCs, with 1 reflecting positive linear growth over time (n = 105; 35.4% of the sample), 1 reflecting decreasing then increasing growth (n = 39; 13.1% of the sample), and 2 reflecting flat or slightly decreasing trajectories (n = 153; 51.5% of the sample) (Fig. 3). Participants with positive transitions at any transition point were between 2.28 (95% CI [1.48, 3.51]) and 4.62 (95% CI [2.93, 7.29]) times as likely to have a positive linear LGC over 4 y as any of the other 3 LGC trajectories, showing that positive transitions between years were substantially associated with general positive growth across all years.

Fig. 3.

Latent growth model results, reflecting common trajectories of skill development within each latent class.

Next, we tested the extent to which different features of participants’ doctoral training differentially predicted their year-over-year LPTA transitions and 4-y LGC trajectories. To do this, we collected survey data from participants on an annual basis to elicit details of their interactions with faculty as mentors and information about the roles that various individuals (i.e., PI, other faculty, postdocs, senior graduate students, junior graduate students, undergraduates, laboratory technicians) took on in the context of their laboratory. We used data from the second year of participants’ PhD programs as predictors because students began permanent laboratory placements with a designated faculty PI at the outset of their second year (15).

Using logistic regression models, we examined predictors of positive LPTA transitions and LGC trajectories (coded as 1), contrasted with all other transition and trajectory patterns (coded as 0). First, we tested whether laboratory roles during year 2 predicted positive transitions. The year 1 to year 2 transition was excluded from analysis, because data related to permanent laboratory features and faculty mentor interactions were not available prior to year 2. All laboratory roles for all possible laboratory members were included as predictors of positive transitions and trajectories. In addition, we interviewed n = 82 participants (24.4% of the total sample) on an annual basis to explore contemporaneous descriptions of participants’ experiences and interactions within their respective laboratories.

Results

Latent Profile Transition Analysis Results.

Our results indicate that the latent profiles yielded by the latent profile analyses tended to differ from each other mostly by skill level rather than by difference in shape. Specifically, participants in latent class 1 scored relatively high on all of the 12 research skills, and were accordingly referred to as high-skill students, comprising 13, 21, 21, and 9% of the sample for years 1, 2, 3, and 4, respectively. Participants in latent classes 2 and 3, on the other hand, scored moderately or low on all research skills relative to the rest of the sample. We therefore referred to these latent classes as medium-skill students, comprising 23, 41, 31, and 35%, and low-skill students, comprising 63, 38, 48, and 56%, of the full samples for years 1, 2, 3, and 4, respectively (Figs. 1 and 2).

The LPTA model examined how students transitioned among skill levels from year to year. Students showed positive, negative, and no transitions among latent classes across time, as shown in SI Appendix, Fig. S1. For example, 20% of students in the high-skill latent class in year 1 moved to the medium-skill latent class in year 2, indicating negative movement; 23% of students in the low-skill latent class in year 2 moved to the medium-skill latent class in year 3, indicating positive movement; and 67% of students in the low-skill latent class in year 3 remained in the low-skill latent class in year 4. The percentages given are based on the most likely latent class at each time point for each student. Means and SDs for the performance-based research skills measures across time are shown in SI Appendix, Table S2.

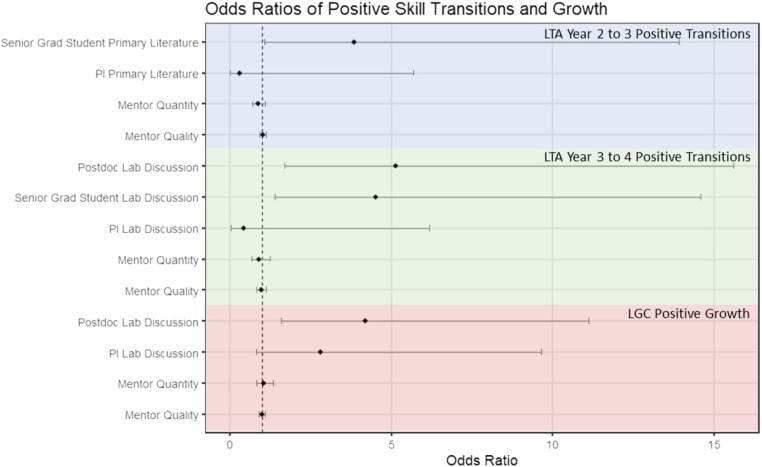

Results show that senior graduate students engaging with primary literature strongly predicted positive LPTA transitions for study participants in years 2 to 3 (odds ratio [OR] 3.85, 95% CI [1.07, 13.89]), and both postdocs and senior graduate students participating in laboratory discussions strongly predicted positive LPTA transitions in years 3 to 4 (OR 5.14, 95% CI [1.69, 15.60]; OR 4.50, 95% CI [1.39, 14.58]).
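To relate these odds ratios to the underlying logistic regression coefficients, the following sketch back-calculates a coefficient and its standard error from a reported OR and 95% CI. It assumes the intervals are Wald intervals computed on the log-odds scale, which the text does not state; the function names are illustrative and not part of the study's analysis code.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal


def log_odds_summary(odds_ratio, ci_low, ci_high):
    """Recover beta and SE on the log-odds scale from an OR and its 95% CI.

    Assumes a Wald interval of the form exp(beta +/- Z_95 * se).
    """
    beta = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    # For an interval symmetric on the log scale, the OR equals the
    # geometric mean of the two bounds; this serves as a consistency check.
    midpoint_or = math.sqrt(ci_low * ci_high)
    return beta, se, midpoint_or


def excludes_one(ci_low, ci_high):
    """True when the interval does not contain an odds ratio of 1."""
    return ci_low > 1.0 or ci_high < 1.0


# Values as reported in the paragraph above.
reported = {
    "senior students, primary literature (years 2 to 3)": (3.85, 1.07, 13.89),
    "postdocs, laboratory discussions (years 3 to 4)": (5.14, 1.69, 15.60),
    "senior students, laboratory discussions (years 3 to 4)": (4.50, 1.39, 14.58),
}

for label, (or_value, low, high) in reported.items():
    beta, se, midpoint = log_odds_summary(or_value, low, high)
    print(f"{label}: beta={beta:.2f}, se={se:.2f}, "
          f"midpoint OR={midpoint:.2f}, excludes 1: {excludes_one(low, high)}")
```

Under that assumption, each reported OR should sit close to the geometric mean of its interval bounds, and an interval that excludes 1 corresponds to a significant predictor at the 0.05 level.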

Latent Growth Curve Results.

Results from the LGC trajectory logistic regression likewise showed that postdocs participating in laboratory discussions strongly predicted the likelihood of participants belonging to the linear positive growth LGC (OR 4.20, 95% CI [1.59, 11.10]). Other predictors, including demographic characteristics, presence of postdocs in the laboratory (independent of activities), faculty interactions, and peer interactions were nonsignificant in predicting either positive LPTA transitions or LGC trajectories (SI Appendix, Tables S3–S5). Positive, significant predictors of positive transitions and trajectories are shown in Fig. 4.

Fig. 4.

Odds ratios with 95% confidence intervals displaying significant predictors of positive transitions (year 2 to year 3, year 3 to year 4) and skill trajectories (LGCs), as well as contrasting nonsignificant predictors. Odds ratios with confidence intervals containing 1 (indicated by vertical dashed line) are nonsignificant.

Qualitative Results.

Of the 82 interviews conducted with participants drawn from the larger study sample, 48.8% yielded at least one characterization of postdocs as valued mentors and instructors within the laboratory context. Four themes emerged related to how students interact with and receive support from postdocs. Specifically, we found that postdocs provide hands-on instruction in the laboratory (n = 18), give professional and academic feedback (n = 17), model how an academic career may look for the graduate student (n = 13), and provide personal/emotional support (n = 13). Representative quotes are provided in SI Appendix, Table S6.

Discussion

These findings indicate that PhD students in the biological sciences are 4.50 times as likely to have positive year-to-year LPTA transitions when senior graduate students are active participants in laboratory discussion. They are also 5.14 times as likely to have positive year-to-year LPTA transitions when postdocs are active participants in laboratory discussion. Similarly, they are 4.20 times as likely to have positive LGC trajectories when postdocs are active participants in laboratory discussion. Further, the qualitative data indicate that postdocs mentor doctoral students in myriad ways, most commonly by being present in the laboratory to provide ongoing and hands-on instruction and professional guidance. Notably, PI activities and reported faculty mentorship measures do not predict positive year-to-year transitions or overall positive trajectory. In combination with the extensive set of variables found to have no relationship to positive transitions and growth trajectories, our results suggest that active engagement in collective laboratory discussion by senior peers (i.e., senior graduate students and postdocs) better predicts PhD students’ skill development than the mentoring or laboratory activities of faculty mentors. As such, the cascading model of mentorship is not only a descriptive norm of doctoral student support in university-based laboratory environments (5) but also a differentially beneficial practice that uniquely predicts positive research skill development.

Our findings therefore have substantial implications for both programmatic doctoral training in the biological sciences and the conceptualization of the value that postdocs contribute to the larger research enterprise. As the practice of science has shifted toward larger team enterprises and an increasing pace and volume of workload, the nature of the PI’s role has shifted to one that often entails less direct contact with students (16, 17). Postdocs and others within the laboratory may step into the gap that is created, with unexpected dividends. Our findings suggest that adoption of a cascading mentorship model that encourages active engagement of postdocs within the laboratory as mentors to PhD students may be beneficial to student skill development. Accordingly, it is possible that providing training to postdocs in effective mentoring practices may further enhance the benefits to graduate students identified in this study.

Recent analyses of the postdoctoral role within the research enterprise indicate that postdocs are underpaid relative to the value they contribute to scholarly productivity (18). However, their total value within the laboratory may be substantially more than currently recognized based on their skill development contributions. Conversely, postdocs may realize value in terms of their own development from engaging in informal mentoring of graduate students. Previous research has identified benefits for graduate students’ research skill development from a combination of teaching and research activities over research as a sole focus (6). It may be that postdocs benefit similarly from their roles as mentors in the laboratory. Exploratory studies suggest that postdoctoral mentoring of student researchers may facilitate further thinking and risk taking on postdocs’ topics of research, along with the development of other skills, such as teaching and the use of scientific communication skills (19). Opportunities to develop such skills align with current recommendations for postdoctoral training (20, 21).

Materials and Methods

Participant Recruitment.

Study recruitment materials instructing prospective participants to contact the research team were disseminated in 2 phases. First, we contacted program directors and department chairs of the 100 largest PhD programs in the biological sciences across the United States as well as public flagship universities and minority-serving institutions (i.e., historically black colleges and universities and Hispanic-serving institutions) with PhD programs in the biological science subfields of interest. All program directors and department chairs were given information about the purpose of the study and asked to share recruitment materials with incoming PhD students in the fall of 2014. Next, the research team sent recruitment emails to several listservs, including those of the American Society for Cell Biology and the Center for the Integration of Research, Teaching, and Learning Network, for broader dissemination. All students who responded to these emails were entering PhD programs that we contacted in the first phase of recruitment, suggesting that recruitment efforts approached saturation at the institutional level. All prospective participants who contacted the research team were then screened to ensure that they met the criteria for participation and understood the expectations for participation. Participants provided signed informed consent as required by the Utah State University Institutional Review Board (IRB) for human subjects research under protocol 5888. Students received $400 annually as an incentive for study participation. The full procedure for this study was approved by the IRB.

In total, we recruited 336 participants from 53 institutions across the United States. Of the institutions represented, 42 are classified as R1 (highest research activity), 7 institutions are R2 (higher research activity), and the remaining 4 institutions fall in other Carnegie categories.

Data Collection.

Data for the present study were obtained through web-based surveys and the collection of single-authored writing samples via email. Both survey data and writing samples were collected annually during the first 4 y of the doctoral program.

After removing cases with missing data on all key variables and accounting for attrition (both from the study and the doctoral program), the present study relies on a longitudinal sample of n = 297 students. Most participants were female (n = 183), continuing-generation (n = 210), from majority racial/ethnic groups (n = 240), and domestic students (n = 237). Fewer participants were male (n = 114), first-generation (n = 83), from underrepresented racial/ethnic minority groups (n = 53), or international students (n = 57). Four students did not provide data on their generation status or racial/ethnic identity, and 3 did not provide data on international student status.

Measures.

Background variables.

During the first year of the study, students completed a demographic questionnaire that included questions about their race/ethnicity, gender, parents’ education level, and international student status.

Race.

Students indicated their race/ethnicity by selecting one or more of the following: American Indian or Alaska Native; Asian or Asian American; black or African American; Latino/a; Native Hawaiian or other Pacific Islander; white. Students’ responses were aggregated to create a measure of underrepresented racial/ethnic minority (URM) status (0 = majority; 1 = URM) where students who selected only a white and/or Asian identity were coded as majority; all other students were coded as URM.
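The aggregation rule above can be sketched as a small function. The category strings mirror the response options listed in the text; treating an empty selection as missing is an assumption made here for illustration, and the function name is hypothetical.

```python
# Response options that, alone or together, are coded as majority (0).
MAJORITY_CATEGORIES = {"white", "Asian or Asian American"}


def code_urm(selected):
    """Return 0 (majority), 1 (URM), or None when nothing was selected.

    Students who selected only white and/or Asian identities are coded 0;
    any selection that includes another category is coded 1.
    """
    chosen = set(selected)
    if not chosen:
        return None  # assumption: no selection is treated as missing data
    return 0 if chosen <= MAJORITY_CATEGORIES else 1


print(code_urm(["white"]))
print(code_urm(["white", "Asian or Asian American"]))
print(code_urm(["white", "Latino/a"]))
```

The subset test (`<=`) captures the "only white and/or Asian" condition directly: a multiracial response that includes any other category falls outside the majority set and is coded as URM.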

Gender.

As a proxy for gender, students reported their sex as female or male (female = 0; male = 1).

First-generation college status.

Students were asked to indicate the highest degree obtained by their parent(s); students who had no parent with a 4-y college degree were coded as first-generation (0 = continuing-generation; 1 = first-generation).

International student status.

Students also self-reported whether or not they were an international student (0 = no; 1 = yes).

Performance-based research skills.

To measure research skills, students submitted a sole-authored research product each year immediately following the spring semester of their doctoral training. Expectations that documents (i) were written within the preceding 4 mo and (ii) had not been edited or contributed to by others were clearly communicated. Consequently, writing samples were typically unpublished manuscripts, which may or may not have later been published in subsequent collaboration with others. Two independent reviewers rated each document on 12 research skills according to clearly defined rubric criteria. Rubric criteria drew heavily from prior studies (6, 10). Skills included:

- i) introducing/setting the study in context (INT)
- ii) appropriately integrating primary literature (LIT)
- iii) establishing testable hypotheses (HYP)
- iv) using appropriate experimental controls and replication (CTR)
- v) experimental design (EXP)
- vi) selecting data for analysis (SEL)
- vii) data analysis (ANA)
- viii) presenting results (PRE)
- ix) basing conclusions on results (CON)
- x) identifying alternative explanations of findings (ALT)
- xi) identifying limitations of the study (LIM)
- xii) discussing implications of the findings (IMP)

All raters reviewed criteria on a scale from 0 to 3.25. Interrater reliability as measured by intraclass correlations (2-way random effects) was good, 0.818 to 0.969. Exact ICC values are shown in SI Appendix, Table S1. Scores were averaged across raters to create a composite measure for each skill.
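As an illustration of the reliability and scoring steps described above, the sketch below computes a 2-way random-effects intraclass correlation and the rater-averaged composite for one skill. It assumes the absolute-agreement forms usually labeled ICC(2,1) and ICC(2,k); the text does not say which form was reported, and the ratings shown are invented toy values, not study data.

```python
def icc_two_way_random(ratings):
    """Return (ICC(2,1), ICC(2,k)) for an n-by-k table.

    Rows are rated documents and columns are raters. Uses the standard
    two-way ANOVA mean squares for the absolute-agreement definition.
    """
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)               # between-document mean square
    msc = ss_cols / (k - 1)               # between-rater mean square
    mse = ss_error / ((n - 1) * (k - 1))  # residual mean square

    single = (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
    average = (msr - mse) / (msr + (msc - mse) / n)
    return single, average


def composite_scores(ratings):
    """Average each document's scores across raters."""
    return [sum(row) / len(row) for row in ratings]


# Hypothetical scores from 2 raters on 5 documents for a single skill.
toy_ratings = [[1.0, 1.25], [2.0, 2.0], [3.0, 2.75], [0.5, 0.5], [2.5, 2.75]]
single, average = icc_two_way_random(toy_ratings)
print(f"ICC(2,1)={single:.3f}, ICC(2,k)={average:.3f}")
print(composite_scores(toy_ratings))
```

When the two raters agree exactly on every document, both coefficients equal 1; disagreement between raters lowers the single-measure coefficient more than the average-measure one.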

Lab roles.

Roles of other laboratory members were evaluated by asking students: In your research experience during your PhD program so far, who participates in:

- i) laboratory discussions to understand contemporary concepts in your field?
- ii) making use of the primary scientific research literature in your field (e.g., journal articles)?
- iii) identifying a specific question for investigation based on the research in your field?
- iv) formulating research hypotheses based on a specific question?
- v) designing an experiment or theoretical test of hypotheses?
- vi) developing the “controls” in research?
- vii) collecting data?
- viii) statistically analyzing data?
- ix) interpreting data by relating results to the original hypothesis?
- x) reformulating original research hypotheses (as appropriate)?

Students responded to each question by selecting all persons in the laboratory who participated in each task. Possible responses included principal investigator(s), other faculty, research scientists/postdocs, senior graduate students, junior graduate students, laboratory technicians, and undergraduate students. Affirmative responses were coded as 1. Empty responses, as long as the student answered other questions, were coded as 0. Items that were seen by the participant but left unanswered, or items that were not seen by the participant, were considered missing data. Responses to laboratory roles from year 2, after students entered a permanent laboratory, were included in the present study.

Faculty interactions.

We examined the role of student–faculty interactions, relying on previously developed items (22). The occurrence of faculty interactions was measured using a composite variable made up of 4 items from the annual surveys, asking students whether or not they do any of the following with program faculty: engage in social conversation; discuss topics in his/her field; discuss other topics of intellectual interest; and talk about personal matters. These items showed adequate reliability, McDonald’s omega = 0.71. Items were added together to form a scale ranging from 0 (little to no interactions) to 4 (many types of interactions).

The quality of faculty interactions was computed using a 6-item composite variable where students indicated their agreement with the following items: The faculty are accessible for scholarly discussions outside of class; I feel free to call on the faculty for academic help; the faculty are aware of student problems and concerns; I can depend on the faculty to give me good academic advice; I am treated as a colleague by the faculty; and the faculty sees me as a serious scholar. Students responded to each item using a 5-point scale ranging from strongly disagree to strongly agree. These items showed good reliability, McDonald’s omega = 0.85. Again, items were added together to form a scale, ranging from 6 (low-quality interactions) to 30 (high-quality interactions).
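The two faculty-interaction composites can be sketched as simple sums. This assumes the 5-point agreement items are scored 1 (strongly disagree) to 5 (strongly agree), which is implied by the stated range of 6 to 30 but not spelled out; the function names are illustrative.

```python
def occurrence_score(items):
    """Sum four yes/no items (1 = yes, 0 = no); the result ranges from 0 to 4."""
    if len(items) != 4 or any(x not in (0, 1) for x in items):
        raise ValueError("expected four items coded 0 or 1")
    return sum(items)


def quality_score(items):
    """Sum six agreement items scored 1 to 5; the result ranges from 6 to 30."""
    if len(items) != 6 or any(x not in (1, 2, 3, 4, 5) for x in items):
        raise ValueError("expected six items scored from 1 to 5")
    return sum(items)


# Hypothetical responses from one student.
print(occurrence_score([1, 1, 0, 1]))
print(quality_score([4, 5, 3, 4, 4, 5]))
```

The range checks guard against miscoded responses, so an out-of-range item raises an error rather than silently shifting the composite.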

Peer interactions.

We also examined the role of student–peer interactions using 2 subscales (22). The social interaction with peers was measured using 2 items that asked students to indicate (yes = 1; no = 0) whether they have interacted with peers in their department in the following ways: engage in social conversation and talk about personal matters. The academic interaction with peers was also assessed using 2 items that asked students to indicate (yes = 1; no = 0) whether they have interacted with peers in the following ways: discuss topics in his/her field and discuss other topics of intellectual interest. Items were summed to create each of the 2 subscales. These items yielded adequate to good reliability estimates, McDonald’s omega = 0.81 (academic) and 0.83 (social).

Interviews.

To provide a more nuanced interpretation of the findings, we used qualitative analysis to explore contemporaneous descriptions of postdocs’ interactions with graduate student participants. Specifically, we analyzed interview data from 82 participants who were recruited from the larger sample. The qualitative sample was largely representative of the quantitative survey sample; URM students and first-generation college students each represented nearly a third (29.3%) of participants, and women made up 68.3% of the sample. All 82 participants completed an hour-long, semistructured phone interview with a member of the research team during the summer after their fourth year in the doctoral program. The interview protocol focused on students’ experiences over the course of their doctoral program and included questions about experiences in the laboratory, along with probing questions asking specifically about interactions with PIs, faculty, postdocs, and other research staff. All interviews were recorded and transcribed verbatim.

Statistical Analysis.

All quantitative analyses accounted for students nested within university. Quantitative analyses were conducted in Mplus v8.1. Response variable rubric scores had 31% missing data at the first time point, up to 57% missing data at the fourth time point. A missing-values analysis [χ2(312) = 346.41, P = 0.09] showed that the missing data met the assumption for missing completely at random [MCAR (23)]. Missing data were handled more conservatively under missing at random [MAR (24)] assumptions by using a maximum-likelihood estimation algorithm robust to nonnormally distributed data (MLR).

The potential heterogeneity of doctoral student skill development was evaluated using 2 different methods: latent profile transition analysis and latent class growth analysis. Multinomial logistic regression analyses were conducted to examine positive transitions and positive growth. To account for multiple comparisons across the sets of logistic regression analyses, a false discovery rate correction was applied to results (25).
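The text does not name the specific false discovery rate procedure. As a sketch, assuming the widely used Benjamini-Hochberg step-up procedure, the correction can be written as follows; the P values in the example are hypothetical and are not taken from the study.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans, True where the null is rejected at FDR level q.

    Step-up rule: find the largest rank r (1-based, in ascending order of P)
    with p_(r) <= r * q / m, then reject the r smallest P values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            cutoff_rank = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            rejected[idx] = True
    return rejected


# Hypothetical P values from four predictors in one regression set.
example_p = [0.04, 0.001, 0.03, 0.20]
print(benjamini_hochberg(example_p, q=0.05))
```

In this toy example only the smallest P value survives the correction, even though three of the four fall below an uncorrected 0.05 threshold.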

Latent profile transition analysis.

Latent profile transition analysis is an extension of latent profile analysis (LPA) to longitudinal measures. LPA is a person-oriented technique used for identifying unobserved subgroups in a sample based on the patterns of means (and variances) of observed variables within a given time point. LPTA additionally evaluates the stability and mobility of subgroup memberships over time by evaluating the probabilities of individuals transitioning from one latent class at time t to another latent class at time t + 1 (13). This analysis allowed us to examine discrete transitions of individuals moving from one skill profile to another skill profile between consecutive time points.
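A descriptive version of these transition probabilities can be sketched by cross-tabulating each student's most likely class at consecutive time points. This is not the model-based LPTA estimation itself, which was run in Mplus; the class labels follow the low-, medium-, and high-skill groups described in the article, the positive-transition set follows the article's definition (low to medium, low to high, medium to high, and high to high), and the data are invented.

```python
from collections import Counter

LEVELS = ("low", "medium", "high")
# Transitions treated as positive, following the definition in the article.
POSITIVE = {("low", "medium"), ("low", "high"), ("medium", "high"), ("high", "high")}


def transition_matrix(classes_t, classes_t1):
    """Row-normalized proportions of moving from each class at t to each class at t + 1."""
    counts = Counter(zip(classes_t, classes_t1))
    matrix = {}
    for origin in LEVELS:
        row_total = sum(counts[(origin, dest)] for dest in LEVELS)
        matrix[origin] = {
            dest: (counts[(origin, dest)] / row_total if row_total else 0.0)
            for dest in LEVELS
        }
    return matrix


def is_positive(origin, dest):
    """Binary outcome coding: 1 for a positive transition, 0 otherwise."""
    return 1 if (origin, dest) in POSITIVE else 0


# Hypothetical most-likely class assignments for five students.
year_a = ["low", "low", "low", "medium", "high"]
year_b = ["low", "medium", "high", "high", "medium"]

for origin, row in transition_matrix(year_a, year_b).items():
    print(origin, {dest: round(p, 2) for dest, p in row.items()})
print([is_positive(a, b) for a, b in zip(year_a, year_b)])
```

Each row of the matrix sums to 1 when at least one student starts in that class, so a row reads as the distribution of destinations for students who began the interval at that skill level.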

Prior to conducting LPTAs, we first performed LPAs to identify distinct latent subpopulations among doctoral students who were scored on a set of 12 indicators of research skills at each assessment point (year 1 to year 4). We estimated LPA solutions with 1 through 5 latent classes, each using 500 starting values. We inspected different model fit criteria (Bayesian information criterion [BIC] and entropy) across solutions for selecting the best-fitting solution (SI Appendix, Table S7). BIC is recommended as the most powerful measure for evaluating competing models to determine the optimal number of latent classes, with lower values representing better model fit. Entropy is an indicator of the precision with which individuals are assigned to each latent class, with values close to 1 representing more accurate latent class assignments. Based on these measures, our results showed that the 3-class LPA model was the best fit to the data for all 4 assessment points. Although the values of BICs continued to decrease without actually reaching the minimum value, the plots of these values showed that the slope plateaued between 3 and 5 latent classes, indicating that the 3-class solution was better than other solutions. The 3-class model also had very high entropy (0.95, 0.94, 0.94, and 0.96 for years 1, 2, 3, and 4, respectively). Accordingly, we chose the 3-class LPA model as the measurement model for the subsequent LPTA models.
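The two fit criteria can be sketched as follows. The BIC formula is the standard one; the entropy function implements the normalized (relative) entropy statistic commonly reported by mixture-modeling software, which is assumed here to correspond to the values reported above. The inputs in the example are invented.

```python
import math


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion; lower values indicate better fit."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)


def relative_entropy(posteriors):
    """Normalized entropy in [0, 1] from per-person posterior class probabilities.

    Each row holds one person's probabilities of belonging to each of K
    classes. A value of 1 means every person is assigned with certainty;
    a value of 0 means every assignment is uniform across classes.
    """
    n = len(posteriors)
    k = len(posteriors[0])
    if k < 2:
        raise ValueError("entropy is defined for 2 or more classes")
    total = 0.0
    for row in posteriors:
        for p in row:
            if p > 0:  # the term p * ln(p) tends to 0 as p approaches 0
                total -= p * math.log(p)
    return 1.0 - total / (n * math.log(k))


# Hypothetical posterior probabilities for three students in a 3-class model.
toy_posteriors = [[0.98, 0.01, 0.01], [0.02, 0.95, 0.03], [0.05, 0.05, 0.90]]
print(round(relative_entropy(toy_posteriors), 3))
print(round(bic(log_likelihood=-100.0, n_params=5, n_obs=297), 2))
```

Because the BIC penalty grows with the number of parameters, adding a class improves BIC only when the gain in log-likelihood outweighs the added parameters, which is why a plateau in the BIC plot is read as a stopping point.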

After evaluating the profiles of skills within time, we examined how students transitioned between profiles across time, using LPTA. Two different LPTA models were evaluated: a model without assumptions about the model structure across time and a model that assumed measurement equivalence across time. Confirmation of measurement equivalence would indicate that the skill profiles are the same across time (e.g., high skills at time 1 are the same as high skills at time 2, etc.). The model without measurement equivalence across time best fit the data (SI Appendix, Table S8).

Latent class growth analysis.

Latent class growth analysis (LCGA) is a longitudinal, person-oriented analysis (14). The primary use of LCGA is to identify latent subgroups (or “classes”) of participants based on similar latent growth curve trajectories. Group membership is static and unchanging in LCGA because the goal of LCGA is to identify latent trajectories rather than latent transitions. This analysis allowed us to examine skill trajectories across all time points.

LCGA models with 1 to 6 latent classes were evaluated, each using 500 starting values. Models were compared using BIC and entropy (SI Appendix, Table S9) to determine the number of latent classes that best represented the skills trajectory data. As with the LPTA models, the BIC values continued to decrease as the number of latent classes increased. However, plots of the BIC showed that the slope plateaued at the 4-class solution, which was therefore chosen as the best-fitting model.

To characterize doctoral student skill development across latent classes, we examined the mean values of each skill at each time point within each latent class. Class 1 represented 26% of the sample and showed decreasing skill levels across time. Class 2 represented 25% of the sample and showed stable, low skill levels across time. Class 3 represented 13% of the sample and showed initially decreasing then increasing skill levels across time. Class 4 represented 35% of the sample and showed linear, increasing skill levels across time.

Multinomial logistic regression analysis.

Logistic regression analyses were used to examine whether the independent variables predicted positive transitions and positive skill trajectories. All variables (n = 78; SI Appendix, Tables S6–S8) were included as predictors of positive transitions between years 2 and 3 and between years 3 and 4. All variables were also included as predictors of the positive latent growth curve skill trajectory. Analyses were run as separate statistical models because of the relatively small sample size. Confidence intervals were adjusted post hoc using the false discovery rate (FDR) procedure (25) to maintain a familywise error rate of 0.05. Results for all independent variables predicting positive transitions between years 2 and 3 and between years 3 and 4, and positive skill trajectories, are presented in SI Appendix, Tables S6–S8.
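The FDR procedure cited here (25) is the Benjamini-Hochberg step-up method. The sketch below illustrates it on p-values, which is an assumption made for simplicity: the analysis above reports adjusted confidence intervals, and the authors' code is not reproduced here. The function name and the five example p-values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value to the smallest so that adjusted
    # values never increase as the raw p-values decrease.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted

# Hypothetical p-values for five predictors (not study results).
p_values = [0.001, 0.008, 0.039, 0.041, 0.60]
adjusted = benjamini_hochberg(p_values)
significant = [q <= 0.05 for q in adjusted]
```

In this example the third and fourth predictors fall below 0.05 before adjustment but not after, which shows why a correction matters when 78 predictors are tested in separate models.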

Qualitative analysis.

Data were analyzed using NVivo 12 software. Because this paper focuses on students’ experiences with postdocs and full-time research staff, we purposively selected transcripts from all participants who mentioned interactions with postdocs or other research staff in their interviews. To identify these participants, we first conducted exploratory analyses using a sample of 20 transcripts to identify the language students used to discuss relevant interactions in the laboratory. Based on this preliminary analysis, we used NVivo’s text search query function to find interviews in which participants used any variation of the following terms: “postdoc” OR “post doc” OR “post-doc” OR “lab manager” OR “research scientist.” Notably, the query also detected instances where an identified term was the stem of a word used in the interview (e.g., if students discussed “postdoctoral researchers,” this would also have been captured by the query). Finally, we closely reviewed the query results and removed any interviews or excerpts that were not relevant, which yielded 53 participants with relevant data.
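The logic of the term search can be approximated outside NVivo with a regular expression. The sketch below is an illustration only and does not reproduce NVivo's query engine or its stemming rules. The pattern and function name are hypothetical, and trailing word characters are allowed so that stems such as "postdoctoral" also match.

```python
import re

# Search terms from the query above; \w* lets each term act as a word stem.
PATTERN = re.compile(
    r"post[\s-]?doc\w*|lab manager\w*|research scientist\w*",
    re.IGNORECASE,
)

def mentions_research_staff(text):
    """Return True if the text contains any variation of the search terms."""
    return PATTERN.search(text) is not None
```

A match of this kind only flags candidate transcripts. As in the procedure described above, flagged excerpts would still need manual review for relevance.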

All 53 transcripts were coded in a systematic, 2-phase process (26). During the first phase, we read and reread each excerpt to identify emergent themes related to how students interact with postdocs and other full-time research staff. Next, we developed a codebook with descriptions and examples of the themes and used this codebook to analyze each transcript. Two members of the research team independently coded 28% of the transcripts (n = 15) to ensure reliability and trustworthiness. Additionally, we met throughout the analysis process to participate in peer debriefing (27).

Data and Code Availability.

The data generated and analyzed during the current study are available in Utah State University’s Open Access Institutional Repository at https://doi.org/10.26078/X535-HW49. The statistical code used to analyze the data during the current study is available from the corresponding author on reasonable request.

Acknowledgments

We gratefully acknowledge the support of the National Science Foundation. This material is based upon work supported under Awards 1431234, 1431290, and 1760894. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation. We also thank Alok Shenoy for his data management services.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1912488116/-/DCSupplemental.

References

1. Delamont S., Parry O., Atkinson P., Creating a delicate balance: The doctoral supervisor’s dilemmas. Teach. High. Educ. 3, 157–172 (1998).

2. Lee A., How are doctoral students supervised? Concepts of doctoral research supervision. Stud. High. Educ. 33, 267–281 (2008).

3. Stephens S., The supervised as the supervisor. Educ. Train. 56, 537–550 (2014).

4. Austin A. E., Cognitive apprenticeship theory and its implications for doctoral education: A case example from a doctoral program in higher and adult education. Int. J. Acad. Dev. 14, 173–183 (2009).

5. Golde C., Bueschel A. C., Jones L., Walker G., “Advocating apprenticeship and intellectual community: Lessons from the Carnegie Initiative on the Doctorate” in Doctoral Education and Faculty of the Future, Ehrenberg R. G., Kuh C. V., Eds. (Cornell University Press, 2009), pp. 53–64.

6. Feldon D. F., et al., Graduate students’ teaching experiences improve their methodological research skills. Science 333, 1037–1039 (2011).

7. Feldon D. F., Shukla K., Maher M. A., Faculty-student coauthorship as a means to enhance STEM graduate students’ research skills. Int. J. Res. Dev. 7, 178–191 (2016).

8. Feldon D. F., Maher M. A., Roksa J., Peugh J., Cumulative advantage in the skill development of STEM graduate students: A mixed-methods study. Am. Educ. Res. J. 53, 132–161 (2016).

9. Paglis L., Green S., Bauer T., Does adviser mentoring add value? A longitudinal study of mentoring and doctoral student outcomes. Res. High. Educ. 47, 451–476 (2006).

10. Timmerman B. E., Strickland D. C., Johnson R. L., Payne J., Development of a rubric for assessing undergraduates’ science inquiry and reasoning skills using scientific writing across multiple courses. Assess. Eval. High. Educ. 36, 509–547 (2011).

11. Simonton D. K., Scientific creativity as constrained stochastic behavior: The integration of product, person, and process perspectives. Psychol. Bull. 129, 475–494 (2003).

12. Feldon D. F., Maher M. A., Timmerman B. E., Graduate education. Performance-based data in the study of STEM Ph.D. education. Science 329, 282–283 (2010).

13. Collins L., Lanza S., Latent Class and Latent Transition Analysis: With Applications in the Social, Behavioral, and Health Sciences (John Wiley & Sons, Hoboken, NJ, 2010).

14. Nagin D. S., Analyzing developmental trajectories: A semi-parametric, group-based approach. Psychol. Methods 4, 139–157 (1999).

15. Maher M. A., Wofford A. M., Roksa J., Feldon D. F., Doctoral student experiences in biological sciences laboratory rotations. Stud. Grad. Postdoc. Educ. 10, 69–82 (2019).

16. Gappa J. M., Austin A. E., Trice A. G., Rethinking Faculty Work: Higher Education’s Strategic Imperative (Jossey-Bass, San Francisco, 2007).

17. Jones B. F., Wuchty S., Uzzi B., Multi-university research teams: Shifting impact, geography, and stratification in science. Science 322, 1259–1262 (2008).

18. Kahn S., Ginther D. K., The impact of postdoctoral training on early careers in biomedicine. Nat. Biotechnol. 35, 90–94 (2017).

19. Dolan E., Johnson D., Toward a holistic view of undergraduate research experiences: An exploratory study of impact on graduate/postdoctoral mentors. J. Sci. Educ. Technol. 18, 487–500 (2009).

20. Daniels R., Beninson L., The Next Generation of Biomedical and Behavioral Sciences Researchers: Breaking Through (The National Academies Press, Washington, DC, 2018).

21. Committee to Review the State of Postdoctoral Experience in Scientists and Engineers, The Postdoctoral Experience Revisited (The National Academies Press, Washington, DC, 2014).

22. Weidman J. C., Stein E. L., Socialization of doctoral students to academic norms. Res. High. Educ. 44, 641–656 (2003).

23. Little R. J. A., A test of missing completely at random for multivariate data with missing values. J. Am. Stat. Assoc. 83, 1198–1202 (1988).

24. Enders C. K., Applied Missing Data Analysis (Guilford Press, 2010).

25. Benjamini Y., Hochberg Y., Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. R. Stat. Soc. 57, 289–300 (1995).

26. Percy W. H., Kostere K., Kostere S., Generic qualitative research in psychology. Qual. Rep. 20, 76–85 (2015).

27. Creswell J., Miller D., Determining validity in qualitative inquiry. Theory Pract. 39, 124–130 (2000).
