Abstract

We estimate the extent of defensive medicine by physicians, embracing the no-liability counterfactual made possible by the structure of liability rules in the Military Health System. Active-duty patients seeking treatment from military facilities cannot sue for harms resulting from negligent care, while protections are provided to dependents treated at military facilities and to all patients, active-duty or not, who receive care from civilian facilities. Drawing on this variation and exploiting exogenous shocks to care location choices stemming from base-hospital closures, we find suggestive evidence that liability immunity reduces inpatient spending by 5% with no measurable negative effect on patient outcomes.

One of the most controversial aspects of the U.S. health care system is the impact of medical malpractice pressures on patient treatments and health care costs. The annual administrative expenses associated with the medical liability system ($4 billion per year) and the aggregate liability payments made by malpractice defendants ($5.7 billion) are small relative to the three-trillion-dollar health care economy in the U.S. (Mello et al. 2010). However, of much larger concern is the potential practice of “defensive medicine,” an unintended effect of the liability system in which physicians order extraneous tests, procedures and other services as a result of fears over medical liability. Such costs have played a central role in health care debates in recent years. A key question surrounding this debate, however, is just how large these costs really are.

Some have argued that defensive medicine is the major driver of excessive health care spending in the U.S. Former Congressman (and former Secretary of the Department of Health and Human Services) Tom Price suggested in 2010 that as much as 26 percent of all money spent on health care is attributable to this phenomenon.1 Others have argued that, while it may exist, it has at most a small impact on spending.2 The most important arbiter of this debate, the Congressional Budget Office, predicted in 2009 that a proposed aggressive package of liability reforms would lower medical costs by only 0.3% through reduced defensive medicine.

What we know thus far about the extent of defensive medicine has largely come from observing physician responses to various medical liability reforms that states have passed over the past four decades. The typical reform has operated largely to reduce the extent of damages (e.g., dollar caps on non-economic damages awards). While physicians face little direct financial exposure to lawsuits (Zeiler et al. 2009), a damages cap could theoretically alter a physician’s perceived liability threat to the extent caps discourage suits in the first place. The literature, however, has produced mixed results regarding the relationship between caps and the frequency of suits.3 Moreover, self-admissions by physicians that they practice defensively are not associated with the presence of a damages cap (Carrier et al. 2010). Ultimately, it is uncertain whether incremental reforms such as caps have represented a sizeable enough change in perceived or actual liability pressure from which to tease out the law’s influence on behavior. Furthermore, even if caps do indeed lead to reductions in perceived and actual liability risks, they are not likely to be informative on the full impacts of liability to the extent that caps only operate to shield physicians from a portion of damages.

Recent proposals to reform the malpractice system have reached beyond remedy-focused reforms such as caps and have considered broader, more structural changes. For instance, one prominent proposal has suggested imposing liability “safe harbors” that exempt providers from malpractice risk if the treatment adheres to certain clearly delineated guidelines.4 Predicting the impact of this new approach requires addressing a very different question: will doctors practice medicine differently if they know with greater certainty that they cannot be sued?

The structure of malpractice protections under the Military Health System (MHS) provides a novel opportunity to answer this policy-relevant question. The MHS is a $50 billion program that provides insurance for all active duty military and their dependents. For those who seek care at Military Treatment Facilities (MTFs), this care is provided by government employees or contractors; for those who use purchased care outside of MTFs, the MHS pays for their costs through a contract with a private sector managed care plan.

Most importantly for our purposes, the MHS provides what is missing in previous studies: a true “treatment group” of patients who cannot sue for malpractice. Pursuant to a long-standing and highly controversial federal law, active-duty patients seeking medical treatment from active duty physicians at military facilities have no recourse under the law (i.e., they can sue neither the physician nor the government) should they suffer harm as a result of negligent medical care. Malpractice protections are afforded, however, to dependents and retirees treated at military facilities and to all patients, active-duty or not, who receive care from civilian facilities. By comparing patients for whom physicians face no “defensive medicine” pressure with other patients for whom physicians do face such pressure, we can identify the impact of defensive medicine pressure on practice patterns, medical costs, and patient outcomes.

Doing so requires tapping into a unique set of data that have not previously been used for health economics research, the Military Health System Data Repository (MDR), which is the main database of health records maintained by the Military Health System. Broadly, it provides incident-level claims data for both inpatient and outpatient settings, the former of which we rely upon for this analysis. Using this information, we formulate a number of health care spending and utilization metrics, in addition to quality metrics, from which we are able to investigate key questions regarding the link between liability forces and medical practices.

We use these data to develop an empirical strategy that differentiates cases where physicians are and are not subject to malpractice pressure while controlling for other factors which might cause patients to be treated differently. We start with a differences-in-differences comparison of active duty versus non-active duty patients who are treated on the base versus off the base. We then address endogeneity of choice of treatment location using instruments based on location of residence, while drawing upon exogenous shifts in access to MHS bases during our sample period due to military hospital closings pursuant to recommendations by the Base Realignment and Closure Commission. We also consider comparisons within hospitals and within doctors, allowing us to compare treatment of immune versus non-immune patients by the same medical providers.

Despite the potential of the base-hospital closing strategy to address non-comparabilities between the treatment and control groups, one may be concerned that mechanisms other than immunity from medical liability may explain any observed differences in the care delivered to the treatment group—i.e., the active duty treated on the base. We underscore this caveat and emphasize that our results are perhaps only suggestive of a medical liability effect. Nonetheless, to shed further light on a liability basis for these findings, we do perform a couple of additional tests based on certain predictions as to when one would expect stronger defensive medicine. First, we predict stronger negative effects of liability immunity in the case of diagnostic testing relative to non-diagnostic procedures. With respect to diagnostics, the prevailing risks are often those concerning errors of omission. Capitulating to these concerns, physicians may provide more tests. In the case of non-diagnostic procedures, the prevailing risks may be a mix of errors of omission and errors of commission, forces that may offset each other and that may lead to more muted aggregate responses by physicians. Second, we also predict stronger effects of liability immunity in states that have not imposed caps on damages awards relative to states that have imposed caps and that have already effectively immunized providers from some portion of liability. This latter analysis, of course, assumes that caps reduce liability pressure to at least some extent.

Our findings are striking. We consistently estimate that the liability-immunity treatment group (active duty patients treated on the base) receives less intensive health care. Our central estimate is that the intensity of medical care delivered during inpatient episodes, measured in various ways, is roughly 4-5% lower for this treatment group. Moreover, further suggestive of a liability mechanism, we find that this effect is stronger in the case of diagnostic as opposed to non-diagnostic procedures and in states without caps on non-economic damages awards. Consistent with a defensive interpretation of these effects, we do not find any evidence that the differences in treatment intensity are associated with differential patient outcomes, although our estimates are imprecise in the case of some of the outcome metrics explored. Collectively, our results suggest that malpractice pressure is a key determinant of medical treatment patterns in the U.S. and that major reforms to the system (that is, reforms beyond the more incremental, remedy-focused reforms embraced to date) could have real effects on medical costs without large effects on quality. Even absent national reforms, our findings have important implications for the MHS, one of our nation’s largest (and almost certainly its most understudied) health care programs.

Our paper proceeds as follows. Part I reviews the malpractice environment and the existing literature on medical malpractice, and describes the MHS-specific structure that forms the basis of our identification strategy. Part II discusses our unique data set. Part III explains our empirical strategy. Part IV presents the results of our analysis, while Part V concludes.

I. Background

I.A. Background: Overview of Defensive Medicine

We acknowledge that physician behavior is likely shaped by a range of forces beyond medical liability fears—e.g., incentives arising from reimbursement systems, hospital disciplinary mechanisms, etc. Whether these other forces leave any work for the medical liability system to influence physicians on the margin is an empirical question, one that is at the heart of our analysis.

In particular, we test for the presence of “defensive medicine,” a practice by which a physician’s fear of liability causes her, on the margin, to shift her practices in a sub-optimal direction—e.g., to perform an unnecessary procedure that renders no additional clinical benefit. Analysts commonly attribute defensive medicine to uncertainties in the minds of physicians as to what liability standards are expected of them (Frakes 2015; Craswell and Calfee 1986). For instance, consider a physician deciding whether to perform a given treatment. Assume that the benefits of performing this treatment are truly less than the costs, in which case, optimally, the physician should elect not to treat. The physician, however, is unsure as to what care the courts will expect in this situation. Further, assume that she will proceed by taking the route that results in the lowest amount of potential liability. She may accordingly compare two scenarios under which courts might find her negligent: (1) one where she does not treat but where the court dictates she should have—i.e., an error of omission and (2) one where she performs the treatment but where the court dictates she should not have (or, relatedly, where the courts may, with some probability, find the treatment to have been executed negligently)—i.e., an error of commission. Should a physician perceive that the expected liability harm from the first scenario is greater than the second, she may be compelled to perform the unnecessary procedure on the margin.
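The comparison in this example can be written compactly. As an illustrative formalization only (the symbols below are introduced here and do not appear in the cited work), let $p_O$ and $p_C$ denote the physician's perceived probabilities of being found negligent for an error of omission and an error of commission, respectively, and let $L_O$ and $L_C$ denote the corresponding liability harms. A liability-minimizing physician then performs the treatment, even though its benefits fall short of its costs, whenever

$$
p_O L_O > p_C L_C .
$$

Under full immunity both sides of this inequality are zero, so liability considerations drop out and the treatment decision rests on clinical costs and benefits alone.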

Policymakers are often concerned with the bottom line—i.e., how much additional spending overall is resulting from the liability system—as opposed to simply focusing on a particular procedure like the one just imagined. In our primary empirical exercise in this paper, we attempt to ask the question of interest to policymakers and thus focus on understanding the relationship between medical liability pressure and overall treatment intensity. We of course acknowledge that this aggregate analysis may not paint a complete picture of the role of defensive medicine. After all, the direction—positive or negative—and the magnitude of defensive medicine may vary across different clinical contexts. While we do not look at all such contexts in this paper, our analysis below will return to this omission vs. commission framework in analyzing certain specific settings where we will predict that defensive medicine is likely to depart from this overall mean.

I.B. Background: Defensive Medicine Literature Review

A number of studies have endeavored to quantify the extent of defensive medicine, often by assessing how physician practices have changed in response to certain tort reforms. The seminal paper on this topic is Kessler and McClellan (1996), which found that tort reforms that directly reduce liability pressure—including damage caps—lead to reductions of 5 to 9 percent in medical expenditures associated with the care provided in the 1-year period following an acute myocardial infarction or new ischemic heart disease. The implication from such reductions is that higher malpractice pressure prior to the relevant reforms was inducing physicians to undergo added spending. In a follow-up study, Kessler and McClellan (2002) update this analysis to include information on managed-care penetration rates, modifying their findings to a 4-7 percent reduction in the relevant expenditures in connection with such reforms.

Sloan and Shadle (2009) and the Congressional Budget Office (2004) explored the relationship between caps and related tort reforms on the one hand and health care spending across a range of medical scenarios on the other hand but failed to replicate the cardiac-specific findings of Kessler and McClellan (1996, 2002). Similarly, a recent study by Paik et al. (2017) focusing on the most recent wave of damage-cap reforms finds no effect of caps on Medicare Part A spending (if anything, Paik et al. find an opposite-signed effect for Medicare Part B spending). A related study by Moghtaderi et al. (2017) finds little to no association between caps and Part A and B spending and between caps and a range of cardiac testing rates and interventions. A number of studies testing for defensive use of cesarean deliveries likewise find results inconsistent with the cardiac findings from Kessler and McClellan (1996, 2002). For instance, Frakes (2012) finds little to no relationship between damages caps and cesarean rates. Currie and MacLeod (2008) actually find evidence of an increase in cesareans following cap adoptions.

Nonetheless, several recent studies have employed similar methods and have found evidence consistent with Kessler and McClellan (1996, 2002). For instance, Avraham and Schanzenbach (2015) and Avraham et al. (2012) find a 3-5% reduction in intensive cardiac interventions following cap adoptions and a 2.1% reduction in employer-sponsored health insurance premiums following the adoption of any one of a set of traditional tort reforms (including caps), respectively.

Other methodological approaches exploring potentially more significant variation in the malpractice environment have likewise found results consistent with defensive medicine. Baicker et al. (2007) estimate that the 60 percent increase in malpractice premiums between 2000 and 2003 is associated with an increase in Medicare spending of more than $15 billion. Lakdawalla and Seabury (2011) draw on variations in the generosity of local juries and find that the growth in malpractice payments over the last decade and a half has contributed to a 5 percent increase in the growth of medical expenditures. And Frakes (2013) estimates a significant sensitivity of physician behavior to an alteration of the underlying negligence standard itself, finding an increase in treatment intensity of 5-16% when the negligence standard changes so as to expect that physicians follow more intensive practice styles (with a similarly sized reduction in intensity when the law changes so as to expect the delivery of less intensive styles).5

Since the concept of defensive medicine rests on the extraneous nature of liability-induced spending, important to such studies is an evaluation of whether greater liability forces improve health care quality in conjunction with any documented changes in treatment intensity. The literature on this quality link has thus far produced mixed results. Kessler and McClellan (1996) estimate a trivial effect of liability reforms on (1) survival rates during the one-year period following treatment for a serious cardiac event, and (2) hospital readmission rates for repeated serious cardiac events over that period. Sloan and Shadle (2009) undertake a similar analysis and reach similar conclusions. Lakdawalla and Seabury (2011) find that higher county-level malpractice pressure leads to a modest decline in county-level mortality rates, whereas other studies of the impact of malpractice reforms on infant mortality rates (or infant Apgar scores) generally find no relationship (Klick and Stratmann 2007; Frakes 2012; Dubay et al. 1999). Currie and MacLeod (2008) find that damage caps increase preventable complications of labor and delivery. Frakes and Jena (2016), on the other hand, find little relationship between damage caps and preventable delivery complications (along with various other quality metrics).

Collectively, the above findings paint a varied picture of both the size and existence of defensive medicine. These inconsistencies in the observational literature are perhaps surprising in light of another line of studies that has surveyed physicians on their behaviors and that has documented high rates of physicians admitting that they practice defensive medicine (Studdert et al. 2005).

I.C. Background: The Military Health System

The Military Health System (MHS) is the primary insurer for all active duty military, their dependents and retirees through the TRICARE program. TRICARE is not involved in health care delivery in combat zones and operates separately from the Department of Veterans Affairs’ Veterans Health Administration health service delivery system (Schoenfeld et al. 2016). For TRICARE enrollees, care can be delivered in one of two ways: either directly at Military Treatment Facilities (MTFs) on military bases (direct care), or purchased from private providers (purchased care). For those who receive treatment at MTFs, the care is delivered primarily by a mix of providers including active duty military providers, federal government civilian employee providers, and providers hired using contract mechanisms who work full time at the MTF. “Purchased care” is delivered by a network run by a contracting insurer, where patients go to private physicians who are within the insurer’s contracted network, as with most privately insured in the U.S. In principle, enrollees who live within the “catchment area” of an MTF are supposed to go to the MTF for care. This area was defined as 40 miles originally, though the military has shifted to time-based boundaries over time. Our data show clearly that a mileage boundary rule was not rigorously enforced during our sample period. Those who live closer to an MTF are much more likely to go there, but with a more gradual fall-off rather than a strong distance discontinuity.

I.D. Background: Liability Immunities in the Military

To explain the medical liability rights facing MHS beneficiaries and to demonstrate the source of liability immunities within the MHS, we separate the discussion into several scenarios depending on the type of patient receiving care and the type of physician providing care:

Active Duty Military Personnel Receiving Care from Military Providers.

Active duty members are barred from suing the government for injuries that arise out of or in the course of active military service under Feres v. United States, 340 U.S. 135 (1950). It is well established that receipt of medical care by service members in military facilities is “incident to service,” and thus the Feres doctrine bars lawsuits against the government that arise from negligent medical treatment of active duty service members. Pursuant to subsequent case law,6 active duty personnel are likewise barred from suing military physicians in their individual capacities.

Non-Active Duty Personnel Receiving Care from Military Providers.

The Feres doctrine does not bar medical liability claims brought by dependents or non-active-duty military who receive negligent care at military facilities.7

Patients Receiving Care from Civilian Providers at Civilian Facilities.

Military beneficiaries, whether active- or non-active-duty, who receive care from civilian physicians at civilian facilities maintain their ability to sue under general tort rules should they receive negligent care.

All Patients Receiving Care from Civilian Providers at Military Facilities.

The Feres doctrine does not prevent patients—even active-duty patients—harmed by physicians independently contracting with the Military from suing the contracting physician for malpractice. A non-trivial share of care that is delivered at Military facilities is delivered by civilian providers. Many such providers, however, are federal employees and thus fail to implicate this contractor exception. It is not necessarily the case that all other non-employee civilian providers working at Military facilities will be deemed independent contractors (ICs). The designation of an IC—as it applies to tort law—is one that focuses on substance rather than form. If the Military hospitals exercise the same degree of oversight and control over the active duty physicians working in their facilities as they do their civilian providers, the law may not treat them differently and may thus protect the civilian providers under Feres as well.8 Ultimately, our records demonstrate that the workload of the civilian physicians practicing at military inpatient facilities is almost identical to the workloads of active-duty physicians working at those facilities, suggesting a likely similar legal treatment between these groups. For these various reasons, we elect in our analysis below not to exploit variations within MTFs along this active-duty-physician / civilian-physician dimension.

Of course, the above differences in legal regimes are irrelevant unless understood by practicing physicians. In May 2011, we informally surveyed 13 MHS physicians regarding their knowledge of these rules. Eleven out of those 13 indicated that they were aware of the law bearing on whether active duty or non-active duty could sue for negligent care received on the base. Despite this less-than-unanimous confidence regarding knowledge of such rules, all 13 physicians actually identified the correct rule when prompted via a true or false question—i.e., they identified that active duty patients could not sue in these instances, while non-active duty could.

II. Data

II.A. The MDR Data

The data for this analysis come from the Military Health System Data Repository (MDR), which is the main database of health records maintained by the MHS. Broadly, it provides incident-level claims data across a range of clinical settings and contexts. For certain reasons set forth below, we focus in this paper on the inpatient records contained within the MDR. Importantly, the MDR database collects claims information from both the direct care (at the MTF) and purchased care (off the MTF) settings. Each of these files provides information on the identity of the physician and facility and the date of the encounter, along with demographic and other information on the patient. Further, each file provides substantial details regarding the encounter itself: primarily, the diagnosis and procedure codes associated with the event, length of the inpatient stay, and other treatment intensity metrics discussed below. Moreover, the MDR database contains separate files with coverage, demographic, geographic and other information on each MHS beneficiary, which we link to the various claims records. All told, we collect records for each of nearly 12 million distinct beneficiaries over an 11-year period between the beginning of 2003 and the end of 2013.

For our analysis, we focus on inpatient hospital admissions. While outpatient care has been the subject of little investigation by the medical liability literature to date, we follow the conventional approach of focusing on inpatient records for certain methodological reasons. Characterized by more severe circumstances with higher baseline probabilities of negative outcomes, inpatient encounters arguably implicate greater liability risk and provide a greater ability to link any observed changes in health status to the encounter itself, providing us with more power by which to estimate the relationship between the liability environment and observed treatments and outcomes. Moreover, we can cleanly identify the malpractice implications for the given encounter, considering that neither the active duty status of the patient nor the location of care (military facility versus civilian provider) is likely to change throughout the encounter. Finally, one of the key methodological tools we embrace below, namely decisions stemming from the Base Realignment and Closure process, is generally applicable only to hospital-based care.

While all military members, families and retirees are covered under the MHS, the system does offer alternative insurance plans with different cost-sharing and other terms. All active-duty personnel are required to enroll in TRICARE Prime plans. Over 90% of the non-active duty in our analytical sample likewise choose to enroll in TRICARE Prime. To ensure comparability in insurance terms throughout our sample, we limit the analysis to those beneficiaries with TRICARE Prime coverage. Active duty and their dependents covered under Prime face no cost-sharing obligations. We exclude retirees from our sample because Prime-covered retirees receiving care off the base do face some cost-sharing, compromising the difference-in-difference framework. We also focus only on the care provided at U.S. facilities and do not consider overseas encounters. Finally, we limit our specifications to adults between 18 and 60 years of age. Since children cannot be active-duty members, this restriction ensures common age support across the four key analytical groups in our motivating difference-in-difference design. For similar reasons we drop those above the age of 60 given the disproportionately few active duty above this age. Nonetheless, in the Online Appendix, we demonstrate the robustness of the results to no age restrictions at all.
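The sample restrictions described above amount to a simple record filter. The following is a minimal sketch and not the authors' code: the `Encounter` fields and the plan labels `"PRIME"` and `"STANDARD"` are hypothetical names chosen for illustration, since the MDR's actual variable names are not given in the text, and the age window is assumed to be inclusive at both ends.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    # All field names are hypothetical; the MDR's actual schema is not described here.
    plan: str          # insurance plan label, e.g. "PRIME"
    is_retiree: bool   # True for retirees (excluded from the sample)
    in_us: bool        # True if the facility is located in the U.S.
    age: int           # patient age in years at the encounter


def in_analytic_sample(enc: Encounter) -> bool:
    """Apply the four restrictions: Prime coverage, no retirees, U.S. facilities, ages 18-60."""
    return (
        enc.plan == "PRIME"
        and not enc.is_retiree
        and enc.in_us
        and 18 <= enc.age <= 60  # assumed inclusive at both ends
    )


encounters = [
    Encounter("PRIME", False, True, 34),     # kept
    Encounter("STANDARD", False, True, 34),  # dropped: not enrolled in Prime
    Encounter("PRIME", True, True, 58),      # dropped: retiree
    Encounter("PRIME", False, False, 29),    # dropped: overseas facility
    Encounter("PRIME", False, True, 17),     # dropped: under 18
]
sample = [e for e in encounters if in_analytic_sample(e)]
```

Writing the restrictions as one predicate keeps each exclusion visible and makes it easy to relax a single condition, for example the age window in a robustness check.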

With these restrictions, our analytical sample focuses on 2,452,882 inpatient encounters, 1,130,116 (46%) of which are at MTFs and 1,322,766 (54%) of which are at civilian hospitals. Out of the MTF encounters, 553,121 (49%) are connected with active-duty patients, while 576,995 (51%) are associated with dependents of active duty. Out of the civilian encounters, 395,924 (30%) are for active-duty patients, while 926,842 (70%) are for dependents.
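The shares quoted in this paragraph follow directly from the four cell counts. A short sketch that recomputes them from the counts reported above (the dictionary keys and helper names are illustrative only):

```python
# Inpatient encounter counts by site of care and patient type, as reported in the text.
counts = {
    ("MTF", "active_duty"): 553_121,
    ("MTF", "dependent"): 576_995,
    ("civilian", "active_duty"): 395_924,
    ("civilian", "dependent"): 926_842,
}


def site_total(site: str) -> int:
    """Total encounters at one site of care."""
    return sum(n for (s, _), n in counts.items() if s == site)


def pct(part: int, whole: int) -> int:
    """Share of `whole` accounted for by `part`, rounded to a whole percent."""
    return round(100 * part / whole)


total = sum(counts.values())
mtf_share = pct(site_total("MTF"), total)
active_share_mtf = pct(counts[("MTF", "active_duty")], site_total("MTF"))
active_share_civilian = pct(counts[("civilian", "active_duty")], site_total("civilian"))
print(mtf_share, active_share_mtf, active_share_civilian)
```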

II.B. Outcome Variables

Treatment Intensity Metrics.

The first half of our defensive medicine analysis entails investigating the link between immunities from liability and the intensity with which physicians treat patients. Our primary measure of interest is called the Relative Weighted Product or RWP, a Department of Defense-derived measure assigned to each inpatient encounter.9 The RWP is a treatment intensity metric designed to be comparable across the direct and purchased care settings. Using information from the indicated procedure and diagnosis codes, the Department of Defense assigns a Diagnosis Related Group (DRG) weight to the encounter and thereafter adjusts that weight based on the length of stay of the encounter. Even though this measure may fall short of the precise costs associated with the encounter, it is meant to provide a reliable sense of the relative costliness and utilization intensity of the actual encounters represented in the MDR database. Given that 55% of the variation across DRGs is actually explained by treatment intensity (McClellan, 1997), this measure reflects both treatment intensity and length of stay. As robustness checks, we also consider certain alternative treatment metrics: (1) number of inpatient bed days, (2) number of procedures performed and (3) the incidence of any procedure performed.

Moreover, a critical component to our empirical analysis focuses on exploring the impacts of liability immunity on the utilization of diagnostic procedures. For these purposes, we surveyed the medical literature to assess the most common diagnostic tests utilized across a number of Major Diagnostic Categories (e.g., respiratory care, cardiac and circulatory care, gastrointestinal care, etc.). Focusing on each such category separately, we then create indicator variables for whether the patient received any of the relevant diagnostic procedures. For those diagnoses not specifically investigated, we create a residual diagnostic category, with respect to which we create an indicator variable for whether any of the diagnostic tools used in the other categories collectively are employed (which largely captures the usage of radiological imaging). We also create a pooled any-diagnostic indicator variable across the full sample.10 Finally, to serve as a comparison point for our diagnostic-testing analysis, we also explore the effects of liability immunity on the incidence of any-non-diagnostic procedure.
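The construction of these indicators can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the category names and procedure codes are placeholders invented for the example (the actual code lists come from the survey of the medical literature and are not reproduced in the text), and treating every procedure outside the diagnostic lists as non-diagnostic is likewise an assumption.

```python
# Placeholder code lists, invented for illustration only.
DIAGNOSTIC_CODES = {
    "respiratory": {"R-TEST-1", "R-TEST-2"},
    "cardiac_circulatory": {"C-TEST-1"},
    "gastrointestinal": {"G-TEST-1"},
}

# Union of all category lists, used for the residual diagnostic category.
ALL_DIAGNOSTIC = set().union(*DIAGNOSTIC_CODES.values())


def diagnostic_indicator(category: str, procedure_codes: list) -> int:
    """1 if the encounter includes any diagnostic test relevant to its category.

    Encounters in categories without their own list fall into the residual
    category and are checked against the union of all lists.
    """
    relevant = DIAGNOSTIC_CODES.get(category, ALL_DIAGNOSTIC)
    return int(any(code in relevant for code in procedure_codes))


def any_non_diagnostic(procedure_codes: list) -> int:
    """1 if the encounter includes any procedure outside the diagnostic lists (assumed definition)."""
    return int(any(code not in ALL_DIAGNOSTIC for code in procedure_codes))
```

For example, `diagnostic_indicator("respiratory", ["R-TEST-1", "OTHER-PROC"])` flags the encounter as having received a relevant diagnostic test, while the same encounter also counts as having a non-diagnostic procedure.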

Health Care Quality Metrics.

Also critical to our defensive medicine analysis is an assessment of the link between liability pressures and health care quality. For these purposes, we construct a number of quality indicators commonly employed in the health economics literature: (1) the incidence of mortality within 90 days following discharge (mean of 0.5%), (2) the incidence of an unplanned hospital readmission within 30 days (mean of 4.0%),11 and (3) the incidence of an adverse event, as captured by the presence of Patient Safety Indicators (PSIs) promulgated by the Agency for Healthcare Research and Quality or AHRQ (mean of 1.5%).12
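The first two indicators are window-based flags computed from dates. A minimal sketch, assuming that the windows are measured from the discharge date and are inclusive of the final day, and that whether a readmission was unplanned is already known for each encounter (the function and argument names are hypothetical):

```python
from datetime import date
from typing import Optional


def died_within(discharge: date, death: Optional[date], days: int = 90) -> int:
    """1 if a death is recorded within `days` days after discharge (inclusive window assumed)."""
    return int(death is not None and 0 <= (death - discharge).days <= days)


def readmitted_within(
    discharge: date,
    next_admission: Optional[date],
    unplanned: bool,
    days: int = 30,
) -> int:
    """1 if an unplanned readmission begins within `days` days after discharge."""
    if next_admission is None or not unplanned:
        return 0
    return int(0 <= (next_admission - discharge).days <= days)
```

The PSI-based adverse-event indicator depends on AHRQ's published specifications and is not sketched here.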

III. Empirical Strategy

Our core empirical strategy exploits the fact that there is differential malpractice pressure across types of patients and site of care. In particular, active duty patients treated on the base may not sue for negligent care and thus pose no liability pressure, while other patients treated at the same site may sue. At the same time, both groups may sue and thus pose liability threats when treated off the base. This set of facts naturally motivates a difference-in-difference specification:

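$$
Y_{ijek} = \alpha + \beta_1\,\text{ACTIVE\_DUTY}_{ie} + \beta_2\,\text{ON-BASE}_{ije} + \beta_3\,\left(\text{ACTIVE\_DUTY}_{ie} \times \text{ON-BASE}_{ije}\right) + X_{ie}\,\delta + \varepsilon_{ijek} \tag{1}
$$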

Where i denotes individual patients, j denotes providers,13 e denotes episodes, and k denotes zip-codes (based on patient residence). Y is some measure of patient treatment.14 ACTIVE_DUTY is a dummy variable for whether the individual is active duty at the time of the treatment; ON-BASE is a dummy variable for whether the individual is treated on the base; X is a set of individual-episode specific controls (age dummies, patient sex, year dummies, pay-grade-level dummies, Charlson comorbidity scores for the patient at the time of the encounter and primary diagnosis-code dummies associated with the encounter, based on the first 2 digits of the primary ICD-9-CM diagnosis code). We cluster our standard errors at the MTF catchment-area level to capture any correlations between patient characteristics or treatment decisions within base catchment areas.15
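Absent the controls X, the coefficient on the ACTIVE_DUTY × ON-BASE interaction in a saturated two-by-two specification equals a difference of four cell means. The sketch below illustrates that logic on made-up numbers; it omits the controls and the clustered standard errors and is not the estimation code used for the results.

```python
from statistics import mean


def did_estimate(records):
    """Difference-in-difference from (active_duty, on_base, y) tuples.

    Returns (active on-base minus non-active on-base)
          - (active off-base minus non-active off-base).
    """
    cells = {}
    for active, on_base, y in records:
        cells.setdefault((active, on_base), []).append(y)
    m = {cell: mean(values) for cell, values in cells.items()}
    return (m[(1, 1)] - m[(0, 1)]) - (m[(1, 0)] - m[(0, 0)])


# Toy outcome values, invented for illustration only.
toy = [
    (1, 1, 0.95), (1, 1, 1.05),  # active duty, on base
    (0, 1, 1.10), (0, 1, 1.30),  # non-active duty, on base
    (1, 0, 1.00), (1, 0, 1.20),  # active duty, off base
    (0, 0, 1.15), (0, 0, 1.25),  # non-active duty, off base
]
estimate = did_estimate(toy)
```

With these toy values the on-base gap between active and non-active duty patients is larger in magnitude than the off-base gap, so the estimate is negative, which is the sign a liability-immunity effect on treatment intensity would produce.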

While a straightforward representation of the variation in malpractice environment facing patients, this approach raises four potential empirical concerns.

III.A. Addressing Patient Case Mix Concerns

First, there may be differential selection into MTFs by active versus non-active duty patients. For example, it may be that the active duty patients treated on the base are particularly sick relative to active duty patients treated off base, while there is no such differential for dependents.

To explore this further, Table 1 shows the covariate balance in our difference-in-difference approach. There is clearly a lack of balance along most covariates individually, most notably in gender. In the last row of the table, we attempt to explore covariate balance collectively, an exercise that demonstrates only a modest degree of overall imbalance, if any. For these purposes, we draw on our main treatment intensity metric—the Relative Weighted Product (RWP)—and explore balance in a log RWP measure that is predicted on all of the available covariates. We find that our treatment group is predicted to have RWP levels that are 2% higher in this DD framework, which both biases against our ultimate conclusion and is nonetheless statistically indistinguishable from zero (while also being less than half the size of our ultimate treatment effects). Nonetheless, we will control for all of these covariates in the regression framework.

Table 1.

Covariate Balance Analysis in Full Inpatient Sample: Summary Statistics for Covariates by Patient Category

| | (1) On-Base, Active-Duty | (2) On-Base, Non-Active-Duty | (3) Off-Base, Active-Duty | (4) Off-Base, Non-Active-Duty | (5) Difference-in-Difference for Indicated Variable |
|---|---|---|---|---|---|
| | Means (Standard Deviations) of Indicated Variables | | | | |
| Panel A: Evaluating Covariate Balance by Individual Covariates | | | | | |
| Male | 0.61 (0.49) | 0.02 (0.14) | 0.68 (0.47) | 0.04 (0.10) | −0.06 (0.02) |
| Charlson Comorbidity Score | 0.13 (0.60) | 0.17 (0.60) | 0.22 (0.79) | 0.22 (0.73) | −0.03 (0.01) |
| (Omitted: Junior Enlisted) | | | | | |
| Senior Enlisted | 0.27 (0.44) | 0.40 (0.49) | 0.36 (0.48) | 0.46 (0.50) | −0.03 (0.01) |
| Warrant Officer | 0.01 (0.09) | 0.01 (0.11) | 0.01 (0.11) | 0.02 (0.14) | 0.00 (0.00) |
| Junior Officer | 0.04 (0.19) | 0.08 (0.27) | 0.03 (0.17) | 0.05 (0.22) | −0.02 (0.00) |
| Senior Officer | 0.04 (0.20) | 0.06 (0.22) | 0.06 (0.23) | 0.09 (0.28) | 0.01 (0.00) |
| Pay-Grade Missing | 0.27 (0.44) | 0.18 (0.38) | 0.40 (0.49) | 0.27 (0.44) | −0.05 (0.01) |
| (Omitted: Age < 30 Years Old) | | | | | |
| Age 30-40 Years Old | 0.22 (0.42) | 0.29 (0.45) | 0.25 (0.44) | 0.29 (0.45) | −0.03 (0.01) |
| Age 40-50 Years Old | 0.10 (0.31) | 0.07 (0.26) | 0.15 (0.35) | 0.09 (0.28) | −0.03 (0.01) |
| Age 50-60 Years Old | 0.02 (0.15) | 0.01 (0.09) | 0.04 (0.19) | 0.01 (0.11) | −0.01 (0.00) |
| Panel B: Evaluating Covariate Balance Collectively | | | | | |
| Predicted Log Relative Weighted Product (based on regression of log of relative weighted product on covariates) | −0.29 (0.47) | −0.53 (0.35) | −0.26 (0.49) | −0.49 (0.38) | 0.02 (0.02) |

Notes: Standard errors in Column 5 are corrected for within-catchment area correlation in the error term (based on catchment areas as they are defined at the beginning of the sample, prior to any base closings) and are reported in parentheses. Pay-grade status is based on the officer/enlisted status of the household sponsor.

We can further address this point by using an important quasi-experiment that took place during our sample period: the closing of a number of MTFs. In 1995 and 2005, the Base Realignment and Closure (BRAC) Commission, following recommendations from the Pentagon, proposed a set of bases to be either closed or restructured, and these recommendations were thereafter approved by the President in office at the time. The closings and restructurings themselves took place over the ensuing years. Pursuant to the decisions by the 1995 and 2005 BRAC Commissions (mostly the latter), 11 MTF hospitals were closed over the course of our sample period, causing nearly 20% of the sample to experience a change in the distance to the closest MTF.16

While the hospitals themselves closed pursuant to this process, each of the bases affected by these BRAC-induced MTF closings continued to operate to some degree following the BRAC event; that is, the bases themselves were realigned rather than closed. As such, these events did not cause MHS beneficiaries to move away en masse. We demonstrate this in Column 1 of Table 2. The coefficient of the post-closing indicator suggests little change in the number of non-active duty beneficiaries in a catchment area following the closing of a base hospital in that area, though the coefficient on the interaction term shows that the closings led to a 12% decline in the number of active duty, relative to non-active duty, in the catchment area. But there appears to be no relative change in the underlying health mix of those who stay near the base: we find in Columns 2 and 3 of Table 2 that MTF closings have no effect on the age of active duty relative to non-active duty or on the log of predicted RWP (based on the available covariates) for active duty relative to non-active duty.17

Table 2.

Effects of Base Closures on Resident Population Counts, Resident Case Mix and Rates of Hospitalization

| | (1) Log Number of People in Original Catchment Area (Aggregated Sample of MHS Beneficiaries) | (2) Log Mean Age of MHS Beneficiary (Aggregated Sample of MHS Beneficiaries) | (3) Log Predicted Relative Weighted Product (Individual Sample of Inpatient Admissions) | (4) Likelihood of Hospitalization Within a Year (Individual Sample of MHS Beneficiaries), Coefficient Multiplied |
|---|---|---|---|---|
| Active | 0.69 (0.15) | 0.51 (0.01) | 0.21 (0.01) | −0.71 (0.13) |
| Post-Closing | 0.01 (0.03) | −0.01 (0.01) | −0.00 (0.01) | −0.31 (0.23) |
| Active * Post-Closing | −0.12 (0.04) | 0.01 (0.01) | −0.00 (0.01) | 0.00 (0.29) |

Notes: robust standard errors corrected for within-original-catchment area correlation in the error term are reported in parentheses. Post-Closing equals 0 for all zip codes unaffected by a base closure (that is, all zip codes whose closest-MTF measure was not altered by a base closure) and 0 for years prior to the closure for those zip codes affected by a base closure. Post-Closing equals 1 for years after closure for those zip codes affected by a base closure. Original catchment areas signify geographic areas of beneficiary residence, where regions are grouped according to the MHS Catchment Area Number assigned to that region at the beginning of the sample. The regressions in Columns 3 and 4 include zip code and year fixed effects. The regressions in Columns 1 and 2 are aggregated to the catchment-year level and include original catchment area and year fixed effects, while drawing on information from the full MHS beneficiary file regardless of receipt of inpatient care. The regression in Column 4 is organized at the individual-beneficiary-by-year level. Regressions include regions unaffected by a base closure as a control. Regressions are also limited to regions that were within 40 miles of an MTF hospital as of the beginning of the sample period.

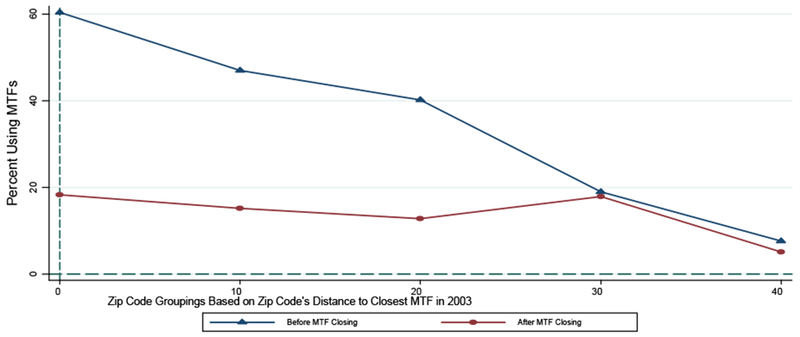

Rather than affecting the composition of MHS beneficiaries living in the affected regions, the key effect of these MTF closings is to provide an exogenous shift in the access of patients to inpatient direct care on the base. This is illustrated clearly in Figure 1. In this figure, we focus on those zip codes that experienced a base-closure-induced change in the distance to the closest MTF during the sample. The top line of this figure is for the period of time prior to the base closing. As we see with this line, the distance to the closest MTF (the X-axis) has a strong relationship with the likelihood of using an MTF for inpatient care versus going to a civilian hospital (the Y-axis). For those zip codes that are between 0 and 10 miles from the closest MTF, nearly 60 percent of admissions take place at an MTF; for those zip codes that are more than 40 miles away from the closest MTF, less than 10 percent of admissions take place at an MTF.

Figure 1.

Effect of MTF Closings on Likelihoods of Using Any MTF for Inpatient Care in Regions Affected by Base Closings

Notes: the top line focuses on those encounters in zip codes affected by a base-hospital closing in the period of time prior to the closing. The bottom line focuses on those encounters in zip codes affected by a base-hospital closure in the period of time following the closing. In each case, the indicated point represents the percent of encounters that occur at an MTF as opposed to a civilian hospital, where this percentage is calculated for each of the following groups of zip codes based on their distance to the closest MTF at the beginning of the sample (i.e., in 2003): (1) 0-10 Miles (represented by 0 in the graph), (2) 10-20 Miles (represented by 10 in the graph), (3) 20-30 Miles (represented by 20 in the graph), (4) 30-40 Miles (represented by 30 in the graph), and (5) 40+ Miles (represented by 40 in the graph). Source: 2003-2013 Military Health System Data Repository.

In the period of time following the base closing, as shown by the lower line, all of these zip codes effectively become more alike—that is, they all become distant from the closest MTF. Even for those zip codes that were initially within 10 miles of the closest MTF, the average distance to the closest MTF among post-closing encounters is over 50 miles. As such, following the closings, we would expect that the MTF-usage gradient across these groups of zip codes will flatten and will do so at a relatively low rate of MTF usage. Figure 1 is consistent with these expectations. While encounters in those zip codes initially close to a base took place at base hospitals nearly 60 percent of the time prior to the closing, encounters in those same zip codes took place at a base hospital (generally at the next closest MTF) less than 20 percent of the time following the closing.

This exogenous shift from MTF inpatient care to civilian inpatient care allows us to measure the effect of MTF care free of the endogeneity of MTF choice. We do so in two ways. First, we use a straightforward differences-in-differences strategy that compares the treatment of active duty and non-active duty patients who initially live near a base, interacted with a variable indicating the closing of that base’s MTF. That is, we examine how care differs between active duty and non-active duty patients when they are denied access to hospital care on a nearby base. This implies an exogenous removal of malpractice protection for one group of patients relative to another, allowing us to model the effect on relative spending and outcomes.

Second, we incorporate the variation afforded by base-hospital closings more directly into equation (1). In essence, equation (1) captures the differential effect of going to an MTF for active duty relative to non-active duty patients. As above, one may be concerned over endogeneity in the MTF choice. To address this concern, we form a series of binary instruments representing distance of residence from the MTF (based on zip-code centroids): less than 10 miles from a military hospital, 10-20 miles away, 20-30 miles away, 30-40 miles away and 40+ miles away. We then instrument for ON-BASE and ACTIVE_DUTY X ON-BASE using these various distance bins and the interactions of each distance bin with the ACTIVE_DUTY indicator. We also include a set of zip code fixed effects, λk, so that any fixed locational effects are controlled for. In this way, we achieve identification of the ON-BASE and ACTIVE_DUTY X ON-BASE coefficients only from those enrollees whose distance to the closest MTF exogenously changed due to BRAC-induced hospital closures. Ultimately, this approach allows us to estimate the differential effect of being hospitalized on-base (versus off) for active duty relative to non-active duty while drawing upon exogenous variation in the on-base choice. Specifically, we implement this IV approach via two-stage least squares, where the first stages are represented by:

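$$
\text{ON-BASE}_{ie} = \pi_0 + \pi_1\,\text{ACTIVE\_DUTY}_{ie} + \pi_2\,\text{DIST}_{ie} + \pi_3\,\left(\text{ACTIVE\_DUTY}_{ie} \times \text{DIST}_{ie}\right) + \pi_4 X_{ie} + \mu_j + \lambda_k + \nu_{ijke} \tag{2}
$$

$$
\text{ACTIVE\_DUTY}_{ie} \times \text{ON-BASE}_{ie} = \theta_0 + \theta_1\,\text{ACTIVE\_DUTY}_{ie} + \theta_2\,\text{DIST}_{ie} + \theta_3\,\left(\text{ACTIVE\_DUTY}_{ie} \times \text{DIST}_{ie}\right) + \theta_4 X_{ie} + \mu_j + \lambda_k + \eta_{ijke} \tag{3}
$$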

where DISTie represents a series of dummy variables capturing the distance bins identified above (e.g., residence within 10 miles of an MTF). Equation (1) above then captures the second stage of this 2SLS analysis. While Figure 1 graphically depicts the essence behind these first stage regressions, equations (2) and (3) formalize this analysis and capture the extent to which MTF utilization is associated (non-parametrically) with how far patients live from the closest MTF, again focusing on variation in these distances induced by base-hospital closings. We present the full first stage results in the Online Appendix. We note here, however, that MTF utilization and its interaction with active-duty status are strongly determined by these distance-based instruments, with a Cragg-Donald F-statistic on the instruments of 1960, well beyond conventional critical thresholds (Stock and Yogo, 2005).
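To make the construction of the distance-based instruments concrete, the following Python sketch builds one indicator per distance bin and its interaction with the active-duty flag. The function names, the bin labels, and the half-open treatment of bin boundaries (exactly 10 miles falls in the 10-20 bin) are assumptions made for illustration; the text does not specify how boundary cases are coded.

```python
BIN_LABELS = ["lt10", "10_20", "20_30", "30_40", "40plus"]

def distance_bin(miles):
    """Return the label of the distance bin for a zip-code centroid.

    Assumption: bins are half-open, so exactly 10 miles falls in '10_20'.
    """
    if miles < 0:
        raise ValueError("distance must be non-negative")
    index = min(int(miles // 10), len(BIN_LABELS) - 1)
    return BIN_LABELS[index]

def instrument_row(miles, active_duty):
    """Build the dummy instruments: one indicator per bin plus its
    interaction with the active-duty flag (0 or 1)."""
    row = {}
    current = distance_bin(miles)
    for label in BIN_LABELS:
        in_bin = 1 if label == current else 0
        row[f"dist_{label}"] = in_bin
        row[f"active_x_dist_{label}"] = in_bin * active_duty
    return row

# Hypothetical active-duty patient living 12.5 miles from the closest MTF.
example = instrument_row(12.5, active_duty=1)
```

In an actual regression, one bin would typically be omitted as the reference category, since the full set of indicators sums to one.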

Importantly, the resulting coefficients from this IV specification can be compared directly to our estimates of the difference-in-difference specification indicated in equation (1). As such, this IV estimation of the differential effect of MTF usage by active relative to non-active duty can provide suggestive evidence of the effect of malpractice immunity on treatment intensity and outcomes.18
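The logic of instrumenting can be illustrated with a deliberately simplified sketch. The snippet below is not the 2SLS system described above, with its multiple distance bins, interactions, and fixed effects; it uses a single instrument and a single endogenous regressor, in which case the IV estimate reduces to the ratio cov(z, y) / cov(z, x). All data are synthetic and every name is hypothetical.

```python
def cov(a, b):
    """Population covariance of two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    return sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b)) / n

def ols_slope(x, y):
    """Slope from a simple regression of y on x."""
    return cov(x, y) / cov(x, x)

def iv_slope(z, x, y):
    """Just-identified IV estimate: reduced form divided by first stage."""
    return cov(z, y) / cov(z, x)

TRUE_EFFECT = -0.05
z = [0, 0, 1, 1]      # instrument, constructed to be unrelated to u
u = [-1, 1, -1, 1]    # unobserved confounder, balanced across z
x = [zi + ui for zi, ui in zip(z, u)]                    # endogenous regressor
y = [TRUE_EFFECT * xi + 2 * ui for xi, ui in zip(x, u)]  # outcome

naive = ols_slope(x, y)   # contaminated by the confounder
iv = iv_slope(z, x, y)    # recovers TRUE_EFFECT in this toy design
```

Because the confounder moves both x and y, the simple regression slope is far from the true effect, while the instrument-based ratio isolates only the variation in x that comes from z.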

III.B. Addressing Provider Selection Concerns

The second concern with our basic framework is that medical providers, be they physicians or hospitals, who serve the treatment group may be different in some systematic way from providers that serve other groups. We can address this concern by introducing provider-specific fixed effects (μj) in some models, doing so separately for physicians and hospitals across alternative specifications.19 This controls for general provider-specific treatment styles by comparing the outcomes of active duty relative to non-active duty patients seen by the same provider. We show results for both hospital effects and physician effects below; where space constraints in certain tables require us to adopt a single primary approach, we elect to focus on hospital effects, given certain limitations with the physician data available to us.20

This provider fixed-effects approach does, however, raise some interpretation difficulties if there are patient treatment “spillovers” between immune and non-immune patients. For example, the threat of liability from some patients may contribute to a physician’s overall practice style that they exhibit to both active and non-active-duty patients. In this event, if physicians do practice defensively, then some amount of the estimated treatment effect picked up in the active-duty/non-active-duty differential inherent in the above design may derive from across provider variation in the share of active-duty patients. In this event, the inclusion of provider fixed effects could attenuate true treatment effects. As such, changes in the estimates with the inclusion of provider effects may be attributed to the presence of spillovers and/or to provider selection.

By way of preview, our results below demonstrate only a minor impact if any from including provider effects, which either suggests that both provider selection and spillovers are moderately inconsequential phenomena or that they operate in different directions and thus roughly offset each other. In Part IV.F below, we attempt to resolve this uncertainty by testing for markers suggestive of spillovers. As we will discuss, this analysis does suggest some degree of within-provider spillovers, though these tests are subject to various methodological challenges. Ultimately, while the provider effects specifications may allow us to account for provider selection concerns, the results may only constitute a lower bound of the full impacts of liability immunity depending on the extent of spillovers that actually exist.

III.C. Addressing Sample Selection Concerns

Our third concern with this empirical strategy is that we are conditioning on hospital utilization. If the differential malpractice pressure impacts the odds of entry into the hospital, this could change the mix of patients that we see in a way that potentially biases the results. To explore this concern, we take advantage of the fact that we have data on the full beneficiary population of the MHS and thus possess knowledge on who does and does not receive inpatient care in the first place. To shed light on the relationship between liability pressure and the odds of hospitalization, we then estimate a counterpart to our base-hospital-closing specification but with the unit of observation at the beneficiary-year level and with the incidence of a hospitalization over the relevant year as the outcome variable. We show in Column 4 of Table 2 that there is no differential selection into or out of the hospital by the active duty (relative to non-active duty) in the face of a base-hospital closing that drives patients to use civilian hospitals for hospitalizations.

Alternatively, we address this concern by excluding deferrable medical conditions. That is, following Card, Dobkin, and Maestas (2009) and Doyle et al. (2015), we focus on admissions associated with diagnosis fields whose weekend admission rates are close to 2/7,21 reflecting admission types in which physicians have less discretion over the admission decision itself, thereby further allaying these particular sample selection concerns.
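The weekend-share screen can be sketched as follows. If admissions for a diagnosis are unaffected by scheduling discretion, roughly 2 of every 7 should fall on a weekend. The tolerance used below, the function name, and the diagnosis labels are all hypothetical; the text does not state the cutoff used to define "close to 2/7".

```python
WEEKEND_SHARE = 2 / 7  # share expected if admissions ignore day of week

def nondeferrable_diagnoses(counts, tolerance=0.02):
    """Select diagnoses whose weekend admission share is near 2/7.

    counts: dict mapping diagnosis code -> (weekend_admissions, total_admissions).
    tolerance: hypothetical cutoff; the text does not state one.
    """
    keep = []
    for code, (weekend, total) in counts.items():
        if total == 0:
            continue  # no admissions, so no share can be computed
        if abs(weekend / total - WEEKEND_SHARE) <= tolerance:
            keep.append(code)
    return sorted(keep)

# Hypothetical diagnosis groups and admission counts.
toy_counts = {
    "A": (285, 1000),   # share 0.285, close to 2/7 (about 0.286)
    "B": (150, 1000),   # share 0.150, mostly weekday admissions
    "C": (0, 0),        # no admissions, skipped
}
selected = nondeferrable_diagnoses(toy_counts)
```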

III.D. Addressing Mechanism Concerns

The final concern we face is that the core effect that we identify (the differential treatment of active duty patients versus non-active duty patients on base, compared to that same differential off base) captures more than just differences in malpractice pressure. To be clear, this would require mechanisms (other than differential malpractice pressure) for less intensive treatment of active duty patients only when at the base that do not apply to non-active duty patients. This concern is important and is not fully ameliorated by the base-closure approach.

To demonstrate, we identify a few hypothetical scenarios. First, perhaps the Department of Defense (DOD) places pressure on MTFs to treat active duty patients in a non-invasive manner in order to facilitate their early return to work. Of course, even if the MHS would succumb to such return-to-work directives from the operational arms of the DOD (which is unclear), it is not immediately clear that this would lead to directives to practice non-intensively—after all, in numerous clinical contexts, immediate intensive approaches may allow for a quicker return to productivity. Second, one may be concerned about patient-driven mechanisms. For instance, perhaps active duty patients are reluctant to appear weak in front of their own—i.e., military physicians—and may thus refuse recommended care. A patient-driven bias, however, may work in the other direction as well—e.g., active-duty patients may be more inclined to show perceived strength in the face of civilian providers and differentially refuse care off the base.

Further, even if the closings approach can account for the concern that the negative treatment effect we estimate can be explained by an especially healthy treatment group, it cannot account for the possibility that MTFs care for healthy patients differently than civilian hospitals do (in each case relative to how they treat less healthy patients). This possibility is concerning for our difference-in-difference framework to the extent that active-duty patients are healthier than non-active-duty patients; however, it is not clear in which direction any bias resulting from differential treatment of healthy patients would go.

Moreover, beyond any consideration of how providers treat healthy patients relative to unhealthy patients, it is important to consider that the mix of healthy and unhealthy patients a provider faces may shape her more general practice style. For instance, if a provider treats a lot of healthy patients, she may develop a treatment style reflective of that healthy patient mix—in other words, the way in which a provider treats healthy patients may spill over into how she treats unhealthy patients. Importantly, if the nature of any such spillover mechanism differs between on-base providers and off-base providers, it may further confound our difference-in-difference exercise (again considering that active duty patients are healthier). However, as above, it is not clear in which direction any bias resulting from differential spillover mechanisms would go.22

Ultimately, these considerations reflect concerns that we cannot fully resolve and we emphasize that the treatment effects we observe may be attributable to the liability immunity status of the treatment group or to one of these alternative mechanisms. Nonetheless, to illuminate matters further, we proceed by identifying scenarios under which we would expect to find stronger defensive medicine and thereafter testing for evidence of such heterogeneous effects. To the extent any observed heterogeneity is consistent with a liability mechanism and not with alternative mechanisms, these additional findings may lend further support, even if still only suggestively, to a liability story.

First, we note that the prior literature provides little guidance by which to form predictions regarding the degree of defensive medicine across types of admissions. However, within any given category of admission, one can more readily predict that physicians will tend to exhibit positive defensive medicine (increasing procedure utilization in response to liability fears) to a stronger degree in the case of diagnostic relative to non-diagnostic procedures (Frakes 2015). To see this, return to the simple analytical framework set forth in Part 1(A). For any given diagnostic procedure, the prevailing liability risk is likely to be one concerning errors of omission (e.g., failing to perform a necessary test) as opposed to errors of commission (e.g., actionable harms resulting from performing the test). Responding to these concerns, physicians will tend to increase the number of tests they perform. However, in the face of any given non-diagnostic procedure, a physician may face some fears over liability risks stemming from both errors of commission (e.g., botching a procedure) and errors of omission (e.g., failing to perform a needed procedure). These risks may encourage physicians to perform some additional procedures, while also discouraging them from performing others. Accordingly, the net influence of the law is likely to be more attenuated in this latter context. To the extent that we indeed find this predicted pattern of evidence, it is further supportive of a liability interpretation of the primary findings.23

Finally, we separately estimate our key specification in two distinct sub-samples: (1) states without caps on non-economic damages and total damages awards and (2) states with either noneconomic damages caps or total damages caps.24 By capping damages at some specified level, the relevant jurisdictions are already effectively immunizing providers from some portion of the potential damages award. As such, cap adoptions should absorb some portion of the overall impact of immunizing providers from liability. Accordingly, we would predict a smaller impact of liability immunities in states that have already imposed pain and suffering caps or total damages caps.25 Of course, just how much of a difference we would expect is subject to much uncertainty given our review of the literature above. Nonetheless, given that recent meta-analyses on defensive medicine predict some overall degree of aggregate spending reductions in response to caps (for instance, Mello et al. 2010), we do predict a difference—even if minor—in effects in states with and without caps; and if we do find such a difference, it will further reinforce the liability explanation behind a reduction in treatment intensity for active duty being treated at MTFs.

IV. Results

IV.A. Preliminary Difference-in-Difference Results

We begin in Column 1 of Table 3 by showing the results from the Ordinary Least Squares (OLS) difference-in-difference specification with Relative Weighted Product (logged) as the outcome variable. The coefficient of the MTF variable evidences baseline differences in utilization levels across MTF and civilian care locations, indicative of lower intensity at military facilities, all else equal. The estimated coefficient of the interaction term (Active-Duty-Patient-by-MTF) captures the treatment effect of interest and suggests that this negative differential between MTF and civilian care is notably more pronounced in the case of active duty patients, i.e., the group that lacks medical liability recourse when treated at MTFs. Specifically, we find that this treatment status is associated with a 5.0 log-point decrease in the intensity with which health care is delivered. Other coefficients in the regression have their expected signs, with higher comorbidity enrollees garnering more utilization, and variability across pay grade.26
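Because the outcome is logged, the interaction coefficient is measured in log points. As a small worked conversion, the sketch below translates the Column 1 coefficient of −0.050 into the exact percent change it implies, using the standard transformation exp(b) − 1. The helper name is hypothetical.

```python
import math

def log_points_to_percent(coef):
    """Exact percent change implied by a coefficient on a logged outcome."""
    return (math.exp(coef) - 1.0) * 100.0

# Interaction coefficient from Column 1 of Table 3.
pct = log_points_to_percent(-0.050)
```

For coefficients this small, the log-point value and the exact percent change are nearly identical (here roughly a 4.9 percent reduction), which is why the two are often used interchangeably.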

Table 3.

Relationship between Medical Liability Immunity and Treatment Intensity (Relative Weighted Product, Logged)

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) |
|---|---|---|---|---|---|---|---|---|
| MTF | −0.047 (0.004) | - | - | - | - | 0.008 (0.025) | - | - |
| Active Duty Patient | 0.038 (0.006) | 0.036 (0.006) | 0.028 (0.005) | 0.040 (0.006) | 0.029 (0.005) | 0.028 (0.010) | 0.034 (0.005) | 0.026 (0.005) |
| Active Duty Patient X MTF | −0.050 (0.008) | −0.054 (0.008) | −0.039 (0.006) | −0.057 (0.008) | −0.041 (0.006) | −0.050 (0.009) | −0.049 (0.007) | −0.035 (0.007) |
| Male | 0.012 (0.002) | 0.011 (0.002) | 0.016 (0.001) | 0.009 (0.002) | 0.029 (0.005) | 0.011 (0.002) | 0.009 (0.002) | 0.016 (0.011) |
| Charlson Comorbidity Score | 0.067 (0.002) | 0.058 (0.002) | 0.059 (0.002) | 0.058 (0.002) | 0.059 (0.002) | 0.068 (0.002) | 0.058 (0.002) | 0.059 (0.002) |
| (Omitted: Junior Enlisted) | | | | | | | | |
| Senior Enlisted | −0.007 (0.001) | −0.006 (0.002) | −0.006 (0.001) | −0.006 (0.001) | −0.005 (0.001) | 0.005 (0.005) | −0.005 (0.001) | −0.006 (0.001) |
| Warrant Officer | −0.011 (0.003) | −0.012 (0.004) | −0.013 (0.003) | −0.013 (0.004) | −0.013 (0.003) | 0.003 (0.007) | −0.013 (0.004) | −0.013 (0.003) |
| Junior Officer | −0.030 (0.002) | −0.033 (0.002) | −0.031 (0.002) | −0.029 (0.002) | −0.027 (0.002) | −0.022 (0.003) | −0.029 (0.002) | −0.028 (0.002) |
| Senior Officer | −0.029 (0.002) | −0.037 (0.003) | −0.033 (0.002) | −0.029 (0.002) | −0.028 (0.002) | −0.011 (0.006) | −0.029 (0.002) | −0.028 (0.002) |
| (Estimated Coefficients for Age and Primary Diagnosis Dummies Not Listed) | | | | | | | | |
| N | 2027632 | 2027362 | 2027362 | 2027362 | 2027362 | 2027362 | 2027362 | 2027362 |
| Hospital Fixed Effects | NO | YES | NO | YES | NO | NO | YES | NO |
| Physician Fixed Effects | NO | NO | YES | NO | YES | NO | NO | YES |
| Instrument for MTF & Active Duty Patient X MTF? | NO | NO | NO | NO | NO | YES | YES | YES |
| Zip code Fixed Effects? | NO | NO | NO | YES | YES | YES | YES | YES |

Notes: robust standard errors corrected for within-catchment-area correlation in the error term are reported in parentheses (based on original catchment area designations). All regressions include year fixed effects and controls for patient age (dummies), sex, Charlson comorbidity and paygrade (dummies), along with primary diagnosis code dummies.

In Columns 2 and 3 of Table 3, we add hospital and physician fixed effects, respectively, to address concerns over provider selection. In this specification, we thus show the relative effects within providers in their treatment of active duty versus non-active duty patients (and how such relative effects differ when looking at encounters on the base versus off). With this addition, the coefficient of the active duty-on-base interaction remains very similar at −0.054 and −0.039 across these two columns; moreover, these several estimates are statistically indistinguishable from each other, suggesting either little or no provider-based selection or an offsetting influence of provider selection and patient-level spillovers within providers.27 As discussed in Part III, should patient-level spillovers exist, our results can be seen as a lower bound for the full treatment effects stemming from liability immunity. Finally, in Columns 4 and 5, we replicate Columns 2 and 3 but with the addition of zip-code fixed effects. The results are nearly identical.

IV.B. Base-Hospital Closings Analysis

A major concern with this strategy noted above was the fact that choice of treatment at the MTF is endogenous. To address this, we turn to our closings analysis. We start with a simple presentation of this analysis and then more comprehensively build it into the estimation framework just discussed.

To begin, we estimate a difference-in-difference specification where we form a post-closings dummy variable that equals 0 for all zip codes not affected by a closing (i.e., whose closest-MTF distances do not change over the sample) and which equals 0 for the affected zip codes in the pre-closing period, while equaling 1 for the affected zip codes post-closing. We then interact this variable with the active-duty dummy. Since closings push beneficiaries to use civilian hospitals as opposed to MTFs, we expect to observe an opposite-signed coefficient of this interaction variable relative to the coefficients estimated in Table 3. A positive coefficient of the closing-active-duty interaction suggests that treatment intensity increases for active relative to non-active duty as care moves off the base—i.e., to an environment where active-duty attain liability rights.
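The coding of the post-closings dummy and its interaction with active-duty status can be sketched as below. The function names are hypothetical, and the treatment of the closure year itself (coded here as post-closing) is an assumption, since the text does not say how that year is handled.

```python
def post_closing(affected, year, closure_year=None):
    """Post-closing dummy for one zip-code-year.

    affected: True if the zip code's closest-MTF distance changed
        because of a base-hospital closure.
    Assumption: the closure year itself is coded as post-closing.
    """
    if not affected:
        return 0  # unaffected zip codes are always 0
    if closure_year is None:
        raise ValueError("affected zip codes need a closure year")
    return 1 if year >= closure_year else 0

def active_x_post(active_duty, affected, year, closure_year=None):
    """Active-duty dummy (0 or 1) times the post-closing dummy."""
    return active_duty * post_closing(affected, year, closure_year)

# Hypothetical zip code whose MTF closed in 2008.
timeline = [post_closing(True, y, 2008) for y in (2006, 2007, 2008, 2009)]
```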

Consistent with these expectations, we do indeed estimate a positive relationship between treatment intensity (log RWP) and the post-closings-active-duty interaction variable. We present the results of this exercise graphically in Figure 2 and in tabular format in Table A2 of the Online Appendix. We start in Table A2 by showing results for a difference-in-difference specification that focuses only on zip codes that were initially within 40 miles of a base hospital and that were affected by a base hospital closing (i.e., zip codes whose closest-MTF variable changed during the sample due to a closing). We then proceed across the various columns of Table A2 to incrementally enrich this naive specification, adding zip-code effects and provider effects, while also including as controls encounters in zip codes unaffected by MTF closings. The findings are notably stable across each specification, with the most complete approach suggesting a 3.4 log-point increase in RWP for active duty relative to non-active duty in the post-closing period.

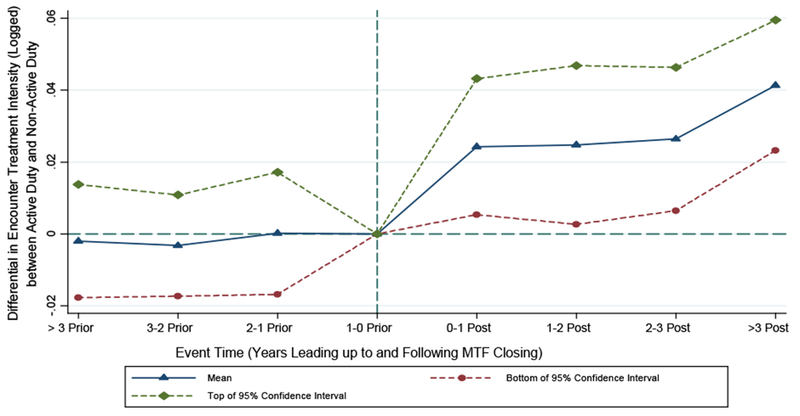

Figure 2.

Differential Effect of MTF Closings on Relative Weighted Product (Logged) for Active Duty Relative to Non-Active Duty: Event-Study Analysis

Notes: this figure plots coefficients from a regression that extends the specification estimated in Column 4 of Table A2 to include leads and lags of the closing variable and of the interaction between closing and active-duty-patient status (where the relevant lead/lag variable equals 1 in the indicated period of time and 0 in all other periods). The points in the graph represent the coefficients of the set of leads and lags of the interaction variables. The period of time in the one year preceding the MTF closure is the reference period. Source: 2003-2013 Military Health System Data Repository.

In Figure 2, we confront the base closings analysis more rigorously in an event-study framework. We modify the specification from Table A2 to include leads and lags of both the closing variable and the interaction term of interest, and plot the latter set of interactions over time. The estimated coefficients of the leads of this interaction term allow us to test whether the divergence in treatment intensity between active duty and non-active duty that we estimate in connection with MTF closings, in fact, began to materialize prior to the closing, an effect that would otherwise cast some doubt on a causal interpretation of the base-hospital-closure findings. This falsification exercise sounds no alarms, with each of the estimated lead coefficients being close to 0 in magnitude and statistically indistinguishable from 0. This finding suggests that the treatment intensity delivered to active duty would have trended in the same manner as it would for the non-active duty but for the closings. Following the moment of the closing, however, we begin to observe an increase in log RWP for active duty relative to non-active duty.
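The lead and lag indicators used in such an event-study specification can be sketched as follows. This is an illustration only: the annual periods, the three-period window, and all names are hypothetical choices, and the sketch does not pool periods outside the window into end-point bins.

```python
from typing import Dict, Optional


def event_time_dummies(year: int, closing_year: Optional[int],
                       window: int = 3, reference: int = -1) -> Dict[int, int]:
    """Indicators for each period relative to the closing, omitting the reference period.

    Keys are relative periods (negative = leads, non-negative = lags).
    Each indicator equals 1 in its own period and 0 in all other periods.
    """
    dummies = {k: 0 for k in range(-window, window + 1) if k != reference}
    if closing_year is not None:          # unaffected zip codes keep all zeros
        k = year - closing_year
        if k in dummies:                  # reference or out-of-window periods stay all zeros
            dummies[k] = 1
    return dummies


def interact_with_active_duty(dummies: Dict[int, int], active_duty: int) -> Dict[int, int]:
    """Interaction terms whose coefficients are the points plotted in an event-study graph."""
    return {k: v * active_duty for k, v in dummies.items()}
```

Because the period immediately preceding the closing is omitted, each plotted coefficient is read relative to that period, and lead coefficients near zero are what the absence of pre-trends would look like.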

While this framework clearly illustrates the impact of closings on the treatment of active duty versus non-active duty patients, the magnitudes cannot be directly compared to the difference-in-difference coefficient of interest from Columns 1-5 of Table 3. To create a comparable estimate, we add additional columns to Table 3 to estimate the closings-based IV strategy discussed in Part III. This specification allows us to estimate the differential effect of using an MTF for active duty relative to non-active duty while accounting for endogeneity in the use of the base hospital (insofar as it draws on closings to induce within-zip-code changes in distances to the closest MTF and thus access to an MTF). With this specification, we estimate that the intensity of health care delivered to the treatment group (i.e., active-duty patients receiving care on the base) is roughly 5 log-points lower. When adding either hospital or physician fixed effects, these findings change very little. Overall, the pattern of IV results is nearly identical to the OLS results presented in Columns 1 – 5.28
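The instruments in this strategy draw on distance to the closest MTF, entered as a series of distance-bin dummies together with their interactions with the active-duty dummy. A rough sketch of that construction follows. The bin edges and variable names are hypothetical placeholders, since the actual bins are not stated in this section.

```python
from typing import Dict, Sequence


def distance_bin_instruments(distance_miles: float, active_duty: int,
                             edges: Sequence[float] = (10.0, 20.0, 40.0)) -> Dict[str, int]:
    """Distance-bin dummies and their interactions with active-duty status.

    edges are hypothetical upper bounds (in miles) of successive bins.
    Distances at or beyond the last edge fall in the omitted category.
    """
    instruments: Dict[str, int] = {}
    lower = 0.0
    for upper in edges:
        in_bin = int(lower <= distance_miles < upper)
        name = f"dist_{int(lower)}_{int(upper)}"
        instruments[name] = in_bin                             # instruments MTF use
        instruments[name + "_x_active"] = in_bin * active_duty  # instruments Active x MTF
        lower = upper
    return instruments
```

With zip-code fixed effects in the regression, the identifying variation in these dummies comes from zip codes that move between bins over time, which is what a base-hospital closing produces.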

Thus far, our results have focused only on the Relative Weighted Product, the Department of Defense derived intensity metric designed to be comparable across direct and purchased care settings. To explore matters further, in Table 4, we show the results for the following alternative measures of health care treatment intensity: hospital length of stay (logged), the incidence of any procedure being performed during the encounter, and the number of procedures administered during the encounter (logged).29 For the purposes of brevity, we show only our IV estimates with hospital fixed effects (though the results are robust to the inclusion of physician fixed effects and to the estimation of OLS specifications). Consistent with the RWP findings, the results from Table 4 indicate that our treatment status (active duty on the base) is associated with significant reductions—ranging from approximately 4 to 5 percent—in each of these alternative metrics.

Table 4.

Relationship between Medical Liability Immunity and Various Alternative Treatment Intensity Metrics among Full Inpatient Sample

| | (1) | (2) | (3) |
|---|---|---|---|
| | Total Bed Days (Logged) | Incidence of Any Procedure | Number of Procedures (Logged) |
| Active Duty Patient | 0.072 (0.010) | 0.011 (0.004) | 0.034 (0.012) |
| Active Duty Patient X MTF | −0.053 (0.018) | −0.028 (0.009) | −0.052 (0.019) |
| N | 2408947 | 2418240 | 1888351 |
| Interaction Coefficient as a Fraction of Mean of Outcome Variable, For Non-Logged Outcome Variables | - | −0.04 | - |

Notes: robust standard errors corrected for within-catchment-area correlation in the error term are reported in parentheses (based on original catchment area designations). All regressions include year, primary diagnosis code, hospital and zip-code fixed effects and controls for patient age (dummies), sex, Charlson comorbidity and paygrade (dummies). MTF and Active-Duty-Patient X MTF are instrumented by a series of dummy variables capturing different distance bins between a patient’s residence and the closest MTF along with the interaction between such dummies and the active-duty-patient dummy. The coefficient of the MTF indicator is dropped due to the inclusion of provider fixed effects.
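For readers translating the logged-outcome coefficients in Table 4 into percentage terms, the conversion can be sketched as below. The function names are our own, and the outcome mean needed for the fraction-of-mean row is not reported in this section, so it is left as an argument.

```python
import math


def log_points_to_percent(coef: float) -> float:
    """Exact percent change implied by a coefficient on a logged outcome."""
    return 100.0 * (math.exp(coef) - 1.0)


def fraction_of_mean(coef: float, outcome_mean: float) -> float:
    """Coefficient on a non-logged outcome, scaled by the mean of that outcome."""
    return coef / outcome_mean


# Interaction coefficients for the two logged outcomes in Table 4.
bed_days_pct = log_points_to_percent(-0.053)    # total bed days (logged)
procedures_pct = log_points_to_percent(-0.052)  # number of procedures (logged)
```

For coefficients of this size the exact conversion and the log-point approximation differ only in the first decimal place, so both readings give a reduction of roughly 5 percent.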

On a final note regarding Table 3, in Table A6 of the Online Appendix, we estimate an analogous table that focuses on a subset of admissions for medical conditions over which physicians have less discretion over whether or not to admit the patient to the hospital in the first place (as discussed in Part III). The resulting sample size is slightly less than 1/2 of the primary analytical sample. With this more restrictive approach, we continue to estimate a negative association between liability immunity and treatment intensity, though at a magnitude that is roughly 1-2 percentage points lower in absolute terms than the corresponding estimates in Table 3.30

IV.C. Diagnostic Utilization Analysis

In Table 5, we separately estimate the above IV specification on the utilization of any diagnostic procedure (Panel A) and on any non-diagnostic procedure (Panel B). Given the specificity of diagnostic tests to the particular clinical setting—e.g., esophagogastroduodenoscopy in the case of gastrointestinal encounters—we first perform this analysis separately across a range of the most common Major Diagnostic Categories represented in our data, with each column in Table 5 representing a different category. In the second-to-last column, we consider a residual category of the remaining diagnostic categories pooled and in the final column, we pool over all diagnostic categories.31 For the purposes of brevity, we present only the results of the IV specification with hospital fixed effects, though the resulting pattern of coefficients is robust to the estimation of OLS specifications and to the inclusion of physician effects.

Table 5.

Effect of Liability Immunity on Diagnostic-Procedure Utilization and Non-Diagnostic-Procedure Utilization, Separately Across Different Admission Types

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) |
|---|---|---|---|---|---|---|---|---|---|
| | Cardiac / Circulatory Admission | Respiratory Admission | Orthopedic Admission | Gastrointestinal Admission | Neurological Admission | Mental Health Admission | Endocrine, Nutritional and Metabolic Admission | Other Non-Childbirth Admission | Pooled Sample: All Non-Childbirth Admissions |
| Panel A: Outcome Variable = Incidence of Appropriate Diagnostic Test for Indicated Admission Type | | | | | | | | | |
| Active | 0.015 (0.008) | 0.031 (0.013) | 0.030 (0.007) | −0.008 (0.008) | 0.033 (0.009) | 0.004 (0.002) | 0.061 (0.014) | 0.027 (0.004) | 0.038 (0.003) |
| MTF * Active | −0.022 (0.013) | −0.059 (0.022) | −0.056 (0.015) | −0.018 (0.011) | −0.053 (0.020) | −0.026 (0.015) | −0.066 (0.018) | −0.059 (0.007) | −0.067 (0.005) |
| Interaction Coefficient as a Fraction of Mean of Dependent Variable | −0.03 | −0.15 | −0.16 | −0.04 | −0.09 | −0.55 | −0.67 | −0.31 | −0.22 |
| Panel B: Outcome Variable = Incidence of Any Non-Diagnostic Procedure | | | | | | | | | |
| Active | 0.004 (0.010) | 0.003 (0.012) | 0.019 (0.006) | −0.001 (0.006) | 0.008 (0.008) | −0.005 (0.003) | −0.145 (0.020) | −0.008 (0.004) | −0.005 (0.003) |
| MTF * Active | 0.010 (0.017) | −0.027 (0.025) | 0.026 (0.015) | 0.015 (0.008) | 0.007 (0.016) | 0.033 (0.025) | 0.009 (0.030) | 0.005 (0.010) | 0.003 (0.008) |
| Interaction Coefficient as a Fraction of Mean of Dependent Variable | 0.02 | −0.07 | 0.03 | 0.03 | 0.02 | 0.30 | 0.01 | 0.08 | 0.01 |

Notes: robust standard errors corrected for within-catchment-area correlation in the error term are reported in parentheses (based on original catchment area designations). All regressions include year, primary diagnosis code, hospital and zip-code fixed effects and controls for patient age (dummies), sex, Charlson comorbidity and paygrade (dummies). MTF and Active-Duty-Patient X MTF are instrumented by a series of dummy variables capturing different distance bins between a patient’s residence and the closest MTF along with the interaction between such dummies and the active-duty-patient dummy. The coefficient of the MTF indicator is dropped due to the inclusion of hospital fixed effects.

In the diagnostic testing specifications, we document a negative coefficient for the ON-BASE X ACTIVE_DUTY interaction variable (the MTF * Active row of Table 5) across every admission category. The magnitudes of these negative coefficients, as a percentage of the relevant mean diagnostic rates, are considerable, ranging from 3% to 67%. In the case of the non-diagnostic-testing specifications, however, we estimate coefficients of the key interaction variable that are much closer to zero across each diagnostic category. Only two such estimates are marginally significant (those for orthopedic and gastrointestinal admissions) and, in those cases, the point estimates are actually small and positive. All told, across each and every category, the effect of our treatment status on procedure utilization rates is more strongly negative in the case of diagnostic testing relative to non-diagnostic procedures. To provide a collective sense of this analysis, in the final pooled column, we find a roughly 22 percent decrease in the incidence of diagnostic testing in connection with our active-duty/on-base treatment status, which is meaningfully and statistically different from the roughly 1 percent increase that we find in the incidence of any non-diagnostic procedure.

Because providers are immune from liability when delivering care to our treatment group, one may interpret our primary findings from Table 3 as evidence of a reduction in treatment intensity stemming from liability immunity. As above, however, one may be concerned that some other mechanism explains the lower intensity of care provided to the treatment group. The pattern of results presented in Table 5 supports the suggestion that our primary findings reflect the impacts of liability immunity on health care treatment intensity, since, as discussed in Part III, one would predict stronger negative impacts of liability immunity in the case of diagnostic tests relative to non-diagnostic procedures. Of course, this pattern of heterogeneous effects may also be consistent with one or more of the alternative mechanisms discussed above, or with yet further alternative mechanisms (e.g., a story in which MTFs treat their healthy patients differently than civilian hospitals treat their healthy patients). To that extent, one may remain concerned that the pattern of results presented thus far arises from a mechanism other than the effects of liability pressures.

IV.D. Patient Outcomes

We have thus far provided evidence consistent with a story in which the removal of malpractice pressure substantially reduces treatment intensity in inpatient care. However, should these findings indeed reflect the actual impacts of liability, the welfare impacts are far from clear. If reduced treatment intensity significantly worsens patient outcomes, then malpractice pressure may be a productive way of ensuring that quality care is delivered to patients. But if reduced treatment has no effect on outcomes, or if it even improves outcomes, this would argue that the liability effects that we see in non-immune environments are wasteful and thus “defensive.”

As discussed in Part III, we consider several measures of patient outcomes.32 Table 6 shows the results for each measure, again focusing for brevity purposes on the IV specification with hospital fixed effects (as before, with the results being similar in the omitted OLS and other alternative specifications). In no case in Table 6 do we find evidence of a differential effect of MTF care on active-duty patients relative to non-active duty patients that would be suggestive of a worsening of health care outcomes in association with liability immunity. However, each individual result is somewhat noisy and, in the case of the two mortality outcomes and patient safety indicators, we cannot necessarily rule out that health care outcomes are worse for the active-duty / on-base treatment group. We show this in the remaining rows of the Table, which show the confidence intervals in absolute and percentage terms. For example, we cannot rule out that our treatment group is associated with an increase in mortality as high as 0.08 percentage points (or 17%).
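The intervals reported in Table 6 follow the usual normal-approximation form, which can be sketched as below. The function names are our own, and `outcome_mean` is a placeholder argument because the outcome means are not reported in this section. Since the table's coefficients and standard errors are rounded to two decimals, recomputing from them need not reproduce the published bounds to the last digit.

```python
from typing import Tuple


def confidence_interval(coef: float, se: float, z: float = 1.96) -> Tuple[float, float]:
    """Two-sided 95% interval under a normal approximation."""
    half_width = z * se
    return (coef - half_width, coef + half_width)


def scale_by_mean(interval: Tuple[float, float], outcome_mean: float) -> Tuple[float, float]:
    """Express the interval bounds as fractions of the outcome mean."""
    lower, upper = interval
    return (lower / outcome_mean, upper / outcome_mean)


# Rounded Table 6 inputs for 90-day mortality (coefficients already multiplied by 100).
lo, hi = confidence_interval(-0.03, 0.06)
```

The upper bound of such an interval is the relevant quantity for the argument in the text: it gives the largest increase in the adverse outcome that the data cannot rule out.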

Table 6.

Relationship between Medical Liability Immunity and Various Health Care Quality Metrics among Full Inpatient Sample

| | (1) | (2) | (3) | (4) |
|---|---|---|---|---|
| | Incidence of Mortality within 90 Days of Discharge (Coefficients Multiplied by 100) | Incidence of Mortality within 1 Year of Discharge (Coefficients Multiplied by 100) | Incidence of Unplanned Hospital Readmission Within 30 Days (Coefficients Multiplied by 100) | Incidence of Patient Safety Indicator (Coefficients Multiplied by 100) |
| Active Duty Patient | 0.11 (0.04) | 0.07 (0.06) | −0.48 (0.10) | 0.13 (0.06) |
| Active Duty Patient X MTF | −0.03 (0.06) | 0.02 (0.10) | −0.35 (0.15) | 0.06 (0.08) |
| 95% Confidence Interval for Interaction Variable | [−0.16, 0.08] | [−0.17, 0.23] | [−0.65, −0.06] | [−0.10, 0.23] |
| 95% Confidence Interval as Fraction of Mean of Outcome Variable | [−0.33, 0.17] | [−0.19, 0.26] | [−0.16, −0.01] | [−0.06, 0.13] |
| N | 2201771 | 2201771 | 2002653 | 2331195 |