Abstract

Longitudinal targeted maximum likelihood estimation (LTMLE) has very rarely been used to estimate dynamic treatment effects in the context of time-dependent confounding affected by prior treatment when faced with long follow-up times, multiple time-varying confounders, and complex associational relationships simultaneously. Reasons for this include the potential computational burden, technical challenges, restricted modeling options for long follow-up times, and limited practical guidance in the literature. However, LTMLE has desirable asymptotic properties, i.e. it is doubly robust, and can yield valid inference when used in conjunction with machine learning. It also has the advantage of easy-to-calculate analytic standard errors in contrast to the g-formula, which requires bootstrapping. We use a topical and sophisticated question from HIV treatment research to show that LTMLE can be used successfully in complex realistic settings and we compare results to competing estimators. Our example illustrates the following practical challenges common to many epidemiological studies 1) long follow-up time (30 months), 2) gradually declining sample size 3) limited support for some intervention rules of interest 4) a high-dimensional set of potential adjustment variables, increasing both the need and the challenge of integrating appropriate machine learning methods 5) consideration of collider bias. Our analyses, as well as simulations, shed new light on the application of LTMLE in complex and realistic settings: we show that (i) LTMLE can yield stable and good estimates, even when confronted with small samples and limited modeling options; (ii) machine learning utilized with a small set of simple learners (if more complex ones cannot be fitted) can outperform a single, complex model, which is tailored to incorporate prior clinical knowledge; (iii) performance can vary considerably depending on interventions and their support in the data, and therefore critical quality checks should accompany every LTMLE analysis. We provide guidance for the practical application of LTMLE.

Keywords: causal inference, g-methods, TMLE, HIV treatment

1. Introduction

Causal quantities, such as the average treatment effect, causal odds ratio or causal risk ratio, can often be estimated from observational data if assumptions about the data generating process are made and met. In this context, Targeted Maximum Likelihood Estimation (TMLE) is one estimation option. Since the mid 2000’s TMLE has been implemented successfully in numerous applications, initially for the effects of treatments or exposures at a single time point [1, 2, 3, 4, 5]. In recent years, an increasing body of work has dealt with the application of TMLE in longitudinal settings [6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19].

Longitudinal targeted maximum likelihood estimation (LTMLE) requires the fitting of models for the outcome, censoring, and treatment mechanisms, at each point in time. Alternative approaches include those based on estimating the treatment and censoring mechanism only (inverse probability of treatment weighted approaches)][20], the iterated outcome regressions only (one form of the g-formula)[21] or an outcome regression combined with the conditional densities of the confounders (“traditional” parametric g-formula)[22]. LTMLE is a doubly robust method which means that the causal quantity of interest is estimated consistently if either the iterated outcome regressions or the treatment and censoring mechanism are estimated consistently [23]. Moreover, LTMLE allows analytic estimation of standard errors and confidence intervals, based on estimates of the influence curve, as opposed to the g-formula which makes use of bootstrapping [24, 25]. To increase the likelihood of correct model specification, the use of machine learning algorithms are typically recommended. LTMLE, like other doubly robust methods, has the advantage over both inverse probability weighted and g-formula methods that it can more readily incorporate machine learning methods while retaining valid statistical inference.

The current literature is somewhat limited with respect to understanding the implications of using LTMLE for long follow-up times when dealing with small (or moderate) sample sizes and complex relationships among a multitude of confounders. Most studies to date were illustrative in nature and have dealt with a small number of follow-up time points, i.e. two to nine [6, 7, 8, 9, 10, 12, 13, 14, 15, 18, 19], or large sample sizes [11, 15, 18, 19], but to our knowledge very rarely with both small (or moderate) sample sizes and long follow-up. A notable exception is the study from Schnitzer et al [16] with a very long follow-up period, but the authors reported memory problems and had difficulties dealing with a decreasing sample size and sparsity of events. In spite of LTMLE’s double robustness feature, consistent estimation of both treatment and censoring mechanisms and outcome regressions, with reasonable rates of convergence, is important to improve efficiency, reduce finite sample bias, and provide the basis for valid statistical inference. A small sample may limit the application of data-adaptive approaches, such as additive models, boosting, random forests, shrinkage estimators, support vector machines and others, because the selection of tuning parameters and convergence of fitting algorithms may not work well, or not at all, under these circumstances – particularly when confronted with multiple time-dependent confounders which influence outcome and treatment non-linearly and in interaction with each other. Interestingly, in many studies using LTMLE the application of machine learning algorithms is limited [7, 8, 11, 17, 18], posing the question whether this was done intentionally because of restricted sample size or computational limitations.

A common estimation approach for LTMLE requires model fitting on the subset of subjects who follow the intervention of interest (and remain alive and uncensored). This may pose a problem if follow-up time is long and therefore the sample size gradually reduces: under these circumstances it may not be possible to successfully fit a multitude of learners which makes correct model specification more challenging. While there are alternative estimation approaches [12] which utilize the full sample, it remains to be investigated how the performance of these estimators differ in a finite sample.

For applying LTMLE in survival analyses, both the treatment and censoring mechanisms need to be modeled; in fact, for T time points, the cumulative inverse probabilities of remaining uncensored and following the intervention of interest, i.e. the product of T × 2 inverse probabilities, are part of the weighted fitting procedure. Unstable weights, possibly dealt with by truncation of the probabilities on which the weight calculation is based, could therefore emerge under long follow-up and non-ideal modeling strategies. It remains unclear to what degree this is a threat to the successful implementation of LTMLE.

The present article is based on our motivating question outlined in the next section. To answer it, one has to deal with long follow-up times, gradually reduced sample size (down to about 10% of the original sample size of 2604) and multiple time-dependent confounders. Clinical knowledge suggests that the data-generating process is complex in the sense that modeling should go beyond standard regression approaches. We investigate challenges and solutions with applying LTMLE in this setting, both by means of simulations and data analyses. In particular, we try to answer the following questions: (i) is it feasible to apply LTMLE in such a setting; (ii) if yes, will the complex setup reduce the applicability of super learning and would this impact the success of LTMLE?; (iii) under such a challenging scenario, could a manual implementation which is informed by prior clinical knowledge and is not data-adaptive be more successful than an automated LTMLE procedure?; (iv) how do long follow-up times, gradually reduced sample size, and truncated inverse probabilities impact on LTMLE for different intervention mechanisms? (v) what can be learned from these points that would benefit future analyses which attempt to apply LTMLE in complex data settings?

We introduce the motivating question in Section 2, followed by the theoretical framework in Section 3. After presenting our data analysis (Section 4) we discuss the implications of LTMLE in such a setting by means of both practical considerations and simulated studies in the following two sections. We conclude in Section 7.

2. Motivating Example

During the last decade the World Health Organization (WHO) updated their recommendations regarding the use of antiretroviral drugs for treating and preventing HIV infection several times. In the past, antiretroviral treatment (ART) was only given to a child if his/her measurements of CD4 lymphocytes fell below a critical value or if a clinically severe event (such as tuberculosis or persistent diarrhoea) occurred. Based on both increased knowledge from trials and causal modeling studies, as well as pragmatic and programmatic considerations, these criteria have been gradually expanded to allow earlier treatment initiation in children: ART has shown to be effective and to reduce mortality in infants and adults [26, 27], but concerns remain due to a potentially increased risk of toxicities, early development of drug resistance, and limited future options for people who fail treatment. In late 2015, WHO decided to recommend immediate treatment initiation in all children and adults.

It remains important to investigate the effect of different treatment initiation rules on mortality, morbidity and child development outcomes; for example, because of possible unknown long-term treatment effects and the increase in drug related toxicities. However given the shift in ART guidelines towards earlier treatment initiation it is no longer ethically possible to conduct a trial to answer this question. Moreover, trials are often limited by testing interventions in idealized, non-realistic, settings – for example by imposing regular medical visits and therefore additional personal attention which would not happen in most public health programmes.

Thus, observational data can be used to obtain effect estimates to compare early versus delayed treatment initiation rules. Methods such as inverse probability weighting of marginal structural models, the g-formula, and longitudinal targeted maximum likelihood estimation can be used to obtain results in complicated longitudinal settings where time-varying confounders affected by prior treatment are present — such as, for example, CD4 count which influences both the probability of ART initiation and outcomes [28, 12].

Below we focus on children’s growth outcomes, measured by height-for-age z-scores (HAZ), and explore the effect of different treatment initiation criteria on HAZ. The PREDICT trial, conducted among Asian children aged 1-12, suggested a growth difference between early and deferred treatment initiation, among those children who survived [29, Table 3]. However, unfortunately, the study was underpowered and had other limitations, including few very young children. In our analysis, we are particularly interested in whether strategies of earlier treatment initiation are beneficial for children’s growth.

3. Methodological Framework

Consider n subjects studied at baseline (t = 0) and during discrete follow-up times (t = 1,…, T) where T defines the end of follow-up. Some subjects may be censored and not be followed until T. The data for each time point t consists of the outcome Yt, an intervention variable At, q time-dependent covariates , and a censoring indicator Ct. Note that Lt contains indicator variables which indicate whether each covariate is measured for the individual at time t or not. Baseline variables, i.e. variables measured at t = 0, are denoted as . Treatment and covariate histories of an individual i up to and including time t are represented as and respectively. Ct equals 0 if a subject gets censored in the interval (t – 1, t], and 1 otherwise. Therefore, is the event that an individual remains uncensored until time t. Further, let St,i be an indicator variable which is 1 if a patient i is still alive at time t and 0 otherwise.

The counterfactual outcome refers to the hypothetical outcome that would have been observed at time t if subject i had received, possibly contrary to the fact, the treatment history . Similarly, are the counterfactual covariates related to the intervention . The above notation refers to static treatment rules; a treatment rule may, however, depend on covariates, and in this case it is called dynamic. A dynamic rule assigns treatment At,i ∈ {0, 1} as a function of the time-dependent confounder history ; and can also assign survival St,i = 1 and non-censoring Ct,i = 1 if required. The notation refers to the treatment (and censoring/survival) history up to and including time t according to the rule . The counterfactual outcome at time t related to a dynamic rule is , and the counterfactual covariates at the respective time point are .

3.1. Target quantity

We choose as the target quantity. More specifically, we are interested in the expected value of Y at time T under an intervention rule which assigns At as a function of and sets Ct and St deterministically to 1; that is, we consider rules which intervene on At = [At, Ct, St}. The choice of this target quantity is based on the motivating data example and justified in more detail in Section 4.

Below, in Sections 3.2, 3.3, and 3.4, we are going to introduce 3 different estimators which are generally suitable to estimate ψT. These estimators are further discussed in Section 3.5, and are implemented and compared in both the data analysis (Section 4) and the simulation study (Section 6).

3.2. The g-formula

The quantity of interest is identifiable through the g-formula.[22],

| (1) |

where f(·) refers to the probability density function of the respective random variable(s), Yt ∈ Lt (t < T) and At = [At, Ct, St}. It is an option to assume an order for the variables in which case we could write the joint distribution in (1)as

| (2) |

The factorization in (2) makes an implicit assumption about the time ordering of the relevant variables. For example, if we have 3 time-dependent confounders (q = 3), we assume that the order is → → . More generally we assume → → → Yt → At → Ct → St for t = 0, 1,…, T, see Sections 4.1 and 5.2 for more details on the chosen time ordering. We further assume time points to represent intervals and thus both covariates and a fatal event like death can potentially be measured at the same time t.

The equality holds under several assumptions. One is consistency which says that the counterfactuals equal the observed data under assignment to the treatment actually taken; more technically, if and if . Another assumption is (sequential) conditional exchangeability (or no unmeasured confounding) which requires the counterfactual outcome under the assigned treatment rule to be independent of the observed treatment assignment, given the observed past: , for , , t = 0,…, T. Positivity is the requirement that individuals have a positive probability of continuing to receive treatment according to the assigned treatment rule, given that they have done so thus far and irrespective of the covariate history: for ∀t, , with .

More comprehensive interpretations of the above assumptions can be found in [28, 30, 31, 32, 33] and are discussed in Section 5 as well.

3.2.1. Practical implementation of the (parametric) g-formula:

the integral (1) can be approximated by means of simulation, as shown previously [32, 34]:

Estimate the conditional densities in (1) by means of suitable, possibly semi-parametric, regression models. For example, if there are 3 time-dependent covariates one needs to fit 3 models for each time point, as well as a model for Yt for each point in time (because Yt ∈ Lt for t < T and for t = T one needs to estimate . Note that for this step some parametric assumptions are required, e.g. committing to a normal distribution of the residuals with homoscedastic variance when using a linear (additive) model.

Choose a particular intervention rule .

- Simulate data forward in time for the chosen intervention rule, based on the estimated conditional distributions from Step 1:

- for t = 0, the data are the observed data.

- for t > 0:

- set At–1 according to the treatment rule (we write ).

- take the fitted models from step 1 to estimate the densities under . For instance, when using the fit of a linear model where the outcome Yt is regressed on its past, set , use L0 from the observed data and Lt from the counterfactual estimates obtained in c) – and calculate and under this intervention and these data based on the fitted model parameters.

- then, generate stochastic draws of the conditional distributions under the respective intervention. For example, for a linear regression model as explained in b), draw from a distribution. This draw relates to the estimated counterfactual . The counterfactual estimates can be obtained in the same way.

Steps 1 to 3 produce a counterfactual data set under . Thus, can be easily calculated from this data set.

Confidence intervals are then created by means of bootstrapping.

3.3. The sequential g-formula

The g-formula integrates out time-dependent confounders, which are affected by prior treatment, with respect to their post intervention distribution. This can be interpreted as a standardization with respect to these confounders. The sequential g-formula, introduced below, shares the idea of standardization in the sense that one sequentially marginalizes the distribution with respect to L given the intervention rule of interest [21, 23]. It is just a re-expression of the traditional g-formula where integration with respect to L is not needed [9, 13]. The sequential g-formula is also known as the sequential g-computation estimator [35] or the iterated conditional expectation estimator [19]. In general, using the iterative conditional expectation rule (together with the above stated assumptions of sequential conditional exchangeability, consistency, positivity, time ordering → → → Yt → At → Ct → St, t = 1,…,T, Yt ∈ Lt for t < T and At = {At, Ct, St}) it holds that

| (3) |

3.3.1. Practical implementation of the sequential g-formula:

using equation (3) the target parameter can be estimated as follows:

For t = T, …, 1:

Use an appropriate model to estimate . The model is fitted on all subjects that are uncensored and alive (until t – 1). Note that the outcome refers to the measured outcome for t = T and to the prediction (of the conditional outcome) from step 2 (of iteration t – 1) if t < T.

Now, plug in based on rule and use the regression model from step 1 to predict Yt at time t, which we denote as .

Then:

-

3.

The estimate is obtained by calculating the mean of the predicted outcome from step 2 (where t = 1).

-

4.

Confidence intervals can be obtained using bootstrapping (or equation (5) below, which however may not yield nominal coverage).

Appendix B, which illustrates implementation details for the LTMLE estimator from Section 3.4, can also be seen as a guideline to implement the sequential g-formula: omitting step 3 from the code yields the desired solution.

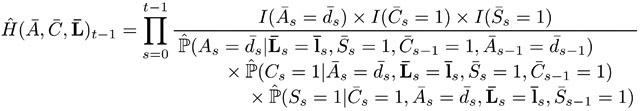

3.4. The LTMLE estimator

The LTMLE estimator, which is based on (3), was suggested by van der Laan and Gruber [23] who developed the asymptotic theory and algorithm of LTMLE. Their work builds on earlier work from Bang and Robins [21] who suggested a semi-parametric estimator that is defined through parametric regression models. For our target parameter ψT the LTMLE framework can be applied as follows: estimate ψT with the sequential g-formula, but with an additional targeted step for each t which enables doubly robust inference with respect to ψT, the quantity of interest.

3.4.1. Practical implementation of the LTMLE estimator:

For t = T, …, 1:

Use an appropriate regression model to estimate . The model is fitted on all subjects that are uncensored and alive (until t – 1). Note that the outcome refers to the measured outcome for t = T and to the prediction (of the conditional outcome) from step 3d (of iteration t – 1) if t < T. (“Q0 model”)

Now, plug in based on rule and use the regression model from step 1 to predict the outcome at time t, i.e. .

- To improve estimation with respect to the target parameter ψt update the initial estimate of step 2 by means of the following regression:

- the outcome refers again to the measured outcome for t = T and to the prediction from item 3d (of iteration t – 1) if t < T.

- the offset is the original predicted outcome from step 2 (iteration t).

-

the estimated “clever covariate” refers to the cumulative product of inverse treatment and censoring probabilities:

(4) Before (4) can be calculated the treatment, censoring and survival models in the denominator of (4) need to be fitted first. If it is assumed that St follows directly after Ct, then it is an option to assume and model only one censoring process per time point. We follow this approach in the data analysis for computational convenience (Appendix B). -

Predict the (updated) outcome, , based on the model defined through 3a, 3b, and 3c.This model contains no intercept and is fitted and evaluated for all subjects that are uncensored, alive (until t – 1), and follow treatment rule (“Q1 model”). Alternatively, the same model can be fitted with as a weight rather than a covariate [9, 19]. In this case an intercept is required. We follow the latter approach in our implementations of Section 4 and 6.

Then:

-

4.

The estimate is obtained by calculating the mean of the predicted outcome from step 3d (where t = 1).

-

5.

Confidence intervals can, for example, be obtained using the vector of the estimated influence curve [23] of ψT, which can be written as

| (5) |

where at time s = T is YT. An asymptotically normal 95% confidence interval is then given by

It has been shown that using the five steps above yields a doubly robust estimator of ψT [21]. Doubly robust means that the estimator is consistent as long as either the outcome model[s] (step 1) or the treatment model[s] (step 3c) are estimated consistently. If both are estimated consistently at reasonable rates, the estimator is asymptotically efficient. This is essentially because step 3, in particular the construction of the covariate in step 3c, guarantees that the estimating equation corresponding to the efficient influence curve is solved, which in turn yields desirable (asymptotic) inferential properties. The interested reader is referred to the appendix of van der Laan and Rose (2011) [24], or Schnitzer and Cefalu [36], for details on how the influence curve relates to inference in targeted maximum likelihood estimation.

3.5. Inferential considerations for the three presented estimators

Each of the above introduced estimators requires the estimation of conditional expectations which can be utilized with (parametric) regression models. Under the assumption that they are correctly specified this approach is valid. In a complex longitudinal setting such as ours, model mis-specification is a concern, and may yield a biased estimate of ψT.

An alternative to using regression models would be (supervised) machine learning, which is a loose term in the statistical literature referring to both traditional and modern estimation techniques, such as semi-parametric regression models, shrinkage estimators, boosting, random forests, support vector machines, and others. For a given data set, and an a priori specified set of estimation techniques, the method minimizing the expected prediction error (as estimated by k-fold cross validation) can be chosen. As it is not clear beforehand which method this is, and whether a combination of learners might perform even better than a single one, one may use super learning. Super learning, which is also known as stacking [37], means considering a set of prediction algorithms (“learners”); Instead of choosing the algorithm with the smallest cross validation error, super learning chooses a weighted linear combination of different algorithms, that is the weighted combination which minimizes the k-fold cross validation error (for a given loss function, e.g. quadratic loss). This combination is linear, the weights are non-negative and sum up to one. The loss can be easily minimized with either quadratic programming or a non-negative least squares estimation approach, and is implemented in the R-package SuperLearner [38]. It can be shown that this weighted combination will perform asymptotically at least as well as the best algorithm, if not better [39]. If none of the specified algorithms is a correctly specified parametric model, then the super learner will perform asymptotically as well as the oracle selector (which chooses the best weighted combination in the library), otherwise it will achieve an almost parametric rate of convergence (and will therefore perform reasonably well). For all methods a major advantage of using super learning (rather than a single regression model) is that the probability of model mis-specification is reduced because distributional assumptions are relaxed and complex multivariate relationships can potentially be represented by the weighted combination of (potentially simple) learners. However, i) good prediction algorithms may still yield biased model estimates of the conditional expectations (in exchange for reduced variance) and ii) cross validation evaluates the expected prediction error with respect to the global fit of the conditional expectation, and not the scalar parameter ψT. TMLE incorporates the targeting step towards ψT and can thus use super learning, whereas the (sequential) g-formula may not necessarily benefit from it.

The successful use of super learning requires a good and broad selection of prediction algorithms, and the ability to estimate these on the data. This may be challenging for longitudinal data with many time points and small sample size, as in our data. Section 5.3 gives a thorough discussion on the challenges and appropriate use of machine learning for data setups like ours.

4. Data Analysis

4.1. Study setting, data and DAG

Our analysis includes data from 16 HIV treatment cohorts from Cote d’Ivoire, Burkina Faso, Ghana, Senegal, Togo, South Africa, Malawi, and Zimbabwe. All cohorts are part of the International epidemiology Databases to Evaluate AIDS (IeDEA) cohort collaboration, from both West Africa and Southern Africa [40, 41]. HIV-positive children aged 12-59 months at enrolment, who acquired the virus before or at birth or during breast feeding, were included. To be eligible for the analysis, children had to have at least one visit before ART initiation, complete data at enrollment (baseline), and one follow-up visit. In total, 2604 children were included in the analysis. We evaluated their data at t* =0, 1, 3, 6, 9, …, 30 months after enrolment respectively. The follow-up time points refer to the intervals (0, 1.5), [1.5, 4.5), [4.5, 7.5), [7.5, 10.5), [10.5, 13.5), [13.5, 16.5), [16.5, 19.5), [19.5, 22.5), [22.5, 25.5), [25.5, 28.5), [28.5, 31.5) months. After 30 months of follow-up 1421 children were still alive and in care. Follow-up measurements, if available, refer to measurements closest to the middle of the interval. In our data Yt* refers to height for age z-score (HAZ) at time t* (i.e. measured during the interval (t* – 1, t*]). The binary treatment variable At*, refers to whether ART was taken at time t* or not. Similarly, Ct*, and St*, describe whether a patient was censored (administratively or due to loss to follow-up), or died, at time t* respectively. Children were defined as being lost to follow-up if, at the time of database closure, they had not had contact with their health care facility for at least 9 months since their last recorded visit. Time-dependent confounders affected by prior treatment refer to which are CD4 count, CD4%, weight for age z-score (WAZ) which is a proxy for WHO stage [42], i.e. a disease progression measure ranging from stage 1 (asymptomatic) to stage 4 (AIDS), and , t* < 30. Similarly, we write , , , for indicator variables which specify if Ls has been measured at time t* or not. They are needed to facilitate estimation step 3 for the g-formula because we don’t want to intervene on measurement frequency but instead model irregular measurements as in reality. L0 refers to baseline values of CD4 count, CD4%, WAZ, HAZ as well as sex, age, year of treatment initiation and region. Secondary outcome variables are Y6, Y12, Y24.

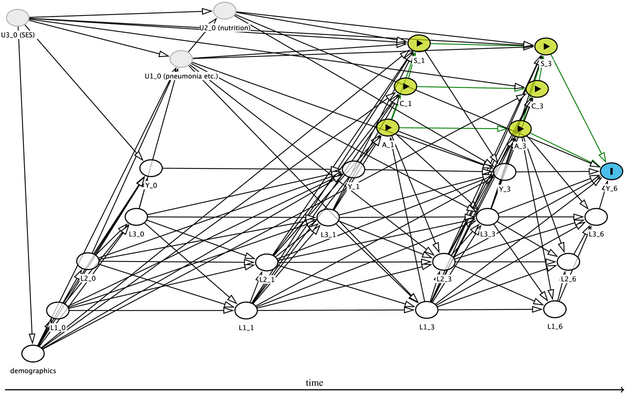

Our assumptions about the structural relationships of these variables are summarized in the DAG in Figure 1. It is known that (most recent) CD4 count and CD4%, as well as WHO stage (approximated by WAZ [42]), i.e. Lt* influence a clinician’s decision about treatment assignment, affect the outcome and are affected by prior treatment. This is because previous HIV treatment guidelines have recommended using these disease severity markers to decide whether to prescribe ART or not [34]. Both the (sequential) g-formula and LTMLE can adjust for the type of confounding induced by Lt*. A child’s growth depends on various factors, including ART [29], disease severity (L) [43], and unmeasured variables such as nutrition and socio-economic status (SES). It seems appropriate to assume that mortality depends predominantly on disease severity (and demographics) while the censoring process may depend on a multitude of factors. The time-ordering of the variables is based on the considerations of Section 5.2.

Figure 1.

DAG containing the structural assumptions about the data generating process. The target quantity is ψ6, and relates to Y6 which is represented by the I node. Intervention nodes (here: A1, A3, C1, C3, S1, S3) are represented by yellow nodes and the ▶ symbol. Measured confounders are white, and unmeasured variables are grey. Causal paths for the intervention to the outcome are shown by green arrows.

4.2. Target parameter and interventions

We consider the mean HAZ at 30 months, under no censoring, for a given treatment rule , j = 1,…, 4, to be the main quantity of interest, that is . Secondary quantities are ψ6, ψ12, and ψ24.

This target parameter is evaluated for the following four interventions, two of them static, two dynamic:

The first intervention () means immediate treatment initiation, the last one () means no treatment initiation, and interventions two and three (, ) mean deferring treatment until a particular CD4 threshold is reached. All interventions allow no censoring and death, and assume that a child stays on treatment once initiated.

Remark: Note that the above target parameter is different from the quantities estimated in other analyses which are concerned with estimation of the effect of timing on treatment initiation on growth. These analyses evaluate the mean HAZ under a particular treatment strategy conditional on either the observed subset of survivors at time t* – 1 (i.e. St*–1 = 1, [44]) or the subset of survivors that would have survived under the respective strategy (i.e. , [43, 34]). Conditioning on subsets of survivors is based on the rationale that one is typically interested in the benefits of treatment initiation beyond pure survival, i.e. general child development, including growth, among those children who are alive and can potentially be followed up. Unfortunately, conditioning on observed or counterfactual survival will lead to target parameters that can likely not be identified because conditional exchangeability may be violated.

For example, by conditioning on the subset of observed survivors several paths, including At → St → U → Yt+1 would be opened as unmeasured variables U, such as past pneumonia or tuberculosis episodes, could potentially affect both survival as well as the outcome, i.e. one would face collider bias (see Figure 1). Moreover, the population evaluated changes for each t*, and also for each if one conditions on ; this makes comparisons difficult. Future research may evaluate alternative effect measures, such as the survivor average causal effect, which is partially identifiable and compares the outcome under two different treatment strategies, but only among the subpopulation that would have survived irrespective of which treatment strategy they were assigned to [45, 46].

4.3. Estimation strategies

We estimate the target quantities Ψ30, ψ24, ψ12 and ψ6 based on four approaches:

using the g-formula as described in (1) with a manual implementation which includes prior clinical prior knowledge (i.e. using only additive regression models),

using the sequential g-formula as described in (3), using the ltmle package in R [10, 47] with a data adaptive estimation approach,

using TMLE as described in Section 3.4 with a manual implementation which includes prior clinical knowledge (i.e. using only additive regression models),

using TMLE as described in Section 3.4, using the ltmle package in R [10, 47] with a data adaptive estimation approach.

This choice essentially compares using standard software approaches (ltmle, existing and applicable super learning wrappers) with a manual implementation which uses only one learner (additive models), however one which is complex, not implemented in a wrapper, and incorporates prior clinical knowledge. Section 5.4 provides more details on the choice of these estimators.

4.3.1. Model specification:

The implementation details for both LTMLE estimators are given in appendices A and B, the supplementary material, and are discussed further in Section 5. Briefly, for the automated implementations, i.e. estimators (ii) and (iv), we use the following specifications: first, we do not specify the regression models for outcome, treatment, and censoring parametrically, but use super learning instead. The candidate learners were the arithmetic mean, (generalized linear) regression models with all main terms [GLM], GLMs based on an EM-algorithm-Bayesian model fitting, GLMs chosen by stepwise variable selection with Akaikes Information Criterion [AIC], GLMs containing interaction terms, as well as additive models – i.e. those learners which could be successfully fitted on our data.

Our manual implementation of the g-formula followed Schomaker et al. [43, Appendix]. Briefly, we used semi-parametric additive linear and logistic regression models to model the conditional densities in (1) and (2); To allow for flexible disease progression depending on how sick children are when they present at their first visit, interactions of baseline characteristics (represented in categories) with all other variables were added. Depending on the functional form of the covariates these interactions were either linear or non-linear (using penalized splines). Model selection by means of generalized cross validation was used to improve the bias-variance tradeoff in these models. All models implicitly assume that time-dependent risk factors measured before or on time t* – 2 do not predict the respective outcome. Note that the same approach of model building was used for our manual LTMLE estimator (iii). Sections 5.3 and 5.4 give a thorough discussion of the issue of model choice.

4.3.2. LTMLE implementation:

Both the automated and the manual implementations of the LTMLE algorithm do not use linear regression models to model the outcome, HAZ; rather, the data are transformed to lie between [0, 1] and a Quasi-binomial model is used [48]. This is the way the ltmle package deals with continuous outcomes with the aim of improving stability, particularly in the context of (near) positivity violations [49].

The clever covariate as defined in step 3 in Section 3.4 is included in the regression model not as a covariate, but as a weight [9]. This is another valid targeted maximum likelihood estimator, based on a refined loss function (and parametric submodel), and can improve stability [50, 19]. This approach is again part of the ltmle implementation.

Moreover, the denominator of the clever covariate (4) is bounded in the sense that values < g are truncated at g. The automated implementation uses the default of g = 0.01, the manual implementation a stricter version of g = 0.05; see Section 5.6 for a thorough discussion on truncation of the clever covariate.

4.3.3. Other details:

Our automated implementation of the sequential g-formula, i.e. estimator (ii), follows the automated implementation of LTMLE, except that step 3 in Section 3.4 is not part of the inference procedure.

All four methods use the square root of CD4 count to work with a more symmetric variable.

4.4. Results

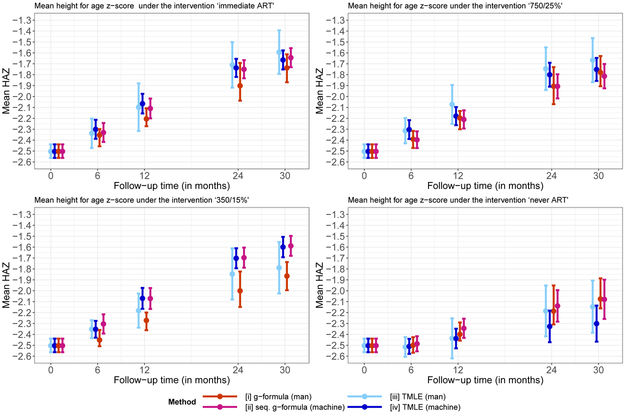

Figure 2 summarizes the results of the analyses, i.e the point and interval estimates for ψ6, ψ12, ψ24, ψ30.

Figure 2.

Mean HAZ for different interventions, time points, and methods.

For immediate ART initiation, and the dynamic intervention of starting ART if either CD4 count < 750 or CD4% < 25%, the point estimates of all estimators were generally similar (Figure 2, top panel). Deviations after 30 months of follow-up were not much greater than 0.1.

For the second dynamic intervention (Figure 2, bottom left) estimates from the manual implementations (i.e. estimators (i) and (iii)) where generally substantially lower than the estimates from the automated implementations. One of the most interesting results can be seen for the intervention “never ART”: here, automated estimation via LTMLE deviated by approximately −0.2 from all other methods (Figure 2, bottom right).

For all results confidence intervals were widest for our manual implementation of LTMLE, followed by our manual implementation of the g-formula. There is a tendency for all estimators to suggest better outcomes with earlier treatment initiation; however, the automated implementations are less clear about this for the intervention that uses the 350/15% threshold. Also, the automated TMLE procedure suggests more severe consequences of withholding antiretroviral treatment (“never ART”) than the other estimators.

In summary, the four methods produce slightly different results. The manual versions of the g-formula and LTMLE suggest that earlier treatment leads to better height gain. Our automated LTMLE procedure deviates from these results by considering the 350/15% intervention more positive and the never ART rule more negative.

Interestingly, the automated implementations of the sequential g-formula and LTMLE hardly differ for the first three interventions of interest, which is reassuring, but differ substantially for the fourth intervention of no antiretroviral treatment initiation. This could relate to positivity violations, as outlined in Section 5.6 and the simulation studies.

Our manual implementations of the g-formula and TMLE suggest broadly similar growth differences between the different intervention strategies as Schomaker et al. [43], who implemented a similar analysis on a much bigger dataset using the g-formula with good performance under the natural course scenario (see also Section 5.5), but with an emphasis on mortality estimates.

For all interventions and time points, super learning made extensive use of additive models in the Q-models (weights between 0.44 and 0.85), and moderate use in the g-models (weights between 0.18 and 0.65). It is worth pointing out that for the g-models, the arithmetic mean, which is a simple learner, was important with average weights between 0.15 and 0.61.

5. Practical Challenges in the Data Analysis and Diagnostics

Below, in Sections 5.1-5.4, we highlight the general practical challenges of our data analysis which are relevant to other longitudinal settings as well. Sections 5.5 and 5.6 discuss possible diagnostic approaches for both the g-formula and LTMLE.

5.1. Challenge: long follow-up and declining sample size

All 4 estimators under consideration require regression models (or data adaptive approaches) to be fitted on all subjects who are alive and uncensored at time t. It is evident that the longer the follow-up, the fewer subjects that can be used to fit the respective models, e.g. after 30 months of follow-up only 1421 patients remain.

As we discuss below, this can be a problem as all estimators rely on correct model specifications and with smaller sample sizes it may not be possible to use more complicated methods, such as additive models with non-linear interactions or advanced machine learning algorithms. Moreover, this problem can potentially become even more severe in step 3 of the LTMLE algorithm presented in Section 3.4.1: here, the models are estimated on the subset of patients alive, uncensored and following treatment .

In our data, after 30 months of follow-up, we have 371 (14.2%) patients alive and uncensored following rule , 396 (15.2%) patients alive and uncensored following rule , 505 (19.4%) patients alive and uncensored following rule , and 292 (11.2%) patients alive and uncensored following rule . This is the available sample size for the LTMLE estimator during its updating step. Note that after 30 months of follow-up we have evaluated 3 confounders, the outcome, as well as treatment at 11 different time points. Together with the baseline confounders this makes about 60 covariates which are potentially relevant to the respective regression models at time 30. With adding non-linear relationships, for example via penalized splines, the number of parameters that needs to be estimated can therefore be close to n. The consequence is that complex estimation procedures, which may be needed for data adaptive approaches, may either fail or yield unstable solutions.

5.2. Challenge: making structural temporal assumptions

In general, it is required to make assumptions about the time-ordering of the data. For our data analysis the time-ordering assumptions can be visualized as follows:

Note that L0 includes Y0, and that we do not explicitly specify A0, C0, and S0 here, as by definition everyone is uncensored, untreated and alive at t* = 0. Equation (2) implies that the ordering of the time-dependent confounders Lt* can possibly affect the results of the g-formula: the confounder distribution which comes last, i.e the one referring to , can be informed by the other confounders measured at the same time point, , but not vice versa. While all factorizations of Lt* are valid, they may yield somewhat different answers in a finite sample. It is clear that g-formula analyses benefit from sensitivity analyses with respect to the ordering of the time-dependent confounders. However, this may be very time-consuming or even unfeasible given the additional burden of bootstrapping. For LTMLE estimators the ordering of the Ls does not play a role (because no models for are required), and neither is bootstrapping needed – which shows the method’s benefits in this regard. Nevertheless, the assumed time ordering still plays a role for LTMLE, as the modeled iterated outcome and treatment and censoring mechanisms may only include those variables which are assumed to be measured prior to the respective variable.

Given the above considerations, the obvious choice for L3 in our data is WAZ, as it is a strong proxy for disease severity and physical development [42]. At each time point WAZ can be informed by CD4 count and CD4% at the same time point, but not vice versa. Since, for children, CD4% is a more accurate measure of disease severity than CD4 count it makes sense to treat the former as L2 and the latter as L1. Treatment decisions (A) can potentially be informed by laboratory measurements (L1, L2) and clinic measurements (L3, Y) which we have acknowledged in our time ordering. Since death and censoring can happen until the end of an interval, and a patient may still experience measurements before these events, we assume S and C to be measured at the end of each interval.

5.3. Challenge: machine learning in the light of long follow-up and declining sample size

The successful use of super learning for LTMLE estimators has been shown repeatedly in the literature [11,24]. However, the problem for our motivating example is that particularly for longer follow-up times most of the available machine learning algorithms failed. In fact, we were only successful in using the learners described in Section 4. All other algorithms provided by the SuperLearner package failed. One could argue that under such circumstances the use of super learning is limited, unless one is able to and willing to write custom-made wrappers.

Reasons for failure were manifold: (i) memory problems because of the use of complex fitting algorithms in the context of large covariate data (ii) non-convergence of fitting algorithms because of p ≈ n [as motivated in Section 5.1] (iii) sparse covariate data (iv) un-identified problems of the fitting algorithm (v) inability to use existing wrappers for Quasi-binomial models [48], which are used in ltmle for bounded and transformed continuous outcomes and (vi) others. Note that we need predictions from 3 prediction models/algorithms (one in step 1 and two in step 3c) for 11 time points, and for 4 interventions. Each time we work with a different subset of data with different covariate combinations. If problems occur for one model, potentially because of the specific sub-sample of data, super learning can fail as a whole. To tackle this problem one could pool models over time points [16], though this approach is not implemented in ltmle.

5.4. Challenge: incorporating prior clinical knowledge using non-linear interactions

A clinical hypothesis in our data is that patients who are sicker at presentation will have a different disease trajectory from patients who are healthier at presentation. Children who arrive with severe disease will not be able to recover as fast as healthy children and will also not be able to reach the same CD4 count levels [43]. A model describing this relationship could be of the form

or similar, where g(·) represents a link function (i.e. the identity link if Y refers to HAZ), Xβ refers to the parametric part of the model (e.g. binary baseline variables), and fj are unspecified general smoothing functions. The above model is a generalized additive model in its wider sense [51]. No matter whether using the g-formula or an LTMLE estimator it is important to deal with many multiple non-linear interactions at the same time. The R library mgcv can fit models as specified above, and this is the reason we use them in our manual implementations, i.e. estimators (i) and (iii). Using additive models in this context requires stepwise manual inclusion of non-linear interactions, if they improve the prediction error in terms of generalized cross validation, because inclusion of all possible interactions will make the fitting process too complex to converge and also results in models with unacceptably high variance.

Note that the automated estimation procedures (ii) and (iv) include the gam wrapper provided by the SuperLearner package. However, this wrapper doesn’t use the mgcv implementation described above but another one from package gam. While the latter has advantages in that it uses the simple backfitting algorithm [52] for estimation, and could therefore be regarded as non-parametric rather than semi-parametric, it lacks a flexible approach of dealing with non-linear interactions, i.e. fitting smooth functions from a first variable that vary with respect to the categories of a second variable. Moreover, it does not use automated smoothing parameter selection using cross validation but rather needs pre-specified knowledge of the degrees of freedom of the model. Also, the default wrapper implicitly assumes no (non-linear) interactions. This fact can be irrelevant for super learning if enough learners are available, such that the combined weighted super learner contains the predictive information related to the interactions; however, if the number of (pre-implemented) learners that can be fitted is limited, as in our data analysis, the question arises whether it may be preferable to proceed with a manual implementation rather than a restricted automated one. This question will be answered later in this paper. Of course, it is also possible for the user to write their own wrapper function, based on mgcv, with model selection with respect to non-linear interactions, though this would be rather computationally intensive.

5.5. Diagnostics for the parametric g-formula: the natural course scenario

Applying the g-formula assumes that the densities in (1) are estimated consistently. A necessary, but not sufficient, condition for correct specification is that the g-formula re-produces the raw data (at least approximately) if the treatment rule relates to the real treatment assignment process in the data. More specifically, the “natural course scenario” evaluates the stochastic treatment rule where treatment assignment probabilities are estimated from and censoring and death is not intervened upon but modeled via , and . All models are fitted on those alive and uncensored. Then, (and also ) under this stochastic treatment rule, and estimated by the g-formula as described in Section 3.2.1, can be compared to the mean HAZ at the end of follow-up in the data, and should approximately match. Reporting the natural course scenario is a standard procedure in g-formula analyses [53, 43, 32]. We note however that the approach used in the natural course scenario evaluates a target quantity conditional on survival, as opposed to the target quantity defined in Section 3 where survival is intervened upon; thus caution should be exercised when interpreting the below results.

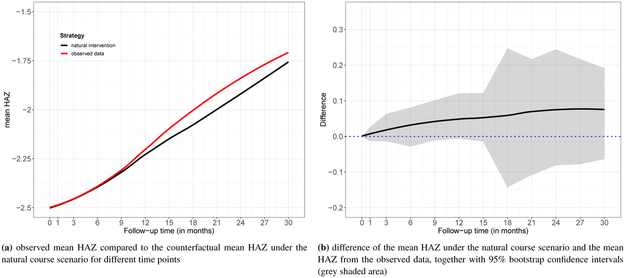

Figure 3 shows the natural course scenario for our data. The mean HAZ at the end of follow-up for both the raw data (“observed data”) and the data produced by the g-formula under the natural course scenario (“natural intervention”) are similar up to 1 year of follow-up, after which some deviation can be observed. The 95% confidence interval of the difference (Figure 3b) suggests that the difference may not be different from 0, but some caution with respect to model mis-specification for our manual implementations remains.

Figure 3.

Diagnostic for the g-formula: natural course scenario

5.6. Diagnostics for LTMLE: inverse probabilities and truncation to detect positivity problems

As opposed to the g-formula, LTMLE estimators require models for the treatment and censoring mechanism. This mechanism is reflected in the clever covariate used in step 3c of the LTMLE algorithm. While the LTMLE estimator is less sensitive than traditional inverse probability of treatment estimators [54] it nevertheless includes inverse probability weights in its fitting process which may lead to more variable estimates when the weights become more variable [36].

For our motivating data example we have 20 treatment models relating to both the treatment and censoring mechanism of the time points. Ideally the fitted cumulative probabilities are not too small because small estimated probabilities may indicate near-positivity violations; i.e. if there are covariate strata where the (estimated) probability of (not) receiving treatment is close to zero, for example when an extremely sick patient has not been treated although this would have been expected according to the data of similar patients. Practical positivity violations occur easily when dealing with many continuous confounders as in our data; but if the data is still “rich” enough both the g-formula and the LTMLE estimator may be able to deal with it and extrapolate based on the available data and estimated models [54].

One way to deal with small probabilities is to truncate them at a lower bound g. This reduces variability and improves stability, however at the cost of bias if the truncation is too severe [49].

Table 1 summarizes mean clever covariates (i.e. cumulative inverse probabilities until 30 months of follow-up) for different model specifications and interventions. Their mean should be about 1. More importantly, the percentage of truncated probabilities (here, [cumulative] probabilities < 0.01 are truncated), should be as small as possible.

Table 1.

Mean clever covariate for LTMLE estimators (at t* = 30) corresponding to different interventions and model specifications. The percentage of truncated values refers to the proportion of probabilities < 1%.

| %truncated | mean | clever | covariate | ||||

|---|---|---|---|---|---|---|---|

| Intervention | N | SL | GAM | GLM | SL | GAM | GLM |

| no ART | 292 | 0.7 | 68.5 | 100 | 0.97 | 8.96 | 11.21 |

| 350/15% | 505 | 0 | 32.9 | 45.9 | 1.21 | 9.02 | 9.32 |

| 750/25% | 396 | 0.3 | 18.2 | 11.6 | 0.88 | 4.96 | 2.28 |

| immediate ART | 371 | 0 | 15.6 | 0 | 0.83 | 4.30 | 0.53 |

GLM’s which do not use any transformations or interactions and include covariate history up to time t – 2 yield highly variable results, i.e. the mean clever covariate ranges from 0.53 to 11.21 and between 0% and 100% of truncated observations. Our manual implementation of TMLE [estimator (iii)], which uses additive models with non-linear interactions (“GAM”), leads to unstable weights with means that are far too high (> 4) and many small probabilities which have been truncated. Using super learning (“SL”) yields good mean clever covariates, but still 0.7% truncated values for the intervention of ‘no ART’.

Table 2 lists point and confidence interval estimates of LTMLE estimators (t = 30), as well as the corresponding mean clever covariate and truncation percentages, for different model specifications for the potentially problematic intervention .

Table 2.

Point and confidence interval estimates of LTMLE estimators (t* = 30), as well as the corresponding mean clever covariate, for different model specifications and truncation definitions. Results are provided for the intervention “no ART”.

| Estimates | mean CC | % truncated | ||||

|---|---|---|---|---|---|---|

| LTMLE | g=0.01 | g=0.05 | g=0.01 | g=0.05 | g=0.01 | g=0.05 |

| GLM | −2.05 (−3.53; −0.57) | −2.05 (−2.36; −1.75) | 11.21 | 2.24 | 100 | 100 |

| GAM | −2.19 (−3.19; −1.18) | −2.15 (−2.38; −1.91) | 8.96 | 2.14 | 68.5 | 90.8 |

| SL | −2.30 (−2.47; −2.14) | −2.25 (−2.35; −2.14) | 0.97 | 0.76 | 0.7 | 6.2 |

It can be seen that model specification has a considerable impact on the results. While truncation doesn’t affect the point estimates much, it is worth noting that an improved mean clever covariate comes at the cost of truncation levels way above 5%, even with super learning. This raises the question whether positivity violations are so severe that one wouldn’t trust any of the provided estimates for the fourth intervention of interest. Interestingly, our manual implementation of LTMLE (using additive models) yields estimates similar to the g-formula – in spite of the poor weights. This can be explained as follows: the cumulative probabilities are so small that most of them get truncated and the clever covariate can therefore hardly update the initial g-formula estimate in step 3 of the TMLE algorithm.

6. Simulation Study

The following simulation is meant to investigate under which conditions effect identification with LTMLE may be difficult, i.e. to what degree small samples, limited sets of learners, overuse of truncation and intervention definitions may affect its performance. Based on our data analysis and the discussions in Section 5 we generate longitudinal data (t = 0, 1,…, 12) for 3 time-dependent confounders, as well as baseline data for 7 variables, using structural equations and the R-package simcausal [55]. Baseline data includes region, sex, age, CD4 count, CD4%, WAZ and HAZ respectively (L0). Follow-up data refers to CD4 count, CD4%, WAZ and HAZ (, , , Yt), as well as antiretroviral treatment (At) and censoring (Ct). For simplicity, no deaths are assumed. The data generating mechanism contains interactions and nonlinear relationships and is described in detail in Appendix C. It leads to the following baseline values: region A = 75.5%; male sex = 51.2%; mean age = 3.0 years; mean CD4 count = 672.5 cells/microlitre; mean CD4% = 15.5%; mean WAZ = −1.5; mean HAZ = −2.5. At t = 12 the mean of CD4 count, CD4%, WAZ and HAZ are 1092 cells/microlitre, 27.2%, −0.8, −1.5 respectively. The target quantities ψ,j,t are defined as the expected value of Y at time t, t ∈ {6, 12}, under no censoring, for a given treatment rule , where

The true target parameters are ψ1,6, = −1.804, ψ2,6 = −1.850, ψ3,6 = −2.028, ψ4,6 = −2.452, ψ1,12 = −1.029, ψ2,12 = −1.119, ψ3,12 = −1.466, and ψ4,12 = −2.447.

For simulation runs we estimate the above parameters using ltmle under the following specifications: n ∈ {200, 600, 1000}; truncation levels g ∈ {0.01, 0.025, 0.04} and three different sets of learners. Learner 1 consists of simple GLM’s, learner 2 adds both the arithmetic mean and GLM’s with interactions to the set of learners, and learner 3 contains additionally GLM’s selected by AIC, standard additive models (no interactions, package gam), as well as GLM’s based on an EM-algorithm-Bayesian model fitting. For computational feasibility, all fitted models included only variables measured at time t, t – 1, and 0; in line with the data generating process.

The average useable size of the sample at the end of follow-up (i.e. excluding censored patients and only including those who followed the respective treatment rule) varied between 39 (intervention ) and 79 (intervention ) for a baseline sample size of 200; between 116 (intervention ) and 238 (intervention ) for a baseline sample size of 600; and between 190 (intervention ) and 396 (intervention ) for a baseline sample size of 1000.

After 12 months of follow-up, for all interventions super learning (for learner 3) made substantial use of additive models when estimating the Q-model: the respective super learner weight, averaged over time and simulation runs, was always greater than 0.73. The g-models made particular use of GLM’s selected by AIC.

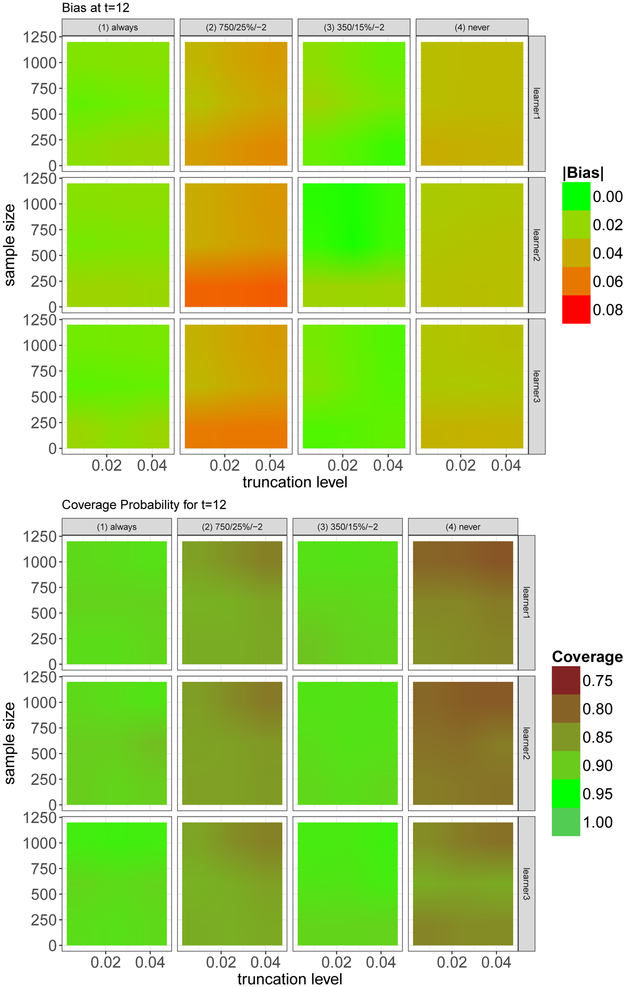

Figure 4 shows the estimated bias and coverage probability, for different truncation levels, interventions, learner sets, and sample sizes.

Figure 4.

Estimated bias and coverage probability, at t = 12, for different truncation levels, interventions, learner sets, and sample sizes.

One can see that the bias varies substantially with respect to the chosen intervention. Interestingly the interventions with the biggest usable sample size and with the lowest usable sample size, and , have the highest bias, showing that neither sample size nor type of intervention (static/dynamic) offers a reasonable guideline to judge the quality of the estimated target quantity. Interventions with high bias typically also have a poorer coverage. However, somewhat unexpectedly, for increasing sample size, coverage often decreases for the second and fourth intervention.

In this particular simulation setup the level of truncation hardly affects the results. There are gains with a larger sample size, as one would expect; it is however worth pointing out that even with small sample sizes results are relatively stable and good.

Of course, learner 3, with the biggest set of learners, typically performs best. Surprisingly the other two learners also yield good results – which is encouraging given that the data-generating process contains interactions and non-linear associations as well, and these effects are not specifically modeled by learner set 1 and learner set 2.

Since in the simulation the data-generating process is known, we can estimate , i.e. the probability of continuing to receive treatment according to the assigned treatment rule, given that a patient has received treatment so far and irrespective of the covariate history. That is, we can look at the indicator variable whether a patient has been treated according to the rule of interest at each time point t, or not, given the past covariates and conditional on those who were treated according to the rule at t – 1. This approach measures the “data support” for a given rule of interest. If a substantial proportion of subjects have very small cumulative probabilities, then this could be a sign of positivity violations. Table 3 lists the summaries of the cumulative probabilities.

Table 3.

Proportion of cumulative probabilities of continuing to receive treatment according to the assigned treatment rule (given that this has been done so far and irrespective of the covariate history) which are smaller than 0.025. The numbers are calculated based on the data-generating process in the simulation, and are estimated from the data set as well, and for both at the end of follow-up.

| simulation | 0.3% | 1.0% | 0.6% | 1.5% |

| data | 0% | 2.1% | 0.5% | 3.0% |

The table clearly shows that the highest proportion of small cumulative probabilities is found for the second and fourth intervention, i.e. those interventions which performed worst in the simulations. This highlights the fact that interventions need data support to meet the practical positivity assumption. In general this can also be estimated in a data analysis, for a given estimation technique. When using a standard logistic regression model we obtain the highest proportion of small cumulative probabilities for the fourth intervention, i.e. the intervention that was most volatile in the data analysis. We suggest that in addition to summaries of the clever covariates and percentage of truncated values, the data support for each intervention, as presented in Table 3, should also serve as a diagnostic tool in LTMLE analyses.

7. Conclusions

To our knowledge this is one of the first successful implementations of LTMLE for a data set with > 10 follow-up times and at least 3 time-dependent confounders (which are affected by prior treatment). Moreover, our data analysis was based on a realistic data set relevant to public health policy making, and was the first comparison between the (parametric) g-formula and LTMLE in such a complex context. Large longitudinal data sets require specific care when using LTMLE: we have shown that long follow-up may come with sample sizes that are small compared to the number of parameters that need to be fitted for machine learning algorithms, including semi-parametric regression models. This can yield instabilities, memory problems and identifiability problems that need to be addressed.

We have argued that there is practically no way around model selection in causal inference with both the g-formula and LTMLE, despite the implicit assumption that some of the included confounders from the structural causal model are irrelevant. This is because the causal parameter of interest can be best identified with good predictions coming from an optimal bias-variance tradeoff (though super learning provides a good bias-variance tradeoff for the nuisance models and not necessarily the target parameter and estimation approaches such as collaborative TMLE [36] have been suggested). This model selection process could incorporate clinical knowledge. We have included non-linear interactions using additive models to address such hypotheses. While this may have potential benefits for the g-formula, our LTMLE estimator could not be improved by such a strategy. In fact, a small super learner, with few algorithms, led to more reliable and precise results.

Ideally large longitudinal causal analyses are best analyzed by several methods. If the results match, then this is perfect. If not, diagnostics such as clever covariate and truncation summaries for LTMLE, or the natural course scenario for the g-formula are indispensable. Our analyses have shown that neither a good natural course scenario for the g-formula nor considerations of sample size and type of intervention (static/dynamic) offers a reasonable guideline to judge the quality of the estimated target quantity; what matters is the support in the data for the intervention rule of interest: high levels of truncation and small cumulative probabilities of continuing to follow the rule of interest are typically a sign of problems, likely due to practical positivity violations or poor modeling strategies. They can be addressed by an increase of appropriate learners, even if they are simple, or – less preferably – a change in the target quantity. Whatever the methodological choices are, it remains important to consider the substantive question at hand as well; for example, in our analysis, the most conservative estimates are provided by the g-formula, and a clinician may prefer to base her/his decisions on these estimates if the diagnostics for the method are acceptable.

We conclude the following with respect to the five questions posed in the introduction:

it can be feasible to implement LTMLE in complex settings with long follow-up times, small sample size, multiple time-dependent confounders, and dynamic interventions.

as seen in both the data analysis and the simulations, LTMLE can be successful even when confronted with limited modeling options, for example by a limited number of applicable learners when using super learning. Nevertheless, this may be different in other study settings; and it would be beneficial to have more learners available that work with existing software packages and are computationally feasible in settings with long follow-up and therefore multiple variables. We have made a selection of such learners available [56]; and researchers with advanced programming capacities are advised to implement their own custom-made wrappers, tailored to their own analysis of interest, if possible.

in our setting, we couldn’t find strong evidence that a flexible additive model, informed by prior clinical knowledge, possibly in conjunction with competing estimators such as the g-formula, may perform better than an automated LTMLE procedure with a limited set of learners.

in the settings studied, a reduced sample size or truncation of inverse probabilities didn’t have a major impact on the results; but it was clear that different interventions may have different support in the data and that the success of estimators such as LTMLE also varied with respect to the chosen intervention. This highlights the need to develop more diagnostic tools to diagnose practical positivity violations in the data. Reporting the percentage of truncated cumulative inverse probabilities, summaries of the clever covariates and the data support for each intervention (see Section 6) is highly recommended; but more research and guidance in this respect is needed.

Supplementary Material

Acknowledgements

We are immensely grateful to Maya Petersen for her constructive and helpful feedback on earlier versions of this manuscript. We appreciate the continuous help of Joshua Schwab who helped solving multiple issues related to the implementation of LTMLE, particularly in conjunction with the R package ltmle. We further thank Matthias Aßenmacher for his dedicated help in constructing the simulation setup. We also thank Lorna Renner, Shobna Sawry, Sylvie N’Gbeche, Karl-Günter Technau, Francois Eboua, Frank Tanser, Haby Sygnate-Sy, Sam Phiri, Madeleine Amorissani-Folquet, Vivian Cox, Fla Koueta, Cleophas Chimbete, Annette Lawson-Evi, Janet Giddy, Clarisse Amani-Bosse, and Robin Wood for sharing their data with us. Computations were performed using facilities provided by the University of Cape Town’s ICTS High Performance Computing team. We would also like to highlight the support of the International epidemiologic Databases to Evaluate AIDS Southern Africa (IeDEA-SA) and the West Africa Paediatric Working Group. The NIH has supported the above individuals, grant numbers U01AI069924 and U01AI069919. Miguel Angel Luque Fernandez is supported by the Spanish National Institute of Health, Carlos III Miguel Servet I Investigator Award (CP17/00206).

A. R code for automated estimation of Ψ6 with ltmle

To use the ltmle function from the package ltmle, one needs to provide the data in wide format. Below we show 5 patients of our data set, where b1, b2, b3, b4 refer to the baseline values of , CD4%, WAZ, and HAZ. Variable names ending with “.t*” refer to measurements at time t* (with t* =0, 1, 3, 6, 9,…,30). A.0 and C.0 are not needed and reported as by definition everyone is ART-naive and uncensored at baseline. The choice of the data structure in terms of the ordering of the variables is discussed in Section 5.2.

gender age_fv region2 b1 b2 b3 b4 cd4a_cf.1 cd4p_cf.1 wfa_cf.1 hfa_cf.1 1 1 4.752909 2 23.87467 15 −0.37 −0.30 23.87467 15 −0.37 −0.30 2 1 2.450377 2 26.98148 14 −0.81 −2.52 26.98148 14 −0.81 −2.52 3 1 2.318960 2 35.66511 14 −2.19 −3.13 35.66511 14 −2.19 −3.13 4 1 2.275154 2 34.46738 13 −2.13 −2.42 34.46738 13 −2.29 −2.38 5 1 3.526352 2 19.79899 26 −3.64 −2.52 19.79899 26 −3.66 −2.57 ART.1 censored.1 cd4a_cf.3 cd4p_cf.3 wfa_cf.3 hfa_cf.3 ART.3 censored.3 cd4a_cf.6 1 0 uncensored 23.87467 15 −0.50 −0.53 1 uncensored 23.87467 2 0 uncensored 26.98148 14 −1.30 −2.20 1 uncensored 26.98148 3 0 uncensored 35.66511 14 −2.54 −3.08 1 uncensored 35.66511 4 1 uncensored 34.46738 13 −2.41 −2.28 1 uncensored 36.63332 5 0 censored NA NA NA NA NA <NA> NA cd4p_cf.6 wfa_cf.6 hfa_cf.6 1 15 −0.50 −0.53 2 14 −1.36 −1.99 3 14 −2.72 −3.36 4 17 −2.41 −2.28 5 NA NA NA

Before applying the ltmle command we need to define the intervention matrix. For a static intervention, this could just be a vector such as c(0, 0), but needs to be a matrix for dynamic interventions as shown below. For illustrative purposes we use the intervention : “starting ART if either CD4 count < 750 cells/microlitre or CD4% < 25%” below. We also need to define the libraries used for the super learner, see Section 5.3 for more details.

# Define Intervention: start ART if CD4 count < 750 or CD4% < 25%

# Define Intervention: start ART if CD4 count < 750 or CD4% < 25%

cd4_750.1 <- (mydata_wide$cd4a_cf.1 < sqrt(750) ∣ mydata_wide$cd4p_cf.1 < 25)

cd4_750.3 <- (mydata_wide$cd4a_cf.3 < sqrt(750) ∣ mydata_wide$cd4p_cf.3 < 25) ∣ cd4_750.1

abar750 <- as.matrix(cbind(cd4_750.1,cd4_750.3))

abar750[is.na(abar750)] <- 0

# Define collection of estimation methods to be used by SuperLearner

mylibrary <- list(Q=c("SL.mean", "SL.glm", "SL.stepAIC", "SL.step.interaction", "SL.gam", "SL.bayesglm"),

g=c("SL.mean", "SL.glm", "SL.stepAIC", "SL.gam", "SL.bayesglm"))

Now, Ψ6 can be estimated easily as follows:

ltmle(mydata_wide[,1:23],

Anodes=c("ART.1","ART.3"),

Cnodes=c("censored.1","censored.3"),

Lnodes=c("cd4a_cf.1","cd4p_cf.1","wfa_cf.1", "cd4a_cf.3", "cd4p_cf.3", "wfa_cf.3",

"cd4a_cf.6", "cd4p_cf.6", "wfa_cf.6"),

Ynodes=c("hfa_cf.1", "hfa_cf.3", "hfa_cf.6"), Yrange=c(-15, 15),

Qform=NULL, gform=NULL, deterministic.g.function=MaintainTreatment, abar=abar750,

SL.library=mylibrary, estimate.time=T, variance.method="ic", gbounds = c(0.01, 1)))

Note that Anodes, Lnodes, Cnodes, Ynodes refer to the treatment, censoring, covariate, and outcome data respectively. By specifying a SL.library, and leaving the arguments for model formulae (Qform, gform) empty, model specification is done by super learning. Yrange=c(−15, 15) ensures that the outcome is bounded (i.e. absolute z-scores greater than 15 are regarded to be impossible).

B. R code for manual LTMLE estimation of Ψ6

The below implementation has been designed to be able to exactly reproduce the steps of the ltmle command; but to adhere to the framework and notation of Section 3 at the same time. As noted in the manuscript, we work with a transformed outcome and quasi-binomial models as ltmle does. We also add the clever covariate as weights, as ltmle does. Furthermore, we use additive models based on the package mgcv for model fitting. For comparing the manual and automated implementation, one could simply use simple GLMs (no super learning) and specify the model form in the Qform and gform arguments. The below code to truncate weights and to calculate confidence intervals is used later on in the main code.

gbounds <- function(x,bounds=c(0.05,1)){

x[x<min(bounds)] <- min(bounds)

x[x>max(bounds)] <- max(bounds)

return(x)

}

CI <- function(est, infcurv){

n <- length(infcurv)

ciwidth <- 1.959964 * sd(infcurv)/sqrt(n)

CI <- c(est-ciwidth, est+ciwidth)

return(CI)

}

library (mgcv)

To estimate ψ6 we need to do the following:

mydata_wide6 <- mydata_wide[,1:23]

attach(mydata_wide6)

# Already prepare step 3c: fit treatment and censoring models

# (Note that i) the data after censoring are defined as missing by us and thus the censoring models are

# implicitly fit on the subset of those that are uncensored until the respective previous time point and

# ii) the models contain only variables that have been selected by cross validation [see Section 4.3.1])

m1_c <- mgcv::gam(I(censored.1=="censored") ˜ age_fv + s(b2) + s(b3) + s(b4), family=binomial)

m3_c <- mgcv::gam(I(censored.3=="censored") ˜ gender + s(age_fv) + wfa_cf.1

+ s(I((hfa_cf.1 +15)/30)), family=quasibinomial)

m1_a <- mgcv::gam(ART.1 ˜ s(cd4a_cf.1) + s(cd4p_cf.1) + s(wfa_cf.1) + s(b1) + s(b2) + s(b3),

data=mydata_wide6, family=binomial)

m3_a <- mgcv::gam(ART.3 ˜ s(cd4p_cf.3) + s(wfa_cf.3) + s(cd4a_cf.1) + s(cd4p_cf.1) + s(b1) +

s(b2) + s(b3) + age_fv,

data=mydata_wide6[censored.1==1,], family=quasibinomial)

# Already prepare step 3c: define subsets of patients uncensored and following the treatment rule

# (Note that these subsets are needed to calculate the clever covariate as defined in (4))

subset1 <- censored.1=="uncensored" & ART.1==abar[,1]

subset3 <- censored.1=="uncensored" & ART.1==abar[,1] & censored.3=="uncensored" & ART.3==abar[,2]

# Still prepare data for step 3c: calculate the cumulative treatment and censoring probabilites;

# also: apply cutoff at 0.05, i.e. probabilities <0.05 are defined as 0.05 -> clever covariate <= 20

p1a <- gbounds((ART.1 == 1)*predict(m1_a, type="response", newdata=mydata_wide6) +

(ART.1 == 0)*(1 - predict(m1_a, type="response", newdata=mydata_wide6)))

p3a <- gbounds((ART.3 == 1)*predict(m3_a, type="response", newdata=mydata_wide6) +

(ART.3 == 0)*(1 - predict(m3_a, type="response", newdata=mydata_wide6)))

p1c <- gbounds((censored.1 == "uncensored")*(1 - predict(m1_c, type="response", newdata=mydata_wide6)))

p3c <- gbounds((censored.3 == "uncensored")*(1 - predict(m3_c, type="response", newdata=mydata_wide6)))

# Still prepare data for step 3c: calculate clever covariate (=0 [weight 0] for those excluded)

# (i.e. the inverse probabilities are only used for the subset of those patients

# following the treatment rule and are uncensored, see equation (4))

mydata_wide6$cc1 <- mydata_wide6$cc3 <- 0

mydata_wide6$cc1[subset1] <- (1/(gbounds(p1a*p1c)))[subset1]

mydata_wide6$cc3[subset3] <- (1/(gbounds(p1a*p1c*p3a*p3c)))[subset3]

# Prepare for step 2: Define Intervention

newdata_wide6 <- mydata_wide6

newdata_wide6$ART.1 <- abar[,1]

newdata_wide6$ART.3 <- abar[,2]

### LTMLE estimation ####

# time point 6: start to calculate innermost expectation

m6_y <- mgcv::gam(I((hfa_cf.6 +15)/30) ˜ s(age_fv) + s(b2) + s(b3) + s(b4) + ART.1 + s(cd4p_cf.3)

+ s(wfa_cf.3, by=b3) + s(I((hfa_cf.3 +15)/30)), data=mydata_wide6[censored.3==

"uncensored" & censored.1=="uncensored",], family=quasibinomial) # STEP 1

mydata_wide$ov6 <- predict(m6_y, newdata=newdata_wide6,type="response") # STEP 2

Qk6 <- glm("I((hfa_cf.6 +15)/30)˜ 1 + offset(qlogis(ov6)) ", data=mydata_wide6, weights=cc3,

family = quasibinomial) # STEP 3a-c

mydata_wide$y3.Q <- predict(Qk6, type="response", newdata=mydata_wide6) # STEP 3d

# time point 3

m3_y <- mgcv::gam(y3.Q ˜ (age_fv) + s(b3) + s(b4) + ART.1 + s(wfa_cf.1,by=b3)

+ s(I((hfa_cf.1 +15)/30),by=b3), data=mydata_wide6[censored.1=="uncensored",],

family=quasibinomial) # STEP 1

mydata_wide$ov3 <- predict(m3_y, newdata=newdata_wide6,type="response") # STEP 2

Qk3 <- glm("y3.Q ˜ 1 + offset(qlogis(ov3)) ", data=mydata_wide6, weights=cc1, # STEP 3a-c

family = quasibinomial)

mydata_wide$y1.Q <- predict(Qk3, type="response",newdata=mydata_wide6) # STEP 3d

# time point 1+0: calculate final TMLE estimate

# (as everything before ART.1/hfa_cf.1 is essentially baseline due to no prior intervention)

est_ltmle.6 <- mean(mydata_wide$y1.Q)*30-15 # STEP 4

# Confidence Interval: calculate IC and set HAZ=0 if unmeasured at time t # Step 5

mydata_wide6$Y <- (mydata_wide6$hfa_cf.6+15)/30

mydata_wide6$Y[is.na(mydata_wide6$Y)]<-0

mydata_wide6$y3.Q[is.na(mydata_wide6$y3.Q)]<-0

IC <- mydata_wide6$cc3*(mydata_wide6$Y-mydata_wide6$y3.Q) + mydata_wide6$cc1*

(mydata_wide6$y3.Q-mydata_wide6$y1.Q) + mydata_wide6$y1.Q-mean(mydata_wide6$y1.Q)

IC <- IC*30–15

CI(est_ltmle.6,IC) # Final Confidence Interval

Availability of code and data:

The above code can be easily adopted for t* = 12, 24, 30. The comprehensive R-code for this analysis is part of the supplementary material. The data are available from the authors upon request to verify the results.

C. Data generating process in the simulation study

Both baseline data (t = 0) and follow-up data (t = 1,…, 12) were created using structural equations using the R-package simcausal [55]. The below listed distributions, listed in temporal order, describe the data-generating process. Baseline data L0 refers to region, sex, age, CD4 count, CD4%, WAZ and HAZ respectively (V1, V2, V3, , , , Y0). Follow-up data refers to CD4 count, CD4%, WAZ and HAZ (, , , Yt), as well as a treatment (At) and censoring (Ct) indicator. In addition to Bernoulli (B), uniform (U) and normal (N) distributions, we also use truncated normal distributions which are denoted by N[a,b] where a and b are the truncation levels. Values which are smaller than a are replaced by a random draw from a U(a1, a2) distribution and values greater than b are drawn from a U(b1, b2) distribution. Values for (a1, a2, b1, b2) are (0, 50, 5000, 10000) for L1, (0.03,0.09,0.7,0.8) for L2, and (−10, 3, 3, 10) for both L3 and Y. The notation means “conditional on the data that has already been measured (generated) according to the time ordering”.