Abstract

Regularization by Denoising (RED), as recently proposed by Romano, Elad, and Milanfar, is a powerful image-recovery framework that aims to minimize an explicit regularization objective constructed from a plug-in image-denoising function. Experimental evidence suggests that the RED algorithms are state-of-the-art. We claim, however, that explicit regularization does not explain the RED algorithms. In particular, we show that many of the expressions in the paper by Romano et al. hold only when the denoiser has a symmetric Jacobian, and we demonstrate that such symmetry does not occur with practical denoisers such as non-local means, BM3D, TNRD, and DnCNN. To explain the RED algorithms, we propose a new framework called Score-Matching by Denoising (SMD), which aims to match a “score” (i.e., the gradient of a log-prior). We then show tight connections between SMD, kernel density estimation, and constrained minimum mean-squared error denoising. Furthermore, we interpret the RED algorithms from Romano et al. and propose new algorithms with acceleration and convergence guarantees. Finally, we show that the RED algorithms seek a consensus equilibrium solution, which facilitates a comparison to plug-and-play ADMM.

I. Introduction

Consider the problem of recovering a (vectorized) image x0 from noisy linear measurements y of the form

$$ y = A x^0 + e, \tag{1} $$

where A is a known linear transformation and e is noise. This problem is of great importance in many applications and has been studied for several decades.

One of the most popular approaches to image recovery is the “variational” approach, where one poses and solves an optimization problem of the form

$$ \hat{x} = \arg\min_{x} \big\{ \ell(x; y) + \lambda \rho(x) \big\}. \tag{2} $$

In (2), ℓ(x; y) is a loss function that penalizes mismatch to the measurements, ρ(x) is a regularization term that penalizes mismatch to the image class of interest, and λ > 0 is a design parameter that trades between loss and regularization. A prime advantage of the variational approach is that, in many cases, efficient optimization methods can be readily applied to (2).

A key question is: How should one choose the loss ℓ(·; y) and regularization ρ(·) in (2)? As discussed in the sequel, the MAP-Bayesian interpretation suggests that they should be chosen in proportion to the negative log-likelihood and negative log-prior, respectively. The trouble is that accurate prior models for images are lacking.

Recently, a breakthrough was made by Romano, Elad, and Milanfar in [1]. Leveraging the long history (e.g., [2], [3]) and recent advances (e.g., [4], [5]) in image denoising algorithms, they proposed the regularization by denoising (RED) framework, where an explicit regularizer ρ(x) is constructed from an image denoiser using the simple and elegant rule

$$ \rho_{\text{red}}(x) = \tfrac{1}{2}\, x^\top \big(x - f(x)\big). \tag{3} $$

Based on this framework, they proposed several recovery algorithms (based on steepest descent, ADMM, and fixed-point methods, respectively) that yield state-of-the-art performance in deblurring and super-resolution tasks.

In this paper, we provide some clarifications and new interpretations of the excellent RED algorithms from [1]. Our work was motivated by an interesting empirical observation: With many practical denoisers f(·), the RED algorithms do not minimize the RED variational objective ℓ(x; y) + λρred(x). As we establish in the sequel, the RED regularization (3) is justified only for denoisers with symmetric Jacobians, which unfortunately does not cover many state-of-the-art methods such as non-local means (NLM) [6], BM3D [7], TNRD [4], and DnCNN [5]. In fact, we are able to establish a stronger result: For non-symmetric denoisers, there exists no regularization ρ(·) that explains the RED algorithms from [1].

In light of these (negative) results, there remains the question of how to explain/understand the RED algorithms from [1] when used with non-symmetric denoisers. In response, we propose a framework called score-matching by denoising (SMD), which aims to match the “score” (i.e., the gradient of the log-prior) rather than to design any explicit regularizer. We then show tight connections between SMD, kernel density estimation [8], and constrained minimum mean-squared error (MMSE) denoising. In addition, we provide new interpretations of the RED-ADMM and RED-FP algorithms proposed in [1], and we propose novel RED algorithms with faster convergence. Inspired by [9], we show that the RED algorithms seek to satisfy a consensus equilibrium condition that allows a direct comparison to the plug-and-play ADMM algorithms from [10].

The remainder of the paper is organized as follows. In Section II we provide more background on RED and related algorithms such as plug-and-play ADMM [10]. In Section III, we discuss the impact of Jacobian symmetry on RED and test whether this property holds in practice. In Section IV, we propose the SMD framework. In Section V, we present new interpretations of the RED algorithms from [1] and new algorithms based on accelerated proximal gradient methods. In Section VI, we perform an equilibrium analysis of the RED algorithms, and, in Section VII, we conclude.

II. Background

A. The MAP-Bayesian Interpretation

For use in the sequel, we briefly discuss the Bayesian maximum a posteriori (MAP) estimation framework [11]. The MAP estimate of x from y is defined as

| (4) |

where p(x∣y) denotes the probability density of x given y. Notice that, from Bayes rule p(x∣y) = p(y∣x)p(x)/p(y) and the monotonically increasing nature of ln(·), we can write

| (5) |

MAP estimation (5) has a direct connection to variational optimization (2): the log-likelihood term – ln p(y∣x) corresponds to the loss ℓ(x; y) and the log-prior term – ln p(x) corresponds to the regularization λρ(x). For example, with additive white Gaussian noise (AWGN) e of variance σ², the log-likelihood implies a quadratic loss:

$$ \ell(x; y) = \frac{1}{2\sigma^2} \|Ax - y\|^2. \tag{6} $$

Equivalently, the normalized loss ½∥Ax – y∥² could be used if σ² was absorbed into λ.

B. ADMM

A popular approach to solving (2) is through ADMM [12], which we now review. Using variable splitting, (2) becomes

| (7) |

Using the augmented Lagrangian, problem (7) can be reformulated as

| (8) |

using Lagrange multipliers (or “dual” variables) p and a design parameter β > 0. Using , (8) can be simplified to

| (9) |

The ADMM algorithm solves (9) by alternating the minimization of x and v with gradient ascent of u, as specified in Algorithm 1. ADMM is known to converge under convex ℓ(·; y) and ρ(·), and other mild conditions (see [12]).

| Algorithm 1 ADMM [12] | |

|---|---|
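The alternation described above can be sketched in a few lines. This is a minimal illustration rather than a transcription of Algorithm 1: it assumes the quadratic loss ∥Ax – y∥²/(2σ²), the scaled dual variable u, and a user-supplied callable `prox_reg` (a hypothetical name) that solves the v-minimization. The function name `admm` and the toy problem are likewise assumptions made for the example.

```python
import numpy as np

def admm(A, y, sigma2, prox_reg, beta, n_iter=200):
    """Scaled-form ADMM for min_x ||Ax - y||^2 / (2*sigma2) + lam * rho(x).

    prox_reg(r) must return argmin_v { lam*rho(v) + (beta/2)*||v - r||^2 }.
    """
    n = A.shape[1]
    v = np.zeros(n)
    u = np.zeros(n)
    # With a quadratic loss, the x-minimization reduces to a linear solve.
    H = A.T @ A / sigma2 + beta * np.eye(n)
    Aty = A.T @ y / sigma2
    for _ in range(n_iter):
        x = np.linalg.solve(H, Aty + beta * (v - u))  # minimize over x
        v = prox_reg(x + u)                           # minimize over v (proximal step)
        u = u + x - v                                 # ascent step on the scaled dual
    return v

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 5))
y = rng.standard_normal(8)
sigma2, lam, beta = 1.0, 1.0, 1.0

# For rho(v) = 0.5*||v||^2 the proximal step has the closed form beta*r/(lam+beta),
# and the variational problem has a closed-form (ridge) solution to compare against.
x_admm = admm(A, y, sigma2, lambda r: beta * r / (lam + beta), beta)
x_ridge = np.linalg.solve(A.T @ A / sigma2 + lam * np.eye(5), A.T @ y / sigma2)
```

The convex quadratic regularizer is chosen only because it gives a closed-form reference solution; any other proximal map can be passed in its place.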

C. Plug-and-Play ADMM

Importantly, line 3 of Algorithm 1 can be recognized as variational denoising of xk + uk–1 using regularization λρ(x) and quadratic loss , where r = xk + uk–1 at iteration k. By “denoising,” we mean recovering x0 from noisy measurements r of the form

| (10) |

for some variance ν > 0.

Image denoising has been studied for decades (see, e.g., the overviews [2], [3]), with the result that high performance methods are now readily available. Today’s state-of-the-art denoisers include those based on image-dependent filtering algorithms (e.g., BM3D [7]) or deep neural networks (e.g., TNRD [4], DnCNN [5]). Most of these denoisers are not variational in nature, i.e., they are not based on any explicit regularizer λρ(x).

Leveraging the denoising interpretation of ADMM, Venkatakrishnan, Bouman, and Wohlberg [10] proposed to replace line 3 of Algorithm 1 with a call to a sophisticated image denoiser, such as BM3D, and dubbed their approach Plug-and-Play (PnP) ADMM. Numerical experiments show that PnP-ADMM works very well in most cases. However, when the denoiser used in PnP-ADMM comes with no explicit regularization ρ(x), it is not clear what objective PnP-ADMM is minimizing, making PnP-ADMM convergence more difficult to characterize. Similar PnP algorithms have been proposed using primal-dual methods [13] and FISTA [14] in place of ADMM.

Approximate message passing (AMP) algorithms [15] also perform denoising at each iteration. In fact, when A is large and i.i.d. Gaussian, AMP constructs an internal variable statistically equivalent to r in (10) [16]. While the earliest instances of AMP assumed separable denoising (i.e., [f(x)]n = f(xn) ∀n for some f), later instances, like [17], [18], considered non-separable denoising. The paper [19] by Metzler, Maleki, and Baraniuk proposed to plug an image-specific denoising algorithm, like BM3D, into AMP. Vector AMP, which extends AMP to the broader class of “right rotationally invariant” random matrices, was proposed in [20], and VAMP with image-specific denoising was proposed in [21]. Rigorous analyses of AMP and VAMP under non-separable denoisers were performed in [22] and [23], respectively.

D. Regularization by Denoising (RED)

As discussed in the Introduction, Romano, Elad, and Milanfar [1] proposed a radically new way to exploit an image denoiser, which they call regularization by denoising (RED). Given an arbitrary image denoiser f(·), they proposed to construct an explicit regularizer of the form

$$ \rho_{\text{red}}(x) = \tfrac{1}{2}\, x^\top \big(x - f(x)\big) \tag{11} $$

to use within the variational framework (2). The advantage of using an explicit regularizer is that a wide variety of optimization algorithms can be used to solve (2) and their convergence can be tractably analyzed.

In [1], numerical evidence is presented to show that image denoisers f(·) are locally homogeneous (LH), i.e.,

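$$ f\big((1+\epsilon)x\big) = (1+\epsilon)\, f(x) \tag{12} $$

for sufficiently small ϵ. For such denoisers, Romano et al. claim [1, Eq.(28)] that ρred(·) obeys the gradient rule

$$ \nabla \rho_{\text{red}}(x) = x - f(x). \tag{13} $$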

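If ∇ρred(x) = x – f(x), then any minimizer x̂ of the variational objective under quadratic loss,

$$ C_{\text{red}}(x) = \frac{1}{2\sigma^2} \|Ax - y\|^2 + \lambda \rho_{\text{red}}(x), \tag{14} $$

must yield a zero gradient of Cred(·) at x̂, i.e., must obey

$$ \frac{1}{\sigma^2} A^\top (A\hat{x} - y) + \lambda \big(\hat{x} - f(\hat{x})\big) = 0. \tag{15} $$

Based on this line of reasoning, Romano et al. proposed several iterative algorithms that find an x̂ satisfying the fixed-point condition (15), which we will refer to henceforth as “RED algorithms.”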

III. Clarifications on RED

In this section, we first show that the gradient expression (13) holds if and only if the denoiser f(·) is LH and has Jacobian symmetry (JS). We then establish that many popular denoisers lack JS, such as the median filter (MF) [24], non-local means (NLM) [6], BM3D [7], TNRD [4], and DnCNN [5]. For such denoisers, the RED algorithms cannot be explained by ρred(·) in (11). We also show a more general result: When a denoiser lacks JS, there exists no regularizer ρ(·) whose gradient expression matches (13). Thus, the problem is not the specific form of ρred(·) in (11) but rather the broader pursuit of explicit regularization.

A. Preliminaries

We first state some definitions and assumptions. In the sequel, we denote the ith component of f(x) by fi(x), the gradient of fi(·) at x by

| (16) |

and the Jacobian of f(·) at x by

| (17) |

Without loss of generality, we take to be the set of possible images. A given denoiser f(·) may involve decision boundaries at which its behavior changes suddenly. We assume that these boundaries are a closed set of measure zero and work instead with the open set , which contains almost all images.

We furthermore assume that is differentiable on , which means [25, p.212] that, for any , there exists a matrix for which

| (18) |

When J exists, it can be shown [25, p.216] that J = Jf(x).

B. The RED Gradient

We first recall a result that was established in [1].

Lemma 1 (Local homogeneity [1]). Suppose that denoiser f(·) is locally homogeneous. Then [J f(x)]x = f(x).

Proof. Our proof is based on differentiability and avoids the need to define a directional derivative. From (18), we have

| (19) |

| (20) |

| (21) |

where (20) follows from local homogeneity (12). Equation (21) implies that [J f(x)]x = f(x). □

We now state one of the main results of this section.

Lemma 2 (RED gradient). For ρred(·) defined in (11),

| (22) |

Proof. For any and n = 1, …, N,

| (23) |

| (24) |

| (25) |

| (26) |

| (27) |

using the definition of Jf(x) from (17). Collecting into the gradient vector (13) yields (22). □

Note that the gradient expression (22) differs from (13).

Lemma 3 (Clarification on (13)). Suppose that the denoiser f(·) is locally homogeneous. Then the RED gradient expression (13) holds if and only if J f (x) = [J f (x)]⊤.

Proof. If J f(x) = [J f(x)]⊤, then the last term in (22) becomes , which equals by Lemma 1, in which case (22) agrees with (13). But if J f (x) ≠ [J f(x)]⊤, then (22) differs from (13). □
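The gap between the two gradient expressions can be checked numerically on a toy case. The sketch below assumes the RED regularizer has the form ½ x⊤(x – f(x)) and uses a hypothetical linear denoiser f(x) = Wx with a non-symmetric W. Such a denoiser is locally homogeneous, so any disagreement with the rule ∇ρred(x) = x – f(x) is due to the lack of Jacobian symmetry alone. The general expression used here, x – ½f(x) – ½W⊤x, follows from differentiating the quadratic form directly.

```python
import numpy as np

def rho_red(x, f):
    # RED regularizer, assumed here to be 0.5 * x^T (x - f(x)).
    return 0.5 * x @ (x - f(x))

def numerical_grad(fun, x, eps=1e-6):
    # Central-difference gradient, one coordinate at a time.
    g = np.zeros_like(x)
    for n in range(x.size):
        e = np.zeros_like(x)
        e[n] = eps
        g[n] = (fun(x + e) - fun(x - e)) / (2 * eps)
    return g

N = 6
W = 0.5 * np.eye(N) + 0.5 * np.eye(N, k=1)   # averages each sample with its right neighbor
W[-1, -1] = 1.0                              # last row averages only itself
f = lambda x: W @ x                          # toy linear denoiser with non-symmetric Jacobian W

x = np.array([0.3, -1.2, 0.8, 2.0, -0.5, 1.1])
g_num = numerical_grad(lambda z: rho_red(z, f), x)
g_general = x - 0.5 * f(x) - 0.5 * W.T @ x   # direct differentiation of the quadratic form
g_claimed = x - f(x)                         # the rule that requires Jacobian symmetry

err_general = np.linalg.norm(g_num - g_general) / np.linalg.norm(g_num)
err_claimed = np.linalg.norm(g_num - g_claimed) / np.linalg.norm(g_num)
```

Here `err_general` is at the level of finite-difference rounding, while `err_claimed` is not small, because the two expressions differ by ½(W – W⊤)x.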

C. Impossibility of Explicit Regularization

For denoisers f(·) that lack Jacobian symmetry (JS), Lemma 3 establishes that the gradient expression (13) does not hold. Yet (13) leads to the fixed-point condition (15) on which all RED algorithms in [1] are based. The fact that these algorithms work well in practice suggests that “∇ρ(x) = x – f(x)” is a desirable property for a regularizer ρ(x) to have. But the regularization ρred(x) in (11) does not lead to this property when f(·) lacks JS. Thus an important question is:

Does there exist some other regularization ρ(·) for which ∇ρ(x) = x – f(x) when f (·) is non-JS?

The following theorem provides the answer.

Theorem 1 (Impossibility). Suppose that denoiser f (·) has a non-symmetric Jacobian. Then there exists no regularization ρ(·) for which ∇ρ(x) = x – f(x).

Proof. To prove the theorem, we view as a vector field. Theorem 4.3.8 in [26] says that a vector field f is conservative if and only if there exists a continuously differentiable potential for which . Furthermore, Theorem 4.3.10 in [26] says that if f is conservative, then the Jacobian J f is symmetric. Thus, by the contrapositive, if the Jacobian J f is not symmetric, then no such potential exists.

To apply this result to our problem, we define

| (28) |

and notice that

| (29) |

Thus, if J f(x) is non-symmetric, then J[x – f(x)] = I – J f(x) is non-symmetric, which means that there exists no ρ for which (29) holds. □

Thus, the problem is not the specific form of ρred(·) in (11) but rather the broader pursuit of explicit regularization. We note that the notion of conservative vector fields was discussed in [27, App. A] in the context of PnP algorithms, whereas here we discuss it in the context of RED.
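As a concrete illustration of the theorem, consider a two-pixel linear denoiser with a non-symmetric matrix. The matrix below is an arbitrary choice made for this example, not one taken from the experiments.

```latex
\[
f(x) = W x, \qquad
W = \begin{bmatrix} \tfrac12 & \tfrac14 \\ 0 & \tfrac12 \end{bmatrix}
\quad\Longrightarrow\quad
x - f(x) = \begin{bmatrix} \tfrac12 x_1 - \tfrac14 x_2 \\ \tfrac12 x_2 \end{bmatrix}.
\]
If $\nabla\rho(x) = x - f(x)$ held for some twice continuously differentiable $\rho$, then
\[
\frac{\partial^2 \rho}{\partial x_2\,\partial x_1}
  = \frac{\partial}{\partial x_2}\Big(\tfrac12 x_1 - \tfrac14 x_2\Big) = -\tfrac14,
\qquad
\frac{\partial^2 \rho}{\partial x_1\,\partial x_2}
  = \frac{\partial}{\partial x_1}\Big(\tfrac12 x_2\Big) = 0,
\]
which contradicts the symmetry of mixed partial derivatives, so no such $\rho$ exists.
```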

D. Analysis of Jacobian Symmetry

The previous sections motivate an important question: Do commonly-used image denoisers have sufficient JS?

For some denoisers, JS can be studied analytically. For example, consider the “transform domain thresholding” (TDT) denoisers of the form

| (30) |

where g(·) performs componentwise (e.g., soft or hard) thresholding and W is some transform, as occurs in the context of wavelet shrinkage [28], with or without cycle-spinning [29]. Using to denote the derivative of gn(·), we have

| (31) |

and so the Jacobian of f(·) is perfectly symmetric.

Another class of denoisers with perfectly symmetric Jacobians are those that produce MAP or MMSE optimal under some assumed prior . In the MAP case, minimizes (over x) the cost for noisy input r. If we define , known as the Moreau-Yosida envelope of , then , as discussed in [30] (See also [31] for insightful discussions in the context of image denoising.) The elements in the Jacobian are therefore , and so the Jacobian matrix is symmetric. In the MMSE case, we have that f(r) = r – ∇ρTR(r) for ρTR(·) defined in (52) (see Lemma 4), and so , again implying that the Jacobian is symmetric. But it is difficult to say anything about the Jacobian symmetry of approximate MAP or MMSE denoisers.

Now let us consider the more general class of denoisers

| (32) |

sometimes called “pseudo-linear” [3]. For simplicity, we assume that W(·) is differentiable on . In this case, using the chain rule, we have

| (33) |

and so the following are sufficient conditions for Jacobian symmetry.

1) W(x) is symmetric for all x,

2) the remaining term in (33), which arises from the dependence of W(·) on x, is symmetric.

When W is x-invariant (i.e., f(·) is linear) and symmetric, both of these conditions are satisfied. This latter case was exploited for RED in [32]. The case of non-linear W(·) is more complicated. Although W(·) can be symmetrized (see [33], [34]), it is not clear whether the second condition above will be satisfied.

E. Jacobian Symmetry Experiments

For denoisers that do not admit a tractable analysis, we can still evaluate the Jacobian of f(·) at x numerically via

| (34) |

where en denotes the nth column of IN and ϵ > 0 is small (ϵ = 1 × 10⁻³ in our experiments). For the purpose of quantifying JS, we define the normalized error metric

| (35) |

which should be nearly zero for a symmetric Jacobian.
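A small-scale version of this test can be sketched as follows. The exact forms of (34) and (35) are assumed here to be a central difference along each coordinate and a squared-Frobenius-norm ratio; the 1-D signal, the pairwise Haar transform, the threshold, and all function names are illustrative choices rather than the experimental setup.

```python
import numpy as np

def numerical_jacobian(f, x, eps=1e-3):
    # Column n approximates the derivative of f along e_n by a central difference.
    N = x.size
    J = np.zeros((f(x).size, N))
    for n in range(N):
        e = np.zeros(N)
        e[n] = eps
        J[:, n] = (f(x + e) - f(x - e)) / (2 * eps)
    return J

def symmetry_error(J):
    # Normalized asymmetry; it is zero exactly when J is symmetric.
    return np.linalg.norm(J - J.T, "fro") ** 2 / np.linalg.norm(J, "fro") ** 2

def soft(z, t=1.5):
    # Componentwise soft thresholding.
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def median3(x):
    # 1-D sliding median with a window of 3 and edge replication.
    xp = np.pad(x, 1, mode="edge")
    return np.median(np.stack([xp[:-2], xp[1:-1], xp[2:]]), axis=0)

# Orthogonal transform built from 2-point Haar blocks (a stand-in for a wavelet transform).
Q = np.kron(np.eye(4), np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2))
tdt = lambda x: Q.T @ soft(Q @ x)   # transform-domain thresholding

x = np.array([0.0, 5.0, 1.0, 2.0, 3.0, 4.0, 6.0, 7.0])
err_tdt = symmetry_error(numerical_jacobian(tdt, x))
err_mf = symmetry_error(numerical_jacobian(median3, x))
```

For this input the thresholding denoiser yields an error at the level of floating-point rounding, whereas the median filter yields 0.5, since its Jacobian is a 0/1 selection matrix that is not symmetric.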

Table I shows the average value of the symmetry error (35) for 17 different image patches of size 16 × 16, using denoisers that assumed a noise variance of 25². The denoisers tested were the TDT from (30) with the 2D Haar wavelet transform and soft-thresholding, the median filter (MF) [24] with a 3 × 3 window, non-local means (NLM) [6], BM3D [7], TNRD [4], and DnCNN [5]. Table I shows that the Jacobians of all but the TDT denoiser are far from symmetric.

TABLE I.

Average Jacobian-symmetry error on 16 × 16 images

| TDT | MF | NLM | BM3D | TNRD | DnCNN |
|---|---|---|---|---|---|
| 5.36e-21 | 1.50 | 0.250 | 1.22 | 0.0378 | 0.0172 |

Jacobian symmetry is of secondary interest; what we really care about is the accuracy of the RED gradient expressions (13) and (22). To assess gradient accuracy, we numerically evaluated the gradient of ρred(·) at x using

| (36) |

and compared the result to the analytical expressions (13) and (22). Table II reports the normalized gradient error

| (37) |

for the same ϵ, images, and denoisers used in Table I. The results in Table II show that, for all tested denoisers, the numerical gradient closely matches the analytical expression for ∇ρred(·) from (22), but not that from (13). The mismatch between the numerical gradient and ∇ρred(·) from (13) is partly due to insufficient JS and partly due to insufficient LH, as we establish below.

TABLE II.

Average gradient error on 16 × 16 images

F. Local Homogeneity Experiments

Recall that the TDT denoiser has a symmetric Jacobian, both theoretically and empirically. Yet Table II reports a disagreement between the ∇ρred(·) expressions (13) and (22) for TDT. We now show that this disagreement is due to insufficient local homogeneity (LH).

To do this, we introduce yet another RED gradient expression,

| (38) |

which results from combining (22) with Lemma 1. Here, indicates that (38) holds under LH. In contrast, the gradient expression (13) holds under both LH and Jacobian symmetry, while the gradient expression (22) holds in general (i.e., even in the absence of LH and/or Jacobian symmetry). We also introduce two normalized error metrics for LH,

| (39) |

| (40) |

which should both be nearly zero for LH f(·). Note that the metric in (39) quantifies LH according to definition (12) and closely matches the numerical analysis of LH in [1]. Meanwhile, the metric in (40) quantifies LH according to Lemma 1 and to how LH is actually used in the gradient expressions (13) and (38).

The middle row of Table II reports the average gradient error of the gradient expression (38), and Table III reports the average LH error for the metrics (39) and (40). There we see that the average error under (39) is small for all denoisers, consistent with the experiments in [1]. But the average error under (40) is several orders of magnitude larger (for all but the MF denoiser). We also note that the value of (40) for BM3D is several orders of magnitude higher than for the other denoisers. This result is consistent with Fig. 2, which shows that the cost function associated with BM3D is much less smooth than that of the other denoisers. As discussed below, these seemingly small imperfections in LH have a significant effect on the RED gradient expressions (13) and (38).
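The difference between the two ways of measuring LH can be reproduced with plain soft thresholding, which is not locally homogeneous. The sketch below assumes squared-norm ratios for both metrics (the exact normalizations in the paper may differ) and evaluates [J f(x)]x through a directional central difference along x instead of forming the full Jacobian. The threshold and test vector are arbitrary.

```python
import numpy as np

def soft(z, t=0.5):
    # Componentwise soft thresholding with threshold t.
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lh_error_scaling(f, x, eps=1e-3):
    # Tests the definition f((1+eps) x) = (1+eps) f(x) directly.
    lhs, rhs = f((1 + eps) * x), (1 + eps) * f(x)
    return np.linalg.norm(lhs - rhs) ** 2 / np.linalg.norm(rhs) ** 2

def lh_error_jacobian(f, x, eps=1e-3):
    # Tests the consequence [Jf(x)] x = f(x); the product [Jf(x)] x is the
    # derivative of f along the direction x, approximated by a central difference.
    Jx = (f(x + eps * x) - f(x - eps * x)) / (2 * eps)
    return np.linalg.norm(Jx - f(x)) ** 2 / np.linalg.norm(f(x)) ** 2

x = np.array([2.0, -3.0, 4.0, -5.0])
e1 = lh_error_scaling(soft, x)    # about 2.4e-8
e2 = lh_error_jacobian(soft, x)   # 1/41, about 0.024
```

The first metric scales with ϵ² and therefore looks negligible for small ϵ, while the second does not depend on ϵ and exposes the offset introduced by the threshold.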

TABLE III.

Average local-homogeneity error on 16 × 16 images

| Metric | TDT | MF | NLM | BM3D | TNRD | DnCNN |
|---|---|---|---|---|---|---|
| (39) | 2.05e-8 | 0 | 1.41e-8 | 7.37e-7 | 2.18e-8 | 1.63e-8 |
| (40) | 0.0205 | 2.26e-23 | 0.0141 | 3.80e4 | 2.18e-2 | 0.0179 |

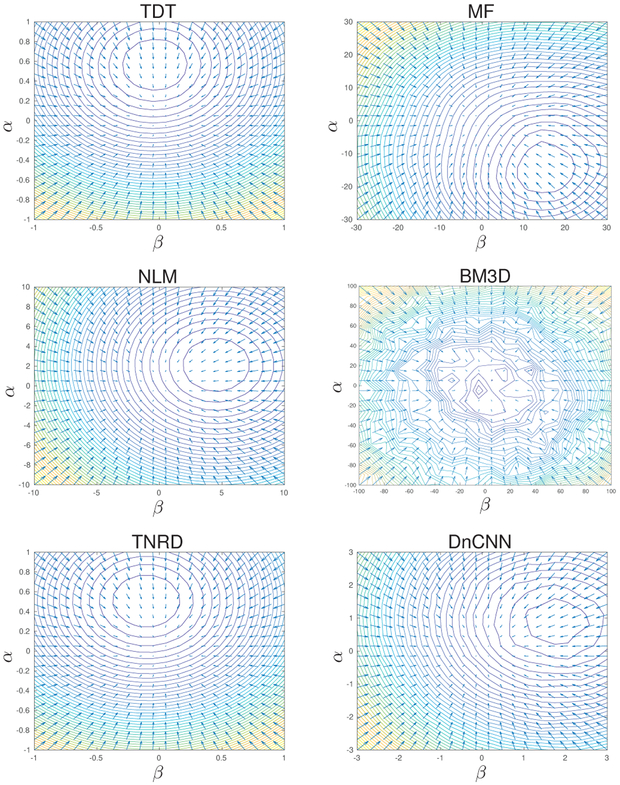

Fig. 2.

Contours show RED cost Cred(xα, β) from (14) and arrows show RED-algorithm gradient field g(xα, β) from (46) versus (α, β), where with randomly chosen e1 and e2. The subplots show that the minimizer of Cred(xα, β) is not the fixed-point , and that Cred(·) may be non-smooth and/or non-convex.

Starting with the TDT denoiser, Table II shows that the gradient error on (38) is large, which can only be caused by insufficient LH. The insufficient LH is confirmed in Table III, which shows that the value of the metric (40) for TDT is non-negligible, especially in comparison to the value for MF.

Continuing with the MF denoiser, Table I indicates that its Jacobian is far from symmetric, while Table III indicates that it is LH. The gradient results in Table II are consistent with these behaviors: the ∇ρred(x) expression (38) is accurate on account of LH being satisfied, but the ∇ρred(x) expression (13) is inaccurate on account of a lack of JS.

The results for the remaining denoisers NLM, BM3D, TNRD, and DnCNN show a common trend: they have nontrivial levels of both JS error (see Table I) and LH error (see Table III). As a result, the gradient expressions (13) and (38) are both inaccurate (see Table II).

In conclusion, the experiments in this section show that the RED gradient expressions (13) and (38) are very sensitive to small imperfections in LH. Although the experiments in [1] suggested that many popular image denoisers are approximately LH, our experiments suggest that their levels of LH are insufficient to maintain the accuracy of the RED gradient expressions (13) and (38).

G. Hessian and Convexity

From (26), the (n, j)th element of the Hessian of ρred(x) equals

| (41) |

| (42) |

| (43) |

where δk = 1 if k = 0 and otherwise δk = 0. Thus, the Hessian of ρred(·) at x equals

| (44) |

This expression can be contrasted with the Hessian expression from [1, (60)], which reads

| (45) |

Interestingly, (44) differs from (45) even when the denoiser has a symmetric Jacobian J f(x). One implication is that, even if eigenvalues of J f(x) are limited to the interval [0, 1], the Hessian Hρred(x) may not be positive semi-definite due to the last term in (44), with possibly negative implications on the convexity of ρred(·). That said, the RED algorithms do not actually minimize the variational objective ℓ(x; y) + λρred(x) for common denoisers f(·) (as established in Section III-H), and so the convexity of ρred(·) may not be important in practice. We investigate the convexity of ρred(·) numerically in Section III-I.

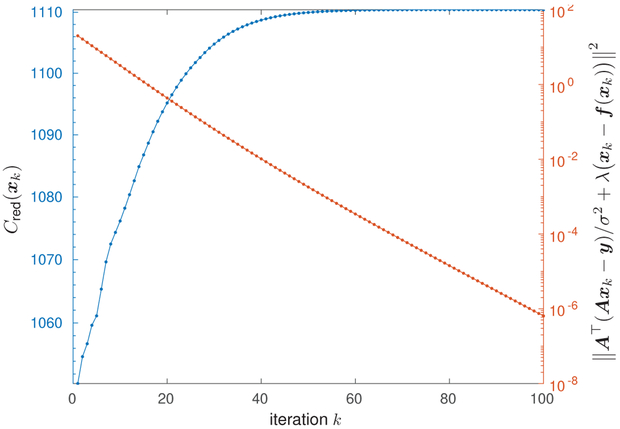

H. Example RED-SD Trajectory

We now provide an example of how the RED algorithms from [1] do not necessarily minimize the variational objective ℓ(x; y) + λρred(x).

For a trajectory produced by the steepest-descent (SD) RED algorithm from [1], Fig. 1 plots, versus iteration k, the RED Cost Cred(xk) from (14) and the error on the fixed-point condition (15), i.e., ∥g(xk)∥2 with

$$ g(x) = \frac{1}{\sigma^2} A^\top (Ax - y) + \lambda \big(x - f(x)\big). \tag{46} $$

Fig. 1.

RED cost Cred(xk) and fixed-point error ∥A⊤(Axk – y)/σ² + λ(xk – f(xk))∥2 versus iteration k for the iterates xk produced by the RED-SD algorithm from [1]. Although the fixed-point condition is asymptotically satisfied, the RED cost does not decrease with k.

For this experiment, we used the 3 × 3 median-filter for f(·), the Starfish image, and noisy measurements y with noise variance σ² = 20 (i.e., A = I in (14)).

Figure 1 shows that, although the RED-SD algorithm asymptotically satisfies the fixed-point condition (15), the RED cost function Cred(xk) does not decrease with k, as would be expected if the RED algorithms truly minimized the RED cost Cred(·). This behavior implies that any optimization algorithm that monitors the objective value Cred(xk) for, say, backtracking line-search (e.g., the FASTA algorithm [35]), is difficult to apply in the context of RED.
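The kind of trajectory tracked in Fig. 1 can be sketched on a toy problem. The code below is not the experiment from the paper: it assumes A = I, the RED regularizer ½ x⊤(x – f(x)), a hypothetical linear denoiser with a non-symmetric matrix W, and a fixed step size chosen so that the iteration is a contraction. It records the RED cost and the fixed-point error along the iterates, and then evaluates the gradient of the RED cost at the point the iteration converges to.

```python
import numpy as np

def red_cost(x, y, f, sigma2, lam):
    # RED cost with A = I, assuming rho_red(x) = 0.5 * x^T (x - f(x)).
    return np.sum((x - y) ** 2) / (2 * sigma2) + lam * 0.5 * x @ (x - f(x))

def red_field(x, y, f, sigma2, lam):
    # Field g(x) whose zero defines the RED fixed point, with A = I.
    return (x - y) / sigma2 + lam * (x - f(x))

def red_sd(y, f, sigma2, lam, n_iter=200):
    mu = 1.0 / (1.0 / sigma2 + lam)   # step size (an assumption, not the tuning in [1])
    x = y.copy()
    costs, errs = [], []
    for _ in range(n_iter):
        g = red_field(x, y, f, sigma2, lam)
        costs.append(red_cost(x, y, f, sigma2, lam))
        errs.append(np.linalg.norm(g))
        x = x - mu * g                # step along -g(x)
    return x, np.array(costs), np.array(errs)

N = 6
W = 0.5 * np.eye(N) + 0.5 * np.eye(N, k=1)
W[-1, -1] = 1.0                       # rows sum to one; W is not symmetric
f = lambda x: W @ x                   # toy linear denoiser
y = np.arange(1.0, N + 1)
sigma2, lam = 1.0, 1.0

x_hat, costs, errs = red_sd(y, f, sigma2, lam)
# For linear f, differentiating red_cost directly gives g(x) + 0.5*lam*(W - W^T) x.
grad_cost_at_fp = red_field(x_hat, y, f, sigma2, lam) + 0.5 * lam * (W - W.T) @ x_hat
```

In this toy case the fixed-point error decays to zero, yet the gradient of the RED cost at the limit point is nonzero, so the limit is not a stationary point of the cost.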

I. Visualization of RED Cost and RED-Algorithm Gradient

We now show visualizations of the RED cost Cred(x) from (14) and the RED algorithm’s gradient field g(x) from (46), for various image denoisers. For this experiment, we used the Starfish image, noisy measurements y with noise variance σ² = 100 (i.e., A = I in (14) and (46)), and λ optimized over a grid (of 20 values logarithmically spaced between 0.0001 and 1) for each denoiser, so that the PSNR of the RED fixed-point is maximized.

Figure 2 plots the RED cost Cred(x) and the RED algorithm’s gradient field g(x) for the TDT, MF, NLM, BM3D, TNRD, and DnCNN denoisers. To visualize these quantities in two dimensions, we plotted values of x centered at the RED fixed-point x̂ and varying along two randomly chosen directions. The figure shows that the minimizer of Cred(x) does not coincide with the fixed-point x̂, and that the RED cost Cred(·) is not always smooth or convex.

IV. Score-Matching by Denoising

As discussed in Section II-D, the RED algorithms proposed in [1] are explicitly based on the gradient rule

$$ \nabla \rho(x) = x - f(x). \tag{47} $$

This rule appears to be useful, since these algorithms work very well in practice. But Section III established that ρred(·) from (11) does not usually satisfy (47). We are thus motivated to seek an alternative explanation for the RED algorithms. In this section, we explain them through a framework that we call score-matching by denoising (SMD).

A. Tweedie Regularization

As a precursor to the SMD framework, we first propose a technique based on what we will call Tweedie regularization.

Recall the measurement model (10) used to define the “denoising” problem, repeated in (48) for convenience:

| (48) |

To avoid confusion, we will refer to r as “pseudo-measurements” and y as “measurements.” From (48), the likelihood of x0 is .

Now, suppose that we model the true image x0 as a realization of a random vector x with prior pdf . We write “” to emphasize that the model distribution may differ from the true distribution px (i.e., the distribution from which the image x is actually drawn). Under this prior model, the MMSE denoiser of x from r is

| (49) |

and the likelihood of observing r is

| (50) |

| (51) |

We will now define the Tweedie regularizer (TR) as

| (52) |

As we now show, ρTR(·) has the desired property (47).

Lemma 4 (Tweedie). For ρTR(r; ν) defined in (52),

| (53) |

where is the MMSE denoiser from (49).

Proof. Equation (53) is a direct consequence of a classical result known as Tweedie’s formula [36], [37]. A short proof, from first principles, is now given for completeness.

| (54) |

| (55) |

| (56) |

| (57) |

| (58) |

| (59) |

where (56) used . Stacking (59) for n = 1, …, N in a vector yields (53). □

Thus, if the TR regularizer ρTR(·; ν) is used in the optimization problem (14), then the solution must satisfy the fixed-point condition (15) associated with the RED algorithms from [1], albeit with an MMSE-type denoiser. This restriction will be removed using the SMD framework in Section IV-C.

It is interesting to note that the gradient property (53) holds even for non-homogeneous . This generality is important in applications under which is known to lack LH. For example, with a binary image x ∈ {0, 1}N modeled by , the MMSE denoiser takes the form , which is not LH.
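The binary example lends itself to a quick numerical check of the Tweedie relationship. The sketch below works in one dimension and assumes an equiprobable prior on {0, 1} (the exact prior intended in the text may differ), so that the noise-blurred prior is a two-component Gaussian mixture. It takes the Tweedie regularizer to be –ν times the log of the blurred prior and compares its finite-difference derivative with r minus the MMSE denoiser output.

```python
import numpy as np

nu = 0.25   # variance of the pseudo-measurement noise (arbitrary choice)

def log_blurred_prior(r):
    # ln of 0.5*N(r; 0, nu) + 0.5*N(r; 1, nu): the binary prior blurred by the noise.
    comp = np.array([-(r - 0.0) ** 2, -(r - 1.0) ** 2]) / (2 * nu)
    return np.log(0.5 * np.exp(comp).sum(axis=0)) - 0.5 * np.log(2 * np.pi * nu)

def f_mmse(r):
    # E[x | r] = P(x = 1 | r) for equiprobable x in {0, 1}.
    return 1.0 / (1.0 + np.exp(-(2 * r - 1) / (2 * nu)))

def rho_tr(r):
    # Tweedie regularizer, assumed to be -nu * ln(blurred prior).
    return -nu * log_blurred_prior(r)

r = np.linspace(-1.0, 2.0, 13)
h = 1e-5
grad_num = (rho_tr(r + h) - rho_tr(r - h)) / (2 * h)   # numerical derivative
grad_tweedie = r - f_mmse(r)                           # r minus the MMSE denoiser output
max_gap = np.max(np.abs(grad_num - grad_tweedie))
```

The two derivatives agree to within finite-difference accuracy even though this sigmoid-shaped denoiser is clearly not locally homogeneous.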

B. Tweedie Regularization as Kernel Density Estimation

We now show that TR arises naturally in the data-driven, non-parametric context through kernel-density estimation (KDE) [8].

Recall that, in most imaging applications, the true prior px is unknown, as is the true MMSE denoiser fmmse,ν(·). There are several ways to proceed. One way is to design “by hand” an approximate prior that leads to a computationally efficient denoiser . But, because this denoiser is not MMSE for x ~ px, the performance of the resulting estimates will suffer relative to fmmse,ν.

Another way to proceed is to approximate the prior using a large corpus of training data . To this end, an approximate prior could be formed using the empirical estimate

| (60) |

but a more accurate match to the true prior px can be obtained using

| (61) |

with appropriately chosen ν > 0, a technique known as kernel density estimation (KDE) or Parzen windowing [8]. Note that if is used as a surrogate for px, then the MAP optimization problem becomes

| (62) |

| (63) |

with ρTR(·; ν) from (50)-(52) constructed using from (60). In summary, TR arises naturally in the data-driven approach to image recovery when KDE is used to smooth the empirical prior.

C. Score-Matching by Denoising

A limitation of the above TR framework is that it results in denoisers with symmetric Jacobians. (Recall the discussion of MMSE denoisers in Section III-D.) To justify the use of RED algorithms with non-symmetric Jacobians, we introduce the score-matching by denoising (SMD) framework in this section.

Let us continue with the KDE-based MAP estimation problem (62). Note that from (62) zeros the gradient of the MAP optimization objective and thus obeys the fixed-point equation

| (64) |

In principle, in (64) could be found using gradient descent or similar techniques. However, computation of the gradient

| (65) |

is too expensive for the values of T typically needed to generate a good image prior .

A tractable alternative is suggested by the fact that

| (66) |

| (67) |

where is the MMSE estimator of from . In particular, if we can construct a good approximation to using a denoiser fθ(·) in a computationally efficient function class , then we can efficiently approximate the MAP problem (62).
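The relationship between the KDE score and the MMSE denoiser can be verified on synthetic data. In the sketch below, the "training images" are random vectors (purely hypothetical data), the KDE uses an isotropic Gaussian kernel of variance ν, and the MMSE denoiser under the empirical prior is the softmax-weighted average of the training samples. The function names are illustrative.

```python
import numpy as np

def kde_log_prior(r, X, nu):
    # ln of (1/T) * sum_t exp(-||r - x_t||^2 / (2 nu)), i.e., the KDE up to a constant.
    a = -np.sum((X - r) ** 2, axis=1) / (2 * nu)
    return a.max() + np.log(np.mean(np.exp(a - a.max())))

def kde_mmse_denoiser(r, X, nu):
    # Posterior mean under the empirical prior: softmax-weighted average of the samples.
    d2 = np.sum((X - r) ** 2, axis=1)
    w = np.exp(-(d2 - d2.min()) / (2 * nu))
    return (w / w.sum()) @ X

rng = np.random.default_rng(0)
X = rng.standard_normal((50, 3))   # T = 50 toy training vectors of dimension 3
r = rng.standard_normal(3)
nu = 0.5

# Score obtained from the denoiser: (f(r) - r) / nu.
score_denoiser = (kde_mmse_denoiser(r, X, nu) - r) / nu

# Score obtained by differentiating the log-KDE numerically.
h = 1e-5
score_num = np.array([
    (kde_log_prior(r + h * e, X, nu) - kde_log_prior(r - h * e, X, nu)) / (2 * h)
    for e in np.eye(3)
])
```

Evaluating the exact denoiser still requires a pass over all T samples, which is the cost that motivates replacing it with an efficient approximation.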

This approach can be formalized using the framework of score matching [38], which aims to approximate the “score” (i.e., the gradient of the log-prior) rather than the prior itself. For example, suppose that we want to approximate the score of the smoothed prior from (61). For this, Hyvärinen [38] suggested to first find the best mean-square fit among a set of computationally efficient functions ψ(·; θ), i.e., find

| (68) |

and then to approximate the score by . Later, in the context of denoising autoencoders, Vincent [39] showed that if one chooses

| (69) |

for some function , then from (68) can be equivalently written as

| (70) |

In this case, is the MSE-optimal denoiser, averaged over and constrained to the function class .

Note that the denoiser approximation error can be directly connected to the score-matching error as follows. For any denoiser fθ(·) and any input x,

| (71) |

| (72) |

where (71) follows from (66) and (72) follows from (69). Thus, matching the score is directly related to matching the MMSE denoiser.
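A one-dimensional toy case makes the link between constrained MMSE denoising and score matching concrete. The sketch below assumes a zero-mean Gaussian prior with variance s2 (a hypothetical choice that gives a closed-form answer), restricts the denoiser to the class of scalar gains f(r) = θr, fits θ by least squares on noisy samples, and then forms the score approximation (f(r) – r)/ν.

```python
import numpy as np

rng = np.random.default_rng(0)
s2, nu, T = 4.0, 1.0, 200_000
x = np.sqrt(s2) * rng.standard_normal(T)       # clean samples from the toy Gaussian prior
r = x + np.sqrt(nu) * rng.standard_normal(T)   # noisy copies with noise variance nu

# Least-squares fit of the denoiser within the class f_theta(r) = theta * r.
theta = (r @ x) / (r @ r)

def score_estimate(z):
    # Score approximation built from the fitted denoiser.
    return (theta * z - z) / nu

def score_true(z):
    # Score of the noise-blurred prior, which is N(0, s2 + nu) in this toy case.
    return -z / (s2 + nu)

z = np.linspace(-3, 3, 7)
gap = np.max(np.abs(score_estimate(z) - score_true(z)))
```

The fitted gain is close to s2/(s2 + ν) = 0.8, and the resulting score estimate tracks the score of the blurred prior up to sampling error.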

Plugging the score approximation (69) into the fixed-point condition (64), we get

| (73) |

which matches the fixed-point condition (15) of the RED algorithms from [1]. Here we emphasize that may be constructed in such a way that fθ(·) has a non-symmetric Jacobian, which is the case for many state-of-the-art denoisers. Also, θ does not need to be optimized for (73) to hold. Finally, need not be the empirical prior (60); it can be any chosen prior [39]. Thus, the score-matching-by-denoising (SMD) framework offers an explanation of the RED algorithms from [1] that holds for generic denoisers fθ(·), whether or not they have symmetric Jacobians, are locally homogeneous, or MMSE. Furthermore, it suggests a rationale for choosing the regularization weight λ and, in the context of KDE, the denoiser variance ν.

D. Relation to Existing Work

Tweedie’s formula (53) has connections to Stein’s Unbiased Risk Estimation (SURE) [40], as discussed in, e.g., [41, Thm. 2] and [42, Eq. (2.4)]. SURE has been used for image denoising in, e.g., [43]. Tweedie’s formula was also used in [44] to interpret autoencoding-based image priors. In our work, Tweedie’s formula is used to provide an interpretation for the RED algorithms through the construction of the explicit regularizer (52) and the approximation of the resulting fixed-point equation (64) via score matching.

Recently, Alain and Bengio [45] studied contractive auto-encoders, a type of autoencoder that minimizes squared reconstruction error plus a penalty that tries to make the autoencoder as simple as possible. While previous works such as [46] conjectured that such auto-encoders minimize an energy function, Alain and Bengio showed that they actually minimize the norm of a score (i.e., match a score to zero). Furthermore, they showed that, when the coder and decoder do not share the same weights, it is not possible to define a valid energy function because the Jacobian of the reconstruction function is not symmetric. The results in [45] parallel those in this paper, except that they focus on auto-encoders while we focus on variational image recovery. Another small difference is that [45] uses the small-ν approximation

| (74) |

whereas we use the exact (Tweedie’s) relationship (53), i.e.,

| (75) |

where is the “Gaussian blurred” version of from (51).

V. Fast RED Algorithms

In [1], Romano et al. proposed several ways to solve the fixed-point equation (15). Throughout our paper, we have been referring to these methods as “RED algorithms.” In this section, we provide new interpretations of the RED-ADMM and RED-FP algorithms from [1] and we propose new RED algorithms based on accelerated proximal gradient methods.

A. RED-ADMM

The ADMM approach was summarized in Algorithm 1 for an arbitrary regularizer ρ(·). To apply ADMM to RED, line 3 of Algorithm 1, known as the “proximal update,” must be specialized to the case where ρ(·) obeys (13) for some denoiser f(·). To do this, Romano et al. [1] proposed the following. Because ρ(·) is differentiable, the proximal solution υk must obey the fixed-point relationship

| (76) |

| (77) |

| (78) |

An approximation to υk can thus be obtained by iterating

| (79) |

over i = 1, …, I with sufficiently large I, initialized at z0 = vk–1. This procedure is detailed in lines 3-6 of Algorithm 2. The overall algorithm is known as RED-ADMM.
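The inner fixed-point loop can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the implementation from [1]: it assumes the scaled form of ADMM in which the proximal update on the regularizer is centered at x_k + u_{k-1} (the sign convention of Algorithm 1 may differ), a quadratic loss ‖Ax − y‖²/(2σ²), and a soft-thresholding stand-in for the denoiser f(·). The names `red_admm` and `soft_threshold` and all numeric values are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Toy stand-in for the denoiser f(.); any denoiser could be plugged in."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def red_admm(A, y, sigma2, lam, beta, f, inner_iters=1, num_iters=300):
    """RED-ADMM sketch for the quadratic loss ||Ax - y||^2 / (2*sigma2)."""
    n = A.shape[1]
    H = A.T @ A / sigma2 + beta * np.eye(n)
    Aty = A.T @ y / sigma2
    v = np.zeros(n)
    u = np.zeros(n)
    for _ in range(num_iters):
        # Proximal update on the loss (closed form for a quadratic loss).
        x = np.linalg.solve(H, Aty + beta * (v - u))
        # Approximate proximal update on the regularizer: iterate the
        # fixed-point relationship, initialized at the previous v.
        z = v
        for _ in range(inner_iters):
            z = (lam * f(z) + beta * (x + u)) / (lam + beta)
        v = z
        # Dual update (assumed sign convention).
        u = u + x - v
    return x, v

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))   # hypothetical measurement operator
y = rng.standard_normal(30)         # hypothetical measurements
sigma2, lam, beta = 1.0, 0.1, 1.0
f = lambda z: soft_threshold(z, 0.1)

x_hat, v_hat = red_admm(A, y, sigma2, lam, beta, f, inner_iters=5)
# Residual of the fixed-point condition: grad of loss + lam * (x - f(x)).
residual = A.T @ (A @ x_hat - y) / sigma2 + lam * (x_hat - f(x_hat))
residual_norm = float(np.linalg.norm(residual))
consensus_gap = float(np.linalg.norm(x_hat - v_hat))
```

On this toy problem both `residual_norm` and `consensus_gap` should be near zero, which is the sense in which the iteration seeks a solution of the fixed-point equation (15).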

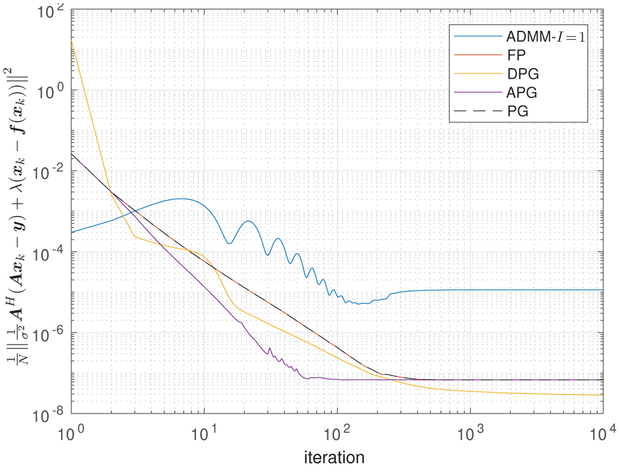

B. Inexact RED-ADMM

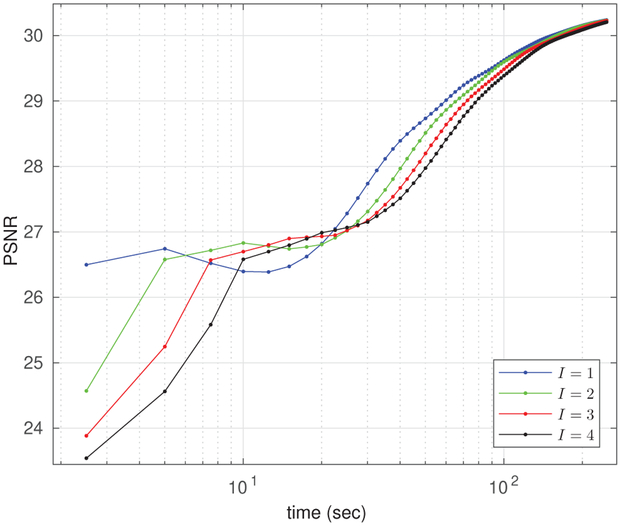

Algorithm 2 gives a faithful implementation of ADMM when the number of inner iterations, I, is large. But using many inner iterations may be impractical when the denoiser is computationally expensive, as in the case of BM3D or TNRD. Furthermore, the use of many inner iterations may not be necessary.

For example, Fig. 3 plots PSNR trajectories versus runtime for TNRD-based RED-ADMM with I = 1, 2, 3, 4 inner iterations. For this experiment, we used the deblurring task described in Section V-G, but similar behaviors can be observed in other applications of RED. Figure 3 suggests that a single inner iteration (I = 1) gives the fastest convergence. Note that [1] also used I = 1 when implementing RED-ADMM.

Fig. 3. PSNR versus runtime for RED-ADMM with TNRD denoising and I inner iterations.

Algorithm 2. RED-ADMM with I Inner Iterations [1]

With I = 1 inner iterations, RED-ADMM simplifies down to the 3-step iteration summarized in Algorithm 3. Since Algorithm 3 looks quite different than standard ADMM (recall Algorithm 1), one might wonder whether there exists another interpretation of Algorithm 3. Noting that line 3 can be rewritten as

| (80) |

| (81) |

we see that the I = 1 version of inexact RED-ADMM replaces the proximal step with a gradient-descent step under stepsize 1/(λ + β). Thus the algorithm is reminiscent of the proximal gradient (PG) algorithm [47], [48]. We will discuss PG further in the sequel.
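The claimed equivalence between the single inner iteration and a gradient step can be checked numerically. The sketch below assumes the same scaled-ADMM convention as the proximal objective λρ(v) + (β/2)‖v − x_k − u_{k−1}‖² (an assumption, since Algorithm 1 is not reproduced here) together with ∇ρ(v) = v − f(v); the toy denoiser and function names are hypothetical.

```python
import numpy as np

def toy_denoiser(z, tau=0.1):
    """Soft-thresholding, used only as a stand-in for f(.)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def v_update_convex_combination(v_prev, x, u_prev, lam, beta, f):
    """One inner iteration (I = 1): convex combination of f(v_prev) and x + u_prev."""
    return (lam * f(v_prev) + beta * (x + u_prev)) / (lam + beta)

def v_update_gradient_step(v_prev, x, u_prev, lam, beta, f):
    """Gradient step with stepsize 1/(lam + beta) on
    lam*rho(v) + (beta/2)*||v - x - u_prev||^2, using grad rho(v) = v - f(v)."""
    grad = lam * (v_prev - f(v_prev)) + beta * (v_prev - x - u_prev)
    return v_prev - grad / (lam + beta)

rng = np.random.default_rng(0)
v_prev, x, u_prev = rng.standard_normal((3, 8))
lam, beta = 0.02, 0.001

a = v_update_convex_combination(v_prev, x, u_prev, lam, beta, toy_denoiser)
b = v_update_gradient_step(v_prev, x, u_prev, lam, beta, toy_denoiser)
max_gap = float(np.max(np.abs(a - b)))  # the two forms agree up to rounding
```

The agreement is purely algebraic, so it holds for any denoiser, not only the toy one used here.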

Algorithm 3. RED-ADMM with I = 1

Algorithm 4. RED-PG Algorithm

C. Majorization-Minimization and Proximal-Gradient RED

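We now propose a proximal-gradient approach inspired by majorization minimization (MM) [49]. As proposed in [50], we use a quadratic upper bound,

| $\bar{\rho}(x; x_k) \triangleq \rho(x_k) + [\nabla\rho(x_k)]^{\top}(x - x_k) + \frac{L}{2}\lVert x - x_k\rVert^2$ | (82) |

on the regularizer ρ(x), in place of ρ(x) itself, at the kth algorithm iteration. Note that if ρ(·) is convex and ∇ρ(·) is Lρ-Lipschitz, then $\bar{\rho}(x; x_k)$ “majorizes” ρ(x) at xk when L ≥ Lρ, i.e.,

| $\bar{\rho}(x; x_k) \geq \rho(x) \quad \forall x$ | (83) |

| $\bar{\rho}(x_k; x_k) = \rho(x_k)$ | (84) |

The majorized objective can then be minimized using the proximal gradient (PG) algorithm [47], [48] (also known as forward-backward splitting) as follows. From (82), note that the majorized objective can be written as

| $\ell(x; y) + \lambda\bar{\rho}(x; x_k) = \ell(x; y) + \frac{\lambda L}{2}\big\lVert x - \big(x_k - \tfrac{1}{L}\nabla\rho(x_k)\big)\big\rVert^2 + \text{const}$ | (85) |

| $= \ell(x; y) + \frac{\lambda L}{2}\big\lVert x - v_k\big\rVert^2 + \text{const}, \quad v_k \triangleq x_k - \tfrac{1}{L}\big(x_k - f(x_k)\big)$ | (86) |

where (86) follows from assuming (47), which is the basis for all RED algorithms. The RED-PG algorithm then alternately updates υk as per the gradient step in (86) and updates xk+1 according to the proximal step

| $x_{k+1} = \arg\min_x \big\{ \ell(x; y) + \frac{\lambda L}{2}\lVert x - v_k\rVert^2 \big\}$ | (87) |

as summarized in Algorithm 4. Convergence is guaranteed if L ≥ Lρ; see [47], [48] for details.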
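The alternation between a gradient step on the regularizer and a proximal step on the loss can be sketched as follows. This is an illustrative sketch rather than the authors' Matlab code: it assumes the quadratic loss ‖Ax − y‖²/(2σ²), for which the proximal step reduces to a linear solve, uses ∇ρ(x) = x − f(x), and substitutes a soft-thresholding function for the denoiser. The operator `A`, the data `y`, the initialization, and all parameter values are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Toy non-expansive stand-in for the denoiser f(.)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def red_pg(A, y, sigma2, lam, f, L=1.01, num_iters=200):
    """RED-PG sketch for the quadratic loss ||Ax - y||^2 / (2*sigma2)."""
    n = A.shape[1]
    # The proximal step solves (A^T A / sigma2 + lam*L*I) x = A^T y / sigma2 + lam*L*v.
    H = A.T @ A / sigma2 + lam * L * np.eye(n)
    Aty = A.T @ y / sigma2
    v = A.T @ y  # simple initialization (an assumption)
    for _ in range(num_iters):
        # Proximal step on the loss, centered at v.
        x = np.linalg.solve(H, Aty + lam * L * v)
        # Gradient step on the regularizer with stepsize 1/L.
        v = x - (x - f(x)) / L
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))
y = rng.standard_normal(30)
sigma2, lam = 1.0, 0.1
f = lambda z: soft_threshold(z, 0.1)

x_hat = red_pg(A, y, sigma2, lam, f)
# At a fixed point, the gradient of the loss plus lam * (x - f(x)) vanishes.
residual = A.T @ (A @ x_hat - y) / sigma2 + lam * (x_hat - f(x_hat))
residual_norm = float(np.linalg.norm(residual))
```

Setting `L=1.0` in this sketch makes the gradient step return f(x) exactly, so the next proximal step is centered at the denoiser output.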

We now show that RED-PG with L = 1 is identical to the “fixed point” (FP) RED algorithm proposed in [1]. First, notice from Algorithm 4 that υk = f(xk) when L = 1, in which case

| $x_{k+1} = \arg\min_x \big\{ \ell(x; y) + \frac{\lambda}{2}\lVert x - f(x_k)\rVert^2 \big\}$ | (88) |

Algorithm 5. RED-DPG Algorithm

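For the quadratic loss $\ell(x; y) = \frac{1}{2\sigma^2}\lVert Ax - y\rVert^2$, (88) becomes

| $x_{k+1} = \arg\min_x \big\{ \frac{1}{2\sigma^2}\lVert Ax - y\rVert^2 + \frac{\lambda}{2}\lVert x - f(x_k)\rVert^2 \big\}$ | (89) |

| $= \big(\tfrac{1}{\sigma^2}A^{\top}A + \lambda I\big)^{-1}\big(\tfrac{1}{\sigma^2}A^{\top}y + \lambda f(x_k)\big)$ | (90) |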

which is exactly the RED-FP update [1, (37)]. Thus, (88) generalizes [1, (37)] to possibly non-quadratic³ loss ℓ(·; y), and RED-PG generalizes RED-FP to arbitrary L > 0. More importantly, the PG framework facilitates algorithmic acceleration, as we describe below.

The RED-PG and inexact RED-ADMM-I = 1 algorithms show interesting similarities: both alternate a proximal update on the loss with a gradient update on the regularization, where the latter term manifests as a convex combination between the denoiser output and another term. The difference is that RED-ADMM-I = 1 includes an extra state variable, uk. The experiments in Section V-G suggest that this extra state variable is not necessarily advantageous.

D. Dynamic RED-PG

Recalling from (86) that 1/L acts as a stepsize in the PG gradient step, it may be possible to speed up PG by decreasing L, although making L too small can prevent convergence. If ρ(·) were known, then a line search could be used, at each iteration k, to find the smallest value of L that guarantees the majorization of ρ(x) by the quadratic upper bound (82) [47]. However, with a non-LH or non-JS denoiser, it is not possible to evaluate ρ(·), which prevents such a line search.

We thus propose to vary Lk (i.e., the value of L at iteration k) according to a fixed schedule. In particular, we propose to select L0 and L∞, and smoothly interpolate between them at intermediate iterations k. One interpolation scheme that works well in practice is summarized in line 3 of Algorithm 5. We refer to this approach as “dynamic PG” (DPG). The numerical experiments in Section V-G suggest that, with appropriate selection of L0 and L∞, RED-DPG can be significantly faster than RED-FP.
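As an illustration of a fixed schedule, the sketch below interpolates the stepsize 1/L_k between 1/L0 and 1/L∞ at a 1/√(k+1) rate. This particular formula and the name `dpg_schedule` are assumptions made for illustration; the schedule in line 3 of Algorithm 5 is not reproduced here and may differ.

```python
import math

def dpg_schedule(k, L0, Linf):
    """Hypothetical schedule: 1/L_k moves from 1/L0 (k = 0) toward 1/Linf
    as k grows, at a 1/sqrt(k + 1) rate."""
    inv_Lk = 1.0 / Linf + (1.0 / L0 - 1.0 / Linf) / math.sqrt(k + 1)
    return 1.0 / inv_Lk

# Values of L_k for the first 200 iterations, using L0 = 0.2 and Linf = 2.
schedule = [dpg_schedule(k, L0=0.2, Linf=2.0) for k in range(200)]
far_value = dpg_schedule(10**8, L0=0.2, Linf=2.0)  # close to Linf
```

In a RED-DPG loop, L_k would simply replace the constant L in both the gradient step and the proximal step at iteration k, so early iterations take aggressive steps and later iterations take conservative ones.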

Algorithm 6. RED-APG Algorithm

E. Accelerated RED-PG

Another well-known approach to speeding up PG is to apply momentum to the υk term in Algorithm 4 [47], often known as “acceleration.” An accelerated PG (APG) approach to RED is detailed in Algorithm 6. There, the momentum in line 5 takes the same form as in FISTA [52]. The numerical experiments in Section V-G suggest that RED-APG is the fastest among the RED algorithms discussed above.
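A sketch of how FISTA-style momentum might be combined with the RED-PG steps is given below. It is an assumption-laden illustration, not a transcription of Algorithm 6: the momentum is applied to the proximal output before the gradient step on the regularizer, the loss is the quadratic ‖Ax − y‖²/(2σ²), and the denoiser is a soft-thresholding stand-in. All names and values are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Toy stand-in for the denoiser f(.)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def red_apg(A, y, sigma2, lam, f, L=1.0, num_iters=100):
    """Accelerated RED-PG sketch with FISTA-style momentum."""
    n = A.shape[1]
    H = A.T @ A / sigma2 + lam * L * np.eye(n)
    Aty = A.T @ y / sigma2
    v = A.T @ y            # initialization (an assumption)
    x_prev = np.zeros(n)
    t_prev = 1.0
    for _ in range(num_iters):
        # Proximal step on the quadratic loss.
        x = np.linalg.solve(H, Aty + lam * L * v)
        # FISTA momentum sequence and extrapolated point.
        t = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t_prev ** 2))
        z = x + ((t_prev - 1.0) / t) * (x - x_prev)
        # Gradient step on the regularizer, evaluated at the extrapolated point.
        v = z - (z - f(z)) / L
        x_prev, t_prev = x, t
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 10))
y = rng.standard_normal(30)
sigma2, lam = 1.0, 0.1
f = lambda z: soft_threshold(z, 0.1)

x_hat = red_apg(A, y, sigma2, lam, f)
residual = A.T @ (A @ x_hat - y) / sigma2 + lam * (x_hat - f(x_hat))
residual_norm = float(np.linalg.norm(residual))
```

On this well-conditioned toy problem the fixed-point residual becomes negligible, but that behavior should not be assumed for general denoisers.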

By leveraging the principle of vector extrapolation (VE) [53], a different approach to accelerating RED algorithms was recently proposed in [54]. Algorithmically, the approach in [54] is much more complicated than the PG-DPG and PG-APG methods proposed above. In fact, we have been unable to arrive at an implementation of [54] that reproduces the results in that paper, and the authors have not been willing to share their implementation with us. Thus, we cannot comment further on the difference in performance between our PG-DPG and PG-APG schemes and the one in [54].

F. Convergence of RED-PG

Recalling Theorem 1, the RED algorithms do not minimize an explicit cost function but rather seek fixed points of (15). Therefore, it is important to know whether they actually converge to any one fixed point. Below, we use the theory of non-expansive and α-averaged operators to establish the convergence of RED-PG to a fixed point under certain conditions.

First, an operator B(·) is said to be non-expansive if its Lipschitz constant is at most 1 [55]. Next, for α ∈ (0,1), an operator P(·) is said to be α-averaged if

| $P(x) = \alpha B(x) + (1 - \alpha)x$ | (91) |

for some non-expansive B(·). Furthermore, if P1 and P2 are α1 and α2-averaged, respectively, then [55, Prop. 4.32] establishes that the composition P2 ∘ P1 is α-averaged with

| $\alpha = \dfrac{2}{1 + 1/\max\{\alpha_1, \alpha_2\}}$ | (92) |

Recalling RED-PG from Algorithm 4, let us define an operator called T(·) that summarizes one algorithm iteration:

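| $T(x) \triangleq \arg\min_z \big\{ \ell(z; y) + \frac{\lambda L}{2}\big\lVert z - \big(\tfrac{1}{L}f(x) - \tfrac{1-L}{L}x\big)\big\rVert^2 \big\}$ | (93) |

| $= \operatorname{prox}_{\ell/(\lambda L)}\big(\tfrac{1}{L}f(x) + (1 - \tfrac{1}{L})x\big)$ | (94) |

Lemma 5. If ℓ(·) is proper, convex, and continuous; f(·) is non-expansive; and L > 1, then T(·) from (94) is α-averaged with α = max{2/(1 + L), 2/3}.

Proof. First, because ℓ(·) is proper, convex, and continuous, we know that the proximal operator proxℓ/(λL)(·) is α-averaged with α = 1/2 [55]. Then, by definition, the map x ↦ (1/L)f(x) + (1 − 1/L)x is α-averaged with α = 1/L, since f(·) is non-expansive and 1/L ∈ (0,1). From (94), T(·) is the composition of these two α-averaged operators, and so from (92) we have that T(·) is α-averaged with α = 2/(1 + 1/max{1/2, 1/L}) = max{2/(1 + L), 2/3}. □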

With Lemma 5, we can prove the convergence of RED-PG.

Theorem 2. If ℓ(·) is proper, convex, and continuous; f(·) is non-expansive; L > 1; and T(·) from (94) has at least one fixed point, then RED-PG converges.

Proof. From (94), we have that Algorithm 4 is equivalent to

| $x_{k+1} = T(x_k)$ | (95) |

| $= \alpha B(x_k) + (1 - \alpha)x_k$ | (96) |

where B(·) is an implicit non-expansive operator that must exist under the definition of α-averaged operators from (91). The iteration (96) can be recognized as a Mann iteration [30], since α ∈ (0,1). Thus, from [55, Thm. 5.14], {xk} is a convergent sequence, in that there exists a fixed point x* such that limk→∞ ∥xk – x*∥ = 0. □
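The conditions of Theorem 2 can be probed numerically on a toy problem. The sketch below builds the one-iteration operator for a quadratic loss, a soft-thresholding denoiser (which is non-expansive), and L = 1.5 > 1; it then checks empirically that the operator does not expand distances and that successive update distances never grow. The problem sizes and parameter values are hypothetical, and a numerical check of this kind is no substitute for the proof.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 30, 10
A = rng.standard_normal((m, n))
y = rng.standard_normal(m)
sigma2, lam, L, tau = 1.0, 0.1, 1.5, 0.1

H_inv = np.linalg.inv(A.T @ A / sigma2 + lam * L * np.eye(n))
Aty = A.T @ y / sigma2

def f(z):
    """Soft-thresholding: a non-expansive stand-in for the denoiser."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def T(x):
    """One RED-PG iteration: averaged denoising step, then prox of the loss."""
    v = f(x) / L + (1.0 - 1.0 / L) * x
    return H_inv @ (Aty + lam * L * v)

# Empirical Lipschitz ratio of T over random pairs (should not exceed 1).
ratios = []
for _ in range(100):
    a, b = rng.standard_normal((2, n))
    ratios.append(np.linalg.norm(T(a) - T(b)) / np.linalg.norm(a - b))
max_ratio = float(max(ratios))

# Iterate x <- T(x) and record the update distances.
x = np.zeros(n)
distances = []
for _ in range(50):
    x_next = T(x)
    distances.append(float(np.linalg.norm(x_next - x)))
    x = x_next
```

Because T(·) is non-expansive, ‖x_{k+1} − x_k‖ = ‖T(x_k) − T(x_{k−1})‖ ≤ ‖x_k − x_{k−1}‖, which is the monotone behavior recorded in `distances`.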

We note that similar Mann-based techniques were used in [9], [56] to prove the convergence of PnP-based algorithms. Also, we conjecture that similar techniques may be used to prove the convergence of other RED algorithms, but we leave the details to future work. Experiments in Section V-G numerically study the convergence behavior of several RED algorithms with different image denoisers f(·).

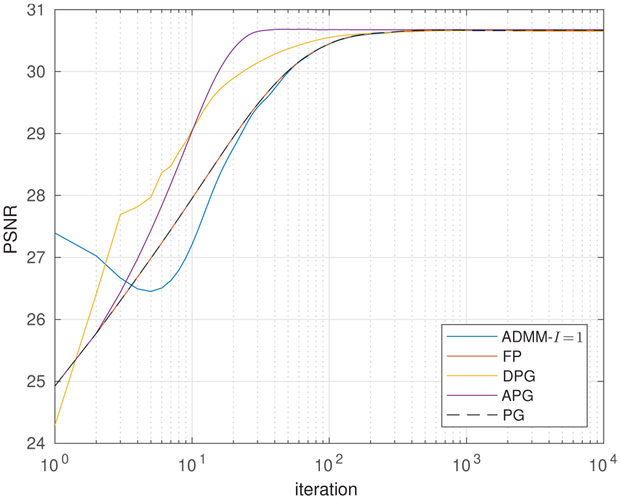

G. Algorithm Comparison: Image Deblurring

We now compare the performance of the RED algorithms discussed above (i.e., inexact ADMM, FP, DPG, APG, and PG) on the image deblurring problem considered in [1, Sec. 6.1]. For these experiments, the measurements y were constructed using a 9 × 9 uniform blur kernel for A and using AWGN with variance σ² = 2. As stated earlier, the image x is normalized to have pixel intensities in the range [0, 255].

For the first experiment, we used the TNRD denoiser. The various algorithmic parameters were chosen based on the recommendations in [1]: the regularization weight was λ = 0.02, the ADMM penalty parameter was β = 0.001, and the noise variance assumed by the denoiser was ν = 3.25². The proximal step on ℓ(x; y), given in (90), was implemented with an FFT. For RED-DPG we used⁴ L0 = 0.2 and L∞ = 2, for RED-APG we used L = 1, and for RED-PG we used L = 1.01 since Theorem 2 motivates L > 1.
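One way an FFT-based proximal step might look is sketched below. It assumes that the blur acts as a circular (periodic) convolution, so that AᵀA is diagonalized by the 2-D DFT; the boundary handling used in the actual experiments is not stated here and may differ. The function names, the 32 × 32 random test image, and the choice v = y are hypothetical.

```python
import numpy as np

def blur_otf(kernel, shape):
    """Frequency response of circular convolution with `kernel`,
    with the kernel center shifted to index (0, 0)."""
    padded = np.zeros(shape)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)

def blur(x, otf):
    """Apply A (circular blur)."""
    return np.real(np.fft.ifft2(otf * np.fft.fft2(x)))

def blur_adjoint(r, otf):
    """Apply A^T (correlation with the kernel)."""
    return np.real(np.fft.ifft2(np.conj(otf) * np.fft.fft2(r)))

def prox_quadratic_fft(v, y, otf, sigma2, weight):
    """argmin_x ||Ax - y||^2 / (2*sigma2) + (weight/2) * ||x - v||^2."""
    num = np.conj(otf) * np.fft.fft2(y) / sigma2 + weight * np.fft.fft2(v)
    den = np.abs(otf) ** 2 / sigma2 + weight
    return np.real(np.fft.ifft2(num / den))

rng = np.random.default_rng(0)
kernel = np.full((9, 9), 1.0 / 81.0)          # 9 x 9 uniform blur
otf = blur_otf(kernel, (32, 32))
x_true = rng.uniform(0.0, 255.0, size=(32, 32))
sigma2 = 2.0
y = blur(x_true, otf) + np.sqrt(sigma2) * rng.standard_normal((32, 32))

lam, L = 0.02, 1.0
v = y.copy()
x = prox_quadratic_fft(v, y, otf, sigma2, weight=lam * L)
# Optimality check: the gradient of the proximal objective should vanish at x.
grad = blur_adjoint(blur(x, otf) - y, otf) / sigma2 + lam * L * (x - v)
rel_grad = float(np.linalg.norm(grad) / np.linalg.norm(y))
```

The per-frequency division costs O(N log N) per call, in contrast to the O(N³) cost of a dense linear solve.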

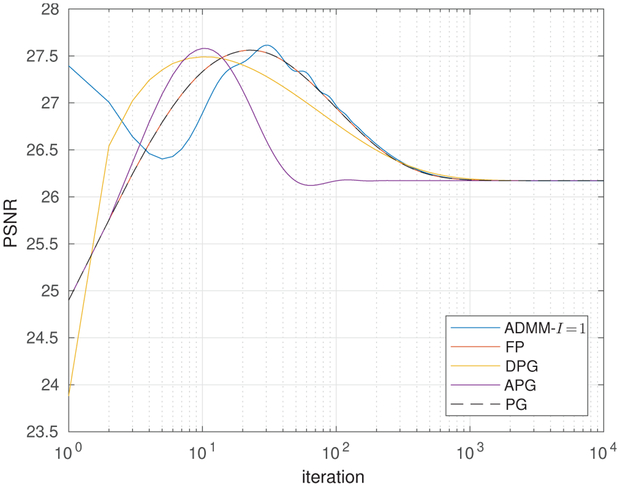

Figure 4 shows PSNR versus iteration k for the starfish test image. In the figure, the proposed RED-DPG and RED-APG algorithms appear significantly faster than the RED-FP and RED-ADMM-I = 1 algorithms proposed in [1]. For example, RED-APG reaches PSNR = 30 dB in 15 iterations whereas RED-FP and inexact RED-ADMM-I = 1 take about 50 iterations.

Fig. 4. PSNR versus iteration for RED algorithms with TNRD denoising when deblurring the starfish.

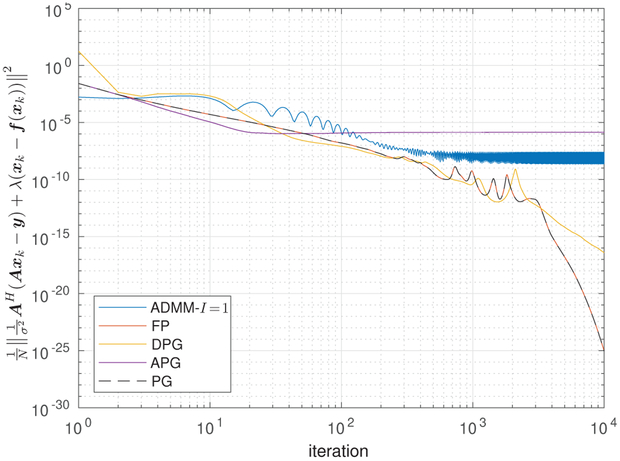

Figure 5 shows the fixed-point error versus iteration k. All but the RED-APG and RED-ADMM algorithms appear to converge to the solution set of the fixed-point equation (15). The RED-APG and RED-ADMM algorithms appear to approximately satisfy the fixed-point equation (15), but not exactly satisfy (15), since the fixed-point error does not decay to zero.

Fig. 5. Fixed-point error versus iteration for RED algorithms with TNRD denoising when deblurring the starfish.

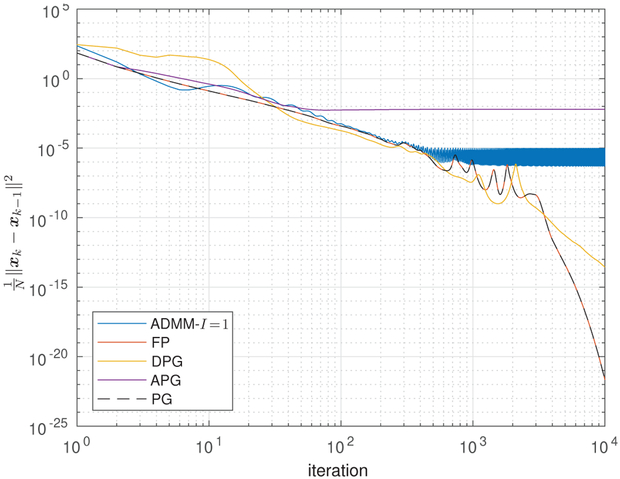

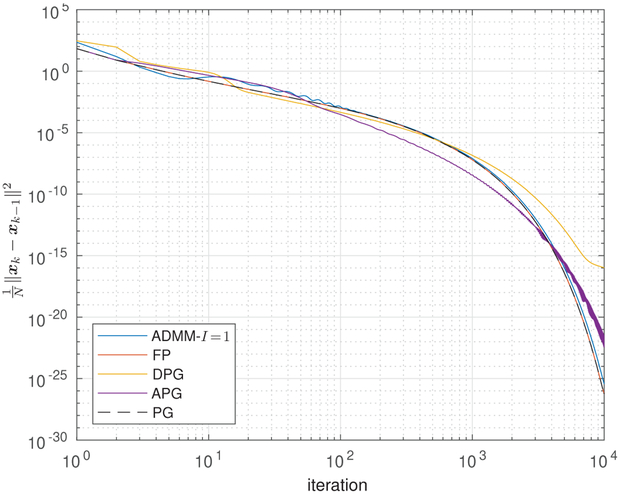

Figure 6 shows the update distance vs. iteration k for the algorithms under test. For most algorithms, the update distance appears to be converging to zero, but for RED-APG and RED-ADMM it does not. This suggests that the RED-APG and RED-ADMM algorithms are converging to a limit cycle rather than a unique limit point.
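The three diagnostics plotted in Figs. 4 to 6 could be computed along the following lines. The exact normalizations used in the figures are not reproduced here, so the definitions below are assumptions: PSNR with a peak value of 255, the fixed-point error as the per-pixel squared norm of the left side of (15), and the update distance as the per-pixel squared norm of the difference between successive iterates. All function names are hypothetical.

```python
import numpy as np

def psnr(x_true, x_est, peak=255.0):
    """PSNR in dB, assuming pixel intensities in [0, peak]."""
    mse = np.mean((x_true - x_est) ** 2)
    return float(10.0 * np.log10(peak ** 2 / mse))

def fixed_point_error(x, grad_loss, f, lam):
    """Per-pixel squared norm of grad_loss(x) + lam * (x - f(x))."""
    r = grad_loss(x) + lam * (x - f(x))
    return float(np.mean(r ** 2))

def update_distance(x_new, x_old):
    """Per-pixel squared norm of the change between successive iterates."""
    return float(np.mean((x_new - x_old) ** 2))

# Small demonstration on a denoising problem (A = I, sigma2 = 1, lam = 1)
# with a soft-thresholding stand-in for the denoiser.
tau = 1.0
f = lambda z: np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)
y = np.array([3.0, 1.5])
grad_loss = lambda x: x - y
x_fixed = np.array([2.0, 0.75])   # satisfies (x - y) + (x - f(x)) = 0

err_at_fixed_point = fixed_point_error(x_fixed, grad_loss, f, lam=1.0)
err_at_y = fixed_point_error(y, grad_loss, f, lam=1.0)
psnr_unit_offset = psnr(np.zeros(4), np.ones(4))
dist_same = update_distance(x_fixed, x_fixed)
```

A vanishing update distance alone does not certify a fixed point of (15), which is why the fixed-point error is tracked separately.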

Fig. 6. Update distance versus iteration for RED algorithms with TNRD denoising when deblurring the starfish.

Next, we replace the TNRD denoiser with the TDT denoiser from (30) and repeat the previous experiments. For the TDT denoiser, we used a Haar-wavelet based orthogonal discrete wavelet transform (DWT) W, with the maximum number of decomposition levels, and a soft-thresholding function g(·) with threshold value 0.001. Unlike the TNRD denoiser, this TDT denoiser is the proximal operator associated with a convex cost function, and so we know that it is 1/2-averaged and non-expansive.
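A transform-domain thresholding denoiser of the form f(x) = Wᵀg(Wx) can be sketched as follows. For brevity the sketch uses a 1-D orthonormal Haar transform on signals whose length is a power of two, whereas the experiments operate on 2-D images; the function names and the test threshold of 0.5 are hypothetical.

```python
import numpy as np

def haar_forward(x):
    """Orthonormal multi-level Haar DWT of a 1-D signal (length a power of 2)."""
    coeffs = np.asarray(x, dtype=float).copy()
    n = coeffs.size
    while n > 1:
        approx = (coeffs[0:n:2] + coeffs[1:n:2]) / np.sqrt(2.0)
        detail = (coeffs[0:n:2] - coeffs[1:n:2]) / np.sqrt(2.0)
        coeffs[: n // 2] = approx
        coeffs[n // 2 : n] = detail
        n //= 2
    return coeffs

def haar_inverse(coeffs):
    """Inverse of haar_forward (equals the transpose, since W is orthogonal)."""
    x = np.asarray(coeffs, dtype=float).copy()
    n = 1
    while n < x.size:
        approx = x[:n].copy()
        detail = x[n : 2 * n].copy()
        x[0 : 2 * n : 2] = (approx + detail) / np.sqrt(2.0)
        x[1 : 2 * n : 2] = (approx - detail) / np.sqrt(2.0)
        n *= 2
    return x

def tdt_denoiser(x, tau):
    """f(x) = W^T g(W x) with soft-thresholding g at threshold tau."""
    c = haar_forward(x)
    c = np.sign(c) * np.maximum(np.abs(c) - tau, 0.0)
    return haar_inverse(c)

rng = np.random.default_rng(0)
x = rng.standard_normal(16)
reconstruction_gap = float(np.max(np.abs(haar_inverse(haar_forward(x)) - x)))

# Empirical non-expansiveness check over random pairs.
worst_ratio = 0.0
for _ in range(50):
    a, b = rng.standard_normal((2, 16))
    num = np.linalg.norm(tdt_denoiser(a, 0.5) - tdt_denoiser(b, 0.5))
    worst_ratio = max(worst_ratio, float(num / np.linalg.norm(a - b)))
```

Non-expansiveness follows because W preserves norms and soft-thresholding never increases the distance between two inputs.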

Figure 7 shows PSNR versus iteration with TDT denoising. Interestingly, the final PSNR values appear to be nearly identical among all algorithms under test, but more than 1 dB worse than the values around iteration 20. Figure 8 shows the fixed-point error vs. iteration for this experiment. There, the errors of most algorithms converge to a value near 10⁻⁷, but then remain at that value. Noting that RED-PG satisfies the conditions of Theorem 2 (i.e., convex loss, non-expansive denoiser, L > 1), it should converge to a fixed point of (15). Therefore, we attribute the fixed-point error saturation in Fig. 8 to issues with numerical precision. Figure 9 shows the normalized distance versus iteration with TDT denoising. There, the distance decreases to zero for all algorithms under test.

Fig. 7. PSNR versus iteration for RED algorithms with TDT denoising when deblurring the starfish.

Fig. 8. Fixed-point error versus iteration for RED algorithms with TDT denoising when deblurring the starfish.

Fig. 9. Update distance versus iteration for RED algorithms with TDT denoising when deblurring the starfish.

We emphasize that the proposed RED-DPG, RED-APG, and RED-PG algorithms seek to solve exactly the same fixed-point equation (15) sought by the RED-SD, RED-ADMM, and RED-FP algorithms proposed in [1]. The excellent quality of the RED fixed-points was firmly established in [1], both qualitatively and quantitatively, in comparison to existing state-of-the-art methods like PnP-ADMM [10]. For further details on these comparisons, including examples of images recovered by the RED algorithms, we refer the interested reader to [1].

VI. Equilibrium View of RED Algorithms

Like the RED algorithms, PnP-ADMM [10] repeatedly calls a denoiser f(·) in order to solve an inverse problem. In [9], Buzzard, Sreehari, and Bouman show that PnP-ADMM finds a “consensus equilibrium” solution rather than a minimum of any explicit cost function. By consensus equilibrium, we mean a solution (, ) to

| (97a) |

| (97b) |

for some functions F and G. For PnP-ADMM, these functions are [9]

| (98) |

| (99) |

A. RED Equilibrium Conditions

We now show that the RED algorithms also find consensus equilibrium solutions, but with G ≠ Gpnp. First, recall ADMM Algorithm 1 with explicit regularization ρ(·). By taking iteration k → ∞, it becomes clear that the ADMM solutions must satisfy the equilibrium condition (97) with

| (100) |

| (101) |

where we note that Fadmm = Fpnp.

The RED-ADMM algorithm can be considered as a special case of ADMM Algorithm 1 under which ρ(·) is differentiable with ∇ρ(x) = x – f(x), for a given denoiser f(·). We can thus find Gred-admm(·), i.e., the RED-ADMM version of G(·) satisfying the equilibrium condition (97b), by solving the right side of (101) under ∇ρ(x) = x – f(x). Similarly, we see that the RED-ADMM version of F(·) is identical to the ADMM version of F(·) from (100). Now, the that solves the right side of (101) under differentiable ρ(·) with ∇ρ(x) = x – f(x) must obey

| (102) |

| (103) |

which we note is a special case of (15). Continuing, we find that

| (104) |

| (105) |

| (106) |

| (107) |

where I represents the identity operator and (·)−1 represents the functional inverse. In summary, RED-ADMM with denoiser f(·) solves the consensus equilibrium problem (97) with F = Fadmm from (100) and G = Gred-admm from (107).

Next we establish an equilibrium result for RED-PG. Defining uk = υk – xk and taking k → ∞ in Algorithm 4, it can be seen that the fixed points of RED-PG obey (97a) for

| (108) |

Furthermore, from line 3 of Algorithm 4, it can be seen that the RED-PG fixed points also obey

| (109) |

| (110) |

| (111) |

| (112) |

which matches (97b) when G = Gred-pg for

| (113) |

Note that Gred-pg = Gred-admm when L = β/λ.

B. Interpreting the RED Equilibria

The equilibrium conditions provide additional interpretations of the RED algorithms. To see how, first recall that the RED equilibrium (, ) satisfies

| (114a) |

| (114b) |

or an analogous pair of equations involving Fred-admm and Gred-admm. Thus, from (108), (109), and (114a), we have that

| (115) |

| (116) |

| (117) |

When L = 1, this simplifies down to

| (118) |

Note that (118) is reminiscent of, although in general not equivalent to,

| (119) |

which was discussed as an “alternative” formulation of RED in [1, Sec. 5.2].

Insights into the relationship between RED and PnP-ADMM can be obtained by focusing on the simple case of

| $\ell(x; y) = \frac{1}{2\sigma^2}\lVert x - y\rVert^2$ | (120) |

where the overall goal of variational image recovery would be the denoising of y. For PnP-ADMM, (90) and (98) imply

| (121) |

and so the equilibrium condition (97a) implies

| (122) |

| (123) |

Meanwhile, (99) and the equilibrium condition (97b) imply

| (124) |

| (125) |

In the case that λ = 1/σ2, we have the intuitive result that

| (126) |

which corresponds to direct denoising of y. For RED, is algorithm dependent, but is always the solution to (15), where now A = I due to (120). That is,

| (127) |

Taking λ = 1/σ2 for direct comparison to (126), we find

| (128) |

Thus, whereas PnP-ADMM reports the denoiser output f(y), RED reports the for which the denoiser residual negates the measurement residual . This can be expressed concisely as

| (129) |
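The contrast between the two estimates can be made concrete with a toy denoiser. The sketch below takes A = I and λ = 1/σ², so that the RED fixed-point condition (15) reduces to (x − y) + (x − f(x)) = 0, and solves it by iterating x ← (y + f(x))/2, which is the RED-FP update specialized to this case. The soft-thresholding denoiser, its threshold, and the input values are hypothetical choices made for illustration.

```python
import numpy as np

def soft_threshold(z, tau):
    """Toy stand-in for the denoiser f(.)."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def pnp_denoise(y, f):
    """PnP-ADMM equilibrium in the denoising case: the denoiser output f(y)."""
    return f(y)

def red_denoise(y, f, num_iters=60):
    """Solve (x - y) + (x - f(x)) = 0 by iterating x <- (y + f(x)) / 2."""
    x = y.copy()
    for _ in range(num_iters):
        x = 0.5 * (y + f(x))
    return x

tau = 1.0
f = lambda z: soft_threshold(z, tau)
y = np.array([3.0, 1.5, -0.4])

x_pnp = pnp_denoise(y, f)   # [2.0, 0.5, 0.0]
x_red = red_denoise(y, f)   # approximately [2.0, 0.75, -0.2]

# At the RED solution, the two residuals sum to zero.
balance = (x_red - y) + (x_red - f(x_red))
```

For this denoiser the two estimates coincide when |y| ≥ 2τ and differ otherwise, with RED shrinking small inputs by half instead of setting them to zero.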

VII. Conclusion

The RED paper [1] proposed a powerful new way to exploit plug-in denoisers when solving imaging inverse problems. In fact, experiments in [1] suggest that the RED algorithms are state-of-the-art. Although [1] claimed that the RED algorithms minimize an optimization objective containing an explicit regularizer of the form ρ(x) = ½ xᵀ(x − f(x)) when the denoiser is LH, we showed that the denoiser must also be Jacobian symmetric for this explanation to hold. We then provided extensive numerical evidence that practical denoisers like the median filter, non-local means, BM3D, TNRD, or DnCNN lack sufficient Jacobian symmetry. Furthermore, we established that, with non-JS denoisers, the RED algorithms cannot be explained by explicit regularization of any form.

None of our negative results dispute the fact that the RED algorithms work very well in practice. But they do motivate the need for a better understanding of RED. In response, we showed that the RED algorithms can be explained by a novel framework called score-matching by denoising (SMD), which aims to match the “score” (i.e., the gradient of the log-prior) rather than design any explicit regularizer. We then established tight connections between SMD, kernel density estimation, and constrained MMSE denoising.

On the algorithmic front, we provided new interpretations of the RED-ADMM and RED-FP algorithms proposed in [1], and we proposed novel RED algorithms with much faster convergence. Finally, we performed a consensus-equilibrium analysis of the RED algorithms that led to additional interpretations of RED and its relation to PnP-ADMM.

Acknowledgments

The authors thank Peyman Milanfar, Miki Elad, Greg Buzzard, and Charlie Bouman for insightful discussions.

Their work is supported in part by the National Science Foundation under grants CCF-1527162 and CCF-1716388 and the National Institutes of Health under grant R01HL135489.

Footnotes

1. Matlab code for the experiments is available at http://www2.ece.ohio-state.edu/~schniter/RED/index.html.

2. We used the center 16 × 16 patches of the standard Barbara, Bike, Boats, Butterfly, Cameraman, Flower, Girl, Hat, House, Leaves, Lena, Parrots, Parthenon, Peppers, Plants, Raccoon, and Starfish test images.

3. The extension to non-quadratic loss is important for applications like phase-retrieval, where RED has been successfully applied [51].

4. Matlab code for these experiments is available at http://www2.ece.ohio-state.edu/~schniter/RED/index.html.

Contributor Information

Edward T. Reehorst, Email: reehorst.3@osu.edu.

Philip Schniter, Email: schniter.1@osu.edu.

References

- [1] Romano Y, Elad M, and Milanfar P, “The little engine that could: Regularization by denoising (RED),” SIAM J. Imag. Sci., vol. 10, no. 4, pp. 1804–1844, 2017.

- [2] Buades A, Coll B, and Morel J-M, “A review of image denoising algorithms, with a new one,” Multiscale Model. Sim., vol. 4, no. 2, pp. 490–530, 2005.

- [3] Milanfar P, “A tour of modern image filtering: New insights and methods, both practical and theoretical,” IEEE Signal Process. Mag., vol. 30, no. 1, pp. 106–128, 2013.

- [4] Chen Y and Pock T, “Trainable nonlinear reaction diffusion: A flexible framework for fast and effective image restoration,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 39, no. 6, pp. 1256–1272, 2017.

- [5] Zhang K, Zuo W, Chen Y, Meng D, and Zhang L, “Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising,” IEEE Trans. Image Process., vol. 26, no. 7, pp. 3142–3155, 2017.

- [6] Buades A, Coll B, and Morel J-M, “A non-local algorithm for image denoising,” in Proc. IEEE Conf. Comp. Vision Pattern Recog., vol. 2, pp. 60–65, 2005.

- [7] Dabov K, Foi A, Katkovnik V, and Egiazarian K, “Image denoising by sparse 3-D transform-domain collaborative filtering,” IEEE Trans. Image Process., vol. 16, no. 8, pp. 2080–2095, 2007.

- [8] Parzen E, “On estimation of a probability density function and mode,” Ann. Math. Statist., vol. 33, no. 3, pp. 1065–1076, 1962.

- [9] Buzzard GT, Chan SH, Sreehari S, and Bouman CA, “Plug-and-play unplugged: Optimization-free reconstruction using consensus equilibrium,” SIAM J. Imag. Sci., vol. 11, no. 3, pp. 2001–2020, 2018.

- [10] Venkatakrishnan SV, Bouman CA, and Wohlberg B, “Plug-and-play priors for model based reconstruction,” in Proc. IEEE Global Conf. Signal Info. Process., pp. 945–948, 2013.

- [11] Bishop CM, Pattern Recognition and Machine Learning. New York: Springer, 2007.

- [12] Boyd S, Parikh N, Chu E, Peleato B, and Eckstein J, “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Found. Trends Mach. Learn., vol. 3, no. 1, pp. 1–122, 2011.

- [13] Ono S, “Primal-dual plug-and-play image restoration,” IEEE Signal Process. Lett., vol. 24, no. 8, pp. 1108–1112, 2017.

- [14] Kamilov U, Mansour H, and Wohlberg B, “A plug-and-play priors approach for solving nonlinear imaging inverse problems,” IEEE Signal Process. Lett., vol. 24, pp. 1872–1876, May 2017.

- [15] Donoho DL, Maleki A, and Montanari A, “Message passing algorithms for compressed sensing,” Proc. Nat. Acad. Sci., vol. 106, pp. 18914–18919, Nov. 2009.

- [16] Bayati M and Montanari A, “The dynamics of message passing on dense graphs, with applications to compressed sensing,” IEEE Trans. Inform. Theory, vol. 57, pp. 764–785, Feb. 2011.

- [17] Som S and Schniter P, “Compressive imaging using approximate message passing and a Markov-tree prior,” IEEE Trans. Signal Process., vol. 60, pp. 3439–3448, July 2012.

- [18] Donoho DL, Johnstone IM, and Montanari A, “Accurate prediction of phase transitions in compressed sensing via a connection to minimax denoising,” IEEE Trans. Inform. Theory, vol. 59, June 2013.

- [19] Metzler CA, Maleki A, and Baraniuk RG, “From denoising to compressed sensing,” IEEE Trans. Inform. Theory, vol. 62, no. 9, pp. 5117–5144, 2016.

- [20] Rangan S, Schniter P, and Fletcher AK, “Vector approximate message passing,” in Proc. IEEE Int. Symp. Inform. Thy., pp. 1588–1592, 2017.

- [21] Schniter P, Rangan S, and Fletcher AK, “Denoising-based vector approximate message passing,” in Proc. Intl. Biomed. Astronom. Signal Process. (BASP) Frontiers Workshop, 2017.

- [22] Berthier R, Montanari A, and Nguyen P-M, “State evolution for approximate message passing with non-separable functions,” arXiv:1708.03950, 2017.

- [23] Fletcher AK, Rangan S, Sarkar S, and Schniter P, “Plug-in estimation in high-dimensional linear inverse problems: A rigorous analysis,” in Proc. Neural Inform. Process. Syst. Conf., 2018. (see also arXiv:1806.10466).

- [24] Huang TS, Yang GJ, and Tang YT, “A fast two-dimensional median filtering algorithm,” IEEE Trans. Acoust. Speech & Signal Process., vol. 27, no. 1, pp. 13–18, 1979.

- [25] Rudin W, Principles of Mathematical Analysis. New York: McGraw-Hill, 3rd ed., 1976.

- [26] Kantorovitz S, Several Real Variables. Springer, 2016.

- [27] Sreehari S, Venkatakrishnan SV, Wohlberg B, Buzzard GT, Drummy LF, Simmons JP, and Bouman CA, “Plug-and-play priors for bright field electron tomography and sparse interpolation,” IEEE Trans. Comp. Imag., vol. 2, pp. 408–423, 2016.

- [28] Donoho DL and Johnstone IM, “Ideal spatial adaptation by wavelet shrinkage,” Biometrika, vol. 81, no. 3, pp. 425–455, 1994.

- [29] Coifman RR and Donoho DL, “Translation-invariant de-noising,” in Wavelets and Statistics (Antoniadis A and Oppenheim G, eds.), pp. 125–150, Springer, 1995.

- [30] Parikh N and Boyd S, “Proximal algorithms,” Found. Trends Optim., vol. 3, no. 1, pp. 123–231, 2013.

- [31] Ong F, Milanfar P, and Getreuer P, “Local kernels that approximate Bayesian regularization and proximal operators,” arXiv:1803.03711, 2018.

- [32] Teodoro A, Bioucas-Dias JM, and Figueiredo MAT, “Scene-adapted plug-and-play algorithm with guaranteed convergence: Applications to data fusion in imaging,” arXiv:1801.00605, 2018.

- [33] Milanfar P, “Symmetrizing smoothing filters,” SIAM J. Imag. Sci., vol. 30, no. 1, pp. 263–284, 2013.

- [34] Milanfar P and Talebi H, “A new class of image filters without normalization,” in Proc. IEEE Int. Conf. Image Process., pp. 3294–3298, 2016.

- [35] Goldstein T, Studer C, and Baraniuk R, “Forward-backward splitting with a FASTA implementation,” arXiv:1411.3406, 2014.

- [36] Robbins H, “An empirical Bayes approach to statistics,” in Proc. Berkeley Symp. Math. Stats. Prob., pp. 157–163, 1956.

- [37] Efron B, “Tweedie’s formula and selection bias,” J. Am. Statist. Assoc., vol. 106, no. 496, pp. 1602–1614, 2011.

- [38] Hyvärinen A, “Estimation of non-normalized statistical models by score matching,” J. Mach. Learn. Res., vol. 6, pp. 695–709, 2005.

- [39] Vincent P, “A connection between score matching and denoising autoencoders,” Neural Comput., vol. 23, no. 7, pp. 1661–1674, 2011.

- [40] Stein CM, “Estimation of the mean of a multivariate normal distribution,” Ann. Statist., vol. 9, pp. 1135–1151, 1981.

- [41] Luisier F, The SURE-LET Approach to Image Denoising. PhD thesis, EPFL, Lausanne, Switzerland, 2010.

- [42] Raphan M and Simoncelli EP, “Least squares estimation without priors or supervision,” Neural Comput., vol. 23, pp. 374–420, Feb. 2011.

- [43] Blu T and Luisier F, “The SURE-LET approach to image denoising,” IEEE Trans. Image Process., vol. 16, no. 11, pp. 2778–2786, 2007.

- [44] Bigdeli SA and Zwicker M, “Image restoration using autoencoding priors,” arXiv:1703.09964, 2017.

- [45] Alain G and Bengio Y, “What regularized auto-encoders learn from the data-generating distribution,” J. Mach. Learn. Res., vol. 15, no. 1, pp. 3563–3593, 2014.

- [46] Ranzato MA, Boureau Y-L, and LeCun Y, “Sparse feature learning for deep belief networks,” in Proc. Neural Inform. Process. Syst. Conf., pp. 1185–1192, 2008.

- [47] Beck A and Teboulle M, “Gradient-based algorithms with applications to signal recovery,” in Convex optimization in signal processing and communications (Palomar DP and Eldar YC, eds.), pp. 42–88, Cambridge, 2009.

- [48] Combettes PL and Pesquet J-C, “Proximal splitting methods in signal processing,” in Fixed-Point Algorithms for Inverse Problems in Science and Engineering (Bauschke H, Burachik R, Combettes P, Elser V, Luke D, and Wolkowicz H, eds.), pp. 185–212, Springer, 2011.

- [49] Sun Y, Babu P, and Palomar DP, “Majorization-minimization algorithms in signal processing, communications, and machine learning,” IEEE Trans. Signal Process., vol. 65, no. 3, pp. 794–816, 2017.

- [50] Figueiredo MAT, Bioucas-Dias JM, and Nowak RD, “Majorization-minimization algorithms for wavelet-based image restoration,” IEEE Trans. Image Process., vol. 16, no. 12, pp. 2980–2991, 2007.

- [51] Metzler CA, Schniter P, Veeraraghavan A, and Baraniuk RG, “prDeep: Robust phase retrieval with flexible deep neural networks,” in Proc. Int. Conf. Mach. Learning, 2018. (see also arXiv:1803.00212).

- [52] Beck A and Teboulle M, “A fast iterative shrinkage-thresholding algorithm for linear inverse problems,” SIAM J. Imag. Sci., vol. 2, no. 1, pp. 183–202, 2009.

- [53] Sidi A, Vector Extrapolation Methods with Applications. SIAM, 2017.

- [54] Hong T, Romano Y, and Elad M, “Acceleration of RED via vector extrapolation,” arXiv:1805.02158, 2018.

- [55] Bauschke HH and Combettes PL, Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics, New York: Springer, 1st ed., 2011.

- [56] Sun Y, Wohlberg B, and Kamilov US, “An online plug-and-play algorithm for regularized image reconstruction,” arXiv:1809.04693, 2018.