Abstract

Diagnosis of breast carcinomas has so far been limited to the morphological interpretation of epithelial cells and the assessment of epithelial tissue architecture. Consequently, most automated systems have focused on characterizing the epithelial regions of the breast to detect cancer. In this paper, we propose a system for classification of hematoxylin and eosin (H&E) stained breast specimens based on convolutional neural networks that primarily targets the assessment of tumor-associated stroma to diagnose breast cancer patients. We evaluate the performance of the proposed system on a large cohort containing 646 breast tissue biopsies. Our evaluations show that the proposed system achieves an area under the ROC curve (AUC) of 0.92, demonstrating the discriminative power of previously neglected tumor-associated stroma as a diagnostic biomarker.

Keywords: Digital pathology, Convolutional Neural Networks, Breast Cancer, Tumor Associated Stroma

1. INTRODUCTION

Definitive diagnosis and interpretation of breast tissue specimens have so far been based on morphological assessment of epithelial cells. Pathologists traditionally perform a semiquantitative microscopic assessment of morphological features of the breast, such as tubule formation, epithelial nuclear atypia, and epithelial mitotic activity, to detect and characterize breast cancer [1]. While this assessment has been useful for disease management of cancer patients, the emergence of digital pathology, encompassing computerized and computer-aided diagnostics, can lead to the discovery of valuable prognostic information that provides new insights into the biological factors contributing to breast cancer progression. In [2], Beck et al. showed that stromal morphological characteristics, which had previously been ignored in the analysis of histopathology images, are an important prognostic factor in breast cancer. In addition, several studies have shown that higher stromal density and extent of fibrosis are correlated with increased mammographic breast density [3], which confers a 4- to 6-fold increased risk of breast cancer. These results agree with recent studies which revealed that, rather than the cancer cells themselves, the surrounding tumor stromal cells known as cancer-associated fibroblasts contribute to cancer progression [5, 6].

However, most of the existing algorithms for breast cancer detection and classification in histology images [7, 8, 9, 10] involve assessment of the morphology and arrangement of epithelial primitives (e.g. nuclei, ducts). Several studies developed automated classification systems based on an initial segmentation of nuclei and extraction of features to describe the morphology of nuclei or their spatial arrangement [7, 8]. Naik et al. [9] developed a method for automated detection and segmentation of nuclear and glandular structures for classification of breast cancer histopathology images. While all of the previously mentioned algorithms were designed to classify manually selected regions of interest (mostly selected by expert pathologists), in [10], we proposed an algorithm for automatic detection of ductal carcinoma in situ (DCIS) that operates at the whole slide level and distinguishes DCIS from a large set of benign disease conditions.

Unlike the existing work, which focused on analysis of epithelial tissue to detect and characterize breast cancer, we sought to develop a novel data-driven system that primarily analyzes stromal morphologic features to discriminate between breast cancer patients and patients with benign breast disease. A crucial step in the development of the existing algorithms has been the design of relevant hand-crafted features. This step is intrinsically intractable for assessing the morphology of tumor stroma in our work, mainly because pathologists have no precise definition of the morphological properties of cancer-associated stroma. Moreover, the origin of tumor-associated stromal fibroblasts is not entirely understood [5]. This motivates the use of machine learning algorithms that can create their own representations for the classification task. Within the field of machine learning, a class of algorithms called deep learning has been very successful in tasks such as image and speech recognition. Deep learning exploits the idea of hierarchical representation learning directly from input data to discover statistical variations in the data. The most successful type of deep learning model for image analysis is the convolutional neural network (CNN). CNNs have also been used to detect cancer areas in breast tissue specimens [11].

In this paper, we present a new automated system, based on CNNs, for analyzing whole-slide images (WSI) of H&E stained breast specimens. Our proposed system distinguishes breast cancer from normal breast tissue based on stromal characteristics of the tissue.

2. METHOD

Our proposed model for WSI classification of a breast biopsy specimen consists of several steps. As a pre-processing step, we used a pre-trained network for background/tissue classification. Subsequently, we trained two CNNs. The first one classifies the WSI into epithelium, stroma, and fat. The second operates on the stromal areas resulting from the classification output of the first CNN and classifies the stromal regions as normal stroma or cancer-associated stroma. Two sets of features were extracted from the output of the two CNNs. The first set of features, extracted from the output of the first CNN, characterizes the global tissue amount per class and the spatial arrangement of epithelial regions in the WSI. The second set, extracted from the output of the second CNN, characterizes several global features related to regions classified as tumor-associated stroma. Finally, a random forest classifier was trained using these features to classify the WSI as cancer or non-cancer.

2.1. Breast tissue component classification

Inspired by the success of VGG-net [12], which ranked at the top of the ILSVRC 2014 challenge, we trained a VGG-like convolutional neural network with 11 layers. Like VGG-net, the network uses 3 × 3 filters throughout its convolutional layers. Each convolutional layer was followed by a ReLU activation function. We applied a 2 × 2 max-pooling operation after convolutional layers 2, 4, 6, and 9. We started with 12 filters and doubled the number of filters after each max-pooling operation. The densely connected hidden layers have 2048 and 1024 units. For training, we replaced the two fully connected layers of our network with 1 × 1 convolutions. Fully connected layers require fixed-size input vectors, whereas convolutional layers accept inputs of arbitrary size; hence, this replacement allows us to apply the deep network to images of arbitrary size. Hereafter, we denote this model as CNN I.
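The layer layout described above can be written down as a small framework-independent specification. The sketch below is illustrative only: it assumes that the 11 layers are 9 convolutional layers followed by the 2 dense layers, and it omits the final classification layer. The function name `cnn1_layer_spec` and the layer labels are hypothetical and do not come from the original implementation.

```python
def cnn1_layer_spec(n_conv=9, base_filters=12, pool_after=(2, 4, 6, 9),
                    dense_units=(2048, 1024)):
    """Return a list of (layer_type, width) tuples for a VGG-like network.

    Assumption: 11 layers = 9 convolutional layers + 2 dense layers.
    """
    layers = []
    filters = base_filters
    for idx in range(1, n_conv + 1):
        # 3 x 3 convolution followed by a ReLU activation.
        layers.append(("conv3x3+relu", filters))
        if idx in pool_after:
            layers.append(("maxpool2x2", None))
            # Double the number of filters after each max-pooling step.
            filters *= 2
    # Dense layers expressed as 1 x 1 convolutions so that the network
    # stays fully convolutional and accepts inputs of arbitrary size.
    for units in dense_units:
        layers.append(("conv1x1+relu", units))
    return layers


spec = cnn1_layer_spec()
conv_filters = [width for kind, width in spec if kind == "conv3x3+relu"]
```

Under these assumptions, the convolutional layers carry 12, 12, 24, 24, 48, 48, 96, 96, and 96 filters.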

2.2. Classification of stromal regions into normal or tumor-associated stroma

For the second classification task we used the standard 16-layer VGG-net [12], which we refer to as CNN II. As with CNN I, we turned this network into a fully convolutional network to allow classification of arbitrary-size inputs at test time.

2.3. Classification framework and model parameters

The CNN training procedure for the two networks involves optimizing the multinomial logistic regression objective (softmax) using stochastic gradient descent with Nesterov momentum [13]. The input to both networks is a 224 × 224 RGB image patch sampled at the highest magnification. The batch size was set to 128 for CNN I and 22 for CNN II, and the momentum to 0.9. We used L2-regularization (λCNN−I = 0.003 and λCNN−II = 0.0001) and dropout regularization with ratio 0.5 [14] (applied only to the last two layers of the network, implemented as 1 × 1 convolutions). We used an adaptive learning rate scheme. The learning rate was initially set to 0.01 and then decreased by a factor of 5 whenever no increase in performance was observed on the evaluation set over a predefined number of epochs, which we refer to as the epoch patience (Ep). The initial value of Ep was set to 10, and we increased this value by 20% after each drop in the learning rate. This prevented the network from dropping the learning rate too quickly at lower learning rates. The weights of our networks were initialized using the strategy of He et al. [15].
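The adaptive learning rate scheme can be sketched as a small stateful helper. This is a minimal sketch under stated assumptions, not the original training code: the class name `PatienceSchedule` is hypothetical, "no increase in performance" is read as "no new best score", and the patience is kept as a fractional value rather than rounded.

```python
class PatienceSchedule:
    """Drop the learning rate by a fixed factor after `patience` stale epochs."""

    def __init__(self, lr=0.01, drop_factor=5.0, patience=10.0,
                 patience_growth=1.2):
        self.lr = lr
        self.drop_factor = drop_factor
        self.patience = patience                # epoch patience (Ep)
        self.patience_growth = patience_growth  # +20% after every drop
        self.best = float("-inf")
        self.stale_epochs = 0

    def step(self, score):
        """Record this epoch's evaluation score and return the next learning rate."""
        if score > self.best:
            self.best = score
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr /= self.drop_factor
                self.patience *= self.patience_growth
                self.stale_epochs = 0
        return self.lr


schedule = PatienceSchedule()
schedule.step(0.50)            # first epoch sets the best score
for _ in range(10):            # ten epochs without improvement
    current_lr = schedule.step(0.40)
```

After ten stale epochs the learning rate falls from 0.01 to 0.002 and the patience grows from 10 to 12 epochs, so the next drop takes longer to trigger.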

To augment the training set, patches were randomly rotated and flipped. We additionally performed color augmentations, by randomly jittering the hue and saturation of pixels in the HSV color space. To generate the data for each minibatch, we randomly sampled patches from previously annotated regions for each class with uniform probabilities.
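A rough illustration of this augmentation and sampling step, using only the Python standard library, is given below. Several details are assumptions made for the sketch: rotations are restricted to multiples of 90 degrees, one hue shift and one saturation scale are drawn per patch, and the jitter ranges (`max_hue_shift`, `max_sat_scale`) are placeholder values. The function names are hypothetical.

```python
import colorsys
import random


def augment_patch(patch, rng, max_hue_shift=0.05, max_sat_scale=0.2):
    """Augment a patch given as rows of (r, g, b) floats in [0, 1]."""
    # Random rotation (assumption: multiples of 90 degrees only).
    for _ in range(rng.randrange(4)):
        patch = [list(row) for row in zip(*patch[::-1])]  # 90 degrees clockwise
    # Random horizontal flip.
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]
    # Jitter hue and saturation in HSV space; the value channel is untouched.
    hue_shift = rng.uniform(-max_hue_shift, max_hue_shift)
    sat_scale = 1.0 + rng.uniform(-max_sat_scale, max_sat_scale)
    augmented = []
    for row in patch:
        new_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r, g, b)
            h = (h + hue_shift) % 1.0
            s = min(1.0, max(0.0, s * sat_scale))
            new_row.append(colorsys.hsv_to_rgb(h, s, v))
        augmented.append(new_row)
    return augmented


def sample_minibatch(patches_by_class, batch_size, rng):
    """Draw a minibatch in which every class is equally likely per sample."""
    classes = sorted(patches_by_class)
    batch = []
    for _ in range(batch_size):
        label = rng.choice(classes)
        patch = rng.choice(patches_by_class[label])
        batch.append((augment_patch(patch, rng), label))
    return batch
```

Sampling the class first and the patch second is what makes the class probabilities uniform regardless of how many annotated patches each class contains.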

The training data for CNN II exhibits high class imbalance (there is considerably more normal stroma than cancer-associated stroma). Uniformly sampling the classes for each mini-batch increases the capacity of the network to learn discriminative features for the minority class, but it carries the risk of training an overly sensitive model. Because the class distribution in each mini-batch does not represent the actual skewed distribution of the data, a small number of false positives in each mini-batch may translate to vast regions of false positives in the actual WSI. To ameliorate this effect, in addition to uniform sampling in each mini-batch, we gradually increased the misclassification loss for the normal class. The loss weight factor for the negative samples was initially set to 1 and multiplied by 1.0034 after each epoch (so that the weight factor reaches approximately 2 by epoch 200). This ensured that the network learns discriminative features from the beginning of the training process and gradually learns the class distribution of the data as well.
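The growth of the loss weight is easy to check numerically. The sketch below computes the weight for a given epoch and applies it in a binary cross-entropy term; the function names and the exact form of the weighted loss are assumptions for illustration, since the text specifies only the weight schedule.

```python
import math


def normal_class_weight(epoch, growth=1.0034):
    """Loss weight for normal-stroma (negative) samples after `epoch` epochs."""
    return growth ** epoch


def weighted_cross_entropy(prob_tumor, label, normal_weight):
    """Cross-entropy for one sample; label 0 = normal, 1 = tumor-associated stroma."""
    eps = 1e-12  # guards against log(0)
    if label == 1:
        return -math.log(max(prob_tumor, eps))
    # Errors on the normal class are penalized more as training progresses.
    return -normal_weight * math.log(max(1.0 - prob_tumor, eps))


weight_at_200 = normal_class_weight(200)
```

With a per-epoch factor of 1.0034, the weight is about 1.97 at epoch 200, which matches the stated value of roughly 2.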

2.4. Feature extraction and WSI classification

CNN I was applied to the WSI in a sliding-window fashion to generate a WSI map with three possible labels: epithelium, stroma, and fat. CNN II was used to generate WSI likelihood maps representing each pixel’s probability of belonging to the tumor-associated stroma class. Details of the features extracted from the output of the CNN I model are presented in Table 1. These features include the global tissue amount for each class, morphological features of the epithelial areas, and features extracted from the Delaunay triangulation [16] (built on the centroids of epithelial regions) and area-Voronoi diagrams [16] (generated using the epithelial region areas). We extracted similar features from the thresholded likelihood maps generated by CNN II (T = 0.9) for the connected components labeled as tumor-associated stroma.
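The thresholding and connected-component step for the CNN II likelihood map can be illustrated as follows. This is a simplified sketch: the use of 4-connectivity, the inclusive comparison `>= threshold`, and the function names are assumptions, and a real WSI pipeline would use an optimized image-processing library rather than nested lists.

```python
from collections import deque


def connected_components(mask):
    """Return the 4-connected foreground regions of a 2-D boolean mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill starting from an unvisited pixel.
            seen[y][x] = True
            queue, pixels = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                               (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(pixels)
    return regions


def tumor_stroma_regions(likelihood, threshold=0.9):
    """Threshold a likelihood map and describe each resulting region."""
    mask = [[p >= threshold for p in row] for row in likelihood]
    described = []
    for pixels in connected_components(mask):
        area = len(pixels)
        centroid = (sum(y for y, _ in pixels) / area,
                    sum(x for _, x in pixels) / area)
        described.append({"area": area, "centroid": centroid})
    return described


likelihood_map = [[0.95, 0.91, 0.10, 0.00],
                  [0.20, 0.92, 0.10, 0.97],
                  [0.00, 0.10, 0.30, 0.99]]
regions = tumor_stroma_regions(likelihood_map)
```

Region areas and centroids obtained this way are the kind of inputs from which the morphology, Delaunay, and area-Voronoi features would then be computed.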

Table 1. Features extracted from the classification results of CNN I.

| Feature category | Feature list |
|---|---|
| Global tissue amount | Total area of epithelium, stroma, and fat, and the area of each tissue class normalized by the total tissue amount. |
| Morphology | Statistics of the area and eccentricity of epithelial regions. |
| Delaunay triangulation | Statistics of the number of neighbors of each node and of the distances of each node with respect to others. |
| Area-Voronoi diagram | Statistics of the areas of the Voronoi cells, and of the area ratio between the actual epithelial region and its Voronoi zone of influence (ZOI) [17]. |

The statistics we computed are: mean, standard deviation, median, and inter-quartile range.
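These four summary statistics can be computed with the Python standard library, as sketched below. The choice of the population standard deviation and of the "inclusive" quantile method are assumptions, since the text does not specify either convention; the function name `summarize` is hypothetical.

```python
import statistics


def summarize(values):
    """Return mean, standard deviation, median, and inter-quartile range."""
    # statistics.quantiles needs at least two data points.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(values),
        "std": statistics.pstdev(values),   # population standard deviation
        "median": statistics.median(values),
        "iqr": q3 - q1,
    }


region_areas = [1, 2, 3, 4, 5]  # toy example values
summary = summarize(region_areas)
```

Applying `summarize` to each per-region measurement (for example area or eccentricity) yields four features per measurement.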

The resulting feature vector contained 71 features. These features were used to train a random forest classifier with 100 random decision trees. All the parameters including the threshold applied to likelihood maps generated by CNN II were tuned using the combination of training and validation sets with cross-validation.

3. EXPERIMENTS

3.1. Dataset description

This analysis included a total of 646 H&E stained breast tissue sections from 444 women enrolled in the cross-sectional Breast Radiology Evaluation and Study of Tissues (BREAST) Stamp Project [18] (2007–2010). The women had been referred for diagnostic image-guided breast biopsies (including needle core biopsy and vacuum-assisted biopsy) following an abnormal mammogram. The tissue sections were digitized at 20X magnification using an Aperio ScanScope CS scanner and a Hamamatsu scanner, and the images have square pixels of size 0.455 × 0.455 μm².

Two trained students annotated a set of epithelial, stromal, and fat regions in 100 WSIs to be used for training and validation of CNN I. For the second network we used all the previously annotated stromal regions in normal slides as negative samples. Samples for the tumor associated stroma class were generated under the supervision of a pathologist by annotating stromal regions in the vicinity of epithelial cancer regions.

3.2. Experimental design

The dataset was divided into non-overlapping training (270 WSIs with 223 benign disease and 47 invasive cancer), validation (80 WSIs with 65 benign disease and 15 invasive cancer), and testing (296 WSIs with 251 benign disease and 45 invasive cancer) sets. The training and validation sets were used to find the best hyper-parameters for CNN I and II. The independent test set was used to evaluate the performance of the entire system. For training of both CNN I and II, we performed two steps of hard negative mining (generating new negative samples from the false positives of the model and retraining).

The final performance of our system was evaluated using receiver operating characteristic (ROC) analysis on the likelihoods generated by the random forest classifier. Confidence intervals (CI) were generated using patient-stratified bootstrapping with 1000 bootstrap samples.
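The evaluation procedure can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the original evaluation code: "patient-stratified" is interpreted here as resampling whole patients with replacement so that all slides of a patient stay together, the interval is a simple percentile interval, and the function names are hypothetical.

```python
import random


def roc_auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes are required to compute the AUC")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(patient_ids, labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile confidence interval for the AUC, resampling at the patient level."""
    roc_auc(labels, scores)  # fails early if only one class is present
    rng = random.Random(seed)
    by_patient = {}
    for pid, y, s in zip(patient_ids, labels, scores):
        by_patient.setdefault(pid, []).append((y, s))
    patients = sorted(by_patient)
    aucs = []
    while len(aucs) < n_boot:
        # Draw patients with replacement; each draw brings all of its slides.
        sample = [rng.choice(patients) for _ in patients]
        ys = [y for pid in sample for y, _ in by_patient[pid]]
        ss = [s for pid in sample for _, s in by_patient[pid]]
        if 0 < sum(ys) < len(ys):  # skip resamples that contain a single class
            aucs.append(roc_auc(ys, ss))
    aucs.sort()
    lower = aucs[int((alpha / 2) * n_boot)]
    upper = aucs[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


toy_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Resampling patients rather than individual slides avoids treating multiple sections from the same woman as independent observations.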

4. RESULTS AND DISCUSSION

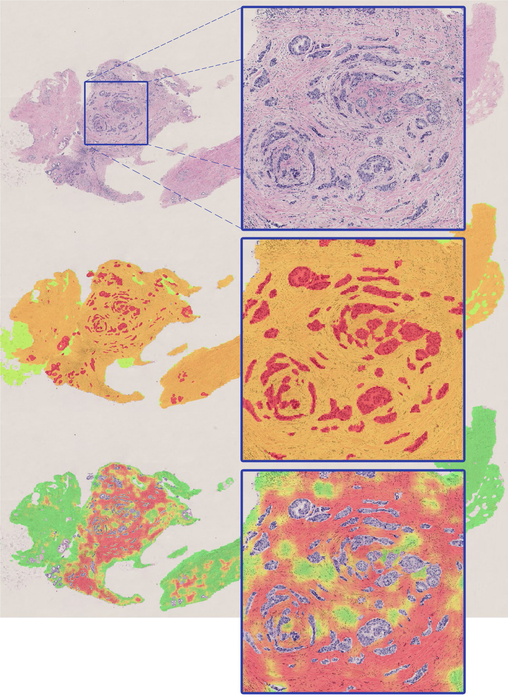

CNN I achieved a pixel-level accuracy of 95.5% for classification of tissue into epithelium, stroma, and fat. Fig. 1 shows an example of the result produced by this model on a WSI. CNN II achieved a pixel-level binary accuracy of 92.0% for classifying stroma into normal stroma or tumor-associated stroma. Fig. 1 also shows the results of classification for a slide containing cancer.

Fig. 1. Sample classification result by CNN I (middle image) and CNN II (bottom image) for a WSI containing breast cancer. In the middle image, green, orange, and red represent fat, stroma, and epithelium, respectively. The bottom image shows the likelihood map representing tumor-stroma probability overlaid on the original image (green represents low probability and red represents high probability of belonging to the tumor-associated stroma class).

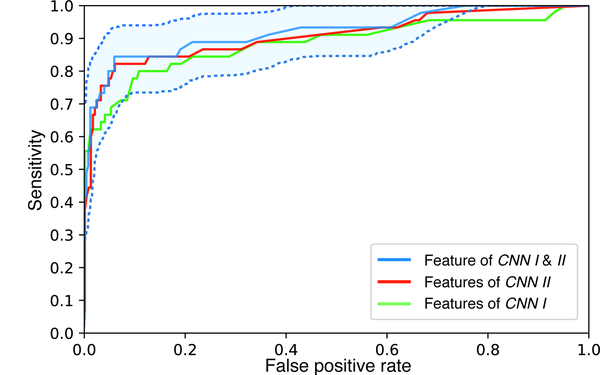

The ROC curve for the final performance of the system is shown in Fig. 2. The system achieved an AUC of 0.921 (95% CI 0.862–0.967) at the WSI level for distinguishing cancer from benign breast disease based on the combination of both feature sets. When only the features from CNN I or only the features from CNN II were considered, the AUC of the system was 0.882 and 0.904, respectively. The results demonstrate that breast cancer can be accurately diagnosed based on the analysis of stromal features alone, suggesting the centrality of alterations to the breast stroma in the process of breast carcinogenesis.

Fig. 2. The ROC curve of the proposed system. The confidence interval is shown only for the system using both feature sets from CNN I and II.

5. CONCLUSION

In this paper, we proposed a system for classification of WSIs of breast tissue biopsies. While most previous work has focused on identification of nuclei or glands to characterize abnormal texture patterns in the epithelial cancer regions, we proposed the first deep learning-based system to assess the stromal properties of the tissue and to investigate the discriminatory power of tumor-associated stroma as a diagnostic biomarker for detecting cancer. Our results show that, with deep learning-based techniques, a large amount of the information required to discriminate breast cancer from benign breast disease can be obtained from stromal tissue alone. In the future, we will assess the role of tumor-associated stroma as a biomarker to predict breast cancer recurrence and as a predictor of breast cancer development among women diagnosed with benign breast disease.

6. ACKNOWLEDGMENTS

This project was funded in part by the Intramural Research Program of the National Cancer Institute, National Institutes of Health, Bethesda, Maryland.

7. REFERENCES

- [1] Patey DH and Scarff RW, “The position of histology in the prognosis of carcinoma of the breast,” The Lancet, vol. 211, no. 5460, pp. 801–804, 1928.

- [2] Beck AH, Sangoi AR, Leung S, Marinelli RJ, Nielsen TO, van de Vijver MJ, West RB, van de Rijn M, and Koller D, “Systematic analysis of breast cancer morphology uncovers stromal features associated with survival,” Science Translational Medicine, vol. 3, no. 108, pp. 108ra113–108ra113, 2011.

- [3] Sun X et al., “Relationship of mammographic density and gene expression: analysis of normal breast tissue surrounding breast cancer,” Clinical Cancer Research, vol. 19, no. 18, pp. 4972–4982, 2013.

- [4] Shiga K, Hara M, Nagasaki T, Sato T, Takahashi H, and Takeyama H, “Cancer-associated fibroblasts: their characteristics and their roles in tumor growth,” Cancers, vol. 7, no. 4, pp. 2443–2458, 2015.

- [5] Kalluri R and Zeisberg M, “Fibroblasts in cancer,” Nature Reviews Cancer, vol. 6, no. 5, pp. 392–401, 2006.

- [6] Dundar MM, Badve S, Bilgin G, Raykar V, Jain R, Sertel O, and Gurcan MN, “Computerized classification of intraductal breast lesions using histopathological images,” Biomedical Engineering, IEEE Transactions on, vol. 58, no. 7, pp. 1977–1984, 2011.

- [7] Dong F et al., “Computational pathology to discriminate benign from malignant intraductal proliferations of the breast,” PLoS ONE, vol. 9, no. 12, pp. e114885, 2014.

- [8] Naik S, Doyle S, Agner S, Madabhushi A, Feldman M, and Tomaszewski J, “Automated gland and nuclei segmentation for grading of prostate and breast cancer histopathology,” in ISBI 2008. 5th IEEE International Symposium on, May 2008, pp. 284–287.

- [9] Ehteshami Bejnordi B, Balkenhol M, Litjens G, Holland R, Bult P, Karssemeijer N, and van der Laak J, “Automated detection of DCIS in whole-slide H&E stained breast histopathology images,” IEEE Transactions on Medical Imaging, vol. 35, no. 9, pp. 2141–2150, September 2016.

- [10] Cruz-Roa A, Basavanhally A, González F et al., “Automatic detection of invasive ductal carcinoma in whole slide images with convolutional neural networks,” in SPIE Medical Imaging, 2014, pp. 904103–904103.

- [11] Simonyan K and Zisserman A, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.

- [12] Nesterov Y, “A method of solving a convex programming problem with convergence rate O(1/sqr(k)),” Soviet Mathematics Doklady, vol. 27, pp. 372–376, 1983.

- [13] Srivastava N, Hinton GE, Krizhevsky A, Sutskever I, and Salakhutdinov R, “Dropout: a simple way to prevent neural networks from overfitting,” Journal of Machine Learning Research, vol. 15, no. 1, pp. 1929–1958, 2014.

- [14] He K, Zhang X, Ren S, and Sun J, “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1026–1034.

- [15] Okabe A, Boots B, Sugihara K, and Chiu SN, Spatial tessellations: concepts and applications of Voronoi diagrams, vol. 501, John Wiley & Sons, 2009.

- [16] Ehteshami Bejnordi B, Moshavegh R, Sujathan K, Malm P, Bengtsson E, and Mehnert A, “Novel chromatin texture features for the classification of pap smears,” in SPIE Medical Imaging, 2013, pp. 867608–867608.

- [17] Gierach GL et al., “Comparison of mammographic density assessed as volumes and areas among women undergoing diagnostic image-guided breast biopsy,” Cancer Epidemiology and Prevention Biomarkers, vol. 23, no. 11, pp. 1055–9965, 2014.